1. Introduction

Let $\mathcal{H}$ be an infinite-dimensional real Hilbert space equipped with the standard inner product $\langle \cdot, \cdot \rangle$ and the induced norm $\|x\| = \sqrt{\langle x, x \rangle}$ for $x \in \mathcal{H}$. We are mainly concerned with the following monotone inclusion:

$$0 \in A(x) + B(x), \tag{1}$$

where both $A: \mathcal{H} \rightrightarrows \mathcal{H}$ and $B: \mathcal{H} \rightrightarrows \mathcal{H}$ are maximal monotone operators, and the “double arrows” notation means that the mapping may be multi-valued. Throughout this article, we always assume that (1) has at least one solution. This problem model covers monotone variational inequalities, convex minimization, optimal control, and feature selection in deep learning, among others [1,2].

A popular iterative scheme is the following Douglas–Rachford (DR for short) splitting method (cf. Equation (

10) of [

3]). Choose

. Choose

. At the

k-th iteration, compute the following:

Denote the DR operator by the following:

Then (

2) becomes the following:

To improve the numerical efficiency of the DR splitting method, we may resort to its directed version [4], written as follows:

where

and

further satisfy some assumptions (to be specified later), and

is called directed factors. Obviously, in the case of

, it reduces to the DR splitting method above.

For the directed DR splitting method (

5), an important task is to find the largest possible upper bound of directed factors. As we know, the traditional technique of estimating this bound is as follows. First, view (

5) as the Krasnosel’skiĭ–Mann iteration for the non-expansive DR operator. Then, borrow the corresponding estimate for this iteration as the upper bound of the directed factors [

2,

4].
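To make the Krasnosel’skiĭ–Mann viewpoint concrete, the following is a minimal sketch of a relaxed DR iteration on a toy problem. It uses the textbook relaxed form with a constant factor `t` in (0, 2), not the article's directed scheme. The toy operators (the normal cone of the box [0, 1]^n and the affine map x - c), the function name `relaxed_dr`, and all parameter values are assumptions chosen for illustration only.

```python
import numpy as np

def relaxed_dr(c, mu=1.0, t=1.0, iters=200):
    """Relaxed Douglas-Rachford iteration for 0 in A(x) + B(x), where
    A is the normal cone of the box [0, 1]^n and B(x) = x - c (toy choice)."""
    z = np.zeros_like(c)
    for _ in range(iters):
        x = (z + mu * c) / (1.0 + mu)        # resolvent of mu*B (closed form)
        y = np.clip(2.0 * x - z, 0.0, 1.0)   # resolvent of mu*A = projection onto the box
        z = z + t * (y - x)                  # t = 1 gives the classical DR step
    return (z + mu * c) / (1.0 + mu)         # recover x from the final z

c = np.array([2.0, -0.5, 0.3])
x_dr = relaxed_dr(c, t=1.0)    # classical DR
x_rel = relaxed_dr(c, t=1.5)   # over-relaxed Krasnosel'skii-Mann step
```

For this toy problem the unique solution is the projection of `c` onto the box, so both runs should approach `np.clip(c, 0, 1)`.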

In this article, we aim at deriving the best possible estimates of the directed factors. To this end, we exploit the

firm non-expansiveness (Lemma 1 of [

3]) of the DR operator for the first time. As a result, our new upper bounds on the directed factors are much larger than those in [

2,

4].

Clearly, the new technique corresponds to firm non-expansiveness of the DR operator, whereas the traditional one corresponds to its non-expansiveness.

For an application to deep learning, we model rare feature selection [

5,

6] (see (

33) below) into an appropriate monotone inclusion (

36) below, which is a special case of (

1). As shown in

Section 6, both the DR splitting method and its directed version can be used to solve this inclusion, without worrying about the issue of choosing multiple proximal parameters involved in those splitting methods [

7]. Numerical results indicate that they can solve (

36) to an accuracy similar to that of the algorithms in [

7] in about 7 or 8 seconds for given stopping criteria, but they avoid the time-consuming (tens of minutes or more) trial-and-error process of determining the parameters. In this sense, the directed DR splitting method is user-friendly and efficient in solving this feature selection model.

The rest of this article is organized as follows. In

Section 2, we review the definitions of firmly non-expansive operators and maximal monotone operators, and give some related properties. In

Section 3, we formally state the aforementioned directed DR splitting method, with new and much weaker conditions on the directed factors; see (

7) and (

8) below. In

Section 4, we prove weak convergence of the directed DR splitting method. In

Section 5, we compare the new conditions on the directed factors with existing ones, and we numerically demonstrate that the upper bounds derived from the former are much larger. In

Section 6, we model rare feature selection in deep learning in a new way, and we conduct numerical experiments to confirm the efficiency of our directed DR splitting method in solving the corresponding test problems. Finally, in

Section 7, we conclude this article.

2. Preliminaries

In this section, we first give some basic definitions and then provide some auxiliary results for later use.

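Definition 1 ([3,8]). Let $C \subseteq \mathcal{H}$ be a nonempty subset. An operator $T: C \to \mathcal{H}$ is called non-expansive if and only if the following is met:

$$\|T(x) - T(y)\| \le \|x - y\| \quad \text{for all } x, y \in C;$$

firmly non-expansive if and only if the following is met:

$$\|T(x) - T(y)\|^2 \le \langle x - y, T(x) - T(y) \rangle \quad \text{for all } x, y \in C.$$

Definition 2 ([3,8]). Let $T: \mathcal{H} \rightrightarrows \mathcal{H}$ be an operator. It is called monotone iff

$$\langle x - x', s - s' \rangle \ge 0 \quad \text{for all } s \in T(x),\ s' \in T(x');$$

maximal monotone iff it is monotone and, for given $x \in \mathcal{H}$ and $s \in \mathcal{H}$, the following implication relation holds:

$$\langle x - x', s - s' \rangle \ge 0 \ \text{ for all } x' \in \operatorname{dom} T,\ s' \in T(x') \quad \Longrightarrow \quad s \in T(x).$$

For any given operator $T$, its effective domain is defined by the following: $\operatorname{dom} T := \{x \in \mathcal{H} : T(x) \neq \emptyset\}$.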
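The gap between non-expansiveness and firm non-expansiveness can be illustrated numerically. The sketch below is only a sampled check on random pairs in R^3, not a proof; the two example maps (the reflection x ↦ -x and the projection onto the unit ball) and the helper names are assumptions introduced here for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

def reflect(x):
    # Non-expansive (an isometry) but not firmly non-expansive.
    return -x

def project_unit_ball(x):
    # Projection onto the closed unit ball; firmly non-expansive.
    n = np.linalg.norm(x)
    return x if n <= 1.0 else x / n

def is_nonexpansive(T, pairs, tol=1e-12):
    return all(np.linalg.norm(T(x) - T(y)) <= np.linalg.norm(x - y) + tol
               for x, y in pairs)

def is_firmly_nonexpansive(T, pairs, tol=1e-12):
    return all(np.linalg.norm(T(x) - T(y)) ** 2 <= np.dot(x - y, T(x) - T(y)) + tol
               for x, y in pairs)

pairs = [(2.0 * rng.normal(size=3), 2.0 * rng.normal(size=3)) for _ in range(1000)]

reflect_ne = is_nonexpansive(reflect, pairs)
reflect_fne = is_firmly_nonexpansive(reflect, pairs)
proj_ne = is_nonexpansive(project_unit_ball, pairs)
proj_fne = is_firmly_nonexpansive(project_unit_ball, pairs)
```

On these samples the reflection passes the first test and fails the second, while the projection passes both.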

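Assume that $A: \mathcal{H} \rightrightarrows \mathcal{H}$ is maximal monotone. A fundamental property is that, as proved by Minty [9], for any given positive number $\mu$ and $x \in \mathcal{H}$, there exists a unique $y \in \mathcal{H}$ such that $x \in y + \mu A(y)$ or $x \in (I + \mu A)(y)$, where $I$ is the identity operator, i.e., $I(x) = x$ for all $x \in \mathcal{H}$. Thus, $(I + \mu A)^{-1}$ is well-defined and is called the resolvent of $A$.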
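As a small illustration of the resolvent, the sketch below takes a linear monotone operator A(y) = My on R^2, for which evaluating the resolvent amounts to one linear solve. The matrix `M`, the value of `mu`, and the function name `resolvent` are assumptions made for this example; a general maximal monotone operator has no such closed form.

```python
import numpy as np

# Toy linear operator A(y) = M y. Its symmetric part diag(1, 0) is positive
# semidefinite, so A is monotone (and maximal, being linear and continuous).
M = np.array([[1.0, 2.0],
              [-2.0, 0.0]])

def resolvent(x, mu):
    """Return the unique y with x = y + mu * M y, i.e. y = (I + mu*A)^{-1}(x)."""
    return np.linalg.solve(np.eye(2) + mu * M, x)

mu = 0.7
x = np.array([1.5, -0.4])
y = resolvent(x, mu)
residual = np.linalg.norm(y + mu * (M @ y) - x)   # should vanish up to rounding
```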

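Definition 3. Let $f: \mathcal{H} \to \mathbb{R} \cup \{+\infty\}$ be a convex function. Then for any given $x \in \mathcal{H}$, the sub-differential of $f$ at $x$ is defined by the following:

$$\partial f(x) := \{ s \in \mathcal{H} : f(y) - f(x) \ge \langle s, y - x \rangle \ \text{for all } y \in \mathcal{H} \}.$$

Each $s$ is called a sub-gradient of $f$ at $x$. Moreover, if $f$ is further continuously differentiable, then $\partial f(x) = \{\nabla f(x)\}$, where $\nabla f(x)$ is the gradient of $f$ at $x$.

As is well known, the sub-differential of any closed proper convex function in an infinite-dimensional Hilbert space is maximal monotone as well. An important example is the sub-differential of the indicator function defined by

$$\delta_C(x) := \begin{cases} 0, & x \in C, \\ +\infty, & x \notin C, \end{cases}$$

where $C$ is some nonempty closed convex set in $\mathcal{H}$. Moreover, for any given positive number $\mu$, we have

$$(I + \mu\, \partial \delta_C)^{-1} = P_C,$$

where $P_C$ is the usual projection onto $C$.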
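The identification of this resolvent with a projection can be checked on a simple set. The sketch below assumes C is the box [-1, 1]^5 (a choice made here, not in the article) and tests the normal-cone characterization: p is the projection of x onto C exactly when ⟨x - p, c - p⟩ ≤ 0 for every c in C. The test is sampled, so it illustrates rather than proves the property.

```python
import numpy as np

rng = np.random.default_rng(1)
lo, hi = -1.0, 1.0                     # the box C = [lo, hi]^5 (illustrative)

def project_box(x):
    return np.clip(x, lo, hi)

x = 3.0 * rng.normal(size=5)
p = project_box(x)

# x - p must lie in the normal cone of C at p, for any mu > 0.
samples = rng.uniform(lo, hi, size=(1000, 5))
max_inner = max(np.dot(x - p, c - p) for c in samples)
```

Because the normal cone is a cone, the same point `p` is returned for every positive `mu`, which is why the parameter does not appear in `project_box`.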

Lemma 1 (Theorem 2 of [8]). Let μ be any positive number. An operator A on $\mathcal{H}$ is monotone if and only if its resolvent $(I + \mu A)^{-1}$ is firmly non-expansive.

Below, we give a lemma that characterizes the relation between the problem’s zero points and the fixed points of N.

Lemma 2 ([3]). If solves (1), then there exists such that the following holds:

Lemma 3. Assume that both and are maximal monotone operators. Then the resulting eigenoperator, given as follows, must be maximal monotone:

Proof. Note that

A and

B are maximal monotone. Thus, the first operator on the right-hand side is maximal monotone. Meanwhile, the linearity of the identity operator means that the second is also maximal monotone [

9]. Maximality of

T follows from [

10]. □

Lemma 4 ([

11,

12]).

Consider any maximal monotone operator . Assume that the sequence in converges weakly to w, and the sequence on domT converges strongly to s. If for any k, then the relation must hold.

3. Directed DR Splitting

In this section, we describe in detail the directed DR splitting method, i.e., Algorithm 1 just below, and make assumptions on

and the directed factors.

Algorithm 1 Directed DR splitting method

1: Choose . Choose , and . Set .

2: Choose and satisfying (7) and (8). Compute

Set

In this article, to guarantee its weak convergence, we make the following assumptions.

Assumption 1. Assume that and in the directed DR splitting method satisfy the following:

where ε is a prescribed sufficiently small positive number, and

where σ is chosen in in advance.

In particular, in the case of

and

, (

7) and (

8) can be replaced by the following:

In contrast, other existing upper bounds in

Section 5 are around

.

As to

in (

6), we may evaluate it in the following way. First solve the following:

to obtain

, then solve the following:

to obtain

. Thus, we write the following:

The directed DR splitting method above reminds us of the Krasnosel’skiĭ–Mann iteration, which is used for finding a fixed point of a non-expansive operator

N (whose non-expansiveness is implied by firm non-expansiveness); see [

2,

4,

13] for recent discussions and the references cited therein.
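The two-step evaluation of the DR operator and its firm non-expansiveness can be sketched numerically. The code below assumes the textbook form N(z) = z + J_A(2 J_B(z) - z) - J_B(z), where J_A and J_B denote the resolvents of μA and μB; the article's own formula may be arranged differently. The toy operators (a box normal cone for A and a linear monotone map for B), `mu`, and all function names are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(2)
M = np.array([[1.0, 2.0],
              [-2.0, 0.0]])            # B(x) = M x is monotone (PSD symmetric part)
mu = 0.8

def res_B(z):
    # First solve: resolvent of mu*B via a linear system.
    return np.linalg.solve(np.eye(2) + mu * M, z)

def res_A(z):
    # Second solve: resolvent of mu*A, here the projection onto [-1, 1]^2.
    return np.clip(z, -1.0, 1.0)

def dr_operator(z):
    x = res_B(z)
    y = res_A(2.0 * x - z)
    return z + y - x

def firm_gap(z, w):
    # Non-negative whenever <z - w, Nz - Nw> >= ||Nz - Nw||^2.
    d = dr_operator(z) - dr_operator(w)
    return np.dot(z - w, d) - np.dot(d, d)

min_gap = min(firm_gap(3.0 * rng.normal(size=2), 3.0 * rng.normal(size=2))
              for _ in range(2000))
```

A non-negative `min_gap` (up to rounding) on the sampled pairs is consistent with firm non-expansiveness, which is stronger than the plain non-expansiveness used in the Krasnosel’skiĭ–Mann analysis.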

4. Weak Convergence

In this section, we prove weak convergence of Algorithm 1 in the Hilbert space.

For brevity, we may write as throughout this section.

Lemma 5. Assume that . If , then the following holds:

Notice that this lemma was given in the first author’s manuscript, further mentioned in Remark 1. Its proof is elementary and based on the following:

To simplify proof of weak convergence of Algorithm 1, we first give the following result.

Theorem 1. If Assumption 1 holds, then the following holds:

Proof. It follows from (

6) that the following is valid:

On the other hand, Lemma 2 implies that there must exist

such that the following is written:

Thus, we have the following:

So, we can obtain the following:

where the inequality follows from the firm non-expansiveness (Lemma 1 of [

3]) of the DR operator. As to the

term, it is direct to obtain the following:

Combining this with (

13) and the following:

yields the following:

Below, we bound the inner product

in (

14). In view of (

10)–(

12), we have

Thus, we obtain the following:

where the inequality follows from (Lemma 4 of [

14]). Combining this with the following:

yields the following:

Next, we bound the inner product

in (

14). Since

and

we have the following:

In summary, using the last equality and (

17) to bound (

14) yields the desired result. □

Now we state and prove weak convergence of the sequence generated by Algorithm 1.

Theorem 2. Let be the sequence generated by Algorithm 1. If (7) and (8) hold, then the sequence weakly converges to a fixed point of N.

Proof. In view of Theorem 1 and (

7), we can obtain the following:

where

and the following is calculated:

Then we obtain the following:

Combining this with Lemma 5 and (

8) results in the following:

yields the following:

Meanwhile, by Lemma 2 and (

8) we obtain the following:

we can obtain the following:

Obviously, based on (

19)–(

21), we can conclude the following:

It follows from (

7), (

6) and (

23) that the following holds:

On the other hand, as proved in (

22),

is bounded in norm, thus there exists at least one weak cluster point

, i.e., written as follows:

Finally, since is continuous and monotone, it must be maximal monotone. In view of Lemma 4, .

Denote the following:

Then, it follows from (

18), (

22) and (

23) that

exists as well.

Below, we show that

weakly converges to

. Let

and

be two weak cluster points of

. Then, repeating the arguments above yields that

and

are solutions. Correspondingly, we set the following:

Consider the following:

where the inequality follows from (

8), which indicates the following:

Meanwhile, we obtain the following:

Combining this with (

23) and taking the limit along

, where

such that

along

weakly converges to

, we obtain the following:

Similarly, we also obtain the following:

Adding these two inequalities yields the following:

and

converges weakly. □

Finally, with the help of the theorem above, we further state and prove weak convergence of the main sequence .

Theorem 3. Let be the sequence generated by Algorithm 1. If (7) and (8) hold, then the main sequence weakly converges to a solution of (1).

Proof. In view of (

10)–(

12), we have the following:

Thus, we obtain the following:

Note that (

22) and the following:

imply that

is bounded in norm as well.

On the other hand, by the definition of

T, we have the following:

where the relation ∋ follows from (

27) and (

28).

Since

is bounded in norm, so is

, where

corresponds to the one in (

25). Thus, there exists at least one weak cluster point, written as follows:

Combining this with (

29), Lemma 3 and Lemma 4 yields the following:

This shows the following:

Finally, we prove uniqueness of the weak cluster point

. Let

and

be two weak cluster points of

. In view of (

27), we have the following:

Since

A is monotone, we further have the following:

as proved in [

14]. Combining this with (

26) yields

. □

Remark 1. In the proof of Theorem 1, there are two crucial parts. One is that, by fully exploiting firm non-expansiveness of the DR operator, we obtain the inequality (13). The other is an appropriate application of (Lemma 4 of [14]) in deriving the inequality (15). In the proof of Theorem 2, except for uniqueness, we follow point by point the analytical techniques recently developed in the first author’s manuscript entitled “On an accelerated Krasnosel’skiĭ-Mann iteration”. This manuscript and a revised version entitled “Directed Krasnosel’skiĭ-Mann iteration” can be found in a single pdf file via https://ydong2024.github.io/downloads/directedKM.pdf (accessed on 22 August 2025). Almost all the main results in the three theorems above are taken, some with slight revisions, from the second author’s thesis, completed in April 2024. The exceptions are the two proofs of uniqueness of the weak cluster point in Theorem 2 and Theorem 3, which are absent from this thesis. Later on, the first author completed a detailed proof in the revised version just mentioned. Furthermore, the proof of uniqueness of the weak cluster point in Theorem 3 is due to the first author.

5. Comparisons

In this section, we numerically demonstrate the assumption (

8) to some extent. Meanwhile, we compare (

8) with other recently proposed ones.

For brevity, we simply set

. Then (

8) reduces to the following:

Be aware that, in contrast to (

8), we no longer introduce the extra

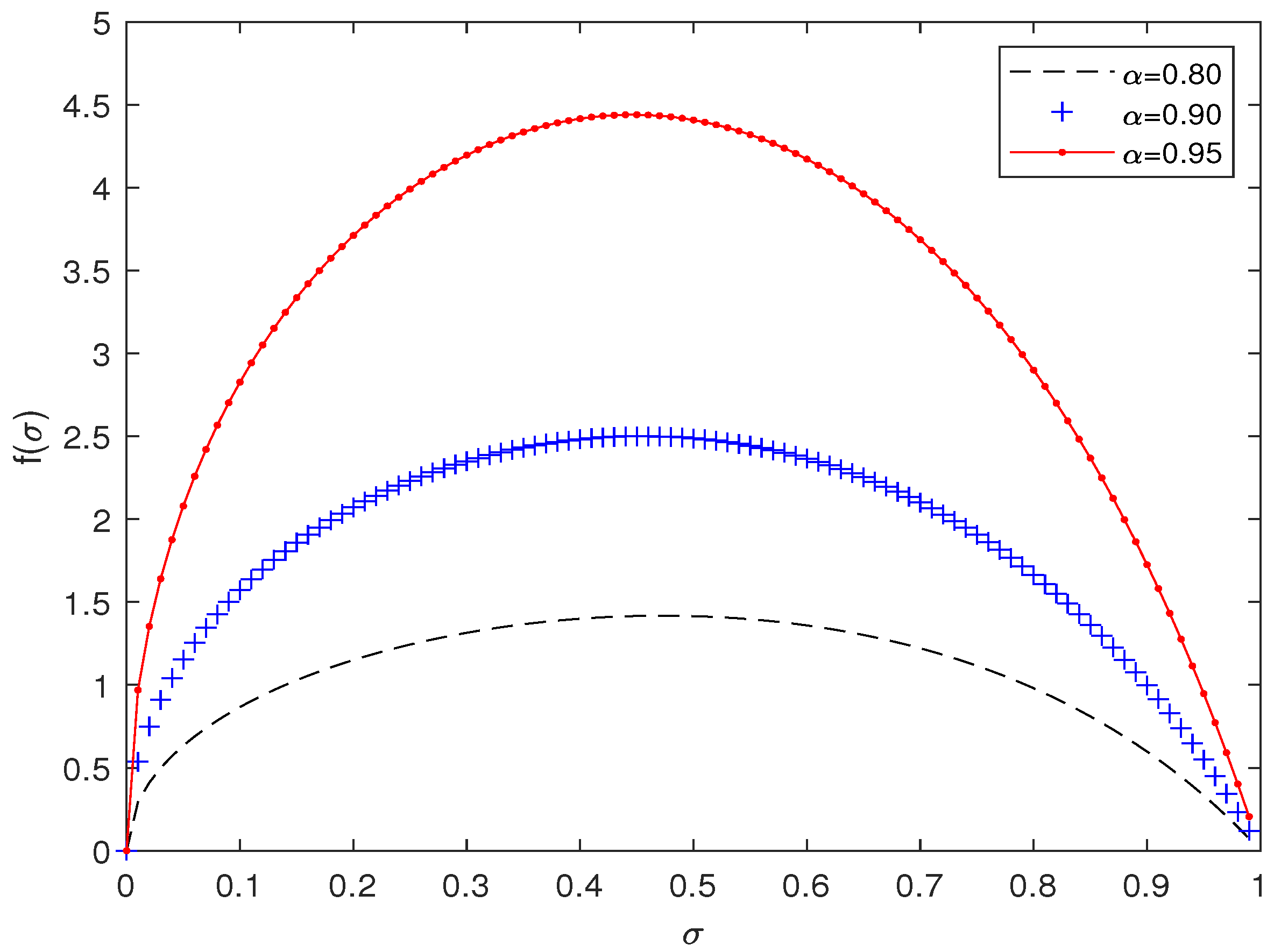

above. First, we present the function of

and

under different values of

, as shown in

Figure 1:

Numerical demonstration of (

30) is given in

Table 1, where

stands for a slightly lower approximation of the maximum of

f in (

30) with respect to

,

stands for the directed factor.

Next, we consider those assumptions made in the first author’s manuscript mentioned in Remark 1. We set

. Thus, they become the following:

Numerical demonstration of (

31) is given in

Table 2.

Finally, we consider the assumptions made in [

2] very recently. We set the following:

in our notation, the algorithm therein can also be written in the form (

6), and the corresponding assumptions become the following:

Numerical demonstration of (

32) is given in

Table 3.

From

Table 1,

Table 2 and

Table 3, we can observe that our computed values of

are consistently larger than the other two for each sampling point. In particular, in the case of

, our new upper bound of

is

, in sharp contrast to

in

Table 2 and

in

Table 3, respectively.

6. Modeling and Numerical Experiments

In this section, we modeled rare feature selection in deep learning in a new way and confirmed the efficiency of Algorithm 1 in solving the corresponding test problems. In our presentation, rather than striving for maximal test problems, we tried to make the basic ideas and techniques as clear as possible.

We performed all numerical experiments via Python 3.9.2.

We compared Algorithm 1 with the classical DR splitting method, selected for their similarities in features, applicability, and implementation effort.

Our test problem is motivated by “rare feature selection” formulated in Johnstone and Eckstein (Section 6.3 of [

6]) (TripAdvisor data are available at

https://github.com/yanxht/TripAdvisorData, accessed on 1 May 2024), which can be stated as follows:

where

X is the

n-by-

d data matrix,

H is the

d-by-

r coefficient matrix,

is the target vector,

is the all-one vector, and

is an offset and

. In [

5,

6], the authors gave a detailed description of the relationship of

(see (Section 6.3 of [

6]) and (Section 3 of [

5]) for more details). The

norm on

enforces sparsity of

, which in turn fuses together coefficients associated with similar features. The

norm on

additionally enforces sparsity on these coefficients, which is also desirable.

As described in [

5,

6], we applied this model on the TripAdvisor hotel-review dataset. The response variable

y was the overall rating of the hotel, in the set

. The features were the counts of certain adjectives in the review. Many adjectives were very rare, with 95% of the adjectives appearing in less than 5% of the reviews. There were 7573 adjectives from 169,987 reviews, and the auxiliary similarity tree

had 15,145 nodes. The 169,987 × 7573 design matrix

X and the 7573 × 15,145 matrix

H arising from the similarity tree

were both sparse, having 0.32% and 0.15% nonzero entries, respectively.
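The reported sizes and densities translate into approximate nonzero counts, which the short computation below works out. The percentages are rounded in the text, so the counts are estimates. The comparison with 2d - 1 assumes, purely as an observation, that the similarity tree is a full binary tree whose leaves are the adjectives; the article does not state this.

```python
n_reviews = 169_987        # rows of X
n_adjectives = 7_573       # columns of X, rows of H
n_tree_nodes = 15_145      # columns of H

density_X = 0.0032         # 0.32% nonzero entries (rounded in the text)
density_H = 0.0015         # 0.15% nonzero entries (rounded in the text)

nnz_X = round(density_X * n_reviews * n_adjectives)
nnz_H = round(density_H * n_adjectives * n_tree_nodes)

# A full binary tree with d leaves has exactly 2d - 1 nodes.
full_binary_nodes = 2 * n_adjectives - 1
```

This gives roughly 4.1 million stored entries for X and roughly 0.17 million for H, and the node count matches 2d - 1, which is consistent with (but does not prove) the full-binary-tree reading.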

By replacing the least squares term with the

norm term, we obtained:

(note that

here corresponds to

in (Equation (7.4) of [

7]) when mu there was taken to be

) and the corresponding optimality condition is written as follows:

where

In this article, we rewrote (

34) as follows:

Denoted as follows:

and the corresponding optimality condition is as follows:

Thus, we obtain the following:

or

where

Note that the classical DR splitting method reads as follows:

So, we obtained the intermediate iterates, written as follows:

Moreover, the resolvent of the

norm term can be computed by the following:
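Assuming the norm in question is the ℓ1 norm, its resolvent is the well-known componentwise soft-thresholding map. The sketch below shows that standard formula; the function name `soft_threshold` and the test vector are illustrative and not taken from the article.

```python
import numpy as np

def soft_threshold(v, mu):
    """Resolvent of mu * d||.||_1: shrink each entry of v toward zero by mu."""
    return np.sign(v) * np.maximum(np.abs(v) - mu, 0.0)

v = np.array([3.0, -0.2, 0.5, -2.0])
mu = 0.5
p = soft_threshold(v, mu)

# Optimality check: (v - p) / mu must be a sub-gradient of the l1 norm at p,
# i.e. equal to sign(p_i) where p_i != 0 and within [-1, 1] where p_i == 0.
g = (v - p) / mu
```

Entries with magnitude at most `mu` are set to zero, which is the mechanism by which the ℓ1 terms promote sparsity.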

As for Algorithm 1, we first used the same way of generating intermediate iterates, then we considered the following:

Equivalently, we obtain the following:

Thus, we first solve the following:

to obtain

, then the following:

Finally, we compute the following:

Note that we ran only a single iteration of the conjugate gradient method to solve subproblem (

40).
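A single conjugate gradient iteration from a warm start is cheap because it needs only one extra matrix-vector product. The sketch below shows that first step for a generic symmetric positive definite system; the matrix, right-hand side, and the helper name `cg_one_step` are hypothetical and do not reproduce the article's subproblem.

```python
import numpy as np

def cg_one_step(A, b, x0):
    """First conjugate gradient iteration for A x = b with A symmetric
    positive definite, started from x0 (the search direction is the residual)."""
    r = b - A @ x0
    rr = r @ r
    if rr == 0.0:
        return x0                      # x0 already solves the system
    alpha = rr / (r @ (A @ r))         # exact line search along r
    return x0 + alpha * r

def energy(A, b, x):
    # Quadratic whose minimizer solves A x = b.
    return 0.5 * x @ (A @ x) - b @ x

A = np.array([[4.0, 1.0],
              [1.0, 3.0]])
b = np.array([1.0, 2.0])
x0 = np.zeros(2)
x1 = cg_one_step(A, b, x0)
```

One step does not solve the system in general, but it never increases the quadratic energy, so an inexact inner solve of this kind still makes progress when the outer iteration supplies a good starting point.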

DR: The classical DR splitting method.

dDR

,

: Algorithm 1 with an upper bound given in [

2].

dDR , : Algorithm 1 with an upper bound given in this article.

For the starting points, we chose

For the parameter , we considered the following two cases.

In this case, we set

(corresponds to

in [

7]), and we took

, where

. We used the stopping criterion, written as follows:

In this case, we set

(corresponds to

in [

7]), and we took

, where

49,233. We used the following stopping criterion:

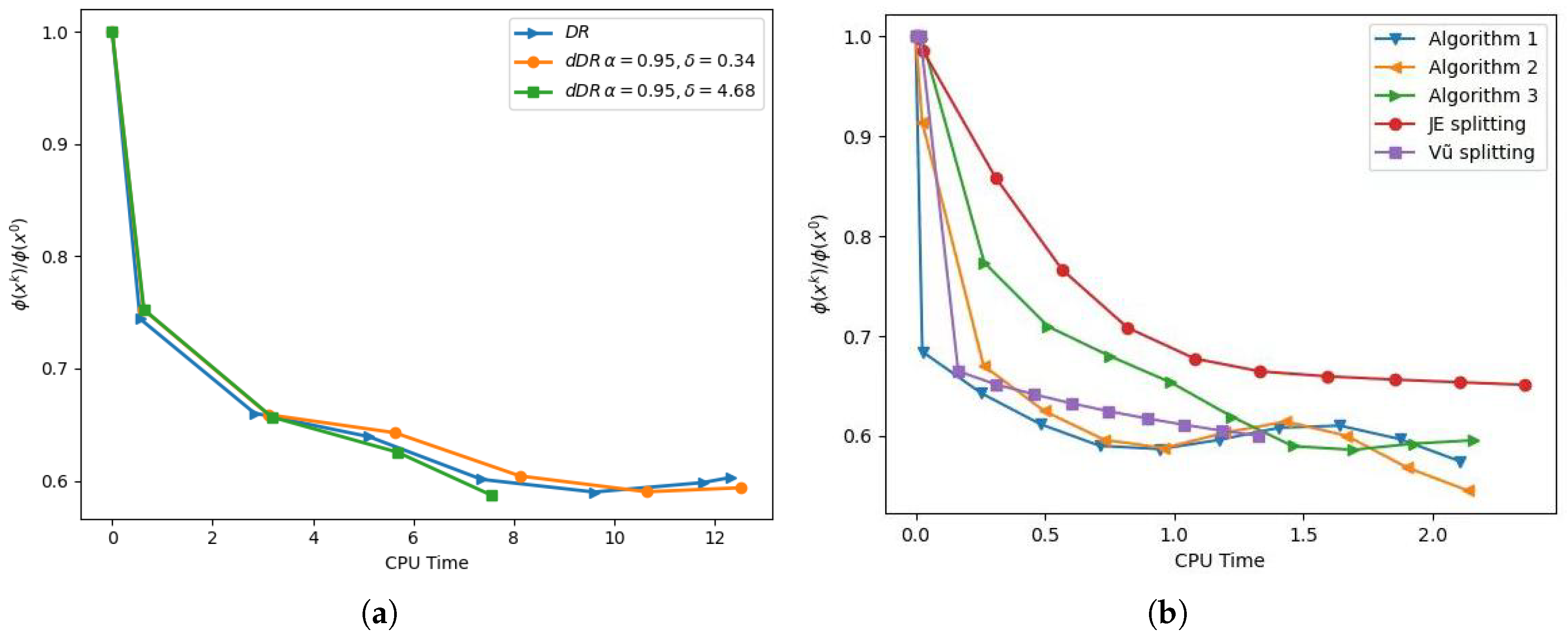

The corresponding numerical results were plotted in the figures (left) in

Figure 2 and

Figure 3, where “CPU Time” stands for the elapsed time using tic and toc in seconds. Notice that the figures (right) were taken from [

7], in which “Algorithm 1”, “Algorithm 2” and “Algorithm 3” are those algorithms recently proposed in [

7], “JE splitting” is a splitting method of (Algorithm 1 of [

6]), and “Vũ splitting” is a splitting method proposed in [

15].

From

Figure 2 and

Figure 3, it can be seen that dDR with

,

was faster than the other two.

Be aware that the ratio

is actually identical to the counterpart given in [

7] provided that

,

are the same. So, comparisons within those numerical results in [

7] (i.e., the figures (right) in

Figure 2 and

Figure 3) are meaningful. At first sight, those algorithms [

7] outperformed dDR with

,

. However, we would like to stress that the latter solved (

36) to an accuracy similar to that of the algorithms in [

7] in about 7 or 8 s, compared to the time-consuming (tens of minutes or more) trial and error process of determining those proximal parameters, rooted in those algorithms [

7]. In this sense, the directed DR splitting method is user-friendly and efficient in solving this feature selection model.