1. Introduction

In materials science, geometric optimization is important in advancing the development of materials. Researchers can use computational simulations to explore a broad spectrum of geometric configurations and material properties with remarkable efficiency [1]. The primary goal of this process is to identify the most stable and energetically favorable configuration of a molecular structure [2]. Geometric optimization involves systematically adjusting atomic positions to minimize the system’s total energy, which is critical for conducting simulations that explain the fundamental properties and behaviors of materials.

Carbon nanotubes (CNTs) have emerged as a cornerstone in nanotechnology research due to their extraordinary physical and chemical properties. Geometric optimization of CNTs is particularly useful for understanding and enhancing their potential applications [3,4,5,6,7]. One of the most relevant applications is hydrogen storage, which has direct implications for developing advanced energy storage technologies [8,9,10,11,12]. In this field, integrating computational simulation tools has proven to be a powerful strategy for innovation in the design and study of nanostructured materials [13,14,15].

However, the geometric optimization of CNTs is computationally intensive, with the processing time increasing substantially as the number of atoms in the system grows [16]. This computational challenge arises from the necessity to locate the global minimum energy state amidst numerous local minima [17]. For CNTs, this complexity can impede the feasibility of large-scale simulations, especially in scenarios involving intricate molecular structures. Thus, exploring innovative methods to accelerate geometric optimization without compromising accuracy is imperative.

To address these challenges, this study investigates the potential of deep neural networks (DNNs) to reduce the computational cost associated with the geometric optimization of CNTs. The research focuses on a hybrid approach in which DNNs generate preoptimized (suboptimal) configurations of CNTs, which serve as starting points for further optimization using the CASTEP module of BIOVIA Materials Studio® (version 2024) [18].
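The benefit of a better starting geometry can be illustrated with a deliberately simple toy problem. The sketch below is not the CASTEP procedure: it assumes a single Lennard-Jones pair in reduced units and a fixed-step steepest-descent minimizer (the function names, step size, tolerance, and starting distances are all illustrative assumptions). It only shows that a starting point closer to the energy minimum needs fewer iterations to converge.

```python
def lj_gradient(r, epsilon=1.0, sigma=1.0):
    """dE/dr for the Lennard-Jones pair energy E(r) = 4*eps*((s/r)**12 - (s/r)**6)."""
    return 4.0 * epsilon * (-12.0 * sigma**12 / r**13 + 6.0 * sigma**6 / r**7)


def steepest_descent(r0, step=0.01, tol=1e-6, max_iter=10_000):
    """Relax the pair distance r until |dE/dr| < tol; return (r, iterations)."""
    r = r0
    for iteration in range(max_iter):
        grad = lj_gradient(r)
        if abs(grad) < tol:
            return r, iteration
        r -= step * grad  # move downhill along the energy gradient
    return r, max_iter


# Hypothetical starting points: a "raw" guess and a "preoptimized" guess.
r_far, iters_far = steepest_descent(1.50)
r_near, iters_near = steepest_descent(1.15)
print(f"far start:  r = {r_far:.5f}, iterations = {iters_far}")
print(f"near start: r = {r_near:.5f}, iterations = {iters_near}")
```

Both runs end at the same minimum (r = 2^(1/6) in reduced units), but the run that starts nearer to it stops earlier, which is the effect the hybrid approach aims to exploit at a much larger scale.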

In this work, BIOVIA Materials Studio® was used to construct and optimize the geometry of CNTs. This software is widely employed in materials science for its powerful molecular modeling tools and its support for universal force fields, including Lennard-Jones potentials [19]. The licensed availability of this tool at our institution facilitated its use. The generated CNT structures were used for data extraction and for validating our artificial neural network (ANN) predictions.

In this sense, the methodology proposed in this work comprises three phases:

1. Initial CNT configurations are generated using BIOVIA Materials Studio®, which are then used to train DNN models.

2. Suboptimal configurations of CNTs are produced using the trained DNNs. These configurations are subsequently refined through optimization in BIOVIA Materials Studio®.

3. The effectiveness of using DNNs as a precursor step to optimization in BIOVIA Materials Studio® is evaluated.

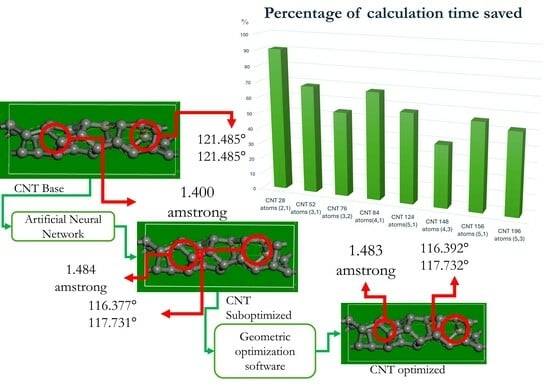

The proposed approach contributes significantly to the geometric optimization of complex systems by introducing deep neural networks as a preprocessing step, thereby reducing the computational time required by BIOVIA Materials Studio®. The reported time savings, ranging from 39.68% to 90.62%, are based on benchmark experiments carried out in this study, comparing conventional optimization procedures with the ANN-assisted approach across various CNT configurations.

Thus, this study demonstrates the viability of integrating artificial intelligence into materials science workflows, providing a more efficient exploration of CNT properties. The research aims to accelerate innovation in designing and applying advanced materials by addressing computational bottlenecks using deep learning tools.

2. Related Works

In recent years, advancements in artificial intelligence, particularly in artificial neural networks (ANNs), have revolutionized various research domains, including materials science [20,21]. In this field, ANNs have contributed to the development of new materials such as carbon nanotubes, which are frequently studied through computational approaches to uncover their potential and utility in diverse applications. This section reviews notable studies that apply ANNs to improve and discover new materials.

Vivanco et al. [22] examined machine learning techniques for modeling carbon nanotubes, emphasizing the use of ANNs, support vector machines (SVMs), and random forests to analyze these nanostructures. Valentina et al. [23] utilized multilayer perceptrons (MLPs) and one-dimensional convolutional neural networks (1D CNNs) to predict stress–strain curves in CNTs, achieving high accuracy in their predictions.

Similarly, Fakhrabadi et al. [24] applied an MLP neural network to predict fundamental vibrational frequencies in CNTs, while Kaushal et al. [25] developed an ANN-based model to forecast the yield and diameter of single-walled carbon nanotubes (SWCNTs) with over 90% accuracy. Akbari et al. [26] explored the impact of gas interactions on the conductivity of CNTs by employing ANNs and SVMs to model current–voltage (I-V) characteristics, finding that SVMs delivered superior predictions.

Marko [27] developed an ANN model to predict mechanical properties based on data derived from molecular dynamics simulations, showcasing the precision of deep learning techniques. Anderson et al. [28] integrated molecular simulations with ANN methods to analyze hydrogen storage in porous crystals, enabling rapid preassessment of storage capacities.

In predicting nanomaterial properties, Salah et al. [29] utilized an MLP system to achieve 99.7997% accuracy in forecasting electromagnetic absorption in polycarbonate and CNT films. Nguyen et al. [30] reported a significant enhancement in the thermal conductivity of hybrid nanofluids, emphasized their relevance in heat transfer applications, and applied neural networks to model them precisely.

Despite these advancements, the computational time required for developing carbon structures has received limited attention. A notable exception is the study by Aci and Avci [31], which employed feedforward neural networks (FFNN) and generalized regression neural networks (GRNN) for the geometric optimization of structures. Their approach achieved an 85% reduction in the number of iterations required for calculations, underscoring the efficiency of these techniques in computational optimization and highlighting their potential for similar applications.

The successful application of deep learning neural networks in predicting properties and optimizing materials demonstrates their potential to significantly reduce simulation times. These advancements open new opportunities for accelerating research and development in advanced materials and offer more efficient ways of exploring nanostructures such as CNTs and other innovative materials.

4. Experimental Design

The methodology of this study is divided into three phases to ensure a systematic analysis of the computational efficiency of the proposed ANN-based approach for CNT geometric optimization.

Phase I: The dataset comprising initial and optimized CNT molecules is generated. These structures are obtained using BIOVIA Materials Studio® (CASTEP module), which performs first-principles simulations to optimize the geometric properties of the CNTs.

Phase II: Suboptimized CNT structures are generated using selected ANN architectures. Subsequently, these suboptimized structures are used as the starting point for geometric optimization via the CASTEP module.

Phase III: The computational times required for the geometric optimization of CNTs are analyzed. Two approaches are evaluated: one using the structures directly optimized in the CASTEP module and the other using the suboptimized CNTs generated by the ANN as the initial input.

4.1. Phase I: Dataset Generation

The dataset for training the selected ANN models was generated by first constructing CNTs in BIOVIA Materials Studio®, where key parameters such as atomic type (carbon) and bond length (1.42 Å as the default value) were specified. These initial structures were then subjected to geometric optimization using the CASTEP module, with the electronic energy tolerance parameter set to eV per atom to ensure high precision in the computational results.

All CNT structures in this study were optimized using energy minimization protocols at 0 K, as implemented by default in BIOVIA Materials Studio®. This approach allows for the determination of equilibrium geometries without thermal noise.

While temperature effects are crucial in atomistic simulations involving molecular dynamics or thermal stability assessments, they were not considered here, as the objective was to evaluate the structural prediction accuracy of the ANN under idealized conditions. Moreover, since many CNT synthesis techniques aim for processes compatible with room temperature or mild conditions, the resulting geometries remain relevant for experimental applications [44].

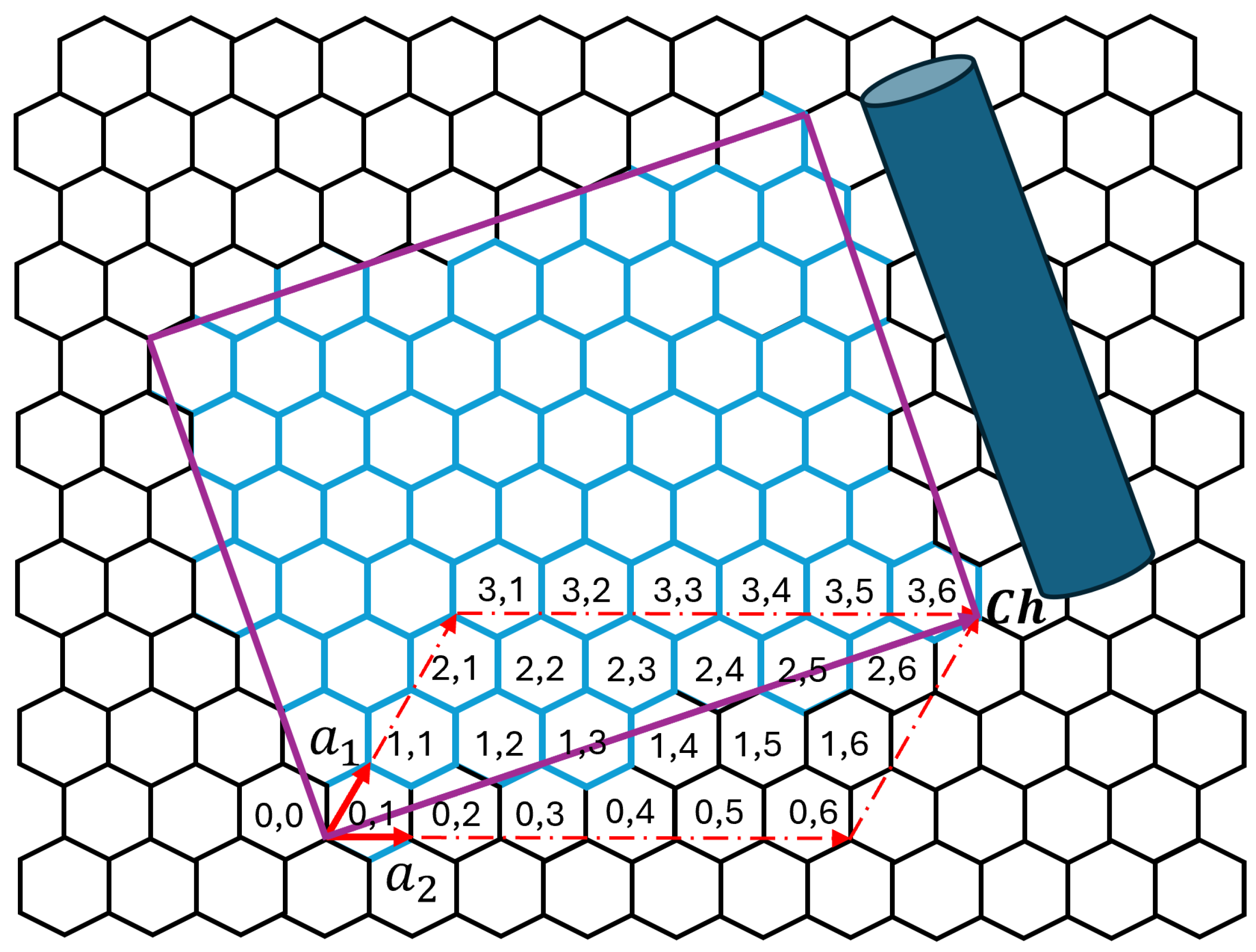

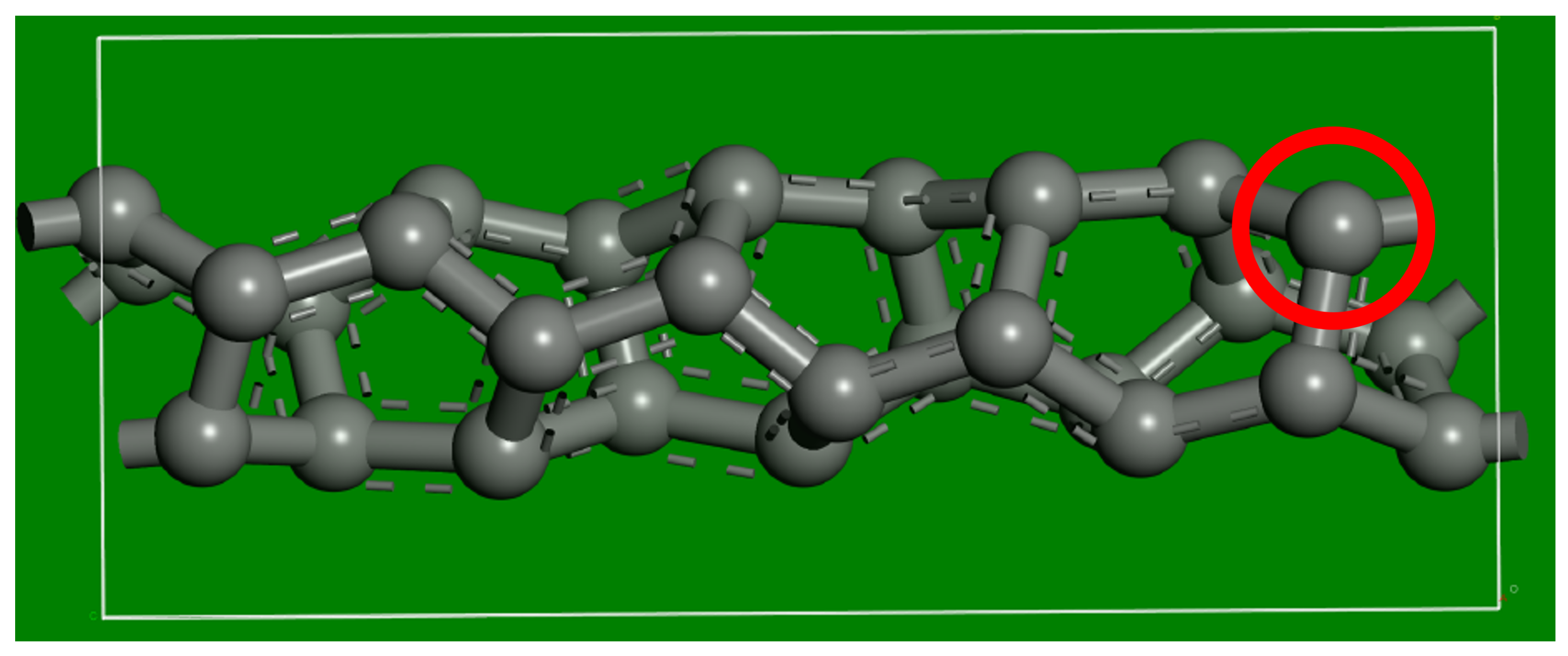

As a reference, Figure 2 illustrates a CNT with chirality $n = 2$ and $m = 1$. Chirality parameters define how the graphene sheet is rolled to form the nanotube. The specific CNT depicted contains 28 atoms, and its spatial coordinates (x, y, z) were used as inputs for the CASTEP module. After optimization, the corresponding optimized coordinates served as the output targets.

The number of atoms corresponds to the number of carbon atoms within the translational unit cell of the chiral CNT (2,1). This value results from the construction of the nanotube based on its chiral vector and the corresponding translational vector along the tube axis. Thus, the unit cell formed is the smallest repeating structure that fully captures the atomic arrangement of this chirality.

The number of atoms in the unit cell of a chiral carbon nanotube is given by Equation (15) [45]:

$$N = \frac{4\,(n^2 + nm + m^2)}{d_R} \tag{15}$$

where $d_R$ is the greatest common divisor of $(2n + m)$ and $(2m + n)$. Then, the (2,1) chirality yields $N = 4\,(4 + 2 + 1)/1 = 28$ atoms, corresponding to the minimal translational unit cell along the nanotube axis.
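The atom count can be checked with a few lines of code. The sketch below assumes the standard expression N = 4(n² + nm + m²)/d_R with d_R = gcd(2n + m, 2m + n); the function name is hypothetical and chosen for illustration only.

```python
from math import gcd


def atoms_per_unit_cell(n: int, m: int) -> int:
    """Number of carbon atoms in the translational unit cell of an (n, m) CNT."""
    d_r = gcd(2 * n + m, 2 * m + n)
    return 4 * (n * n + n * m + m * m) // d_r


for n, m in [(2, 1), (2, 2), (5, 3)]:
    print((n, m), atoms_per_unit_cell(n, m))
```

Under this expression the (2,1) tube has 28 atoms, and the (5,3) tube has 196 atoms, which is consistent with the largest structure discussed in the results.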

Table 1 details the spatial coordinates of the 28 atoms comprising the CNT. The first three columns give the initial coordinates (x, y, z) of the unoptimized CNT, while the last three columns list the optimized coordinates obtained after processing with the Materials Studio software. Subsequently, eight CNTs with different chiralities were constructed, resulting in structures with varying numbers of atoms, as shown in Table 2.

It is important to note that the carbon nanotubes analyzed in Table 2, especially those with chiral indices (2,1), (3,1), etc., correspond to structures with very small diameters (i.e., diameter < 0.5 nm). While valid from a theoretical standpoint, these geometries are known to present high curvature-induced strain, which can lead to reduced thermodynamic stability under ambient conditions.
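The diameter range can be estimated from the chiral indices alone. The following sketch assumes the ideal rolled-graphene relation d = a·sqrt(n² + nm + m²)/π with a = sqrt(3) times the C-C bond length, and uses the 1.42 Å default bond length; it ignores any relaxation of the geometry, and the function name is hypothetical.

```python
from math import pi, sqrt

BOND_LENGTH_ANGSTROM = 1.42  # default C-C bond length used when building the CNTs


def cnt_diameter_nm(n: int, m: int, bond_length: float = BOND_LENGTH_ANGSTROM) -> float:
    """Ideal (unrelaxed) diameter of an (n, m) nanotube in nanometres."""
    lattice_constant = sqrt(3.0) * bond_length  # graphene lattice constant a, in angstrom
    diameter_angstrom = lattice_constant * sqrt(n * n + n * m + m * m) / pi
    return diameter_angstrom / 10.0


for n, m in [(2, 1), (3, 1), (2, 2)]:
    print((n, m), round(cnt_diameter_nm(n, m), 3), "nm")
```

With these assumptions the (2,1) and (3,1) tubes come out well below 0.5 nm, in line with the statement above.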

Nonetheless, such ultra-small CNTs have been predicted and, in some cases, observed experimentally, particularly in confined environments or when synthesized using specialized techniques [45]. Using high-resolution transmission electron microscopy, Zhao et al. [46] found that a stable 3 Å carbon nanotube can be grown inside a multi-walled carbon nanotube (MWNT). Density functional calculations indicated that this 3 Å CNT is the armchair CNT (2,2). Although the present study does not directly address the observation by Zhao et al. [46], that observation provides evidence that very small carbon nanotubes can be stable and observable under certain conditions.

The study of low-chirality CNTs remains relevant for understanding the limits of CNT structural stability and for validating computational models of nanotube behavior. Moreover, other studies suggest that carbon nanotubes with low chiralities should be studied because they exhibit unique electronic, optical, and mechanical properties, have a strong preference in growth processes, and are crucial for high-purity separation and specific applications [34,47,48].

The datasets created under the conditions specified in Table 1 and Table 2 are grouped into three categories, as follows:

CNT-BCO: Contains both the initial (random) CNT data and the CNT data optimized by the CASTEP module of BIOVIA Materials Studio®.

CNT-BCO2: Includes the initial CNTs and those optimized twice using the CASTEP module to augment the data volume.

CNT-BCO-ALL: A comprehensive dataset combining the first two datasets.

The three categories were generated following the process in Table 3. First, we used the base CNT and the BIOVIA Materials Studio® software to optimize the atom coordinates, producing an optimized CNT dataset (CNT-BCO).

Afterward, we processed the base CNT in BIOVIA Materials Studio® twice, thus creating a new augmented dataset (CNT-BCO2). CNT-BCO-ALL combines the datasets from the two previous stages.

These datasets were the foundation for ANN training, enabling the models to learn from the initial and optimized CNT geometries. By incorporating diverse examples, the datasets ensured the robustness of the ANN predictions, ultimately enhancing the geometric optimization process of CNTs.

4.2. Phase II: Construction of Models for Generating Suboptimized CNTs

To generate suboptimized CNTs, the three ANN architectures explained in Section 3.2 were employed: a Multilayer Perceptron (MLP), a Bidirectional Long Short-Term Memory (BiLSTM) network, and a 1D Convolutional Neural Network (1D-CNN). These architectures were configured with the hyperparameters detailed in Table 4.

The datasets, previously presented in Table 3, were split into three subsets to ensure proper training, evaluation, and validation of the models: (a) 60% for training, used to adjust the model’s parameters during the learning phase, (b) 30% for testing, utilized to evaluate the model’s performance and prevent over-fitting, and (c) 10% for validation, applied during training to monitor generalization and fine-tune hyperparameters.
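A 60/30/10 split of this kind can be sketched with the standard library alone. The helper below is an illustration, not the code used in the study: the function name, the fixed seed, and the placeholder coordinate pairs are assumptions.

```python
import random


def split_dataset(samples, train=0.6, test=0.3, seed=0):
    """Shuffle and split samples into train/test/validation subsets (60/30/10 by default)."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_train = int(round(train * len(shuffled)))
    n_test = int(round(test * len(shuffled)))
    return (
        shuffled[:n_train],
        shuffled[n_train:n_train + n_test],
        shuffled[n_train + n_test:],  # the remainder is the validation subset
    )


# Placeholder (input, target) coordinate pairs; real samples would come from the CNT datasets.
pairs = [((float(i), 0.0, 0.0), (float(i) + 0.1, 0.0, 0.0)) for i in range(100)]
train_set, test_set, val_set = split_dataset(pairs)
print(len(train_set), len(test_set), len(val_set))
```

Shuffling before slicing keeps the three subsets disjoint while avoiding any ordering bias from how the samples were generated.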

Each ANN was trained using a supervised learning approach. The Early Stopping criterion was implemented to optimize computational efficiency and ensure convergence. This mechanism halts training if the validation loss does not improve for ten consecutive epochs, thereby preventing over-fitting and excessive computation.
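The stopping rule described above can be written framework-independently. The class below is a minimal sketch assuming a patience of ten epochs on the validation loss; the class name and the synthetic loss curve are hypothetical, and deep learning frameworks typically provide their own equivalent callback.

```python
class EarlyStopping:
    """Signal a stop when the validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=10):
        self.patience = patience
        self.best_loss = float("inf")
        self.epochs_without_improvement = 0

    def should_stop(self, val_loss):
        if val_loss < self.best_loss:
            self.best_loss = val_loss
            self.epochs_without_improvement = 0
        else:
            self.epochs_without_improvement += 1
        return self.epochs_without_improvement >= self.patience


# Synthetic validation-loss curve: improves for 5 epochs, then plateaus.
losses = [1.0, 0.8, 0.6, 0.5, 0.45] + [0.45] * 20
stopper = EarlyStopping(patience=10)
stopped_at = None
for epoch, loss in enumerate(losses, start=1):
    if stopper.should_stop(loss):
        stopped_at = epoch
        break
print("stopped at epoch", stopped_at)
```

In this synthetic run the last improvement occurs at epoch 5, so training halts after ten further epochs without improvement.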

The primary goal of this phase was to generate suboptimized CNT structures that serve as intermediate geometries between the unoptimized and fully optimized states. These were later used in Phase III, allowing for a detailed comparison of computational efficiency in contrast with the original processed datasets (see Section 4.1).

This systematic approach ensured that each ANN architecture was rigorously tested and could reliably produce suboptimized CNTs, thus reducing the processing time in the final optimization process with Materials Studio software.

5. Results and Discussion

The results focus on Phase III, which evaluates the effectiveness of the neural network models as a preoptimization step for CNTs. After training the ANN models with the CNTs detailed in Table 2, suboptimized structures were generated. These were then used as initial inputs for geometric optimization via the CASTEP module (BIOVIA Materials Studio®), aiming to assess the reduction in computational time.

Table 5 summarizes the results obtained using the BiLSTM network. It demonstrated a notable reduction in computation time, ranging from 3.69% for the CNT with 196 atoms to 65.23% for the CNT with 84 atoms. This trend indicates that the reduction percentage decreases as the number of atoms in the CNTs increases. Similar behavior was observed in the other neural network models, albeit to a lesser extent.

Table 6 summarizes the results of six trials conducted on the CNT with 196 atoms. All trials used identical hyperparameters, the 1D-CNN architecture, and the CNT-BCO2 dataset. Five iterations were performed on the initial CNT dataset. The reference time for estimating the time savings was 6342.31 s, which was the CASTEP module processing time without ANN preprocessing. Each trial varies the number of iterations applied to the suboptimized CNT, as listed in the first column.

Table 6 shows that performance varied notably among the trials. For instance, in trial 2 (second row), the network achieved a modest 1.11% reduction in computational time, indicating limited learning effectiveness. In contrast, trials 3 and 6 (highlighted in bold) yielded substantial improvements, with up to a 52.11% reduction in computational time. Note also that the suboptimized CNT with 7 iterations (first row) increased the computational time.
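The percentages in this comparison can be reproduced from raw timings. The sketch below assumes the saving is defined as the relative difference between the reference CASTEP time and the ANN-assisted time; whether the ANN inference time is included in the assisted time is not specified here, and the assisted timings used are hypothetical values chosen only to show the sign convention.

```python
REFERENCE_TIME_S = 6342.31  # CASTEP time without ANN preprocessing (from the text)


def time_saving_percent(reference_s, assisted_s):
    """Relative reduction in wall time; negative values mean the run got slower."""
    return 100.0 * (reference_s - assisted_s) / reference_s


# Hypothetical assisted times, chosen only to illustrate the sign convention.
for assisted in (3000.0, 6342.31, 7000.0):
    print(f"{assisted:8.2f} s -> {time_saving_percent(REFERENCE_TIME_S, assisted):6.2f} %")
```

A negative result corresponds to a trial in which the suboptimized starting structure increased the computational time.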

Following this variability analysis, the most favorable results in terms of time reduction were selected for CNTs with varying chiralities. These are summarized in Table 7, where the suboptimization with 1D-CNN and MLP architectures was also tested. Datasets CNT-BCO and CNT-BCO2 were used for this analysis.

For smaller CNTs (28, 52, and 76 atoms), the 1D-CNN architecture was the most effective overall. A notable exception was one chirality in this group, for which the MLP network outperformed the others, achieving a 68.27% reduction in computational time. For the CNT with 28 atoms, chirality (2,1), the 1D-CNN achieved a 90.62% reduction, the highest time saving across all tested cases.

On the other hand, for larger CNTs (124, 148, and 196 atoms), the MLP architecture was more efficient in most scenarios. However, for one of these chiralities, the 1D-CNN surpassed the MLP, achieving a 55.93% reduction in computational time. Overall, computational time reductions exceeded 50% in many cases, a significant gain in the efficiency of the optimization process.

The results confirm that deep ANNs, particularly 1D-CNNs and MLPs, significantly reduce the computational time for geometric optimization by generating suboptimized CNT structures that serve as effective starting points for CASTEP. These reductions, reaching up to 90.62% (CNT with 28 atoms), represent a substantial gain in computational efficiency for material simulations. The demonstrated effectiveness of these ANN-based methods highlights their potential for accelerating similar processes in nanotechnology and materials science.

Nonetheless, as shown in Table 5, Table 6, and Table 7, the relative time savings achieved through neural network preprocessing decrease as the chirality indices increase (and thus, the number of atoms). This effect becomes more pronounced starting from chirality (5,3), where the computational overhead of the neural network inference and the subsequent geometric optimization in BIOVIA Materials Studio® begins to surpass the initial speedup. This behavior is anticipated, as the scaling of the energy minimization process is highly sensitive to system size.

Despite this, exploring CNTs with a wide range of chiralities, including small-diameter variants, remains relevant. Small CNTs such as (2,2), (3,3), and (4,2), though less frequently synthesized, have been shown to exhibit mechanical stability under specific conditions. For example, Zhao et al. [46] reported that such structures can be stable despite higher strain energy than larger tubes. In other work, Peng et al. [49] found that CNTs with diameters as small as 0.33 nm can remain stable at temperatures exceeding 1000 °C. Including these cases in the analysis allows us to identify the threshold at which the preprocessing method begins to lose efficiency, providing valuable information for future scalability studies.

Therefore, while larger and more stable CNTs hold clear experimental and technological interest, the present work offers a broader perspective on how neural networks operate across various structural configurations, aiding in defining practical boundaries for their application in computational materials design.

6. Conclusions

This study has demonstrated that deep-learning-based artificial neural networks can reduce the computational time for the geometric optimization of CNTs by up to 90.62%, significantly improving on the time consumed by the CASTEP module of BIOVIA Materials Studio®. This methodology could be extended to a broader range of carbon nanotube chiralities and possibly to other molecular systems. With further refinement of ANN architectures and weight optimization, achieving even larger reductions in computation time is plausible, particularly for more complex molecular structures. Moreover, once trained, these ANNs do not necessarily require retraining for similar tasks, further enhancing computational efficiency.

Unlike previous studies, such as the work of Aci and Avci [31], which focused on reducing the number of iterations using ANNs, this work implemented deep-learning-based ANNs, including MLP, BiLSTM, and 1D-CNN architectures. Experimental results indicate that these models demonstrate a more accurate learning ability for the numerical patterns that define the energy minimum of molecular structures. This improvement directly leads to a significant decrease in computational time related to geometric optimization.

One notable challenge identified is the dependence on proprietary software, specifically the BIOVIA Materials Studio® platform and its CASTEP module, for generating the dataset used in ANN training. This reliance limits scalability and accessibility for broader applications. Future research could explore alternative strategies, such as unsupervised or reinforcement learning approaches. These methods could potentially eliminate the dependency on specific simulation tools while maintaining or even improving the computational efficiency and accuracy of the optimization process.

To successfully implement these advanced methodologies, it would be important to consider the numerical methods and algorithms CASTEP utilizes during geometric optimization. By integrating these principles into the training process, future models could further enhance their ability to predict optimal configurations while reducing computational overhead.