Characterizing Computational Thinking in the Context of Model-Planning Activities

Abstract

1. Introduction

2. Background

2.1. Computational Thinking (CT)

2.2. Solution Planning

2.3. Model-Eliciting Activity (MEA)

3. Methods

3.1. Participants and Context

3.2. Learning Intervention

- What equations would they use?

- What food properties would be needed for their model?

- What assumptions would they need to make?

- What computational technique would they use?

- How would they use all of these things together to construct a computational model?

3.3. Data Collection

3.4. Data Analysis

3.5. Trustworthiness

4. Results

4.1. Overview of the Results

4.2. Abstraction

4.3. Algorithmic Thinking

4.4. Evaluation

4.5. Generalization

4.6. Decomposition

5. Discussion

5.1. Implications for Teaching and Learning

5.2. Limitations and Future Work

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Finzer, W. The Data Science Education Dilemma. Technol. Innov. Stat. Educ. 2013, 7. Available online: http://escholarship.org/uc/item/6jv107c7 (accessed on 25 May 2022).

- Irizarry, R.A. The Role of Academia in Data Science Education. Harv. Data Sci. Rev. 2020, 2.

- Magana, A.J.; Coutinho, G.S. Modeling and simulation practices for a computational thinking-enabled engineering workforce. Comput. Appl. Eng. Educ. 2017, 25, 62–78.

- Fennell, H.W.; Lyon, J.A.; Magana, A.J.; Rebello, S.; Rebello, C.M.; Peidrahita, Y.B. Designing hybrid physics labs: Combining simulation and experiment for teaching computational thinking in first-year engineering. In Proceedings of the 2019 IEEE Frontiers in Education Conference (FIE), Covington, KY, USA, 16–19 October 2019.

- Magana, A.; Falk, M.; Reese, M.J. Introducing Discipline-Based Computing in Undergraduate Engineering Education. ACM Trans. Comput. Educ. 2013, 13, 1–22.

- Lyon, J.A.; Magana, A.J. The use of engineering model-building activities to elicit computational thinking: A design-based research study. J. Eng. Educ. 2021, 110, 184–206.

- Vieira, C.; Magana, A.J.; García, R.E.; Jana, A.; Krafcik, M. Integrating Computational Science Tools into a Thermodynamics Course. J. Sci. Educ. Technol. 2018, 27, 322–333.

- Diefes-Dux, H.A.; Hjalmarson, M.; Zawojewski, J.S.; Bowman, K. Quantifying aluminum crystal size part 1: The model-eliciting activity. J. STEM Educ. Innov. Res. 2006, 7, 51–63.

- Lyon, J.A.; Fennell, H.W.; Magana, A.J. Characterizing students’ arguments and explanations of a discipline-based computational modeling activity. Comput. Appl. Eng. Educ. 2020, 28, 837–852.

- Lyon, J.A.; Magana, A.J. A Review of Mathematical Modeling in Engineering Education. Int. J. Eng. Educ. 2020, 36, 101–116.

- Jung, H.; Diefes-Dux, H.A.; Horvath, A.K.; Rodgers, K.J.; Cardella, M.E. Characteristics of feedback that influence student confidence and performance during mathematical modeling. Int. J. Eng. Educ. 2015, 31, 42–57.

- Louca, L.T.; Zacharia, Z. Modeling-based learning in science education: Cognitive, metacognitive, social, material and epistemological contributions. Educ. Rev. 2012, 64, 471–492.

- Shiflet, A.B.; Shiflet, G.W. Introduction to Computational Science: Modeling and Simulation for the Sciences; Princeton University Press: Princeton, NJ, USA, 2014.

- Magana, A.J. Modeling and Simulation in Engineering Education: A Learning Progression. J. Prof. Issues Eng. Educ. Pract. 2017, 143, 04017008.

- Kalelioğlu, F.; Gülbahar, Y.; Kukul, V. A Framework for Computational Thinking Based on a Systematic Research Review. Balt. J. Mod. Comput. 2016, 4, 583–596.

- Lyon, J.A.; Magana, A.J. Computational thinking in higher education: A review of the literature. Comput. Appl. Eng. Educ. 2020, 28, 1174–1189.

- Wing, J.M. Computational thinking. Commun. ACM 2006, 49, 33–35.

- Selby, C.C.; Woollard, J. Computational Thinking: The Developing Definition. In Proceedings of the 18th Annual Conference on Innovation and Technology in Computer Science Education, Canterbury, UK, 29 June–3 July 2013; pp. 5–8.

- Weintrop, D.; Beheshti, E.; Horn, M.; Orton, K.; Jona, K.; Trouille, L.; Wilensky, U. Defining Computational Thinking for Mathematics and Science Classrooms. J. Sci. Educ. Technol. 2016, 25, 127–147.

- Curzon, P.; Dorling, M.; Selby, C.; Woollard, J. Developing computational thinking in the classroom: A framework. Comput. Sch. 2014. Available online: http://eprints.soton.ac.uk/id/eprint/369594 (accessed on 26 May 2022).

- Grover, S.; Pea, R. Computational Thinking in K-12. Educ. Res. 2013, 42, 38–43.

- Ilic, U.; Haseski, H.I.; Tugtekin, U. Publication Trends Over 10 Years of Computational Thinking Research. Contemp. Educ. Technol. 2018, 9, 131–153.

- Ehsan, H.; Rehmat, A.P.; Cardella, M.E. Computational thinking embedded in engineering design: Capturing computational thinking of children in an informal engineering design activity. Int. J. Technol. Des. Educ. 2021, 31, 441–464.

- Rehmat, A.P.; Ehsan, H.; Cardella, M.E. Instructional strategies to promote computational thinking for young learners. J. Digit. Learn. Teach. Educ. 2020, 36, 46–62.

- Tsarava, K.; Moeller, K.; Román-González, M.; Golle, J.; Leifheit, L.; Butz, M.V.; Ninaus, M. A cognitive definition of computational thinking in primary education. Comput. Educ. 2022, 179, 104425.

- Kanaki, K.; Kalogiannakis, M. Assessing Algorithmic Thinking Skills in Relation to Gender in Early Childhood. Educ. Process Int. J. 2022, 11, 44–45.

- Kanaki, K.; Kalogiannakis, M. Assessing Computational Thinking Skills at First Stages of Schooling. In ACM International Conference Proceeding Series; ACM: Barcelona, Spain, 2019; pp. 135–139.

- Ung, L.-L.; Labadin, J.; Mohamad, F.S. Computational thinking for teachers: Development of a localised E-learning system. Comput. Educ. 2022, 177, 104379.

- Lawanto, O. Students’ metacognition during an engineering design project. Perform. Improv. Q. 2010, 23, 117–136.

- Dvir, D.; Raz, T.; Shenhar, A.J. An empirical analysis of the relationship between project planning and project success. Int. J. Proj. Manag. 2003, 21, 89–95.

- Lucangeli, D.; Tressoldi, P.E.; Cendron, M. Cognitive and Metacognitive Abilities Involved in the Solution of Mathematical Word Problems: Validation of a Comprehensive Model. Contemp. Educ. Psychol. 1998, 23, 257–275.

- Shin, N.; Jonassen, D.H.; McGee, S. Predictors of well-structured and ill-structured problem solving in an astronomy simulation. J. Res. Sci. Teach. 2003, 40, 6–33.

- Magana, A.J.; Fennell, H.W.; Vieira, C.; Falk, M.L. Characterizing the interplay of cognitive and metacognitive knowledge in computational modeling and simulation practices. J. Eng. Educ. 2019, 108, 276–303.

- Diefes-Dux, H.; Moore, T.; Zawojewski, J.; Imbrie, P.K.; Follman, D. A framework for posing open-ended engineering problems: Model-eliciting activities. In Proceedings of the 34th Annual Frontiers in Education 2004 (FIE 2004), Savannah, GA, USA, 20–23 October 2004.

- Moore, T.J.; Miller, R.L.; Lesh, R.A.; Stohlmann, M.S.; Kim, Y.R. Modeling in Engineering: The Role of Representational Fluency in Students’ Conceptual Understanding. J. Eng. Educ. 2013, 102, 141–178.

- Lesh, R.; Hoover, M.; Hole, B.; Kelly, A.; Post, T. Principles for developing thought-revealing activities for students and teachers. In The Handbook of Research Design in Mathematics and Science Education; Kelly, A., Lesh, R., Eds.; Lawrence Erlbaum Associates: Mahwah, NJ, USA, 2000; pp. 591–646.

- Liu, Z.; Xia, J. Enhancing computational thinking in undergraduate engineering courses using model-eliciting activities. Comput. Appl. Eng. Educ. 2021, 29, 102–113.

- Moore, T.J.; Guzey, S.S.; Roehrig, G.H.; Stohlmann, M.; Park, M.S.; Kim, Y.R.; Callender, H.L.; Teo, H.J. Changes in Faculty Members’ Instructional Beliefs while Implementing Model-Eliciting Activities. J. Eng. Educ. 2015, 104, 279–302.

- Hjalmarson, M.; Diefes-Dux, H.A.; Bowman, K.; Zawojewski, J.S. Quantifying aluminum crystal size part 2: The model-development sequence. J. STEM Educ. Innov. Res. 2006, 7, 64–73.

- Kapur, M. Productive Failure. Cogn. Instr. 2008, 26, 379–424.

- Braun, V.; Clarke, V. Using thematic analysis in psychology. Qual. Res. Psychol. 2006, 3, 77–101.

- Sengupta, P.; Kinnebrew, J.S.; Basu, S.; Biswas, G.; Clark, D. Integrating computational thinking with K-12 science education using agent-based computation: A theoretical framework. Educ. Inf. Technol. 2013, 18, 351–380.

- Busch, T. Gender Differences in Self-Efficacy and Attitudes toward Computers. J. Educ. Comput. Res. 1995, 12, 147–158.

- Hutchison, M.A.; Follman, D.K.; Sumpter, M.; Bodner, G.M. Factors Influencing the Self-Efficacy Beliefs of First-Year Engineering Students. J. Eng. Educ. 2006, 95, 39–47.

- Magana, A.J.; Vieira, C.; Fennell, H.W.; Roy, A.; Falk, M.L. Undergraduate Engineering Students’ Types and Quality of Knowledge Used in Synthetic Modeling. Cogn. Instr. 2020, 38, 503–537.

- Louca, L.; Zacharia, Z.C. Toward an Epistemology of Modeling-Based Learning in Early Science Education. In Towards a Competence-Based View on Models and Modeling in Science Education; Springer: Cham, Switzerland, 2019; pp. 237–256.

- Dewey, J. Experience and Education; Macmillan: New York, NY, USA, 1938.

- McKenna, A.F. An Investigation of Adaptive Expertise and Transfer of Design Process Knowledge. J. Mech. Des. 2007, 129, 730–734.

- Hatano, G.; Inagaki, K. Two courses of expertise. Res. Clin. Cent. Child Dev. Annu. Rep. 1984, 6, 27–36.

- Jonassen, D.H. Externally Modeling Mental Models. In Learning and Instructional Technologies for the 21st Century; Moller, L., Bond Huett, J., Harvey, D.M., Eds.; Springer: New York, NY, USA, 2009; pp. 49–74.

- Nersessian, N.J. Model-Based Reasoning in Distributed Cognitive Systems. Philos. Sci. 2007, 73, 699–709.

- Hmelo-Silver, C.E. Comparing expert and novice understanding of a complex system from the perspective of structures, behaviors, and functions. Cogn. Sci. 2004, 28, 127–138.

- Carbonell, K.B.; Stalmeijer, R.E.; Könings, K.; Segers, M.; van Merriënboer, J.J. How experts deal with novel situations: A review of adaptive expertise. Educ. Res. Rev. 2014, 12, 14–29.

- Delany, D. Advanced concept mapping: Developing adaptive expertise. In Concept Mapping-Connecting Educators: Proceedings of the Third International Conference on Concept Mapping: Vol. 3. Posters, Tallinn, Estonia and Helsinki, Finland, 22–25 September 2008; Concept Mapping Conference; Volume 3, pp. 32–35. Available online: https://cmc.ihmc.us/cmc-proceedings (accessed on 26 May 2022).

- Vieira, C.; Magana, A.J.; Roy, A.; Falk, M.L. Student Explanations in the Context of Computational Science and Engineering Education. Cogn. Instr. 2019, 37, 201–231.

- Jaiswal, A.; Lyon, J.A.; Zhang, Y.; Magana, A.J. Supporting student reflective practices through modelling-based learning assignments. Eur. J. Eng. Educ. 2021, 46, 987–1006.

- Barab, S.; Squire, K. Design-Based Research: Putting a Stake in the Ground. J. Learn. Sci. 2004, 13, 1–14.

- The Design-Based Research Collective. Design-Based Research: An Emerging Paradigm for Educational Inquiry. Educ. Res. 2003, 32, 5–8.

| Data Source | N | Description |
|---|---|---|
| Audio/Video Files of In-Lab Discussions | 4 groups (15 students total) | Four of the groups were randomly selected to be audio/video recorded for their entire planning process during lab time. |
| Planning Model Templates | 15 (one for each group) | All groups turned in a planning model template; one template per group was used in this analysis. |

| Computational Thinking Practice | Definition |
|---|---|
| Abstraction | Making problems easier to solve/think about by selectively removing/hiding unnecessary complexity by making assumptions about how the problem should operate or by neglecting or adding components to the problem. |
| Algorithmic Thinking | Setting up or talking about problems so that they can be solved in a stepwise manner by looking at the procedural steps/aspects of the solution; or by acknowledging the uses and effects of solution structures temporally and procedurally throughout the solution. |
| Evaluation | Comparing one’s own solution against various criteria either given by the problem itself or against criteria brought to the problem by the student themselves. This can also be a statement describing desirable qualities of the final solution in terms of function, display, or other final form characteristics. |
| Generalization | Reusing previous solutions, methods, or constructs for the current problem; or the use of current solutions, methods, or constructs from the current problem to hypothesize about their use in future contexts. |
| Decomposition | Thinking about how the pieces of the problem can be used separately, strategically breaking down the problem for easier solution or use, or breaking the problem down into subcomponent structures, pieces, or areas. |

| Abstraction | Algorithmic Thinking | Evaluation | Generalization | Decomposition |
|---|---|---|---|---|
| Ranges to values | Indication of later use/effect | Solution accuracy | Previous coursework or experience | Organization of larger solution method |
| Multiple to single | Stepwise approach | Solution complexity | External applications | Allocation of resources |
| Dynamic to static | Parallel methods | Time efficiency | | |
| Geometric relationships | Conditional logic | Design criteria | | |
| Similar systems | | Solution usability | | |
| Infinite to finite | | Solution flexibility | | |

| Outcome | Definition | Quote |
|---|---|---|
| Ranges to values | Simplifying a range or list of values into a single value (e.g., a worst-case scenario, an average across a range, or the highest value) or into a single function (assume that x follows function y). | “So z-value is the number of degrees that requires that variation. So I feel like we should choose the highest one again.” |
| Multiple to single | Simplifying aspects of the problem that have multiple dimensions or factors by reducing the dimensions or factors considered in the solution, either by choosing one or by neglecting others; or creating limits or boundaries on what is considered to affect or influence the system. | “A: The tin has, can we assume like no heat loss with the… I don’t know what I’m trying to think. Can we assume the can is negligible, like the metal part? D: Oh yeah, conduction through can wall is negligible because it’s so thick.” |
| Dynamic to static | Treating factors or variables as constant, uniform, or unchanging when they would normally vary with respect to other variables or aspects of the problem. | “D: Density, like constant pressure means you have a density that’s not relying on pressure. It’s more relying on the temperature.” |
| Geometric relationships | Simplifying problems by assuming a geometric characteristic or relationships between variables, constants, or factors within the problem space. | “D: Yeah so we will have to assume.. uh C: Cylindrical. D: Cylindrical yeah. So we’ll get.” |
| Similar systems | Aligning the properties of an unknown system, or an unknown property of a system, with the known properties of a well-known or familiar system. | “B: What assumptions will you make to solve the problem? C: Assume, pumpkin pie filling is about like applesauce.” |
| Infinite to finite | Treating aspects of the problem or system that are infinite or continuous as finite or discrete. | “But uh depending on our can dimensions, we could do the whole like infinity, can split by infinity slabs, or just infinite cylinder.” |

| Outcome | Definition | Quote |
|---|---|---|
| Indication of later use/effect | Indicating that information will be useful for a later process or at a later point in the code; indicating what certain variables or structures will cause later in the solution or code; or explaining where in the code something should be located to produce an effect. | “All I know is that we are going to need to determine like the alpha, so we need like the k’s and all of those things so that might be important then, like how much moisture there is, to determine the k value and like the cp” |
| Using a stepwise approach | Giving things a logical order or listing steps to follow in order to solve the problem. This can include listing steps explicitly (e.g., 1, 2, 3…), diagramming, giving things logical order (i.e., we should do x first, y second, and then z third), or thought experiments (i.e., if we do x, then y will happen, and then z will likely follow). | “It’s saying, yeah. It is trying to say, okay, I don’t know my next value, but I know my temperatures right now throughout my area. Okay. Estimate, if I step one point in time, like one second, what will the temperature at that point I want to look at become. And then you take that value, you just guessed that your model and then plug it back into the equation for the next time around.” |
| Considering parallel methods | Indicating that the solution methods or the information given are parallel or redundant. | “D: So in this equation they use Ea, since uh temperature changes [inaudible] effect. And that’s what z value does. A: Oh okay, so z already takes that into account? D: Yeah so z and D are intertwined like Ea and D are intertwined. […] Since we have both D and z we don’t need Ea.” |
| Conditional logic | The student makes an if/else or other conditional statement based on previous or future conditions (i.e., if I do x, y will happen). | “I don’t, I don’t remember them. But like for a tuna can you probably shouldn’t assume it, because it’s really flat and not much curvature. But for like a soup can you might get away with it. [laughs] Why’d you choose it? Engineers instinct.” |
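The explicit time-marching procedure described in the stepwise-approach quote above ("step one point in time… then plug it back into the equation for the next time around") can be made concrete. The sketch below is illustrative only and is not the students' model: it assumes a hypothetical one-dimensional slab of food with both faces held at the heating-medium temperature, uses the forward-time, central-space (FTCS) finite-difference scheme, and uses placeholder property values. The function names `explicit_step` and `march` are hypothetical.

```python
"""Illustrative explicit (FTCS) time-marching for 1D transient conduction.

Hypothetical slab of food heated from both faces; all property values are
placeholders, not data from the study.
"""


def explicit_step(T, r):
    """Return temperatures one time step later (boundary nodes held fixed)."""
    new_T = list(T)  # copy; boundary values are carried over unchanged
    for i in range(1, len(T) - 1):
        # Finite-difference form of dT/dt = alpha * d2T/dx2
        new_T[i] = T[i] + r * (T[i + 1] - 2.0 * T[i] + T[i - 1])
    return new_T


def march(T_initial, T_wall, alpha, dx, dt, n_steps):
    """Apply explicit_step n_steps times, feeding each result back in."""
    r = alpha * dt / dx ** 2  # mesh Fourier number
    if r > 0.5:
        # The explicit scheme is only stable for r <= 1/2 in one dimension
        raise ValueError(f"unstable step: alpha*dt/dx**2 = {r:.3f} > 0.5")
    T = [float(t) for t in T_initial]
    T[0] = T[-1] = float(T_wall)  # surfaces held at the heating-medium temperature
    history = [T]
    for _ in range(n_steps):
        T = explicit_step(T, r)
        history.append(T)
    return history


if __name__ == "__main__":
    # Hypothetical values: 2 cm slab, 11 nodes, alpha = 1.4e-7 m^2/s, 1 s steps
    history = march([20.0] * 11, 121.0, alpha=1.4e-7, dx=0.002, dt=1.0, n_steps=600)
    print(f"center after 600 s: {history[-1][5]:.1f} degC")
```

Each call to `explicit_step` uses only the current temperature profile to produce the next one, which is the "guess, then plug it back in" loop the student describes. The stability check reflects a known constraint of the explicit scheme (alpha·dt/dx² ≤ 1/2 in one dimension), one reason a planner might weigh time efficiency against step size.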

| Outcome | Definition | Quote |
|---|---|---|
| Solution accuracy | Evaluates the solution or the code with regard to the actual or perceived accuracy of the decisions made about the solution method. | “The exact properties of Nacho Cheese may not be available. I think it will limit accuracy.” |
| Solution complexity | Evaluates the solution or code with regard to the amount of work, time, or effort needed to create the solution. | “What are the benefits of this technique? Uh, it’s simplistic, it’s not insane.” |
| Time efficiency | Evaluates the solution method based on the amount of time the code or computer takes (or wastes) to arrive at the final solution. | “I chose this method because it is computationally fast.” |
| Design criteria | Evaluates the code or solution against the actual or perceived wishes and requirements of the stakeholders or the problem statement. | “This way if the moisture is content is lower, we will still be within the desired amount of sterilization.” |
| Solution usability | Evaluates the solution or code with regard to its ease of use for the students themselves or for others. | “… allow us to create a usable solution.” |
| Solution flexibility | Evaluates the solution or code based on how easily it can be changed now or adapted to future changes. | “This method gives us a lot of control over the parameters and the ‘machinery’ of the program.” |

| Outcome | Definition | Quote |
|---|---|---|
| Drawing from previous experiences | Using previous coursework or personal experiences to inform the current solution method. This can include previous coursework, professional experiences, or explicitly related concepts from previous courses. | “C: Oh I remember this equation from [another course], the d equals d0, times… B: Oh yeah. C: Or [another course] I don’t know, d equals d0 times ten to the t0 minus t over z.” |
| Projecting to other or future applications | Reference to other problem spaces, external to the current problem, where the current solution would or would not be useful given the nature of the current problem or solution. | “So the model will not account for really hot or really cold days.” |
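The equation recalled in the previous-coursework quote above ("d equals d0 times ten to the t0 minus t over z") is the decimal-reduction model of thermal inactivation, D(T) = D0 · 10^((T0 − T)/z), where D0 is the D-value at a reference temperature T0 and z is the temperature change that shifts D by a factor of ten. The sketch below expresses that relation in Python, plus a helper that sums decimal (log10) reductions over a sampled temperature history, each interval dt at temperature T contributing dt/D(T). The function names `d_value` and `log_reductions` and all parameter values are hypothetical placeholders, not values from the study.

```python
"""Sketch of the D-value/z-value model of thermal inactivation.

D(T) = D0 * 10 ** ((T0 - T) / z); all parameter values are placeholders.
"""


def d_value(T, D0, T0, z):
    """Decimal reduction time at temperature T (same time unit as D0).

    D0 is the D-value at reference temperature T0; z is the temperature
    change that shifts D by a factor of ten.
    """
    return D0 * 10 ** ((T0 - T) / z)


def log_reductions(temps, dt, D0, T0, z):
    """Sum log10 reductions over temperatures sampled every dt time units."""
    return sum(dt / d_value(T, D0, T0, z) for T in temps)


if __name__ == "__main__":
    # Hypothetical 3 min hold at the reference temperature, sampled every 0.25 min
    print(log_reductions([121.1] * 12, 0.25, D0=0.21, T0=121.1, z=10.0))
```

With these placeholder values, a 3 min hold at the reference temperature gives 3/0.21, about 14.3 decimal reductions. Because D and z together already encode the temperature sensitivity, a separate activation energy is not needed in this form, which matches the redundancy the students identify in the parallel-methods quote.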

| Outcome | Definition | Quote |

|---|---|---|

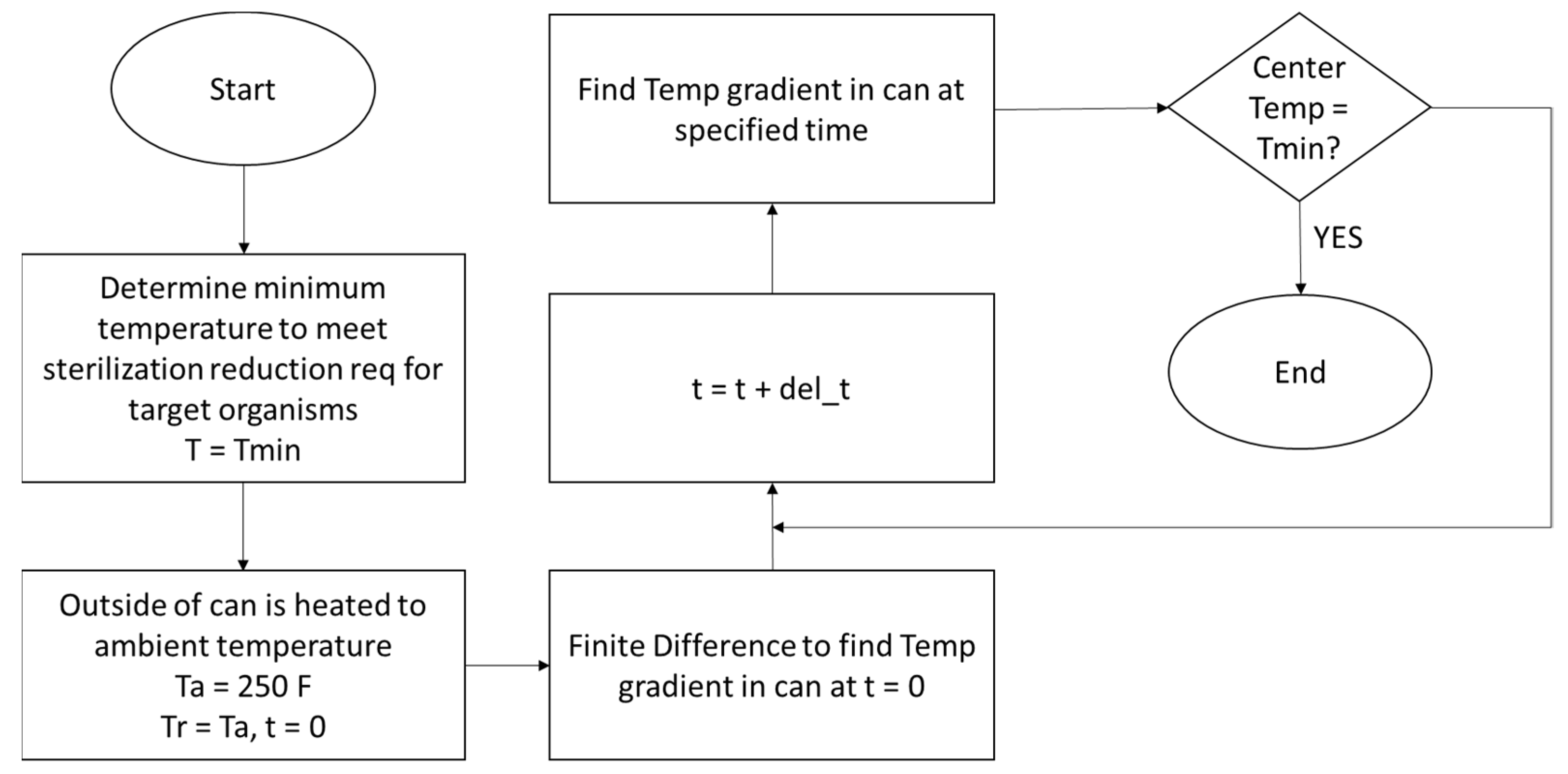

| Organization of larger solution method | Decomposing the code or solution method as an organizational tool. | See Figure 2 |

| Allocating resources | Breaking up a problem amongst group members in order to reduce complexity of the work required or to increase quality of the solution method | “Do we wanna split up this in any way? Is that allowed? What we’re doing. Um. So like we’ll have to individually look up a bunch of equations and the limitations and benefits of the computer systems we decide to use. Or like the solving method we decide to use.” |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lyon, J.A.; Magana, A.J.; Streveler, R.A. Characterizing Computational Thinking in the Context of Model-Planning Activities. Modelling 2022, 3, 344-358. https://doi.org/10.3390/modelling3030022
