An Anti-Noise-Designed Residual Phase Unwrapping Neural Network for Digital Speckle Pattern Interferometry

Abstract

1. Introduction

2. Anti-Noise-Designed Residual U-Net Phase Unwrapping Network Design

2.1. Residual Block
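
The idea named in this heading can be illustrated with a generic ResNet-style residual unit, which computes y = ReLU(x + F(x)), where F is a small stack of convolutions. The sketch below assumes a single channel, two 3 × 3 convolutions with a ReLU between them, no batch normalization, and hypothetical helper names (`conv2d_same`, `residual_block`). It uses plain NumPy for readability and does not reproduce the paper's exact layer configuration.

```python
import numpy as np


def conv2d_same(x: np.ndarray, k: np.ndarray) -> np.ndarray:
    """Single-channel 2-D cross-correlation with zero 'same' padding (odd kernel assumed)."""
    kh, kw = k.shape
    ph, pw = kh // 2, kw // 2
    xp = np.pad(x, ((ph, ph), (pw, pw)))  # zero padding keeps the output size equal to x
    out = np.zeros_like(x, dtype=float)
    for i in range(kh):
        for j in range(kw):
            # Accumulate each kernel tap times the correspondingly shifted input.
            out += k[i, j] * xp[i:i + x.shape[0], j:j + x.shape[1]]
    return out


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)


def residual_block(x: np.ndarray, k1: np.ndarray, k2: np.ndarray) -> np.ndarray:
    """Hypothetical residual unit: y = ReLU(x + F(x)), F = conv(k1) -> ReLU -> conv(k2)."""
    f = conv2d_same(relu(conv2d_same(x, k1)), k2)
    return relu(x + f)  # identity shortcut added before the final activation
```

Because the shortcut carries x through unchanged, setting both kernels to zero reduces the block to ReLU(x); the convolutions only have to learn a correction to the input.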

2.2. U-Net Phase Unwrapping Network

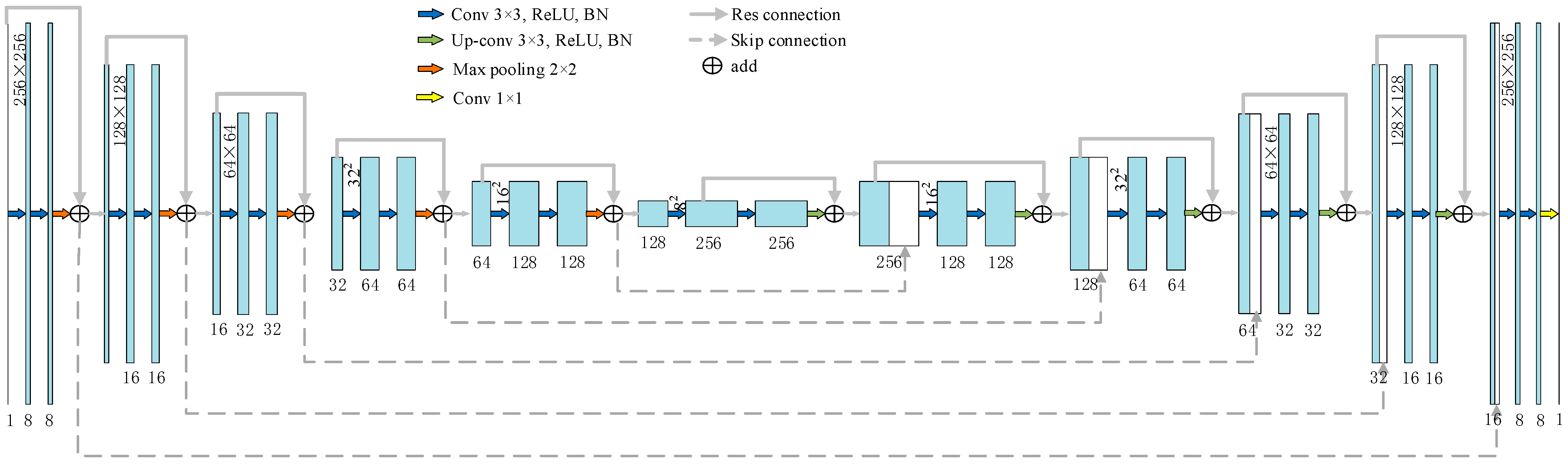
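
The relation that any unwrapping method, including the network described in this section, has to invert is φ = ψ + 2πk, where ψ = angle(exp(iφ)) is the wrapped phase in (−π, π] and k is an integer fringe-order map. The short NumPy example below illustrates this in 1-D and applies the classic Itoh-style unwrap (`numpy.unwrap`), which recovers φ exactly on noise-free data whose neighbouring samples differ by less than π. Noise can violate that condition, which is the setting this paper targets. The ramp φ = 30x² and the helper name `wrap` are arbitrary choices for the illustration.

```python
import numpy as np


def wrap(phi: np.ndarray) -> np.ndarray:
    """Wrap phase into (-pi, pi] via the complex exponential."""
    return np.angle(np.exp(1j * phi))


# A smooth 1-D phase ramp spanning several multiples of 2*pi.
x = np.linspace(0.0, 1.0, 200)
phi_true = 30.0 * x ** 2      # largest sample-to-sample step is about 0.3 rad, well below pi
psi = wrap(phi_true)          # the wrapped phase an interferometer would deliver

# Itoh-style unwrapping: integrate the wrapped differences between neighbours.
phi_itoh = np.unwrap(psi)

# Integer fringe order k such that phi_true = psi + 2*pi*k.
k = np.round((phi_true - psi) / (2 * np.pi)).astype(int)
```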

2.3. Neural Network Architecture

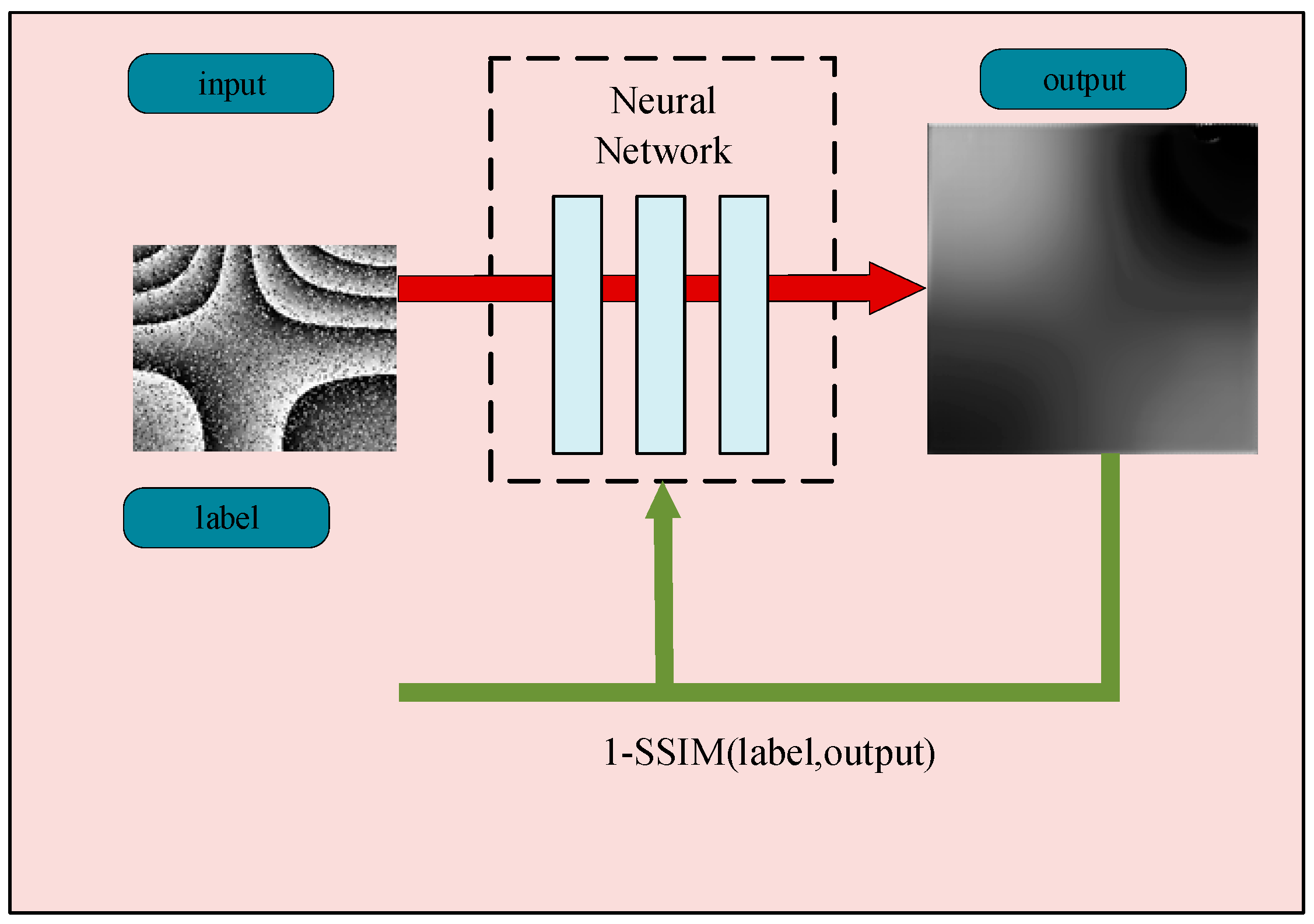

2.4. SSIM Loss Function
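
SSIM, as defined by Wang et al. (2004), compares local means, variances, and covariance: SSIM(x, y) = ((2μxμy + C1)(2σxy + C2)) / ((μx² + μy² + C1)(σx² + σy² + C2)), with C1 = (0.01L)², C2 = (0.03L)², and L the dynamic range of the data. A common way to turn it into a training loss is 1 − mean SSIM. The NumPy sketch below assumes a uniform 7 × 7 sliding window (the original SSIM paper uses an 11 × 11 Gaussian window), takes L as the target's value range, and uses hypothetical function names; it is not a reproduction of the paper's loss, which may also weight or combine terms.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def ssim(x: np.ndarray, y: np.ndarray, data_range: float,
         win: int = 7, k1: float = 0.01, k2: float = 0.03) -> float:
    """Mean SSIM over all valid win x win windows (uniform weighting, an assumption)."""
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2
    xw = sliding_window_view(x, (win, win))  # shape (H-win+1, W-win+1, win, win)
    yw = sliding_window_view(y, (win, win))
    mu_x = xw.mean(axis=(-2, -1))
    mu_y = yw.mean(axis=(-2, -1))
    var_x = xw.var(axis=(-2, -1))
    var_y = yw.var(axis=(-2, -1))
    cov_xy = ((xw - mu_x[..., None, None]) * (yw - mu_y[..., None, None])).mean(axis=(-2, -1))
    num = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(np.mean(num / den))


def ssim_loss(pred: np.ndarray, target: np.ndarray) -> float:
    """Hypothetical SSIM loss: 1 - SSIM, with L taken as the target's value range."""
    data_range = float(target.max() - target.min())
    return 1.0 - ssim(pred, target, data_range)
```

Unlike a pixel-wise MSE, this loss penalizes differences in local structure and contrast, which is one motivation for using SSIM when comparing unwrapped phase maps.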

3. Dataset Establishment, Network Training, and Enhancement

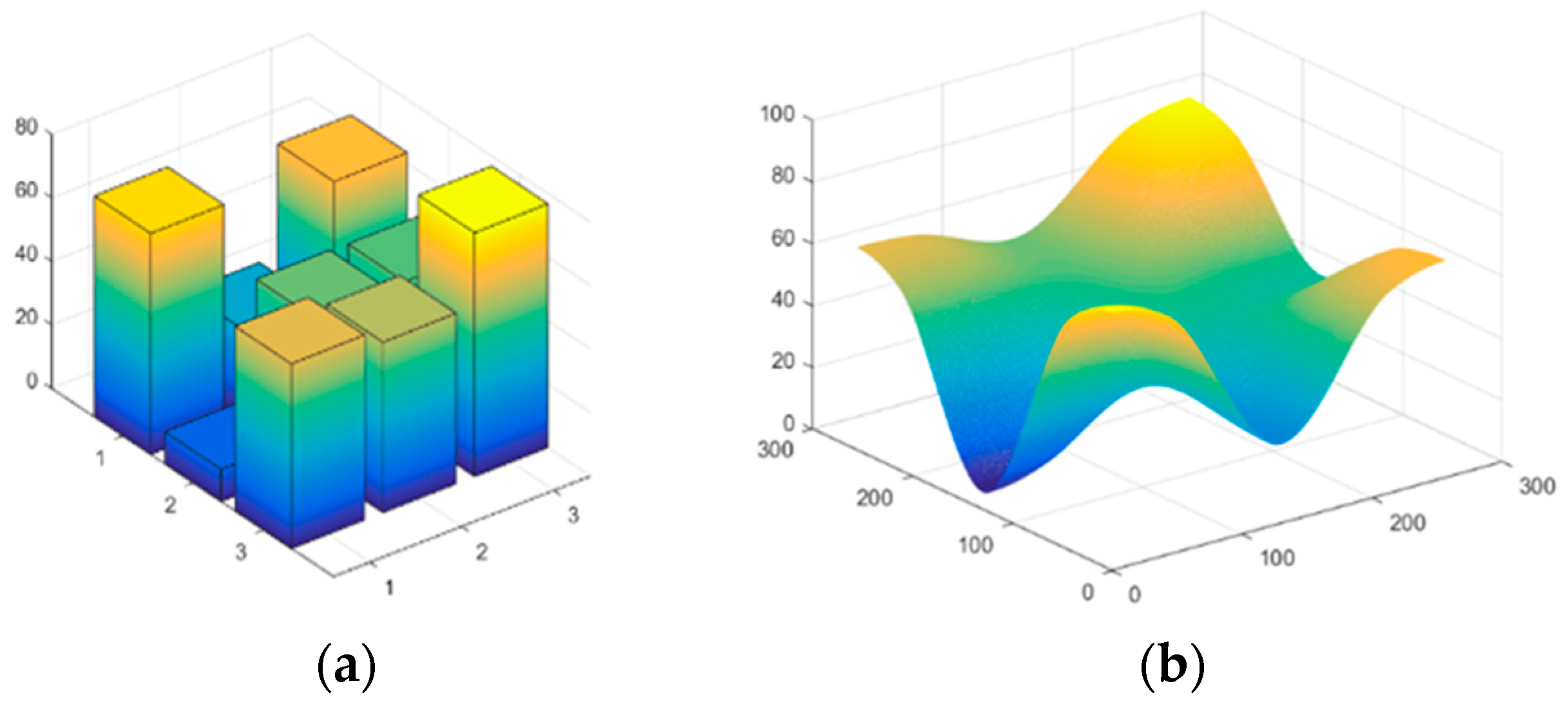

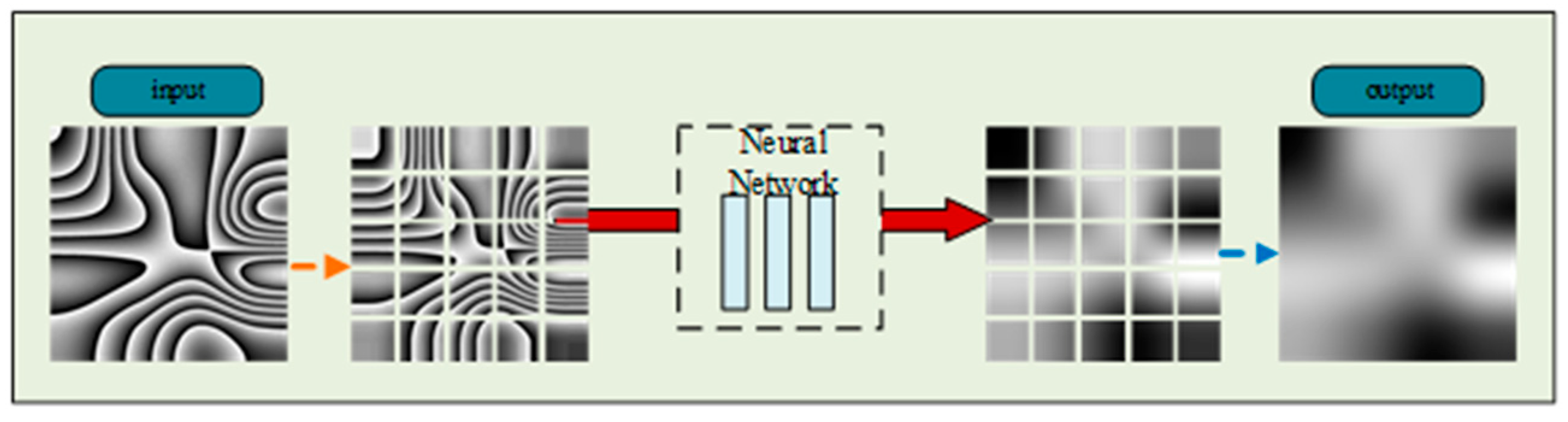

3.1. Dataset Establishment
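
Training data for a supervised unwrapping network are pairs of a wrapped, noisy input and its unwrapped ground truth. One minimal way to synthesize such pairs is sketched below. It assumes a smooth true phase built from a few random 2-D Gaussians and additive Gaussian phase noise applied before wrapping. Real DSPI speckle noise is not additive Gaussian, and the peak count, heights, noise level, and function names (`random_phase_surface`, `make_pair`) are illustrative choices, not the paper's dataset parameters.

```python
import numpy as np


def random_phase_surface(size: int, n_peaks: int, max_height: float,
                         rng: np.random.Generator) -> np.ndarray:
    """Smooth 'true' phase: a sum of randomly placed 2-D Gaussians (illustrative choice)."""
    yy, xx = np.mgrid[0:size, 0:size] / size  # normalized coordinates in [0, 1)
    phi = np.zeros((size, size))
    for _ in range(n_peaks):
        cx, cy = rng.uniform(0.0, 1.0, 2)
        sigma = rng.uniform(0.1, 0.3)
        amp = rng.uniform(-max_height, max_height)
        phi += amp * np.exp(-((xx - cx) ** 2 + (yy - cy) ** 2) / (2 * sigma ** 2))
    return phi


def make_pair(size: int = 256, noise_std: float = 0.5, seed=None):
    """Return (wrapped noisy input, unwrapped target) as float32 arrays."""
    rng = np.random.default_rng(seed)
    phi = random_phase_surface(size, n_peaks=5, max_height=20.0, rng=rng)
    phi -= phi.min()  # shift the target to be non-negative (arbitrary convention)
    noisy = phi + rng.normal(0.0, noise_std, phi.shape)  # simplified noise model
    wrapped = np.angle(np.exp(1j * noisy))               # wrap into (-pi, pi]
    return wrapped.astype(np.float32), phi.astype(np.float32)
```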

3.2. Network Implementation Details, Tables, and Schemes

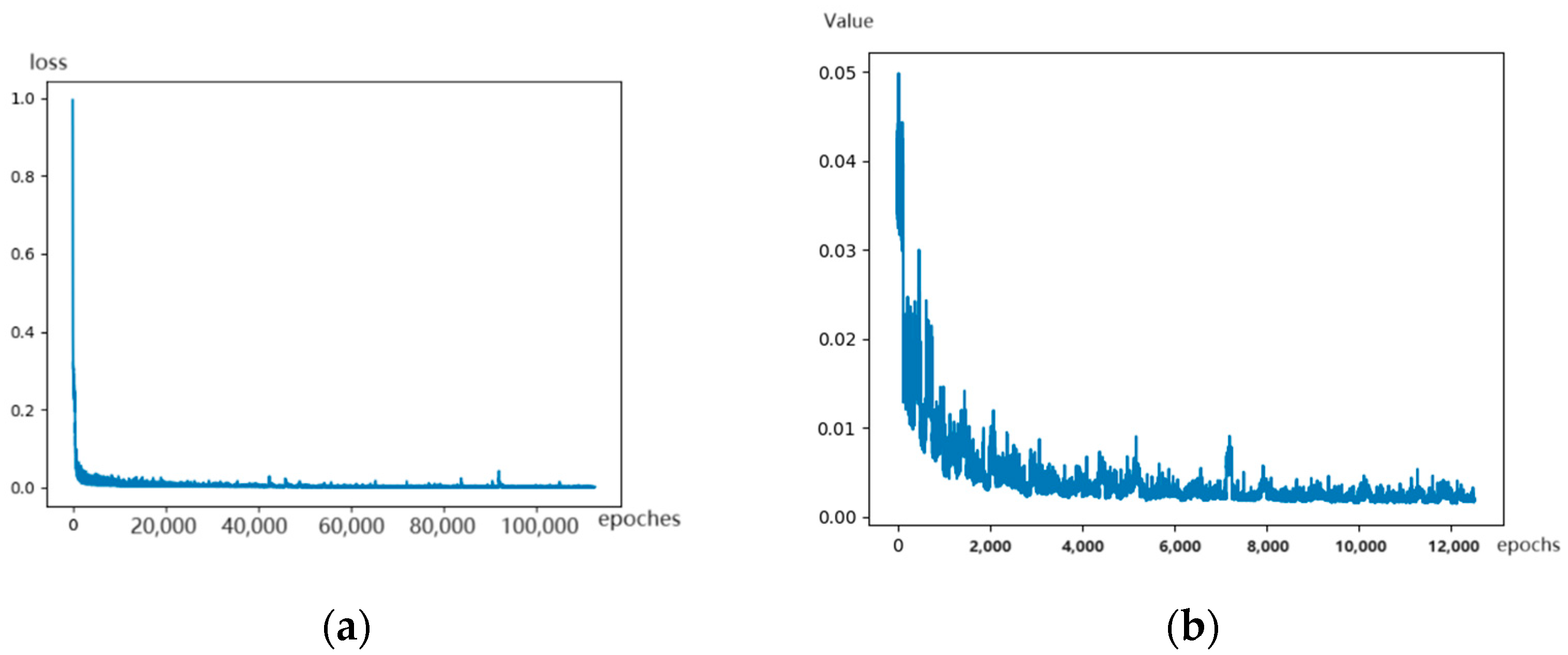

3.3. Network Training Process

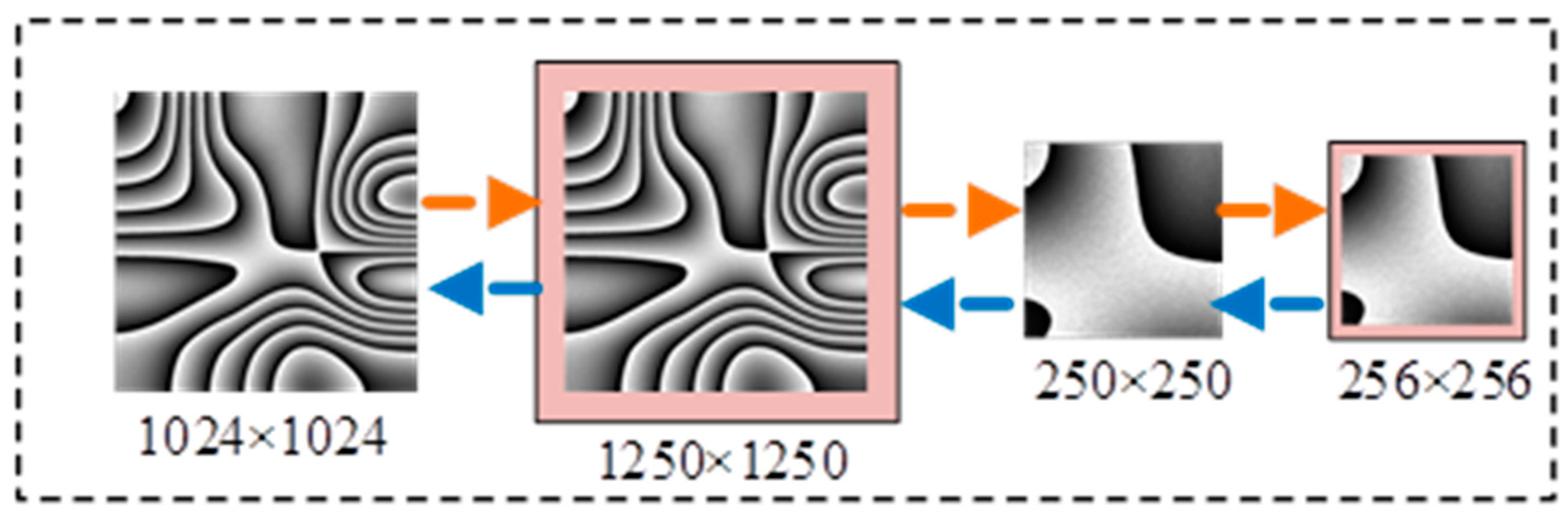

3.4. Image Stitching Function Design
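
Since results are reported for inputs from 256 × 256 up to 1024 × 1024, one plausible role for a stitching function is to unwrap a large image tile by tile and then merge the tiles. Each tile unwrapped on its own is only determined up to a constant 2πk, so neighbouring tiles must be brought to a common offset. The sketch below is one possible approach, not the authors' design: tiles overlap (`step < tile`), and each new tile is shifted by the multiple of 2π that best matches the already-placed pixels in the overlap. The names `tile_starts` and `stitch` are hypothetical.

```python
import numpy as np


def tile_starts(n: int, tile: int, step: int) -> list:
    """Start indices covering [0, n) with tiles of length `tile`, the last one flush with the edge."""
    if n < tile:
        raise ValueError("image dimension must be at least the tile size")
    starts = list(range(0, n - tile + 1, step))
    if starts[-1] != n - tile:
        starts.append(n - tile)
    return starts


def stitch(tiles: dict, shape: tuple, tile: int, step: int) -> np.ndarray:
    """Merge tiles keyed by (row0, col0), each correct only up to a 2*pi*k offset."""
    canvas = np.zeros(shape)
    filled = np.zeros(shape, dtype=bool)
    for r0 in tile_starts(shape[0], tile, step):
        for c0 in tile_starts(shape[1], tile, step):
            t = tiles[(r0, c0)]
            win = (slice(r0, r0 + tile), slice(c0, c0 + tile))
            ov = filled[win]  # pixels already written by earlier tiles
            if ov.any():
                diff = canvas[win][ov] - t[ov]
                # Snap the mean overlap difference to the nearest multiple of 2*pi.
                t = t + 2 * np.pi * np.round(np.mean(diff) / (2 * np.pi))
            canvas[win] = np.where(ov, canvas[win], t)  # keep earlier values in overlaps
            filled[win] = True
    return canvas
```

With real network outputs the overlap differences are noisy, so a median instead of the mean may be more robust; the result is consistent up to one global 2πk offset, inherited from the first tile.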

4. Experimental Results and Analysis

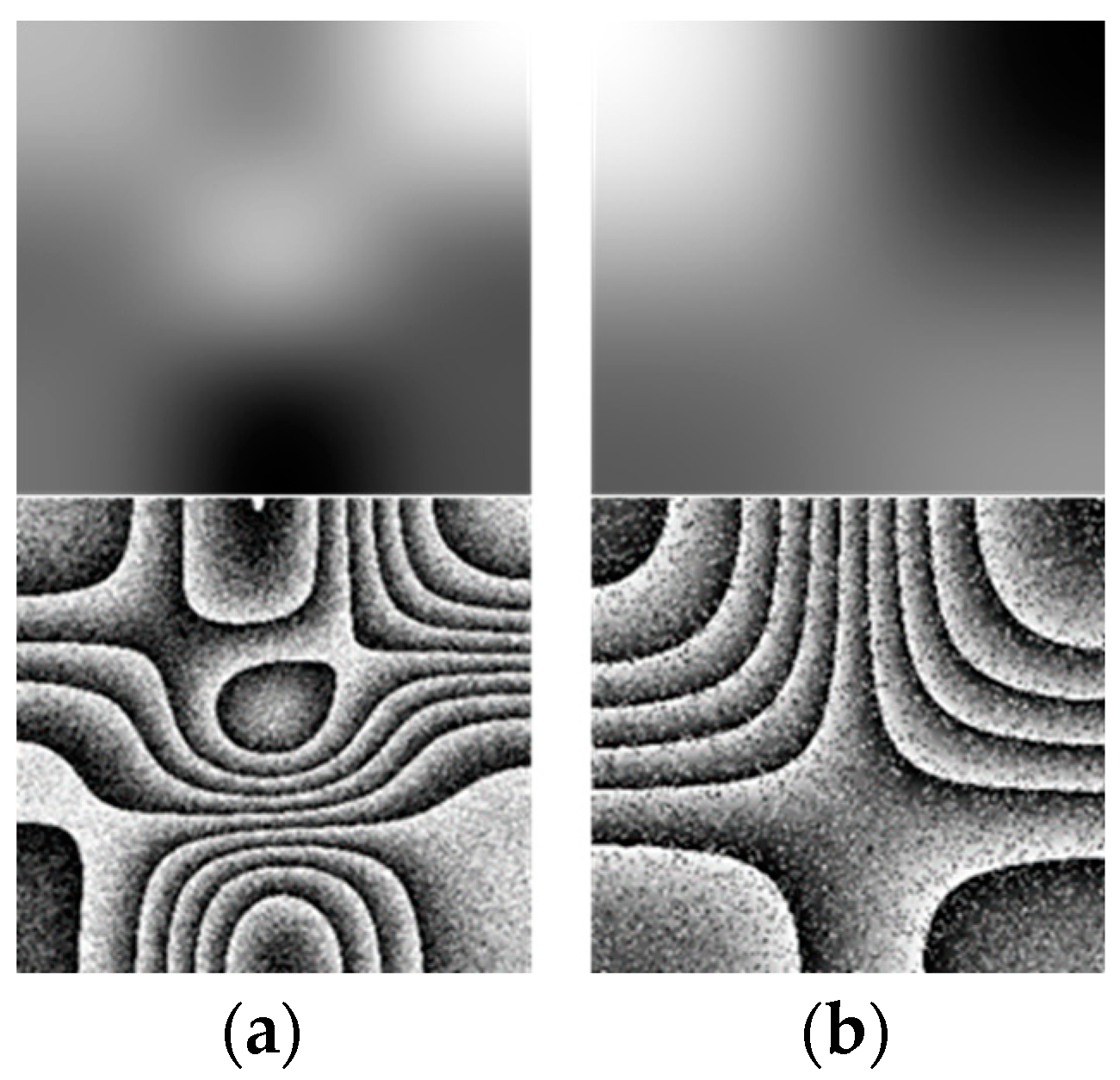

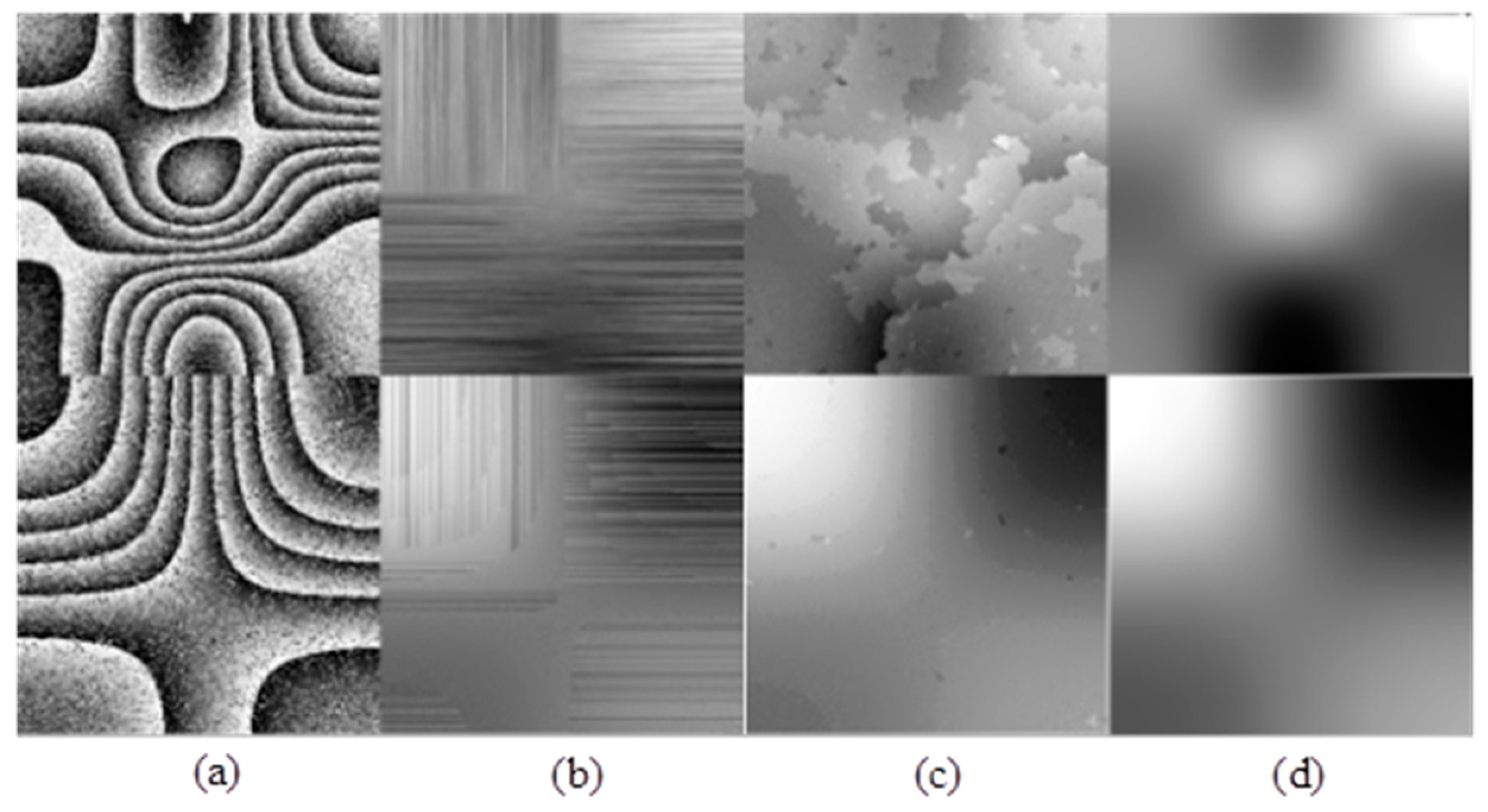

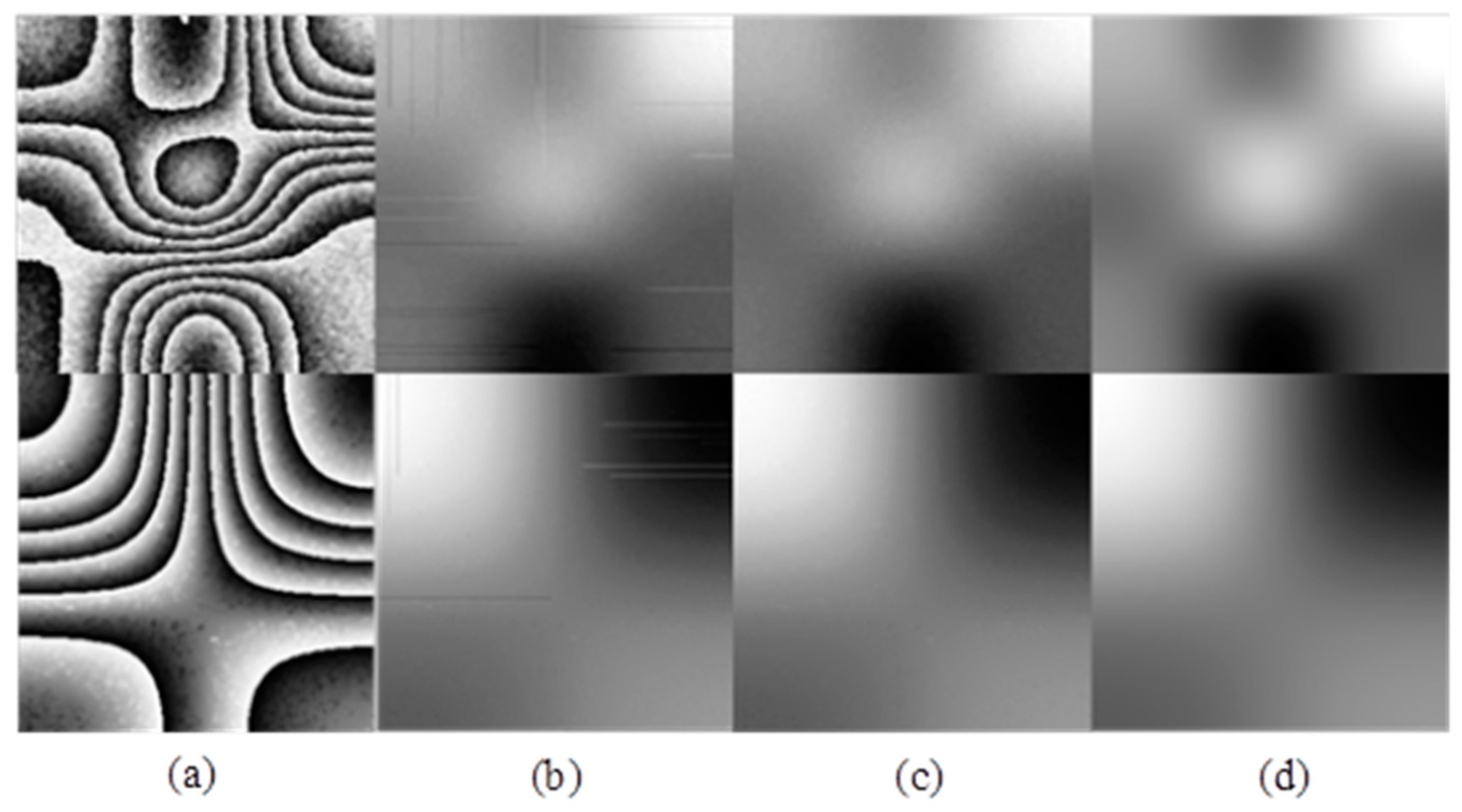

4.1. Image Results of Simulation and Experimental Data Testing

4.2. Result Analysis and Effectiveness Verification

5. Conclusions and Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Unwrapping Method | 256 × 256 | 512 × 512 | 1024 × 1024 |
|---|---|---|---|
| Algorithm based on spiral path | 0.21 | 0.24 | 0.4237 |
| Algorithm based on reliability | 0.31 | 6.5 | 74.84 |
| Res-unet | 1.087 | 1.085 | 1.169 |

| Unwrapping Method | Figure 8a | Figure 8a after Denoising | Figure 8b | Figure 8b after Denoising |
|---|---|---|---|---|
| Algorithm based on spiral path | 0.3069 | 0.8933 | 0.4237 | 0.9312 |
| Algorithm based on reliability | 0.5947 | 0.9797 | 0.8499 | 0.9828 |
| Res-unet | 0.9876 | 0.9876 | 0.9932 | 0.9936 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, B.; Cao, X.; Lan, M.; Wu, C.; Wang, Y. An Anti-Noise-Designed Residual Phase Unwrapping Neural Network for Digital Speckle Pattern Interferometry. Optics 2024, 5, 44-55. https://doi.org/10.3390/opt5010003
