In this subsection, we evaluate the performance of our proposed models for the NILM task using real household smart meter data. Our evaluation is conducted on the UK-DALE and REDD datasets using four standard evaluation metrics: accuracy, F1-score, mean relative error (MRE), and mean absolute error (MAE). These metrics are chosen because they are widely used in previous benchmark studies, allowing for a direct and meaningful comparison with existing methods.

The MRE is especially useful for comparing errors across appliances with different power ranges.
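As a minimal sketch, the four metrics named above can be computed from a ground-truth and a predicted power trace as follows. The function name `nilm_metrics`, the ON threshold, and in particular the MRE normalization (per-sample absolute error divided by the larger of the true and predicted power) are assumptions made for illustration; the exact definitions used in this work are not reproduced in this section.

```python
def nilm_metrics(y_true, y_pred, on_threshold, eps=1e-9):
    """Return accuracy, F1, MRE and MAE for one appliance trace.

    y_true, y_pred: sequences of power readings in watts.
    on_threshold:   power (W) at or above which the appliance counts as ON
                    (hypothetical value; appliance-specific in practice).
    """
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("traces must be non-empty and of equal length")
    n = len(y_true)

    # Classification view: threshold both traces into ON/OFF states.
    on_true = [p >= on_threshold for p in y_true]
    on_pred = [p >= on_threshold for p in y_pred]
    tp = sum(t and p for t, p in zip(on_true, on_pred))
    tn = sum((not t) and (not p) for t, p in zip(on_true, on_pred))
    fp = sum((not t) and p for t, p in zip(on_true, on_pred))
    fn = sum(t and (not p) for t, p in zip(on_true, on_pred))

    accuracy = (tp + tn) / n
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)

    # Regression view: errors on the power values themselves.
    abs_err = [abs(t - p) for t, p in zip(y_true, y_pred)]
    mae = sum(abs_err) / n
    # Assumed MRE variant: normalize each error by max(true, predicted).
    mre = sum(e / max(t, p, eps)
              for e, t, p in zip(abs_err, y_true, y_pred)) / n

    return {"accuracy": accuracy, "f1": f1, "mre": mre, "mae": mae}


# Toy trace (synthetic, not taken from UK-DALE or REDD).
metrics = nilm_metrics([0, 0, 2000, 2000], [0, 100, 1800, 0], on_threshold=1000)
print(metrics)
```

Because the assumed MRE divides by the power level, a 100 W error on a 2000 W kettle and on a 100 W fridge contribute very differently, which is what makes a relative metric comparable across appliances.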

4.4.1. Training Results

To establish a fair comparison, we first trained the publicly available models BERT4NILM [7] and ELECTRIcity [8] and then compared their performance with that of our proposed models. BERT4NILM was trained for 20 epochs following its original implementation [7], which matches the configuration used for our proposed GRU+BERT and Bi-GRU+BERT models. ELECTRIcity, in contrast, was trained for 90 epochs, as specified in its original implementation [8].

Table 3 presents the detailed training results for five common appliances: kettle, fridge, washing machine, microwave, and dishwasher. The results demonstrate that our proposed models consistently achieve a competitive performance in terms of all four metrics while maintaining comparable training times.

All models were trained using an NVIDIA A100 GPU, and the corresponding training times are reported to ensure transparency and reproducibility. The best results for each model were obtained after several training runs to ensure stability. This training analysis, which is reported in Table 3, serves two purposes: (1) to identify signs of overfitting by examining discrepancies between training and test scores; and (2) to evaluate the training efficiency in terms of convergence speed and computational overhead. These insights help highlight the trade-offs between performance and practicality, particularly in real-world NILM deployments where fast, efficient training is critical.

In Table 4, we summarize the model complexity and computational cost of the compared approaches, reporting trainable parameters, approximate floating-point operations (FLOPs) per input window, memory usage, and wall-clock inference times on the CPU and the NVIDIA A100 GPU. Our analysis shows that Bi-GRU+BERT has the highest parameter count, approximately four times that of BERT4NILM and three times that of GRU+BERT, while ELECTRIcity exhibits high FLOPs despite having the fewest parameters, indicating more expensive per-sample operations. Memory usage follows a similar trend, with Bi-GRU+BERT requiring less memory than ELECTRIcity but more than the other baselines. Inference times on both the CPU and the GPU scale approximately with FLOPs: both proposed models are consistently faster than ELECTRIcity on the GPU, and GRU+BERT is also faster on the CPU.

In addition, training times per epoch on A100 remain comparable across models, suggesting that the increased model size does not lead to prohibitive training overhead. These results indicate that the model complexity, while higher for Bi-GRU+BERT, does not translate into impractical deployment constraints compared to the existing models. Specifically, GRU+BERT achieves strong predictive performance with modest computational cost, requiring only 18 ms per input window on the CPU and 0.5 ms on the GPU, making it well-suited for resource-limited environments such as residential smart meters. Bi-GRU+BERT, while larger and more computationally intensive, achieves the highest predictive accuracy and still maintains real-time GPU inference (1 ms per window) and reasonable CPU inference (42 ms per window). Therefore, both models are viable for practical NILM deployment: GRU+BERT provides an efficient and lighter-weight solution for residential scenarios, whereas Bi-GRU+BERT is more suitable when higher accuracy is prioritized and GPU acceleration is available.
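To put the quoted per-window latencies in perspective, the short calculation below converts them into an upper bound on throughput. It is only a back-of-the-envelope sketch: it assumes windows are processed one at a time with no batching, and the helper name `windows_per_second` is introduced here for illustration.

```python
# Per-window inference latencies in milliseconds, as quoted in the text.
latency_ms = {
    ("GRU+BERT", "CPU"): 18.0,
    ("GRU+BERT", "GPU"): 0.5,
    ("Bi-GRU+BERT", "CPU"): 42.0,
    ("Bi-GRU+BERT", "GPU"): 1.0,
}


def windows_per_second(ms_per_window):
    """Sequential throughput implied by a per-window latency (assumes no batching)."""
    return 1000.0 / ms_per_window


throughput = {key: windows_per_second(ms) for key, ms in latency_ms.items()}
for (model, device), rate in throughput.items():
    print(f"{model:12s} {device}: {rate:7.1f} windows/s")
```

Under these assumptions even the slowest configuration (Bi-GRU+BERT on the CPU) handles roughly 24 windows per second, whereas household meter readings typically arrive at intervals of seconds.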

4.4.2. Test Results

We benchmark the test performance of our models against several state-of-the-art baselines. In Tables 5–9, we provide an appliance-wise comparison across the four evaluation metrics, enabling a comprehensive assessment of both accuracy and generalization. These test results are critical in confirming that our proposed models not only perform well on the training data but also generalize effectively to unseen scenarios, which is an essential requirement for real-world NILM applications. Moreover, we selected the hyperparameters through a combination of manual tuning and alignment with configurations commonly used in recent transformer-based NILM studies [7, 8, 9, 25], ensuring both a fair comparison and optimal performance in our specific setup. We also acknowledge that reproduced results for these baselines may vary slightly from the originally reported numbers due to differences in initialization, data preprocessing, train/test splits, and implementation details, which are often not fully specified in the original publications.

To maintain consistency with previous state-of-the-art models, we initially trained our models for 20 epochs, following common practices reported in prior works such as GRU+, LSTM+, CNN, CTA-BERT, and BERT4NILM. The reported results for GRU+, LSTM+, CNN, and CTA-BERT were taken from [9]. For BERT4NILM [7] and ELECTRIcity [8], we utilized the publicly available code to reproduce and evaluate them on our setup and also provide the results reported in their original implementations.

Tables 5–9 present the performance of all models on the UK-DALE test set, with the best results highlighted in bold.

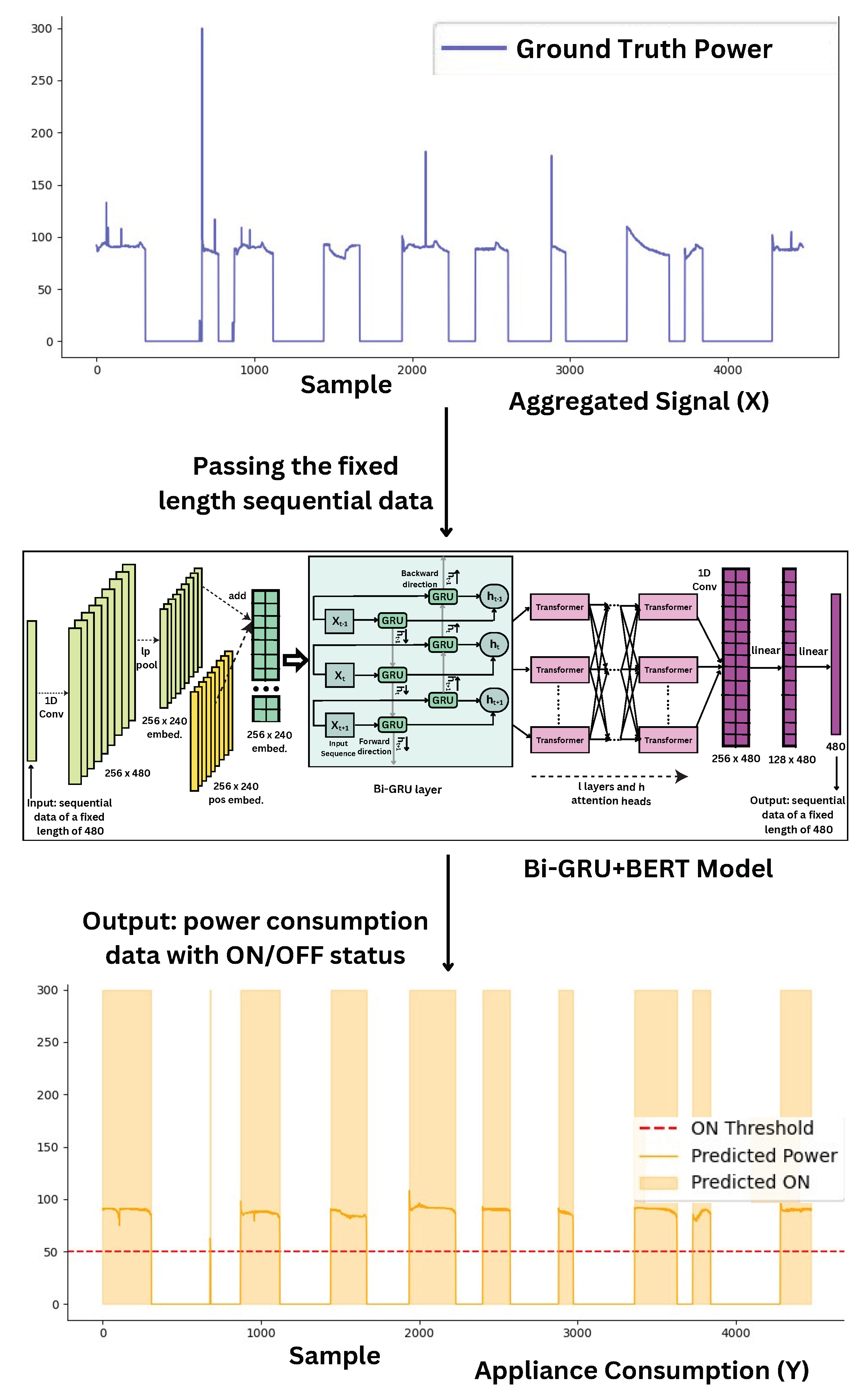

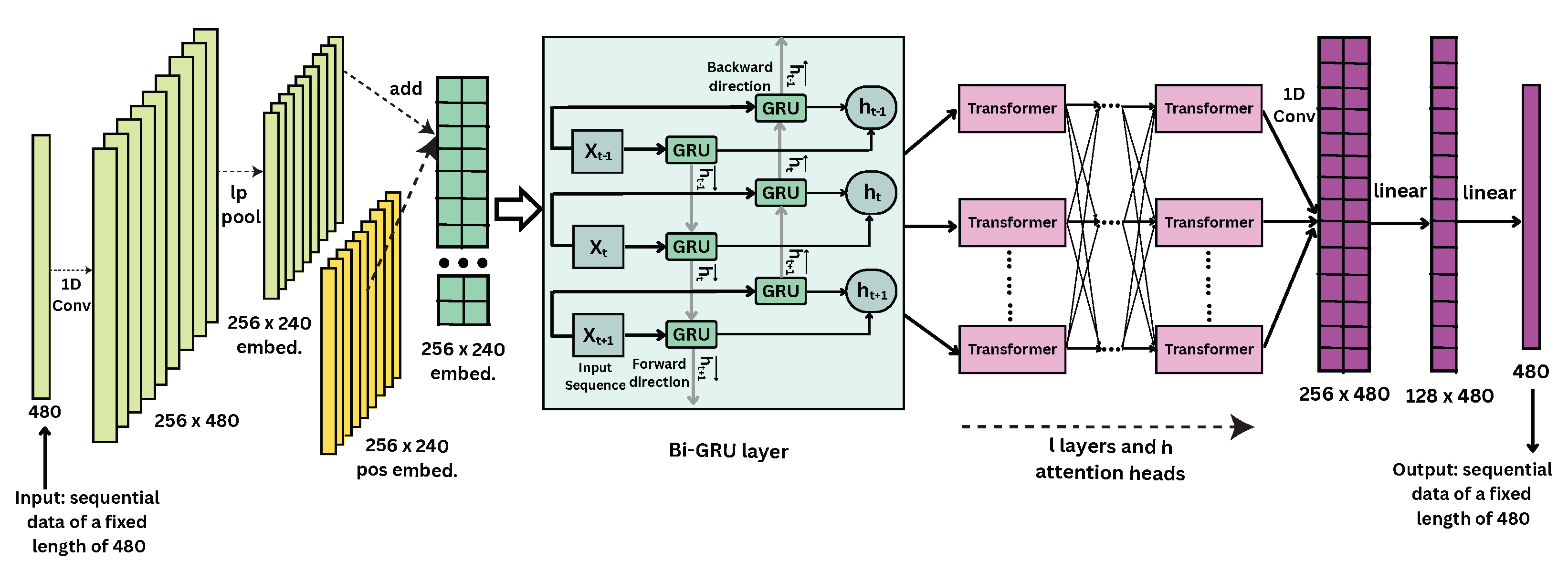

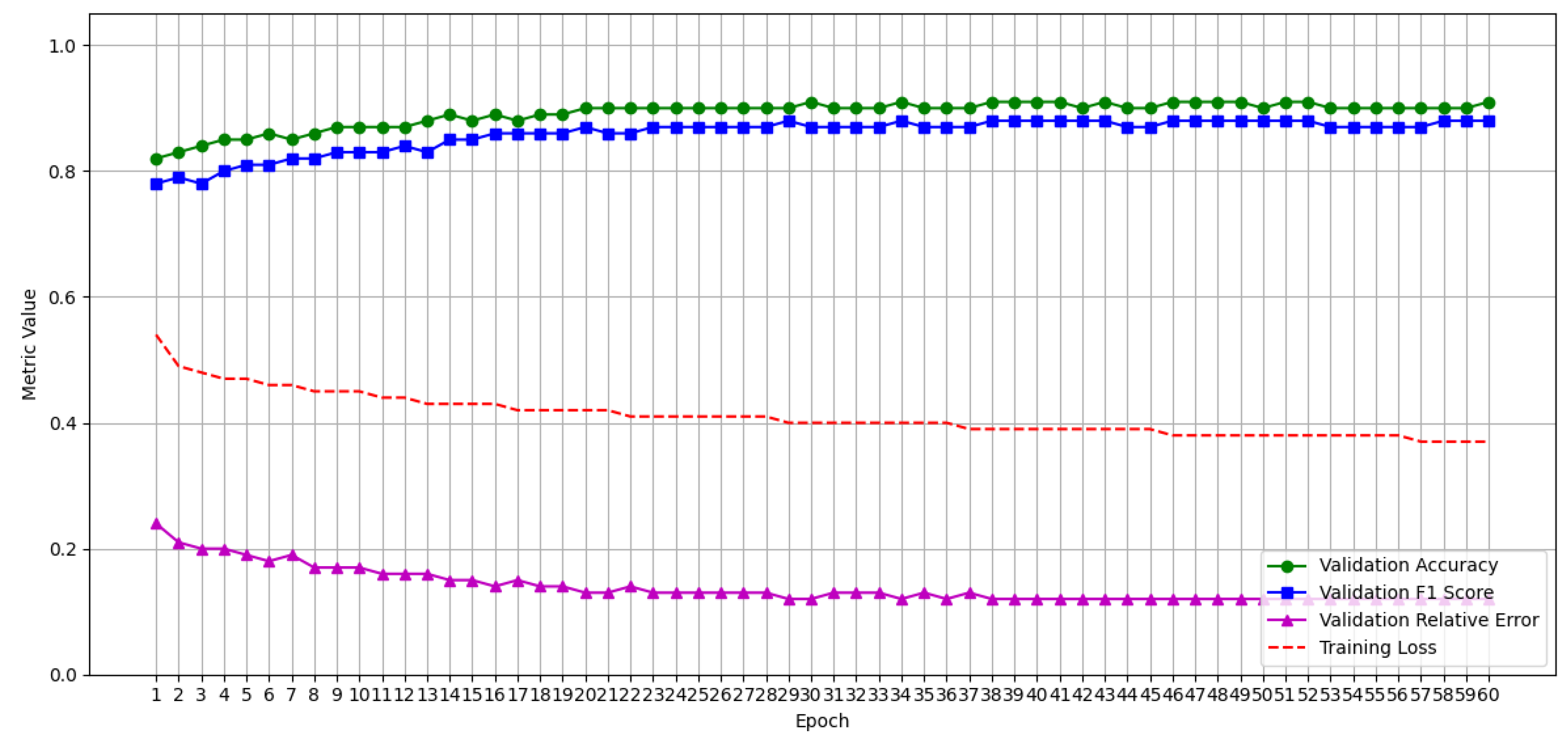

Additionally, to enable a more comprehensive comparison with models trained for longer, such as ELECTRIcity [8], which was trained for 90 epochs, we extended our training until the error rates of our proposed models converged, which occurred at around 60 epochs, as shown in Figure 3. With this extended training, our models outperformed ELECTRIcity [8] across all appliances except the kettle and dishwasher, where they achieved comparable performance. Moreover, our training budget reflects realistic NILM deployment settings, where faster convergence and lower computational overhead are key performance indicators [29, 30]. Even within these constraints, our models consistently demonstrate robust generalization and competitive performance.

As shown in Table 5 for the kettle, GRU+BERT and Bi-GRU+BERT attain F1-scores of 0.798 and 0.804, respectively, with an accuracy of 0.997 and low MRE and MAE values. Although slightly behind CTA-BERT in F1-score, our models still deliver highly reliable disaggregation with minimal error, especially considering the short duration and high-frequency switching nature of kettle usage. These brief and abrupt power bursts are harder to detect consistently, which may explain the slight drop in F1-score compared to models [9] specifically designed with mechanisms such as time-aware masking or dilation for capturing transient behaviors.

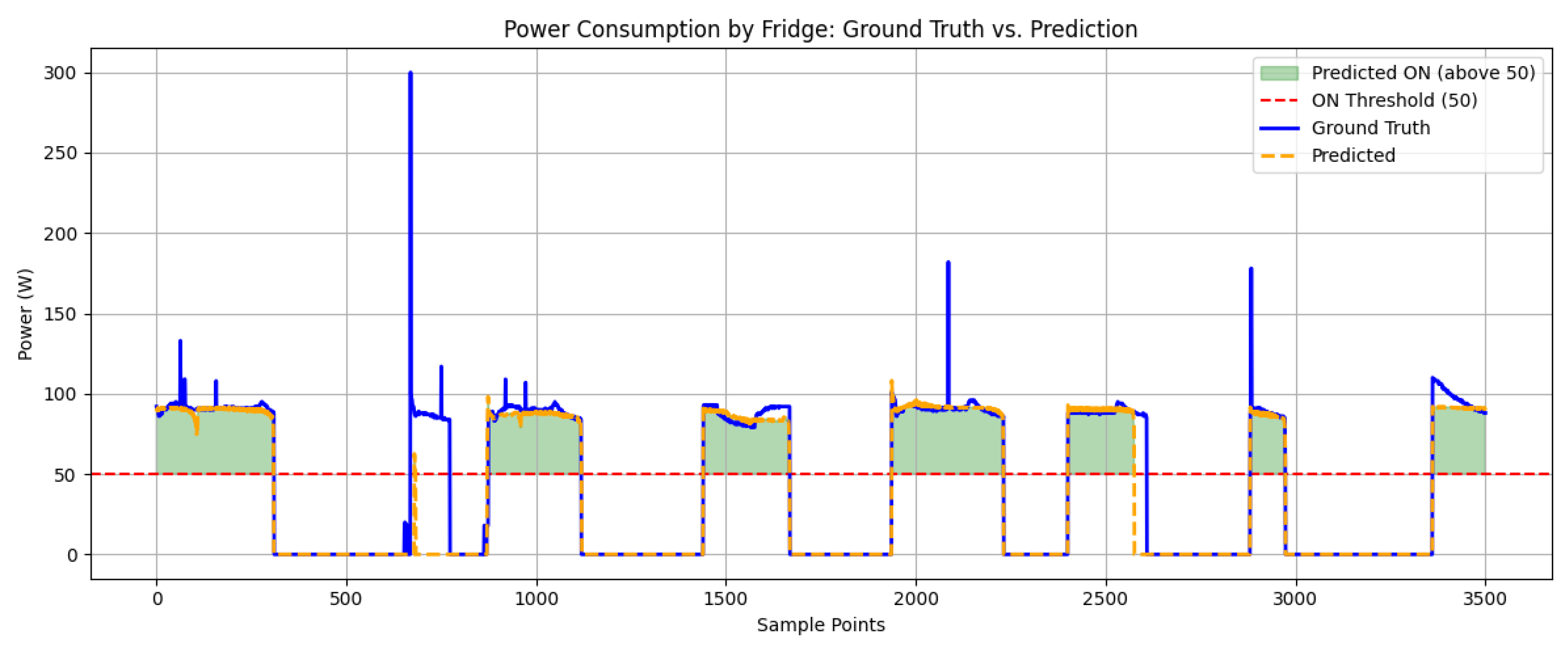

The fridge results in Table 6 show that both GRU+BERT and Bi-GRU+BERT outperform existing approaches, achieving the highest F1-scores (0.871) and improved accuracy (0.887 and 0.889). Despite being trained for significantly fewer epochs, our proposed models surpass other models, demonstrating their robustness in detecting long, continuous appliance cycles. Figure 4 further illustrates the effectiveness of the Bi-GRU+BERT model in tracking real-time fridge power usage and corresponding ON/OFF states, capturing the cyclical nature of the appliance with high temporal accuracy.

The results for the washing machine are summarized in Table 7, where both of our proposed models, trained for 20 epochs, demonstrate substantial improvements over the baseline methods. Both achieve an F1-score of 0.765 and maintain high accuracies of 0.992 and 0.993, respectively. Additionally, both models achieve a comparatively low MRE (0.015), indicating strong precision in estimating appliance-level power consumption. These results suggest that the combination of transformer layers with GRU-based temporal modeling effectively captures the washing machine’s long and complex operational cycles. When training is extended to 60 epochs, both proposed models improve further, surpassing the previously best-performing model, ELECTRIcity. Specifically, GRU+BERT and Bi-GRU+BERT attain F1-scores of 0.877 and 0.857, respectively, with an accuracy of 0.996 and MREs of 0.010 and 0.011. These findings highlight the robustness and scalability of our models in learning appliance behavior across different training durations.

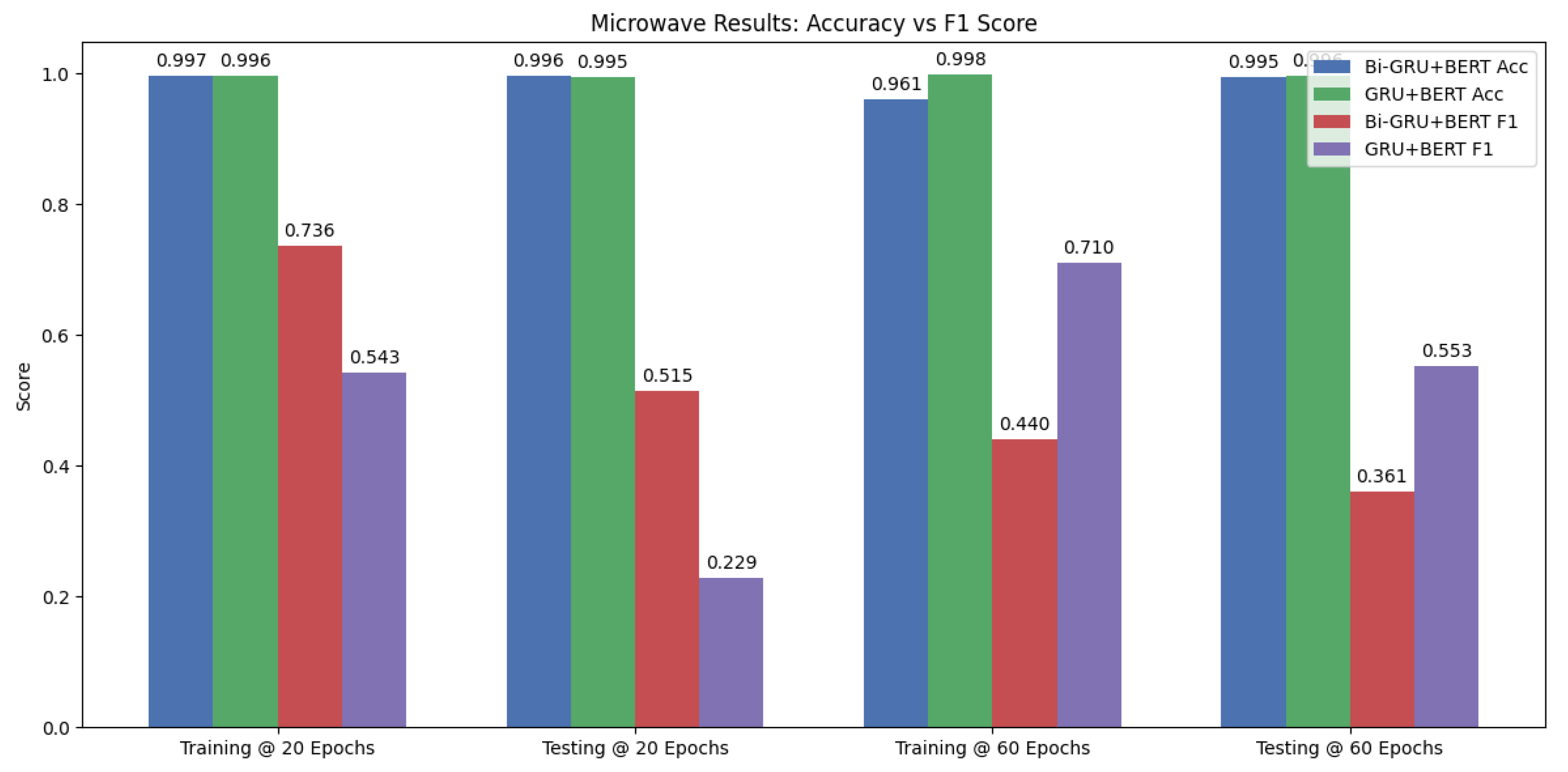

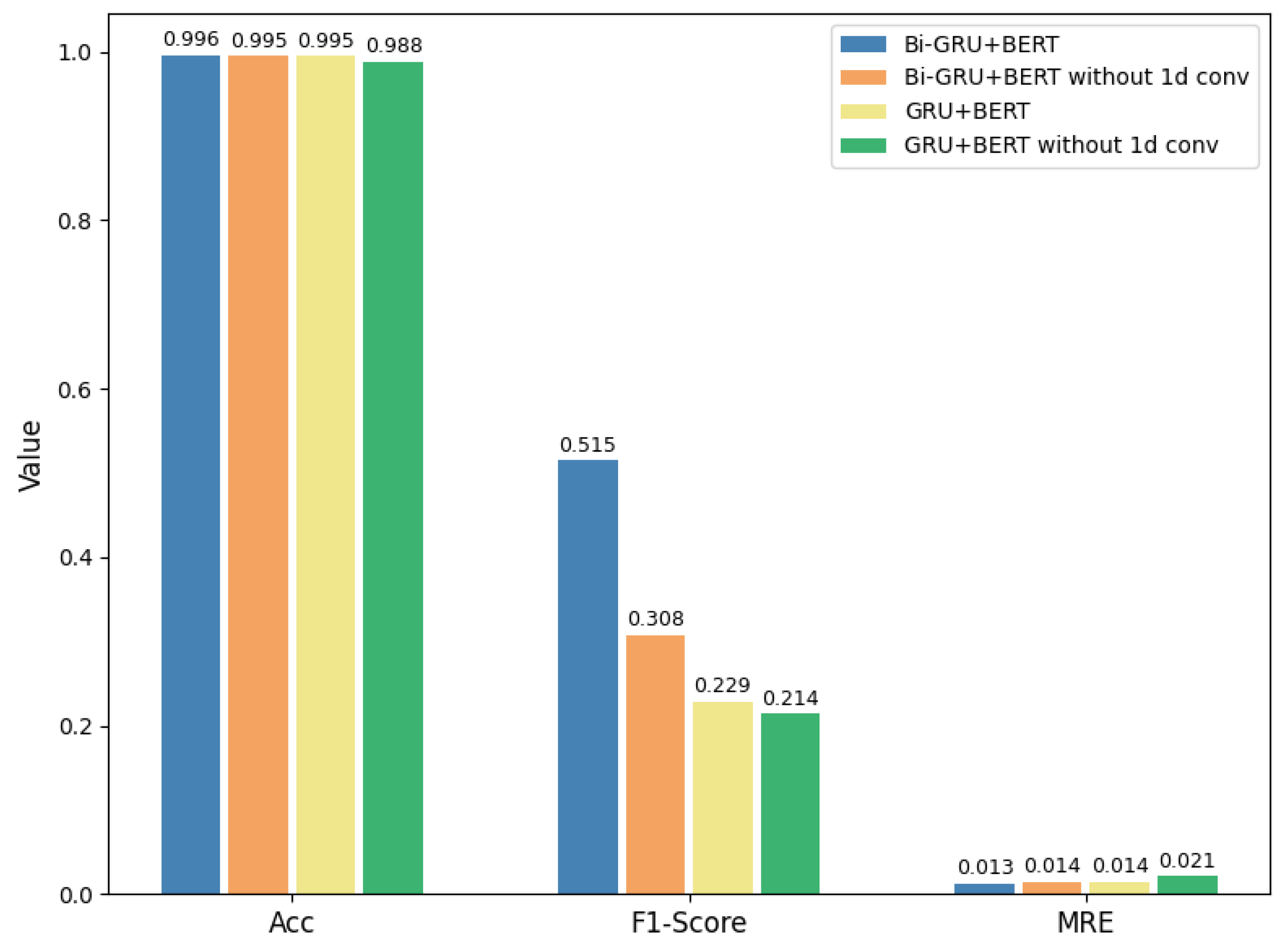

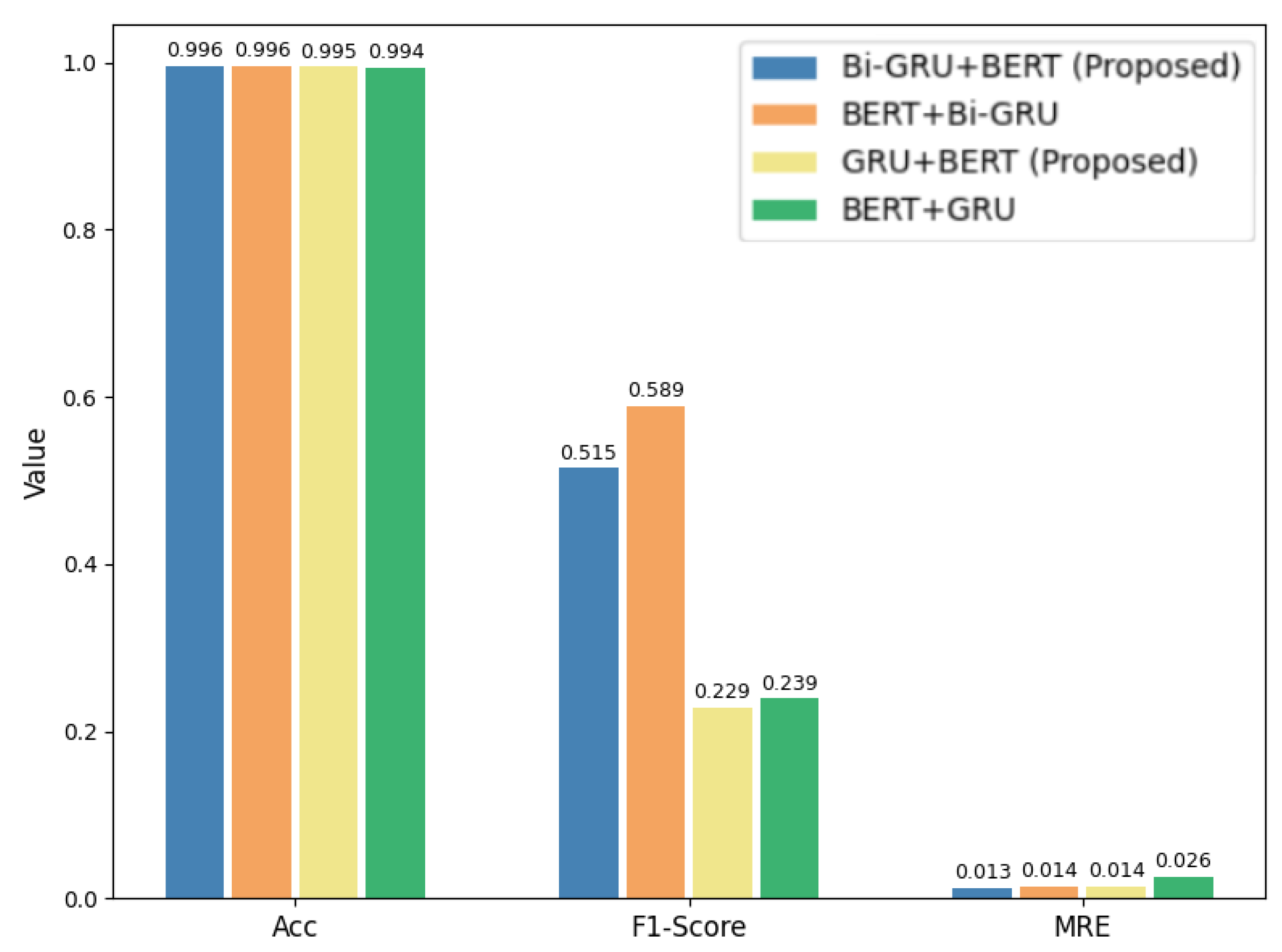

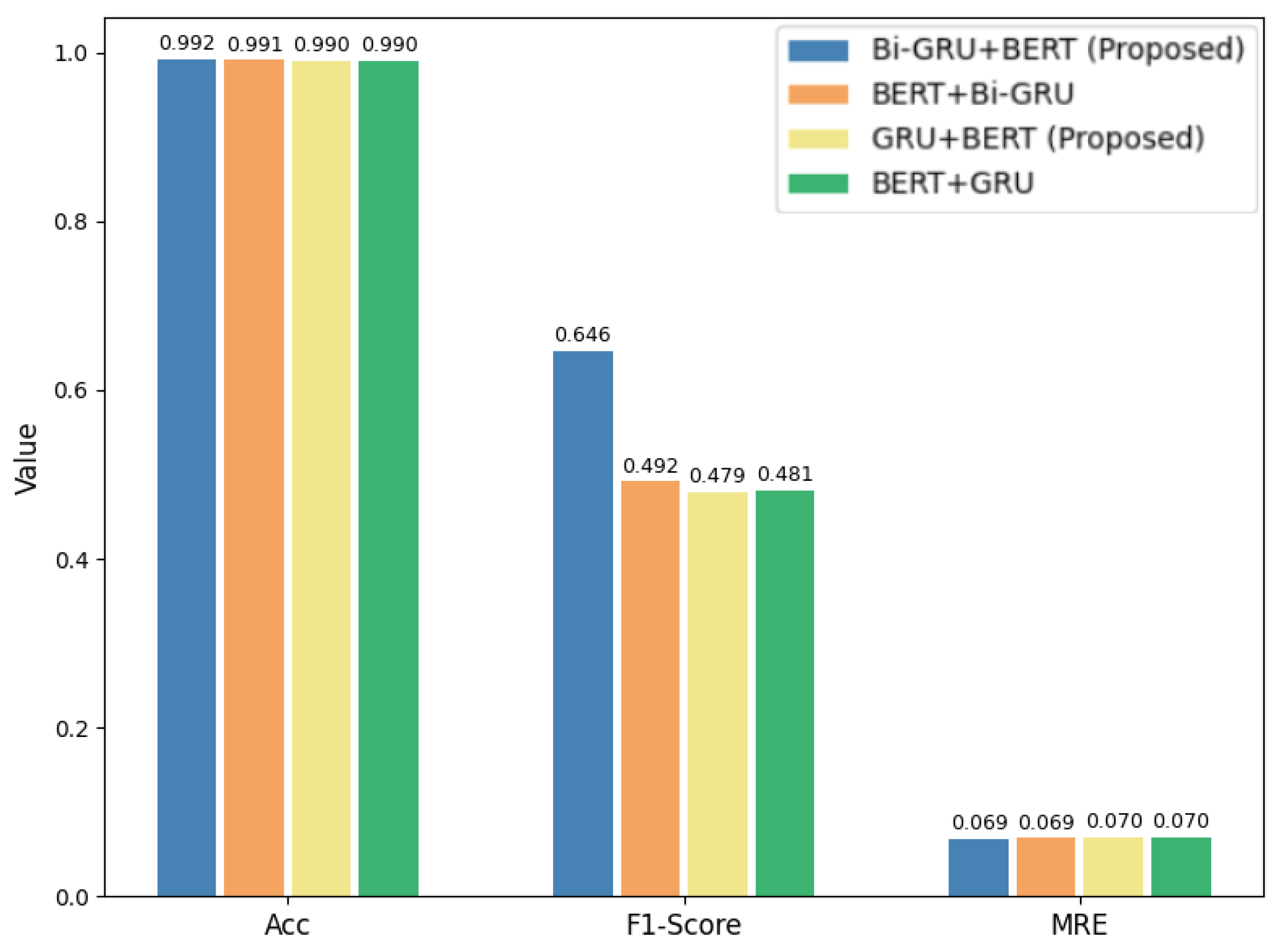

The results on the microwave shown in Table 8 demonstrate that our proposed Bi-GRU+BERT model achieves a test F1-score of 0.515, clearly outperforming BERT4NILM (0.014), ELECTRIcity (0.277), and CTA-BERT (0.209). It also achieves a lower MAE of 5.59 and MRE of 0.013, highlighting its effectiveness in accurately detecting the short and irregular usage patterns of this appliance. However, when trained for 60 epochs, Bi-GRU+BERT exhibits clear signs of overfitting: its test F1-score declines to 0.361 while the MAE increases, despite the training F1-score improving to 0.440. This suggests that the model’s bidirectional recurrence, while helpful for modeling temporal dependencies, may lead to memorization of sparse usage data such as microwave events when exposed to extended training. In contrast, GRU+BERT benefits from its simpler architecture and demonstrates better generalization at 60 epochs, achieving the highest test F1-score of 0.553 and the lowest MAE of 5.24.

The bar chart in Figure 5 illustrates this trend. While Bi-GRU+BERT performs best at 20 epochs, its generalization degrades at 60 epochs. GRU+BERT, on the other hand, shows the opposite behavior with stronger test performance after longer training. This indicates that GRU+BERT can better leverage extended training cycles, whereas Bi-GRU+BERT requires stricter regularization or early stopping to avoid overfitting [31]. This behavior is further supported by the training and validation curves for one training instance shown in Figure 6, where Bi-GRU+BERT’s validation F1-score begins to plateau and then decline after around 20 epochs despite a steady decrease in training loss. This divergence between training and validation performance is a clear sign of overfitting.
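The early-stopping remedy mentioned above can be sketched as a small patience-based monitor on the validation F1-score. This is an illustrative sketch only, not the training procedure used in this work; the class name `EarlyStopping`, its parameters, and the validation values are hypothetical.

```python
class EarlyStopping:
    """Stop training when a validation score stops improving."""

    def __init__(self, patience=5, min_delta=0.0):
        self.patience = patience      # epochs to wait without improvement
        self.min_delta = min_delta    # minimum gain that counts as improvement
        self.best_score = float("-inf")
        self.best_epoch = None
        self.bad_epochs = 0

    def step(self, epoch, val_score):
        """Record one epoch; return True if training should stop."""
        if val_score > self.best_score + self.min_delta:
            self.best_score = val_score
            self.best_epoch = epoch
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


# Synthetic validation F1 values that rise, plateau, then decline
# (illustrative only; not read from Figure 6).
val_f1 = [0.30, 0.40, 0.48, 0.50, 0.50, 0.49, 0.47, 0.45]

stopper = EarlyStopping(patience=3)
stopped_at = None
for epoch, score in enumerate(val_f1, start=1):
    if stopper.step(epoch, score):
        stopped_at = epoch
        break

print(f"stopped at epoch {stopped_at}, best epoch {stopper.best_epoch}")
```

In a real training loop the model weights from `best_epoch` would be checkpointed and restored, so that the reported test score corresponds to the point before validation performance began to decline.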

Additionally, models like GRU+BERT, BERT4NILM, and ELECTRIcity show signs of overfitting even at early training stages. For example, GRU+BERT achieves a training F1-score of 0.543 at 20 epochs, which already drops to 0.229 on the test set, indicating limited generalization. Similarly, BERT4NILM and ELECTRIcity report high training F1-scores of 0.697 and 0.677, respectively, but their test scores fall to 0.014 and 0.277. This drop is primarily due to the microwave’s infrequent and irregular usage, resulting in very few positive instances during training. As a result, models tend to memorize these limited patterns rather than generalize to unseen data.
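The size of these drops can be made explicit by computing the training-minus-test F1 gap for each model. The sketch below uses only the microwave scores quoted in the paragraph above; the helper name `generalization_gap` is introduced here for illustration.

```python
# Microwave F1-scores as quoted in the text: (training, test).
microwave_f1 = {
    "GRU+BERT": (0.543, 0.229),
    "BERT4NILM": (0.697, 0.014),
    "ELECTRIcity": (0.677, 0.277),
}


def generalization_gap(train_score, test_score):
    """Training score minus test score; larger values suggest overfitting."""
    return train_score - test_score


gaps = {
    model: round(generalization_gap(train, test), 3)
    for model, (train, test) in microwave_f1.items()
}

# List models from the largest gap to the smallest.
for model, gap in sorted(gaps.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{model:12s} gap = {gap:.3f}")
```

On these figures BERT4NILM shows the largest gap (0.683), followed by ELECTRIcity (0.400) and GRU+BERT (0.314).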

The bidirectional GRU layer in our architecture addresses this issue by learning temporal dependencies from both past and future contexts, enabling the model to better capture transient power consumption behaviors. This makes Bi-GRU+BERT, trained for 20 epochs, particularly well-suited for handling the short-duration nature of appliances like microwaves. Figure 7 illustrates this advantage: Figure 7a presents BERT4NILM’s predictions, while Figure 7b shows the output from Bi-GRU+BERT. As is evident, our model generates a more stable and consistent power consumption profile over time, demonstrating its superior generalization capability. Furthermore, Figure 8 provides a zoomed-in view of Bi-GRU+BERT’s predictions, accurately capturing the appliance’s ON/OFF transitions and fine-grained power fluctuations at 20 epochs.

The dishwasher results in Table 9 show that GRU+BERT outperforms Bi-GRU+BERT, achieving F1-scores of 0.777 versus 0.687 at 20 epochs and 0.750 versus 0.688 at 60 epochs. This performance gap suggests that while Bi-GRUs are often beneficial for capturing temporal dependencies, their added complexity may introduce redundancy or noise when modeling appliances with highly structured and sequential multi-phase cycles [31]. Specifically, over-smoothing from bidirectional context can occur because Bi-GRUs combine information from both past and future time steps, which may blur the sharp transitions between operational phases such as wash, rinse, and dry. In such scenarios, the unidirectional GRU appears more effective at preserving the temporal causality needed to track and distinguish operational phases, resulting in better generalization and more reliable event detection.

Lastly, Table 10 reports the average of each metric across all five appliances in the test set (Tables 5–9), following the practice adopted by several related works [7, 9]. Here, our proposed models demonstrate consistently strong and competitive performance across both short and extended training durations. At 20 epochs, Bi-GRU+BERT achieves the highest average F1-score (0.728) and accuracy (0.971), outperforming all baseline and state-of-the-art models. This highlights the model’s effectiveness in capturing rich temporal dynamics and contextual patterns under limited training.
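The averaging is an unweighted mean over appliances, which can be checked against the per-appliance F1-scores quoted earlier for Bi-GRU+BERT. The sketch below assumes that the kettle and fridge values quoted above are the 20-epoch results; the helper name `macro_average` is introduced here for illustration.

```python
# Test F1-scores of Bi-GRU+BERT on UK-DALE, as quoted in the text
# (assumed here to be the 20-epoch results for every appliance).
f1_bigru_bert_20 = {
    "kettle": 0.804,
    "fridge": 0.871,
    "washing machine": 0.765,
    "microwave": 0.515,
    "dishwasher": 0.687,
}


def macro_average(per_appliance):
    """Unweighted mean of one metric across appliances."""
    return sum(per_appliance.values()) / len(per_appliance)


avg_f1 = macro_average(f1_bigru_bert_20)
print(f"{avg_f1:.3f}")  # 0.728
```

The result agrees with the average F1-score of 0.728 reported for Bi-GRU+BERT at 20 epochs. Because every appliance carries equal weight, a weak appliance such as the microwave pulls the average down as much as a strong one pulls it up.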

However, when trained for 60 epochs, GRU+BERT becomes the top performer, achieving the highest average F1-score (0.770) and matching the best accuracy (0.971), while also maintaining competitive error metrics (MRE: 0.143, MAE: 13.01). In contrast, Bi-GRU+BERT shows a slight drop in F1-score (0.712) despite maintaining the lowest average MRE (0.142), suggesting that the added complexity of bidirectional modeling may introduce diminishing returns during extended training. These averaged results confirm that combining GRU-based temporal learning with transformers leads to robust NILM performance, effectively balancing accuracy, generalization, and error reduction across a variety of appliance types and training settings.

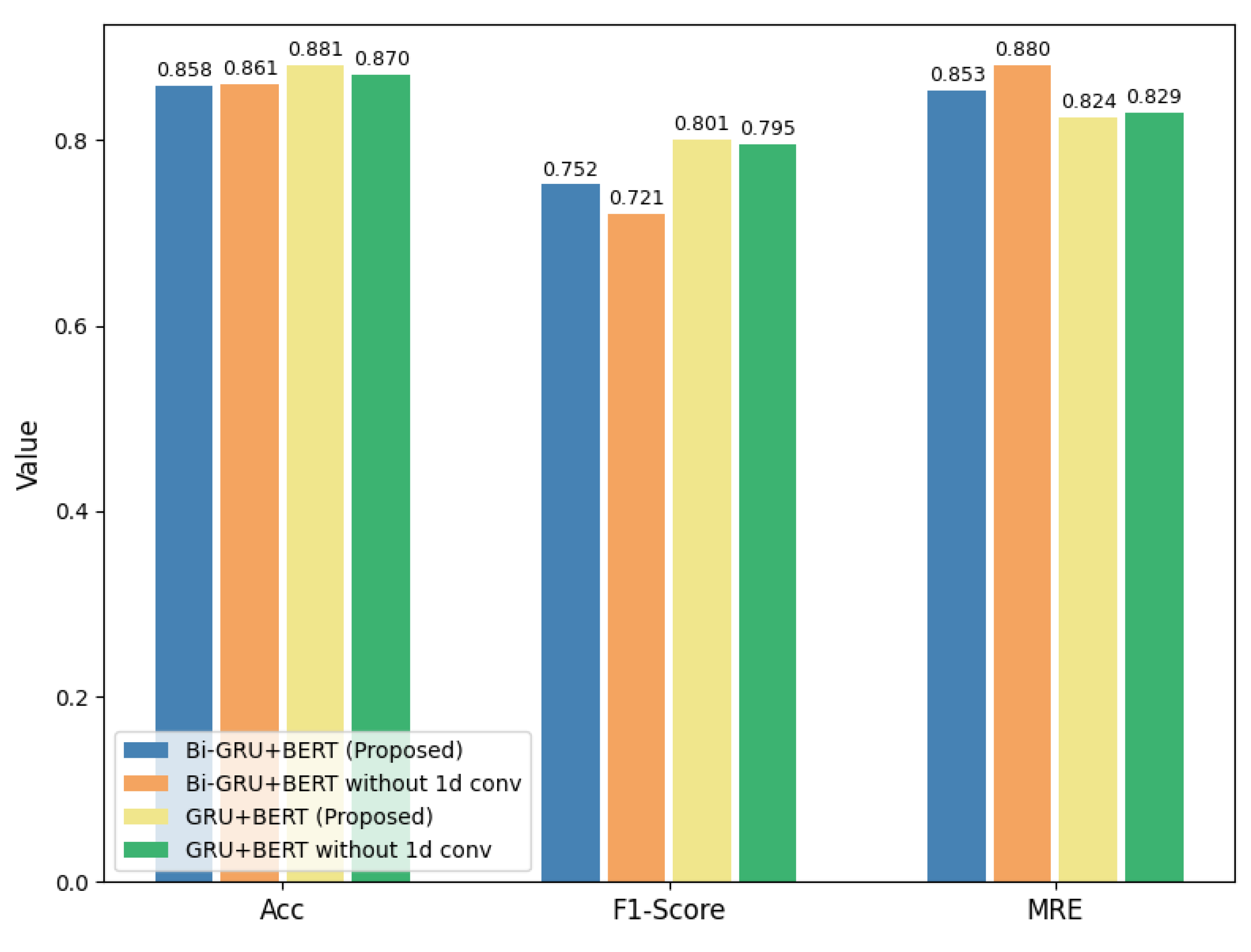

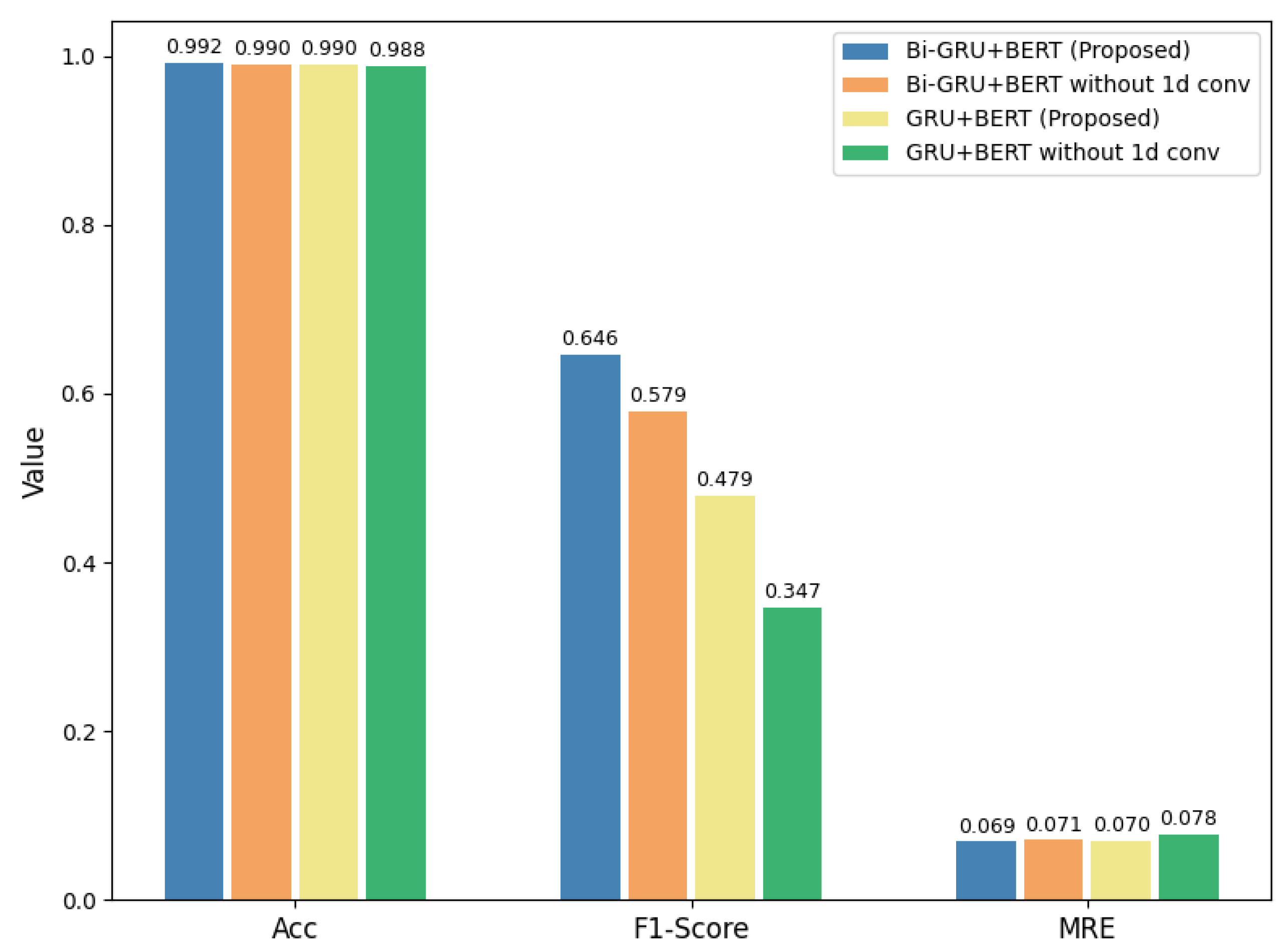

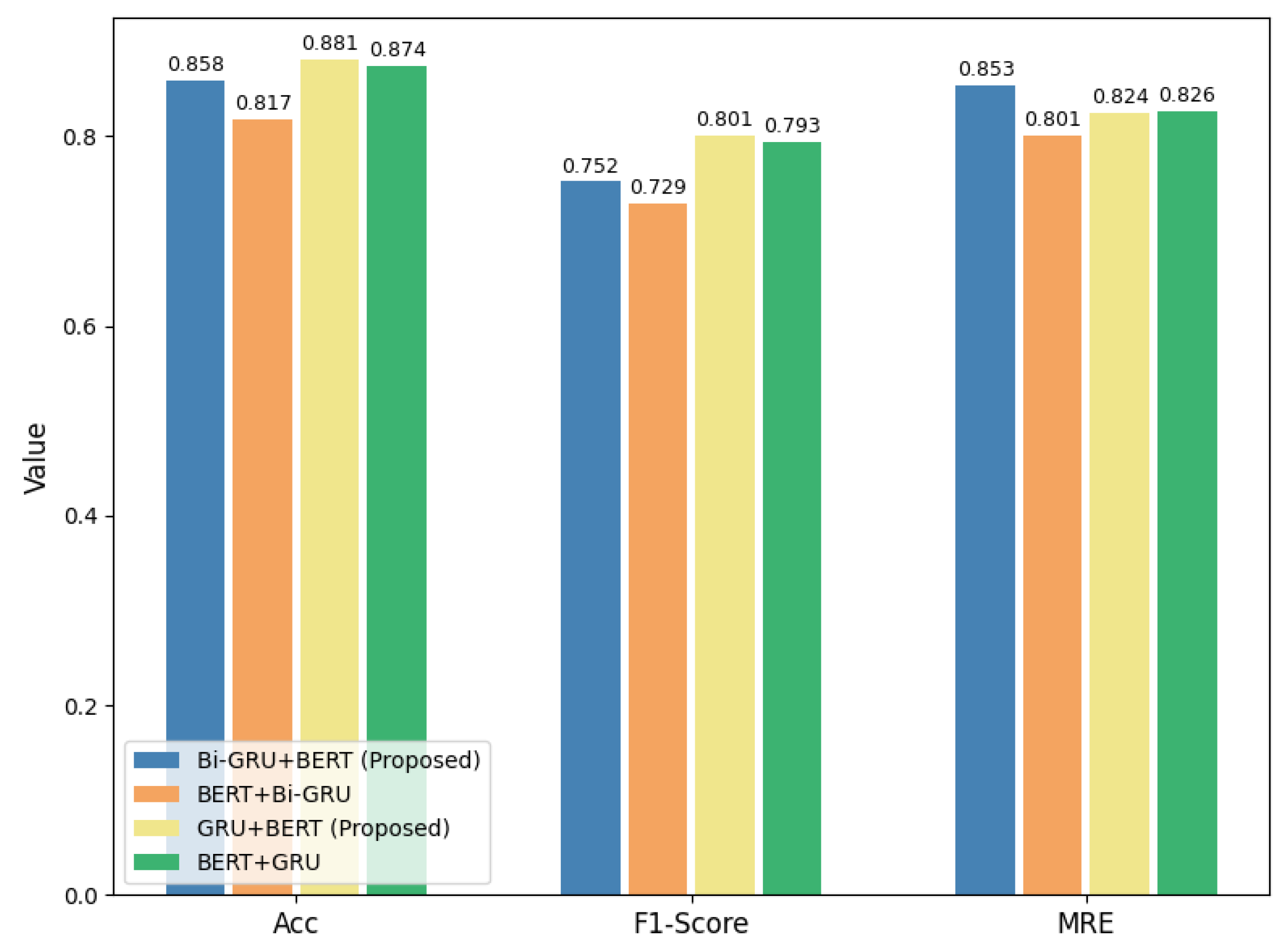

Table 11 presents a comparison of our proposed models against existing benchmark approaches on the REDD dataset. The results show that our GRU+BERT and Bi-GRU+BERT models achieve competitive performance across multiple appliances. For the refrigerator, CTA-BERT achieves the highest accuracy (0.887) and lowest MAE (30.69 W), while GRU+BERT achieves the best F1-score (0.801). On the microwave, Bi-GRU+BERT obtains the highest F1-score (0.646) with low error, whereas CTA-BERT and ELECTRIcity perform best in terms of accuracy and MRE. For the dishwasher, BERT4NILM demonstrates superior accuracy (0.969) and lowest MAE (20.49 W), with Bi-GRU+BERT attaining the highest F1-score (0.580). Averaged across appliances, CTA-BERT consistently provides the best balance of accuracy (0.960), F1-score (0.632), and error metrics, but our GRU-based BERT variants remain competitive, particularly in terms of F1-score and error reduction.

4.4.3. Cross-Dataset Performance Evaluation

To assess the generalization ability of our proposed models, we conduct a cross-dataset evaluation, comparing them with BERT4NILM [7]. For fairness, BERT4NILM was run under the same training settings as our models, using the same houses from UK-DALE for training and the same house from REDD for testing.

Table 12 presents the cross-dataset evaluation results. For the microwave, both proposed models substantially outperformed BERT4NILM in terms of F1-score, with GRU+BERT and Bi-GRU+BERT achieving 0.403 and 0.328, respectively, compared to 0.0 for BERT4NILM. These results demonstrate improved detection of ON/OFF transitions, while MAE and MRE remained nearly identical across all models.

Similarly, for the fridge, our proposed models again delivered superior F1-scores (0.574 for GRU+BERT and 0.542 for Bi-GRU+BERT), whereas BERT4NILM failed to detect any events, obtaining an F1-score of 0.0. Although accuracy slightly decreased for the proposed models, the gain in F1-score indicates a stronger capability in capturing appliance state changes. Both proposed variants also reduced the MRE relative to BERT4NILM, reflecting more consistent energy estimation.

In contrast, results were mixed for the dishwasher. GRU+BERT achieved very high accuracy (0.946) and the lowest MAE (29.20 W) and MRE (0.050), but its F1-score remained 0.0, suggesting difficulty in distinguishing ON/OFF events despite accurate aggregate consumption prediction. Bi-GRU+BERT, however, underperformed across all metrics, even compared to BERT4NILM.

These findings are consistent with observations in recent work by Varanasi et al. [21], who evaluated cross-dataset performance using the UK-DALE and REFIT datasets for training and REDD for testing. Importantly, they noted that many models attained high accuracies but low F1-scores, and even zero scores for certain appliances such as the dishwasher, due to very sparse activations in the testing period. This aligns with our findings, where accuracy and MAE can appear strong even when ON/OFF detection, indicated by the F1-score, is weak.
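The effect of sparse activations on these two metrics can be reproduced with a small synthetic example. The data below are invented purely for illustration (an appliance that is ON for 10 of 1000 samples and a model that never predicts ON); they are not taken from REDD, and the helper name `accuracy_and_f1` is an assumption.

```python
def accuracy_and_f1(on_true, on_pred):
    """Accuracy and F1-score for boolean ON/OFF state sequences."""
    tp = sum(t and p for t, p in zip(on_true, on_pred))
    tn = sum((not t) and (not p) for t, p in zip(on_true, on_pred))
    fp = sum((not t) and p for t, p in zip(on_true, on_pred))
    fn = sum(t and (not p) for t, p in zip(on_true, on_pred))

    accuracy = (tp + tn) / len(on_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return accuracy, f1


# Synthetic test period: the appliance is ON for 10 of 1000 samples.
on_true = [False] * 990 + [True] * 10
# A degenerate model that never predicts ON.
on_pred = [False] * 1000

accuracy, f1 = accuracy_and_f1(on_true, on_pred)
print(accuracy, f1)  # 0.99 0.0
```

The always-OFF predictor scores 99% accuracy while detecting no events at all, which is why the F1-score is the more informative metric for rarely used appliances.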

Overall, the cross-dataset results suggest that integrating GRUs with BERT significantly enhances the ability to generalize appliance ON/OFF event detection across unseen datasets, particularly for microwave and fridge. While challenges remain for appliances with sparse or irregular usage patterns, e.g., dishwasher, the proposed models demonstrate stronger robustness compared to BERT4NILM in cross-dataset NILM scenarios.