Improving Remote Access Trojans Detection: A Comprehensive Approach Using Machine Learning and Hybrid Feature Engineering

Abstract

1. Introduction

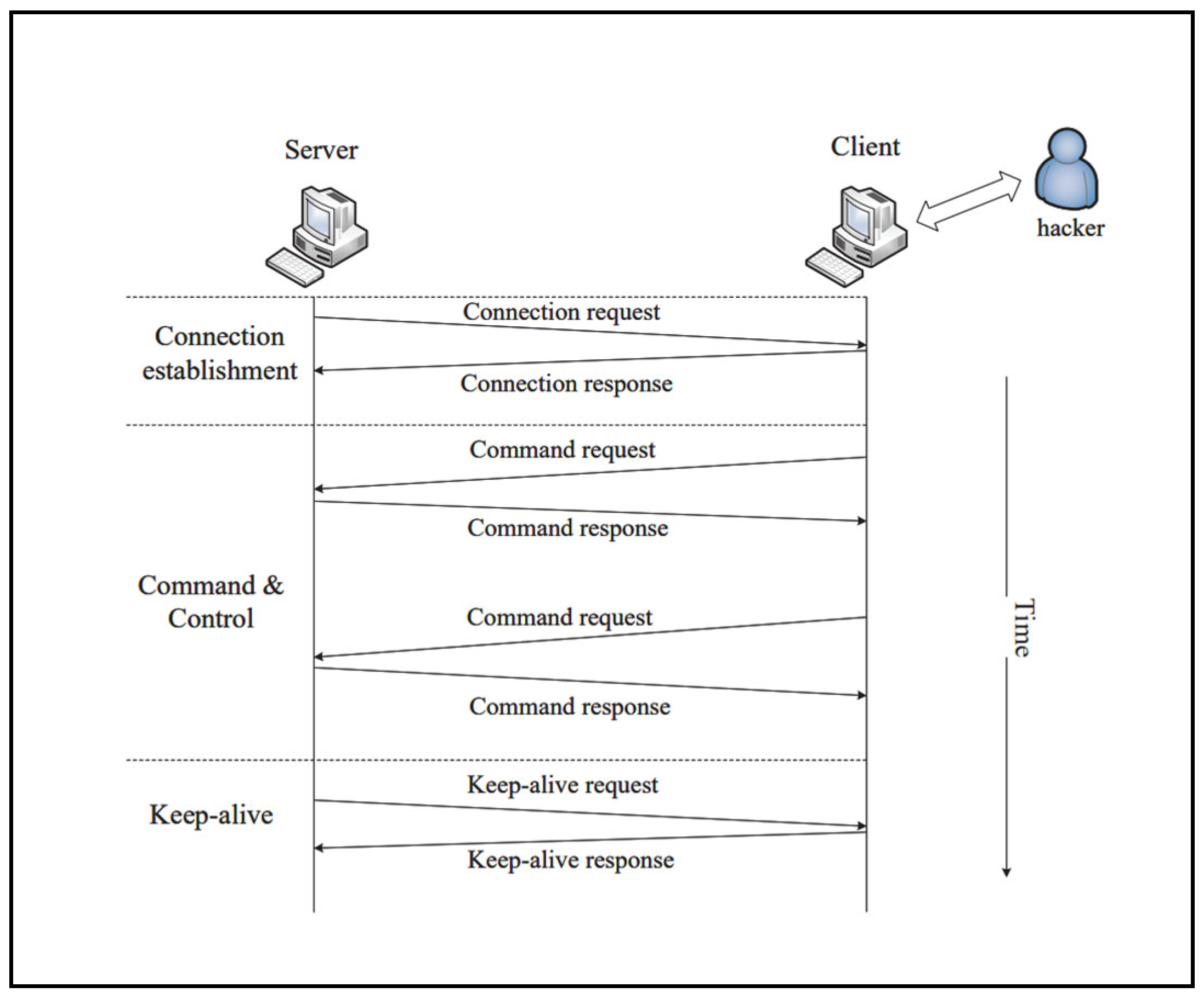

1.1. Background

1.2. Related Studies

1.2.1. Host-Based Studies

1.2.2. Network-Based Studies

1.2.3. Hybrid-Based Studies

- Hybrid detection approach: A novel framework is proposed that combines host-based and network-based features to enhance RAT detection.

- Improved accuracy and lower false positives: By integrating various features, the approach enhances classification accuracy and reduces false alarms.

- Broader threat coverage: The hybrid method captures both external network behaviors (e.g., command-and-control activity) and internal host indicators (e.g., unauthorized access attempts).

- Emphasis on feature engineering: The study introduces and evaluates 10 newly engineered behavioral features that significantly improve model performance.

2. Materials and Methods

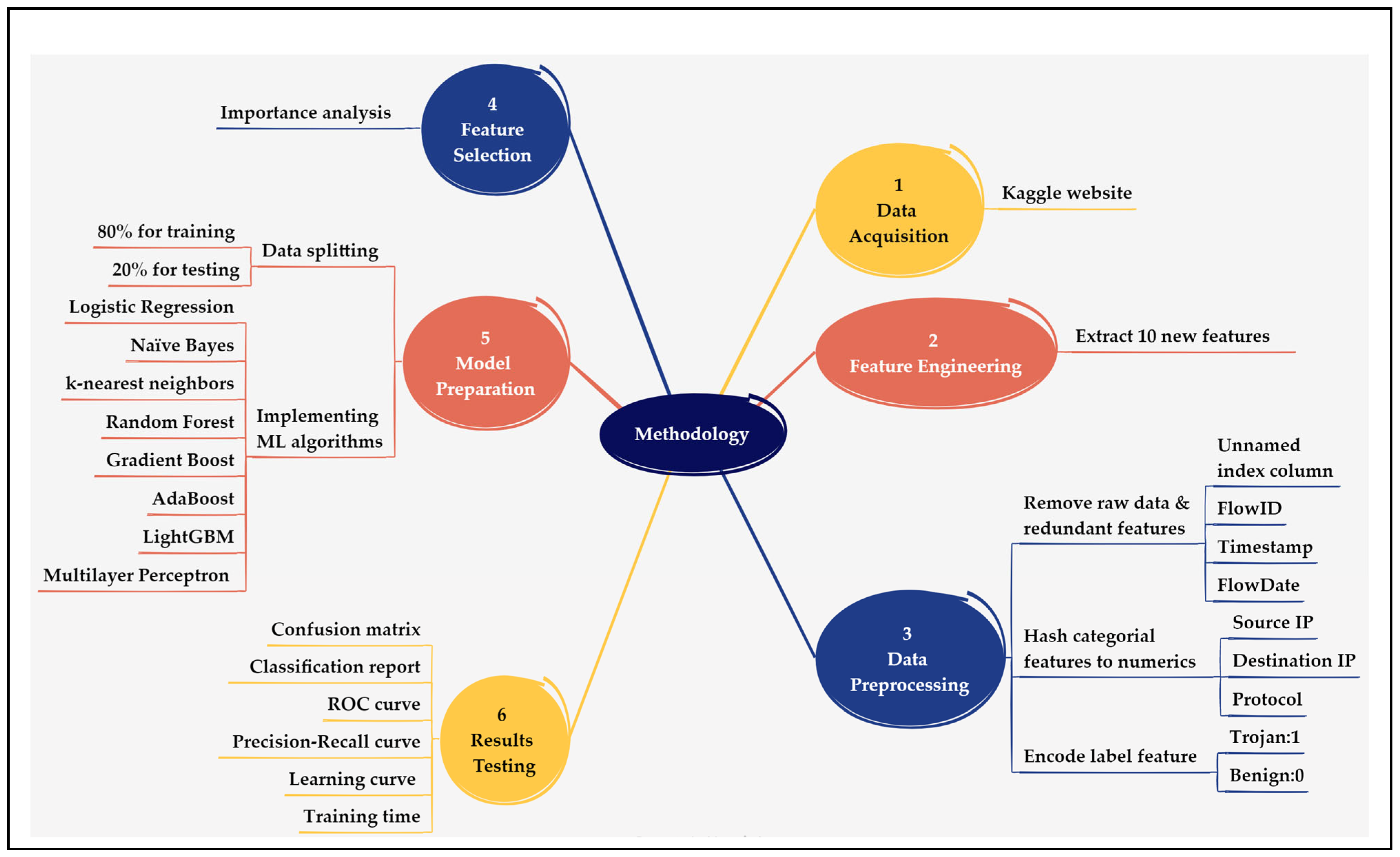

2.1. Methodology

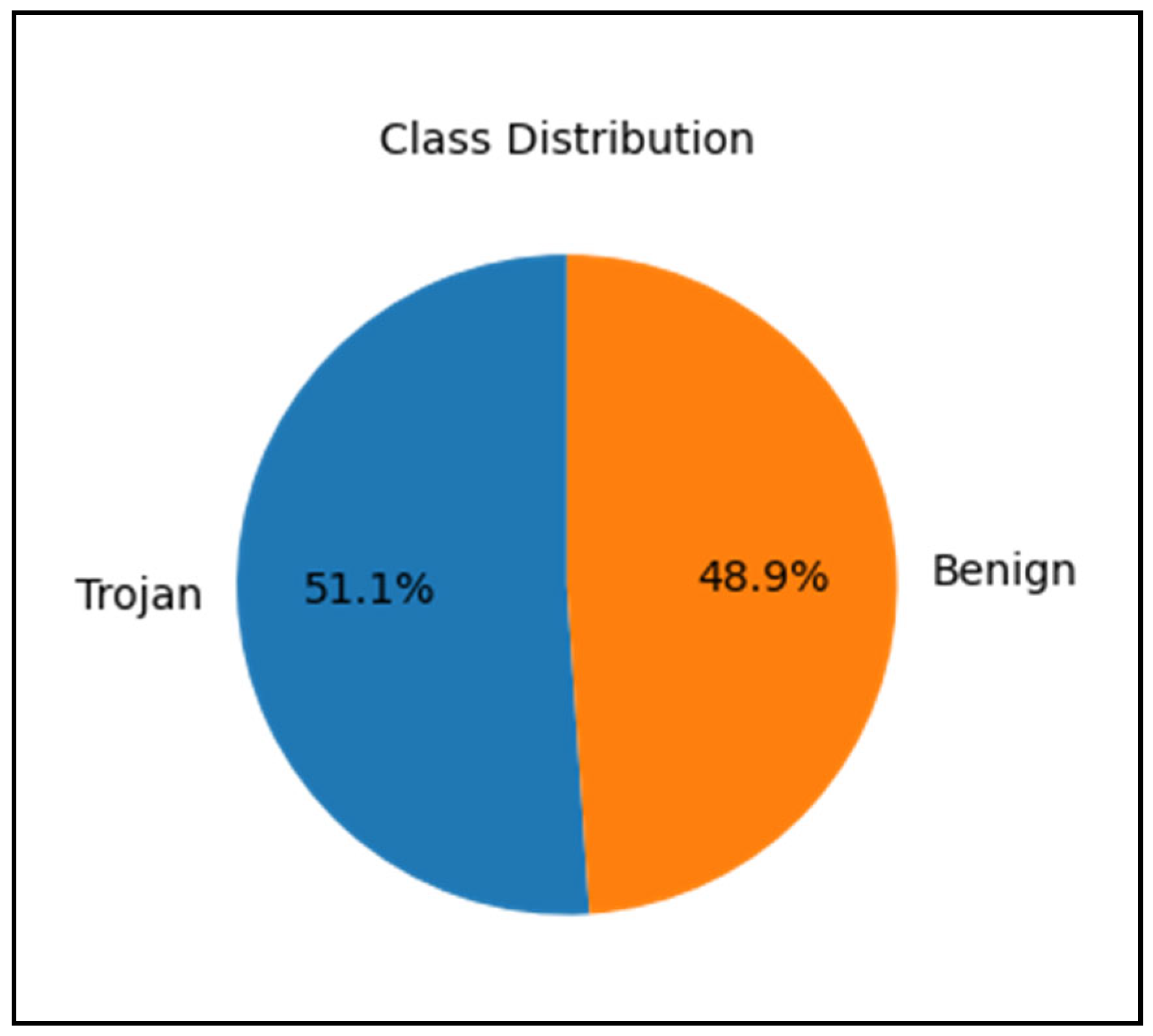

2.2. Data Acquisition

2.3. Feature Engineering

2.4. Data Cleaning and Preprocessing

2.5. Feature Selection

2.6. Data Splitting and Model Preparation

3. Results

3.1. Experiments

3.1.1. Hardware and Software Configuration

3.1.2. Classifier Design and Tuning

3.1.3. Ablation Experiments

3.2. Metrics and Results

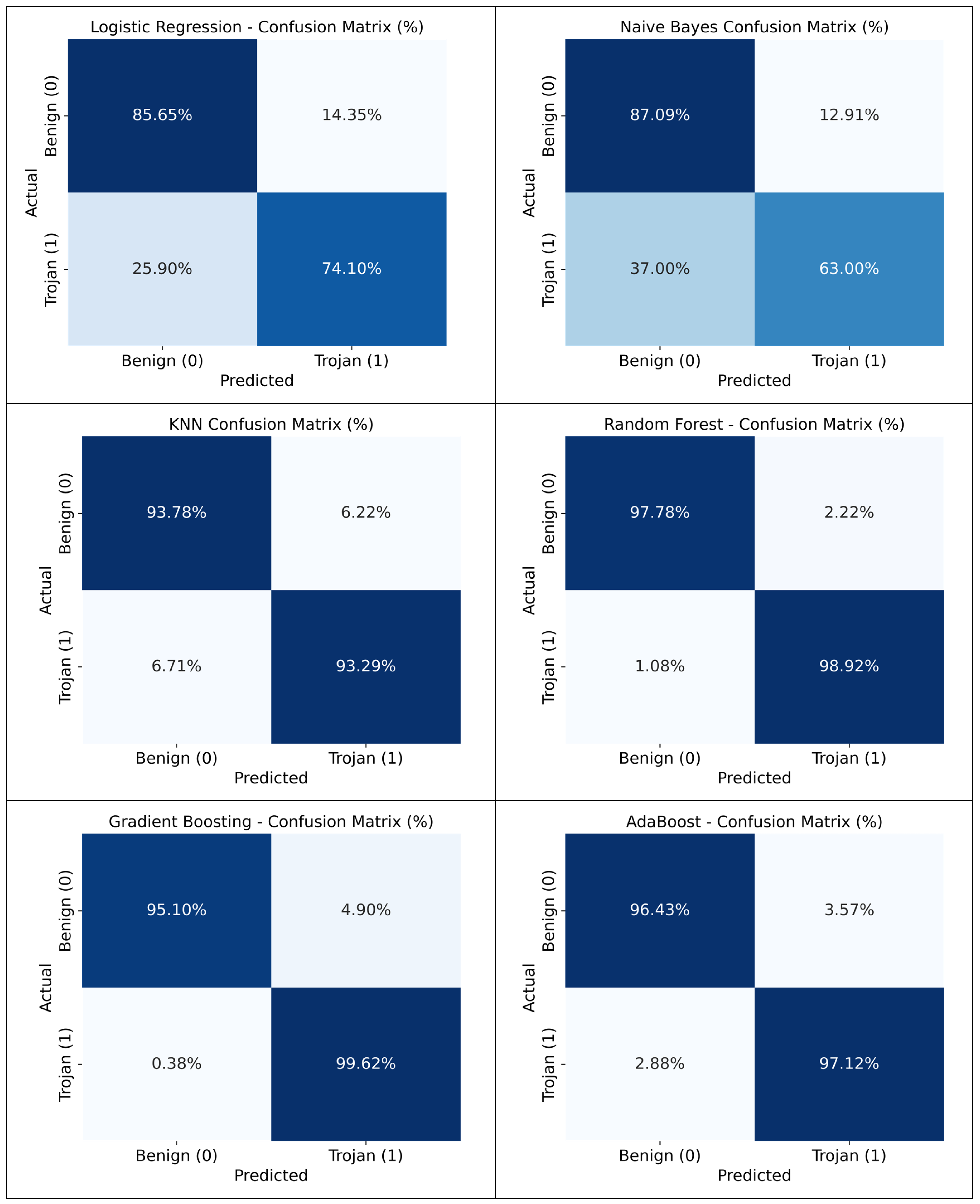

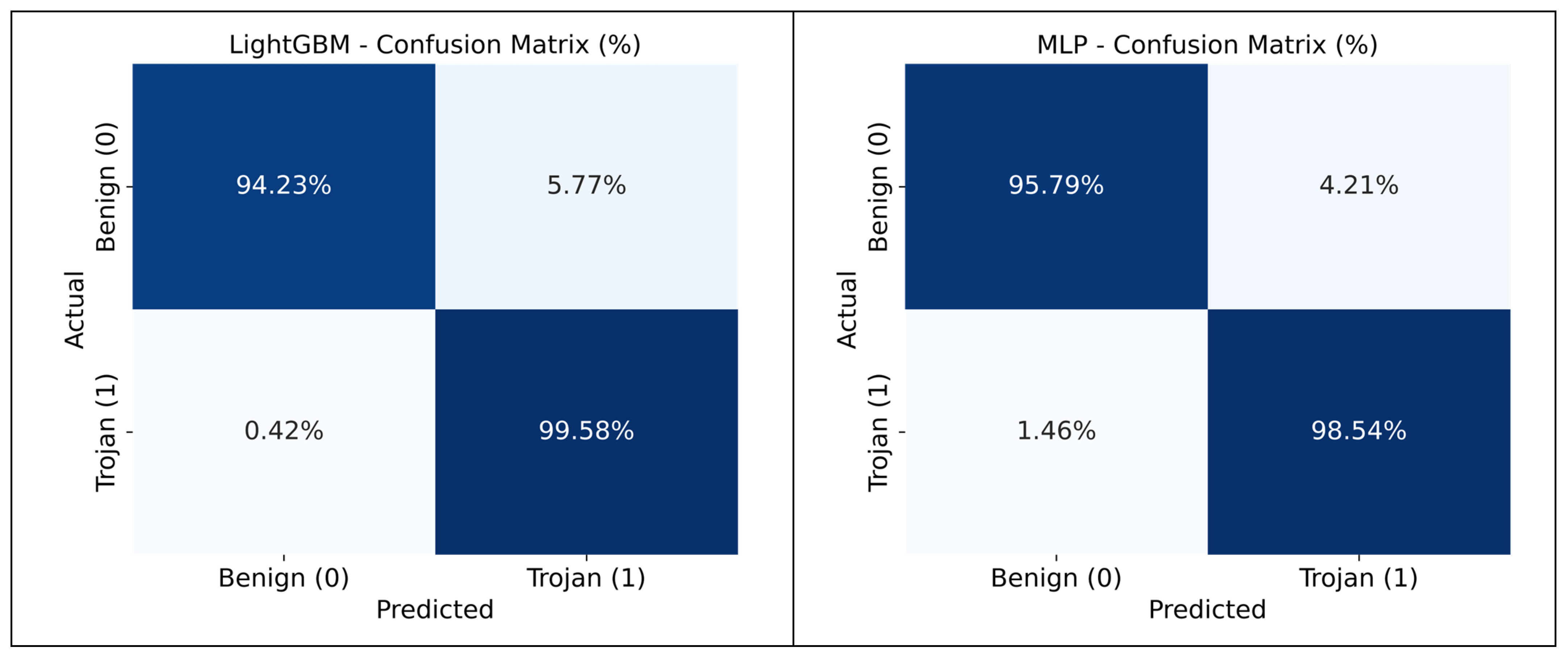

3.2.1. Confusion Matrix

3.2.2. The Classification Report

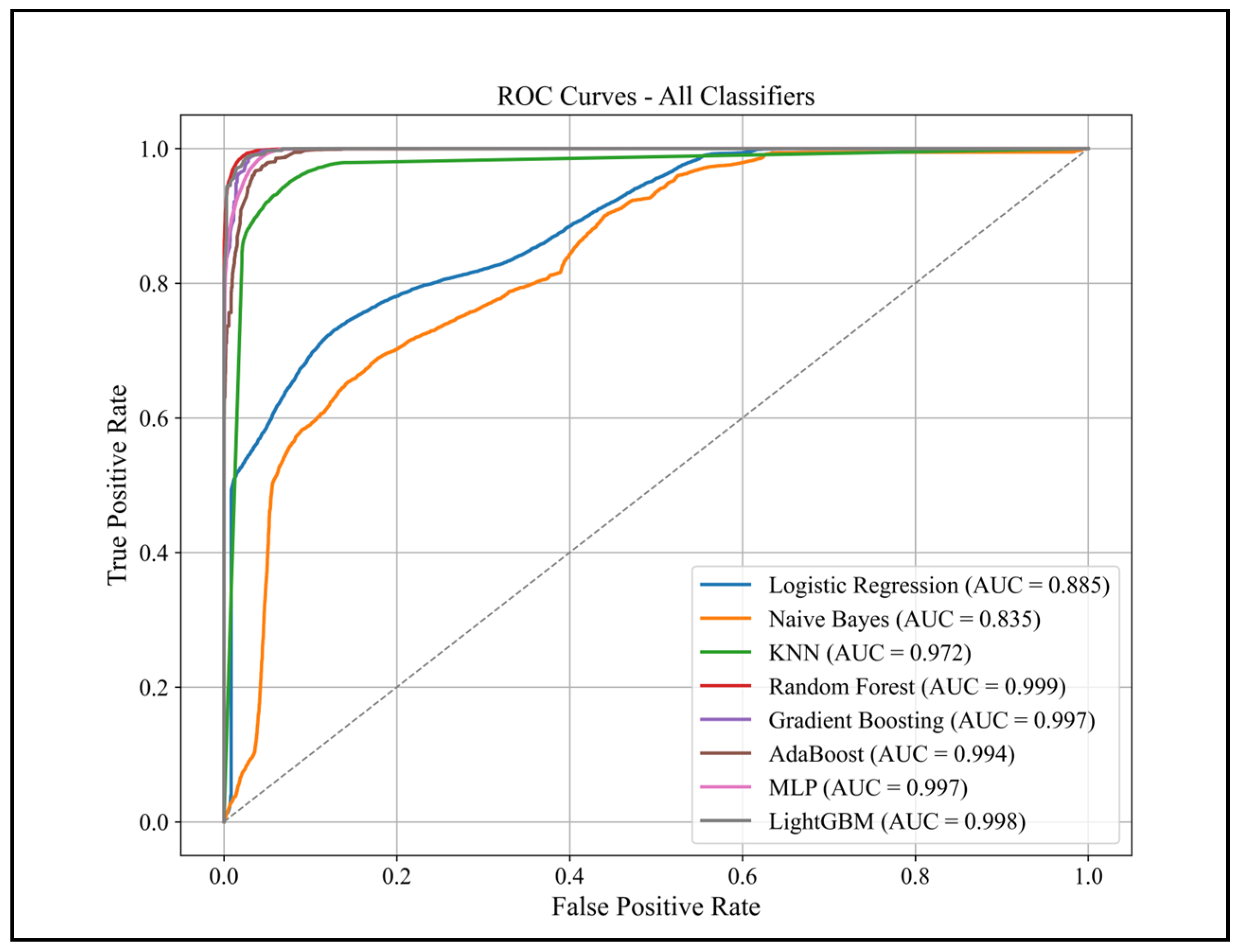

3.2.3. ROC Curve

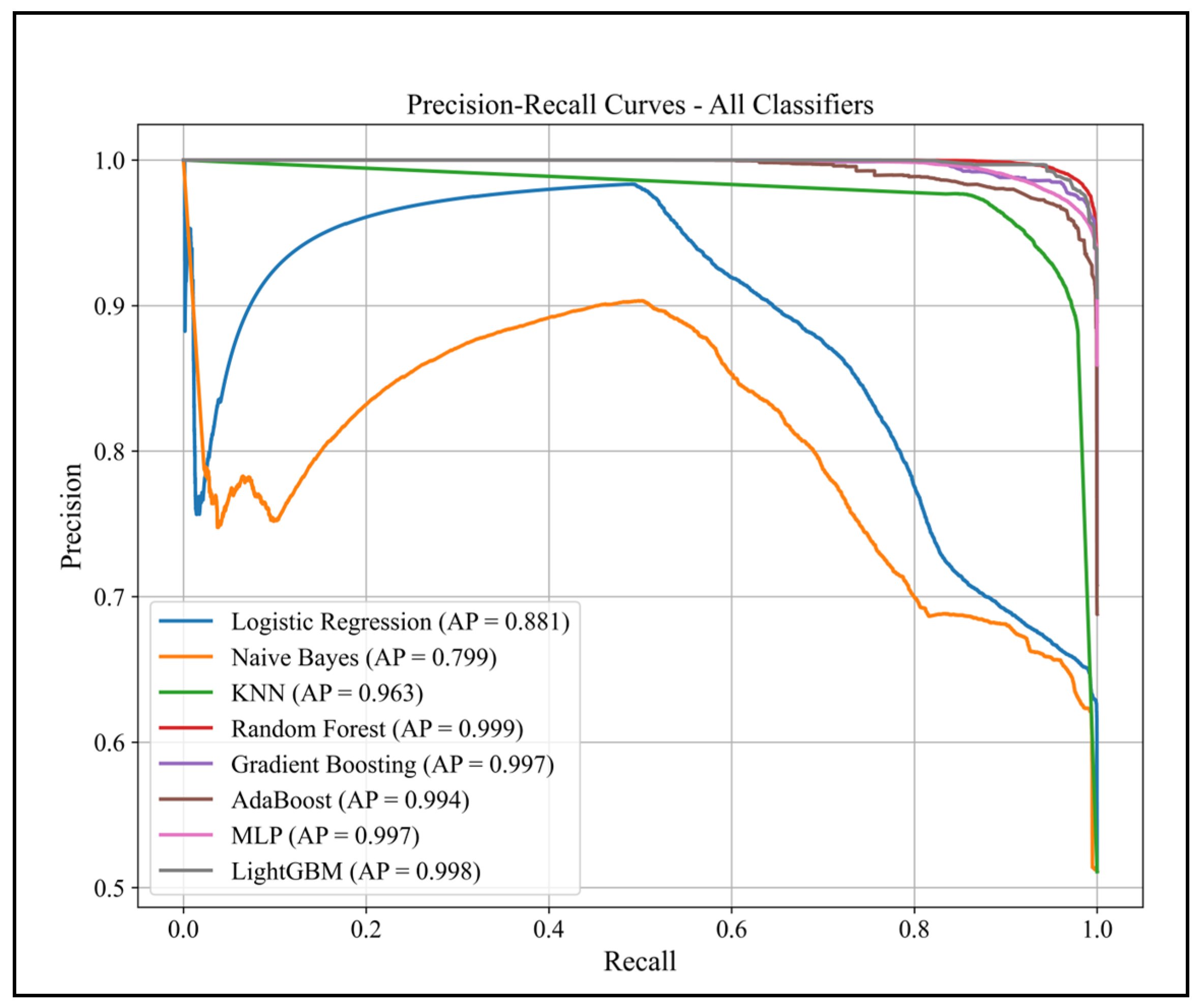

3.2.4. Precision–Recall Curves

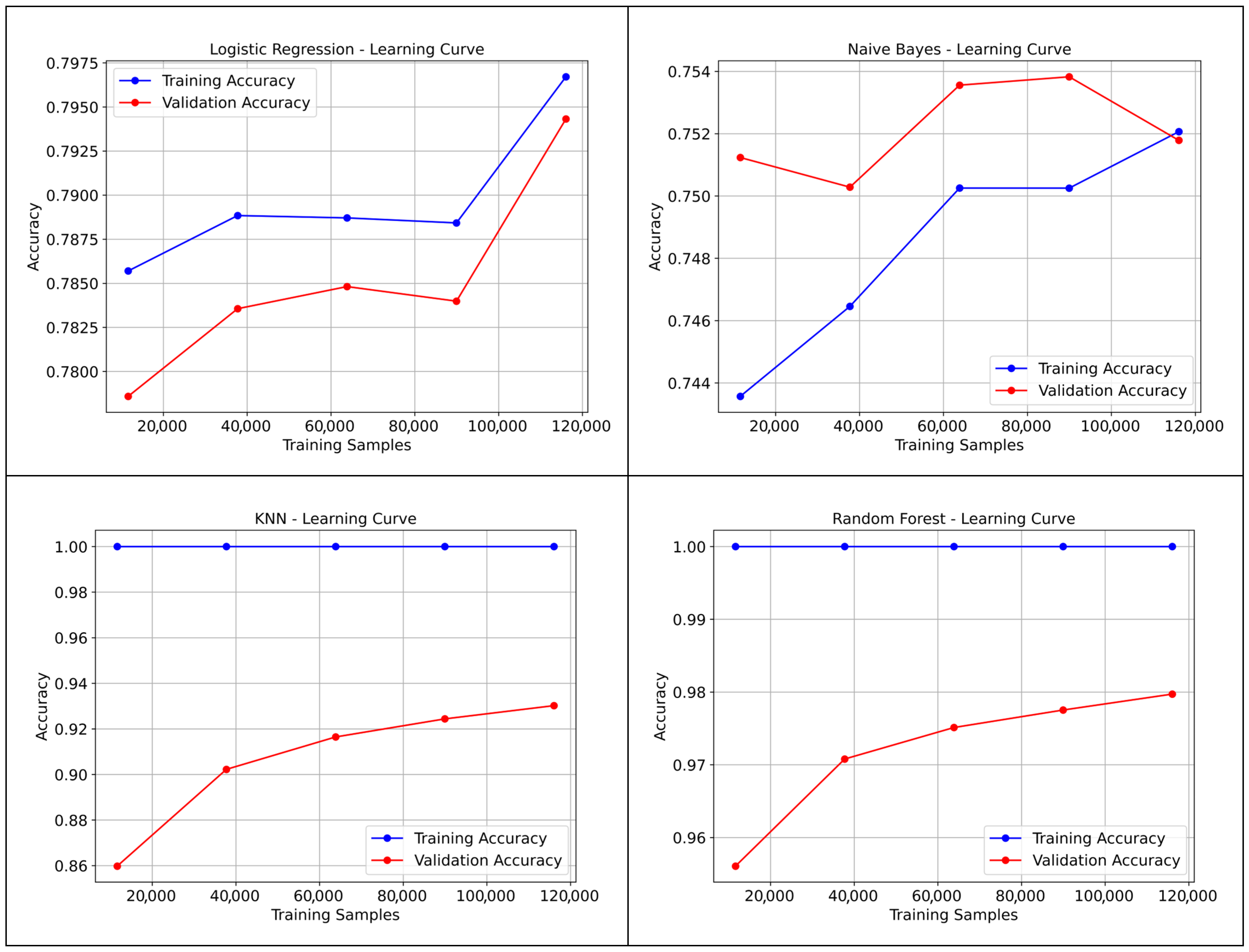

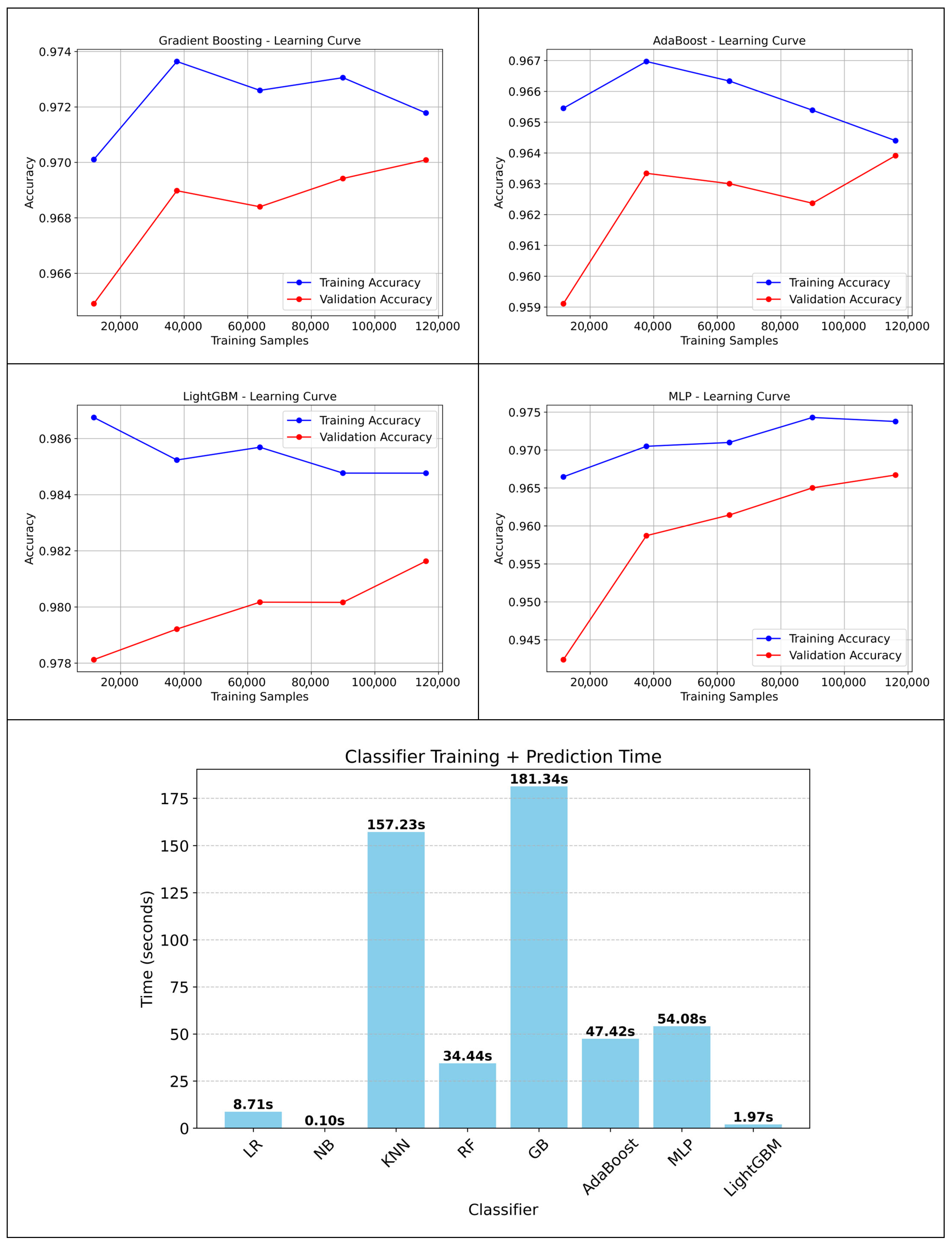

3.2.5. Learning Curve and Training Time Results

3.2.6. Inference Performance

3.2.7. Feature Contribution Analysis for Ensemble ML Methods

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. El-Metwaly, A.E.S.; Abdelfattah, M.A.; Maher, N.M.; Hamed, M.; Tayel, E.M.; Al-Rifai, M.A.; Takieldeen, A.E. Remote Access Trojan (RAT) Attack: A Stealthy Cyber Threat Posing Severe Security Risks. In Proceedings of the International Telecommunications Conference (ITC), Cairo, Egypt, 22–25 July 2024.

2. Jiang, W.; Wu, X.; Cui, X.; Liu, C.A. Highly Efficient Remote Access Trojan Detection Method. Int. J. Digit. Crime Forensics 2019, 11, 1–13.

3. Sai, F.; Wang, X.; Yu, X.; Yan, P.; Ma, W. Recognition and Detection Technology for Abnormal Flow of Rebound Type Remote Control Trojan in Power Monitoring System. In Proceedings of the IEEE 6th Information Technology, Networking, Electronic and Automation Control Conference (ITNEC), Chongqing, China, 24–26 February 2023.

4. Jiang, D.; Omote, K. An Approach to Detect Remote Access Trojan in the Early Stage of Communication. In Proceedings of the International Conference on Advanced Information Networking and Applications (AINA), Gwangju, Republic of Korea, 24–27 March 2015.

5. Guo, C.; Song, Z.; Ping, Y.; Shen, G.; Cui, Y.; Jiang, C. PRATD: A Phased Remote Access Trojan Detection Method with Double-Sided Features. Electronics 2020, 9, 1894.

6. Piet, J.; Anderson, B.; McGrew, D. An In-Depth Study of Open-Source Command and Control Frameworks. In Proceedings of the 13th International Conference on Malicious and Unwanted Software (MALWARE), Nantucket, MA, USA, 22–24 October 2018.

7. Valeros, V.; Garcia, S. Growth and Commoditization of Remote Access Trojans. In Proceedings of the 5th IEEE European Symposium on Security and Privacy Workshops (Euro S&PW), Genoa, Italy, 7–11 September 2020.

8. Bridges, R.; Hernandez Jimenez, J.; Nichols, J.; Goseva-Popstojanova, K.; Prowell, S. Towards Malware Detection via CPU Power Consumption: Data Collection Design and Analytics. In Proceedings of the 17th IEEE International Conference on Trust, Security And Privacy in Computing and Communications/12th IEEE International Conference On Big Data Science and Engineering (TrustCom/BigDataSE), New York, NY, USA, 1–3 August 2018.

9. Adachi, D.; Omote, K. A Host-Based Detection Method of Remote Access Trojan in the Early Stage. In Proceedings of the 12th International Conference on Information Security Practice and Experience (ISPEC), Zhangjiajie, China, 16–18 November 2016.

10. Zhang, H.; Zhang, W.; Lv, Z.; Sangaiah, A.K.; Huang, T.; Chilamkurti, N. MALDC: A Depth Detection Method for Malware Based on Behavior Chains. World Wide Web 2020, 23, 991–1010.

11. Moon, D.; Pan, S.B.; Kim, I. Host-Based Intrusion Detection System for Secure Human-Centric Computing. J. Supercomput. 2016, 72, 2520–2536.

12. Chandran, S.; Hrudya, P.; Poornachandran, P. An Efficient Classification Model for Detecting Advanced Persistent Threat. In Proceedings of the International Conference on Advances in Computing, Communications and Informatics (ICACCI), Kochi, India, 10–13 August 2015.

13. Liang, Y.; Peng, G.; Zhang, H.; Wang, Y. An Unknown Trojan Detection Method Based on Software Network Behavior. Wuhan Univ. J. Nat. Sci. 2013, 18, 369–376.

14. Moser, A.; Kruegel, C.; Kirda, E. Limits of Static Analysis for Malware Detection. In Proceedings of the Annual Computer Security Applications Conference, ACSAC, Miami Beach, FL, USA, 10–14 December 2007; pp. 421–430.

15. Pendleton, M.; Garcia-Lebron, R.; Cho, J.H.; Xu, S. A Survey on Systems Security Metrics. ACM Comput. Surv. 2016, 49, 1–35.

16. Floroiu, I.; Floroiu, M.; Niga, A. Remote Access Trojans Detection Using Convolutional and Transformer-Based Deep Learning Techniques. Rom. Cyber Secur. J. 2024, 6, 47–58.

17. Li, S.; Yun, X.; Zhang, Y.; Xiao, J.; Wang, Y. A General Framework of Trojan Communication Detection Based on Network Traces. In Proceedings of the IEEE 7th International Conference on Networking, Architecture and Storage (NAS), Xiamen, China, 28–30 June 2012.

18. Jiang, D.; Omote, K. A RAT Detection Method Based on Network Behavior of the Communication’s Early Stage. IEICE Trans. Fundam. Electron. Commun. Comput. Sci. 2016, E99A, 145–153.

19. Jinlong, W.; Haidong, G.; Yixin, X. Closed-Loop Feedback Trojan Detection Technique Based on Hierarchical Model. In Proceedings of the 2015 Joint International Mechanical, Electronic and Information Technology Conference, Chongqing, China, 18–20 December 2015; pp. 240–243.

20. Yin, K.S.; Khine, M.A. Network Behavioral Features for Detecting Remote Access Trojans in the Early Stage. In Proceedings of the VI International Conference on Network, Communication and Computing (ICNCC), Kunming, China, 8–10 December 2017.

21. Sebakara, E.; Jonathan, K.N. Encrypted Remote Access Trojan Detection: A Machine Learning Approach with Real-World and Open Datasets. J. Inf. Technol. 2025, 5, 30–42.

22. Awad, A.A.; Sayed, S.G.; Salem, S.A. Collaborative Framework for Early Detection of RAT-Bots Attacks. IEEE Access 2019, 7, 71780–71790.

23. Aburbeian, A.M.; Ashqar, H.I. Credit Card Fraud Detection Using Enhanced Random Forest Classifier for Imbalanced Data. In Proceedings of the 2023 International Conference on Advances in Computing Research (ACR’23), Orlando, FL, USA, 8–10 May 2023.

24. Banerjee, R.; Bourla, G.; Chen, S.; Kashyap, M.; Purohit, S. Comparative Analysis of Machine Learning Algorithms through Credit Card Fraud Detection. In Proceedings of the IEEE MIT Undergraduate Research Technology Conference (URTC), Cambridge, MA, USA, 5–7 October 2018.

25. Rashid, S.J.; Baker, S.A.; Alsaif, O.I.; Ahmad, A.I. Detecting Remote Access Trojan (RAT) Attacks Based on Different LAN Analysis Methods. Eng. Technol. Appl. Sci. Res. 2024, 14, 17294–17301.

26. Cop, C. Trojan Detection. Available online: https://www.kaggle.com/datasets/subhajournal/trojan-detection/data (accessed on 6 July 2023).

27. Verdonck, T.; Baesens, B.; Óskarsdóttir, M.; vanden Broucke, S. Special Issue on Feature Engineering Editorial. Mach. Learn. 2024, 113, 3917–3928.

28. Caelen, O. A Bayesian Interpretation of the Confusion Matrix. Ann. Math. Artif. Intell. 2017, 81, 429–450.

29. Goutte, C.; Gaussier, E. A Probabilistic Interpretation of Precision, Recall and F-Score, with Implication for Evaluation. In Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2005; Volume 3408, pp. 345–359.

30. Boyd, K.; Costa, V.S.; Davis, J.; Page, C.D. Unachievable Region in Precision-Recall Space and Its Effect on Empirical Evaluation. In Proceedings of the 2012 11th International Conference on Machine Learning and Applications, Washington, DC, USA, 12–15 December 2012; pp. 349–368. Available online: https://pmc.ncbi.nlm.nih.gov/articles/PMC3858955 (accessed on 5 June 2025).

31. Aburbeian, A.H.M.; Fernández-Veiga, M. Secure Internet Financial Transactions: A Framework Integrating Multi-Factor Authentication and Machine Learning. AI 2024, 5, 177–194.

32. Davis, J.; Goadrich, M. The Relationship between Precision-Recall and ROC Curves. In Proceedings of the 23rd International Conference on Machine Learning (ICML), Pittsburgh, PA, USA, 25–29 June 2006.

33. Mohr, F.; van Rijn, J.N. Learning Curves for Decision Making in Supervised Machine Learning: A survey. Mach. Learn. 2024, 113, 8371–8425.

34. Awad, A.A.; Sayed, S.G.; Salem, S.A. A Host-Based Framework for RAT Bots Detection. In Proceedings of the International Conference on Computer and Applications (ICCA), Doha, Qatar, 6–7 September 2017.

35. Awad, A.A.; Sayed, S.G.; Salem, S.A. A Network-Based Framework for RAT-Bots Detection. In Proceedings of the 8th IEEE Annual Information Technology, Electronics and Mobile Communication Conference (IEMCON), Vancouver, BC, Canada, 3–5 October 2017.

36. Dehkordy, D.T.; Rasoolzadegan, A. DroidTKM: Detection of Trojan Families Using the KNN Classifier Based on Manhattan Distance Metric. In Proceedings of the 10th International Conference on Computer and Knowledge Engineering (ICCKE), Mashhad, Iran, 29–30 October 2020.

37. Kanaker, H.; Karim, A.; Awwad, S.A.B.; Ismail, N.H.A.; Zraqou, J.; Al Ali, A.M.F. Trojan Horse Infection Detection in Cloud Based Environment Using Machine Learning. Int. J. Interact. Mob. Technol. 2022, 16, 81–106.

38. Razak, M.F.A.; Jaya, M.I.; Ismail, Z.; Firdaus, A. Trojan Detection System Using Machine Learning Approach. Indones. J. Inf. Syst. 2022, 5, 38–47.

39. Pi, B.; Guo, C.; Cui, Y.; Shen, G.; Yang, J.; Ping, Y. Remote Access Trojan Traffic Early Detection Method Based on Markov Matrices and Deep Learning. Comput. Secur. 2024, 137, 103628.

40. Tran, G.; Hoang, A.; Bui, T.; Tong, V.; Tran, D. A Deep Learning Approach to Early Identification of Remote Access Trojans. In Proceedings of the International Symposium on Information and Communication Technology (SOICT), Danang, Vietnam, 13–15 December 2024.

41. Ritzkal; Hendrawan, A.H.; Kurniawan, R.; Aprian, A.J.; Primasari, D.; Subchan, M. Enhancing Cybersecurity Through Live Forensic Investigation of Remote Access Trojan Attacks Using FTK Imager Software. Int. J. Saf. Secur. Eng. 2024, 14, 217.

42. Safdar, H.; Seher, I.; Elgamal, E.; Prasad, P.W.C. A Review of Machine Learning-Based Trojan Detection Techniques for Securing IoT Edge Devices. In Proceedings of the 3rd International Conference on Intelligent Education and Intelligent Research (IEIR), Macau, China, 6–8 November 2024.

43. Khan, S.U.; Nabil, M.; Mahmoud, M.M.E.A.; AlSabaan, M.; Alshawi, T. Trojan Attack and Defense for Deep Learning Based Power Quality Disturbances Classification. IEEE Trans. Netw. Sci. Eng. 2025, 12, 3962–3974.

44. Jin, L.; Wen, X.; Jiang, W.; Zhan, J.; Zhou, X. Trojan Attacks and Countermeasures on Deep Neural Networks from Life-Cycle Perspective: A Review. ACM Comput. Surv. 2025, 57, 1–37.

| Source Feature | Derived Feature | Classification | Description |
|---|---|---|---|
| Timestamp | FlowDate | Network-based | The calendar date of the flow. Used for grouping flows by day for aggregation. |
| Timestamp | Hour | Network-based | The hour of the day when the flow occurred (0–23). Helps identify time-of-day attack patterns. |
| Timestamp | DayOfWeek | Network-based | Day of the week (0 = Monday, 6 = Sunday). Used to detect weekday vs. weekend behavior variations. |
| Timestamp | SecondsSinceMidnight | Network-based | Total seconds elapsed since midnight. Provides more precise temporal behavior within a day. |
| Source IP | SourceIP_FlowCount | Network-behavioral | Total number of flows initiated by the source IP. Helps distinguish between active/inactive devices. |
| Source IP + Timestamp | TimeDiffFromLastFlow | Network-behavioral | Time difference (in seconds) between the current flow and the previous one from the same source IP. Indicates communication frequency. |
| Source IP + Destination IP | UniqueDestinations | Network-behavioral | Number of unique destination IPs contacted by a source IP. A higher number may indicate scanning or RAT behavior. |
| Source IP + Destination IP | UniqueSources | Network-behavioral | Number of distinct source IPs contacting a destination. Can highlight unusual popularity. |
| Source IP + Flow Duration | AvgFlowDuration | Network-behavioral | Average flow duration per source IP. Helps characterize the typical length of communications from a source. |
| DayOfWeek | IsWeekend | Network-based | Binary value indicating whether the flow occurred on a weekend (1) or a weekday (0). |
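The derived features listed in the table above can be sketched in a few lines of plain Python. This is a minimal illustration, not the authors' implementation: the record keys `ts`, `src`, `dst`, and `duration` are hypothetical names, the per-IP aggregates are computed over the whole input rather than per day, and the first flow of each source is assumed to get a `TimeDiffFromLastFlow` of 0.

```python
from collections import defaultdict
from datetime import datetime


def derive_features(flows):
    """Return copies of the flow records with the ten derived features added.

    Each input record is a dict with hypothetical keys:
    ts (datetime), src (str), dst (str), duration (float).
    """
    flow_count = defaultdict(int)      # flows per source IP
    destinations = defaultdict(set)    # distinct destinations per source IP
    sources = defaultdict(set)         # distinct sources per destination IP
    duration_sum = defaultdict(float)  # summed flow duration per source IP
    for f in flows:
        flow_count[f["src"]] += 1
        destinations[f["src"]].add(f["dst"])
        sources[f["dst"]].add(f["src"])
        duration_sum[f["src"]] += f["duration"]

    last_seen = {}  # most recent timestamp per source IP
    rows = []
    for f in sorted(flows, key=lambda rec: rec["ts"]):
        ts, src = f["ts"], f["src"]
        prev = last_seen.get(src)
        row = dict(f)
        row.update({
            "FlowDate": ts.date(),
            "Hour": ts.hour,
            "DayOfWeek": ts.weekday(),  # 0 = Monday, 6 = Sunday
            "SecondsSinceMidnight": ts.hour * 3600 + ts.minute * 60 + ts.second,
            "IsWeekend": 1 if ts.weekday() >= 5 else 0,
            "SourceIP_FlowCount": flow_count[src],
            # Assumption: the first flow of a source has no predecessor, so use 0.
            "TimeDiffFromLastFlow": (ts - prev).total_seconds() if prev is not None else 0.0,
            "UniqueDestinations": len(destinations[src]),
            "UniqueSources": len(sources[f["dst"]]),
            "AvgFlowDuration": duration_sum[src] / flow_count[src],
        })
        last_seen[src] = ts
        rows.append(row)
    return rows


# Toy input (made-up addresses and durations, for illustration only).
flows = [
    {"ts": datetime(2023, 7, 8, 10, 0, 0), "src": "10.0.0.1", "dst": "10.0.0.9", "duration": 2.0},
    {"ts": datetime(2023, 7, 8, 10, 0, 30), "src": "10.0.0.1", "dst": "10.0.0.8", "duration": 4.0},
    {"ts": datetime(2023, 7, 10, 9, 15, 0), "src": "10.0.0.2", "dst": "10.0.0.9", "duration": 1.0},
]
rows = derive_features(flows)
```

In this toy input, the two flows from `10.0.0.1` fall on a Saturday and are 30 seconds apart, so the second row carries `IsWeekend = 1` and `TimeDiffFromLastFlow = 30.0`.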

| No. | Feature | Description | Importance Score |
|---|---|---|---|
| 1 | DayOfWeek | Day of the week the flow was captured (0 = Monday, 6 = Sunday) | 0.247309 |
| 2 | SecondsSinceMidnight | Time of the flow in seconds since midnight | 0.116366 |
| 3 | IsWeekend | Whether the flow occurred during the weekend (1) or not (0) | 0.108793 |
| 4 | Hour | Hour of the day when the flow occurred | 0.070363 |
| 5 | SourceIP_FlowCount | Number of flows originating from the same Source IP | 0.052595 |
| 6 | UniqueDestinations | Number of distinct Destination IPs contacted by a Source IP | 0.047872 |
| 7 | Source IP | IP address of the sender of the flow (hashed) | 0.043232 |
| 8 | AvgFlowDuration | Average duration of flows for a given Source IP | 0.038080 |
| 9 | UniqueSources | Number of unique Source IPs contacting a Destination IP | 0.018321 |
| 10 | Destination IP | IP address of the receiver of the flow (hashed) | 0.013639 |
| 11 | Source Port | Port number at the source device | 0.011500 |
| 12 | Flow Duration | Duration of the flow in microseconds | 0.009645 |
| 13 | Flow IAT Min | Minimum inter-arrival time between packets in the flow | 0.009489 |
| 14 | Flow IAT Max | Maximum inter-arrival time between packets in the flow | 0.009452 |
| 15 | Flow IAT Mean | Average inter-arrival time between packets in the flow | 0.009449 |
| 16 | Flow Packets/s | Rate of packets per second in the flow | 0.009331 |
| 17 | Fwd Packets/s | Rate of forward direction packets per second | 0.009276 |
| 18 | Init_Win_bytes_forward | Initial window size in bytes in the forward direction | 0.008862 |
| 19 | Destination Port | Port number at the destination device | 0.007974 |
| 20 | Bwd Packets/s | Rate of backward direction packets per second | 0.006739 |
| 21 | Fwd IAT Max | Maximum inter-arrival time in the forward direction | 0.006585 |
| 22 | Fwd IAT Total | Total inter-arrival time in the forward direction | 0.006416 |
| 23 | Init_Win_bytes_backward | Initial window size in bytes in the backward direction | 0.006255 |
| 24 | Fwd IAT Mean | Average inter-arrival time in the forward direction | 0.006102 |
| 25 | Fwd IAT Min | Minimum inter-arrival time in the forward direction | 0.005927 |
| 26 | TimeDiffFromLastFlow | Time since last flow from the same Source IP | 0.005879 |
| 27 | Flow Bytes/s | Rate of bytes per second in the flow | 0.004903 |
| 28 | Fwd IAT Std | Standard deviation of inter-arrival time in forward direction | 0.004480 |
| 29 | Flow IAT Std | Standard deviation of inter-arrival time in the flow | 0.003865 |
| 30 | Fwd Header Length | Header length of packets in the forward direction | 0.003789 |
| 31 | Packet Length Mean | Average length of packets in the flow | 0.003656 |
| 32 | Fwd Packet Length Mean | Average packet length in forward direction | 0.003577 |
| 33 | Packet Length Std | Standard deviation of packet lengths | 0.003453 |
| 34 | Fwd Header Length.1 | Duplicate of forward header length | 0.003444 |
| 35 | Avg Fwd Segment Size | Average segment size in forward direction | 0.003373 |
| 36 | Average Packet Size | Average size of packets in the flow | 0.003372 |
| 37 | Fwd Packet Length Max | Maximum packet length in forward direction | 0.003364 |
| 38 | Subflow Fwd Bytes | Total bytes sent in subflow forward direction | 0.003334 |
| 39 | Total Length of Fwd Packets | Sum of lengths of forward packets | 0.003305 |
| 40 | Packet Length Variance | Variance of packet lengths | 0.003305 |
| 41 | Bwd Packet Length Mean | Average packet length in backward direction | 0.003262 |
| 42 | Subflow Bwd Bytes | Total bytes sent in subflow backward direction | 0.002940 |
| 43 | Bwd Header Length | Header length of packets in the backward direction | 0.002908 |
| 44 | Avg Bwd Segment Size | Average segment size in backward direction | 0.002906 |
| 45 | Total Length of Bwd Packets | Sum of lengths of backward packets | 0.002833 |
| 46 | Bwd Packet Length Max | Maximum packet length in backward direction | 0.002690 |
| 47 | Bwd IAT Min | Minimum inter-arrival time in backward direction | 0.002667 |
| 48 | Max Packet Length | Maximum packet length in the flow | 0.002644 |
| 49 | min_seg_size_forward | Minimum segment size in forward direction | 0.002493 |
| 50 | Bwd IAT Total | Total inter-arrival time in backward direction | 0.002486 |
| 51 | Bwd IAT Max | Maximum inter-arrival time in backward direction | 0.002480 |
| 52 | Fwd Packet Length Std | Standard deviation of packet lengths (forward) | 0.002471 |
| 53 | Bwd IAT Mean | Average inter-arrival time in backward direction | 0.002301 |
| 54 | Bwd IAT Std | Standard deviation of inter-arrival times (backward) | 0.001968 |
| 55 | Bwd Packet Length Std | Standard deviation of packet lengths (backward) | 0.001885 |
| 56 | Min Packet Length | Minimum packet length in the flow | 0.001853 |
| 57 | Bwd Packet Length Min | Minimum packet length in backward direction | 0.001795 |
| 58 | Subflow Fwd Packets | Number of packets sent in subflow forward | 0.001663 |
| 59 | Total Fwd Packets | Total number of forward packets | 0.001584 |
| 60 | Total Backward Packets | Total number of backward packets | 0.001560 |
| 61 | Fwd Packet Length Min | Minimum packet length in forward direction | 0.001463 |
| 62 | Subflow Bwd Packets | Number of packets sent in subflow backward | 0.001425 |
| 63 | Idle Mean | Average idle time between flows | 0.001324 |
| 64 | Idle Min | Minimum idle time between flows | 0.001224 |
| 65 | Idle Max | Maximum idle time between flows | 0.001181 |
| 66 | Active Min | Minimum active time between packets | 0.001162 |
| 67 | Active Mean | Average active time between packets | 0.001141 |
| 68 | Active Max | Maximum active time between packets | 0.001110 |
| 69 | URG Flag Count | Number of packets with the URG flag set | 0.001096 |
| 70 | Class | Target label (0 = Benign, 1 = Trojan) | 1.000000 |

| Dataset | Class 0 (Benign) | Class 1 (Trojan) | Total Samples | Class Balance |
|---|---|---|---|---|
| Training Set | 69,439 | 72,546 | 141,985 | ~49/51 |
| Testing Set | 17,360 | 18,137 | 35,497 | ~49/51 |
| Balanced Training Set | 72,546 | 72,546 | 145,092 | 50/50 |
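The balanced training set in the table above has 72,546 samples per class, which means the benign class grew by 72,546 − 69,439 = 3,107 samples. The balancing technique is not stated in this part of the document, so the sketch below uses plain random oversampling with replacement purely as an illustrative assumption. It reproduces the class counts, not the authors' actual samples.

```python
import random
from collections import Counter


def random_oversample(samples, labels, seed=0):
    """Duplicate randomly chosen minority-class samples until all classes match.

    Assumption: random oversampling is used here only for illustration;
    the source does not confirm which balancing method was applied.
    """
    rng = random.Random(seed)
    by_class = {}
    for x, y in zip(samples, labels):
        by_class.setdefault(y, []).append(x)

    target = max(len(xs) for xs in by_class.values())  # size of the largest class
    out_samples, out_labels = [], []
    for y, xs in by_class.items():
        extra = [rng.choice(xs) for _ in range(target - len(xs))]
        out_samples.extend(xs + extra)
        out_labels.extend([y] * target)
    return out_samples, out_labels


# Class counts taken from the training-set row of the table; the sample
# values themselves are placeholder integers.
labels = [0] * 69_439 + [1] * 72_546
samples = list(range(len(labels)))

balanced_samples, balanced_labels = random_oversample(samples, labels)
counts = Counter(balanced_labels)
```

Oversampling should be applied to the training split only, so that the testing set keeps its original class distribution.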

| Algorithm | Class | Precision | Recall | F1-Score | Accuracy |
|---|---|---|---|---|---|
| Logistic Regression (LR) | 0 | 76% | 86% | 81% | 80% |
| Logistic Regression (LR) | 1 | 85% | 74% | 79% | 80% |
| Naïve Bayes (NB) | 0 | 70% | 86% | 77% | 75% |
| Naïve Bayes (NB) | 1 | 83% | 64% | 72% | 75% |
| K-Nearest Neighbors (KNN) | 0 | 93% | 94% | 93% | 94% |
| K-Nearest Neighbors (KNN) | 1 | 94% | 93% | 94% | 94% |
| Random Forest (RF) | 0 | 99% | 98% | 98% | 98% |
| Random Forest (RF) | 1 | 98% | 99% | 98% | 98% |
| Gradient Boosting (GB) | 0 | 100% | 96% | 98% | 98% |
| Gradient Boosting (GB) | 1 | 96% | 100% | 98% | 98% |
| AdaBoost | 0 | 97% | 96% | 97% | 97% |
| AdaBoost | 1 | 96% | 98% | 97% | 97% |
| LightGBM | 0 | 100% | 94% | 97% | 97% |
| LightGBM | 1 | 95% | 100% | 97% | 97% |
| Multilayer Perceptron (MLP) | 0 | 98% | 96% | 97% | 97% |
| Multilayer Perceptron (MLP) | 1 | 96% | 98% | 97% | 97% |
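The per-class precision, recall, and F1-score values in a classification report such as the one above follow directly from the four cells of a binary confusion matrix. The sketch below shows that computation. The counts passed in at the end are hypothetical and are not taken from the study's results.

```python
def per_class_report(tn, fp, fn, tp):
    """Compute per-class precision, recall, F1 and overall accuracy.

    tn, fp, fn, tp are the confusion-matrix cells with class 1 (Trojan)
    treated as the positive class.
    """
    def prf(true_pos, false_pos, false_neg):
        precision = true_pos / (true_pos + false_pos)
        recall = true_pos / (true_pos + false_neg)
        f1 = 2 * precision * recall / (precision + recall)
        return {"precision": precision, "recall": recall, "f1": f1}

    return {
        # For class 0 the roles swap: correct benign predictions (tn) are the
        # hits, missed trojans (fn) are its false positives, and false alarms
        # (fp) are its false negatives.
        0: prf(tn, fn, fp),
        1: prf(tp, fp, fn),
        "accuracy": (tn + tp) / (tn + fp + fn + tp),
    }


# Hypothetical counts for illustration only.
report = per_class_report(tn=90, fp=10, fn=5, tp=95)
```

With these made-up counts, class 1 recall is 95 / (95 + 5) = 0.95 and overall accuracy is 185 / 200 = 0.925. The function does not guard against a class with no predictions, which would cause a division by zero.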

| Algorithm | Class | Precision | Recall | F1-Score | Accuracy |
|---|---|---|---|---|---|
| Logistic Regression (LR) | 0 | 49% | 51% | 50% | 50% |
| Logistic Regression (LR) | 1 | 51% | 50% | 51% | 50% |
| Naïve Bayes (NB) | 0 | 50% | 87% | 64% | 52% |
| Naïve Bayes (NB) | 1 | 59% | 17% | 27% | 52% |
| K-Nearest Neighbors (KNN) | 0 | 53% | 53% | 53% | 54% |
| K-Nearest Neighbors (KNN) | 1 | 55% | 54% | 54% | 54% |
| Random Forest (RF) | 0 | 78% | 86% | 82% | 81% |
| Random Forest (RF) | 1 | 85% | 77% | 81% | 81% |
| Gradient Boosting (GB) | 0 | 68% | 89% | 77% | 74% |
| Gradient Boosting (GB) | 1 | 86% | 60% | 70% | 74% |
| AdaBoost | 0 | 68% | 85% | 76% | 73% |
| AdaBoost | 1 | 81% | 62% | 70% | 73% |
| LightGBM | 0 | 70% | 90% | 79% | 76% |
| LightGBM | 1 | 87% | 63% | 73% | 76% |
| Multilayer Perceptron (MLP) | 0 | 70% | 84% | 77% | 75% |
| Multilayer Perceptron (MLP) | 1 | 81% | 66% | 73% | 75% |

| Classifier | Avg Latency (ms/sample) | Memory Usage (MB) | CPU Utilization (%) |
|---|---|---|---|
| LR | 0.000625 | 1616.863281 | 201.6 |
| NB | 0.001455 | 1616.898438 | 101.9 |
| KNN | 1.986421 | 1695.187500 | 157.1 |
| RF | 0.015431 | 1690.187500 | 97.1 |
| GB | 0.002177 | 1703.367188 | 100.2 |
| AdaBoost | 0.011036 | 1703.367188 | 96.2 |
| LightGBM | 0.002385 | 1645.976562 | 180.3 |
| MLP | 0.002038 | 1703.417969 | 380.6 |
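An average latency per sample, as reported in the first column of the table above, can be estimated by timing a batch prediction and dividing by the batch size. The measurement procedure is not described in this part of the document, so the sketch below is only one plausible approach. `threshold_predict` is a hypothetical stand-in for a trained classifier's predict function, and taking the fastest of several runs is an assumption made to reduce timing noise.

```python
import time


def avg_latency_ms(predict, samples, repeats=5):
    """Return wall-clock prediction time per sample in milliseconds.

    The batch is predicted `repeats` times and the fastest run is used,
    which dampens interference from other processes.
    """
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        predict(samples)
        best = min(best, time.perf_counter() - start)
    return best * 1000.0 / len(samples)


def threshold_predict(samples):
    """Hypothetical classifier: flag a flow when its first feature is >= 5."""
    return [1 if s[0] >= 5 else 0 for s in samples]


# Placeholder feature vectors (day-of-week-like and hour-like values).
samples = [(i % 7, i % 24) for i in range(10_000)]
predictions = threshold_predict(samples)
latency_ms = avg_latency_ms(threshold_predict, samples)
```

Memory usage and CPU utilization need process-level monitoring and are outside the scope of this sketch.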

| Reference | Year | Classifier | Accuracy |
|---|---|---|---|
| [18] | 2016 | RF | 97.1% |
| [18] | 2016 | KNN | 91.9% |
| [18] | 2016 | NB | 43% |
| [34] | 2017 | RF | 95.2% |
| [35] | 2017 | RF | 99.7% |
| [20] | 2017 | NB | 96.5% |
| [22] | 2019 | RF | 99.5% |
| [36] | 2020 | KNN | 97.8% |
| [5] | 2020 | AdaBoost | 92% |
| [37] | 2022 | MLP | 95.8% |
| [37] | 2022 | RF | 95.6% |
| [38] | 2022 | NB | 88.2% |
| [38] | 2022 | RF | 100% |
| [21] | 2025 | RF | 74.8% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Aburbeian, A.M.; Fernández-Veiga, M.; Hasasneh, A. Improving Remote Access Trojans Detection: A Comprehensive Approach Using Machine Learning and Hybrid Feature Engineering. AI 2025, 6, 237. https://doi.org/10.3390/ai6090237
