1. Introduction

Sensor-based Human Activity Recognition (HAR) uses data from various sensors, e.g., the accelerometers and gyroscopes of mobile phones, to recognize and classify human activities [1,2,3,4]. It enables numerous applications, e.g., health monitoring, fitness tracking, and personalized services, and is thus a key element of context-aware systems in the growing Internet of Things (IoT) ecosystem. The advent of Artificial Intelligence (AI), particularly Deep Learning (DL), has advanced HAR technology by overcoming limitations in adaptability and accuracy. DL enables automated feature extraction from raw sensor data without hand-designed features [5,6,7]. In contrast to traditional machine learning models, DL models can capture intricate patterns in inertial data, which can significantly improve activity classification performance.

However, applying DL models to HAR has shortcomings, foremost among them the reliance on centralized processing and the associated data privacy problems [8,9,10]. Most classic DL models rely on the centralized collection and processing of data, wherein sensitive individual data, e.g., activity patterns that can reflect an individual's current health state, are stored and processed within one central facility. This centralized design significantly increases the risk of personal data breaches because it presents a single point of failure to attackers. For example, the theft of HAR data from a centralized database could expose the personal data of thousands or even millions of individuals. Such attacks not only violate user privacy but also raise serious ethical and legal issues.

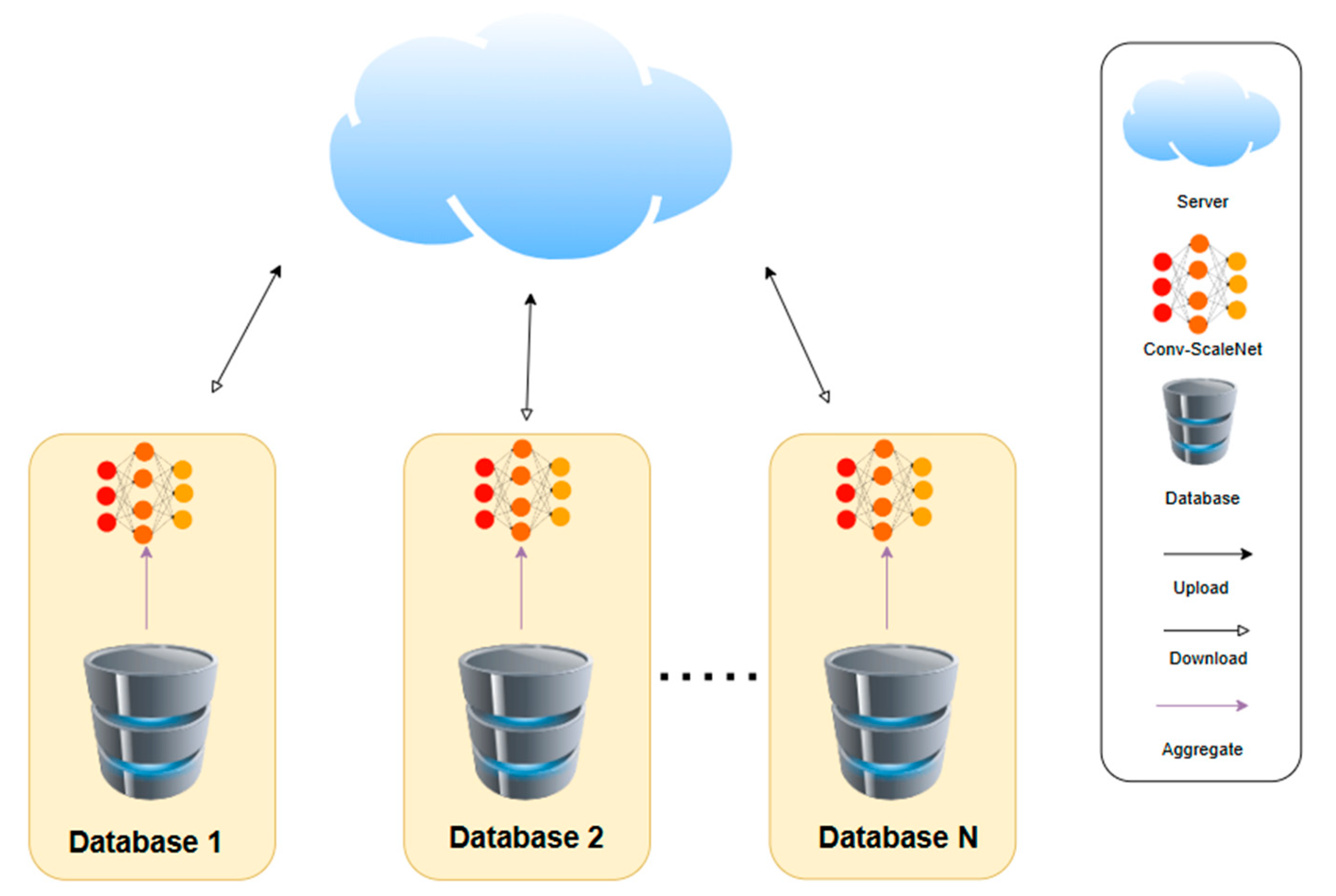

To address these challenges, Federated Learning (FL) has emerged as a promising alternative. In the FL framework, model training and data analysis are conducted in a decentralized manner [11,12,13]. Specifically, model training occurs locally on each client device or system. Raw sensor data thus remains on local devices, and only model updates are transmitted to a central server, which aggregates them to form a global model. This approach decentralizes computation and reduces the risk of the data leakage that can occur in centralized frameworks. Nevertheless, applying FL to sensor-based HAR presents significant challenges, mainly due to the inherent heterogeneity of the data. Environmental conditions and noise (e.g., different weather, surface types, crowded versus spacious areas, and obstacles), as well as individual differences in motion style, gestures, and pace, result in behavioral variability even when the same activities are performed with the same device. This data heterogeneity can substantially degrade HAR performance: local models trained on data from one group of users may not generalize well to other groups. Additionally, this variability across users can cause client drift, in which local model updates diverge, impairing the convergence and overall performance of the global model.

These factors make reliable and accurate HAR in the FL framework challenging, particularly when conventional models such as one-dimensional Convolutional Neural Networks (1D CNNs) are employed. Such models may struggle to handle data heterogeneity effectively: heterogeneity produces complex data features, and 1D CNNs may be suboptimal at extracting these intrinsic features from inertial data, even though they are important for accurate activity recognition. FL operates in a decentralized mode, in which local models are trained at the clients on their respective local datasets. Only the model updates are shared with a central server and aggregated into a global model, which is then distributed back to the clients. This process is repeated iteratively until the global model converges; see Figure 1. Local training models are vital in this context because they enable each client to capture the unique and informative features of its data.
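The iterative procedure described above can be illustrated with a minimal NumPy simulation. This is not the authors' implementation: a least-squares linear model trained for a few gradient-descent epochs (the hypothetical `local_update`) stands in for local Conv-ScaleNet training, the three clients and their data are synthetic, and the server weights each returned model by the client's sample count. In this single-process sketch, the server-side `federated_round` touches client data only through `local_update`, which returns weights alone.

```python
import numpy as np

def local_update(w, X, y, lr=0.1, epochs=5):
    # Hypothetical stand-in for local model training: a few epochs of full-batch
    # gradient descent on a least-squares loss. Only the weights are returned.
    w = w.copy()
    for _ in range(epochs):
        w -= lr * X.T @ (X @ w - y) / len(y)
    return w

def federated_round(w_global, clients):
    # Each client starts from the current global model and trains locally;
    # the server then averages the returned weights in proportion to n_k / n.
    updates = [local_update(w_global, X, y) for X, y in clients]
    sizes = np.array([len(y) for _, y in clients], dtype=float)
    return sum((n_k / sizes.sum()) * w_k for n_k, w_k in zip(sizes, updates))

rng = np.random.default_rng(0)
w_true = np.array([1.0, -2.0, 0.5])
clients = []
for n_k in (40, 80, 120):  # synthetic clients with unequal dataset sizes
    X = rng.normal(size=(n_k, 3))
    clients.append((X, X @ w_true + 0.01 * rng.normal(size=n_k)))

w = np.zeros(3)  # initial global model
for _ in range(50):  # repeat rounds until (approximate) convergence
    w = federated_round(w, clients)
print(np.round(w, 2))
```

Because all synthetic clients share the same underlying relationship, the global model settles close to `w_true`; with heterogeneous (non-IID) clients, the local solutions would pull in different directions, which is the client-drift effect described earlier.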

Therefore, this study focuses on improving the 1D CNN architecture used for local training in FL. In the FL framework, decentralized datasets are stored on user devices, e.g., smartphones or wearable sensors, and no raw inertial sensor data is transmitted to the server. In the proposed 1D CNN model, termed Conv-ScaleNet, a Pyramid Pooling Module (PPM) and Global Average Pooling (GAP) are incorporated to capture multiscale features of the inertial data, enabling feature extraction that aggregates local and global data characteristics. This multiscale feature representation enhances the model's ability to handle diverse data distributions. Experiments on the publicly available WISDM and UCI-HAR datasets demonstrate that Conv-ScaleNet outperforms existing approaches in federated settings, achieving higher accuracy and F1-score while preserving user privacy.

The following are the contributions of this paper:

To develop a smartphone-based HAR system that protects privacy by decentralizing model training through an FL architecture. Raw sensor data remains on each user’s device, and only model updates are exchanged with the central server for aggregation.

To integrate the Pyramid Pooling Module (PPM) and Global Average Pooling (GAP) into the DL architecture to produce a multiscale feature representation. This design enables multiscale feature extraction from the inertial sequence through pooling operations at different scales, and the aggregation of local and global characteristics enhances the model's adaptability to varied data distributions.

The performance of the proposed Conv-ScaleNet is assessed using two publicly available datasets, WISDM and UCI-HAR. This assessment shows that the proposed model outperforms other existing approaches in the FL environment.

2. Related Work

Advancements in DL have significantly enhanced HAR, increasing accuracy and robustness in applications such as healthcare monitoring and smart environments [13,14,15,16,17]. DL models can intrinsically extract meaningful spatiotemporal features from raw sensor data, outperforming traditional methods that depend on handcrafted features. Numerous DL models have been proposed for human activity recognition. For instance, Meng et al. focused on optimizing sensor configurations for specific populations [18]. They performed a comparative study of single-sensor HAR for stroke survivors and able-bodied individuals, using accelerometers, gyroscopes, and surface electromyography (sEMG) sensors to identify the optimal sensor type and placement. Additionally, the authors demonstrated that a model trained on healthy individuals could effectively classify activities in stroke survivors, which highlights opportunities for rehabilitation monitoring with minimal sensor setups. Similarly, research on hand gesture recognition for prosthesis control and armband-based human–machine interfaces has examined how factors such as measurement location, gesture type, and validation protocol affect classification accuracy [19]. Using high-density sEMG (HD-sEMG) to simulate various electrode placements, the authors evaluated ten commonly used dynamic and gesture-and-hold gestures under intraday, interday, and intersubject protocols. The results showed that, while traditional pattern recognition approaches achieved high within-subject accuracy, performance decreased across days and users due to sEMG variability and electrode shifts. This underscores the need for robust configurations that balance gesture set size, accuracy, and generalizability in practical applications.

Furthermore, Abdel-Basset et al. proposed ST-deepHAR, a spatiotemporal dual-channel model integrated with a Long Short-Term Memory (LSTM) layer and an attention mechanism with a modified residual structure, for smartphone-based HAR [20]. This approach achieved 97.7% accuracy on the UCI-HAR dataset and 96.4% on the WISDM dataset. Gupta et al. adopted a hybrid CNN and Gated Recurrent Unit (CNN-GRU) model, attaining 96.54% accuracy on smartwatch data and 90.44% on smartphone data [7]. Additionally, Bashar et al. proposed a smartphone-based HAR model that combines activity-driven handcrafted features with neighborhood component analysis for feature selection, followed by a dense neural network with four hidden layers [21]. The model attained an accuracy of 95.79%. Similarly, Sekaran et al. devised a HAR model based on smartphone inertial sensors [22]. Their empirical results revealed that it achieved 96.4% accuracy while avoiding the computational overhead and privacy concerns associated with vision-based HAR. Kumar and Suresh introduced an ensemble model named Deep-HAR that integrates a CNN and a Recurrent Neural Network (RNN) [23]. The model demonstrated superior performance in smartphone HAR when evaluated on the WISDM and KU-HAR smartphone datasets.

Nguyen et al. proposed HiHAR, a Hierarchical Hybrid Deep Learning Architecture for Wearable Sensor-Based Human Activity Recognition [24]. HiHAR is a hierarchical deep model that integrates a CNN and a Bidirectional LSTM (BiLSTM) to extract short-term and long-term dependencies from sensor data. The model has two stages: a local stage that captures spatiotemporal features from a single window and a global stage that extracts long-term context from adjacent windows. HiHAR was tested on two smartphone HAR datasets, UCI HAPT and MobiAct, attaining accuracies of 97.98% and 96.16%, respectively. Although DL approaches exhibit promising performance, they often raise privacy concerns. Centralized data collection and analysis increases the risk of exposing sensitive data, and centralized systems are vulnerable to single points of failure, where the failure of one subsystem can disrupt the entire system.

To mitigate these challenges, FL has been introduced as an alternative solution. In this decentralized framework, HAR models are trained on user devices to preserve privacy [12,25]. Presotto et al. devised FedCLAR, a federated clustering approach for HAR [26]. FedCLAR groups users with similar activity patterns by examining a subset of the model weights shared with the central server. This selective analysis minimizes communication overhead, and the system outperforms conventional FL solutions in HAR tasks. The authors further extended the model by integrating federated clustering with semi-supervised learning [27]. In the resulting model, SS-FedCLAR, each client combines active learning and label propagation to compute pseudo-labels, which are subsequently used to collaboratively train a federated clustering model. Sarkar et al. proposed GraFeHTy, a Graph Neural Network for HAR in a federated setting [28]. This approach constructs a similarity graph from sensor measurements to leverage relationships between labeled and unlabeled data. The experimental results showed that the model attained an accuracy of 91% in a centralized setting and 81.7% on the WISDM dataset in a federated setting. Xiao et al. proposed HARFLS, a federated learning system for sensor-based human activity recognition [29]. The system integrates a perceptive extraction network (PEN), consisting of a convolutional feature network and an LSTM–attention relation network, to capture both local and global features from HAR data. Experimental results show that PEN outperforms 14 existing HAR algorithms in F1-score, and that HARFLS with PEN achieves better recognition on WISDM and PAMAP2 than 11 federated learning systems with different feature extraction methods. PEN achieved F1-scores of 98.97% on WISDM, 96.33% on UCI_HAR2012, 97.78% on PAMAP2, and 96.89% on OPPORTUNITY, a mean F1-score of 97.49% (±1.83), demonstrating robust and consistent performance across diverse datasets.

Yussif et al. proposed the Efficient Graph and Temporal Convolution Network (EGTCN) for human activity classification [30]. The approach improves segment-based and frame-wise predictions while reducing computational cost. FL has been incorporated into EGTCN to preserve user privacy by training models locally on edge devices and aggregating their updates centrally, enabling efficient and secure activity recognition in decentralized environments. EGTCN outperforms current state-of-the-art methods, with F1-scores of 99.99% on the WISDM dataset, 98.3% on UCI-HARD, and 99.92% on PAMAP2. According to the authors, these results demonstrate that optimizing a weighted dual-objective function can substantially improve classification performance.

Shen et al. introduced Federated Multi-Task Attention (FedMAT), a federated activity recognition framework that addresses mismatches in sensor data contributed by different individuals [31]. FedMAT extracts shared and private features to combine information from various sensors effectively, and it consistently outperformed the compared baselines on four datasets. It achieved 96.88% accuracy and a 96.81% F1-score on HHAR; 92.61% accuracy and a 91.84% F1-score on PAMAP2; 75.72% accuracy and a 75.03% F1-score on ExtraSensory; and 89.78% accuracy and an 83.02% F1-score on SmartJLU.

Despite significant advances in FL for human activity recognition, many existing models struggle to capture multiscale features from sensor data, which are crucial for activity recognition. Conv-ScaleNet addresses this challenge by incorporating a Pyramid Pooling Module (PPM) with Global Average Pooling (GAP) to improve feature extraction, while leveraging FL to train models locally on user devices for privacy protection.

3. Materials and Methods

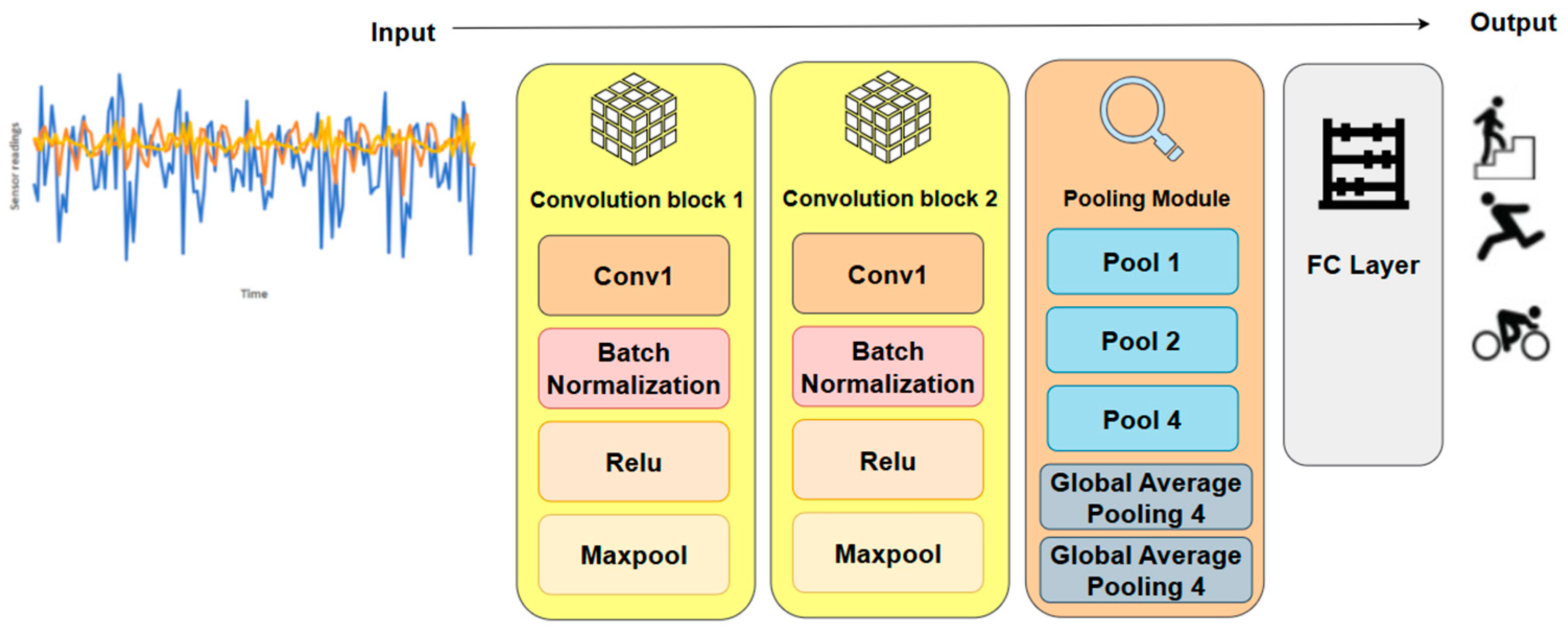

As mentioned above, FL in sensor-based HAR encounters challenges due to data heterogeneity, which can degrade model performance. Traditional models, such as 1D CNNs, may struggle to extract the intrinsic, complex features required for reliable activity recognition. We therefore introduce an FL-based HAR system with an improved convolutional local training model, named Conv-ScaleNet, depicted in Figure 2.

In the proposed decentralized system, each client trains Conv-ScaleNet on its local inertial data. Conv-ScaleNet incorporates the Pyramid Pooling Module (PPM) and Global Average Pooling (GAP) to capture multiscale features of the inertial data. Next, the locally trained model updates are transmitted to a central server, where an aggregation strategy combines them into a global model. This collaborative learning preserves data privacy by keeping raw data on the clients while enhancing the generalizability of the global model.

3.1. Data Acquisition and Preprocessing

In this study, we employed two publicly available smartphone-based HAR datasets, WISDM and UCI-HAR, both of which are labeled, with each activity instance assigned to a predefined class. The Wireless Sensor Data Mining (WISDM) dataset is available at https://www.cis.fordham.edu/wisdm/dataset.php (accessed on 2 June 2025) [32]. It consists of 1,098,207 instances of sensor-based activity data from 36 subjects, recorded at 20 Hz using the accelerometer embedded in a smartphone. Six activities were performed according to a predefined protocol: walking, jogging, walking upstairs, walking downstairs, sitting, and standing. The UCI Human Activity Recognition (HAR) dataset is available at https://archive.ics.uci.edu/ml/datasets/human+activity+recognition+using+smartphones (accessed on 2 June 2025) [33]. It contains recordings of six daily activities (walking, walking upstairs, walking downstairs, sitting, standing, and lying) performed by 30 subjects wearing a Samsung Galaxy S II smartphone on the waist. Motion signals were captured at 50 Hz using the phone's embedded accelerometer and gyroscope. Participants were aged between 19 and 48 years; no gender distribution is reported. Each sample consists of a 128-time-step window of nine sensor signals: three-axis total acceleration, three-axis body acceleration, and three-axis angular velocity.
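UCI-HAR is distributed as pre-segmented 128-step windows (2.56 s at 50 Hz), whereas a continuous stream such as WISDM must be segmented before training. The sketch below shows a generic sliding-window segmentation in NumPy. The function name `sliding_windows`, the window length, the 50% overlap (`step=64`), and majority-vote labeling are illustrative assumptions; this section does not state the segmentation settings used for WISDM.

```python
import numpy as np

def sliding_windows(signal, labels, window=128, step=64):
    """Cut a (T, C) signal into (N, window, C) windows starting every `step` samples.
    Each window takes the most frequent label among its samples (an assumption)."""
    starts = range(0, len(signal) - window + 1, step)
    X = np.stack([signal[s:s + window] for s in starts])
    # np.bincount needs non-negative integer class labels.
    y = np.array([np.bincount(labels[s:s + window]).argmax() for s in starts])
    return X, y

rng = np.random.default_rng(0)
signal = rng.normal(size=(1000, 9))   # hypothetical stream: 1000 steps, 9 channels
labels = np.repeat([0, 1], 500)       # first half activity 0, second half activity 1
X, y = sliding_windows(signal, labels)
print(X.shape)  # (14, 128, 9)
```

For a 20 Hz, three-axis accelerometer stream, the same function applies to a `(T, 3)` array; only the window and step values would need to be chosen for the target window duration.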

For both datasets, we applied a split of 65% for training, 25% for validation, and 10% for testing, ensuring each data instance appeared in only one partition. The validation set was used for hyperparameter tuning and model selection, while the independent testing set was reserved for model performance evaluation. In this study, data samples from the same participant could be present across training, validation, and testing sets, but no samples overlapped across these sets. This experimental strategy was designed to approximate realistic deployment conditions where an individual may interact with multiple applications or devices. However, care was taken to avoid direct data leakage by ensuring that training samples were excluded from both validation and testing.
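The sample-level 65/25/10 split described above can be sketched as follows. This is a minimal illustration, assuming the windows are indexed `0..n_samples-1`; the function name `split_indices`, the seed, and the rounding rule are our assumptions. Because indices are shuffled without regard to participant, windows from one subject can fall into different partitions, as in the protocol above, while each window appears in exactly one partition.

```python
import numpy as np

def split_indices(n_samples, fractions=(0.65, 0.25, 0.10), seed=0):
    """Shuffle sample indices and cut them into disjoint train/validation/test sets."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(n_samples)
    n_train = int(round(fractions[0] * n_samples))
    n_val = int(round(fractions[1] * n_samples))
    # The test set receives the remainder, so every index is used exactly once.
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]

train_idx, val_idx, test_idx = split_indices(1000)
print(len(train_idx), len(val_idx), len(test_idx))  # 650 250 100
```

A subject-wise split would instead group indices by participant ID before partitioning; that variant is not the protocol used here.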

3.2. Local Training Model: Conv-ScaleNet Architecture

The proposed Conv-ScaleNet is an enhanced convolutional local training model for human activity recognition in the FL framework, designed to capture multiscale features from inertial sensor data. As depicted in Figure 3, the architecture begins with two convolutional blocks that extract both localized and higher-level feature patterns from the input inertial signals. The first block employs a 1D kernel of size 5 to capture local, short-range dependencies, whereas the second adopts a larger 1D kernel of size 7 to capture broader patterns. Together, the two blocks learn short- and long-range dependencies, which are crucial for reliable human activity recognition. Batch normalization and ReLU activation are integrated into each convolutional block: batch normalization stabilizes training and improves convergence, and ReLU introduces the nonlinearity that enables the model to learn complex patterns from the sensor data. Each convolutional block is followed by a max pooling layer that reduces the feature map dimensions.
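The two convolutional blocks can be sketched in NumPy to make the layer order and tensor shapes concrete. This is a forward-pass illustration under stated assumptions, not the authors' implementation: the filter counts (32 and 64), zero "same" padding, a max pooling size of 2, training-mode batch statistics with the learnable scale and shift omitted, and random weights are all our choices, since the section specifies only the kernel sizes and layer types.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def conv1d_same(x, w, b):
    """1D convolution (cross-correlation, as in DL frameworks) with zero 'same' padding.
    x: (B, T, C_in), w: (K, C_in, C_out), b: (C_out,) -> (B, T, C_out)."""
    k = w.shape[0]
    left = (k - 1) // 2
    xp = np.pad(x, ((0, 0), (left, k - 1 - left), (0, 0)))
    win = sliding_window_view(xp, k, axis=1)  # (B, T, C_in, K)
    return np.einsum("btck,kco->bto", win, w) + b

def batch_norm(x, eps=1e-5):
    """Per-channel normalization over batch and time (learnable scale/shift omitted)."""
    mean = x.mean(axis=(0, 1), keepdims=True)
    var = x.var(axis=(0, 1), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def max_pool1d(x, size=2):
    """Non-overlapping max pooling along time; leftover steps are dropped."""
    b, t, c = x.shape
    t_out = t // size
    return x[:, :t_out * size].reshape(b, t_out, size, c).max(axis=2)

def conv_block(x, w, b):
    """Conv -> BatchNorm -> ReLU -> MaxPool, following the block order in the text."""
    return max_pool1d(np.maximum(batch_norm(conv1d_same(x, w, b)), 0.0))

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 128, 9))  # batch of 4 windows shaped like UCI-HAR samples
w1, b1 = 0.1 * rng.normal(size=(5, 9, 32)), np.zeros(32)   # kernel 5; 32 filters assumed
w2, b2 = 0.1 * rng.normal(size=(7, 32, 64)), np.zeros(64)  # kernel 7; 64 filters assumed
h = conv_block(conv_block(x, w1, b1), w2, b2)
print(h.shape)  # (4, 32, 64)
```

Each block halves the time axis (128 to 64 to 32), while the kernel sizes 5 and 7 set how many neighbouring time steps each output position sees.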

To enhance multiscale feature learning, Conv-ScaleNet incorporates a pooling module that applies pyramid pooling at different scales (e.g., pool sizes 1, 2, and 4) to learn features at various levels of granularity. This multiscale behavior allows the model to detect fine-grained details as well as broader contextual information in the data. By pooling features at different scales, the Pyramid Pooling Module captures information across multiple temporal regions of the sequence and thereby enhances the model's capacity to recognize patterns of various sizes and shapes. GAP, in turn, provides global context information that complements the local context extracted by the pyramid pooling.
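A minimal NumPy sketch of the multiscale pooling stage follows. The section leaves several details open, so the following are assumptions: "pool sizes 1, 2, and 4" are read as the number of temporal bins per pyramid level (as in pyramid pooling for images), each bin is summarized by its maximum, and the pyramid outputs are flattened and concatenated with the GAP vector. The function names are hypothetical, and Conv-ScaleNet may combine these branches differently.

```python
import numpy as np

def bin_max_pool(x, bins):
    """Split the time axis of x (T, C) into `bins` contiguous segments; take each segment's max.
    Assumes T >= bins so that no segment is empty."""
    edges = np.linspace(0, x.shape[0], bins + 1).astype(int)
    return np.stack([x[edges[i]:edges[i + 1]].max(axis=0) for i in range(bins)])

def multiscale_features(x, bins=(1, 2, 4)):
    """Concatenate pyramid-pooled features at several scales with a GAP vector."""
    pyramid = [bin_max_pool(x, b).ravel() for b in bins]
    gap = x.mean(axis=0)  # global average pooling over the time axis
    return np.concatenate(pyramid + [gap])

feat_map = np.arange(8, dtype=float).reshape(8, 1)  # toy map: 8 time steps, 1 channel
print(multiscale_features(feat_map))
# pyramid maxima [7], [3, 7], [1, 3, 5, 7], then the GAP value 3.5
```

With bins (1, 2, 4) plus the GAP branch, a feature map with C channels yields a fixed-length vector of 8C values (e.g., 512 for a 64-channel map), independent of the sequence length.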

3.3. Model Aggregation

Training approaches designed for centralized learning face challenges in a federated setting, particularly because data across clients are not independent and identically distributed (non-IID). Hence, the proposed approach uses dynamic weighting for global model aggregation so that each client's contribution is proportional to its dataset size. Global model aggregation integrates the model updates received from the distributed clients, and each client's weight is set dynamically according to the number of data samples it holds.

In the proposed Conv-ScaleNet-based FL, the central server constructs a global model by merging the local model updates, and the aggregation is weighted dynamically by the clients' dataset sizes so that each client's contribution is proportional to the amount of data it holds [34]. The global model at communication round $t+1$ is computed as follows:

$$w_{t+1} = \sum_{k=1}^{K} \frac{n_k}{n}\, w_{t+1}^{k},$$

where $w_{t+1}^{k}$ is the local model updated by client $k$, $n_k$ is the number of data samples on client $k$, $K$ is the number of participating clients, and $n = \sum_{k=1}^{K} n_k$ denotes the total number of data samples across all clients.
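The sample-size-weighted aggregation rule can be checked with a small worked example. The sketch assumes each client's model is represented as a dictionary mapping layer names to NumPy arrays; the function name `weighted_aggregate`, the layer names (`conv1.weight`, `fc.bias`), and the sample counts are hypothetical.

```python
import numpy as np

def weighted_aggregate(client_params, client_sizes):
    """Per layer: w_global = sum_k (n_k / n) * w_k, with n = sum_k n_k."""
    n = float(sum(client_sizes))
    return {
        layer: sum((n_k / n) * params[layer]
                   for params, n_k in zip(client_params, client_sizes))
        for layer in client_params[0]
    }

# Two hypothetical clients holding 100 and 300 samples (weights 0.25 and 0.75).
client_a = {"conv1.weight": np.full((2, 2), 1.0), "fc.bias": np.array([0.0])}
client_b = {"conv1.weight": np.full((2, 2), 5.0), "fc.bias": np.array([4.0])}
global_model = weighted_aggregate([client_a, client_b], [100, 300])
print(global_model["conv1.weight"])  # every entry is 0.25 * 1 + 0.75 * 5 = 4.0
print(global_model["fc.bias"])       # 0.25 * 0 + 0.75 * 4 = 3.0
```

An unweighted mean would give 3.0 for `conv1.weight`; weighting by n_k/n moves the result toward the client that holds more data.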