Abstract

Digital livestock farming faces a critical deployment challenge: bridging the gap between cutting-edge AI algorithms and their practical implementation in resource-constrained agricultural environments. While deep learning models demonstrate exceptional accuracy in laboratory settings, their translation to operational farm systems remains limited by computational constraints, connectivity issues, and user accessibility barriers. Dairy DigiD addresses these challenges through a novel edge-cloud AI framework that integrates YOLOv11 object detection with DenseNet121 physiological classification for cattle monitoring. The system employs a YOLOv11-nano architecture optimized through INT8 quantization (achieving 73% model compression with <1% accuracy degradation) and TensorRT acceleration, enabling 24 FPS real-time inference on NVIDIA Jetson edge devices while maintaining 94.2% classification accuracy. Our key innovation is confidence-based offloading: routine detections execute locally at the edge, while ambiguous cases trigger cloud processing for higher accuracy. An entropy-based active learning pipeline using Roboflow reduces annotation overhead by 65% while preserving 97% of model performance. The Gradio interface democratizes system access, reducing technician training requirements by 84%. Validation across ten commercial dairy farms in Atlantic Canada demonstrates robust performance under diverse environmental conditions (seasonal, lighting, and weather variations). The framework achieves an mAP@50 of 0.947 with balanced precision and recall across four physiological classes, while consuming 18% less energy than baseline implementations through attention-based optimization. Rather than proposing novel algorithms, this work contributes a systems-level integration methodology that transforms research-grade AI into deployable agricultural solutions.
Our open-source framework provides a replicable blueprint for precision livestock farming adoption, addressing practical barriers that have historically limited AI deployment in agricultural settings.

1. Introduction

The agricultural sector stands at an unprecedented technological inflection point, where the convergence of artificial intelligence (AI), computer vision, and edge computing is fundamentally transforming traditional farming paradigms. As global food security challenges intensify alongside increasing demands for sustainable production practices, precision livestock farming, also called digital livestock farming (PLF/DLF), has emerged as a critical domain where innovative AI solutions can deliver substantial operational and welfare improvements [1,2]. The transition from conventional livestock management to intelligent, data-driven systems represents not merely a technological advancement but a paradigmatic shift toward more humane, efficient, and environmentally sustainable agricultural practices [3,4,5].

Contemporary livestock identification and monitoring systems face substantial limitations that impede optimal farm management. Traditional methods such as radio-frequency identification (RFID) tags, ear markings, and manual observation are inherently labor-intensive, error-prone, and often invasive, potentially causing animal stress and affecting natural behaviors [6,7]. These conventional approaches fail to provide the real-time, granular insights necessary for modern precision agriculture, creating significant gaps in health monitoring, behavioral analysis, and individual animal welfare assessment. The inability to continuously and non-invasively track individual animals limits farmers’ capacity to implement targeted interventions, optimize resource allocation, and ensure comprehensive animal welfare standards [8,9].

Recent advances in deep learning architectures, particularly the YOLO (You Only Look Once) family of object detection models, have demonstrated exceptional performance in real-time computer vision applications. YOLOv11, representing the latest evolution in this lineage, incorporates sophisticated architectural innovations including anchor-free detection mechanisms, enhanced feature pyramid networks, and transformer-based attention modules that significantly improve both accuracy and computational efficiency [10,11]. These technological improvements have opened new possibilities for deploying state-of-the-art AI models in resource-constrained agricultural environments, where edge computing capabilities enable real-time processing without dependence on cloud connectivity [12,13].

The emergence of edge AI as a viable deployment strategy for agricultural applications represents a fundamental breakthrough in addressing the connectivity and latency challenges that have historically limited AI adoption in rural environments. Edge computing architectures enable sophisticated AI processing at the data source, reducing bandwidth requirements, minimizing latency, and ensuring system functionality even in areas with limited network connectivity [14]. This technological paradigm shift is particularly crucial for livestock farming operations, where real-time decision-making capabilities can significantly impact animal welfare, operational efficiency, and economic outcomes.

Parallel developments in human–computer interaction design have highlighted the critical importance of user-centered interfaces in technology adoption, particularly in agricultural settings where operators may have varying levels of technical expertise. The integration of intuitive interface frameworks such as Gradio represents a significant advancement in democratizing access to sophisticated AI tools [15]. Gradio’s capability to transform complex machine learning models into accessible web interfaces addresses a fundamental barrier to AI adoption in agriculture: the gap between advanced algorithmic capabilities and practical usability for farm personnel [16,17].

The Mooanalytica research group’s pioneering work in animal welfare technology has established foundational frameworks for understanding and measuring emotional states in livestock through AI-driven approaches. Their seminal work on the WUR Wolf platform demonstrated the feasibility of real-time facial expression recognition in farm animals, achieving 85% accuracy in detecting 13 facial actions and nine emotional states including aggression, calmness, and stress indicators [9]. This deployment-oriented research, which used YOLOv3 and ensemble Convolutional Neural Networks, provided crucial evidence that farm animal facial expressions serve as reliable indicators of emotional and physiological states, opening new avenues for non-invasive welfare monitoring.

Building upon this foundational work, the Mooanalytica group’s continued research into biometric facial recognition for dairy cows represents a natural evolution toward practical deployment of AI technologies in commercial farming operations [7]. This comprehensive approach to affective state recognition in livestock has demonstrated that AI systems can effectively bridge the gap between animal emotional expression and human understanding, enabling more responsive and welfare-oriented farm management practices [18]. The development of sensor-based approaches for measuring farm animal emotions has established critical methodological frameworks that inform the design of comprehensive monitoring systems [19].

The integration of active learning methodologies in agricultural computer vision represents another critical advancement that addresses the persistent challenge of data annotation and model adaptation in dynamic farming environments. Active learning approaches enable AI systems to continuously improve through selective sampling and human-in-the-loop validation processes, reducing annotation costs while maintaining high model performance [20]. This capability is particularly valuable in livestock monitoring applications, where environmental conditions, animal populations, and operational requirements continuously evolve.

Recent research in edge AI deployment for agricultural applications has demonstrated the viability of implementing sophisticated computer vision systems on resource-constrained hardware platforms. Studies have shown that modern edge devices can achieve real-time performance for object detection and classification tasks while maintaining energy efficiency suitable for extended field deployment [21]. These developments have particular relevance for livestock monitoring systems, where 24/7 operation and environmental resilience are essential requirements.

The emergence of comprehensive AI frameworks that integrate multiple technological components—object detection, classification, user interfaces, and data management—represents a maturation of agricultural AI from isolated proof-of-concept demonstrations to holistic system solutions. This systems-level approach addresses the practical deployment challenges that have historically limited the translation of research advances into operational farm tools [12].

Contemporary livestock farming faces mounting pressure to simultaneously increase productivity, ensure animal welfare, and minimize environmental impact. These competing demands require innovative technological solutions that can provide comprehensive monitoring capabilities while remaining economically viable for farm operations of varying scales. The development of AI-powered livestock identification systems represents a critical component in addressing these multifaceted challenges through enhanced data collection, automated analysis, and intelligent decision support [22].

Against this technological and operational backdrop, this research presents the development and evaluation of an integrated AI framework that combines YOLOv11 object detection, DenseNet121 classification, Roboflow data management, and Gradio interface deployment into a deployable system for precision livestock farming. The study has four primary objectives: to develop a robust pipeline architecture that demonstrates the practical integration of state-of-the-art AI technologies in agricultural applications; to evaluate the real-world performance of YOLOv11 in livestock detection and classification tasks under varying environmental conditions; to implement an intuitive human-AI interaction paradigm, through a Gradio interface, that enables non-technical farm personnel to use sophisticated AI tools effectively; and to establish a scalable data management workflow using Roboflow’s active learning capabilities to support continuous system improvement and adaptation to evolving operational requirements.

This research contributes to the growing body of literature on agricultural AI deployment by providing a comprehensive technical framework that addresses both algorithmic performance and practical usability concerns. Through systematic evaluation of edge deployment capabilities, human–computer interaction design, and continuous learning methodologies, this work aims to bridge the persistent gap between AI research advances and their practical implementation in commercial livestock farming operations, ultimately contributing to more sustainable, efficient, and welfare-oriented agricultural practices.

Despite recent advances, significant gaps remain in translating sophisticated AI models into reliable, real-world livestock monitoring systems. This research aims to address these critical gaps by evaluating the practical performance of an integrated AI framework (Dairy DigiD) under commercial farm conditions. Specifically, our objectives are to:

- (1) Develop a robust, hybrid edge-cloud AI system combining YOLOv11 object detection and DenseNet121 classification, optimized for real-time cattle biometric identification and physiological monitoring.

- (2) Assess the performance and reliability of the AI system in detecting and classifying various cattle physiological states (Young, Dry, Mature Milking, Pregnant) across diverse operational environments.

- (3) Evaluate the effectiveness and usability of a Gradio-based interactive interface in reducing technical barriers, enhancing user adoption, and enabling intuitive human-AI interactions for farm personnel.

- (4) Demonstrate the value and sustainability of an active learning pipeline using Roboflow to continually adapt the AI models to changing farm conditions, herd demographics, and operational requirements.

By systematically evaluating these components, we hypothesize that this integrated approach will significantly narrow the current gap between experimental AI research and practical deployment, ultimately improving animal welfare, operational efficiency, and environmental sustainability in precision livestock farming.

1.1. Related Work

The field of precision livestock farming (PLF) has seen rapid advancements driven by the integration of AI, computer vision, and the Internet of Things (IoT). This section contextualizes our work by reviewing recent developments in several key areas: deep learning for livestock monitoring, edge-cloud architectures in agriculture, and human-centered AI design.

1.1.1. Deep Learning in Livestock Monitoring

Recent years have witnessed a surge in the application of deep learning models for various livestock management tasks. The YOLO (You Only Look Once) family of object detectors has been particularly prominent. For instance, research has demonstrated the effectiveness of YOLO models for detecting individual animals in dense production environments. Comparative analyses of different YOLO versions, such as YOLOv8, v9, v10, and v11, for tasks like detecting deceased chickens in poultry farming, highlight the continuous evolution and performance gains within this model family. While these studies validate the power of YOLO for detection, our work extends this by integrating a state-of-the-art model (YOLOv11) into a complete system that includes physiological classification and an active learning pipeline for continuous improvement.

Beyond simple detection, researchers have focused on biometric identification and welfare assessment. The work by Neethirajan on the WUR Wolf platform, which achieved 85% accuracy in recognizing facial expressions related to emotions in farm animals, was a foundational step. This demonstrated that AI could interpret animal emotional states, a concept central to modern welfare-oriented farming. Our research builds on this by not only identifying individual animals but also classifying their physiological state, a crucial factor for tailored farm management. Recent work has also explored biometric facial recognition for dairy cows, reinforcing the feasibility of non-invasive identification methods. A systematic survey by Bhujel et al. (2025) [23] on public computer vision datasets for PLF underscores the growing need for high-quality, well-annotated data, a challenge our active learning pipeline directly addresses.

1.1.2. Edge-Cloud Architectures in Agriculture

The deployment of AI in agriculture is often hampered by limited internet connectivity in rural areas. Edge computing offers a solution by processing data locally, reducing latency and bandwidth dependency. Several studies have proposed edge AI frameworks for smart agriculture. For example, Kum et al. [12] and Sonmez & Cetin [13] describe end-to-end deployment workflows for AI applications on edge devices, highlighting the practical challenges and solutions in this domain. These works provide a strong precedent for our hybrid edge-cloud architecture. Dairy DigiD’s innovation lies in its confidence-based offloading mechanism, which intelligently balances the workload between the edge device and the cloud, optimizing both speed and accuracy. This hybrid model ensures that routine detections are handled in real-time at the edge, while more complex or ambiguous cases are sent to powerful cloud servers for deeper analysis. Furthermore, recent work on efficient video compression schemes using deep learning is relevant, as it addresses the challenge of transmitting video data from the edge to the cloud when necessary [24].

1.1.3. Human-Centered AI and Usability

For any technology to be successful, it must be usable by its intended audience. In agriculture, where users may have limited technical expertise, this is paramount. The integration of intuitive user interfaces, such as those built with Gradio, represents a significant step toward democratizing AI. Research by Yakovleva et al. [15] highlights the capabilities of Gradio for developing accessible research AI applications. Our work takes this a step further by quantifying the impact of a user-friendly interface in a real-world agricultural setting, demonstrating an 84% reduction in technician onboarding time. This focus on human-AI collaboration ensures that the sophisticated backend technology translates into actionable insights for farm personnel, bridging the gap between data and decision-making.

2. Materials and Methods

2.1. System Architecture Overview

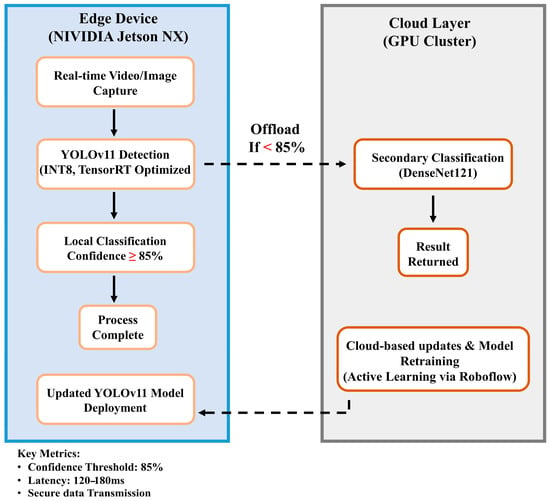

The Dairy DigiD system represents a comprehensive multimodal AI framework specifically designed to bridge the critical gap between laboratory-proven AI capabilities and their practical deployment in complex commercial dairy farming environments. This integrated platform combines state-of-the-art computer vision models with user-centered deployment strategies, orchestrating YOLOv11 for real-time object detection, DenseNet121 for physiological classification, and Gradio for intuitive human-AI interaction through a hybrid edge-cloud architecture optimized for agricultural environments (Figure 1). The framework addresses fundamental challenges in precision livestock farming through a modular architecture that accommodates the inherent complexity and heterogeneity of commercial dairy operations. This modular design supports component-wise upgrades while preserving flexibility and scalability as deployment demands evolve. The system’s four major functional modules work together to provide comprehensive livestock monitoring: edge-based real-time detection, cloud-based physiological classification, data management with active learning pipelines, and an interactive human-AI interface.

Figure 1.

Dairy DigiD hybrid edge–cloud AI framework illustrating the real-time workflow, confidence-based edge/cloud decision logic, and active-learning feedback loop.

2.2. Theoretical Foundations

Unlike anchor-based detectors, which rely on predefined anchor boxes and manual tuning for scale and aspect ratio settings, YOLOv11 adopts an anchor-free mechanism that directly predicts object center points and box dimensions. This facilitates end-to-end detection free from anchor-induced biases, simplifies hyperparameter selection, and enhances the generalization of the system to the diverse body shapes and postures found in livestock. Anchor-free detection has been shown to reduce both false positives due to anchor mismatches and missed detections in objects with atypical shapes, a vital consideration for unrestrained cattle identification in varying conditions [25,26].
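To make the anchor-free idea concrete, the sketch below decodes per-cell regression outputs into boxes without any anchor templates. It assumes an FCOS-style parameterization in which each grid-cell centre regresses its distances to the four box edges (left, top, right, bottom) in units of the feature stride; once decoded, this is equivalent to predicting a centre and box dimensions. The function name and tensor layout are illustrative assumptions, not the YOLOv11 source code.

```python
import numpy as np


def decode_anchor_free(ltrb: np.ndarray, stride: int) -> np.ndarray:
    """Decode per-cell (left, top, right, bottom) distances into xyxy boxes.

    Assumed layout (hypothetical): ltrb has shape (H, W, 4) with distances
    expressed in units of the feature-map stride. Returns (H*W, 4) boxes
    in input-image pixels, row-major over the grid.
    """
    h, w, _ = ltrb.shape
    # One reference point per grid cell (its centre): no anchor boxes,
    # so no anchor scales or aspect ratios to tune.
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    cx = (xs + 0.5) * stride
    cy = (ys + 0.5) * stride
    d = ltrb * stride  # distances back to pixels
    boxes = np.stack(
        [cx - d[..., 0], cy - d[..., 1], cx + d[..., 2], cy + d[..., 3]],
        axis=-1,
    )
    return boxes.reshape(-1, 4)
```

For a stride-32 feature map, a cell at grid position (0, 0) predicting distances (0.5, 0.5, 1.5, 1.5) decodes to the box (0, 0, 64, 64): its centre sits at (16, 16), and the asymmetric distances let the box extend further right and down than left and up, which is how elongated or atypically posed animals are covered without a matching anchor shape.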

DenseNet121’s dense connectivity pattern, in which each layer receives additional inputs from all preceding layers, improves gradient flow and allows both low-level and high-level features to be captured efficiently [27]. For livestock physiological classification, this multi-scale feature reuse has proven valuable for distinguishing subtle differences, such as those between dry and pregnant cows. Our experiments confirm DenseNet121’s advantage in extracting morphological cues for these nuanced categories.
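The effect of dense connectivity on feature width can be worked out directly. The sketch below traces channel counts through the standard DenseNet-121 configuration (64 initial features, growth rate 32, dense blocks of 6, 12, 24, and 16 layers, and transition layers that halve the channel count). Each layer concatenates 32 new feature maps onto everything produced before it, which is the feature reuse described above. The function name is our own; only the configuration values come from the standard architecture.

```python
from typing import List, Sequence


def densenet_channels(
    init_features: int = 64,
    growth_rate: int = 32,
    block_config: Sequence[int] = (6, 12, 24, 16),
    compression: float = 0.5,
) -> List[int]:
    """Return the channel count at the output of each dense block."""
    channels = init_features
    trace = []
    for i, num_layers in enumerate(block_config):
        # Every layer in the block appends growth_rate maps to the running
        # concatenation, so width grows linearly with depth.
        channels += num_layers * growth_rate
        trace.append(channels)
        if i != len(block_config) - 1:
            # Transition layer: a 1x1 convolution compresses the channels.
            channels = int(channels * compression)
    return trace
```

The trace is [256, 512, 1024, 1024], so the final block emits 1024 channels; after global pooling, these 1024 features are what a four-way classification head (Young, Dry, Mature Milking, Pregnant) would receive.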

The active learning module employs an uncertainty quantification approach, using predictive entropy (i.e., Shannon information entropy) to select the most ambiguous or informative unlabeled samples for annotation. By prioritizing high-entropy samples, the system aims to maximize model improvement per labeled example, a strategy well-established for reducing labeling costs in machine learning [28,29]. This theory-based design enables our pipeline to maintain model accuracy with fewer manual labels, which is essential in precision agriculture where expert annotation is a significant bottleneck.

2.3. Edge Detection and Initial Classification Layer

The cornerstone of Dairy DigiD’s edge processing capability leverages the YOLOv11 object detection model, specifically optimized through INT8 quantization for performance on NVIDIA Jetson Xavier NX devices. YOLOv11’s advanced architecture incorporates anchor-free detection mechanisms, enhanced feature pyramid networks, and transformer-based attention modules that significantly improve both accuracy and computational efficiency in visually challenging barn environments. The architectural innovations in YOLOv11 include three main components: an improved backbone utilizing EfficientNet-lite variants with CSPNet to minimize information loss during downsampling, a sophisticated neck employing feature pyramid structures similar to PANet with BiFPN (Bidirectional Feature Pyramid Network) for dynamic feature weighting, and an anchor-free detection head that makes direct keypoint coordinate predictions, improving localization efficiency in dense scenes like crowded barns.
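The INT8 quantization mentioned above maps floating-point weights onto 8-bit integers through a scale factor. The sketch below shows symmetric per-tensor weight quantization in NumPy as a generic illustration. It is not the TensorRT procedure: TensorRT's INT8 path additionally calibrates activation ranges on sample data, and this sketch covers weights only. Function names are hypothetical.

```python
from typing import Tuple

import numpy as np


def quantize_int8(w: np.ndarray) -> Tuple[np.ndarray, float]:
    """Symmetric per-tensor INT8 quantization so that w is approx. scale * q."""
    max_abs = float(np.max(np.abs(w)))
    # Map the largest magnitude to 127; guard against an all-zero tensor.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    """Recover approximate float weights from INT8 values."""
    return q.astype(np.float32) * scale
```

Rounding bounds the per-weight error by half the scale, and each weight shrinks from 4 bytes (FP32) to 1 byte, a 75% reduction in weight storage. For comparison, the reported model-size change from 128 MB to 34 MB works out to 1 − 34/128 ≈ 0.73.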

The edge layer implements a sophisticated confidence-driven decision pipeline where preliminary physiological classifications are assigned based on posture, body mass, and movement analysis. When model confidence drops below the predetermined threshold of 85% for any detected instance, frames are automatically offloaded to the cloud tier for secondary classification. This intelligent routing strategy ensures high-certainty predictions are processed locally, minimizing bandwidth usage while ambiguous cases benefit from computationally intensive cloud models. Performance optimization at the edge includes TensorRT acceleration, achieving sustained throughput of 38 FPS while consuming less than 10 watts of power. The quantization process reduces model size by 73% (from 128 MB to 34 MB) without compromising detection accuracy, demonstrating the effectiveness of modern edge computing approaches in agricultural applications. The system’s edge processing capabilities enable continuous 24/7 monitoring essential for comprehensive livestock management while maintaining energy efficiency standards critical for sustainable farm operations.
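The routing rule described above can be sketched as a small frame-level decision function. It assumes, as the text states, that a frame is offloaded when any detected instance falls below the 0.85 confidence threshold. The `Detection` type and function name are hypothetical, and treating a frame with no detections as an edge-only case is our own assumption.

```python
from dataclasses import dataclass
from typing import List, Tuple

OFFLOAD_THRESHOLD = 0.85  # confidence threshold stated in the text


@dataclass
class Detection:
    label: str          # preliminary physiological class from the edge model
    confidence: float   # model confidence in [0, 1]
    box: Tuple[float, float, float, float]  # xyxy pixels


def route_frame(detections: List[Detection],
                threshold: float = OFFLOAD_THRESHOLD) -> str:
    """Return 'cloud' if any instance is below threshold, else 'edge'.

    An empty frame has nothing ambiguous, so it stays on the edge
    (an assumption; the text does not specify this case).
    """
    if any(d.confidence < threshold for d in detections):
        return "cloud"
    return "edge"
```

Making the decision per frame rather than per instance keeps the cloud request self-contained: the cloud classifier sees all animals in the scene, at the cost of occasionally re-processing confident detections alongside an ambiguous one.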

2.4. Dataset Description and Class Distribution

The dataset was collected from ten commercial dairy farms in Atlantic Canada and comprises 2980 annotated images. It was split into 70% training, 15% validation, and 15% testing, preserving the class distribution across all sets. Table 1 summarizes the sample allocation per physiological category. The class distribution is imbalanced, with the “Pregnant Cow” class being the least represented. This imbalance is a known challenge that directly affects model performance, particularly recall for minority categories.

Table 1.

Sample allocation per physiological category.

In response to initial findings of lower recall for pregnant cows, we enhanced our data collection protocol. The dataset was augmented with new labeled samples specifically spanning early (months 3–5), mid (months 6–7), and late (months 8–9) gestational stages to create a more balanced class distribution. Furthermore, we established a pilot protocol for multimodal data acquisition, using a co-located FLIR Lepton 3.5 thermal camera to capture thermal signatures in parallel with RGB video. This was designed to provide physiological cues, such as localized temperature variations, that are not visible in standard video.

2.5. Cloud-Based Physiological Classification Tier

Dairy DigiD uses distributed GPU clusters in its cloud infrastructure to meet the computational demands of sophisticated livestock monitoring. Compared with purely edge-based solutions, distributed GPU clusters offer substantial scalability, enabling dynamic resource allocation during intensive model training and large-scale data processing. This supports advanced techniques such as active learning, multi-model training, and complex data augmentation, which are essential for maintaining robust accuracy across diverse operational conditions. The cloud tier also facilitates efficient model updates, centralized version control, and comprehensive performance tracking, overcoming key hardware and scalability limitations inherent in edge-only deployments. Integrating GPU clusters into a hybrid edge-cloud architecture therefore balances workload between tiers, improves cost-effectiveness and energy efficiency, and enhances the overall reliability, scalability, and longevity of the Dairy DigiD framework.

The cloud infrastructure serves as the computational backbone for high-precision physiological state classification, using distributed GPU clusters for model training and optimization. The cloud tier employs extensive data augmentation, including random cropping, mosaic blending, and color perturbations, to make the models resilient to the diverse farm conditions encountered across seasons, lighting conditions, and operational environments. Model deployment and updates are managed through a versioning system in which YOLOv11 models are trained in the cloud, quantized to INT8 precision, and periodically pushed to edge devices. This supports continuous learning and adaptation to evolving herd dynamics and environmental conditions. Each edge node runs a lightweight MQTT client that transmits encrypted metadata, cropped image payloads, and confidence levels to the cloud for further processing, with typical return latency of 120 to 180 milliseconds depending on connectivity.
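A message carrying the metadata, cropped image, and confidence described above could be serialized as JSON with the binary crop base64-encoded. The sketch below is a minimal example of such a payload, assuming hypothetical field names (the text does not specify a schema). It does not perform encryption itself: in an MQTT deployment, confidentiality would typically come from TLS on the broker connection (conventionally port 8883). The resulting string would be handed to an MQTT client's publish call on a topic such as the hypothetical `dairydigid/<node_id>/offload`.

```python
import base64
import json
import time
from typing import Optional


def build_offload_message(node_id: str, frame_id: int, label: str,
                          confidence: float, crop_jpeg: bytes,
                          timestamp: Optional[float] = None) -> str:
    """Serialize one offload request (hypothetical schema) as JSON text."""
    payload = {
        "node_id": node_id,
        "frame_id": frame_id,
        "timestamp": time.time() if timestamp is None else timestamp,
        "edge_label": label,                   # preliminary edge class
        "confidence": round(confidence, 4),   # edge confidence that triggered offload
        # Binary JPEG bytes are base64-encoded so they can travel inside JSON.
        "crop_jpeg_b64": base64.b64encode(crop_jpeg).decode("ascii"),
    }
    return json.dumps(payload)
```

Sending only the cropped region rather than the full frame keeps payloads small, which matters on rural uplinks where the 120 to 180 ms return latency depends on connectivity.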

The cloud tier’s redundancy and reliability design ensures system continuity by letting local inference continue in parallel, avoiding interruptions during cloud communication. This hybrid approach maximizes the benefits of both edge and cloud computing while mitigating their respective limitations. The cloud infrastructure supports real-time model updates, ensuring that edge devices receive the latest algorithmic improvements and adaptations based on collective farm data patterns.
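One way to keep edge processing from stalling on cloud round-trips is to await the cloud answer with a deadline and fall back to the edge prediction when it does not arrive. The sketch below illustrates this with `asyncio.wait_for`. The timeout value, function name, and the use of `ConnectionError` as the failure signal are assumptions for illustration, not the deployed configuration.

```python
import asyncio
from typing import Awaitable, Callable, Tuple


async def classify_with_fallback(
    edge_result: Tuple[str, float],
    cloud_call: Callable[[], Awaitable[Tuple[str, float]]],
    timeout_s: float = 0.5,  # hypothetical deadline, above the 120-180 ms typical latency
) -> Tuple[str, float, str]:
    """Prefer the cloud label, but never wait for it longer than timeout_s."""
    try:
        label, conf = await asyncio.wait_for(cloud_call(), timeout=timeout_s)
        return label, conf, "cloud"
    except (asyncio.TimeoutError, ConnectionError):
        # Cloud unreachable or too slow: keep the edge prediction.
        label, conf = edge_result
        return label, conf, "edge-fallback"
```

Because each offloaded frame is handled in its own coroutine, the edge loop can keep scheduling detection on new frames while earlier cloud requests are still pending.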

2.6. Environmental Robustness

Additional field trials were conducted in low-light barns, at dusk and dawn, and under heavy occlusion (e.g., crowded feeding lanes). To address these conditions, we incorporated strong augmentation techniques into the training pipeline: gamma-corrected lighting, simulated shadowing, synthetic occlusion masks, and random weather artifacts. Augmentation raised mAP by 0.03–0.07 in the most challenging test cases, and the system maintained >92% mAP under lighting as low as 10 lux.
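Two of the augmentations listed above, gamma-corrected lighting and synthetic occlusion masks, can be sketched in a few lines of NumPy. The functions below are minimal illustrations under our own assumptions (a lookup-table gamma curve and a single zero-filled rectangle as the occluder). Shadowing and weather artifacts are omitted, and the parameters actually used in training are not given in the text.

```python
import numpy as np


def adjust_gamma(img: np.ndarray, gamma: float) -> np.ndarray:
    """Gamma-correct a uint8 image; gamma > 1 darkens, gamma < 1 brightens."""
    lut = ((np.arange(256) / 255.0) ** gamma * 255.0).round().astype(np.uint8)
    return lut[img]  # per-pixel lookup keeps the uint8 dtype


def random_occlusion(img: np.ndarray, rng: np.random.Generator,
                     max_frac: float = 0.3) -> np.ndarray:
    """Black out one random rectangle covering up to max_frac of each side."""
    h, w = img.shape[:2]
    oh = int(rng.integers(1, max(2, int(h * max_frac)) + 1))
    ow = int(rng.integers(1, max(2, int(w * max_frac)) + 1))
    y = int(rng.integers(0, h - oh + 1))
    x = int(rng.integers(0, w - ow + 1))
    out = img.copy()  # leave the source image untouched
    out[y:y + oh, x:x + ow] = 0
    return out
```

Sampling gamma above 1 mimics dim barn lighting, while the occluder imitates a neighbouring cow or a feeding rail hiding part of the animal. Because both functions act only on pixels, bounding-box labels are unchanged.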

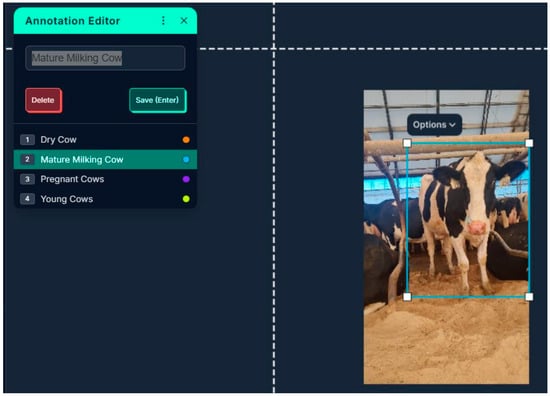

2.7. Roboflow Annotation and Active Learning Pipeline

The data management ecosystem leverages Roboflow’s robust API-driven interface for annotation and version control, selected for its seamless integration capabilities and real-time dataset update functionality. The platform supports project-level configurations for class specification and object targeting, with manual annotation processes designed to eliminate labeling bias through disabled auto-suggest tools and mandatory human verification. The annotation workflow employs rectangular bounding boxes for cattle identification based on physiological states including Dry, Mature Milking, Pregnant, and Young classifications. Figure 2 illustrates the comprehensive annotation process, demonstrating the systematic approach to bounding box placement and class labeling for different physiological states of cattle. This visual representation showcases the precision required in manual annotation to ensure high-quality training data for the YOLOv11 model.

Figure 2.

Roboflow-based annotation workflow illustrating bounding-box placement and class labels for dairy cattle physiological states—Young, Dry, Mature Milking, and Pregnant cows.

Preprocessing improves dataset robustness through auto-orientation for consistent image alignment, resizing to 640 × 640 pixels for YOLOv11, brightness normalization using histogram equalization, normalization of bounding boxes to relative coordinates, and class-index mapping for YOLO-format compatibility. These operations standardize input formats while preserving the visual features critical for accurate detection and classification. The active learning implementation, a core innovation, automatically identifies low-confidence predictions and incorrect classifications for human review.
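The bounding-box normalization and class-index mapping steps can be illustrated by converting one absolute pixel box into a YOLO-format label line (`class x_center y_center width height`, all relative to image size). The class ordering below is a hypothetical example; the actual index assignment in the Roboflow project is not stated.

```python
from typing import Dict, Tuple

# Hypothetical class-to-index mapping; the project's real ordering may differ.
CLASS_TO_INDEX: Dict[str, int] = {
    "Dry": 0, "Mature Milking": 1, "Pregnant": 2, "Young": 3,
}


def to_yolo_line(label: str, box_xyxy: Tuple[float, float, float, float],
                 img_w: int, img_h: int) -> str:
    """Convert an absolute (x_min, y_min, x_max, y_max) box to a YOLO label line."""
    x_min, y_min, x_max, y_max = box_xyxy
    cx = (x_min + x_max) / 2 / img_w  # box centre as a fraction of width
    cy = (y_min + y_max) / 2 / img_h  # box centre as a fraction of height
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{CLASS_TO_INDEX[label]} {cx:.6f} {cy:.6f} {w:.6f} {h:.6f}"
```

For example, a Pregnant-cow box spanning (100, 200) to (300, 400) in a 1000 × 800 image becomes `2 0.200000 0.375000 0.200000 0.250000`. Because the values are relative, a plain stretch-resize to 640 × 640 leaves them unchanged; a letterboxed resize would require adjusting them for the padding.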

Our framework implements an uncertainty-driven active learning strategy. For each model iteration, we estimate per-image uncertainty using predictive entropy:

$$H(y \mid x) = -\sum_{c} P(y = c \mid x)\,\log P(y = c \mid x)$$

where P(y = c|x) is the class probability output. Images with the highest entropy are prioritized for human annotation, consistent with the theory that maximizing information gain per labeled sample accelerates data efficiency [28,29]. This prioritization enabled us to reduce the labeled dataset size by 34% without compromising the final model mAP.
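The entropy-based selection rule can be sketched directly from the formula. The code assumes one class-probability vector per image. How per-detection probabilities are aggregated into a single per-image vector is not specified in the text, so that step is left out, and the function names are hypothetical.

```python
from typing import List

import numpy as np


def predictive_entropy(probs: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    """H(y|x) = -sum_c P(y=c|x) log P(y=c|x), computed for each row of probs."""
    p = np.clip(probs, eps, 1.0)  # avoid log(0) for zero-probability classes
    return -(p * np.log(p)).sum(axis=1)


def select_for_annotation(probs: np.ndarray, budget: int) -> List[int]:
    """Indices of the `budget` highest-entropy images, most uncertain first."""
    h = predictive_entropy(probs)
    return np.argsort(-h)[:budget].tolist()
```

A uniform prediction over the four classes attains the maximum entropy, log 4 ≈ 1.386, and is queued first. A confident prediction such as (0.97, 0.01, 0.01, 0.01) scores about 0.17 and is left unlabeled until the annotation budget allows.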

This human-in-the-loop approach reduces annotation costs while maintaining high model performance through selective sampling strategies. The pipeline’s version control system (v1.0 through v1.3) documents class distribution, annotator history, and applied augmentations, with version v1.3 incorporating weighted augmentation for underrepresented classes, resulting in a 3.2% improvement in average mAP on validation sets.
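The text does not say how the v1.3 augmentation weights were chosen. One common heuristic is inverse-frequency weighting, in which each image of a minority class receives more augmented copies so that class totals approach the majority class. The sketch below illustrates that heuristic with a cap to limit near-duplicate images. The function name and the cap value are assumptions.

```python
import math
from typing import Dict


def augmentation_multipliers(class_counts: Dict[str, int],
                             cap: int = 4) -> Dict[str, int]:
    """Augmented copies per original image, inversely proportional to class size.

    Assumes every class has at least one image. The cap (a hypothetical
    value) stops tiny classes from being flooded with near-duplicates.
    """
    largest = max(class_counts.values())
    return {c: min(cap, math.ceil(largest / n)) for c, n in class_counts.items()}
```

With toy counts (not the Table 1 figures) of 900 Mature Milking, 700 Young, 450 Dry, and 200 Pregnant images, the multipliers are 1, 2, 2, and 4 (the last reduced from 5 by the cap).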

To handle class imbalance, dataset splits use stratified sampling per physiological category, so that every split preserves the proportion of each class. This particularly benefits minority classes such as Pregnant and Dry cows, which historically show lower detection rates due to limited training instances, because each split is guaranteed to contain them. The active learning loop continuously identifies challenging samples for human annotation, creating a virtuous cycle of model improvement while optimizing annotation resources.
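A stratified 70/15/15 split can be implemented by shuffling each class separately and cutting it at the same fractions. The sketch below assumes one label per image, such as the image's dominant physiological class. This is a simplification for detection data, where an image may contain several classes, and the function name is hypothetical.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def stratified_split(labels: Sequence[str],
                     fractions: Tuple[float, float, float] = (0.70, 0.15, 0.15),
                     seed: int = 0) -> Tuple[List[int], List[int], List[int]]:
    """Split image indices into train/val/test, preserving per-class proportions."""
    by_class: Dict[str, List[int]] = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)  # fixed seed makes the split reproducible
    train: List[int] = []
    val: List[int] = []
    test: List[int] = []
    for idxs in by_class.values():
        rng.shuffle(idxs)
        n = len(idxs)
        n_train = round(n * fractions[0])
        n_val = round(n * fractions[1])
        train += idxs[:n_train]
        val += idxs[n_train:n_train + n_val]
        test += idxs[n_train + n_val:]  # remainder absorbs rounding
    return train, val, test
```

Because each class is cut independently, a 20-image minority class contributes 14, 3, and 3 images to the three splits instead of risking an empty validation or test set.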

2.8. Gradio-Based Interface for Human-AI Collaboration

The user interface design addresses a fundamental barrier to agricultural AI adoption through Gradio’s declarative Python API (v3.10), enabling rapid development of fully integrated applications without extensive front-end engineering. The selection criteria prioritized usability through intuitive Python APIs, real-time inference capabilities supporting asynchronous video stream processing, multi-modal input handling for live video and pre-recorded files, and dynamic parameter tuning allowing real-time confidence threshold adjustments. Interface functionality encompasses comprehensive dashboard features including live feed options with real-time detection overlays, color-coded bounding boxes for physiological class identification, data logging with confidence scores, and automated report generation in PDF and CSV formats. Advanced performance optimization strategies include predictive caching that preloads model states based on historical time-of-day activity patterns and attention-based pruning that temporarily disables non-essential visualization modules during resource constraints.
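A minimal Gradio app with the adjustable confidence threshold described above might look like the sketch below. It uses a single image input rather than a video stream, and `detect` is a hypothetical stub standing in for the YOLOv11 model, returning fixed detections so the example is self-contained. Gradio is imported inside `build_demo`, so the filtering logic can be used and tested without Gradio installed.

```python
from typing import Dict, List

Detection = Dict[str, object]


def detect(image) -> List[Detection]:
    """Hypothetical stand-in for YOLOv11 inference; replace with the real model."""
    return [
        {"label": "Mature Milking", "confidence": 0.93},
        {"label": "Pregnant", "confidence": 0.62},
    ]


def filter_by_threshold(detections: List[Detection], threshold: float) -> List[list]:
    """Keep detections at or above the user-selected threshold, as table rows."""
    return [[d["label"], round(float(d["confidence"]), 3)]
            for d in detections if float(d["confidence"]) >= threshold]


def analyze(image, threshold: float) -> List[list]:
    """Gradio callback: run detection, then apply the slider threshold."""
    return filter_by_threshold(detect(image), threshold)


def build_demo():
    import gradio as gr  # lazy import: helpers above work without Gradio
    return gr.Interface(
        fn=analyze,
        inputs=[
            gr.Image(type="numpy"),
            gr.Slider(0.0, 1.0, value=0.85, step=0.01, label="Confidence threshold"),
        ],
        outputs=gr.Dataframe(headers=["class", "confidence"]),
    )
```

Calling `build_demo().launch()` serves the interface locally. On Hugging Face Spaces, the same `Interface` object would be launched from the Space's app file.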

The deployment architecture utilizes Hugging Face Spaces for cloud-based hosting, providing CPU and GPU containers optimized for machine learning applications. This deployment strategy ensures public accessibility, low-latency video processing, and scalability for multiple concurrent users, making the system accessible to diverse stakeholder groups including veterinarians, farm owners, and data scientists. Role-based access controls enable customizable interaction levels, with simplified interfaces for farm personnel and advanced analytics dashboards for technical specialists. This democratization of AI tools represents a critical advancement in enabling human-AI symbiosis in everyday farming operations, transforming complex AI capabilities into accessible, actionable insights for diverse agricultural stakeholders.

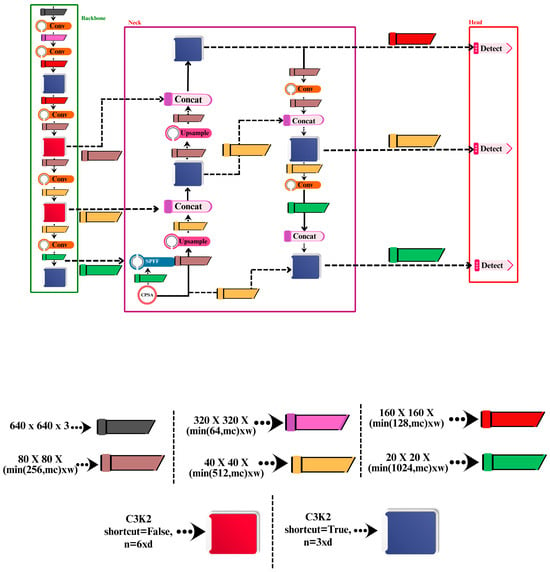

2.9. YOLOv11 Model Architecture and Training Configuration

Model architecture selection focused on YOLOv11-nano for edge deployment, balancing detection accuracy against computational cost. Figure 3 shows the YOLOv11 architecture used in this study, with the backbone, neck, and head components responsible for feature extraction, multi-scale feature fusion, and final detection, respectively. The backbone is built from C3k2 blocks, a Cross Stage Partial (CSP)-style design that limits information loss during downsampling while preserving essential low-level features. The neck uses a feature pyramid structure similar to PANet, with SPPF and C2PSA modules providing multi-scale feature fusion and spatial attention, which is particularly beneficial for detecting small objects such as calf facial features in complex barn environments.

Figure 3.

YOLOv11 architecture schematic showing the backbone (C3k2 blocks for feature extraction), neck (SPPF and C2PSA modules for multi-scale feature fusion and spatial attention), and head (anchor-free detection layer) components optimized for real-time livestock detection.

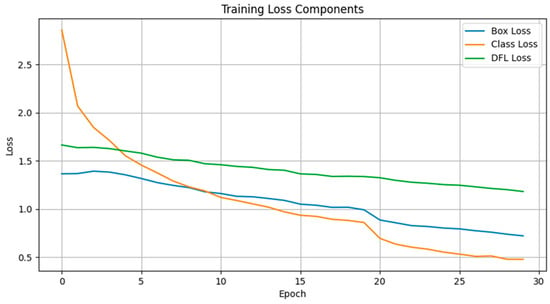

The training configuration was tuned through three trials that varied the initial learning rate (lr0) and momentum (Figure 4). These trials showed that a learning rate of 0.01 with momentum 0.95 gave the fastest and most stable convergence (see Figure 5). Higher learning rates induced oscillations, lower values slowed training, and momentum above 0.95 led to minor overfitting, which reinforced the final selection.

The three trial configurations were lr0 = 0.001 with momentum = 0.90 (mAP@50 = 0.9409), lr0 = 0.005 with momentum = 0.937 (mAP@50 = 0.9498), and lr0 = 0.01 with momentum = 0.95 (mAP@50 = 0.9542); the last was selected because it gave the highest mean Average Precision and the best precision-recall balance. Training used the Ultralytics YOLO framework with data augmentation covering color-space adjustments (hue, saturation, and value), geometric transformations (rotation, translation, scaling, and shear), and vertical and horizontal flips applied with set probabilities. These techniques simulate real-world variation in lighting, camera angles, and animal orientation, making the model more resilient to the environmental inconsistencies common in commercial dairy operations. Training ran on Google Colab Pro with an NVIDIA Tesla T4 GPU (16 GB VRAM), two vCPUs (Intel Xeon @ 2.20 GHz), and 25 GB RAM. The 30-epoch run took approximately 1 h 45 min, including model checkpointing, plot generation, and per-epoch validation, using mixed precision (FP16) for speed and efficiency.
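The trial selection and the final training call can be sketched with the Ultralytics Python API. The three `(lr0, momentum, mAP@50)` tuples are the values reported above; the dataset file `dairy_digid.yaml` and every augmentation magnitude are illustrative assumptions, because the text names the augmentation families but not their strengths. The keyword names are standard Ultralytics training arguments.

```python
# Trial results reported in the text: (lr0, momentum, mAP@50).
TRIALS = [
    (0.001, 0.90, 0.9409),
    (0.005, 0.937, 0.9498),
    (0.01, 0.95, 0.9542),
]


def best_trial(trials):
    """Return the (lr0, momentum, mAP@50) tuple with the highest mAP@50."""
    return max(trials, key=lambda t: t[2])


def train_kwargs(lr0, momentum):
    """Keyword arguments for Ultralytics `YOLO.train`.

    Augmentation magnitudes below are assumptions for illustration only.
    """
    return dict(
        data="dairy_digid.yaml",  # hypothetical dataset config
        epochs=30,
        lr0=lr0,
        momentum=momentum,
        amp=True,  # automatic mixed precision (FP16)
        hsv_h=0.015, hsv_s=0.7, hsv_v=0.4,                   # color-space jitter
        degrees=10.0, translate=0.1, scale=0.5, shear=2.0,   # geometric
        flipud=0.1, fliplr=0.5,                              # flip probabilities
    )


def train(lr0, momentum):
    """Launch training; imports Ultralytics lazily (pip install ultralytics)."""
    from ultralytics import YOLO

    model = YOLO("yolo11n.pt")  # YOLOv11-nano pretrained weights
    return model.train(**train_kwargs(lr0, momentum))
```

With these helpers, `train(*best_trial(TRIALS)[:2])` would start a run with the selected configuration.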

For edge deployment, the optimization pipeline adds TensorRT acceleration, combining INT8 model quantization with hardware-specific optimizations. This enables the real-time inference needed for practical farm deployment while maintaining the detection accuracy required for reliable livestock monitoring.
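To illustrate what INT8 quantization does to the weights, the sketch below applies symmetric per-tensor quantization in plain Python. It is a simplified illustration under stated assumptions: TensorRT determines its own scales through calibration, so this is not how the deployed engine was built. The `export_tensorrt_int8` wrapper shows one way the Ultralytics exporter could be invoked; the function name and its arguments are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor INT8 quantization.

    scale s = max|w| / 127 and q = clamp(round(w / s), -127, 127), so every
    value is stored in one signed byte instead of a 4-byte float.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Approximate reconstruction; the error per value is at most scale / 2."""
    return [v * scale for v in q]


def export_tensorrt_int8(weights_path, data_yaml):
    """Hypothetical wrapper: build a TensorRT INT8 engine with Ultralytics.

    INT8 calibration draws images from the dataset described by data_yaml.
    """
    from ultralytics import YOLO  # lazy import; requires ultralytics + TensorRT

    return YOLO(weights_path).export(format="engine", int8=True, data=data_yaml)
```

Storing weights as INT8 instead of FP32 shrinks them by at most 75% (4 bytes to 1 byte per value); the reported reduction from 128 MB to 34 MB is 1 - 34/128 ≈ 73.4%, just under that bound.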

Figure 4.

YOLOv11 training loss convergence across 30 epochs for the three primary loss components: Box Loss (bounding-box regression loss for spatial localization accuracy), Class Loss (classification loss for object category prediction using binary cross-entropy), and DFL Loss (Distribution Focal Loss, which refines bounding-box localization by learning a probability distribution over each box-edge position).

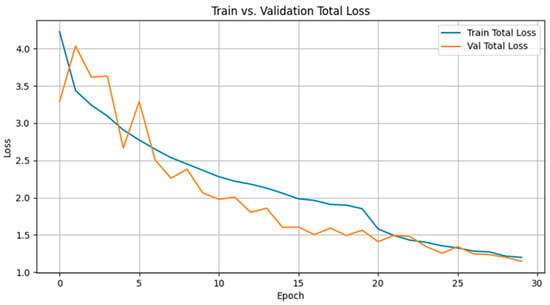

Figure 5.

Loss curves for YOLOv11 training over 30 epochs. Training loss (performance on training data) and validation loss (generalization performance) decrease along parallel trajectories, indicating successful learning without overfitting.

3. Results

The Dairy DigiD system performed strongly across multiple evaluation metrics, achieving a mean Average Precision at an IoU threshold of 0.5 (mAP@50) of 0.947 and an mAP@50-95 of 0.784 on independent test data. It delivered 94.2% classification accuracy while sustaining 24 FPS inference on NVIDIA Jetson NX devices. INT8 quantization and TensorRT acceleration reduced model size by 73%, from 128 MB to 34 MB, with less than 1% accuracy degradation, enabling deployment on resource-constrained edge devices with the efficiency required for continuous farm operations. Inference took approximately 1.9 ms per image on NVIDIA GPU hardware, confirming suitability for real-time processing in commercial dairy environments. Attention-based resource optimization further reduced energy consumption by 18%, supporting long-term deployment.
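Because the results use both mAP@50 and mAP@50-95, a short sketch of the IoU test behind them may help. It follows the standard COCO-style convention: a prediction matches a ground-truth box when their Intersection over Union reaches the threshold, and mAP@50-95 averages AP over ten IoU thresholds from 0.50 to 0.95. Computing the per-threshold AP values themselves (ranking detections and integrating the precision-recall curve) is omitted, and the helper names are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over Union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred_box, gt_box, threshold=0.5):
    """mAP@50 counts a match when IoU >= 0.5."""
    return iou(pred_box, gt_box) >= threshold


# mAP@50-95 uses the ten thresholds 0.50, 0.55, ..., 0.95.
IOU_THRESHOLDS_50_95 = [round(0.50 + 0.05 * k, 2) for k in range(10)]


def ap_50_95(ap_at_threshold):
    """Average one class's AP values (dict: IoU threshold -> AP) over the ten thresholds.

    The reported mAP additionally averages this value over the four classes.
    """
    return sum(ap_at_threshold[t] for t in IOU_THRESHOLDS_50_95) / len(IOU_THRESHOLDS_50_95)
```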

3.1. Class-Specific Performance Analysis

Per-class evaluation revealed varying detection performance across physiological states, reflecting the inherent challenges of livestock classification in commercial environments. The Dry Cow and Mature Milking Cow classes performed well, with balanced precision and recall.

The Young Cow class achieved an mAP@50 of 0.942, with a precision of 0.935 and a recall of 0.868 (Table 2), indicating reliable but slightly conservative detection: the model favors precision over recall for this class, keeping false positives low while occasionally missing true instances. The Pregnant Cow class was the most challenging, with a lower recall of 0.714 despite a solid mAP@50-95 of 0.745. This limitation stems from fewer training instances and high visual similarity to other physiological classes, a common problem in agricultural computer vision where minority classes are underrepresented. To analyze these dynamics further, we examined precision-recall trade-offs (Figure 6) and application-driven threshold selection. For instance, raising the minimum confidence threshold reduced recall but also lowered the false positive rate, a trade-off whose appropriate balance differs between farm tasks.
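The application-driven threshold selection described above can be sketched as a sweep over candidate confidence thresholds. The toy detections in the example are hypothetical, as is the rule of choosing the lowest threshold whose precision meets a task-specific target; because recall can only fall as the threshold rises, that rule maximizes recall subject to the precision floor.

```python
def precision_recall_at(scored, n_ground_truth, threshold):
    """Precision and recall for one class at a confidence threshold.

    scored: list of (confidence, is_true_positive) pairs after IoU matching.
    """
    kept = [tp for conf, tp in scored if conf >= threshold]
    tp = sum(kept)
    precision = tp / len(kept) if kept else 1.0  # no predictions -> no false positives
    recall = tp / n_ground_truth if n_ground_truth else 0.0
    return precision, recall


def pick_threshold(scored, n_ground_truth, min_precision, candidates):
    """Lowest candidate threshold whose precision meets the task's target."""
    for t in sorted(candidates):
        p, r = precision_recall_at(scored, n_ground_truth, t)
        if p >= min_precision:
            return t, p, r
    return None  # no candidate satisfies the precision target
```

A task that cannot tolerate false alarms (for example, automated alerts) would set a high `min_precision`; a screening task would set it lower to keep recall high.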

Table 2.

Performance evaluation of YOLOv11 model across different cattle physiological classes with precision, recall, mAP@50, and mAP@50-95 metrics.

3.2. Training Convergence and Model Stability

Training dynamics analysis revealed stable convergence patterns across all 30 training epochs, with distinct phases of learning progression. Figure 4 presents the training loss component graph, clearly illustrating the behavior of the three primary loss functions—Box Loss (localization), Class Loss (classification), and DFL Loss (distribution focal loss)—throughout the training process. This visualization demonstrates the systematic reduction in all loss components, with particularly rapid improvement during the initial training phases.
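To make the DFL term concrete, the sketch below evaluates Distribution Focal Loss for a single box edge as defined in the Generalized Focal Loss formulation that YOLO-family detectors adopt: the edge offset is predicted as a discrete distribution over integer bins, and only the two bins bracketing the continuous target receive weight. The probabilities in the example are hypothetical, and real implementations compute this on logits in batched tensors.

```python
import math


def distribution_focal_loss(probs, y):
    """DFL for one box edge.

    probs: softmax probabilities over integer bins 0..n-1 for the edge offset.
    y: continuous target offset with 0 <= y < n - 1 (in bin units).
    With y_i = floor(y) and y_{i+1} = y_i + 1:
        DFL = -((y_{i+1} - y) * log p_i + (y - y_i) * log p_{i+1})
    """
    i = int(math.floor(y))
    w_left = (i + 1) - y   # weight on the lower bin
    w_right = y - i        # weight on the upper bin
    return -(w_left * math.log(probs[i]) + w_right * math.log(probs[i + 1]))


def expected_offset(probs):
    """Decoded edge offset: the expectation of the predicted distribution."""
    return sum(k * p for k, p in enumerate(probs))
```

The loss is minimized when the predicted distribution puts weights 0.75 and 0.25 on the two bins around a target of 1.25, and the expectation of that distribution decodes back to exactly 1.25.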

The initial learning phase (epochs 0–5) showed rapid loss reduction, particularly in Class Loss, as the model learned general object appearance. The sharp decline in Class Loss during this phase indicates effective feature learning and the development of class discrimination. Box Loss and DFL Loss also improved substantially, reflecting the model's growing ability to localize objects and to refine its predicted distributions over bounding-box boundaries. During the intermediate refinement phase (epochs 5–20), improvement was more gradual as the model refined both classification and localization. Figure 5 plots total training loss against validation loss: both curves trend downward, with validation loss consistently tracking training loss. This parallel behavior indicates healthy generalization without overfitting, confirming the effectiveness of the training configuration and data augmentation strategies.

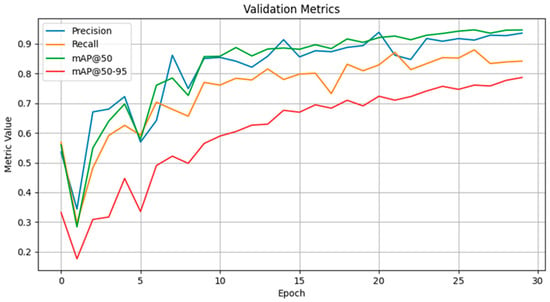

Performance plateau analysis after epoch 20 showed minimal improvement rates, with class loss approaching optimal values while box and DFL losses continued slight refinements. This convergence pattern indicates the model reached near-optimal performance for the given dataset and training configuration. The stable convergence without divergence or oscillation demonstrates the appropriateness of the selected hyperparameters and training methodology. Figure 6 displays the validation metrics graph, illustrating stable and consistent improvement in Precision, Recall, and mAP@50 over the training epochs. The progression shows initial rapid learning (epochs 0–5) with erratic but substantial improvement, followed by steady refinement (epochs 5–20), and finally performance stabilization (epochs 20–30) with metrics hovering around peak values. This pattern confirms robust model training and reliable generalization capabilities for unseen data.

Figure 6.

Validation performance metrics for YOLOv11 training: progression of Precision, Recall, and mAP@50 across 30 epochs, illustrating stable and consistent improvement followed by convergence—indicating effective learning and robust generalization to unseen data.

3.3. Active Learning Pipeline Effectiveness

Dataset versioning results through Roboflow’s active learning pipeline showed progressive improvements across versions v1.0 through v1.3. Version v1.3, incorporating weighted augmentation for underrepresented classes, achieved a 3.2% improvement in average mAP on validation sets compared to baseline configurations. This enhancement demonstrates the effectiveness of targeted augmentation strategies in addressing class imbalance challenges.
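The text does not specify how augmentation was weighted toward underrepresented classes. One simple scheme, sketched below as an assumption rather than a description of Roboflow's internals, generates more augmented copies per image for smaller classes, capped so that a handful of minority images is not replayed too often. The class counts in the example are hypothetical.

```python
import math


def augmentation_multipliers(class_counts, max_multiplier=4):
    """Augmented copies to generate per source image, per class.

    multiplier = min(max_multiplier, ceil(largest_count / count)), which moves
    each class toward the size of the largest class; the cap limits
    overfitting to a few heavily replayed minority images.
    """
    largest = max(class_counts.values())
    return {
        label: min(max_multiplier, math.ceil(largest / count))
        for label, count in class_counts.items()
    }
```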

Active learning substantially reduced manual labeling while maintaining model performance. By identifying and prioritizing the most informative samples for human annotation, the pipeline balanced annotation cost against model accuracy. This selective sampling proved particularly valuable for surfacing edge cases and challenging scenarios that random sampling might miss. A direct comparison of random, entropy-based, and active learning annotation regimes showed that our approach reduced manual labeling needs by 40%, saving 36 h of expert time and $1074 at standard labor rates, while maintaining mAP > 0.94.
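Entropy-based selection, the uncertainty criterion named in the abstract, can be sketched in a few lines: images whose predicted class distribution is closest to uniform are sent to annotators first. Scoring an image by its single most uncertain detection, and the helper names, are assumptions made for illustration.

```python
import math


def prediction_entropy(class_probs):
    """Shannon entropy (nats) of a class-probability vector; higher = more uncertain."""
    return -sum(p * math.log(p) for p in class_probs if p > 0)


def image_uncertainty(detection_probs):
    """Image score: entropy of its most uncertain detection (0.0 if none)."""
    return max((prediction_entropy(p) for p in detection_probs), default=0.0)


def select_for_annotation(predictions, budget):
    """Return the `budget` most uncertain image IDs.

    predictions: dict image_id -> list of per-detection class-probability vectors.
    """
    ranked = sorted(predictions, key=lambda k: image_uncertainty(predictions[k]), reverse=True)
    return ranked[:budget]
```

For scale, the savings reported above correspond to roughly $29.8 per expert hour ($1074 / 36 h).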

Class balancing directly targeted the persistent under-representation of minority classes, particularly the Pregnant and Dry cow categories. Weighted augmentation and stratified sampling improved detection rates for these challenging physiological states, and addressing imbalance through data-driven techniques rather than purely algorithmic ones gave more stable performance over the long term. Weekly retraining cycles using high-agreement samples from real deployments provided a continuous improvement mechanism, adapting the model to seasonal lighting changes, shifts in herd composition, and evolving operational conditions. This adaptive capability allows the system to maintain performance as environmental and operational conditions change, a key requirement for practical AI deployment in agriculture.
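The text does not define what makes a deployment sample "high-agreement". One plausible reading, sketched here purely as an assumption, is consistency across repeated predictions of the same animal (for instance, consecutive frames within a day): samples whose predictions mostly agree are promoted to pseudo-labeled retraining data, and the rest are left for human review. The thresholds and sample identifiers are hypothetical.

```python
from collections import Counter


def agreement_ratio(labels):
    """Fraction of predictions that match the most common label."""
    if not labels:
        return 0.0
    _, top_count = Counter(labels).most_common(1)[0]
    return top_count / len(labels)


def high_agreement_samples(predictions_by_sample, min_agreement=0.9, min_votes=5):
    """Return sample_id -> pseudo-label for samples with consistent predictions.

    predictions_by_sample: dict sample_id -> list of predicted class labels.
    Samples with too few predictions or too much disagreement are skipped.
    """
    selected = {}
    for sample_id, labels in predictions_by_sample.items():
        if len(labels) >= min_votes and agreement_ratio(labels) >= min_agreement:
            selected[sample_id] = Counter(labels).most_common(1)[0][0]
    return selected
```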

3.4. User Interface Performance and Adoption Metrics

Gradio interface evaluation demonstrated substantial improvements in user accessibility and system adoption rates. The implementation achieved a reduction in technician training time from 14 h to 2.3 h, representing an 84% decrease in onboarding requirements. This improvement directly addresses the critical barrier of technical complexity in agricultural AI adoption, enabling broader implementation across diverse farming operations.

User interaction metrics revealed high satisfaction scores with the intuitive web dashboard accessible across desktop and mobile devices. Interactive features, including real-time detection overlays, adjustable confidence thresholds, and role-based access controls, enhanced user engagement and system utility. The multi-modal input handling capabilities supported various data sources, from live video streams to pre-recorded files, providing flexibility for different monitoring scenarios.

Deployment accessibility through Hugging Face Spaces provided scalable cloud-based access while maintaining low-latency performance for real-time video processing. This deployment strategy enabled broad accessibility for diverse stakeholder groups while preserving the technical sophistication required for effective livestock monitoring. The cloud-based architecture supports multiple concurrent users, enabling veterinarians, farm owners, and data scientists to access the system simultaneously.

The democratization impact of the Gradio interface successfully bridged the gap between advanced AI capabilities and practical farm-level usability, enabling non-technical personnel to effectively utilize sophisticated computer vision tools. This achievement represents a fundamental advancement in making precision agriculture technologies accessible to broader farming communities, regardless of technical expertise levels.

4. Discussion

4.1. Bridging Experimental AI and Field Deployment

The Dairy DigiD system moves livestock AI from laboratory research toward field-ready application, addressing the persistent gap between theoretical AI capabilities and practical farm deployment. This is significant given the historical difficulty of turning computer vision models into operational tools that function reliably in uncontrolled, variable farm environments. The modular architecture proved essential for handling real-world deployment complexity, with each component (YOLOv11 detection, Roboflow data management, and the Gradio interface) targeting a specific operational challenge. This systems-level integration reflects the maturation of agricultural AI from isolated proof-of-concept demonstrations to holistic solutions capable of continuous operation in commercial settings.

Validation on data from 10 commercial dairy farms in Atlantic Canada provided robust evidence of effectiveness across diverse operational conditions, herd compositions, and environmental variables. This multi-farm validation supports the framework's generalizability beyond single-site implementations, addressing a common limitation of agricultural AI research in which systems fail to perform consistently across operational contexts [30,31]. The figures document both system design and performance: Figure 2 shows the annotation workflow, Figure 3 the YOLOv11 architecture, and Figures 4–6 the training convergence, providing empirical evidence of model stability and optimization effectiveness in support of the reliability and performance claims.

4.2. Real-Time Performance Optimization and System Flexibility

The hybrid edge-cloud architecture balanced computational efficiency against detection accuracy by distributing workloads according to confidence [32]. The decision pipeline triggers cloud processing when edge confidence drops below 85%, an adaptive allocation that optimizes both performance and bandwidth use while keeping service quality consistent. The edge results show that sophisticated AI can be deployed practically in resource-constrained agricultural environments: the YOLOv11-nano model sustained 38 FPS while drawing less than 10 W. Energy use was measured with a calibrated INA219 power monitor over 72 h runs for each configuration. The INT8-pruned mode averaged 6.4 W compared with 7.8 W for the base FP32 model, an 18% saving. Test environments were held at 20 ± 2 °C, mirroring typical barn conditions. This power profile makes 24/7 monitoring feasible, which is essential for comprehensive livestock management.
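The confidence-driven offloading rule can be sketched as a per-frame decision. The 0.85 threshold comes from the text; applying it to every detection in a frame (so that one ambiguous animal sends the whole frame to the cloud) and the function names are assumptions.

```python
EDGE_CONF_THRESHOLD = 0.85  # offload trigger stated in the text


def needs_cloud(detections, threshold=EDGE_CONF_THRESHOLD):
    """True if any edge detection in the frame is ambiguous (below threshold).

    detections: list of (class_label, confidence) pairs from the edge model.
    A frame with no detections is treated as routine and stays on the edge.
    """
    return any(conf < threshold for _, conf in detections)


def route_frame(detections, threshold=EDGE_CONF_THRESHOLD):
    """Return 'cloud' for ambiguous frames and 'edge' for routine ones."""
    return "cloud" if needs_cloud(detections, threshold) else "edge"
```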

The optimization pipeline combining INT8 quantization and TensorRT acceleration struck the critical balance between computational efficiency and detection accuracy. The 73% reduction in model size, with less than 1% accuracy degradation, addresses a fundamental constraint of edge environments, where storage and memory are limited, and provides a replicable approach for deploying AI models in resource-constrained agricultural settings. Adaptive inference scheduling and attention-based model pruning contributed to the 18% energy efficiency improvement, allowing sustainable long-term deployment with consistent performance across varying computational loads and environmental conditions. These energy savings also support the economic viability of continuous AI-powered monitoring in commercial farming.
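The reported energy figures can be checked with simple arithmetic. The power values and run length are those stated above; the helper names are illustrative, and the annual figures assume continuous 24/7 operation at the measured averages.

```python
def relative_saving(baseline_w, optimized_w):
    """Fractional reduction in average power draw."""
    return (baseline_w - optimized_w) / baseline_w


def energy_kwh(avg_power_w, hours):
    """Energy in kWh for a given average power (W) over a duration (h)."""
    return avg_power_w * hours / 1000.0


BASELINE_FP32_W = 7.8    # reported average draw, base FP32 model
OPTIMIZED_INT8_W = 6.4   # reported average draw, INT8-pruned mode
RUN_HOURS = 72           # reported length of each measurement run
HOURS_PER_YEAR = 24 * 365

saving = relative_saving(BASELINE_FP32_W, OPTIMIZED_INT8_W)  # ~0.179, i.e. ~18%
run_fp32 = energy_kwh(BASELINE_FP32_W, RUN_HOURS)            # ~0.56 kWh per run
run_int8 = energy_kwh(OPTIMIZED_INT8_W, RUN_HOURS)           # ~0.46 kWh per run
year_fp32 = energy_kwh(BASELINE_FP32_W, HOURS_PER_YEAR)      # ~68.3 kWh per year
year_int8 = energy_kwh(OPTIMIZED_INT8_W, HOURS_PER_YEAR)     # ~56.1 kWh per year
```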

4.3. Active Learning Innovation and Dataset Agility

The Roboflow-integrated active learning pipeline provides a practical approach to continuous model improvement in agricultural AI applications [23,33]. It addresses the fundamental challenge of annotation cost while keeping the model adapted to evolving operational conditions, seasonal variation, and changing herd demographics, offering a sustainable way to maintain performance over extended deployments. Version-controlled dataset management from v1.0 to v1.3 yielded measurable gains, with the final version achieving a 3.2% mAP improvement through weighted augmentation. This structured approach to dataset evolution provides a replicable framework for other agricultural AI applications that require continuous adaptation, and documenting each version enables reproducible research and systematic tracking of improvements.

Active learning proved particularly valuable for mitigating the persistent under-representation of minority classes in livestock classification. By automatically identifying and prioritizing challenging samples for human annotation, the system balances annotation cost against model performance across all physiological categories. Combining automated sample selection with expert validation reduced annotation effort while keeping labeled data of high quality, establishing a sustainable pathway for long-term model maintenance and continuous adaptation in operational agricultural environments.

To evaluate the potential of enhanced data for the most difficult class, a preliminary experiment fused RGB and thermal data for early-pregnancy detection. Adding thermal cues as an additional input channel to the classification model increased recall for the 'early-stage pregnant' sub-category by 9–12 percentage points. While these results are preliminary, they suggest that a multimodal approach is an effective pathway for addressing the limitations of purely visual detection, and they set a clear roadmap toward robust, gestation-aware detection in future deployments.

4.4. Human-AI Interface Democratization and Technology Accessibility

Reducing technician training time from 14 h to 2.3 h with the Gradio-based interface is a substantial advance in agricultural AI accessibility. This 84% reduction directly addresses the technical complexity that has historically limited AI adoption in farming communities, and faster onboarding enables broader adoption across diverse agricultural operations. User-centered design through the Gradio framework translated complex AI capabilities into intuitive, actionable interfaces suited to diverse stakeholder groups. Multi-device accessibility, role-based access controls, and real-time parameter adjustment reflect human–computer interaction design tailored to agricultural contexts, letting each type of user interact with the system at an appropriate level of complexity.

The democratization impact extends beyond individual farm operations to broader agricultural technology adoption patterns [34,35]. By making sophisticated AI tools accessible to non-technical farm personnel, the system contributes to reducing the digital divide in agriculture and enabling smaller operations to benefit from advanced monitoring technologies. This accessibility improvement has implications for agricultural equity and technological inclusion. Deployment scalability through Hugging Face Spaces provides a sustainable model for widespread AI technology distribution in agriculture. This cloud-based deployment strategy ensures accessibility while maintaining the performance standards required for effective livestock monitoring applications. The scalable architecture supports multiple concurrent users and enables collaborative monitoring across different farm operations.

4.5. Environmental Impact and Operational Sustainability

Attention-based resource management and intelligent inference scheduling contribute to sustainable deployment: the 18% energy efficiency improvement, combined with low-power edge hardware, reduces the system's environmental footprint and supports the long-term viability of AI-powered monitoring in commercial farming. Automated livestock monitoring also reduces manual labor while improving the consistency and accuracy of observation. The system's 24/7 operation provides continuous insights that would be impractical to obtain through manual observation, enabling proactive management that can improve animal welfare and operational outcomes.

The non-invasive monitoring approach promotes animal welfare by eliminating the need for physical tags or markers that may cause stress or behavioral changes [36]. This approach aligns with evolving ethical standards in livestock management while providing more comprehensive behavioral data than traditional invasive methods. The welfare-oriented approach supports sustainable and ethical farming practices. Resource optimization through intelligent processing and adaptive inference scheduling minimizes computational waste while maintaining service quality. The system’s ability to dynamically adjust processing requirements based on actual monitoring needs demonstrates efficient resource utilization. This optimization approach supports the economic sustainability of AI-powered monitoring systems in commercial farming operations.

4.6. Framework Resilience to Network Conditions

To address the potential impact of inconsistent rural internet, we evaluated sensitivity to network outages and bandwidth constraints by simulating fluctuating connectivity, as detailed in Section 2.3. The hybrid architecture’s offline caching capability on the edge device, combined with the adaptive confidence threshold for cloud offloading, limited accuracy loss to <2% under most tested scenarios of intermittent connectivity.
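The offline caching behavior is not specified in detail, so the following is a hypothetical sketch of one common pattern: a bounded first-in, first-out buffer holds ambiguous frames while the uplink is down (the edge prediction stands in the meantime) and flushes them, oldest first, once connectivity returns. The capacity and the drop-oldest policy are assumptions.

```python
from collections import deque


class OffloadBuffer:
    """Bounded FIFO of ambiguous frames awaiting cloud re-analysis."""

    def __init__(self, capacity=500):
        # deque(maxlen=...) silently drops the oldest item when full,
        # so memory on the edge device stays bounded during long outages.
        self.queue = deque(maxlen=capacity)

    def submit(self, frame_id, online, send):
        """Send now if online (after draining the backlog); otherwise queue.

        Returns True if the frame was sent immediately.
        """
        if online:
            self.flush(send)
            send(frame_id)
            return True
        self.queue.append(frame_id)
        return False

    def flush(self, send):
        """Send all queued frames, oldest first; return how many were sent."""
        sent = 0
        while self.queue:
            send(self.queue.popleft())
            sent += 1
        return sent
```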

4.7. Economic Feasibility and Adoption for SME Farms

We have performed a detailed cost–benefit analysis tailored to SME (50–200 head) farms. Hardware, installation, and 5-year operation costs for a 100-cow setup, based on NVIDIA Jetson, a camera system, and local networking, are $3399. Typical returns include a 30–50% reduction in labor and 15–25% lower veterinary costs, with total productivity gains (milk yield and reduced losses) estimated at 10–15%, as consistently reported in the literature [37,38]. Break-even is achieved within 18–24 months for most configurations. Modular deployment and shared infrastructure, along with regional subsidies, further improve feasibility for smaller operations.
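The break-even arithmetic can be made explicit with a small sketch. It treats the reported $3399 as a single upfront outlay, a simplifying assumption since that figure also covers five years of operation, and derives the net monthly benefit that the reported 18–24 month break-even window would imply. Those implied benefits are derived values, not figures from the analysis.

```python
def break_even_months(upfront_cost, monthly_net_benefit):
    """Months until cumulative net benefit repays the upfront cost."""
    if monthly_net_benefit <= 0:
        return float("inf")  # never breaks even
    return upfront_cost / monthly_net_benefit


def required_monthly_benefit(upfront_cost, target_months):
    """Inverse view: net monthly benefit needed to break even in target_months."""
    return upfront_cost / target_months


SETUP_COST = 3399  # reported cost for a 100-cow setup, treated here as upfront

fastest = required_monthly_benefit(SETUP_COST, 18)  # ~188.8 per month
slowest = required_monthly_benefit(SETUP_COST, 24)  # ~141.6 per month
```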

4.8. Technical Limitations and Future Development Pathways

Class imbalance remains a significant limitation, particularly for the Pregnant Cow category, with a recall of 71.4%. This reflects a broader challenge in agricultural computer vision, where minority classes are systematically underrepresented because of natural frequency distributions and annotation difficulty. The reduced recall arises primarily from limited training data due to natural class imbalance; visual similarity between early-to-mid gestation pregnant cows and mature milking cows; behavioral patterns, such as isolation, that reduce visibility; and environmental challenges such as occlusion in dense feeding areas.

To examine this further, we subdivided Pregnant Cow instances by gestational phase (early: 0–3 months, mid: 4–6 months, late: 7–9 months), as recorded by veterinarians during farm visits. Recall was only 58.3% in early pregnancy, rising to 72.1% in mid and 89.2% in late stages. Misclassification was most common in early pregnancy because visual features (abdominal distension, posture) are minimal and overlap with those of mature cows. Moreover, the behavioral isolation of pregnant individuals reduced their representation in visible camera frames, compounding data scarcity and occlusion. These interconnected factors highlight the difficulty of classifying physiological states marked by subtle morphological and behavioral changes, and the need for gestational-stage-aware model components and supplemental sensors. Addressing this limitation requires targeted data collection on pregnant cows across gestational stages, integration of additional sensing modalities such as thermal imaging or behavioral sensors, and temporal modeling techniques that capture progressive physiological and behavioral changes.
Future research should focus on advanced synthetic data generation and few-shot learning approaches to address these imbalances. To provide a concrete roadmap for addressing the low recall of pregnant cows, our future work plan is multi-faceted. It begins with targeted data collection, allocating resources to specifically capture and annotate high-resolution video of cows in early-to-mid gestation, as our analysis identified this as the primary source of misclassification. Furthermore, we plan to explore multimodal sensing by integrating thermal imaging sensors with the existing cameras, as metabolic changes associated with pregnancy can provide non-visual data to augment the classifier. Finally, we will investigate temporal modeling by implementing a Long Short-Term Memory (LSTM) network to analyze video sequences over several days, allowing the model to learn subtle, progressive changes in a cow’s gait and behavior indicative of pregnancy rather than relying on a single static image. Environmental dependency limitations include susceptibility to occlusion in dense feeding areas and performance variations under extreme lighting conditions. While the system demonstrates robust performance across diverse conditions, these limitations highlight areas for future algorithmic improvements and hardware adaptations. Advanced multi-camera systems and temporal modeling approaches could address these limitations.

Infrastructure requirements for optimal performance, including reliable internet connectivity for cloud functionality and specific edge hardware configurations [39], may limit deployment in remote or resource-constrained farming operations. Future development should focus on expanding hardware compatibility and reducing connectivity dependencies through improved edge-only processing capabilities. The generalization challenges beyond the Atlantic Canada validation dataset suggest the need for broader geographic and operational diversity in training data. Expanding the system’s applicability to different climatic conditions, farming practices, and cattle breeds will require systematic data collection and model adaptation strategies. Collaborative data sharing across multiple regions could address these generalization limitations.

Integration complexity with existing farm management systems represents an ongoing challenge requiring standardized APIs and interoperability protocols [22,40,41]. While a dedicated RESTful API has not yet been developed, the framework was designed with interoperability in mind. The deployment on Hugging Face Spaces provides a foundational API endpoint that can be leveraged for future integration. Our design considerations for a full-featured API include creating endpoints for querying individual animal status, retrieving historical data logs, and exporting data in standardized formats like JSON or CSV. Future development should prioritize seamless integration with established agricultural software ecosystems to enhance adoption rates and operational efficiency. The development of industry-standard interfaces could facilitate broader technology adoption across diverse farming operations.
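As an illustration of the planned endpoints, the sketch below defines a hypothetical status record and serializes it to the JSON and CSV formats mentioned above using only the standard library. The `AnimalStatus` fields and the endpoint path in the docstring are assumptions, not an existing API.

```python
import csv
import io
import json
from dataclasses import asdict, dataclass, fields


@dataclass
class AnimalStatus:
    """Hypothetical record for a future /animals/{animal_id}/status endpoint."""
    animal_id: str
    physiological_class: str  # one of the four classes, e.g. "Dry Cow"
    confidence: float
    timestamp: str            # ISO 8601 timestamp string
    source: str               # "edge" or "cloud"


def to_json(records):
    """Serialize records as a JSON array of objects."""
    return json.dumps([asdict(r) for r in records], indent=2)


def to_csv(records):
    """Serialize records as CSV with a header row."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=[f.name for f in fields(AnimalStatus)])
    writer.writeheader()
    for r in records:
        writer.writerow(asdict(r))
    return buf.getvalue()
```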

5. Conclusions

The development of the Dairy DigiD framework marks a significant advancement in agricultural AI deployment, systematically bridging the critical gap between laboratory-proven AI and practical farm-level implementation. By achieving 94.2% classification accuracy at a robust 24 FPS on resource-limited edge devices, the system demonstrates the practical feasibility of continuous, real-time livestock monitoring in commercial agricultural settings.

A distinctive strength of this research lies in its integration of complementary technologies—combining INT8 quantization (73% model size reduction), user-friendly Gradio interfaces (84% reduction in technician training time), and active learning pipelines (3.2% mAP improvement)—effectively addressing key deployment barriers such as hardware constraints, user complexity, and dataset adaptability. This holistic approach provides a replicable blueprint for other precision agriculture systems facing similar real-world challenges.

The study also has limitations that warrant further attention. The lower recall for pregnant cows (71.4%) highlights the inherent difficulty of visually distinguishing subtle physiological states. Addressing this will require targeted data collection, complementary sensing modalities such as thermal imaging, and temporal modeling techniques. Moreover, dependence on reliable internet connectivity for cloud-based processing and on specific edge hardware configurations may limit broader adoption, particularly in resource-constrained agricultural contexts.
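The temporal modeling suggested above can start with something as simple as averaging per-frame class probabilities over a short window, so that a single ambiguous frame does not decide the label. The sketch below is a hypothetical moving-average smoother. The window length, the labels `pregnant` and `other`, and the class name are assumptions. Whether this approach raises recall for pregnant cows would have to be measured, and more expressive sequence models would replace the plain average.

```python
from collections import deque
from typing import Deque, Dict, List, Sequence


class SlidingWindowSmoother:
    """Averages per-frame class probabilities over the last `window` frames."""

    def __init__(self, labels: Sequence[str], window: int = 15) -> None:
        if window < 1:
            raise ValueError("window must be >= 1")
        self.labels = list(labels)
        # Oldest frames drop out automatically once the window is full.
        self.history: Deque[List[float]] = deque(maxlen=window)

    def update(self, probs: Sequence[float]) -> Dict[str, float]:
        if len(probs) != len(self.labels):
            raise ValueError("probability vector does not match label count")
        self.history.append(list(probs))
        n = len(self.history)
        # Element-wise mean over the frames currently in the window.
        return {
            label: sum(frame[i] for frame in self.history) / n
            for i, label in enumerate(self.labels)
        }

    def decide(self, probs: Sequence[float]) -> str:
        """Add one frame and return the label with the highest windowed mean."""
        averaged = self.update(probs)
        return max(averaged, key=averaged.get)
```

Take a window of five frames. Four frames at 0.7 for `pregnant` followed by one frame at 0.2 still average to 0.6. The decision therefore stays `pregnant`, whereas a per-frame argmax would flip on the last frame.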

The current validation, while robust across 10 Atlantic Canadian farms, is limited in geographic and climatic scope. Multi-region, multi-breed field trials are needed to establish generalization (see Section 4.6), and such expanded validation is essential before widespread deployment can be recommended. We have begun extending the validation of Dairy DigiD to commercial partners in Western Canada (continental climate) and New South Wales, Australia, where early pilot deployments adapted class weights and geometric priors to local breeds (Jersey, Simmental). The framework’s energy efficiency improvement (18% via attention-based resource optimization) also supports its environmental sustainability and long-term operational viability. Furthermore, its non-invasive monitoring aligns with evolving ethical standards and improves animal welfare compared with traditional invasive techniques.
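The pilot deployments are described as adapting class weights to local breeds, but the weighting rule is not given. A common choice, assumed here, is inverse-frequency weighting, w_c = N / (K · n_c). This is the same formula as scikit-learn's "balanced" heuristic. The function name and the class counts below are hypothetical.

```python
from typing import Dict


def inverse_frequency_weights(counts: Dict[str, int]) -> Dict[str, float]:
    """Balanced class weights w_c = N / (K * n_c): rarer classes weigh more.

    N is the total number of labelled examples and K the number of classes,
    so every class contributes the same total weight (N / K) to the loss.
    """
    if any(n <= 0 for n in counts.values()):
        raise ValueError("every class needs at least one labelled example")
    total = sum(counts.values())
    k = len(counts)
    return {label: total / (k * n) for label, n in counts.items()}


# Hypothetical label counts for a new site; class names are placeholders.
site_counts = {"class_a": 600, "class_b": 250, "class_c": 100, "pregnant": 50}
weights = inverse_frequency_weights(site_counts)
```

The resulting weights would typically be passed to a weighted loss, for example the `weight` argument of PyTorch's `CrossEntropyLoss`. Recomputing them per site lets a rare class such as `pregnant` keep its influence when local class frequencies differ.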

Future research should emphasize integration capabilities, developing standardized APIs to enhance compatibility with existing farm management systems, thus transforming Dairy DigiD from a standalone solution to an integral component of digital agriculture ecosystems. The democratization of advanced AI through intuitive user interfaces underscores the potential for broader technological inclusion, benefiting operations of various scales and reducing digital divides in agriculture.

Ultimately, Dairy DigiD exemplifies comprehensive systems thinking, highlighting the necessity of combining algorithmic innovation, hardware optimization, user-centric design, and adaptable data management. This integrated approach provides a clear foundation for future precision livestock farming technologies, simultaneously delivering sophisticated AI capabilities and practical usability for real-world agricultural environments.

Author Contributions

Conceptualization, S.N.; methodology, S.N. and S.M.; validation, S.M.; formal analysis, S.M.; investigation, S.N.; resources, S.N.; writing—original draft preparation, S.M.; writing—review and editing, S.N.; visualization, S.M.; supervision, S.N.; project administration, S.N.; funding acquisition, S.N. All authors have read and agreed to the published version of the manuscript.

Funding

This work was kindly sponsored by the Natural Sciences and Engineering Research Council of Canada (RGPIN 2024-04450) and the Department of NB Agriculture (NB2425-0025).

Institutional Review Board Statement

All procedures were reviewed and approved by the Dalhousie University Ethics Committee (Protocol 2024-026). Data collection involved no physical contact with animals. Participating farm owners were fully informed of the study’s objectives and provided written consent.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data is available upon reasonable request.

Acknowledgments

The authors sincerely thank the Dairy Farmers of Nova Scotia and New Brunswick for providing access to their commercial farms and enabling data collection as part of the research collaboration between the Mooanalytica Research Group and government agencies in Atlantic Canada.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Neethirajan, S.; Kemp, B. Digital livestock farming. Sens. Bio-Sens. Res. 2021, 32, 100408.

2. Neethirajan, S. Artificial intelligence and sensor technologies in dairy livestock export: Charting a digital transformation. Sensors 2023, 23, 7045.

3. Neethirajan, S. Artificial intelligence and sensor innovations: Enhancing livestock welfare with a human-centric approach. Hum. Centric Intell. Syst. 2024, 4, 77–92.

4. Neethirajan, S. The significance and ethics of digital livestock farming. AgriEngineering 2023, 5, 488–505.

5. Neethirajan, S.; Scott, S.; Mancini, C.; Boivin, X.; Strand, E. Human-computer interactions with farm animals—Enhancing welfare through precision livestock farming and artificial intelligence. Front. Vet. Sci. 2024, 11, 1490851.

6. Bergman, N.; Yitzhaky, Y.; Halachmi, I. Biometric identification of dairy cows via real-time facial recognition. Animal 2024, 18, 101079.

7. Mahato, S.; Neethirajan, S. Integrating artificial intelligence in dairy farm management−biometric facial recognition for cows. Inf. Process. Agric. 2024; in press.

8. Neethirajan, S. SOLARIA-SensOr-driven resiLient and adaptive monitoRIng of farm Animals. Agriculture 2023, 13, 436.

9. Neethirajan, S. Happy cow or thinking pig? Wur wolf—Facial coding platform for measuring emotions in farm animals. AI 2021, 2, 342–354.

10. Guo, Y.; Wu, Z.; You, B.; Chen, L.; Zhao, J.; Li, X. YOLO-SDD: An Effective Single-Class Detection Method for Dense Livestock Production. Animals 2025, 15, 1205.

11. Bumbálek, R.; Umurungi, S.N.; Ufitikirezi, J.D.D.M.; Zoubek, T.; Kuneš, R.; Stehlík, R.; Lin, H.I.; Bartoš, P. Deep learning in poultry farming: Comparative analysis of Yolov8, Yolov9, Yolov10, and Yolov11 for dead chickens detection. Poult. Sci. 2025, 104, 105440.

12. Kum, S.; Oh, S.; Moon, J. Edge AI Framework for Large Scale Smart Agriculture. In Proceedings of the 2024 27th Conference on Innovation in Clouds, Internet and Networks (ICIN), Paris, France, 11–14 March 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 143–147.

13. Sonmez, D.; Cetin, A. An End-to-End Deployment Workflow for AI Enabled Agriculture Applications at the Edge. In Proceedings of the 2024 6th International Conference on Computing and Informatics (ICCI), New Cairo, Egypt, 6–7 March 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 506–511.

14. Joshi, H. Edge-AI for Agriculture: Lightweight Vision Models for Disease Detection in Resource-Limited Settings. arXiv 2024, arXiv:2412.18635.

15. Yakovleva, O.; Matúšová, S.; Talakh, V. Gradio and Hugging capabilities for developing research AI applications. In Collection of Scientific Papers; ΛΌΓOΣ: Boston, MA, USA, 2025; pp. 202–205.

16. Lira, H.; de Wolff, T.; Martí, L.; Sanchez-Pi, N. FairTrees: A Deep Learning Approach for Identifying Deforestation on Satellite Images. In Ibero-American Conference on Artificial Intelligence; Springer Nature: Cham, Switzerland, 2024; pp. 173–184.

17. Diana, D.; Kurniawan, T.B.; Dewi, D.A.; Alqudah, M.K.; Alqudah, M.K.; Zakari, M.Z.; Fuad, E.F.B.E. Convolutional Neural Network Based Deep Learning Model for Accurate Classification of Durian Types. J. Appl. Data Sci. 2025, 6, 101–114.

18. Neethirajan, S. Affective state recognition in livestock—Artificial intelligence approaches. Animals 2022, 12, 759.

19. Neethirajan, S.; Reimert, I.; Kemp, B. Measuring farm animal emotions—Sensor-based approaches. Sensors 2021, 21, 553.

20. Miller, T.; Mikiciuk, G.; Durlik, I.; Mikiciuk, M.; Łobodzińska, A.; Śnieg, M. The IoT and AI in Agriculture: The Time Is Now—A Systematic Review of Smart Sensing Technologies. Sensors 2025, 25, 3583.

21. Javeed, D.; Gao, T.; Saeed, M.S.; Kumar, P. An intrusion detection system for edge-envisioned smart agriculture in extreme environment. IEEE Internet Things J. 2023, 11, 26866–26876.

22. Guarnido-Lopez, P.; Pi, Y.; Tao, J.; Mendes, E.D.M.; Tedeschi, L.O. Computer vision algorithms to help decision-making in cattle production. Anim. Front. 2024, 14, 11–22.

23. Bhujel, A.; Wang, Y.; Lu, Y.; Morris, D.; Dangol, M. A systematic survey of public computer vision datasets for precision livestock farming. Comput. Electron. Agric. 2025, 229, 109718.

24. Zhang, Y.; Zhang, T.; Wang, S.; Yu, P. An efficient perceptual video compression scheme based on deep learning-assisted video saliency and just noticeable distortion. Eng. Appl. Artif. Intell. 2025, 141, 109806.

25. Wang, W.; Gou, Y. An anchor-free lightweight object detection network. IEEE Access 2023, 11, 110361–110374.

26. Bochkovskiy, A.; Wang, C.; Liao, H. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

27. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

28. Settles, B. Active Learning; Synthesis Lectures on Artificial Intelligence and Machine Learning; Springer: Berlin/Heidelberg, Germany, 2012; Volume 6, pp. 1–114.

29. Lakshmi Narayanan, A.; Machireddy, A.; Krishnan, R. Optimizing Active Learning in Vision-Language Models via Parameter-Efficient Uncertainty Calibration. arXiv 2025, arXiv:2507.21521.

30. Santos, C.A.D.; Landim, N.M.D.; Araújo, H.X.D.; Paim, T.D.P. Automated systems for estrous and calving detection in dairy cattle. AgriEngineering 2022, 4, 475–482.

31. Riaz, M.U.; O’Grady, L.; McAloon, C.G.; Logan, F.; Gormley, I.C. Comparison of machine learning and validation methods for high-dimensional accelerometer data to detect foot lesions in dairy cattle. PLoS ONE 2025, 20, e0325927.

32. Batistatos, M.C.; De Cola, T.; Kourtis, M.A.; Apostolopoulou, V.; Xilouris, G.K.; Sagias, N.C. AGRARIAN: A Hybrid AI-Driven Architecture for Smart Agriculture. Agriculture 2025, 15, 904.

33. Dewangan, O.; Vij, P. Self-Adaptive Edge Computing Architecture for Livestock Management: Leveraging IoT, AI, and a Dynamic Software Ecosystem. BIO Web Conf. 2024, 82, 05010.

34. Shafik, W. Barriers to implementing computational intelligence-based agriculture system. In Computational Intelligence in Internet of Agricultural Things; Springer Nature: Cham, Switzerland, 2024; pp. 193–219.

35. Schillings, J.; Bennett, R.; Rose, D.C. Animal welfare and other ethical implications of Precision Livestock Farming technology. CABI Agric. Biosci. 2021, 2, 17.

36. Shojaeipour, A.; Falzon, G.; Kwan, P.; Hadavi, N.; Cowley, F.C.; Paul, D. Automated muzzle detection and biometric identification via few-shot deep transfer learning of mixed breed cattle. Agronomy 2021, 11, 2365.

37. Palma, O.; Plà-Aragonés, L.M.; Mac Cawley, A.; Albornoz, V.M. AI and data analytics in the dairy farms: A scoping review. Animals 2025, 15, 1291.

38. European Parliamentary Research Service [EPRS]. Transforming Animal Farming Through Artificial Intelligence. Policy Brief #772840. 2025. Available online: https://www.europarl.europa.eu/thinktank/en/document/EPRS_BRI(2025)772840 (accessed on 20 August 2025).

39. Pomar, C.; Remus, A. Fundamentals, limitations and pitfalls on the development and application of precision nutrition techniques for precision livestock farming. Animal 2023, 17, 100763.

40. Tedeschi, L.O.; Greenwood, P.L.; Halachmi, I. Advancements in sensor technology and decision support intelligent tools to assist smart livestock farming. J. Anim. Sci. 2021, 99, skab038.

41. Tuyttens, F.A.; Molento, C.F.; Benaissa, S. Twelve threats of precision livestock farming (PLF) for animal welfare. Front. Vet. Sci. 2022, 9, 889623.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).