Urdu Toxicity Detection: A Multi-Stage and Multi-Label Classification Approach

Abstract

1. Introduction

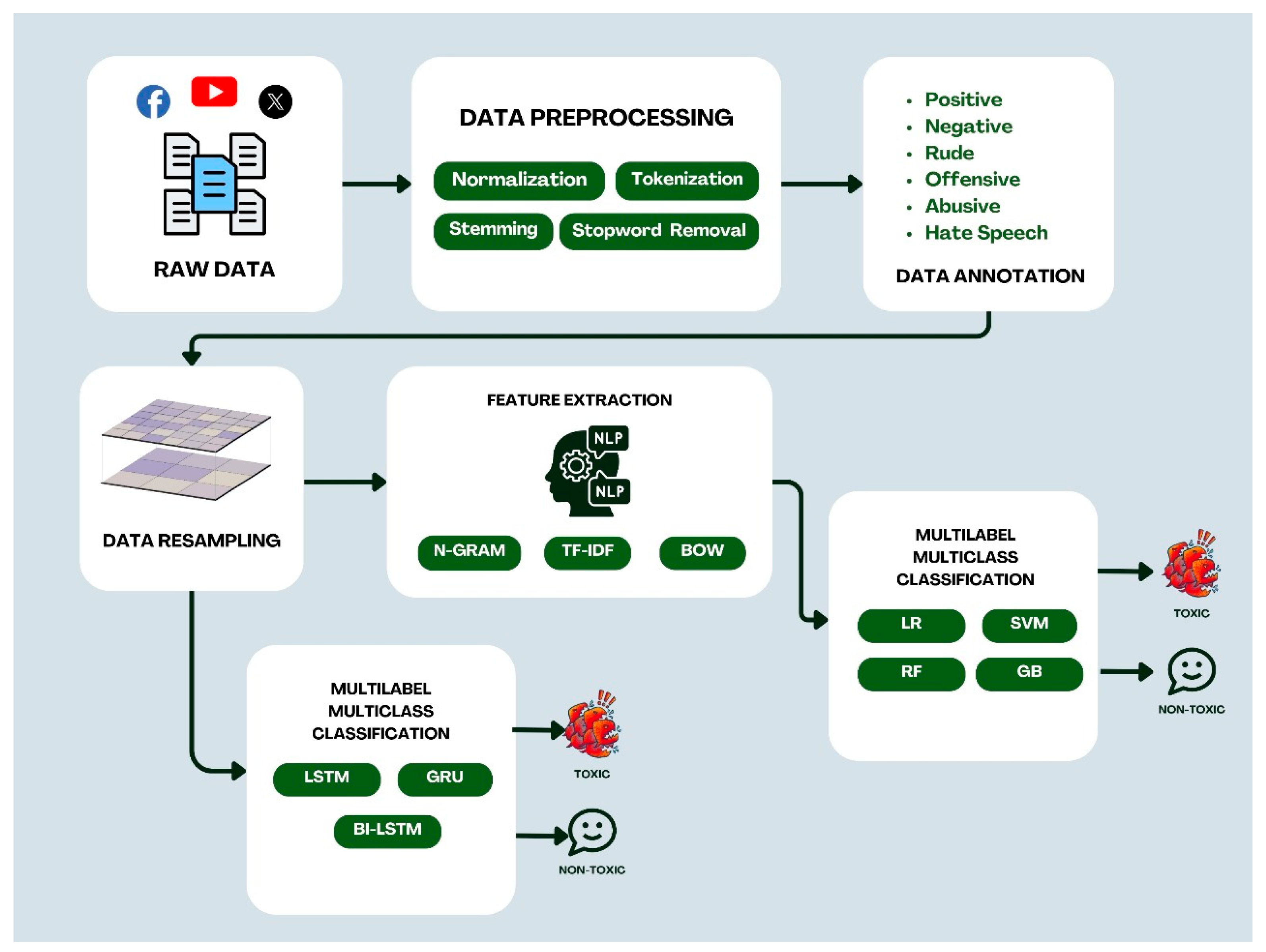

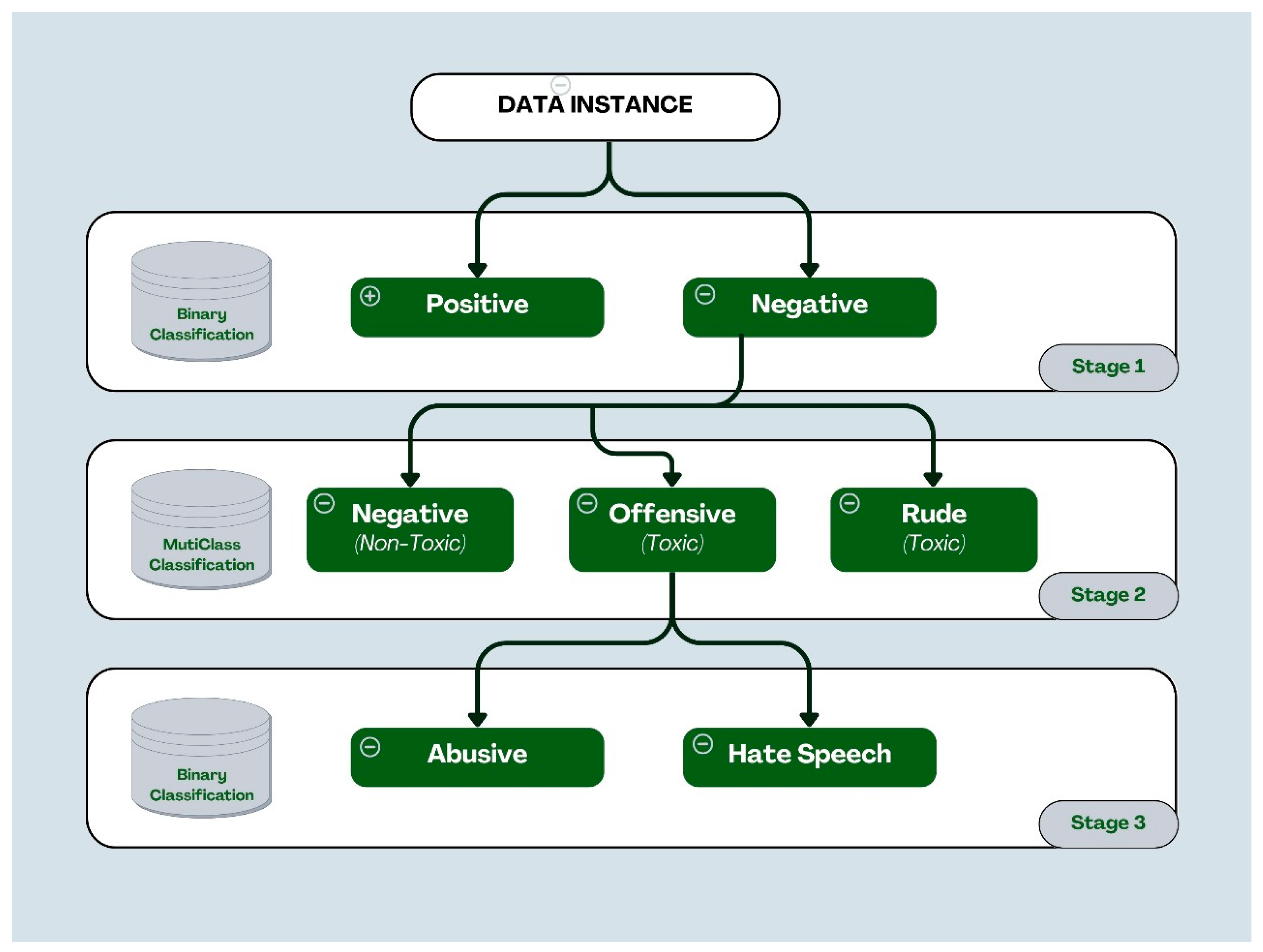

- Develop a comprehensive multi-label UTC dataset comprising 18,000 user-generated comments written in Urdu Nastaliq script and collected from multiple social media platforms. The dataset consists of five distinct classes: “positive”, “negative”, “negative and rude”, “negative and offensive and abusive”, and “negative and offensive and hate speech”. To the best of our knowledge, this is the first multi-label dataset addressing a significant gap in natural language resources for Urdu.

- Propose a state-of-the-art Urdu Toxicity Detection Model (UTDM) framework for detecting toxicity in user comments across various social media platforms. The framework includes both ML models (SVM, RF, LR, and GB) and DL models (LSTM, BiLSTM, and GRU). It is evaluated using precision, recall, accuracy, and F1 score, demonstrating its effectiveness on a multi-label, multi-class toxicity classification task for Urdu text in Nastaliq script.

2. Related Work

2.1. Nastaliq Urdu Toxicity Detection

2.2. Roman Urdu Toxicity Detection

3. Methodology

3.1. Data Collection

3.2. Data Preprocessing

3.3. Remove Other Languages Data

3.4. Remove Punctuation Marks

3.5. Remove Repetitive Words

3.6. Tokenization

3.7. Data Annotation

Rude:

- Uses rude, dismissive, or sarcastic words.

- Lacks basic respect but does not necessarily involve outright harm or hatred.

- Tends to occur during arguments or when subtly mocking someone.

Offensive:

- Includes words that tend to insult, provoke, or hurt feelings.

- Targets sensitive areas such as religion, gender, or looks.

- More intense in tone and effect than “rude”, yet not necessarily abusive.

Abusive:

- Uses harmful or threatening words meant to intimidate.

- Contains slurs, name-calling, or violent verbal attacks.

- Incites psychological distress or fear in the recipient.

Hate speech:

- Encourages hatred or violence against individuals or groups based on their identity.

- Is discriminatory in nature: attacks race, religion, ethnicity, gender, etc.

- Legally and ethically grave; can lead to actual harm in real life.

3.8. Feature Extraction

3.8.1. Bag-of-Words

3.8.2. N-Grams

3.8.3. Term Frequency-Inverse Document Frequency
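As a rough illustration of the weighting named in this heading, the sketch below computes TF-IDF scores over already tokenized comments. It assumes the textbook formulation (relative term frequency multiplied by log(N/df)); the exact variant, smoothing, and normalization used in the study are not stated here, and the function name `tfidf` is hypothetical.

```python
import math
from collections import Counter


def tfidf(docs):
    """Return one {term: weight} dict per document.

    docs is a list of token lists. The weight is
    (count of term in doc / doc length) * log(N / document frequency),
    which is an assumed textbook variant, not necessarily the paper's.
    """
    n_docs = len(docs)
    # Document frequency: in how many documents each term appears.
    df = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        counts = Counter(doc)
        total = len(doc)
        weights.append(
            {t: (c / total) * math.log(n_docs / df[t]) for t, c in counts.items()}
        )
    return weights


# Toy token lists for illustration only (not taken from the dataset).
toy_docs = [["a", "b"], ["a", "c"]]
toy_weights = tfidf(toy_docs)
```

A term that occurs in every document receives weight 0 under this variant, which is why library implementations often add smoothing.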

3.9. Data Imbalance Handling

4. Experimental Setup

5. Results and Discussion

5.1. ML Methods

5.2. DL Methods

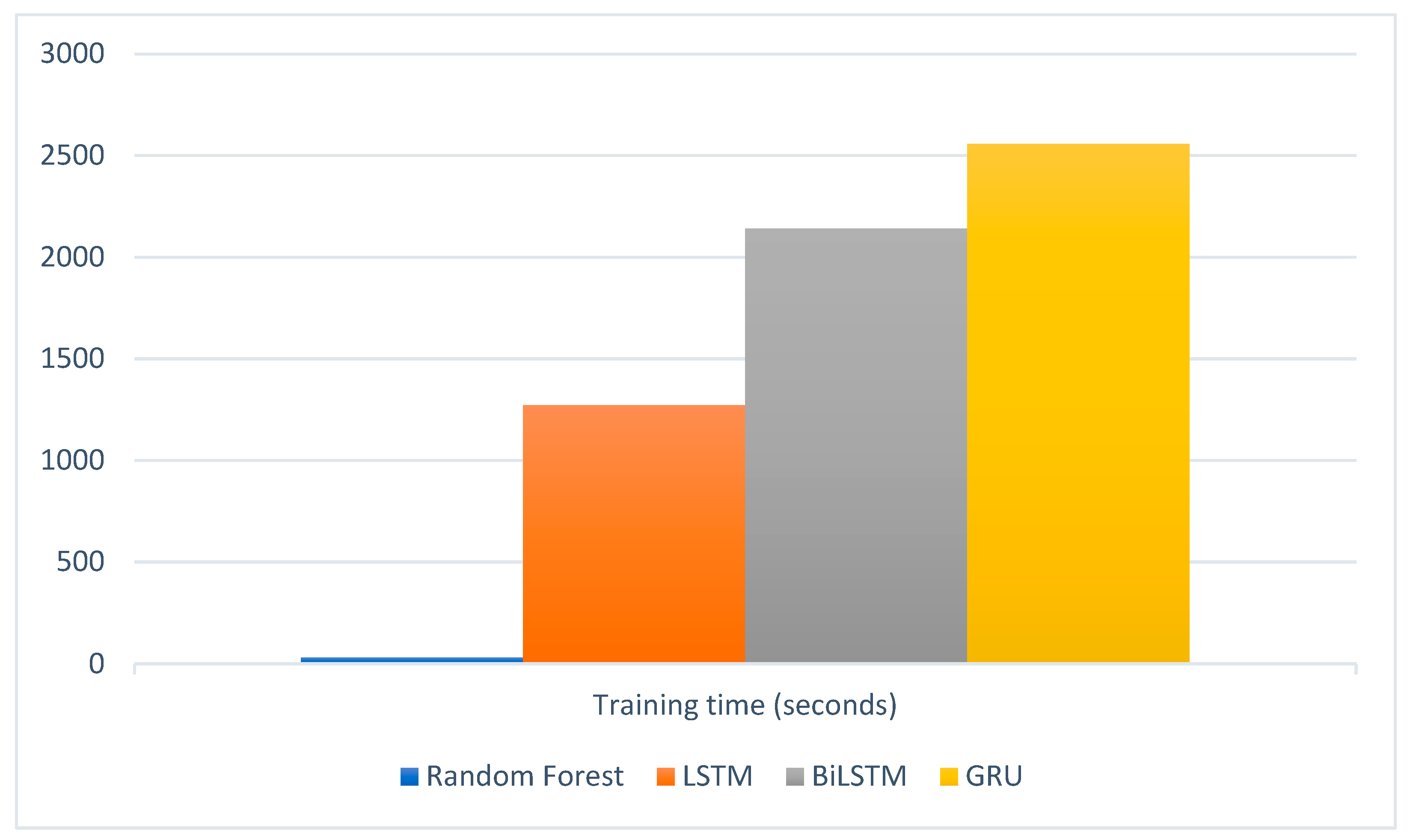

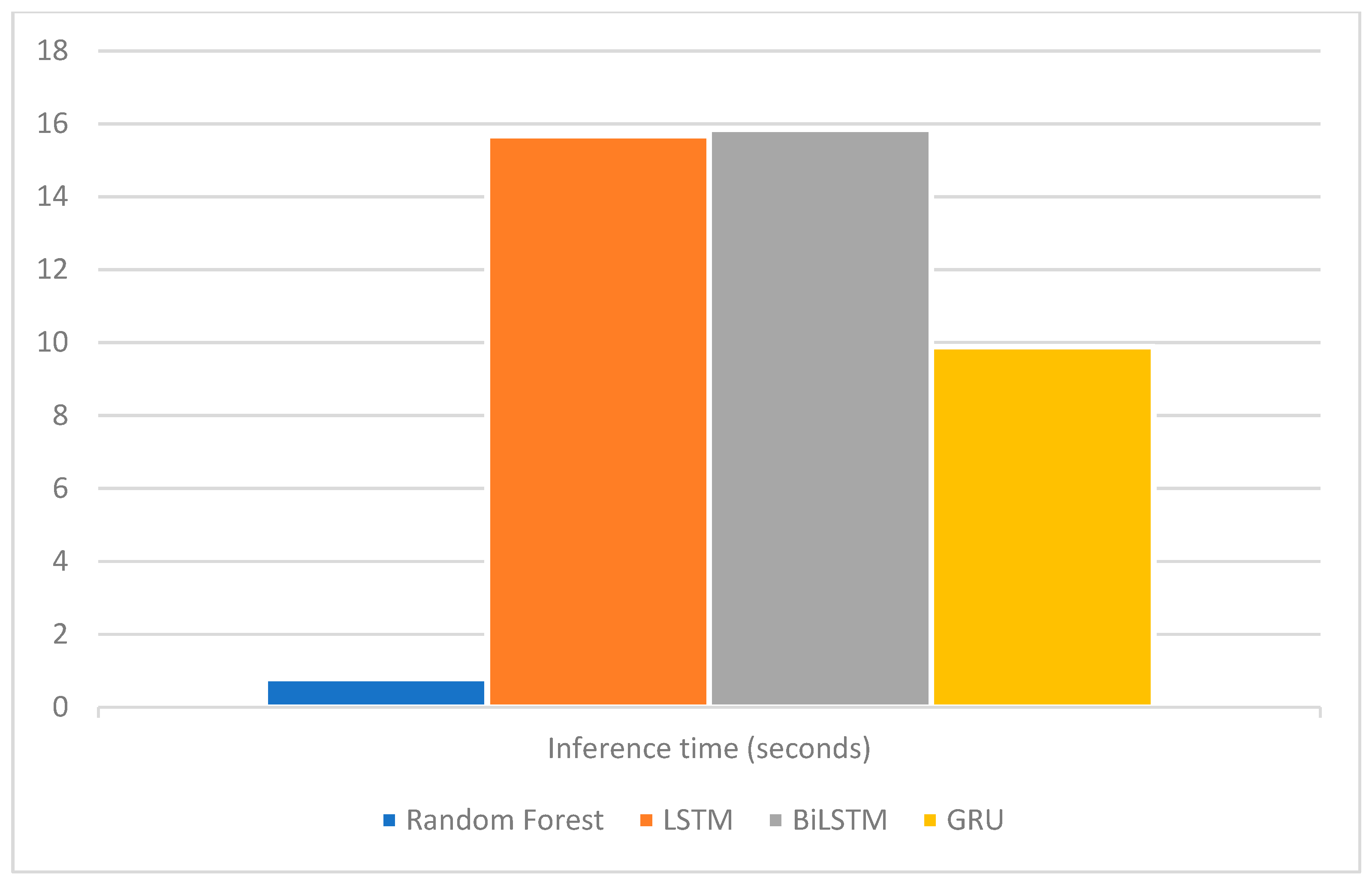

5.3. Computational Time Analysis

5.4. Statistical Comparison of Model Performances

5.5. Comparative Analysis

5.6. Ethical and Deployment Challenges

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Khan, A.; Ahmed, A.; Jan, S.; Bilal, M.; Zuhairi, M.F. Abusive language detection in Urdu text: Leveraging DL and attention mechanism. IEEE Access 2024, 12, 37418–37431.

- Adeeba, F.; Yousuf, M.I.; Anwer, I.; Tariq, S.U.; Ashfaq, A.; Naqeeb, M. Addressing cyberbullying in Urdu tweets: A comprehensive dataset and detection system. PeerJ Comput. Sci. 2024, 10, e1963.

- Khan, S.; Qasim, I.; Khan, W.; Khan, A.; Ali Khan, J.; Qahmash, A.; Ghadi, Y.Y. An automated approach to identify sarcasm in low-resource language. PLoS ONE 2024, 19, e0307186.

- Zoya; Latif, S.; Latif, R.; Majeed, H.; Jamail, N.S.M. Assessing Urdu language processing tools via statistical and outlier detection methods on Urdu tweets. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2023, 22, 1–31.

- Ashraf, M.R.; Jana, Y.; Umer, Q.; Jaffar, M.A.; Chung, S.; Ramay, W.Y. BERT-based sentiment analysis for low-resourced languages: A case study of Urdu language. IEEE Access 2023, 11, 110245–110259.

- Bonetti, A.; Martínez-Sober, M.; Torres, J.C.; Vega, J.M.; Pellerin, S.; Vila-Francés, J. Comparison between ML and DL approaches for the detection of toxic comments on social networks. Appl. Sci. 2023, 13, 6038.

- Atif, A.; Zafar, A.; Wasim, M.; Waheed, T.; Ali, A.; Ali, H.; Shah, Z. Cyberbullying detection and abuser profile identification on social media for Roman Urdu. IEEE Access 2024, 12, 123339–123351.

- Saeed, R.; Afzal, H.; Rauf, S.A.; Iltaf, N. Detection of offensive language and its severity for low-resource languages. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2023, 22, 1–27.

- Mehmood, F.; Shahzadi, R.; Ghafoor, H.; Asim, M.N.; Ghani, M.U.; Mahmood, W.; Dengel, A. EnML: Multi-label ensemble learning for Urdu text classification. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2023, 22, 1–31.

- Haq, N.U.; Ullah, M.; Khan, R.; Ahmad, A.; Almogren, A.; Hayat, B.; Shafi, B. USAD: An intelligent system for slang and abusive text detection in Perso-Arabic-scripted Urdu. Complexity 2020, 2020, 6684995.

- Malik, M.S.I.; Nawaz, A.; Jamjoom, M.M. Hate speech and target community detection in Nastaliq Urdu using transfer learning techniques. IEEE Access 2024, 12, 116875–116890.

- Ali, M.Z.; Ehsan-Ul-Haq; Rauf, S.; Javed, K.; Hussain, S. Improving hate speech detection of Urdu tweets using sentiment analysis. IEEE Access 2021, 9, 84296–84305.

- Akram, M.H.; Shahzad, K.; Bashir, M. ISE-Hate: A benchmark corpus for inter-faith, sectarian, and ethnic hatred detection on social media in Urdu. Inf. Process. Manag. 2023, 60, 103270.

- Ameer, I.; Sidorov, G.; Gomez-Adorno, H.; Nawab, R.M.A. Multi-label emotion classification on code-mixed text: Data and methods. IEEE Access 2022, 10, 8779–8789.

- Akhter, M.P.; Zhang, J.; Naqvi, I.R.; Abdelmajeed, M.; Sadiq, M.T. Automatic detection of offensive language for Urdu and Roman Urdu. IEEE Access 2020, 8, 91213–91226.

- Aziz, K.; Yusufu, A.; Zhou, J.; Ji, D.; Iqbal, M.S.; Wang, S.; Hadi, H.J.; Yuan, Z. UrduAspectNet: Fusing transformers and dual GCN for Urdu aspect-based sentiment detection. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2024, 23, 3663367.

- Ullah, A.; Khan, K.U.; Khan, A.; Bakhsh, S.T.; Rahman, A.U.; Akbar, S.; Saqia, B. Threatening language detection from Urdu data with a deep sequential model. PLoS ONE 2024, 19, e0290915.

- Nabeel, Z.; Mehmood, M.; Baqir, A.; Amjad, A. Classifying emotions in Roman Urdu posts using machine learning. In Proceedings of the 2021 Mohammad Ali Jinnah University International Conference on Computing (MAJICC), Karachi, Pakistan, 6–7 December 2021; pp. 1–7.

- Qureshi, M.A.; Asif, M.; Hassan, M.F.; Abid, A.; Kamal, A.; Safdar, S.; Akbar, R. Sentiment analysis of reviews in natural language: Roman Urdu as a case study. IEEE Access 2022, 10, 24945–24954.

- Liaqat, M.I.; Hassan, M.A.; Shoaib, M.; Khurshid, S.K.; Shamseldin, M.A. Sentiment analysis techniques, challenges, and opportunities: Urdu language-based analytical study. PeerJ Comput. Sci. 2022, 8, e1032.

- Sehar, U.; Kanwal, S.; Dashtipur, K.; Mir, U.; Abbasi, U.; Khan, F. Urdu sentiment analysis via multimodal data mining based on DL algorithms. IEEE Access 2021, 9, 153072–153082.

- Naqvi, U.; Majid, A.; Abbas, S.A. UTSA: Urdu text sentiment analysis using DL methods. IEEE Access 2021, 9, 114085–114094.

- Saeed, H.H.; Ashraf, M.H.; Kamiran, F.; Karim, A.; Calders, T. Roman Urdu toxic comment classification. Lang. Resour. Eval. 2021, 55, 971–996.

- Jadhav, R.; Agarwal, N.; Shevate, S.; Sawakare, C.; Parakh, P.; Khandare, S. Cyber bullying and toxicity detection using machine learning. In Proceedings of the 2023 3rd International Conference on Pervasive Computing and Social Networking (ICPCSN), Bengaluru, India, 17–18 February 2023; pp. 66–73.

- Ansari, G.; Kaur, P.; Saxena, C. Data augmentation for improving explainability of hate speech detection. Arab. J. Sci. Eng. 2024, 49, 3609–3621.

- Muralikumar, M.D.; Yang, Y.S.; McDonald, D.W. A human-centered evaluation of a toxicity detection API: Testing transferability and unpacking latent attributes. ACM Trans. Soc. Comput. 2023, 6, 1–38.

- Singh, R.K.; Sanjay, H.A.; SA, P.J.; Rishi, H.; Bhardwaj, S. NLP-based hate speech detection and moderation. In Proceedings of the 2023 7th International Conference on Computation System and Information Technology for Sustainable Solutions (CSITSS), Pune, India, 11–12 November 2023; pp. 1–5.

- Cinelli, M.; Pelicon, A.; Mozetič, I.; Quattrociocchi, W.; Novak, P.K.; Zollo, F. Dynamics of online hate and misinformation. Sci. Rep. 2021, 11, 22083.

- Salminen, J.; Hopf, M.; Chowdhury, S.A.; Jung, S.; Almerekhi, H.; Jansen, B.J. Developing an online hate classifier for multiple social media platforms. Hum.-Centric Comput. Inf. Sci. 2020, 10, 1.

- Teng, T.H.; Varathan, K.D. Cyberbullying detection in social networks: A comparison between ML and transfer learning approaches. IEEE Access 2023, 11, 55533–55560.

- Yang, Z.; Grenon-Godbout, N.; Rabbany, R. Game on, hate off: A study of toxicity in online multiplayer environments. Games Res. Pract. 2024, 9, 3675805.

- Kabakus, A.T. Towards the importance of the type of deep neural network and employment of pre-trained word vectors for toxicity detection: An experimental study. J. Web Eng. 2021, 20, 2243–2268.

- Carta, S.; Correra, A.; Mulas, R.; Recupero, D.; Saia, R. A supervised multi-class multi-label word embeddings approach for toxic comment classification. In Proceedings of the 11th International Joint Conference on Knowledge Discovery, Knowledge Engineering and Knowledge Management, Leipzig, Germany, 6–8 September 2019; pp. 105–112.

- Mnassri, K.; Rajapaksha, P.; Farahbakhsh, R.; Crespi, N. Hate speech and offensive language detection using an emotion-aware shared encoder. In Proceedings of the ICC 2023—IEEE International Conference on Communications, Rome, Italy, 28 May–1 June 2023; pp. 2852–2857.

- Li, J.; Valdivia, K.P. Does media format matter? Investigating the toxicity, sentiment, and topic of audio versus text social media messages. In Proceedings of the 10th International Conference on Human-Agent Interaction, Christchurch, New Zealand, 5–8 December 2022.

- Suresh, S.; Yadav, B.; Kumari, S.; Choudhary, A.; Krishika, R.; TR, M. Performance analysis of comment toxicity detection using machine learning. In Proceedings of the 2023 International Conference on Computer Science and Emerging Technologies (CSET), Kuala Lumpur, Malaysia, 5–6 September 2023; pp. 1–6.

- Abbasi, A.; Javed, A.R.; Iqbal, F.; Kryvinska, N.; Jalil, Z. DL for religious and continent-based toxic content detection and classification. Sci. Rep. 2022, 12, 17478.

- Rachidi, R.; Ouassil, M.A.; Errami, M.; Cherradi, B.; Hamida, S.; Silkan, H. Social media’s toxic comments detection using artificial intelligence techniques. In Proceedings of the 2023 3rd International Conference on Innovative Research in Applied Science, Engineering and Technology (IRASET), Tangier, Morocco, 21–22 June 2023; pp. 1–6.

- Hashmi, E.; Yayilgan, S.Y.; Shaikh, S. Augmenting sentiment prediction capabilities for code-mixed tweets with multilingual transformers. Soc. Netw. Anal. Min. 2024, 14, 86.

- Mehmood, F.; Ghani, M.U.; Ibrahim, M.A.; Shahzadi, R.; Mahmood, W.; Asim, M.N. A precisely Xtreme-Multi Channel hybrid approach for Roman Urdu sentiment analysis. IEEE Access 2020, 8, 192740–192759.

- Mehmood, K.; Essam, D.; Shafi, K. Sentiment analysis system for Roman Urdu. In Intelligent Computing; Arai, K., Kapoor, S., Bhatia, R., Eds.; Springer: Cham, Switzerland, 2019; Volume 858, pp. 29–42.

- Ghulam, H.; Zeng, F.; Li, W.; Xiao, Y. Deep learning-based sentiment analysis for Roman Urdu text. Procedia Comput. Sci. 2019, 147, 131–135.

- Hussain, S.; Ali Shah, S.F.; Bostan, H. Analyzing hateful comments against journalists on X in Pakistan. J. Stud. 2025, 26, 1187–1207.

- Kausar, S.; Tahir, B.; Mehmood, M.A. ProSOUL: A framework to identify propaganda from online Urdu content. IEEE Access 2020, 8, 186039–186054.

- Saeed, H.H.; Khalil, T.; Kamiran, F. Urdu Toxic Comment Classification with PURUTT Corpus Development. IEEE Access 2025, 13, 21635–21651.

- Ahmed, U.; Raza, A.; Saleem, K.; Sarwar, A. Development of Voting-Based POS Tagger for the URDU Language. J. Comput. Sci. Appl. (JCSA) 2025, 2, 1–14.

- Bilal, M.; Khan, A.; Jan, S.; Musa, S. Context-aware DL model for detection of Roman Urdu hate speech on social media platform. IEEE Access 2022, 10, 121133–121151.

- Shrestha, A.; Kaati, L.; Akrami, N.; Linden, K.; Moshfegh, A. Harmful communication: Detection of toxic language and threats in Swedish. In Proceedings of the 2023 International Conference on Advances in Social Networks Analysis and Mining, Ottawa, ON, Canada, 27–30 August 2023; pp. 624–630.

- Zain, M.; Hussain, N.; Qasim, A.; Mehak, G.; Ahmad, F.; Sidorov, G.; Gelbukh, A. RU-OLD: A Comprehensive Analysis of Offensive Language Detection in Roman Urdu Using Hybrid Machine Learning, Deep Learning, and Transformer Models. Algorithms 2025, 18, 396.

| Ref. | Toxicity | Classification | Labels | Data Size | Classifier |
|---|---|---|---|---|---|
| [1] | Abusive | Binary | Abusive/non-abusive | 12,082 tweets | BiLSTM |
| [2] | Cyberbullying | Multiclass | Insult, offensive, name-calling, profane, threat, curse, or none | 12,000 tweets | Naïve Bayes (NB), LR, LSTM, FastText |
| [3] | Sarcasm | Binary | Sarcastic/non-sarcastic | 19,955 tweets | SVM, Multi-NB |
| [7] | Cyberbullying | Multiclass | Neutral, offensive, religious hate, sexism, and racism | 36,000 tweets | GRU |
| [8] | Hate speech | Binary | Symbolization, insult, and attribution | 20,000 tweets | SVM, LR, CNN, LSTM, BERT |
| [10] | Abusive word | Binary | Abusive/non-abusive | 5000 tweets | SVM, NB |
| [11] | Hate speech detection | Binary | Hate speech/non-hate speech | 20,000 Facebook posts | Urdu-RoBERTa, Urdu-DistilBERT |
| [12] | Hate speech | Binary | Hateful/normal tweets | 16,000 tweets | SVM, Multinomial NB |
| [13] | Hate speech | Binary | Hateful/non-hateful | 21,759 tweets | BERT, NB |
| [17] | Threatening | Binary | Threatening/non-threatening | 3564 tweets | LSTM |
| [23] | Toxic | Binary | Toxic/non-toxic | 72,000 Twitter (X) and Facebook posts | NB, SVM, LR, RF, LSTM, CNN |
| [43] | Abusive | Binary | Abusive/non-abusive | 196,226 Facebook posts | LR, SVM, SGD, RF |
| [44] | Propaganda spotting | Binary | Propaganda/non-propaganda | 11,574 Urdu news articles | BERT |
| [45] | Toxicity detection | Binary | Toxic/non-toxic | 72,771 labeled comments | Transfer learning |
| [47] | Hate speech | Binary | Hate speech/neutral speech | 30,000 Twitter (X) and Facebook posts | Bi-LSTM |
| [48] | Hate speech | Binary | Hate/non-hate | 14,731 tweets | SVM |
| [14] | Threatening | Binary | Threatening/non-threatening | 3564 tweets | LR, SVM, FastText |
| [49] | Offensive language detection | Binary | Offensive/non-offensive | 46,025 YouTube news channel comments | NB, SVM, LR, RF, LSTM, CNN |
| This Paper | Toxic | Multilabel Multiclass | Multiple labels | 18,000 Facebook, Twitter (X), YouTube, Newsgroup | ML & DL |

| Social Media Forums | Category | No. of Comments |
|---|---|---|
| Facebook | Politics | 1740 |
| | Showbiz | 1600 |
| | Food | 1506 |
| | News | 110 |
| | Sports | 216 |
| Twitter (X) | Politics | 4034 |
| | Showbiz | 2000 |
| | Food | 1506 |
| | News | 2501 |
| | Sports | 700 |
| YouTube | Politics | 1148 |
| | Showbiz | 16 |
| | Food | 149 |
| | News | 90 |
| | Sports | 1982 |
| Urdu | Politics | 249 |
| | Showbiz | 135 |
| | Food | 67 |
| | News | 560 |
| | Sports | 782 |
| Total Comments | | 21,091 |

| Original Text | Original Text in English | After Removing Repeated Words |
|---|---|---|
| منافق منافق جھوٹا دفع ہو جاؤ دفع ہو جاؤ | You are the hypocrite liar, leave. | منافق جھوٹا دفع ہو جاؤ |
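The repeated-word removal shown in the table can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes whitespace tokenization and that a "repetition" is a word or short phrase immediately followed by an identical copy. The function name `collapse_repeats` and the `max_phrase_len` parameter are hypothetical.

```python
def collapse_repeats(tokens, max_phrase_len=3):
    """Drop immediate repetitions of words or short phrases.

    For each position, check whether the next n tokens are directly
    followed by an identical run (longest n first). If so, skip the
    first copy so that only one copy survives.
    """
    result = []
    i = 0
    while i < len(tokens):
        skipped = False
        for n in range(max_phrase_len, 0, -1):
            if i + 2 * n <= len(tokens) and tokens[i:i + n] == tokens[i + n:i + 2 * n]:
                i += n  # skip this copy; the following copy is examined next
                skipped = True
                break
        if not skipped:
            result.append(tokens[i])
            i += 1
    return result


# The example row from the table, tokenized on whitespace.
comment = "منافق منافق جھوٹا دفع ہو جاؤ دفع ہو جاؤ"
cleaned = " ".join(collapse_repeats(comment.split()))
```

With `max_phrase_len=3`, the three-word phrase repeated at the end of the example is collapsed along with the single repeated word at the start. Longer repeated phrases would need a larger limit.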

| Label | Urdu Example (Script) | Transliteration | English Translation | Notes on Nuance |
|---|---|---|---|---|
| Rude | تم ہمیشہ بیوقوف ہی رہنا | tum hamesha bewaqoof hi rehna | You always stay stupid | Indirect insult (disrespectful, sarcastic) |
| Offensive | پاکستان ترقی کا سب بڑا دشمن | Pakistan Tarraqi ka sb se bara dushman | The biggest enemy of Pakistan’s progress | Targets a group with blame; stronger than rude |
| Abusive | جھوٹا بہتان باز نفرت پھیلانے والا | Jhoota buhtan baz nafrat phelane wala | Liar, slanderer, hate-monger | Vulgar + threatening language |
| Hate Speech | یہ منحوس جماعت نہ اہل ہے۔ | Ye manhoos jamat na-ahal hai | This inauspicious group is unqualified | Targets a religious, political, social group, event or any news |

| Comment | Comments in English | Positive | Negative | Rude | Offensive | Abusive | Hate Speech |
|---|---|---|---|---|---|---|---|
| گھڑی چور | The watch thief | 0 | 1 | 1 | 0 | 0 | 0 |
| فتنہ | Temptation | 0 | 1 | 0 | 1 | 1 | 0 |
| سب شیعہ کافر | All Shias are infidels | 0 | 1 | 0 | 1 | 0 | 1 |

| Classes | Labels | Explanation | Instances |
|---|---|---|---|
| Class 1 | Positive only | Instances that are labeled as positive (non-toxic). | 4898 |
| Class 2 | Negative only | Instances that are negative (toxic) but do not have specific toxic attributes (like rude or offensive). | 1009 |
| Class 3 | Negative and Rude | Instances that are negative and contain rude language. | 3415 |
| Class 4 | Negative and Offensive and Abusive | Instances that are negative and contain offensive and abusive language. | 5783 |
| Class 5 | Negative and Offensive and Hate Speech | Instances that are negative and contain offensive language and hate speech. | 2894 |
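The relationship between the six binary annotation columns and the five classes can be sketched as a lookup. This is an illustrative mapping inferred from the two tables, assuming each comment's flags are ordered (positive, negative, rude, offensive, abusive, hate speech). The names `CLASS_BY_VECTOR` and `to_class` are hypothetical, and rejecting any other combination is an assumption about how the classes are formed.

```python
# Flag order: positive, negative, rude, offensive, abusive, hate speech.
CLASS_BY_VECTOR = {
    (1, 0, 0, 0, 0, 0): 1,  # Positive only
    (0, 1, 0, 0, 0, 0): 2,  # Negative only
    (0, 1, 1, 0, 0, 0): 3,  # Negative and Rude
    (0, 1, 0, 1, 1, 0): 4,  # Negative and Offensive and Abusive
    (0, 1, 0, 1, 0, 1): 5,  # Negative and Offensive and Hate Speech
}


def to_class(flags):
    """Map a six-element 0/1 label vector to its class number (1 to 5)."""
    key = tuple(flags)
    if key not in CLASS_BY_VECTOR:
        raise ValueError(f"label combination {key} is not one of the five classes")
    return CLASS_BY_VECTOR[key]


# The three annotated example rows from the annotation table.
example_classes = [
    to_class([0, 1, 1, 0, 0, 0]),
    to_class([0, 1, 0, 1, 1, 0]),
    to_class([0, 1, 0, 1, 0, 1]),
]
```

Under this mapping the three annotated examples fall into Class 3, Class 4, and Class 5, respectively.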

| ML Method | Classes | TP | FP | FN | TN | Precision | Recall | F1-Score | Accuracy |
|---|---|---|---|---|---|---|---|---|---|
| Logistic Regression | Class 1 | 3472 | 3515 | 1426 | 9587 | 0.4969 | 0.7088 | 0.5842 | 0.7768 |
| | Class 2 | 946 | 1584 | 63 | 15,407 | 0.3740 | 0.9379 | 0.5348 | 0.7865 |
| | Class 3 | 2886 | 7164 | 529 | 7421 | 0.2872 | 0.8452 | 0.4288 | 0.6244 |
| | Class 4 | 4569 | 5204 | 1214 | 7013 | 0.4675 | 0.7900 | 0.5874 | 0.6385 |
| | Class 5 | 1882 | 1422 | 1012 | 13,684 | 0.5697 | 0.6504 | 0.6074 | 0.8693 |
| Random forest | Class 1 | 4834 | 24 | 64 | 13,078 | 0.9951 | 0.9869 | 0.9910 | 0.9960 |
| | Class 2 | 1009 | 0 | 0 | 16,991 | 1.0 | 1.0 | 1.0 | 1.0 |
| | Class 3 | 3415 | 251 | 0 | 14,334 | 0.9315 | 1.0 | 0.9645 | 0.9877 |
| | Class 4 | 5712 | 154 | 71 | 12,063 | 0.9738 | 0.9878 | 0.9807 | 0.9874 |
| | Class 5 | 2894 | 40 | 0 | 15,066 | 0.9863 | 1.0 | 0.9931 | 0.9978 |
| Support vector machine | Class 1 | 3169 | 2318 | 1729 | 10,784 | 0.5775 | 0.647 | 0.6105 | 0.817 |
| | Class 2 | 936 | 1820 | 73 | 15,171 | 0.3395 | 0.9272 | 0.4974 | 0.7542 |
| | Class 3 | 3293 | 8682 | 122 | 5903 | 0.2750 | 0.9643 | 0.4280 | 0.570 |
| | Class 4 | 5254 | 5819 | 529 | 6398 | 0.4745 | 0.9086 | 0.6235 | 0.6425 |
| | Class 5 | 1993 | 874 | 901 | 14,232 | 0.6952 | 0.6887 | 0.6919 | 0.9047 |
| Gradient boosting | Class 1 | 2596 | 357 | 2302 | 12,745 | 0.879 | 0.53 | 0.66 | 0.88 |
| | Class 2 | 928 | 9 | 81 | 16,982 | 0.99 | 0.92 | 0.977 | 0.9849 |
| | Class 3 | 1708 | 17 | 1708 | 14,568 | 0.99 | 0.50 | 0.66 | 0.92 |
| | Class 4 | 2892 | 251 | 2892 | 11,966 | 0.92 | 0.50 | 0.65 | 0.82 |
| | Class 5 | 1794 | 18 | 1100 | 15,088 | 0.99 | 0.62 | 0.76 | 0.94 |
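The per-class metrics in the table follow from the confusion counts through the standard one-vs-rest definitions. The sketch below applies those textbook formulas to the Random forest, Class 5 row. The function name `binary_metrics` is hypothetical, and whether the authors computed every column in exactly this way is an assumption.

```python
def binary_metrics(tp, fp, fn, tn):
    """Standard one-vs-rest metrics from confusion counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "accuracy": accuracy,
    }


# Counts copied from the Random forest, Class 5 row of the table.
rf_class5 = binary_metrics(tp=2894, fp=40, fn=0, tn=15066)
```

For this row the computed values agree with the reported precision, recall, F1-score, and accuracy to within rounding in the fourth decimal place.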

| ML Method | Classes | Precision | Recall | F1-Score | Accuracy |
|---|---|---|---|---|---|
| Logistic Regression | Class 1 | 0.4833 | 0.6859 | 0.5670 | 0.7672 |
| | Class 2 | 0.3928 | 0.9383 | 0.5537 | 0.7931 |
| | Class 3 | 0.2857 | 0.8405 | 0.4265 | 0.6158 |
| | Class 4 | 0.4490 | 0.7726 | 0.5679 | 0.6259 |
| | Class 5 | 0.5553 | 0.6587 | 0.6025 | 0.8671 |
| Random forest | Class 1 | 0.9740 | 0.9948 | 0.9841 | 0.9928 |
| | Class 2 | 1.0 | 1.0 | 1.0 | 1.0 |
| | Class 3 | 0.9342 | 1.0 | 0.9660 | 0.9880 |
| | Class 4 | 0.9715 | 0.9915 | 0.9814 | 0.9881 |
| | Class 5 | 0.9874 | 1.0 | 0.9937 | 0.9981 |
| Support vector machine | Class 1 | 0.5706 | 0.6357 | 0.6012 | 0.8126 |
| | Class 2 | 0.3541 | 0.9377 | 0.5537 | 0.7931 |
| | Class 3 | 0.2860 | 0.9735 | 0.4421 | 0.5824 |
| | Class 4 | 0.4678 | 0.8948 | 0.6142 | 0.6427 |
| | Class 5 | 0.6881 | 0.6937 | 0.6907 | 0.9050 |
| Gradient boosting | Class 1 | 0.9020 | 0.5204 | 0.6598 | 0.8808 |
| | Class 2 | 0.9978 | 0.9522 | 0.9744 | 0.9932 |
| | Class 3 | 0.9811 | 0.5066 | 0.6681 | 0.9145 |
| | Class 4 | 0.9174 | 0.5192 | 0.6630 | 0.8322 |
| | Class 5 | 0.9927 | 0.6450 | 0.7817 | 0.9450 |

| DL Method | Classes | Precision | Recall | F1-Score | Accuracy |
|---|---|---|---|---|---|
| LSTM | Class 1 | 0.6528 | 0.5222 | 0.5802 | 0.8038 |
| | Class 2 | 0.8333 | 0.9091 | 0.8696 | 0.9913 |
| | Class 3 | 0.6667 | 0.3373 | 0.4480 | 0.9004 |
| | Class 4 | 0.7237 | 0.8291 | 0.7728 | 0.7489 |
| | Class 5 | 0.7917 | 0.3654 | 0.5000 | 0.9452 |
| GRU | Class 1 | 0.6782 | 0.6556 | 0.6667 | 0.8297 |
| | Class 2 | 0.8750 | 0.6364 | 0.7368 | 0.9856 |
| | Class 3 | 0.6154 | 0.5783 | 0.5963 | 0.9062 |
| | Class 4 | 0.7771 | 0.7619 | 0.7648 | 0.7694 |
| | Class 5 | 0.6327 | 0.5962 | 0.6139 | 0.9437 |
| BiLSTM | Class 1 | 0.741 | 0.5056 | 0.5778 | 0.8081 |
| | Class 2 | 0.9524 | 0.9091 | 0.9302 | 0.9957 |
| | Class 3 | 0.5443 | 0.5181 | 0.5309 | 0.8903 |
| | Class 4 | 0.7584 | 0.7563 | 0.7574 | 0.7504 |
| | Class 5 | 0.8500 | 0.3269 | 0.4722 | 0.9452 |

| DL Method | Classes | Precision | Recall | F1-Score | Accuracy |
|---|---|---|---|---|---|
| LSTM | Class 1 | 0.6119 | 0.6527 | 0.6300 | 0.8019 |
| | Class 2 | 0.8104 | 0.6779 | 0.7255 | 0.9841 |
| | Class 3 | 0.5614 | 0.5711 | 0.5592 | 0.8885 |
| | Class 4 | 0.7700 | 0.7611 | 0.7654 | 0.7597 |
| | Class 5 | 0.5681 | 0.5200 | 0.5391 | 0.9339 |
| GRU | Class 1 | 0.6035 | 0.6426 | 0.6210 | 0.7970 |
| | Class 2 | 0.7679 | 0.6965 | 0.7237 | 0.9835 |
| | Class 3 | 0.5889 | 0.5663 | 0.5755 | 0.9004 |
| | Class 4 | 0.7616 | 0.7577 | 0.7596 | 0.7531 |
| | Class 5 | 0.6173 | 0.4965 | 0.5463 | 0.9391 |
| BiLSTM | Class 1 | 0.6061 | 0.6392 | 0.6212 | 0.7979 |
| | Class 2 | 0.8109 | 0.6238 | 0.6942 | 0.9833 |
| | Class 3 | 0.5369 | 0.5807 | 0.5545 | 0.8874 |
| | Class 4 | 0.7643 | 0.7434 | 0.7533 | 0.7493 |
| | Class 5 | 0.5855 | 0.5080 | 0.5420 | 0.9359 |

| Class | RF vs. GRU (t, p) | RF vs. BiLSTM (t, p) |
|---|---|---|
| Class 1 | t = 39.161, p < 0.001 | t = 61.594, p < 0.001 |
| Class 2 | t = 12.618, p < 0.001 | t = 6.690, p < 0.001 |
| Class 3 | t = 25.023, p < 0.001 | t = 33.556, p < 0.001 |
| Class 4 | t = 42.422, p < 0.001 | t = 35.362, p < 0.001 |
| Class 5 | t = 19.121, p < 0.001 | t = 19.104, p < 0.001 |
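One plausible way to obtain t statistics like those in the table is a paired t-test over matched evaluation runs, such as per-fold scores of two models. The table does not say which t-test variant was used, so the pairing is an assumption. The function name `paired_t` is hypothetical, and the score lists below are made-up placeholders, not results from the study.

```python
import math
import statistics


def paired_t(scores_a, scores_b):
    """Paired t statistic and degrees of freedom for two matched score lists."""
    if len(scores_a) != len(scores_b) or len(scores_a) < 2:
        raise ValueError("need two equally long score lists with at least 2 entries")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    sd = statistics.stdev(diffs)  # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("all differences are identical; t is undefined")
    t = statistics.mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


# Hypothetical per-fold F1 scores, for illustration only.
model_a_f1 = [0.98, 0.99, 0.97, 0.98, 0.99]
model_b_f1 = [0.62, 0.60, 0.65, 0.61, 0.63]
t_stat, dof = paired_t(model_a_f1, model_b_f1)
```

The p-value would then come from the Student t distribution with `dof` degrees of freedom, which a statistics library can supply.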

| Ref. | Dataset Size | Data Sources | Methods | Objective | Language | Precision | Recall | F1-Score | Accuracy |
|---|---|---|---|---|---|---|---|---|---|
| [7] | 10,060 | Twitter (X) | LR, RF, SVM, GB, decision tree, NB, GRU | Cyberbullying detection | Roman Urdu | 0.954 | 0.952 | 0.952 | 0.952 |
| [2] | 12,428 | Twitter (X) | LR, SVM, LSTM, FastText | Cyberbullying detection | Urdu (Nastaliq) | 0.83 | 0.83 | 0.83 | 0.83 |
| This Paper | 18,000 | Facebook, Twitter (X), YouTube, Urdu News | RF, LR, SVM, GB, LSTM, BiLSTM, GRU | Toxicity detection | Urdu (Nastaliq) | 0.977 | 0.994 | 0.985 | 0.993 |

| Model | Precision | Recall | F1-Score | Accuracy |
|---|---|---|---|---|
| LR | 0.6382 | 0.7541 | 0.6912 | 0.75 |
| RF | 0.9891 | 0.9892 | 0.9891 | 0.9892 |
| SVM | 0.82 | 0.81 | 0.81 | 0.82 |
| GB | 0.75 | 0.73 | 0.74 | 0.73 |
| LSTM | 0.7867 | 0.8024 | 0.7878 | 0.802 |
| BiLSTM | 0.7721 | 0.7833 | 0.7691 | 0.7833 |
| GRU | 0.8609 | 0.8796 | 0.8596 | 0.8796 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Rashid, A.; Mahmood, S.; Inayat, U.; Zia, M.F. Urdu Toxicity Detection: A Multi-Stage and Multi-Label Classification Approach. AI 2025, 6, 194. https://doi.org/10.3390/ai6080194
