Abstract

Background/Objectives: Knowledge Graphs (KGs) are often incomplete, which can significantly degrade the performance of downstream applications. Manual completion of KGs is time-consuming and costly, which makes automated methods for knowledge graph completion (KGC) important. Link prediction is a fundamental task in this domain, and the semantic correlation among entity features plays a crucial role in the effectiveness of link-prediction models. Notably, the human brain can often infer information from a limited set of salient features. Methods: Inspired by this cognitive principle, this paper proposes a lightweight Bi-level Routing Attention mechanism specifically designed for link-prediction tasks. The module is a theoretically grounded, lightweight structural design that enhances the semantic recognition capability of language models without altering their core parameters, enabling the model to attend to feature regions with high semantic relevance. With only a marginal increase of approximately one million parameters, the mechanism effectively captures the most semantically informative features. Results: The module replaces the original feature-extraction module within the KGLM framework and is evaluated on the publicly available WN18RR and FB15K-237 datasets. Conclusions: Experimental results demonstrate consistent improvements in standard evaluation metrics, including Mean Rank (MR), Mean Reciprocal Rank (MRR), and Hits@10, confirming the effectiveness of the proposed approach.

1. Introduction

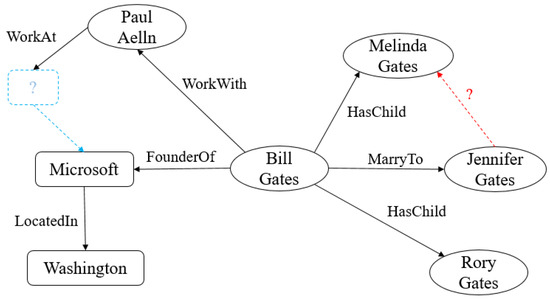

A Knowledge Graph (KG) is a graph-structured data model in which knowledge entities are represented as nodes and the relationships between them as edges. A knowledge graph consists of a large collection of triplets, as depicted in Figure 1, which are extracted from natural language and represented as (head entity, relation, tail entity), or (h, r, t). Because triplets are intuitive, they offer significant advantages for interpretability and are widely applied in domains such as e-commerce [1], healthcare [2], and finance [3].

Figure 1.

Natural language into triplets.

Converting natural language into triplets makes knowledge both easier to express and easier for computers to process: the natural-language text on the left is extracted into the triplets on the right, a form that computers can represent and interpret effectively.

There are two main approaches to knowledge graph construction: manual and automatic. Manually constructed knowledge graphs include DBpedia [4], WordNet [5], and CARD [6], while automatically constructed ones include FreeBase [7], Knowledge Vault [8], and NELL [9]. Most large-scale knowledge graphs built with either approach are incomplete [10]. Completing them manually incurs substantial costs, while leaving them incomplete introduces errors and difficulties in downstream tasks such as recommendation and question answering. Automating knowledge graph completion is therefore of great significance.

Knowledge graph completion (KGC) primarily encompasses two major categories, entity completion [11] and relation completion [12], as illustrated in Figure 2. Relation completion, commonly referred to as link prediction (LP), leverages the graph structure of knowledge graphs: it models and reasons about relationship patterns among entities based on the known facts in the knowledge graph. For instance, TransE [13] is a knowledge graph-embedding model that learns low-dimensional representations for entities and relations. It captures semantic associations between entities and relations through a translation operation, requiring each valid triplet to satisfy $\mathbf{h} + \mathbf{r} \approx \mathbf{t}$, where $\mathbf{h}$, $\mathbf{r}$, and $\mathbf{t}$ are the embedding vectors of the head entity, relation, and tail entity. By learning embeddings for which this translation relation holds as closely as possible, the model places entities and relations in a shared low-dimensional vector space. Similar models include TransH [14] and TransD [15].
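To make the translation constraint concrete, the following is a minimal NumPy sketch of a TransE-style plausibility score, assuming an L1 distance and hand-picked 2-D toy embeddings (the function name `transe_score` and all vector values are hypothetical). In real TransE the embeddings are learned with a margin-based ranking loss rather than chosen by hand.

```python
import numpy as np

def transe_score(h, r, t, norm=1):
    """TransE-style plausibility: -||h + r - t||. A score of 0 means a perfect translation."""
    return -np.linalg.norm(h + r - t, ord=norm)

# Hypothetical 2-D embeddings chosen by hand for illustration only.
h = np.array([0.0, 1.0])
r = np.array([1.0, 0.0])
t_true = np.array([1.0, 1.0])    # exactly h + r, so the triplet is "valid"
t_false = np.array([-1.0, 0.0])  # far from h + r

print(transe_score(h, r, t_true), transe_score(h, r, t_false))
```

A higher (less negative) score means the triplet better satisfies $\mathbf{h} + \mathbf{r} \approx \mathbf{t}$, which is what allows TransE to rank candidate tails.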

Figure 2.

Task of knowledge graph completion. The black lines and boxes in the figure represent known triplet information, the red lines represent missing relationships between nodes, and the blue boxes represent missing entities targeted by corresponding relationships.

In Figure 2, reasoning over the known triplets (Bill Gates, MarryTo, JenniferGates) and (Bill Gates, HasChild, MelindaGates) allows the missing relation in (JenniferGates, ?, MelindaGates) to be inferred as HasChild, completing the triplet (JenniferGates, HasChild, MelindaGates). Similarly, from the triplets (Bill Gates, Founder of, Microsoft) and (Bill Gates, WorkWith, PaulAllen), the missing tail entity of (PaulAllen, WorkAt, ?) can be deduced as Microsoft, establishing the new triplet (PaulAllen, WorkAt, Microsoft).

LP is a critical task in KGC that analyzes the characteristics of nodes, edges, graph topology, and other relevant information to predict potential edges between nodes in the graph [16]. LP is commonly employed in domains such as social network analysis [17], recommendation systems [1], and biological network analysis [18]. LP test data are typically constructed by removing selected triplets from a benchmark dataset so that the model must recover the missing relationships. Commonly used LP benchmarks include WN18RR, as well as domain-specific datasets such as UMLS for the medical domain [19].

Recent advances in pre-trained language models (PLMs), such as BERT [20], have achieved state-of-the-art performance across various NLP tasks [21,22] and have been increasingly adopted in broader intelligent systems. In embedded robotics, Farkh et al. [23] proposed a hybrid framework that deploys a lightweight large language model (LLM), TinyLlama, on edge devices (e.g., Raspberry Pi 5) alongside STM32 microcontrollers for real-time control, using a selective trigger mechanism to reduce computational overhead. This approach significantly improves deadlock recovery, task efficiency, and collision avoidance compared with standalone Q-learning and DQN methods. In recommendation systems, Ubaid et al. [24] integrated LLMs with real-time data APIs to enhance context awareness and responsiveness. Their hybrid system dynamically adapts to user states and environmental changes, yielding notable improvements in recommendation quality and user experience. These studies highlight the potential of combining LLMs with real-time systems to address limitations in adaptability and scalability in resource-constrained scenarios.

Since KGC can be cast as an NLP task, PLMs have naturally been applied to it, and PLM-based KGC methods have demonstrated substantially better performance than earlier approaches. However, training PLMs requires substantial computational resources, which limits how far performance can be improved in resource-constrained settings. In addition, the large parameter count of PLMs increases computational complexity, which can slow convergence and degrade performance.

This study posits that node correlation plays a pivotal role in link-prediction (LP) tasks, as accurately estimating node similarity can substantially improve predictive performance. Inspired by the cognitive observation that the human brain can infer outcomes from a limited set of salient features, this work introduces an enhanced bi-level routing attention mechanism specifically designed for LP, aiming to identify highly relevant features efficiently. The Bi-level Routing Attention (BRA) mechanism, initially proposed in computer vision, ranks inter-region correlations and highlights features with high similarity. It dynamically selects the key regions relevant to the current query, thereby avoiding redundant attention computations across the global space. Although its adoption in natural language processing (NLP) remains limited, this paper adapts the core principles of BRA by treating individual words as analogs of spatial regions and redesigning the architecture to suit NLP tasks. The proposed variant effectively identifies strongly correlated token-level features, enhancing the representational capacity of language models for LP. Furthermore, its lightweight design adds minimal parameter overhead, avoiding adverse effects on the convergence of the pre-trained model.

To address the above challenges, this study aims to enhance the semantic modeling capabilities of pre-trained language models for link prediction in knowledge graphs by introducing a lightweight Bi-level Routing Attention (BRA) mechanism. The proposed module enables the model to capture salient entity–relation features better while maintaining a low parameter overhead.

This paper primarily contributes in the following aspects:

1. Drawing inspiration from the BiFormer model in computer vision (CV), this study proposes a novel attention module specifically designed for KGC tasks. The module enhances the extraction of semantically relevant information from the keys and values within the attention mechanism. Considering the differences between NLP and CV tasks, we first modify the feature dimensions of the BRA module in BiFormer and then replace the convolutional operations originally used in BiFormer with standard Transformer-based attention.

2. The attention module is integrated into the LP baseline model, the Knowledge Graph Language Model (KGLM), replacing its original feature-extraction component. The modified model is evaluated on the publicly available WN18RR and FB15K-237 datasets, and experimental results demonstrate consistent improvements across standard evaluation metrics, confirming the effectiveness of the proposed approach.

This paper explores a theoretically feasible lightweight structural design aimed at enhancing the language model’s ability to recognize semantic relations without modifying the model’s core parameters, thereby providing a scalable and generalizable modeling paradigm for complex semantic understanding tasks.

2. Related Work

The overall idea of KGC is to use similarity measures to determine the most likely missing entities or relations, which calls for Knowledge Graph Embedding (KGE) models with strong representational capacity and accurate similarity evaluation. Currently, there are two mainstream approaches to KGC: graph neural network (GNN)-based methods and language model (LM)-based methods. GNN-based KGC uses graph neural networks, particularly Graph Convolutional Networks (GCNs), to model the graph structure inherent in knowledge graphs. Multiple studies have shown that using GCNs to generate expressive entity representations, followed by KGE models that capture interactions between entities and relations, improves performance [25,26]. LM-based KGC recasts the problem as a natural-language semantic understanding task, inferring missing nodes and edges from the semantic relationships expressed in text. With the advances of language models in NLP, LM-based KGC has progressively become the mainstream approach.

MedGCN [27] uses a GCN to encode medical entities, including patients, encounters, laboratory tests, and medications, integrating the complex relationships and intrinsic characteristics of these diverse entity types into a heterogeneous graph. A cross-regularization strategy then mitigates overfitting during multitask training by leveraging inter-task interactions. MedGCN learns node embeddings for tasks such as drug recommendation and laboratory test imputation when patient information is missing, and it outperforms traditional methods on two publicly available datasets, NMEDW and MIMIC-III.

Using GCNs to construct knowledge entity graphs enables effective handling of LP and downstream tasks. However, modeling multi-relational graphs with GCNs can over-parameterize the model. Vashishth et al. [26] introduced a composition operation that replaces edge-type-specific parameter matrices with edge-type-specific representation vectors, which addresses this issue and improves the modeling of multi-relational graphs. By representing all relations as weighted combinations of a shared set of basis vectors, the number of parameters needed for the linear transformations is reduced further. Built on these principles, the CompGCN model learns better node and edge representations for LP tasks and achieves outstanding performance on the FB15K-237 [13] and WN18RR [28] datasets.
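The parameter saving from basis decomposition can be illustrated with a small NumPy sketch. It assumes a single shared projection `W`, the subtraction composition $\phi(e, r) = e - r$ (one of the operators CompGCN supports), and hypothetical sizes; the actual CompGCN layer also uses direction-specific weight matrices, so this is a simplification rather than the published layer.

```python
import numpy as np

rng = np.random.default_rng(0)
num_relations, num_bases, dim = 237, 50, 200   # hypothetical sizes

# Each relation vector is a weighted combination of shared basis vectors:
# z_r = sum_b alpha_{r,b} * v_b
bases = rng.normal(size=(num_bases, dim))            # v_b
coeffs = rng.normal(size=(num_relations, num_bases)) # alpha_{r,b}
relation_emb = coeffs @ bases                        # (num_relations, dim)

W = rng.normal(size=(dim, dim)) / np.sqrt(dim)       # one projection shared by all relations

def compose_sub(entity, relation):
    """Subtraction composition: phi(e, r) = e - r."""
    return entity - relation

def message(entity, rel_id):
    """Message passed along an edge of type rel_id."""
    return W @ compose_sub(entity, relation_emb[rel_id])

entity = rng.normal(size=dim)
m = message(entity, 5)

# Parameters: one (dim x dim) matrix per relation vs. bases + coefficients + one shared matrix.
per_relation_matrices = num_relations * dim * dim
basis_params = num_bases * dim + num_relations * num_bases + dim * dim
print(per_relation_matrices, basis_params)
```

The relation-specific information lives in a 50-dimensional coefficient vector per relation instead of a full 200 x 200 matrix, which is the source of the parameter reduction described above.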

GCNs are widely applied to graph-structured data and learn better relation representations by taking entity embeddings into account, providing a foundation for similarity assessment and improving knowledge graph-embedding performance. However, some researchers argue that current GCN-based methods perform far worse than newer KGE models. Through experiments, Zhang et al. [29] found that although using GCNs to process knowledge graphs captures entity representations while accounting for relations among entities, the added computational complexity does not translate into strong performance, because GCNs have limited ability to characterize entity correlation. Accordingly, Zhang et al. replaced the GCN with a lightweight similarity assessment model that aggregates entities with distinct semantics. By evaluating the associations between different semantics more directly, this design improves the performance of KGE models, showing that the advantage of feature representation in KGE does not rely exclusively on GCNs.

In recent years, the rapid advancement of pre-trained language models (PLMs) has brought significant improvements in semantic understanding and entity delineation. As baseline architectures, PLMs have consistently outperformed convolutional neural networks (CNNs) in related studies, offering more effective solutions for semantic representation and similarity modeling across language processing tasks [20]. KG-BERT [30] treats knowledge graph triplets as text sequences and uses BERT's bidirectional modeling to enhance semantic understanding, capitalizing on BERT's ability to capture comprehensive semantics and richer information about entity relationships. KG-BERT takes entity and relation names or descriptions as input and fine-tunes BERT to compute likelihood scores for triplets. This approach addresses challenges in traditional LP tasks, such as one-to-many relationships and heterogeneous graphs, and consequently achieves significant improvements in capturing the interrelationships among entities and relations.
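The linearization step that KG-BERT relies on can be sketched as follows. This is a minimal illustration, assuming whitespace tokenization in place of BERT's WordPiece tokenizer and the KG-BERT convention that head and tail tokens share one segment id while relation tokens use another; `linearize_triplet` is a hypothetical helper, not part of any released code.

```python
def linearize_triplet(head, relation, tail, cls="[CLS]", sep="[SEP]"):
    """Build a KG-BERT-style sequence: [CLS] head [SEP] relation [SEP] tail [SEP].

    Returns the token list and segment ids (0 for head/tail, 1 for relation).
    Whitespace splitting stands in for a real subword tokenizer.
    """
    tokens, segments = [cls], [0]
    for text, seg in ((head, 0), (relation, 1), (tail, 0)):
        words = text.split()
        tokens += words + [sep]
        segments += [seg] * (len(words) + 1)
    return tokens, segments

tokens, segments = linearize_triplet("Bill Gates", "founder of", "Microsoft")
print(tokens)
print(segments)
```

The resulting sequence is what the fine-tuned encoder scores; the [CLS] representation is typically fed to a classifier that outputs the triplet's likelihood.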

LP is an inductive learning task in which understanding the underlying semantics and logical patterns of relationships is particularly crucial. Linearizing triplets into text can obscure these relationship patterns, making it challenging for PLMs to estimate the similarity between linearized relation expressions and entities.

In Transformer-based PLMs such as BERT, contextual encoding is achieved through stacked layers of multi-head self-attention. Each attention head captures a different type of token-level dependency, such as local syntactic relations, global semantic associations, or positional links, allowing the model to integrate heterogeneous signals into a unified contextual representation [31,32]. As training progresses, attention heads that align more closely with task-relevant patterns are reinforced via gradient updates, and the model gradually learns to focus on the token pairs that are most informative for the prediction objective.

From a task-definition perspective, LP with PLMs is typically formulated as a scoring problem over triplets. Given a candidate triplet $(h, r, t)$, a pre-trained language model encodes it into a contextual representation $\mathbf{z}$ and computes a plausibility score $s(h, r, t)$. During inference, the model ranks candidate tail entities by their scores and selects the top-ranked entity as the prediction.
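The ranking step at inference time can be sketched in plain Python. The scores below are hypothetical stand-ins for the PLM outputs $s(h, r, t)$; the helper `rank_of_gold` is illustrative and assumes the common "filtered" protocol, in which other known-true tails are excluded before ranking, and optimistic tie handling (ties do not push the gold entity down).

```python
def rank_of_gold(scores, gold, known_true=frozenset()):
    """1-based rank of the gold tail among candidates (higher score = better).

    Candidates in `known_true` (other correct tails) are skipped, as in the
    filtered evaluation setting. Ties are resolved in the gold entity's favor.
    """
    gold_score = scores[gold]
    rank = 1
    for cand, s in scores.items():
        if cand == gold or cand in known_true:
            continue
        if s > gold_score:
            rank += 1
    return rank

# Hypothetical plausibility scores for (PaulAllen, WorkAt, ?)
scores = {"Microsoft": 0.7, "Apple": 0.9, "IBM": 0.4, "Intel": 0.8}

prediction = max(scores, key=scores.get)           # top-ranked candidate
raw_rank = rank_of_gold(scores, "Microsoft")
filtered_rank = rank_of_gold(scores, "Microsoft", known_true={"Intel"})
print(prediction, raw_rank, filtered_rank)
```

The per-query ranks produced this way are the inputs to the evaluation metrics discussed next.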

In such tasks, model performance is typically evaluated using metrics such as Mean Rank (MR), Mean Reciprocal Rank (MRR), and Hits@K (e.g., Hits@1, Hits@10). These metrics assess how accurately the model ranks candidate answers in entity- or relation-prediction scenarios. However, most existing PLM-based methods linearize triplets into flat textual input, which limits their ability to capture the structural dependencies among entities in knowledge graphs. As a result, these models often struggle to model relational patterns and accurately estimate node-level similarity.
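Given the 1-based rank of the correct entity for each test query, the three metrics reduce to simple averages. The sketch below uses hypothetical ranks; `ranking_metrics` is an illustrative helper rather than code from KGLM.

```python
def ranking_metrics(ranks, ks=(1, 3, 10)):
    """Compute MR, MRR, and Hits@K from 1-based ranks of the correct answers."""
    n = len(ranks)
    out = {
        "MR": sum(ranks) / n,                    # lower is better
        "MRR": sum(1.0 / r for r in ranks) / n,  # higher is better
    }
    for k in ks:
        out[f"Hits@{k}"] = sum(r <= k for r in ranks) / n  # fraction ranked in top k
    return out

result = ranking_metrics([1, 2, 5, 20])   # hypothetical ranks for four test queries
print(result)
```

MR is sensitive to a few very poorly ranked answers, whereas MRR and Hits@K are dominated by the top of the ranking, which is why papers usually report all of them together.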

In response to this challenge, Bohua Peng et al. [33] designed Bi-Link, an LP framework that combines contrastive learning with BERT. The framework strengthens probabilistic syntactic cues and improves how the model learns similarity between entities, achieving strong generalization on LP tasks.

Yao et al. [34] treated knowledge graph triples as textual sequences and leveraged pre-trained language models such as LLaMA-7B and ChatGLM-6B. Specifically, they used the entity and relation descriptions of each triple as prompts and used the model-generated responses to perform link prediction.

He et al. [35] proposed MoCoSA, a momentum contrastive learning framework for knowledge graph completion based on structure-enhanced pre-trained language models. This method enables the PLM to perceive structural information through an adaptive structure encoder. To improve training efficiency, they introduce momentum-based complex negative sampling and intra-relation negative sampling strategies.

To enhance the ability of PLM to learn entity similarity, Jason Youn et al. [36] considered the influence of internal encoding within the KG on similarity assessment. Building upon KG-BERT, a new entity/relation-embedding layer was introduced to enhance the model’s ability to learn entity similarity. This layer leveraged an additional PLM specifically designed to differentiate between different entity and relation types, reducing the similarity between distinct entity and relation types. As a result, the model demonstrated improved learning capacity for the structural characteristics of the KG. The designed KGLM, after being fine-tuned using standard procedures, achieved state-of-the-art performance on popular KGC public datasets such as WN18RR.

The integration of PLMs and KGs has shown excellent performance in tasks such as question answering [37], reasoning [38], and recommendation [39]. Further enhancing the capacity of PLMs to learn entity–relation similarity can therefore improve their performance on such language-modeling tasks.

To address this issue, this paper introduces a lightweight Bi-level Routing Attention (BRA) module on top of the KGLM framework to enhance the structural modeling capabilities of PLMs. The proposed module is designed to selectively reinforce structurally relevant token interactions while maintaining compatibility with the underlying PLM backbone, thereby improving the model’s capacity to distinguish complex entity–relation semantics.

3. Model

3.1. Framework

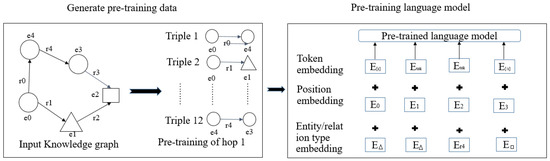

KG-BERT is a fine-tuning paradigm for KGs that uses the BERT-base model as its foundation and models the knowledge graph by taking entity descriptions and entity relationships as inputs. Building on KG-BERT, Youn et al. combined it with the stRA model and devised entity/relation-type (ER-type) embeddings. As depicted in Figure 3, these embeddings undergo further pretraining so that existing pre-trained models can learn the intrinsic relationships within the knowledge graph.

As shown in Figure 3, KGLM uses a knowledge graph to extract positive and negative triplets and build a pretraining corpus. It then pretrains with a masked language modeling objective, adding an extra embedding layer for entity and relation types on top of the backbone model (RoBERTa in this example). The entity/relation-embedding scheme described here corresponds to KGLM, the finest-grained version, in which each entity and relation type is treated as unique.

Figure 3.

Generation of the pretraining corpus and the KGLM model.

Training the ER-type layer yields the ER-type embeddings $E_{\text{ER}}$. These are incorporated into the original BERT inputs: the ER-type output replaces the simple relation (sr) token input used to generate the segment embedding, producing the feature encoding $E$, computed as in Equation (1):

$$E = E_{\text{tok}} + E_{\text{ER}} + E_{\text{pos}} \tag{1}$$

where $E_{\text{tok}}$ represents the token embedding derived by BERT from the triplet (h, r, t), and $E_{\text{pos}}$ denotes the position embedding.
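Equation (1) is an element-wise sum of three embedding lookups, with the ER-type table taking the place of BERT's segment-embedding table. The NumPy sketch below shows this under hypothetical table sizes and randomly initialized tables; how KGLM assigns ER-type ids to individual tokens is an assumption here, and BERT's LayerNorm and dropout after the sum are omitted.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab_size, num_er_types, max_len, hidden = 100, 8, 16, 32   # hypothetical sizes

token_table = rng.normal(size=(vocab_size, hidden))      # E_tok lookup
er_type_table = rng.normal(size=(num_er_types, hidden))  # E_ER lookup (replaces segment table)
position_table = rng.normal(size=(max_len, hidden))      # E_pos lookup

def input_embeddings(token_ids, er_type_ids):
    """E = E_tok + E_ER + E_pos for one linearized triplet (LayerNorm omitted)."""
    token_ids = np.asarray(token_ids)
    er_type_ids = np.asarray(er_type_ids)
    positions = np.arange(len(token_ids))
    return token_table[token_ids] + er_type_table[er_type_ids] + position_table[positions]

# Hypothetical ids: one ER-type id per token (e.g., head, relation, tail spans).
E = input_embeddings([1, 5, 7, 2, 9, 3], [0, 0, 4, 4, 1, 1])
print(E.shape)
```

Because the ER-type table has the same width as the token table, swapping it in for the segment table leaves the rest of the encoder unchanged.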

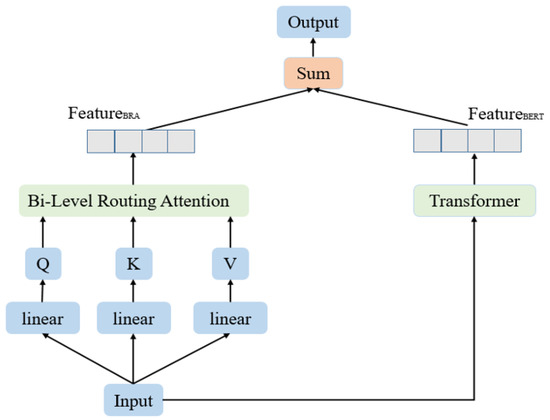

This study posits that enhancing the feature-extraction module during the fine-tuning phase, particularly by improving the network's capacity to single out highly similar features, strengthens the semantic discrimination capability of link-prediction models, promotes stable convergence, and improves overall prediction accuracy. To this end, we extend the baseline KGLM framework by incorporating a task-specific Bi-level Routing Attention (BRA) module into the fine-tuning stage. This modification is applied on top of the underlying BERT architecture to capture structurally relevant features more effectively. The overall framework is illustrated in Figure 4.

Figure 4.

Feature-extraction module with BRA.

This paper reconstructs the self-attention mechanism within the standard BERT architecture to enhance its feature-extraction capabilities. The revised feature-extraction module consists of two key components: a standard Transformer block that captures general contextual representations and a BRA module that identifies highly relevant features through a GPU-optimized sorting and retrieval mechanism. BRA-LP is implemented as a plug-in module that operates in parallel with the original self-attention layer rather than replacing it. The Transformer and BRA modules share the same input token representations. The BRA module employs a top-k routing strategy to focus selectively on semantically salient tokens and compute their attention scores, producing a feature output denoted as $O_{\text{BRA}}$. This output is then fused with the standard Transformer output, $O_{\text{trans}}$, via element-wise summation, enhancing the contextual representation without introducing additional dimensional transformations or architectural disruptions. The design maintains full compatibility with the BERT backbone and can be integrated directly into downstream fine-tuning pipelines. Moreover, the BRA module's routing mechanism is designed explicitly for GPU acceleration, which improves training efficiency and reduces resource consumption.

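The input to the module is a contextualized token representation, as shown in Equation (2):

$$X \in \mathbb{R}^{L \times C} \tag{2}$$

where L denotes the sequence length and C the embedding dimension. The input is linearly projected to form the query, key, and value matrices, as shown in Equation (3):

$$Q = XW^{q}, \quad K = XW^{k}, \quad V = XW^{v} \tag{3}$$

resulting in $Q, K, V \in \mathbb{R}^{L \times C}$, where $W^{q}, W^{k}, W^{v} \in \mathbb{R}^{C \times C}$ are learnable projection matrices.

Simultaneously, the input is processed through a Transformer encoder to yield $O_{\text{trans}}$, as shown in Equation (4):

$$O_{\text{trans}} = \mathrm{Transformer}(X) \tag{4}$$

The final output is produced via element-wise summation of the BRA output $O_{\text{BRA}}$ and the Transformer output, as shown in Equation (5):

$$O = O_{\text{BRA}} + O_{\text{trans}} \tag{5}$$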
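The parallel structure of Equations (2) to (5) can be sketched in NumPy at the level of shapes. This is a simplified, single-head sketch under stated assumptions: the Transformer branch is reduced to one dense attention layer (no residuals, LayerNorm, or feed-forward block), and the BRA branch is approximated by per-token top-k masked attention rather than the full routing procedure described in Section 3.2. All function names are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def dense_attention(Q, K, V):
    """Standard scaled dot-product attention over all L tokens."""
    return softmax(Q @ K.T / np.sqrt(Q.shape[-1])) @ V

def topk_attention(Q, K, V, k):
    """Each query keeps only its k highest-scoring keys; the rest are masked out."""
    scores = Q @ K.T / np.sqrt(Q.shape[-1])
    idx = np.argpartition(-scores, k - 1, axis=-1)[:, :k]   # indices of k largest per row
    masked = np.full_like(scores, -np.inf)
    np.put_along_axis(masked, idx, np.take_along_axis(scores, idx, axis=-1), axis=-1)
    return softmax(masked) @ V

def projections(rng, C):
    """Hypothetical learnable W^q, W^k, W^v, each of shape (C, C)."""
    return [rng.normal(size=(C, C)) / np.sqrt(C) for _ in range(3)]

rng = np.random.default_rng(0)
L, C = 6, 8
X = rng.normal(size=(L, C))                        # Eq. (2): X in R^{L x C}

Wq, Wk, Wv = projections(rng, C)
Q, K, V = X @ Wq, X @ Wk, X @ Wv                   # Eq. (3): BRA-branch projections
O_bra = topk_attention(Q, K, V, k=3)               # simplified BRA branch

Tq, Tk, Tv = projections(rng, C)                   # separate weights for the Transformer branch
O_trans = dense_attention(X @ Tq, X @ Tk, X @ Tv)  # Eq. (4), one attention layer only

O = O_bra + O_trans                                # Eq. (5): element-wise fusion, shape (L, C)
print(O.shape)
```

Because both branches return an $L \times C$ tensor, the summation in Equation (5) needs no extra projection, which is the compatibility property the text relies on.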

The improved BRA-BERT feature-extraction network proposed in this study replaces the base BERT of KGLM in the fine-tuning stage. The scoring stage uses the same scoring function as KGLM, computed according to Equation (6):

where the inverse score is computed on the inverse version of the triplet, and a weighting coefficient balances the forward and inverse scores.

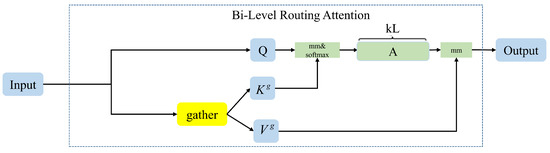

3.2. Bi-Level Routing Attention for Link Prediction

The BRA module is integrated into the existing Knowledge Graph Language Model (KGLM) framework. It refines the attention mechanism by applying a sparse top-k routing strategy to emphasize semantically relevant token representations selectively. The enhanced triplet embeddings are then used in a classification-based scoring function to rank candidate triplets for link prediction. Compared to conventional self-attention, BRA improves the model’s ability to focus on structurally important information and enhances discrimination between true and false triplets.

In the field of computer vision, Zhu et al. designed a BRA module, a lightweight module that is computationally efficient on GPUs. This module serves as a replacement for the self-attention module in the transformer architecture.

The BRA module gathers key–value pairs from the top-k relevant regions, exploiting sparsity to skip irrelevant computations and improve efficiency. It also concentrates attention weight on features within the relevant regions, emphasizing the most relevant correlations.

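The 2D input feature map $X \in \mathbb{R}^{H \times W \times C}$ is divided into $S \times S$ non-overlapping regions, yielding the region-partitioned feature map $X^{r} \in \mathbb{R}^{S^{2} \times \frac{HW}{S^{2}} \times C}$. Linear projections of $X^{r}$ produce three tensors, Q, K, and V. Region-level queries and keys $Q^{r}, K^{r} \in \mathbb{R}^{S^{2} \times C}$ are obtained by averaging Q and K within each region. The region-to-region relationship matrix $A^{r}$ is then computed as shown in Equation (7):

$$A^{r} = Q^{r}\left(K^{r}\right)^{\top} \tag{7}$$

The matrix $A^{r} \in \mathbb{R}^{S^{2} \times S^{2}}$ represents the semantic relevance between pairs of regions. Top-k selection over each row yields the routing index matrix $I^{r} = \mathrm{topkIndex}(A^{r})$, which contains the indices of the most relevant regions. These indices are used to prune the feature map so that attention focuses on the regions with higher relevance. To keep computation efficient, $I^{r}$ is used to gather the fine-grained tokens of the selected regions from the K and V tensors, as shown in Equation (8):

$$K^{g} = \mathrm{gather}(K, I^{r}), \quad V^{g} = \mathrm{gather}(V, I^{r}) \tag{8}$$

where $K^{g}, V^{g} \in \mathbb{R}^{S^{2} \times \frac{kHW}{S^{2}} \times C}$ are the gathered keys and values.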
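The region-level routing of Equations (7) and (8) can be traced in a compact NumPy sketch. It assumes a single attention head, random projection matrices, and hypothetical sizes (an 8 x 8 map split into a 4 x 4 grid); the local context enhancement term of Equation (9) is omitted, and the helper names are illustrative rather than BiFormer's own code.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def region_partition(X, S):
    """Split an (H, W, C) map into an S x S grid: returns (S*S, H*W/(S*S), C)."""
    H, W, C = X.shape
    h, w = H // S, W // S
    return (X.reshape(S, h, S, w, C)
             .transpose(0, 2, 1, 3, 4)
             .reshape(S * S, h * w, C))

def route(Q, K, topk):
    """Eq. (7): average Q and K within each region, build A^r, keep top-k regions."""
    Qr = Q.mean(axis=1)                        # (S*S, C) region-level queries
    Kr = K.mean(axis=1)                        # (S*S, C) region-level keys
    Ar = Qr @ Kr.T                             # (S*S, S*S) region affinity
    Ir = np.argsort(-Ar, axis=-1)[:, :topk]    # top-k region indices per row
    return Ar, Ir

def gather_kv(K, V, Ir):
    """Eq. (8): collect all tokens of the routed regions for each query region."""
    n_regions = Ir.shape[0]
    Kg = K[Ir].reshape(n_regions, -1, K.shape[-1])   # (S*S, k * tokens_per_region, C)
    Vg = V[Ir].reshape(n_regions, -1, V.shape[-1])
    return Kg, Vg

rng = np.random.default_rng(0)
H, W, C, S, topk = 8, 8, 4, 4, 3
X = rng.normal(size=(H, W, C))
Xr = region_partition(X, S)                    # (16, 4, C)
Wq, Wk, Wv = (rng.normal(size=(C, C)) for _ in range(3))
Q, K, V = Xr @ Wq, Xr @ Wk, Xr @ Wv
Ar, Ir = route(Q, K, topk)
Kg, Vg = gather_kv(K, V, Ir)
# Fine-grained attention restricted to the routed regions (LCE term omitted).
out = softmax(Q @ Kg.transpose(0, 2, 1) / np.sqrt(C)) @ Vg   # (16, 4, C)
print(Kg.shape, out.shape)
```

Each query region attends to only k of the 16 regions, so the fine-grained attention cost scales with k rather than with the full map size.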

BiFormer, which is built on the BRA module, incorporates a traditional CNN as its foundational feature-extraction module.

BiFormer computes the final feature matrix as shown in Equation (9):

$$O = \mathrm{Attention}(Q, K^{g}, V^{g}) + \mathrm{LCE}(V) \tag{9}$$

where $\mathrm{Attention}(\cdot)$ is the standard attention computation used in Transformers and $\mathrm{LCE}(\cdot)$ is a local context enhancement term implemented with a depth-wise convolution. This approach quickly prunes the feature map and captures the most closely related information, thereby improving granularity, while also substantially reducing computational cost.

However, the original BRA module was designed primarily for vision tasks, and its region compression step does not transfer directly to natural language processing (NLP). To address this, we propose BRA-LP, a variant tailored to NLP. BRA-LP retains the core idea of routing-based attention from BRA but introduces architectural and operational modifications, such as token-level routing and the removal of convolutional components, to better suit the characteristics of token sequences. The structure of BRA-LP is shown in Figure 5.

Figure 5.

BRA module for LP.

The input representations in BRA-LP follow the standard Transformer format: linear projections produce $Q, K, V \in \mathbb{R}^{n \times l \times c}$, where n denotes the number of attention heads, l the sequence length (number of tokens), and c the hidden dimension. Because textual inputs lack the spatial scaling properties of images, we remove the original compression step and instead perform routing directly at the token level, treating each token as the analog of a “region”.

We retain the averaging operation over Q and K to produce the routing forms $Q^{r}$ and $K^{r}$, and compute the relation matrix $A^{r}$ with the same bi-level routing mechanism as the original BRA. We then select the top-k relevant positions indexed by $I^{r}$ and expand the tensor dimensions accordingly to gather the token-specific features $K^{g}$ and $V^{g}$. Previous studies have shown that sparser feature representations encode contextual information more effectively [40,41]. Although dimension expansion adds computational overhead, it enhances both the expressive capacity and the sparsity of token representations. Combined with sparse routing, this approach models context efficiently while improving contextual awareness, strengthening the model's ability to capture fine-grained token-level semantic relationships.

For the input features, this study follows the original Transformer module and uses linear transformations to obtain Q, K, and V, where n is the number of attention heads, l the sequence length, and C the number of channels. Considering the differences between NLP and CV tasks, this study does not compress the input tensors: unlike images, NLP input tensors have no scaling characteristics, so compressing them could degrade performance. In the region-to-region routing stage, the averaging operation is still applied to Q and K to obtain $Q^{r}$ and $K^{r}$, and the relation matrix $A^{r}$ is obtained using the same indexing method as the original BRA. When obtaining $K^{g}$ and $V^{g}$, this study retains the dictionary-indexing operation that increases tensor dimensions; although this requires additional computation, the higher dimensionality benefits NLP problems. Finally, the token-to-token attention is computed according to Equation (10):

$$O_{\text{BRA}} = \mathrm{softmax}\left(\frac{Q\,(K^{g})^{\top}}{\sqrt{C}}\right)V^{g} \tag{10}$$

where softmax normalizes the attention scores and $\sqrt{C}$ is a scaling factor that keeps the dot products from saturating the softmax, which would otherwise cause vanishing gradients. When computing the final attention, the output is reduced back to the same dimensionality as the input, so the temporary dimension increase does not significantly increase the number of model parameters while helping improve overall performance. Because convolutions are not necessarily optimal for NLP tasks, this study does not use them to process the original tensors and instead relies on the original Transformer module.
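A minimal NumPy sketch of token-level routing with dimension expansion and Equation (10) follows. Two points are assumptions rather than details confirmed by the text: because each token is its own "region", a per-region average would be the identity, so the sketch averages Q and K over the head axis to obtain $Q^{r}$ and $K^{r}$; and the scaling uses the per-head width c. The function name `bra_lp_attention` is hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def bra_lp_attention(Q, K, V, topk):
    """Token-level routing attention. Q, K, V: (n_heads, l, c). Returns (n_heads, l, c)."""
    n, l, c = Q.shape
    # Routing: ASSUMPTION, averaging over heads gives one routing vector per token.
    Qr = Q.mean(axis=0)                         # (l, c)
    Kr = K.mean(axis=0)                         # (l, c)
    Ar = Qr @ Kr.T                              # (l, l) token-to-token relevance
    Ir = np.argsort(-Ar, axis=-1)[:, :topk]     # (l, k) most relevant tokens per query
    # Gather with dimension expansion: (n, l, c) -> (n, l, k, c)
    Kg = K[:, Ir, :]
    Vg = V[:, Ir, :]
    # Eq. (10): each query attends only to its k gathered keys/values.
    scores = np.einsum("nlc,nlkc->nlk", Q, Kg) / np.sqrt(c)
    weights = softmax(scores, axis=-1)
    out = np.einsum("nlk,nlkc->nlc", weights, Vg)   # back to the input shape
    return out, Ir

rng = np.random.default_rng(0)
n_heads, l, c = 2, 5, 4
Q, K, V = (rng.normal(size=(n_heads, l, c)) for _ in range(3))
out, Ir = bra_lp_attention(Q, K, V, topk=3)
print(out.shape, Ir.shape)
```

The intermediate `Kg` and `Vg` tensors carry an extra k axis (the temporary dimension increase), but the einsum contracts it away, so the output matches the input shape and no parameters are added by the gather itself. With `topk` equal to the sequence length, the result reduces to ordinary dense multi-head attention.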

Recent studies have shown that Transformer-based architectures, particularly self-attention mechanisms, outperform convolutional neural networks (CNNs) in a wide range of natural language processing (NLP) tasks [42,43]. Therefore, this work adopts BERT/Transformer rather than CNNs as the fundamental feature-extraction module. This architecture enhances semantic modeling capabilities while avoiding the noise introduced by convolutional operations, thereby addressing the limited adaptability of the original BRA module to NLP tasks.

The final output is obtained as shown in Equation (11):

Moreover, the proposed design adds only marginal parameter overhead (approximately one million parameters), and all of its operations are implemented within BERT's original attention mechanism. This preserves model reusability and stable convergence and facilitates integration with existing pre-trained language models.

4. Experiment

4.1. Datasets

In this paper, the proposed method was evaluated on two benchmark datasets: WN18RR [28] and FB15K-237. WN18RR is a subset of the WN18 dataset derived from WordNet, an extensive English lexical database that captures semantic relationships between words; it removes the inverse relations of WN18 so that test triplets cannot be trivially inferred from the training set.

FB15K-237, on the other hand, is a refined subset extracted from Freebase. It improves on the original FB15K dataset by removing near-duplicate and inverse relations, whose test triplets could otherwise be predicted simply by looking up their inverses in the training set, thereby providing a more realistic assessment of a model’s generalization capability.

As shown in Table 1, FB15K-237 comprises approximately 14,505 entities and 237 relation types, with a total of around 310,000 triplets across the training, validation, and test splits. WN18RR includes approximately 40,943 entities and 11 semantic relations, with a total of about 93,903 triplets.

Table 1.

Comparison of dataset statistics between WN18RR and FB15K-237.

Due to hardware limitations, this study adopted slightly modified configurations compared to the original KGLM model. To evaluate the applicability of the proposed method, BERT-LARGE was used as the encoder backbone for the WN18RR dataset, with experiments conducted on two NVIDIA RTX 3090 GPUs.

4.2. Parameters and Setup

For the FB15K-237 dataset, experiments were conducted using two different architectures: RoBERTa and BERT-LARGE. The RoBERTa-based model was trained on two A100 GPUs, while the BERT-LARGE-based model was trained on two RTX 3090 GPUs. Both RoBERTa and BERT-LARGE consist of 24 Transformer layers, with a hidden size of 1024 and 16 attention heads.

During the sample generation phase, we used a fixed random seed (seed = 530) to ensure consistency in negative sampling and data shuffling. This allowed us to minimize the impact of random variation across different runs and enhance the reproducibility of the training and evaluation process.
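A common way to fix the seed for both data shuffling and negative sampling is to seed every random number generator the pipeline touches. The helper below is a hypothetical sketch, not taken from the KGLM code; it seeds Python's `random`, NumPy, and, if installed, PyTorch, using the seed value reported above as its default.

```python
import random

import numpy as np


def set_seed(seed: int = 530) -> None:
    """Seed the random number generators used for shuffling and negative sampling."""
    random.seed(seed)
    np.random.seed(seed)
    try:
        import torch  # optional dependency; skipped if PyTorch is not installed

        torch.manual_seed(seed)
        torch.cuda.manual_seed_all(seed)  # silently ignored when CUDA is unavailable
    except ImportError:
        pass
```

Seeding alone does not make every GPU kernel deterministic, so small run-to-run differences can remain on GPU hardware even with a fixed seed.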

The hyperparameter settings for the pretraining phase are shown in Table 2:

Table 2.

Pretraining parameter settings with WN18RR&FB15K-237.

During the pretraining phase, we used a learning rate of 5 × 10⁻⁵, a batch size of 32, 32 epochs (WN18RR), and a maximum sequence length of 143. In the fine-tuning phase, we used a learning rate of 1 × 10⁻⁶, a batch size of 32, and 5 epochs.

The fine-tuning hyperparameter settings are shown in Table 3 and Table 4:

Table 3.

Fine-tuning parameter settings with WN18RR.

Table 4.

Fine-tuning parameter settings with FB15K-237.

The following key parameters were used in our task configuration:

- model type = sequence-classification: This setting indicates that each triplet is linearized into a natural language-like sentence and fed into a pre-trained language model for binary classification, determining whether the triplet is valid or not.

- negative train corrupt mode = corrupt-one: In this negative sampling strategy, only the head or the tail entity of each training sample is replaced to generate a negative triplet, rather than corrupting both sides. This approach reduces redundancy and improves training efficiency.

- entity relation type mode: This parameter controls whether entity and relation type information is included in the input:
  - When set to 0, no type information is used. The model relies solely on the surface names of entities and relations for reasoning.
  - When set to 1, explicit type prompts for entities and relations are included in the input sequence (e.g., via hard tokens or soft embeddings), enhancing semantic constraints and generalization ability.
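To make the sequence-classification setting concrete, the sketch below shows one hypothetical way to linearize a triplet into a BERT-style input, with and without hard-token type prompts. The `[CLS]`/`[SEP]` layout follows the usual BERT input convention, but the function name, the bracketed prompt format, and the type labels are assumptions for illustration; KGLM's own implementation may instead inject type information as learned (soft) embeddings.

```python
from typing import Optional


def linearize_triplet(head: str, relation: str, tail: str,
                      type_mode: int = 0,
                      head_type: Optional[str] = None,
                      tail_type: Optional[str] = None) -> str:
    """Turn (h, r, t) into one input string for a binary validity classifier.

    type_mode 0 uses only surface names; type_mode 1 prepends hypothetical
    hard-token type prompts to the entity and relation names.
    """
    if type_mode not in (0, 1):
        raise ValueError("type_mode must be 0 or 1")
    if type_mode == 1:
        head = f"[{head_type or 'entity'}] {head}"
        tail = f"[{tail_type or 'entity'}] {tail}"
        relation = f"[relation] {relation}"
    return f"[CLS] {head} [SEP] {relation} [SEP] {tail} [SEP]"
```

For example, `linearize_triplet("dog", "hypernym", "canine")` yields `[CLS] dog [SEP] hypernym [SEP] canine [SEP]`, which a classifier head then labels as valid or invalid.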

To train the model to distinguish plausible from implausible links, this paper adopts a negative sampling strategy inspired by KGLM. In the context of knowledge graph completion, negative samples are artificially constructed triplets assumed to be false. By contrasting them with positive triplets, the model learns discriminative representations for link prediction.

Specifically, for each positive triplet (h, r, t), we generate a total of ten negative triplets by independently corrupting the head and the tail entity five times each, following the corrupt-one setting. Treating both positions as candidates for replacement enhances the model’s ability to capture structural variation.

We construct five negative samples of the form (h′, r, t) by replacing the head entity and five of the form (h, r, t′) by replacing the tail entity. The corrupted entities h′ and t′ are sampled uniformly from the set of all entities in the knowledge graph, excluding any replacement that would yield a known positive triplet, in line with the principle of filtered negative sampling. This procedure is consistent with the implementation described in the original KGLM paper.
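The filtered corrupt-one procedure above can be sketched in plain Python. This is an illustrative sketch, not the KGLM code: the function name and the `max_tries` guard are hypothetical, `known` is assumed to hold every positive triplet that should be filtered out, and sampling without repeats within each side is an assumption the text does not state.

```python
import random
from typing import List, Optional, Set, Tuple

Triple = Tuple[str, str, str]


def corrupt_one(triple: Triple, entities: List[str], known: Set[Triple],
                n_per_side: int = 5, rng: Optional[random.Random] = None,
                max_tries: int = 10_000) -> List[Triple]:
    """Filtered corrupt-one negative sampling (illustrative sketch).

    Returns n_per_side head-corrupted triples (h', r, t) followed by
    n_per_side tail-corrupted triples (h, r, t'). Replacement entities are
    drawn uniformly from `entities`; known positives and repeats are rejected.
    """
    rng = rng if rng is not None else random.Random(530)
    h, r, t = triple
    negatives: List[Triple] = []
    for side in ("head", "tail"):
        picked: Set[Triple] = set()
        tries = 0
        while len(picked) < n_per_side:
            tries += 1
            if tries > max_tries:
                raise ValueError(f"could not find {n_per_side} valid {side} corruptions")
            e = rng.choice(entities)  # uniform over all entities
            cand = (e, r, t) if side == "head" else (h, r, e)
            if cand == triple or cand in known or cand in picked:
                continue  # filtered setting: never emit a true or repeated triple
            picked.add(cand)
            negatives.append(cand)
    return negatives
```

Passing an explicitly seeded `random.Random` makes the generated negatives identical across runs, which matches the fixed-seed setup described in Section 4.2.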

Because LP models feed downstream applications that consume a ranked list of candidates, the accuracy of the top-ranked predictions must be evaluated. LP tasks are therefore assessed using three evaluation metrics: Mean Rank (MR), Mean Reciprocal Rank (MRR), and Hits@N.

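Mean Rank (MR) is defined in Equation (12):

$$\mathrm{MR} = \frac{1}{|S|} \sum_{i=1}^{|S|} \mathrm{rank}_i \quad (12)$$

where $\mathrm{rank}_i$ is the rank of the $i$-th positive triple among its corrupted versions, $S$ is the processed test set for LP tasks, and $|S|$ is the number of positive test triples. A lower MR indicates better model performance.

MRR uses the reciprocal rank $1/\mathrm{rank}_i$ instead of $\mathrm{rank}_i$:

$$\mathrm{MRR} = \frac{1}{|S|} \sum_{i=1}^{|S|} \frac{1}{\mathrm{rank}_i}$$

Therefore, a higher MRR indicates better model performance.

Hits@N is defined in Equation (13):

$$\mathrm{Hits@}N = \frac{1}{|S|} \sum_{i=1}^{|S|} \mathbb{I}\left(\mathrm{rank}_i \le N\right) \quad (13)$$

where $\mathbb{I}(\cdot)$ is the indicator function, which equals 1 if its condition holds and 0 otherwise; common choices are N = 1, 3, and 10. A higher Hits@N indicates better model performance.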
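The three metrics can be computed directly from the per-triple ranks. The sketch below is a hypothetical helper, not part of KGLM; it assumes that higher scores mean "more plausible" and breaks ties pessimistically (a negative with the same score as the positive is ranked ahead of it), which is one of several tie-breaking conventions in use.

```python
from typing import Dict, Iterable, Sequence


def rank_of_positive(pos_score: float, neg_scores: Iterable[float]) -> int:
    """1-based rank of the positive triple among its corrupted versions.

    Higher scores mean "more plausible"; ties count against the positive.
    """
    return 1 + sum(1 for s in neg_scores if s >= pos_score)


def ranking_metrics(ranks: Sequence[int], ns: Sequence[int] = (1, 3, 10)) -> Dict[str, float]:
    """MR (Eq. 12), MRR, and Hits@N (Eq. 13) over the test set S."""
    if not ranks:
        raise ValueError("ranks must be non-empty")
    size = len(ranks)  # |S|
    metrics = {
        "MR": sum(ranks) / size,
        "MRR": sum(1.0 / rank for rank in ranks) / size,
    }
    for n in ns:
        metrics[f"Hits@{n}"] = sum(1 for rank in ranks if rank <= n) / size
    return metrics
```

For ranks [1, 2, 4, 20], this gives MR = 6.75, MRR = 0.45, Hits@1 = 0.25, Hits@3 = 0.5, and Hits@10 = 0.75, illustrating how a single poorly ranked triple inflates MR far more than it lowers MRR.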

4.3. Analysis

During the ablation study, experiments were conducted on the WN18RR dataset to evaluate the effectiveness of the BRA module in improving model performance. Additionally, the impact of varying Top-K values within the BRA module on the model’s performance was further examined. The corresponding experimental results are presented in the following tables.

Table 5 compares the results of different Top-K settings in BRA on the WN18RR dataset.

Table 5.

Ablation and Top-K comparison results of BRA on WN18RR.

All experiments use KGLM_BERT-LARGE as the baseline model. KGLM-BRA (Top-K) denotes the results of KGLM_BERT-LARGE with the BRA module under different Top-K settings.

Table 5 presents the impact of different Top-K feature retention settings within the Bi-Level Routing Attention (BRA) module on link-prediction performance. The results show that the choice of Top-K plays a critical role in the model’s effectiveness.

When the number of retained features is small (e.g., ), model performance drops significantly—Hits@1 is only 0.05, MRR is 0.196, and MR increases to 235. This suggests that excessive filtering leads to the loss of critical relational information, weakening the model’s semantic understanding and prediction capability.

As Top-K increases to , the model exhibits substantial performance gains, with Hits@10 reaching 0.60 and MRR rising to 0.32. This suggests that retaining a sufficient number of relevant features enables the model to capture structural and relational patterns better, thereby enhancing prediction accuracy.

However, when Top-K is further increased to 10, the performance no longer improves and slightly degrades—Hits@1 drops from 0.192 to 0.184, MRR decreases from 0.324 to 0.320, and MR increases to 94.32. This decline may be attributed to the introduction of redundant or noisy features, which negatively affect the model’s generalization.

Overall, these results confirm that a proper balance must be maintained between information sufficiency and redundancy. A value of Top-K that is too small may cause information loss, while a value that is too large may introduce noise and harm performance.

To further validate the effectiveness of the BRA module, an ablation study was conducted on the WN18RR dataset, using KGLM as the baseline model with BERT-LARGE as the encoder. In the experimental setup, the original BERT encoder was replaced with the proposed BRA-LP module while keeping all other hyperparameters unchanged.

The results show that incorporating BRA improves MRR and MR overall, with particularly notable gains in Hits@10 and Hits@5. Although Hits@1 and Hits@3 decrease slightly, the gains at larger cutoffs suggest that BRA improves the model’s ability to separate positive from negative samples at a broader ranking level, increasing the probability that the correct entity appears among the top candidates.

It is also worth noting that if Top-K is set too small, the model may overemphasize relational information while neglecting other contextual cues, which can negatively impact top-ranked predictions (e.g., Top-1 and Top-3). Therefore, careful tuning of the Top-K parameter is essential for maximizing the effectiveness of the BRA module.

This paper compares the parameters and computational costs between KGLM and KGLM-BRA on BERT-LARGE (WN18RR), as shown in Table 6:

Table 6.

Comparison of parameters and computational costs between KGLM and KGLM-BRA on BERT-LARGE (WN18RR).

As shown in Table 6, our proposed module is built on the Transformer architecture and incorporates dimension-expansion operations. As a result, with the original hyperparameter settings retained, the model’s FLOPs increase noticeably, to approximately three times those of the baseline model. However, thanks to our structural simplifications (e.g., removing the tensor scaling, i.e., compression, and the convolution operations of the original BRA), the module does not significantly increase training time, and the total number of model parameters increases by only about 1 million.

Although the increase in FLOPs is substantial, the improvements in overall performance metrics (such as MRR and Hits@K) remain relatively modest. This can be attributed to several factors. First, the pre-trained backbone models used in our study (e.g., BERT-large and RoBERTa) already exhibit strong baseline performance, so further improvements are subject to diminishing returns. Second, the BRA module is primarily designed to enhance semantic representation and relation modeling, aiming to improve global ranking metrics (e.g., Hits@10) rather than Top-1 accuracy specifically. Third, most mainstream evaluation metrics focus on final prediction outputs and may not fully capture the benefits of refining the attention structure in intermediate layers. In future work, we plan to explore structural optimization techniques such as low-rank approximation, sparse attention, and dynamic routing mechanisms to reduce computational complexity while maintaining performance, thereby improving the method’s deployability and cost-effectiveness in real-world applications.

To further verify the generalization and adaptability of the BRA module, we conducted comparative experiments on the FB15K-237 dataset using two different backbones, BERT-LARGE and RoBERTa (KGLM_BERT-LARGE and KGLM_RoBERTa). The results are presented in Table 7.

Table 7.

Performance comparison of KGLM and KGLM-BRA with different encoder backbones on FB15K-237.

As shown in Table 7, the proposed method also yields consistent improvements on FB15K-237, complementing the WN18RR results. Specifically, KGLM-BRA outperforms the baseline KGLM on overall ranking metrics such as Hits@10 and MRR with both encoder backbones. For instance, the BRA-enhanced model improves Hits@10 from 0.33 to 0.35 with BERT-large and from 0.441 to 0.449 with RoBERTa, while also reducing the Mean Rank (MR), indicating better global ranking capability.

Although Hits@1 and Hits@3 improve only marginally, and decrease slightly in some cases, this is expected given the already strong performance of the underlying PLMs and the design of the BRA module, which focuses on enhancing global semantic representation rather than optimizing exact Top-1 accuracy.

With the continued development of large-scale language models, we argue that returning a semantically plausible Top-K candidate list—rather than a single best prediction—provides more flexibility for real-world professional applications. This allows downstream modules or domain experts to perform further reasoning, validation, or filtering, which is often more aligned with practical decision-making workflows.

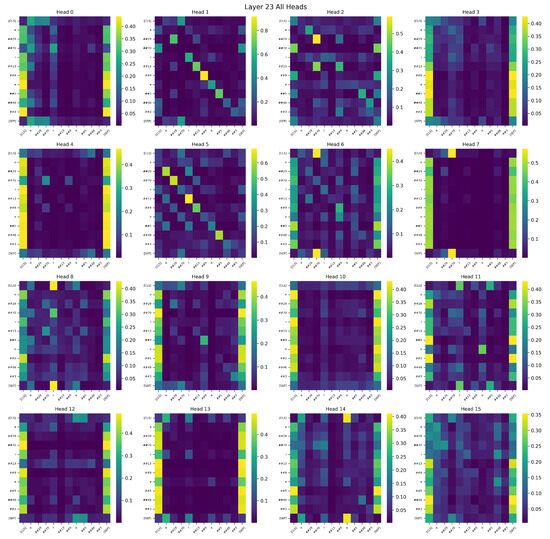

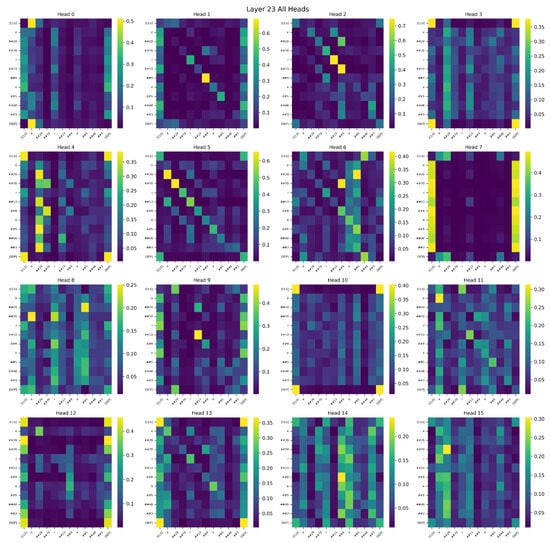

In multi-head attention architectures such as BERT-Large, different attention heads tend to capture distinct types of token-level dependencies, including syntactic, semantic, and positional relationships. During training, heads that are more aligned with task-specific objectives, such as modeling entity–relation or relation–tail interactions in link prediction, tend to receive stronger gradient updates, which leads to progressively sharper and more discriminative attention patterns. Figure 6 and Figure 7 visualize the Layer 23 attention maps for a sample input; in the BRA-enhanced model, several attention heads consistently focus on semantically critical tokens, highlighting the model’s ability to align with predictive features in an interpretable manner.

Figure 6.

Visualization of the attention map in the KGLM model (Layer 23).

Figure 7.

Visualization of the attention map in the KGLM-BRA model (Layer 23).

Furthermore, the proposed BRA module enhances this mechanism by introducing a semantic-aware reordering of feature importance. By encouraging the model to prioritize highly relevant regions early in training, BRA accelerates convergence and strengthens the model’s ability to capture fine-grained relational semantics. As a lightweight and modular enhancement, BRA requires no additional parameters or architectural modifications, yet effectively guides the standard attention mechanism toward semantically meaningful substructures.

The BRA-based model exhibits more structured and diverse attention behaviors in deeper layers. Multiple attention heads (e.g., Heads 1, 2, 5, and 6) form highly focused paths over task-relevant tokens, and the functional differentiation among heads becomes more pronounced. These observations suggest that BRA enhances the model’s semantic representation capacity and interpretability, which may in turn support downstream reasoning performance.

To verify the stability of the experimental results, we conducted multiple independent runs in the fine-tuning stage using different random seeds. The experiments were carried out on the FB15K-237 dataset with RoBERTa as the backbone. Table 8 reports the results, showing that the model maintains relatively consistent performance across random initializations, which supports the robustness and reproducibility of the proposed method.

Table 8.

Performance of KGLM-BRA on FB15k-237 under different random seeds. Results are reported as mean ± standard deviation.

Specifically, we supplemented the original run (seed = 530) with two additional runs using seeds 42 and 3407. As shown in Table 8, MRR varies little across seeds (0.280 ± 0.009), while Hits@10 shows a larger spread (0.442 ± 0.042); overall, the results indicate that the proposed method is reasonably robust to random initialization.
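Mean ± standard deviation summaries such as those in Table 8 can be produced with Python's standard `statistics` module. The text does not state whether the sample (n − 1) or population (n) standard deviation was used; the sketch below assumes the sample version, and the helper names are hypothetical. With only three seeds, the standard deviation itself is a rough estimate.

```python
import statistics
from typing import Dict, List, Tuple


def summarize_runs(runs: List[Dict[str, float]]) -> Dict[str, Tuple[float, float]]:
    """Mean and sample standard deviation (n - 1 denominator) of each metric."""
    if len(runs) < 2:
        raise ValueError("need at least two runs to estimate a standard deviation")
    summary = {}
    for key in runs[0]:
        values = [run[key] for run in runs]
        summary[key] = (statistics.mean(values), statistics.stdev(values))
    return summary


def format_mean_std(mean: float, std: float, digits: int = 3) -> str:
    """Render a (mean, std) pair in the "mean ± std" style used in Table 8."""
    return f"{mean:.{digits}f} ± {std:.{digits}f}"
```

Each element of `runs` is one seed's metric dictionary (for example, the output of a ranking-metric function), so the same helper covers MR, MRR, and every Hits@N column at once.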

Table 9 and Table 10 compare KGLM-BRA with recent state-of-the-art methods on WN18RR and FB15K-237, respectively:

Table 9.

Performance comparison of KGLM-BRA and recent advances on the WN18RR benchmark.

Table 10.

Performance comparison of KGLM-BRA and recent advances on the FB15K-237 benchmark.

As shown in Table 9 and Table 10, our approach is competitive with recent methods on several metrics. Models such as CompGCN (GCN-based) and SimKGC (contrastive and PLM-based) generally perform better on Hits@1 and Hits@3, while PLM-based approaches such as KGLM and StAR show strengths in Hits@10 and Mean Rank (MR), highlighting their superior global ranking capabilities.

Although the overall precision of KGLM-BRA does not yet surpass all state-of-the-art models, particularly in Hits@1 and MRR, it points to a promising direction for enhancing PLM-based architectures for link prediction. Rather than introducing an entirely new model structure, our method focuses on lightweight, modular improvements designed specifically for BERT and RoBERTa backbones. This is achieved without altering the original hyperparameter settings or significantly increasing model size: the parameter count grows by only about one million.

While the computational cost (FLOPs) increases due to token-level routing operations, the training pipeline remains largely unchanged. This allows for improved semantic modeling with minimal additional overhead. Overall, the proposed method provides a practical and extensible solution for improving PLM-based link-prediction systems under constrained parameter budgets, offering a favorable trade-off between efficiency and accuracy.

5. Conclusions

This study explores the potential of leveraging semantic relevance between entities to enhance LP performance, while also alleviating some challenges associated with fine-tuning large language models. To this end, we introduced a lightweight BRA module into the KGLM framework, specifically adapted for LP tasks. Experimental results indicate that the BRA module leads to consistent, albeit modest, performance improvements without altering the original hyperparameter configurations and with only a marginal increase in model parameters. However, these gains come with increased training time and computational overhead.

Due to hardware limitations, we primarily used the BERT-large model, which has fewer parameters, as a substitute for RoBERTa in our experiments. For evaluations involving RoBERTa, hyperparameters were adjusted accordingly. While minor performance differences may arise from architectural discrepancies, the experimental setup was held consistent, showcasing the BRA module’s adaptability and robustness across different pre-trained backbones.

We acknowledge that the performance improvements are relatively limited and may be influenced by random variation. To further substantiate the effectiveness of our approach, future work will include statistical validation through multiple experimental runs, reporting of variance metrics, and significance testing. Moreover, we intend to evaluate the BRA module on additional benchmark datasets and larger, noisier knowledge graphs to better assess its generalizability. Finally, we plan to investigate the effects of varying Top-K settings, explore more efficient feature-selection strategies, and examine the potential of integrating BRA into more powerful backbone models when computational resources permit. These considerations reflect current limitations of our method, and we aim to address them in future work.

Author Contributions

X.Y. and Z.D. provided equipment and proposed the research problems. C.L. was responsible for the overall structure of the paper and provided revision suggestions. Y.W. was in charge of the design, conducted the experiments, and wrote the paper. S.X. was responsible for data management. All authors made meaningful and valuable contributions to revising and proofreading the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

The work was supported by the Anhui Provincial Major Science and Technology Project (Nos. 202303a07020006 and 202304a05020071), the Anhui Provincial Clinical Medical Research Transformation Project (No. 202204295107020004), and the Research Funds of Center for Xin’an Medicine and Modernization of Traditional Chinese Medicine of IHM (No. 2023CXMMTCM012).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

This paper uses the publicly available WN18RR dataset in its experiments. The data presented in this study are available in figshare at https://doi.org/10.6084/m9.figshare.11911272.v1 (Wilson, Huon (2020). WN18RR. figshare. Dataset). These data were derived from the following resource available in the public domain: https://figshare.com/articles/dataset/WN18RR/11911272/1 (accessed on 20 August 2024).

Acknowledgments

ChatGPT (GPT-4o) was used only for spelling correction in this article. No AI tools were used to generate the textual content.

Conflicts of Interest

Author Cong Liu was employed by the company iFLYTEK Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest. The funders were involved in the design of the study; in the collection, analysis, or interpretation of data; in the writing of the manuscript; and in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| BRA | Bi-Level Routing Attention |
| KG | Knowledge Graph |
| KGC | Knowledge Graph Completion |
| LP | Link Prediction |
| PLM | Pre-trained Language Models |
| NLP | Natural Language Processing |
| GNN | Graph Neural Network |
| KGE | Knowledge Graph Embedding |
| LM | Language Models |
| KGLM | Knowledge Graph Language Model |

References

- Wang, X.; Wang, D.; Xu, C.; He, X.; Cao, Y.; Chua, T.S. Explainable reasoning over knowledge graphs for recommendation. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 5329–5336.

- Wang, L.; Xie, H.; Han, W.; Yang, X.; Shi, L.; Dong, J.; Jiang, K.; Wu, H. Construction of a knowledge graph for diabetes complications from expert-reviewed clinical evidences. Comput. Assist. Surg. 2020, 25, 29–35.

- Elhammadi, S.; Lakshmanan, L.V.; Ng, R.; Simpson, M.; Huai, B.; Wang, Z.; Wang, L. A high precision pipeline for financial knowledge graph construction. In Proceedings of the 28th International Conference on Computational Linguistics, Barcelona, Spain, 8–13 December 2020; pp. 967–977.

- Auer, S.; Bizer, C.; Kobilarov, G.; Lehmann, J.; Cyganiak, R.; Ives, Z. DBpedia: A nucleus for a web of open data. In Proceedings of the International Semantic Web Conference, Busan, Republic of Korea, 11–15 November 2007; Springer: Berlin/Heidelberg, Germany, 2007; pp. 722–735.

- Miller, G.A. WordNet: A lexical database for English. Commun. ACM 1995, 38, 39–41.

- McArthur, A.G.; Waglechner, N.; Nizam, F.; Yan, A.; Azad, M.A.; Baylay, A.J.; Bhullar, K.; Canova, M.J.; De Pascale, G.; Ejim, L.; et al. The comprehensive antibiotic resistance database. Antimicrob. Agents Chemother. 2013, 57, 3348–3357.

- Bollacker, K.; Evans, C.; Paritosh, P.; Sturge, T.; Taylor, J. Freebase: A collaboratively created graph database for structuring human knowledge. In Proceedings of the 2008 ACM SIGMOD International Conference on Management of Data, Vancouver, BC, Canada, 10–12 June 2008; pp. 1247–1250.

- Dong, X.; Gabrilovich, E.; Heitz, G.; Horn, W.; Lao, N.; Murphy, K.; Strohmann, T.; Sun, S.; Zhang, W. Knowledge vault: A web-scale approach to probabilistic knowledge fusion. In Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, New York, NY, USA, 24–27 August 2014; pp. 601–610.

- Xiong, W.; Hoang, T.; Wang, W.Y. DeepPath: A reinforcement learning method for knowledge graph reasoning. arXiv 2017, arXiv:1707.06690.

- West, R.; Gabrilovich, E.; Murphy, K.; Sun, S.; Gupta, R.; Lin, D. Knowledge base completion via search-based question answering. In Proceedings of the 23rd International Conference on World Wide Web, Seoul, Republic of Korea, 7–11 April 2014; pp. 515–526.

- Mythrei, S.; Singaravelan, S. Survey on entity linking for domain specific with heterogeneous information networks. Informatologia 2019, 52, 173–184.

- Sun, X.; Yin, H.; Liu, B.; Chen, H.; Meng, Q.; Han, W.; Cao, J. Multi-level hyperedge distillation for social linking prediction on sparsely observed networks. In Proceedings of the Web Conference 2021, Ljubljana, Slovenia, 19–23 April 2021; pp. 2934–2945.

- Bordes, A.; Usunier, N.; Garcia-Duran, A.; Weston, J.; Yakhnenko, O. Translating embeddings for modeling multi-relational data. Adv. Neural Inf. Process. Syst. 2013, 2, 2787–2795.

- Wang, Z.; Zhang, J.; Feng, J.; Chen, Z. Knowledge graph embedding by translating on hyperplanes. In Proceedings of the AAAI Conference on Artificial Intelligence, Quebec City, QC, Canada, 27–31 July 2014; Volume 28.

- Ji, G.; He, S.; Xu, L.; Liu, K.; Zhao, J. Knowledge graph embedding via dynamic mapping matrix. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), Beijing, China, 26–31 July 2015; pp. 687–696.

- Tiddi, I.; Lécué, F.; Hitzler, P. Knowledge Graphs for eXplainable Artificial Intelligence: Foundations, Applications and Challenges; Studies on the Semantic Web; IOS Press: Amsterdam, The Netherlands, 2020.

- Fan, W.; Ma, Y.; Li, Q.; He, Y.; Zhao, E.; Tang, J.; Yin, D. Graph neural networks for social recommendation. In Proceedings of the World Wide Web Conference, San Francisco, CA, USA, 13–17 May 2019; pp. 417–426.

- Zhang, P.; Wei, Z.; Che, C.; Jin, B. DeepMGT-DTI: Transformer network incorporating multilayer graph information for Drug–Target interaction prediction. Comput. Biol. Med. 2022, 142, 105214.

- Bodenreider, O. The unified medical language system (UMLS): Integrating biomedical terminology. Nucleic Acids Res. 2004, 32, D267–D270.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

- Joshi, M.; Chen, D.; Liu, Y.; Weld, D.S.; Zettlemoyer, L.; Levy, O. SpanBERT: Improving pre-training by representing and predicting spans. Trans. Assoc. Comput. Linguist. 2020, 8, 64–77.

- Wang, Y.; Sun, Y.; Ma, Z.; Gao, L.; Xu, Y. A Hybrid Model for Named Entity Recognition on Chinese Electronic Medical Records. Trans. Asian Low-Resour. Lang. Inf. Process. 2021, 20, 1–12.

- Farkh, R.; Oudinet, G.; Deleruyelle, T. Evaluating a Hybrid LLM Q-Learning/DQN Framework for Adaptive Obstacle Avoidance in Embedded Robotics. AI 2025, 6, 115.

- Ubaid, A.; Lie, A.; Lin, X. SMART Restaurant ReCommender: A Context-Aware Restaurant Recommendation Engine. AI 2025, 6, 64.

- Schlichtkrull, M.; Kipf, T.N.; Bloem, P.; Van Den Berg, R.; Titov, I.; Welling, M. Modeling relational data with graph convolutional networks. In The Semantic Web: 15th International Conference, ESWC 2018, Heraklion, Greece, 3–7 June 2018, Proceedings; Springer: Berlin/Heidelberg, Germany, 2018; pp. 593–607.

- Vashishth, S.; Sanyal, S.; Nitin, V.; Talukdar, P. Composition-based multi-relational graph convolutional networks. arXiv 2019, arXiv:1911.03082.

- Mao, C.; Yao, L.; Luo, Y. MedGCN: Medication recommendation and lab test imputation via graph convolutional networks. J. Biomed. Inform. 2022, 127, 104000.

- Wang, B.; Shen, T.; Long, G.; Zhou, T.; Wang, Y.; Chang, Y. Structure-augmented text representation learning for efficient knowledge graph completion. In Proceedings of the Web Conference 2021, Ljubljana, Slovenia, 19–23 April 2021; pp. 1737–1748.

- Zhang, Z.; Wang, J.; Ye, J.; Wu, F. Rethinking graph convolutional networks in knowledge graph completion. In Proceedings of the ACM Web Conference 2022, Virtual, 25–29 April 2022; pp. 798–807.

- Yao, L.; Mao, C.; Luo, Y. KG-BERT: BERT for knowledge graph completion. arXiv 2019, arXiv:1909.03193.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All you Need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc., 2017; Volume 30.

- Clark, K.; Khandelwal, U.; Levy, O.; Manning, C.D. What Does BERT Look at? An Analysis of BERT’s Attention. In Proceedings of the 2019 ACL Workshop BlackboxNLP: Analyzing and Interpreting Neural Networks for NLP. Association for Computational Linguistics, Florence, Italy, 1 August 2019; p. 276.

- Peng, B.; Liang, S.; Islam, M. Bi-Link: Bridging Inductive Link Predictions from Text via Contrastive Learning of Transformers and Prompts. arXiv 2022, arXiv:2210.14463.

- Yao, L.; Peng, J.; Mao, C.; Luo, Y. Exploring large language models for knowledge graph completion. In Proceedings of the ICASSP 2025-2025 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Hyderabad, India, 6–11 April 2025; IEEE: New York, NY, USA, 2025; pp. 1–5.

- He, J.; Liu, J.; Wang, L.; Li, X.; Xu, X. MoCoSA: Momentum contrast for knowledge graph completion with structure-augmented pre-trained language models. In Proceedings of the 2024 IEEE International Conference on Multimedia and Expo (ICME), Niagara Falls, ON, Canada, 15–19 July 2024; IEEE: New York, NY, USA, 2024; pp. 1–6.

- Youn, J.; Tagkopoulos, I. KGLM: Integrating Knowledge Graph Structure in Language Models for Link Prediction. arXiv 2022, arXiv:2211.02744.

- Su, H.; Shen, X.; Zhang, R.; Sun, F.; Hu, P.; Niu, C.; Zhou, J. Improving multi-turn dialogue modelling with utterance rewriter. arXiv 2019, arXiv:1906.07004.

- Jambor, D.; Teru, K.; Pineau, J.; Hamilton, W.L. Exploring the limits of few-shot link prediction in knowledge graphs. arXiv 2021, arXiv:2102.03419.

- Wang, H.; Zhang, F.; Wang, J.; Zhao, M.; Li, W.; Xie, X.; Guo, M. RippleNet: Propagating user preferences on the knowledge graph for recommender systems. In Proceedings of the 27th ACM International Conference on Information and Knowledge Management, Turin, Italy, 22–26 October 2018; pp. 417–426.

- Chen, Y.; Shang, J.; Zhang, Z.; Sheng, J.; Liu, T.; Wang, S.; Sun, Y.; Wu, H.; Wang, H. Mixture of Hidden-Dimensions Transformer. arXiv 2024, arXiv:2412.05644.

- Joshi, M.; Levy, O.; Zettlemoyer, L.; Weld, D. BERT for Coreference Resolution: Baselines and Analysis. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; Association for Computational Linguistics: Stroudsburg, PA, USA, 2019.

- Tay, Y.; Dehghani, M.; Gupta, J.P.; Aribandi, V.; Bahri, D.; Qin, Z.; Metzler, D. Are Pretrained Convolutions Better than Pretrained Transformers? In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), Online, 1–6 August 2021; pp. 4349–4359.

- Hu, D. An introductory survey on attention mechanisms in NLP problems. In Intelligent Systems and Applications: Proceedings of the 2019 Intelligent Systems Conference (IntelliSys) Volume 2; Springer: Berlin/Heidelberg, Germany, 2020; pp. 432–448.

- Wang, Q.; Huang, P.; Wang, H.; Dai, S.; Jiang, W.; Liu, J.; Lyu, Y.; Zhu, Y.; Wu, H. CoKE: Contextualized knowledge graph embedding. arXiv 2019, arXiv:1911.02168.

- Wang, L.; Zhao, W.; Wei, Z.; Liu, J. SimKGC: Simple contrastive knowledge graph completion with pre-trained language models. arXiv 2022, arXiv:2203.02167.

- Xiao, H.; Liu, X.; Song, Y.; Wong, G.Y.; See, S. Complex Hyperbolic Knowledge Graph Embeddings with Fast Fourier Transform. arXiv 2022, arXiv:2211.03635.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).