Automated Deadlift Techniques Assessment and Classification Using Deep Learning

Abstract

1. Introduction

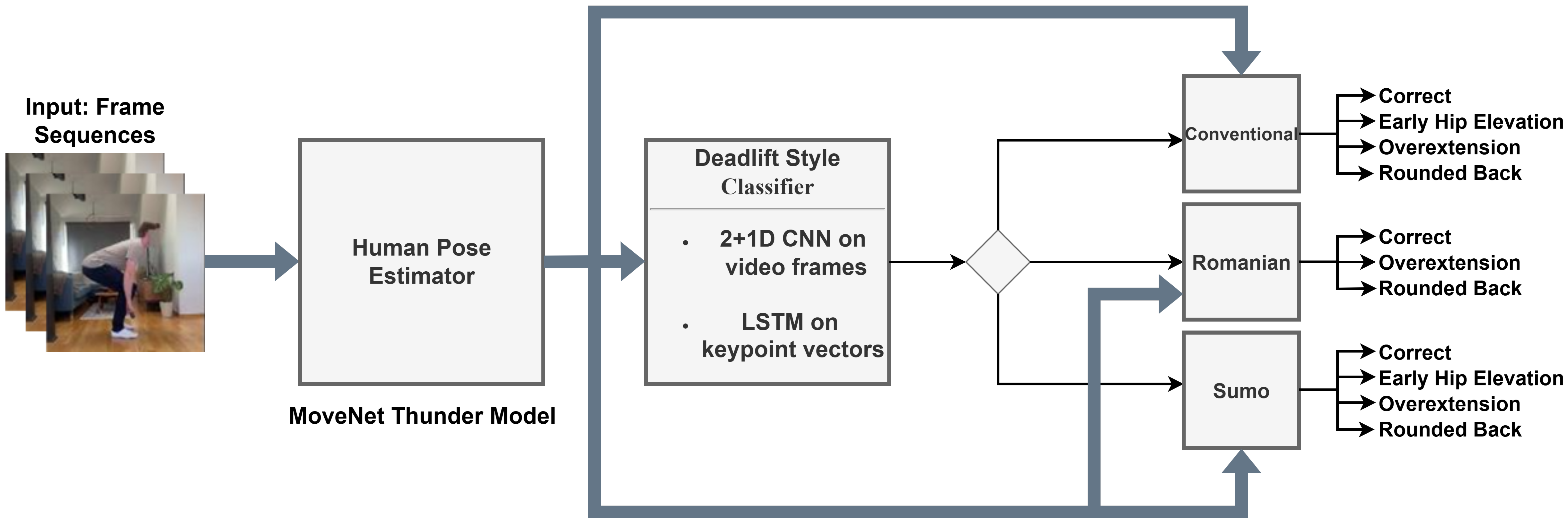

- A custom dataset of deadlift repetitions covering three styles (conventional, sumo, and Romanian) and several execution forms, with consistent annotations and data augmentation.

- An implementation and evaluation of two approaches for classifying deadlift styles and forms: a 2+1D CNN that uses raw videos with keypoint annotations, and an LSTM that uses keypoint data alone.

- Experimental insights into the limited ability of 3D CNNs to generalize, highlighting the effectiveness of a keypoint-based LSTM approach.

- An app that classifies deadlift repetitions in real time and provides visual and text feedback. The same design could extend to other repetition-based exercises.
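The term 2+1D comes from Tran et al. [18], who replace each t × d × d 3D convolution with a 1 × d × d spatial convolution into M_i intermediate channels, followed by a t × 1 × 1 temporal convolution. In their formulation, M_i = ⌊t·d²·N_{i−1}·N_i / (d²·N_{i−1} + t·N_i)⌋, chosen so the factorized block has about as many weights as the full 3D block. The sketch below only illustrates that arithmetic. The helper names are hypothetical, and it does not claim to reproduce the authors' network, whose layer widths are not given here.

```python
def intermediate_channels(t: int, d: int, n_in: int, n_out: int) -> int:
    """M_i from Tran et al.: width that matches a full t x d x d 3D convolution."""
    return (t * d * d * n_in * n_out) // (d * d * n_in + t * n_out)


def conv3d_weights(t: int, d: int, n_in: int, n_out: int) -> int:
    """Weights of a full 3D convolution (biases ignored)."""
    return t * d * d * n_in * n_out


def conv2plus1d_weights(t: int, d: int, n_in: int, n_out: int) -> int:
    """Spatial 1 x d x d conv into M channels, then temporal t x 1 x 1 conv into n_out."""
    m = intermediate_channels(t, d, n_in, n_out)
    return d * d * n_in * m + t * m * n_out


if __name__ == "__main__":
    # Compare a 3 x 3 x 3 kernel for two illustrative channel configurations.
    for n_in, n_out in [(64, 64), (3, 64)]:
        print(n_in, n_out,
              intermediate_channels(3, 3, n_in, n_out),
              conv3d_weights(3, 3, n_in, n_out),
              conv2plus1d_weights(3, 3, n_in, n_out))
```

For 64 → 64 channels this gives M = 144 and exactly 110,592 weights in both designs. For 3 → 64 the floor yields M = 23, so the factorized block has slightly fewer weights (5,037 vs 5,184). Tran et al. motivate the split by the extra nonlinearity inserted between the spatial and temporal convolutions.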

2. Related Work

3. Methodology

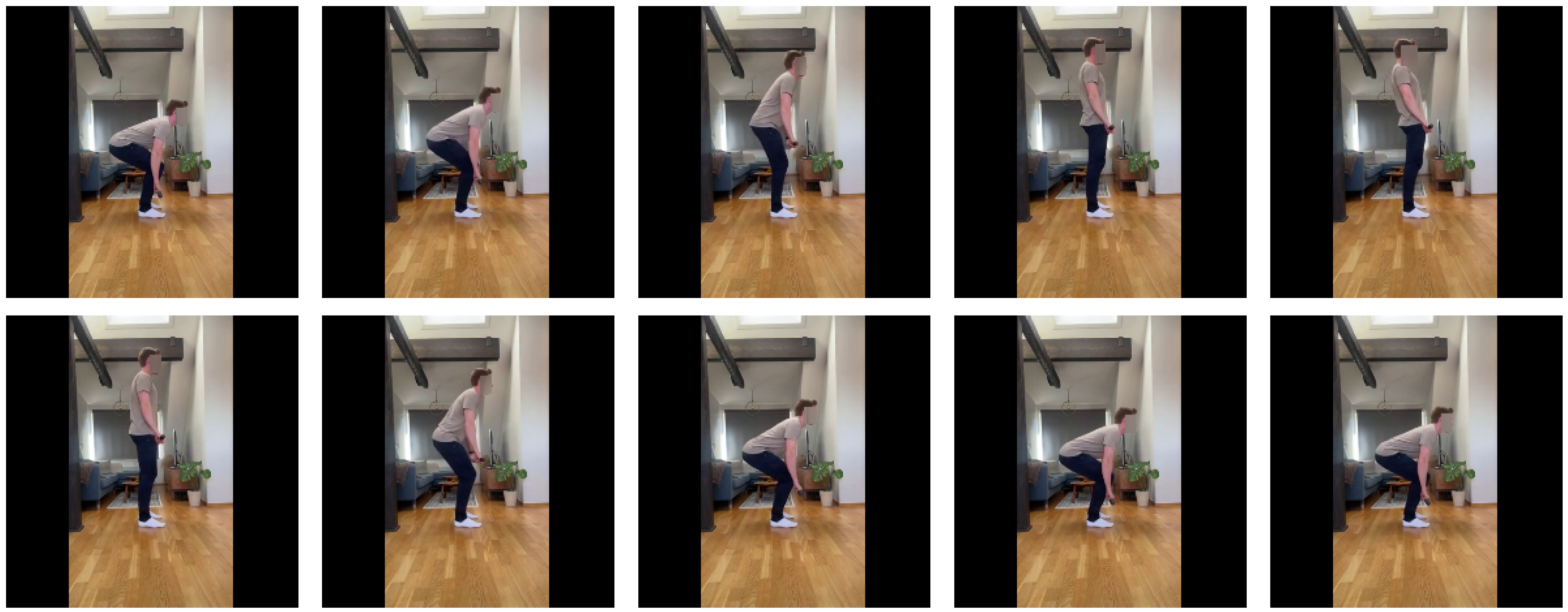

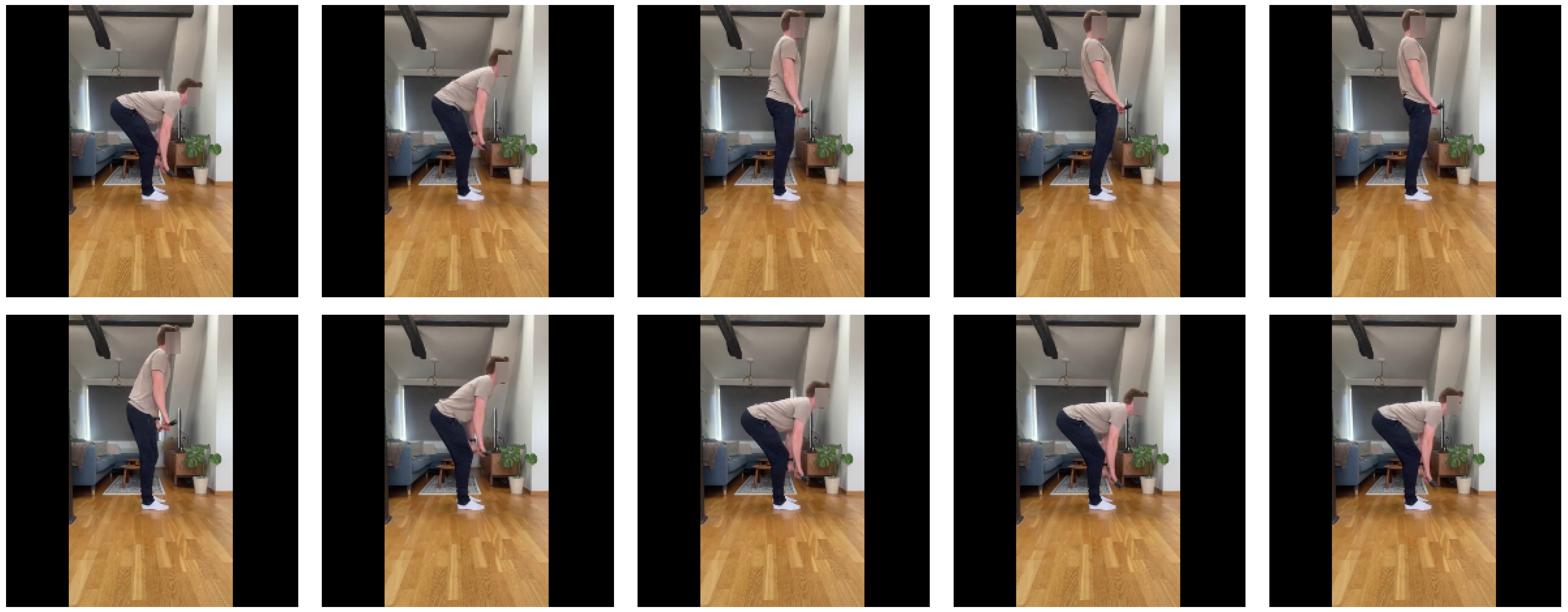

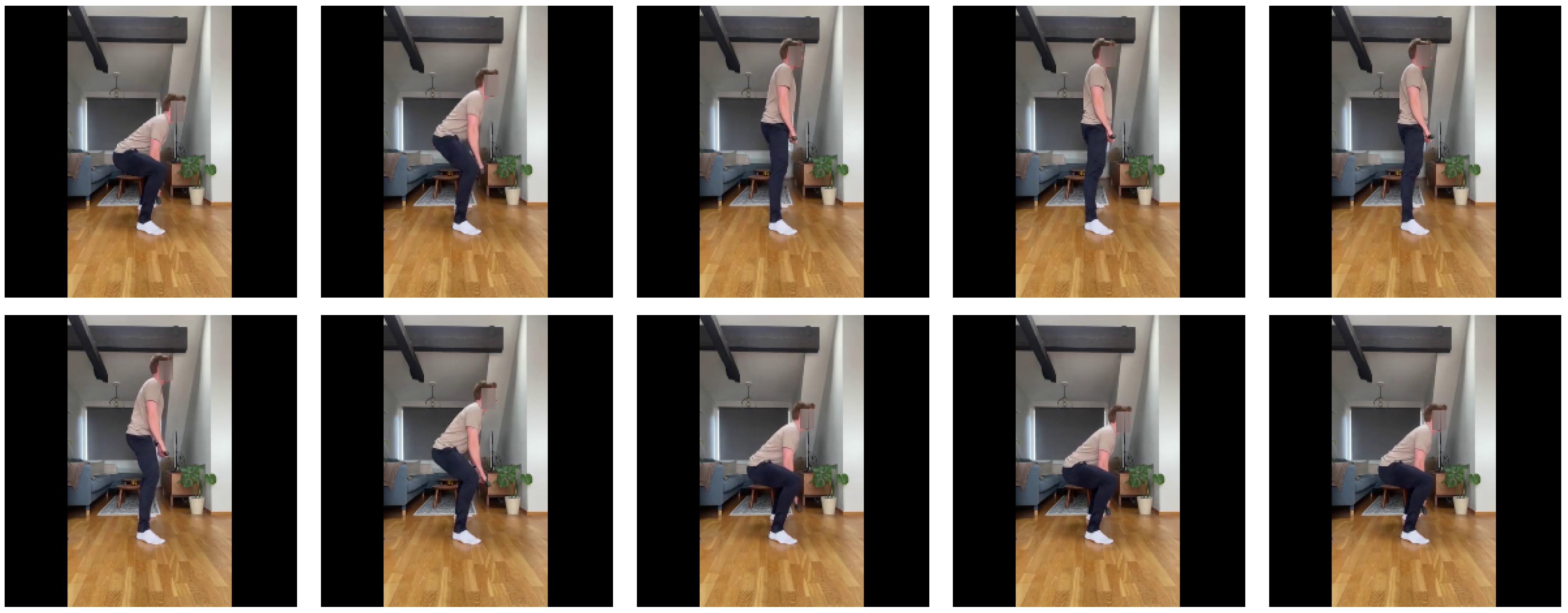

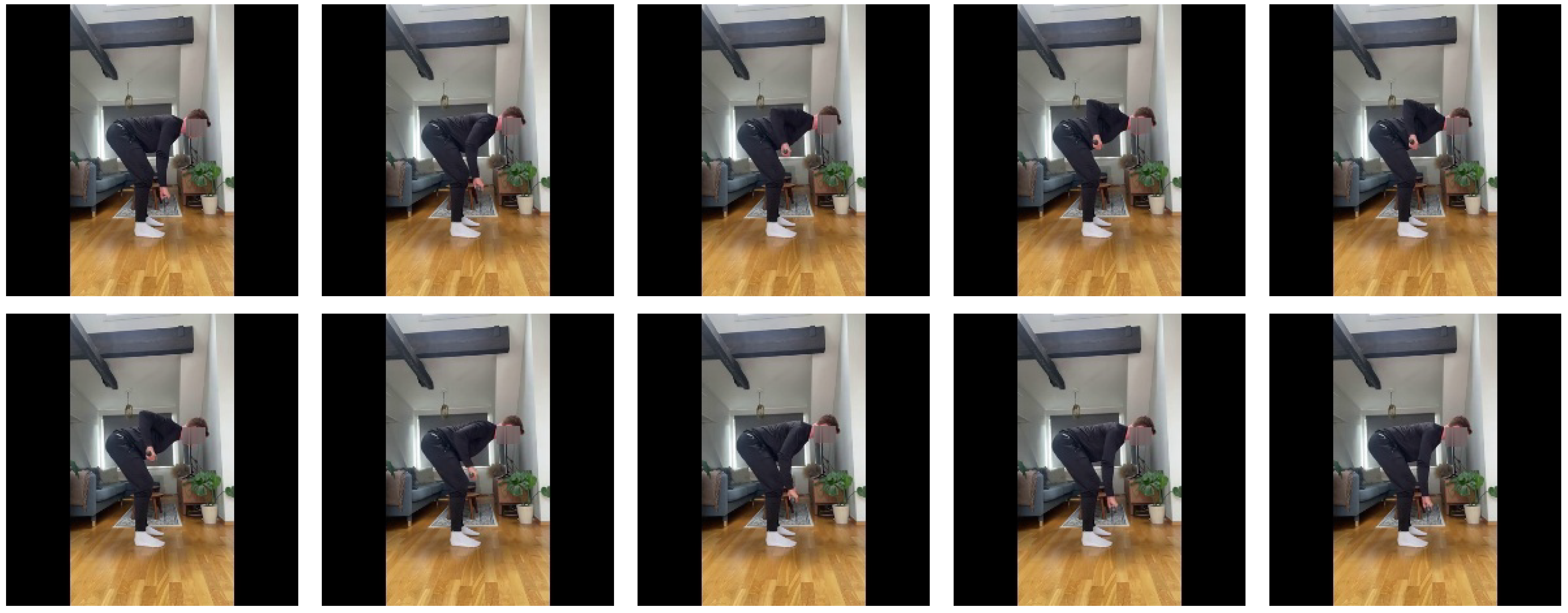

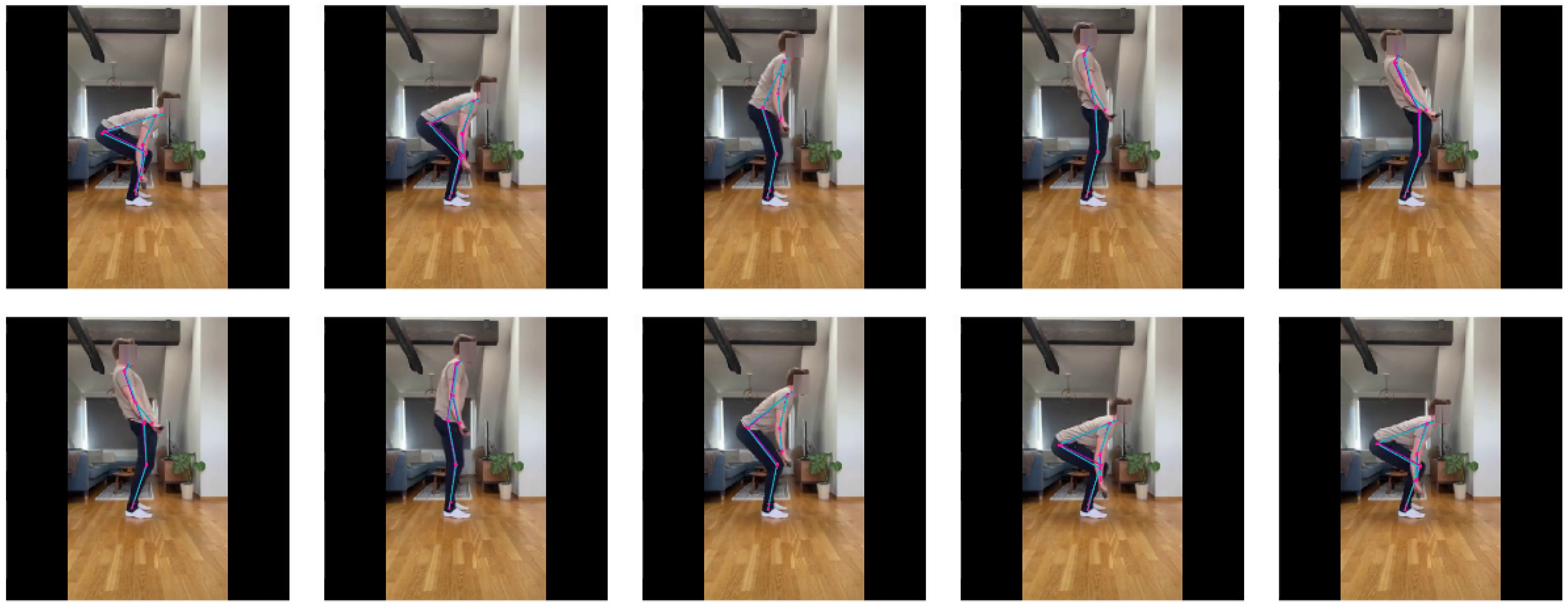

3.1. Data Generation and Preprocessing

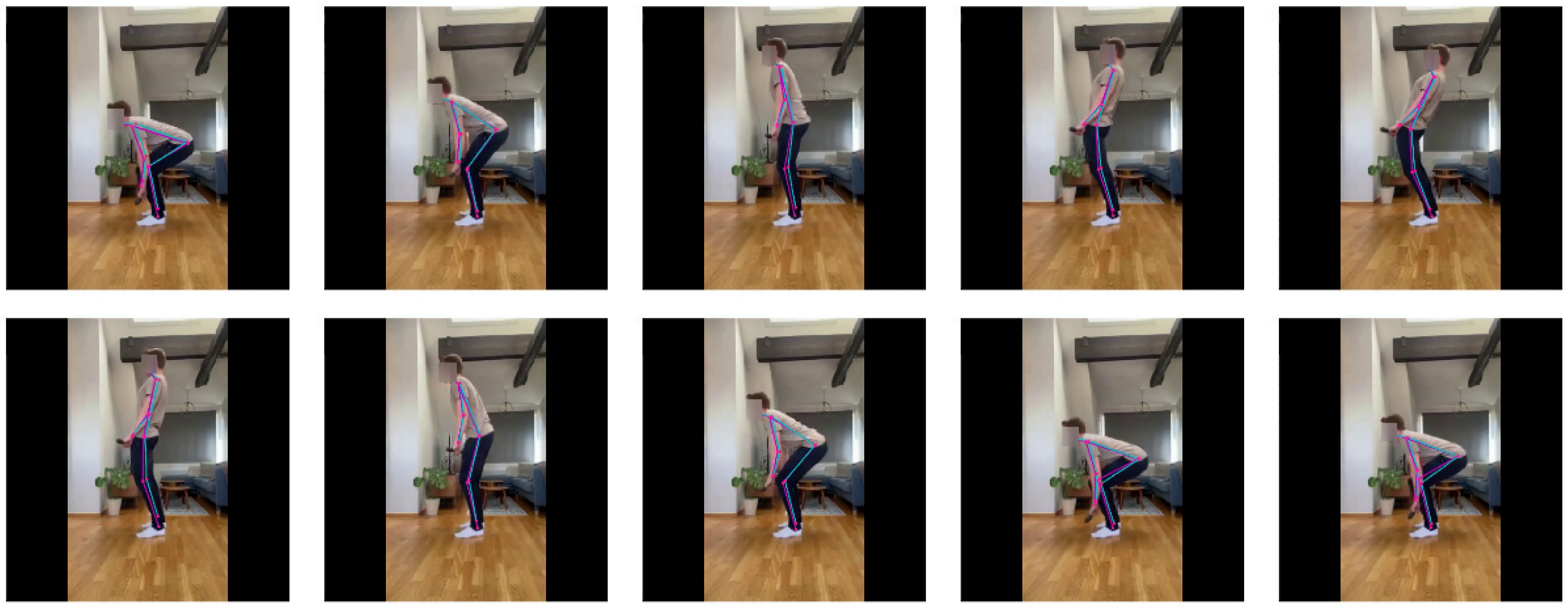

3.2. Feature Generation

- Direct Model Input: The keypoint coordinates can be used directly as input to models such as an LSTM. This removes much of the noise from the background, clothing, and lighting, so the model can concentrate on joint locations, which can improve its ability to generalize across environments.

- Skeletal Representation for CNNs: The keypoint data can also be transformed into a skeletal representation of the individual, which is suitable for use in CNN models. Similar to the first method, this skeletal representation helps reduce noise and directs the model’s attention toward significant joint locations.

- Overlaying on Video: Lastly, the keypoint data can be utilized to overlay a skeletal representation onto the subject within the original video. This technique preserves the context of the video, enabling the model to capture cues that might be overlooked by the human pose estimator.
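The three feature options above can be sketched with NumPy alone. This is a minimal illustration under stated assumptions, not the authors' pipeline. It assumes each frame is a (17, 2) array of (x, y) coordinates in [0, 1] with joints in COCO order (the 17-keypoint layout MoveNet [15] uses). The hip-centred, torso-scaled normalization, the 30-frame target length, linear resampling, and the helper names (`normalize_keypoints`, `resample_sequence`, `lstm_features`, `draw_skeleton`) are hypothetical choices.

```python
import numpy as np

# Assumed COCO-17 joint order; only these indices are referenced directly.
L_SHOULDER, R_SHOULDER, L_HIP, R_HIP = 5, 6, 11, 12
SKELETON_EDGES = [
    (5, 6), (5, 7), (7, 9), (6, 8), (8, 10),   # shoulders and arms
    (5, 11), (6, 12), (11, 12),                # torso
    (11, 13), (13, 15), (12, 14), (14, 16),    # legs
]


def normalize_keypoints(seq):
    """seq: (frames, 17, 2) array of (x, y). Centre on the hip midpoint, scale by torso length."""
    seq = np.asarray(seq, dtype=float)
    hip_mid = (seq[:, L_HIP] + seq[:, R_HIP]) / 2.0
    shoulder_mid = (seq[:, L_SHOULDER] + seq[:, R_SHOULDER]) / 2.0
    torso = np.maximum(np.linalg.norm(shoulder_mid - hip_mid, axis=1), 1e-6)
    return (seq - hip_mid[:, None, :]) / torso[:, None, None]


def resample_sequence(seq, target_len):
    """Linearly interpolate a (frames, ...) array to exactly target_len frames."""
    seq = np.asarray(seq, dtype=float)
    flat = seq.reshape(seq.shape[0], -1)
    src = np.linspace(0.0, 1.0, seq.shape[0])
    dst = np.linspace(0.0, 1.0, target_len)
    out = np.stack([np.interp(dst, src, flat[:, j]) for j in range(flat.shape[1])], axis=1)
    return out.reshape((target_len,) + seq.shape[1:])


def lstm_features(seq, target_len=30):
    """Direct model input: a (target_len, 34) matrix of normalized x, y per joint."""
    return resample_sequence(normalize_keypoints(seq), target_len).reshape(target_len, -1)


def draw_skeleton(frame_kpts, background):
    """Draw limbs for one frame (coords in [0, 1]) onto a copy of background.

    A blank background gives the skeletal representation; a video frame gives the overlay.
    """
    canvas = np.array(background, copy=True)
    h, w = canvas.shape[:2]
    pts = np.clip(np.asarray(frame_kpts, dtype=float), 0.0, 1.0) * [w - 1, h - 1]
    for a, b in SKELETON_EDGES:
        for s in np.linspace(0.0, 1.0, max(h, w)):
            x, y = pts[a] + s * (pts[b] - pts[a])
            canvas[int(round(y)), int(round(x))] = 255
    return canvas
```

Passing `np.zeros((64, 64), np.uint8)` as the background yields the skeletal image for a CNN. Passing a decoded video frame yields the overlay variant. A real implementation would more likely draw thicker, anti-aliased limbs with OpenCV's `cv2.line`.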

3.3. Classification Approach

4. Experimentation and Results

4.1. Experimental Setup

4.2. Results and Discussion

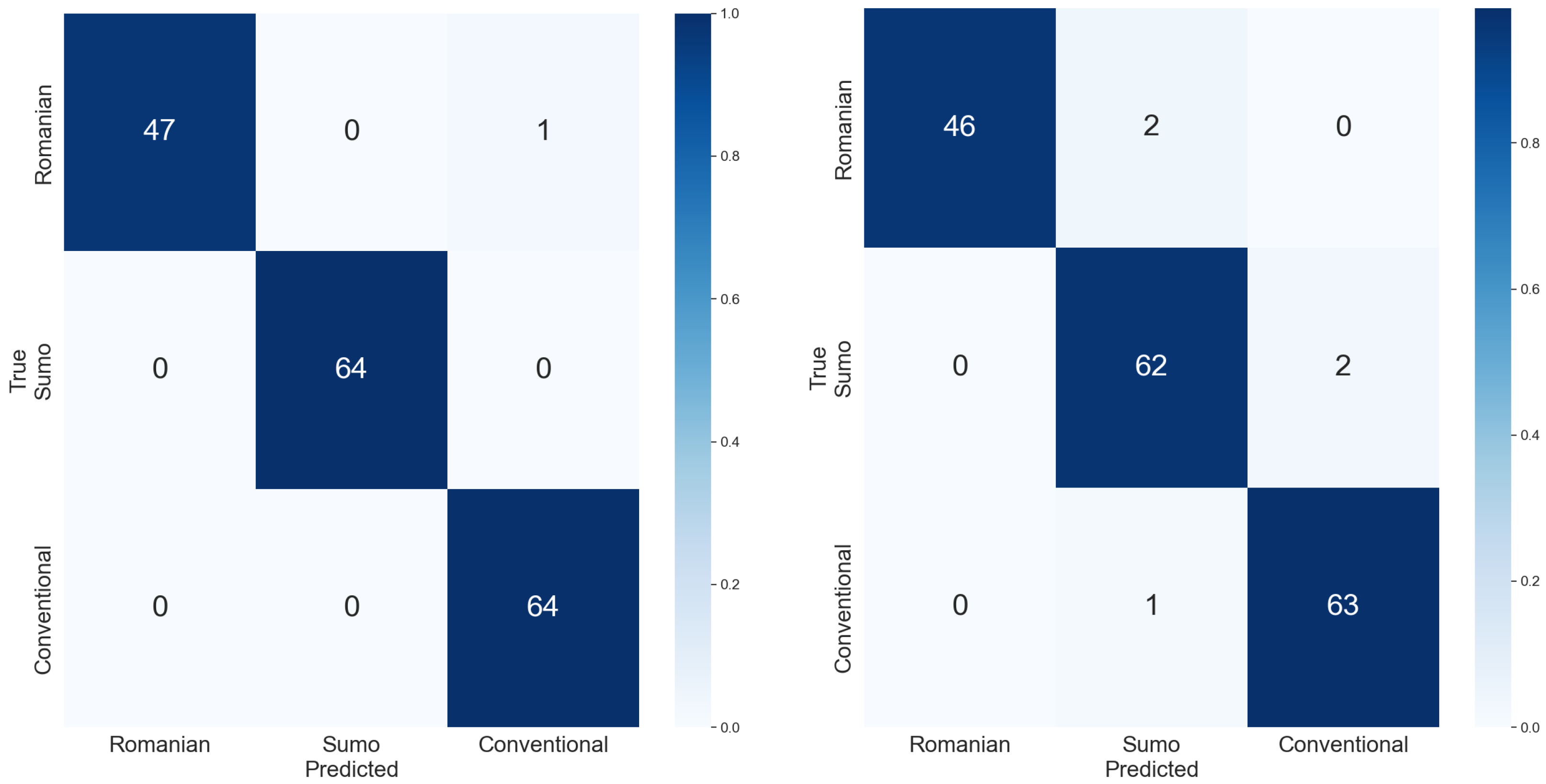

4.2.1. Deadlift Style Classifications

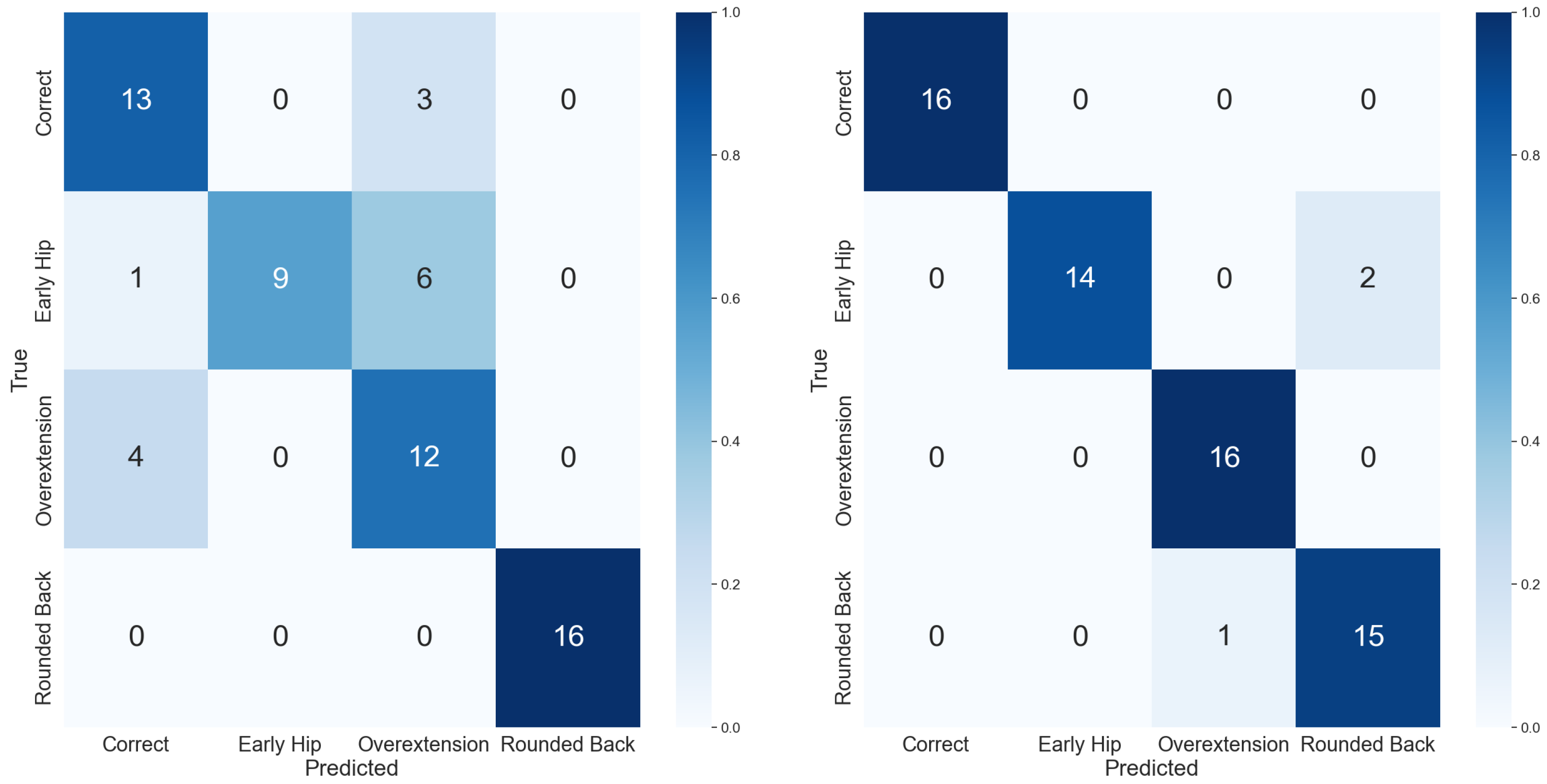

4.2.2. Deadlift Execution Form Classifications

4.3. Comparative Analysis

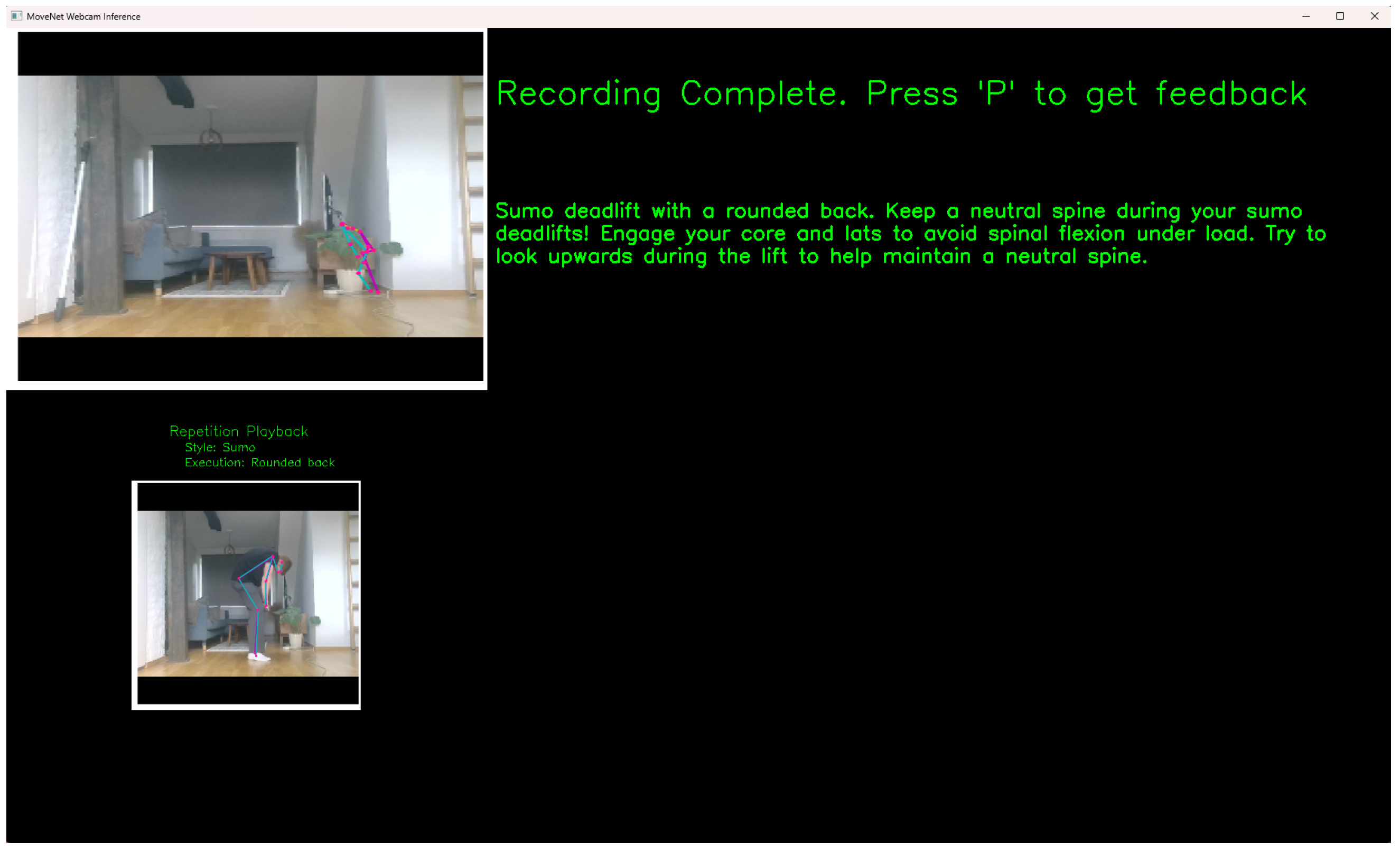

5. Deadlift Classification and Feedback Application

- A webcam feed powered by the OpenCV library, which captures the user’s movements in real time.

- A detection pipeline that analyzes joint angles to accurately identify completed deadlift repetitions.

- A sequence generation feature that prepares keypoints for classification using the LSTM model.

- A feedback system that overlays visual cues on the user’s movements and delivers text prompts generated by the Gemini Large Language Model.
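The repetition detection in the pipeline above, which Section 5.1 bases on the hip angle, can be sketched as a two-threshold (hysteresis) state machine. This is an illustrative sketch, not the app's code. It assumes the hip angle is the angle at the hip between the shoulder and knee keypoints. The 160° and 120° thresholds, the 300-frame buffer, and the class name `HipAngleRepCounter` are hypothetical and would need tuning per deadlift style.

```python
from collections import deque

import numpy as np


def joint_angle(a, b, c) -> float:
    """Angle ABC in degrees at vertex b, for 2D points a, b, c."""
    ba = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    bc = np.asarray(c, dtype=float) - np.asarray(b, dtype=float)
    cos = np.dot(ba, bc) / (np.linalg.norm(ba) * np.linalg.norm(bc) + 1e-9)
    return float(np.degrees(np.arccos(np.clip(cos, -1.0, 1.0))))


class HipAngleRepCounter:
    """Hypothetical rep detector: 'up' -> angle below down_deg -> angle above up_deg."""

    def __init__(self, up_deg: float = 160.0, down_deg: float = 120.0, max_frames: int = 300):
        self.up_deg = up_deg
        self.down_deg = down_deg
        self.state = "up"
        self.reps = 0
        self.frames = deque(maxlen=max_frames)  # keypoints buffered since the last completed rep

    def update(self, shoulder, hip, knee, keypoints=None):
        """Feed one frame. Returns the buffered frames when a rep completes, else None."""
        angle = joint_angle(shoulder, hip, knee)
        if keypoints is not None:
            self.frames.append(keypoints)
        if self.state == "up" and angle < self.down_deg:
            self.state = "down"            # lifter has hinged past the lower threshold
        elif self.state == "down" and angle > self.up_deg:
            self.state = "up"              # back near lockout: one full repetition
            self.reps += 1
            completed = list(self.frames)
            self.frames.clear()
            return completed
        return None
```

Using two thresholds instead of one keeps small oscillations around a single cut-off from being counted as extra repetitions. The frames returned at the end of a rep are what a sequence-generation step could then resample and pass to the LSTM.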

5.1. Hip Angle-Based Rep Detection

5.2. Classification and Feedback

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Statistics Norway (SSB). Sports and Outdoor Activities, Survey on Living Conditions. 2021. Available online: https://www.ssb.no/en/kultur-og-fritid/idrett-og-friluftsliv/statistikk/idrett-og-friluftsliv-levekarsundersokelsen (accessed on 21 September 2024).

2. Piper, T.J.; Waller, M.A. Variations of the Deadlift. Strength Cond. J. 2001, 23, 66–73.

3. Alekseyev, K.; John, A.; Malek, A.; Lakdawala, M.; Verma, N.; Southall, C.; Nikolaidis, A.; Akella, S.; Erosa, S.; Islam, R.; et al. Identifying the Most Common CrossFit Injuries in a Variety of Athletes. Rehabil. Process Outcome 2020, 9, 1–9.

4. Strömback, E.; Aasa, U.; Gilenstam, K.; Berglund, L. Prevalence and Consequences of Injuries in Powerlifting: A Cross-sectional Study. Orthop. J. Sports Med. 2018, 6, 2325967118771016.

5. Mateus, N.; Abade, E.; Coutinho, D.; Gómez, M.Á.; Peñas, C.L.; Sampaio, J. Empowering the Sports Scientist with Artificial Intelligence in Training, Performance, and Health Management. Sensors 2025, 25, 139.

6. Sigrist, R.; Rauter, G.; Riener, R.; Wolf, P. Terminal Feedback Outperforms Concurrent Visual, Auditory, and Haptic Feedback in Learning a Complex Rowing-Type Task. J. Mot. Behav. 2013, 45, 455–472.

7. Tharatipyakul, A.; Choo, K.T.W.; Perrault, S.T. Pose Estimation for Facilitating Movement Learning from Online Videos. In Proceedings of the International Conference on Advanced Visual Interfaces, New York, NY, USA, 28 September–2 October 2020.

8. Walsh, C.M.; Ling, S.C.; Wang, C.S.; Carnahan, H. Concurrent Versus Terminal Feedback: It May Be Better to Wait. Acad. Med. J. Assoc. Am. Med. Coll. 2009, 84, 54–57.

9. Ingwersen, C.K.; Xarles, A.; Clapés, A.; Madadi, M.; Jensen, J.N.; Hannemose, M.R.; Dahl, A.B.; Escalera, S. Video-based Skill Assessment for Golf: Estimating Golf Handicap. In Proceedings of the 6th International Workshop on Multimedia Content Analysis in Sports, New York, NY, USA, 29 October 2023; pp. 31–39.

10. Wang, J.; Qiu, K.; Peng, H.; Fu, J.; Zhu, J. AI Coach: Deep Human Pose Estimation and Analysis for Personalized Athletic Training Assistance. In Proceedings of the 27th ACM International Conference on Multimedia, New York, NY, USA, 21–25 October 2019; pp. 374–382.

11. Moodley, T.; van der Haar, D. CASRM: Cricket Automation and Stroke Recognition Model Using OpenPose. In Digital Human Modeling and Applications in Health, Safety, Ergonomics and Risk Management. Posture, Motion and Health, Proceedings of the 11th International Conference, DHM 2020, Copenhagen, Denmark, 19–24 July 2020; Duffy, V.G., Ed.; Springer: Cham, Switzerland, 2020; pp. 67–78.

12. Rossi, A.; Pappalardo, L.; Cintia, P. A Narrative Review for a Machine Learning Application in Sports: An Example Based on Injury Forecasting in Soccer. Sports 2022, 10, 5.

13. Toshev, A.; Szegedy, C. DeepPose: Human Pose Estimation via Deep Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014.

14. Bazarevsky, V.; Grishchenko, I.; Raveendran, K.; Zhu, T.; Zhang, F.; Grundmann, M. BlazePose: On-device Real-time Body Pose Tracking. arXiv 2020, arXiv:2006.10204.

15. Votel, R.; Li, N. Next-Generation Pose Detection with MoveNet and TensorFlow.js. 2021. Available online: https://blog.tensorflow.org/2021/05/next-generation-pose-detection-with-movenet-and-tensorflowjs.html (accessed on 9 November 2024).

16. Moustakas, L. Game Changer: Harnessing Artificial Intelligence in Sport for Development. Soc. Sci. 2025, 14, 174.

17. Singh, A.; Le, T.; Le Nguyen, T.; Whelan, D.; O’Reilly, M.; Caulfield, B.; Ifrim, G. Interpretable Classification of Human Exercise Videos through Pose Estimation and Multivariate Time Series Analysis. In AI for Disease Surveillance and Pandemic Intelligence; Studies in Computational Intelligence; Shaban-Nejad, A., Michalowski, M., Bianco, S., Eds.; Springer: Cham, Switzerland, 2021; Volume 1013, pp. 181–199.

18. Tran, D.; Wang, H.; Torresani, L.; Ray, J.; LeCun, Y.; Paluri, M. A Closer Look at Spatiotemporal Convolutions for Action Recognition. arXiv 2017, arXiv:1711.11248.

19. Harris, C.R.; Millman, K.J.; van der Walt, S.J.; Gommers, R.; Virtanen, P.; Cournapeau, D.; Wieser, E.; Taylor, J.; Berg, S.; Smith, N.J.; et al. Array programming with NumPy. Nature 2020, 585, 357–362.

20. Vrskova, R.; Hudec, R.; Kamencay, P.; Sykora, P. Human Activity Classification Using the 3DCNN Architecture. Appl. Sci. 2022, 12, 931.

| Properties | [9] | [10] | [17] | [11] | Ours |
|---|---|---|---|---|---|
| Employs non-intrusive activity classification | ✓ | ✓ | ✓ | ✓ | ✓ |
| Achieves substantial classification performance | ✓ | ✓ | ✓ | ✓ | ✓ |
| Requires inexpensive feature engineering | ✓ | ✓ | ✓ | ✓ | |
| Employs human pose estimation | ✓ | ✓ | ✓ | ✓ | |
| Incorporates feedback on user performance | ✓ | ✓ | | | |
| Incorporates replay feature for self-evaluation | ✓ | | | | |

Dataset Files

| File Name | Style and Execution Form | Number of Files |
|---|---|---|
| Conv_corr_n | Conventional Correct | 100 |
| Conv_ehe_n | Conventional Early Hip Elevation | 100 |
| Conv_oe_n | Conventional Back Overextension | 100 |
| Conv_rb_n | Conventional Rounded Back | 100 |
| R_corr_n | Romanian Correct | 100 |
| R_oe_n | Romanian Back Overextension | 100 |
| R_rb_n | Romanian Rounded Back | 100 |
| S_corr_n | Sumo Correct | 100 |
| S_ehe_n | Sumo Early Hip Elevation | 100 |
| S_oe_n | Sumo Back Overextension | 100 |
| S_rb_n | Sumo Rounded Back | 100 |
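The table lists eleven style and form combinations (there is no Romanian early-hip-elevation class) of 100 clips each, 1,100 clips in total. Labels for both experiments can be recovered from the file names. The sketch below assumes the `_n` suffix is an integer clip index and that a file may carry a video extension; both are assumptions. The helper names `parse_clip_name` and `labels_for_experiment` are hypothetical.

```python
from pathlib import Path

# Codes as listed in the dataset table.
STYLES = {"Conv": "Conventional", "R": "Romanian", "S": "Sumo"}
FORMS = {
    "corr": "Correct",
    "ehe": "Early Hip Elevation",
    "oe": "Back Overextension",
    "rb": "Rounded Back",
}


def parse_clip_name(filename: str):
    """Split a name such as 'Conv_ehe_7.mp4' into (style, form, index).

    Assumes the '_n' part is an integer clip index; any extension is ignored.
    """
    prefix, form_code, index = Path(filename).stem.split("_")
    return STYLES[prefix], FORMS[form_code], int(index)


def labels_for_experiment(filename: str, experiment: int) -> str:
    """Experiment 1 predicts the style only; experiment 2 predicts the form within a style."""
    style, form, _ = parse_clip_name(filename)
    return style if experiment == 1 else form
```

For example, `parse_clip_name("Conv_ehe_7.mp4")` returns `("Conventional", "Early Hip Elevation", 7)`.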

| Exp. | Goal | Input | Prediction | Result |
|---|---|---|---|---|
| 1 | To assess the best classifier for deadlift style recognition | All deadlift video and/or keypoint data | Labels of the style of the deadlift being performed from previously unseen deadlift videos | Table 4 and Table 5, Figure 6 |
| 2 | To assess the best classifier for identifying the correct deadlift execution form | All videos depicting the different forms for each deadlift style | Labels of the execution form from previously unseen deadlift videos | Table 6, Figure 7, Figure 8, Figure 9, Figure 10 and Figure 11 |
| 3 | To compare the robustness of LSTM against the CNN-based model designs | All deadlift video and/or keypoint data | Labels of the style and execution forms of the deadlift being performed from previously unseen deadlift videos | Table 4, Table 5 and Table 6 |

| Method | Metric | Romanian | Sumo | Conventional | Average |
|---|---|---|---|---|---|
| 2+1D CNN (with video frames only) | Precision | 0.92 | 0.98 | 0.97 | 0.96 |
| | Recall | 0.98 | 0.97 | 0.94 | 0.96 |
| | F1-score | 0.95 | 0.98 | 0.95 | 0.96 |
| 2+1D CNN (with video + keypoint overlay) | Precision | 1.00 | 0.95 | 0.97 | 0.97 |
| | Recall | 0.96 | 0.97 | 0.98 | 0.97 |
| | F1-score | 0.98 | 0.96 | 0.98 | 0.97 |
| LSTM (with keypoint vectors) | Precision | 1.00 | 1.00 | 0.98 | 0.99 |
| | Recall | 0.98 | 1.00 | 1.00 | 0.99 |
| | F1-score | 0.99 | 1.00 | 0.99 | 0.99 |

| Method | Metric | Romanian | Sumo | Conventional | Average |
|---|---|---|---|---|---|
| 3DCNN (with annotated videos) [20] | Precision | 1.00 | 1.00 | 0.97 | 0.99 |
| | Recall | 1.00 | 0.97 | 1.00 | 0.99 |
| | F1-score | 1.00 | 0.98 | 0.98 | 0.98 |
| 3DCNN (with raw videos) [20] | Precision | 1.00 | 1.00 | 0.93 | 0.97 |
| | Recall | 0.94 | 0.97 | 1.00 | 0.97 |
| | F1-score | 0.97 | 0.98 | 0.96 | 0.97 |
| LSTM (with keypoint vectors) (proposed) | Precision | 1.00 | 1.00 | 0.98 | 0.99 |
| | Recall | 0.98 | 1.00 | 1.00 | 0.99 |
| | F1-score | 0.99 | 1.00 | 0.99 | 0.99 |

| Method | Style | Metric | Back Overextension | Rounded Back | Early Hip Elevation | Correct Form | Average |
|---|---|---|---|---|---|---|---|
| 2+1D CNN | Conventional | Precision | 1.00 | 1.00 | 0.94 | 1.00 | 0.99 |
| | | Recall | 0.94 | 1.00 | 1.00 | 1.00 | 0.99 |
| | | F1-score | 0.97 | 1.00 | 0.97 | 1.00 | 0.99 |
| LSTM | Conventional | Precision | 0.94 | 0.92 | 0.79 | 1.00 | 0.91 |
| | | Recall | 1.00 | 0.75 | 0.94 | 0.94 | 0.91 |
| | | F1-score | 0.97 | 0.83 | 0.86 | 0.97 | 0.91 |
| 2+1D CNN | Romanian | Precision | 1.00 | 1.00 | - | 1.00 | 1.00 |
| | | Recall | 1.00 | 1.00 | - | 1.00 | 1.00 |
| | | F1-score | 1.00 | 1.00 | - | 1.00 | 1.00 |
| LSTM | Romanian | Precision | 1.00 | 1.00 | - | 0.70 | 0.90 |
| | | Recall | 0.56 | 1.00 | - | 1.00 | 0.85 |
| | | F1-score | 0.72 | 1.00 | - | 0.82 | 0.85 |
| 2+1D CNN | Sumo | Precision | 0.94 | 0.88 | 1.00 | 1.00 | 0.96 |
| | | Recall | 1.00 | 0.94 | 0.88 | 1.00 | 0.96 |
| | | F1-score | 0.97 | 0.91 | 0.93 | 1.00 | 0.95 |
| LSTM | Sumo | Precision | 0.57 | 1.00 | 1.00 | 0.72 | 0.82 |
| | | Recall | 0.75 | 1.00 | 0.56 | 0.81 | 0.78 |
| | | F1-score | 0.65 | 1.00 | 0.72 | 0.76 | 0.78 |
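The per-class F1-scores in these tables are the harmonic mean of precision and recall, F1 = 2PR / (P + R). The Average column appears to be the unweighted (macro) mean over the classes evaluated, skipping the "-" cells for the Romanian style, which has no early-hip-elevation class. The short check below recomputes the LSTM Romanian row from its reported precision and recall. The helper names are hypothetical, and recomputing from two-decimal values can occasionally differ from published figures by 0.01.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; 0 when both are 0."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def macro_average(values):
    """Unweighted mean over classes, skipping entries marked None (the '-' cells)."""
    present = [v for v in values if v is not None]
    return sum(present) / len(present)


# LSTM, Romanian: Back Overextension, Rounded Back, Early Hip Elevation ('-'), Correct Form
precision = [1.00, 1.00, None, 0.70]
recall = [0.56, 1.00, None, 1.00]

per_class_f1 = [None if p is None else round(f1_score(p, r), 2) for p, r in zip(precision, recall)]
print(per_class_f1)                           # [0.72, 1.0, None, 0.82]
print(round(macro_average(precision), 2))     # 0.9
print(round(macro_average(recall), 2))        # 0.85
print(round(macro_average(per_class_f1), 2))  # 0.85
```

The recomputed values match the reported row (0.72, 1.00, 0.82; averages 0.90, 0.85, 0.85). This also shows why a single weak class, such as the 0.56 recall for back overextension, pulls the macro average down noticeably.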


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Grymyr, W.L.; Lawal, I.A. Automated Deadlift Techniques Assessment and Classification Using Deep Learning. AI 2025, 6, 148. https://doi.org/10.3390/ai6070148