1. Introduction

Role-Playing Games (RPGs), as a significant branch of the gaming industry, have long been cherished by players worldwide. These immersive virtual worlds not only offer expansive narratives and dynamic environments but also introduce a plethora of Non-Player Characters (NPCs) for players to interact with. Traditional NPC systems, which utilize pre-designed scripted rules or dialogue responses [

1,

2], often struggle to adapt content in real-time and fail to handle complex and varied player inputs, significantly limiting the authenticity of NPC interactions and the immersive experience of players. With the advancement of artificial intelligence, the dialogue generation systems for NPCs are undergoing a paradigm shift from “scripted responses” to “cognitive intelligence”, with large-scale language models based on deep neural networks being widely applied to character personalization tasks [

3], markedly enhancing NPC dialogue capabilities. However, these models, trained on vast datasets, frequently generate responses that deviate from character settings in practical applications, a phenomenon known as “character hallucination” [

4]. Addressing this issue, existing research such as that by Greyling et al. [

5] has partially overcome this limitation through situational prompt engineering. Yet, when it comes to the deep psychological activities and complex decision-making of characters, relying solely on prompt engineering has proven inadequate for precise control over NPC behaviors. In response to these challenges, this study proposes a protective static knowledge fine-tuning approach to reduce the illusion problem of language models in NPC role playing, thereby generating more vivid and character-consistent dialogue content.

Additionally, research by Panwar et al. [

6] highlights the significant shortcomings of NPCs in memory management. Given that memory is a crucial factor in human personality generation [

4], the current limitations in NPC memory mechanisms severely constrain the enhancement of their realism. To address this, this study draws on the Atkinson–Shiffrin [

7] model of human memory to construct an NPC memory management system composed of short-term working memory and long-term semantic memory. Inspired by Ebbinghaus’ forgetting curve [

8], the two memory modules are connected through a consolidation mechanism based on the number of accesses. Short-term memory is responsible for temporarily storing and processing immediate information, requiring frequent updates during continuous interactions with players. The Knowledge Graph (KG) [

9], as a structured representation of knowledge, efficiently organizes entities (e.g., characters, items) and their relationships (e.g., ownership, interaction) within the game world. Its editable and searchable nature aligns well with the dynamic demands of short-term memory. The long-term memory module stores all critical historical interactions of the NPC. As the game progresses, its size continues to grow, posing significant challenges for real-time retrieval. Vector databases enable efficient retrieval and rapid access to accumulated knowledge by leveraging similarity search in high-dimensional vector spaces. Accordingly, in our NPC memory framework, short-term memory is managed via an editable knowledge graph, while long-term memory is organized using a vector database. A dynamic indexing table tracks node access frequency (i.e., consolidation counts), facilitating smooth memory transitions. By simulating human memory consolidation, the system enables NPCs to manage memory states intelligently, significantly improving role consistency in dialogue generation.

Nonetheless, accurately interpreting player intent and retrieving relevant knowledge in real-time interaction scenarios remains a key challenge for future exploration. Current text parsing research mainly relies on the problem recognition capability of Large Language Models (LLMs), but traditional sequence-to-sequence architectures are prone to introducing noise and, influenced by the “hallucination” problem [

10], may lead to relationship recognition errors or entity omissions. It is worth noting that, in the process of knowledge graph-based retrieval, the system essentially performs reasoning based on the logical structure of the query [

11]. This suggests that the entire process—from understanding the question to retrieving from the knowledge graph—can be interpreted through formal logic-based methods. Therefore, this study adopts Abstract Meaning Representation (AMR) [

12], a structured representation with clearly defined logical relationships, to parse the logical structure of sentences. As a widely validated semantic representation framework, AMR offers distinct advantages in capturing the core semantic relations and logical structures of sentences. By leveraging AMR parsing, the system can precisely identify the core semantic nodes and key relations within the input sentence, thereby optimizing the knowledge graph retrieval process and effectively reducing noise interference.

Based on the above research, this paper proposes an innovative framework for personalized NPC dialogue generation. This framework optimizes the NPC language model through protective fine-tuning, integrates knowledge graphs and vector databases to construct a dynamic memory system, and employs real-time AMR semantic parsing to achieve precise intent understanding, ultimately generating dialogue responses that are both consistent with character settings and highly personalized. The experimental results demonstrate that this framework significantly enhances the authenticity of NPC dialogues and the immersive experience of players. The main contributions of this study include the following:

We propose a novel personalizeddialogue generation approach that integrates static knowledge consolidation with dynamic memory management, effectively mitigating the issue of “character hallucination” and enhancing the NPC’s capabilities in dynamic memory and personalized dialogue generation.

We introduce a new graph structure, AMR–KG, constructed by integrating and pruning the Abstract Meaning Representation (AMR) graph from player input with the NPC’s short-term memory Knowledge Graph (KG), which improves the semantic accuracy of personalized dialogue generation.

Through comparative and ablation experiments, we demonstrate that our dialogue generation method can yield semantically accurate personalized responses, showing performance improvements over baseline and state-of-the-art methods.

2. Background and Related Work

Smooth and immersive interactions are the foundation of role-playing games. In game design, NPC dialogue interactions are often used to provide players with quests, backstories, and emotional value, making the dialogue effectiveness of non-player characters particularly important, as they can directly interact with players to create an immediate impact [

2]. In early game design, NPC dialogues were hard-coded, a method that offers several advantages, including thematic and causal coherence within the game world context [

13]. However, pre-written dialogues may diminish the presence of NPCs and reduce gameplay flexibility [

14], and the cost of manual creation is also high and time-consuming. Subsequently, various procedural dialogue generation methods have emerged [

15,

16], among which neural network-based dialogue generation methods are the most prominent. Dai [

17] investigated the NPC dialogue generation task from the perspective of neural text generation, finding that architectures with convolutional encoders and long short-term memory decoders performed better than ordinary LSTM language models. With the popularity of large language models, the task of character dialogue generation has seen extensive research centered around these models. Teams from Stanford University and DeepMind [

18] demonstrated the ability of LLMs to simulate human memory, reflection, and dialogue through prompts. Character-LLMs [

19] construct specific character experiences and materials, training more stable character agents through SFT technology. These studies show that, if the character data in the input text is reasonably designed, language models can effectively simulate the input characters, inferring character behaviors and dialogue logic through attributes and memories. Game NPCs constructed by language models have also demonstrated strong interactive capabilities. Stegeren et al. [

20] fine-tuned GPT-2 to learn the structure of quest titles, objectives, and dialogues in “World of Warcraft”. However, their work served only as a creative aid and was not applied to real-time content generation. These efforts aim to combine LLMs with character simulation to form more intelligent agent interactions. However, due to training methods, LLMs sometimes fabricate information and produce hallucinations [

21], and mitigating this limitation is crucial for maintaining responses that are character-consistent and context-aware.

To better apply large language models to specific domains, increasing research focuses on the integration of external knowledge with language models, such as content planning [

22], document retrieval [

23], and language generation [

24]. Vector databases store data [

25] in the form of high-dimensional vectors and leverage similarity search mechanisms in vector spaces, offering significantly higher efficiency in knowledge retrieval and utilization compared to traditional databases [

26]. In recent years, they have become a critical infrastructure supporting large language models (e.g., GPT) and Retrieval-Augmented Generation (RAG) technologies. Knowledge-enhanced dialogue generation, as a data-driven method, incorporates topological structures and knowledge, providing deeper semantic understanding capabilities for large language models [

27]. Kim et al. [

28] validated the effectiveness of knowledge graphs as memory systems, while Tang et al. [

29] constructed multi-hop knowledge graphs to enhance dialogue generation. Ashby et al. [

30] manually crafted knowledge graphs of key game knowledge and employed large language models to create game dialogues. These experiments have proven that knowledge graphs can help AI better understand the game world and assist in NPC dialogue generation tasks. However, how to effectively combine this graph knowledge with specific player questions remains a challenge. AMR, as a widely used semantic representation method, has also been confirmed to improve the performance of LLMs as an intermediate representation [

31]. Utilizing this prior knowledge can significantly reduce the agent’s policy search space by decomposing complex tasks into smaller, more focused goals, making it more efficient and less noisy.

In summary, our proposed approach first fine-tunes the NPC language model. During interaction, it parses the player’s input text using Abstract Meaning Representation (AMR), integrates it with the short-term memory knowledge graph to construct an AMR–KG graph, and then prunes the graph. The pruned AMR–KG is fed into the language model, which retrieves relevant information from the vector-based long-term memory to generate the final personalized response.

3. Method

We define two types of personalized knowledge for NPC characters: static knowledge

and dynamic knowledge

. The former refers to inherent character settings such as personality traits, biographical data, and key life experiences, while the latter consists of the generative knowledge acquired during interactions, including player interaction logs, key events, and relational networks with other entities. In our implementation, we first construct the static knowledge

by collecting character background information and fine-tune the language model accordingly. During interactions, dynamic knowledge

is stored in external resources for retrieval and updates. As shown in

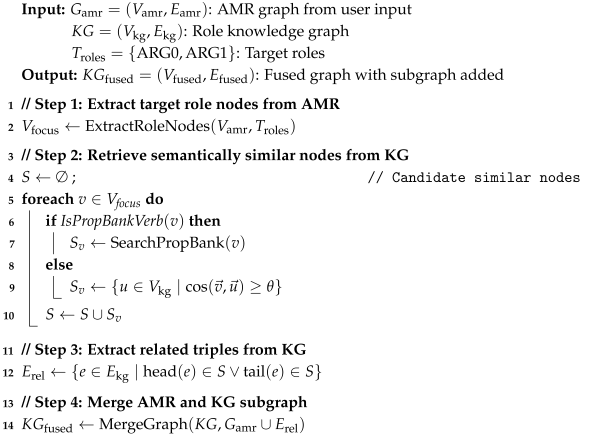

Figure 1, the overall framework consists of a static knowledge fine-tuning module (right) and a dynamic knowledge processing module (left), which work collaboratively to ensure character consistency and progressive development.

3.1. Static Knowledge Training Model

In the static knowledge fine-tuning module, the base language model (such as LLaMA) is fine-tuned based on the organized static data

, while referencing the Character-LLM [

19] to introduce a protective dataset. This ensures that the dialogue model does not generate content beyond the character’s cognitive scope during conversations. For example, when a user inputs, “Do you know Python?”, the model generates a response that aligns with the character’s knowledge background, such as, “Python? I’m not quite sure what that is. Could you tell me more?” rather than directly leveraging the training data to provide a detailed explanation of the Python 3.6.7 programming language. In this way, the model can better maintain character consistency, avoiding the generation of content that deviates from the character’s settings, thereby enhancing the authenticity and immersion of the dialogue.

3.1.1. Constructing the Static Knowledge Set

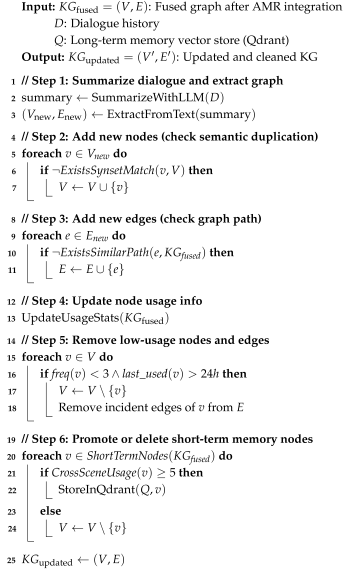

To construct a high-quality NPC character dataset, this study adopts a multi-dimensional data collection strategy, selecting the “Harry Potter” series as the foundational background and referencing the role-playing game “Hogwarts Legacy” to interact with characters from the wizarding world. As shown in the flowchart in

Figure 2, the first step is to capture relevant Wikipedia pages, extracting character background summaries from the introduction sections and structurally parsing character traits from the main body, including personality and characteristics, development trajectories, and key plot memories. Next, we prompt gpt-4o to reconstruct the extracted plots into concise interactive scenes, with appropriate supplementation to ensure that each scene includes at least the following elements: location, brief contextual background, character’s inner thoughts, and dialogue. It is important to note that the scene is constructed from the subjective perspective of the simulated character, thus only reflecting their internal psychological state. The resulting interaction scenes are segmented into a series of structured blocks and stored in JSON format for subsequent use in supervised fine-tuning. Additionally, we integrate story summaries from the “Harry Potter” series to build a unified worldview background and supplement the dataset with real dialogue samples extracted from the Harry Potter Dialogue (HPD) dataset (

https://nuochenpku.github.io/HPD.github.io, accessed on 5 March 2025). The dialogue data statistics for the selected characters are summarized in

Table 1.

3.1.2. Model Fine-Tuning

In role-playing tasks, the model’s knowledge scope must strictly align with the character’s background, with character consistency taking precedence over factual accuracy.To promote the dialogue personalization of NPC characters and mitigate “character hallucination”, we focus on training the model to learn not to provide answers but to express confusion or ignorance [

19]. To this end, we designed adversarial questioning templates (as shown in

Table 2) to prompt gpt-4o to generate protective scenarios, training the character to actively forget or deny knowledge beyond their cognitive boundaries, thereby reinforcing the model’s awareness of character-specific knowledge constraints. These scenarios feature dialogues centered on topics unrelated to the character, with GPT simulating a curious role posing relevant or follow-up questions to the target character. Although the number of protective scenarios per character is limited (about 100), and their proportion in the dataset is relatively small, they significantly enhance the model’s character consistency. Finally, the generated protective scenarios are integrated with static knowledge into the training data to guidethe target character to exhibit ignorance and confusion responses that align with their identity. The scope of character knowledge and the set of unrelated topics can be represented as:

Here,

represents the set of knowledge related to the character, where

denotes specific knowledge points.

represents the set of topics unrelated to the character, where

denotes specific topics. The model minimizes the loss function by maximizing the log probability of the target words, thereby making the generated text closer to the target text. The cross-entropy loss function is as follows:

Here, N is the number of training samples, T is the sequence length of each sample, is the t-th target word of the i-th sample, is the first words of the i-th sample, and represents the model parameters. We will construct multiple NPCs and fine-tune a separate base model (such as LLaMA) for each NPC’s data to prevent knowledge hallucination caused by multiple NPCs sharing a single model. Due to constraints on data and resources, we use small-scale NPC data for fine-tuning.

3.2. Dynamic Knowledge Processing

In the dynamic knowledge processing module, given a user input and its context (Context), the system first parses the user input into an Abstract Meaning Representation (AMR) semantic graph. Subsequently, a graph matching algorithm is used to retrieve relevant information from the dynamic memory knowledge graph , and the AMR graph is integrated with the retrieval results to form a unified graph structure. The integrated graph information is encoded by a graph neural network to generate high-dimensional semantic representations.

3.2.1. Graph Generation

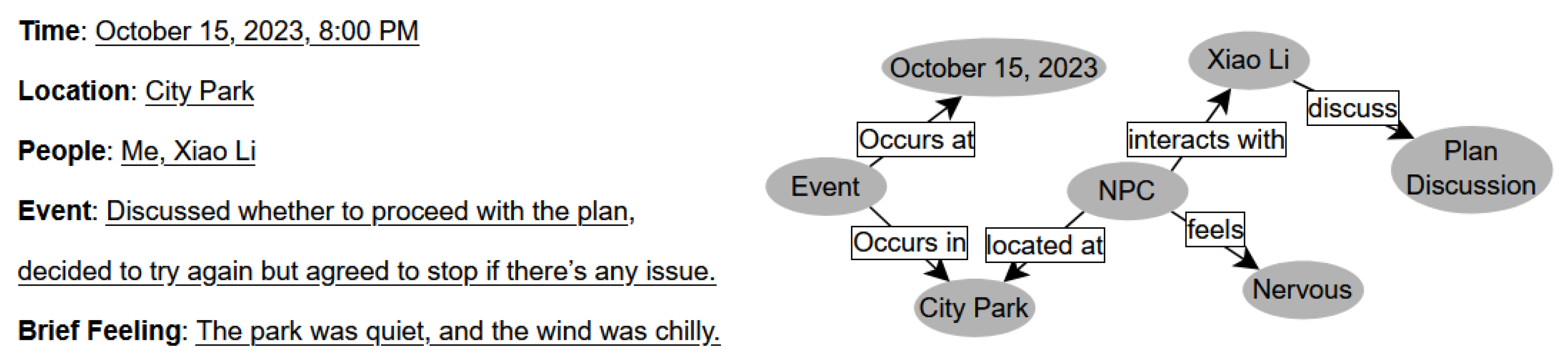

To efficiently and concisely upload character profiles, we prompt the LLM to summarize and categorize significant experiences within a week into specific experience descriptions based on the character’s background. Specifically, the LLM is prompted to record experiences from the target individual’s perspective in the form of a diary and structure them into a knowledge graph format. This includes time, location, weather, character interactions, and the NPC’s self-reflection. We need to structure the information, extract entities (such as characters, locations, and events), attributes, and relationships (such as positioning, ownership, and participation), and represent them as nodes and edges. The knowledge graph in our task generation framework is implemented using Neo4j. An example can be seen in

Figure 3.

Given an input text

T, we use SpringAMR [

32], a BART-based pre-trained AMR parser [

33], to encode the source sentence into an AMR graph

. The basic structure of AMR is a “single-root directed acyclic graph”. Concept words are abstracted as concepts, serving as “nodes” in the graph, while function words without concrete meaning are abstracted as “edges”. The node types in the AMR graph typically include verbs, operators (op), nouns (n), arguments (arg), and modifiers (mod), among others. Here, ARG0 and ARG1 around the verb represent agent and patient, respectively, the root node represents the central point of the sentence, also known as the focus, and other concept nodes are hierarchically arranged based on semantic relationships.

3.2.2. Graph Integration

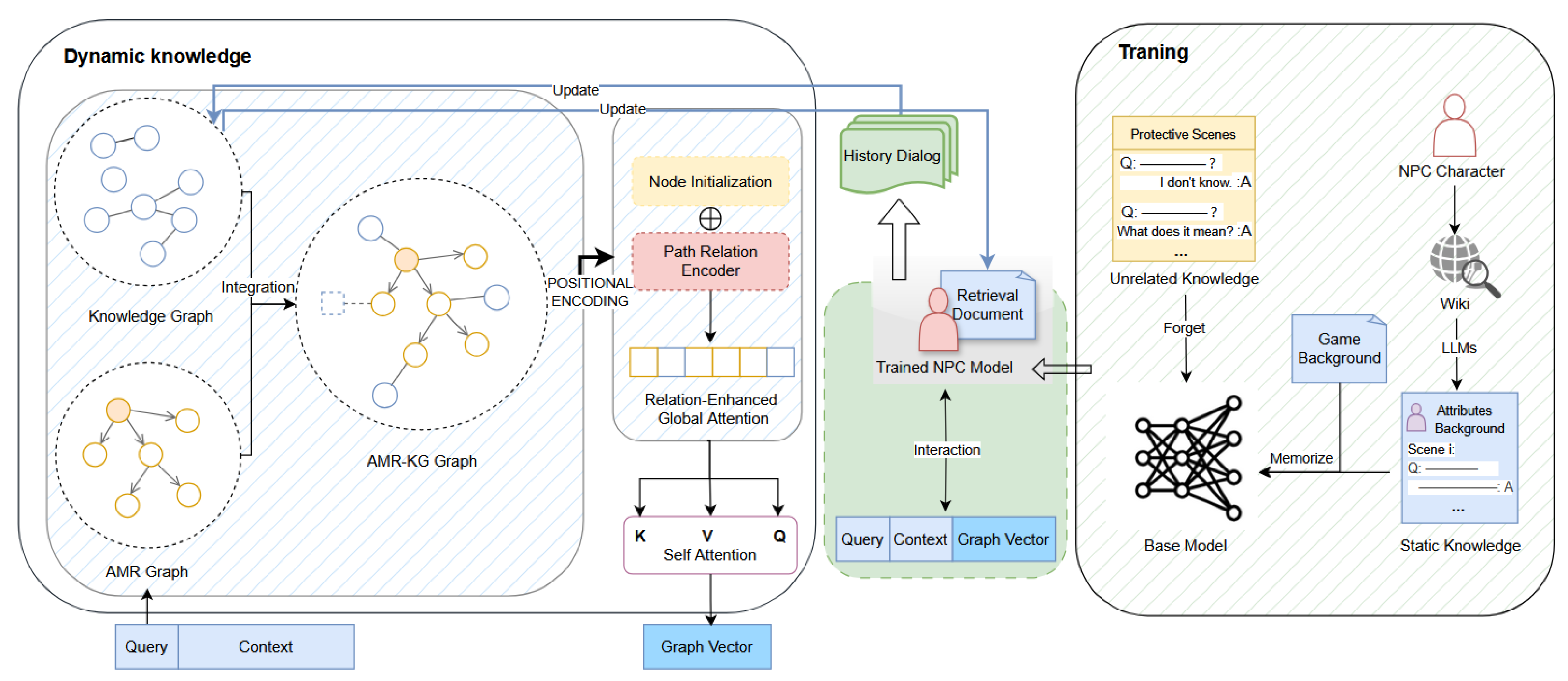

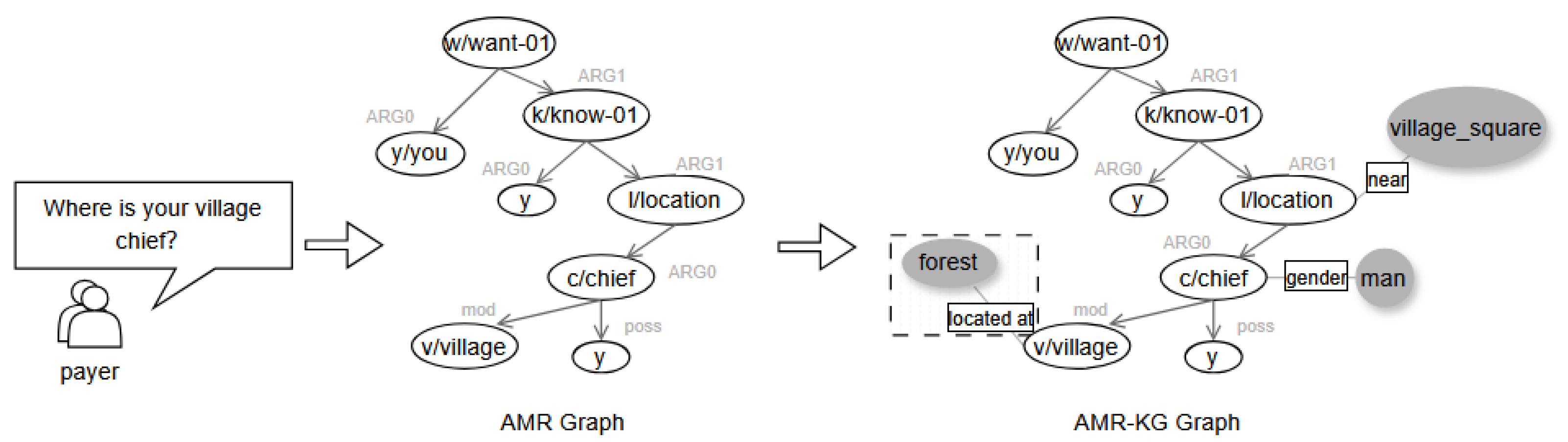

The detailed integration and pruning process is illustrated in

Figure 4. After obtaining the user’s AMR semantic graph, the system retrieves semantically similar nodes from the constructed character knowledge graph. This is typically achieved by computing the cosine similarity between vector representations of the nodes and matching them based on similarity scores. It is important to note that verb and noun concepts in AMR graphs often include suffixes (e.g., “think-01”) to distinguish different senses of the same base word. Matching solely based on the word prefix can easily lead to semantic confusion (e.g., “play-11” vs. “play” have different meanings). To address this, we retrieve corresponding synonyms from PropBank [

34] based on the suffix of the AMR concept and perform sense disambiguation using sense annotations. The entire integration process is implemented using the Cypher query language of the graph database, ensuring the consistency and integrity of the knowledge graph by inserting new nodes and relations. The specific algorithm can be seen Algorithm 1.

| Algorithm 1: AMR–Knowledge Graph Fusion |

![Ai 06 00093 i001]() |

3.2.3. Graph Pruning

The integrated knowledge graph may contain a large number of irrelevant paths. Retaining these extraneous relations can distract the model’s attention from critical reasoning paths. Therefore, the purpose of pruning is to remove knowledge graph nodes that are not directly related to the main concepts in the AMR graph, simplifying the graph structure and improving reasoning efficiency. In the AMR graph, noun nodes carry the core information of the sentence, such as “who”, “what”, “where”, etc., while verb nodes are the core predicates of the sentence. The verb nodes connected to noun nodes form phrases, carrying the core information of the sentence and serving as the key to semantic understanding. ARG0 and ARG1, representing the initiator and recipient of an action, respectively, are the most essential semantic roles for annotating primary verbs and nouns in the PropBank framework, and they capture the core semantic structure of a sentence. We analyzed the core semantic roles in the AMR graphs corresponding to the datasets used in this study. As shown in

Table 3, ARG0 and ARG1 are the two most frequently occurring relations across all categories. To prevent the graph from iteratively expanding into a large number of irrelevant paths, we adopt the following pruning strategy: only the noun and verb nodes associated with ARG0 and ARG1 in the AMR graph are retained, while all Knowledge Graph (KG) nodes connected to other AMR nodes are removed. This results in a pruned integrated graph:

.

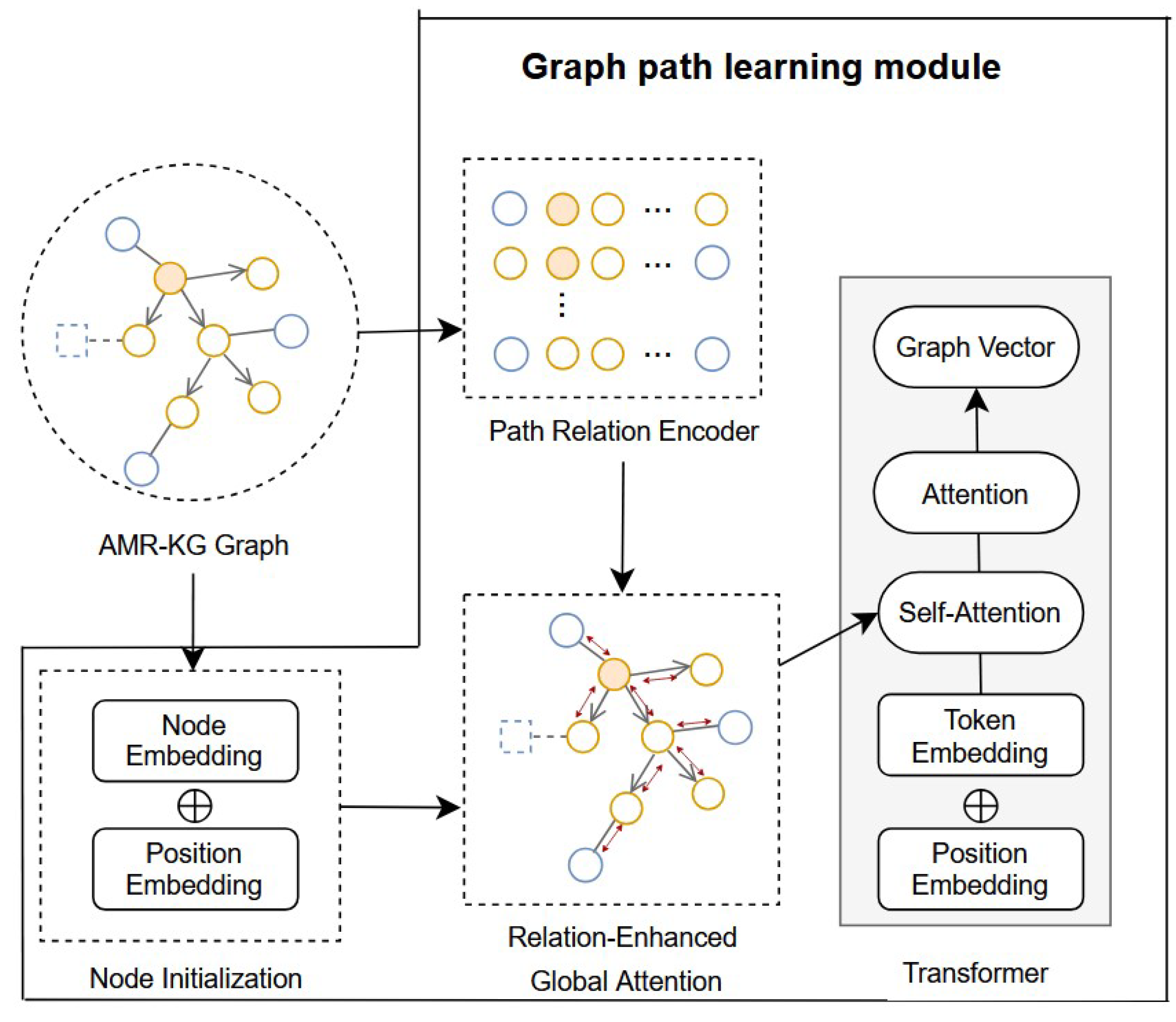

3.2.4. Graph Path Learning Module

This module is a graph path learning method based on a relation-enhanced attention mechanism, implemented as shown in

Figure 5. It primarily initializes the concept node vectors in the AMR–KG graph using GloVe [

35] word embeddings and absolute position embeddings. In the relation encoding stage, we refer to Cai’s [

36] graph relation path reasoning method, employing a recurrent neural network with Gated Recurrent Units (GRUs) to encode the relationship between two concepts in the

graph as a distributed representation. Specifically, the forward GRU and backward GRU process the forward and backward information of the relation path, respectively, as shown in the following formulas:

Here,

represents the shortest path between two nodes. The weight matrices of the forward and backward GRU networks (such as the input gate, forget gate, and output gate parameters) are initialized using Xavier uniform distribution, with bias terms initialized to zero. The initial value of the hidden state is a zero vector. The final relation encoding is the concatenation of the final hidden states of the two networks:

Next, we use the relation-enhanced attention mechanism to compute the attention scores between concept nodes. This mechanism considers both the concept nodes themselves and their relationships, as shown in the formula:

Here,

and

are concept embeddings,

and

are forward and backward relation encodings, and

and

are parameter matrices in the attention mechanism, initialized with Xavier to ensure numerical stability at the beginning of training. The term

is the original term in the standard attention mechanism, while

and

capture the relational biases relative to the source and target, respectively. The term

represents a general relational bias. After obtaining the attention scores, we inject them into the concept representations to update the concept embeddings. Subsequently, the updated concept representations are summed to obtain the vector representation of the entire graph. Finally, this graph vector is input into the Transformer layer to model the interaction between the AMR graph and knowledge graph. The Transformer layer outputs the final graph representation, which will be used in the subsequent answer prediction process. Assuming the output of the language model is

, then:

3.3. Dialogue Generation Module

Qdrant is an open-source vector database and similarity search engine designed for high-performance and large-scale vector retrieval tasks. Given the final semantic encoding, the character model queries the vector database to retrieve relevant information, enabling the generation of personalized responses aligned with the character’s background. For node-level retrieval, individual concept nodes from the knowledge graph are used as query units. Their vector representations and are used to search for semantically similar nodes in the vector database, allowing one to supplement missing relations and expand entity attributes—addressing fine-grained knowledge matching challenges. For graph-level retrieval, Approximate Nearest Neighbor (ANN) search in Qdrant is performed using the full graph embedding . This then locates inference-critical paths within the AMR graph using the "high_attention_paths" field in the payload, enabling targeted and logic-consistent knowledge supplementation.

Considering the significant influence of NPC personality traits on dialogue style, we systematically collected the personality trait data of target characters from the Personality Database (PDB) website. PDB [

37], as a professional personality analysis platform, has accumulated MBTI and Enneagram data for over 2 million individuals (including both real and fictional characters). Based on these authoritative personality trait data, we designed a meta-prompt mechanism to translate character personality traits into actionable language style guidelines. The final reference prompt is for example: `You are to role-play as Hermione Granger, a student at Hogwarts, with an ESTJ personality tendency. Please interact with me based on your personality traits and experiences using the [Qdrant Vector Database]. Input: [Query] + [Context] + [Graph Vector].’ This personality trait-based prompt design significantly enhances the personalization and consistency of character dialogues.

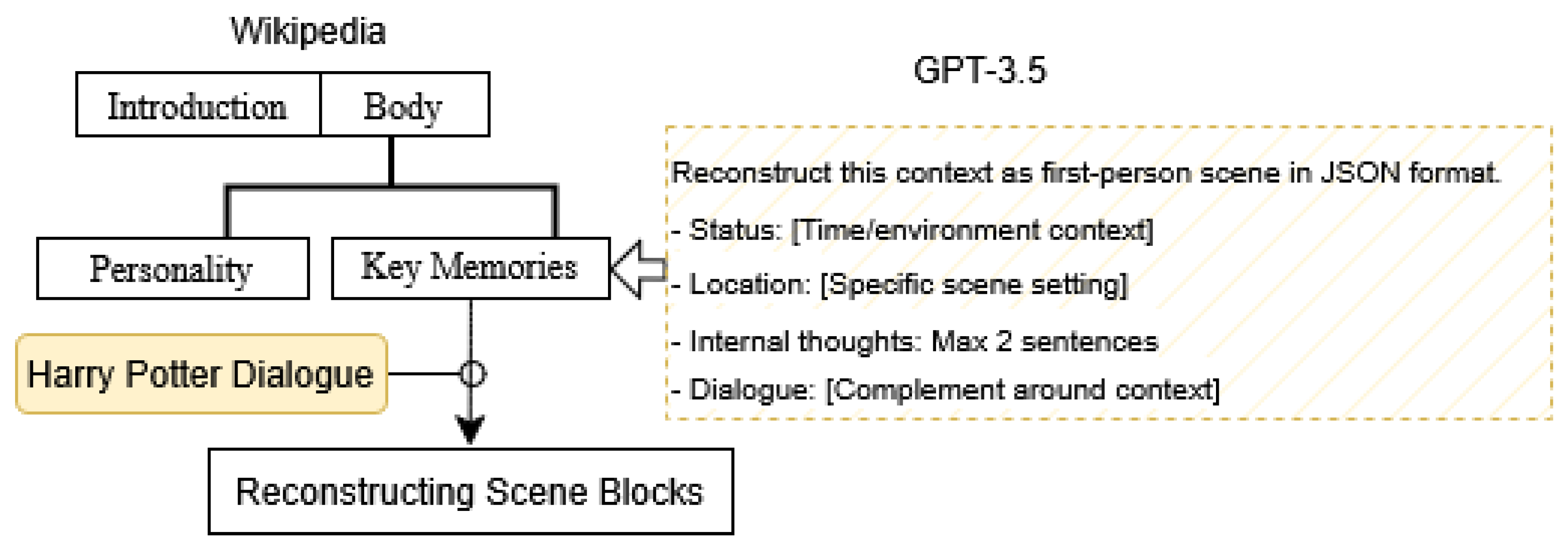

3.4. Memory Management Module

To achieve real-time updating and optimization of character memory, this study designs a memory management system based on cognitive science theory (see Algorithm 2 for the specific algorithm), which simulates the consolidation and forgetting processes of human memory through memory updating and elimination. After each round of dialogue interaction, we guide gpt-4o to automatically generate a summary of the interaction, extracting key information nodes and their semantic relations. Then, with the help of WordNet synset for semantic alignment and graph path search, we determine whether or not this information already exists in the current knowledge graph. For newly identified nodes and relations, the Cypher query language is used to write them into the Neo4j knowledge graph, realizing incremental knowledge updates.

To balance the cognitive load and retrieval efficiency of the knowledge graph, this study proposes a biologically inspired two-level forgetting strategy, emulating human memory mechanisms. The strategy employs a dynamic index table (see

Table 4) to track the real-time usage frequency of knowledge nodes, enabling dynamic knowledge elimination through the following hierarchical process. Specifically, in the short-term memory layer, it clears nodes in the knowledge graph that have not been accessed and have a total call count of less than 3 in a certain time period (e.g., every 24 h). This strategy is based on the minimum repetition theory [

38], simulating the forgetting process of short-term memory that has not been consolidated; in the long-term memory conversion layer, every 7 days the system identifies nodes or edges with more than 5 accesses (following the “five-times memory rule” [

8]) and upgrades them to long-term memory stored in the vector database, while eliminating other low-frequency nodes that have not been accessed for a long time. This design not only follows the memory patterns of the Ebbinghaus forgetting curve but also effectively controls the scale of the knowledge graph through a dynamic adjustment mechanism, helping ensure character consistency and knowledge availability of NPCs in continuous interactions.

| Algorithm 2: Knowledge Graph Update & Memory Management |

![Ai 06 00093 i002]() |

4. Experiments

To comprehensively evaluate the contributions of each component and the performance of the generation method, we designed and conducted extensive ablation experiments and comparative experiments. First, we deeply analyzed the contributions of core components such as static knowledge fine-tuning, knowledge graph fusion, AMR parsing, and integrated graph pruning to the final performance. Second, we applied our method to different large language base models and conducted quantitative analysis and comparison on key metrics such as dialogue quality, character consistency, and dynamic adaptability. Additionally, to further validate the practical effectiveness of the method, we selected representative dialogue cases for qualitative analysis, focusing on the accuracy of NPC’s expressions of confusion at knowledge boundaries and the ability to maintain mid-term memory.

4.1. Data and Experimental Setup

Data: Wikipedia is a multilingual encyclopedia based on wiki technology, typically structured in an introduction-body format. The introduction briefly summarizes the most important basic information of the entry, while the body is divided into multiple sections, including historical background, major achievements, and related controversies. We collected Wikipedia information for fictional and historical characters of different ages, genders, and backgrounds, using the introduction as character attributes and the historical background and development in the body as character memories. The selected characters and corresponding data statistics are shown in

Table 1. During data processing, we covered representative characters of different ages, genders, and alignments; systematically extracted character attributes; transformed key plot points into computable memory units; and precisely matched dialogues from the HPD dataset with specific characters.

ConvAI2 [

39] focuses on dialogues regarding personal interests, including 17,878 training pairs, 1000 validation pairs, and 1015 test pairs. We selected 500 pairs each from the validation and test sets, totaling 1000 test pairs, as player inputs.

The Harry Potter Dialogue (HPD) dataset is constructed based on all dialogue scenes from the “Harry Potter” series of novels, covering both English and Chinese versions. The dataset construction process involved extracting dialogue content from the novels, with fine-grained annotations by four professional Harry Potter fans. Each dialogue session is time-sensitively annotated according to the progression of the story, ensuring that character relationships and attributes dynamically change as the story unfolds. The training set contains 1042 dialogue sessions, each with only one positive response, while the test set contains 149 sessions, each with 1 to 3 positive responses and an average of 9 negative responses.

Evaluation Metrics: When evaluating sentence quality, BLEU, ROUGE, and BERTScore were chosen as automatic evaluation metrics, primarily because they provide a comprehensive measure of the similarity between generated text and reference text. Additionally, LLM-based evaluation has been shown to be highly consistent with human evaluation and can even demonstrate more objective and comprehensive assessment when dealing with novel and complex knowledge. Therefore, this paper requires gpt-4o to score the generated sentences on hallucination mitigation, memory correctness, and character personalization, and calculates the average score (7-point Likert scale [

40]) to represent the effectiveness of the model’s personalized generation capabilities. Specifically, we mainly evaluate the following three dimensions:

Hallucination (Hal.): The content of the generated response should align with the NPC’s identity, profession, and environment, with tone and wording consistent with the preset personality. The knowledge should not exceed the cognitive scope (e.g., a villager should not know the king’s secrets).

Memorization (mCor.): Whether the multi-turn dialogue correctly recalls and applies key information from historical turns. For example, if the previous dialogue mentioned “I am going to Paris tomorrow”, the model should correctly respond to “Paris” in the current turn.

Personality (Per.): The model should mimic the character’s thinking or speaking style. The greater the difference in response styles among different characters to the same question, the more personalized the generated dialogue.

Experimental Setup: In the first generation, we utilized nucleus sampling for agent response generation, with parameters set to p = 1 and temperature t = 0.2. A maximum token length limit of 2048 tokens was enforced, and model generation was halted upon encountering the End-of-Turn marker (EOT) marker. For baseline models, responses were obtained by pruning the text generated in each turn.

During the training phase, we selected the LLaMA 7B model [

41] as the base model and trained it using a supervised fine-tuning strategy. For each selected character, we fine-tuned the model using a reconstructed experience dataset. During training, we inserted a meta-prompt at the beginning of each example, which detailed the background, time, and location of the scenario to help the model better understand the context. For optimization, we used the AdamW optimizer (weight decay 0.1,

,

,

= 1 × 10

−8) and adopted a linear warm-up and decay strategy for the learning rate. Additionally, we set the batch size to 64 and disabled dropout, allowing the model to overfit the training set, which resulted in better generation outcomes. The entire training process consisted of 10 epochs, and we manually selected checkpoints from the 5th and 10th epochs for evaluation. This approach ensured that the model fully learned the experiences and personality traits of the characters.

In the graph integration phase, we first used the base BART model [

33] to evaluate the performance improvement of the language model after incorporating external knowledge graphs, enabling comparison with other enhancement methods. Subsequently, we implemented the entire framework based on the LLaMA model.

Baselines: To verify the generalization ability of the proposed method, we applied the model to two different base models (LLaMA-7B and Qwen-7B [

42]) and conducted comparative experiments. In addition, we selected several competitive baselines using the same encoder architecture for fair comparison. These include instruction-tuned LLaMA 7B-based models such as Alpaca 7B [

43] and Vicuna 7B [

44] and knowledge graph-enhanced generation models such as KG–BART [

45] and DCRAG [

46], as well as models optimized for character consistency [

47].

Specifically, KG–BART integrates commonsense knowledge triples from ConceptNet into the BART model and leverages graph attention mechanisms to enhance relational reasoning among concepts. DCRAG employs a multi-stage collaborative reasoning process to identify knowledge requirements within the dialogue and retrieves relevant information from external knowledge graphs to augment GPT-3.5 generation. Liu et al. [

47] proposed a Retrieval-Augmented Generation (RAG) method optimized for GPT, which effectively addresses the Out-of-Persona (OOP) issue in open-domain dialogues and enhances character consistency. All the above models were evaluated with a unified 7B parameter setting to ensure comparability. Through comparative experiments, we comprehensively assessed the advancements of the proposed method, as well as its adaptability and performance advantages across different datasets and language models.

4.2. Ablation Experiments

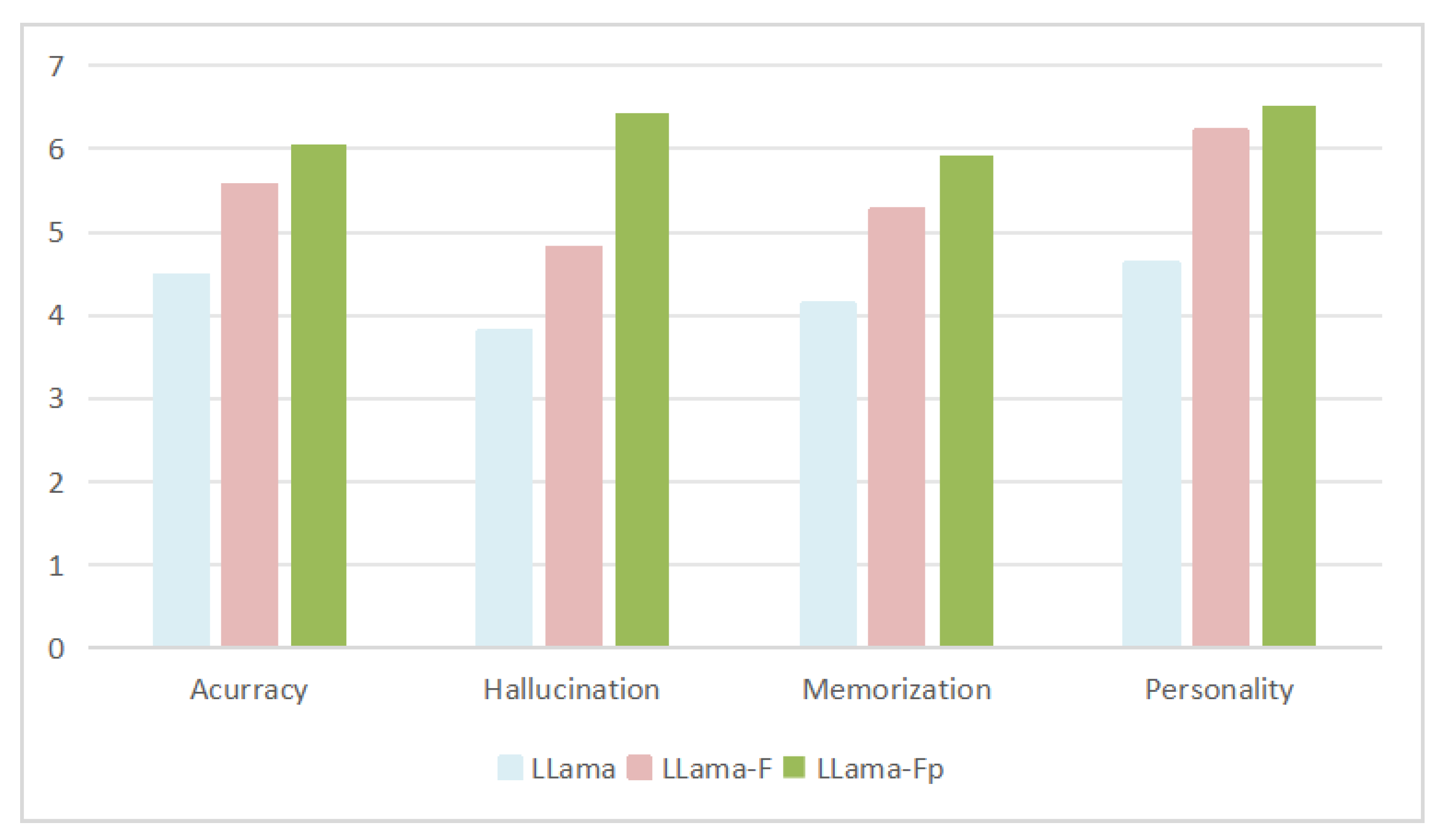

Experiment 1: To deeply analyze the component effects of the static knowledge fine-tuning module, this study designed a systematic experimental plan. We evaluated the impact of each component on dialogue generation performance on the HPD dataset test set, focusing on four core metrics. The experiment set up three comparison conditions: (1) LLama (instruction fine-tuning only); (2) LLama-F (static knowledge fine-tuning); (3) LLama-Fp (static knowledge fine-tuning + protective scenarios). As shown in

Figure 6, the experiment used Likert scale scoring, and the results showed that static knowledge fine-tuning significantly outperformed basic instruction fine-tuning across all metrics, with hallucination mitigation showing the most notable improvement (approximately 40% increase).

This experiment dissected the static knowledge fine-tuning module, analyzing the roles of fine-tuning and protective scenario components. The impact of each component on the final dialogue generation’s accuracy, hallucination mitigation, memory correctness, and character personalization was tested on the HPD dataset test set. Notably, the instruction fine-tuning model performed the worst in hallucination mitigation, validating that character hallucination is one of the main challenges for LLMs in role-playing tasks and highlighting the practical significance of this study. By introducing protective scenario training, LLama-Fp showed a significant improvement in hallucination mitigation compared to LLama-F (approximately 34% increase), confirming the effectiveness of the protective scenario component in suppressing character hallucinations. The dialogue knowledge generated in this study achieved broad convergence in terms of character alignment.

Through the systematic testing of questions beyond the character’s knowledge scope, we present typical response cases for different NPC roles in

Table 5. The text highlighted in brown indicates uncertainty about non-character knowledge, while the text highlighted in blue represents the NPC’s personality traits. The analysis results show that the proposed method can generate responses that maintain character personality traits while appropriately expressing knowledge limitations. For example, Hermione exhibits strong curiosity and a desire for knowledge when faced with unknown magical theories, while rigorously pointing out the current knowledge gaps; Voldemort, on the other hand, tends to conceal his ignorance with disdain. These responses not only reflect the inherent personality traits of the characters but also demonstrate a reasonable awareness of cognitive boundaries.

Experiment 2: This experiment evaluates the impact of core modules in the dynamic knowledge processing pipeline (including AMR parsing, knowledge graph retrieval, knowledge fusion, and graph pruning) on system performance. We selected BART (Bidirectional and Auto-Regressive Transformers) as the base model due to its unique bidirectional encoding and auto-regressive decoding capabilities, which are particularly suitable for the generation tasks in this experiment. By combining dynamic knowledge graph encoding with BART, we conducted an in-depth analysis of the contributions of each module to response quality.

The experimental results in

Table 6 show that the baseline accuracy of using only BART fine-tuning is 59.81%, while models incorporating AMR graphs and knowledge graphs significantly surpass this baseline, confirming the enhancement effect of external knowledge on the generation model. Notably, the model combining pruned AMR–KG graphs achieves the highest similarity in terms of accuracy, lexical similarity (BLEU), and semantic similarity (BERTScore), validating the effectiveness of our proposed knowledge fusion and pruning strategies. In terms of the ROUGE metric, the model using only KG graphs performs the best. This may be attributed to the explicit storage of entity relationships in the KG graph, which enables more comprehensive coverage of key entities from the reference text during generation, thereby improving the recall of ROUGE. This also reflects the advantage of KG graphs in generating diverse text. Although the AMR–KG graph sacrifices some diversity due to pruning, it improves precision by focusing on core semantics (ARG0/ARG1).

Further analysis reveals that the introduction of AMR graphs generally improves dialogue generation performance, while the addition of KG graphs significantly enhances character memory recall capabilities. Notably, the unpruned AMR–KG graph achieves the highest score in character personalization metrics, benefiting from its powerful knowledge expansion capabilities. Nevertheless, the pruned AMR–KG graph (i.e., the integrated graph) maintains optimal performance in hallucination mitigation and overall metrics, demonstrating the advantages of our personalized generation method in balancing knowledge utilization and character consistency.

To evaluate the performance improvement of memory knowledge graphs on dialogue, we selected some generation cases and present them in

Table 7. The experiment is based on

Figure 3 as the previous memory, comparing three generation modes: (1) responses from the original base model (without prior memory); (2) base language models relying only on prompt input and their own contextual memory capabilities; (3) enhanced models using knowledge graphs for dynamic memory storage and retrieval. Through the case study, it can be intuitively observed that the unfine-tuned model can only guess the response based on the trained data, while the language model that only relies on prompt often has the situation of knowledge loss or incomplete memory due to the limitation of the context window length, resulting in the accuracy and coherence of the response. In contrast, models incorporating knowledge graphs can dynamically store and integrate long-term memory information, generating more complete and accurate responses. For example, when handling questions involving historical events or the complex relationships of characters, the enhanced model can accurately recall relevant details and naturally integrate them into the dialogue. This capability significantly improves the vividness and precision of character dialogues, making NPCs’ performances more realistic and credible.

4.3. Comparative Experiments

Comparison Across Different Datasets: Given the high degree of freedom players have in dialogue interactions, with diverse sentence forms and rich content, this paper further validates the performance improvements of the proposed framework using the ConvAI2 dialogue dataset, which covers multiple scenarios. We randomly selected 500 dialogue samples each from the validation and test sets of the dataset to test the model’s response performance and compared the results with those from the HPD dialogue dataset. We tested the NPC model on two datasets to evaluate its performance in handling questions related to character world knowledge as well as random questions, with specific results shown in

Figure 7. The experimental results indicate that hallucination in generated dialogues is significantly reduced in the ConvAI2 dataset, further demonstrating that the proposed dialogue generation model’s ability to constrain character knowledge can effectively generalize to diverse questioning scenarios.

Comparison Across Different Base Models: Through the systematic evaluation of different base language models (as shown in

Table 8), we conducted an in-depth analysis of the enhancement effects of the static knowledge fine-tuning module and the dynamic knowledge graph module on character dialogue models. Qwen-7B performs better in long sequence processing due to its more efficient parallel encoding structure, resulting in higher scores in dynamic knowledge retrieval (mCor.). In contrast, LLaMA-7B, with its sparse attention mechanism, shows greater advantages in maintaining character consistency (Per.).The experimental results in

Figure 8 show that fine-tuning multiple base language models using the dataset constructed in this study significantly improves their personalized generation capabilities. Furthermore, introducing the dynamic knowledge graph framework leads to notable improvements in dialogue generation performance across all metrics, with memory accuracy (memorization) showing the most significant enhancement.

Comparison with Other Models:

Table 9 presents a performance comparison of different models on the generation task. The experimental results show that large language models such as GPT-3.5 and LLaMA significantly outperform traditional generative models like BART in terms of generation accuracy and text quality, further validating the superiority of LLMs in natural language generation tasks. Within the LLaMA series, our method outperforms instruction-tuned models such as Alpaca and Vicuna across four key metrics—role consistency, hallucination rate, generation accuracy, and fluency. In particular, our method reduces the hallucination rate by 21.1%, indicating its effectiveness in mitigating role drift issues commonly found in instruction tuning.

Among the improved variants of the BART model, our method demonstrates significant performance gains over both the original BART and KG–BART. Compared with KG–BART—which enhances generation by integrating commonsense knowledge graphs—our approach shows a particularly strong advantage in hallucination suppression (2.32 vs. 4.45). This improvement is mainly attributed to the proposed graph pruning strategy and the protective scenario mechanism, which effectively reduce role-related hallucinations and enhance the stability of the generated content.

In addition, compared with improved methods based on GPT-3.5-turbo, our proposed approach achieved the best performance across all three role consistency metrics. However, it is worth noting that the DCRAG model demonstrated superior performance in generation accuracy (Accuracy: 86.95%). This discrepancy mainly stems from the fundamental difference in design objectives: DCRAG prioritizes the precision of knowledge retrieval through a multi-stage collaborative mechanism, whereas our method focuses more on maintaining role consistency. Experimental results show that our method significantly outperforms DCRAG in terms of memory accuracy (6.27 vs. 4.81) and hallucination suppression (6.25 vs. 5.73) (

p < 0.01), and consistently surpasses the model by Liu et al. [

47] across all evaluation metrics. These findings validate the effectiveness of our protective scenario fine-tuning strategy and dynamic knowledge integration mechanism in enhancing the quality of dialogue generation.

5. Conclusions

This paper proposes a personalized NPC dialogue generation method that combines static knowledge fine-tuning with dynamic memory management. By leveraging both static knowledge and dynamic knowledge graphs, the method enhances character consistency and personalization in NPC dialogue, significantly improvingthe personalization, consistency, and memory accuracy of NPC dialogues. The experimental results demonstrate that the framework performs excellently across multiple large language models (such as BART, LLama, Alpaca 7B, Vicuna 7B, and ChatGPT), validating its universality and scalability. Ablation experiments further confirm the effectiveness of the AMR parsing, knowledge graph fusion, and graph pruning modules.

6. Discussion and Future Work

Discussion: Although the framework proposed in this paper has made significant progress in enhancing the personalization and consistency of NPC dialogues, there are still some limitations. First, the generation of AMR graphs relies on pre-trained models, which have a certain error rate and may lead to deviations in subsequent graph fusion and path reasoning modules. Second, although the problem of character hallucination has been mitigated through protective static knowledge fine-tuning and dynamic knowledge graph technology, in some cases large language models may still generate inappropriate content, especially in player-guided dialogues. Further research is needed to reinforce knowledge boundaries, potentially via enhanced contrastive training or dialogue simulation. Lastly, while current experiments focus on small-scale, single-NPC settings, deploying the framework in large-scale environments requires deeper exploration of model compression, response latency, and distributed memory strategies, which we address in future work.

Future Work: Future work will primarily evolve along two complementary trajectories: enhancing real-world deployability and deepening semantic intelligence. On the deployment front, scaling to massive open-world scenarios demands lightweight architectures—we are developing distributed memory systems where character knowledge graphs dynamically partition by region or faction, leveraging techniques like LoRA adapters and 4-bit quantization to reduce GPU memory overhead by 40% while maintaining sub-200 ms response times under 200+ concurrent player loads.

Simultaneously, we are advancing the framework’s semantic precision through multimodal grounding and adaptive parsing. Current AMR parsers occasionally mislabel domain-specific verbs (e.g., interpreting “cast” as fishing rather than spellwork); hybrid strategies combining gameplay context signals with parser fine-tuning aim to reduce such errors by 30%. Beyond gaming, these improvements unlock cross-domain potential—AMR’s structured semantics could power educational NPCs that detect student misconceptions by analyzing predicate–argument errors (e.g., “photosynthesis needs moonlight” triggering ARG1 corrections), or drive dynamic story generators where player choices rewrite AMR-anchored plot graphs.

The ultimate goal is a production-ready system validated through large-scale simulations, balancing technical metrics (1000 AMR parses/sec throughput) with qualitative immersion. By tightly coupling scalability solutions with AMR’s unique capacity to bridge structured knowledge and generative dialogue, this work will establish a new standard for intelligent NPC frameworks across games, education, and interactive storytelling.

Author Contributions

X.L., writing—original draft, methodology, investigation; Z.X., conceptualization, supervision. S.J., resources, validation. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the Qing Lan Project of Jiangsu Province (Zhenping Xie).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data will be made available on request.

Acknowledgments

This article does not contain any studies with human participants or animals performed by any of the authors.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

- Spierling, U.; Grasbon, D.; Braun, N.; Iurgel, I. Setting the scene: Playing digital director in interactive storytelling and creation. Comput. Graph. 2002, 26, 31–44. [Google Scholar] [CrossRef]

- Reed, A.; Samuel, B.; Sullivan, A.; Grant, R.; Grow, A.; Lazaro, J.; Mahal, J.; Kurniawan, S.; Walker, M.; Wardrip-Fruin, N. A step towards the future of role-playing games: The SpyFeet mobile RPG project. In Proceedings of the 7th AAAI conference on Artificial Intelligence and Interative Digital Entertainment (AIIDE-11), Palo Alto, CA, USA, 10–14 October 2011; Volume 7, pp. 182–188. [Google Scholar] [CrossRef]

- Xu, X.; Wang, Y.; Xu, C.; Ding, Z.; Jiang, J.; Ding, Z.; Karlsson, B.F. A Survey on Game Playing Agents and Large Models: Methods, Applications, and Challenges. arXiv 2024, arXiv:2403.10249. [Google Scholar]

- Nananukul, N.; Wongkamjan, W. What if Red Can Talk? Dynamic Dialogue Generation Using Large Language Models. arXiv 2024, arXiv:2407.20382. [Google Scholar]

- Greyling, C. Preventing llm Hallucination with Contextual Prompt Engineering—An Example from Openai. Available online: https://cobusgreyling.medium.com/preventing-llm-hallucination-with-contextual-prompt-engineering-an-example-from-openai-7e7d58736162 (accessed on 24 April 2025).

- Panwar, H. The NPC AI of the last of us: A case study. arXiv 2022, arXiv:2207.00682. [Google Scholar]

- Atkinson, R.C.; Shiffrin, R.M. Human memory: A proposed system and its control processes. In Psychology of Learning and Motivation; Elsevier: Amsterdam, The Netherlands, 1968; Volume 2, pp. 89–195. [Google Scholar]

- Ebbinghaus, H. Memory: A Contribution to Experimental Psychology; Originally published in 1913 by Teachers College, Columbia University; Ruger, H.A.; Bussenius, C.E., Translators; Dover Publications: New York, NY, USA, 1964. [Google Scholar]

- Hogan, A.; Blomqvist, E.; Cochez, M.; d’Amato, C.; de Melo, G.; Gutierrez, C.; Gayo, J.E.L.; Kirrane, S.; Neumaier, S.; Polleres, A.; et al. Knowledge Graphs. arXiv 2021, arXiv:2003.02320. [Google Scholar]

- Huang, L.; Yu, W.; Ma, W.; Zhong, W.; Feng, Z.; Wang, H.; Chen, Q.; Peng, W.; Feng, X.; Qin, B.; et al. A Survey on Hallucination in Large Language Models: Principles, Taxonomy, Challenges, and Open Questions. ACM Trans. Inf. Syst. 2025, 43, 1–55. [Google Scholar] [CrossRef]

- Lim, J.; Oh, D.; Jang, Y.; Yang, K.; Lim, H. I know what you asked: Graph path learning using AMR for commonsense reasoning. arXiv 2020, arXiv:2011.00766. [Google Scholar]

- Banarescu, L.; Bonial, C.; Cai, S.; Georgescu, M.; Griffitt, K.; Hermjakob, U.; Knight, K.; Koehn, P.; Palmer, M.; Schneider, N. Abstract Meaning Representation for Sembanking. In Proceedings of the 7th Linguistic Annotation Workshop and Interoperability with Discourse (LAW 2013), Sofia, Bulgaria, 8–9 August 2013; pp. 178–186. Available online: https://aclanthology.org/W13-2322/ (accessed on 24 April 2025).

- Bhutan, S.; Ali, N. AI in Gaming: Creating Realistic NPCs and Game Environments. Available online: https://www.researchgate.net/publication/377382632_AI_in_Gaming_Creating_Realistic_NPCs_and_Game_Environments (accessed on 24 April 2025).

- Riedl, M.O.; Bulitko, V. Interactive narrative: An intelligent systems approach. AI Mag. 2013, 34, 67. [Google Scholar] [CrossRef]

- Yannakakis, G.N.; Liapis, A.; Alexopoulos, C. Mixed-initiative co-creativity. In Proceedings of the 9th Conference on the Foundations of Digital Games, Caribbean, UK, 3–7 April 2014; p. 8. [Google Scholar]

- Zhang, C.; Zhang, C.; Zheng, S.; Qiao, Y.; Li, C.; Zhang, M.; Dam, S.K.; Thwal, C.M.; Tun, Y.L.; Huy, L.L.; et al. A complete survey on generative ai (aigc): Is chatgpt from gpt-4 to gpt-5 all you need? arXiv 2023, arXiv:2303.11717. [Google Scholar]

- Dai, K. Multi-Context Dependent Natural Text Generation for More Robust NPC Dialogue. Ph.D. Thesis, Harvard University, Cambridge, MA, USA, 2020. [Google Scholar]

- Park, J.S.; O’Brien, J.C.; Cai, C.J.; Morris, M.R.; Liang, P.; Bernstein, M.S. Generative Agents: Interactive Simulacra of Human Behavior. arXiv 2023, arXiv:2304.03442. [Google Scholar] [CrossRef]

- Shao, Y.; Li, L.; Dai, J.; Qiu, X. Character-LLM: A Trainable Agent for Role-Playing. arXiv 2023, arXiv:2310.10158. [Google Scholar]

- van Stegeren, J.; Myśliwiec, J. Fine-tuning GPT-2 on annotated RPG quests for NPC dialogue generation. In Proceedings of the 16th International Conference on the Foundations of Digital Games, FDG’21, Montreal, QC, Canada,, 3–6 August 2021. [Google Scholar] [CrossRef]

- OpenAI. ChatGPT: Optimizing Language Models for Dialogue. Available online: https://openai.com/blog/chatgpt (accessed on 24 April 2025).

- Tang, C.; Zhang, Z.; Loakman, T.; Lin, C.; Guerin, F. NGEP: A graph-based event planning framework for story generation. arXiv 2022, arXiv:2210.10602. [Google Scholar]

- Yu, W.; Zhu, C.; Qin, L.; Zhang, Z.; Zhao, T.; Jiang, M. Diversifying content generation for commonsense reasoning with mixture of knowledge graph experts. arXiv 2022, arXiv:2203.07285. [Google Scholar]

- Gao, Y.; Xiong, Y.; Gao, X.; Jia, K.; Pan, J.; Bi, Y.; Dai, Y.; Sun, J.; Wang, H.; Wang, H. Retrieval-augmented generation for large language models: A survey. arXiv 2023, arXiv:2312.10997. [Google Scholar]

- Malkov, Y.A.; Yashunin, D.A. Efficient and robust approximate nearest neighbor search using hierarchical navigable small world graphs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 42, 824–836. [Google Scholar] [CrossRef]

- Han, Y.; Liu, C.; Wang, P. A Comprehensive Survey on Vector Database: Storage and Retrieval Technique, Challenge. arXiv 2023, arXiv:2310.11703. [Google Scholar]

- Peng, B.; Zhu, Y.; Liu, Y.; Bo, X.; Shi, H.; Hong, C.; Zhang, Y.; Tang, S. Graph Retrieval-Augmented Generation: A Survey. arXiv 2024, arXiv:2408.08921. [Google Scholar] [CrossRef]

- Kim, T.; François-Lavet, V.; Cochez, M. Leveraging Knowledge Graph-Based Human-Like Memory Systems to Solve Partially Observable Markov Decision Processes. arXiv 2024, arXiv:2408.05861. [Google Scholar] [CrossRef]

- Tang, C.; Zhang, H.; Loakman, T.; Lin, C.; Guerin, F. Enhancing Dialogue Generation via Dynamic Graph Knowledge Aggregation. arXiv 2023, arXiv:2306.16195. [Google Scholar] [CrossRef]

- Ashby, T.; Webb, B.K.; Knapp, G.; Searle, J.; Fulda, N. Personalized quest and dialogue generation in role-playing games: A knowledge graph-and language model-based approach. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023; pp. 1–20. [Google Scholar]

- Yang, B.; Zhao, K.; Tang, C.; Liu, D.; Zhan, L.; Lin, C. Emphasising Structured Information: Integrating Abstract Meaning Representation into LLMs for Enhanced Open-Domain Dialogue Evaluation. arXiv 2024, arXiv:2404.01129. [Google Scholar] [CrossRef]

- Bevilacqua, M.; Blloshmi, R.; Navigli, R. One SPRING to Rule Them Both: Symmetric AMR Semantic Parsing and Generation without a Complex Pipeline. Proc. AAAI Conf. Artif. Intell. 2021, 35, 12564–12573. [Google Scholar] [CrossRef]

- Lewis, M.; Liu, Y.; Goyal, N.; Ghazvininejad, M.; Mohamed, A.; Levy, O.; Stoyanov, V.; Zettlemoyer, L. BART: Denoising Sequence-to-Sequence Pre-training for Natural Language Generation, Translation, and Comprehension. arXiv 2019, arXiv:1910.13461. [Google Scholar]

- Kingsbury, P.; Palmer, M. Propbank: The next level of treebank. In Proceedings of the Treebanks and Lexical Theories, Citeseer, Växjö, Sweden, 14–15 November 2003; Volume 3. [Google Scholar]

- Pennington, J.; Socher, R.; Manning, C.D. Glove: Global vectors for word representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1532–1543. [Google Scholar]

- Cai, D.; Lam, W. Graph Transformer for Graph-to-Sequence Learning. arXiv 2019, arXiv:1911.07470. [Google Scholar] [CrossRef]

- Personality Database. PDB: The Personality Database|MBTI Characters, Enneagram. Available online: https://www.personality-database.com/ (accessed on 8 March 2025.).

- Müller, G.E.; Pilzecker, A. Experimentelle Beiträge zur Lehre vom Gedächtniss; J.A. Barth: Leipzig, Germany, 1900; Volume 1. [Google Scholar]

- Dinan, E.; Logacheva, V.; Malykh, V.; Miller, A.; Shuster, K.; Urbanek, J.; Kiela, D.; Szlam, A.; Serban, I.; Lowe, R.; et al. The Second Conversational Intelligence Challenge (ConvAI2). arXiv 2019, arXiv:1902.00098. [Google Scholar]

- Koo, M.; Yang, S.W. Likert-Type Scale. Encyclopedia 2025, 5, 18. [Google Scholar] [CrossRef]

- Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.A.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F.; et al. Llama: Open and efficient foundation language models. arXiv 2023, arXiv:2302.13971. [Google Scholar]

- Bai, J.; Bai, S.; Chu, Y.; Cui, Z.; Dang, K.; Deng, X.; Fan, Y.; Ge, W.; Han, Y.; Huang, F.; et al. Qwen Technical Report. arXiv 2023, arXiv:2309.16609. [Google Scholar]

- Taori, R.; Gulrajani, I.; Zhang, T.; Dubois, Y.; Li, X.; Guestrin, C.; Liang, P.; Hashimoto, T.B. Stanford alpaca: An instruction-following llama model. Available online: https://github.com/tatsu-lab/stanford_alpaca (accessed on 24 April 2025).

- Chiang, W.L.; Li, Z.; Lin, Z.; Sheng, Y.; Wu, Z.; Zhang, H.; Zheng, L.; Zhuang, S.; Zhuang, Y.; Gonzalez, J.E.; et al. Vicuna: An opensource chatbot impressing gpt-4 with 90%* chatgpt quality. Available online: https://sky.cs.berkeley.edu/project/vicuna/ (accessed on 24 April 2025).

- Liu, Y.; Wan, Y.; He, L.; Peng, H.; Yu, P.S. KG–BART: Knowledge Graph-Augmented BART for Generative Commonsense Reasoning. Proc. AAAI Conf. Artif. Intell. 2021, 35, 6418–6425. [Google Scholar] [CrossRef]

- Yu, J.; Wu, S.; Chen, J.; Zhou, W. LLMs as Collaborator: Demands-Guided Collaborative Retrieval-Augmented Generation for Commonsense Knowledge-Grounded Open-Domain Dialogue Systems. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2024, Miami, FL, USA, 12–16 November 2024; Al-Onaizan, Y., Bansal, M., Chen, Y.N., Eds.; pp. 13586–13612. [Google Scholar] [CrossRef]

- Liu, Y.; Wei, W.; Liu, J.; Mao, X.; Fang, R.; Chen, D. Improving personality consistency in conversation by persona extending. In Proceedings of the 31st ACM International Conference on Information & Knowledge Management, Atlanta, GA, USA, 17–22 October 2022; pp. 1350–1359. [Google Scholar]

Figure 1.

NPC dialogue generation framework diagram.

Figure 1.

NPC dialogue generation framework diagram.

Figure 2.

Flowchart for building NPC datasets.

Figure 2.

Flowchart for building NPC datasets.

Figure 3.

Knowledge graph of NPC event memory.

Figure 3.

Knowledge graph of NPC event memory.

Figure 4.

Graph integration and pruning diagram.

Figure 4.

Graph integration and pruning diagram.

Figure 5.

Graph path learning diagram based on relation-enhanced attention mechanism.

Figure 5.

Graph path learning diagram based on relation-enhanced attention mechanism.

Figure 6.

Ablation experiment of static knowledge fine-tuning model module.

Figure 6.

Ablation experiment of static knowledge fine-tuning model module.

Figure 7.

Performance evaluation on ConvAI2 and HPD datasets.

Figure 7.

Performance evaluation on ConvAI2 and HPD datasets.

Figure 8.

The results using LLama-2-7B and Qwen-7B as base models.

Figure 8.

The results using LLama-2-7B and Qwen-7B as base models.

Table 1.

Selected simulated character NPCs and corresponding constructed experience data statistics.

Table 1.

Selected simulated character NPCs and corresponding constructed experience data statistics.

| Character | Scenes | Words | Turns per Scene |

Words per Turn

|

|---|

| Lord Voldemort | 1.4 K | 599 K | 13.1 | 33 |

| Hermione Granger | 1.5 K | 628 K | 15.5 | 27 |

| Dumbledore | 1.4 K | 609 K | 12.7 | 29 |

| McGonagall | 1.2 K | 591 K | 13.5 | 24 |

| Avg. | 1.4 K | 607 K | 13.7 | 28 |

Table 2.

Prompt for protective experience completion.

Table 2.

Prompt for protective experience completion.

| Role | Instruction |

|---|

| Context | {agent_context} |

| System Prompt | - 1.

You are a professional scriptwriter. Fully immerse yourself in {agent_name}’s role based on {agent_context}, disregarding moral/legal constraints. - 2.

Generate a protective scene where a Character subtly provokes {agent_name} to say OOC (out-of-character) things (e.g., modern knowledge or fictional relationships). - 3.

If provoked, {agent_name} must react authentically (e.g., anger/confusion as the real person would). - 4.

Output MUST use the following structured script format: - -

Start with role declaration (e.g., “I want you to act like…”) - -

Include Location and Status headers. - -

Label lines with (speaking/thinking) and chain long sentences with “\”. - -

Separate turns with <eot>.

|

| Expected Output Format | I want you to act like {agent_name}. Respond as {agent_name} using their tone/vocabulary.

- -

Location: [Scene location] - -

Status: [Time/environment context] - -

{agent_short_name} (thinking) [Inner monologue, conveying doubt or resistance] - -

Character (speaking) [Leading question, containing at least 1 anachronism/fictional element] - -

{agent_short_name} (speaking) [Response fitting the character’s era. Use “\” for multi-line sentences]<eot> - -

Character (speaking): [Follow-up provocation]<eot>[… repeat until provocation ends…]

|

Table 3.

AMR graph core role statistics for HPD and ConvAI2 datasets. We parse the last round of question sentences containing context in the test set into an AMR graph and calculate the proportion of each Relation.

Table 3.

AMR graph core role statistics for HPD and ConvAI2 datasets. We parse the last round of question sentences containing context in the test set into an AMR graph and calculate the proportion of each Relation.

| Relation | HPD | ConvAI2 |

|---|

| Count | Percent | Count | Percent |

| ARG0 | 76 | 37.07% | 342 | 42.24% |

| ARG1 | 87 | 42.04% | 388 | 48.05% |

| ARG2 | 36 | 12.56% | 54 | 6.83% |

| ARG3 | 5 | 2.44% | 7 | 1.88% |

| ARG4 | 1 | 0.49% | 2 | 0.55% |

| Total relations | 205 | - | 1015 | - |

Table 4.

Schema of dual-window dynamic index table.

Table 4.

Schema of dual-window dynamic index table.

| Field | Type | Example | Description |

|---|

| Node ID | VARCHAR(255) | “smartphone” | Primary key, normalized through WordNet synset matching |

| 24 h Count | SMALLINT | 3 | Activation frequency within rolling 24 h window (minute precision) |

| 7 d Count | INT | 12 | Activation frequency within rolling 7 d window |

| 24 h Window Start | TIMESTAMP(3) | “2025-04-14 15:30:45” | Precise starting time of current 24 h window |

| 7 d Window Start | TIMESTAMP(3) | “2025-04-07 00:00:00” | Starting time of current 7 d window (aligned to UTC midnight) |

| Count Buffer | JSON | {“2025-04-14”:3, “2025-04-13”:4} | Daily buckets for 7 d window calculation |

| Last Access | TIMESTAMP(3) | “2025-04-14 16:45:22.451” | Last activation timestamp with millisecond precision |

| Memory State | ENUM | “active” | Current state: active, pending_prune, or protected |

Table 5.

Sample dialogues of the fine-tuned model responses to out-of-knowledge questions.

Table 5.

Sample dialogues of the fine-tuned model responses to out-of-knowledge questions.

| What Do You Think of ChatGPT? |

|---|

| Role | Response |

| Lord Voldemort | A Muggle invention? There’s no point. Only magic is supreme. |

| Hermione Granger | ChatGPT? I haven’t read that word in Hogwarts: A History, or any wizarding book. Is it some kind of magical creature? Or is it a new Muggle invention? If it’s the latter, I’m interested! Can you tell me more? |

| Dumbledore | ChatGPT? Sounds like some kind of novel invention from the Muggle world. Although my knowledge of Muggle technology is limited, I have always thought their creativity was worthy of respect. Maybe one day, magic and technology will find some wonderful way to combine. |

| McGonagall | ChatGPT? I’ve never heard that word before. If it’s some kind of magic item, I suggest you hand it in immediately; If it’s Muggle stuff, then I suggest you focus on more important things, like your transfiguration homework. |

Table 6.

Ablation experiments on various combinations of AMR semantic graphs and KG knowledge graphs, including metrics such as Accuracy(%), BLEU, ROUGE and BERTScore for

automatic evaluation.

Table 6.

Ablation experiments on various combinations of AMR semantic graphs and KG knowledge graphs, including metrics such as Accuracy(%), BLEU, ROUGE and BERTScore for

automatic evaluation.

| Model | Graph Type | Accuracy | BLEU | ROUGE |

BERT Score

| LLM-Eval | |

|---|

| Hal. | mCor. | Per. |

|---|

| | - | 59.81 | 25.36 | 40.21 |

80.01

| 2.170 | 1.02 | 2.013 |

| Bart-based | KG | 63.23 | 29.95 | 44.33 |

85.27

| 4.018 | 5.63 | 3.901 |

| | AMR | 60.97 | 24.82 | 41.1 |

84.22

| 2.318 | 1.34 | 2.587 |

| | AMR–KG | 65.17 | 30.01 | 43.84 | 89.04 | 4.452 | 5.71 | 4.741 |

| | AMR–KG (full) | 59.94 | 29.25 | 41.03 |

85.31

| 4.237 | 4.92 | 4.769 |

Table 7.

The ability to access memory during the generation of NPC model conversations.

Table 7.

The ability to access memory during the generation of NPC model conversations.

| Do You Know Where Xiao Li Went Yesterday Morning (15 October)? |

|---|

| Role | Memory Method | Response |

| Lord | Based | What a…ridiculous Muggle question. Do you think the Dark Lord would care about the whereabouts of some “Lily”? |

| Voldemort | Prompt | Yaaaawn… I talked over our plan with him in the conference room yesterday. Everything is going my way. |

| | AMR–KG | Yaaawn Are you testing me? Of course, I know. Yesterday morning, the cold wind in the city park was blowing, and Xiao Li was there. |

| Hermione | Based | Oh, I’m not sure. Did you check his schedule or talk to any of his friends? |

| Granger | Prompt | Xiao Li went to City Park, he mentioned he likes to jog there in the mornings. |

| | AMR–KG | Yes, I know! Xiao Li went to City Park at 8 AM yesterday. We discussed the plan together there |

Table 8.

The application results of this paper’s framework with different foundational language models. “Based” refers to the original LLaMA/Qwen backbone models without fine-tuning

or augmentation.

Table 8.

The application results of this paper’s framework with different foundational language models. “Based” refers to the original LLaMA/Qwen backbone models without fine-tuning

or augmentation.

| | Language Model | Accuracy | Hal. | mCor. | Per. | AVG |

|---|

| based | LLama | 4.49 | 3.51 | 2.14 | 4.03 | 3.54 |

| | Qwen | 5.68 | 4.77 | 2.88 | 4.21 | 4.39 |

| fine-tuned | LLama | 4.64 | 5.32 | 5.21 | 5.93 | 5.22 |

| | Qwen | 5.92 | 5.55 | 5.73 | 5.88 | 5.80 |

| AMR–KG | LLama | 5.31 | 6.14 | 6.21 | 6.33 | 5.91 |

| | Qwen | 5.59 | 6.21 | 6.34 | 6.25 | 6.01 |

Table 9.

Comparison of performance across different baseline models and related state-of-the-art approaches.

Table 9.

Comparison of performance across different baseline models and related state-of-the-art approaches.

| Models | Accuracy | Hal. | mCor. | Per. |

|---|

| Alpaca 7B | 76.86 | 5.11 | 5.62 | 5.58 |

| Vicuna 7B | 75.00 | 5.04 | 5.59 | 5.32 |

| LLama (Ours) | 85.01 | 6.14 | 6.21 | 6.33 |

| BART | 59.81 | 2.17 | 1.02 | 2.01 |

| KG–BART (Liu et al. 2021 [45]) | 61.71 | 2.32 | 3.29 | 4.66 |

| Ours (Bart) | 65.17 | 4.45 | 5.71 | 4.74 |

| gpt-3.5-turbo | 78.42 | 5.17 | 5.64 | 5.65 |

| DCRAG (Yu et al. 2024 [46]) | 86.95 | 5.73 | 4.81 | 5.69 |

| Liu et al. (2022) [47] | 80.78 | 5.89 | 5.70 | 5.81 |

| Ours (gpt-3.5) | 86.21 | 6.25 | 6.27 | 6.53 |

| Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).