Ethical Integration of AI in Healthcare Project Management: Islamic and Cultural Perspectives

Abstract

1. Introduction

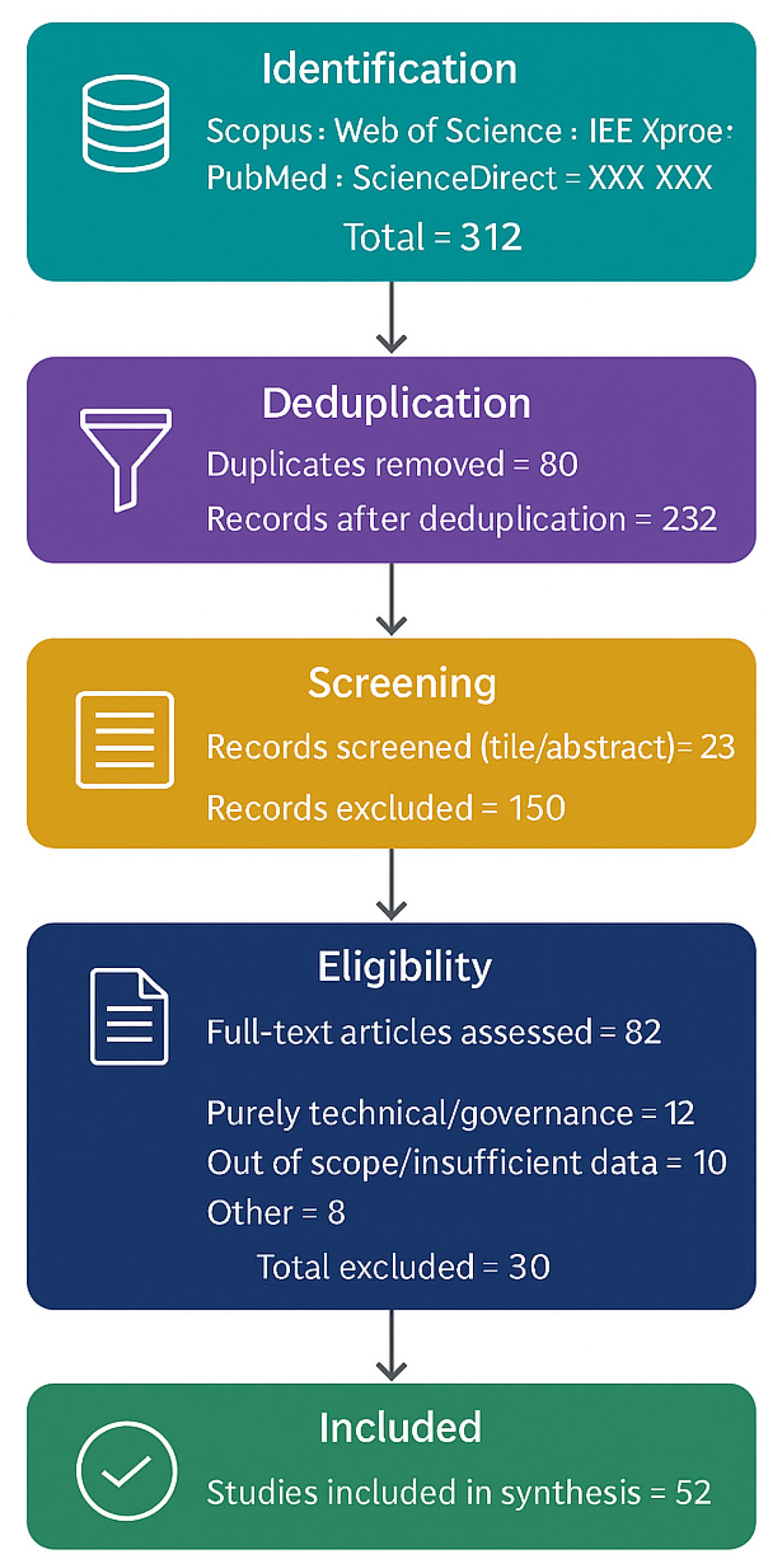

2. Methodology

3. Results—A Maqāṣid-Aligned Conceptual Framework for ANN-DSS

3.1. Overview and Novelty

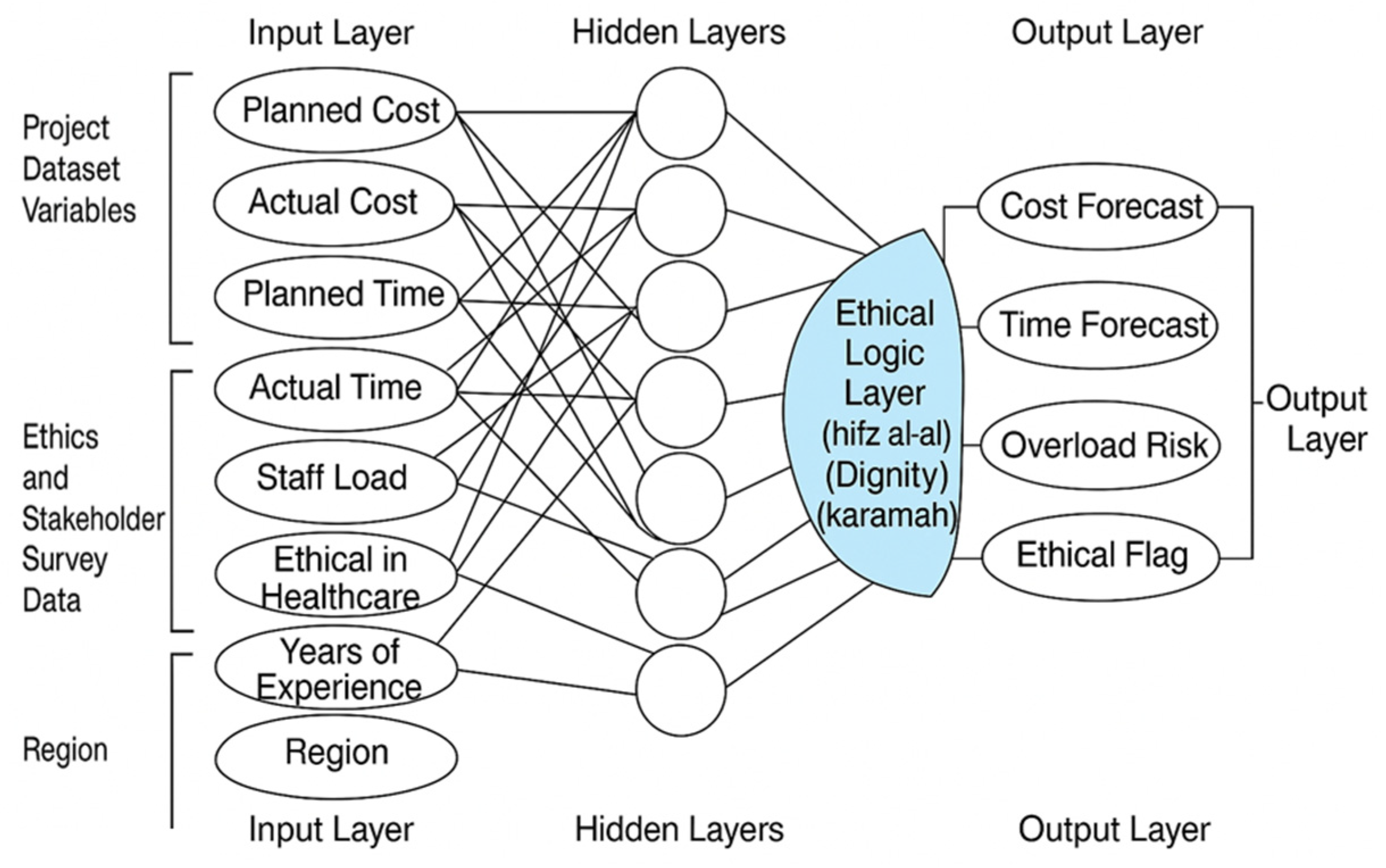

3.2. Architecture

3.3. Ethical Logic Layer

3.4. Dual Evaluation and Auditing

3.5. Stakeholder Co-Design

3.6. Implications

3.7. Comparative Positioning and Governance Boundaries

4. AI Applications in Healthcare Project Management

4.1. Technical Promise of ANN in Healthcare Projects

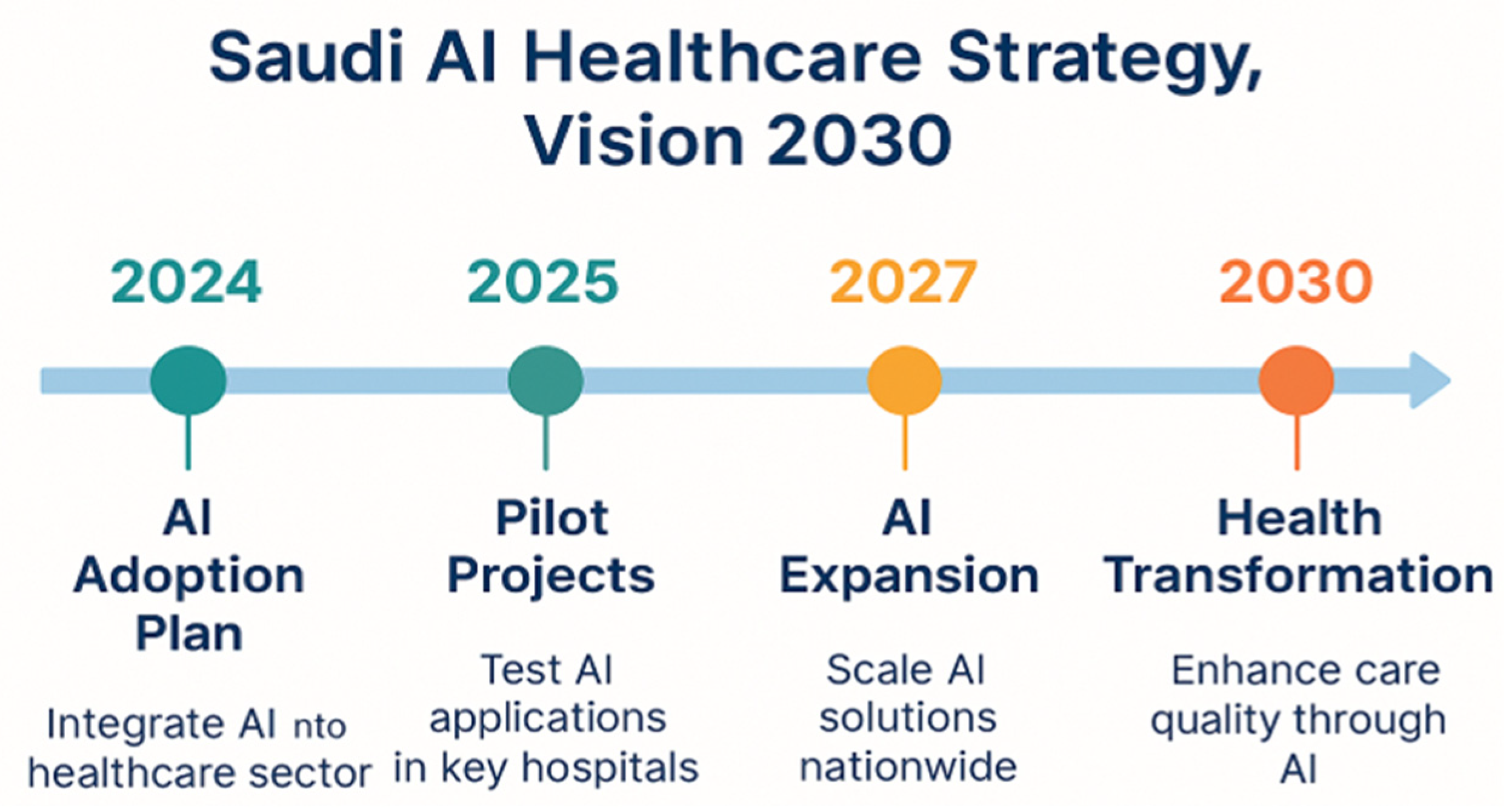

4.2. Saudi AI Integration Under Vision 2030

4.3. Limitations of Technocratic Deployment

4.4. Ethical Vacuum in the Existing Literature

4.5. Absence of Stakeholder-Centred Design

4.6. Towards Ethically Embedded ANN Systems

4.7. Summary of Technical and Ethical Gaps

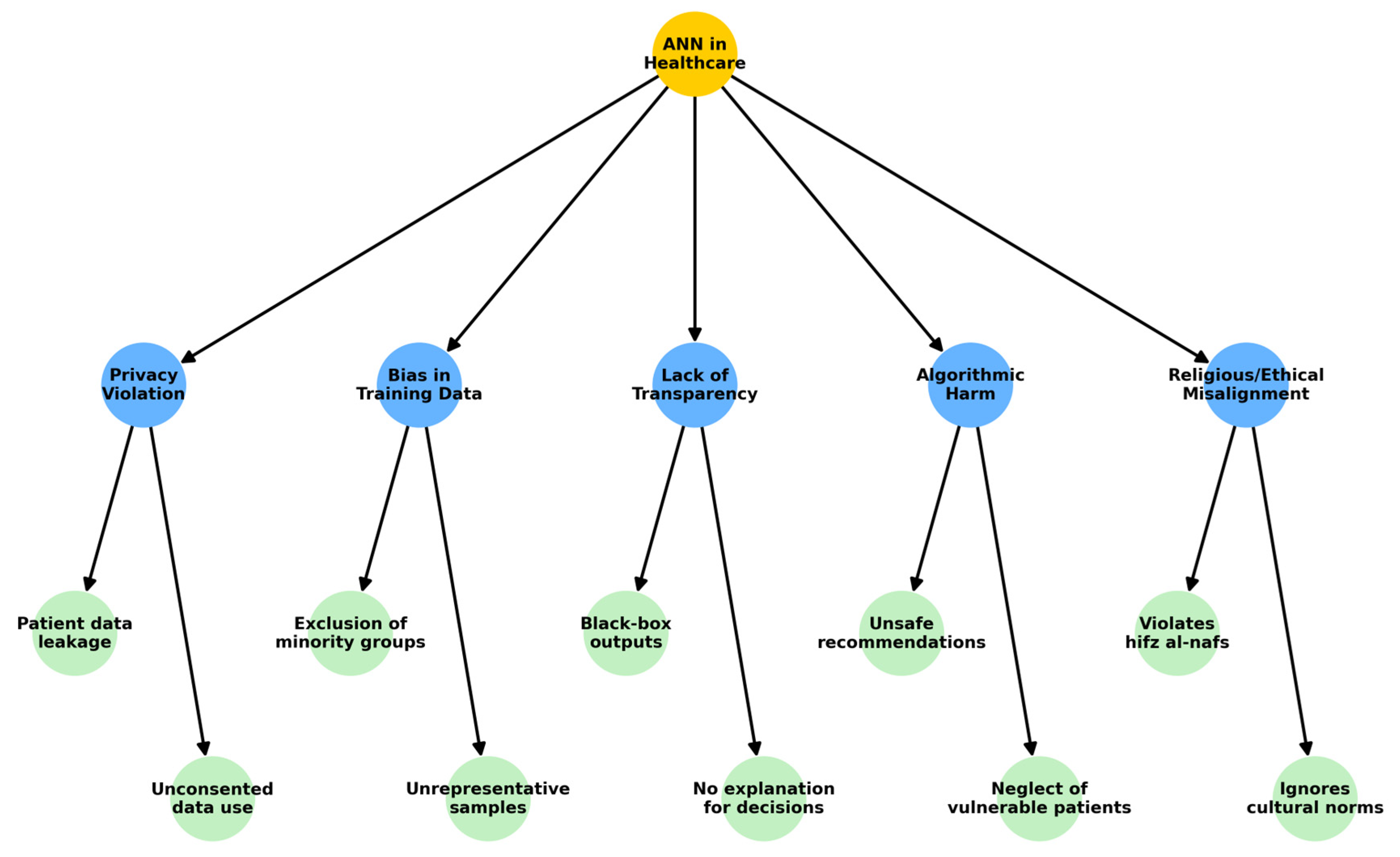

5. Ethical Challenges in ANN-Based Healthcare Systems

5.1. Value Misalignment and Moral Conflict

5.2. Opacity and Accountability Deficits

5.3. Exclusion of Affected Stakeholders

5.4. Cultural and Religious Blindness

5.5. Critical Evaluation

- Value-sensitive to Islamic jurisprudence

- Transparent in decision logic and outputs

- Inclusive in its development processes

- Context-aware of social, cultural, and theological expectations

6. Discussion of Ethical Issues in ANN Healthcare Applications

7. Islamic Ethical Foundations

7.1. From Principle to Practice: Gaps in Operationalisation

- ḥifẓ al-nafs (protection of life) could be encoded as a non-negotiable risk threshold in patient safety algorithms.

- ḥifẓ al-ʿaql (protection of intellect) could demand safeguards against algorithmic manipulation or misinformation.

- ḥifẓ al-dīn (preservation of faith) could require culturally sensitive defaults in AI outputs.

7.2. Toward a Shariah-Aligned Ethical AI Architecture

- Adopt Fiqh-based filters to vet ethically sensitive outputs.

- Include maqāṣid-informed loss functions to weigh decisions not only by cost or accuracy but also by their ethical impact.

- Ensure fatwa-aligned auditability, enabling traceable compliance with Islamic ethical rulings.
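The second bullet can be illustrated with a minimal sketch of one way a maqāṣid-informed loss might be composed. It assumes a regression model that forecasts nurse staffing levels. To the usual mean squared error, it adds a penalty for forecasts that fall below a safe staffing floor (ḥifẓ al-nafs). The floor, the weight `lam` and the squared-shortfall form of the penalty are hypothetical choices made for illustration; they are not values or methods from this study. In an actual ANN, the same terms would be written with the training framework's differentiable operations.

```python
def mse(preds, targets):
    """Conventional accuracy term: mean squared error."""
    return sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(preds)


def safety_penalty(preds, safe_floor):
    """Ethical term (ḥifẓ al-nafs): mean squared shortfall below a safe floor.

    Forecasts at or above the floor contribute nothing; forecasts below it
    are penalised by the square of the shortfall.
    """
    return sum(max(0.0, safe_floor - p) ** 2 for p in preds) / len(preds)


def maqasid_loss(preds, targets, safe_floor, lam=5.0):
    """Accuracy loss plus a weighted ethical-impact penalty.

    lam is a hypothetical weight: larger values make unsafe forecasts
    costlier relative to ordinary prediction error.
    """
    return mse(preds, targets) + lam * safety_penalty(preds, safe_floor)
```

For example, with forecasts `[10, 6]`, targets `[10, 7]` and a floor of 8, the accuracy term is only 0.5. The shortfall of 2 below the floor, however, adds 5.0 × 2.0, so training is pushed away from unsafe recommendations even when they are close to historical data.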

7.3. Ethical Validation Through Shariah Governance

7.4. Operationalising Maqāṣid in ANN Design

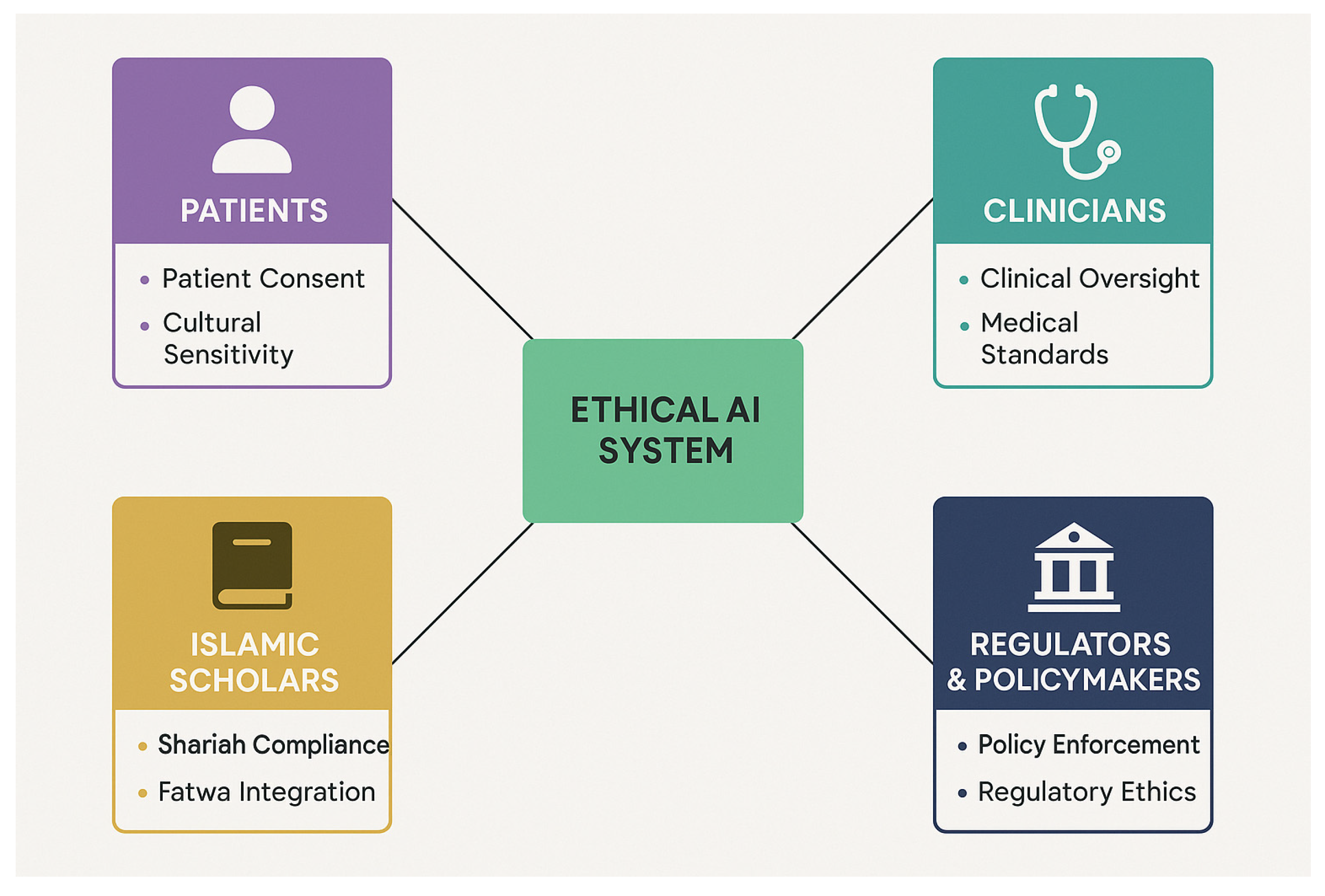

8. Stakeholder-Centric AI Design

8.1. Structural Deficiencies in Existing AI Development

8.2. Trust and Moral Legitimacy as Design Outcomes

8.3. Toward Inclusive and Ethical AI Co-Design

- Religious scholars to vet outputs against Islamic legal reasoning.

- Clinicians to ensure clinical usability and risk sensitivity.

- Patients and caregivers to validate relevance and cultural acceptability.

- Engineers and developers to implement technical requirements.

- Policymakers to align AI tools with public health goals.

8.4. Critical Imperative for the Saudi Context

- Clinicians contribute domain expertise and evaluate whether AI recommendations align with clinical best practices and patient care standards.

- Patients provide insight into experiential, cultural, and personal values that shape the acceptability and trustworthiness of AI interventions.

- Islamic scholars offer normative oversight, ensuring that AI outputs and processes respect principles of Islamic jurisprudence, particularly the maqāṣid al-sharīʿah.

- Regulators and policymakers ensure compliance with legal, institutional, and national healthcare strategies, including Vision 2030 and ethical governance mandates.

9. ANN-Based Ethical Decision Support Systems (DSS)

- Ethics-augmented training datasets: Annotating historical project data with ethical markers derived from Islamic legal opinions, clinical ethics codes, and stakeholder consultation feedback. These markers could include red flags for dignity violations, unsafe cost-cutting, or unjust treatment recommendations.

- Maqāṣid-based filtering layers: Introducing an intermediary decision layer that evaluates ANN outputs against predefined maqāṣid constraints. For example, if the model suggests staff reduction to reduce costs, this recommendation is filtered through criteria such as ḥifẓ al-nafs (preservation of life) and karāmah (dignity), blocking or flagging ethically problematic outputs.

- Dynamic ethical calibration: Continuously updating the model’s thresholds and classification rules based on new fatwas, patient advocacy data, and evolving cultural standards. This is intended to keep the system jurisprudentially current and socially responsive.

These mechanisms are summarised in Table 10.
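Dynamic ethical calibration implies that safeguard thresholds change over time without erasing the values on which earlier decisions relied. The following is a minimal sketch of one way to support this. It assumes a hypothetical `ThresholdRegistry` that keeps every version of a named threshold (such as δ or α) together with its effective date, source and rationale. An auditor can then recover the value that was in force on any given day. The class and field names are illustrative, not part of the framework as published.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass(frozen=True)
class ThresholdVersion:
    value: float
    effective: date      # first day this value applies
    source: str          # e.g. "co-design workshop" or "updated fatwa"
    rationale: str


@dataclass
class ThresholdRegistry:
    """Hypothetical append-only store of versioned safeguard thresholds."""
    history: dict = field(default_factory=dict)   # name -> [ThresholdVersion]

    def update(self, name, value, effective, source, rationale):
        """Add a new version; earlier versions are kept for auditing."""
        versions = self.history.setdefault(name, [])
        versions.append(ThresholdVersion(value, effective, source, rationale))
        versions.sort(key=lambda v: v.effective)   # keep chronological order

    def value_on(self, name, day):
        """Return the value of `name` that was in force on `day`."""
        in_force = [v for v in self.history.get(name, []) if v.effective <= day]
        if not in_force:
            raise KeyError(f"no version of {name!r} in force on {day}")
        return in_force[-1].value   # latest version not after `day`
```

Under this design, an adjustment such as Appendix B's "Adjusted δ after workshop" would be recorded as a new version of δ rather than overwriting the old one.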

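The ethical rule engine screens each ANN output against every rule in the rule set. It applies the action of any rule that is triggered and logs the corresponding audit artefact:

```python
def apply_ethical_rules(ann_results, ethical_rule_set, audit_log):
    """Screen every ANN output against every ethical rule; log each trigger."""
    adjusted_outputs = []
    for y_hat in ann_results:
        for r in ethical_rule_set:
            if r.trigger(y_hat):                  # rule condition is met
                y_hat = r.action(y_hat)           # e.g. block, re-rank, flag
                audit_log.append(r.audit_artifact)
        adjusted_outputs.append(y_hat)
    return adjusted_outputs
```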
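To make the rule set more concrete, the following self-contained sketch instantiates two of the safeguards from Appendix B: R-01 (unsafe staff reduction, ḥifẓ al-nafs) and R-03 (religious preference, ḥifẓ al-dīn). The output fields (`id`, `nurse_patient_ratio`, `religious_conflict`), the action labels and the `SAFE_RATIO` value are hypothetical placeholders, because the study does not specify a safe staffing threshold. When several rules fire on the same output, all of their actions are kept, so a reviewer sees every concern rather than only the last one.

```python
from dataclasses import dataclass
from typing import Callable

SAFE_RATIO = 0.25  # hypothetical nurse/patient floor; the study sets no value


@dataclass(frozen=True)
class Rule:
    rule_id: str
    maqsad: str                        # linked maqāṣid theme
    trigger: Callable[[dict], bool]    # condition on one ANN output
    action: str                        # hypothetical action label


RULES = [
    # Outputs without a staffing ratio are not staffing decisions, so R-01 skips them.
    Rule("R-01", "ḥifẓ al-nafs",
         lambda y: y.get("nurse_patient_ratio", float("inf")) < SAFE_RATIO,
         "block_and_escalate_to_human_review"),
    Rule("R-03", "ḥifẓ al-dīn",
         lambda y: y.get("religious_conflict", False),
         "flag_and_offer_alternative"),
]


def apply_rules(ann_results, rules):
    """Screen each output; return adjusted outputs and an audit log."""
    adjusted, audit_log = [], []
    for y_hat in ann_results:
        fired = [r for r in rules if r.trigger(y_hat)]
        for r in fired:
            audit_log.append({"output_id": y_hat["id"], "rule": r.rule_id,
                              "maqsad": r.maqsad, "action": r.action})
        actions = [r.action for r in fired] or ["approve"]
        adjusted.append({**y_hat, "actions": actions})  # original left unchanged
    return adjusted, audit_log
```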

Thresholds for these safeguards were not applied in this study; they are proposed as starting points for pilot testing. Initial values were derived from safe clinical baselines and established fairness metrics. For example, δ = 0.1 for the demographic parity difference follows [14], and α = 0.8 for the model confidence score follows [15]. These values will be refined through participatory co-design workshops with clinicians, Islamic scholars, and policy leaders to ensure cultural legitimacy and clinical safety. Local calibration is planned during pilot studies.
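The two proposed thresholds can be checked with very little code. The following sketch assumes binary allocation decisions (for example, 1 = ICU bed allocated) and one group label per patient. Here, the demographic parity difference is computed as the largest gap in positive-decision rate between any two groups, which is one common definition. Only δ = 0.1 and α = 0.8 come from the text; the function names are hypothetical.

```python
DELTA = 0.1  # demographic parity difference bound (from the text, after [14])
ALPHA = 0.8  # minimum model confidence (from the text, after [15])


def demographic_parity_difference(decisions, groups):
    """Largest gap in positive-decision rate between any two groups.

    decisions: 0/1 outcomes (e.g. 1 = ICU bed allocated)
    groups:    group label for each decision, in the same order
    """
    totals, positives = {}, {}
    for d, g in zip(decisions, groups):
        totals[g] = totals.get(g, 0) + 1
        positives[g] = positives.get(g, 0) + d
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)


def needs_fairness_rerank(decisions, groups, delta=DELTA):
    """Justice (ʿadl) trigger: the parity gap exceeds delta."""
    return demographic_parity_difference(decisions, groups) > delta


def needs_justification(confidence, high_harm, alpha=ALPHA):
    """Dignity (karāmah) trigger: low confidence in a high-harm case."""
    return high_harm and confidence < alpha
```

If younger patients receive beds in three of four cases and older patients in one of four, the gap is 0.75 − 0.25 = 0.5. This exceeds δ, so the justice rule would request re-ranking.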

9.1. Ethical Reasoning as a Technical Component

9.2. Implementation Challenges and Institutional Readiness

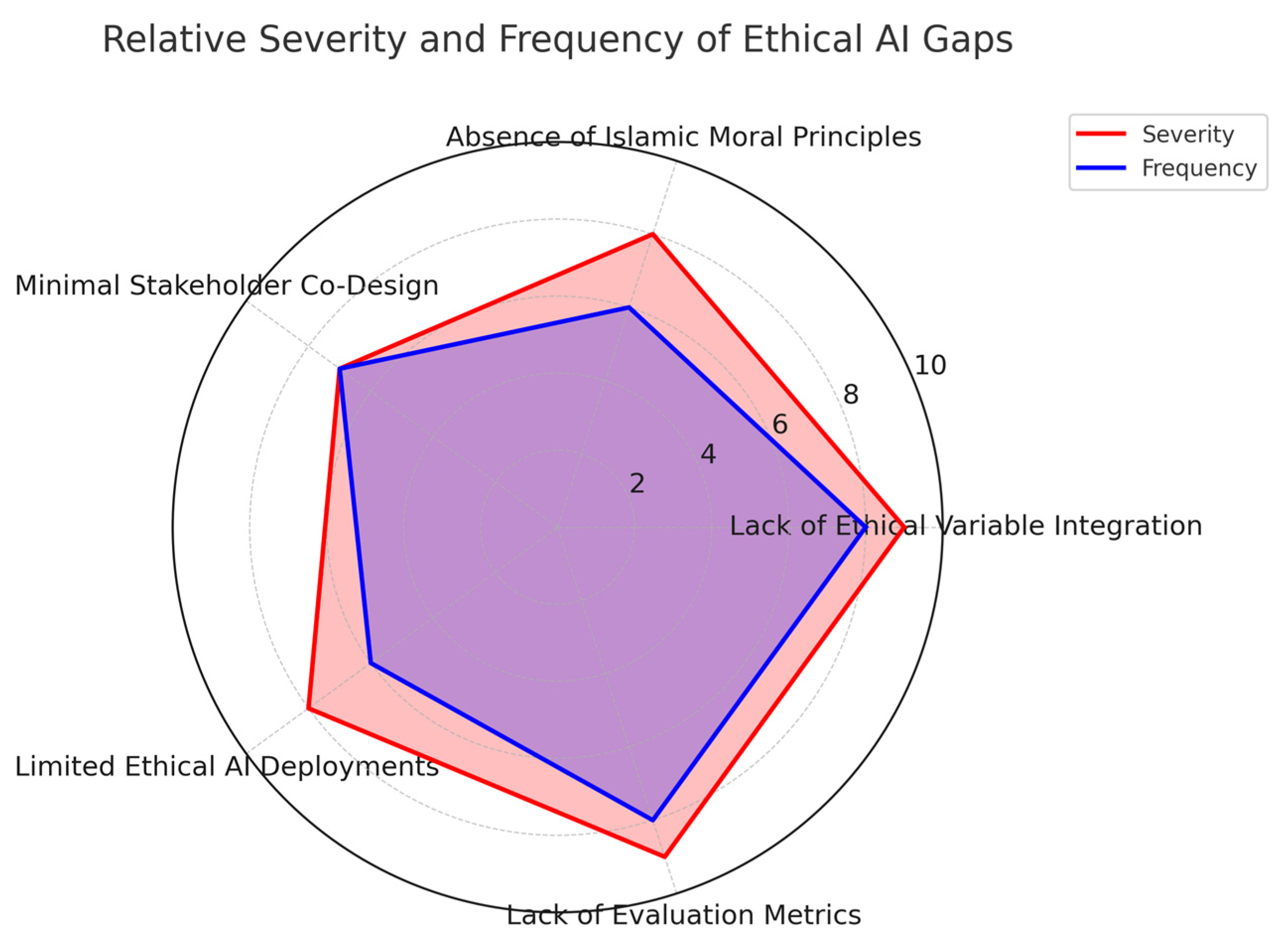

10. Gaps in the Literature: The Review Reveals Five Major Gaps

10.1. Lack of Ethical Variable Integration in AI Training Data

10.2. Absence of Islamic Moral Principles in AI System Design

10.3. Minimal Stakeholder Co-Design in AI Development

10.4. Limited Case Studies of Ethical AI Deployment in Healthcare

10.5. Lack of Evaluation Metrics for Ethical Compliance in ANN Systems

10.6. Summary

- Gap 1: Lack of ethical variable integration in AI training data.

- Gap 2: Absence of Islamic moral principles in AI system design.

- Gap 3: Minimal stakeholder co-design in AI development.

- Gap 4: Limited case studies of ethical AI deployment in healthcare.

- Gap 5: Lack of evaluation metrics for ethical compliance in ANN systems.

11. Towards a Culturally Aligned Ethical AI

11.1. Theoretical Core: Maqāṣid Al-Sharīʿah

11.2. Stakeholder Input: Participatory Data Collection

- Survey data collection from frontline healthcare staff.

- Semi-structured interviews with patient groups.

- Deliberative consultation with scholars of Islamic jurisprudence.

11.3. System Design: ANN-Based DSS with Ethical Rule Engine

- Pre-trained ethical classifiers using annotated datasets.

- Filtering mechanisms to block outputs that violate dignity, safety, or religious norms.

- Adaptive recalibration based on updated fatwas and stakeholder feedback.

11.4. Evaluation Metrics: Beyond Accuracy

- Predictive Accuracy: Assesses technical performance on the prediction task (e.g., MAE for forecasts, AUC for classifiers).

- Ethical Compliance: Measures system transparency, fairness, and harm minimisation, aligned with maqāṣid standards.
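The following is a minimal sketch of the dual evaluation. It assumes that ethical compliance is approximated as the share of outputs that triggered no safeguard rule, counted, for example, from the audit log. This is a deliberately simple proxy. In practice, transparency, fairness and harm minimisation would each need their own indicators. The function name is hypothetical.

```python
def dual_evaluation(predictions, labels, rule_violations):
    """Report predictive accuracy and an ethical-compliance proxy together.

    rule_violations[i] is the number of safeguard rules triggered by output i
    (for instance, counted from the audit log).
    """
    n = len(predictions)
    if n == 0 or not (n == len(labels) == len(rule_violations)):
        raise ValueError("inputs must be non-empty and of equal length")
    accuracy = sum(p == y for p, y in zip(predictions, labels)) / n
    compliance = sum(v == 0 for v in rule_violations) / n
    return {"accuracy": accuracy, "ethical_compliance": compliance}
```

Reporting both numbers side by side makes visible a model that scores well on accuracy while frequently tripping safeguards.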

11.5. Empirical Implementation: Future Direction

12. AI Healthcare Project Management

13. Conclusion and Roadmap

13.1. Roadmap for Empirical Studies

13.2. Roadmap for Policymaking

13.3. Roadmap for Ethical Design in Practice

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| AI | Artificial Intelligence |

| ANN | Artificial Neural Network |

| DSS | Decision Support System |

| KSA | Kingdom of Saudi Arabia |

| MAE | Mean Absolute Error |

| AUC | Area Under the Curve |

| SHAP | SHapley Additive exPlanations |

| LIME | Local Interpretable Model-agnostic Explanations |

| OECD | Organisation for Economic Co-operation and Development |

| EU | European Union |

| UNESCO | United Nations Educational, Scientific and Cultural Organisation |

| GDP | Gross Domestic Product (appears in references to policy context) |

| KPIs | Key Performance Indicators |

Appendix A. Iterative Coding and Theme Development

| Round | Data Excerpt (Example) | Action Taken | Change Made | Rationale | Impact on Themes |

|---|---|---|---|---|---|

| 1 | “The AI reduced nurses to save costs.” | Add | New code: Unsafe staff reduction | Patient safety concern | Created category Unsafe cost-cutting → Theme: ḥifẓ al-nafs (preservation of life) |

| 1 | “Doctors were confused by the AI decision.” | Add | New code: Lack of explanation | Repeated across multiple cases | Created category Interpretability issues |

| 2 | “Confusing outputs” merged with “Lack of explanation” | Merge | Combined into one stronger code | Overlap in meaning | Reinforced category Interpretability issues → Theme: ḥifẓ al-ʿaql (intellect) |

| 2 | “Users praised system speed” | Discard | Removed from coding | Irrelevant to ethical focus | No effect on themes |

| 3 | “System ignored gender preference in treatment” | Add | New code: Cultural insensitivity | Raised by stakeholders | Created category Faith-sensitive care → Theme: ḥifẓ al-dīn + karāmah |

Appendix B. Iterative Rule Development (Decision Logs)

| Rule ID | Version (Round 1) | Update (Round 2) | Update (Round 3) | Rationale | Linked Maqāṣid Theme |

|---|---|---|---|---|---|

| R-01 | Block nurse reduction if ratio < safe threshold | Added human review requirement | Required logging of decision rationale | Stakeholder concern about safety | ḥifẓ al-nafs (preservation of life) |

| R-02 | Re-rank bed allocation if fairness gap > δ | Adjusted δ after workshop | Require fairness report attached to each run | Ensure practical fairness | ʿadl (justice) |

| R-03 | Respect patient religious preference | No update | Added alternative treatment list | Align with stakeholder input | ḥifẓ al-dīn (faith) |

Appendix C. Evolution of Codes into Themes

| Round | Initial Code | Action | Category | Final Theme |

|---|---|---|---|---|

| 1 | “No explanation of outputs” | Add | Interpretability issues | ḥifẓ al-ʿaql (intellect) |

| 1 | “Unsafe staff reduction” | Add | Unsafe cost-cutting | ḥifẓ al-nafs (life) |

| 2 | “Confusing outputs” | Merge | Interpretability issues | ḥifẓ al-ʿaql (intellect) |

| 2 | “No audit logs” | Add | Weak governance | Accountability and transparency |

| 3 | “Ignored gender preference” | Add | Faith-sensitive care | ḥifẓ al-dīn + karāmah |


References

- Doshi-Velez, F.; Kim, B. Towards a rigorous science of interpretable machine learning. arXiv 2017, arXiv:1702.08608. [Google Scholar] [CrossRef]

- Obermeyer, Z.; Powers, B.; Vogeli, C.; Mullainathan, S. Dissecting racial bias in an algorithm used to manage the health of populations. Science 2019, 366, 447–453. [Google Scholar] [CrossRef]

- Buolamwini, J.; Gebru, T. Gender shades: Intersectional accuracy disparities in commercial gender classification. In Proceedings of the 1st Conference on Fairness, Accountability and Transparency, New York, NY, USA, 23–24 February 2018; PMLR: Birmingham, UK, 2018; pp. 77–91. [Google Scholar]

- Bernstein, M.H.; Atalay, M.K.; Dibble, E.H.; Maxwell, A.W.P.; Karam, A.R.; Agarwal, S.; Ward, R.C.; Healey, T.T.; Baird, G.L. Can incorrect artificial intelligence (AI) results impact radiologists, and if so, what can we do about it? A multi-reader pilot study of lung cancer detection with chest radiography. Eur. Radiol. 2023, 33, 8263–8269. [Google Scholar] [CrossRef] [PubMed]

- Koçak, B.; Ponsiglione, A.; Stanzione, A.; Bluethgen, C.; Santinha, J.; Ugga, L.; Huisman, M.; Klontzas, M.E.; Cannella, R.; Cuocolo, R. Bias in artificial intelligence for medical imaging: Fundamentals, detection, avoidance, mitigation, challenges, ethics, and prospects. Diagn. Interv. Radiol. 2025, 31, 75. [Google Scholar] [CrossRef]

- Mittelstadt, B.D.; Allo, P.; Taddeo, M.; Wachter, S.; Floridi, L. The ethics of algorithms: Mapping the debate. Big Data Soc. 2016, 3, 2053951716679679. [Google Scholar] [CrossRef]

- Ghaly, M. Islamic Perspectives on the Principles of Biomedical Ethics; World Scientific: Singapore, 2016. [Google Scholar]

- Auda, J. Maqasid Al-Shariah as Philosophy of Islamic Law: A Systems Approach; International Institute of Islamic Thought (IIIT): Herndon, VA, USA, 2008. [Google Scholar]

- Jabareen, Y. Building a conceptual framework: Philosophy, definitions, and procedure. Int. J. Qual. Methods 2009, 8, 49–62. [Google Scholar] [CrossRef]

- Torraco, R.J. Writing integrative literature reviews: Guidelines and examples. Hum. Resour. Dev. Rev. 2005, 4, 356–367. [Google Scholar] [CrossRef]

- Mitchell, M.; Wu, S.; Zaldivar, A.; Barnes, P.; Vasserman, L.; Hutchinson, B.; Spitzer, E.; Raji, I.D.; Gebru, T. Model cards for model reporting. In Proceedings of the Conference on Fairness, Accountability, and Transparency, Atlanta, GA, USA, 29–31 January 2019; pp. 220–229. [Google Scholar]

- Floridi, L.; Cowls, J.; Beltrametti, M.; Chatila, R.; Chazerand, P.; Dignum, V.; Luetge, C.; Madelin, R.; Pagallo, U.; Rossi, F.; et al. AI4People—An ethical framework for a good AI society: Opportunities, risks, principles, and recommendations. Minds Mach. 2018, 28, 689–707. [Google Scholar] [CrossRef]

- Lundberg, S.M.; Lee, S.-I. A unified approach to interpreting model predictions. Adv. Neural Inf. Process. Syst. 2017, 30, 4768–4777. [Google Scholar]

- Hardt, M.; Price, E.; Srebro, N. Equality of opportunity in supervised learning. Adv. Neural Inf. Process. Syst. 2016, 29, 3323–3331. [Google Scholar]

- Rajpurkar, P.; Irvin, J.; Zhu, K.; Yang, B.; Mehta, H.; Duan, T.; Ding, D.; Bagul, A.; Langlotz, C.; Shpanskaya, K.; et al. CheXNet: Radiologist-level pneumonia detection on chest X-rays with deep learning. arXiv 2017, arXiv:1711.05225. [Google Scholar]

- Jobin, A.; Ienca, M.; Vayena, E. The global landscape of AI ethics guidelines. Nat. Mach. Intell. 2019, 1, 389–399. [Google Scholar] [CrossRef]

- Floridi, L.; Cowls, J. A unified framework of five principles for AI in society. In Machine Learning and the City: Applications in Architecture and Urban Design; Wiley-Blackwell: Hoboken, NJ, USA, 2022; pp. 535–545. [Google Scholar]

- Lipton, Z.C. The mythos of model interpretability: In machine learning, the concept of interpretability is both important and slippery. Queue 2018, 16, 31–57. [Google Scholar] [CrossRef]

- Mohadi, M.; Tarshany, Y. Maqasid al-Shari’ah and the ethics of artificial intelligence: Contemporary challenges. J. Contemp. Maqasid Stud. 2023, 2, 79–102. [Google Scholar] [CrossRef]

- Greenhalgh, T.; Wherton, J.; Papoutsi, C.; Lynch, J.; Hughes, G.; A’Court, C.; Hinder, S.; Fahy, N.; Procter, R.; Shaw, S. Beyond adoption: A new framework for theorizing and evaluating nonadoption, abandonment, and challenges to the scale-up, spread, and sustainability of health and care technologies. J. Med. Internet Res. 2017, 19, e8775. [Google Scholar] [CrossRef]

- Barocas, S.; Hardt, M.; Narayanan, A. Fairness and Machine Learning: Limitations and Opportunities; MIT Press: Cambridge, MA, USA, 2023. [Google Scholar]

- Ribeiro, M.T.; Singh, S.; Guestrin, C. “Why should I trust you?” Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 1135–1144. [Google Scholar]

- Moldovan, A.; Vescan, A.; Grosan, C. Healthcare bias in AI: A systematic literature review. In Proceedings of the 20th International Conference on Evaluation of Novel Approaches to Software Engineering (ENASE), Porto, Portugal, 4–6 April 2025. [Google Scholar]

- Cho, H.N.; Ahn, I.; Gwon, H.; Kang, H.J.; Kim, Y.; Seo, H.; Choi, H.; Kim, M.; Han, J.; Kee, G.; et al. Explainable predictions of a machine learning model to forecast the postoperative length of stay for severe patients: Machine learning model development and evaluation. BMC Med. Inform. Decis. Mak. 2024, 24, 350. [Google Scholar] [CrossRef]

- Elmahjub, E. Artificial intelligence (AI) in Islamic ethics: Towards pluralist ethical benchmarking for AI. Philos. Technol. 2023, 36, 73. [Google Scholar] [CrossRef]

- Chamsi-Pasha, H.; Albar, M.; Chamsi-Pasha, M. Comparative Study between Islamic and Western Bioethics: The Principle of Autonomy. J. Br. Islam. Med. Assoc. 2022, 11, 1–12. [Google Scholar]

- Procter, R.; Tolmie, P.; Rouncefield, M. Holding AI to account: Challenges for the delivery of trustworthy AI in healthcare. ACM Trans. Comput.-Hum. Interact. 2023, 30, 1–34. [Google Scholar] [CrossRef]

- Sadeghi, Z.; Alizadehsani, R.; Cifci, M.A.; Kausar, S.; Rehman, R.; Mahanta, P.; Bora, P.K.; Almasri, A.; Alkhawaldeh, R.S.; Hussain, S.; et al. A brief review of explainable artificial intelligence in healthcare. arXiv 2023, arXiv:2304.01543. [Google Scholar] [CrossRef]

- Ghaly, M. Biomedical scientists as co-muftis: Their contribution to contemporary Islamic bioethics. Die Welt Des Islams 2015, 55, 286–311. [Google Scholar] [CrossRef]

- Doshi-Velez, F.; Kortz, M.; Budish, R.; Bavitz, C.; Gershman, S.; O’Brien, D.; Scott, K.; Schieber, S.; Waldo, J.; Weinberger, D.; et al. Accountability of AI under the law: The role of explanation. arXiv 2017, arXiv:1711.01134. [Google Scholar] [CrossRef]

- Mittelstadt, B. Principles alone cannot guarantee ethical AI. Nat. Mach. Intell. 2019, 1, 501–507. [Google Scholar] [CrossRef]

- Quraishi, A. Principles of Islamic Jurisprudence. By Mohammad Hashim Kamali. Cambridge: Cambridge Islamic Texts Society, 1991. Pp. xxi, 417. Price not available. ISBN: 0-946-62123-3. Paper. ISBN: 0-946-62124-1. J. Law Relig. 2001, 15, 385–387. [Google Scholar] [CrossRef]

- Ghaly, M. Islamic ethico-legal perspectives on medical accountability in the age of artificial intelligence. In Research Handbook on Health, AI and the Law; Edward Elgar Publishing Ltd.: Cheltenham, UK, 2024; pp. 234–253. [Google Scholar]

- Ghaly, M. Islamic Bioethics in the Twenty-First Century. Zygon 2013, 48, 592–599. [Google Scholar] [CrossRef]

- Habeebullah, A.A. Ethical Considerations of Artificial Intelligence in Healthcare: A Study in Islamic Bioethics. Fountain Univ. J. Arts Humanit. 2024, 1, 14–24. [Google Scholar]

- Gerdes, A. A participatory data-centric approach to AI ethics by design. Appl. Artif. Intell. 2022, 36, 2009222. [Google Scholar] [CrossRef]

- Bouderhem, R. Shaping the future of AI in healthcare through ethics and governance. Humanit. Soc. Sci. Commun. 2024, 11, 416. [Google Scholar] [CrossRef]

- Kamali, M.H. Principles of Islamic Jurisprudence; The Islamic Text Society: Cambridge, UK, 2003; Volume 14, pp. 369–384. [Google Scholar]

- Tucci, V.; Saary, J.; Doyle, T.E. Factors influencing trust in medical artificial intelligence for healthcare professionals: A narrative review. J. Med. Artif. Intell. 2022, 5, 4. [Google Scholar] [CrossRef]

- Solanki, P.; Grundy, J.; Hussain, W. Operationalising ethics in artificial intelligence for healthcare: A framework for AI developers. AI Ethics 2023, 3, 223–240. [Google Scholar] [CrossRef]

- Morley, J.; Machado, C.C.V.; Burr, C.; Cowls, J.; Joshi, I.; Taddeo, M.; Floridi, L. The ethics of AI in health care: A mapping review. Soc. Sci. Med. 2020, 260, 113172. [Google Scholar] [CrossRef] [PubMed]

- World Health Organization. Ethics and Governance of Artificial Intelligence for Health: WHO Guidance; World Health Organization: Geneva, Switzerland, 2021. [Google Scholar]

- Brett, M. Issues to consider relating to information governance and artificial intelligence. Cyber Secur. Peer-Rev. J. 2024, 7, 237–252. [Google Scholar] [CrossRef]

- Nastoska, A.; Jancheska, B.; Rizinski, M.; Trajanov, D. Evaluating Trustworthiness in AI: Risks, Metrics, and Applications Across Industries. Electronics 2025, 14, 2717. [Google Scholar] [CrossRef]

- Ratti, E.; Morrison, M.; Jakab, I. Ethical and social considerations of applying artificial intelligence in healthcare—A two-pronged scoping review. BMC Med. Ethics 2025, 26, 68. [Google Scholar] [CrossRef]

- Bapatla, S.K.S. Ethical AI in Healthcare: A Framework for Equity-by-Design. J. Multidiscip. 2025, 5, 143–153. [Google Scholar]

- Gilpin, L.H.; Bau, D.; Yuan, B.Z.; Bajwa, A.; Specter, M.; Kagal, L. Explaining explanations: An overview of interpretability of machine learning. In Proceedings of the 2018 IEEE 5th International Conference on Data Science and Advanced Analytics (DSAA), Turin, Italy, 1–3 October 2018; IEEE: New York, NY, USA, 2018; pp. 80–89. [Google Scholar]

| Dimension | Global Frameworks (EU, OECD, UNESCO) | Islamic Ethics (maqāṣid al-sharīʿah) |

|---|---|---|

| Core Ethical Values | Human dignity, fairness, privacy, accountability | Preservation of life, intellect, faith, progeny, property |

| Guiding Philosophy | Human rights–based universal ethics | Divine law (sharīʿah) and public interest (maṣlaḥah) |

| Human Agency and Autonomy | Protected via consent, oversight mechanisms | Framed within duties to God and community (e.g., no harm principle) |

| Transparency and Explainability | Technical explainability and access to decisions | Moral clarity prioritised over technical transparency |

| Justice and Fairness | Non-discrimination and fairness principles | Rooted in ʿadl (justice) and community wellbeing |

| Privacy and Data Protection | GDPR-driven data protections | Privacy as sacred, linked to honour and dignity |

| Cultural Context Sensitivity | Abstract cultural respect, not systematised | Deep integration with local jurisprudence and social norms |

| Accountability | Legal and institutional liability models | Accountability before God and society (taklīf) |

| Implementation Mechanisms | Policy guidelines, technical toolkits, ethical auditing | Fatwas, Islamic ethical boards, sharīʿah-based review, and enforcement |

| Theme (Maqāṣid) | Why It Matters | Key Codes | Representative Evidence (Excerpt) | Implication for Framework |

|---|---|---|---|---|

| Safety—ḥifẓ al-nafs (protection of life) | Patient safety is a fundamental Islamic and clinical duty; unsafe recommendations undermine legitimacy and risk harm | Staff cuts below safe ratios; unsafe automation in high-risk contexts; risky cost-saving outputs | “Reducing nurses below safe levels risks patient harm.” (S1) “The system recommended fewer night staff during peak demand.” (S2) “Budget-driven reductions ignored the safety threshold.” (S3) | Block auto-approval if nurse/patient < safe threshold; escalate to human review; enforce safe staffing norms; log rationale for each override |

| Justice—ʿadl (fair allocation) | Justice requires that allocation decisions do not systematically disadvantage groups; fairness is both a legal and ethical mandate | Biassed bed/theatre allocation; under-service of vulnerable groups; unequal access by demographics; lack of fairness checks | “Older patients waited longer for ICU beds compared to others.” (J1) “Allocation patterns left rural patients disadvantaged.” (J2) “We need measurable fairness bounds to guarantee justice.” (J3) | Apply fairness re-ranking until demographic parity difference ≤ δ; publish fairness reports; record SHAP/LIME interpretability notes; embed parity metrics in model cards |

| Dignity—karāmah (respectful triage) | Dignity requires explanations in high-risk contexts; opaque or low-confidence outputs erode patient trust and clinician legitimacy | Dehumanising triage language; low confidence in high-stakes cases; absence of justification | “When the system is uncertain, patients deserve a clear justification.” (D1) “Outputs framed patients as ‘cases’ rather than people.” (D2) | If model confidence < α in high-harm cases, require explicit justification; produce explanation record; reviewer sign-off mandatory; embed justification quality indicators in audits |

| Faith—ḥifẓ al-dīn (cultural/religious alignment) | Outputs conflicting with religious duties erode adoption; care must align with Islamic practice and patient faith commitments | Conflicts with religious preferences; ignoring fasting/prayer; culturally unsafe care pathways | “This plan ignored fasting requirements during Ramadan.” (F1) “Scheduling did not account for prayer breaks.” (F2) | Flag or deny outputs that conflict with faith; provide culturally safe alternatives; log overrides; maintain alternatives list; consult religious scholars in co-design workshops |

| Intellect—ḥifẓ al-ʿaql (cognitive integrity of staff) | Cognitive overload undermines professional judgement; alert fatigue reduces trust and may cause errors | Excessive alert volume; non-interpretable outputs; staff unable to act effectively; risk of burnout | “We receive so many alerts that it is impossible to focus.” (I1) “Most alerts were incomprehensible to frontline staff.” (I2) | Cap alert volume per shift; add human-in-the-loop step; log alert load; require interpretable summaries; document overload risks in model cards |
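The ḥifẓ al-ʿaql row calls for three actions: capping alert volume per shift, adding a human-in-the-loop step and logging alert load. The following sketch shows how these could fit together. It assumes a hypothetical `AlertGate` with a fixed per-shift cap, where overflow alerts are queued for a human reviewer rather than discarded. Alerts are handled first come, first served here; a deployment would more plausibly rank them by severity before applying the cap.

```python
from collections import deque


class AlertGate:
    """Hypothetical per-shift alert cap supporting ḥifẓ al-ʿaql."""

    def __init__(self, cap):
        self.cap = cap
        self.delivered = {}           # shift -> alerts sent to frontline staff
        self.review_queue = deque()   # overflow held for human-in-the-loop triage
        self.load_log = {}            # shift -> total alerts raised

    def submit(self, shift, alert):
        """Deliver the alert if under the cap, otherwise queue it for review."""
        self.load_log[shift] = self.load_log.get(shift, 0) + 1
        sent = self.delivered.setdefault(shift, [])
        if len(sent) < self.cap:
            sent.append(alert)
            return "delivered"
        self.review_queue.append((shift, alert))
        return "queued_for_review"
```

The load log records every alert raised, including those held back. The documented overload risk in the model card can therefore draw on actual demand rather than on delivered volume alone.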


| Step | Examples from This Study | Outcome |

|---|---|---|

| 1. Define core concepts (from three strands) |  | Three foundational concept families (technical, global ethics, Islamic ethics) |

| 2. Map risks to safeguards |  | Integrated categories linking ANN risks with maqāṣid safeguards |

| 3. Specify operational mechanisms |  | Final framework constructs of the maqāṣid-aligned ANN–DSS |

| Risk | Maqāṣid | Rule (Action) | Trigger Metric | Audit Artefact |

|---|---|---|---|---|

| Unsafe staff cuts | ḥifẓ al-nafs (life) | Block auto-approval; send to human review | Nurse/patient ratio < safe threshold | Decision log + rationale [11,20] |

| Biased bed allocation | ʿadl (justice) | Re-rank to satisfy fairness bounds | Demographic parity diff > δ | Fairness report + SHAP/LIME note [13,21] |

| Dignity-eroding triage | karāmah (dignity) | Require explicit justification | Model confidence < α with high harm | Explanation record + reviewer sign-off [18,22] |

| Faith-incompatible care | ḥifẓ al-dīn (faith) | Deny/flag option; propose alternative | Religious preference conflict = true | Override note + alternatives list [7] |

| Cognitive overload of staff | ḥifẓ al-ʿaql (intellect) | Slow rollout; add human-in-the-loop step | Alert volume > cap per shift | Model-card risk note + mitigation [11] |

| Application Domain | Predictive Task | Project Impact | Ethical Gap |

|---|---|---|---|

| Bed Occupancy Management | Predict emergency bed availability | Improved allocation, reduced waiting time | No fairness checks; risk of disadvantaging vulnerable groups in allocation |

| Surgical Scheduling | Forecast surgery durations and delays | Optimised theatre use, better time management | Low-confidence predictions may undermine patient dignity without safeguards |

| Workforce Planning | Forecast staffing demands | Reduced overstaffing or understaffing | No mechanism to prevent unsafe staff reductions (ḥifẓ al-nafs risk) |

| Hospital Expansion Projects | Estimate project completion time | Avoid cost/time overruns | Focus on efficiency only; ignores social impact and equity considerations |

| Medical Equipment Logistics | Predict equipment demand patterns | Efficient procurement, reduced idle resources | Lacks prioritisation safeguards for critical or vulnerable patient groups |

| Risk Classification | Identify probability of patient safety events | Pre-emptive action, improved safety protocols | Risk flagged but without embedded ethical accountability or transparency |

| Financial Forecasting | Estimate budget needs and funding gaps | Improved financial planning and reporting | May prioritise cost savings over dignity or equitable service provision |

| Ethical Issue | Description | Example in Healthcare | Implication for Framework |

|---|---|---|---|

| Bias in Training Data | AI systems reflect and amplify existing societal or dataset biases | ANN under-predicts treatment needs for minority groups | Fairness re-ranking under ʿadl + audit via SHAP/LIME |

| Opacity (‘Black box’) | Lack of interpretability makes decisions unexplainable to users | Doctors receive decisions without rationale in triage AI | Require explanation logs + reviewer sign-off under karāmah |

| Loss of Autonomy | Automated decisions reduce clinicians’ or patients’ ability to intervene | AI system overrides clinical judgement in ICU resource allocation | Human-in-the-loop safeguards; override records |

| Privacy Violation | Sensitive health data may be misused or leaked | ANN exposes patient records in public training sets | Data protection guided by dignity (karāmah) and amāna |

| Cultural/Ethical Misalignment | Recommendations may violate local religious or moral norms | AI recommends gender-insensitive care in Islamic settings | Faith filters under ḥifẓ al-dīn |

| Algorithmic Harm | Actions cause harm due to misaligned values or technical error | AI triage system suggests denying expensive care to elders | Block unsafe outputs under ḥifẓ al-nafs |

| Maqāṣid Principle | AI Ethical Dimension | Example in ANN Systems | Safeguard in Framework |
|---|---|---|---|
| Life (ḥifẓ al-nafs) | Patient safety, harm minimisation | Flag ANN recommendations that reduce staffing below safe levels | Block auto-approval if the nurse-to-patient ratio falls below a safe threshold; escalate to human review; record the override rationale |
| Intellect (ḥifẓ al-ʿaql) | Explainability, clinical oversight | Require ANN models to provide interpretable outputs to doctors | Require interpretable outputs (e.g., SHAP/LIME summaries); cap alert volume; embed explanation records in audits |
| Religion (ḥifẓ al-dīn) | Respect for religious values in AI decisions | Ensure ANN care recommendations align with Islamic norms (e.g., gender-sensitive care) | Flag or deny outputs that conflict with religious norms; provide culturally safe alternatives; log overrides and consult scholars |
| Progeny (ḥifẓ al-nasl) | Protection of family-related medical data and outcomes | Prevent predictive biases against reproductive health cases | Apply fairness re-ranking to reproductive health models; include subgroup performance in model cards |
| Property (ḥifẓ al-māl) | Data security, financial fairness in cost algorithms | Control access to ANN-predicted billing and cost-saving decisions | Secure audit logs; restrict billing optimisation to fairness bounds; publish accountability reports |
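
The ḥifẓ al-nafs safeguard combines three steps: block auto-approval below a safe nurse-to-patient ratio, escalate to a human, and record the rationale for any override. A minimal sketch of that flow follows, assuming a hypothetical threshold of 1 nurse per 4 patients (the framework does not fix a number) and a simple in-memory audit log; `StaffingRecommendation`, `AuditLog`, `review_staffing`, and `human_override` are illustrative names, not part of any existing system.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical policy value: 1 nurse per 4 patients. A hospital would set this
# from its own clinical staffing standards; the framework does not specify it.
SAFE_NURSES_PER_PATIENT = 0.25


@dataclass
class StaffingRecommendation:
    ward: str
    nurses: int
    patients: int


@dataclass
class AuditLog:
    entries: list[dict] = field(default_factory=list)

    def record(self, event: str, **details) -> None:
        # Timestamped, append-only entry for later ethical audit.
        self.entries.append(
            {"time": datetime.now(timezone.utc).isoformat(), "event": event, **details}
        )


def review_staffing(rec: StaffingRecommendation, log: AuditLog) -> str:
    """Auto-approve only if the nurse-to-patient ratio meets the safe threshold."""
    ratio = rec.nurses / rec.patients if rec.patients else float("inf")
    if ratio >= SAFE_NURSES_PER_PATIENT:
        log.record("auto_approved", ward=rec.ward, ratio=ratio)
        return "approved"
    # Below threshold: no auto-approval; a human reviewer must decide.
    log.record("escalated", ward=rec.ward, ratio=ratio, principle="hifz al-nafs")
    return "escalated"


def human_override(rec: StaffingRecommendation, reviewer: str, decision: str,
                   rationale: str, log: AuditLog) -> str:
    """Let a reviewer decide an escalated case, but only with a written rationale."""
    if not rationale.strip():
        raise ValueError("an override rationale is required")
    log.record("override", ward=rec.ward, reviewer=reviewer,
               decision=decision, rationale=rationale)
    return decision
```

Requiring a non-empty rationale is what turns an override into an auditable record rather than a silent bypass; in practice the log would be written to tamper-evident storage rather than kept in memory.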

| Participatory Design Principle | Current Practice Gap in Healthcare AI | Implications for the Saudi Context |
|---|---|---|
| Inclusivity: involve all relevant stakeholders early | Technical teams dominate system design with minimal user or patient involvement | Lack of input from patients, religious scholars, or end-users reduces cultural legitimacy |
| Transparency: design decisions are visible and explainable | AI model architecture and training processes often remain opaque | Users cannot audit the ethical risks or religious appropriateness of algorithmic decisions |
| Reciprocity: stakeholders influence system outcomes | Feedback loops from users are rare or superficial | Ethical misalignment persists when Islamic jurisprudential views are excluded |
| Contextualisation: systems are tailored to cultural/legal norms | Most systems use standard datasets and universal metrics | Disregards Saudi ethical-legal frameworks, such as maqāṣid al-sharīʿah |
| Iterative co-design: ongoing user involvement in refinement | Ethical considerations are often added post hoc rather than embedded in the design lifecycle | Retroactive ethical fixes undermine trust and systemic alignment |
| Power redistribution: equal voice for all groups | Institutional hierarchies silence minority, religious, or patient voices | Fails to uphold the Islamic concepts of justice (ʿadl) and collective welfare (maṣlaḥah) |
| Accountability: system designers remain responsible for harm | No established mechanism to flag or correct ethical violations after deployment | Contradicts the Islamic emphasis on amānah (responsibility) and iḥsān (doing what is right) |

| ANN Input Variable | Predicted Output | Ethical Concern | Logic Gate Intervention (Example) | Relevant Maqāṣid Principle |
|---|---|---|---|---|
| Patient Risk Score | Bed Allocation Priority | Bias against elderly or chronically ill patients | If risk score is high and patient age > 65 → override deprioritisation | ḥifẓ al-nafs (life) |
| Budget Constraint | Staff Reduction Strategy | Loss of human care, patient dignity | If recommendation = reduce nurses → flag for human review | karāmah (dignity) |
| Cost-Effectiveness Ratio | Treatment Protocol Selection | Undermining costly but life-saving treatment | If the cheaper protocol's effectiveness < 85% → block auto-selection | ḥifẓ al-nafs (life) |
| Staff Fatigue Index | Shift Assignment | Exploitation or burnout risk | If fatigue score > 80 → prohibit critical care assignment | ḥifẓ al-ʿaql (intellect) |
| Diagnostic Confidence | Automatic Patient Discharge Decision | Premature or unsafe discharge | If confidence < 95% → route to ethical/clinical reviewer | ḥifẓ al-nafs (life) |
| Patient Religious Preference (binary) | AI-Guided Intervention Type | Incompatibility with religious practices | If the intervention violates the stated preference (e.g., a DNR order for a patient who declines DNR) → deny or flag | ḥifẓ al-dīn (faith) |
| Resource Scarcity Index | Allocation of Ventilators | Equity and fairness in crisis | If demand > supply → activate fairness allocation rule | ʿadl (justice) |
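
Each row of the table is effectively a rule that inspects an ANN input and its predicted output, then applies an intervention. A minimal sketch of such a rule layer is shown below, assuming the ANN case arrives as a plain dictionary; the field names, output labels, and the `HIGH_RISK = 0.7` cut-off (the table only says "risk score is high") are hypothetical, while the 65-year, 85%, 80-point, and 95% thresholds come from the table. Principle names are written in ASCII transliteration to keep the identifiers simple.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical cut-off for "risk score is high"; the table does not give one.
HIGH_RISK = 0.7


@dataclass(frozen=True)
class Gate:
    name: str
    condition: Callable[[dict], bool]  # fires on the ANN case (inputs + predicted output)
    action: str                        # intervention applied when the gate fires
    principle: str                     # ethical principle the gate serves


GATES = [
    Gate("protect_high_risk_elderly",
         lambda c: c.get("output") == "deprioritise_bed"
         and c.get("risk_score", 0.0) >= HIGH_RISK and c.get("age", 0) > 65,
         "override", "hifz al-nafs"),
    Gate("nurse_reduction_review",
         lambda c: c.get("output") == "reduce_nurses",
         "flag_for_human_review", "karamah"),
    # A missing effectiveness value is treated as failing the 85% floor.
    Gate("protocol_effectiveness_floor",
         lambda c: c.get("output") == "select_cheaper_protocol"
         and c.get("protocol_effectiveness", 0.0) < 0.85,
         "block_auto_selection", "hifz al-nafs"),
    Gate("fatigue_limit",
         lambda c: c.get("output") == "assign_critical_care"
         and c.get("fatigue_score", 0) > 80,
         "prohibit_assignment", "hifz al-aql"),
    # A missing confidence value is treated as below the 95% bar.
    Gate("discharge_confidence",
         lambda c: c.get("output") == "discharge"
         and c.get("diagnostic_confidence", 0.0) < 0.95,
         "route_to_reviewer", "hifz al-nafs"),
    Gate("religious_preference",
         lambda c: c.get("output") == "dnr_order" and c.get("declines_dnr", False),
         "deny_or_flag", "hifz al-din"),
    Gate("ventilator_scarcity",
         lambda c: c.get("output") == "allocate_ventilator"
         and c.get("demand", 0) > c.get("supply", 0),
         "activate_fairness_allocation", "adl"),
]


def apply_gates(case: dict) -> list[tuple[str, str, str]]:
    """Return (gate, action, principle) for every gate the case triggers."""
    return [(g.name, g.action, g.principle) for g in GATES if g.condition(case)]
```

For example, a discharge recommendation with `diagnostic_confidence` of 0.90 triggers only the `discharge_confidence` gate and is routed to a reviewer. Keeping the rules as data rather than branching code makes each gate individually auditable and lets scholars and clinicians review the thresholds without reading the model.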

| Identified Gap | Root Cause | Proposed Mitigation Strategy |
|---|---|---|
| 1. Lack of ethical variable integration in AI training data | Focus on technical metrics (accuracy, cost) rather than normative dimensions | Annotate datasets with ethical risk signals (e.g., harm potential, dignity threats) based on stakeholder feedback |
| 2. Absence of Islamic moral principles in AI system design | Secular design paradigms dominate global AI development | Embed maqāṣid al-sharīʿah principles into AI architecture through ethical rule engines |
| 3. Minimal stakeholder co-design in AI development | Top-down engineering processes exclude end-user and ethical voices | Implement participatory design with clinicians, patients, scholars, and policy leaders |
| 4. Limited case studies of ethical AI deployment in real-world healthcare | Most research remains confined to simulations or technical labs, with little contextual testing | Conduct pilot deployments in culturally sensitive healthcare settings; collect feedback for iterative development |
| 5. Lack of evaluation metrics for ethical compliance in ANN systems | Overemphasis on performance KPIs (accuracy, speed) neglects moral dimensions | Define dual evaluation metrics: technical performance + ethical compliance (e.g., fairness, transparency, harm minimisation) |

| Evaluation Dimension | Predictive Performance Metrics | Ethical Compliance Metrics | Assessment Method | Explanation and References |
|---|---|---|---|---|
| Accuracy | Prediction accuracy (e.g., classification accuracy, MAE, AUC) | Ethical correctness (e.g., harm avoided, dignity preserved) | Expert panel rating using a 5-point Likert scale (1 = poor, 5 = excellent) | Experts assess alignment between model outputs and ethical intent [41,42]. |
| Efficiency | Processing speed, resource utilisation | Proportionality of outcomes (no undue burden on patients or staff) | Structured checklist of operational constraints and impact logs | Ensures performance gains do not override principles of care and equity [43]. |
| Fairness | Bias reduction (e.g., balanced accuracy, per-group confusion matrices) | Demographic parity, equal opportunity | Quantitative fairness metrics + expert Likert review (1–5) | Combines objective bias scores with ethical judgement [6]. |
| Transparency | Model explainability (e.g., SHAP, LIME scores) | Moral interpretability (reasonableness of rationale) | Explainability artefact checklist (model card, trace log, audit note) | Verifies traceability and justification of decisions [11,42]. |
| Robustness | Resistance to data noise and outliers | Ethical resilience (maintains moral validity under exceptional conditions) | Stress-test report + binary compliance (pass/fail) | Confirms safety and ethical stability under edge-case scenarios [44]. |
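
The Fairness row combines a performance-side metric (balanced accuracy) with two ethical-compliance metrics (demographic parity and equal opportunity). The sketch below computes all three from their standard definitions, assuming binary 0/1 labels and predictions and a sensitive attribute with exactly two groups; the function names are hypothetical. Libraries such as scikit-learn (`balanced_accuracy_score`) and Fairlearn (`demographic_parity_difference`) provide comparable metrics for production use.

```python
def _mean(values: list[int]) -> float:
    return sum(values) / len(values) if values else 0.0


def _two_groups(group: list[str]) -> list[str]:
    groups = sorted(set(group))
    if len(groups) != 2:
        raise ValueError("this sketch compares exactly two groups")
    return groups


def demographic_parity_diff(y_pred: list[int], group: list[str]) -> float:
    """|P(pred=1 | g1) - P(pred=1 | g2)|: gap in positive-prediction rates."""
    g1, g2 = _two_groups(group)
    r1 = _mean([p for p, g in zip(y_pred, group) if g == g1])
    r2 = _mean([p for p, g in zip(y_pred, group) if g == g2])
    return abs(r1 - r2)


def equal_opportunity_diff(y_true: list[int], y_pred: list[int], group: list[str]) -> float:
    """|TPR_g1 - TPR_g2|: gap in true-positive rates among truly positive cases."""
    g1, g2 = _two_groups(group)
    tpr = [_mean([p for t, p, g in zip(y_true, y_pred, group) if g == gk and t == 1])
           for gk in (g1, g2)]
    return abs(tpr[0] - tpr[1])


def balanced_accuracy(y_true: list[int], y_pred: list[int]) -> float:
    """Mean of the true-positive rate and the true-negative rate."""
    tpr = _mean([p for t, p in zip(y_true, y_pred) if t == 1])
    tnr = _mean([1 - p for t, p in zip(y_true, y_pred) if t == 0])
    return (tpr + tnr) / 2
```

A model can score well on balanced accuracy (0.75 in a small example with eight cases) while still showing a 0.5 gap in true-positive rates between groups, which is why the table pairs the quantitative scores with an expert Likert review rather than relying on either alone.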

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alotaibi, H.M.S.; Balachandran, W.; Hunaiti, Z. Ethical Integration of AI in Healthcare Project Management: Islamic and Cultural Perspectives. AI 2025, 6, 307. https://doi.org/10.3390/ai6120307


Chicago/Turabian style: Alotaibi, Hazem Mathker S., Wamadeva Balachandran, and Ziad Hunaiti. 2025. "Ethical Integration of AI in Healthcare Project Management: Islamic and Cultural Perspectives." AI 6, no. 12: 307. https://doi.org/10.3390/ai6120307
