A Synergistic Multi-Agent Framework for Resilient and Traceable Operational Scheduling from Unstructured Knowledge

Abstract

1. Introduction

2. State of the Art and Research Contribution

2.1. Strategic Review of the Literature

2.1.1. The Challenge of Unstructured Knowledge: Advances in Document Intelligence

2.1.2. Process Automation: Agentic AI in Logistics and Maintenance

2.1.3. The Imperative of Resilience: Simulation for Planning and Risk Analysis

2.1.4. The Demand for Trust: Explainable AI and Traceability

2.2. Identified Research Gaps and Objectives

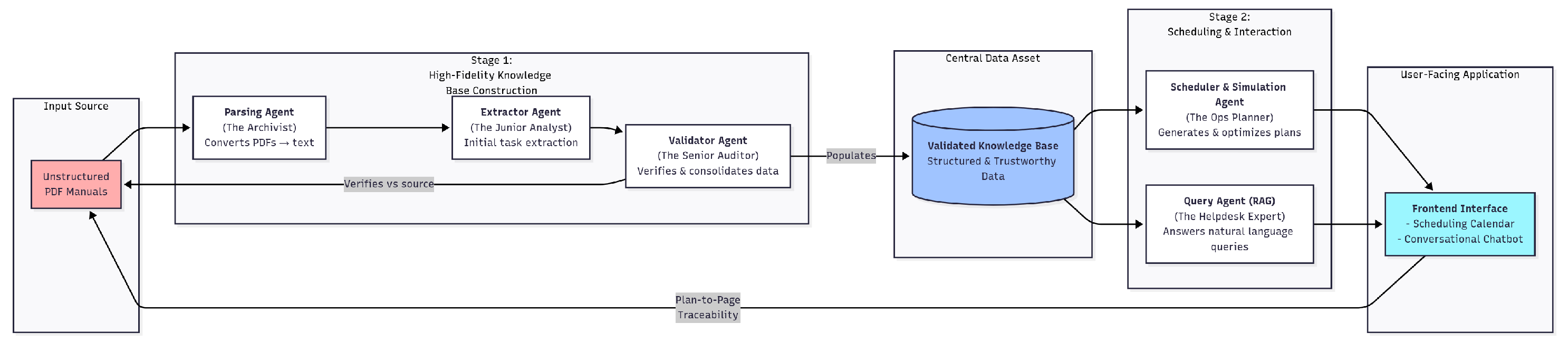

3. The Synergistic Multi-Agent Framework

3.1. Stage 1: High-Fidelity Knowledge Base Construction

3.2. Stage 2: Resilience-Driven Maintenance Scheduling

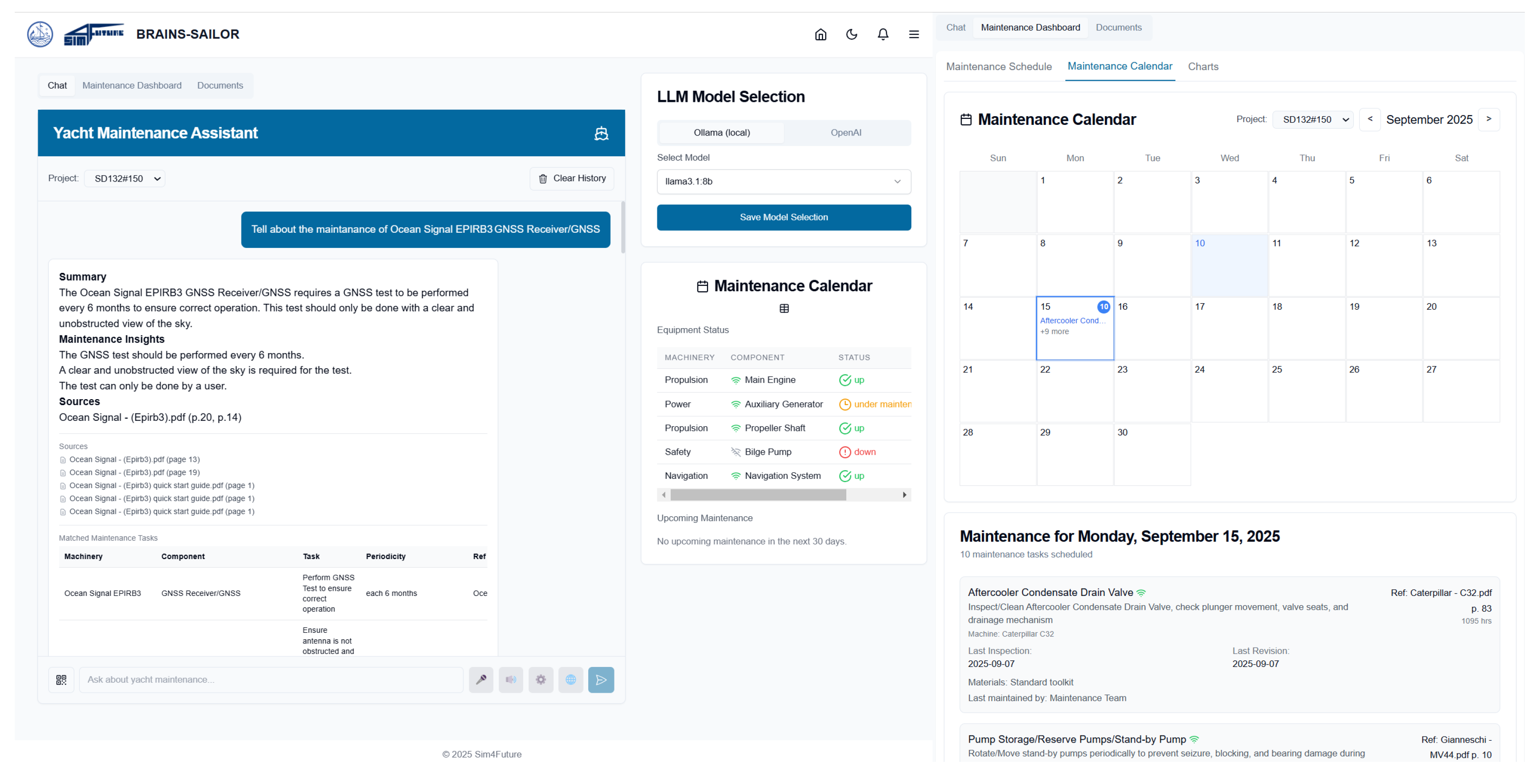

3.3. Stage 3: Explainability by Design: The User Interaction Layer

3.4. Technical Implementation Details

4. Empirical Validation and Results

4.1. Experimental Setup and Dataset

4.2. Schedulable Task Filtering

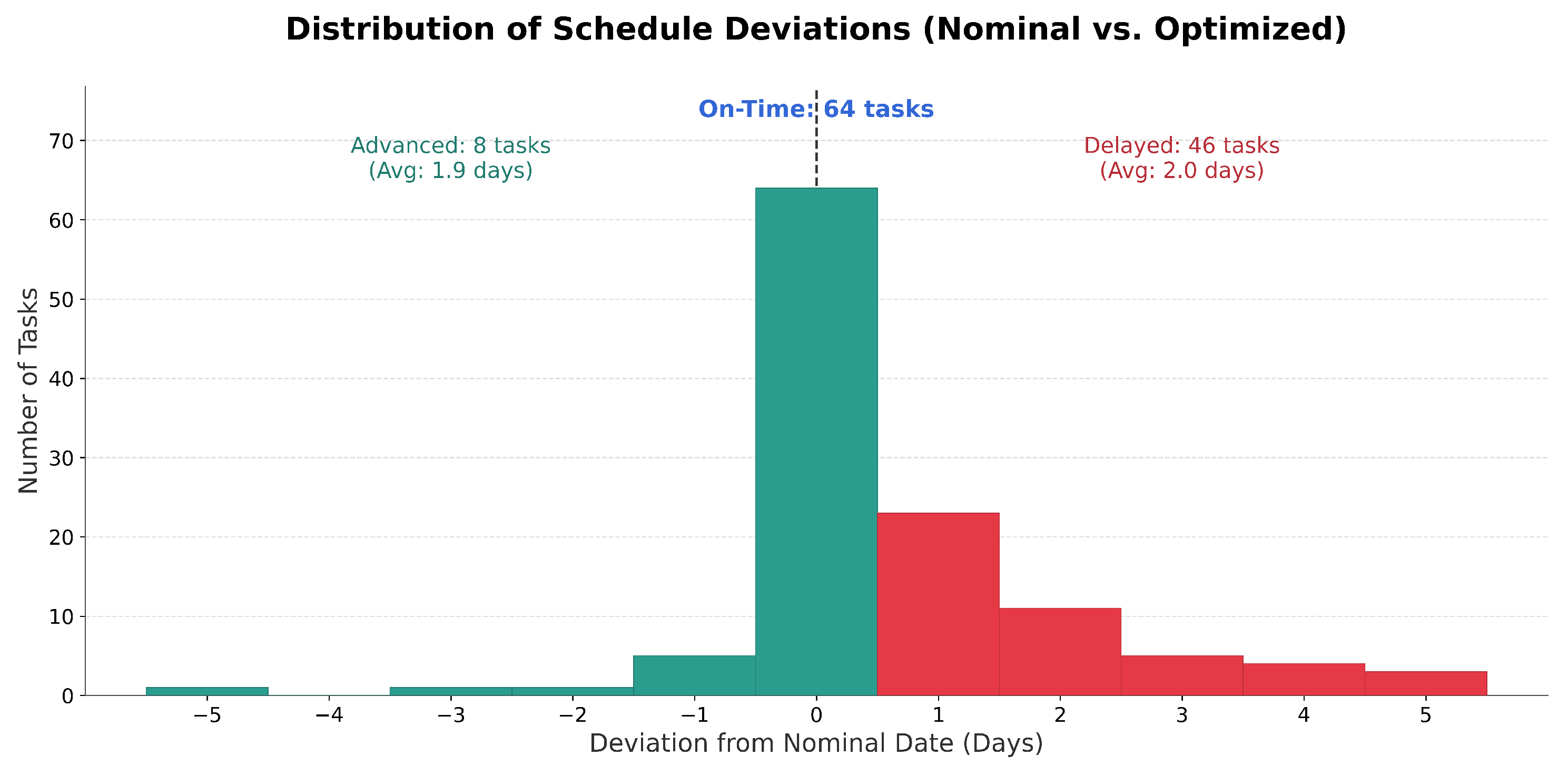

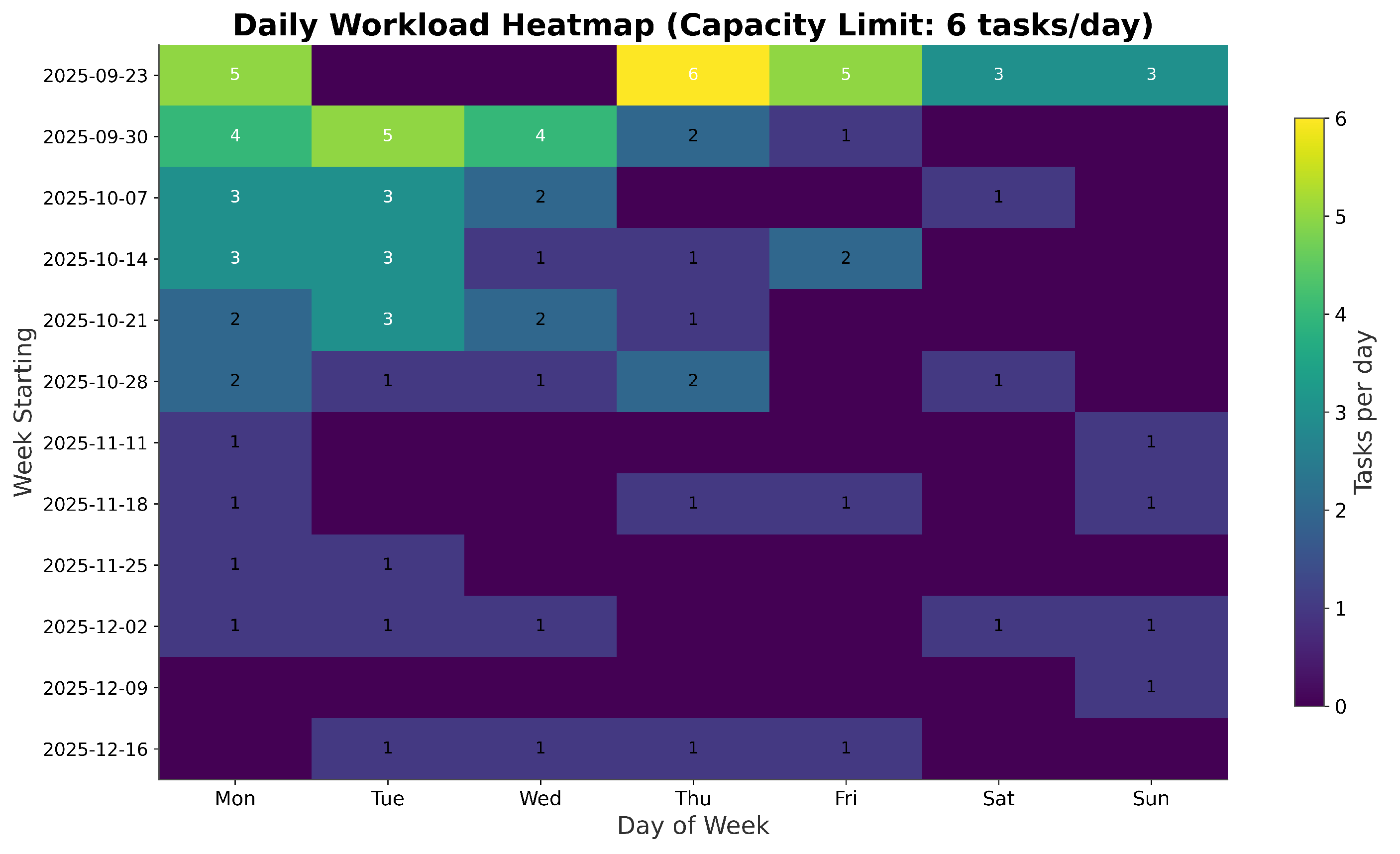

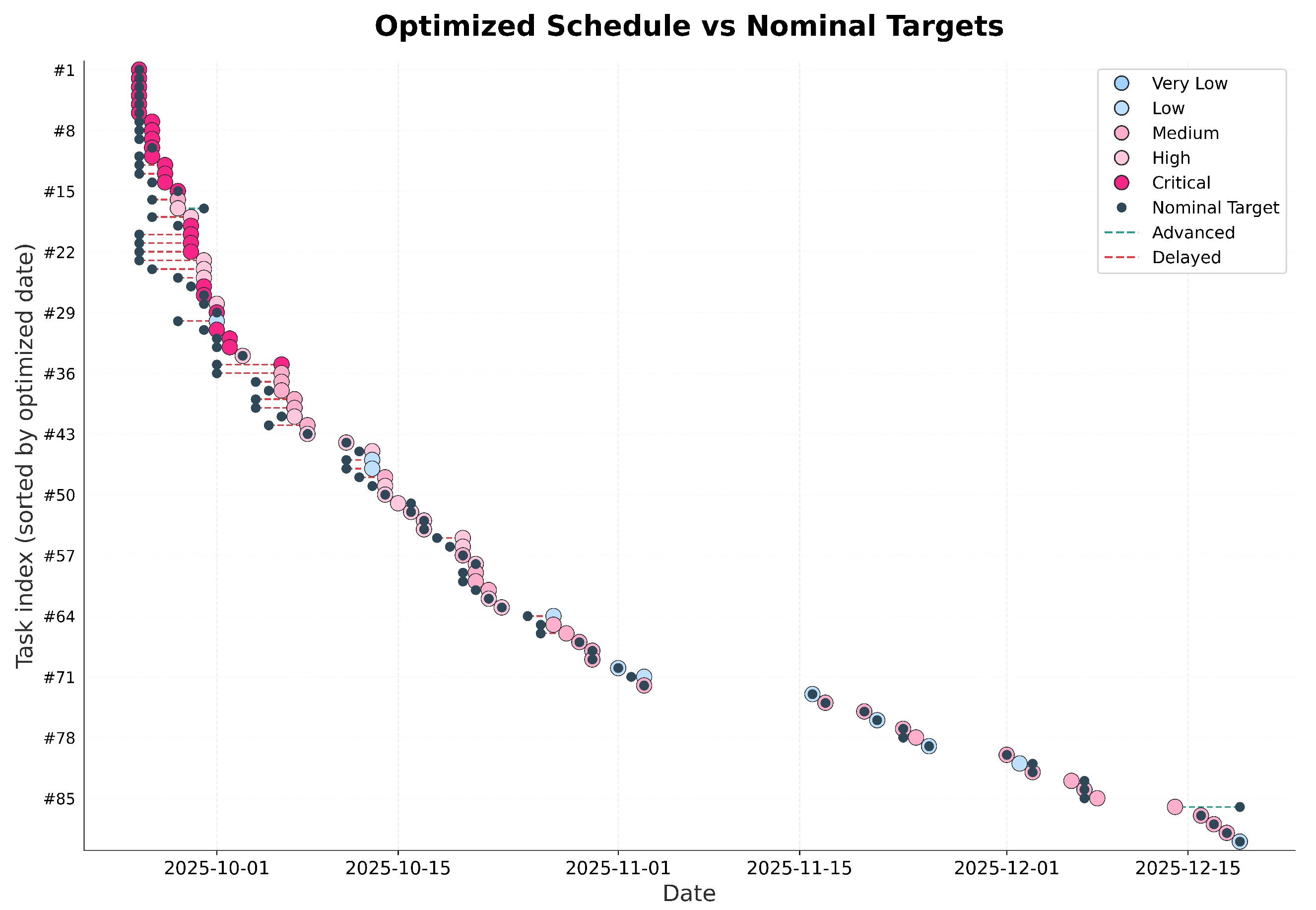

4.3. Scheduling Performance and Visual Analytics

4.4. Qualitative User Feedback

5. Discussion

5.1. Practical and Industrial Implications

5.2. Limitations and Future Work

- Rule-based extraction systems with heuristic scheduling.

- Knowledge Graph approaches (e.g., Neo4j-based maintenance ontologies).

- Digital Twin frameworks with physics-based simulation.

- Commercial platforms (e.g., SAP Predictive Maintenance, IBM Maximo).

- Competing LLMs (GPT-5, Claude 4.5, and locally hosted open models via Ollama).

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Column Heading | Description |
|---|---|
| Machinery | The specific name or model of the equipment. |
| Component | The sub-component or part of the machinery being maintained. |
| Maintenance Description | A clear, verb-first description of the required maintenance action. |
| Activity Type | The categorical nature of the task (e.g., Control, Maintenance, Replacement, Other). |
| Operating Hours | The maintenance interval defined in terms of machinery operating hours. |
| Time Period | The calendar-based interval (e.g., Daily, Weekly, Monthly, Yearly). |
| Every Use Flag | A boolean flag for tasks to be performed before or after each use. |
| Reference | The filename of the source PDF document. |
| Page | The precise page number within the source document. |
| Necessary Material | A comma-separated list of required tools, parts, or materials. |
| Operator | The designated role or qualification for the person performing the task. |
| Note | Any supplementary notes, warnings, or crucial instructions. |
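
The column definitions above can be read as a record schema. The following is a minimal sketch of one way to represent such a record in Python, not the authors' implementation: the names `MaintenanceTask`, `ActivityType`, and `from_row` are hypothetical, and the assumption that the Every Use Flag is stored as the text "true" or "false" is ours. The sample row reuses the Gaggenau CI292 values from the example table in this article, which omits the Reference and Page columns, so those fields stay empty.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class ActivityType(Enum):
    """The four categories listed for the Activity Type column."""
    CONTROL = "Control"
    MAINTENANCE = "Maintenance"
    REPLACEMENT = "Replacement"
    OTHER = "Other"


def _clean(value: Optional[str]) -> Optional[str]:
    """Return a stripped string, or None for a missing or empty cell."""
    text = (value or "").strip()
    return text or None


@dataclass
class MaintenanceTask:
    """One row of the knowledge base (hypothetical field names)."""
    machinery: str
    component: str
    maintenance_description: str
    activity_type: ActivityType
    operating_hours: Optional[int] = None
    time_period: Optional[str] = None
    every_use: bool = False
    reference: Optional[str] = None
    page: Optional[int] = None
    necessary_material: list[str] = field(default_factory=list)
    operator: Optional[str] = None
    note: Optional[str] = None

    @classmethod
    def from_row(cls, row: dict[str, str]) -> "MaintenanceTask":
        """Build a task from a dict keyed by the column headings."""
        material = _clean(row.get("Necessary Material"))
        hours = _clean(row.get("Operating Hours"))
        page = _clean(row.get("Page"))
        # Assumption: the flag is serialized as the text "true"/"false".
        flag = (_clean(row.get("Every Use Flag")) or "").lower()
        return cls(
            machinery=row["Machinery"].strip(),
            component=row["Component"].strip(),
            maintenance_description=row["Maintenance Description"].strip(),
            activity_type=ActivityType(row["Activity Type"].strip()),
            operating_hours=int(hours) if hours else None,
            time_period=_clean(row.get("Time Period")),
            every_use=flag == "true",
            reference=_clean(row.get("Reference")),
            page=int(page) if page else None,
            necessary_material=(
                [item.strip() for item in material.split(",")] if material else []
            ),
            operator=_clean(row.get("Operator")),
            note=_clean(row.get("Note")),
        )


row = {
    "Machinery": "Gaggenau CI292",
    "Component": "Silicone Seal",
    "Maintenance Description": "Remove and inspect silicone seal around cooktop",
    "Activity Type": "Maintenance",
    "Necessary Material": "Suitable removal tool",
    "Time Period": "Each 12 months",
    "Operator": "Authorized personnel",
    "Note": "Use suitable tool to remove seal carefully.",
}
task = MaintenanceTask.from_row(row)
print(task.machinery, task.activity_type.value, task.necessary_material)
```

Using an enumeration for the Activity Type makes an unexpected category fail loudly at load time (`ValueError`) rather than propagate silently into scheduling.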

| Machinery | Component | Maintenance Description | Activity Type | Necessary Material | Time Period | Operator | Note |
|---|---|---|---|---|---|---|---|
| Calpeda pump | Pump Body | Rinse with clean water to remove deposits | Maintenance | N.A. 1 | Before each use | User | Briefly run the pump with clean water to remove accumulated deposits. |
| Gaggenau CI292 | Silicone Seal | Remove and inspect silicone seal around cooktop | Maintenance | Suitable removal tool | Each 12 months | Authorized personnel | Use suitable tool to remove seal carefully. |
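
The two sample rows show free-text Time Period values ("Before each use", "Each 12 months") that a scheduler would need in structured form. The sketch below is one possible normalization and is not taken from the article: `parse_time_period` and `Interval` are hypothetical names, the accepted phrasings are limited to the patterns visible in the tables, and the conversion of a month to 30 days and a year to 365 days is a simplifying assumption.

```python
import re
from typing import NamedTuple, Optional


class Interval(NamedTuple):
    every_use: bool       # True when the task is tied to each use
    days: Optional[int]   # approximate calendar interval, None if unknown


# Assumption: coarse day counts per unit, good enough for illustration only.
_UNIT_DAYS = {"day": 1, "week": 7, "month": 30, "year": 365}
_NAMED = {"daily": 1, "weekly": 7, "monthly": 30, "yearly": 365}
_PATTERN = re.compile(r"(?:each|every)\s+(\d+)\s+(day|week|month|year)s?")


def parse_time_period(text: str) -> Interval:
    """Map a Time Period cell to an every-use flag or an interval in days."""
    cleaned = text.strip().lower()
    if "each use" in cleaned or "every use" in cleaned:
        return Interval(every_use=True, days=None)
    if cleaned in _NAMED:
        return Interval(every_use=False, days=_NAMED[cleaned])
    match = _PATTERN.fullmatch(cleaned)
    if match:
        count, unit = int(match.group(1)), match.group(2)
        return Interval(every_use=False, days=count * _UNIT_DAYS[unit])
    # Unrecognized text is kept as "no interval" instead of being guessed.
    return Interval(every_use=False, days=None)


print(parse_time_period("Before each use"))  # Interval(every_use=True, days=None)
print(parse_time_period("Each 12 months"))   # Interval(every_use=False, days=360)
```

Returning `days=None` for unrecognized text keeps ambiguous rows visible for manual review instead of assigning them an invented interval.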

| Metric | Value |
|---|---|
| Total Schedulable Tasks | 118 |
| **Schedule Adherence** | |
| Tasks On-Time (Executed on nominal date) | 64 (54.2%) |
| Tasks Advanced (Executed early) | 8 (6.8%) |
| Tasks Deferred (Executed late) | 46 (39.0%) |
| **Deviation Metrics** | |
| Average Deferral (for late tasks) | 2.0 days |
| 95th Percentile Deferral | 4.8 days |
| Max Deferral | 5 days |
| Average Advancement (for early tasks) | 1.9 days |
| **Workload & Capacity** | |
| Daily Capacity Limit | 6 tasks |
| Peak Daily Load (Max tasks in one day) | 6 tasks |
| Days Exceeding Daily Capacity | 0 |
| Weekly Capacity Limit | 28 tasks |
| Peak Weekly Load (Busiest week) | 22 tasks |
| Weeks Exceeding Weekly Capacity | 0 |
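
The adherence percentages in the table follow directly from the task counts, and the peak loads can be expressed as a share of the stated capacity limits. The short sketch below reproduces that arithmetic from the reported figures only; the function names `adherence_shares` and `utilisation` are illustrative and do not come from the article.

```python
def adherence_shares(counts: dict[str, int]) -> dict[str, float]:
    """Percentage of tasks in each adherence class, rounded to one decimal."""
    total = sum(counts.values())
    return {name: round(100 * n / total, 1) for name, n in counts.items()}


def utilisation(peak_load: int, capacity: int) -> float:
    """Peak load as a percentage of the capacity limit."""
    return round(100 * peak_load / capacity, 1)


# Counts as reported in the table (118 schedulable tasks in total).
counts = {"on_time": 64, "advanced": 8, "deferred": 46}
shares = adherence_shares(counts)

daily_peak_pct = utilisation(peak_load=6, capacity=6)
weekly_peak_pct = utilisation(peak_load=22, capacity=28)

print(sum(counts.values()))   # 118
print(shares)                 # {'on_time': 54.2, 'advanced': 6.8, 'deferred': 39.0}
print(daily_peak_pct)         # 100.0
print(weekly_peak_pct)        # 78.6
```

The three classes sum to the 118 schedulable tasks, the busiest day reaches the daily limit exactly without exceeding it, and the busiest week uses roughly 79% of the weekly limit, consistent with the zero capacity violations reported.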


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cirillo, L.; Gotelli, M.; Massei, M.; Sina, X.; Solina, V. A Synergistic Multi-Agent Framework for Resilient and Traceable Operational Scheduling from Unstructured Knowledge. AI 2025, 6, 304. https://doi.org/10.3390/ai6120304
