1. Introduction

Medical errors in ophthalmology can arise from both documentation inaccuracies and clinical reasoning mistakes, posing significant risks to patient safety. Even after the adoption of electronic health records (EHRs), routine audits reveal frequent documentation errors; for example, one study found that 32% of ophthalmic medications in glaucoma clinic visits had discrepancies between the structured medication list and the progress notes [1]. Such inconsistencies (e.g., wrong-eye designations, outdated copied notes, or medication mismatches) may go unnoticed in busy practice and can lead to inappropriate treatments or billing issues [1]. Beyond documentation, serious clinical mistakes still occur despite safety protocols. A review of 143 ophthalmic surgical “confusions” (wrong-site or wrong-implant errors) showed that about two-thirds involved an incorrect intraocular lens implant and 14% involved a wrong-eye procedure or anesthesia block [2]. These kinds of errors not only undermine patient trust but can result in harm if a diagnosis is missed or a wrong treatment is administered. Notably, patients themselves often catch mistakes in their records: in a survey of over 22,000 outpatient note readers, 21% reported finding an error in their visit notes, and nearly half of those errors were deemed serious (commonly involving diagnoses or medications) [3]. This highlights the persistent challenge of ensuring accuracy in both documentation and clinical decision-making in ophthalmology.

Current strategies for catching errors rely largely on manual reviews and safety checks, which are time-consuming and prone to oversight [3]. Physicians already face heavy administrative burdens, as nearly half of their workday may be spent on EHR and desk work rather than direct patient care [4]. In ophthalmology clinics, where high patient volumes are common, there is a pressing need for tools that can assist in identifying mistakes (whether in the note or in the care plan) without adding to provider workload. Automated decision support that flags inconsistencies or likely errors could save valuable time and prevent errors from propagating, ultimately improving patient safety.

Advances in artificial intelligence suggest that large language models (LLMs), and especially large reasoning models (LRMs), might serve as such error-checking and decision-support assistants [5]. LLMs are neural network models trained on vast text corpora, enabling them to interpret context and generate human-like text [5]. Modern LLMs are built on the Transformer architecture, which uses self-attention with positional encodings; encoder-only variants (e.g., BERT) are well-suited to classification/extraction, decoder-only models (e.g., GPT) to open-ended text generation, and encoder–decoder systems (e.g., T5) to sequence-to-sequence tasks [6,7,8,9,10].

In medicine, these models have demonstrated strong capabilities; models like GPT-4 have even passed rigorous medical licensing exams, indicating a robust level of medical reasoning [5]. Early applications of LLMs in healthcare include drafting clinical notes, autocompleting plans, and summarizing patient encounters [5]. For instance, an LLM “co-pilot” integrated into an ophthalmology EHR could theoretically cross-check a note and alert the clinician if a left-eye treatment is documented even though the right eye was supposed to be treated, or if a planned medication deviates from standard dosing. By leveraging their ability to parse and generate text, LLMs might double-check both the documentation and the clinical logic of a note, functioning as a real-time assistant to catch oversights that busy clinicians might miss.

Initial experiments with LLMs in clinical documentation and decision support are encouraging yet cautionary. These models can produce plausible, well-structured clinical text, and they often correctly recall medical facts or suggestions [9]. In one pilot study, an LLM was used to generate ophthalmology clinic plan summaries; it successfully produced comprehensive plans, but it also omitted important details and sometimes offered nonsensical suggestions, underscoring that the model’s output cannot be trusted blindly [10]. Moreover, in our previous studies, GPT-4 performed on par with human doctors in clinical decision making in emergency ophthalmology, and similar performance was observed with an LRM (o1 model) in emergency internal medicine [11,12].

In this study, we aimed to comprehensively evaluate four contemporary LLMs (two closed and two open-source models): o3 (closed source), DeepSeek-v3-r1 (open source), MedGemma-27b (open source), and GPT-4o (closed source), in medical error detection in Ophthalmology. The evaluation is conducted using real-world patient cases to assess the models’ practical applicability and accuracy in high-stakes medical scenarios. Furthermore, with this evaluation, our goal was to elucidate the reliability of state-of-the-art open-source LLMs in medical error checking, potentially paving the way for real-world deployment.

2. Materials and Methods

2.1. Study Design and Setting

We conducted a prospective, comparative evaluation of large language models (LLMs) for error checking in ophthalmology clinical documentation. The objective was to assess each model’s ability to (i) locate the predefined error in a clinician-authored report and (ii) recommend an appropriate fix, while also quantifying the usefulness of any additional issues the model flagged. Anonymized encounter notes were drawn from the Emergency Ophthalmology ward of the University Hospital of Split (Croatia). Prior to any model interaction, reports were de-identified by removing direct identifiers (e.g., names, personal identifiers, time stamps, contact information) and any residual structured fields that could reveal identity. Institutional Review Board/Ethics Committee approval was obtained from the University Hospital of Split (Class: 520-03/24-01/100, Reg. No.: 2181-147/01-06/LJ.Z.-24-02). The requirement for informed consent was waived due to the use of de-identified records. The research adhered to the tenets of the Declaration of Helsinki. The study workflow is shown in Figure 1.

2.2. Case Selection and Reference Standard

Eligible notes were complete ophthalmology encounters containing the reason for visit, signs and symptoms, diagnostic procedures, and the final diagnosis and treatment. Incomplete or structurally inconsistent notes were excluded. Each included note contained one clinically salient documentation error (e.g., wrong laterality, mis-specified medication/dose, discordant examination finding, or an internal contradiction across sections), which was introduced into the report by two expert ophthalmologists with 6 and 10 years of work experience. The reference standard for the target error was established in advance by faculty ophthalmologists, who annotated the exact erroneous statement and its correct resolution. These annotations served as the ground truth against which model outputs were graded.

2.3. Models and Access

We evaluated four contemporary LLMs (two closed and two open-source models): o3 (closed source), DeepSeek-v3-r1 (open source), MedGemma-27b (open source), and GPT-4o (closed source). MedGemma-27b is licensed under “Health AI Developer Foundations License”, while Deepseek-v3-r1 is under the “MIT License”. Models were accessed via their official APIs or web interfaces, depending on availability at the time of evaluation. All prompts were issued programmatically in Python (v3.10) to ensure reproducibility and uniform request formatting. No model-specific fine-tuning was performed.

2.4. Prompting Protocol

Each model received the full de-identified note and a standardized instruction to act as an expert ophthalmologist auditing the document for clinical/documentation errors. The instruction requested the model to:

(i) Identify the error precisely (quote or point to the offending text and state what is wrong),

(ii) Explain why it is an error (clinical or documentation rationale), and

(iii) Propose a correction that resolves the issue (e.g., corrected laterality, medication/dose, or reconciled finding).

Models were further asked to list any additional issues they believed to be present. To reduce variance across systems, the same role and task wording was used for all models; temperature and other sampling parameters were kept at conservative/default values to prioritize determinism and traceability. Ground-truth error descriptions and corrections were not included in the prompt. The exact prompt is provided in the Supplementary Materials.
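As an illustration of the protocol above, the following minimal sketch shows how a uniform audit request could be assembled in Python. The instruction wording, the constant `AUDIT_INSTRUCTION`, and the function `build_audit_messages` are hypothetical; the exact prompt used in the study is the one in the Supplementary Materials and may differ.

```python
# Hypothetical paraphrase of the audit instruction; NOT the study's exact prompt.
AUDIT_INSTRUCTION = (
    "You are an expert ophthalmologist auditing a clinical note for "
    "clinical or documentation errors.\n"
    "1. Identify the error precisely: quote the offending text and state what is wrong.\n"
    "2. Explain why it is an error (clinical or documentation rationale).\n"
    "3. Propose a correction that resolves the issue.\n"
    "Then list any additional issues you believe are present."
)


def build_audit_messages(note_text: str) -> list[dict[str, str]]:
    """Return a chat-style message list that is identical for every model.

    The ground-truth error annotation is deliberately not part of the input.
    """
    if not note_text.strip():
        raise ValueError("note_text must not be empty")
    return [
        {"role": "system", "content": AUDIT_INSTRUCTION},
        {"role": "user", "content": note_text},
    ]
```

Keeping the message construction in one function is one way to guarantee that every model sees the same role and task wording; only the transport layer (API client or web interface) would then differ per model.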

2.5. Outcomes and Grading Rubric

For each case–model output, grading was performed independently by two expert ophthalmologists (with 6 and 10 years of work experience) using a three-component rubric that separates (i) successful localization of the predefined target error, (ii) usefulness of any additional issues the model flagged, and (iii) the quality of the proposed fix. Raters were blinded to model identity and graded from the de-identified note plus the model’s response only. There was no statistically significant difference between the two raters’ scores (p = 0.74, Wilcoxon signed-rank test), indicating no systematic scoring bias between them. Moreover, Cohen’s kappa between the raters was 0.85 for the o3 model, 0.81 for MedGemma-27b, and 0.88 for GPT-4o, indicating substantial agreement between the raters.
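For readers less familiar with the agreement statistic, a minimal sketch of Cohen’s kappa is given below. It assumes the unweighted form; the text does not state whether a weighted variant was used for the ordinal scores, and the function name `cohens_kappa` is illustrative.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two raters scoring the same items."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of items on which both raters gave the same score.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement from each rater's marginal category frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical category throughout
    return (observed - expected) / (1 - expected)
```

For example, `cohens_kappa([1, 1, 0, 0], [1, 0, 0, 0])` gives 0.5: the raters agree on 3 of 4 items (0.75) while chance agreement is 0.5.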

2.6. Error Located (“Found Mistake”)

This binary endpoint captures whether the model correctly identified the pre-specified target error embedded in the note. A score of 1 required specific and explicit localization of the target error, typically by quoting or unambiguously paraphrasing the offending statement, pointing to its section (e.g., diagnosis, medication list, laterality in the exam), and stating what is wrong in a way that matches the reference standard. Merely describing a general problem class (“there may be a laterality issue”) without tying it to the actual erroneous statement was scored 0. If the model highlighted only non-target problems (even if clinically valid), the “Found mistake” score remained 0; those observations were instead considered under “Relevance of other issues.” When multiple errors were mentioned, the presence of the target among them with sufficient specificity was necessary and sufficient for a score of 1. If the target error was only partially captured (e.g., “dose is wrong” without specifying the correct dose or without pinpointing where), raters judged whether the description still uniquely identified the target; otherwise it was scored 0. Polite hedging (“possible”, “may”) did not reduce the score if specificity was otherwise met.

2.7. Relevance of Other Issues (1–4)

This ordinal rating reflects the clinical correctness, significance, and actionability of additional problems flagged by the model beyond the target error. Raters assessed these holistically across all extra items the model raised in that case. The lowest anchor (1) was used when additional flags were incorrect, trivial, or hallucinated, or when they lacked enough detail to guide action. A typical rating of 2 indicated partially correct or low-impact findings (e.g., broadly sensible documentation advice that did not materially change care, or correct but generic recommendations already contained in the note). A rating of 3 reflected mostly correct and clinically pertinent observations that would improve the note or decision-making but with minor omissions or limited specificity. The highest anchor (4) required clear, correct, and materially useful observations, e.g., pointing out a missing contraindication check, an overlooked essential diagnostic step given the presented signs, or a discordance between sections that could alter management. Redundant restatements of information already present in the note did not contribute to higher scores; conversely, concise, non-duplicative insights with explicit rationale were rewarded. When the model produced a mixture of useful and spurious points, raters weighted overall clinical value and specificity; a single serious false claim typically capped the score at 1–2.

2.8. Overall Recommendation Quality (1–4)

This ordinal rating summarizes whether the proposed fix for the identified error was appropriate, specific, and actionable within current ophthalmic practice. A score of 1 was assigned when the recommendation was unsafe or clearly inappropriate (e.g., endorsing the erroneous laterality or proposing a non-indicated drug), or so vague that it could not be implemented. A 2 indicated partial appropriateness, for example, recognizing the right direction but omitting essential elements (dose, route, timing, follow-up, or documentation amendments), or proposing a correction that was too generic to execute reliably. A 3 denoted a mostly appropriate, implementable fix with minor deficiencies in completeness or citation of indications. A 4 required a fully appropriate, guideline-concordant correction that resolves the specific error, states precisely what should change in the note (e.g., “OS → OD in diagnosis and treatment sections”), and, where relevant, includes concrete parameters (e.g., medication name, dose, route, frequency, duration), follow-up, and any safety checks. Raters referenced contemporaneous standard-of-care to judge appropriateness; stylistic improvements without clinical consequence did not increase the score. Identification of the target error and recommendation quality were scored independently: a model could receive 0 on “Found mistake” yet still obtain a recommendation score above 1 for generally sensible documentation advice, and vice versa.

2.9. Adjudication Rules and Edge Cases

Ambiguity was resolved in favor of patient-safety and specificity: vague or hedged language without clear linkage to the erroneous statement did not qualify as “found.” Listing multiple candidate errors without prioritization counted only if the target was explicitly among them. Meta-comments about formatting or grammar did not affect scores unless they bore on clinical correctness. When models proposed several mutually inconsistent fixes, raters evaluated the net implementability and safety of the most emphasized recommendation; inconsistency generally reduced the overall score. All three outcomes were recorded per case–model pair; the “Relevance” score was a holistic judgment across all extra issues in that response rather than an average over items.
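The three outcomes recorded per case–model pair can be represented as a small validated record. The sketch below is illustrative only; the class name `Grade` and its field names are assumptions, not the study’s actual data schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Grade:
    """One rater's scores for a single case-model pair (hypothetical schema)."""
    case_id: str
    model: str
    found_mistake: int  # binary endpoint: 0 or 1
    relevance: int      # ordinal 1-4, holistic across all extra issues
    overall: int        # ordinal 1-4, quality of the proposed fix

    def __post_init__(self):
        if self.found_mistake not in (0, 1):
            raise ValueError("found_mistake must be 0 or 1")
        for name in ("relevance", "overall"):
            if getattr(self, name) not in (1, 2, 3, 4):
                raise ValueError(f"{name} must be an integer from 1 to 4")
```

Validating at construction time means an out-of-range score is rejected when it is entered rather than surfacing later in the statistical analysis.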

2.10. Statistical Analysis

All analyses were performed at the case level with a within-case (repeated-measures) design because each note was graded against every model. The primary endpoint was binary error localization (“found mistake”: 0/1). Secondary endpoints were Relevance of other issues and Overall recommendation quality, each rated on a 1–4 ordinal scale.

For the binary endpoint, we report accuracy (proportion of cases with “found = 1”) and 95% exact binomial (Clopper–Pearson) confidence intervals for each model. For ordinal endpoints, we report means ± SD and medians [IQR]. Means with 95% CIs for figures were computed as mean ± t_{0.975, n − 1} · SE; these are presented for interpretability but inferential tests on ordinal outcomes used non-parametric procedures.
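To make the exact binomial interval concrete, the sketch below computes a Clopper–Pearson interval using only the Python standard library, solving for the bounds by bisection. The study itself reports using SciPy’s routines; this dependency-free version is an illustrative equivalent, and the function names are assumptions.

```python
from math import comb


def _binom_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def _solve_increasing(g, iters=100):
    """Bisection root of a function that increases from negative to positive on (0, 1)."""
    lo, hi = 0.0, 1.0
    for _ in range(iters):
        mid = (lo + hi) / 2
        if g(mid) < 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def clopper_pearson(k, n, alpha=0.05):
    """Exact two-sided (1 - alpha) confidence interval for k successes in n trials."""
    if n <= 0 or not 0 <= k <= n:
        raise ValueError("require n > 0 and 0 <= k <= n")
    # Lower bound: p such that P(X >= k | p) = alpha / 2.
    lower = 0.0 if k == 0 else _solve_increasing(
        lambda p: (1 - _binom_cdf(k - 1, n, p)) - alpha / 2)
    # Upper bound: p such that P(X <= k | p) = alpha / 2.
    upper = 1.0 if k == n else _solve_increasing(
        lambda p: alpha / 2 - _binom_cdf(k, n, p))
    return lower, upper
```

Calling `clopper_pearson(123, 129)` should give approximately the 90.2–98.3% interval reported for o3 in Section 3.1.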

For across-model comparisons of the binary outcome, we compared models with Cochran’s Q test (k-sample extension of McNemar) on complete matched cases (i.e., cases with valid ratings for all models; n = 128). When Cochran’s Q was significant, we performed pairwise McNemar tests (two-sided, exact binomial on discordant pairs). For each pair, we report the counts of discordant cases, the odds ratio with Haldane–Anscombe 0.5 continuity correction, and its 95% CI from the log-OR standard error. Pairwise McNemar p-values were not multiplicity-adjusted (pre-specified primary contrasts).
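The pairwise procedure described above can be sketched as follows, using only the standard library. It takes the two discordant counts (cases where only the first model, or only the second, found the error). Function names are illustrative, and the normal quantile 1.959964 is assumed for the 95% interval.

```python
from math import comb, exp, log, sqrt


def mcnemar_exact(b, c):
    """Two-sided exact McNemar p-value from discordant counts b and c."""
    n = b + c
    if n == 0:
        return 1.0
    # Under H0 each discordant pair falls on either side with probability 0.5.
    tail = sum(comb(n, i) for i in range(min(b, c) + 1)) / 2**n
    return min(1.0, 2 * tail)


def paired_odds_ratio(b, c, z=1.959964):
    """Odds ratio b/c with Haldane-Anscombe 0.5 correction and a log-scale CI."""
    b_adj, c_adj = b + 0.5, c + 0.5
    odds_ratio = b_adj / c_adj
    se = sqrt(1 / b_adj + 1 / c_adj)  # standard error of log(OR)
    return (odds_ratio,
            exp(log(odds_ratio) - z * se),
            exp(log(odds_ratio) + z * se))
```

With the o3 vs. DeepSeek-v3-r1 discordant counts from Section 3.1 (6 vs. 1), `mcnemar_exact(6, 1)` returns 0.125 and `paired_odds_ratio(6, 1)` returns roughly (4.33, 0.73, 25.58), in line with the reported values.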

For ordinal outcomes, we used Friedman’s test for k-sample repeated-measures comparisons across models (complete matched cases). Significant Friedman tests were followed by pairwise Wilcoxon signed-rank tests (two-sided) with Holm step-down adjustment within each endpoint family (Relevance tested separately from Overall). For Wilcoxon contrasts, we report the effect size (|r|).
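The Holm step-down adjustment applied to each family of pairwise p-values can be sketched in a few lines. This is a generic implementation of the standard procedure, not the study’s own code; the function name is illustrative.

```python
def holm_adjust(p_values):
    """Holm step-down adjusted p-values, returned in the input order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # The smallest p-value is multiplied by m, the next by m - 1, and so on.
        candidate = min(1.0, (m - rank) * p_values[idx])
        # Enforce monotonicity so adjusted values never decrease with rank.
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted
```

For instance, the raw p-values 0.01, 0.04, and 0.03 become 0.03, 0.06, and 0.06 after adjustment: the middle-ranked value (0.03 × 2 = 0.06) sets a floor for the largest one.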

Descriptive statistics for each model used all available ratings. Inferential tests (Cochran’s Q, Friedman, and all pairwise post hocs) were conducted on complete-case matched sets to preserve within-case pairing; no imputation was performed. The final matched sample across all four models contained n = 128 cases.
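The complete-case restriction and the omnibus test on the binary endpoint can be illustrated with the sketch below. The input layout (a dictionary of per-case ratings keyed by model name) and the function names are assumptions made for the example; the p-value would come from a chi-square distribution with k − 1 degrees of freedom, which is not computed here.

```python
def complete_cases(ratings, models):
    """Keep only cases that have a rating for every model (no imputation).

    ratings: {case_id: {model_name: 0 or 1}}  (hypothetical layout)
    """
    return {cid: r for cid, r in ratings.items()
            if all(r.get(m) is not None for m in models)}


def cochrans_q(rows):
    """Cochran's Q statistic and degrees of freedom for matched binary data.

    rows: one list per case, holding a 0/1 outcome for each of the k models.
    """
    k = len(rows[0])
    col_totals = [sum(col) for col in zip(*rows)]  # successes per model
    row_totals = [sum(r) for r in rows]            # successes per case
    grand_total = sum(row_totals)
    denom = sum(r * (k - r) for r in row_totals)
    if denom == 0:
        raise ValueError("all cases are concordant; Q is undefined")
    q = k * (k - 1) * sum((c - grand_total / k) ** 2 for c in col_totals) / denom
    return q, k - 1
```

Cases in which all models agree contribute nothing to the denominator, so the statistic is driven entirely by cases where the models disagree, which is the same logic as the pairwise McNemar tests.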

The binary endpoint is naturally modeled with paired nominal tests (Cochran’s Q/McNemar). Ordinal endpoints were analyzed with rank-based repeated-measures methods (Friedman/Wilcoxon), which make no distributional assumptions and align with the measurement level of 4-point scales. Means and t-intervals are displayed for communication and to allow CI bars in figures; inference relies on non-parametric tests. All tests were two-sided with α = 0.05. Exact p-values are reported where available.

All analyses were implemented in Python (pandas, SciPy, NumPy, matplotlib). Exact binomial confidence intervals and McNemar p-values used SciPy’s routines; rank-based tests used SciPy’s Friedman and Wilcoxon implementations; Holm adjustments were applied programmatically.

3. Results

We analyzed 129 ophthalmology reports graded against four large language models (LLMs): o3 (closed-source), DeepSeek-v3-r1 (open-source), MedGemma-27b (open-source), and GPT-4o (closed-source). Complete-case comparisons across all four models were conducted on the 128 reports with all ratings present.

To provide further context, the case set spanned a wide range of ophthalmic conditions. The majority of cases involved conjunctival disease, followed by eyelid/orbital pathology, retinal/macular disease, and trauma-related presentations.

Table 1 summarizes the distribution of diagnoses across ocular disease groups.

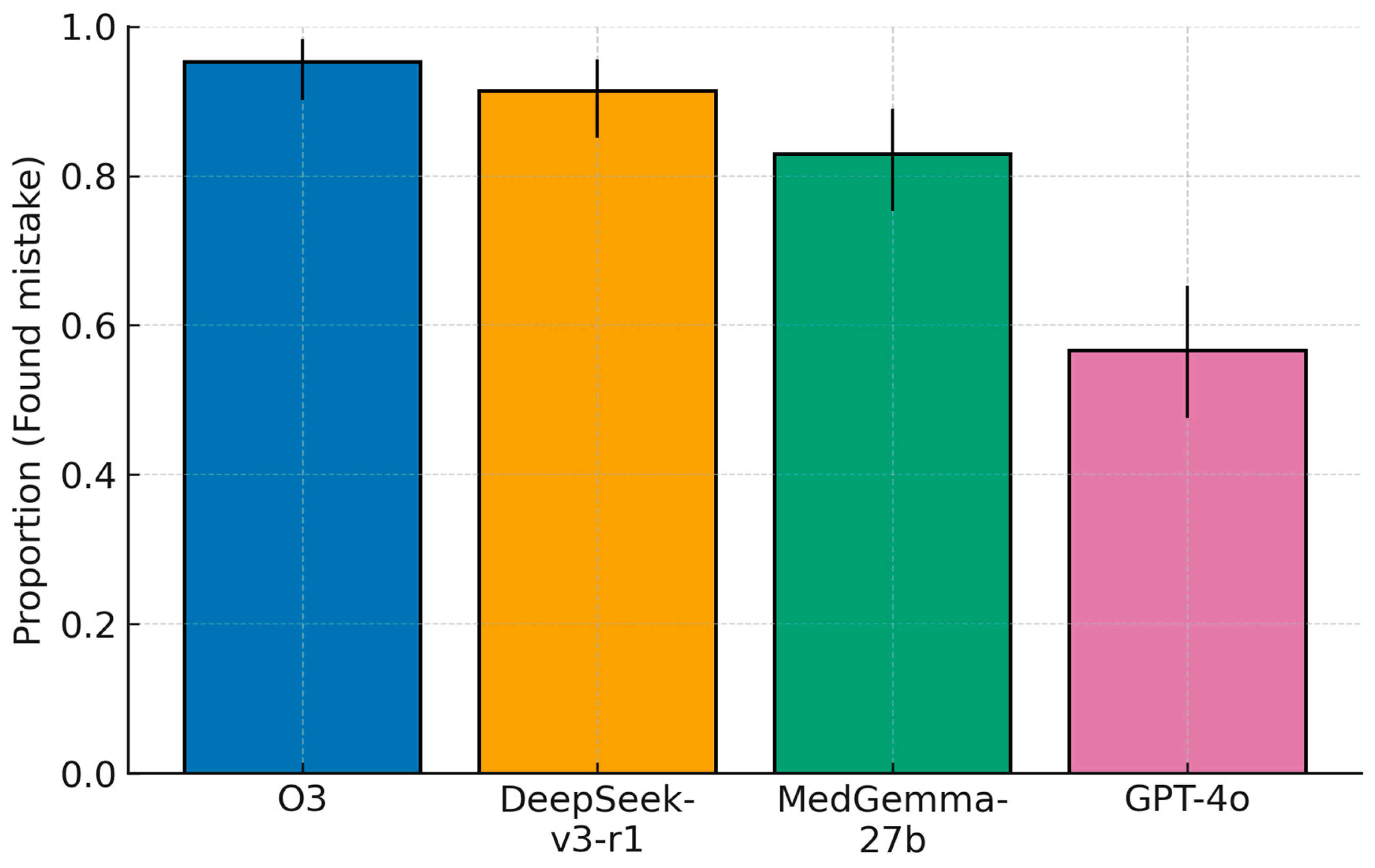

3.1. Primary Outcome: Error-Localization Accuracy

Error localization differed significantly across models (Cochran’s Q = 91.06, df = 3, p < 1 × 10^−16). Model-wise accuracies (95% CIs) were: o3, 95.3% (123/129; 90.2–98.3); DeepSeek-v3-r1, 91.4% (117/128; 85.1–95.6); MedGemma-27b, 82.9% (107/129; 75.3–89.0); and GPT-4o, 56.6% (73/129; 47.6–65.3). Pairwise McNemar tests on the 128 matched cases indicated a numerical advantage for o3 over DeepSeek-v3-r1 that did not reach significance (discordant = 7; 6 o3-only vs. 1 DeepSeek-only correct; p = 0.125; OR = 4.33, 95% CI 0.73–25.58). o3 exceeded MedGemma-27b (p = 4.02 × 10^−4; discordant = 20, 18 vs. 2; OR = 7.40, 1.98–27.72), and both o3 and DeepSeek-v3-r1 were markedly superior to GPT-4o (o3 vs. GPT-4o: p = 3.55 × 10^−15; discordant = 49, 49 vs. 0; OR = 99.00, 6.11–1605.10; DeepSeek-v3-r1 vs. GPT-4o: p = 1.34 × 10^−12; discordant = 46, 45 vs. 1; OR = 30.33, 5.96–154.28). DeepSeek-v3-r1 also exceeded MedGemma-27b (p = 0.0266; discordant = 21, 16 vs. 5; OR = 3.00, 1.14–7.87), and MedGemma-27b surpassed GPT-4o (p = 1.96 × 10^−6; discordant = 49, 41 vs. 8; OR = 4.88, 2.33–10.21). Overall, the hierarchy for the primary endpoint was o3 (closed-source) ≳ DeepSeek-v3-r1 (open-source) > MedGemma-27b (open-source) >> GPT-4o (closed-source) (Figure 2), with the o3–DeepSeek contrast limited by only seven discordant cases.

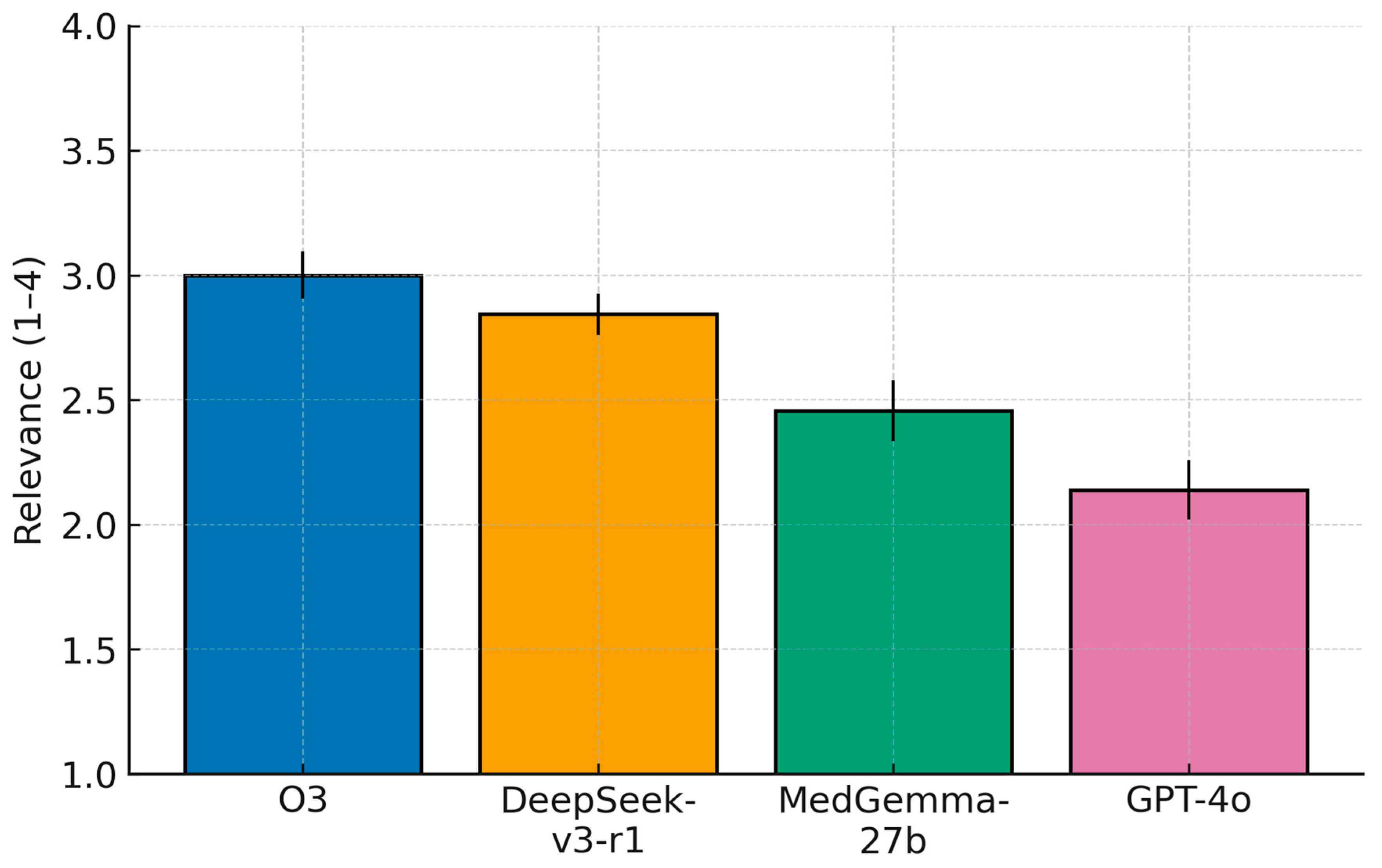

3.2. Secondary Outcomes: Relevance and Overall Recommendation Quality

Ordinal ratings differed strongly across models (Friedman; Relevance: χ²(3) = 131.65, p = 2.39 × 10^−28; Overall: χ²(3) = 148.95, p = 4.44 × 10^−32). Mean scores (±95% CI) followed a consistent pattern. For Relevance, o3 3.00 (2.91–3.09), DeepSeek-v3-r1 2.84 (2.76–2.93), MedGemma-27b 2.46 (2.33–2.58), and GPT-4o 2.14 (2.02–2.26). For Overall, o3 3.28 (3.16–3.40), DeepSeek-v3-r1 3.02 (2.90–3.14), MedGemma-27b 2.55 (2.41–2.69), and GPT-4o 2.09 (1.95–2.22).

Post hoc Wilcoxon signed-rank tests with Holm correction (n = 128) showed large, consistent advantages of o3 and DeepSeek-v3-r1 over GPT-4o, and of o3/DeepSeek-v3-r1 over MedGemma-27b. For Relevance, effect sizes (|r|) were 0.72 (o3 > GPT-4o; adjusted p = 2.68 × 10^−15), 0.65 (DeepSeek-v3-r1 > GPT-4o; 1.31 × 10^−12), 0.52 (o3 > MedGemma-27b; 1.63 × 10^−8), 0.43 (DeepSeek-v3-r1 > MedGemma-27b; 4.01 × 10^−6), 0.33 (MedGemma-27b > GPT-4o; 4.69 × 10^−4), and 0.25 (o3 > DeepSeek-v3-r1; 5.02 × 10^−3). For Overall, |r| values were 0.76 (o3 > GPT-4o; 6.61 × 10^−17), 0.66 (DeepSeek-v3-r1 > GPT-4o; 4.63 × 10^−13), 0.57 (o3 > MedGemma-27b; 5.20 × 10^−10), 0.43 (DeepSeek-v3-r1 > MedGemma-27b; 2.83 × 10^−6), 0.41 (MedGemma-27b > GPT-4o; 7.82 × 10^−6), and 0.30 (o3 > DeepSeek-v3-r1; 5.75 × 10^−4). These findings place o3 (closed-source) consistently at the top of both ordinal scales, followed closely by DeepSeek-v3-r1 (open-source), with MedGemma-27b (open-source) intermediate and GPT-4o (closed-source) lowest (Figure 3 and Figure 4).

4. Discussion

This study provides a head-to-head comparison of closed versus open-source LLMs as medical error checkers in ophthalmology clinical decision making. We found that model performance varied markedly, with the closed-source o3 system ranking first on every metric. o3 correctly identified the planted medical error in ~95% of cases, compared to about 91% for the best open model (DeepSeek-v3-r1), 83% for the second open model (MedGemma-27b), and only ~57% for GPT-4o (closed-source). In practical terms, the top model (o3) missed the error in only a handful of notes, whereas GPT-4o failed to find it in more than 40% of notes. A similar ranking emerged for the quality of the models’ suggestions beyond the primary error: o3 not only caught mistakes but also provided the most relevant additional advice and high-quality corrections (mean recommendation score ≈ 3.3 out of 4), followed by DeepSeek-v3-r1 (≈3.0), then MedGemma-27b (≈2.6), with GPT-4o lowest (≈2.1). These differences were statistically significant. In short, the best proprietary model achieved the highest accuracy and delivered the most useful recommendations, the open-source models also showed good performance (especially DeepSeek-v3-r1, the deepseek-reasoner model), and the baseline GPT-4o model lagged behind. We believe the reason for this gap in performance is that GPT-4o is a non-reasoning model (when compared to o3 and deepseek-reasoner), and hence it spends less compute to “reason” comprehensively through a complex medical report. In addition, the lag in performance relative to MedGemma-27b could be explained by differences in the training corpus (as MedGemma-27b is specifically trained and fine-tuned on medical data) [13]. Importantly, the ≈5% of cases that o3 missed were not, in our reviewers’ judgment, systematically “more serious” than the ≈95% that were caught. In most of these misses, the ophthalmologist graders had already flagged the note as ambiguous or potentially unclear, typically because more than one correction could be defended (e.g., inconsistency between history and plan where either side could be the source of truth, or discordant laterality across two sections written at different times). In such cases, o3 often surfaced one plausible inconsistency but did not phrase it in the exact way required by our reference standard, and was therefore scored as 0. A smaller subset of misses were genuinely harder cases (tightly coupled medication–timing or follow-up discrepancies) that also challenged the human raters. Thus, the residual error rate mostly reflects ambiguity and rubric strictness, not a concentration of clinically more dangerous mistakes.

Deploying LLMs in a real-world medical setting favors open-source models, because inference can be run locally so that no sensitive or proprietary hospital data is sent to external APIs. This approach mitigates many of the concerns around HIPAA, GDPR, and medical data sensitivity in general. Our results highlight the good performance of deepseek-reasoner (≈91% of mistakes found) and MedGemma-27b (≈83% of mistakes found), both of which can be deployed locally given sufficient compute resources.

Furthermore, deploying these open-source models locally in a hospital setting requires specific hardware to ensure efficient inference while maintaining data privacy. For the deepseek-reasoner model, which is the full 671 billion parameter variant optimized for advanced reasoning tasks, deployment demands a high-end multi-GPU server setup such as at least 16× NVIDIA A100 80 GB cards (providing around 1280 GB VRAM) or equivalent, paired with at least 2 TB of DDR4 ECC system RAM, robust CPUs like dual AMD EPYC or Intel Xeon processors with 32+ cores each, and substantial storage (e.g., 16 TB NVMe SSD) to handle model weights and operations; quantization techniques like FP8 can optimize memory usage, but the scale still suits enterprise-level data centers rather than consumer hardware [14]. On the other hand, running MedGemma-27b, a 27 billion parameter model fine-tuned for medical tasks, necessitates at least 32 GB VRAM (e.g., via an RTX 4090 or A100 GPU) with quantization to reduce the memory footprint, 50 GB storage, and a robust CPU like an Intel Core i7 or AMD Ryzen 7 for supporting operations [15]. These requirements allow hospitals to integrate such LLMs into clinical workflows without external data transmission, although initial setup may involve IT expertise for optimization and multi-GPU scaling.
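The scale of these hardware figures can be sanity-checked with simple arithmetic on the model weights alone. The sketch below is a back-of-envelope estimate under stated assumptions: it counts only weight storage (1 GB taken as 10^9 bytes) and ignores the KV cache, activations, and framework overhead, which add to the real requirement. The function name is illustrative.

```python
def weight_memory_gb(params_billion, bits_per_param):
    """Approximate memory for model weights only, in GB (10^9 bytes)."""
    # params_billion * 1e9 parameters * (bits / 8) bytes each, divided by 1e9.
    return params_billion * bits_per_param / 8


# 671B-parameter model: FP8 vs. 16-bit weights.
deepseek_fp8 = weight_memory_gb(671, 8)     # 671.0 GB
deepseek_16bit = weight_memory_gb(671, 16)  # 1342.0 GB

# 27B-parameter model: 16-bit vs. 4-bit quantized weights.
medgemma_16bit = weight_memory_gb(27, 16)   # 54.0 GB
medgemma_4bit = weight_memory_gb(27, 4)     # 13.5 GB

# Aggregate VRAM of the multi-GPU configuration mentioned in the text.
total_vram_gb = 16 * 80                     # 1280 GB
```

On these assumptions, the 671B model’s FP8 weights (about 671 GB) fit within 1280 GB of aggregate VRAM with headroom for runtime state, whereas 16-bit weights alone would exceed it. The 27B model needs roughly 54 GB at 16-bit, which is why quantization matters for single-GPU deployment.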

Despite the differences in model performance, all the evaluated LLMs showed at least some capacity to function as a “second pair of eyes” on clinical notes. This has promising implications for ophthalmic practice. In our emergency setting, each note was intentionally given one significant error; in real life, such errors might occur infrequently, but when they do, the consequences can be serious. For example, a wrong-eye notation in a procedure note can lead to a wrong-site surgery if not corrected, or a mistyped drug dosage could result in under- or overdosing [2,16]. Our findings demonstrate that a well-designed LLM system could catch a high proportion of such errors automatically. The top models not only flagged the primary inserted mistake in most cases, but often went further by pointing out other issues (for instance, minor inconsistencies or suboptimal documentation practices) with reasonable accuracy. A related implication is that our experiment used a single, fixed, text-only audit prompt; we did not exploit in-context learning. Recent ophthalmic work with GPT-4o has shown that providing a handful of exemplar cases plus a structured, slot-filled prompt can (i) shift performance upward without any parameter updates, (ii) make the model emit ready-to-use clinical assets (HTML checklists, calculators, triage templates) from natural-language instructions, and (iii) expose model limits when the test case is out-of-distribution for the provided example [17]. In our setting, few-shot exemplars could have anchored the model to the exact style of error localization we rewarded (quote → explain → fix), potentially reducing the gap between models that tended to hedge or stay generic and those that were more audit-like.

An AI tool that flags “Dr. Smith, the diagnosis says left eye, but the treatment plan says right eye; please reconcile” could prevent a potential mishap before it ever reaches the patient. Likewise, LLM-generated prompts like “consider adding whether the patient’s visual function is correctable with glasses” (if an attestation is missing) could help clinicians meet documentation requirements for billing and quality of care [18]. This kind of assistance might be especially valuable in high-volume settings where attendings and residents are drafting numerous notes under time pressure. By reducing therapy and diagnostic errors, as well as improving documentation consistency and completeness, LLM auditors could enhance patient safety and continuity of care. Importantly, the models in our study sometimes raised false alarms or trivial points (reflected in lower “Relevance of other issues” scores for weaker models), so any AI suggestions would need clinician review, but on balance, a net improvement in patient care and documentation quality is conceivable. Such a system could function like a spelling-and-grammar checker, but for clinical logic and documentation coherence.

Several limitations of this study should be acknowledged. First, all notes came from a single emergency ophthalmology service within one academic center, using a relatively homogeneous documentation style and Croatian/English-mixed terminology; accordingly, our findings should be viewed as in-sample performance, not evidence of universal generalizability. LLM behavior can shift when EHR vendors/templates, language/locale, or ophthalmic subspecialty case mix (retina, cornea, glaucoma, oculoplastics) change. A logical next step is an external, multi-site validation that deliberately varies these factors and includes a temporal holdout to test whether the model ranking and absolute error-localization rates are preserved. Second, we tested only two open-source models, and the field is evolving quickly; newer or larger open models, or further fine-tuning, may narrow the gap we observed. Third, our grading of LLM outputs, while rigorous and supported by high inter-rater agreement (κ ≈ 0.8–0.9), still involved some subjective judgment on what constituted a “useful” suggestion; different experts might weight completeness or actionability differently. Finally, we focused on the models’ ability to catch a predefined, known error plus obvious additional issues and did not fully quantify false-positive alerts; excessive, low-yield flags could produce alert fatigue. Our “Relevance of other issues” metric partly captures this, but future work should measure specificity more directly.

Bringing LLM-based documentation auditors into clinical workflow will require careful thought regarding regulation and user interaction. From a regulatory standpoint, an AI that influences clinical documentation or decisions can be considered a form of clinical decision support software [19]. If it actively identifies errors and recommends corrections, one could argue it performs a medical “reasoning” function, potentially qualifying it as a medical device in many jurisdictions. Regulatory bodies such as the U.S. FDA and the European Commission are actively dealing with how to categorize and oversee LLM-driven tools [20,21]. Key concerns include ensuring patient safety (the AI should not introduce new errors or adverse recommendations), maintaining accountability (clinicians and institutions need clarity on who is responsible if the AI misses an error or suggests an incorrect change), and protecting patient data [21].

In summary, we showed that modern LLMs can accurately detect clinically salient errors in ophthalmology notes and generate actionable corrections, with a clear performance gradient: o3 (closed-source) ≳ DeepSeek-v3-r1 (open-source) > MedGemma-27b (open-source) >> GPT-4o (closed-source). While the top proprietary model achieved the highest accuracy and recommendation quality, the best open-source alternative approached its performance and offers compelling advantages for on-premise, privacy-preserving deployment. With careful workflow integration, LLM-based documentation auditing has the potential to reduce preventable errors and improve patient safety in ophthalmic care.