1. Introduction

The integration of artificial intelligence (AI) into organizational contexts represents one of the most profound transformations in contemporary work environments. AI acts as a powerful driver of change in work dynamics, reshaping tasks and influencing interpersonal interactions, strategic decision-making, and the emotional and cognitive meanings workers attach to their work [1]. However, despite its potential, the diffusion of AI within organizations is often accompanied by skepticism and resistance [2,3]. An accurate picture of workers’ attitudes toward AI is therefore crucial for understanding adoption dynamics [4].

Several studies in the field of human-AI interaction have employed traditional measures such as the Technology Acceptance Model [5], which assesses perceived usefulness and ease of use, thereby focusing more narrowly on the technical and functional characteristics of the technology itself while neglecting the specific features of the systems under investigation. More recent instruments, including the General Attitudes Toward Artificial Intelligence Scale [6] and the Smart Technology, Artificial Intelligence, Robotics, and Algorithms (STARA) Awareness Scale [7], aim to capture general orientations toward AI or workers’ perceptions of how smart technologies may influence their career prospects. Yet, although these tools address specific technologies more directly, they often lack contextual sensitivity, overlooking the organizational settings in which attitudes are shaped.

The traditional conception of attitude formation assumes a dichotomy between “subjects”, who evaluate, and “objects”, which are judged as more or less acceptable. This view neglects the dynamic, interactive relationship between individuals and their environment [8]. As Lakoff [9] highlights, the categories through which people organize reality do not depend on objects per se, but on the ways in which individuals interact with them: how they perceive, represent, and structure information, and how they engage with it through embodied experience [10]. Accordingly, attitudes towards AI in organizations should be understood as socially situated phenomena, shaped both by individual perceptions and by responses to the attitudes and actions of others. They cannot, therefore, be examined in isolation or in purely abstract terms, but only in relation to the specific organizational and social context in which they arise.

From an organizational perspective, two contextual factors can be considered particularly relevant in this field. First, organizational innovativeness may represent a key resource in an era of rapid transformation, enabling organizations to adapt to volatile environments and maintain competitive advantage. Innovation orientation is not merely a general predisposition toward change but a precursor to the implementation of innovation, organizational performance, and economic growth. Van de Ven [11] defined innovation orientation as “the development and implementation of new ideas by people who engage in transactions with others within an institutional context over time”. In this perspective, innovation orientation reflects both the willingness of members to adopt innovations and the extent to which management prioritizes and supports innovation processes. As Siguaw and colleagues [12] argue, long-term organizational success depends less on individual innovations than on an overall innovation orientation, which creates enduring capabilities for generating new ideas. Despite its importance, research has often focused on product and process innovation, paying limited attention to innovation orientation at the organizational level itself, and to how workers’ perceptions of this orientation may positively influence their attitudes towards the use of innovative products.

Secondly, the ethical culture of organizations may play a decisive role in shaping workers’ perceptions and responses to AI. Corporate ethics refers to the laws, norms, codes, or principles that guide morally acceptable decision-making in business operations and relationships. At its core, it reflects integrity and fairness in interactions with workers and clients [13]. Given the sensitive issues raised by AI [14], such as transparency, explainability, and accountability, the ethical dimension of the organizational environment becomes a critical factor in determining whether employees perceive AI adoption as legitimate and trustworthy when promoted by the organization itself.

On the individual level, attitude formation can also be framed within established psychological theories. Among these, Chaiken’s heuristic–systematic model (HSM; [15,16]) is particularly influential. The HSM posits two distinct routes of information processing: a heuristic route, based on cognitive shortcuts, and a systematic route, requiring greater effort and careful analysis. Since systematic processing is cognitively costly, individuals tend to favor the heuristic route whenever this does not jeopardize decision accuracy [17]. From this perspective, the HSM explains how prior behaviors and experiences, such as the perception of job performance supported by AI, may shape subsequent evaluations. Hence, workers’ past experiences with AI, whether positive or negative, can be decisive in shaping their attitudes.

Consequently, focusing exclusively on the technical features of AI systems is insufficient; equal attention must be devoted to the organizational factors and individual perceptions associated with their use [18,19].

Our study focuses on three underexplored dimensions, integrating contextual and individual factors: workers’ perceptions of organizational ethical culture, workers’ perceptions of organizational innovativeness, and workers’ perceptions of their own job performance supported by the use of AI within their professional roles. To this aim, it becomes essential to examine how these organizational and individual factors shape workers’ attitudes toward AI and influence its acceptance and integration in the workplace. Specifically, the study aims to address the following research questions:

How do workers’ perceptions of organizational ethical culture relate to their attitudes toward the use of AI in their work?

How does workers’ perception of their organization’s innovativeness influence their openness to adopting and integrating AI technologies?

To what extent do workers’ perceptions of their own job performance in relation to AI use affect their overall attitudes toward these technologies?

Hypothesis Development

Based on the research questions and the overall objective of the present study, specific hypotheses were formulated to be tested through the analysis of the collected data.

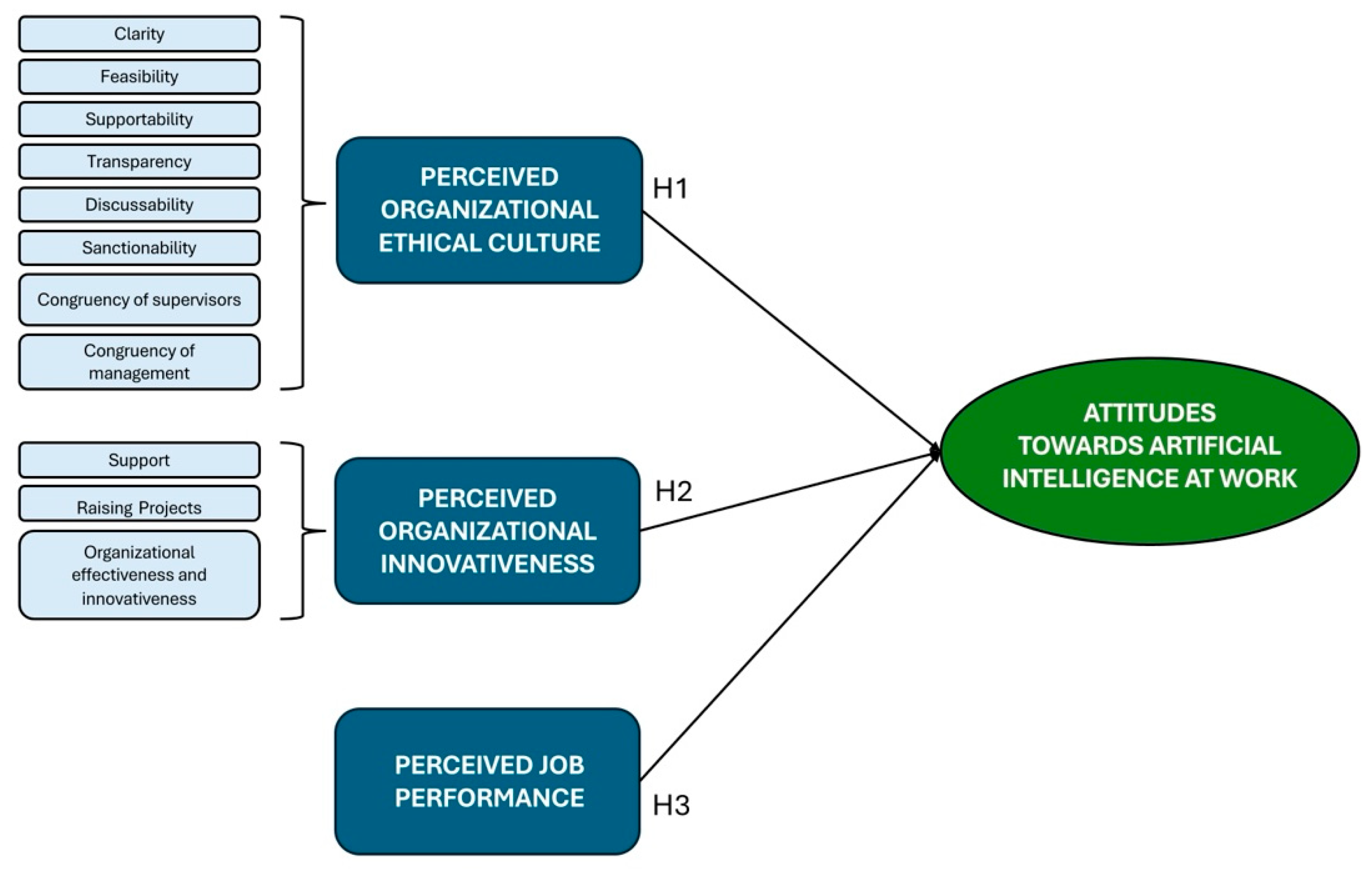

These hypotheses aim to translate theoretical questions into testable relationships between observed variables, in order to identify the factors that may affect workers’ acceptance of AI in organizational settings. In particular, the following hypotheses were formulated (Figure 1):

H1. Perceived organizational ethical culture has a significant impact on workers’ attitudes toward the use of AI in organizational contexts.

H2. Perceived organizational innovativeness has a significant impact on workers’ attitudes toward the use of AI in organizational contexts.

H3. Perceived job performance related to the use of AI has a significant impact on workers’ attitudes toward the use of AI in organizational contexts.

Although firmly grounded in the literature, these constructs have not been directly connected to attitudes toward AI, underscoring the exploratory nature of the present study. For these reasons, the hypotheses were not formulated with a specific direction (e.g., positive or negative impact). Instead, the actual directions of the relationships were left to be determined by the data.

2. Measures and Methods

After selecting the most suitable scales based on the literature review and the research questions, a structured process was initiated to develop and administer the questionnaire. The first phase involved the translation and linguistic adaptation of some of the selected scales. Next, the overall structure of the questionnaire was defined and prepared. This phase was followed by the contact and recruitment of Italian companies and professional organizations operating in both the public and private sectors, across different industries and of varying sizes, characterized by diverse organizational modalities (on-site, hybrid, and remote). Specifically, organizations were selected based on their willingness to participate in the study and the presence of work arrangements that involved, at least in part, the use of technologies for communication and coordination. Contact with organizations was primarily established via email, which included a brief description of the study and a link to the online questionnaire hosted on the Qualtrics platform. Data collection took place throughout April 2025.

2.1. Participants

The final analytic sample comprised N = 356 participants who completed the survey; a further n = 46 individuals discontinued after giving informed consent and were excluded. The gender distribution was approximately balanced (n = 177 women, n = 173 men, n = 6 other), with a mean age of M = 42.37 (SD = 11.36). Men were slightly older on average (M = 43.90, SD = 11.56) than women (M = 41.11, SD = 11.12).

Other sociodemographic variables were also explored. Concerning educational attainment, 41.8% of participants held a high school diploma, while 43.5% had completed a university degree or higher, indicating a relatively well-educated sample. Regarding occupational sector, the most represented were Banking (18.7%), Information Technology (13.4%), and Electronic/Electromechanical (13.2%), whereas moderately represented sectors included Construction, Consulting, and Training. Role distribution was also diverse, including employees (41.8%), managers (12.2%), executives (5.5%), consultants (10.9%), and workers (10.2%). Regarding work modality, the sample was distributed as follows: 3% fully remote, 52% fully on-site, and 31.3% hybrid.

Regarding AI use, 29.9% of participants reported using organization-provided AI tools, and 41.8% had experience with AI at work more generally. The most frequently used AI applications were aimed at automatic text generation, content production, translation, and fact-checking, whereas creative or specialized tools, such as music/video generation, social media management, recommendation systems, and reinforcement learning, were less commonly used. These results indicate that AI adoption remains partial and selective, with a clear preference for text- and information-focused applications.

Hypotheses were tested on a subsample of N = 154 participants who reported prior experience of AI use in their workplace. This subsample was necessary because only participants with AI experience could provide meaningful responses to items assessing perceived job performance in relation to AI, which were central to the study’s hypotheses.

2.2. Measures

To address the research questions, it was necessary to select tools capable of measuring the identified theoretical constructs. The selection process involved a careful review of the scientific literature, aiming to identify well-established, theoretically grounded scales that have already been applied in organizational contexts. Specifically, four scales were identified:

- Attitudes Towards Artificial Intelligence at Work (AAAW), to assess workers’ attitudes toward AI in the workplace [1];

- Corporate Ethical Virtues–Short Version (CEV), to measure workers’ perceptions of ethical culture within the organization [13];

- Inventory of Organizational Innovativeness (IOI), to investigate workers’ perceived orientation toward change and innovation within the organization [20];

- An adapted scale based on the In-Role Behavior Scale by Williams and Anderson [21], aimed at assessing workers’ perceived job performance related to AI use.

The AAAW scale [1] assesses workers’ attitudes toward AI in organizational settings through 25 items across six dimensions:

Perceived humanlikeness of AI: the extent to which individuals attribute human-like characteristics (desires, emotions, beliefs, free will) to AI. Example items: “AI has desires”; “AI has the ability to experience emotion”.

Perceived adaptability of AI: the perception of AI’s ability to learn, improve, and adapt to the work context. Example items: “AI learns from experience at work”; “AI adapts itself over time at work”.

Perceived quality of AI: the perceived reliability, accuracy, completeness, and clarity of the information provided by AI. Example items: “AI produces correct information”; “The information from AI is always up to date”.

AI use anxiety: the level of discomfort or anxiety experienced when using AI at work. Example items: “Using AI for work is somewhat intimidating to me”; “I would feel uneasy if I were given a job where I had to use AI”.

Job insecurity: concerns that AI may replace one’s role, diminish career prospects, or make specific skills obsolete. Example items: “I am worried that what I can do now with my work skills will be replaced by AI”; “I think my job could be replaced by AI”.

Perceived personal utility of AI: the extent to which AI is perceived as a useful tool that enhances skills and improves the work experience. Example items: “Using AI would allow me to have increased confidence in my skills at work”; “Using AI would give me greater control over my work”.

Three dimensions (Perceived adaptability, Perceived quality, and Perceived personal utility) reflect functional aspects of AI, while the other three (AI use anxiety, Job insecurity, Perceived humanlikeness) capture socio-emotional aspects. From a practical perspective, the AAAW is useful for organizations to monitor the impact of AI implementation and to design interventions, such as training programs, aimed at reducing anxiety and insecurity while enhancing perceived utility. Compared to the other previously mentioned scales, this instrument captures both the specificity of the technology and the context of investigation. The AAAW dimensions demonstrated good to excellent reliability: Perceived Humanlikeness of AI (α = 0.77), Perceived Adaptability of AI (α = 0.90), Perceived Quality of AI (α = 0.82), AI Use Anxiety (α = 0.89), Job Insecurity (α = 0.90), and Perceived Personal Utility of AI (α = 0.86).

To measure perceived organizational ethical culture, the Italian short version of the Corporate Ethical Virtues (CEV) model was used [13]. This version includes 24 items across eight dimensions (three items per dimension), each capturing a specific aspect of the ethical culture:

Clarity: workers’ perception of the clarity of organizational norms.

Feasibility: the extent to which workers feel able to act consistently with their values, given time and resources.

Supportability: perception of an environment characterized by shared commitment, respect for rules, and positive interpersonal relationships.

Transparency: visibility of actions by supervisors and colleagues within the organization.

Discussability: perceived opportunity to openly discuss ethical issues, including reporting and correcting unethical behaviors.

Sanctionability: perception that unethical behaviors are punished and ethical behaviors are rewarded.

Congruency of supervisors: alignment between supervisors’ behaviors and expected ethical values.

Congruency of management: coherence between top management behaviors and the ethical principles promoted within the organization.

Its multidimensional structure allows for a detailed assessment of ethical culture while remaining practical for survey administration.

The CEV scale dimensions showed acceptable to strong internal consistency: Clarity (α = 0.76), Supportability (α = 0.75), Transparency (α = 0.70), Discussability (α = 0.72), Congruency of Supervisors (α = 0.84), and Congruency of Management (α = 0.80). Two subscales, Feasibility (α = 0.63) and Sanctionability (α = 0.60), were slightly below the conventional threshold of 0.70, indicating moderate reliability, but were considered acceptable given both the multidimensional nature of this scale and the fact that it has been validated in an Italian context, as in the present research.

The Italian version of the IOI scale [20,22] was used to measure the perceived organizational innovativeness from workers’ perspectives, reflecting both individual openness to innovation and management support for innovative processes.

Originally, the IOI assessed nine dimensions at three levels:

- Organizational level: managerial support, project initiation, and communication processes.

- Interpersonal level: consultative leadership, teamwork integration and trust, peer support.

- Individual level: knowledge and skills, task stimulation.

The original 44-item version was reduced in subsequent validations, resulting in a 36-item scale. For the purposes of this study, three key dimensions were selected for their theoretical relevance and practical feasibility:

Support: the extent to which the organization provides resources, training, and recognition to foster innovation and creativity.

Raising projects: organizational encouragement for workers to propose ideas and engage in innovative initiatives.

Summary assessment items: workers’ overall perception of organizational effectiveness and innovativeness.

Selecting only these three dimensions allowed the survey to remain manageable while capturing the core aspects of an innovative organizational climate.

The IOI scale also demonstrated good reliability: Support (α = 0.89), Raising Projects (α = 0.87), and Summary Assessment of General Organizational Effectiveness and Innovativeness (α = 0.82).

Finally, perceived job performance in relation to AI was measured using four items adapted from the In-Role Behavior Scale by Williams and Anderson (1991) [21], focusing specifically on in-role behaviors (IRB), i.e., the completion of formally expected job tasks. The items were modified to explicitly reference AI (e.g., “Thanks to AI, I adequately complete my assigned tasks”; see Appendix A).

This approach allowed for a precise assessment of perceived work effectiveness enhanced by AI. The adapted scale showed excellent reliability (α = 0.94).
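For readers less familiar with the reliability coefficient reported for each scale, the following Python sketch shows how Cronbach’s α can be computed from item-level responses using the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of the total score). It is an illustration only: the response matrix is invented, the function name `cronbach_alpha` is hypothetical, and the software actually used for the reliability analyses in this study is not specified.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(items[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Sample variance of each item across respondents
    item_vars = [variance([row[j] for row in items]) for j in range(k)]
    # Sample variance of the summed scale score
    total_var = variance([sum(row) for row in items])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical data: 6 respondents x 3 items on a 1-5 response scale
responses = [
    [4, 5, 4],
    [2, 2, 3],
    [5, 4, 5],
    [3, 3, 3],
    [1, 2, 1],
    [4, 4, 5],
]
alpha = cronbach_alpha(responses)
print(f"alpha = {alpha:.2f}")
```

With only three items per dimension, as in the CEV short version, α tends to be lower than for longer scales measuring the same construct, which is one reason values slightly below 0.70 are sometimes tolerated for short subscales.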

2.3. Socio-Demographic Variables

In addition to the variables directly related to the research hypotheses, the questionnaire included several socio-demographic variables to better contextualize participants’ responses. These covered personal characteristics (such as gender, age, and educational level) and professional information (including organizational sector and job role). Finally, the questionnaire explored participants’ prior exposure to AI tools in the workplace, both in terms of organizationally provided solutions and individually adopted technologies.

2.4. Data Analysis

Before hypothesis testing, data were screened for completeness and suitability for regression analyses. Descriptive statistics were computed for all variables, and reliability analyses were conducted for multi-item scales to ensure internal consistency. Independent variables included dimensions of perceived organizational ethical culture, perceived organizational innovativeness, and perceived job performance related to AI, while dependent variables were the dimensions of the AAAW Scale.

Hypotheses were tested using hierarchical multiple regression analyses, with each AAAW dimension entered as a separate dependent variable. Predictor variables were entered in blocks corresponding to organizational ethical culture, organizational innovativeness, and perceived job performance. This allowed examination of the unique contribution of each predictor while controlling for the others. Given the exploratory nature of the study, analyses were two-tailed, and only statistically significant effects (p < 0.05) were interpreted.
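As a rough illustration of the block-wise procedure described above, the following Python sketch enters three predictor blocks cumulatively and reports R² and its change (ΔR²) at each step. Everything in it is an assumption made for illustration: the data are invented, each block is reduced to a single column (the blocks in the study contain several dimensions), the order of entry is only one possibility, and the function names `ols_r2` and `hierarchical_r2` are hypothetical. It is not the analysis script used in this study, and it omits the significance tests.

```python
def ols_r2(X, y):
    """R^2 of an ordinary least squares fit of y on the columns of X."""
    n = len(y)
    A = [[1.0] + list(row) for row in X]  # design matrix with intercept
    p = len(A[0])
    # Normal equations (A'A) b = A'y, stored as an augmented matrix
    M = [[sum(A[i][r] * A[i][c] for i in range(n)) for c in range(p)]
         + [sum(A[i][r] * y[i] for i in range(n))] for r in range(p)]
    # Gauss-Jordan elimination with partial pivoting
    for col in range(p):
        piv = max(range(col, p), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(p):
            if r != col:
                f = M[r][col] / M[col][col]
                M[r] = [a - f * b for a, b in zip(M[r], M[col])]
    beta = [M[r][p] / M[r][r] for r in range(p)]
    y_hat = [sum(b * a for b, a in zip(beta, row)) for row in A]
    y_bar = sum(y) / n
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, y_hat))
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)
    return 1 - ss_res / ss_tot


def hierarchical_r2(blocks, y):
    """Enter predictor blocks cumulatively; return (R^2, delta R^2) per step."""
    X = [[] for _ in y]
    steps, prev = [], 0.0
    for block in blocks:
        X = [row + list(new) for row, new in zip(X, block)]
        r2 = ols_r2(X, y)
        steps.append((r2, r2 - prev))
        prev = r2
    return steps


# Hypothetical scores for 8 respondents (one column per block for brevity)
ethics = [[3.0], [4.0], [2.0], [5.0], [3.5], [4.5], [2.5], [4.0]]
innovativeness = [[2.0], [3.5], [3.0], [4.0], [2.5], [4.5], [3.0], [3.0]]
performance = [[3.0], [4.0], [2.0], [4.5], [4.0], [5.0], [2.0], [3.5]]
ai_anxiety = [3.5, 2.5, 4.0, 1.5, 2.5, 1.5, 4.0, 3.0]  # example dependent variable

steps = hierarchical_r2([ethics, innovativeness, performance], ai_anxiety)
for i, (r2, delta) in enumerate(steps, start=1):
    print(f"step {i}: R2 = {r2:.3f}, delta R2 = {delta:.3f}")
```

The ΔR² at each step indicates how much additional variance the newly entered block explains beyond the blocks already in the model; in the study, this procedure would be repeated with each AAAW dimension as the dependent variable.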

4. Discussion

This study examined the factors influencing workers’ attitudes toward AI in organizational contexts, with a focus on perceived organizational ethical culture, perceived organizational innovativeness, and perceived job performance in relation to AI use. The findings underscore the multidimensional nature of AI acceptance, reinforcing prior claims that workplace attitudes toward innovative technologies cannot be explored in isolation, but only in relation to the specific organizational and social context in which they emerge [10,18,19].

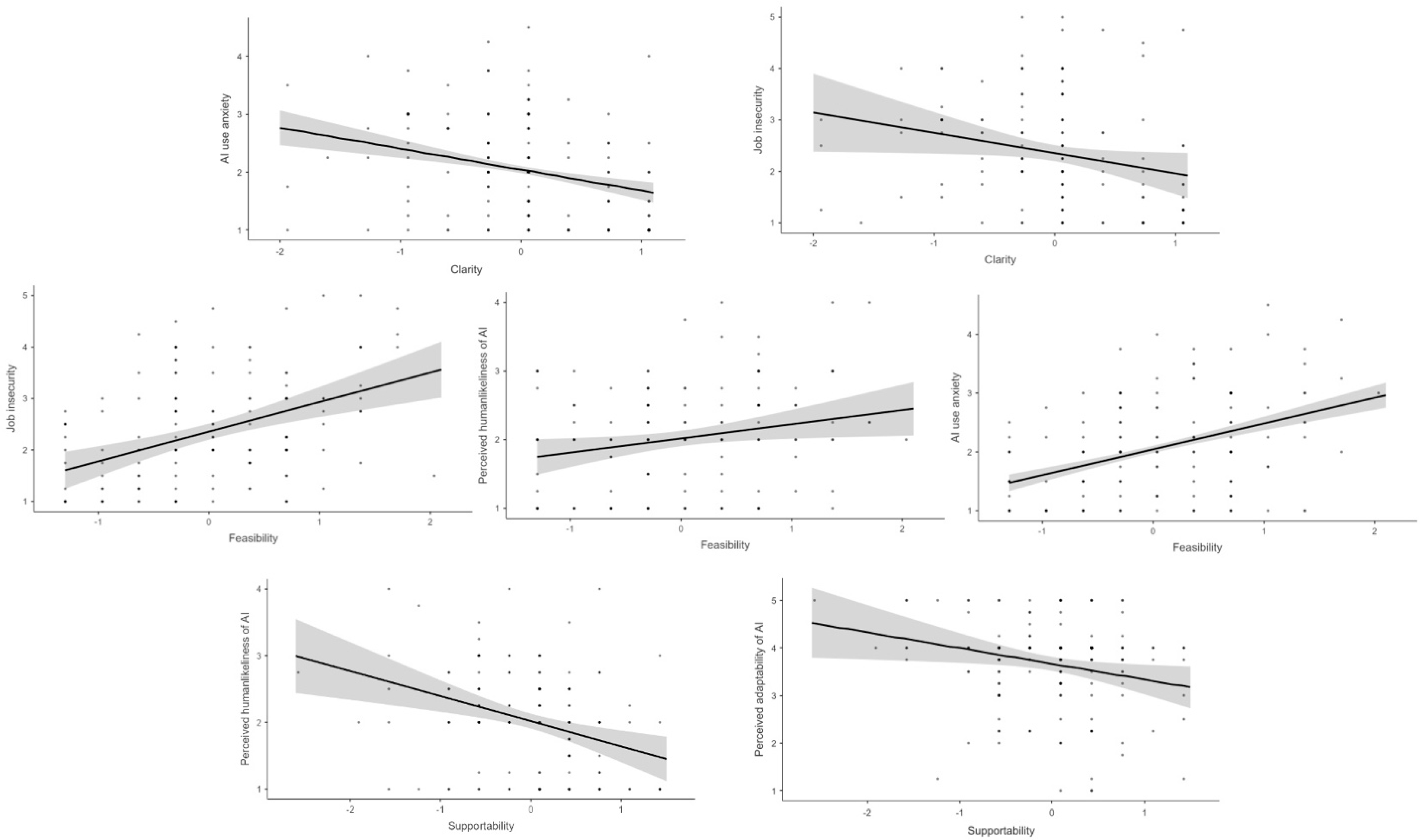

A first relevant contribution concerns perceived organizational ethical culture, which emerged as a decisive factor in shaping how workers perceive and accept AI. In particular, Clarity, the perception of organizational procedures as transparent and definite, was associated with lower levels of AI-related anxiety and job insecurity in workers. This suggests that transparent ethical frameworks help reduce the uncertainty and perceived threat of AI integration. Moreover, Supportability, understood as a cooperative and respectful work environment, was linked to lower perceptions of AI’s adaptability and learning capacity. This may indicate that, in an organizational climate seen as stable and supportive, workers rely less on technology to provide assistance. Consistently, Supportability was also negatively associated with the humanization of AI, suggesting that when interpersonal support is already considered abundant among workers, there is little need to attribute human-like supportive roles to AI. Conversely, perceptions of Feasibility, reflecting the possibility of acting in line with personal ethical values, were linked to heightened anxiety and job insecurity, as well as to stronger attributions of human-like qualities to AI. This finding suggests that in contexts perceived as ethically incoherent, workers not only experience higher levels of discomfort and insecurity but also tend to project human characteristics onto AI, thereby further complicating the human–technology relationship. This latter aspect is complex to interpret and may be related to the tendency of workers to seek compensatory mechanisms in the face of ethical incoherence; however, it requires further investigation. Previous research has widely investigated the ethical dimension of AI, primarily focusing on individual-level perceptions of AI-related ethics [2,23]. Such studies often highlight concerns such as the risk of discrimination, where AI may reproduce or amplify biases present in data or system design, and fears related to job displacement, including potential unemployment and limited alternatives for those affected. The main contribution of the present study is to shift the focus from the individual to the organizational level, examining the interplay between organizational ethical culture and the formation of positive or negative attitudes toward AI. This perspective allows for a better understanding of how ethical challenges, traditionally studied at the individual level, may be mitigated or amplified by organizational conditions.

A second contribution relates to organizational innovativeness. Its influence was more selective than general: among the dimensions explored, only Raising Projects, the active encouragement of workers’ ideas, was positively associated with perceptions of AI adaptability. This indicates that broad, abstract signals of organizational innovativeness are insufficient to shape workers’ perceptions, and it highlights the importance of active engagement in innovation as a mechanism through which organizational innovativeness is perceived and, in turn, translates into partially favorable attitudes toward the adoption of innovative technologies. Although this relationship warrants further investigation, the present study provides an initial insight into this area. Previous research on organizational innovativeness and AI adoption in the workplace has primarily focused on organizational readiness [24,25] or the level of organizational digital transformation [26], emphasizing not only technological infrastructure and data structures, but also the skills, expertise, and processes of human resources necessary to leverage emerging technologies and facilitate AI adoption. In contrast, our study examines organizational innovation orientation in a broader sense, beyond purely technological considerations. Preliminary results indicate a complex and not yet fully interpretable relationship between general innovation orientation and AI attitudes, underscoring the need for further research with larger samples to clarify these dynamics.

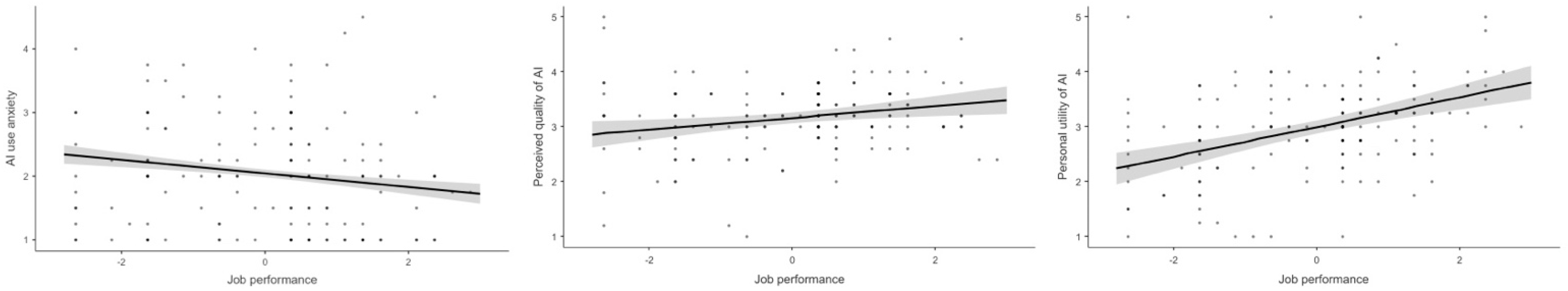

A third contribution concerns perceived job performance related to AI use. While previous studies have examined the link between attitudes and performance by treating attitudes toward AI as predictors of improved performance [27], the present study takes the opposite perspective. Specifically, it investigates whether perceived performance influences attitudes, in line with the HSM [15], which posits that prior experiences play a key role in shaping subsequent opinions and evaluations. The results are consistent with this account: workers who experienced enhanced performance through AI reported not only lower anxiety in the use of AI but also greater perceptions of quality, usefulness, and even human-like traits in the technology. This underscores the importance of direct successful experiences in promoting favorable attitudes and AI acceptance. Hence, when workers perceive AI as enhancing their effectiveness, they are more likely to regard the technology as valuable and useful, thereby increasing appreciation and potentially fostering trust while reducing resistance.

5. Conclusions

This study suggests that attitudes toward AI in organizations are socially situated and context-dependent, emerging from the interaction of organizational culture, innovation practices, and individual experiences rather than from technological features alone. By adopting a multidimensional perspective, the findings highlight both the enablers that foster trust and openness toward AI (e.g., organizational ethical clarity, supportive climates, and participatory innovation) and the barriers that heighten anxiety or resistance (e.g., organizational ethical incoherence with personal values or negative prior experiences with AI). Importantly, the contribution of this work is to provide a comprehensive account of workers’ perceptions, mapping the conditions under which AI is viewed as useful, trustworthy, or threatening. Organizational factors such as transparent procedures and participatory innovation initiatives represent actionable levers for shaping workers’ attitudes. At the same time, individual experiences with AI leave room for user experience design and service design [28,29,30] to foster positive interactions with the technology, complementing the technical features that ensure its effectiveness.

Theoretically, the study integrates organizational-level factors with individual perceptions, moving beyond traditional models such as TAM. Practically, it offers guidance for managers and policymakers: investing in transparent ethical procedures, participatory innovation, and genuinely performance-enhancing AI tools can foster smoother integration. Looking forward, these findings also speak to the future of work, suggesting that organizations cultivating ethical integrity, innovation, and meaningful human–AI collaboration will be better positioned to ensure both effectiveness and worker well-being [31,32].

Ultimately, this study enriches the growing literature on AI use in organizations by offering an exploratory yet integrative framework that captures the multifaceted nature of AI acceptance.

Limitations and Future Directions

Several limitations should be acknowledged. First, the exclusive reliance on self-report measures may introduce social desirability bias; complementary qualitative approaches, such as interviews or focus groups, could provide deeper insights into AI-related experiences. Second, although the study surveyed 356 workers, only 154 participants reported prior AI use at work. This proportion is consistent with broader adoption patterns [33]: globally, only 42% of enterprise businesses with more than 1000 employees actively use AI. Consequently, the representation of participants with prior AI experience in the working context aligns with observed trends in real-world organizational adoption.

Third, sample bias may have arisen from the voluntary nature of participation, potentially overrepresenting individuals with a higher interest in or comfort with AI. While company managers were asked to distribute the survey widely to reach a broad range of employees, individual motivation could not be fully controlled for. Future research could include measures of curiosity or prior interest in AI to better understand its influence on attitudes. Future studies should also examine in greater depth the descriptive differences within the sample, analyzing variations across sectors, nationalities, professional roles, and generational cohorts, as well as their impact on AI acceptance.

Fourth, two subscales of the CEV, Feasibility and Sanctionability, presented Cronbach’s alphas slightly below the conventional threshold of 0.70 (0.63 and 0.60, respectively). This is an important limitation of these two dimensions; however, the reliability analysis of the whole scale yielded high values of both Cronbach’s alpha and McDonald’s omega. Given the multidimensional nature of the scale and the fact that it has been validated in an Italian context, as in the present research, the authors decided to retain these sub-dimensions. Future research should explore these dimensions in different samples and contexts.

Additionally, distinguishing between different types of AI technologies and considering the nature of human–machine interactions could provide further understanding of how AI shapes work experiences and employee attitudes. Finally, longitudinal research designs would allow for the tracking of changes in attitudes over time and help uncover potential causal relationships between organizational practices, individual experiences, and the formation of attitudes toward AI.