1. Introduction

Time series forecasting has received attention for decades across a wide range of research disciplines, such as power and energy [1], economics [2], web traffic [3] and air pollution [4]. Most real-world data exhibit multivariate time series characteristics: seasonality, trends and correlations among the series. With the arrival of the digital era, the volume and complexity of multivariate time-series data have increased massively. The major challenges in real-world multivariate time series forecasting tasks are (1) long-term dependency, (2) inter-series correlations, (3) nonlinearity and shifted trends, (4) gaps or missing values in the time series and (5) the increasing complexity and dimensionality of time series data.

Despite the broad applications of statistical and ML-based methods, multivariate time-series forecasting tasks require professional expertise in data science and statistics, significant time and substantial manual involvement. VAR and VARIMA [5,6] are classical statistical models designed primarily for multivariate time series. A VAR model expresses each variable as a linear function of its own past values, the past values of the other variables being considered and a serially uncorrelated error term [7]. VARIMA extends VAR by adding moving average (MA) components and incorporating differencing, which allows it to capture both short- and long-term dynamics in the data. In contrast, ML-based and AutoML-based models can automatically capture nonlinear patterns without requiring time series assumptions such as stationarity. AutoML [8] is important because it aims to provide an end-to-end automated time series forecasting solution for non-professionals. The goal of AutoML is to take raw data as input and efficiently produce a high-quality model and its output with minimal human intervention [9,10,11,12]. In addition, these models can be retrained periodically to reflect changes in the data and maintain high-quality performance. An AutoML system has three attributes: full automation, adaptability and high quality [9,10,12]. For time series, AutoML approaches define a specialized search space of model components, including convolutional layers, recurrent layers and gating units [12]. The search space also covers core hyperparameters, activation functions and optimizers, and AutoML frameworks can systematically suggest the optimal configuration of these components. To increase the robustness of time-series models, the AutoML system assembles the most suitable models and identifies the best combination of hyperparameters found in the search space. In our proposed method, time series predictions are produced by recursive multi-step-ahead forecasting.

Our previous study [9] empirically showed that, within the AutoML framework, LSTM networks outperform classical statistical methods and other neural networks (NNs) in univariate time series forecasting, satisfying the AutoML attributes and achieving the highest forecasting accuracy at every forecast length. The extended study [10] proposed using an LSTM network within an AutoML framework to perform short- and long-term forecasting of correlated bivariate time series, even when the inter-series correlation is small. Its empirical evidence further suggests that forecasting both time series jointly yields lower prediction errors than modeling each series independently. Treating inter-series correlation as a contributing factor, we found that higher correlations between time series generally lead to better forecasting performance, and that even when inter-series correlations are low, jointly analyzing correlated time series can still improve forecasting accuracy. The present research develops an AutoML framework that lets users leverage multiple inter-correlated time series containing data anomalies, namely shifted trends and missing values across the series, to perform both short-term and long-term forecasting effectively. The proposed method employs GRU networks for multi-step-ahead forecasting while ensuring minimal human intervention and automatic adaptation to new data, achieved through pre-specified hyperparameters and a smaller selection of DL models. In the simulation, inter-series correlations are introduced using Lower–Upper (LU) decomposition [13]. We then simulate shifted trends and missing values to assess the proposed model's robustness. Furthermore, we emphasize the practical implications of our AutoML approach and its effectiveness in real-world scenarios. Real datasets are used to validate our findings.

Our research hypotheses are that the higher the inter-series correlation among multiple series, the better the forecasting performance, and that time-series data anomalies cause higher forecasting errors. The main contributions of our article are summarized as follows:

- (1) By meeting the AutoML attributes, we provide a novel approach that requires minimal preprocessing for multi-step-ahead forecasting of correlated multivariate time series, while addressing shifted trends and missing values in the data.

- (2) By fixing several hyperparameters of the GRU-RNN model, the proposed approach can significantly speed up the training process without compromising predictive performance.

- (3) We provide empirical results that investigate the effects of the components mentioned above and show that our proposed methods outperform traditional and other AutoML-based methods.

- (4) For real-world forecasting tasks in the economic, financial and energy sectors, the proposed AutoML approach can lower technical barriers and facilitate broader adoption across interdisciplinary fields.

The remaining sections of this study proceed as follows. Section 2 discusses related research from two perspectives: statistical time series methods and recent ML-based methods. Section 3 discusses the theoretical aspects of traditional forecasting models and AutoML-based models and describes their integration into the AutoML system. Section 4 introduces the simulated and real data and discusses the experimental designs. Section 5 presents a comparative analysis and the empirical findings. Section 6 discusses the proposed method, concludes this article and summarizes our research outcomes. Lastly, Section 7 discusses potential future research directions.

2. Related Works

2.1. Statistical Learning Approaches

The Autoregressive Integrated Moving Average (ARIMA) model and its seasonal variant, Seasonal ARIMA [5], are classical statistical models that predict univariate time series effectively and achieve forecasting accuracy competitive with some NN models [9]. However, the ARIMA model requires a stationary (differenced) time series to ensure valid future predictions [11]. Thus, decomposing a nonstationary time series to remove seasonality and trend patterns is a crucial preprocessing step before an ARIMA model is fitted. The same stationarity requirement applies to the other statistical learning models.

Beyond VAR and VARIMA, the Vector Autoregressive Fractionally Integrated Moving Average (VARFIMA) model can address the long-memory behavior of multivariate time series by allowing fractional differencing parameters [14]. However, the VARFIMA structure increases computational complexity, and statistical methods in general become prone to overfitting and high computational costs as multivariate time series involve higher-dimensional inputs and more complex patterns [15].
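To make the fractional differencing in VARFIMA concrete: for a non-integer order $d$, the operator $(1 - B)^d$, with backshift operator $B$, expands binomially into an infinite filter $\sum_{k \ge 0} w_k B^k$. The minimal NumPy sketch below computes the weights with a standard recursion and applies a truncated filter. The function names and the truncation length are illustrative assumptions and are not taken from [14].

```python
import numpy as np


def frac_diff_weights(d: float, n_weights: int) -> np.ndarray:
    """First n_weights coefficients w_k of (1 - B)^d = sum_k w_k B^k.

    Recursion: w_0 = 1 and w_k = -w_{k-1} * (d - k + 1) / k, which equals
    (-1)^k * binom(d, k) for real-valued d.
    """
    w = np.empty(n_weights)
    w[0] = 1.0
    for k in range(1, n_weights):
        w[k] = -w[k - 1] * (d - k + 1) / k
    return w


def frac_diff(x: np.ndarray, d: float, n_weights: int) -> np.ndarray:
    """Truncated fractional difference: out_t = sum_{k < n_weights} w_k * x_{t-k}.

    The first n_weights - 1 time points are dropped because their filter
    window would extend before the start of the series.
    """
    w = frac_diff_weights(d, n_weights)
    # 'valid' convolution evaluates exactly the sums that have a full window
    return np.convolve(np.asarray(x, dtype=float), w, mode="valid")
```

With $d = 1$ the weights reduce to $(1, -1, 0, \dots)$, which is ordinary first differencing. With $d = 0.5$ they are $(1, -0.5, -0.125, -0.0625, \dots)$, and this slow decay is how long memory is represented.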

2.2. Machine-Learning-Based Approaches

As machine learning (ML) thrives across fields and industries, time series research continues to make significant progress. All time series forecasting methods assume that an underlying relationship exists between past and future values. Artificial neural networks (ANNs) and deep learning (DL) algorithms can outperform traditional models by better estimating this underlying function when the patterns are complex [16]. ANNs and DL algorithms are also widely recognized for their flexibility, generalization and competitive accuracy.

The Multivariate Temporal Convolutional Network (M-TCN) [15] and the Multivariate Time Series Forecasting Framework via a Temporal Attention-based Encoder-decoder Model (MTSMFF) [17] are novel NN structures built on state-of-the-art DL techniques. Both aim to improve multivariate time series predictions and to learn inter-series correlations adaptively. However, both models require data preprocessing and some degree of human intervention.

3. Methods

This section briefly discusses the theoretical aspects of both traditional time series methods and AutoML-based time series forecasting methods. We focus on how these methods are integrated into the AutoML framework and examine how they address the challenges of multivariate time series.

3.1. Vector Autoregressive (VAR) and Vector Autoregressive Integrated Moving Average (VARIMA)

VAR is a macroeconometric framework developed to systematically model the dynamic interrelationships among multivariate time series variables [18]. A VAR model is an $m$-equation, $m$-variable linear model in which each variable is in turn explained by its own lagged values, plus the current and past values of the remaining $m - 1$ variables [7]. VARIMA extends VAR by adding an MA component and differencing. Owing to their ability to capture interdependencies and their modeling flexibility, the VAR and VARIMA models are widely applied in financial and economic tasks.

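A VAR($p$) model and a VARIMA($p, d, q$) model are defined, respectively, in Equations (1) and (2):

$$\mathbf{y}_t = \sum_{i=1}^{p} \Phi_i \mathbf{y}_{t-i} + \boldsymbol{\varepsilon}_t \tag{1}$$

$$\nabla^d \mathbf{y}_t = \sum_{i=1}^{p} \Phi_i \nabla^d \mathbf{y}_{t-i} + \boldsymbol{\varepsilon}_t + \sum_{j=1}^{q} \Theta_j \boldsymbol{\varepsilon}_{t-j} \tag{2}$$

where $\mathbf{y}_t$ is the $m$-dimensional vector of series at time $t$, the order $p$ is the number of lagged values, $q$ is the order of the MA component, $\nabla^d = (1 - B)^d$ is the differencing operator of order $d$ with backshift operator $B$ ($B\mathbf{y}_t = \mathbf{y}_{t-1}$), $i = 1, \dots, p$, $j = 1, \dots, q$, each $\Phi_i$ and $\Theta_j$ is an $m \times m$ parameter matrix, and $\boldsymbol{\varepsilon}_t$ is a sequence of white noise with mean $\mathbf{0}$ and covariance matrix $\Sigma$.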

Before VAR and VARIMA are fitted, the data must be stationary to avoid spurious prediction results. A stochastic process $\{\mathbf{y}_t\}$ is stationary if its statistical properties do not depend on time. Seasonality and trends, however, are significant and common patterns in nonstationary time series. To address nonstationarity, deseasonalization and detrending (DSDT) is a crucial data preprocessing step. The aim of time series decomposition is to split a nonstationary time series $\mathbf{y}_t$ into nonstationary effects and a remaining component [19]. Because VAR and VARIMA rely on linearity assumptions, they are limited in their ability to capture nonlinear dependencies. In addition, parameter estimation can be difficult, labor intensive and time consuming, particularly for high-dimensional data.

Training Details

Time series stationarity is verified with Augmented Dickey–Fuller (ADF) tests [20] at a significance level $\alpha$. To stabilize the systems, DSDT is implemented as a preprocessing step for the VAR($p$) and VARIMA($p, d, q$) models by differencing the series until stationarity is reached.
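The ADF check and the differencing loop can be sketched in NumPy as follows. This is a simplified stand-in and not the procedure used in the study. It fixes one augmentation lag and compares the t-statistic with the asymptotic 5% critical value (about −2.86 for a regression with a constant and no trend), rather than computing a p-value at level $\alpha$. In practice, `statsmodels.tsa.stattools.adfuller` returns MacKinnon p-values and can select the lag length automatically. The function names are hypothetical.

```python
import numpy as np

# Asymptotic 5% critical value of the ADF t-statistic when the test
# regression has a constant but no trend (MacKinnon). This sketch compares
# against it instead of computing a p-value.
ADF_CRIT_5PCT = -2.86


def adf_stat(y: np.ndarray, n_lags: int = 1) -> float:
    """ADF t-statistic for gamma in
    dy_t = a + gamma * y_{t-1} + sum_{i=1..n_lags} b_i * dy_{t-i} + e_t.
    """
    y = np.asarray(y, dtype=float)
    dy = np.diff(y)
    target = dy[n_lags:]                          # dy_t
    cols = [np.ones_like(target), y[n_lags:-1]]   # constant and y_{t-1}
    for i in range(1, n_lags + 1):
        cols.append(dy[n_lags - i:-i])            # lagged differences dy_{t-i}
    X = np.column_stack(cols)
    beta, *_ = np.linalg.lstsq(X, target, rcond=None)
    resid = target - X @ beta
    sigma2 = resid @ resid / (len(target) - X.shape[1])
    se_gamma = np.sqrt(sigma2 * np.linalg.inv(X.T @ X)[1, 1])
    return float(beta[1] / se_gamma)


def difference_until_stationary(y, max_d: int = 2, n_lags: int = 1):
    """Difference y until the ADF test rejects a unit root at the 5% level.

    Returns (d, differenced_series). If no d <= max_d passes, max_d and the
    max_d-times differenced series are returned so the caller can inspect them.
    """
    x = np.asarray(y, dtype=float)
    for d in range(max_d + 1):
        if adf_stat(x, n_lags) < ADF_CRIT_5PCT:
            return d, x
        if d < max_d:
            x = np.diff(x)
    return max_d, x
```

The loop returns the smallest $d$ that passes the check. In the VARIMA setting, this corresponds to the differencing order before the $p$ and $q$ orders are selected.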

The Akaike Information Criterion (AIC) and Bayesian Information Criterion (BIC) in Equations (3) and (4) are used to select the optimal combination of orders $(p, d, q)$:

$$\mathrm{AIC} = 2k - 2\ln\hat{L} \tag{3}$$

$$\mathrm{BIC} = k\ln n - 2\ln\hat{L} \tag{4}$$

where $k$ is the number of parameters, $n$ is the number of observations and $\ln\hat{L}$ is the maximized log-likelihood of the model. Both criteria penalize the likelihood as $k$ increases. The BIC penalty per parameter ($\ln n$) exceeds that of the AIC (2) once $n \ge 8$. A grid search over the model orders is conducted until both AIC and BIC reach reasonably low values, with orders of up to 12 considered because the data are monthly. Owing to their linear structure, VAR and VARIMA are computationally more efficient than DL-based models.
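Below is a minimal NumPy sketch of the AIC/BIC grid search for the VAR part of the models. It assumes OLS estimation with an intercept (for Gaussian errors, OLS gives the maximum-likelihood coefficients). It also fits every candidate lag on a common estimation sample so that the log-likelihoods in Equations (3) and (4) are comparable. The differencing order $d$ and the MA order $q$ of VARIMA are not searched here, and the helper names are hypothetical.

```python
import numpy as np


def var_fit_loglik(y: np.ndarray, p: int, max_p: int):
    """OLS fit of a VAR(p) with intercept on the common sample t = max_p..T-1.

    Using the same sample for every p keeps the log-likelihoods comparable.
    Returns (log-likelihood, number of coefficients k, sample size n).
    """
    T, m = y.shape
    target = y[max_p:]                                        # y_t
    lags = [y[max_p - i:T - i] for i in range(1, p + 1)]      # y_{t-i}
    X = np.column_stack([np.ones(T - max_p)] + lags)
    B, *_ = np.linalg.lstsq(X, target, rcond=None)
    resid = target - X @ B
    n = target.shape[0]
    sigma_hat = resid.T @ resid / n                           # ML estimate of Sigma
    _, logdet = np.linalg.slogdet(sigma_hat)
    loglik = -0.5 * n * (m * np.log(2.0 * np.pi) + logdet + m)
    # k counts intercepts and lag coefficients; the m(m+1)/2 covariance
    # parameters are the same for every p and do not affect the ranking.
    return loglik, m * (m * p + 1), n


def select_var_order(y: np.ndarray, max_p: int = 12):
    """Grid search over p = 1..max_p; returns (p chosen by AIC, p chosen by BIC)."""
    scores = {}
    for p in range(1, max_p + 1):
        ll, k, n = var_fit_loglik(y, p, max_p)
        scores[p] = (2 * k - 2 * ll,            # AIC, Equation (3)
                     k * np.log(n) - 2 * ll)    # BIC, Equation (4)
    p_aic = min(scores, key=lambda p: scores[p][0])
    p_bic = min(scores, key=lambda p: scores[p][1])
    return p_aic, p_bic
```

Each equation of a VAR($p$) has $mp + 1$ coefficients. With short monthly samples, the usable upper bound on $p$ is therefore limited by the number of observations as well as by the seasonal period.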

3.2. Long Short-Term Memory (LSTM) Within the AutoML Framework

Recurrent neural networks (RNNs) are well suited to modeling sequential and temporal data, because their recurrent structure maintains a memory of past inputs and captures dependencies in the temporal data. In AutoML-based forecasting methods, two effective RNN variants, LSTM and GRU, are described and utilized for multivariate time series prediction [21,22,23,24,25].

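Given an input sequence $\mathbf{x} = (\mathbf{x}_1, \dots, \mathbf{x}_T)$, a standard RNN computes the hidden state $\mathbf{h}_t$, where $t = 1, \dots, T$:

$$\mathbf{h}_t = \phi\left(W\mathbf{x}_t + U\mathbf{h}_{t-1} + \mathbf{b}\right) \tag{5}$$

where $\phi$ is an element-wise activation function and $W$, $U$ and $\mathbf{b}$ are the weight matrices and bias vector. In the LSTM, an expression of the form of Equation (5) serves as an intermediate (candidate) memory cell, denoted $\tilde{\mathbf{c}}_t$. Equations (6)–(8) then define this candidate and add it, through the gates, to the previous internal memory cell $\mathbf{c}_{t-1}$ to produce the current memory cell $\mathbf{c}_t$:

$$\tilde{\mathbf{c}}_t = \tanh\left(W_c\mathbf{x}_t + U_c\mathbf{h}_{t-1} + \mathbf{b}_c\right) \tag{6}$$

$$\mathbf{c}_t = \mathbf{f}_t \odot \mathbf{c}_{t-1} + \mathbf{i}_t \odot \tilde{\mathbf{c}}_t \tag{7}$$

$$\mathbf{h}_t = \mathbf{o}_t \odot \tanh(\mathbf{c}_t) \tag{8}$$

where $\mathbf{i}_t$, $\mathbf{f}_t$ and $\mathbf{o}_t$ represent the input, forget and output activation vectors, respectively, and $\odot$ denotes element-wise multiplication. Their mathematical expressions are given in Equations (9)–(11):

$$\mathbf{i}_t = \sigma\left(W_i\mathbf{x}_t + U_i\mathbf{h}_{t-1} + \mathbf{b}_i\right) \tag{9}$$

$$\mathbf{f}_t = \sigma\left(W_f\mathbf{x}_t + U_f\mathbf{h}_{t-1} + \mathbf{b}_f\right) \tag{10}$$

$$\mathbf{o}_t = \sigma\left(W_o\mathbf{x}_t + U_o\mathbf{h}_{t-1} + \mathbf{b}_o\right) \tag{11}$$

where $\sigma$ is the sigmoid function. Because the forget gate can keep $\mathbf{c}_t$ close to $\mathbf{c}_{t-1}$ over many steps, LSTM networks can effectively capture seasonality, trend and aperiodic patterns at an early stage and carry them across long distances.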
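To make Equations (6)–(11) concrete, the NumPy code below writes out one LSTM time step. It is an illustrative sketch, not Keras's implementation; the LSTM() layer computes the same kind of recurrence with its own weight layout and initializers. The parameter dictionary layout, the initialization scale and the random 12-month window are assumptions.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM time step following Equations (6)-(11).

    params maps 'i', 'f', 'o' (gates) and 'c' (candidate cell) to a tuple
    (W, U, b) with shapes (hidden, n_feature), (hidden, hidden), (hidden,).
    """
    def affine(name):
        W, U, b = params[name]
        return W @ x_t + U @ h_prev + b

    i_t = sigmoid(affine("i"))           # input gate, Equation (9)
    f_t = sigmoid(affine("f"))           # forget gate, Equation (10)
    o_t = sigmoid(affine("o"))           # output gate, Equation (11)
    c_tilde = np.tanh(affine("c"))       # candidate memory cell, Equation (6)
    c_t = f_t * c_prev + i_t * c_tilde   # Equation (7)
    h_t = o_t * np.tanh(c_t)             # Equation (8)
    return h_t, c_t


def init_params(n_feature, hidden, rng):
    """Small random weights and zero biases for all four blocks."""
    return {name: (rng.normal(scale=0.1, size=(hidden, n_feature)),
                   rng.normal(scale=0.1, size=(hidden, hidden)),
                   np.zeros(hidden))
            for name in ("i", "f", "o", "c")}


# Run the cell over one hypothetical 12-month window of 3 series.
rng = np.random.default_rng(888)
params = init_params(n_feature=3, hidden=8, rng=rng)
h, c = np.zeros(8), np.zeros(8)
for x_t in rng.normal(size=(12, 3)):
    h, c = lstm_step(x_t, h, c, params)
```

A large positive forget-gate bias combined with a large negative input-gate bias leaves $\mathbf{c}_t \approx \mathbf{c}_{t-1}$. This is the mechanism that lets the cell carry information across many time steps.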

Integration of AutoML-LSTM and Training Details

Within an AutoML system, the input, forget and output gates of each LSTM memory cell process the raw input data. As the parameters are updated, the gates selectively discard irrelevant information and retain useful memory. Compared with statistical learning methods, AutoML approaches require neither stationarity conditions nor hand-crafted parameters.

Both LSTM and GRU networks are implemented with the open-source libraries Keras and TensorFlow. From an AutoML perspective, normalizing the data is optional, but it is highly recommended because it reduces computational cost. The normalized training set is then passed to TimeseriesGenerator() to transform the sequential data into batches of input–output pairs. TimeseriesGenerator() applies the sliding-window technique and controls the number of lagged values (n_input) and the batch size. n_input is set to 12 because the data are monthly, and n_feature = 3 matches the number of variables in the data.
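The scaling and windowing steps can be sketched without TensorFlow. The NumPy code below mimics how TimeseriesGenerator() pairs each window of n_input = 12 consecutive months (all n_feature = 3 series) with the following month as the target. Batching is omitted. Min-max scaling is an assumed choice of normalization; it is fitted on the training set only, so no test information leaks into training. The function names and the toy data are hypothetical.

```python
import numpy as np


def minmax_fit(train: np.ndarray):
    """Per-series minimum and maximum, computed on the training set only."""
    return train.min(axis=0), train.max(axis=0)


def minmax_transform(x: np.ndarray, lo: np.ndarray, hi: np.ndarray) -> np.ndarray:
    """Scale each column to [0, 1] using the training-set range."""
    return (x - lo) / (hi - lo)


def sliding_windows(data: np.ndarray, n_input: int):
    """Pairs in the style of TimeseriesGenerator(data, data, length=n_input).

    Sample j uses rows j .. j + n_input - 1 as input and row j + n_input as
    target, so T rows give T - n_input samples of shape (n_input, n_feature).
    """
    T = data.shape[0]
    X = np.stack([data[j:j + n_input] for j in range(T - n_input)])
    Y = data[n_input:]
    return X, Y


# Hypothetical 48-month training set with n_feature = 3 series.
train = np.arange(48 * 3, dtype=float).reshape(48, 3)
lo, hi = minmax_fit(train)
X, Y = sliding_windows(minmax_transform(train, lo, hi), n_input=12)
```

With 48 training months and n_input = 12, this yields 36 input–output pairs. Each input has shape (12, 3), which is the (timesteps, features) layout that Keras recurrent layers expect.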

To facilitate the AutoML system, Table 1 specifies the fixed and core hyperparameters, and Table 2 specifies the hyperparameters to be searched or tuned according to the nature of the data. The proposed AutoML methods aim to reduce computational intensity and runtime by fixing some hyperparameters. Fixing and testing these hyperparameters helps users arrive at a valid, well-performing combination of NN components. As shown empirically in [11], the rectified linear unit (ReLU) activation function combined with the Adam optimizer achieved the lowest forecasting errors in time series predictions. In the Keras interface, LSTM(), Dense(), MaxPooling1D() and Dropout() are the available layer choices. LSTM() captures patterns arising from autocorrelation, lagged relationships and interdependencies between variables. Dense() computes a linear combination of its inputs, optionally followed by an activation function. MaxPooling1D() reduces computational complexity by decreasing dimensionality. Dropout() mitigates overfitting by randomly dropping units during training. The output layer is Dense(3), matching n_feature. For high-dimensional multivariate time series, additional Dense layers can increase the network's capacity to capture patterns.
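A plain grid search illustrates the split between fixed and searched hyperparameters. Every searched option multiplies the number of networks to train, so moving a hyperparameter into the fixed set removes a factor from the runtime. The toy space below trains 3 × 2 × 2 = 12 configurations. Also searching, say, three activations and three optimizers would raise that to 108. All names and values are hypothetical placeholders for Tables 1–3, and `evaluate` stands in for training a Keras model and returning its validation error.

```python
import itertools

# Hypothetical stand-ins: Table 1 (fixed) and Table 2/Table 3 (searched)
# list the values actually used; these are placeholders for illustration.
FIXED = {"activation": "relu", "optimizer": "adam", "n_input": 12, "n_feature": 3}
SEARCH_SPACE = {"units": [32, 64, 128], "dropout": [0.0, 0.2], "epochs": [100, 200]}


def grid_search(evaluate, fixed=FIXED, space=SEARCH_SPACE):
    """Evaluate every combination of `space` with the `fixed` values held constant.

    `evaluate(config) -> float` is a validation error (lower is better).
    Returns (best_config, best_error, number_of_configurations_tried).
    """
    names = sorted(space)
    best_cfg, best_err, n_tried = None, float("inf"), 0
    for values in itertools.product(*(space[name] for name in names)):
        cfg = {**fixed, **dict(zip(names, values))}
        err = evaluate(cfg)
        n_tried += 1
        if err < best_err:
            best_cfg, best_err = cfg, err
    return best_cfg, best_err, n_tried


def toy_error(cfg):
    """Toy stand-in for training a network with `cfg` and returning its RMSE."""
    return abs(cfg["units"] - 64) + cfg["dropout"] + cfg["epochs"] / 1000


best_cfg, best_err, n_tried = grid_search(toy_error)
```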

3.3. Gated Recurrent Units (GRUs) Within the AutoML Framework

Like the LSTM network, and within the same AutoML setting, the GRU has shown promising forecasting performance in various ML-based tasks. GRU networks are simpler and more computationally efficient because they have fewer gates (an update gate and a reset gate). The GRU has gating units that modulate the flow of information inside the unit. Although it has no separate memory cells, it retains the effect of the LSTM [22,26].

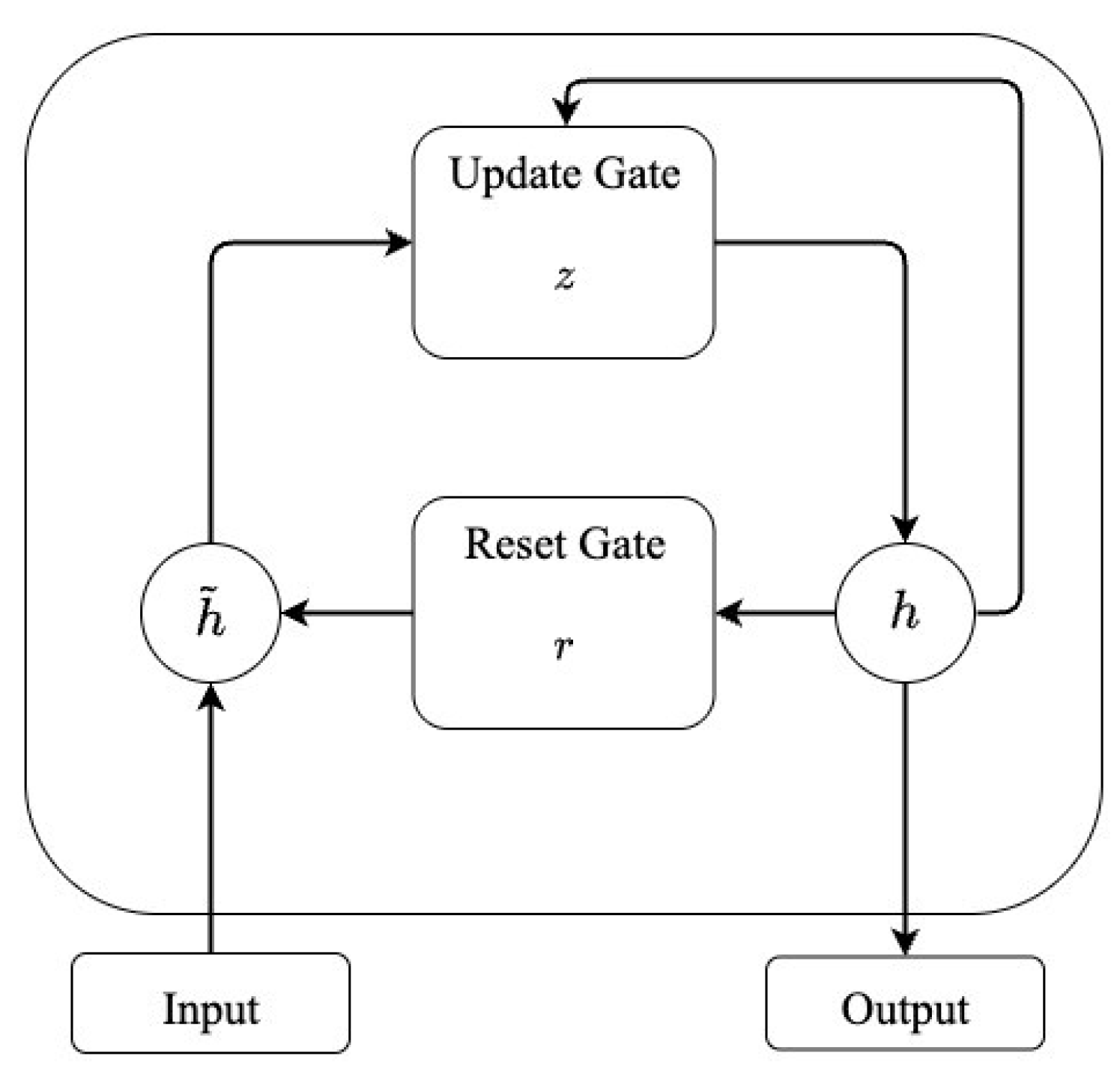

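Figure 1 presents the functioning of the GRU's gates; $r$ and $z$ are the reset and update gates, respectively.

At time $t$, the activation $\mathbf{h}_t$ is a linear interpolation between the previous hidden state $\mathbf{h}_{t-1}$ and the candidate activation $\tilde{\mathbf{h}}_t$. The candidate activation $\tilde{\mathbf{h}}_t$ is computed in a similar way to the standard RNN in Equation (5). Mathematically, the GRU procedure is expressed in Equations (12) and (13):

$$\mathbf{h}_t = (1 - \mathbf{z}_t) \odot \mathbf{h}_{t-1} + \mathbf{z}_t \odot \tilde{\mathbf{h}}_t \tag{12}$$

$$\tilde{\mathbf{h}}_t = \tanh\left(W\mathbf{x}_t + U(\mathbf{r}_t \odot \mathbf{h}_{t-1})\right) \tag{13}$$

where the update and reset gates are given by Equations (14) and (15), respectively:

$$\mathbf{z}_t = \sigma\left(W_z\mathbf{x}_t + U_z\mathbf{h}_{t-1}\right) \tag{14}$$

$$\mathbf{r}_t = \sigma\left(W_r\mathbf{x}_t + U_r\mathbf{h}_{t-1}\right) \tag{15}$$

where $\sigma$ is the sigmoid function. The reset and update gates are computed from the previous hidden state $\mathbf{h}_{t-1}$ and the current input $\mathbf{x}_t$ at time $t$. In time series tasks, GRUs within the AutoML framework achieve lower computational costs while still capturing significant patterns effectively.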

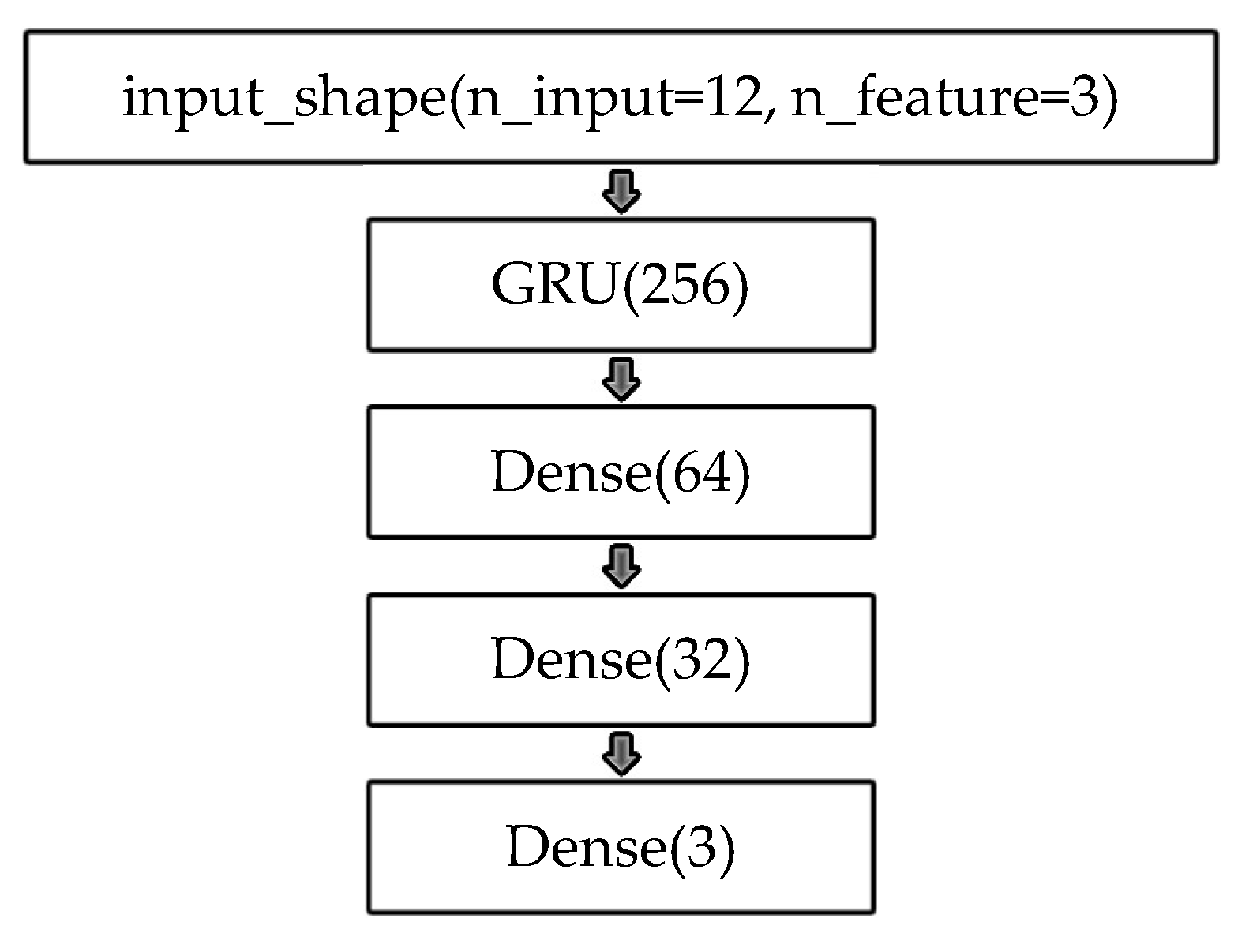

Integration of AutoML-GRU and Training Details

The proposed AutoML-based methods can automatically and implicitly perform feature engineering inside their network structures. AutoML-GRU is modeled in the same way as AutoML-LSTM, using the Keras GRU() layer to capture the temporal dependencies of sequential data. Table 1 and Table 3 list the hyperparameters used and tested to construct the GRU model.

4. Experiments

Adopting a novel research design and extending the simulation of a previous study [10], this section first presents our simulation of correlated multivariate time series with missing values and shifted trends. It then introduces the real data and describes the experimental settings of the traditional and AutoML methods.

4.1. Simulation of Correlated Multivariate Time Series with Missing Values and Shifted Trends

An outline of our simulation is provided as follows.

- (1) Generate three independent time series using the mixed decomposition model.

- (2) Introduce shifted trends into one selected simulated time series.

- (3) Specify a correlation matrix with identical off-diagonal elements and perform LU decomposition on it.

- (4) Using the resulting lower triangular matrix, transform the uncorrelated time series into correlated multivariate time series.

- (5) Randomly generate missing values in one of the simulated series, with 15%, 10% and 5% of the training set missing.

4.1.1. Independent Time Series

Our research focuses on monthly time series, as such data are commonly used in economics and business. Both the simulated and real data are structured in this form because monthly data offer adequate granularity and make data patterns easier to detect. Adopting one of the research designs of [2], we use the mixed decomposition model to generate independent time series. Because it combines additive and multiplicative decompositions, the mixed decomposition model introduces a mean and variance that are nonstationary over time [19]. To capture seasonality and trend effects, the three models are given in Equations (16)–(18). At time $t$, the linear trend components of the three series are given by Equations (19)–(21), respectively. The seasonality indexes of the three series are listed in Table 4, and the error terms are Gaussian noise over time. Gaussian noise is included in the simulated time series because it can reduce overfitting during training while enhancing the model's robustness. It also makes the simulated data resemble real data more closely. In this step, we produce a 60-month multivariate time series whose component series are uncorrelated.
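Below is a minimal sketch of step (1). It assumes the mixed form $X_t = T_t \times S_t + \varepsilon_t$, that is, a multiplicative trend-seasonal part plus additive Gaussian noise, which is one common reading of a mixed decomposition model. Equations (16)–(21) and the Table 4 indexes are not reproduced. The intercepts, slopes, seasonal indexes and noise level below are therefore hypothetical.

```python
import numpy as np

# Hypothetical multiplicative seasonal indexes (they average to 1); the
# paper's own indexes are those in Table 4.
SEASONAL_INDEX = np.array([0.90, 0.85, 0.95, 1.00, 1.05, 1.10,
                           1.15, 1.10, 1.00, 0.95, 0.95, 1.00])


def simulate_mixed(intercept, slope, seasonal_index, noise_sd, rng, months=60):
    """One monthly series from the assumed mixed form X_t = T_t * S_t + eps_t.

    T_t = intercept + slope * t is a linear trend, S_t is the seasonal index
    of month t, and eps_t ~ N(0, noise_sd^2) is additive Gaussian noise.
    Because S_t multiplies T_t, the seasonal swing grows with the trend.
    """
    t = np.arange(1, months + 1)
    trend = intercept + slope * t
    season = seasonal_index[(t - 1) % 12]
    return trend * season + rng.normal(0.0, noise_sd, size=months)


# Three independent 60-month series with hypothetical trend parameters.
rng = np.random.default_rng(888)
X = np.column_stack([
    simulate_mixed(100.0, 0.5, SEASONAL_INDEX, 2.0, rng),
    simulate_mixed(80.0, 0.8, SEASONAL_INDEX, 2.0, rng),
    simulate_mixed(60.0, 1.0, SEASONAL_INDEX, 2.0, rng),
])
```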

4.1.2. Shiftiness of Trends

Shifted trends are common in financial and business data. A notable example is the 2008 financial crisis, which caused abrupt structural changes in global markets. Thus, this factor is considered in our simulation.

Series 3 is selected to carry the shifted trend among the three series. Before the shift, its linear trend pattern remains the same as in Equation (21); after the shift, the trend changes as given in Equation (22).
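Step (2) can be sketched as a continuous piecewise-linear trend whose slope changes at a shift month. This is a hypothetical form standing in for Equation (22), which may differ, for instance by also including a level jump. Placing the shift after month 48, the end of the training period, puts it in the unknown test set, as described for series 3 in Section 5. The shift month and slopes below are illustrative.

```python
import numpy as np


def shifted_trend(t, intercept, slope_before, slope_after, t_shift):
    """Continuous piecewise-linear trend whose slope changes at t_shift.

    For t <= t_shift it equals intercept + slope_before * t; afterwards the
    slope becomes slope_after, with no jump in level at t_shift.
    """
    t = np.asarray(t, dtype=float)
    before = intercept + slope_before * t
    after = intercept + slope_before * t_shift + slope_after * (t - t_shift)
    return np.where(t <= t_shift, before, after)


# Hypothetical: an increasing trend that turns downward after month 50.
months = np.arange(1, 61)
trend3 = shifted_trend(months, 60.0, 1.0, -0.5, t_shift=50)
```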

4.1.3. Lower–Upper Decomposition (LU Decomposition)

LU decomposition [13] is a useful matrix factorization technique commonly applied in numerical analysis and optimization. Our previous study [10] used the Cholesky decomposition [27], which requires the matrix to be symmetric and positive definite. LU decomposition does not have this requirement. With row pivoting, it exists for any square matrix, and pivoting also improves numerical stability.

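Let $A$ be a square, full-rank matrix. $A$ is decomposed into a lower triangular matrix $L$ with unit diagonal entries ($l_{ii} = 1$) and an upper triangular matrix $U$, as in Equation (23):

$$A = LU \tag{23}$$

A valid (positive-definite) correlation matrix has nonzero leading principal minors, so its decomposition in Equations (23) and (24) is unique. LU decomposition is applied to a square correlation matrix whose off-diagonal entries equal a designated Pearson correlation coefficient $\rho$, chosen according to the simulation design. $\rho$ ranges from −1 to 1; for three series, positive definiteness requires $-1/2 < \rho < 1$. The correlation matrix is decomposed into a lower and an upper triangular matrix as in Equation (24):

$$\begin{pmatrix} 1 & \rho & \rho \\ \rho & 1 & \rho \\ \rho & \rho & 1 \end{pmatrix} = \begin{pmatrix} 1 & 0 & 0 \\ \rho & 1 & 0 \\ \rho & \frac{\rho}{1+\rho} & 1 \end{pmatrix} \begin{pmatrix} 1 & \rho & \rho \\ 0 & 1-\rho^2 & \rho(1-\rho) \\ 0 & 0 & \frac{(1-\rho)(1+2\rho)}{1+\rho} \end{pmatrix} \tag{24}$$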

The off-diagonal entries of the correlation matrix are set equal, $\rho_{12} = \rho_{13} = \rho_{23} = \rho$, to demonstrate that higher correlations can lead to better forecasting performance. This simplification facilitates comparison by avoiding the complexity introduced by mixed correlations. However, it is not a limitation of the proposed approach, which remains applicable to cases with unequal correlations, as evidenced by our real data analysis. In the next step, the simulation incorporates the specified correlation structure into the multivariate time series.
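Equation (24) can be checked numerically with a small Doolittle LU routine (unit-diagonal $L$, no pivoting), which is sufficient for a positive-definite correlation matrix. This NumPy sketch is illustrative. A library routine such as `scipy.linalg.lu` would normally be preferred; it returns $P$, $L$ and $U$ with $A = PLU$ using partial pivoting. The function names are hypothetical.

```python
import numpy as np


def lu_doolittle(A):
    """LU factorization A = L U without pivoting (Doolittle: unit-diagonal L).

    Valid when every leading principal minor of A is nonzero, which holds
    for a positive-definite correlation matrix.
    """
    A = np.asarray(A, dtype=float)
    n = A.shape[0]
    L, U = np.eye(n), np.zeros((n, n))
    for i in range(n):
        # Row i of U
        for j in range(i, n):
            U[i, j] = A[i, j] - L[i, :i] @ U[:i, j]
        # Column i of L below the diagonal
        for j in range(i + 1, n):
            L[j, i] = (A[j, i] - L[j, :i] @ U[:i, i]) / U[i, i]
    return L, U


def equicorrelation(rho, n=3):
    """Correlation matrix with ones on the diagonal and rho elsewhere."""
    return np.full((n, n), rho) + (1.0 - rho) * np.eye(n)


R = equicorrelation(0.5)
L, U = lu_doolittle(R)
```

For $\rho = 0.5$ the factors match the closed form in Equation (24). The multiplier $l_{32}$ is $\rho/(1+\rho) = 1/3$, and $u_{33}$ is $(1-\rho)(1+2\rho)/(1+\rho) = 2/3$.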

4.1.4. Correlated Multivariate Time Series

The uncorrelated multivariate time series generated in Section 4.1.1 and Section 4.1.2 is denoted $\mathbf{X}_t = (X_{1,t}, X_{2,t}, X_{3,t})^\top$. Given a correlation matrix with designated $\rho$, the decomposition proceeds as in Equation (24), and $\mathbf{X}_t$ is transformed by multiplying it by the lower triangular matrix $L$:

$$\mathbf{Y}_t = L\,\mathbf{X}_t \tag{25}$$

where $\mathbf{Y}_t$ is the correlated multivariate time series used for modeling. The designated $\rho$ thereby becomes a component of $\mathbf{Y}_t$. Note that, because the first row of $L$ is $(1, 0, 0)$, the first series of $\mathbf{Y}_t$ remains identical to that of $\mathbf{X}_t$ after the transformation in Equation (25).

4.1.5. Missing Values

Missing data remain one of the major concerns across time series studies, especially in clinical [28] and environmental fields [29], so they are another common factor that should be incorporated into our study and simulation. In time series analysis, missing data and incomplete observations can easily disrupt temporal structures such as seasonality, trend and autocorrelation, thereby degrading the reliability of forecasts. Observations are removed from a second series, series 2 of the correlated multivariate time series. Specifically, 15%, 10% and 5% of the values in the training set are randomly selected and removed, with their positions drawn from a uniform distribution.
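Step (5) can be sketched as follows. Positions in the training period of one series are drawn uniformly without replacement and set to NaN, which leaves the 12 test months intact. Rounding the count to the nearest integer (7, 5 and 2 values for 15%, 10% and 5% of 48 months) is our assumption, as are the function name and the seed.

```python
import numpy as np


def inject_missing(series, n_train, frac, rng):
    """Return a copy of `series` with round(frac * n_train) training values set to NaN.

    Positions are drawn uniformly without replacement from the training
    period only, so the test set stays complete.
    """
    out = np.asarray(series, dtype=float).copy()
    n_missing = int(round(frac * n_train))
    idx = rng.choice(n_train, size=n_missing, replace=False)
    out[idx] = np.nan
    return out
```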

4.1.6. Simulated Data

Another study [

10] aims to study the relationship between a wider range of correlations, such as 0.1 to 0.99, and bivariate time series forecasting. It concludes that, as correlation increases in simulated data, the overall forecasting accuracy increases. In our study,

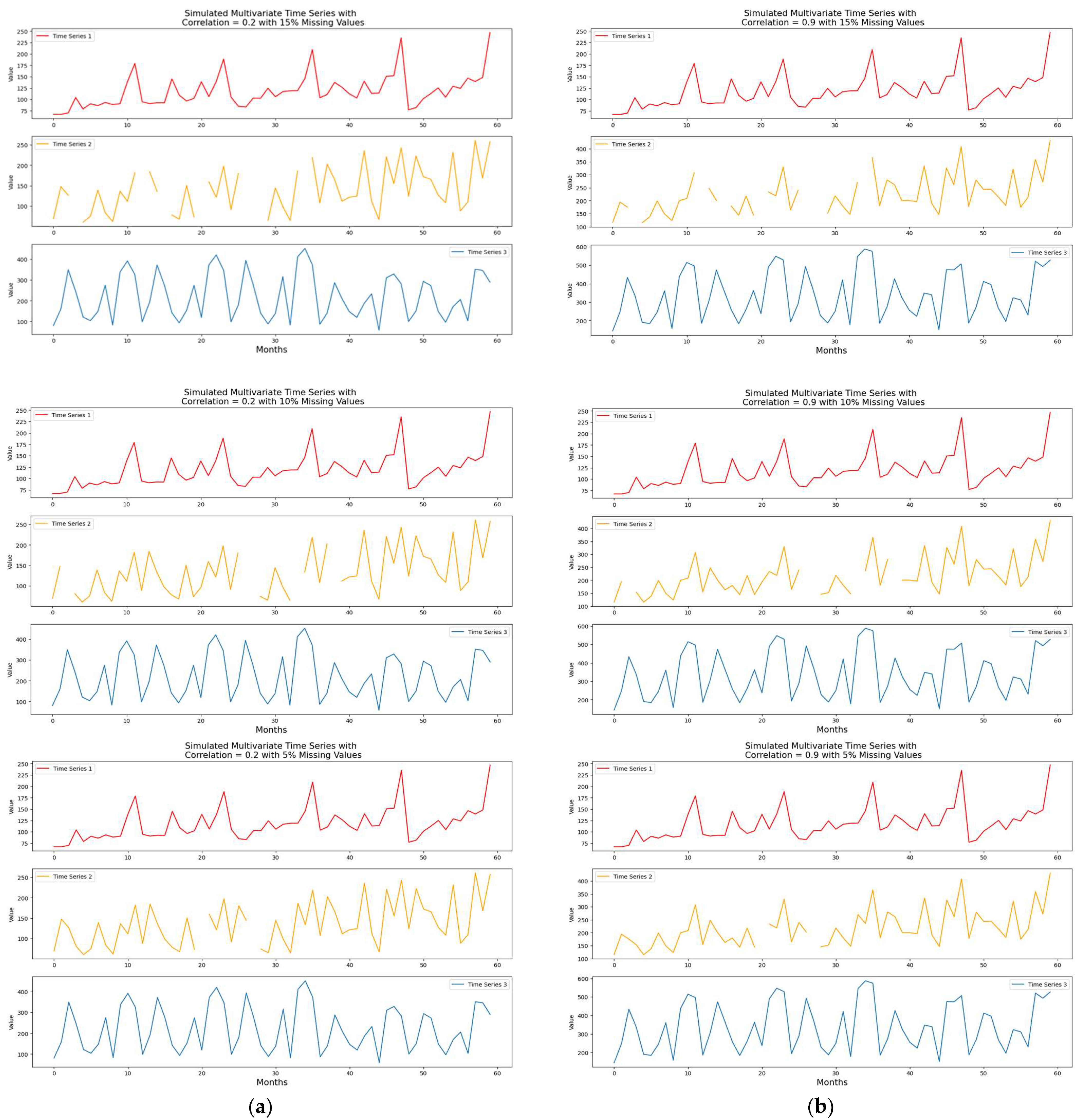

are selected to be the designated correlation coefficients computed as in Equations (24) and (25). Two correlated multivariate time series datasets with shifted trends are obtained. Moreover, 15%, 10% and 5% of missing values are considered. Therefore, six simulated time-series datasets are analyzed and modeled in total, and the plots of simulated time series data are presented in

Figure 2.

As the correlation increases, the patterns of the time series change; the second and third series obtain more data patterns than the first series. To describe the simulated data, each simulated dataset includes 60 observations (monthly data) with three time series (variables).

4.2. Real Data

The first real-world dataset used in our study was originally collected from U.S. Energy Information Administration (EIA) Open Data [

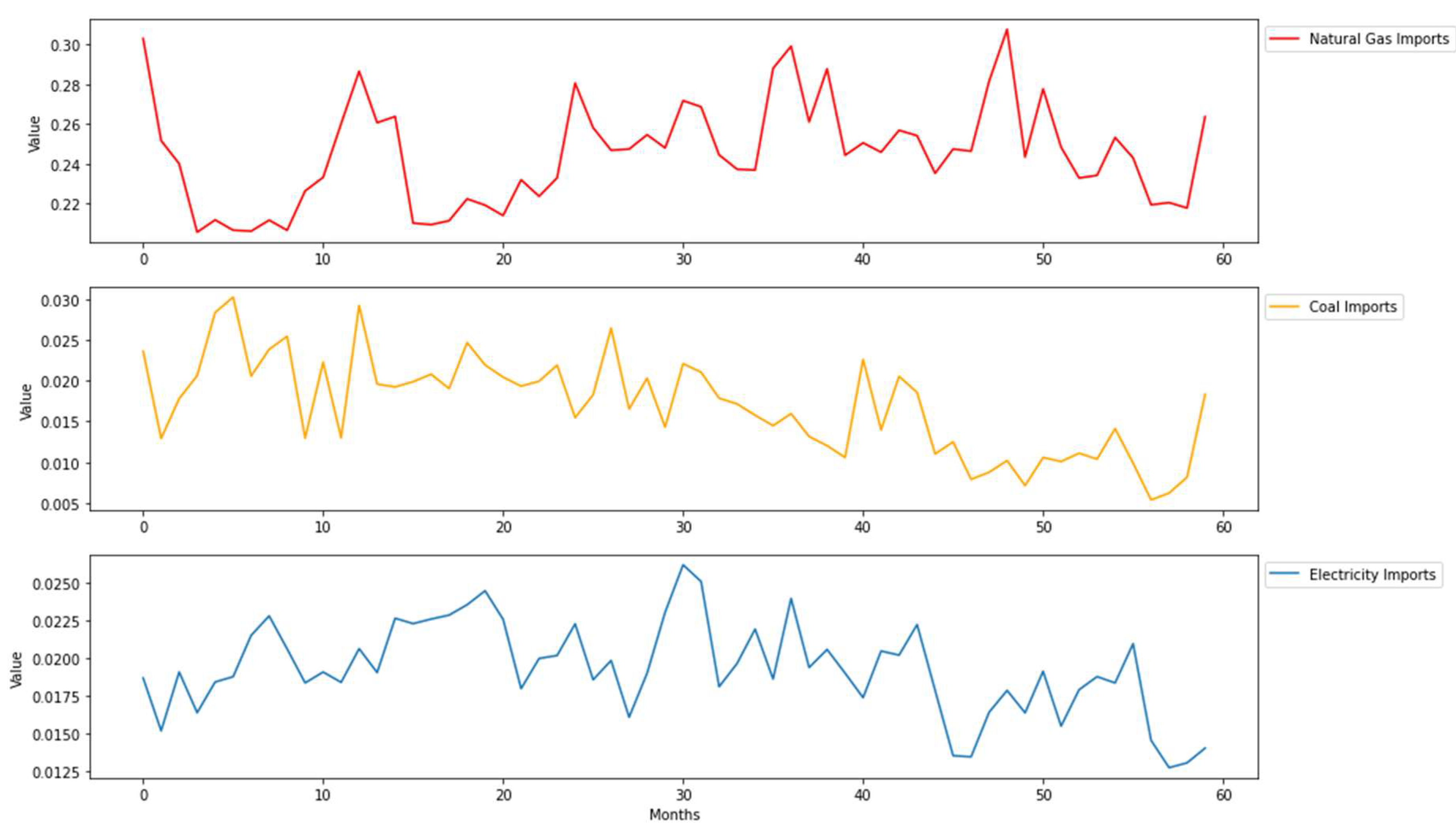

30]. It comprises monthly energy imports time-series data ranging from January 2014 to December 2018, shown in

Figure 3, and it includes three variables: natural gas, coal and electricity imports in Quadrillion British Thermal Units (Btu). The correlation matrix among the three energy imports is given in

Table 5, in which correlation coefficients can be investigated. Both coal and electricity imports series show obvious shifted trends, which increase initially and then decrease. Data show more aperiodicity, but no missing data are observed.

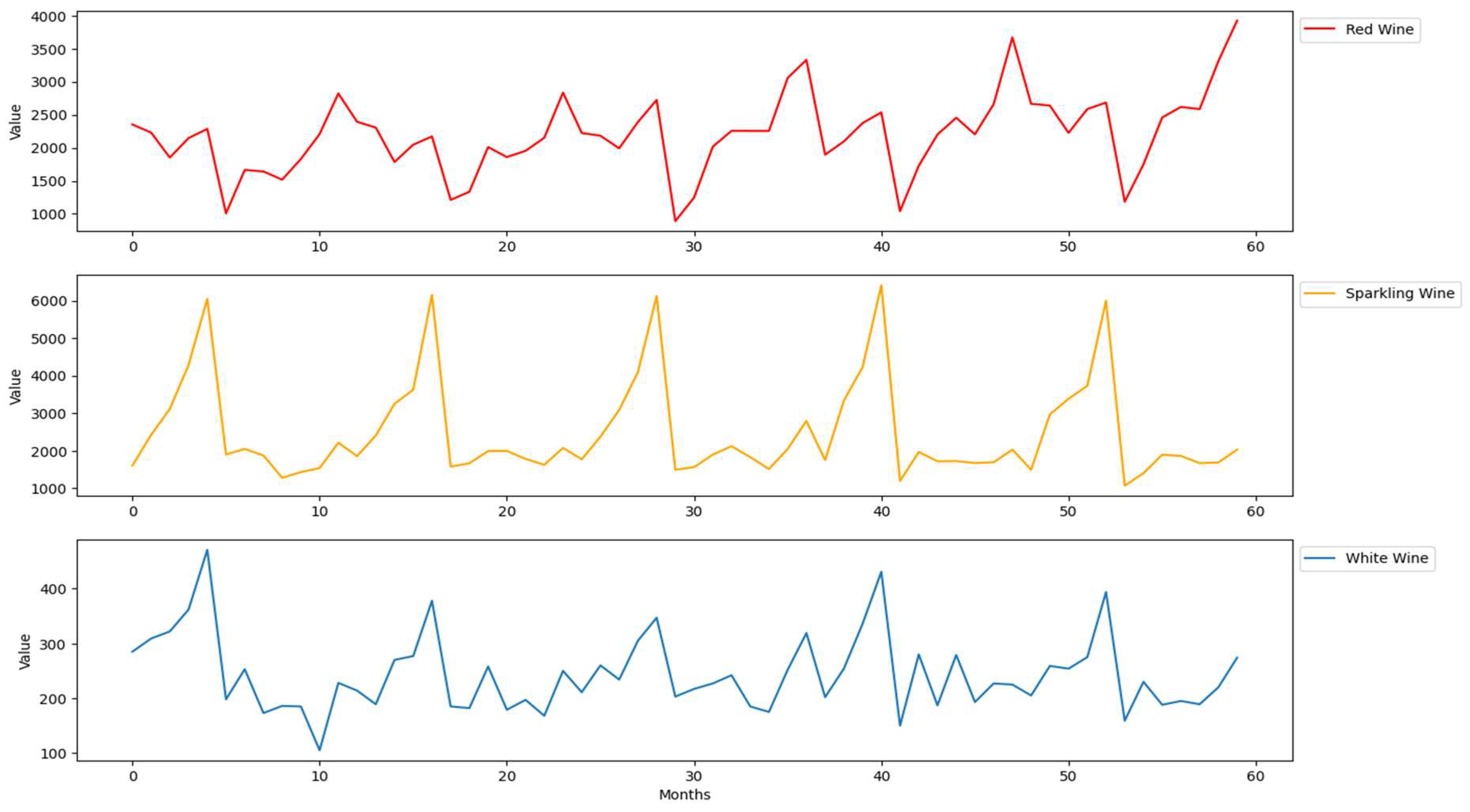

The second real-world dataset was collected from the database of analytical results of the Australian Wine Research Institute's Commercial Services Group [31]. It comprises monthly wine sales from August 1990 to July 2005, shown in Figure 4. It includes three variables: red wine sales, sparkling wine sales and white wine sales. Figure 4 shows more regular patterns and no missing values. Red wine sales show increasing trends, and sparkling wine sales show regular seasonal patterns. White wine sales show a trend that shifts from increasing to decreasing. The correlation matrix of the three wine sales series is given in Table 6. To maintain consistency with the simulated data, 60 observations of the three time series are used from each real dataset.

4.3. Experimental Design and Framework of Computational Approaches

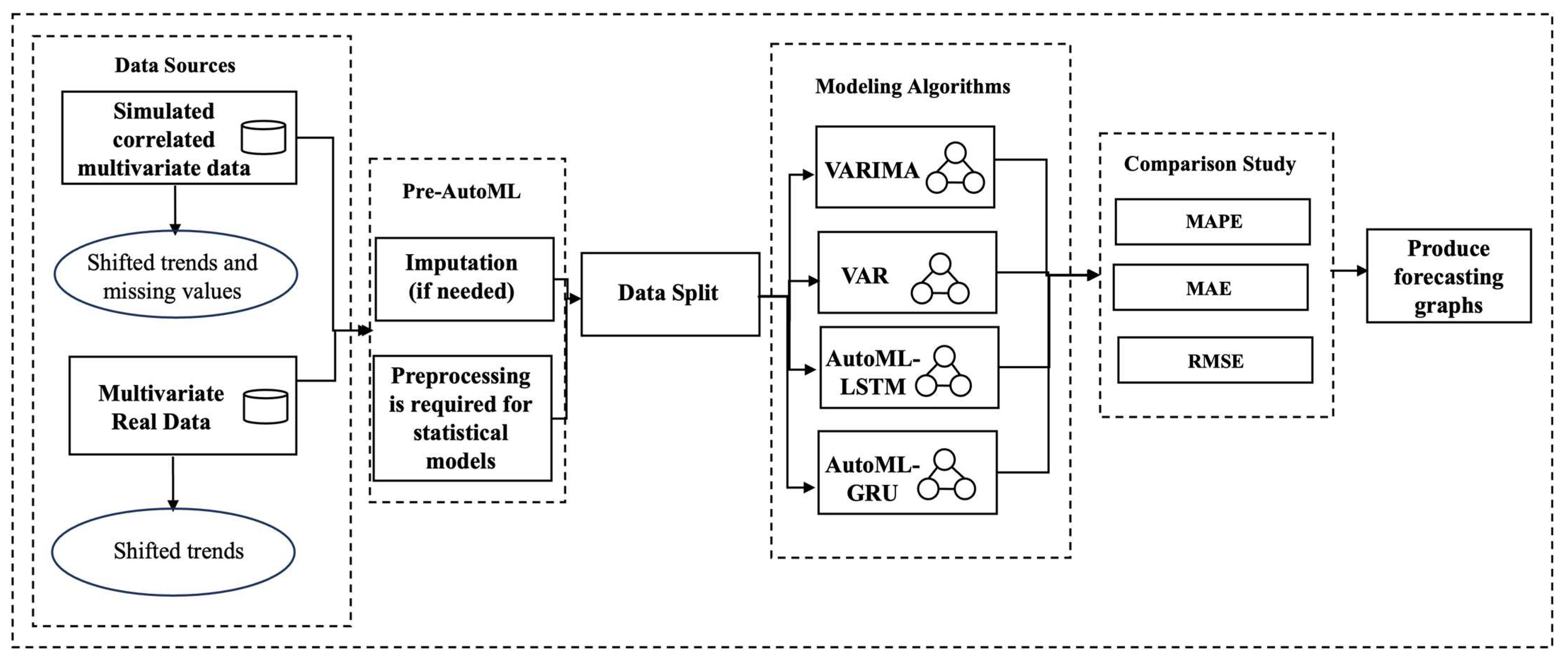

The experimental design illustrates the modeling strategies of both the traditional and AutoML approaches discussed in Section 3 for forecasting time series over different horizons, namely 6-month and 12-month forecasts. These strategies specifically address challenges of multivariate time series, including correlation, shifted trends and missing values. All datasets in the experiments are nonstationary.

Missing values in time series data must be imputed as a pre-AutoML step. In our case, the missing data are categorized as missing at random (MAR). Under MAR, a complete-case analysis no longer relies on a random sample of the source population, so selection bias is likely to occur [32]. Instead of imputing with the mean of the training set, the proposed method imputes each missing value with the mean of the previous and next valid observations. This approach aims to preserve as much of the seasonality and autocorrelation in the training set as possible.
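The NumPy sketch below implements the neighbour-mean imputation. Runs of consecutive missing values share the same pair of valid neighbours. At the start or end of a series, where only one valid neighbour exists, that neighbour alone is used. These edge rules are assumptions not specified in the text, and the function name is hypothetical.

```python
import numpy as np


def impute_neighbor_mean(x):
    """Replace each NaN by the mean of the nearest valid values before and after it.

    Consecutive NaNs share the same two neighbours. At either end of the
    series, where only one neighbour exists, that neighbour is used.
    Assumes the series contains at least one valid value.
    """
    x = np.asarray(x, dtype=float)
    out = x.copy()
    valid = np.flatnonzero(~np.isnan(x))
    for i in np.flatnonzero(np.isnan(x)):
        prev = valid[valid < i]
        nxt = valid[valid > i]
        neighbours = []
        if prev.size:
            neighbours.append(x[prev[-1]])
        if nxt.size:
            neighbours.append(x[nxt[0]])
        out[i] = np.mean(neighbours)
    return out
```

For a multivariate array, `np.apply_along_axis(impute_neighbor_mean, 0, data)` applies the same rule column by column.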

Throughout the experiments, all datasets are partitioned temporally: the first 48 months are used as the training set and the last 12 months as the test set. The test set is reserved for evaluating predictions and is treated as unknown during training. Random seeds are fixed (888) so that the experimental results are deterministic under consistent experimental settings. After searching the hyperparameters in Table 1 and Table 3, the optimal AutoML-GRU network obtained for the simulated data with correlation $\rho$, shifted trends and 10% missing values is shown in Figure 5.

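Both the traditional and AutoML methods adopt the recursive multi-step-ahead forecasting strategy [33]. Each model is trained on the time series up to time $t$. With a window of $w$ lagged values ($w$ = n_input = 12 for the AutoML models), the iterative prediction process is expressed in Equations (26)–(28):

$$\hat{\mathbf{y}}_{t+1} = f\left(\mathbf{y}_t, \mathbf{y}_{t-1}, \dots, \mathbf{y}_{t-w+1}\right) \tag{26}$$

$$\hat{\mathbf{y}}_{t+2} = f\left(\hat{\mathbf{y}}_{t+1}, \mathbf{y}_t, \dots, \mathbf{y}_{t-w+2}\right) \tag{27}$$

$$\hat{\mathbf{y}}_{t+h} = f\left(\hat{\mathbf{y}}_{t+h-1}, \dots, \hat{\mathbf{y}}_{t+1}, \mathbf{y}_t, \dots, \mathbf{y}_{t-w+h}\right) \tag{28}$$

where $f$ is the fitted one-step-ahead model and $h \le w$ is the forecast horizon. That is, the model produces a one-step-ahead prediction and feeds that prediction back in as input to predict the next time step. It repeats this process to produce multiple future values. This forecasting strategy is efficient for high-frequency time series, such as stock price data.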
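The 48/12 split and the recursion in Equations (26)–(28) can be sketched as a generic loop around any one-step model $f$. The toy one-step models here are hypothetical. With a trained Keras network, `model_step` could be something like `lambda w: model.predict(w[np.newaxis], verbose=0)[0]`, assuming a model that maps a (1, n_input, n_feature) batch to (1, n_feature).

```python
import numpy as np


def recursive_forecast(model_step, history, n_input, horizon):
    """Iterated multi-step forecasting as in Equations (26)-(28).

    model_step maps a window of shape (n_input, n_feature) to a one-step
    prediction of shape (n_feature,). Each prediction is appended to the
    window, the oldest row is dropped, and the process repeats.
    """
    window = np.asarray(history, dtype=float)[-n_input:].copy()
    preds = []
    for _ in range(horizon):
        y_hat = np.asarray(model_step(window), dtype=float)
        preds.append(y_hat)
        window = np.vstack([window[1:], y_hat])   # slide the window forward
    return np.array(preds)


# Hypothetical 60-month, single-feature series split 48 / 12 as in the text.
data = np.arange(60, dtype=float).reshape(60, 1)
train, test = data[:48], data[48:]
# Two toy one-step models: "last value + 1" and seasonal naive (value 12 months back).
trend_preds = recursive_forecast(lambda w: w[-1] + 1.0, train, n_input=12, horizon=12)
snaive_preds = recursive_forecast(lambda w: w[0], train, n_input=12, horizon=12)
```

With n_input = 12 and a 12-month horizon, the last forecast depends on eleven earlier predictions and only one observed value. This is why errors can accumulate with the recursive strategy on long horizons.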

Lastly, Figure 6 summarizes the computational framework of this study, from our data sources to the forecasting graphs.

5. Empirical Findings

This section presents and interprets the empirical results of the experiments, including forecasting plots and tables of forecasting errors. The discussion is divided into two parts: simulated data and real data. To evaluate the forecasting methods, we investigate prediction performance over two horizons: the first 6 months and the full 12 months.

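Three forecasting accuracy metrics are used to assess the empirical results: Mean Absolute Percentage Error (MAPE), Mean Absolute Error (MAE) and Root Mean Square Error (RMSE). MAPE is scale-independent, which makes errors comparable across series with heterogeneous scales, but it is undefined when an observed value is zero. MAE and RMSE remain defined at zero and are expressed in the units of the data, and RMSE penalizes large errors more heavily. The three metrics are defined in Equations (29)–(31):

$$\mathrm{MAPE} = \frac{100\%}{n}\sum_{t=1}^{n}\left|\frac{y_t - \hat{y}_t}{y_t}\right| \tag{29}$$

$$\mathrm{MAE} = \frac{1}{n}\sum_{t=1}^{n}\left|y_t - \hat{y}_t\right| \tag{30}$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{t=1}^{n}\left(y_t - \hat{y}_t\right)^2} \tag{31}$$

where $\hat{y}_t$ is the predicted value at time $t$, $y_t$ is the observed value at time $t$ and $n$ is the number of forecast time points (6 or 12).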
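Equations (29)–(31) and the 6- and 12-month evaluation can be sketched as below. The percentage comparisons reported in Section 5 (for example, a MAPE 44.85% lower) are read here as relative reductions, 100 × (baseline − candidate) / baseline. That reading and the helper names are our assumptions.

```python
import numpy as np


def mape(y, y_hat):
    """Equation (29), in percent; undefined when any observed value is zero."""
    y, y_hat = np.asarray(y, dtype=float), np.asarray(y_hat, dtype=float)
    return 100.0 * np.mean(np.abs((y - y_hat) / y))


def mae(y, y_hat):
    """Equation (30)."""
    y, y_hat = np.asarray(y, dtype=float), np.asarray(y_hat, dtype=float)
    return np.mean(np.abs(y - y_hat))


def rmse(y, y_hat):
    """Equation (31)."""
    y, y_hat = np.asarray(y, dtype=float), np.asarray(y_hat, dtype=float)
    return np.sqrt(np.mean((y - y_hat) ** 2))


def relative_reduction(baseline, candidate):
    """Percentage by which `candidate` is lower than `baseline`."""
    return 100.0 * (baseline - candidate) / baseline


def horizon_scores(y, y_hat, horizons=(6, 12)):
    """(MAPE, MAE, RMSE) over the first h test months for each horizon h."""
    return {h: (mape(y[:h], y_hat[:h]), mae(y[:h], y_hat[:h]), rmse(y[:h], y_hat[:h]))
            for h in horizons}
```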

5.1. Modeling Results of Simulated Data

We evaluated the performance of our proposed method on each simulated dataset and compared it with traditional methods. Each dataset combines specific multivariate time series components: a designated correlation $\rho$ and a percentage of missing values (15%, 10% or 5%). We also investigate the effects of these components on the simulated data. Table 7 and Table 8 report the forecasting performance metrics (MAPE, MAE and RMSE) for both the 6-month and 12-month horizons. Each partition of a table contains the forecasting errors for simulated data with a specific correlation and a specific percentage of missing data.

As shown in Table 8, AutoML-GRU performs well in time series prediction, particularly on the data with the higher designated correlation. The patterns of the multivariate data become less complex and easier to capture as the correlation increases. Meanwhile, the overall forecasting errors for the data with the higher correlation are lower than those for the data with the lower correlation. This is consistent with the empirical findings of our earlier study [10].

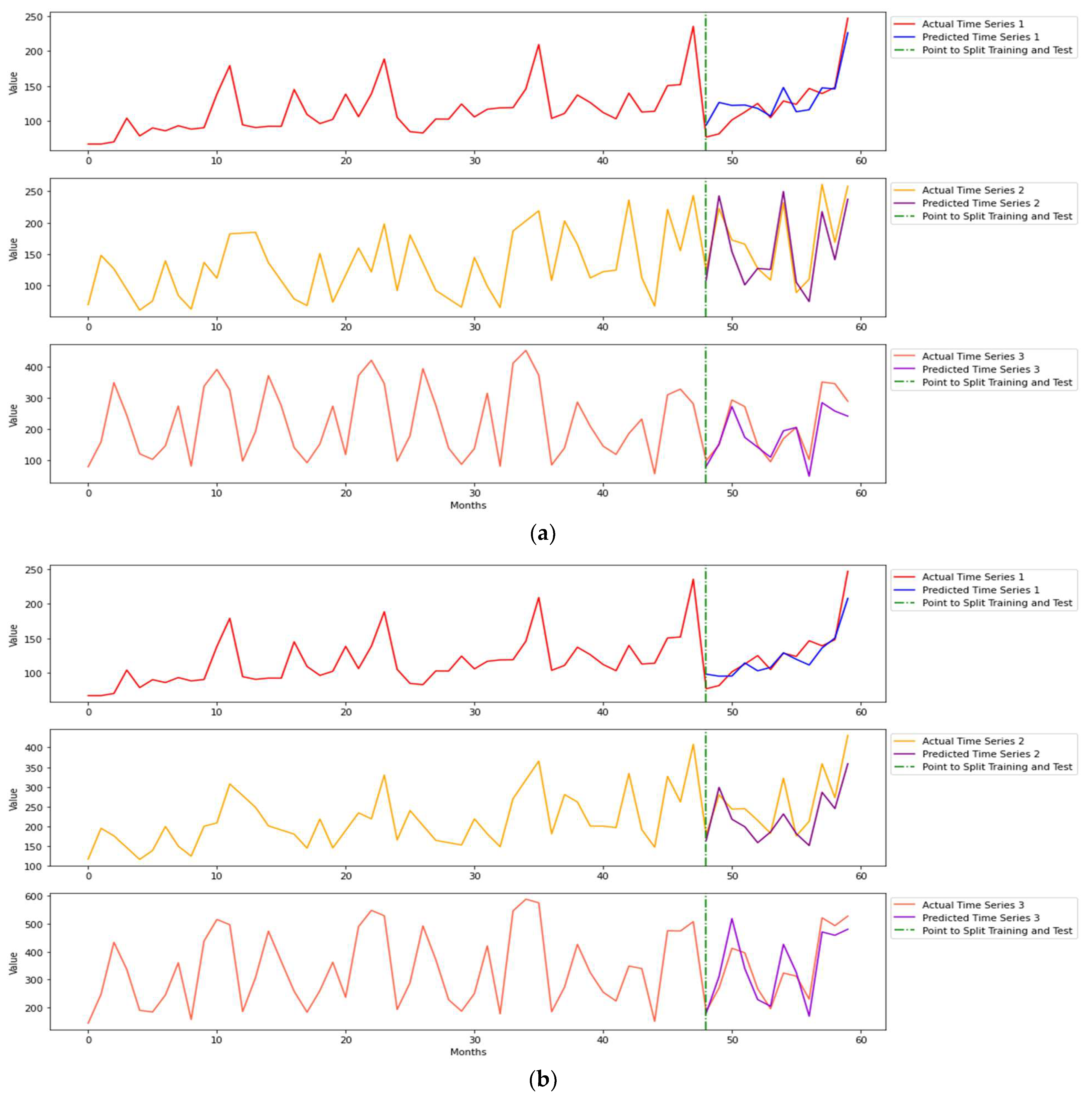

A detailed analysis of Table 7 and Table 8 reveals the following. Although series 1 is a regular seasonal and trended time series, its overall forecasting errors are not as low as those of series 2, for which our imputation method yields reliable and valid predictions. Time series 3 contains shifted trends in the unknown test set and is more challenging to predict, so its forecasting errors are generally higher than those of the other series. For long-term dependency, the 12-month forecasts produced by AutoML-GRU are more accurate than those produced by AutoML-LSTM.

Figure 7 shows the forecasting plots produced by AutoML-GRU. The predictions of time series 3 in both datasets are satisfactory, because our proposed method is able to learn the shifted trend. The 12-month forecasting MAPE of time series 3 for the data with the higher designated correlation is 52.13% lower than that for the data with the lower designated correlation.

AutoML-LSTM is a competitive alternative, producing some low forecasting errors. For time series 3 of the simulated data with one of the designated correlations and 5% missing data, the 6-month MAPE of AutoML-LSTM is 30.94% lower than that of AutoML-GRU. For time series 1 of the simulated data with one of the designated correlations and 10% missing data, the 12-month MAPE of AutoML-LSTM is 10.68% lower than that of AutoML-GRU. Taking the forecasting results and plots together, AutoML-GRU forecasts show some fluctuations but generally achieve higher forecasting accuracy and efficiency. Empirically, our proposed method tends to perform relatively better on simulated correlated data as the correlation increases. The traditional methods yield noticeably larger errors in the experiments. The simulated data are built on linear trend components, but a linear model is not the optimal choice once correlation, missing values and shifted trends are accounted for.

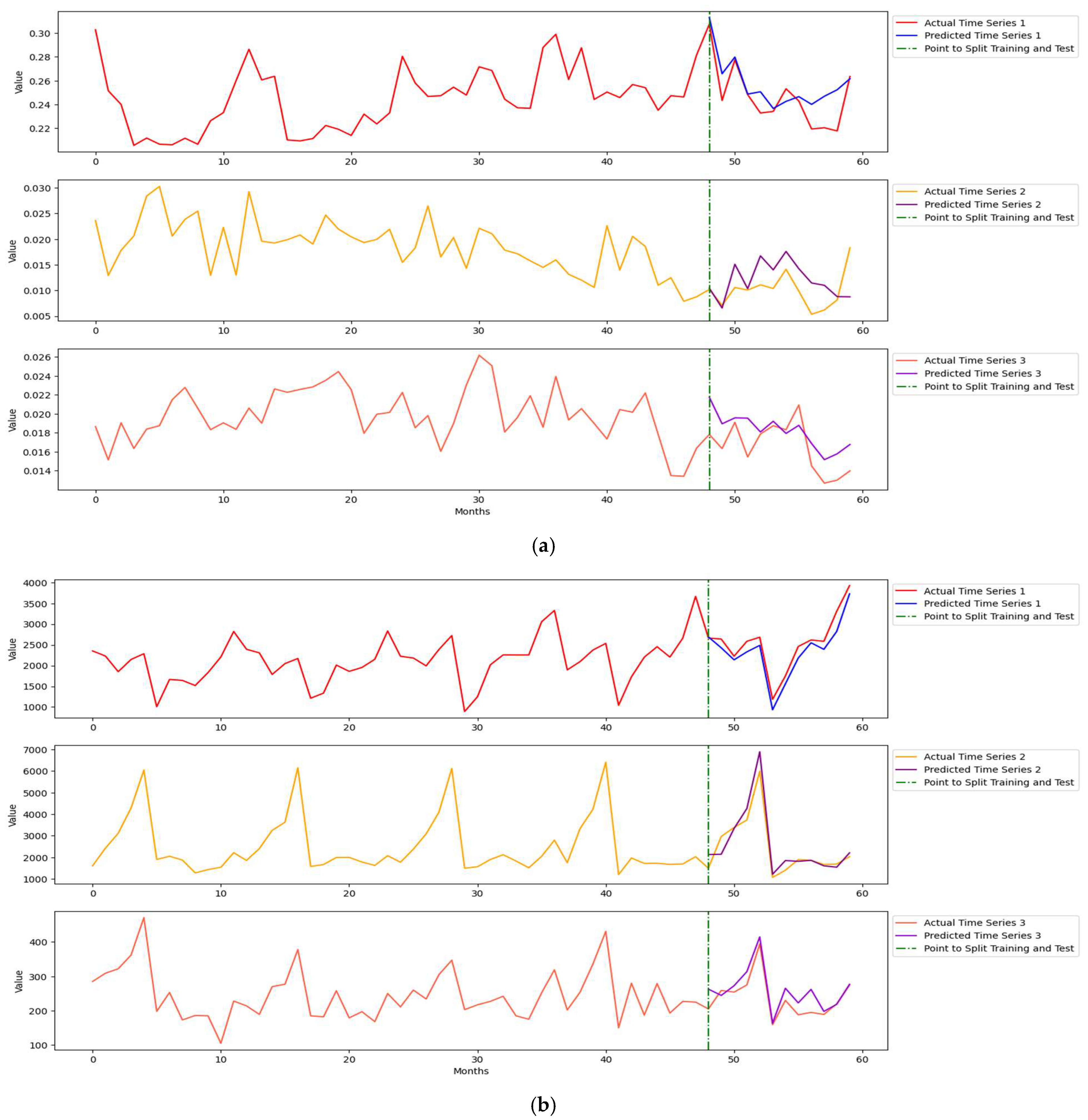

5.2. Modeling Results of Real Data

The evaluation strategy for modeling real data is the same as that for modeling simulated data. Although correlation, shifted trends and seasonality are also temporal characteristics presented in both real datasets,

Figure 8 demonstrates more complex multivariate temporal patterns with higher noise and aperiodicity, whereas

Figure 4 shows clearer seasonality with slightly shifted trends. Both

Figure 8a and

Table 9 clearly show that AutoML-GRU outperforms in forecasting both 6-month and 12-month natural gas imports, because of the evident increasing trends and seasonality. The 6-month MAPE in forecasting natural gas imports modeled by AutoML-GRU is 44.85% lower than that by Auto-LSTM. In the case of coal and electricity import data, the trend begins to decline gradually from the 12th month of the training period, though with noticeable fluctuations.

Figure 8a presents the forecasting plots, which show that the AutoML methods can capture decreasing trends. However, AutoML-LSTM outperforms the other models in forecasting electricity imports at both the 6-month and 12-month horizons, which we attribute to the greater complexity of the LSTM network.
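The difference in complexity between the two architectures can be made concrete by counting trainable parameters. A minimal sketch, assuming the textbook single-layer formulation with one bias vector per gate; framework implementations (for example PyTorch's `nn.GRU`/`nn.LSTM`, or Keras's `GRU` with `reset_after=True`) add extra bias terms, so their exact counts differ slightly. The helper names and layer sizes are hypothetical.

```python
def lstm_params(input_dim: int, hidden: int) -> int:
    # Four gated transforms (input, forget, output, candidate cell),
    # each with input weights, recurrent weights and one bias vector.
    return 4 * (hidden * (input_dim + hidden) + hidden)

def gru_params(input_dim: int, hidden: int) -> int:
    # Three gated transforms (update, reset, candidate state).
    return 3 * (hidden * (input_dim + hidden) + hidden)

# Hypothetical sizes: 3 correlated input series, 64 hidden units.
d, h = 3, 64
print(lstm_params(d, h), gru_params(d, h))  # → 17408 13056
```

Under this formulation a GRU layer always has exactly three quarters of the parameters of an LSTM layer of the same size, which is one reason it trains faster and is less prone to overfitting on short series.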

Figure 8b and Table 9 present the wine sales forecasting performance. AutoML-GRU outperforms the other models at all forecast horizons and for all three series, except for the 6-month forecast of white wine sales, where the MAPE of AutoML-LSTM is 7.61% lower than that of AutoML-GRU. Both [10] and this study indicate that traditional methods fail to learn the correlations among bivariate time series and yield subpar forecasting performance.

6. Discussion and Conclusions

Most traditional time series methods assume linearity, which brings a significant advantage: ARIMA, VARIMA and VAR require less computational effort and offer high interpretability. However, they demand more preprocessing and yield lower prediction accuracy. Modern time series are increasingly complex, aperiodic and high-dimensional, which calls for more powerful alternatives. Within the AutoML search space, both LSTM and GRU networks are recommended for their forecasting performance, and each addresses certain limitations of multivariate time series. After missing-data imputation, GRU, with its simpler NN architecture, outperforms because the trends and seasonality are identifiable and learnable. LSTM, on the other hand, is a powerful alternative when the task centers on long-term dependency. As DL models, LSTM and GRU are computationally more expensive than traditional forecasting methods, but in exchange they can uncover and learn hidden information in time series. These two NN architectures within the AutoML framework could be optimized further by considering more factors in the experiments. This article introduces initial GRU applications to multivariate time series analysis; however, like other NN architectures, GRUs operate largely as “black boxes” with limited interpretability. In addition, several implementation details and practical considerations remain open issues that will be addressed in future research. A multi-step recursive forecasting strategy is used here because it is effective at capturing specific patterns for coherent predictions and is compatible with correlated time series. By contrast, the multi-step direct strategy is well suited to long-term predictions and volatile time series. Future research could compare direct, hybrid and recursive forecasting strategies, rather than limiting the comparison to statistical methods.
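The difference between the recursive and direct multi-step strategies can be sketched independently of the network used. In the sketch below, a least-squares linear autoregressive model stands in for the GRU/LSTM purely to keep the example short; the function names, the 12-lag window and the synthetic series are hypothetical. The recursive strategy fits one 1-step model and feeds each prediction back as an input, whereas the direct strategy fits a separate model for every horizon h and always conditions on observed values only.

```python
import numpy as np

def make_windows(y, lags, horizon):
    """Build (X, Y) with X[i] = y[i:i+lags] and Y[i] = y[i+lags+horizon-1]."""
    n = len(y) - lags - horizon + 1
    X = np.array([y[i:i + lags] for i in range(n)])
    Y = np.array([y[i + lags + horizon - 1] for i in range(n)])
    return X, Y

def fit_linear(X, Y):
    # Least-squares linear AR model with intercept (stand-in for a GRU/LSTM).
    A = np.column_stack([X, np.ones(len(X))])
    coef, *_ = np.linalg.lstsq(A, Y, rcond=None)
    return coef

def predict_linear(coef, x):
    return float(np.dot(coef[:-1], x) + coef[-1])

def recursive_forecast(y, lags, steps):
    # One 1-step model; each prediction is appended to the input window.
    coef = fit_linear(*make_windows(y, lags, 1))
    window = list(y[-lags:])
    out = []
    for _ in range(steps):
        yhat = predict_linear(coef, window)
        out.append(yhat)
        window = window[1:] + [yhat]
    return np.array(out)

def direct_forecast(y, lags, steps):
    # One model per horizon h, each predicting y[t+h] from the last observed lags.
    last = np.asarray(y[-lags:])
    return np.array([predict_linear(fit_linear(*make_windows(y, lags, h)), last)
                     for h in range(1, steps + 1)])

# Hypothetical monthly series with a linear trend and 12-month seasonality.
t = np.arange(120)
y = 10 + 0.05 * t + np.sin(2 * np.pi * t / 12)
print(recursive_forecast(y, lags=12, steps=6))
print(direct_forecast(y, lags=12, steps=6))
```

On this noise-free series both strategies recover the continuation; on noisy or volatile data the recursive strategy accumulates its own errors over the horizon, while the direct strategy avoids that at the cost of training one model per horizon.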

In this article, we proposed AutoML approaches, based on GRUs, to forecasting correlated multivariate time series with shifted trends and missing data. AutoML aims to solve time series tasks in an automated way that requires little manual effort [34]. When processing nonstationary multivariate data, the proposed method does not require preprocessing. The empirical evidence from simulated and real data indicates that LSTM and GRU networks significantly outperform traditional methods in terms of manual intervention, preprocessing and prediction accuracy. Although the computational costs of conventional forecasting methods are low, AutoML and NN systems can learn long-term dependencies across multiple variables. LSTM and GRU each stand out in tackling some multivariate time series problems, but neither addresses all of them simultaneously. Because AutoML aims to identify the most suitable, best-fit models, our results empirically show that GRU is highly recommended for multivariate time series tasks characterized by clearer seasonality and shifted trends. In contrast, LSTM serves as a strong alternative for tasks involving more complex structures, such as aperiodicity and noise. Because AutoML is primarily designed to assist non-experts without prior technical expertise, its lack of interpretability may be an acceptable trade-off. The proposed AutoML frameworks are user-friendly and efficient for time series forecasting while effectively capturing essential domain-specific features.
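The model-selection step at the heart of AutoML, identifying the best-fit model from a set of candidates, can be illustrated with a hold-out validation loop. This is a minimal sketch under stated assumptions, not the authors' search procedure: the three candidate forecasters are simple hypothetical baselines standing in for the GRU and LSTM configurations an AutoML system would search over, and the selection criterion is assumed to be validation MAPE.

```python
import numpy as np

def mape(actual, forecast):
    actual, forecast = np.asarray(actual, float), np.asarray(forecast, float)
    return 100.0 * np.mean(np.abs((actual - forecast) / actual))

# Hypothetical candidate forecasters: each maps (history, steps) -> forecasts.
def naive(history, steps):
    return np.repeat(history[-1], steps)

def seasonal_naive(history, steps, period=12):
    last_season = history[-period:]
    return np.array([last_season[i % period] for i in range(steps)])

def drift(history, steps):
    slope = (history[-1] - history[0]) / (len(history) - 1)
    return history[-1] + slope * np.arange(1, steps + 1)

def select_model(y, candidates, holdout):
    """Fit on y[:-holdout], score each candidate on the last `holdout` points."""
    train, valid = y[:-holdout], y[-holdout:]
    scores = {name: mape(valid, f(train, holdout)) for name, f in candidates.items()}
    return min(scores, key=scores.get), scores

# Hypothetical purely seasonal monthly series.
t = np.arange(96)
y = 50 + 5 * np.sin(2 * np.pi * t / 12)
candidates = {"naive": naive, "seasonal_naive": seasonal_naive, "drift": drift}
best, scores = select_model(y, candidates, holdout=12)
print(best, scores)
```

In a full AutoML system the candidate set would also cover hyperparameters (number of layers, hidden units, lag window), and the validation split would typically be repeated over several rolling origins rather than a single hold-out block.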

Hence, our research goals have been achieved and our hypotheses are supported by empirical evidence. The use of AutoML approaches has been discussed and demonstrated comprehensively, with attention to specific characteristics and limitations of multivariate time series. Compared with traditional methods, the proposed methods achieve higher forecasting accuracy.

7. Future Work

To build on this article, an AutoML approach could be developed for multivariate time series forecasting with covariates. Multivariate time series data often come with additional variables, such as socio-economic status and marital status.

A further study could incorporate additional time series challenges, such as extreme and abrupt events. For instance, COVID-19 had a significant impact worldwide, from daily life to the global economy. By treating extreme events as factors in the time series data, AutoML solutions could be developed that account for their effects. Among traditional methods, VARFIMA could be considered for its ability to capture long memory and cross-correlations between series.
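The long-memory property of VARFIMA comes from the fractional differencing operator (1 − B)^d, where B is the backshift operator and d may be non-integer. The sketch below illustrates only that operator for a single series, not a VARFIMA estimator; the function name and the chosen values of d are hypothetical. It uses the standard recursion w_0 = 1, w_k = w_{k−1}(k − 1 − d)/k for the coefficients of the binomial expansion.

```python
import numpy as np

def frac_diff_weights(d: float, n: int) -> np.ndarray:
    """First n coefficients w_k of (1 - B)^d = sum_k w_k B^k."""
    w = np.empty(n)
    w[0] = 1.0
    for k in range(1, n):
        # Binomial-expansion recursion: w_k = w_{k-1} * (k - 1 - d) / k
        w[k] = w[k - 1] * (k - 1 - d) / k
    return w

# d = 1 recovers ordinary first differencing: 1, -1, then zeros.
print(frac_diff_weights(1.0, 4))
# A fractional d gives weights that decay slowly instead of cutting off,
# so distant past values keep a small but non-zero influence (long memory).
print(frac_diff_weights(0.4, 6))
```

In a VARFIMA model each component series can carry its own differencing order d_i, and the fractionally differenced series are then modeled jointly with VARMA dynamics.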