1. Introduction

Animal behavior recognition from video data is an essential task in many scientific areas, such as biology, psychology, and drug discovery research [1]. In particular, the analysis of rodent behavior is of great interest to researchers in behavioral neuroscience. Because rodents and humans share physiological and behavioral similarities, researchers can study rodent behaviors to better understand human behavior, diseases, responses to various treatments, and social interactions, among other things [2,3]. Traditionally, researchers have analyzed rodent behaviors in video data manually, a time-consuming process that is subject to human error and inconsistency [4]. With the advancement of artificial intelligence and deep learning methods, behavior recognition has been automated through video analysis. Such approaches offer promising results, are highly scalable, and can be used in real time [5]. While recent studies have produced consistently better results for automated behavior recognition [6], there is still significant room for improvement in identifying and classifying individual behaviors, such as grooming or feeding, and social behaviors, such as facing or following each other. One of the main challenges for automated approaches is handling diverse rat breeds, backgrounds, and lighting conditions, as well as overlapping behaviors in video recordings. These factors can significantly affect the accuracy and robustness of behavior recognition models. Additionally, training highly effective deep learning models requires large, well-annotated datasets, but data collection and annotation are both labor-intensive and complex [7].

Early automated methods relied on handcrafted features, such as the Scale-Invariant Feature Transform (SIFT) and the Histogram of Oriented Gradients (HOG) [8], which require significant human effort to design and struggle to represent complex patterns. The emergence of Convolutional Neural Networks (CNNs) transformed the field by enabling efficient, learned feature extraction from images and videos. However, CNNs are primarily designed to capture spatial features and lack the ability to model long-range dependencies, which are critical for recognizing behaviors across sequential video frames [9]. To address this, recurrent architectures such as Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) networks were introduced, but because they process frames sequentially, they often suffer from computational inefficiency [10].

More recently, Transformer models have brought new capabilities for modeling both local and global relations, and their self-attention mechanism has changed fundamental concepts of deep learning [11]. Vision Transformers (ViTs) capture long-range dependencies by treating image patches as a sequence of tokens and applying self-attention to that sequence [12,13]. However, standard ViTs often fail to capture local features, and because self-attention compares every patch with every other patch, its cost grows quadratically with the number of patches, which leads to high computational demands [14,15]. Hybrid architectures such as the Swin and Focal Transformers were later proposed to address these challenges. The Swin Transformer incorporates a local feature extraction mechanism using hierarchical shifted windows, while the Focal Transformer balances global context modeling and computational efficiency [16].
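To make the cost argument concrete, the sketch below counts the query-key scores that self-attention computes. It is a back-of-the-envelope illustration under assumed settings (a 224 × 224 input with 16 × 16 patches for a plain ViT, and 7 × 7 windows on a 56 × 56 token grid for a windowed model such as Swin); these numbers are not taken from this work, and the count ignores projections, MLPs, and the cross-window connections that shifted windows add.

```python
def num_tokens(image_size: int, patch_size: int) -> int:
    """Number of non-overlapping patches (tokens) in a square image."""
    side = image_size // patch_size
    return side * side


def global_attention_pairs(n_tokens: int) -> int:
    """Global self-attention scores every token against every token."""
    return n_tokens * n_tokens


def window_attention_pairs(grid_side: int, window: int) -> int:
    """Windowed attention scores tokens only within window x window blocks."""
    if grid_side % window != 0:
        raise ValueError("grid side must be divisible by the window size")
    n_windows = (grid_side // window) ** 2
    tokens_per_window = window * window
    return n_windows * tokens_per_window * tokens_per_window


if __name__ == "__main__":
    vit = num_tokens(224, 16)  # assumed plain-ViT setting: 196 tokens
    print(vit, global_attention_pairs(vit))  # 196 38416
    fine = 56 * 56  # assumed finer token grid: 3136 tokens
    print(global_attention_pairs(fine))  # 9834496
    print(window_attention_pairs(56, 7))  # 153664
```

With these illustrative numbers, restricting attention to 7 × 7 windows scores 64 times fewer pairs than global attention over the same 56 × 56 grid, and for a fixed window size the windowed cost grows linearly rather than quadratically with the number of tokens.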

Despite these improvements, a gap remains in capturing global dependencies effectively in a computationally scalable manner. To address this gap, Hatamizadeh et al. [17] introduced the GC-ViT network, a hierarchical architecture consisting of global and local self-attention modules. Global query tokens are computed at each stage using modified Fused-MBConv blocks [18], which are fused inverted residual blocks designed to capture and integrate global context information from different parts of the image. Short-range information is captured within the local self-attention modules, whereas in the global self-attention modules the global query tokens are shared across all modules and interact with the local key and value representations.
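The core idea of the global self-attention module, shared global queries attending to each window's local keys and values, can be sketched in plain Python. This is a simplified illustration and not GC-ViT's implementation: the global query tokens are passed in directly instead of being produced by the Fused-MBConv-based generator, there is a single attention head, and the learned query/key/value projections are omitted, so each window's tokens serve as both keys and values. All function names are hypothetical.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product a @ b."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def softmax_rows(m: Matrix) -> Matrix:
    """Row-wise softmax, shifted by each row's maximum for numerical stability."""
    out = []
    for row in m:
        peak = max(row)
        exps = [math.exp(v - peak) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def attention(q: Matrix, k: Matrix, v: Matrix) -> Tuple[Matrix, Matrix]:
    """Scaled dot-product attention; returns (weights, output)."""
    scale = 1.0 / math.sqrt(len(q[0]))
    k_t = [list(col) for col in zip(*k)]
    scores = [[s * scale for s in row] for row in matmul(q, k_t)]
    weights = softmax_rows(scores)
    return weights, matmul(weights, v)


def global_query_attention(global_q: Matrix, windows: List[Matrix]) -> List[Matrix]:
    """Reuse the same global query tokens for every local window.

    Each window's tokens act as both keys and values (no learned projections).
    """
    return [attention(global_q, win, win)[1] for win in windows]


if __name__ == "__main__":
    global_q = [[1.0, 0.0], [0.0, 1.0]]  # two hypothetical global query tokens
    windows = [
        [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],  # window A: three 2-d tokens
        [[2.0, 2.0], [2.0, 2.0], [2.0, 2.0]],  # window B: identical tokens
    ]
    for out in global_query_attention(global_q, windows):
        print(out)
```

Because every window receives the same queries, context summarized from the whole image can be injected into each window at the cost of one small attention computation per window.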

In this study, we leverage the GC-ViT network to design an approach for identifying rat social interactions, called Vision Transformer for Rat Social Interactions, or ViT-RSI. Our working hypothesis is that this approach, which captures both local features specific to each rat in an image and global features that represent image-level interactions between rats, has great potential for accurately identifying social rat behaviors. Along these lines, the main contributions of our work can be summarized as follows:

We focus on the task of identifying rodent social behaviors and propose an approach that leverages the GC-ViT architecture [17]. We refer to our approach as ViT-RSI.

This architecture enhances feature representation by integrating multiscale depthwise separable convolutions within a mixed-scale feedforward network and Fused-MBConv blocks. Using residual connections and depthwise separable operations, it captures rich multiscale contextual information while reducing the computational cost relative to standard convolutions.

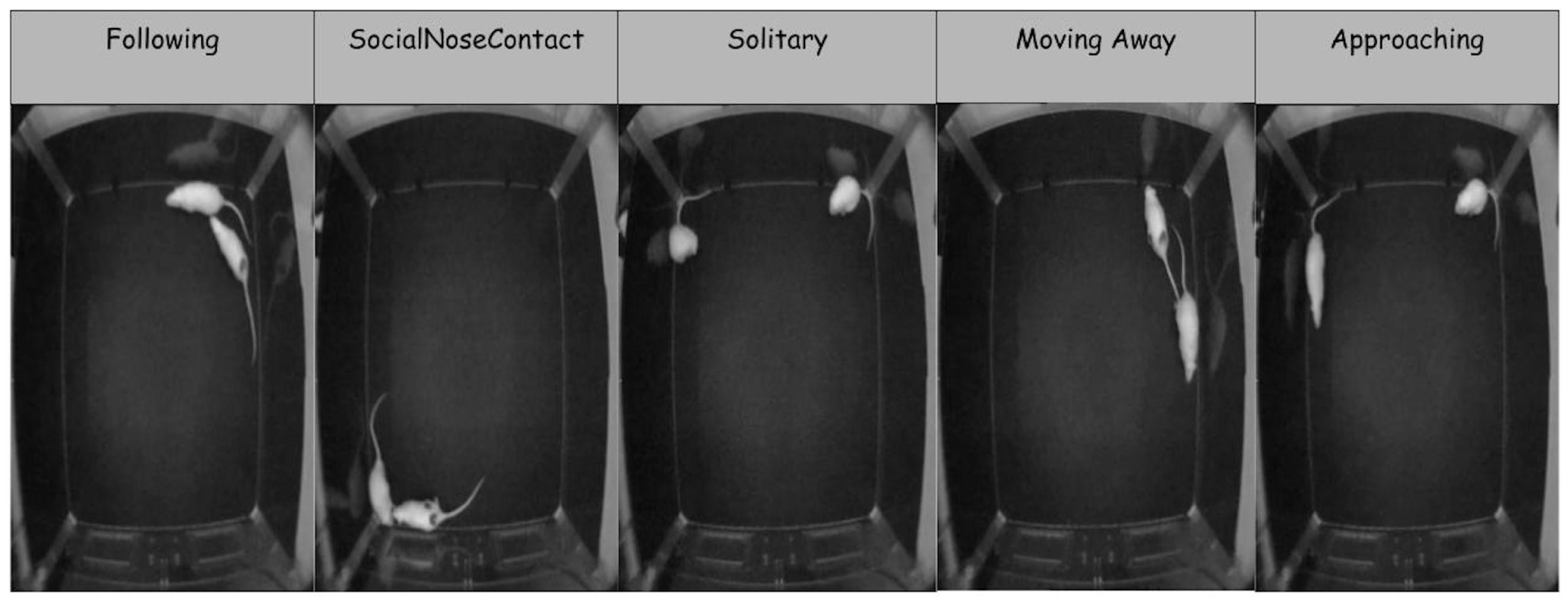

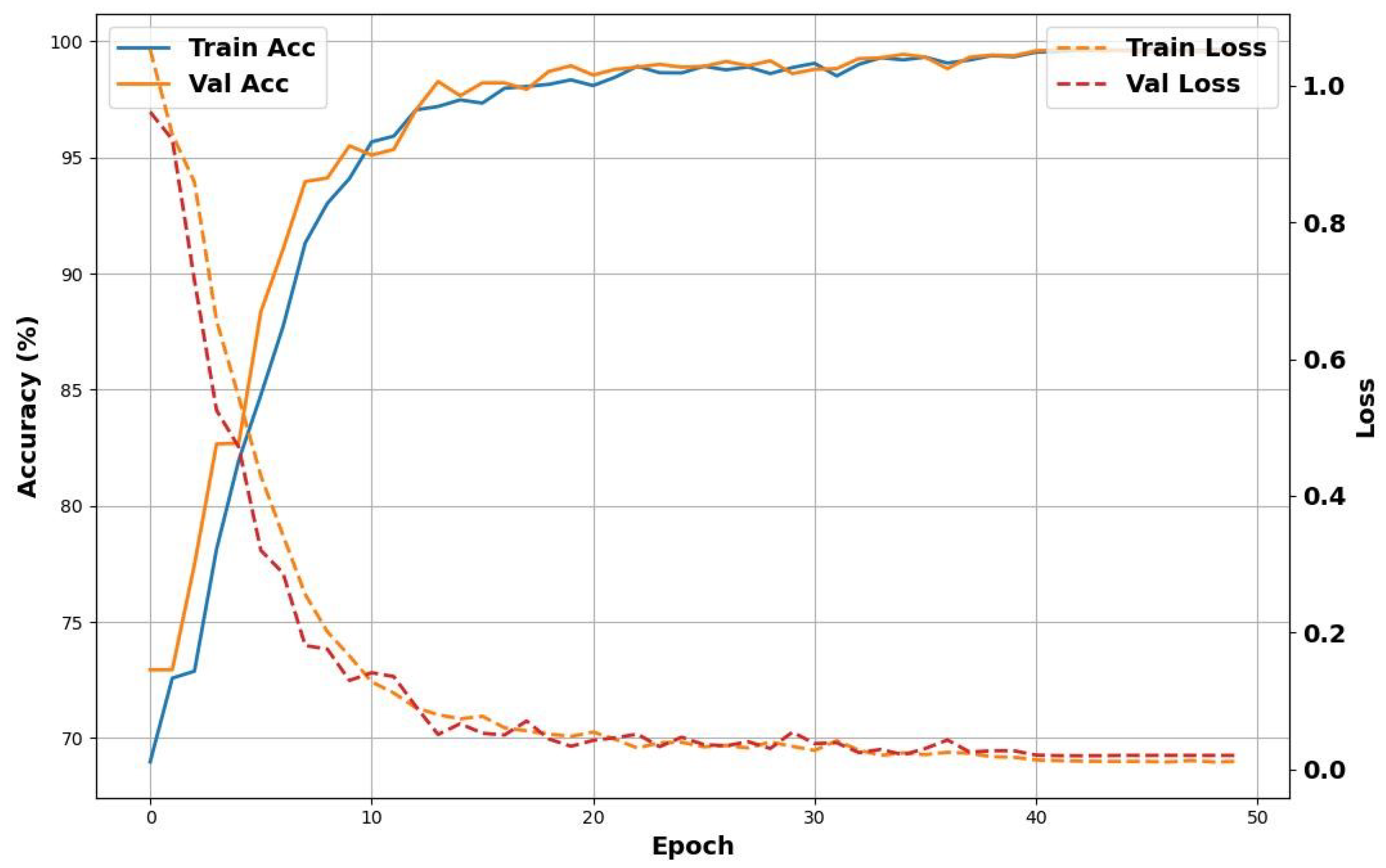

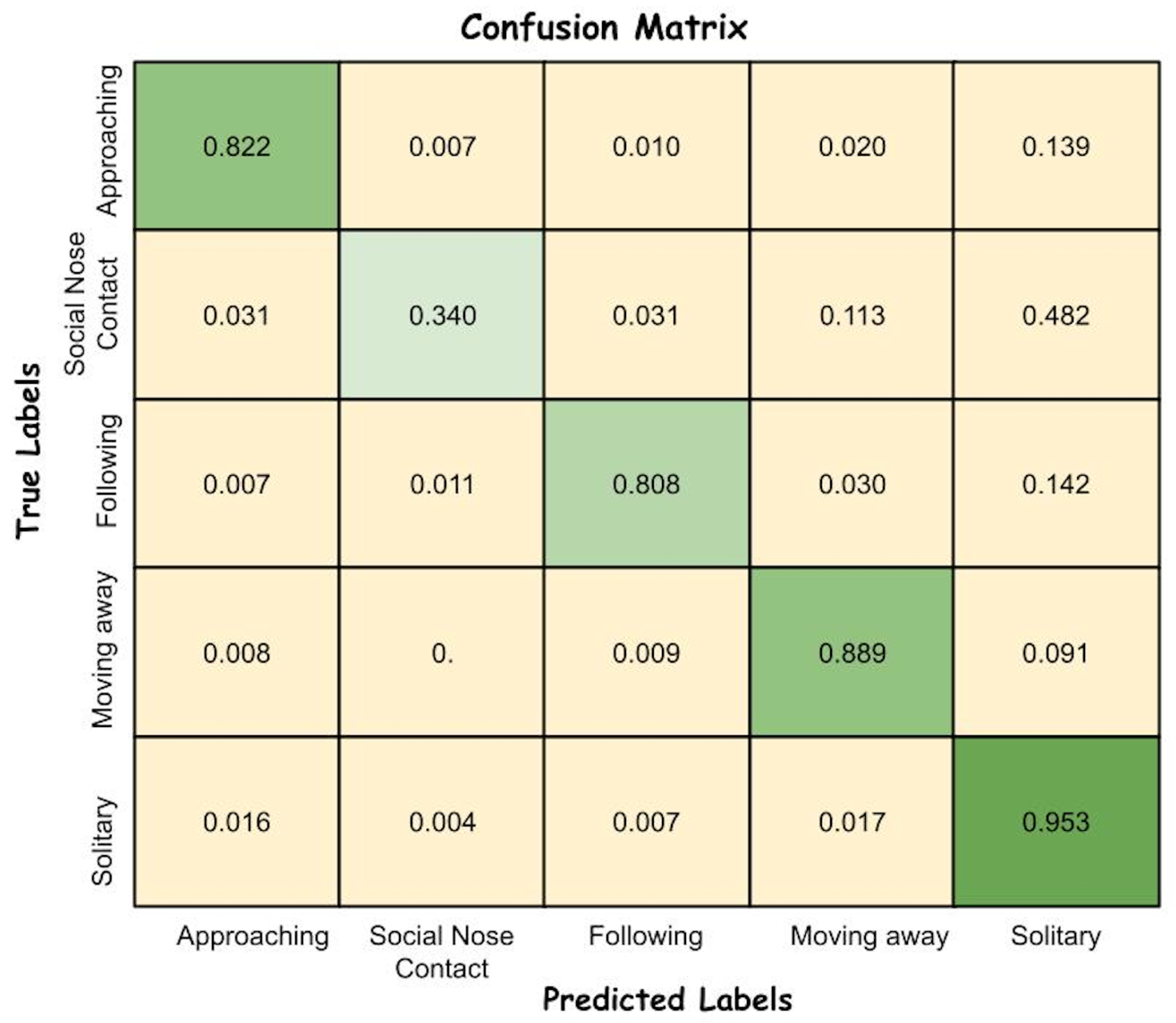

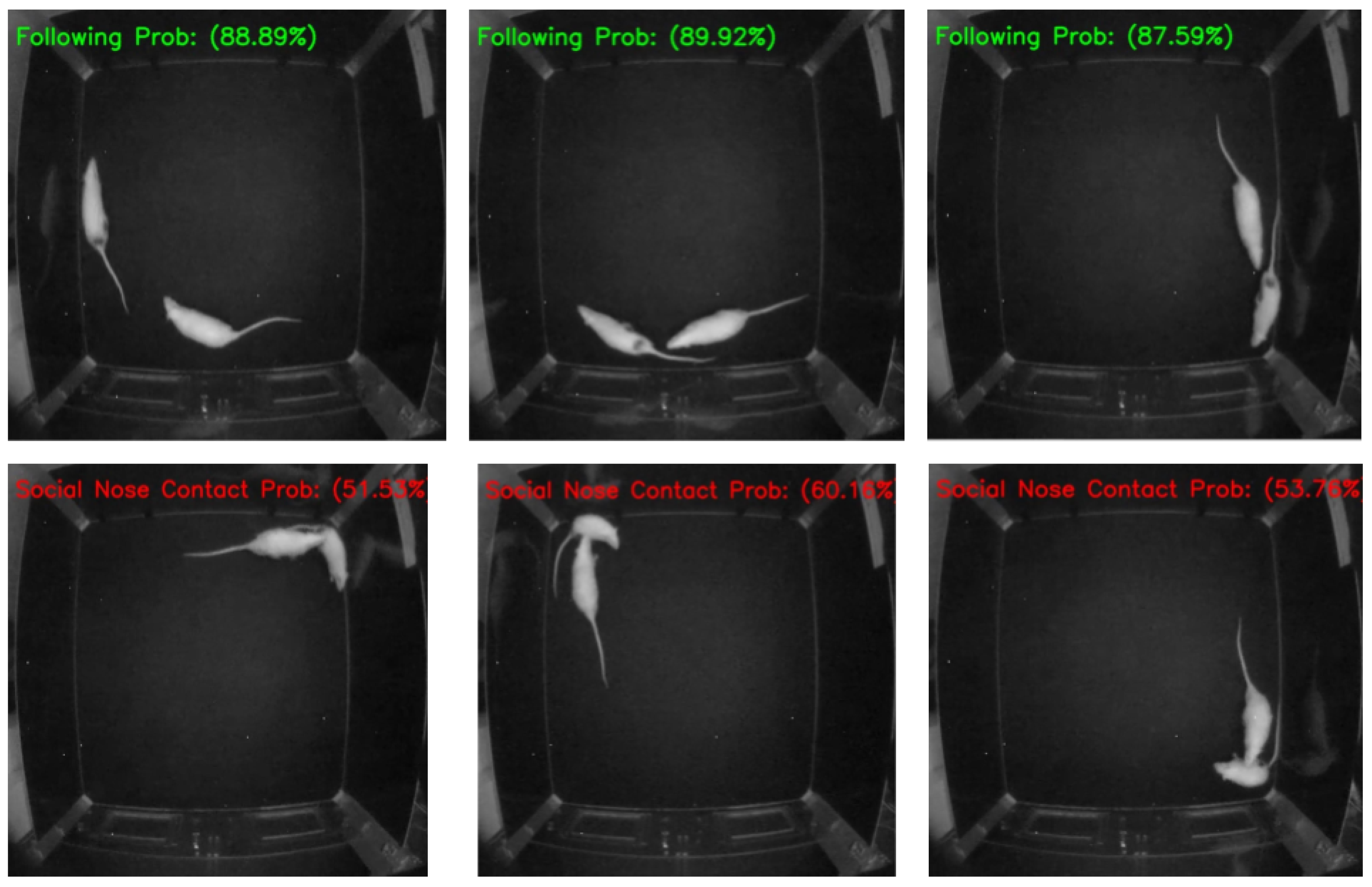

We evaluated the proposed ViT-RSI model on the RatSI dataset. Specifically, we focused on five behaviors from this dataset, namely “Approaching”, “Following”, “Social Nose Contact”, “Moving Away”, and “Solitary”, and showed that our approach outperformed a prior GMM baseline model for four out of five behaviors.
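The cost saving attributed above to depthwise separable operations can be quantified by counting weights. The sketch compares a standard k × k convolution with a depthwise k × k convolution followed by a 1 × 1 pointwise convolution; biases are ignored, and the 256-channel width is an illustrative assumption rather than a ViT-RSI setting.

```python
def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of a k x k convolution mapping c_in to c_out channels (no bias)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of a k x k depthwise conv followed by a 1 x 1 pointwise conv."""
    depthwise = k * k * c_in  # one k x k filter per input channel
    pointwise = c_in * c_out  # 1 x 1 conv that mixes channels
    return depthwise + pointwise


if __name__ == "__main__":
    for k in (3, 5):  # illustrative kernel sizes; channel width 256 is assumed
        std = standard_conv_params(256, 256, k)
        sep = depthwise_separable_params(256, 256, k)
        print(k, std, sep, round(std / sep, 1))
```

For 256 channels and a 3 × 3 kernel, the separable version needs 67,840 weights instead of 589,824 (roughly 8.7 times fewer), and the gap widens for larger kernels because the depthwise term grows only with the number of input channels.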

3. Proposed Methods

We propose a Vision Transformer for Rat Social Interaction (ViT-RSI) model to identify rat behaviors. This model is based on the Global Context Vision Transformer (GC-ViT) [17]. The GC-ViT model contains a sequence of blocks with four main types of layers: each block consists of a local Multi-head Self-Attention (MSA) layer followed by a Multi-Layer Perceptron (MLP) and a global MSA layer followed by another MLP. We adapt the GC-ViT model by replacing the MLP that follows the local MSA with a Depthwise Separable Convolution-based Mixed-Scale Feedforward Network (DSC-MSFN) [38], to enhance feature extraction through the cross-resolution property of the DSC-MSFN. MLPs process each image token independently and therefore do not capture the spatial relationships between neighboring regions of the image [39]. In contrast, the DSC-MSFN enables the network to learn spatial features at multiple scales, allowing the model to recognize complex behavioral patterns that require both the local and global context of an image [40]. We believe this is useful for recognizing social rat behaviors, where the model must capture the local features of each rat as well as global image-level features when the rats appear in different regions of the image.
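The contrast between a token-wise MLP and a convolutional mixed-scale feedforward network can be illustrated with a plain-Python sketch. The exact DSC-MSFN layout is defined in [38] and may differ from this one: here we assume a 1 × 1 expansion, two parallel depthwise branches with 3 × 3 and 5 × 5 kernels whose outputs are summed and passed through a ReLU, and a 1 × 1 projection back to the input width, and we replace learned kernels with fixed averaging filters. All function names and weights are hypothetical.

```python
from typing import List

FeatureMap = List[List[List[float]]]  # indexed as [channel][row][col]
Kernel = List[List[float]]


def pointwise(x: FeatureMap, weights: List[List[float]]) -> FeatureMap:
    """1 x 1 convolution: out[o] = sum_c weights[o][c] * x[c] at every pixel."""
    height, width = len(x[0]), len(x[0][0])
    return [
        [[sum(w_o[c] * x[c][i][j] for c in range(len(x))) for j in range(width)]
         for i in range(height)]
        for w_o in weights
    ]


def depthwise(x: FeatureMap, kernels: List[Kernel]) -> FeatureMap:
    """Depthwise k x k convolution (cross-correlation) with zero 'same' padding.

    Each channel is filtered by its own kernel; channels are not mixed.
    """
    out = []
    for ch, ker in zip(x, kernels):
        k, height, width = len(ker), len(ch), len(ch[0])
        r = k // 2
        out.append([
            [sum(ker[a][b] * ch[i + a - r][j + b - r]
                 for a in range(k) for b in range(k)
                 if 0 <= i + a - r < height and 0 <= j + b - r < width)
             for j in range(width)]
            for i in range(height)
        ])
    return out


def averaging_kernels(channels: int, k: int) -> List[Kernel]:
    """Hypothetical fixed k x k averaging kernels standing in for learned ones."""
    return [[[1.0 / (k * k)] * k for _ in range(k)] for _ in range(channels)]


def mixed_scale_ffn(x: FeatureMap, w_in: List[List[float]],
                    w_out: List[List[float]]) -> FeatureMap:
    """Assumed layout: expand, parallel 3x3 / 5x5 depthwise, sum + ReLU, project."""
    hidden = pointwise(x, w_in)  # expand channels
    fine = depthwise(hidden, averaging_kernels(len(hidden), 3))  # small receptive field
    coarse = depthwise(hidden, averaging_kernels(len(hidden), 5))  # larger receptive field
    mixed = [
        [[max(0.0, p + q) for p, q in zip(row_f, row_c)]
         for row_f, row_c in zip(ch_f, ch_c)]
        for ch_f, ch_c in zip(fine, coarse)
    ]
    return pointwise(mixed, w_out)  # project back to the input width


if __name__ == "__main__":
    # A single active pixel in a 7 x 7, one-channel feature map.
    x = [[[1.0 if (i, j) == (3, 3) else 0.0 for j in range(7)] for i in range(7)]]
    w_in, w_out = [[1.0], [1.0]], [[0.5, 0.5]]  # hypothetical 1 -> 2 -> 1 channels
    y = mixed_scale_ffn(x, w_in, w_out)
    mlp = pointwise(pointwise(x, w_in), w_out)  # token-wise linear stand-in for an MLP
    print(y[0][2][3] > 0, mlp[0][2][3] == 0.0)  # True True
```

After the mixed-scale block, the single active pixel spreads to its 3 × 3 and 5 × 5 neighborhoods, while the token-wise stand-in for the MLP leaves neighboring positions untouched; this neighborhood interaction at two scales is what the MLP lacks.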

The architecture is illustrated in Figure 1. The model takes an input image $x \in \mathbb{R}^{H \times W \times 3}$, from which overlapping patches are obtained using 3 × 3 convolutions with a stride of 2 and same padding. These patches are mapped into a C-dimensional embedding space using another 3 × 3 convolution with the same stride and padding. The proposed model consists of four consecutive blocks, each comprising a local MSA, a DSC-MSFN, a global MSA, and an MLP. The output of each block's downsampling module is also passed to the global token generation module and to the next block.
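The spatial bookkeeping of the patch-embedding stem can be checked with a few lines of Python. We assume a padding of 1 pixel for each 3 × 3, stride-2 convolution (so each layer maps n pixels to ⌈n/2⌉), an illustrative 224 × 224 input and embedding width C = 64, and, following GC-ViT's hierarchical design, that each downsampling halves the spatial size with no downsampling after the last block. None of these values are reported in this section, and the function names are hypothetical.

```python
from typing import List, Tuple


def conv_out(size: int, kernel: int = 3, stride: int = 2, padding: int = 1) -> int:
    """Output length along one spatial axis of a strided convolution."""
    return (size + 2 * padding - kernel) // stride + 1


def stem_shape(height: int, width: int, embed_dim: int) -> Tuple[int, int, int]:
    """Two 3 x 3, stride-2 convolutions: H x W x 3 -> about H/4 x W/4 x C."""
    for _ in range(2):
        height, width = conv_out(height), conv_out(width)
    return height, width, embed_dim


def stage_resolutions(height: int, width: int,
                      num_stages: int = 4) -> List[Tuple[int, int]]:
    """Assumed: each downsampling halves the size; none after the last stage."""
    sizes = []
    for stage in range(num_stages):
        sizes.append((height, width))
        if stage < num_stages - 1:
            height, width = conv_out(height), conv_out(width)
    return sizes


if __name__ == "__main__":
    h, w, c = stem_shape(224, 224, embed_dim=64)  # input size and C are illustrative
    print((h, w, c))  # (56, 56, 64)
    print(stage_resolutions(h, w))  # [(56, 56), (28, 28), (14, 14), (7, 7)]
```

The same formula also handles odd sizes: a 225-pixel side becomes 113 after one stride-2 layer, which is why "same" padding is described as rounding up.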

Each stage of the architecture combines local self-attention, which captures fine-grained features, with global self-attention based on global query tokens. The DSC-MSFN replaces the standard MLP after the local self-attention to extract spatial features at multiple scales, and downsampling modules reduce the spatial resolution between stages while preserving the necessary information. A final global pooling layer aggregates the features for classification.
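The final classification step can be sketched as global average pooling followed by a linear layer over the five behaviors studied here. The softmax output, the weights, and the function names are assumptions for illustration; the text does not specify the classification head, and because behaviors can overlap, an independent sigmoid per behavior would be a plausible alternative.

```python
import math
from typing import Dict, List

# The five RatSI behaviors studied in this work.
BEHAVIORS = ["Approaching", "Following", "Social Nose Contact", "Moving Away", "Solitary"]


def global_average_pool(fmap: List[List[List[float]]]) -> List[float]:
    """Average each channel over all spatial positions: [C][H][W] -> [C]."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in fmap]


def classify(fmap: List[List[List[float]]], weights: List[List[float]],
             bias: List[float]) -> Dict[str, float]:
    """Pool, apply a linear layer, and return softmax probabilities per behavior."""
    pooled = global_average_pool(fmap)
    logits = [sum(w * p for w, p in zip(w_row, pooled)) + b
              for w_row, b in zip(weights, bias)]
    peak = max(logits)  # subtract the max logit for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return {name: e / total for name, e in zip(BEHAVIORS, exps)}


if __name__ == "__main__":
    fmap = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, 0.0], [0.0, 8.0]]]  # 2 channels, 2 x 2
    weights = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]  # hypothetical
    probs = classify(fmap, weights, bias=[0.0] * 5)
    print(global_average_pool(fmap))  # [2.5, 2.0]
    print(max(probs, key=probs.get))  # Approaching
```

Pooling removes the spatial dimensions entirely, so the head sees one vector per frame regardless of where the rats appear in the image.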

6. Conclusions

In this paper, we proposed the Vision Transformer for Rat Social Interactions (ViT-RSI) method, which consists of four blocks, each with four layers in the following order: local MSA, DSC-MSFN, global MSA, and MLP. The method was evaluated on the RatSI dataset, from which five behaviors were selected for the experiments: “Approaching”, “Social Nose Contact”, “Following”, “Moving Away”, and “Solitary”. Experimental results show that the ViT-RSI model can accurately predict most of the behaviors considered. However, overlapping behaviors (e.g., frames in which the rats may exhibit both the “Solitary” and “Social Nose Contact” behaviors) may prevent the model from performing well on some of the less represented behaviors. ViT-RSI outperformed the prior model tested on this dataset [41] for four out of five behaviors and was also consistently better than the state-of-the-art Swin Transformer. Furthermore, whereas the smallest Swin Transformer variant has 28.2 million parameters, the largest and best-performing ViT-RSI variant uses only 11.7 million parameters. This indicates that our model not only performs well but is also lightweight, computationally inexpensive, and easy to deploy.