Abstract

Data preprocessing and feature engineering play key roles in data mining initiatives, as they have a significant impact on the accuracy, reproducibility, and interpretability of analytical results. This review presents an analysis of state-of-the-art techniques and tools for preparing and transforming input data for mining tasks in diverse application scenarios. Basic preprocessing techniques are discussed, including data cleaning, normalisation, and encoding, as well as more sophisticated approaches to feature construction, selection, and dimensionality reduction. This work considers manual and automated methods, highlighting their integration in reproducible, large-scale pipelines by leveraging modern libraries. We also discuss methods for assessing the effects of preprocessing on precision, stability, and bias–variance trade-offs for models, as well as pipeline integrity monitoring when operating environments vary. We focus on emerging issues regarding scalability, fairness, and interpretability, as well as future directions involving adaptive preprocessing and automation guided by ethically sound design philosophies. This work aims to benefit both professionals and researchers by shedding light on best practices, while acknowledging existing research questions and innovation opportunities.

1. Introduction

Preprocessing and feature engineering are essential data mining (DM) building blocks that convert varied, raw inputs into interpretable and standardised forms. The data involved in real-life applications often pose challenges such as noise, sparsity, inconsistency, imbalance, and variable distributions. Datasets are also constrained by practical limits of scale and by data management and replication issues, all of which must be addressed to ensure repeatable and verifiable outcomes. Preprocessing should therefore be treated as an essential component of an end-to-end data pipeline, requiring systematic methods that span cleansing, transformation, standardised encoding and normalisation, feature generation and selection, and dimensionality reduction (DR). These steps are necessary to meet analytical requirements related to accuracy, efficiency, robustness, and fairness.

This paper aims to guide practitioners in building pipelines to withstand data leakage, ensure verifiability, and maintain reliability with changes to data, models, and requirements by integrating methodological knowledge with comprehensive details on selected tools and practical design frameworks.

1.1. Role of Preprocessing in DM

Data preprocessing is a foundational step in DM [1]. It can be viewed as a critical intermediary that bridges unpolished, often disorganised or noisy datasets and the sophisticated algorithms that distil knowledge or create predictive models. Without preprocessing, even very advanced models can perform poorly because of incomplete records, outliers, missing values, or irrelevant attributes [2,3]. Practically, preprocessing enhances input data quality and, thus, the accuracy of results. In addition, it ensures that models run on datasets that are complete, systematically structured, and free of distortions that could otherwise contaminate results or reduce interpretability.

Preprocessing involves a set of operations, including data cleaning, transformation, normalisation, feature encoding, and DR [1,4]. These procedures affect the effectiveness of DM methods, especially methods that are sensitive to differences in input distributions, feature scales, or sparsity levels. For instance, unnormalised numerical features can bias distance-based clustering procedures, while poorly encoded categorical features can inhibit the development of strong models.

Additionally, preprocessing contributes to model fairness and effectiveness by reducing noise and by dealing with intrinsic structural data imbalances. For example, the authors of [5] discuss how various ML pipeline stages can introduce or mitigate bias. Preprocessing also facilitates automation within data pipelines, further contributing to reproducibility, scalability, and transferability across application scenarios. As core sectors, including finance, healthcare, and governance, have come to depend increasingly on DM, effective preprocessing has been transformed from a subject of technical interest into an essential methodological framework.

1.2. Motivating Examples and Performance Impact

For a binary classification task like forecasting credit defaults, failure to compensate for missing income data, for example, can lead to misclassification of high-risk individuals. Deploying strong imputation methods—like k-nearest neighbours or regression-based imputation—can have a significant impact on both precision and recall. Equally, when analysing medical diagnoses based on patient histories, variations in symptom coding or measurement scales can have negative effects on the efficiency of machine learning (ML) techniques. Standardisation and normalisation procedures performed on data from different sources not only produce gains in mean precision but also increase interpretability [6,7].

In healthcare, leakage-safe imputation and encoding must preserve rare but clinically salient events; robust scaling is preferable to minimise the effect of extreme laboratory values, and subgroup analyses should accompany any missing-data strategy to avoid disparate impact [2]. In finance, time-aware validation (blocked or forward-chaining) is mandatory; encoders with target information must be fitted out-of-fold, and drift monitoring should track segment-level shifts (e.g., by product or region) to trigger recalibration [1].

Even basic preprocessing can have a significant impact. For example, feature scaling is an essential prerequisite for optimisation-based approaches like support vector machines and logistic regression [6,8]. Insufficient scaling can hinder or outright prevent convergence, leaving a model stuck in suboptimal solutions. For time-series mining, feature misalignment can prevent critical patterns from being identified. Feature engineering, another area of preprocessing, often provides larger performance gains than changes to a model’s hyperparameters. In many cases, top-performing solutions rely upon carefully crafted feature transformations, instead of overly complex models [9].

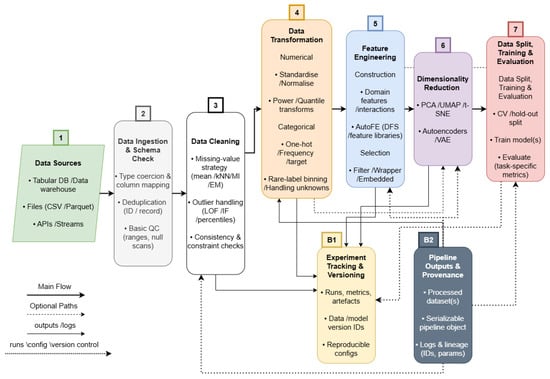

Additionally, automated preprocessing packages (implemented via scikit-learn–style pipelines or low-code frameworks such as PyCaret) show that simple models given uniform preprocessing can outperform state-of-the-art models trained on unprocessed data [8,10]. Automated ML (AutoML) benchmarks report top-tier performance for pipeline-driven tools across binary, multiclass, and multilabel tasks [11,12]. Ref. [13] also shows through domain-specific experiments that preprocessing choices can outweigh hyperparameter tuning in their effect on downstream accuracy, which makes preprocessing both a scientific and an engineering priority in DM. An overview of the end-to-end preprocessing pipeline referenced throughout this review is shown in Figure 1, followed by Table 1, which summarises typical risks and the required safeguards across domains.

Figure 1.

End-to-end data preprocessing pipeline. Raw data are ingested, validated, and progressively refined through Cleaning, Transformation, Feature Engineering, and optional DR before Data Split, Training, and Evaluation. Artefacts (processed datasets, serialised pipelines, logs) and experiment tracking/versioning are captured alongside each stage to ensure reproducibility and auditability.

Table 1.

Cross-domain preprocessing requirements and safeguards.

Recent evaluations underline that evaluation protocol and leakage control determine the credibility of reported gains; widespread, often subtle leakage has been documented across domains [14]. For temporally ordered data, blocked or forward-chaining validation is recommended to avoid look-ahead bias and information bleed [15].
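As a minimal sketch of forward-chaining validation, the snippet below uses scikit-learn's `TimeSeriesSplit` on twelve time-ordered observations. The toy data and the choice of three splits are assumptions made for illustration and are not settings taken from the cited studies.

```python
import numpy as np
from sklearn.model_selection import TimeSeriesSplit

# Twelve time-ordered observations (row index = time step); values are illustrative.
X = np.arange(12).reshape(-1, 1)

# Forward-chaining: each fold trains on the past and validates on the next block.
splitter = TimeSeriesSplit(n_splits=3)
folds = [(train_idx, test_idx) for train_idx, test_idx in splitter.split(X)]

for train_idx, test_idx in folds:
    # Every training index precedes every validation index, so there is no look-ahead.
    print(f"train={train_idx.tolist()} validate={test_idx.tolist()}")
```

Any preprocessing step (imputer, scaler, encoder) would then be fitted on the training indices of each fold only.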

1.3. Objectives of This Review

This review has four objectives, corresponding with the need for transparency, critical integration, and practically useful guidance identified in the literature:

1. Develop a unifying framework: Develop a context-specific taxonomy that integrates preprocessing and feature engineering as systematic phases (cleaning, transformation, construction, selection, reduction), while incorporating decision variables such as dataset dimensions, interpretability, and computational resources.

2. Evaluate techniques critically: Make comparative assessments of imputation, encoding, feature selection, and DR techniques, including linked risks, trade-offs, and recorded failure instances.

3. Describe practical heuristics: Specify requirements as numerical values (e.g., sample sizes for autoencoders and variance filter thresholds) and shed light on common pipeline design choices (e.g., fitting scalers on training data only) to enhance reproducibility and tackle data leakage.

4. Codify best practices: Establish definitive design patterns and procedural guidelines for direct implementation by practitioners, covering topics such as leakage management, cross-validation (CV), fairness auditing, and monitoring in realistic deployment scenarios.

These objectives distinguish this work from preceding surveys, as it moves from a description to a systematic, critical, and prescriptive contribution.

1.4. Scope and Structure of the Review

This review provides a thorough analysis of data preprocessing and feature engineering methodologies, focusing mainly on structured data. The aim is to cover basic and advanced techniques, both automated and manual, and to assess their impact on tasks such as classification, clustering, and regression. Particular consideration is given to techniques that support reproducibility, interpretability, and streamlined workflows, qualities that are becoming ever more critical in real use cases and regulatory compliance [16].

First, we discuss data cleaning and transformation concepts, such as handling missing values and outliers, as well as scaling and encoding. Then, we delve into an in-depth analysis of feature engineering and selection methods, including filter, wrapper, and embedded methods. We further discuss DR, handling standard linear and nonlinear embedding methods. We also discuss different automation libraries and tools, which allow for preprocessing pipelines to be created easily. In subsequent sections, we discuss selection procedures and how they impact model assessment, and we explain how best practices help to increase system robustness, scalability, and maintainability regardless of size and complexity. Finally, we summarise by highlighting challenges and future research directions, mainly automation, interpretability, and scalability.

1.5. Novelty and Contribution

This work advances the state of the art by going beyond descriptive surveys and making three original contributions:

1. We propose a unifying framework (Section 2), which systematically organises data preprocessing and feature engineering into five pipeline stages (cleaning, transformation, construction, selection, reduction), while explicitly linking them to decision criteria (dataset size, interpretability, domain constraints, computational resources).

2. We provide comparative tables and method-level evaluations that highlight trade-offs, risks, and common failure cases across imputation, encoding, selection, and DR.

3. We highlight best engineering practices, including serialisation, experiment tracking, and monitoring for drift and health, such that methodologies are always reliably transferable from the realm of notebooks into production environments.

These contributions position this review not just as a consolidation of methods existing in the literature but as an integrative framework and prescriptive guide for researchers.

2. Conceptual Framework: A Context-Aware Taxonomy of Data Preprocessing and Feature Engineering

This section presents a unifying conceptual framework. It organises data preprocessing and feature engineering into a context-aware taxonomy that emphasises two dimensions: the stage of the preprocessing pipeline, ranging from cleaning to DR, and the approach modality, which distinguishes manual, expert-driven procedures from automated, algorithm-driven solutions. It also incorporates decision criteria, such as dataset size, interpretability requirements, domain constraints, and computational resources.

The first stage of the taxonomy is data cleaning, which encompasses the detection and correction of missing values and outliers. Manual strategies, such as rule-based deletion or domain-specific imputations, remain common in practice [17]. Automated techniques, however, have grown in prominence: k-nearest neighbours imputation exploits local similarity structures [18], and regression imputation leverages inter-feature relationships [7]. Expectation–maximisation and multiple imputation provide statistically principled treatment under the Missing At Random assumption [18], and deep learning models, including autoencoder-based imputers, capture nonlinear dependencies but require substantial training data and careful tuning [18,19,20].

Outlier handling follows a similar duality: domain experts may flag suspicious points manually, while algorithmic approaches include statistical thresholds, clustering-based detectors, and scalable methods, such as Isolation Forest [21]. Crucially, as Refs. [22,23,24] emphasise, decisions about whether to retain or remove outliers must be informed by the domain context, since extreme values may represent valid and meaningful cases (e.g., fraud or rare diseases).

The second stage is data transformation, in which variables are readied for algorithmic application through scaling and encoding. Based on exploratory analysis of skewed distributions, one might apply manual (e.g., logarithmic or square-root) transformations, while automated scaling methods apply z-score standardisation or min-max scaling across the entire feature space. These steps are key to stable performance in algorithms that depend on distances or gradients [25,26].

Categorical variables introduce a trade-off: one-hot encoding maintains the integrity of nominal characteristics while increasing the number of dimensions [27]. Ordinal encoding is justified only if the categories have a natural order. Automated encoders, such as target encoding, frequency encoding, or entity embeddings, are capable of identifying predictive signals or relational patterns but can induce leakage and bias or reduce interpretability [27]. The selection of an encoding technique therefore depends directly on the modelling scenario and on the interpretability needs of the application.
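The leakage risk of target encoding can be illustrated with a small NumPy sketch. The helper `out_of_fold_target_encode`, the toy categories, and the target values are hypothetical and chosen only for illustration; the point is that each row is encoded with statistics estimated on the other folds, so its own target never enters its encoding.

```python
import numpy as np

def out_of_fold_target_encode(categories, target, n_splits=5, seed=0):
    """Encode each row with category means estimated on the other folds only."""
    categories = np.asarray(categories)
    target = np.asarray(target, dtype=float)
    encoded = np.empty(len(target), dtype=float)

    # Shuffle row indices once, then cut them into disjoint folds.
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(target)), n_splits)

    for apply_idx in folds:
        fit_mask = np.ones(len(target), dtype=bool)
        fit_mask[apply_idx] = False
        prior = target[fit_mask].mean()  # fallback for categories unseen in the fit part
        means = {
            c: target[fit_mask & (categories == c)].mean()
            for c in np.unique(categories[fit_mask])
        }
        encoded[apply_idx] = [means.get(c, prior) for c in categories[apply_idx]]
    return encoded

# Illustrative data: two common categories and one category that occurs once.
cats = np.array(["a"] * 10 + ["b"] * 9 + ["rare"])
y = np.array([0.0, 1.0] * 9 + [0.0, 100.0])

# One-hot encoding: one column per category, so dimensionality grows with cardinality.
one_hot = (cats[:, None] == np.unique(cats)[None, :]).astype(int)

# Naive target encoding uses all rows, so the rare row is encoded with its own target.
naive = np.array([y[cats == c].mean() for c in cats])
oof = out_of_fold_target_encode(cats, y)

print("one-hot shape:", one_hot.shape)
print("rare row, naive vs out-of-fold:", naive[-1], round(oof[-1], 3))
```

In the naive variant the rare row simply memorises its own target, whereas the out-of-fold variant falls back to a prior computed without that row.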

The third stage is feature construction, in which raw attributes are supplemented or transformed into variables with greater information content. Manual feature engineering, based on domain understanding, is still potent: scientists can construct domain-specific scores, ratios, or indices that embody theoretical constructs [28]. Automated construction is increasingly tractable using tools like deep feature synthesis [28] and AutoML systems [9,29], which algorithmically generate large sets of candidate features. The literature demonstrates that hybrid systems, blending automated generation with expert knowledge, frequently yield the most robust outcomes, as they combine the advantages of machine-driven discovery and human interpretability.

The fourth stage is feature selection, where a subset of variables is retained for modelling. Filter techniques rank features according to statistical criteria and are fast but might miss interactions [30]. Wrapper techniques search iteratively over subsets with a learning algorithm and usually achieve higher precision, at the expense of computation that can grow exponentially with the number of features [30]. Embedded techniques build selection into model training, for instance using regularisation penalties or a tree-based measure of variable importance, and find a balance between performance and speed [31]. Each class comes with trade-offs, and the selection relies not only on available computational power but also on what kind of stability and interpretability is required.
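The three families can be sketched with scikit-learn as follows. The synthetic dataset, the choice of three retained features, and the specific estimators are assumptions for illustration; recursive feature elimination stands in for wrapper methods as a greedy search rather than an exhaustive one.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import RFE, SelectFromModel, SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression

# Synthetic data (assumed for illustration): 3 informative features out of 10.
X, y = make_classification(
    n_samples=300, n_features=10, n_informative=3, n_redundant=0, random_state=0
)

# Filter: rank features by a univariate ANOVA F-statistic; fast, ignores interactions.
filter_mask = SelectKBest(score_func=f_classif, k=3).fit(X, y).get_support()

# Wrapper: recursive feature elimination refits the model while greedily dropping features.
wrapper = RFE(LogisticRegression(max_iter=1000), n_features_to_select=3)
wrapper_mask = wrapper.fit(X, y).get_support()

# Embedded: keep features whose tree-based importance is at least the mean importance.
forest = RandomForestClassifier(n_estimators=100, random_state=0)
embedded_mask = SelectFromModel(forest, threshold="mean").fit(X, y).get_support()

print("filter:  ", filter_mask.nonzero()[0])
print("wrapper: ", wrapper_mask.nonzero()[0])
print("embedded:", embedded_mask.nonzero()[0])
```

In a real study these selectors would sit inside a cross-validated pipeline so that selection is fitted on training folds only.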

The last stage, DR, is used to compress feature space, thus counteracting the curse of dimensionality and improving computational speed. Principal component analysis (PCA) is established as the default linear method [30], while nonlinear manifold learning methods, such as t-Distributed Stochastic Neighbour Embedding (t-SNE) and Uniform Manifold Approximation and Projection (UMAP), and deep autoencoders [32,33] are capable of preserving more complex structures. However, as suggested in the literature, the interpretability of the applied transformations may vary significantly: components resulting from PCA may sometimes correspond to interpretable constructs, while those from neural embeddings usually remain opaque. Because of that, the decision to apply DR needs a careful consideration of the benefits of preserving variance and computational speed against potential loss of semantic interpretability.
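The variance-versus-compression trade-off for PCA can be made concrete with a short sketch. The latent-factor data and the 95% variance target are assumptions chosen for illustration, not recommendations from the reviewed literature.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
# Assumed synthetic data: 10 observed features driven by 3 latent factors plus noise.
latent = rng.normal(size=(500, 3))
loadings = rng.normal(size=(3, 10))
X = latent @ loadings + 0.1 * rng.normal(size=(500, 10))

# Standardise first so that no feature dominates purely because of its units.
X_std = StandardScaler().fit_transform(X)

# A float n_components keeps the fewest components that reach that variance share.
pca = PCA(n_components=0.95, svd_solver="full").fit(X_std)
X_reduced = pca.transform(X_std)

print("components kept:", pca.n_components_)
print("cumulative variance:", pca.explained_variance_ratio_.cumsum().round(3))
```

The rows of `pca.components_` hold the loadings, which is where any interpretation of a component in terms of the original features has to start.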

Across all five stages, the taxonomy incorporates decision criteria that determine whether manual or automated methods are preferable. Small or sparse datasets often favour simple or manual techniques to avoid overfitting [7,15], while large, high-dimensional datasets typically require automated solutions for scalability [11]. Applications in healthcare, finance, or other regulated domains prioritise interpretability and fairness, which biases choices toward transparent preprocessing [27,34]. Domain constraints, such as regulatory rules or known causal structures, may mandate or forbid certain transformations [23,34]. Finally, computational budgets influence feasibility: wrappers and deep autoencoders deliver strong performance but are resource-intensive [31,33].

The proposed framework is shown in Figure 2. It combines the five preprocessing stages into a single pipeline and connects each of them to the decision criteria.

Figure 2.

Context-aware taxonomy of data preprocessing and feature engineering. The framework structures the pipeline into five stages (cleaning, transformation, construction, selection, reduction) while explicitly incorporating decision criteria (data size, interpretability, domain constraints, resources) that guide whether manual or automated approaches are appropriate.

3. Data Cleaning and Transformation

This section addresses Stages 1 and 2 illustrated in Figure 2, which represent the passage from raw, noisy inputs to representations amenable to modelling. Stage 1, or cleaning, restores data validity in the management of missing values and outlier detection and correction, while Stage 2, or transformation, brings feature spaces into alignment using normalisation or scaling and categorical coding. Together, these steps reduce bias, stabilise learning algorithms, and preserve a strong signal for downstream modelling.

3.1. Handling Missing Values

Missing values are frequent in real-world datasets, coming from various sources, such as sensor failures, human entry errors, integration mismatches, or intentional omission [1]. Missing data can significantly bias model outputs and prevent the application of some algorithms that require complete input vectors [7]. For this reason, missing value handling is often the first necessary step in a preprocessing pipeline.

The simplest approach is deletion, either columnwise (removing attributes) or listwise (removing rows), but it is appropriate only if missing data are minimal and randomly dispersed. Unbiasedness holds only under Missing Completely At Random (MCAR). In more realistic Missing At Random (MAR) or Missing Not At Random (MNAR) settings, especially where missingness is systematic or extensive, deletion loses information, reduces power, and introduces selection bias [7]. As a substitute, imputation techniques are employed to fill in missing values in a statistically or contextually informed manner. Simple imputation techniques, such as mean, median, or mode imputation, are favoured for their simplicity but assume feature symmetry and may distort relationships or distributions. Replacing missing entries with a single summary value shrinks variance and attenuates correlations, inflating apparent model stability [7].
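The variance shrinkage and correlation attenuation caused by single-value imputation can be checked numerically. The following sketch uses synthetic data with an assumed 40% MCAR missingness rate; the numbers are illustrative only.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 1000
# Assumed synthetic data: y depends linearly on x, so the two are correlated.
x = rng.normal(size=n)
y = 0.8 * x + 0.6 * rng.normal(size=n)

# Remove roughly 40% of x completely at random (MCAR).
missing = rng.random(n) < 0.4
x_observed = x[~missing]

# Mean imputation: every missing entry receives the observed mean.
x_imputed = x.copy()
x_imputed[missing] = x_observed.mean()

var_observed = x_observed.var(ddof=1)
var_imputed = x_imputed.var(ddof=1)
corr_observed = np.corrcoef(x_observed, y[~missing])[0, 1]
corr_imputed = np.corrcoef(x_imputed, y)[0, 1]

print(f"variance: observed={var_observed:.3f}, after imputation={var_imputed:.3f}")
print(f"correlation with y: observed={corr_observed:.3f}, after imputation={corr_imputed:.3f}")
```

Because the imputed rows contribute no deviation from the mean, the sum of squared deviations is unchanged while the sample size grows, which is why the variance must fall.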

Beyond deletion, imputation strategies span summary-based (mean/median/mode), instance-based (k-NN) [17,35], regression-based, and model-based approaches (EM, multiple imputation) [36], as well as recent deep-learning variants (autoencoder-based) [37]. Deep models tend to work best in large-sample regimes with careful regularisation, whereas in small-n or sparse settings they may underperform simpler alternatives. The performance of k-NN imputation also hinges on the distance metric and feature scaling, since local neighbourhoods become noisy in high dimensions [35]. Deterministic regression fills underestimate uncertainty and can overfit training structure, hence the preference for multiple imputation when feasible [7]. Even for principled estimators, convergence problems and model misspecification can propagate bias [36]. The choice of imputation method should be guided not only by the proportion of missingness but also by its mechanism (MCAR/MAR/MNAR) and the assumptions of the downstream modelling task, especially in clinical contexts [17].
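A hedged sketch of how two of these imputers could be compared without leakage is given below, using scikit-learn pipelines so that each imputer is refitted inside every CV fold. The synthetic data, the 15% MCAR rate, and the logistic-regression downstream model are assumptions for illustration.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.impute import KNNImputer, SimpleImputer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Assumed synthetic data with about 15% of entries removed completely at random.
X, y = make_classification(n_samples=400, n_features=8, random_state=0)
rng = np.random.default_rng(0)
X[rng.random(X.shape) < 0.15] = np.nan

candidates = {
    "median": make_pipeline(
        SimpleImputer(strategy="median"), StandardScaler(),
        LogisticRegression(max_iter=1000),
    ),
    # Scaling before k-NN imputation keeps any single feature from dominating distances.
    "knn": make_pipeline(
        StandardScaler(), KNNImputer(n_neighbors=5),
        LogisticRegression(max_iter=1000),
    ),
}

# Each pipeline is refitted inside every fold, so imputation statistics
# never see the held-out fold.
results = {name: cross_val_score(pipe, X, y, cv=5) for name, pipe in candidates.items()}
for name, scores in results.items():
    print(f"{name}: accuracy {scores.mean():.3f} ± {scores.std():.3f}")
```

Which imputer scores higher depends on the data; the sketch is meant to show the comparison protocol rather than a ranking.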

Evaluation can be performed via synthetic experiments, CV, and downstream model comparisons. To avoid redundancy, we centralise method-level specifics in Table 2 and keep the narrative focused on decision drivers (missingness mechanism, data regime, and target task). Table 2 provides a comparative overview of common missing-value handling methods, highlighting their key assumptions, advantages, and limitations to guide appropriate selection in real-world scenarios.

Table 2.

Overview of common missing-value handling techniques with advantages, limitations, and indicative data regimes.

Comparative studies confirm that no single imputer dominates across mechanisms or regimes and that deep architectures typically require larger samples or strong inductive structure to be competitive [18,19,20]. The indicative data regimes in Table 2 operationalise that guidance, although precise cut-offs remain dataset-dependent.

3.2. Outlier Detection and Correction

Outliers are observations that differ substantially from the majority of observations and may result from errors in data entry, variability due to measurement, or rare but real events [24,38]. Outliers can cause bias in statistical averages and significantly reduce the effectiveness of algorithms that are sensitive to them, particularly those based on distance measures or parametric modelling approaches. For instance, the presence of a single outlier can skew the computation of the mean, inflate the variance, and mislead algorithms, such as k-means clustering or linear regression.

The identification of outliers involves both univariate and multivariate approaches. In the univariate case, outliers can be identified using z-scores, interquartile ranges (IQRs), boxplots, or similar methods. In the multivariate case, identification is more complex and can be achieved by using Mahalanobis distance; clustering-based methods, such as Density-Based Spatial Clustering of Applications with Noise (DBSCAN); or density-based models, such as Local Outlier Factor (LOF), which flag points whose local reachability density is much lower than that of their neighbours [24].
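The difference between univariate and multivariate screens can be sketched with NumPy alone. The data below are synthetic; the thresholds (|z| > 3, 1.5 × IQR fences, and a 0.999 chi-square quantile for the Mahalanobis distance) are conventional choices assumed for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
# Assumed synthetic data: two strongly correlated features (rho = 0.95).
cov = np.array([[1.0, 0.95], [0.95, 1.0]])
X = rng.multivariate_normal(mean=[0.0, 0.0], cov=cov, size=500)
# Planted point: ordinary on each axis, but it contradicts the correlation.
X = np.vstack([X, [1.5, -1.5]])

# Univariate screens, applied feature by feature.
z = np.abs((X - X.mean(axis=0)) / X.std(axis=0, ddof=1))
z_flag = (z > 3.0).any(axis=1)

q1, q3 = np.percentile(X, [25, 75], axis=0)
iqr = q3 - q1
iqr_flag = ((X < q1 - 1.5 * iqr) | (X > q3 + 1.5 * iqr)).any(axis=1)

# Multivariate screen: squared Mahalanobis distance to the sample mean.
diff = X - X.mean(axis=0)
inv_cov = np.linalg.inv(np.cov(X, rowvar=False))
d2 = np.einsum("ij,jk,ik->i", diff, inv_cov, diff)
# For 2 features, the chi-square 0.999 quantile has the closed form -2*ln(0.001).
maha_flag = d2 > -2.0 * np.log(1.0 - 0.999)

print("planted point flagged by z-score:", bool(z_flag[-1]))
print("planted point flagged by IQR:", bool(iqr_flag[-1]))
print("planted point flagged by Mahalanobis:", bool(maha_flag[-1]))
```

The planted point is unremarkable on each axis taken separately and only stands out once the joint structure is considered.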

Univariate screens are fast but ignore multivariate interactions and, therefore, miss context-dependent anomalies; multivariate detectors capture local structures but are sensitive to metric choice, feature scaling, and high-dimensional concentration effects [24,38]. For high-dimensional data, tree-based methods, such as Isolation Forest, scale well and do not rely on distance concentration [21], whereas autoencoder detectors exploit nonlinear manifolds via reconstruction error but require careful tuning and sufficient data. This diversity of assumptions is why method selection should be driven by data geometry and computational constraints rather than a single default. Other evaluations and frameworks reach similar conclusions for high-dimensional, streaming, and industrial settings, emphasising metric choice, dimensionality, and computational constraints [39,40,41].
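For reference, a minimal sketch of two of the detectors named above, using scikit-learn's `IsolationForest` and `LocalOutlierFactor`. The data, the 1% contamination rate, and the neighbourhood size are assumptions made for illustration and would need tuning on real data.

```python
import numpy as np
from sklearn.ensemble import IsolationForest
from sklearn.neighbors import LocalOutlierFactor

rng = np.random.default_rng(0)
# Assumed synthetic data: 200 inliers in 5 dimensions plus one planted anomaly.
X = np.vstack([rng.normal(size=(200, 5)), np.full((1, 5), 8.0)])

# Isolation Forest: anomalies tend to be isolated by fewer random splits.
iso_labels = IsolationForest(contamination=0.01, random_state=0).fit_predict(X)

# LOF: compares each point's local density with that of its neighbours.
lof_labels = LocalOutlierFactor(n_neighbors=20, contamination=0.01).fit_predict(X)

# Both detectors label outliers as -1 and inliers as 1.
print("Isolation Forest flags planted point:", iso_labels[-1] == -1)
print("LOF flags planted point:", lof_labels[-1] == -1)
```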

As this work is a review, we operationalise verification via a task-driven evaluation protocol and a standardised set of indicators rather than novel experiments. Section 9 complements this with cross-validated design, leakage checks, and stability/fairness reporting.

Evaluation Protocol for Outlier Handling

Because there is no domain-agnostic standard, we adopt a task-driven protocol: (i) diagnose influence (e.g., leverage, Cook’s distance) and distinguish data errors from rare-but-valid events [42]; (ii) compare the retain, transform (e.g., log/winsorise), and remove treatments using CV on task metrics (ROC-AUC, RMSE) and stability across folds; (iii) select the least complex treatment that improves performance without degrading calibration or fairness; (iv) document decisions and parameters for audit [24,38]. Table 3 lists indicators for evaluating outlier treatments.

Table 3.

Indicators for evaluating outlier treatments. We centralise evaluation metrics to avoid narrative repetition. Compare candidates on predictive performance (mean ± SD across folds), calibration (e.g., Brier score and modern recalibration guidance; [43]), robustness/influence diagnostics, and—where relevant—subgroup fairness ([5]).

Once outliers are identified, whether to correct or remove them should be guided by domain expertise and the analytic goals. For example, in fraud detection or disease diagnosis, outliers may point to important cases rather than noise. In these circumstances, it may be preferable to flag or rescale them rather than remove them. Applicable techniques include winsorisation (capping at particular percentile levels), data transformations (e.g., logarithmic), or encoding outlier status as a separate binary feature. Proper handling is important to preserve valuable variability, especially in datasets characterised by imbalance or rare events. Ideally, the outlier strategy should be tested against model performance or domain-specific quality metrics [23,45].
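These treatments can be sketched in a few lines of NumPy. The log-normal feature and the 1st/99th percentile caps are assumptions for illustration; appropriate percentile levels are dataset- and domain-dependent.

```python
import numpy as np

rng = np.random.default_rng(0)
# Assumed synthetic, right-skewed feature (e.g. a transaction amount).
amount = rng.lognormal(mean=3.0, sigma=1.0, size=1000)

# In practice, estimate the percentile bounds on training data only.
low, high = np.percentile(amount, [1, 99])

# Keep the information that a value was extreme as a separate binary feature.
is_extreme = ((amount < low) | (amount > high)).astype(int)

# Winsorise: cap values at the chosen percentiles instead of dropping rows.
amount_winsorised = np.clip(amount, low, high)

# Alternative treatment: a log transform compresses the right tail.
amount_log = np.log1p(amount)

print("rows flagged as extreme:", int(is_extreme.sum()))
print("max before/after winsorising:", amount.max().round(1), amount_winsorised.max().round(1))
```

No rows are removed by any of the three treatments, so rare-but-valid cases stay available to the model.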

- Testing content and reporting template.

We define the test explicitly to ensure reproducibility:

1. Data splitting. Use k-fold (or repeated k-fold) CV; detectors and downstream models are fit within folds and evaluated on held-out folds [44].

2. Candidates. Compare retain, transform (e.g., log/winsorise), and remove; record detector thresholds and treatment parameters.

3. Metrics (report mean ± SD across folds). Predictive: ROC-AUC or RMSE. Calibration: Brier score and expected calibration error (ECE) with B bins [2,43]. Influence/robustness: max leverage/Cook’s D; feature-wise IQR ratio (after/before). Fairness (if applicable): subgroup parity deltas [5].

4. Decision rule. Select the least-complex treatment that improves the task metric by at least one standard error without increasing ECE by more than 0.01 and without worsening subgroup parity.

5. Reporting. Provide fold-wise scores, chosen thresholds/parameters, and a one-line rationale.
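The two calibration metrics in the template can be computed as in the sketch below. It assumes binary labels, positive-class probabilities, and B equal-width bins, which is one common ECE variant among several; the function names are illustrative.

```python
import numpy as np

def brier_score(y_true, y_prob):
    """Mean squared difference between predicted probability and outcome."""
    y_true = np.asarray(y_true, dtype=float)
    y_prob = np.asarray(y_prob, dtype=float)
    return float(np.mean((y_prob - y_true) ** 2))

def expected_calibration_error(y_true, y_prob, n_bins=10):
    """ECE with equal-width bins: weighted mean of |observed rate - mean probability|."""
    y_true = np.asarray(y_true, dtype=float)
    y_prob = np.asarray(y_prob, dtype=float)
    # Map each probability to a bin index; p = 1.0 goes into the last bin.
    bin_idx = np.minimum((y_prob * n_bins).astype(int), n_bins - 1)
    ece = 0.0
    for b in range(n_bins):
        in_bin = bin_idx == b
        if in_bin.any():
            gap = abs(y_true[in_bin].mean() - y_prob[in_bin].mean())
            ece += in_bin.mean() * gap  # weight by the share of samples in the bin
    return float(ece)

# Toy check: confident predictions that are all on the correct side.
labels = [0, 0, 1, 1]
probs = [0.1, 0.1, 0.9, 0.9]
print(brier_score(labels, probs), expected_calibration_error(labels, probs))
```

Both functions would be applied to held-out fold predictions and then summarised as mean ± SD across folds.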

In practice, because there is no unified processing standard, we recommend reporting at least two treatment alternatives and selecting the one that improves cross-validated task metrics, without degrading calibration or stability, especially in imbalanced or rare-event settings. Documenting criteria and results makes the choice defensible and reproducible across datasets and releases. These choices correspond to Stage 1 (Cleaning) in our context-aware framework (Section 2), where detector selection and treatment are governed by data geometry, interpretability, and resource constraints.

- Unified decision rule (retain/transform/remove).

We codify a reproducible rule to replace ad-hoc judgment:

1. Retain (with flagging/robust loss) if influence diagnostics are low (e.g., leverage/Cook’s D below conventional cut-offs) and removal degrades calibration or subgroup parity (Table 3).

2. Transform (e.g., winsorise or log) if the transformed option improves the cross-validated task metric by at least one standard error over retain, with no material deterioration in calibration (e.g., ECE) or fairness (absolute parity delta non-increasing).

3. Remove only if removal improves the cross-validated task metric by at least one standard error over both retain and transform, while not worsening calibration or fairness (as above). Report the criteria and selected option.

This rule operationalises the indicators in Table 3 (predictive mean ± SD, calibration via Brier/ECE, influence, robustness, and subgroup metrics).
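One possible encoding of this rule as a function is sketched below. Several details are assumptions rather than part of the rule as stated: the metric is treated as higher-is-better (negate RMSE before use), the standard error is taken from the fold scores of the option being compared against, the ECE tolerance of 0.01 is borrowed from the testing template above, and the influence diagnostics are left out. The names `Candidate` and `choose_treatment` are hypothetical.

```python
from dataclasses import dataclass
from math import sqrt
from statistics import mean, stdev

@dataclass
class Candidate:
    fold_scores: list[float]  # higher is better (e.g. ROC-AUC); negate RMSE
    ece: float                # expected calibration error
    parity_delta: float       # absolute subgroup parity gap

def standard_error(scores):
    return stdev(scores) / sqrt(len(scores))

def acceptable(candidate, reference, baseline, ece_tolerance):
    """Candidate beats reference by >= 1 SE without hurting calibration or fairness."""
    gain = mean(candidate.fold_scores) - mean(reference.fold_scores)
    return (
        gain >= standard_error(reference.fold_scores)
        and candidate.ece - baseline.ece <= ece_tolerance
        and candidate.parity_delta <= baseline.parity_delta
    )

def choose_treatment(retain, transform, remove, ece_tolerance=0.01):
    transform_ok = acceptable(transform, retain, retain, ece_tolerance)
    # Removal must beat both retain and transform.
    remove_ok = acceptable(remove, retain, retain, ece_tolerance) and acceptable(
        remove, transform, retain, ece_tolerance
    )
    if remove_ok:
        return "remove"
    if transform_ok:
        return "transform"
    return "retain"

# Illustrative fold-wise results (made-up numbers).
retain = Candidate([0.80, 0.81, 0.79, 0.80, 0.80], ece=0.030, parity_delta=0.05)
transform = Candidate([0.82, 0.83, 0.81, 0.82, 0.82], ece=0.032, parity_delta=0.04)
remove_unfair = Candidate([0.83, 0.84, 0.82, 0.83, 0.83], ece=0.031, parity_delta=0.08)
remove_fair = Candidate([0.83, 0.84, 0.82, 0.83, 0.83], ece=0.031, parity_delta=0.04)

print(choose_treatment(retain, transform, remove_unfair))
print(choose_treatment(retain, transform, remove_fair))
```

In the first call, removal scores best but widens the subgroup parity gap, so the rule falls back to the transformed option.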

3.3. Normalisation and Scaling

Many ML algorithms are sensitive to the magnitude, distribution, and scale of numeric input features. Algorithms that depend on distance measures, such as k-NN and k-means clustering, or on gradient-based optimisation, such as SVMs and neural networks (NNs), are strongly affected by unevenly scaled features. Scaling and normalisation convert numeric features to a common range or distribution, thus improving the convergence of algorithms and the comparability of features [46].

The common methods used in data preprocessing are min-max normalisation, which scales values to a given range (most commonly [0, 1]), and z-score standardisation, which rescales each feature to a mean of 0 and unit variance. While these are very effective in many ML tasks, their effectiveness depends on the original data distribution. Where the data show skewness, log or Box-Cox transformations are used to stabilise variance and reduce the effect of extreme values. When outliers are present, robust scaling is also recommended: because it relies on the median and interquartile range, it greatly reduces the influence of extreme values.
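The three scalers can be written out directly from their definitions. The sketch below applies them to an assumed toy feature containing one extreme value, to show how differently each treats the remaining observations.

```python
import numpy as np

# Assumed toy feature with one extreme value.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 100.0])

# Min-max normalisation to [0, 1].
x_minmax = (x - x.min()) / (x.max() - x.min())

# z-score standardisation: zero mean, unit variance.
x_zscore = (x - x.mean()) / x.std()

# Robust scaling: centre on the median, divide by the interquartile range.
q1, median, q3 = np.percentile(x, [25, 50, 75])
x_robust = (x - median) / (q3 - q1)

print("min-max:", np.round(x_minmax, 3))
print("z-score:", np.round(x_zscore, 3))
print("robust: ", np.round(x_robust, 3))
```

Under min-max scaling the five ordinary values are squeezed into a narrow band near zero, whereas under robust scaling they remain well spread out.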

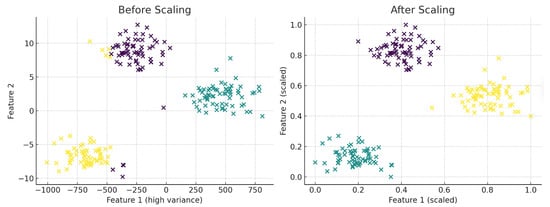

Figure 3 demonstrates this sensitivity using a synthetic dataset: when input features have very different scales, distance- and gradient-based algorithms may behave erratically or converge poorly. In the absence of normalisation, clustering is skewed by feature imbalance, whereas min-max scaling facilitates precise cluster identification.

Figure 3.

Effect of feature scaling on clustering using k-means. Without scaling (left), one feature dominates due to higher variance, resulting in poorly formed clusters (points are coloured by cluster assignment). After min-max normalisation (right), the clusters become compact and clearly separable.
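An experiment in the spirit of this figure can be sketched as follows. The data are not those used for the figure: they are an assumed synthetic example in which one feature separates two groups and a second, uninformative feature has a much larger scale.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.metrics import adjusted_rand_score
from sklearn.preprocessing import MinMaxScaler

rng = np.random.default_rng(0)
n = 200
# Assumed synthetic data: feature 0 separates two groups; feature 1 is
# uninformative noise measured on a much larger scale.
labels = np.repeat([0, 1], n)
informative = rng.normal(loc=labels * 10.0, scale=1.0)
noise = rng.normal(loc=0.0, scale=1000.0, size=2 * n)
X = np.column_stack([informative, noise])

def cluster(features):
    return KMeans(n_clusters=2, n_init=10, random_state=0).fit_predict(features)

# Adjusted Rand index: 1 means perfect agreement with the true groups, ~0 means chance.
ari_raw = adjusted_rand_score(labels, cluster(X))
ari_scaled = adjusted_rand_score(labels, cluster(MinMaxScaler().fit_transform(X)))

print(f"ARI without scaling: {ari_raw:.2f}")
print(f"ARI with min-max scaling: {ari_scaled:.2f}")
```

Without scaling, Euclidean distances are dominated by the high-variance noise feature, so k-means partitions along it rather than along the informative feature.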

The choice of an appropriate scaling technique should be guided by the intrinsic properties of the dataset, as well as by the properties of the mining algorithm being used. For example, decision tree-based algorithms like random forests and gradient boosting are largely insensitive to monotonic transformations and often dispense with the need for scaling. By contrast, models based on distance measures and NNs typically perform best when their features are scaled appropriately. When several variables are involved, the same scaling scheme should be applied consistently across features to avoid distorting the relationships between them.

Finally, scaling parameters must be computed only on the training set (or on the training folds within CV) and then applied to validation/test data, to prevent leakage of distributional information [25,26]. In practice, this means that within each CV fold the scaler is fitted on the training fold only and then applied to the validation/test fold. This ensures that no statistical information from the test distribution contaminates the training process: even minor leakage of test-set statistics can bias coefficients and thresholds, artificially inflate performance metrics, and undermine generalisation [8,38,46].

Min–max scaling is highly sensitive to extreme values: a single outlier can compress the dynamic range of the remaining samples. In such cases, clipping, winsorisation, or a robust scaler (median/IQR) is preferable. Z-score standardisation implicitly relies on roughly symmetric distributions; under heavy skew, the mean and variance can be unrepresentative, in which case log/Box–Cox transforms or robust scaling provide more stable behaviour [2,6]. Tree-based models are largely insensitive to monotone transformations, but distance- and gradient-based learners usually benefit from consistent scaling across features [21,38,39,47]. In our taxonomy (Section 3), these scaling and transformation choices fall under Stage 2 (Transformation, see Figure 2), where the decision between simple or robust methods is guided by data distribution, interpretability, and robustness needs.

Why Fit Scalers Only on Training Data

Estimating scaling parameters (mean, variance; min, max; quantiles) on validation/test introduces information from held-out data and inflates performance estimates. Therefore, scalers must be fitted exclusively on the training portion (or training folds) and applied to validation/test without refitting [2,31]. Empirical studies show that the choice of normalisation alters both predictive performance and the set of selected features, with dataset-dependent effects [48,49].
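The train-only rule can be illustrated with a manual cross-validation loop. This is a sketch under stated assumptions: the data are synthetic, the helper names `fit_standardiser` and `apply_standardiser` are hypothetical, and the fold assignment is a simple random split.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(loc=50.0, scale=10.0, size=(100, 3))  # synthetic features

def fit_standardiser(X_train):
    """Estimate scaling parameters from training rows only."""
    return X_train.mean(axis=0), X_train.std(axis=0)

def apply_standardiser(X_any, params):
    """Apply previously fitted parameters without refitting."""
    mean, std = params
    return (X_any - mean) / std

n_folds = 5
folds = np.array_split(rng.permutation(len(X)), n_folds)

scaled_folds = []
for k in range(n_folds):
    val_idx = folds[k]
    train_idx = np.concatenate([folds[j] for j in range(n_folds) if j != k])
    params = fit_standardiser(X[train_idx])            # training fold only
    X_train_s = apply_standardiser(X[train_idx], params)
    X_val_s = apply_standardiser(X[val_idx], params)   # reuse, never refit
    scaled_folds.append((X_train_s, X_val_s))
```

The scaled training folds have exactly zero mean, while the validation folds generally do not; that small mismatch is expected and is precisely the information that must not flow back into fitting.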

3.4. Encoding Categorical Variables

Most DM models require categorical variables to be represented as numerical values. The choice of encoding technique strongly affects both the efficiency and the interpretability of the model. One-hot encoding is a common, simple technique that produces a binary indicator for every distinct category [27]. Although it preserves the original categorical values and imposes no ordinal assumptions, applying it to variables with many distinct values inflates the dimensionality of the feature space, increasing memory usage and training time. Where cardinality is high, encoders that control dimensionality, or rare-level grouping to avoid variance inflation, are preferred [27].

Ordinal encoding assigns an integer to each category, thus creating a perceived ranking that might not reflect true relationships. Though more compact than one-hot encoding, this approach risks leading algorithms to assume that numerical differences carry semantic meaning [27]. For nominal variables with high cardinality, other strategies are used, such as target encoding, which replaces each category with the mean of the target variable for that category, and frequency encoding, which uses the relative frequency of each category [50]. Though these approaches can improve modelling effectiveness, they also carry a risk of overfitting, especially with small samples.
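Frequency and target encoding can be sketched in plain Python as follows. The smoothing towards the global mean is an added assumption (one common way to temper the overfitting risk noted above), and the `smoothing` value and toy data are purely illustrative.

```python
from collections import Counter, defaultdict

def frequency_encode(train_cats):
    """Map each category to its relative frequency in the training data."""
    counts = Counter(train_cats)
    n = len(train_cats)
    return {c: k / n for c, k in counts.items()}

def target_encode(train_cats, train_y, smoothing=5.0):
    """Smoothed per-category target mean, shrunk towards the global mean.

    `smoothing` is an illustrative hyperparameter, not a recommended value.
    """
    global_mean = sum(train_y) / len(train_y)
    sums, counts = defaultdict(float), Counter()
    for c, y in zip(train_cats, train_y):
        sums[c] += y
        counts[c] += 1
    mapping = {
        c: (sums[c] + smoothing * global_mean) / (counts[c] + smoothing)
        for c in counts
    }
    return mapping, global_mean

# Hypothetical training data.
cats = ["a", "a", "a", "b", "b", "c"]
y = [1, 1, 0, 0, 0, 1]

freq_map = frequency_encode(cats)
te_map, prior = target_encode(cats, y)

# Unseen categories fall back to the global mean (the prior).
encoded_new = [te_map.get(c, prior) for c in ["a", "c", "z"]]
```

Category `c` appears once with target 1, so its raw mean would be 1.0; smoothing pulls it to about 0.58. As with scalers, both mappings should be fitted on training folds only.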

More sophisticated methods use deep learning to convert categorical variables into continuous vector representations. Entity embeddings learned during the training of NNs capture subtle interdependencies between categories and can represent high-dimensional spaces [51]. Such methods require large datasets and careful parameter tuning, and they can sacrifice interpretability owing to their complexity. Hybrid approaches, which use one-hot encoding for common categories while grouping infrequent categories together, can effectively balance expressiveness and generalisability. Ultimately, any encoding scheme must be aligned with the modelling task, dataset size, and interpretability requirements.
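The hybrid idea (one-hot for common levels, a shared bucket for infrequent ones) might look like the sketch below. The `min_count` threshold, the `__other__` label, and the function names are assumptions made for illustration.

```python
from collections import Counter

def fit_hybrid_one_hot(train_cats, min_count=2, other="__other__"):
    """Keep categories seen at least `min_count` times; pool the rest."""
    counts = Counter(train_cats)
    kept = sorted(c for c, k in counts.items() if k >= min_count)
    return kept + [other]

def transform_hybrid_one_hot(cats, columns):
    """One-hot encode; rare or unseen levels map to the last column."""
    index = {c: i for i, c in enumerate(columns[:-1])}
    other_idx = len(columns) - 1
    rows = []
    for c in cats:
        row = [0] * len(columns)
        row[index.get(c, other_idx)] = 1
        rows.append(row)
    return rows

# Hypothetical training column: two frequent levels, two rare ones.
train = ["red", "red", "blue", "blue", "blue", "teal", "mauve"]
columns = fit_hybrid_one_hot(train)
encoded = transform_hybrid_one_hot(["red", "teal", "pink"], columns)
```

Here the rare level `teal` and the unseen level `pink` share one indicator column, so the encoded width stays at three regardless of how many rare levels appear later.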

Choosing an appropriate encoding method for categorical variables is crucial for both model performance and interpretability. The choice depends on the data type, cardinality, and target task. Table 4 summarises widely used encoding techniques along with typical use cases, strengths, and potential pitfalls, helping practitioners balance expressiveness, scalability, and interpretability.

Table 4.

Summary of categorical encoding techniques, including typical use cases, benefits, and challenges.

4. Feature Construction and Selection

This section discusses Stage 5 in Figure 1, where the pipeline’s attention now moves from input preparation to the build-up of representations that the model is supposed to learn. In this stage, either manual or automated processes generate features, often assisted by domain knowledge, and then are polished using filter, wrapper, or embedded selection methods to maximise signal strength, while minimising redundancy. The result is an improved and informative feature set that maximises interpretability and efficiency, thus supporting the DR highlighted in Section 5.

4.1. Manual vs. Automated Construction

Feature construction/generation/extraction refers to the process by which fresh features are created or existing features transformed to better capture intrinsic patterns within available data. Often, manual feature construction depends on the knowledge of domain experts, who invent new variables by combining, transforming, or aggregating raw feature attributes into a form pertinent to real-world objects or decision-making procedures.

For example, in an e-commerce dataset, manually constructed features might include “time since last purchase”, “mean order value”, or “category diversity” [52]. These carefully crafted features often represent subtle indicators that boost model performance beyond what simple model tuning can achieve. However, their construction is subjective and manual, and it may not scale well across domains or datasets. Furthermore, it requires deep knowledge of the data and the business context, which makes it less accessible to practitioners without domain expertise. Cognitive biases inherent in human decision-making can also lead to the omission of crucial interactions or transformations.

Automated feature construction aims to mitigate these constraints by systematically generating candidate features. One major method is Deep Feature Synthesis (DFS), implemented in libraries such as Featuretools [28], which exploits the relationships in relational data to generate features via aggregation and transformation operations [29]. Other methodologies include polynomial expansion, interaction discovery, and unsupervised transformation techniques, such as clustering-based encoding. AutoML frameworks often incorporate automated feature construction as part of their optimisation pipelines [53,54]. While these methods help improve scalability and reproducibility, they can unwittingly create redundant or suboptimal features, which need further filtering or selection steps. In practice, a balance between automated and manual feature construction typically proves to be the best approach.
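As a small illustration of automated interaction discovery, the sketch below appends every pairwise product of the input columns. It is a generic NumPy example, not the DFS algorithm or the Featuretools API; the function name and data are hypothetical.

```python
from itertools import combinations

import numpy as np

def add_pairwise_interactions(X, names):
    """Append the product of every pair of columns as new features."""
    X = np.asarray(X, dtype=float)
    new_cols, new_names = [X], list(names)
    for i, j in combinations(range(X.shape[1]), 2):
        new_cols.append((X[:, i] * X[:, j])[:, None])
        new_names.append(f"{names[i]}*{names[j]}")
    return np.hstack(new_cols), new_names

X_raw = [[1, 2, 3], [4, 5, 6]]
X_out, names_out = add_pairwise_interactions(X_raw, ["a", "b", "c"])
```

With p input columns this adds p(p-1)/2 features, which is why automatically generated features usually need a subsequent filtering or selection step.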

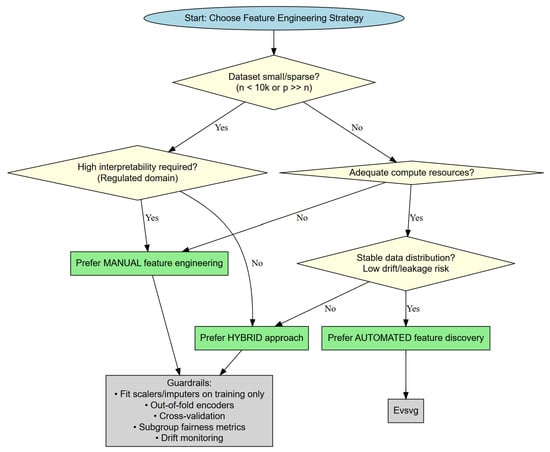

Therefore, the choice between manual and automated feature construction affects both development time and model generalisability. Manual workflows rely heavily on domain intuition, whereas automated approaches leverage scalable tools and heuristics. Figure 4 illustrates this comparison by outlining the different steps and toolchains involved in each workflow.

Figure 4.

Comparison of manual and automated feature construction workflows. Manual pipelines rely on human-driven exploration and custom logic, whereas automated methods leverage AutoML tools for scalable, reusable feature generation.

Decision Framework

To make the decision between automated, manual, and hybridised feature engineering operable, we present a flowchart-like methodology (Figure 5). The framework works along four dimensions: (i) data scale and sparsity, (ii) interpretability and regulatory requirements, (iii) compute/time budget, and (iv) data stability and drift. Each one of the outcomes (manual, automated, or hybrid) is also aligned with guardrails: train-only fitting of imputers/scalers/encoders, out-of-fold encoders, in-fold CV, and subgroup fairness metrics. This framework expands upon the current conceptual taxonomy (Figure 2) into an actual, step-wise guide, explicitly outlining the trade-offs between dataset attributes, interpretability, resource usage, and stability, thus ensuring that the process is not just theory-informed but also practically translatable.

Figure 5.

Flowchart decision framework for feature engineering. The framework operationalises the taxonomy (Figure 2) by guiding the choice between manual, automated, and hybrid approaches. Branching criteria include dataset scale/sparsity, interpretability and regulatory constraints, compute/time budget, and data stability/drift. Each outcome is coupled with guardrails for leakage control (train-only fitting, out-of-fold encoders), reproducibility (in-fold CV), fairness auditing (subgroup metrics), and drift monitoring.

4.2. Domain-Driven Feature Creation

Domain knowledge can be an asset for feature engineering [52]. Professionals with such knowledge can often identify latent variables or structural relationships not apparent when only dealing with raw data. For example, in healthcare analytics, a domain expert might recommend feature construction to represent the co-occurrence of different symptoms or a composite health risk score from laboratory measurements. For marketing, features based upon domain knowledge could include customer lifetime value judgments, affinity scores showing product correlations, or seasonality correlations tied to buying behaviour.

Domain-informed feature engineering often supports temporal aggregation, including the computation of rolling averages, cumulative statistics, and lag variables in time-series datasets, to capture temporal dependencies and trends more effectively. In addition, augmenting base attributes with external data sources, for example meteorological, geographical, or macroeconomic data, can be useful [9,28,29,52]. Creating aggregation hierarchies, such as customer-level figures aggregated at regional or store levels, in normalised enterprise data can lead to gains in performance and understandability [55,56].
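The temporal aggregates mentioned above (lags, rolling averages, cumulative statistics) can be sketched with NumPy as follows. The helper names and the `sales` series are hypothetical; entries without enough history are left as NaN.

```python
import numpy as np

def lag(x, k):
    """Shift the series by k >= 1 steps; the first k entries have no history."""
    x = np.asarray(x, dtype=float)
    out = np.full_like(x, np.nan)
    out[k:] = x[:-k]
    return out

def rolling_mean(x, window):
    """Trailing mean over the current and previous `window - 1` values."""
    x = np.asarray(x, dtype=float)
    out = np.full_like(x, np.nan)
    csum = np.cumsum(np.insert(x, 0, 0.0))
    out[window - 1:] = (csum[window:] - csum[:-window]) / window
    return out

# Hypothetical daily sales series.
sales = np.array([10.0, 12.0, 14.0, 16.0, 18.0])

lag1 = lag(sales, 1)             # yesterday's value
roll3 = rolling_mean(sales, 3)   # 3-day trailing average
cumulative = np.cumsum(sales)    # running total
```

Because the windows are trailing, each feature uses only values available at that time step; if the prediction target is the current value itself, the rolling and cumulative features should additionally be lagged.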

4.3. Filter, Wrapper, and Embedded Selection Methods

The increasing complexity and size of datasets have led to more available features, often more than what is practical or useful. Feature selection is essential in reducing dimensionality, enhancing the generalisability of models, and aiding interpretability. Feature selection techniques can be grouped into three main categories: filter, wrapper, and embedded methods [30,52,57].

Filter methods are model-agnostic and rely on statistical measures to determine the importance of features relative to the target variable. The most widely used metrics include Pearson correlation, mutual information, Chi-square statistics, and ANOVA F-values [52,58]. These techniques are computationally inexpensive and can be applied before model training; however, they do not consider interactions between features or the specific learning algorithm used by the model.
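A filter of this kind can be as simple as ranking features by the absolute Pearson correlation with the target. The sketch below uses deterministic toy data; the function name is an assumption.

```python
import numpy as np

def pearson_with_target(X, y):
    """Pearson correlation between each column of X and the target y."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    return (Xc.T @ yc) / (np.linalg.norm(Xc, axis=0) * np.linalg.norm(yc))

# Toy data: column 1 determines the target, column 0 is an alternating pattern.
t = np.arange(10.0)
alternating = np.where(np.arange(10) % 2 == 0, 1.0, -1.0)
X = np.column_stack([alternating, t])
y = 2.0 * t + 1.0

r = pearson_with_target(X, y)
ranking = np.argsort(-np.abs(r))  # most relevant feature first
```

Each feature is scored independently of the others and of any model, which is what makes the approach cheap and also why it cannot detect interactions.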

Wrapper methods evaluate sets of features by training and testing a model for each respective set. A well-known example is Recursive Feature Elimination (RFE), which progressively removes the least relevant feature according to model performance [30]. Wrapper methods typically yield better results than filter methods, especially when feature interactions are important; however, they are computationally intensive and prone to overfitting, particularly with small datasets.
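A hedged sketch of RFE with scikit-learn follows. Note that scikit-learn's `RFE` ranks features by the fitted estimator's coefficients or importances at each round rather than by a held-out score; the synthetic data and the choice of `LinearRegression` are assumptions.

```python
import numpy as np
from sklearn.feature_selection import RFE
from sklearn.linear_model import LinearRegression

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))
# Only columns 0 and 3 drive the target in this synthetic example.
y = 3.0 * X[:, 0] - 2.0 * X[:, 3] + rng.normal(scale=0.1, size=200)

# Refit the model repeatedly, dropping the weakest feature each round.
selector = RFE(estimator=LinearRegression(), n_features_to_select=2, step=1)
selector.fit(X, y)

selected = np.flatnonzero(selector.support_)  # indices of retained features
```

The repeated refitting is the source of the computational cost; to limit overfitting, the selector should be fitted inside each CV fold rather than on the full dataset.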

Embedded methods incorporate feature selection directly within model training (e.g., regularisation in Least Absolute Shrinkage and Selection Operator (LASSO)/Elastic Net, feature importance in decision trees). Regularisation procedures such as LASSO (L1 regularisation) and Elastic Net can automatically shrink the coefficients of unimportant features to zero. Tree-based models also generate feature importance statistics that help inform selection. Embedded methods often balance efficiency and effectiveness best, and when combined with simple filters in a pipeline, they achieve high performance at moderate cost [59].
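The zeroing effect of L1 regularisation can be seen in a short scikit-learn sketch. The synthetic data and the penalty strength `alpha=0.5` are illustrative assumptions; in practice `alpha` would be tuned by cross-validation.

```python
import numpy as np
from sklearn.linear_model import Lasso

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 6))
# Only columns 1 and 4 carry signal in this synthetic example.
y = 4.0 * X[:, 1] - 3.0 * X[:, 4] + rng.normal(scale=0.1, size=200)

# The L1 penalty drives coefficients of uninformative features to exactly zero.
model = Lasso(alpha=0.5).fit(X, y)
kept = np.flatnonzero(model.coef_)  # features with non-zero coefficients
```

Selection falls out of a single model fit, but the surviving coefficients are also shrunk towards zero, so the selected set is tied to this particular model and penalty.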

Filter methods are computationally efficient, scaling almost linearly with the number of features, but they ignore feature interactions and can rank correlated variables inconsistently. Wrapper methods explicitly evaluate subsets and often achieve higher predictive performance, but they are orders of magnitude slower and prone to overfitting in high-dimensional or small-sample settings [30]. Embedded methods strike a middle ground: they integrate selection within model fitting, balancing efficiency with the ability to capture interactions, but their outputs are model-dependent and may not generalise well to other learners [59]. Thus, the choice of approach should be driven by dataset dimensionality, computational resources, and the intended downstream model.

Feature selection methods are commonly classified into filter, wrapper, and embedded methods, each offering distinct benefits and trade-offs depending on dataset size, modelling complexity, and performance goals. Table 5 presents a comparative analysis of these three categories, outlining their operational characteristics, computational cost, and impact on modelling workflows.

Table 5.

Comparison of filter, wrapper, and embedded feature selection methods across multiple dimensions.

4.4. Correlation, Mutual Information, and Variance Thresholds

Quantitative measures are often used to inform feature removal or selection based on their statistical properties. Correlation analysis can be used as a tool to identify redundant features defined by linear dependencies. Features that have a strong level of correlation (e.g., a Pearson measure larger than 0.9) can potentially lead to multicollinearity, undermining the stability and interpretability of linear models [52]. While sophisticated DR techniques, including PCA, can overcome this problem, more basic techniques, such as the removal of one of the highly correlated features, may be adequate.
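The simple redundancy rule (drop one feature of each highly correlated pair) can be sketched as a greedy pass over the correlation matrix. The function name is hypothetical, and the 0.9 threshold is the example value from the text.

```python
import numpy as np

def drop_highly_correlated(X, threshold=0.9):
    """Keep a column only if |r| with every already kept column <= threshold."""
    corr = np.abs(np.corrcoef(np.asarray(X, dtype=float), rowvar=False))
    kept = []
    for j in range(corr.shape[0]):
        if all(corr[j, k] <= threshold for k in kept):
            kept.append(j)
    return kept

# Toy data: column 1 is a linear function of column 0; column 2 is unrelated.
t = np.arange(10.0)
alternating = np.where(np.arange(10) % 2 == 0, 1.0, -1.0)
X = np.column_stack([t, 2.0 * t + 1.0, alternating])

kept = drop_highly_correlated(X)
```

The result depends on column order, since the first member of each correlated group is the one retained; domain knowledge can be used to decide which member to keep instead.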

Mutual information can be defined as a nonlinear measure of feature-target variable dependency. Unlike correlation, it can capture more complex dependency and has a special benefit when handling categorical features. Mutual information, however, can be sensitive to discretisation parameters and can potentially overestimate variable importance in noise-dominated datasets [58]. The use of statistical significance testing or permutation techniques can help to validate the resulting estimates from mutual information.
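For discrete variables, mutual information can be estimated directly from the joint frequency table. The sketch below is a plug-in estimator in nats; the function name and the toy data are assumptions, and continuous features would first need discretisation, which is where the sensitivity mentioned above enters.

```python
import numpy as np

def mutual_information(x, y):
    """Plug-in estimate of I(X; Y) in nats for two discrete arrays."""
    _, xi = np.unique(np.asarray(x), return_inverse=True)
    _, yi = np.unique(np.asarray(y), return_inverse=True)
    joint = np.zeros((xi.max() + 1, yi.max() + 1))
    np.add.at(joint, (xi, yi), 1.0)           # contingency counts
    joint /= joint.sum()                      # joint probabilities
    px = joint.sum(axis=1, keepdims=True)     # marginal of x (column vector)
    py = joint.sum(axis=0, keepdims=True)     # marginal of y (row vector)
    nz = joint > 0
    return float(np.sum(joint[nz] * np.log(joint[nz] / (px @ py)[nz])))

# y is a deterministic but non-monotone function of x.
x = np.array([-1, -1, 0, 0, 1, 1])
y = x ** 2

mi = mutual_information(x, y)
pearson = np.corrcoef(x, y)[0, 1]
```

In this example the Pearson correlation is zero although `y` is fully determined by `x`, while the mutual information is clearly positive.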

Features exhibiting low variance, that is, minimal change across many observations, tend to be poor predictors and are often pruned using a variance threshold. The approach is very helpful when dealing with high-dimensional sparse data, particularly when such data originate from one-hot encoding [52]. Nevertheless, caution is needed, as rare values can be critically significant. For example, a binary variable created to indicate fraudulent transactions can have low variance but strong predictive ability. Typically, variance thresholds should be used after applying other filtering methods and should always be tested against performance metrics. Together, these statistics help to develop an interpretable and well-informed feature set.

As a practical rule, zero-variance features can be removed completely and near-zero-variance predictors (e.g., frequency ratio >20:1 or unique value proportion <10%) reviewed using resampling frameworks. Arbitrary defaults such as 0.01 should not be adopted blindly. Thresholds should be tuned and validated against predictive performance [2]. Ultimately, feature selection and construction set the stage for the next step: reducing dimensionality of the feature space, which is discussed in Section 5.
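The practical rule above can be sketched as a small screening function. Combining the two criteria with a logical AND is an assumption of this sketch (the text lists them as example indicators), and the default cut-offs simply mirror the values quoted above.

```python
from collections import Counter

def near_zero_variance(values, freq_ratio_cut=20.0, unique_pct_cut=10.0):
    """Return (is_zero_variance, is_near_zero_variance) for one feature.

    freq_ratio: count of the most common value / count of the second most common.
    unique_pct: number of distinct values as a percentage of the sample size.
    """
    counts = Counter(values).most_common()
    if len(counts) == 1:
        return True, True
    freq_ratio = counts[0][1] / counts[1][1]
    unique_pct = 100.0 * len(counts) / len(values)
    flagged = freq_ratio > freq_ratio_cut and unique_pct < unique_pct_cut
    return False, flagged

constant = [1] * 100                 # zero variance: safe to remove
rare_flag = [0] * 97 + [1] * 3       # near-zero variance: review, do not drop blindly
balanced = [0] * 50 + [1] * 50       # informative binary feature

results = [near_zero_variance(v) for v in (constant, rare_flag, balanced)]
```

A flagged feature such as `rare_flag` is a candidate for review under resampling rather than automatic removal, in line with the fraud-indicator caveat.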

In the context-aware taxonomy, these approaches belong to Stage 4 (Feature Selection, in Figure 2), where the key trade-off lies between computational cost, sensitivity to feature interactions, and model interpretability.

Interrelationship with DR

Feature selection/construction and DR are complementary rather than substitutive:

1. When to prefer selection → reduction. If interpretability, regulatory traceability, or stable feature semantics are required, prune redundancy first (filters/embedded in Section 4.3 and Section 4.4) and apply DR thereafter to compress residual collinearity. This retains named features, while still curbing variance.

2. When to prefer reduction → selection. In ultra-high-dimensional, sparse regimes (e.g., one-hot with many rare levels), apply a lightweight DR step (e.g., PCA or hashing-based sketches) to mitigate dimensionality, and then perform wrapper/embedded selection on the compressed representation.

3. Order-of-operations ablation. Evaluate five candidates under the same CV split: (i) baseline, (ii) selection-only, (iii) DR-only, (iv) selection→DR, (v) DR→selection; pick the least-complex option that improves task metrics without harming calibration/stability.

4. Guardrails. Fit every statistic within folds (selection criteria, DR components) and apply to hold-outs only; report component counts and selected features per fold. When DR reduces semantic transparency (e.g., neural embeddings), justify with performance/calibration gains and provide post hoc projections where possible.
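The ablation and guardrails listed above can be sketched with scikit-learn pipelines, which refit every step inside each CV fold. The synthetic dataset, the choice of `SelectKBest` and `PCA`, and the specific values of `k` and `n_components` are assumptions for illustration only.

```python
from sklearn.datasets import make_classification
from sklearn.decomposition import PCA
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = make_classification(n_samples=300, n_features=30, n_informative=5,
                           random_state=0)
# One fixed split object so that every candidate sees identical folds.
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

def clf():
    return LogisticRegression(max_iter=1000)

candidates = {
    "baseline": make_pipeline(StandardScaler(), clf()),
    "selection": make_pipeline(StandardScaler(),
                               SelectKBest(f_classif, k=10), clf()),
    "dr": make_pipeline(StandardScaler(), PCA(n_components=5), clf()),
    "selection->dr": make_pipeline(StandardScaler(),
                                   SelectKBest(f_classif, k=10),
                                   PCA(n_components=5), clf()),
    "dr->selection": make_pipeline(StandardScaler(), PCA(n_components=10),
                                   SelectKBest(f_classif, k=5), clf()),
}

# Mean CV accuracy per candidate; all statistics are fitted within folds.
results = {name: cross_val_score(pipe, X, y, cv=cv).mean()
           for name, pipe in candidates.items()}
```

Accuracy alone is used here for brevity; calibration and per-fold stability of the selected features would need to be reported separately.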

5. DR Techniques

Beyond feature selection, another approach to handling high-dimensional data is DR. It seeks to project data into a lower-dimensional space, whilst preserving important structure. This section reviews both linear and nonlinear DR methods (Stage 6 in Figure 1).

5.1. Principal Component Analysis (PCA)

PCA represents a commonly used technique in performing linear DR, which projects high-dimensional data into a set of low-dimensional, orthogonal variables denoted by principal components. The components represent themselves as linear combinations of original features aiming to maximise variance residing within data. There are multiple usages of PCA: it reduces computational cost, reduces multicollinearity, and increases the ability to visualise high-dimensional data [61]. However, retaining a fixed percentage of variance (e.g., 90–95%) does not guarantee optimal predictive performance; the number of components should be chosen by cross-validated task metrics rather than a variance rule of thumb. PCA must be fitted on the training split only to avoid information leakage from the test set.

The mathematical basis of PCA is the eigendecomposition of the covariance matrix computed from the input features or, equivalently, the Singular Value Decomposition (SVD) of the centred data matrix [61]. The first principal component corresponds to the direction of maximum variance, the second to the direction of next-highest variance orthogonal to the first, and so forth. By selecting only the top k components, it becomes possible to retain much of a dataset’s structure while discarding noise and redundancy. One common rule of thumb is to retain enough components to capture around 90–95% of the total variance; the appropriate value, however, may be domain-dependent.
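A minimal NumPy sketch of PCA via the SVD of the centred training matrix is given below. The function names and synthetic data are assumptions, features are assumed to be on comparable scales already, and the variance rule is shown only to illustrate the rule of thumb.

```python
import numpy as np

def pca_fit(X_train):
    """Fit PCA on training data: returns mean, components, variance ratios."""
    mean = X_train.mean(axis=0)
    _, S, Vt = np.linalg.svd(X_train - mean, full_matrices=False)
    explained_ratio = S ** 2 / np.sum(S ** 2)
    return mean, Vt, explained_ratio

def pca_transform(X, mean, Vt, k):
    """Project (training or held-out) rows onto the top-k components."""
    return (X - mean) @ Vt[:k].T

def components_for_variance(explained_ratio, target=0.95):
    """Smallest k whose cumulative explained variance reaches `target`."""
    return int(np.searchsorted(np.cumsum(explained_ratio), target) + 1)

# Synthetic data: three features driven by one latent factor plus small noise.
rng = np.random.default_rng(0)
latent = rng.normal(size=(200, 1))
X = latent @ np.array([[2.0, 1.0, 0.5]]) + 0.05 * rng.normal(size=(200, 3))

mean, components, ratio = pca_fit(X)
k = components_for_variance(ratio, target=0.95)
Z = pca_transform(X, mean, components, k)
```

The rows of `components` are orthonormal directions ordered by decreasing variance; held-out data are projected with the training `mean` and `components`, never refitted.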

PCA is particularly valuable for exploratory data analysis, where visualising data in two or three dimensions can reveal clusters, trends, or anomalies, which are not apparent in higher dimensions [61]. It also benefits algorithms sensitive to redundant or correlated features, such as k-means clustering or linear regression.

However, PCA assumes linearity and is sensitive to the scale of the data; standardising input features prior to PCA is therefore required. In addition, principal components are linear mixtures without clear semantic meaning and do not map directly back to individual original features, which can limit interpretability in regulated or explanation-critical contexts [8,61]. Regardless of these challenges, PCA remains a strong and flexible tool, best implemented in the early stages of data analysis or as a preprocessing step in more complex analysis.

5.2. Autoencoders and Neural Embeddings

Autoencoders form a special class of NN architecture that learns useful representations of input data through unsupervised learning [32]. These networks have two primary units: an encoder, which maps the input to a representation in the latent space, and a decoder, which tries to reproduce the initial input from its encoded form. By minimising reconstruction error during training, the network tends to converge to a low-dimensional representation of the input data that retains its most useful properties.
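The encoder/decoder structure can be illustrated with a deliberately tiny autoencoder trained by plain gradient descent in NumPy. This is a teaching sketch under stated assumptions (synthetic data, one tanh hidden layer of width 2, fixed learning rate); practical work would normally rely on a deep learning framework.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data: five observed features driven by two latent factors.
z = rng.normal(size=(256, 2))
X = np.column_stack([z[:, 0], z[:, 1], z[:, 0] * z[:, 1],
                     z[:, 0] ** 2, np.sin(z[:, 1])])
X = (X - X.mean(axis=0)) / X.std(axis=0)

d, h = X.shape[1], 2                       # input width, bottleneck width
W1 = rng.normal(scale=0.1, size=(d, h)); b1 = np.zeros(h)
W2 = rng.normal(scale=0.1, size=(h, d)); b2 = np.zeros(d)

def encode(A):
    """Encoder: map inputs to the latent space."""
    return np.tanh(A @ W1 + b1)

def decode(H):
    """Decoder: reconstruct inputs from latent codes."""
    return H @ W2 + b2

lr, losses = 0.1, []
for _ in range(1000):
    H = encode(X)
    err = decode(H) - X
    losses.append(float(np.mean(err ** 2)))     # reconstruction error (MSE)
    g_out = 2.0 * err / err.size                # dLoss/dX_hat
    g_W2, g_b2 = H.T @ g_out, g_out.sum(axis=0)
    g_hid = (g_out @ W2.T) * (1.0 - H ** 2)     # backprop through tanh
    g_W1, g_b1 = X.T @ g_hid, g_hid.sum(axis=0)
    W1 -= lr * g_W1; b1 -= lr * g_b1
    W2 -= lr * g_W2; b2 -= lr * g_b2

embedding = encode(X)   # 2-D latent features for downstream tasks
```

The `embedding` array plays the role of the low-dimensional representation; as with any fitted transformer, the weights should be learned on training data only.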

Unlike PCA, which can only deal with linear transformations, autoencoders have the ability to capture nonlinear associations, thus making them suitable for complex datasets in which linear projections cannot capture the underlying organisation [33]. Extensions like denoising autoencoders add strength by reconstructing pristine inputs from damaged ones, while Variational Autoencoders (VAEs) add probabilistic encoding and regularisation methods to encourage the construction of informative latent spaces [62].

Autoencoders have immense value in domains of image, text, or sensor data; moreover, they have been successfully used within structured tabular datasets. Their efficiency largely depends on multiple factors, such as architecture, activation functions, optimisation parameters, and training data level. Autoencoders of deep architecture, which have multiple hidden layers, perform very well in extracting hierarchical representations; however, they require large datasets and careful tuning to avoid overfitting. Applications as in [32,33] typically involve thousands of instances; in small-sample settings, they may underperform simpler linear methods unless strong regularisation (e.g., dropout, early stopping) is applied.

One of their main advantages is the latent embeddings they produce, which can be used as features for subsequent tasks, such as classification, clustering, and outlier detection. These embeddings usually represent high-level abstractions and can improve model generalisability. However, their black-box nature makes them difficult to interpret, and thus less suitable for applications where feature transparency is important. Nevertheless, they remain a very powerful nonlinear alternative to traditional dimension reduction methods and are a vital part of preprocessing modules in deep learning and DM pipelines.

5.3. Manifold Learning Methods

Manifold learning methods are nonlinear DR techniques used to uncover the inherent geometrical structure of high-dimensional datasets. They rely on the assumption that points in a high-dimensional space often lie on or near a low-dimensional manifold embedded within it. By preserving local or global geometrical attributes, they make it possible to project data from high-dimensional spaces to low-dimensional representations, whilst preserving crucial structural features.

t-SNE is a widely used DR algorithm for high-dimensional data, commonly reducing them to two or three dimensions for visualisation purposes [63]. It converts distances between points into joint probabilities and then minimises the Kullback-Leibler divergence between the joint probabilities of the original and the low-dimensional representations. t-SNE is most effective at retaining clusters and local patterns; however, it does not reliably preserve global structural relationships and is very sensitive to hyperparameters such as perplexity and learning rate. Consequently, t-SNE is best treated as an exploratory visualisation tool rather than a stable feature transformer for predictive training; results can vary across runs and settings [63,64]. t-SNE is also computationally demanding, and its non-parametric nature prevents it from generalising to new data without refitting.
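The objective that t-SNE optimises can be sketched by computing the two affinity matrices and their divergence for a given embedding. This is a simplification: a single fixed Gaussian bandwidth `sigma` replaces the per-point bandwidths that t-SNE derives from the perplexity, and no optimisation is performed. All names and data are illustrative.

```python
import numpy as np

def pairwise_sq_dists(Z):
    """Squared Euclidean distances between all rows of Z."""
    sq = np.sum(Z ** 2, axis=1)
    return np.maximum(sq[:, None] + sq[None, :] - 2.0 * Z @ Z.T, 0.0)

def joint_p(X, sigma=1.0):
    """Symmetrised Gaussian affinities in the original space."""
    P = np.exp(-pairwise_sq_dists(X) / (2.0 * sigma ** 2))
    np.fill_diagonal(P, 0.0)
    P /= P.sum(axis=1, keepdims=True)       # conditional p(j | i)
    return (P + P.T) / (2.0 * len(X))       # joint p_ij, sums to 1

def joint_q(Y):
    """Student-t (one degree of freedom) affinities in the embedding."""
    W = 1.0 / (1.0 + pairwise_sq_dists(Y))
    np.fill_diagonal(W, 0.0)
    return W / W.sum()

def kl_divergence(P, Q, eps=1e-12):
    """KL(P || Q) over pairs with non-zero P."""
    mask = P > 0
    return float(np.sum(P[mask] * np.log(P[mask] / (Q[mask] + eps))))

# Two tight, well-separated pairs of points.
X = np.array([[0.0, 0.0], [0.0, 1.0], [10.0, 0.0], [10.0, 1.0]])
Y_good = X.copy()              # embedding that keeps neighbours together
Y_bad = X[[0, 2, 1, 3]]        # embedding that splits each pair apart

P = joint_p(X)
kl_good = kl_divergence(P, joint_q(Y_good))
kl_bad = kl_divergence(P, joint_q(Y_bad))
```

An embedding that keeps neighbours together yields a much lower divergence than one that separates them, which is the quantity the full algorithm reduces by gradient descent.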

The UMAP algorithm is a more recent technique distinguished by greater computational efficiency, better retention of global patterns, and a more flexible set of parameters [65]. UMAP constructs a weighted k-nearest-neighbour graph in the high-dimensional space and then optimises a low-dimensional representation to retain local associations together with some global structure. Despite the improved global structure, UMAP embeddings remain stochastic and hyperparameter-sensitive; practitioners should report seeds and configuration and avoid interpreting embedded distances as metric-faithful [65,66].

Such techniques are best exploited in exploratory data analysis or the visual investigation of complex datasets, such as gene expression profiles and word embeddings. Although they are chiefly used for visualisation rather than as feature transformers within predictive modelling, their ability to reveal underlying structure still benefits preprocessing: they can inform feature engineering, support noise identification, and improve clustering. Interpretations, however, need to remain cautious, as distances measured in the low-dimensional representation do not necessarily represent meaningful associations within the original dataset.

DR methods differ not only in their underlying assumptions, but also in how they reveal structure in complex datasets. Table 6 summarises practical trade-offs among PCA, manifold learning, and autoencoders to guide method selection in applied workflows.

Table 6.

Summary of DR methods and their practical trade-offs.

Additionally, Figure 6 compares three widely used techniques: PCA, t-SNE, and VAE. PCA, being linear, captures global variance but may fail to separate nonlinearly distributed classes. In contrast, t-SNE and VAE are better suited for revealing compact, nonlinear clusters in latent space. VAE in particular produces a continuous yet separable embedding that reflects its generative modelling objective.

Figure 6.

Comparison of DR techniques on synthetic data. PCA (left) preserves global variance but fails to separate classes clearly. t-SNE (centre) captures nonlinear clusters effectively. VAE (right) shows continuous but distinct latent structures, as typically produced by VAE. Point colours denote the true class membership, using the same colour scheme across panels.

6. Preprocessing Pipelines and Automation Tools

6.1. Pipeline Design in Scikit-Learn and PyCaret

The creation of reliable and reproducible preprocessing pipelines forms a central component of modern DM and ML approaches (Stages 4–7 in Figure 1). Libraries like scikit-learn and PyCaret facilitate seamlessly integrating preprocessing operations into every step of model creation and assessment, thereby ensuring consistency across process components [31,67].

In the scikit-learn library, the ColumnTransformer and Pipeline objects are essential building blocks [31]. Pipeline enables the definition of a sequence of transformations, such as imputation, scaling, and encoding, applied before an ML estimator. This framework ensures that all preprocessing steps are performed systematically. ColumnTransformer, in turn, applies different preprocessing methods to different subsets of features; for example, it can scale numerical columns while one-hot encoding categorical variables. This allows a more flexible construction of modular pipelines that handle heterogeneous data efficiently. In addition, hyperparameter tuning by grid or randomised search can be applied to entire pipeline configurations rather than to single models alone.

Figure 7 visualises such a pipeline using a ColumnTransformer to separately scale numerical data and encode categorical data before passing the result on to a classifier.

Figure 7.

Modular preprocessing pipeline using scikit-learn ColumnTransformer. Numerical and categorical features are processed in parallel using scaling and encoding, respectively. The resulting feature matrix is passed on to a logistic regression model.
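A pipeline of the kind shown in Figure 7 might be assembled as in the sketch below. The toy DataFrame, its column names, and the choice of `StandardScaler` and `OneHotEncoder` are illustrative assumptions rather than the exact configuration behind the figure.

```python
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Hypothetical customer data with numerical and categorical columns.
df = pd.DataFrame({
    "age": [22, 35, 47, 51, 29, 63, 38, 44],
    "income": [28000, 42000, 61000, 58000, 35000, 72000, 50000, 54000],
    "city": ["A", "B", "A", "C", "B", "C", "A", "B"],
    "churned": [0, 0, 1, 1, 0, 1, 0, 1],
})
numeric_cols, categorical_cols = ["age", "income"], ["city"]

# Numerical and categorical branches are processed in parallel.
preprocess = ColumnTransformer([
    ("num", StandardScaler(), numeric_cols),
    ("cat", OneHotEncoder(handle_unknown="ignore"), categorical_cols),
])

model = Pipeline([
    ("preprocess", preprocess),
    ("clf", LogisticRegression()),
])

X_train = df[numeric_cols + categorical_cols]
model.fit(X_train, df["churned"])

# A new record with a city never seen in training is encoded as all zeros.
new = pd.DataFrame({"age": [40], "income": [52000], "city": ["D"]})
preds = model.predict(new)
n_features = model.named_steps["preprocess"].transform(X_train).shape[1]
```

Because the scaler and encoder are steps of the pipeline, calling `fit` inside cross-validation or a grid search refits them on each training fold automatically.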

PyCaret builds on the capabilities of scikit-learn by providing a low-code application programming interface, which automates several parts of the preprocessing pipeline [67]. Once a dataset is loaded, PyCaret automatically performs several preprocessing operations, such as imputation, feature scaling, encoding, outlier detection, transformation, and feature selection. Such automation lowers the barrier to entry for beginners, while providing experienced practitioners with room for customisation. It also supports experiment logging, model comparison, and ensemble building, making it a valuable resource in both research and production environments.

Another benefit of pipeline architectures is their ability to prevent data leakage: all transformation parameters, including scaling factors and means, are calculated from the training set before being applied to the test set. Additionally, they make model deployment more efficient by bundling the model and its relevant transformations into a single versioned object, which can be stored and reused. This modularity is highly advantageous in system development that requires maintainability and scalability, especially in production environments, where reliability and traceability are important.
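Bundling the preprocessing and the model into one stored object can be sketched with the standard-library `pickle` module. The synthetic data and the two-step pipeline are assumptions; the in-memory round trip stands in for writing to and reading from a versioned artefact store.

```python
import pickle

import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 3))
y = (X[:, 0] + X[:, 1] > 0).astype(int)

# Scaler and classifier are fitted together and travel as one object.
pipeline = make_pipeline(StandardScaler(), LogisticRegression()).fit(X, y)

blob = pickle.dumps(pipeline)      # serialise the fitted pipeline
restored = pickle.loads(blob)      # reload, e.g. in a serving process

same = np.array_equal(pipeline.predict(X), restored.predict(X))
```

Pickled objects should only be loaded from trusted sources, and the library versions used at training time should be recorded alongside the artefact, since unpickling across different versions is not guaranteed to work.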

6.2. DataPrep, Featuretools, and Automated Feature Engineering (AutoFE)

Whilst scikit-learn and PyCaret provide generalisable preprocessing pipelines, some specialist tools focus on automating specific aspects relevant to feature engineering and data preparation. Examples of specialist tools include DataPrep, Featuretools, and some newer libraries created for AutoFE.

DataPrep is a Python library intended to make exploratory data analysis and cleaning easier [68]. It provides sophisticated functionality for normalising column names, standardising date format, filling missing values, and detecting outliers. Additionally, its visual analytical features, such as distributions, correlation matrices, and null heatmaps, help gain insights into data quality before model building commences. Compared to more comprehensive frameworks, DataPrep is distinguished by its lightweight approach and focus on the rapid generation of insights, making it highly useful during the initial stages of DM.

Featuretools is a leading implementation of DFS, a framework that aims to automatically derive new features from relational data structures [28]. By analysing the relationships between tables (e.g., transactions and customers), it creates compound features like “average purchase amount per customer” or “total distinct items purchased last month”. Featuretools works best when applied to normalised schemas or temporal hierarchies, where it can generate hundreds to thousands of candidate features with little or no manual work. Further, its support for distributed computation through Dask and Spark allows it to execute large-scale analytical workflows efficiently.
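To make the kind of feature that DFS derives concrete, the sketch below computes per-customer aggregates by hand with pandas. It deliberately does not use the Featuretools API; the table contents and column names (customer_id, item, amount) are hypothetical, and DFS would automate and stack many aggregations of this form across related tables.

```python
import pandas as pd

# Hypothetical parent table (customers) and child table (transactions).
customers = pd.DataFrame({"customer_id": [1, 2, 3]})
transactions = pd.DataFrame({
    "customer_id": [1, 1, 2, 2, 2],
    "item": ["a", "b", "a", "a", "c"],
    "amount": [10.0, 30.0, 5.0, 15.0, 10.0],
})

# Aggregate the child table per parent key, one named column per primitive.
agg = transactions.groupby("customer_id").agg(
    mean_amount=("amount", "mean"),
    n_transactions=("amount", "count"),
    n_distinct_items=("item", "nunique"),
).reset_index()

# A left join keeps customers without transactions; their counts become 0,
# while their mean amount stays missing because it is undefined.
features = customers.merge(agg, on="customer_id", how="left")
for column in ("n_transactions", "n_distinct_items"):
    features[column] = features[column].fillna(0).astype(int)

print(features)
```

Customer 3 has no transactions, so the counts are zero and the mean is left as missing rather than being silently filled, which keeps the downstream imputation decision explicit.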

Automated feature engineering tools, such as autofeat, tsfresh, and ExploreKit, extend automation through statistical heuristics, information-theoretic principles, or domain-specialised search techniques that identify strongly significant features [29,54]. These tools normally support numerical and categorical inputs, and they can suggest nonlinear transforms, interactions, and heuristics based on domain-relevant expertise. Some AutoML frameworks also integrate AutoFE into their search process, allowing for end-to-end optimisation of features and models. Automation has clear strengths, such as scalability and consistency, but it also has pitfalls, such as feature proliferation, loss of interpretability, and risk of overfitting. Thus, AutoFE needs to be complemented by post-processing and validation methods to ensure that the generated features improve model performance while meeting the task’s objectives.

6.3. Pipeline Serialisation and Reproducibility

A core property of effective DM infrastructures is reproducibility. Preprocessing pipelines deserve special consideration, since inconsistencies in the training or prediction stages can reduce model effectiveness or produce unexpected outcomes (see Section 9.7 for the placement of experiment tracking and versioned redeployments).

Serialisation permits saving a trained pipeline, including all preprocessing components together with the model, in a form that can be loaded later. In Python, commonly used serialisation tools are joblib and pickle; similar functionality exists in most frameworks and programming languages. To support deep learning workflows and cross-platform deployments, Open Neural Network Exchange (ONNX) provides a format for serialising pipelines that focuses on portability and shareability.
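A minimal sketch of the joblib route is given below, assuming scikit-learn and joblib are installed. The file name pipeline.joblib is a hypothetical choice, and a temporary directory is used only so that the example leaves no files behind.

```python
import os
import tempfile

import joblib
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)

# Preprocessing and model are fitted together and stored as one object.
pipe = make_pipeline(StandardScaler(), LogisticRegression(max_iter=500)).fit(X, y)

with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "pipeline.joblib")  # hypothetical file name
    joblib.dump(pipe, path)      # save the fitted pipeline to disk
    restored = joblib.load(path)  # load it back, e.g. in a serving process

# The restored pipeline reproduces the original predictions exactly.
same_predictions = np.array_equal(pipe.predict(X), restored.predict(X))
print("identical predictions:", same_predictions)
```

joblib and pickle files should only be loaded from trusted sources, and loading is generally safest with the same library versions that were used for saving, which is one reason to record the environment alongside the artefact.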

Reproducibility is supported by systematic pipeline definitions, configuration files (e.g., in YAML or JSON), and version control software. For instance, declaring transformation parameters (such as imputation methods, scaling, or chosen features) in a separate configuration file allows the same pipeline to be executed on different data subsets or on different machines with one command. Additionally, software tools such as MLflow, Data Version Control (DVC), or Weights & Biases further support experiment tracking, artefact preservation, and change analysis across pipeline iterations [69].
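One way to separate transformation parameters from code is sketched below. The configuration keys (imputation, scaling, selection), the helper build_pipeline, and the SCALERS lookup are all hypothetical names introduced for illustration; in practice the JSON text would be read from a version-controlled file.

```python
import json

from sklearn.datasets import load_breast_cancer
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.impute import SimpleImputer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import MinMaxScaler, StandardScaler

# Hypothetical configuration; normally stored in its own JSON file.
CONFIG_TEXT = """
{
  "imputation": {"strategy": "median"},
  "scaling": "standard",
  "selection": {"k": 10}
}
"""

# Maps configuration names to scaler classes (an illustrative convention).
SCALERS = {"standard": StandardScaler, "minmax": MinMaxScaler}


def build_pipeline(config):
    """Build a preprocessing-plus-model pipeline from a parsed configuration."""
    return Pipeline([
        ("impute", SimpleImputer(strategy=config["imputation"]["strategy"])),
        ("scale", SCALERS[config["scaling"]]()),
        ("select", SelectKBest(f_classif, k=config["selection"]["k"])),
        ("model", LogisticRegression(max_iter=1000)),
    ])


config = json.loads(CONFIG_TEXT)
pipe = build_pipeline(config)

X, y = load_breast_cancer(return_X_y=True)
pipe.fit(X, y)

# The number of retained features follows the configuration, not the code.
n_selected = int(pipe.named_steps["select"].get_support().sum())
print("selected features:", n_selected)
```

Changing the scaler or the number of selected features then becomes a reviewed change to the configuration file, which is easy to diff and to attach to a tracked experiment.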

A further basic requirement is schema consistency. Data processing pipelines need to include mechanisms for checking that incoming data conform to predetermined formats, column descriptions, and statistical distributions. These checks considerably reduce the risk of runtime faults or hidden failures at the implementation level. In cases where reproducibility is of high priority, such as financial forecasting or medical assessments, inconsistent results across runs or environments raise concerns about regulatory compliance, which underlines the need for transparency and accountability. Finally, reproducibility and serialisation methods are crucial for improving the scalability and reliability of DM systems. Such approaches enable seamless deployment and monitoring of models, promote collaboration, aid debugging, and help to ensure long-term sustainability of analysis endeavours.
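A schema check of the kind described above can be sketched in a few lines of pandas. The expected schema, the value range, and the function name validate_schema are hypothetical; dedicated validation libraries offer richer checks, and this sketch covers only column presence, dtypes, and a simple range rule.

```python
import pandas as pd

# Hypothetical expectations for incoming data.
EXPECTED_SCHEMA = {"age": "int64", "income": "float64", "is_active": "bool"}
EXPECTED_RANGES = {"age": (0, 120)}


def validate_schema(df, schema=EXPECTED_SCHEMA, ranges=EXPECTED_RANGES):
    """Return a list of human-readable schema problems (empty if none)."""
    problems = []
    for column, dtype in schema.items():
        if column not in df.columns:
            problems.append(f"missing column: {column}")
        elif str(df[column].dtype) != dtype:
            problems.append(f"{column}: expected {dtype}, got {df[column].dtype}")
    for column in df.columns:
        if column not in schema:
            problems.append(f"unexpected column: {column}")
    for column, (low, high) in ranges.items():
        if column in df.columns and pd.api.types.is_numeric_dtype(df[column]):
            if not df[column].dropna().between(low, high).all():
                problems.append(f"{column}: values outside [{low}, {high}]")
    return problems


good = pd.DataFrame({
    "age": pd.Series([25, 40], dtype="int64"),
    "income": pd.Series([30000.0, 52000.5], dtype="float64"),
    "is_active": pd.Series([True, False], dtype="bool"),
})
bad = pd.DataFrame({
    "age": pd.Series([25, 400], dtype="int64"),  # out-of-range value
    "income": ["n/a", "52000"],                  # wrong dtype
    "extra": [1, 2],                             # unexpected column
})

good_problems = validate_schema(good)
bad_problems = validate_schema(bad)
print(bad_problems)
```

Returning a list of problems rather than raising on the first one makes it easier to log every violation for a rejected batch before deciding whether to halt the pipeline.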

7. Evaluation of Preprocessing Impact

7.1. Measuring Improvements in Model Accuracy and Stability

Comparing the effectiveness of preprocessing operations is important for understanding their true impact on model performance and ensuring that improvements observed are not a result of random fluctuations or intrinsic properties of the dataset [8]. One common method for comparative assessment is comparative modelling, which involves training a particular ML algorithm and evaluating it with and without a chosen preprocessing operation. Afterwards, differences in performance are measured based on different metrics, such as accuracy, precision, recall, F1-score, or Root Mean Square Error (RMSE).

To move beyond simple point estimates, practitioners commonly use k-fold CV to assess how the effects of preprocessing generalise across different data subsets [44]. This technique helps to control variance and detect overfitting, particularly when sample sizes are small. In such cases, improvements due to preprocessing may not be evident from a single train–test split, yet they tend to become apparent when averaged across multiple folds.

Besides predictive accuracy, stability is a key consideration. A preprocessing pipeline that improves the model accuracy but produces significant variation across different validation folds might not be the best for deployment. Model stability can be measured by the standard deviation of performance metrics across different folds or by exploring feature importance consistency across models [60].
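The comparative modelling and stability ideas above can be combined in a short sketch: the same model is evaluated with and without one preprocessing step on identical folds, and both the fold-wise mean and standard deviation are reported. The choice of dataset (scikit-learn's bundled wine data), model (an RBF support vector classifier), and five folds are assumptions made for illustration.

```python
from sklearn.datasets import load_wine
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = load_wine(return_X_y=True)

# A fixed, shared splitter ensures both candidates see the same folds.
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

candidates = {
    "no_scaling": make_pipeline(SVC()),
    "with_scaling": make_pipeline(StandardScaler(), SVC()),
}

results = {}
for name, pipe in candidates.items():
    scores = cross_val_score(pipe, X, y, cv=cv, scoring="accuracy")
    # Mean summarises accuracy; standard deviation summarises stability.
    results[name] = (scores.mean(), scores.std())
    print(f"{name}: {scores.mean():.3f} ± {scores.std():.3f}")
```

Reporting the spread next to the mean makes it visible when an apparent gain is smaller than the fold-to-fold variation and therefore not convincing on its own.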

Preprocessing methods such as scaling and imputation affect not only model accuracy but also how models interpret and weight input features. Imputation handles missing values and is required before training models that do not natively support NaN values (e.g., logistic regression). Figure 8 shows the absolute coefficients of a logistic regression model trained on data that was only imputed, compared with one trained on data that was imputed and then scaled. Because logistic regression coefficients are sensitive to feature scale, this demonstrates how scaling changes apparent feature importance, supporting the statement that even simple preprocessing notably affects a model’s internal representation.

Figure 8.

Feature influence shift after scaling. A logistic regression model trained with imputation only versus imputation and scaling shows substantial changes in coefficient magnitudes, highlighting the importance of feature normalisation in linear models.
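A comparison in the spirit of Figure 8 can be sketched as follows. This is not the code or data behind the figure: the dataset (scikit-learn's breast cancer data), the artificially injected 5% missing values, and the mean imputation strategy are all assumptions made so that the example is self-contained.

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.impute import SimpleImputer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

data = load_breast_cancer()
X, y = data.data.copy(), data.target

# Inject roughly 5% missing values at random (an assumption for illustration).
rng = np.random.default_rng(0)
X[rng.random(X.shape) < 0.05] = np.nan

impute_only = make_pipeline(
    SimpleImputer(strategy="mean"),
    LogisticRegression(max_iter=10000),
)
impute_and_scale = make_pipeline(
    SimpleImputer(strategy="mean"),
    StandardScaler(),
    LogisticRegression(max_iter=10000),
)
impute_only.fit(X, y)
impute_and_scale.fit(X, y)

# Absolute coefficients of the final (logistic regression) step.
coef_raw = np.abs(impute_only[-1].coef_.ravel())
coef_scaled = np.abs(impute_and_scale[-1].coef_.ravel())

# Rank features by coefficient magnitude under each preprocessing variant.
top_raw = [data.feature_names[i] for i in np.argsort(coef_raw)[::-1][:5]]
top_scaled = [data.feature_names[i] for i in np.argsort(coef_scaled)[::-1][:5]]
print("imputation only:       ", top_raw)
print("imputation and scaling:", top_scaled)
```

Without scaling, coefficient magnitudes partly reflect the units of each feature, so they are only comparable across features once the inputs share a common scale.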

The effectiveness of preprocessing procedures can also be evaluated indirectly by tracking improvements in convergence rates, training times, or resource utilisation efficiency [30]. For instance, even when normalisation or DR does not increase accuracy, it can reduce computational costs considerably or allow more complex models to be trained within set time constraints. Additionally, visualisation tools, like learning curves or confusion matrices, can be used to gain insight into how preprocessing decisions impact error distributions and general model effectiveness.

Above all, the case for choosing particular preprocessing methods has to be grounded in performance metrics, stability tests, and interpretability assessments. Methods like ablation studies can be used, in which elements are progressively removed from a preprocessing pipeline to estimate the contribution of each element. Under such a comprehensive assessment framework, preprocessing is no longer treated as a black box; instead, it strengthens DM pipelines by improving awareness, reproducibility, and robustness.
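An ablation study of the kind mentioned above can be sketched by removing one preprocessing step at a time and re-evaluating on the same folds. The two steps (scaling and univariate selection with k=10), the dataset, and the helper names make_steps and evaluate are hypothetical choices for illustration.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)


def make_steps():
    """Return fresh instances of the preprocessing steps under study."""
    return [
        ("scale", StandardScaler()),
        ("select", SelectKBest(f_classif, k=10)),
    ]


def evaluate(steps):
    """Cross-validate the given preprocessing steps with a fixed model."""
    pipe = Pipeline(steps + [("model", LogisticRegression(max_iter=10000))])
    scores = cross_val_score(pipe, X, y, cv=cv, scoring="accuracy")
    return scores.mean(), scores.std()


# Baseline with every step, then one variant per removed step.
ablation = {"full": evaluate(make_steps())}
for name, _ in make_steps():
    reduced = [step for step in make_steps() if step[0] != name]
    ablation[f"without_{name}"] = evaluate(reduced)

for variant, (mean, std) in ablation.items():
    print(f"{variant}: {mean:.3f} ± {std:.3f}")
```

The difference between the full pipeline and each reduced variant gives a rough estimate of that step's contribution, although interactions between steps mean the contributions need not add up.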

7.2. Bias–Variance Implications

The choice of preprocessing procedures has a strong impact on the bias–variance trade-off underlying generalisable predictive models. Bias is the error due to overly simple representations in the model, while variance is the error due to the model’s sensitivity to slight variations in the training set. Certain preprocessing procedures decrease bias while increasing variance, and vice versa, so their combined effects need to be understood.

The feature selection process, for instance, can significantly reduce variance by eliminating noisy or collinear features, which tend to lead to overfitting on the training data [70]. On the other hand, too aggressive selection methods can add bias by eliminating informative features that are weak in signal. Similarly, outlier removal often reduces variance by removing extreme data points that play a significant role in model parametrisation. However, in real-world applications, like fraud detection or disease diagnosis, such outliers can represent infrequent yet significant cases, and removing them can increase bias by degrading the model’s capability to detect important edge cases.

Normalisation and scaling methods can improve the convergence reliability of gradient-based methods, thereby reducing model variance [71]. However, if normalisation distorts feature distributions or hides domain semantics, it effectively adds bias. When used carefully, DR techniques, like PCA, can reduce both bias and variance; however, blind adoption of such techniques can lead to the loss of important information.

To estimate such trade-offs, practitioners often use learning curves describing the behaviour of training and validation performance versus sample size [72]. Preprocessing that promotes generalisable representations should yield a low generalisation error across the whole curve. In addition, special techniques, such as regularisation, can be combined with preprocessing to explicitly trade bias against variance and thereby reduce negative consequences from data preparation. In short, bias–variance trade-off assessment requires both empirical exploration and deep knowledge of the application domain. Measuring mean accuracy alone does not suffice; it is critical to also consider the consistency, fairness, and interpretability of model behaviour in different subgroups and settings. Thus, preprocessing should be treated not as a static process, but as a set of tunable choices that considerably affect the underlying learning dynamics.
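Learning curves of this kind can be produced with scikit-learn's learning_curve utility, as in the sketch below. The dataset, the five training-set sizes, and the scaled logistic regression pipeline are assumptions for illustration; the gap between training and validation scores is used here as a rough indicator of variance.

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, learning_curve
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)
pipe = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

# Scores are computed per fold for each training-set size; the pipeline is
# refitted inside every fold, so preprocessing never sees validation data.
train_sizes, train_scores, val_scores = learning_curve(
    pipe, X, y,
    cv=cv,
    train_sizes=np.linspace(0.1, 1.0, 5),
    scoring="accuracy",
    shuffle=True,
    random_state=0,
)

train_mean = train_scores.mean(axis=1)
val_mean = val_scores.mean(axis=1)
gap = train_mean - val_mean  # a large gap suggests high variance

for n, tr, va in zip(train_sizes, train_mean, val_mean):
    print(f"n={n:4d}  train={tr:.3f}  validation={va:.3f}")
```

Repeating the same procedure for each preprocessing variant on the same splits allows the curves to be compared directly: low scores on both curves point to bias, whereas a persistent gap points to variance.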

- Protocol (controlling confounders).

To isolate the effect of a single preprocessing step (e.g., feature selection), imputation, encoding, scaling, and the downstream model/hyperparameters are held fixed; only the focal step varies across candidate settings. Evaluation uses nested or repeated k-fold CV, and results are reported as fold-wise mean ± SD. Learning curves (train/validation performance vs. sample size) are produced within the same splits to diagnose bias–variance behaviour. An ablation study removes or replaces the focal step to confirm the necessity. Reporting includes calibration (Brier score/ECE), selection or transformation stability across folds, and—where applicable—subgroup fairness metrics (see Section 9.3 for a general evaluation checklist).
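Part of this protocol can be sketched as follows, with feature selection as the focal step. Imputation, scaling, the model, and its hyperparameters are held fixed while only the number of selected features k varies; the candidate values of k, the dataset, and the helper name make_pipeline_with_k are hypothetical. The sketch uses repeated k-fold CV with accuracy and the Brier score, and omits the nested loop, ECE, stability, and fairness reporting for brevity.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.impute import SimpleImputer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import RepeatedStratifiedKFold, cross_validate
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)

# The same repeated splits are reused for every candidate setting.
cv = RepeatedStratifiedKFold(n_splits=5, n_repeats=2, random_state=0)


def make_pipeline_with_k(k):
    """Fixed imputation, scaling, and model; only the selection size k varies."""
    return Pipeline([
        ("impute", SimpleImputer(strategy="median")),
        ("scale", StandardScaler()),
        ("select", SelectKBest(f_classif, k=k)),
        ("model", LogisticRegression(C=1.0, max_iter=1000)),
    ])


report = {}
for k in (5, 15, 30):  # illustrative candidate settings; 30 keeps all features
    out = cross_validate(
        make_pipeline_with_k(k), X, y,
        cv=cv,
        scoring=("accuracy", "neg_brier_score"),
    )
    report[k] = {
        "accuracy_mean": out["test_accuracy"].mean(),
        "accuracy_sd": out["test_accuracy"].std(),
        # The scorer returns the negated Brier score, so the sign is flipped.
        "brier_mean": -out["test_neg_brier_score"].mean(),
    }

for k, row in report.items():
    print(
        f"k={k:2d}  accuracy={row['accuracy_mean']:.3f} ± {row['accuracy_sd']:.3f}"
        f"  Brier={row['brier_mean']:.3f}"
    )
```

Setting k to the full feature count acts as the ablation baseline in which the focal step has no effect, so the other rows can be read as differences from that reference.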

7.3. Interaction with Downstream Mining Tasks

Different DM tasks respond differently to preprocessing techniques. What improves the effectiveness of one task can compromise performance in another, so task-specific evaluation becomes necessary when constructing preprocessing workflows.

For instance, normalisation is critical for distance-based models like k-NN or k-means clustering, where features with larger numeric ranges otherwise dominate distance calculations and lead to skewed clusters or nearest neighbours [73]. However, for tree-based models, normalisation often has a negligible impact, as these models are scale-invariant. Applying unnecessary normalisation in such cases adds complexity without benefit and can sometimes interfere with interpretability.
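The contrast between distance-based and tree-based models can be checked empirically with a small sketch. It assumes scikit-learn's bundled wine dataset, whose features have very different ranges, and evaluates a k-NN classifier and a decision tree with and without standardisation on the same folds; the helper name mean_accuracy is hypothetical.

```python
from sklearn.datasets import load_wine
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

X, y = load_wine(return_X_y=True)
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)


def mean_accuracy(model, scale):
    """Cross-validated accuracy of a model, optionally preceded by scaling."""
    steps = [StandardScaler(), model] if scale else [model]
    return cross_val_score(make_pipeline(*steps), X, y, cv=cv).mean()


accuracy = {
    ("knn", "raw"): mean_accuracy(KNeighborsClassifier(), scale=False),
    ("knn", "scaled"): mean_accuracy(KNeighborsClassifier(), scale=True),
    ("tree", "raw"): mean_accuracy(DecisionTreeClassifier(random_state=0), scale=False),
    ("tree", "scaled"): mean_accuracy(DecisionTreeClassifier(random_state=0), scale=True),
}

for (model, variant), value in accuracy.items():
    print(f"{model:4s} {variant:6s} accuracy={value:.3f}")
```

On this dataset the k-NN score typically changes markedly with scaling, whereas the decision tree score stays essentially the same, because tree splits depend on the ordering of feature values rather than on their magnitudes.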

Similarly, DR through procedures like PCA or autoencoders can enable clustering or visualisation by uncovering latent group structures, but it can obscure the interpretable relationships needed to support rule mining or feature attribution [63]. In association rule mining, where detecting the presence or absence of specific categorical items plays a significant role, encoding protocols should preserve discrete semantics; excessive merging of categories to reduce cardinality could cause vital co-occurrence patterns to be lost [74].

In classification and regression, it is critical that feature encoding and imputation of missing values be aligned with the predictive target. For example, target encoding uses information derived from the response variable and runs a risk of leakage if it is not implemented within a cross-validated framework [50]. In time-series analysis, preprocessing has to honour temporal ordering to avoid look-ahead bias, which requires special-purpose imputation and data splitting techniques.
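The leakage risk of target encoding can be illustrated with a minimal out-of-fold sketch. The function name oof_target_encode, the toy categories, and the fallback to the fold-level mean for unseen categories are assumptions for illustration; smoothing and other refinements used in practice are omitted.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import KFold


def oof_target_encode(categories, target, n_splits=5, random_state=0):
    """Encode each row with category means computed on the other folds only."""
    categories = pd.Series(categories).reset_index(drop=True)
    target = pd.Series(target, dtype="float64").reset_index(drop=True)
    encoded = pd.Series(np.nan, index=categories.index, dtype="float64")
    kfold = KFold(n_splits=n_splits, shuffle=True, random_state=random_state)
    for fit_idx, enc_idx in kfold.split(categories):
        # Category means are estimated without the rows being encoded.
        fit_means = target.iloc[fit_idx].groupby(categories.iloc[fit_idx]).mean()
        # Categories unseen in the fitting folds fall back to the overall mean.
        fallback = target.iloc[fit_idx].mean()
        values = categories.iloc[enc_idx].map(fit_means).fillna(fallback)
        encoded.iloc[enc_idx] = values.to_numpy()
    return encoded


# Toy data: the last row belongs to a category that occurs only once.
cats = ["a"] * 10 + ["b"] * 10 + ["rare"]
y = [1] * 8 + [0] * 2 + [0] * 8 + [1] * 2 + [1]

# Naive encoding uses every row, so the rare row is encoded with its own label.
naive = pd.Series(y, dtype="float64").groupby(pd.Series(cats)).transform("mean")
encoded = oof_target_encode(cats, y, n_splits=3)

print("naive encoding of rare row:      ", naive.iloc[-1])
print("out-of-fold encoding of rare row:", round(encoded.iloc[-1], 3))
```

In the naive variant the single-occurrence category is mapped exactly to its own target value, which is the leakage the text warns about, while the out-of-fold variant never uses a row's own label to encode it. For time-series data the random folds used here would need to be replaced by splits that respect temporal order.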

The evaluation of preprocessing effectiveness should, thus, include not only traditional measures, but also goals related to particular tasks, e.g., clustering quality (e.g., silhouette score), comprehensibility (e.g., rule lengths), and critical business performance measures (e.g., false negative rate in fraud prevention). Suggested approaches include the creation of dual pipelines tailored for different tasks and methodical comparisons of outcomes in a structured and transparent framework.

8. Open-Source Libraries and Frameworks

The development of the open-source preprocessing ecosystem has led to the appearance of three closely related categories that support data preprocessing at different abstraction levels: (i) general-purpose ML libraries, providing explicit, composable transformers and pipelines (e.g., scikit-learn, PyCaret, and MLJ); (ii) AutoML systems concurrently searching for preprocessing choices and model hyperparameters, such as H2O AutoML, auto-sklearn, and Tree-based Pipeline Optimisation Tool (TPOT); (iii) notebook-based experimental ecosystems improving exploratory analysis, reporting, and reproducibility (demonstrated by Jupyter, featuring profiling, tracking, and versioning extensions). A quick comparison of such representative tools is presented in Table 7. In addition, in the following subsections, each category is placed in the broader context, explaining the trade-offs concerning automation control and integrating them into the end-to-end pipelines described in Section 6.

Table 7.