Creativeable: Leveraging AI for Personalized Creativity Enhancement

Abstract

1. Introduction

1.1. Existing Creativity Training Programs

1.2. AI and Creativity Enhancement

1.3. Creativity Support Tools

1.4. Story Writing as a Creativity Training Task

1.5. The Current Study

2. Materials and Methods

2.1. Participants

2.2. Creativeable

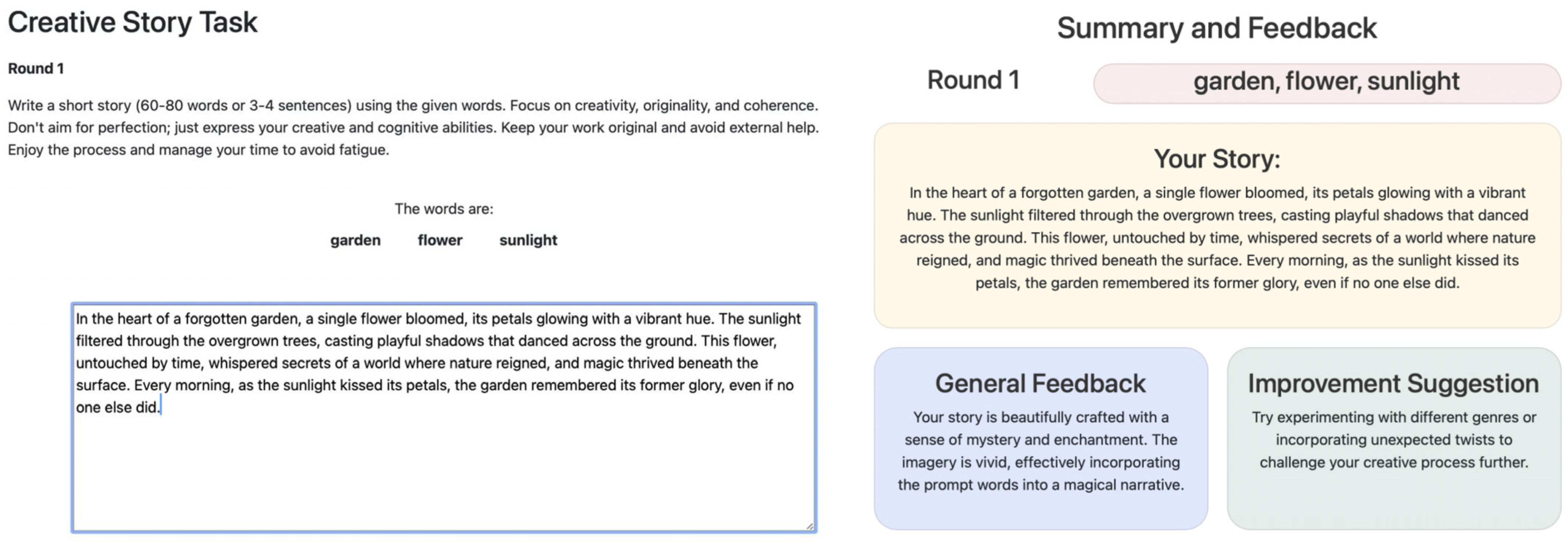

2.2.1. Creativeable Website

2.2.2. Story Writing Task (SWT)

2.2.3. SWT Stimuli

2.2.4. Experimental Creativeable Conditions

Feedback with Varying Difficulty Level (F/VL)

Feedback with Constant Difficulty Level (F/CL)

No Feedback, Varying Difficulty Level (NF/VL)

No Feedback, Constant Difficulty Level (NF/CL)

2.2.5. Evaluating the Creativity of the Stories

2.2.6. Adaptive Difficulty Level Policy

2.2.7. Personalized Feedback and Suggestions for Improvement

2.3. Creativity Assessment

2.4. Fluency Assessment

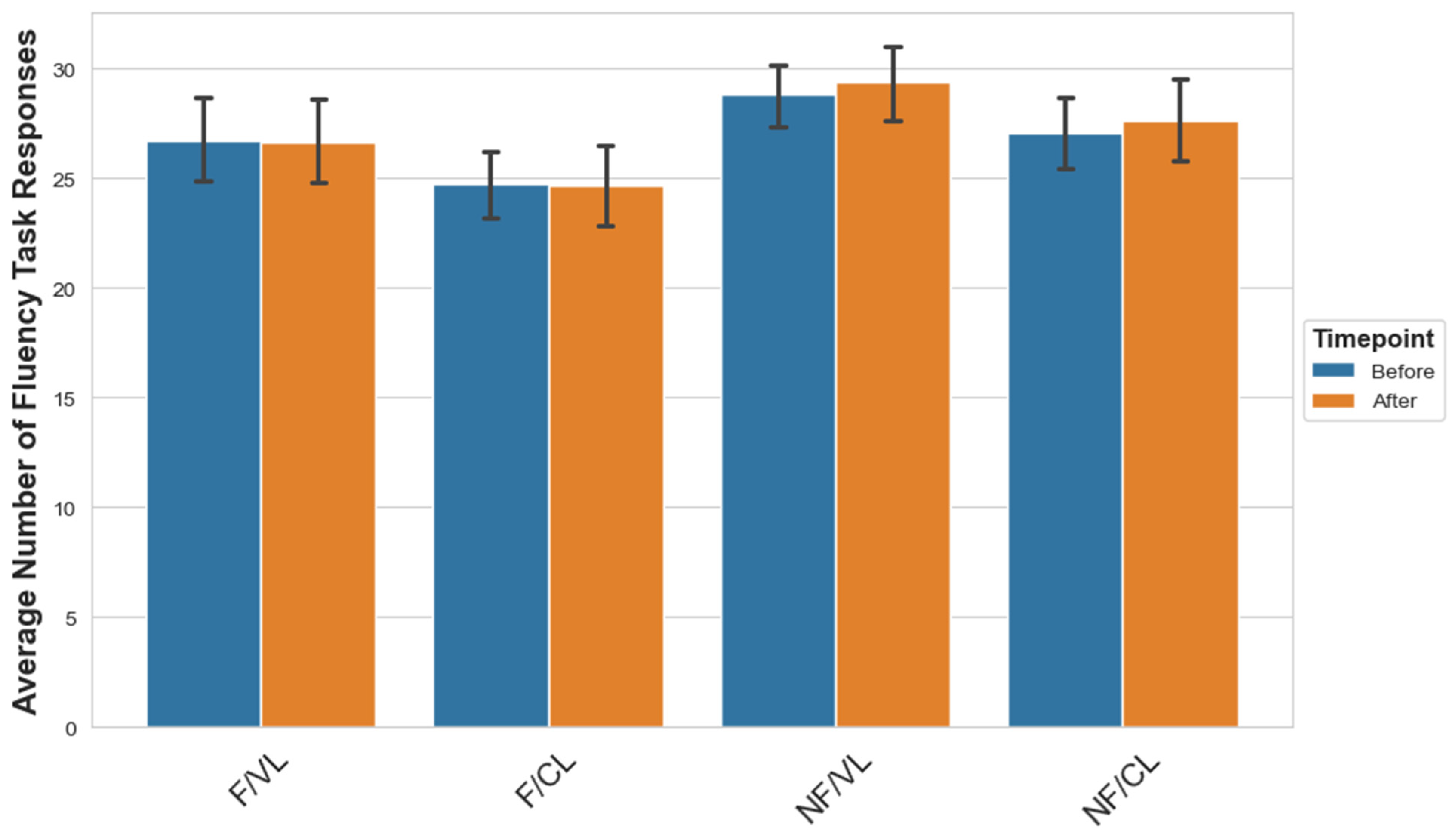

2.5. Procedure

3. Results

3.1. Validation of Experimental Design

3.1.1. Perceived Task Difficulty Through Training Rounds

3.1.2. Self-Reported Fatigue Through Training Rounds

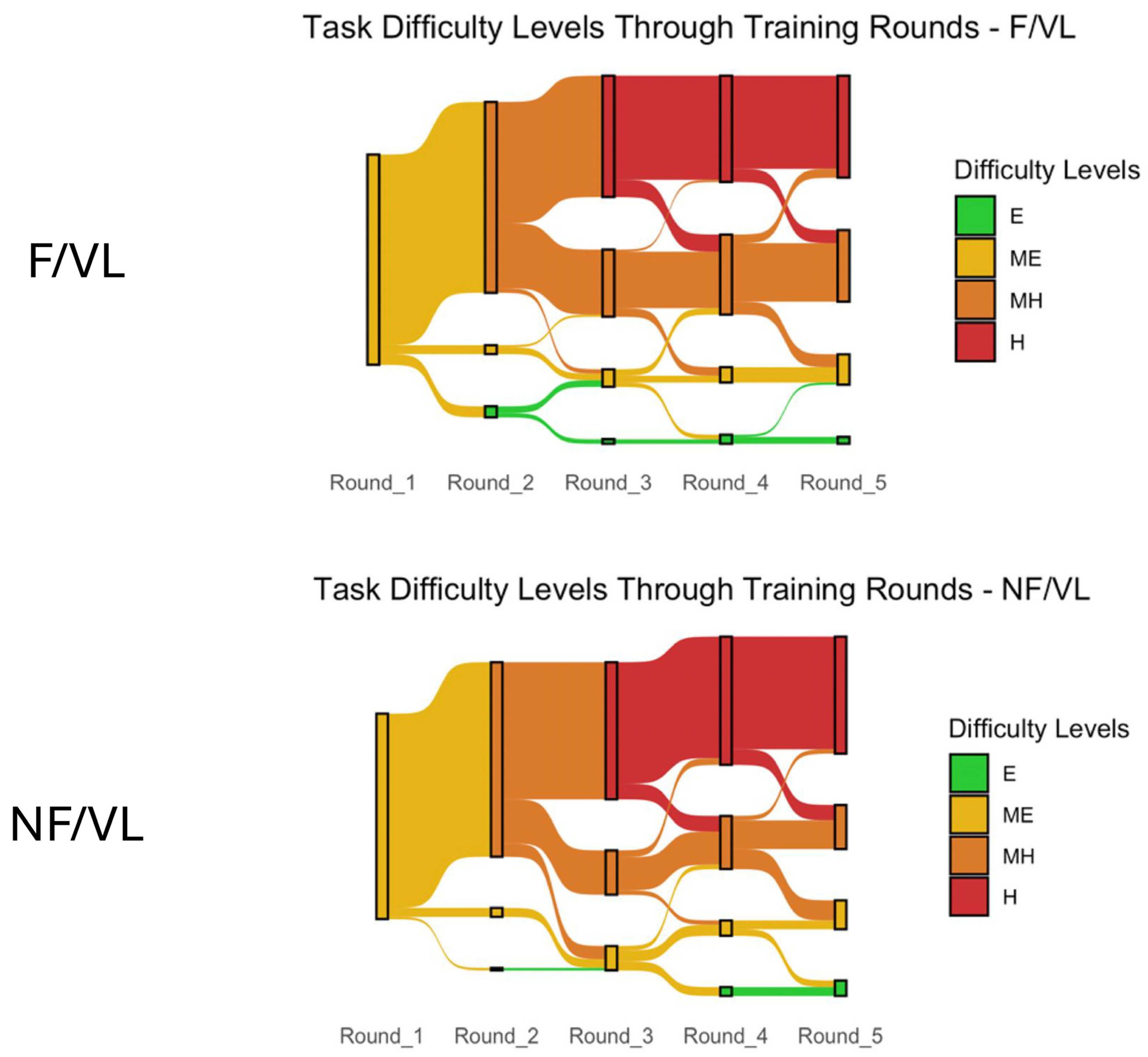

3.1.3. Task Difficulty Levels Through Training Rounds

3.2. Creativity and Performance Results

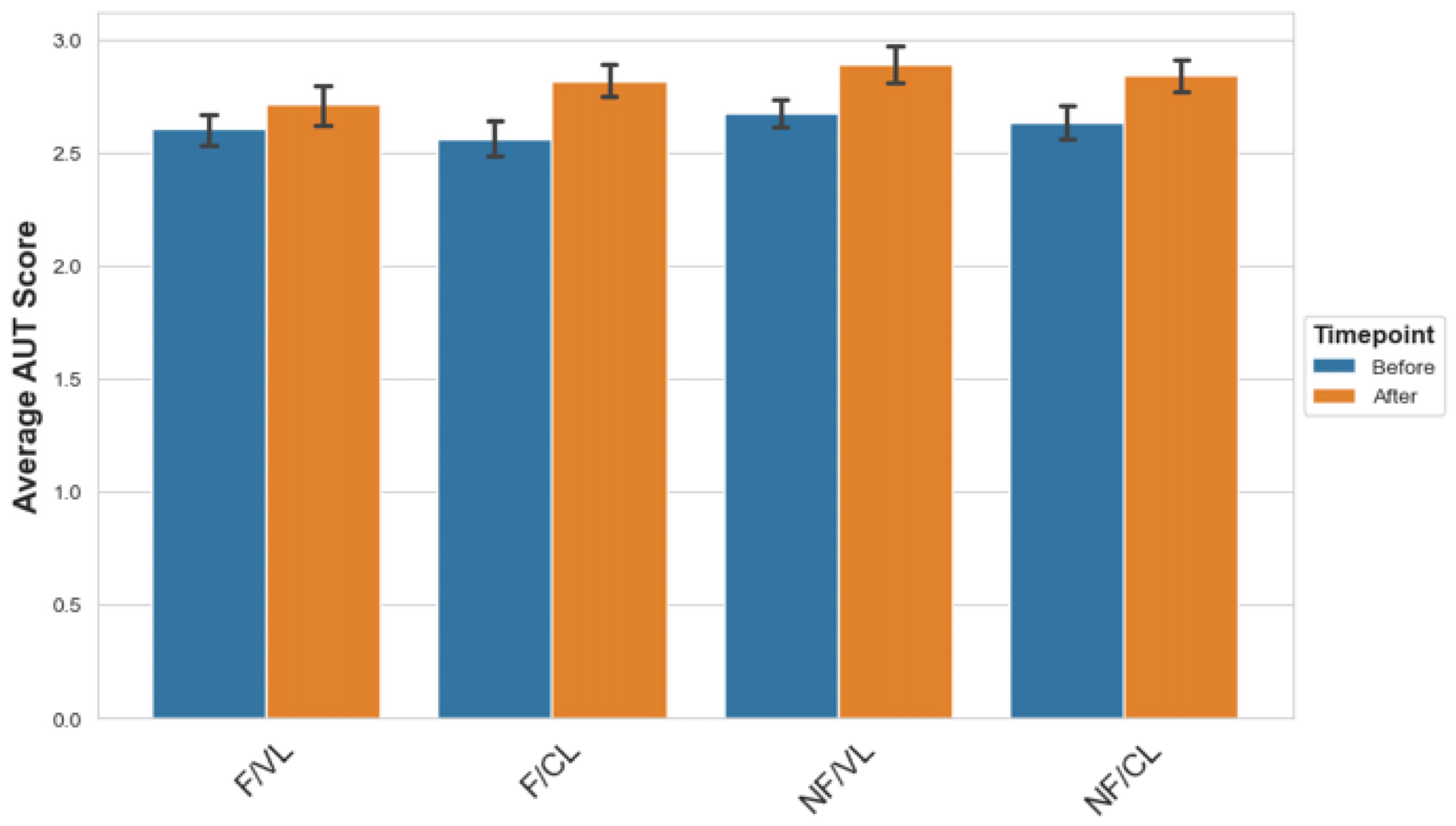

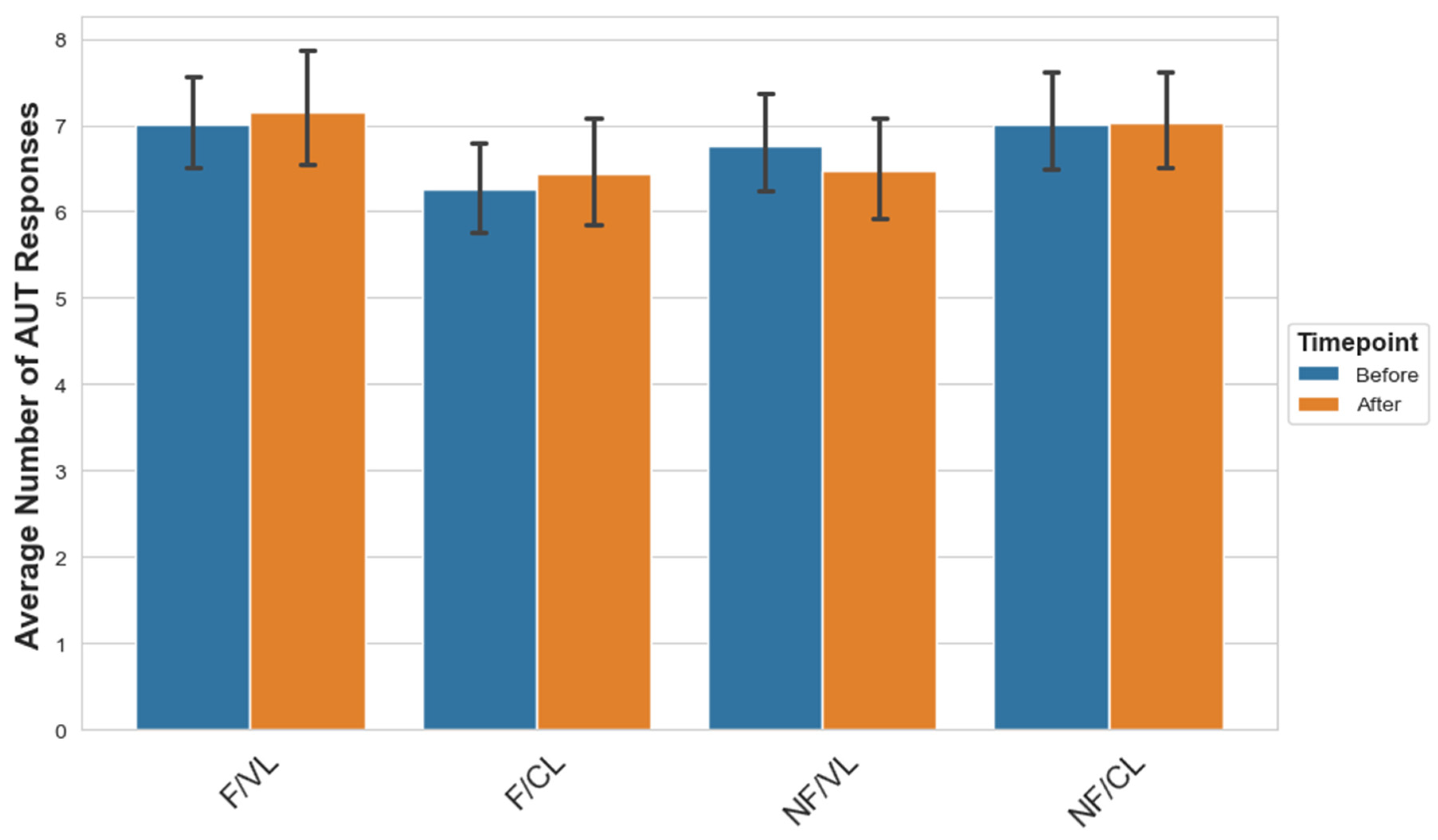

3.2.1. Creativity Improvements Across Conditions

3.2.2. Creativity Scores Across Training Rounds

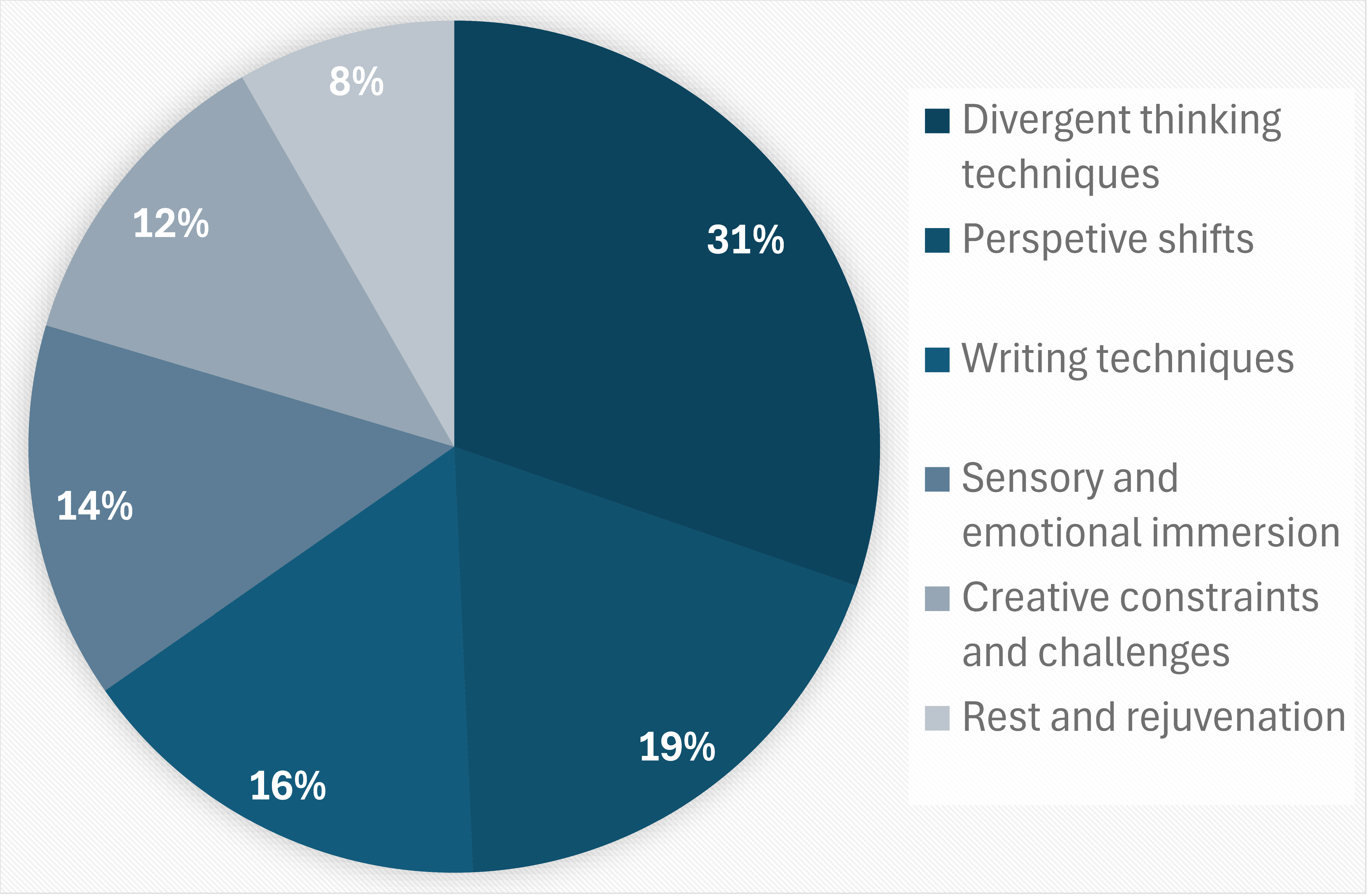

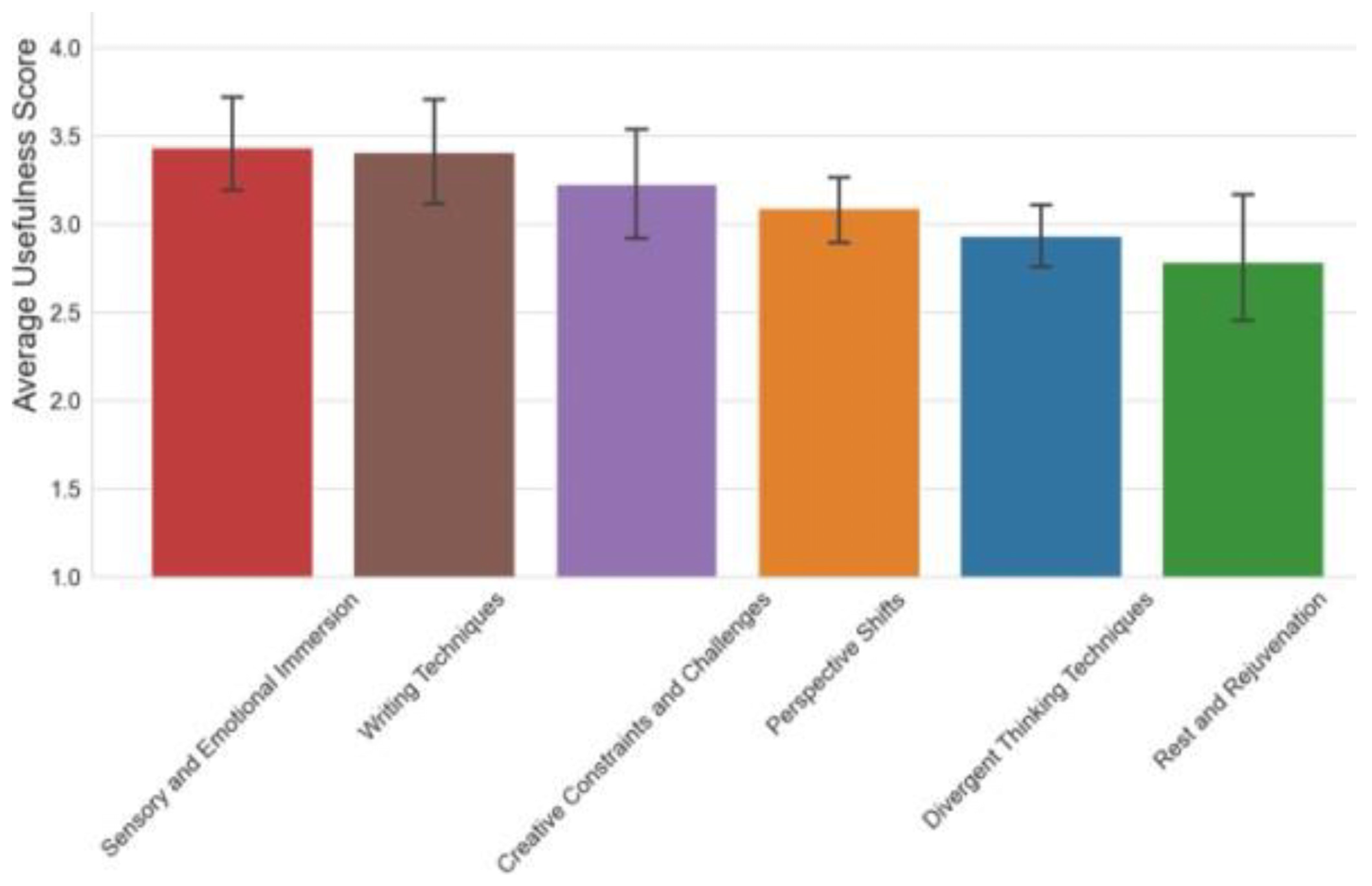

3.3. Exploratory Insights on Feedback

3.3.1. Transfer Feedback

3.3.2. Participant Experiences with Improvement Suggestions in the Feedback Conditions

3.3.3. Changes in Motivation and Engagement During the Training

4. Discussion

4.1. Comparing with Existing Training Programs

4.2. Effectiveness of Creativity Enhancement

4.3. AI-Generated Feedback in Creativity Enhancement

4.4. Implications for Future Creativity Training Programs

4.5. Limitations and Future Research

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AUT | Alternative Uses Task |
| CST | Creativity Support Tool |
| DT | Divergent thinking |
| F/CL | Feedback/Constant Level condition |
| F/VL | Feedback/Varying Level condition |
| LLM | Large Language Model |
| NF/CL | No Feedback/Constant Level condition |
| NF/VL | No Feedback/Varying Level condition |
| RAT | Remote Associates Task |
| SFT | Semantic Fluency Task |
| SWT | Story Writing Task |

| | F/VL | F/CL | NF/VL | NF/CL |
|---|---|---|---|---|
| N | 97 | 98 | 93 | 97 |
| M/F | 41/55 | 57/41 | 42/51 | 39/57 |
| Age | 31.7 (8.2) | 28.8 (8.43) | 29.12 (8.0) | 29.0 (8.06) |
| Education | 14.21 (2.76) | 13.9 (1.85) | 13.87 (2.0) | 14.27 (2.29) |

| Examples of General Feedback Provided by the AI Trainer |
|---|
| The story is a thoughtful commentary on societal norms surrounding poetry, effectively using the prompt words to highlight feelings of disconnection. It could be enriched by a vivid scene or character to ground these abstract concepts. |
| The story presents a cynical, introspective tone that effectively incorporates the given words but could benefit from a clearer narrative or emotional arc. |
| The story cleverly integrates the three required words, painting an image of escapism and the juxtaposition of mundane life with grandiose dreams. However, the connection between the man’s transformation and the context (bowling and astronomy) could be more cohesive and purposeful. |
| The story features an unexpected twist on the concept of romance and incorporates the prompt words with a touch of humor. Be cautious with sensitive topics to ensure they’re handled appropriately and with the consideration of potential readers. |
| The story paints a vivid image of an evocative and historically rich setting, successfully weaving the prompt words into a tapestry of jazz culture. The tale provides a fanciful origin story for jazz that invites readers to visualize the unconventional logo and the atmosphere within the club. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kreisberg-Nitzav, A.; Kenett, Y.N. Creativeable: Leveraging AI for Personalized Creativity Enhancement. AI 2025, 6, 247. https://doi.org/10.3390/ai6100247
