Digital Technologies Impact on Healthcare Delivery: A Systematic Review of Artificial Intelligence (AI) and Machine-Learning (ML) Adoption, Challenges, and Opportunities

Abstract

1. Introduction

Rationale of Research

2. Materials and Methods

2.1. Study Protocol

2.2. The Objectives of the Study Were To

- evaluate the impact and potential of AI and ML adoption in healthcare delivery;

- examine the challenges and ethical dilemmas presented by AI and ML adoption and implementation in healthcare settings;

- explore the opportunities and prospects presented by AI and ML adoption for optimizing healthcare delivery.

2.3. Method of Data Collection

2.4. Search Strategy

2.5. Framing of Search Query

2.6. Database Search Procedure and Search Strategy

2.7. Inclusion and Exclusion Criteria

2.8. Titles and Abstracts Screening

2.9. Studies Selection

2.10. Quality Assessment of Included Studies

2.11. Data Synthesis

3. Results

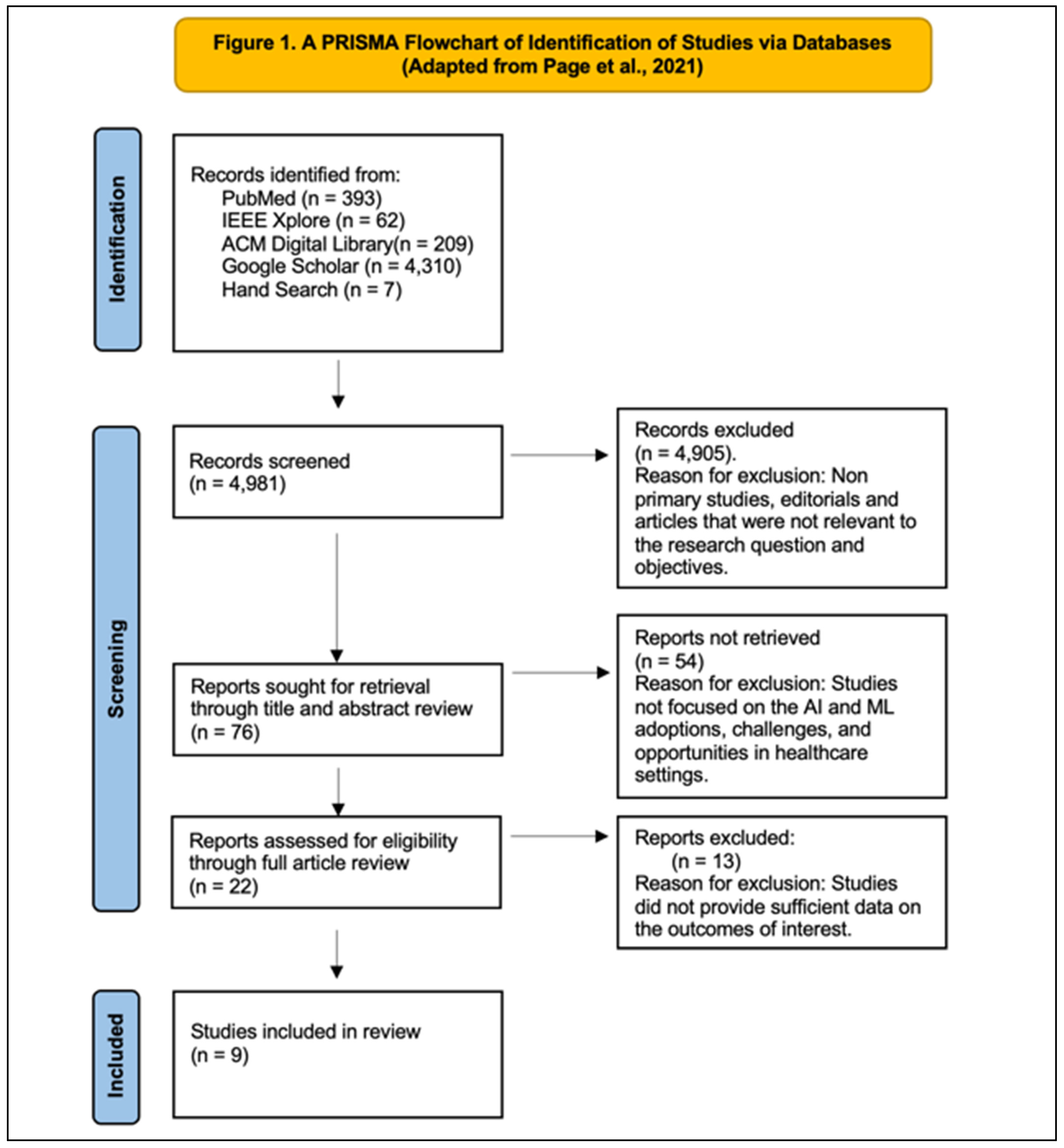

3.1. PRISMA Flow Chart

3.2. Data Extraction of Included Studies

3.3. Study Characteristics

3.4. Data Extraction and Synthesis

| Author | Petersson et al. [25] | Pumplun et al. [26] | Liyanage et al. [27] | Sun and Medaglia [28] | Alanazi [29] | Blease et al. [30] | Dumbach et al. [31] | Lammons et al. [32] | Katirai et al. [33] |
|---|---|---|---|---|---|---|---|---|---|
| Title | Challenges to Implementing Artificial Intelligence in Healthcare: A Qualitative Interview Study with Healthcare Leaders in Sweden | Adoption of Machine Learning Systems for Medical Diagnostics in Clinics: Qualitative Interview Study | Artificial Intelligence in Primary Health Care: Perceptions, Issues, and Challenges | Mapping the Challenges of Artificial Intelligence in the Public Sector: Evidence from Public Healthcare | Clinicians’ Views on Using Artificial Intelligence in Healthcare: Opportunities, Challenges, and Beyond | Artificial Intelligence and the Future of Primary Care: Exploratory Qualitative Study of UK General Practitioners’ Views | The Adoption of Artificial Intelligence in SMEs—A Cross-National Comparison in German and Chinese Healthcare | Centering Public Perceptions on Translating AI Into Clinical Practice: Patient and Public Involvement and Engagement Consultation Focus Group Study | Perspectives on Artificial Intelligence in Healthcare From a Patient and Public Involvement Panel in Japan: An Exploratory Study |
| Publication Year | 2022 | 2021 | 2019 | 2019 | 2023 | 2019 | 2021 | 2023 | 2023 |
| Extraction Date | 30 March 2024 | 30 March 2024 | 30 March 2024 | 30 March 2024 | 30 March 2024 | 30 March 2024 | 30 March 2024 | 30 March 2024 | 30 March 2024 |
| Study Country | Sweden | Germany | Australia, Belgium, Canada, Croatia, Italy, New Zealand, Spain, United Kingdom, and USA | China | Saudi Arabia (Riyadh) | United Kingdom (UK) | Germany and China | United Kingdom (UK) | Japan |
| Article Source | BMC Health Services Research | Journal of Medical Internet Research | Yearbook of Medical Informatics | Government Information Quarterly | Cureus | Journal of Medical Internet Research | CEUR-WS | Journal of Medical Internet Research | Frontiers in Digital Health |
| Study Purpose | The study aimed to explore the challenges perceived by healthcare leaders in a regional Swedish healthcare setting regarding the implementation of artificial intelligence (AI) in healthcare. | To explore the factors influencing the adoption of machine-learning systems for medical diagnostics in clinics and to provide insights into measuring a clinic’s status quo in the adoption process. | To form a consensus on perceptions, issues, and challenges of AI in primary care. | To map the challenges in the adoption of artificial intelligence (AI) in the public healthcare sector as perceived by key stakeholders. | The study aimed to explore the current and potential uses of artificial intelligence (AI) in healthcare from the perspective of clinicians and to examine the challenges associated with its implementation. | The study aimed to explore the views of UK general practitioners (GPs) on the potential impact of future technology on key tasks in primary care. | The study aimed to examine the current status of AI development and adoption, the perceived advantages and challenges of AI, and the expected development and implementation of AI in healthcare over the next five years. | To understand patients’ and the public’s perceived benefits and challenges of AI and to clarify how best to conduct patient and public involvement and engagement (PPIE) in projects translating AI into clinical practice, given public perceptions of AI. | To explore the perspectives of a Patient and Public Involvement Panel in Japan on the use of artificial intelligence (AI) in healthcare. |
| Study Design | Qualitative interview study | Qualitative interview study | Three-round Delphi qualitative study | Qualitative case study | Qualitative study using focus group interviews | Exploratory qualitative study | Qualitative multiple-case expert interviews | Qualitative focus group study | Qualitative research design |
| Study Population and Participant Selection Criteria | Healthcare leaders in Sweden | Medical experts from clinics and their suppliers, with in-depth knowledge of machine learning | Experts in primary health care informatics and clinicians | Government policymakers, hospital managers/doctors, and information technology (IT) firm managers | Clinicians with an interest in AI-enabled health technology | UK general practitioners (GPs), selected according to gender and age | Industry experts from small and medium-sized enterprises (SMEs) in the healthcare sector in Germany and China | Public collaborators with a special interest in AI, representing 7 National Institute for Health and Care Research (NIHR) Applied Research Collaborations across England | Members of a Patient and Public Involvement Panel in Japan, selected to ensure diverse perspectives and knowledge |
| Sample Size | 26 | 22 | Round 1 (n = 20), Round 2 (n = 12), Round 3 (n = 8) | 20 | 26 | 720 | 14 | 17 | 11 |
| Setting | Regional Swedish healthcare setting | Clinics | Primary healthcare setting | Public healthcare sector in China | Healthcare-delivery context and the integration of AI with electronic health record (EHR) systems | GPs practicing in various primary care settings in the UK | Healthcare sector, focusing on SMEs in Germany and China | NIHR Applied Research Collaborations across England | Online setting using the Apisnote platform |
| Validity and Reliability of Findings | The study adhered to the Consolidated Criteria for Reporting Qualitative Research (COREQ) checklist, ensuring methodological rigor and quality standards. Snowball recruitment was used to select participants from a diverse sample. Semi-structured interviews conducted by trained researchers are clearly outlined and therefore contribute to data reliability. Qualitative content analysis enhanced reliability and consistency. Examining healthcare leaders’ perceptions qualitatively enhanced the study’s validity, transparency, and reflexivity, and professional translation of quotations enhanced the credibility and trustworthiness of the study. | In-depth qualitative interviews with experts added to the rigor of the study. Data collection and analysis included theoretical sampling, iterative coding, and multi-researcher triangulation, adding further to the rigor and reliability of the study. Use of the NASSS theoretical framework for analysis and model development, together with triangulation of data sources, ensured internal validity. | The structured three-round Delphi study, with clear guidelines and objectives, ensured rigor, credibility, and consistency. Reliability was supported by a panel of experts (20 in the first round) participating across multiple rounds. Internal validity is demonstrated through the systematic approach taken in each Delphi round and the appropriateness of the data-collection method. | The use of multiple data sources and rigorous analysis techniques enhanced the reliability of the findings. However, there is no measure of inter-coder reliability or member checking; internal validity is ensured through multiple data sources and triangulation. External validity is limited, as the study focuses solely on a Chinese case, and its findings cannot be generalized to other healthcare contexts. | Purposive sampling ensured the inclusion of participants with relevant perspectives and experiences, enhancing the rigor and reliability of the study. An appropriate sample size was employed, and data collection through semi-structured discussions and open-ended questions ensured further rigor. Participant demographics were reported transparently, supporting reproducibility and reliability. Addressing ethical considerations further supported the study’s validity. Triangulation minimized bias and enhanced validity, particularly given the consistency of perspectives across participants, which allowed broader representativeness. | A web-based survey of UK general practitioners ensured broad representation. Anonymity encouraged honest responses and supported response validity. A respectable response rate of 48.84% enhanced sample representativeness. The survey instrument and consultations ensured face validity. The methodology is described in enough detail to be replicated. Recruitment via the widely used Doctors.net.uk platform enhanced external validity, while clear communication supported comprehension and thus internal validity. | Yin’s guidelines were followed, ensuring a structured research procedure and data collection via interviews with diverse participants. Detailed methodology, accurate transcription and translation, and data triangulation ensured data reliability, study rigor, and internal validity. Detailed descriptions allow the transferability of the findings to be assessed. Interviews and analysis across different countries enhance external validity. | The use of the Consolidated Criteria for Reporting Qualitative Research (COREQ) ensures the transparency and validity of the research. Iterative coding enhanced the reliability of the analysis. Public collaborators who participated in the focus groups contributed as coauthors and co-analysts, adding credibility to the analysis. Closed-captioned recordings and pseudonymized transcripts supported transparent and reliable data analysis. Internal validity is demonstrated through the authors’ review, discussion of discrepancies, and clarifying edits, enhancing the credibility of the analysis. | The methodology is rigorous, as participant selection included a balanced representation of patients, caregivers, and the public. Detailed information about the workshop sessions enhanced transparency and reproducibility, adding to the reliability of the study. |
| Main Outcomes of the Study | The study identified several challenges to implementing AI systems in healthcare, categorized into three main areas: external conditions, capacity for change management, and transformation of professions and practices. | The study provided an integrated overview of factors specific to the adoption of machine-learning (ML) systems in clinics, using the NASSS framework. It emphasizes the importance of deep integration while highlighting common challenges faced by clinics. | The study highlights the potential of AI to enhance both managerial and clinical decisions in primary care, particularly through predictive modeling and decision-making capabilities. | The study reveals biases among stakeholder groups in how they frame the challenges of AI adoption. These biases lead to distinct viewpoints across seven dimensions, with no shared issues identified. | AI offers various opportunities in healthcare, including decision-support systems, predictive analytics, natural language processing (NLP), patient monitoring, and mobile technology, which can enhance clinical procedures, patient engagement, continuity of care, and population health management. However, challenges and concerns surround its implementation, such as data quality, patient privacy, technical limitations, regulation, and cybersecurity threats. Integrating AI into existing systems poses operational challenges, and concerns exist about accuracy, reliability, and ethical implications. | The study provides foundational insights into GPs’ views but acknowledges limitations, namely the brevity of comments and the inability to probe responses, and suggests further research into the attitudes of other healthcare professionals and patients. Overall, the study underscores the importance of medical education in preparing physicians for potential technological changes in clinical practice. | The study highlighted the importance of addressing challenges in data quality, transparency, and legal guidelines for successful AI adoption. Limitations include the small sample size, highlighting the need for further research in diverse contexts. | The findings highlighted common concerns, such as data security and bias. Public involvement is deemed crucial for successful AI implementation. Benefits include system improvements and enhanced quality of patient care, while concerns revolve around security, bias, and the potential loss of the human touch in decision-making. | The themes highlighted consistent concerns, such as patient autonomy and data security. Notably, concerns about bias, regulatory frameworks, and commercial involvement were absent among the Japanese participants. |
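The Delphi sample sizes reported for Liyanage et al. [27] shrink from round to round. As a worked illustration that uses only the counts in the table above, the Python sketch below expresses each round's panel as a percentage of the first-round panel; the names `ROUNDS` and `retention` are illustrative and do not come from the study.

```python
# Panel sizes per Delphi round for Liyanage et al. [27], as reported in the table.
ROUNDS = [20, 12, 8]


def retention(rounds: list[int]) -> list[float]:
    """Return each round's panel size as a percentage of the first-round panel."""
    baseline = rounds[0]
    return [round(100 * n / baseline, 1) for n in rounds]


print(retention(ROUNDS))  # [100.0, 60.0, 40.0]
```

On these figures, 40% of the first-round panel took part in the final round, which may be worth weighing alongside the reliability assessment for this study.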

4. Discussion

4.1. Theme 1—Impact and Potentials of AI and ML Adoption in Healthcare Delivery

4.2. Theme 2—Challenges, Limitations, Concerns, and Risks

4.3. Theme 3—Opportunities and Prospects Presented by AI and ML

5. Limitations

6. Conclusions

7. Future Research Needs

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

- Topol, E. The Topol Review. In Preparing the Healthcare Workforce to Deliver the Digital Future; Health Education England: Leeds, UK, 2019; pp. 1–48.

- Jiang, F.; Jiang, Y.; Zhi, H.; Dong, Y.; Li, H.; Ma, S.; Wang, Y.; Dong, Q.; Shen, H.; Wang, Y. Artificial intelligence in healthcare: Past, present and future. Stroke Vasc. Neurol. 2017, 2, 230–243.

- Thrall, J.H.; Li, X.; Li, Q.; Cruz, C.; Do, S.; Dreyer, K.; Brink, J. Artificial intelligence and machine learning in radiology: Opportunities, challenges, pitfalls, and criteria for success. J. Am. Coll. Radiol. 2018, 15, 504–508.

- Gulshan, V.; Peng, L.; Coram, M.; Stumpe, M.C.; Wu, D.; Narayanaswamy, A.; Venugopalan, S.; Widner, K.; Madams, T.; Cuadros, J.; et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA 2016, 316, 2402–2410.

- Kreimeyer, K.; Foster, M.; Pandey, A.; Arya, N.; Halford, G.; Jones, S.F.; Forshee, R.; Walderhaug, M.; Botsis, T. Natural language processing systems for capturing and standardizing unstructured clinical information: A systematic review. J. Biomed. Inform. 2017, 73, 14–29.

- Noorbakhsh-Sabet, N.; Zand, R.; Zhang, Y.; Abedi, V. Artificial intelligence transforms the future of health care. Am. J. Med. 2019, 132, 795–801.

- Paul, D.; Sanap, G.; Shenoy, S.; Kalyane, D.; Kalia, K.; Tekade, R.K. Artificial intelligence in drug discovery and development. Drug Discov. Today 2021, 26, 80.

- Xu, L.; Sanders, L.; Li, K.; Chow, J.C. Chatbot for health care and oncology applications using artificial intelligence and machine learning: Systematic review. JMIR Cancer 2021, 7, e27850.

- Pulimamidi, R.; Buddha, G.P. The Future of Healthcare: Artificial Intelligence’s Role in Smart Hospitals and Wearable Health Devices. Tuijin Jishu/J. Propuls. Technol. 2023, 4, 2498–2504.

- Char, D.S.; Shah, N.H.; Magnus, D. Implementing machine learning in health care—Addressing ethical challenges. N. Engl. J. Med. 2018, 378, 981.

- He, J.; Baxter, S.L.; Xu, J.; Xu, J.; Zhou, X.; Zhang, K. The practical implementation of artificial intelligence technologies in medicine. Nat. Med. 2019, 25, 30–36.

- Naik, N.; Hameed, B.M.; Shetty, D.K.; Swain, D.; Shah, M.; Paul, R.; Aggarwal, K.; Ibrahim, S.; Patil, V.; Smriti, K.; et al. Legal and ethical consideration in artificial intelligence in healthcare: Who takes responsibility? Front. Surg. 2022, 9, 266.

- Ali, O.; Abdelbaki, W.; Shrestha, A.; Elbasi, E.; Ali Alryalat, M.A.; Dwivedi, Y.K. A systematic literature review of artificial intelligence in the healthcare sector: Benefits, challenges, methodologies, and functionalities. J. Innov. Knowl. 2023, 8, 100333.

- Al Kuwaiti, A.; Nazer, K.; Al-Reedy, A.; Al-Shehri, S.; Al-Muhanna, A.; Subbarayalu, A.V.; Al Muhanna, D.; Al-Muhanna, F.A. A review of the role of artificial intelligence in healthcare. J. Pers. Med. 2023, 13, 951.

- Udegbe, F.E.; Ebulue, O.R.; Ebulue, C.C.; Ekesiobi, C. The role of artificial intelligence in healthcare: A systematic review of applications and challenges. Int. Med. Sci. Res. J. 2024, 4, 500–508.

- Esteva, A.; Robicquet, A.; Ramsundar, B.; Kuleshov, V.; DePristo, M.; Chou, K.; Cui, C.; Corrado, G.; Thrun, S.; Dean, J. A guide to deep learning in healthcare. Nat. Med. 2019, 25, 24–29.

- Ngiam, K.Y.; Khor, W. Big data and machine learning algorithms for health-care delivery. Lancet Oncol. 2019, 20, e262–e273.

- Shinners, L.; Aggar, C.; Grace, S.; Smith, S. Exploring healthcare professionals’ understanding and experiences of artificial intelligence technology use in the delivery of healthcare: An integrative review. Health Inform. J. 2020, 26, 1225–1236.

- Saraswat, D.; Bhattacharya, P.; Verma, A.; Prasad, V.K.; Tanwar, S.; Sharma, G.; Sharma, R. Explainable AI for healthcare 5.0: Opportunities and challenges. IEEE Access 2022, 10, 84486–84517.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. J. Clin. Epidemiol. 2021, 134, 178–189.

- Critical Appraisal Skills Programme. CASP Checklist. 2018. Available online: https://casp-uk.net/casp-tools-checklists/ (accessed on 31 May 2024).

- Critical Appraisal Skills Programme. CASP Qualitative Checklist. 2018. Available online: https://casp-uk.net/casp-tools-checklists/ (accessed on 31 May 2024).

- Gattrell, W.T.; Logullo, P.; van Zuuren, E.J.; Price, A.; Hughes, E.L.; Blazey, P.; Winchester, C.C.; Tovey, D.; Goldman, K.; Hungin, A.P.; et al. ACCORD (ACcurate COnsensus Reporting Document): A reporting guideline for consensus methods in biomedicine developed via a modified Delphi. PLoS Med. 2024, 21, e1004326.

- Braun, V.; Clarke, V. Using thematic analysis in psychology. Qual. Res. Psychol. 2006, 3, 77–101.

- Petersson, L.; Larsson, I.; Nygren, J.M.; Nilsen, P.; Neher, M.; Reed, J.E.; Tyskbo, D.; Svedberg, P. Challenges to implementing artificial intelligence in healthcare: A qualitative interview study with healthcare leaders in Sweden. BMC Health Serv. Res. 2022, 22, 850.

- Pumplun, L.; Fecho, M.; Wahl, N.; Peters, F.; Buxmann, P. Adoption of machine learning systems for medical diagnostics in clinics: Qualitative interview study. J. Med. Internet Res. 2021, 23, e29301.

- Liyanage, H.; Liaw, S.T.; Jonnagaddala, J.; Schreiber, R.; Kuziemsky, C.; Terry, A.L.; de Lusignan, S. Artificial intelligence in primary health care: Perceptions, issues, and challenges. Yearb. Med. Inform. 2019, 28, 041–046.

- Sun, T.Q.; Medaglia, R. Mapping the challenges of Artificial Intelligence in the public sector: Evidence from public healthcare. Gov. Inf. Q. 2019, 36, 368–383.

- Alanazi, A. Clinicians’ Views on Using Artificial Intelligence in Healthcare: Opportunities, Challenges, and Beyond. Cureus 2023, 15, 1–11.

- Blease, C.; Kaptchuk, T.J.; Bernstein, M.H.; Mandl, K.D.; Halamka, J.D.; DesRoches, C.M. Artificial intelligence and the future of primary care: Exploratory qualitative study of UK general practitioners’ views. J. Med. Internet Res. 2019, 21, e12802.

- Dumbach, P.; Liu, R.; Jalowski, M.; Eskofier, B.M. The Adoption of Artificial Intelligence in SMEs-A Cross-National Comparison in German and Chinese Healthcare. Joint Proceedings of the BIR 2021 Workshops and Doctoral Consortium Co-Located with 20th International Conference on Perspectives in Business Informatics Research (BIR 2021) (2991). 2021, pp. 84–98. Available online: https://ceur-ws.org/Vol-2991/paper08.pdf (accessed on 31 May 2024).

- Lammons, W.; Silkens, M.; Hunter, J.; Shah, S.; Stavropoulou, C. Centering Public Perceptions on Translating AI Into Clinical Practice: Patient and Public Involvement and Engagement Consultation Focus Group Study. J. Med. Internet Res. 2023, 25, e49303.

- Katirai, A.; Yamamoto, B.A.; Kogetsu, A.; Kato, K. Perspectives on artificial intelligence in healthcare from a Patient and Public Involvement Panel in Japan: An exploratory study. Front. Digit. Health 2023, 5, 1229308.

- Tran, B.D.; Chen, Y.; Liu, S.; Zheng, K. How does medical scribes’ work inform development of speech-based clinical documentation technologies? A systematic review. JAMIA Open 2020, 27, 808–817.

- Liu, X.; Faes, L.; Kale, A.U.; Wagner, S.K.; Fu, D.J.; Bruynseels, A.; Mahendiran, T.; Moraes, G.; Shamdas, M.; Kern, C.; et al. A comparison of deep learning performance against health-care professionals in detecting diseases from medical imaging: A systematic review and meta-analysis. Lancet Digit. Health 2019, 1, e271–e297.

- Brynjolfsson, E.; Rock, D.; Syverson, C. Artificial intelligence and the modern productivity paradox: A clash of expectations and statistics. In The Economics of Artificial Intelligence: An Agenda; University of Chicago Press: Chicago, IL, USA, 2019; pp. 23–57. ISBNs: 978-0-226-61333-8 (cloth); 978-0-226-61347-5 (electronic). Available online: http://www.nber.org/books/agra-1 (accessed on 31 May 2024).

- Shen, J.; Zhang, C.J.; Jiang, B.; Chen, J.; Song, J.; Liu, Z.; He, Z.; Wong, S.Y.; Fang, P.H.; Ming, W.K. Artificial intelligence versus clinicians in disease diagnosis: Systematic review. JMIR Med. Inform. 2019, 7, e10010.

- Nagendran, M.; Chen, Y.; Lovejoy, C.A.; Gordon, A.C.; Komorowski, M.; Harvey, H.; Topol, E.J.; Ioannidis, J.P.; Collins, G.S.; Maruthappu, M. Artificial intelligence versus clinicians: Systematic review of design, reporting standards, and claims of deep learning studies. BMJ 2020, 368.

- Lainjo, B. The Global Social Dynamics and Inequalities of Artificial Intelligence. Int. J. Innov. Sci. Res. Rev. 2020, 5, 4966–4974.

- Liu, X.; Rivera, S.C.; Moher, D.; Calvert, M.J.; Denniston, A.K.; Ashrafian, H.; Beam, A.L.; Chan, A.W.; Collins, G.S.; Deeks, A.D.J.; et al. Reporting guidelines for clinical trial reports for interventions involving artificial intelligence: The CONSORT-AI extension. Lancet Digit. Health 2020, 2, e537–e548.

- Miotto, R.; Wang, F.; Wang, S.; Jiang, X.; Dudley, J.T. Deep learning for healthcare: Review, opportunities, and challenges. Brief. Bioinform. 2018, 19, 1236–1246.

- Karimian, G.; Petelos, E.; Evers, S.M. The ethical issues of the application of artificial intelligence in healthcare: A systematic scoping review. AI Ethics 2022, 2, 539–551.

- Morley, J.; Machado, C.C.; Burr, C.; Cowls, J.; Joshi, I.; Taddeo, M.; Floridi, L. The Ethics of AI in Health Care: A Mapping. Ethics Gov. Policies Artif. Intell. 2021, 144, 313.

- Gerke, S.; Minssen, T.; Cohen, I.G. Ethical and legal challenges of artificial intelligence-driven healthcare. In Artificial Intelligence in Healthcare; Academic Press: Cambridge, MA, USA, 2020; pp. 295–336.

- Wang, Y.; Kung, L.; Byrd, T.A. Big data analytics: Understanding its capabilities and potential benefits for healthcare organizations. Technol. Forecast. Soc. Change 2018, 126, 3–13.

- Zhang, J.; Zhang, Z.M. Ethics and governance of trustworthy medical artificial intelligence. BMC Med. Inform. Decis. Mak. 2023, 23, 7.

- Wiens, J.; Saria, S.; Sendak, M.; Ghassemi, M.; Liu, V.X.; Doshi-Velez, F.; Jung, K.; Heller, K.; Kale, D.; Saeed, M.; et al. Do no harm: A roadmap for responsible machine learning for health care. Nat. Med. 2019, 25, 1337–1340.

- Rajkomar, A.; Dean, J.; Kohane, I. Machine learning in medicine. N. Engl. J. Med. 2019, 380, 1347–1358.

- Kabir, R.; Sivasubramanian, M.; Hitch, G.; Hakkim, S.; Kainesie, J.; Vinnakota, D.; Mahmud, I.; Apu, E.H.; Syed, H.Z.; Parsa, A.D. “Deep learning” for Healthcare: Opportunities, Threats, and Challenges. In Deep Learning in Personalized Healthcare and Decision Support; Academic Press: Cambridge, MA, USA, 2023; pp. 225–244.

- Sverdlov, O.; Ryeznik, Y.; Wong, W.K. Opportunity for efficiency in clinical development: An overview of adaptive clinical trial designs and innovative machine learning tools, with examples from the cardiovascular field. Contemp. Clin. Trials 2021, 105, 106397.

- Davenport, T.; Kalakota, R. The potential for artificial intelligence in healthcare. Future Healthc. J. 2019, 6, 94.

- Howard, J. Artificial intelligence: Implications for the future of work. Am. J. Ind. Med. 2019, 62, 917–926.

- Jarrahi, M.H.; Askay, D.; Eshraghi, A.; Smith, P. Artificial intelligence and knowledge management: A partnership between humans and AI. Bus. Horiz. 2023, 66, 87–99.

- Deardorff, A. Assessing the impact of introductory programming workshops on the computational reproducibility of biomedical workflows. PLoS ONE 2020, 15, e0230697.

- Hlávka, J.P. Security, privacy, and information-sharing aspects of healthcare artificial intelligence. In Artificial Intelligence in Healthcare; Academic Press: Cambridge, MA, USA, 2020; pp. 235–270.

- McCool, J.; Dobson, R.; Whittaker, R.; Paton, C. Mobile health (mHealth) in low- and middle-income countries. Annu. Rev. Public Health 2020, 43, 525–539.

- Dixon, B.E.; Kharrazi, H.; Lehmann, H.P. Public health and epidemiology informatics: Recent research and trends in the United States. Yearb. Med. Inform. 2015, 24, 199–206.

- Nundy, S.; Montgomery, T.; Wachter, R.M. Promoting trust between patients and physicians in the era of artificial intelligence. JAMA 2019, 322, 497–498.

- Panch, T.; Mattie, H.; Celi, L.A. The “inconvenient truth” about AI in healthcare. NPJ Digit. Med. 2019, 2, 77.

- Tuomi, I. The Impact of Artificial Intelligence on Learning, Teaching, and Education: Policies for the Future. JRC Science for Policy Report. Eur. Comm. 2019, 9, 70–78.

- Alowais, S.A.; Alghamdi, S.S.; Alsuhebany, N.; Alqahtani, T.; Alshaya, A.I.; Almohareb, S.N.; Aldairem, A.; Alrashed, M.; Bin Saleh, K.; Badreldin, H.A.; et al. Revolutionizing healthcare: The role of artificial intelligence in clinical practice. BMC Med. Educ. 2023, 23, 689.

- McKinney, S.M.; Sieniek, M.; Godbole, V.; Godwin, J.; Antropova, N.; Ashrafian, H.; Back, T.; Chesus, M.; Corrado, G.S.; Darzi, A.; et al. International evaluation of an AI system for breast cancer screening. Nature 2020, 577, 89–94.

- Kim, J.T. Application of machine and deep learning algorithms in intelligent clinical decision support systems in healthcare. J. Health Med. Inform. 2018, 9, 321.

- Kolluri, S.; Lin, J.; Liu, R.; Zhang, Y.; Zhang, W. Machine learning and artificial intelligence in pharmaceutical research and development: A review. AAPS J. 2022, 24, 1–10.

| SPIDER | Search Terms |
|---|---|
| Sample | Healthcare Organizations, Healthcare Systems, Healthcare Professionals, Administrators, Patients |
| Phenomenon of Interest | Artificial Intelligence, Machine Learning, Deep Learning, Neural Network |
| Design | Qualitative, Quantitative, or Mixed-Methods Studies |
| Evaluation | Adoption, Challenges, Opportunities |
| Research type | Primary research |

| Database | Search Query/Format | Search Dates | Inclusion Year | Search Results |
|---|---|---|---|---|
| PubMed | (“Healthcare Delivery” OR “Patient Care” OR “Healthcare Services”) AND (“Artificial Intelligence” OR “Machine Learning”) AND (“Impact” OR “Challenges” OR “Obstacles” OR “Opportunities”) | 25 April 2024 | 2014–2024 | 393 |
| IEEE Xplore | (“Healthcare Delivery” OR “Patient Care” OR “Healthcare Services”) AND (“Artificial Intelligence” OR “Machine Learning”) AND (“Impact” OR “Challenges” OR “Obstacles” OR “Opportunities”) | 25 April 2024 | 2014–2024 | 62 |
| ACM Digital Library | (“Healthcare Delivery” OR “Patient Care” OR “Healthcare Services”) AND (“Artificial Intelligence” OR “Machine Learning”) AND (“Impact” OR “Challenges” OR “Obstacles” OR “Opportunities”) | 25 April 2024 | 2014–2024 | 209 |
| Google Scholar | (“Artificial Intelligence” OR “Machine Learning”) AND (“Impact” OR “Challenges” OR “Opportunities”) AND (“Healthcare Delivery”) | 25 April 2024 | 2014–2024 | 4310 |
| Hand Search | | 25 April 2024 | 2014–2024 | 7 |
| Total Articles | | 25 April 2024 | 2014–2024 | 4981 |
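The PubMed, IEEE Xplore, and ACM Digital Library strings above share one structure: synonyms are joined with OR inside each concept group, and the groups are joined with AND. A minimal Python sketch of that construction follows. It is an illustration, not the authors' tooling; the helper names `or_group` and `build_query` are hypothetical, and the sketch emits straight double quotes rather than the typographic quotes shown in the table, since phrase searches are normally typed with straight quotes.

```python
"""Sketch: assemble a Boolean search string from concept groups (illustrative only)."""

# Concept groups copied from the database search table.
SETTING = ["Healthcare Delivery", "Patient Care", "Healthcare Services"]
TECHNOLOGY = ["Artificial Intelligence", "Machine Learning"]
OUTCOME = ["Impact", "Challenges", "Obstacles", "Opportunities"]


def or_group(terms: list[str]) -> str:
    """Quote each term as a phrase and join the synonyms with OR."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(*groups: list[str]) -> str:
    """Join the OR groups with AND so that every concept must be matched."""
    return " AND ".join(or_group(group) for group in groups)


if __name__ == "__main__":
    print(build_query(SETTING, TECHNOLOGY, OUTCOME))
```

Calling `build_query(SETTING, TECHNOLOGY, OUTCOME)` reproduces the shared PubMed/IEEE Xplore/ACM string; passing the technology group first, a three-term outcome group, and `["Healthcare Delivery"]` reproduces the Google Scholar row. Any database-specific field tags would still have to be added by hand.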

| SPIDER | Inclusion Criteria | Exclusion Criteria |
|---|---|---|
| Sample | Studies involving healthcare professionals, patients, or healthcare organizations implementing digital technologies, specifically focusing on AI/ML adoption in healthcare delivery. | Studies that did not focus on the impact of digital technologies in healthcare delivery, specifically AI and ML adoption. |
| Phenomenon of Interest | Studies examining the impact of AI and ML adoption on patient outcomes, healthcare efficiency, diagnostic accuracy, and workflow management. | Studies not focused on AI/ML adoption in the healthcare-delivery context. |
| Design | Qualitative, quantitative, or mixed-methods study designs. | Commentaries, Editorials. |
| Evaluation | Studies reporting on adoption, challenges, and opportunities regarding AI/ML in healthcare settings | Studies that did not address the adoption, challenges, or opportunities associated with these technologies. |
| Research type | Primary research | Research published in non-peer-reviewed sources. |
| Year Range | January 2014–April 2024 | Articles before January 2014 and after April 2024. |
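Only some of the criteria above are mechanical: the publication window, the excluded designs, and the requirement for peer-reviewed primary research. The Sample, Phenomenon of Interest, and Evaluation criteria call for reviewer judgement. The Python sketch below encodes only the mechanical part as a pre-screening filter. The `Record` fields and the `passes_screen` name are hypothetical, and reading "April 2024" as ending on 30 April 2024 is an assumption.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Record:
    """Hypothetical bibliographic record; field names are illustrative."""
    title: str
    published: date
    design: str             # e.g. "qualitative", "editorial"
    peer_reviewed: bool
    primary_research: bool


# Assumed window: January 2014 through the end of April 2024.
WINDOW_START = date(2014, 1, 1)
WINDOW_END = date(2024, 4, 30)
EXCLUDED_DESIGNS = {"commentary", "editorial"}


def passes_screen(record: Record) -> bool:
    """Apply only the mechanical criteria (dates, design, source type).

    Topical criteria (Sample, Phenomenon of Interest, Evaluation) still
    need human judgement and are not modelled here.
    """
    return (
        WINDOW_START <= record.published <= WINDOW_END
        and record.design.lower() not in EXCLUDED_DESIGNS
        and record.peer_reviewed
        and record.primary_research
    )
```

A record that passes this filter would still go through title, abstract, and full-text screening against the topical criteria.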

| Database | Search Dates | Inclusion Year | Search Results | Excluded | Included |

|---|---|---|---|---|---|

| PubMed | 25 April 2024 | 2014–2024 | 393 | 380 | 13 |

| IEEE Xplore | 25 April 2024 | 2014–2024 | 62 | 44 | 18 |

| ACM Digital Library | 25 April 2024 | 2014–2024 | 209 | 197 | 12 |

| Google Scholar | 25 April 2024 | 2014–2024 | 4310 | 4281 | 29 |

| Hand search | 25 April 2024 | 2014–2024 | 7 | 3 | 4 |

| Total Articles | 25 April 2024 | 2014–2024 | 4981 | 4905 | 76 |
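The screening counts can be checked arithmetically: for each source, excluded plus included records should equal the search results, and each column should sum to the Total Articles row. A minimal Python check using only the figures in the table (the helper names are illustrative):

```python
# (search results, excluded, included) per source, copied from the table above.
SCREENING: dict[str, tuple[int, int, int]] = {
    "PubMed": (393, 380, 13),
    "IEEE Xplore": (62, 44, 18),
    "ACM Digital Library": (209, 197, 12),
    "Google Scholar": (4310, 4281, 29),
    "Hand search": (7, 3, 4),
}
REPORTED_TOTAL = (4981, 4905, 76)


def check_rows(rows: dict[str, tuple[int, int, int]]) -> list[str]:
    """Return the sources whose excluded + included count differs from the results."""
    return [
        name
        for name, (found, excluded, included) in rows.items()
        if excluded + included != found
    ]


def column_totals(rows: dict[str, tuple[int, int, int]]) -> tuple[int, ...]:
    """Sum each column (results, excluded, included) across all sources."""
    return tuple(sum(column) for column in zip(*rows.values()))


if __name__ == "__main__":
    print("Inconsistent rows:", check_rows(SCREENING))
    print("Column totals:", column_totals(SCREENING), "reported:", REPORTED_TOTAL)
```

Run as written, it reports no inconsistent rows and column totals of (4981, 4905, 76), matching the Total Articles row.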

| Theme 1: Impact and Potential of AI and ML Adoption in Healthcare Delivery Subtheme 1: Advantages of integrating AI and ML into healthcare According to the studies by Blease et al. [30] and Katirai et al. [33], participants, including many GPs, highlighted the use of AI as a personal assistant, as shown below: “Be useful to develop AI to do analyses of pathology returns, and read all the letters, to provide another presence in the consulting room, and to write the referral letters, organize investigations and the like, i.e., act like a personal assistant might do” [Participant 135] [30] (p. 4). “I think technology’s place is more about informing patients about conditions and management booking appointments, ordering prescriptions, contacting the surgery via the internet rather than the phone” [Participant 683] [30] (p. 4). “Please hurry up with the technological advances to take away some of the crap that I still have to sort out—then I will be able to get back to proper diagnosing and doctoring” [Participant 693] [30] (p. 4). “The possibility that healthcare professionals will be able to concentrate on the work that they should be able to focus on” [Extract 1, Group 2] [33] (p. 3). |

| Subtheme 2: AI’s Contribution to Medical Performance and Efficiency Participants’ perspectives in the studies by Dumbach et al. [31] and Blease et al. [30] highlighted the efficiency of AI in the healthcare sector, as shown below: “AI is seen as a technology that leads to better performance compared to humans or traditional algorithms. Higher accuracy (C4–5, C7), better data processing (G3–4), path planning for medical robots (C2), or the ability to find solutions for existing problems (G1) are linked to this benefit category.” [Several Participants] [31] (p. 91). “Medicine will be unrecognizable compared to its present form in 25 years” [Participant 312] [30] (p. 5). “All twelve AI adopters highlighted ‘efficiency improvement’ as a benefit, that manifests in e.g., speeding up data and image processing (G1–5, C1, C5–7) or improving management efficiency (C2–4).” [Several Participants] [31] (p. 91). |

| Subtheme 3: AI Replacing Routine or Simple Tasks The capacity of AI to take over mundane tasks was viewed as a positive outcome of its use in the healthcare sector, although participants in the study by Sun and Medaglia [28] also voiced reservations, as shown below: “Some simple and boring work may be replaced by AI. But not all jobs.” [1GOV01] [28] (p. 376). “Doctors may feel they will be replaced [by Watson]. Because they [i.e., the doctors] made many efforts to achieve their status.” [3IT04] [28] (p. 376). “Hospital managers/doctors report to experiencing frustration when facing the real technology after the societal hype.” [3IT01] [28] (p. 373). |

| Subtheme 4: AI Assisting in Data Interpretation and Automation and Enhanced Diagnostic and Imaging Capabilities The use of AI in data interpretation was also mentioned in two studies [30,33]: “AI may make it easier to interpret a blood result or follow a protocol, but AI will always struggle when the same human can score 1/10 for a symptom today and 10/10 tomorrow” [Participant 201] [30] (p. 4). “AI can assist with routine tasks, such as analyzing pathology results or organizing patient records, acting like a personal assistant.” [Participant 135] [30] (p. 4). “AI was expected to facilitate better communication in clinical settings, and overall, there was the expectation that AI would become a familiar entity in patients’ lives, with hopes for personalized interactions” [Extract 3, Group 2] [33] (p. 4). |

| Subtheme 5: Patient and Public Adaptation to AI Integration In the study by Katirai et al. [33], participants in the patient and public involvement (PPI) groups raised concerns about whether patients would accept AI, a concern also voiced by a GP in the study by Blease et al. [30]: “The question is whether they will be acceptable to patients although they may be very accessible compared to the current system” [Participant 88] [30] (p. 5). |

| Subtheme 6: Improved Access and Communication and Potential for Enhanced Data Utilisation In the study by Katirai et al. [33], the PPI groups felt that AI would be useful for improving patient communications and reducing disparities in healthcare. “Standardization of the level of healthcare, elimination of the concentration of patients at large hospitals” [Extract 6, Group 2] [33] (p. 4). “Possibility of clinical examinations and treatment from home for people in remote areas, the elderly, and people with disabilities” [Extract 2, Group 2] [33] (p. 4). Another study by Petersson et al. [25] highlighted ways in which AI could be used to improve communications with patients: “If the legislation is changed so that the management information can be automated…but they’re not allowed to do that yet. It could, however, be so that you open an app in a few years’ time, then you furnish the app with the information that it needs about your health status. Then the app can write a prescription for medication for you.” [Leader 2] [25] (pp. 5–6). |

| Subtheme 7: Enhancing Diagnosis, Therapeutics, and Patient Care Alanazi’s study [29] focuses on the use of AI to facilitate personalized treatment recommendations, improve patient outcomes, and support precision medicine, all of which are direct components of personalized medicine. The study by Lammons et al. [32] offered insights into the perspectives of public collaborators on the perceived benefits and challenges of adopting AI in clinical practice. The study by Katirai et al. [33] identified the expectation of improved quality of care and personalized interactions as benefits of AI in healthcare. This implies the use of AI to provide individualized care experiences to patients, in line with the principles of personalized medicine. These findings are supported by the following participant quotes from some of the above studies: “The integration of AI technology has significantly improved healthcare. It has made patient record management more efficient, boosted diagnostic accuracy, and allowed physicians to devote more time to patient care.” [29] (p. 3). “The integration of AI into EHRs has streamlined the extraction and analysis of detailed data, thereby facilitating the development of personalized treatment recommendations and ultimately leading to improved patient outcomes.” [29] (p. 3). “The healthcare organization has been transformed by incorporating AI technologies into their process, clinically and administratively. AI technology has enhanced the efficiency of patient data extraction, analysis, and treatment recommendations by aiding decision-making processes.” [29] (p. 3). “With emerging applications of AI in medical imaging technology and diagnostic screenings, there has been an unprecedented enhancement in patient care.” [29] (p. 3). “Excited about the future of healthcare because AI will be something the children will be familiar with going forward” [Extract 3, Group 1] [33] (p. 4).
“In the future, the researchers, when they have large data, AI will help to accurately analyze them.” [FG1] [32] (p. 5). “AI can even detect things before […] a human can.” [FG3] [32] (p. 5). “AI can be used for detection…monitoring…management…decision making…as a carer, I think there is a lot of elements to AI, which I don’t think healthcare providers are using enough.” [FG2] [32] (pp. 5–6). Blease et al.’s study [30] focuses on participant skepticism about AI’s ability to replicate the human aspects of care, such as empathy and non-verbal communication, which are ethically important in patient care: “Technology will never attain a personal relationship with patients. We are essentially a people business. It’s personal relationships that count” [Participant 45] [30] (p. 3). “Technology cannot replace doctors. There is definitely a 6th sense” [Participant 635] [30] (p. 3). “Technology won’t replace GPs as patient management is about negotiation and managing risks and different patients have different views” [Participant 703] [30] (p. 4). The study [30] also discussed patient acceptance of AI, together with accountability and trust: “The somewhat blunt tool of technology as it stands will need to evolve some way before the culture of clinicians and patients will accept it” [Participant 453] [30] (p. 5). “Technology will be supporting clinicians in the very near future—the issue is responsibility and liability in legal terms for such tools” [Participant 453] [30] (p. 5). Lammons et al.’s study [32] discusses data security and bias, emphasizing the need for ethical data management: “AI picking up more Black people than the white population…we have to consider those kinds of ethical questions.” [FG2] [32] (p. 6). “We have to be careful…when we code the programming for AI, that [it] isn’t just the white population.” [FG2] [32] (p. 6).
Some contributors warned that AI, through challenges such as access and bias, could increase inequality: “We need to think about how it’s going to affect everyone. I think we are running in terms of artificial intelligence and some people are going to get left behind.” [FG1] [32] (p. 6). Patient involvement in AI design was at the heart of Lammons et al.’s study [32]. Participants in this study focused on inclusivity: “You must involve patients and families and carers in that development and the design. Without that, systems will be meaningless or less effective.” [FG2] [32] (p. 7). “Certain Black minority ethnic people feel that this is yet another white exercise for white people. If you’ve got clinicians, leaders, researchers who have got their same background, they will appeal to a certain group.” [FG3] [32] (p. 8). Katirai et al. [33] found that members of the Patient and Public Involvement Panel (PPIP) had high expectations for AI’s impact, anticipating improvements in hospital administration, better quality of care, resource optimization, enhanced diagnosis and treatment, personalized interactions, cost savings, and reduced healthcare disparities. One patient participant expressed hopes for AI to simplify hospital procedures, shorten waiting times, and improve accessibility: “I expect that procedures at the hospital will be simplified… I hope that hospital visits will no longer exhaust patients and lead to a breakdown in their health” [Extract 5, Group 3] [33] (p. 4). |

| Theme 2: Challenges, Limitations, Concerns, and Risks Alanazi [29] outlined several challenges associated with AI adoption in healthcare, including concerns about data quality, privacy, and cybersecurity, as well as ethical and philosophical questions. Workforce displacement, transparency issues, cost allocation, and unintended consequences of AI also emerged as key barriers. One participant voiced concerns about AI governance: “Biased data can lead to unfair outcomes, while a lack of transparency and regulation can result in AI misuse. Proper governance is crucial for ethical and responsible AI use that does not harm society” [29] (p. 4). Katirai et al. [33] revealed additional concerns from the public, such as the potential for AI to alter healthcare dynamics and limit human autonomy, as well as issues with accuracy, accountability, and ethical implications. One group member raised concerns about AI’s tendency toward absolutes: “I think that in healthcare, the language of ‘absolutes’ is avoided, but AI healthcare may come with such absolutes.” Psychological anxiety over the lack of human interaction was also noted, with one participant expressing worry about “no longer being able to meet the real thing” [Extract 7, Group 1] [33] (p. 5). The findings of Dumbach et al. [31] and Katirai et al. [33] both showed participants’ concerns regarding reliability and technological limitations: “Ten interviewees showed a consistent opinion regarding ‘reliability and technological limitations,’ concerning the current AI accuracy and needed supervision (G3, C1, C5–7) or existing issues of non-reproducibility and robustness in heterogeneous environments (G2–3).” [G3, C1, C5–7, G2–3] [31] (p. 2). “Issues of backups when online platforms are unavailable due to natural disasters, etc.” [Extract 10, Group 2] [33] (p. 5). |

| Theme 3: Opportunities and Prospects Presented by AI and ML Subtheme 1: AI’s Role in Enhancing Patient Care and Reducing Healthcare Disparities The potential for AI to reduce healthcare disparities and improve patient experience was a significant opportunity highlighted by Katirai et al. [33]. In particular, AI’s ability to optimize resources, enhance diagnosis and treatment, and provide personalized care was seen as a key factor in improving healthcare outcomes: “It will become easier to accumulate and search (personal) information” [Extract 4, Group 3] [33] (p. 4). Petersson et al. [25] noted that AI can support patients, which can lead to more effective self-care and management of chronic conditions, although one leader described the organizational complexity of implementing such tools: “The complexity in terms of for example apps is very, very, very much greater, we see that now. Besides there being this app, so perhaps the procurement department must be involved, the systems administration must definitely be involved, the knowledge department must be involved and the digitalization department, there are so many and the finance department of course and the communication department, the system is thus so complex” [Leader 9] [25] (p. 9). Similarly, Pumplun et al. [26] suggest that AI can enhance the interpretation of large datasets, which is crucial for personalized treatment plans, since many physicians feel that their years of clinical experience are limited in comparison with ML datasets: “As a doctor who may have ten or 20 years of experience […], would I like to be taught by a machine […]?” [S-03] [26] (p. 10). Participants in the study by Lammons et al. [32] also weighed AI’s potential fairness against its risks: “Nowadays, in the feel of health inequality and so on, I feel sometimes AI perhaps can be a fairer instrument” [FG3] [32] (p. 6). “It’s like with the police force, the facial recognition and AI […] picking up more Black people than the white population… we have to consider those kind of ethical questions” [FG2] [32] (p. 6).
“I feel sometimes, patient safety could be endangered if you have, a very rigid, algorithm, that overlook [sic] some sometime very vital clue” [FG3] [32] (p. 7). |

| Subtheme 2: Risks Associated with Use of AI and ML in Healthcare Liyanage et al. [27] highlighted the risks associated with AI in primary care, such as the limited competence of current AI technology in replacing human decision-making in clinical scenarios, risks of medical errors, biases, and secondary effects of AI use. The study also emphasized the need for regular scrutiny by clinicians due to uncertainties regarding AI accuracy and relevance. Sun and Medaglia [28] emphasized several challenges, including societal misunderstandings of AI’s capabilities and a lack of innovation spirit, especially in comparison to Western countries. An IBM China director noted: “We have to say the innovation spirit in the U.S. should be admired by us [Chinese]. […] We need to learn from them” [2IBM01] [28] (p. 373). The ethical and social challenges highlighted in Sun and Medaglia’s study involved issues related to racial differences and disease profiles. For instance, “Western countries have more vascular-related diseases, while China has more hepatic diseases” [5GOV01] [28] (p. 373). Differences in treatment attitudes, such as cancer management, also present a challenge: “In the West… there is a greater emphasis on managing cancer as a chronic disease. [In China] patients do not see it this way” [1HP01] [28] (p. 373). Pumplun et al. [26] identified the lack of transparency and adaptability in ML systems as significant barriers to their adoption. The fragmented nature of proprietary clinic systems and legal concerns, such as liability for incorrect ML model results, were cited as critical challenges: “Who is responsible for the interpretation and possibly wrong results of the ML model?” [C-14] [26] (p. 10). Liyanage et al. [27] acknowledged the potential of AI to improve healthcare delivery but recommended further scrutiny of AI systems and mechanisms to detect biases in unsupervised algorithms.
This suggests a future where AI systems could become more refined and trusted in primary care settings. Sun and Medaglia [28] noted that AI systems like Watson could offer solutions to healthcare challenges, but these opportunities are hampered by societal and technological limitations, including the need for more country-specific data and standards. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Okwor, I.A.; Hitch, G.; Hakkim, S.; Akbar, S.; Sookhoo, D.; Kainesie, J. Digital Technologies Impact on Healthcare Delivery: A Systematic Review of Artificial Intelligence (AI) and Machine-Learning (ML) Adoption, Challenges, and Opportunities. AI 2024, 5, 1918-1941. https://doi.org/10.3390/ai5040095
