1. Introduction

Steel is an extremely versatile product commonly used in essential industries, including construction, transportation, energy, and manufacturing. It is considered the most important engineering and construction material. The process of steel manufacturing, however, presents a challenging and hazardous work environment. Workers frequently interact with heavy machinery and are exposed to toxic gases and numerous safety hazards. In 2022, data collected by World Steel from 55 organizations covering 60% of its membership reported 18,448 injuries and 90 fatalities among workers, including both employees and contractors. The primary causes of these incidents were identified as slips/trips/falls and interactions with moving machinery [1]. Injuries common in this industry include traumatic brain injuries (TBI), burns, amputations, spinal cord injuries, and carpal tunnel syndrome.

Numerous literature reviews underscore the importance of early hazard detection in significantly reducing these incidents [2,3]. The steel industry has proactively adopted various safety measures, such as comprehensive training programs, innovative maintenance and operation strategies, technological advancements for automating repetitive tasks, and consistent updates on safety protocols [4,5]. Despite these efforts, there remains an urgent need to advance the methods of hazard identification in steel mills by shifting from manual to automated processes, thereby reducing human error and enhancing safety interventions.

Computer vision, empowered by deep learning, offers robust capabilities for automating tasks like detection, tracking, monitoring, and action recognition, which can be specifically tailored to the steel manufacturing sector [6,7,8]. For instance, identifying hazards such as proximity to heavy equipment can mitigate fatalities caused by moving machinery [9,10]. The use of personal protective equipment (PPE) is another critical area where computer vision can make a significant impact. According to the Occupational Safety and Health Administration (OSHA), proper PPE usage can prevent up to 37.6% of occupational injuries and diseases. Additionally, the failure to wear PPE contributes to 12–14% of occupational injuries leading to total disability [11]. The Centers for Disease Control and Prevention (CDC) note that among all industries, steelworkers are particularly susceptible to TBIs, often resulting from slips/trips/falls. Wearing a safety hard hat can reduce the likelihood of a TBI by 70% in such incidents [12]. In large steel manufacturing sites, manually ensuring comprehensive compliance with safety hard hat regulations is unfeasible. Here, computer vision’s object detection capabilities, enhanced by deep learning, can automate and improve the precision of safety hard hat detection.

While significant progress has been made in applying automated hazard identification and safety monitoring in industries like construction and agriculture through computer vision and deep learning, a system specifically designed and tested for the steel manufacturing industry is yet to be realized. This gap highlights the potential for impactful advancements in this field. The objective of this research is to explore the feasibility and potential of implementing computer vision technologies for safety management within the steel manufacturing industry. This is executed through a pilot case study focused on the utilization of computer vision-based deep learning technology, specifically designed to automatically detect the use of hard hats by steelworkers. To achieve this objective, a review phase characterizing hazards with computer vision application was conducted, and then a multi-criteria decision model was deployed in selecting commercially available computer vision programs for application toward safety in steel manufacturing. This analysis is crucial to determine the most appropriate computer vision system for effective safety application within the steel manufacturing industry. Findings from this study could demonstrate the efficacy of CV in enhancing safety management in the steel industry, suggesting its broader applicability in high-risk sectors. This research exemplifies the practical use of CV in safety management at actual steel manufacturing sites and could pave the way for integrating more advanced technologies in industrial safety practices.

2. Background

2.1. Overview of the Steelmaking Process

Steel production predominantly follows one of two routes: the integrated mill, based on the Basic Oxygen Furnace (BOF), or the mini-mill, based on the Electric Arc Furnace (EAF) [13]. These methodologies diverge in their choice of raw materials and the inherent risks associated with each. The BOF process utilizes iron ore and coke, initially processed in a blast furnace and subsequently in an oxygen converter, where an exothermic reaction removes impurities like carbon, silicon, manganese, and phosphorus. In contrast, mini-mills primarily use scrap metal or direct reduced iron (DRI), processed in the EAF [14].

While both methods necessitate rigorous safety management, there are notable operational differences. Integrated mills, generally larger, surpass mini-mills in steel production [15]. EAF facilities, smaller and less economical, differ from the BOF by their intermittent operation, offering more flexibility [16]. Despite the smaller scale and output of mini-mills, their safety management is critical and often underestimated when compared to BOF processes [14,15]. The increasing focus on steel recycling and greenhouse emissions reduction has amplified the adoption of EAFs, which are capable of using 100% scrap steel to produce carbon steel and alloys [17,18]. This trend underscores the importance of robust safety management in mini-mills.

2.2. Occupational Hazards in Steel Manufacturing

Steelworkers encounter numerous safety risks inherent to their profession [5]. Monitoring and reducing injury and fatality statistics are crucial. The steel industry primarily utilizes the Lost Time Injury Frequency Rate (LTIFR) and Fatality Frequency Rate (FFR) to gauge these metrics. LTIFR represents work-related incidents causing disability to employees or contractors, preventing them from performing their duties, expressed as the number of lost time injuries per million hours worked [19]. A notable decrease in LTIFR from 4.55 in 2006 to 0.81 in 2021, a reduction of 82%, has been reported by the World Steel Association [19]. The goal remains to further minimize these incidents toward a zero-incident rate. FFR, conversely, tracks fatalities among company and contractor employees. This index is critical as it represents loss of life and is often viewed as a lagging, rather than proactive, safety measure [20,21]. A proactive approach to reducing LTIFR can indirectly impact FFR. Recent data show an increase in FFR from 0.021 in 2019 to 0.03 in 2021, a 43% rise, highlighting the need for enhanced safety protocols [19].
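The arithmetic behind these rates can be checked with a minimal sketch. The function names `ltifr` and `percent_change` are illustrative and not taken from the cited reports; the only inputs are the definition of LTIFR (lost time injuries per million hours worked) and the figures quoted above.

```python
def ltifr(lost_time_injuries: int, hours_worked: float) -> float:
    """Lost time injuries per million hours worked."""
    return lost_time_injuries * 1_000_000 / hours_worked


def percent_change(old: float, new: float) -> float:
    """Relative change from old to new, in percent (negative means a drop)."""
    return (new - old) / old * 100


# Figures quoted in the text.
print(round(percent_change(4.55, 0.81), 1))   # LTIFR 2006 -> 2021: -82.2
print(round(percent_change(0.021, 0.03), 1))  # FFR 2019 -> 2021: 42.9
```

The rounded results agree with the 82% reduction and 43% rise stated in the paragraph.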

The steelmaking process, with its complex operations, poses various hazards. These risks, akin to those in construction, agriculture, and general manufacturing, are categorized by the International Labor Organization [22] into physical, chemical, safety, and ergonomic hazards. Physical hazards encompass noise, vibration, extreme heat, and radiation. Chemical hazards include exposure to harmful gases and substances. Safety hazards, notably slips, trips, falls, proximity to heavy machinery, and risks from falling or flying objects, are predominant injury causes in the steel industry [19]. Kifle et al. [23] identified such incidents as the leading injury causes in this sector.

2.3. Current Safety Practices in the Steel Manufacturing Industry

The steel manufacturing industry has demonstrated significant progress in enhancing workplace safety, as evidenced by the decline in Lost Time Injury Frequency Rates (LTIFRs) over the past decade [19]. The National Institute for Occupational Safety and Health (NIOSH) [24] identifies five hierarchical levels of hazard controls, listed here from least to most effective: personal protective equipment (PPE), administrative controls, engineering controls, substitution, and elimination. These measures are increasingly being adopted in steel manufacturing sites.

For instance, administrative controls like the Lock-out/Tagout/Tryout (LOTO) procedures are crucial in preventing accidents during equipment maintenance. The rigorous use of PPE, a fundamental safety practice, has been evolving with safety incentives and with innovations in the Internet of Things (IoT) and wearable technologies [25]. This evolution has introduced smart PPE, such as helmets, bracelets, and belts, providing real-time safety feedback, a potential boon for the steel industry [23,26,27,28,29]. Additionally, worker training in hazard identification and safe working practices has advanced, with interactive simulators and virtual and augmented reality technologies proving to be effective safety measures [30,31]. NIOSH posits that hazard elimination and substitution are the most effective control mechanisms, as they remove or replace the hazard entirely. However, given the nature of steel manufacturing, complete elimination or substitution of certain hazards is impractical without ceasing specific operations. Thus, the focus shifts to engineering controls that leverage technology to distance workers from hazards. Implementing these controls might include modifying existing equipment or introducing advanced technologies like computer vision to automate certain tasks, thus enhancing worker safety.

2.4. Computer Vision Applications in Safety Management and Review of YOLO Models

Computer vision (CV), an interdisciplinary domain, capitalizes on advanced technologies for analyzing visual data, such as images and videos, to extract meaningful insights in real time. This technology, particularly beneficial in securing work environments by isolating workers from hazards, is recognized as a potent form of engineering control [32,33]. Object detection is a critical task in computer vision, aiming to identify and locate objects within an image or video. Among the various approaches developed, the You Only Look Once (YOLO) family of models has garnered significant attention for its real-time object detection capabilities. The original YOLO model, introduced by Joseph Redmon et al. [34], marked a significant shift by framing object detection as a single regression problem rather than a classification problem. This novel approach enabled real-time detection, distinguishing YOLO from other object detection frameworks such as R-CNN and Fast R-CNN.

YOLOv2, also known as YOLO9000, introduced enhancements, including batch normalization, a high-resolution classifier, and multi-scale training, allowing it to detect over 9000 object categories using a joint training algorithm that combined detection and classification datasets [35]. YOLOv3 further advanced the architecture by adopting a multi-scale prediction strategy with residual connections and predicting bounding boxes at three different scales, which improved its capability to detect smaller objects. YOLOv4, developed by Alexey Bochkovskiy et al. [36], optimized the model for single GPU systems by integrating advanced techniques such as CSPDarknet53 as the backbone, PANet path aggregation, and the use of the Mish activation function, setting new benchmarks in speed and accuracy. YOLOv5, released by Ultralytics, introduced major improvements in usability and performance, incorporating features like auto-learning bounding box anchors, mosaic data augmentation, and integrated hyperparameter evolution. YOLOv5 is available in various versions (small, medium, and large), catering to different resource constraints and accuracy requirements [37]. Subsequent versions, with minor distinctions from version 5, such as YOLOv6 and YOLOv7, focused on efficiency and accuracy improvements, while YOLOv8 introduced architectural changes that further refined detection capabilities, particularly in challenging scenarios with occlusions and complex backgrounds. YOLOv8 provided five scaled versions: YOLOv8n (nano), YOLOv8s (small), YOLOv8m (medium), YOLOv8l (large), and YOLOv8x (extra-large). YOLOv8 supports multiple vision tasks such as object detection, segmentation, pose estimation, tracking, and classification [37]. YOLOv9, on the other hand, leverages hybrid neural network architectures, enhanced data augmentation techniques, and improved loss functions to deliver unprecedented accuracy and robustness [38].

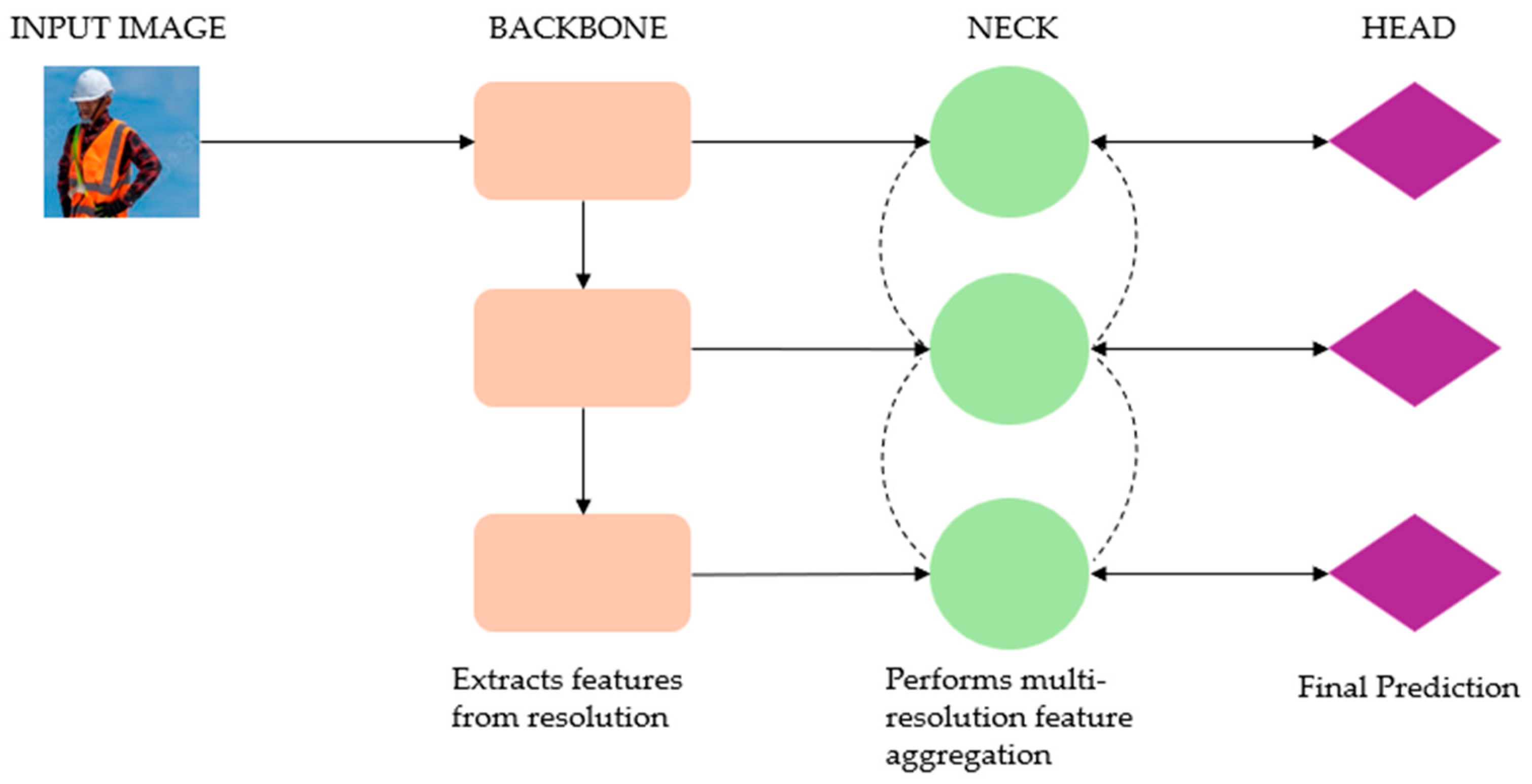

The architecture of YOLO models has evolved significantly, incorporating various innovations to enhance their detection capabilities. The backbone network, responsible for extracting feature maps from the input image, has seen a progression from Darknet-19 in earlier versions to deeper and more complex architectures like CSPDarknet53 [37,38]. The neck component, which aggregates features from different stages of the backbone, often uses PANet and FPN architectures to enhance detection. The head of the YOLO model generates the final predictions, including bounding boxes and class probabilities, with YOLOv3 introducing multi-scale predictions refined in subsequent versions.

Figure 1 shows the architecture of modern object detectors, including the backbone, the neck, and the head.

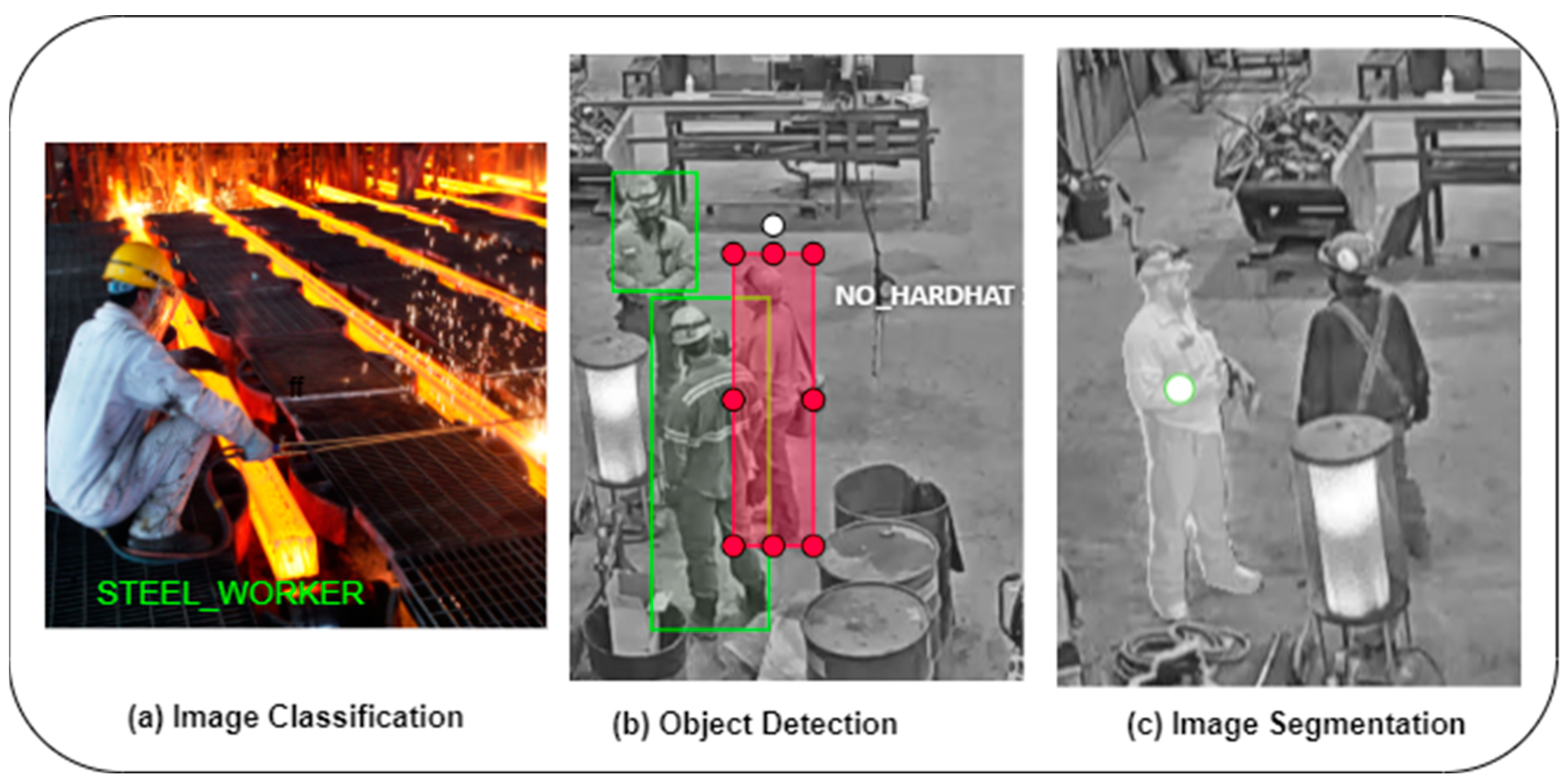

Figure 2 shows examples of the different tasks that YOLO models can perform, including an object detection scenario in which a non-compliant worker (no_hardhat) is highlighted with a red bounding box. These models are trained with a combination of localization, confidence, and classification loss functions, and advances in localization losses such as Distance Intersection over Union (DIoU) and Complete Intersection over Union (CIoU) have improved model convergence and accuracy.
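To make the IoU-based quantities concrete, the following is a minimal sketch of plain IoU and the DIoU score for two axis-aligned boxes, following the commonly published definitions. It is not the loss code of any specific YOLO release; the box format `(x1, y1, x2, y2)` and the function names are assumptions for illustration. The DIoU loss is one minus the returned score, and CIoU adds an aspect-ratio term that is omitted here.

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def diou(a, b):
    """DIoU score: IoU minus the normalized squared distance between box centers."""
    # Squared distance between the two box centers.
    rho2 = ((a[0] + a[2]) / 2 - (b[0] + b[2]) / 2) ** 2 \
         + ((a[1] + a[3]) / 2 - (b[1] + b[3]) / 2) ** 2
    # Squared diagonal of the smallest box enclosing both boxes.
    ex1, ey1 = min(a[0], b[0]), min(a[1], b[1])
    ex2, ey2 = max(a[2], b[2]), max(a[3], b[3])
    c2 = (ex2 - ex1) ** 2 + (ey2 - ey1) ** 2
    return iou(a, b) - (rho2 / c2 if c2 > 0 else 0.0)


predicted = (0, 0, 2, 2)
ground_truth = (1, 1, 3, 3)
print(iou(predicted, ground_truth), diou(predicted, ground_truth))
```

Unlike plain IoU, the center-distance penalty still provides a useful training signal when the predicted and ground-truth boxes do not overlap at all, which is one reason these variants are reported to speed up convergence.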

YOLO networks have been applied across various domains, demonstrating their versatility and effectiveness. In autonomous driving, YOLO models are used for real-time object detection, enabling the detection of pedestrians, vehicles, traffic signs, and obstacles [39]. In security and surveillance, they facilitate real-time monitoring and threat detection [40]. In healthcare, YOLO networks assist in medical imaging tasks such as tumor detection, organ segmentation, and surgical tool localization [41]. In agriculture, they are employed for crop monitoring, pest detection, and yield estimation, enhancing precision farming practices [42].

Table 1 shows a summary of other domains in which YOLO networks have been implemented.

2.5. Research Need Statement

The application of CV systems in the steel manufacturing industry, particularly for safety management, remains underexplored in the existing literature. Current studies predominantly focus on enhancing productivity through CV applications in areas like quality control, monitoring of continuous casting processes, defect detection on steel surfaces, and breakout prediction [50,51,52]. These studies, while valuable, do not specifically address safety management within the steel manufacturing industry. Given the dynamic and hazardous nature of steel manufacturing, along with the variety of risks present in its processes, there is a clear need for dedicated research on the potential of CV systems to improve industrial safety in this sector. This paper aims to bridge this gap by presenting pioneering work that could serve as a foundation for future studies focused on developing CV applications for safety management in steel manufacturing.

This research contributes significantly to the existing body of knowledge by evaluating commercially available CV programs suitable for safety management in the steel industry. The findings offer a comprehensive overview of safety-oriented CV systems that can be applied in this sector, providing a template for steel manufacturers interested in integrating CV technology into their safety management protocols. Additionally, the paper showcases a practical implementation of CV for detecting personal protective equipment (PPE) in a steel manufacturing context, with a case study focusing on safety hard hat detection. This practical application demonstrates the feasibility of using CV for safety management in steel manufacturing.

3. Materials and Methods

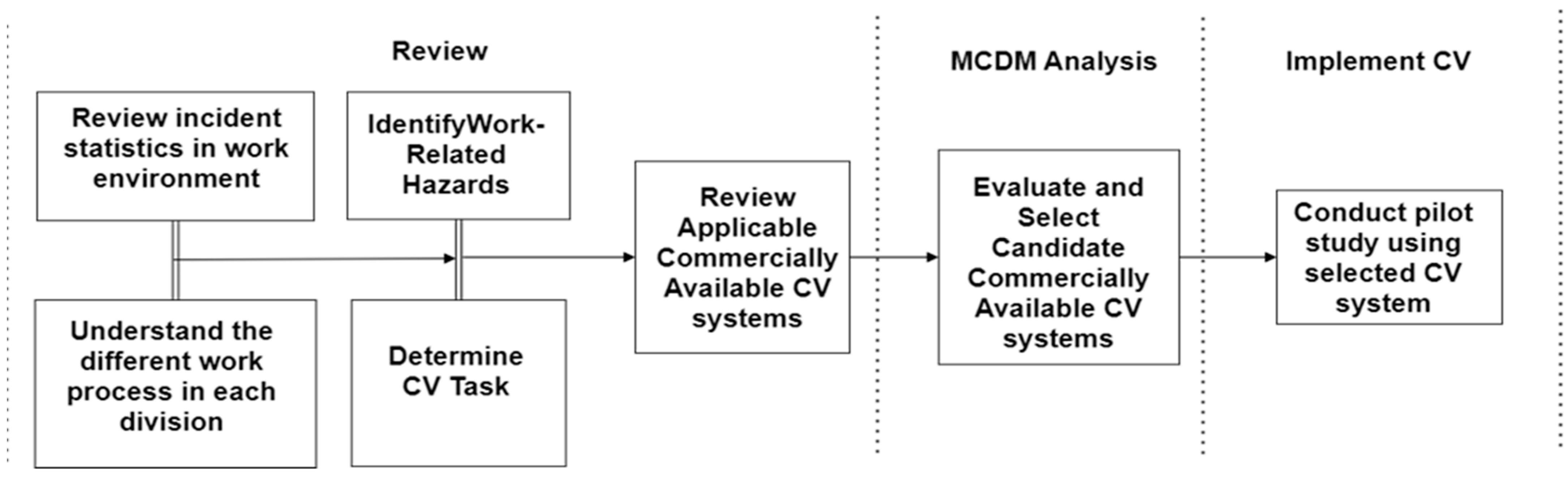

This study aimed to test the feasibility of CV application for safety management in steel manufacturing by conducting a pilot case study that leverages the approach of computer vision-based deep learning technology to automatically detect hard hats on steelworkers. To achieve this objective, a review phase characterizing hazards with computer vision application was conducted, and then a multi-criteria decision model was deployed in selecting commercially available computer vision programs for application toward safety in steel manufacturing.

Figure 3 shows the research process for this study.

3.1. Characterization of Computer Vision Applications for Safety in Steel Manufacturing

To effectively characterize the application of computer vision (CV) for safety in steel manufacturing, especially in a mini-mill context, this study’s approach required a comprehensive understanding of the various work processes involved. This endeavor unfolded in three phases: review, observation, and characterization. During the review phase, the research team scrutinized reports and academic papers on steel mini-mill operations. The team further delved into the mini-mills’ operational standards, machinery types, operation modes, and key safety metrics like the Lost Time Injury Frequency Rate (LTIFR) and Fatality Frequency Rate (FFR). Significantly, the Association for Iron & Steel Technology (AIST) steel wheel application proved invaluable. It offered a systematic and visual overview of standard mini-mill operations, enhancing the team’s conceptual understanding of these processes.

Building on this foundational knowledge, the research team sought an empirical, practical perspective through a live tour of a steel mini-mill. During this visit, the mill’s management team led the research team through various departments and work processes. Delving deeper, group interviews, focusing on gathering narratives about current safety hazards, existing safety measures, and their shortcomings, were conducted as suggested by Bolderston [53]. The interview, lasting approximately 70 min, was a collaborative effort, with team members actively sharing notes and highlighting key points, aligning with the methodology outlined by Guest [54]. The full-day tour, combined with the initial review, provided a robust foundation for the research approach. It enabled a thorough understanding of each work process in the steel mini-mill and identified the inherent hazards faced by workers. With this understanding, each identified hazard could be effectively aligned with corresponding CV tasks.

3.2. Evaluation and Selection of Commercially Available CV Systems for Safety Management

To conduct this pilot case study of detecting workers’ compliance with safety hard hats in the steel mini-mill, there was a need to determine the computer vision system that would serve as the detection system for this pilot study.

3.2.1. Computer Vision System Search

The research team conducted an extensive online search to identify commercially available computer vision (CV) systems suitable for the pilot case study in steel manufacturing safety. Utilizing Google, key search phrases such as “computer vision companies”, “artificial intelligence in health and safety”, “commercially available object detection companies”, and “artificial intelligence and workplace safety” were employed for the search. This search strategy yielded a vast array of CV systems. To refine these results, the focus was exclusively on CV systems that demonstrate potential for use in safety applications. Additionally, to enhance the depth and relevance of the CV system search, recommendations from industry professionals were sought, following the approach recommended by Creswell [55]. This dual strategy of combining an internet search with expert consultations allowed for the identification of the most suitable and effective CV systems relative to this study’s scope, ensuring that the selected systems were both commercially available and directly applicable to workplace safety.

3.2.2. Computer Vision System Evaluation and Selection

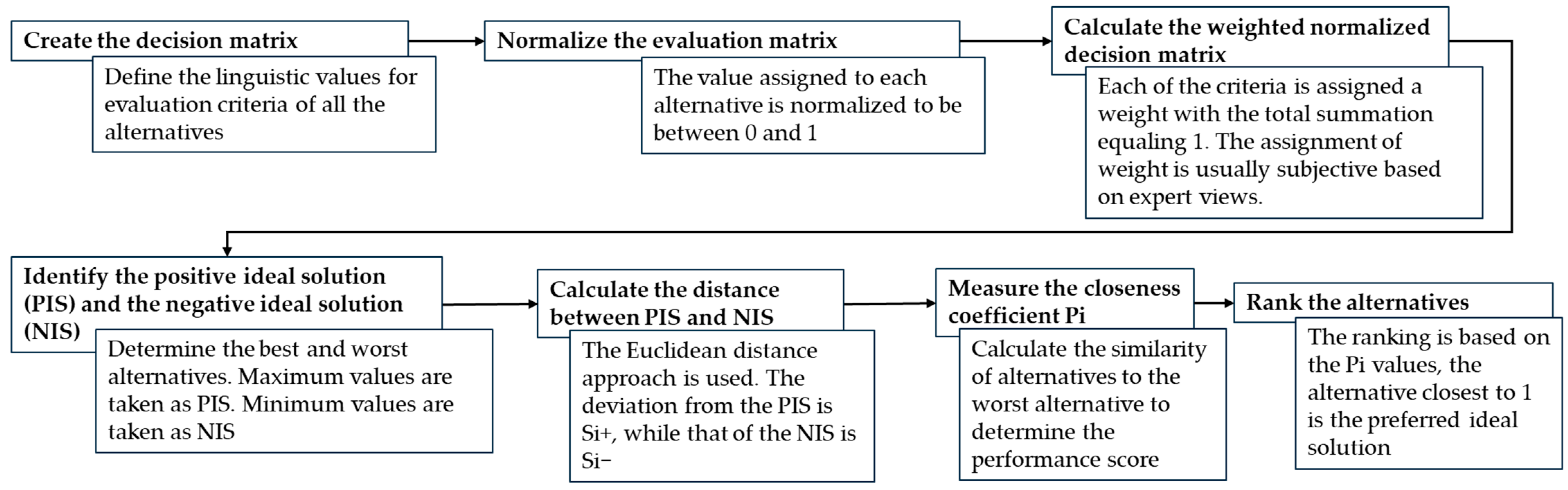

The research identified eight operational off-the-shelf CV systems suitable for safety management in the steel industry. To select the most appropriate system for this pilot study, a multi-criteria decision-making (MCDM) method was utilized. MCDMs are essential tools for decision-makers, aiding in choosing the best option among multiple alternatives based on various criteria. Common MCDMs include the Analytical Hierarchy Process (AHP), Analytical Network Process (ANP), Multi-objective Optimization on the basis of Ratio Analysis (MOORA), and the Technique for Order Preference by Similarity to Ideal Solution (TOPSIS), with the selection often depending on the decision maker’s preferences and objectives [56].

For this study, TOPSIS was used. The method is praised for its straightforwardness, ease of interpretation, and efficiency in identifying the best alternative, as well as for visualizing differences between alternatives using normalized values [57,58]. Originating from Hwang and Yoon in 1981 and modified since, TOPSIS selects the best option by measuring Euclidean distances: it aims to minimize the distance to the ideal alternative, the positive ideal solution (PIS), and maximize the distance from the non-ideal alternative, the negative ideal solution (NIS). The optimal choice is the one closest to the PIS and farthest from the NIS.
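In the commonly published form of the method, this principle can be written compactly as below. The symbols $v_{ij}$, $d_i^{+}$, and $d_i^{-}$ are notation assumed here for illustration and may differ from the notation used in this study's own equations: $v_{ij}$ is the weighted normalized score of alternative $i$ on criterion $j$, $v_j^{+}$ and $v_j^{-}$ are the PIS and NIS values for criterion $j$, and $n$ is the number of criteria.

$$
d_i^{+} = \sqrt{\sum_{j=1}^{n} \left(v_{ij} - v_j^{+}\right)^2}, \qquad
d_i^{-} = \sqrt{\sum_{j=1}^{n} \left(v_{ij} - v_j^{-}\right)^2}, \qquad
p_i = \frac{d_i^{-}}{d_i^{+} + d_i^{-}}
$$

The closeness coefficient $p_i$ lies between 0 and 1. It equals 1 when an alternative coincides with the PIS ($d_i^{+} = 0$) and 0 when it coincides with the NIS ($d_i^{-} = 0$).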

Figure 4 of this study illustrates the flow process of the TOPSIS model, outlining the systematic approach we employed to select the most suitable CV system for our pilot study in the steel industry.

The following steps explain the TOPSIS method implemented in this study:

Step 1: Create the decision matrix consisting of alternatives (the eight commercially available computer vision programs) and criteria (PPE detection, data privacy, proximity to heavy equipment, slips/trips/falls, etc.). Also, define the importance weights of the criteria. Linguistic values for “available” or “not available” were set for the criteria.

The outputs of this step are the decision matrix and the weight factor assigned to each criterion.

Step 2: Normalize the evaluation matrix.

This is to ensure all data are in the same unit. The formulas in Equations (1)–(3) [56,59,60] are used to calculate the normalized values.

Step 3: Calculate the weighted normalized decision matrix.

This is the product of the weights with the normalized values given by Equation (4) [56,59,60].

Criteria functions are either costs or benefits; in this case, “availability” is a benefit function, so a higher value indicates a better alternative.

Step 4: Identify the positive and negative ideal solution. This is carried out by determining the best and the worst alternatives for each evaluation criterion (i.e., the maximum and minimum values) among all the CV programs.

The positive ideal solution is denoted as $V^{+}$ in Equation (5) [56,59,60].

Meanwhile, the negative ideal solution is denoted as $V^{-}$ in Equation (6).

Step 5: Calculate the distance of each alternative from the PIS and the NIS.

The Euclidean distance is used to measure the deviation of each alternative from the PIS and from the NIS, as shown in Equations (7) and (8) [56,59,60].

Step 6: Measure the closeness coefficient $p_i$.

Using the values of $p_i$, the alternatives are ranked; in this case, the $p_i$ value closest to 1 is the best alternative among the options. Equation (9) is used to determine $p_i$ [56,59,60].
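The six steps above can be sketched in a few lines of Python. This is a minimal illustration, not the study's implementation: it assumes vector (Euclidean) normalization in Step 2, which is the most common TOPSIS variant but may not match the study's Equations (1)–(3), and the three alternatives, four criteria, and weights below are hypothetical values on the 1 = "not available", 2 = "available" scale.

```python
import math


def topsis(matrix, weights, benefit):
    """Return the closeness coefficient of each alternative (row of matrix).

    matrix  : rows are alternatives, columns are criteria
    weights : one importance weight per criterion
    benefit : True where a larger value is better, False for cost criteria
    """
    n = len(weights)
    total = sum(weights)
    w = [x / total for x in weights]  # make the weights sum to 1

    # Step 2: vector normalization of each criterion column (assumed variant).
    norms = [math.sqrt(sum(row[j] ** 2 for row in matrix)) for j in range(n)]
    # Step 3: weighted normalized decision matrix.
    v = [[w[j] * row[j] / norms[j] for j in range(n)] for row in matrix]

    # Step 4: positive (PIS) and negative (NIS) ideal solutions per criterion.
    cols = list(zip(*v))
    pis = [max(c) if benefit[j] else min(c) for j, c in enumerate(cols)]
    nis = [min(c) if benefit[j] else max(c) for j, c in enumerate(cols)]

    # Step 5: Euclidean distance of each alternative from PIS and NIS.
    d_pos = [math.dist(row, pis) for row in v]
    d_neg = [math.dist(row, nis) for row in v]

    # Step 6: closeness coefficient; values nearer 1 are better.
    return [dn / (dp + dn) if dp + dn > 0 else 0.0
            for dp, dn in zip(d_pos, d_neg)]


# Hypothetical example: 3 systems scored on 4 benefit criteria.
scores = topsis(
    matrix=[[2, 2, 2, 2], [2, 1, 1, 2], [1, 1, 1, 1]],
    weights=[0.4, 0.3, 0.2, 0.1],
    benefit=[True, True, True, True],
)
ranking = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
print(scores, ranking)
```

In this toy input the first system offers every feature, so it coincides with the PIS and receives a coefficient of 1, while the third offers none and receives 0. With binary availability scores, the ranking is driven largely by the weights, which is why the subjective weight assignment deserves scrutiny.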

3.3. Pilot Study on Safety Hard Hat Detection

3.3.1. Pilot Study Context

There has been extensive research on the application of computer vision (CV) in various industries, as evidenced by the literature review. However, the steel industry remains underrepresented in studies focusing on CV applications for safety management. This gap is particularly significant given the unique challenges that the steel industry faces, such as a volatile working environment, a shortage of training data, and concerns about data privacy among industry stakeholders. This study aims to address these challenges by serving as an introductory feasibility pilot study on the application of computer vision for safety management in the steel manufacturing industry. The primary focus is on assessing the practicality and feasibility of implementing CV systems in this context rather than on optimizing performance metrics. To conduct this feasibility pilot study, the candidate CV system utilized was selected through an MCDM analysis. This study specifically explores the detection of safety hard hats on steelworkers. Using the selected CV system, a quantitative analysis was performed to evaluate its effectiveness and feasibility in the steel manufacturing environment. By focusing on feasibility, this study provides a foundational outlook on the potential of CV applications in the steel industry, paving the way for more detailed and performance-oriented research in the future. The results and insights gained from this pilot study are crucial for understanding the practical implications and readiness of CV systems for enhancing safety management in steel manufacturing.

3.3.2. Detection Models

Three pre-trained YOLO variants (YOLOv5m, YOLOv8m, and YOLOv9c) were utilized and compared for detecting safety hard hats in the steel manufacturing industry. YOLOv5m, known for its balance of speed and accuracy, incorporates CSPNet for efficient gradient flow, PANet for multi-scale feature fusion, and SPPF for enhanced feature extraction. YOLOv8m builds on this foundation with CSPDarknet53, combining PANet and FPN for improved multi-scale detection and advanced augmentation techniques like MixUp and CutMix to enhance generalization, while YOLOv9c integrates CSPResNeXt for robust feature extraction, BiFPN for optimal feature fusion, and adaptive detection methods for improved localization and reduced false positives. Together, these models offer the speed, accuracy, and robustness needed for real-time safety applications in industrial settings. The comparison of the three models can be seen in Table 2.

3.3.3. Dataset Collection and Processing

In conducting the quantitative analysis for this study, a dataset comprising 703 meticulously labeled images from a steel manufacturing site was utilized. The images were extracted from five hours of CCTV footage, specifically from the maintenance (‘maint’) area of a steel mini-mill, which also includes a storeroom section. This particular area was selected due to its high frequency of worker activity, rendering it an optimal site for data collection within the constrained observation window. The labeling process was executed using the Computer Vision Annotation Tool (CVAT), an interactive video and image annotation tool designed for computer vision applications.

To assess the impact of fine-tuning on the pre-trained primary object detection (OD) model, it was retrained using the small yet diverse dataset of 703 images. Diversity was introduced to the dataset through various augmentation techniques, including rotation, horizontal flipping, and adjustments in grayscale, brightness, and saturation. This strategy was critical to avert overfitting of the model to the dataset. The scarcity of instances depicting safety hard hat violations in the CCTV data necessitated the generation of artificial images embodying such infractions. This was accomplished through the Stable Diffusion inpainting technique, as described by Rombach et al. [61], with the result exhibited in Figure 5. K-fold cross-validation (K = 5) was employed to enhance the robustness of the model evaluation. For each fold, the dataset was split into training (80%) and validation (20%) subsets to facilitate comprehensive model assessment.
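A five-fold split of this kind can be sketched as follows. This is a minimal illustration using only the standard library; the function name `k_fold_indices` and the fixed seed are assumptions, and the study's actual splitting tool is not stated. Each image index lands in the validation subset of exactly one fold, giving roughly an 80/20 split per fold.

```python
import random


def k_fold_indices(n_items, k=5, seed=0):
    """Yield (train, val) index lists for k-fold cross-validation."""
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)  # reproducible shuffle
    folds = [indices[i::k] for i in range(k)]  # k near-equal, disjoint folds
    for i in range(k):
        val = folds[i]
        train = [idx for m, fold in enumerate(folds) if m != i for idx in fold]
        yield train, val


# 703 labeled images, as reported for this dataset.
splits = list(k_fold_indices(703, k=5))
for fold_number, (train, val) in enumerate(splits, start=1):
    print(fold_number, len(train), len(val))
```

Because the images come from continuous CCTV footage, near-identical consecutive frames can end up on both sides of a random split; grouping frames by time segment before splitting is one way to reduce that leakage, although the text does not say whether this was done.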

Figure 6 shows a sample of the training dataset.

3.3.4. Evaluation Metrics

To evaluate the performance of the object detection (OD) models, the following metrics were used: precision, recall, F1-score, average precision (AP), specificity, and area under the curve (AUC) [62,63]. To detect workers’ compliance with wearing safety hard hats, not wearing a hard hat was considered the positive class. Accordingly, a true positive (TP) is a worker without a hard hat who is correctly detected as such, a false positive (FP) is a worker wearing a hard hat who is incorrectly flagged as not wearing one, and a false negative (FN) is a worker without a hard hat whom the model fails to flag. An Intersection over Union (IoU) threshold of 0.5 was used to determine the identification of these parameters in the confusion matrix.
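As a minimal sketch of how the count-based metrics follow from the confusion matrix, the function below computes precision, recall, F1-score, and specificity. The function name and the example counts are hypothetical and are not results from this study; a true negative (TN) is taken here to mean a worker wearing a hard hat who is correctly not flagged.

```python
def detection_metrics(tp, fp, fn, tn):
    """Precision, recall, F1, and specificity with 'no hard hat' as positive."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    specificity = tn / (tn + fp) if tn + fp else 0.0
    return {"precision": precision, "recall": recall,
            "f1": f1, "specificity": specificity}


# Hypothetical counts for illustration only.
metrics = detection_metrics(tp=40, fp=10, fn=10, tn=140)
print(metrics)
```

With violations as the positive class, recall measures how many non-compliant workers are caught, which is typically the quantity a safety team cares about most, while precision reflects how often an alert is a false alarm.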

4. Results and Discussion

The results of this study are systematically outlined in the subsequent sections, following the research methodology. These sections detail hazard characterization, the application of the TOPSIS technique, and the analysis of data from this pilot case study. This structured approach offers a comprehensive view of this study’s findings, from identifying and categorizing hazards to applying TOPSIS for their assessment and culminating in the empirical insights gained from this case study.

4.1. Safety Hazard Characterization and TOPSIS Analysis

4.1.1. Safety Hazard Characterization for CV Applications in Steel Manufacturing

This study rigorously identifies hazards inherent in each stage of the mini-mill steel manufacturing process and demonstrates how CV can be strategically employed to monitor these hazards. This approach aims to provide early warnings, thereby mitigating the risks of injuries, illnesses, and fatalities. The hazard assessment begins at the shredding site, a phase where scrap metals are processed, segregating ferrous from non-ferrous metals. It is noteworthy that not all mini-mills include a shredding phase; however, this study encompasses it for comprehensive analysis. The process continues with the transportation of the ferrous metal to the Electric Arc Furnace (EAF) for melting. The subsequent stage involves purification at the Ladle Metallurgical Station (LMS), followed by the solidification of the molten steel into semi-finished forms like billets, slabs, or blooms at the continuous caster. These semi-finished products undergo further processing in the rolling mill, where they are transformed into finished steel products through various methods, including annealing, hot forming, cold rolling, pickling, galvanizing, coating, or painting. The manufacturing cycle concludes with the finishing and transportation of the final products.

Table 3 in this study provides a detailed mapping of CV tasks and implementation processes to the identified hazards in each work process at the mini-mill. The goal is to establish a framework where CV can effectively track, detect, or monitor these hazards.

Figure 7 in this study visually encapsulates the entire steel manufacturing workflow, highlighting the pivotal role of CV in augmenting safety throughout the process. By aligning CV tasks with specific hazards at each stage, this study offers a pragmatic approach to enhancing safety in the dynamic environment of steel mini-mills.

4.1.2. TOPSIS Analysis

With the hazards characterized, a CV system had to be selected for the pilot case study. The TOPSIS technique, as described in the methodology, was used to analyze the eight (8) computer vision programs (alternatives) considered for evaluation, with the objective of selecting one. The eight CV systems shortlisted were Everguard, Intenseye, Cogniac, Protex, Rhyton, Chooch, Kogniz, and Matroid. These alternatives were appraised on eleven (11) criteria: PPE detection, data privacy, ergonomics, health, geofencing, proximity to heavy equipment, slips/trips/falls, application in steel manufacturing, real-time processing, user-friendliness of graphical user interface (GUI), and versatility in other applications. These criteria were identified from the literature review and selected based on their respective influence in achieving satisfactory safety management in steel manufacturing.

Table 4, Table 5, Table 6, Table 7, and Table 8 present the results of the TOPSIS MCDM approach used for analysis.

Table 4 shows the defined linguistic values for each of the evaluation criteria, with 1 and 2 denoting “Not Available” and “Available”, respectively.

Table 5 presents the elements of the evaluation matrix, showing the relationship between the criteria and the alternatives together with the assigned weights. The weights were assigned subjectively, based on the research team’s safety experience and each criterion’s impact on the research objective.

Table 6 shows the normalized values of the evaluation matrix.

Table 7 shows the weighted values of the evaluation matrix, including the positive ideal solution (PIS) and the negative ideal solution (NIS).

Table 8 shows the separation distance measure from the PIS and the NIS, the closeness ratio, and the ranking of alternatives. The closeness ratio to the PIS determined which alternative was selected. Out of the eight CV systems, Everguard, Intenseye, and Chooch were ranked 1st, 2nd, and 3rd, with closeness ratios of 0.760, 0.444, and 0.435, respectively. Therefore, based on the examined criteria, this MCDM analysis selected Everguard as the candidate CV system, among those evaluated, that could be deployed for safety management in steel manufacturing.
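The TOPSIS steps summarized in Tables 5 to 8 (evaluation matrix, normalization, weighting, PIS/NIS, separation distances, and closeness ratio) can be sketched in a few lines of Python. This is a minimal illustration only: it assumes vector normalization and treats every criterion as a benefit criterion, and the three systems ("A", "B", "C"), their 1/2 availability scores, and the weights are hypothetical placeholders, not the values used in this study.

```python
import math

def topsis(matrix, weights):
    """Return the closeness ratio of each alternative (row) to the PIS.

    Assumes vector normalization and benefit-type criteria (higher is better).
    """
    n_crit = len(weights)
    # Step 1: column-wise Euclidean norms for vector normalization.
    norms = [math.sqrt(sum(row[j] ** 2 for row in matrix)) for j in range(n_crit)]
    # Step 2: normalized, weighted evaluation matrix.
    weighted = [[weights[j] * row[j] / norms[j] for j in range(n_crit)]
                for row in matrix]
    # Step 3: positive and negative ideal solutions per criterion.
    pis = [max(row[j] for row in weighted) for j in range(n_crit)]
    nis = [min(row[j] for row in weighted) for j in range(n_crit)]
    # Step 4: separation distances and closeness ratio.
    closeness = []
    for row in weighted:
        d_pos = math.dist(row, pis)
        d_neg = math.dist(row, nis)
        total = d_pos + d_neg
        closeness.append(d_neg / total if total else 0.0)
    return closeness

# Hypothetical data: 1 = "Not Available", 2 = "Available".
systems = ["A", "B", "C"]
scores = [
    [2, 2, 2, 2],
    [2, 1, 2, 1],
    [1, 1, 2, 1],
]
weights = [0.4, 0.3, 0.2, 0.1]  # hypothetical weights summing to 1

closeness = topsis(scores, weights)
ranking = [name for _, name in sorted(zip(closeness, systems), reverse=True)]
print(ranking)
```

The alternative with the largest closeness ratio is ranked first, which mirrors how the closeness ratio was used to select the candidate system.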

4.2. Pilot Case Study Results: Safety Hard Hat Detection Using Candidate CV System

4.2.1. Experimental Environment

The network model used for analysis was developed in the Python programming language with the PyTorch deep learning library. PyTorch provided the framework for feeding the annotated data into the model, updating weights, and performing the calculations essential for the analysis. The specific configuration of the environment, including the CPU, GPU, and other relevant libraries and tools, is detailed in Table 9.

All training images were resized to match the input size of 640. The datasets were trained for 50 epochs with a batch size of 16 and a learning rate of 0.001. The momentum, box loss gain, and optimizer choice were held constant. The Stochastic Gradient Descent (SGD) optimizer was used with a momentum of 0.937, following Gupta et al. [72], who noted that although SGD converges slower and its gradients are uniformly scaled, its lower training error leads to better generalization, which is especially beneficial for test data. Training was executed on a single NVIDIA 4090 GPU. This setup was instrumental in ensuring the smooth execution and accurate processing of the object detection task.
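To make the role of the learning rate and momentum concrete, the following sketch collects the reported hyperparameters and applies the SGD-with-momentum update to a single scalar weight. It is an illustration under stated assumptions, not the training code used in this study: the `TrainConfig` class and `sgd_momentum_step` function are hypothetical names, the update follows the convention used by PyTorch's SGD optimizer without dampening (velocity = momentum × velocity + gradient), and the loss is a toy quadratic.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TrainConfig:
    """Hyperparameters reported in the text (class name is hypothetical)."""
    image_size: int = 640
    epochs: int = 50
    batch_size: int = 16
    learning_rate: float = 0.001
    momentum: float = 0.937

def sgd_momentum_step(param, grad, velocity, cfg):
    """One SGD-with-momentum update on a scalar parameter."""
    velocity = cfg.momentum * velocity + grad      # accumulate past gradients
    param = param - cfg.learning_rate * velocity   # step against the velocity
    return param, velocity

cfg = TrainConfig()
w, v = 1.0, 0.0
for _ in range(2):
    grad = 2.0 * w  # gradient of the toy loss w**2
    w, v = sgd_momentum_step(w, grad, v, cfg)
print(w, v)
```

With a momentum close to 1, the velocity retains most of the previous gradient, so successive steps in the same direction grow larger even though the learning rate is small.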

4.2.2. Detection Results across Models

Precision, Recall, F-1 Score, and mAP Results

To ensure a robust evaluation of the models’ performance, a five-fold cross-validation approach was employed. This method allows for a comprehensive assessment of the models’ ability to generalize to unseen data, providing a more reliable estimate of their real-world performance. Three state-of-the-art YOLO variants (YOLOv5m, YOLOv8m, and YOLOv9c) were evaluated on a steel mill dataset comprising 703 labeled images.

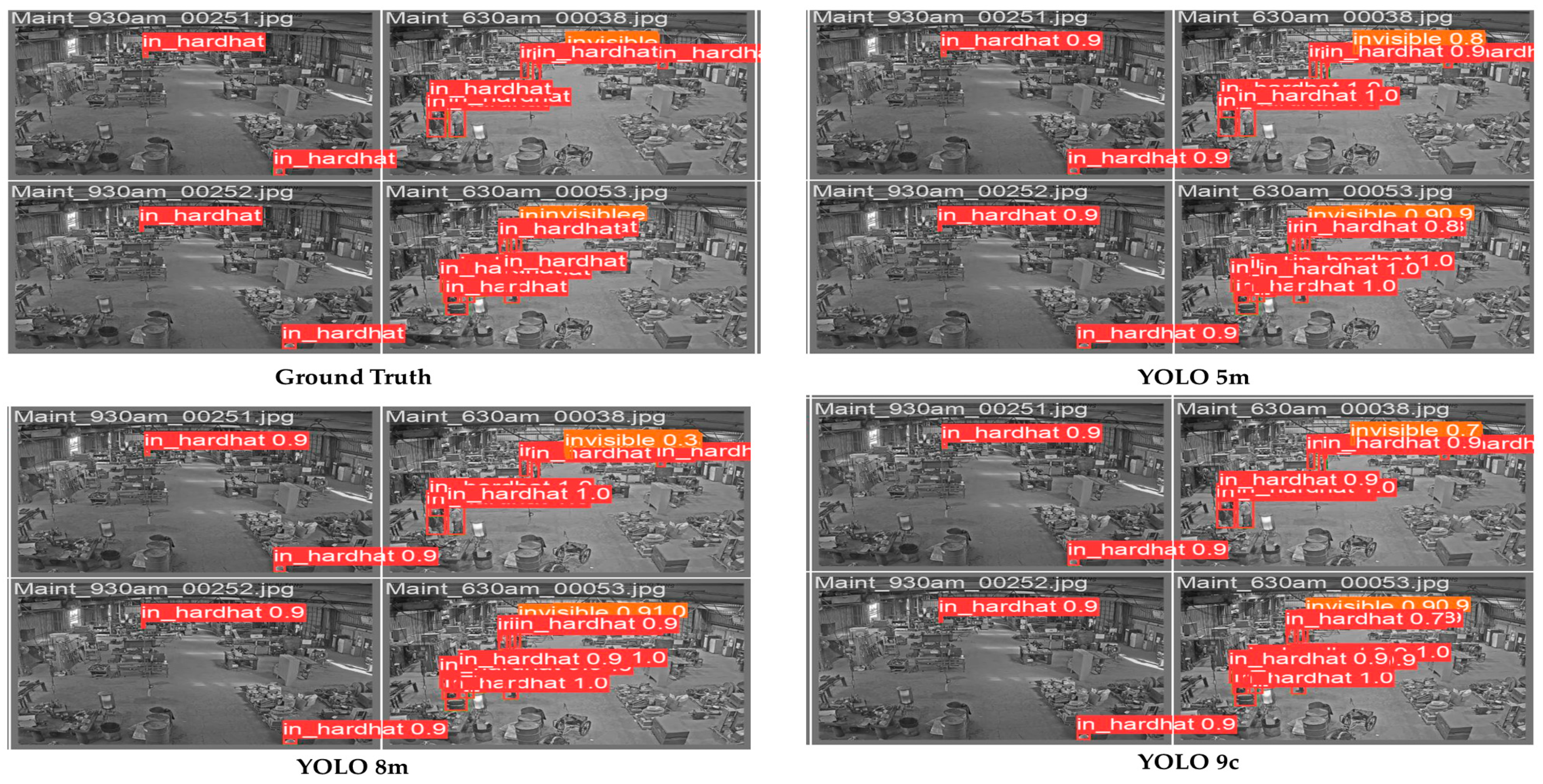

The evaluation involved partitioning the dataset into five subsets of approximately equal size, where each subset served as the test set once while the remaining subsets were used for training. This process was repeated five times, ensuring that every image was used for both training and validation. The performance metrics for each fold were averaged to provide an overall estimate of the models’ effectiveness. A visual comparison of the models’ predictions against the ground truth labels for one example is shown in Figure 8. The ground truth images represent the actual safety compliance labels, serving as the benchmark. The detection results for each model highlight instances of workers wearing hard hats (“in_hardhat”), not wearing hard hats (“no_hardhat”), and cases where workers are not visible (“invisible”). High confidence scores are observed across various models in these instances, reflecting the models’ predictive capabilities.
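The five-fold partitioning described above can be sketched as follows. This is a minimal illustration using only the Python standard library. The function name `k_fold_indices` and the random seed are assumptions, and the study's actual splitting procedure may differ, for example by stratifying on class.

```python
import random

def k_fold_indices(n_items, k=5, seed=0):
    """Split item indices into k folds; each fold is held out exactly once."""
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)
    # Striding over the shuffled list gives fold sizes that differ by at most one.
    folds = [indices[i::k] for i in range(k)]
    splits = []
    for i, held_out in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        splits.append((train, held_out))
    return splits

splits = k_fold_indices(703, k=5)
fold_sizes = [len(held_out) for _, held_out in splits]
print(fold_sizes)
```

Because 703 is not divisible by five, the held-out folds contain either 140 or 141 images, and each image appears in exactly one held-out fold.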

Table 10 presents a comprehensive summary of the cross-validation results for each model across four key performance metrics: precision, recall, F1-score, and mean average precision (mAP). These metrics provide a detailed insight into the models’ effectiveness in detecting safety compliance and violations. High precision indicates the models’ ability to accurately identify non-compliant workers, minimizing false positives. High recall ensures that most non-compliant instances are detected, minimizing false negatives. The F1-score provides a balance between precision and recall, reflecting the overall effectiveness of the models. Mean average precision (mAP) evaluates the models’ performance across various recall levels, providing a comprehensive measure of accuracy. The table reports the K-fold cross-validation results for the three YOLO models (YOLOv5m, YOLOv8m, and YOLOv9c) as average precision, recall, F1-score, and mAP across five folds.

YOLOv5m demonstrated an average precision of 0.976, with individual fold values ranging from 0.96 to 0.98. Its recall was consistently high, averaging 0.974, indicating that the model effectively identifies true positive instances. However, its F1-score exhibited some variability, with a mean of 0.956, suggesting fluctuations in the balance between precision and recall. The mAP for YOLOv5m averaged 0.940, showing robust object detection capabilities across different classes despite some variability.

YOLOv8m, on the other hand, exhibited superior consistency across all metrics. Its average precision was 0.978, with little variation across folds. The recall averaged 0.974, matching YOLOv5m, but with less variability, indicating more reliable performance. The F1-score for YOLOv8m was consistently high, with an average of 0.978, reflecting its strong ability to balance precision and recall. The mAP for YOLOv8m averaged 0.938, demonstrating its capability to accurately detect objects across different classes with slight variability but still maintaining high performance.

YOLOv9c displayed high precision and recall values similar to YOLOv8m, with averages of 0.974 and 0.976, respectively. Its F1-score was the highest among the three models, averaging 0.982, indicating the best balance between precision and recall. YOLOv9c’s mAP was the highest, with an average of 0.944, showcasing its superior performance in detecting objects across all classes consistently.
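For reference, the precision, recall, and F1-score definitions discussed above can be written as a short function of true positive, false positive, and false negative counts. The counts in the example are hypothetical and are not taken from Table 10. The function name `detection_metrics` is likewise an assumption for illustration.

```python
def detection_metrics(tp, fp, fn):
    """Precision, recall, and F1-score from detection counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0  # share of detections that are correct
    recall = tp / (tp + fn) if tp + fn else 0.0     # share of true instances that are found
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0  # harmonic mean of the two
    return precision, recall, f1

# Hypothetical counts for one class in one fold.
precision, recall, f1 = detection_metrics(tp=90, fp=10, fn=30)
print(round(precision, 3), round(recall, 3), round(f1, 3))
```

In a cross-validation setting, these per-fold values would then be averaged across the five folds to obtain summary figures of the kind reported in the text.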

Overall, the high performance across all models (with all metrics above 0.93) demonstrates the viability of using these YOLO variants for hard hat detection in steel manufacturing environments. The consistent recall scores obtained are particularly important for safety applications, as they indicate a low probability of missing instances where workers are not wearing hard hats. However, the slight variations in performance metrics highlight the importance of model selection based on specific use-case requirements. For instance, if minimizing false alarms is a priority, YOLOv8m might be preferred due to its high and consistent precision. If adaptability to various scenarios is crucial, YOLOv9c could be the better choice, given its superior mAP.

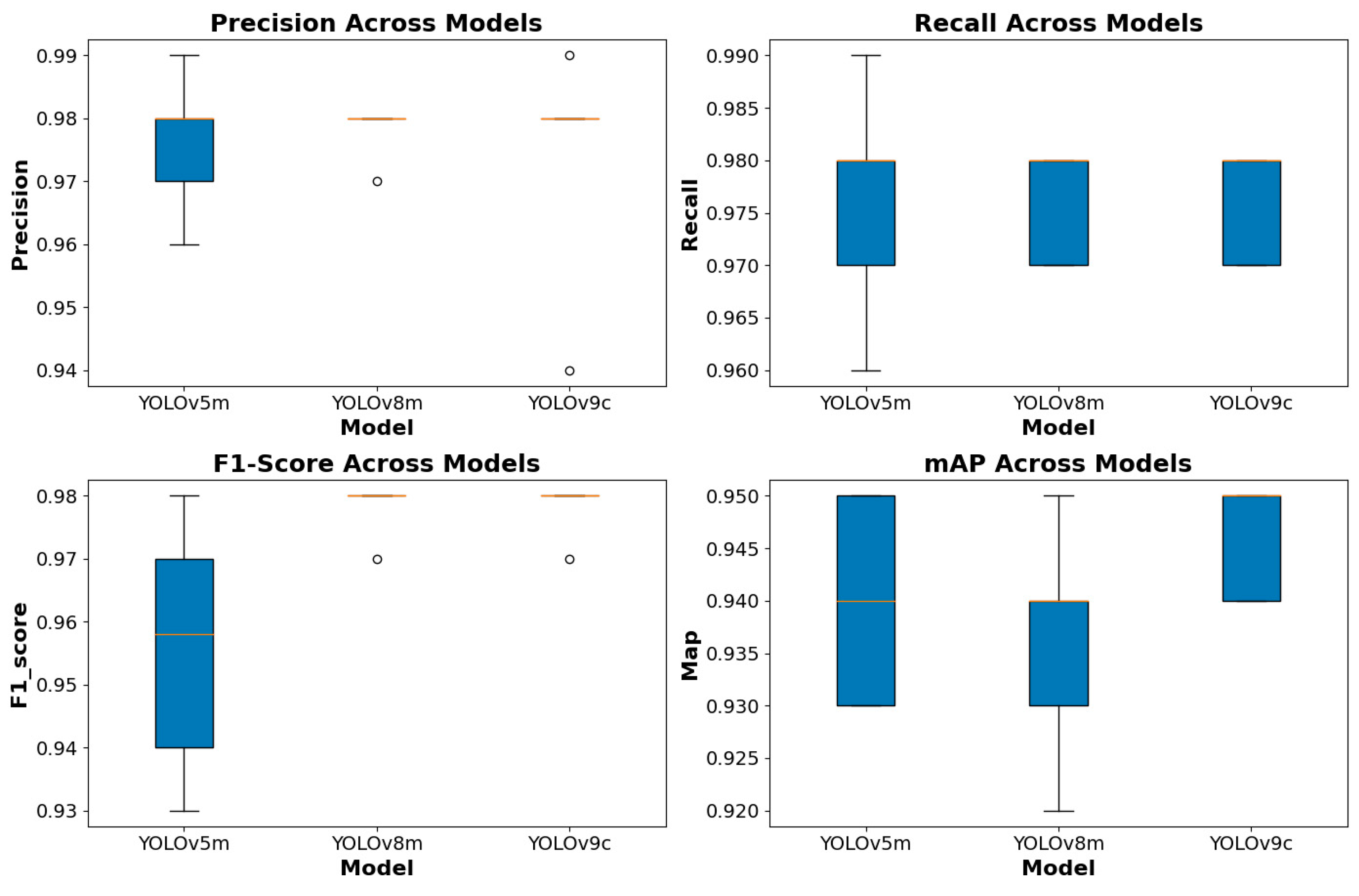

The box plots in Figure 9 provide a visual representation of the performance of three YOLO models (YOLOv5m, YOLOv8m, and YOLOv9c) across multiple metrics: precision, recall, F1-score, and mean average precision (mAP). The precision plot shows that YOLOv5m has a wider spread compared to YOLOv8m and YOLOv9c, indicating more variability in its precision across different folds. The median precision of YOLOv5m is slightly lower than that of YOLOv8m and YOLOv9c, which have very similar and consistent precision values, as evidenced by their narrow interquartile ranges and few outliers.

In the recall plot, YOLOv5m exhibits more variability compared to the other two models. The recall values for YOLOv8m and YOLOv9c are very consistent, with their medians and interquartile ranges nearly identical, highlighting their reliability in identifying true positive instances across different folds. The F1-score plot shows that YOLOv5m has a wider interquartile range and several outliers, indicating fluctuations in balancing precision and recall across folds. In contrast, YOLOv8m and YOLOv9c demonstrate very stable F1-scores, with narrow interquartile ranges and few outliers, reflecting their strong and consistent performance. The mAP plot reveals that YOLOv5m has a slightly wider spread compared to YOLOv8m and YOLOv9c, but its median mAP is comparable to the other two models. YOLOv8m shows a noticeable variability in mAP, suggesting some inconsistency in detecting objects across different classes. YOLOv9c, however, maintains a high and consistent mAP, as indicated by its narrow interquartile range and absence of outliers.
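The quantities read off a box plot (median, interquartile range, and outliers) can be computed directly from per-fold scores. The sketch below assumes the common convention that points beyond 1.5 × IQR from the quartiles are drawn as outliers. The per-fold values are hypothetical and are not the study's data, and the function name `box_plot_summary` is an assumption.

```python
import statistics

def box_plot_summary(values):
    """Median, interquartile range, and 1.5 x IQR outliers for a list of scores."""
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low_fence, high_fence = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    outliers = [v for v in values if v < low_fence or v > high_fence]
    return {"median": median, "iqr": iqr, "outliers": outliers}

# Hypothetical per-fold scores for one model and one metric.
fold_scores = [0.90, 0.96, 0.97, 0.98, 0.98]
summary = box_plot_summary(fold_scores)
print(summary)
```

A narrow interquartile range with no outliers corresponds to the consistent behavior described for YOLOv8m and YOLOv9c, while a wider range or flagged points corresponds to fold-to-fold variability.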

The high performance across all models (with all metrics consistently above 0.93) validates the feasibility of using these YOLO variants for hard hat detection in steel manufacturing environments. The consistently high recall scores are particularly crucial for safety applications, as they indicate a low probability of missing instances where workers are not wearing hard hats. However, the slight variations in performance metrics highlight the importance of model selection based on specific use-case requirements. For areas where minimizing false alarms is critical, YOLOv8m might be preferred due to its high and consistent precision and F1-score. For applications requiring adaptability to various scenarios, or where overall detection performance is paramount, YOLOv9c could be the optimal choice given its superior mAP. In scenarios where computational resources are limited, or where a balance between performance and model complexity is needed, YOLOv5m remains a viable option.

Specificity and AUC Results

The AUC and specificity analysis in Figure 10 revealed nuanced performance differences among YOLOv5m, YOLOv8m, and YOLOv9c for hard hat detection, with an additional challenge presented by the grayscale nature of the source videos. For the “in_hardhat” class, all models demonstrated high AUC values (>0.7), with YOLOv9c slightly outperforming the others (AUC ≈ 0.75), indicating robust discrimination ability for compliant hard hat usage even in the absence of color information. However, the “no_hardhat” class presented significant challenges, with lower AUC scores across all models, particularly for YOLOv5m (AUC ≈ 0.38), while YOLOv9c showed the best performance (AUC ≈ 0.6). This performance disparity likely stems from a class imbalance in the training data, limitations of synthetic data augmentation, and the reduced feature space inherent to grayscale imagery. The grayscale format potentially exacerbated difficulties in distinguishing subtle contrasts between hard hats and backgrounds or other headwear, especially in low-light areas of the steel mill.

Specificity analysis further elucidated these trends, with YOLOv8m exhibiting the highest specificity for the “in_hardhat” class (≈0.54), suggesting superior false positive mitigation even in grayscale conditions. For the “no_hardhat” class, YOLOv9c showed the highest specificity (≈0.55), albeit with greater variability across folds. These results underscore the need for targeted improvements in model architecture and training strategies, with particular attention to enhancing performance on grayscale inputs. Future work should focus on addressing class imbalance through more sophisticated data augmentation techniques, developing illumination-invariant features, and potentially exploring multi-modal approaches that can complement the limited information in grayscale imagery. While YOLOv9c demonstrates the most promise for real-world deployment in steel manufacturing safety monitoring, particularly for detecting safety violations, further refinement is necessary to improve the reliability of “no_hardhat” detection in challenging grayscale scenarios typical of steel manufacturing environments.
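As a point of reference for the two metrics discussed in this subsection, the sketch below computes specificity from true negative and false positive counts and a one-vs-rest AUC from confidence scores using the rank-based (pairwise comparison) formulation. How these metrics were derived from the detector outputs in this study is not specified here, so the function names, labels, scores, and counts are all hypothetical.

```python
def specificity(tn, fp):
    """True negative rate: share of actual negatives that are not flagged."""
    return tn / (tn + fp) if tn + fp else 0.0

def roc_auc(labels, scores):
    """AUC as the probability that a positive outscores a negative (ties count 0.5)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Hypothetical one-vs-rest example for a single class.
labels = [1, 1, 1, 0, 0]            # 1 = instance belongs to the class
scores = [0.9, 0.8, 0.4, 0.5, 0.3]  # model confidence for the class
auc = roc_auc(labels, scores)
spec = specificity(tn=54, fp=46)
print(round(auc, 3), round(spec, 2))
```

Under this formulation an AUC of 0.5 corresponds to chance-level ranking, so a value below 0.5 means negatives tend to receive higher scores than positives for that class.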

5. Contributions and Limitations of This Study

This study provides a detailed characterization of the specific hazards present in the steel manufacturing industry and explores how computer vision (CV) technologies can be applied to mitigate these risks. By identifying key risk factors and mapping them to CV applications, the research offers a strategic framework for enhancing workplace safety; this detailed characterization is currently lacking in existing research. This pilot study makes several significant contributions to the field of computer vision-based safety management in the steel industry while also acknowledging important limitations. Primarily, it pioneers the application of state-of-the-art YOLO models (YOLOv5m, YOLOv8m, and YOLOv9c) for safety management, specifically hard hat detection in steel manufacturing environments, addressing a critical gap in both the literature and industry practice. Also, this study provides a unique analysis of these models’ performance on grayscale imagery, which is common in industrial CCTV systems but underrepresented in computer vision research. The comprehensive comparison of these models, utilizing diverse metrics (precision, recall, F1-score, mAP, specificity, and AUC) across multiple classes (in_hardhat, no_hardhat, and invisible), provides valuable insights into their relative strengths and weaknesses in this specific context. Furthermore, this study identifies unique challenges in applying computer vision in steel manufacturing, such as extreme lighting conditions and dynamic environments, establishing a performance baseline and methodological framework for future research.

However, several limitations must be considered when interpreting these results. This study utilized a relatively small dataset (703 images) from a specific area of a steel mini-mill, potentially impacting the generalizability of findings. Class imbalance, particularly the scarcity of “no_hardhat” instances, necessitated synthetic data augmentation, which may not fully capture real-world complexity. Additionally, this study’s focus solely on hard hat detection does not address the full spectrum of safety equipment required in steel manufacturing.

Despite these limitations, the high overall performance of the YOLO models in hard hat detection, especially in precision, recall, F1-score, and mAP, demonstrates the potential of computer vision for enhancing safety monitoring in steel manufacturing. These findings suggest that with further refinement, such models could significantly improve compliance with safety regulations and potentially reduce accidents in steel manufacturing environments. Future research should focus on expanding the dataset to include more diverse scenarios, developing techniques to improve model performance in detecting safety violations and handling occlusions, evaluating real-time performance, and extending the scope to include other critical safety equipment. Specifically, we recommend (a) collecting larger, more diverse datasets that better represent the full spectrum of steel manufacturing environments; (b) developing advanced data augmentation techniques to address class imbalance issues; (c) investigating multi-camera setups to mitigate occlusion problems; and (d) extending the study to include the detection of other safety equipment such as safety glasses, gloves, and protective clothing.

This pilot study provides crucial insights that will guide future research and development efforts in industrial safety, balancing promising results with a clear understanding of current limitations and areas for improvement. By addressing these challenges, future studies can further enhance the applicability and reliability of computer vision systems in the steel manufacturing environment.

6. Conclusions

The paramount importance of worker safety in steel manufacturing sites necessitates vigilant monitoring against unsafe practices and proactive identification of potential hazards. This research explored the feasibility and potential of implementing computer vision technologies for safety management within the steel manufacturing industry through a pilot case study focused on automatically detecting the use of hard hats by steelworkers using computer vision-based deep learning technology.

This study began with a comprehensive review phase characterizing hazards in the steel manufacturing environment and exploring their mitigation through computer vision applications. A multi-criteria decision model (TOPSIS MCDM) was then deployed to select commercially available computer vision programs suitable for safety management in this context. This approach involved a thorough assessment of hazards present in a steel mini-mill, accomplished through detailed analysis of mill reports, on-site observations, and stakeholder consultations. This comprehensive analysis provided the necessary insights for aligning potential hazards with computer vision capabilities, demonstrating the practicality of using computer vision for automated hazard recognition and active worksite surveillance. An extensive online search led to the evaluation of eight commercially available computer vision systems, with the Everguard system emerging as the most suitable candidate for this pilot study.

This pilot study demonstrated the feasibility of implementing computer vision systems for hard hat detection in steel manufacturing environments. We evaluated three state-of-the-art YOLO models (YOLOv5m, YOLOv8m, and YOLOv9c) using a dataset of 703 grayscale images from a steel mini-mill. A comprehensive comparison of these models across diverse metrics (precision, recall, F1-score, mAP, specificity, and AUC) provided valuable insights into their relative strengths and weaknesses in this specific context. All models showed promising performance, particularly for the “in_hardhat” class, with high AUC values (>0.7) and YOLOv9c slightly outperforming the others. However, the “no_hardhat” class presented significant challenges, with lower AUC scores across all models, particularly for YOLOv5m. YOLOv9c demonstrated the best performance in detecting safety violations. The grayscale nature of the source videos added complexity to the detection task, potentially exacerbating difficulties in distinguishing subtle contrasts in low-light areas of the steel mill.

While this study affirms the feasibility of applying computer vision-based deep learning for safety management in steel manufacturing environments, it also highlights important challenges. These include class imbalance issues, the limitations of synthetic data augmentation, and the reduced feature space inherent to grayscale imagery. Future research should focus on addressing these challenges through more sophisticated data augmentation techniques, developing illumination-invariant features, and exploring multi-modal approaches to complement the limited information in grayscale imagery.

Looking ahead, this methodology holds promise for detecting additional safety equipment such as vests, gloves, and glasses, as well as for monitoring workers’ posture and proximity to heavy machinery. These areas represent valuable directions for future research, contributing to the continuous enhancement of workplace safety in the steel manufacturing industry.

In conclusion, this pilot study provides crucial insights that will guide future research and development efforts in industrial safety, balancing promising results with a clear understanding of current limitations and areas for improvement. By addressing these challenges, future studies can further enhance the applicability and reliability of computer vision systems in industrial safety management, particularly in the complex and dynamic environment of steel manufacturing.