Abstract

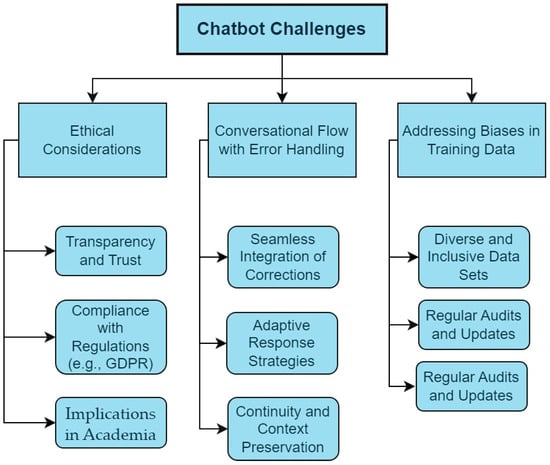

This study examines the progress of chatbot technology, focusing on error correction as a means of improving these conversational tools. Chatbots, powered by artificial intelligence (AI), are increasingly prevalent across industries such as customer service, healthcare, e-commerce, and education. Despite their widespread use and increasing sophistication, chatbots are prone to errors such as misunderstandings, inappropriate responses, and factual inaccuracies. These issues can undermine user satisfaction and trust. This research provides an overview of chatbots, analyzes the errors they encounter, and examines different approaches to rectifying these errors. These approaches include data-driven feedback loops, human-in-the-loop learning, and learning methods such as reinforcement learning, supervised learning, unsupervised learning, semi-supervised learning, and meta-learning. Through real-life examples and case studies in different fields, we show how these strategies are implemented. Looking ahead, we discuss the challenges faced by AI-powered chatbots, including ethical considerations and biases during implementation. Furthermore, we consider the transformative potential of new technological advancements, such as explainable AI models, autonomous content generation algorithms (e.g., generative adversarial networks), and quantum computing, to enhance chatbot training. Our research provides information for developers and researchers looking to improve chatbot capabilities, which can be applied in service and support industries to effectively address user requirements.

1. Introduction

Chatbots are being increasingly integrated into various aspects of modern life and are revolutionizing the way individuals and businesses interact and operate [1]. Fundamentally, chatbots are software applications designed to simulate human conversation. They enable interaction with users through text or voice commands. The evolution of chatbots has been driven by recent developments in artificial intelligence (AI) techniques such as natural language processing (NLP) that allow them to understand, interpret, and respond to human language more accurately [2]. As a result, chatbots can offer 24/7 availability, fast response times, scalability to large volumes of data, cost-effectiveness, and multilingual support [3].

Chatbots serve a multitude of purposes in different sectors. In customer service, they reduce wait times and improve customer satisfaction by providing instant support, handling routine inquiries, and resolving common issues [4]. In e-commerce platforms, chatbots offer personalized shopping experiences, with product recommendations and assistance based on user preferences and browsing history [5]. In the healthcare sector, they enhance patient engagement and healthcare accessibility by helping with symptom assessment and appointment scheduling and by providing health tips [6]. In education, they assist in tutoring, language learning, and administrative tasks, making educational resources more accessible and interactive [7]. Moreover, chatbots play an important role in streamlining internal business processes. They assist in automating repetitive tasks such as data entry and scheduling, thereby increasing productivity and allowing human employees to focus on more complex and creative tasks.

As chatbots gather valuable data through interactions, they can offer insights into customer behavior and preferences, which can be used to inform business strategies [8,9]. Chatbots like IBM Watson Assistant have transformed customer support and engagement. On messaging platforms, the WhatsApp Business API has enabled seamless communication between businesses and customers. Meanwhile, cutting-edge AI models like ChatGPT from OpenAI have redefined the boundaries of text-based conversations [10].

However, the rise of chatbots also brings challenges, particularly concerning privacy and data security, as they often handle sensitive user information [11]. As chatbot technology continues to evolve, addressing these challenges in conversational AI remains crucial.

1.1. The Importance of Error Correction in ML

Error correction is an important component of chatbots that shapes their efficacy and reliability. This includes enhancing the chatbot’s learning capabilities, adaptability, and accuracy. Machine learning (ML) models, trained on extensive datasets, are designed to identify patterns, make decisions, and predict outcomes. Despite their sophistication, these models are prone to errors due to factors such as biases in training data, overfitting, underfitting, or the complexity of tasks [12,13]. Error correction involves identifying these inaccuracies and refining the model for improved functionality. In the context of chatbots, inaccurate models not only impair performance but also risk a loss of credibility, particularly in critical sectors like healthcare and finance where errors can have grave consequences [14].

Error correction is essential for addressing biases in ML models. Biases, often ingrained in the training data, can lead to skewed outcomes, exacerbating existing societal prejudices [15]. Techniques like data augmentation, re-weighting, and adversarial training can help identify and mitigate biases within models [16]. Error correction also plays a crucial role in enhancing a model’s ability to generalize from training data to new, unseen data [13]. As models are deployed, they encounter novel data and scenarios, often diverging from their initial training environments [17]. Generalization ensures the model’s robustness and versatility in diverse real-world scenarios, especially in dynamic environments where adaptability to new data types and conditions is necessary [18]. This is especially important in real-time analytics and decision-making [19]. By incorporating user feedback and new data, chatbots can continuously learn and improve their performance over time [20].
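As one concrete illustration of re-weighting, the sketch below computes per-class sample weights that are inversely proportional to class frequency, so that under-represented classes contribute more to the training loss. This is only one simple form of re-weighting and is not taken from the cited works; the function name and the toy labels are assumptions made for illustration.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class by N / (K * n_c), so rarer classes count more.

    N is the number of samples, K the number of classes, and n_c the
    number of samples in class c.
    """
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {c: n_samples / (n_classes * n) for c, n in counts.items()}


# Toy, hypothetical label distribution: class "b" is under-represented.
weights = balanced_class_weights(["a"] * 8 + ["b"] * 2)
```

With these toy labels, the minority class receives a weight four times that of the majority class, which offsets the 8:2 imbalance during training.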

1.2. Article Contributions and Overview

In this article, we examine the evolving world of chatbots, covering their capabilities, challenges, and the crucial role of error correction in shaping their evolution. Our work provides a comprehensive account of how chatbots can be trained to learn from their mistakes. It starts with an overview of chatbot technology, highlighting its evolution and current significance (Section 2). The focus then shifts to identifying common chatbot errors, which forms the basis for understanding their learning requirements (Section 3). A significant portion is dedicated to the importance of error correction in ML, emphasizing its role in enhancing chatbot accuracy and efficiency (Section 4). The article also outlines various strategies employed for chatbot improvement, including advanced techniques like feedback loops and reinforcement learning (RL) (Section 5). Incorporating real-world case studies, this article demonstrates the practical application and success of these methods (Section 6). Furthermore, it discusses the challenges and ethical considerations in chatbot training (Section 7). The article concludes with insights into future trends in chatbot development and offers a perspective on the ongoing evolution in this field (Sections 8 and 9).

While previous research has addressed individual aspects of chatbot error correction, our work offers a unique contribution by providing a holistic and systematic analysis of the issue. We go beyond simply identifying common chatbot errors; we delve into their root causes. We also examine the broader impact of these errors on user experience and trust, highlighting the importance of error mitigation for the successful adoption and long-term viability of chatbots. Additionally, we offer a comprehensive review of error correction techniques, including both established methods and emerging approaches like reinforcement learning, and showcase their real-world applications through diverse case studies. This holistic approach distinguishes our work from the existing literature, which often focuses on specific error types or correction techniques in isolation.

2. Understanding Chatbots

Chatbots, also known as conversational agents, are software applications designed to simulate human-like conversation using text or voice interactions [21]. They function by recognizing user input, such as specific keywords or phrases, and responding based on a set of predefined rules or through more advanced AI techniques.

At their core, chatbots are programmed to mimic the conversational abilities of humans. Early versions of chatbots were rule-based and could only respond to specific commands. These have evolved into more advanced AI-driven chatbots that use NLP and ML to understand, interpret, and respond to user queries in a more natural and context-aware manner [22].

The key to a chatbot’s functionality lies in its ability to process and analyze language. Rule-based chatbots rely on a database of responses and pick one based on the closest matching command from the user. In contrast, AI-powered chatbots use NLP to parse and understand the user’s language, intent, and sentiment, enabling them to provide more relevant and personalized responses [23,24]. Chatbots are typically used in customer service to provide quick and automated responses to common inquiries and ease the workload of human staff [25]. They are also employed in various other domains, such as e-commerce for personalized shopping assistance, in healthcare for preliminary diagnosis and appointment scheduling, and in entertainment as interactive characters.

As technology advances, chatbots are becoming more capable of handling complex conversations, learning from past interactions, and providing more accurate and human-like responses. This evolution is transforming how businesses and customers interact, making chatbots an integral part of the modern digital experience.

2.1. Types of Chatbots: Rule-Based vs. AI-Based

Chatbots can generally be categorized into two primary types: rule-based and AI-based, each with unique functionalities and applications.

2.1.1. Rule-Based Chatbots

These chatbots operate on predefined rules and a set of scripted responses. They are designed to handle queries based on specific conditions and triggers. Rule-based chatbots can efficiently manage straightforward, routine tasks by recognizing keywords or phrases in user inputs and responding with pre-programmed answers [26]. The key advantage of these chatbots lies in their simplicity and reliability in executing well-defined tasks. However, their major limitation is their lack of flexibility and inability to handle queries that fall outside their programmed rules. They cannot learn from interactions or improve over time, which makes them less adaptable to varying user needs [27].
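The keyword-matching behaviour described above can be sketched in a few lines. The example below is a minimal illustration, not the implementation of any system cited here; the rules, replies, and function name are hypothetical.

```python
import re

# Hypothetical rules: each maps a set of trigger keywords to a scripted reply.
RULES = [
    ({"hours", "open", "opening"}, "We are open 9:00-17:00, Monday to Friday."),
    ({"refund", "return"}, "You can request a refund within 30 days of purchase."),
    ({"password", "reset"}, "Use the 'Forgot password' link on the login page."),
]
FALLBACK = "I'm sorry, I don't understand. Could you rephrase?"


def rule_based_reply(message: str) -> str:
    """Return the scripted reply whose keywords overlap most with the message."""
    words = set(re.findall(r"[a-z']+", message.lower()))
    best_reply, best_overlap = FALLBACK, 0
    for keywords, reply in RULES:
        overlap = len(words & keywords)
        if overlap > best_overlap:
            best_reply, best_overlap = reply, overlap
    return best_reply
```

Any message that shares no keyword with a rule falls through to the fallback reply, which illustrates the inflexibility noted above: the bot cannot answer anything outside its scripted rules, and nothing in the code changes as a result of user interactions.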

An example of a rule-based chatbot is the “APU Admin Bot”, designed to handle student inquiries and developed following a Waterfall model [28]. To build this chatbot, the developers gathered student needs through interviews and questionnaires. Analyzing these data, they created a flowchart outlining conversation paths. This flowchart was then used to design the chatbot’s logic on a platform like Chatfuel. Students can interact with the bot by following prompts or searching keywords to access pre-determined information. The chatbot provides easy access to information and improves administrative efficiency. Despite its success, its limitations include no backend updates and a lack of personalized responses.

In an approach aiming to promote self-reflection and proactive mental health, Miura et al. presented a rule-based chatbot system designed to monitor the mental well-being of elderly individuals [29]. Through the LINE messaging platform, the chatbot delivers daily inquiries tailored to assess the mental state of users. These inquiries, designed with simplicity in mind, prompt users to respond with yes or no answers, enabling easy expression of thoughts and emotions. Based on user responses, the chatbot adjusts its inquiries and provides weekly feedback and self-care advice, utilizing rules to identify areas of concern.

2.1.2. AI-Based Chatbots

AI-based chatbots provide a dynamic approach to automated interaction by leveraging advanced AI technologies like NLP, ML, and occasionally deep learning. These chatbots are intricately designed to grasp the context and intent of user queries, enabling conversational exchanges far beyond the capabilities of their rule-based counterparts [30].

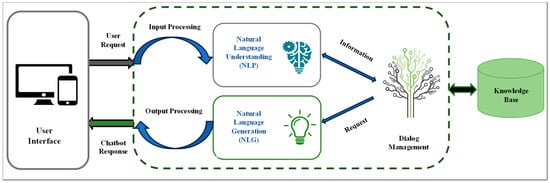

Figure 1 illustrates the general architecture of an AI-based chatbot. User Interface (UI) is at the forefront of this architecture, which should offer users a smooth and intuitive means to communicate. Input Processing acts as the initial gatekeeper that parses user inputs and prepares them for deeper analysis. To understand user intent, AI-based chatbots use a natural language understanding (NLU) component. This unit analyzes the complexities of language and extracts key intents and entities from the dialogue. This analysis is then channeled into the dialogue management system, the chatbot’s decision-making core, which determines the most relevant response based on the conversation context, the chatbot’s accumulated knowledge, and previous interactions.

Figure 1. The architecture of an AI-based chatbot.

The chosen response is carefully constructed by the natural language generation (NLG) component, which translates the chatbot’s decision into a coherent and contextually appropriate message. This message undergoes final refinements in the Output Processing stage before being presented to the user via the UI. The chatbot relies on a knowledge base/database containing factual data and conversational patterns to inform its responses.
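The flow through the components in Figure 1 can be sketched as a chain of small functions. This is a toy illustration under stated assumptions: keyword logic stands in for a trained NLU model, a dictionary of templates stands in for the knowledge base, and all function names, intents, and templates are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class DialogueState:
    """Conversation context carried between turns."""
    history: list = field(default_factory=list)


# Hypothetical knowledge base: intent -> response template.
KNOWLEDGE_BASE = {
    "greeting": "Hello! How can I help you today?",
    "order_status": "Order {order_id} is on its way.",
    "unknown": "Sorry, I did not catch that.",
}


def process_input(raw: str) -> str:
    """Input processing: normalise case and whitespace."""
    return " ".join(raw.lower().split())


def understand(text: str) -> dict:
    """NLU: extract a coarse intent and entities (toy keyword logic)."""
    tokens = text.replace("?", "").split()
    order_ids = [t for t in tokens if t.isdigit()]
    if "order" in tokens and order_ids:
        return {"intent": "order_status", "entities": {"order_id": order_ids[0]}}
    if tokens and tokens[0] in {"hi", "hello", "hey"}:
        return {"intent": "greeting", "entities": {}}
    return {"intent": "unknown", "entities": {}}


def manage_dialogue(nlu: dict, state: DialogueState) -> dict:
    """Dialogue management: record the turn and choose a response template."""
    state.history.append(nlu["intent"])
    return {"template": KNOWLEDGE_BASE[nlu["intent"]], "slots": nlu["entities"]}


def generate(action: dict) -> str:
    """NLG: fill the chosen template with entity values."""
    return action["template"].format(**action["slots"])


def postprocess(text: str) -> str:
    """Output processing: final tidy-up before display."""
    return text.strip()


def respond(raw: str, state: DialogueState) -> str:
    """Run one user turn through the full pipeline."""
    nlu = understand(process_input(raw))
    return postprocess(generate(manage_dialogue(nlu, state)))
```

Keeping each stage as a separate function mirrors the modular architecture: the keyword-based `understand` step could, in principle, be replaced by a learned model without touching the other stages.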

One of the most transformative features of AI-based chatbots is their learning component. This module allows for continuous improvement and personalization by integrating feedback and new data into the chatbot’s operational framework. This learning adaptability of AI chatbots positions them as invaluable assets in fields requiring deep interaction and engagement. However, the sophistication of their design and the necessity for continuous training introduce challenges including the need for large datasets and powerful computing systems [31].

Al-Sharafi et al. investigated factors influencing the sustainable use of AI-based chatbots for educational purposes [32]. They built a theoretical model combining Expectation Confirmation Model (ECM) constructs (expectation confirmation, perceived usefulness, and satisfaction) with knowledge management (KM) factors (knowledge sharing, acquisition, and application). Data were collected from 448 university students who utilized chatbots for learning. Importantly, the study employed a novel hybrid Structural Equation Modeling–Artificial Neural Network (SEM-ANN) approach for analysis. The results emphasized the significance of knowledge application on chatbot sustainability, followed by perceived usefulness, acquisition, satisfaction, and sharing.

A recent study presented a legal counseling chatbot system enhanced by AI [33]. The system addresses the challenge of users locating pertinent legal information without specialized domain knowledge. It employs a slot-filling approach, prompting users to provide structured details about their legal inquiries such as the relevant legal domain and key terms. AI-powered NLP analyzes these structured data, enabling the system to understand the user’s intent more accurately than traditional rule-based searches. To provide tailored responses, the system leverages a deep learning algorithm trained on a substantial database of legal questions and answers. This algorithm analyzes the user’s structured query, identifies similar cases within the database, and extracts relevant information. Importantly, the system promotes continuous improvement through user feedback mechanisms. As users interact with the chatbot and assess the helpfulness of provided answers, the AI model can incorporate this feedback, refining the accuracy and relevance of its responses over time.
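The slot-filling idea can be illustrated with a short sketch: the system keeps asking for the first piece of structured information it still lacks and only proceeds to retrieval once every slot is filled. The slot names and prompts below are hypothetical and are not taken from the system in [33].

```python
from typing import Optional

# Hypothetical slots and prompts for a legal-information query.
SLOT_PROMPTS = {
    "legal_domain": "Which area of law does your question concern?",
    "key_terms": "Which key terms describe your situation?",
}


def next_prompt(slots: dict) -> Optional[str]:
    """Return the prompt for the first unfilled slot, or None when complete."""
    for name, prompt in SLOT_PROMPTS.items():
        if not slots.get(name):
            return prompt
    return None


def fill_slot(slots: dict, answer: str) -> dict:
    """Store the user's answer in the first unfilled slot."""
    for name in SLOT_PROMPTS:
        if not slots.get(name):
            return {**slots, name: answer.strip()}
    return slots
```

Once `next_prompt` returns `None`, the filled slots form a structured query that a downstream retrieval or deep learning component could consume.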

Rule-based chatbots are suitable for tasks requiring straightforward, consistent responses, while AI-based chatbots excel in scenarios that demand more complex, context-aware, and personalized interactions [34].

2.2. Common Applications of Chatbots

Chatbots have become integral across multiple sectors, significantly enhancing user experience and operational efficiency. In customer service, they offer instant support across digital platforms, efficiently handling inquiries and improving satisfaction [35]. The e-commerce sector sees chatbots personalizing shopping experiences, aiding in product discovery, and facilitating transactions, which potentially boosts sales [36,37]. Healthcare chatbots streamline patient interactions, from symptom checking to appointment scheduling, thereby increasing accessibility and efficiency in medical services [38]. In banking and finance, they provide secure, immediate assistance for account inquiries and transactions, revolutionizing customer service [39]. In education, chatbots support learning and administrative tasks, offering personalized tutoring and managing routine inquiries, which enhances the educational experience [40]. HR chatbots automate onboarding and recruitment processes, improving efficiency and candidate engagement [41]. In travel and hospitality, they simplify booking processes and customer support, enhancing travel experiences [42]. The legal field is no exception, with AI-powered chatbots emerging as valuable tools to bridge the gap between legal knowledge and user access [33]. Lastly, in entertainment and media, chatbots curate personalized content and engage users in interactive experiences, enriching media consumption [43,44]. Despite their widespread application, challenges in deployment and meeting user expectations underscore the importance of continuous improvement in chatbot technologies.

3. The Nature of Mistakes in Chatbots

As chatbots become increasingly integrated into various aspects of life, understanding the nature of their mistakes is crucial [45]. Like any technology based on AI, chatbots are prone to errors that can range from minor misunderstandings to significant miscommunications. These errors, while often technical in nature, can have far-reaching implications on user experience and trust [46]. This section explores the types of errors commonly encountered in chatbot interactions and examines the impact of these errors on users’ perceptions and trust.

Table 1 provides a concise summary of these common errors with descriptions and examples for clarity.

Table 1. Types of chatbot errors and examples.

3.1. Types of Errors in Chatbot Responses

Misunderstanding. One of the most common errors in chatbot interactions is the misunderstanding of user intent [47]. This can occur due to various factors, such as the complexity of language, use of slang, typos, or ambiguous queries. When a chatbot fails to correctly interpret the user’s request, it may provide irrelevant or off-target responses, leading to frustration and inefficiency.

Research has shown that dialog act classification can be a useful tool in helping chatbots better discern user intent and reduce misunderstandings [48]. The complexities of natural language understanding and generation often contribute to misunderstandings, as highlighted in studies on language model performance [49].

Inappropriate responses. Chatbots may sometimes generate responses that are inappropriate or offensive [50]. These instances usually stem from limitations in the chatbot’s programming or issues in the training data. Inappropriate responses can be particularly damaging, as they might offend users or reflect poorly on the brand or organization the chatbot represents.

Ethical considerations in the design of dialogue systems, including strategies to mitigate inappropriate responses, have been a focus of recent research [51]. In addition, the issue of “toxic degeneration” in language models, where they generate harmful or biased outputs, is a growing concern that can lead to inappropriate chatbot responses [52].

Factual errors. Chatbots providing informational or advisory services may occasionally give incorrect or outdated information [45]. This frequently results from a lack of updates to the chatbot’s knowledge base or errors within its source data. Factual inaccuracies carry the risk of misleading users and could have harmful consequences in critical applications like healthcare or finance.

Leveraging knowledge bases like Wikipedia and semantic interpretation techniques can improve the accuracy of natural language processing systems and reduce factual errors in chatbots [53]. The development of web-scale knowledge fusion techniques, such as those employed in the Knowledge Vault project, offers a promising avenue for creating more accurate and up-to-date knowledge bases for chatbot information retrieval [54].

Repetitive responses. Chatbots with limited response generation capabilities might become stuck in a repetitive loop, offering the same responses over and over again, regardless of the user’s input. This indicates a lack of flexibility and can quickly make the interaction feel stale and frustrating for the user. For example, a chatbot that continuously repeats “I’m sorry, I don’t understand” shows that it is unable to adapt its responses.

Research into diversity-promoting objective functions and neural conversation models has explored ways to enhance the diversity and adaptability of chatbot responses, reducing repetitiveness [55,56].

Lack of personalization. Many chatbots fail to tailor their interactions to individual users. This means they provide generic, one-size-fits-all responses that do not consider the user’s specific needs, preferences, or interaction history. This lack of personalization can make the chatbot feel robotic and impersonal, leading to a less engaging user experience. For example, if a chatbot does not remember a user’s previous purchase or issue, it will not be able to offer a helpful, contextually relevant solution.

Recent advancements in personalized dialogue generation have focused on incorporating user traits and controllable induction to create more tailored and engaging interactions [57,58].

Language limitations. Chatbots often have limitations in their language understanding and generation abilities. They might be primarily designed to function in a single language, unable to handle multilingual conversations smoothly. Additionally, they may struggle with nuanced language, misinterpreting sarcasm, humor, or figurative speech. This can lead to misunderstandings and hinder the chatbot’s ability to communicate effectively.

Multilingual neural machine translation systems, like those developed by Google, have the potential to greatly expand the language capabilities of chatbots and enable seamless cross-lingual communication [59]. Cross-lingual information retrieval models based on multilingual sentence representations, such as those utilizing BERT, can help chatbots better understand and respond to queries in diverse languages [60].

Hallucinations. In the context of AI and chatbots, hallucinations refer to instances where the AI generates outputs that are factually incorrect, nonsensical, or unrelated to the given input [61,62]. This is often due to the model trying to fill in gaps in its knowledge with fabricated information, rather than admitting its limitations.

Research on faithfulness and factuality in abstractive summarization highlights the challenges of ensuring the accuracy and relevance of generated text, which directly relates to the issue of hallucinations in chatbots [63]. The growing body of work surveying hallucination in natural language generation provides valuable insights into the causes and potential solutions for this phenomenon [64].

3.2. Impact of Chatbot Errors on User Experience and Trust

The impact of chatbot errors on user experience is multifaceted [26,65,66]. When chatbots fail to understand user intent, provide inappropriate responses, or deliver inaccurate information, users experience frustration and dissatisfaction [67]. This directly affects their perception of the chatbot’s efficiency and usefulness, potentially discouraging them from further interaction. In scenarios where users rely on the chatbot for critical information, such as in healthcare or financial advice, factual errors can have severe consequences, leading to misinformation and misguided decisions.

Beyond immediate frustration, errors significantly erode user trust in the chatbot and the organization it represents [68]. Trust is a cornerstone of successful human–computer interactions, especially when sensitive information or important decisions are involved [26,69]. Errors, particularly those related to factual accuracy or social appropriateness, undermine the chatbot’s credibility and raise doubts about its reliability. This loss of trust is not easily repaired and can have lasting repercussions on user engagement and loyalty [44,70]. Users may become hesitant to share information, reluctant to follow advice, or simply choose to avoid the chatbot altogether. In essence, errors create a ripple effect, impacting not only the current interaction but also the long-term relationship between the user and the chatbot system.

Addressing these errors requires a multi-pronged approach. Developers must prioritize error identification and correction, utilizing robust error-handling mechanisms and transparent communication about the chatbot’s limitations. Continuous learning and improvement are essential, ensuring the chatbot adapts and evolves to better meet user needs and expectations. By proactively addressing errors and building a foundation of trust, chatbot developers can create more satisfying, reliable, and valuable user experiences.

4. Foundations of ML for Chatbots

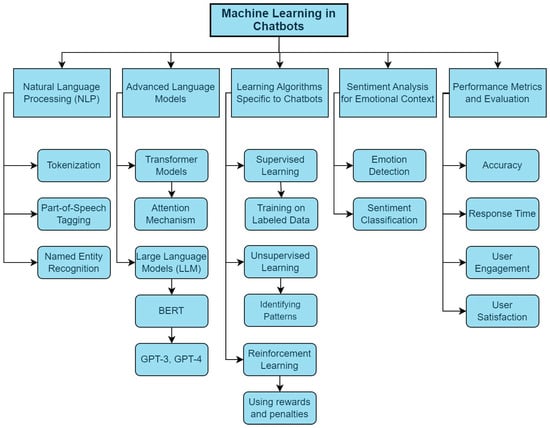

ML is at the heart of modern chatbot technology. It equips chatbots with the ability to interpret, learn from, and respond to human language [71]. This section offers an overview of the key ML concepts that are instrumental in the development and operation of chatbots. Figure 2 shows an overview of these key ML concepts in chatbots.

Figure 2. Overview of key machine learning concepts in chatbots.

4.1. Key ML Concepts in Chatbots

4.1.1. Natural Language Processing (NLP)

NLP is vital in enabling chatbots to understand and interpret user queries contextually. This involves parsing user input, discerning intent, and generating appropriate responses. Advanced NLP techniques, such as tokenization, part-of-speech tagging, and named entity recognition, are employed to analyze, understand, and interpret human language [72].
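To make two of these steps concrete, the sketch below tokenizes a sentence with a regular expression and labels entities by dictionary lookup. The lookup table is a deliberately simple stand-in for a trained named entity recognition model; its entries and the function names are hypothetical.

```python
import re

# Words (runs of letters/digits) or single punctuation marks.
TOKEN_RE = re.compile(r"\w+|[^\w\s]")

# Hypothetical gazetteer standing in for a trained NER model.
GAZETTEER = {
    "paris": "LOCATION",
    "london": "LOCATION",
    "monday": "DATE",
    "friday": "DATE",
}


def tokenize(text):
    """Split text into word and punctuation tokens."""
    return TOKEN_RE.findall(text)


def tag_entities(tokens):
    """Label tokens found in the gazetteer (case-insensitive lookup)."""
    return [(t, GAZETTEER[t.lower()]) for t in tokens if t.lower() in GAZETTEER]


tokens = tokenize("Book a flight to Paris on Friday!")
entities = tag_entities(tokens)
```

In a production chatbot, these steps would typically be handled by a statistical or neural NLP toolkit rather than hand-written patterns.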

Modern chatbots often utilize sophisticated language models like BERT (Bidirectional Encoder Representations from Transformers) or GPT (Generative Pre-trained Transformer) models [73]. GPT models are generative and are trained to predict the next word in a sequence, making them well suited to tasks like text generation and conversational AI. BERT models, on the other hand, are trained bidirectionally, using the context on both sides of each word to capture the deep contextual relationships between words in a sentence. This bidirectional training makes BERT models well suited to tasks like question answering, sentiment analysis, and natural language understanding [74,75].

4.1.2. Learning Algorithms Specific to Chatbots

Data-driven learning is a fundamental concept in the development of chatbots, crucial for enhancing their effectiveness and adaptability [76]. At its core, data-driven learning involves the systematic analysis of vast amounts of data to enable chatbots to improve their conversational abilities over time. By leveraging diverse and comprehensive datasets, chatbots can better understand user intents, preferences, and behaviors, allowing them to generate more accurate and contextually relevant responses [77].

Supervised vs. unsupervised learning. In the context of chatbots, supervised learning is predominant. Here, chatbots are trained on labeled datasets comprising user queries and corresponding correct responses, allowing them to learn contextually appropriate reactions. Unsupervised learning, while less common, can be used to identify patterns or anomalies in user interactions, aiding in the chatbot’s adaptive learning process [78].

For example, Wang et al. [79] proposed a chatbot to observe and evaluate the psychological condition of women during the perinatal period. They employed supervised machine learning techniques to analyze 31 distinct attributes of 223 samples. The objective was to train a model that can accurately determine the levels of anxiety, depression, and hypomania in perinatal women. Meanwhile, psychological test scales were used to assist in evaluations and make treatment suggestions to help users improve their mental health. The trained model demonstrated high reliability in identifying anxiety and depression, with initial reliability rates of 86% and 89%, respectively. Notably, over time, through long-term feedback and simulations, the model’s diagnostic and recommendation accuracies improved. Specifically, after three weeks, the accuracy for anxiety diagnosis and recommendations reached 93%, and for depression, it reached 91% across five simulations.
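As a minimal sketch of supervised learning on labeled queries, the example below trains a multinomial naive Bayes intent classifier with add-one smoothing in plain Python. It is not the method used in [79]; the training examples and intent labels are hypothetical, and naive Bayes is chosen only because it is short enough to show in full.

```python
import math
from collections import Counter, defaultdict

# Hypothetical labeled training set: (user query, intent label).
TRAINING_DATA = [
    ("where is my order", "order_status"),
    ("track my package", "order_status"),
    ("has my order shipped", "order_status"),
    ("i want a refund", "refund"),
    ("how do i return this item", "refund"),
    ("refund my money please", "refund"),
]


def train(examples):
    """Count word frequencies per intent (multinomial naive Bayes)."""
    word_counts = defaultdict(Counter)
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(text.split())
    vocab = {w for counts in word_counts.values() for w in counts}
    return word_counts, label_counts, vocab


def predict(text, model):
    """Return the intent with the highest log-posterior (add-one smoothing)."""
    word_counts, label_counts, vocab = model
    total = sum(label_counts.values())
    scores = {}
    for label, n in label_counts.items():
        denom = sum(word_counts[label].values()) + len(vocab)
        score = math.log(n / total)  # log prior
        for word in text.split():
            score += math.log((word_counts[label][word] + 1) / denom)
        scores[label] = score
    return max(scores, key=scores.get)


model = train(TRAINING_DATA)
```

Calling `predict("where is my package", model)` on this toy data returns `"order_status"`, because the words in the query are more frequent under that label than under `"refund"`.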

RL in conversations. This learning paradigm involves training chatbots to make sequences of decisions. By using a system of rewards and penalties, chatbots learn to optimize their responses based on user feedback, gradually improving their conversational abilities and decision-making processes [80]. For instance, Jadhav et al. [81] introduced a new method for creating a chatbot system using ML for the academic and industrial sectors. The system facilitates human–machine discussions, identifies sentences, and responds to queries. It uses NLP and reinforcement learning algorithms, focusing on experience learning, improving connections, and constant information handling to maximize model accuracy and convergence rates.
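The reward-and-penalty idea can be illustrated with an epsilon-greedy bandit that chooses among candidate replies and updates its estimates from user feedback. This is a simplified, single-step setting rather than full sequential RL, and it is not the method of [81]; the class name, parameters, and reward values are assumptions.

```python
import random


class ResponseBandit:
    """Epsilon-greedy choice among candidate replies, updated from feedback."""

    def __init__(self, responses, epsilon=0.1, seed=0):
        self.responses = list(responses)
        self.epsilon = epsilon
        self.values = [0.0] * len(self.responses)  # running mean reward
        self.counts = [0] * len(self.responses)    # times each reply was rated
        self.rng = random.Random(seed)

    def choose(self):
        """Explore with probability epsilon, otherwise pick the best estimate."""
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.responses))
        return max(range(len(self.responses)), key=self.values.__getitem__)

    def update(self, index, reward):
        """Incrementally update the mean reward of the chosen reply."""
        self.counts[index] += 1
        self.values[index] += (reward - self.values[index]) / self.counts[index]


# Hypothetical feedback: +1 for a thumbs-up, -1 for a thumbs-down.
bandit = ResponseBandit(["Short answer", "Detailed answer"], epsilon=0.0)
bandit.update(1, 1.0)
bandit.update(0, -1.0)
```

After this feedback, a greedy call to `bandit.choose()` selects index 1, the reply that earned the positive reward. A nonzero `epsilon` would occasionally try the other reply so that early negative feedback does not rule it out permanently.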

A more detailed review of different learning algorithms aimed specifically at error correction in chatbots is provided in Section 5.

4.1.3. Sentiment Analysis for Emotional Context

Chatbots equipped with sentiment analysis can gauge the emotional tone of user inputs [82]. By analyzing text for positive, negative, or neutral sentiments, chatbots can tailor their responses to match or appropriately respond to the user’s emotional state, enhancing the overall interaction quality [83]. For example, Rifqi Majid et al. developed the Dinus Intelligent Assistant (DINA) chatbot to assist with student administration services, addressing the challenge of recognizing emotions in text-based conversations [84]. In their study, they preprocessed conversations using sentiment analysis and then collected data based on the conversations and the results of the sentiment analysis. Subsequently, they applied recurrent neural networks (RNNs) to categorize emotions based on current conversations. The outcome of this approach was promising, exhibiting a precision accuracy of 0.76. These results suggest that their algorithm can help recognize emotions in text-based conversations.
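A minimal sketch of sentiment-aware response tailoring is given below. It uses a small hand-written lexicon rather than a trained model such as the RNN in [84]; the word lists, openers, and function names are hypothetical.

```python
import re

# Hypothetical sentiment lexicon; a real system would use a trained model.
POSITIVE = {"great", "thanks", "love", "helpful", "good"}
NEGATIVE = {"angry", "terrible", "useless", "bad", "frustrated"}


def sentiment(text):
    """Classify text as positive, negative, or neutral by lexicon counts."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def tailor_reply(base_reply, user_text):
    """Prefix the reply with an opener that matches the user's tone."""
    openers = {
        "positive": "Glad to hear it! ",
        "negative": "I'm sorry for the trouble. ",
        "neutral": "",
    }
    return openers[sentiment(user_text)] + base_reply
```

A lexicon approach like this cannot handle negation or sarcasm, which is one reason learned models are generally preferred for emotion recognition.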

4.1.4. Large Language Models (LLMs)

Large Language Models (LLMs) have emerged as the cornerstone of modern AI-based chatbots [85]. These powerful language models, fueled by sophisticated architectures like transformer models, have revolutionized how chatbots understand and respond to human language.

Transformer models, introduced by Google in 2017, are a type of neural network architecture that has proven exceptionally effective for NLP tasks. Unlike traditional recurrent neural networks (RNNs), transformers can process entire sentences in parallel, allowing for faster training and improved performance with long sequences of text.

The core mechanism within transformers is the “attention mechanism”. This allows the model to weigh the importance of different words in a sentence when predicting the next word. This attention mechanism is crucial for capturing contextual relationships between words and understanding the nuances of human language.
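To make the weighting idea concrete, the following sketch implements scaled dot-product attention, softmax(QKᵀ/√d_k)V, in plain Python. The three two-dimensional token vectors are made-up numbers, and real transformers additionally apply learned projections and multiple heads, which are omitted here.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Three toy 2-d token vectors (made-up numbers); self-attention uses them as Q, K, and V.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
```

Each row of `weights` sums to one and shows how strongly a token attends to every other token; the first token, for example, attends more to itself and the third token than to the second, which shares no component with it.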

Two notable examples of LLMs that have garnered significant attention are GPT-3 and GPT-4, developed by OpenAI. These models, built upon the transformer architecture, have demonstrated impressive capabilities in a wide range of language tasks, including text generation, translation, summarization, and question answering. GPT-3, with its 175 billion parameters, marked a significant milestone in LLM development. Its successor, GPT-4, released in 2023, is more capable still, pushing the boundaries of what is possible with AI-based chatbots.

LLMs form the backbone of modern AI-based chatbots by enabling them to do the following:

- (1) Understand user input: LLMs analyze text input from users, deciphering the meaning, intent, and context behind their queries.

- (2) Generate human-like responses: LLMs can craft responses that mimic human conversation, making interactions feel more natural and engaging.

- (3) Adapt and learn: LLMs can continuously learn from new data and interactions, improving their performance and responsiveness over time.

By harnessing the power of LLMs, chatbot developers can create more intelligent, responsive, and helpful conversational agents that are capable of understanding and responding to a wide range of user queries.

4.1.5. Performance Metrics and Evaluation

Performance metrics and evaluation are essential components in assessing the effectiveness of chatbots. These metrics, including accuracy, response time, user engagement rate, and satisfaction scores, provide valuable insights into how well a chatbot is performing in real-world scenarios. Accuracy measures the chatbot’s ability to provide correct responses, while response time reflects its efficiency in delivering those responses. The user engagement rate indicates the level of interaction and interest users have with the chatbot, while satisfaction scores gauge overall user satisfaction with the chatbot experience.
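The four metrics named above can be computed from conversation logs along the following lines. This is a sketch under stated assumptions: the record fields (`correct`, `latency_s`, `user_turns`, `rating`) and the rule that two or more user turns count as an engaged session are hypothetical choices, not a standard.

```python
from statistics import mean

# Hypothetical log records; field names are assumptions for illustration.
logs = [
    {"correct": True,  "latency_s": 0.8, "user_turns": 4, "rating": 5},
    {"correct": False, "latency_s": 1.4, "user_turns": 1, "rating": 2},
    {"correct": True,  "latency_s": 0.6, "user_turns": 3, "rating": 4},
    {"correct": True,  "latency_s": 1.2, "user_turns": 1, "rating": None},
]


def summarize(logs, engaged_min_turns=2):
    """Aggregate accuracy, response time, engagement rate, and satisfaction."""
    rated = [r["rating"] for r in logs if r["rating"] is not None]
    return {
        "accuracy": mean(1.0 if r["correct"] else 0.0 for r in logs),
        "mean_response_time_s": mean(r["latency_s"] for r in logs),
        "engagement_rate": mean(
            1.0 if r["user_turns"] >= engaged_min_turns else 0.0 for r in logs
        ),
        # Unrated sessions are excluded rather than counted as zero.
        "mean_satisfaction": mean(rated) if rated else None,
    }


report = summarize(logs)
```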

There is, however, a pressing need to standardize evaluation metrics for various chatbot applications, including healthcare. For instance, a 2020 study identified diverse technical metrics used to evaluate healthcare chatbots and suggested adopting more objective measures, such as conversation log analyses, and developing a framework for consistent evaluation [86].

4.2. Learning from Interactions

Chatbots, particularly those powered by AI, have the remarkable ability to learn and evolve from user interactions [87]. This learning process involves various stages of data processing, analysis, and adaptation. Here we explore how chatbots learn from interactions:

Data collection and analysis: The initial step in the learning process is data collection. Every interaction a chatbot has with a user generates data. These data include the user’s input (questions, statements, and commands), the chatbot’s response, and any follow-up interaction. Over time, these data accumulate into a substantial repository. Chatbots analyze these collected data to identify patterns and trends. For instance, they can recognize which responses satisfactorily answered users’ queries and which led to further confusion or follow-up questions [88]. Additionally, analysis of user interactions can reveal common pain points, enabling the chatbot to proactively address them in the future [89].

Feedback incorporation: User feedback, both implicit and explicit, is a critical component of the learning process. Explicit feedback can be in the form of user ratings or direct suggestions, while implicit feedback is derived from user behavior and interaction patterns. Chatbots use this feedback to adjust their algorithms. Positive feedback reinforces the responses or actions taken by the chatbot, while negative feedback prompts it to adjust its approach [90]. Reinforcement learning (RL) algorithms play a significant role in this adaptive process, allowing chatbots to learn from rewards and penalties associated with their actions [91].

Training and model updating: The core of a chatbot’s learning process lies in training its ML models. Using gathered data and feedback, the chatbot’s underlying ML model is periodically retrained to improve its accuracy and effectiveness [72]. During retraining, the model is exposed to new data points and variations, allowing it to learn from past mistakes and successes. This process can involve adjusting weights in neural networks, refining decision trees, or updating the parameters of statistical models to better align with user expectations and needs.

Natural language understanding (NLU): A significant aspect of a chatbot’s learning process is enhancing its NLU [92]. This involves improving its ability to comprehend the context, tone, and intent behind user inputs. Through continuous interactions, the chatbot learns to parse complex sentences, understand colloquialisms, and recognize emotions or sentiments expressed by users. By leveraging NLU, chatbots can provide more accurate and contextually relevant responses, leading to improved user satisfaction [93].

Personalization: As chatbots interact with individual users over time, they start to personalize their responses. By recognizing patterns in a user’s queries or preferences, the chatbot can tailor its responses to be more relevant and personal, enhancing user experience [94]. Personalization also involves adapting to the user’s style of communication, which can include adjusting the complexity of language used, the formality of responses, or even the type of content presented [95].

Continuous improvement and adaptation: The learning process for chatbots is ongoing. They continually adapt to new trends in language use, changes in user behavior, and shifts in the topics or types of queries they encounter. This continuous improvement cycle ensures that chatbots remain effective over time, even as the environment in which they operate evolves [96].

In the next section, we will investigate these learning scenarios for error reduction in more detail.

5. Strategies for Error Correction

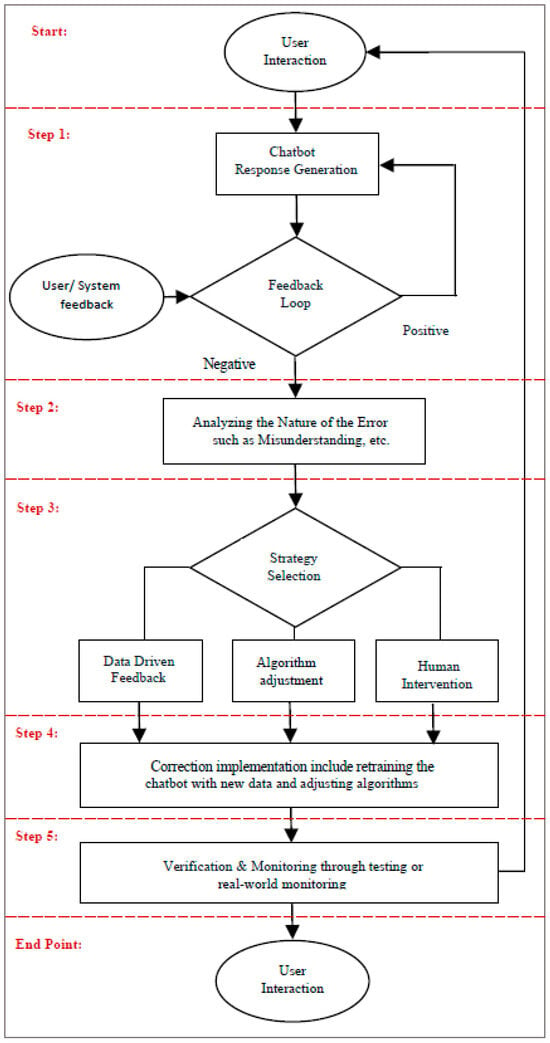

Error correction in chatbots is a critical process that ensures these AI-driven tools are efficient, accurate, and reliable. This section explores the various strategies employed to refine and enhance chatbot interactions, particularly focusing on how errors are identified and rectified. Four key approaches are explored: data-driven methods utilizing feedback loops, algorithmic adjustments through reinforcement and supervised learning, learning paradigms that overcome data and label scarcity, and the incorporation of human oversight in the learning process. While these strategies can stand alone, in practice, the most effective chatbots use a combination of these approaches for the best results.

Figure 3 illustrates the general process of chatbot error correction, and Table 2 summarizes the different strategies that can be used for error correction with their benefits and challenges.

Figure 3. Flowchart of chatbot error correction process.

Table 2. Strategies for error correction in chatbots.

5.1. Data-Driven Approach

Using feedback loops in a data-driven approach is a powerful strategy for error correction in chatbots [97,98]. Feedback loops are systems that collect responses and reactions from users and utilize this information to adjust and improve the chatbot. This allows for the continuous improvement of chatbot performance in dynamic conversational environments. This process works in the following manner:

Collection of user feedback: The collection of user feedback is a crucial step in the chatbot learning process. Chatbots gather feedback through various channels, including explicit mechanisms like direct ratings or textual comments on chatbot responses [99]. Additionally, implicit feedback is derived from analyzing user behavior, such as session duration, conversation abandonment rates, or even click-through patterns on suggested responses [98,100]. These diverse feedback mechanisms provide valuable insights into the effectiveness of the chatbot’s responses and pinpoint areas where misunderstandings or errors occur [101]. By carefully analyzing these feedback data, developers can identify recurring issues, understand user preferences, and prioritize areas for improvement, thus driving the continuous enhancement of chatbot performance.

Analysis of feedback data: The collected data are analyzed to identify patterns and common issues. For instance, consistently low ratings or negative feedback on specific types of responses can indicate an area where the chatbot is struggling [102]. This analysis often involves looking at the chatbot’s decision-making process, understanding why certain responses were chosen, and determining if they align with user expectations [103].

Adapting and updating the chatbot: Based on the analysis, the chatbot’s response mechanisms are adapted [104,105]. This might involve updating the database of responses, changing the way the chatbot interprets certain queries, or modifying the algorithms that guide its decision-making process. In more advanced systems, this adaptation can be semi-automated, where the chatbot itself learns to make certain adjustments based on ongoing feedback [106].

Testing and iteration: After adjustments are made, the updated chatbot is tested, either in a controlled environment or directly in its operating context [107]. User interactions are closely monitored to assess the impact of the changes. This process is iterative. Continuous feedback is sought and analyzed, leading to further refinement [108].

Enhancing personalization: Feedback loops also aid in enhancing the personalization aspect of chatbots [109]. By understanding user preferences and common queries, chatbots can tailor their responses to be more personalized and context-specific, thereby improving user satisfaction [110].
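The collection and analysis steps of such a feedback loop can be sketched as follows. The event format, the rating scale, and both thresholds are illustrative assumptions; the point is only that explicit ratings and an implicit signal (conversation abandonment) are aggregated per intent to flag where the chatbot is struggling.

```python
from collections import defaultdict

# Hypothetical feedback events: (intent, rating 1-5 or None, conversation abandoned?)
events = [
    ("refund", 1, True), ("refund", 2, False), ("refund", 2, True),
    ("shipping", 5, False), ("shipping", 4, False),
    ("greeting", None, False),
]


def flag_problem_intents(events, min_rating=3.0, max_abandon=0.5):
    """Return intents whose average rating is low or whose abandonment rate is high."""
    ratings, abandons, counts = defaultdict(list), defaultdict(int), defaultdict(int)
    for intent, rating, abandoned in events:
        counts[intent] += 1
        abandons[intent] += int(abandoned)
        if rating is not None:          # explicit feedback is optional
            ratings[intent].append(rating)
    flagged = []
    for intent in counts:
        avg = sum(ratings[intent]) / len(ratings[intent]) if ratings[intent] else None
        abandon_rate = abandons[intent] / counts[intent]
        if (avg is not None and avg < min_rating) or abandon_rate > max_abandon:
            flagged.append(intent)
    return sorted(flagged)


problem_intents = flag_problem_intents(events)
```

The flagged intents would then feed the adaptation and testing steps, for example by prioritizing those responses for rewriting or retraining.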

5.2. Algorithmic Adjustments

Algorithmic adjustments, mainly through supervised learning and reinforcement learning, are crucial in the error correction process of chatbots [81]. These techniques enable chatbots to learn from user interactions and feedback, adjust their decision-making algorithms, and progressively improve their ability to engage in accurate and contextually relevant conversations.

5.2.1. RL in Chatbots

RL involves training a chatbot through a system of rewards and penalties [111]. The chatbot is programmed to recognize certain actions or responses as positive (leading to a reward) or negative (resulting in a penalty). The objective is to develop a policy for the chatbot that maximizes the cumulative reward over time. This policy dictates the chatbot’s responses based on the situation and past experiences [112].

Application in chatbots. In chatbots, RL can be used to fine-tune conversation flow and response accuracy. For instance, if a chatbot correctly interprets a user’s request and provides a satisfactory response (as judged by user feedback or predefined criteria), it receives a reward. Conversely, inaccurate or inappropriate responses incur penalties. Over time, the RL algorithm adjusts the chatbot’s responses to maximize rewards, thereby improving conversational accuracy and user satisfaction.

As one example, Jadhav et al. [81] investigated the use of RL to improve the responsiveness and adaptability of chatbots in academic settings. The proposed chatbot system integrates traditional NLP techniques for query understanding and response generation. Crucially, it employs an RL-based dialogue manager to optimize its interactions with users. The core of the RL implementation is the Q-learning algorithm. This algorithm allows the chatbot to learn from experience by assigning rewards or penalties to its responses based on their perceived effectiveness. Over time, the chatbot prioritizes responses that consistently receive positive reinforcement. In another study, Liu et al. [113] proposed Goal-oriented Chatbots (GoChat), a framework for end-to-end training of chatbots to maximize long-term returns from offline multi-turn dialogue datasets. The framework uses hierarchical RL (HRL) to guide conversations toward the final goal, with a high-level policy determining sub-goals and a low-level policy fulfilling them. A recent paper proposed a novel framework for training dialogue agents using deep RL (DRL). The authors used an actor-critic architecture, where the actor generates responses and the critic evaluates their quality. They trained the model on a large dataset of human–human conversations and demonstrated that it can generate more engaging and natural dialogue than traditional rule-based or supervised learning methods [114]. Another recent work addressed a key challenge in DRL for dialogue: the need for a large amount of human feedback to train the reward function [115]. The authors proposed a “way off-policy” batch DRL algorithm that can learn from implicit human preferences, such as click-through rates or conversation length. They showed that this approach can significantly reduce the amount of human feedback required to train a high-performing dialogue agent.
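To illustrate the Q-learning idea mentioned above, here is a minimal tabular sketch on a hand-written toy dialogue. It is not the system of any cited study: the two states, two actions, reward values, and hyperparameters are all assumptions chosen so that asking a clarifying question before answering is the best policy. The update applied is Q(s, a) ← Q(s, a) + α[r + γ·max Q(s′, ·) − Q(s, a)].

```python
import random

ACTIONS = ["answer", "ask_clarify"]


def step(state, action):
    """Toy, hand-written dialogue dynamics: returns (next_state, reward, done)."""
    if state == "start":
        if action == "ask_clarify":
            return "clarified", -0.1, False   # small cost for an extra turn
        return "end", -1.0, True              # answering blindly is penalized
    # state == "clarified"
    if action == "answer":
        return "end", 1.0, True               # satisfied user
    return "clarified", -0.5, False           # repeated question annoys the user


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in ("start", "clarified") for a in ACTIONS}
    for _ in range(episodes):
        state, done, steps = "start", False, 0
        while not done and steps < 20:
            # Epsilon-greedy: mostly exploit, sometimes explore.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            nxt, reward, done = step(state, action)
            best_next = 0.0 if done else max(q[(nxt, a)] for a in ACTIONS)
            # Q-learning temporal-difference update.
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state, steps = nxt, steps + 1
    return q


q = train()
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in ("start", "clarified")}
```

In a deployed chatbot the reward would come from user feedback or predefined criteria rather than a fixed table, which is exactly where the reward-design difficulties discussed next arise.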

Challenges and considerations. One of the challenges in applying RL to chatbots is defining appropriate reward mechanisms. The complexity of human language and the subjective nature of conversations make it difficult to quantify rewards and penalties accurately. Continuous monitoring and adjustment are often required to ensure that the RL system remains aligned with desired outcomes and does not develop biases or undesirable response patterns [116].

5.2.2. Supervised Learning in Chatbots

Supervised learning involves training a chatbot on a labeled dataset, where the input (user query) and the desired output (correct response) are provided. The chatbot uses this data to learn how to respond to various types of queries. This method is particularly effective for training chatbots on specific tasks, such as customer support, where predictable and accurate responses are crucial [117].

The chatbot is exposed to a vast array of conversation scenarios during the training phase. The more diverse and comprehensive the training dataset, the better the chatbot becomes at handling different types of queries. The training process also involves fine-tuning the model parameters and structure to improve response accuracy and reduce errors like misunderstanding user intent or providing irrelevant responses [118].
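A minimal sketch of supervised intent training is shown below, using a multinomial naive Bayes classifier with Laplace smoothing. The six labeled queries and the class name `NaiveBayesIntent` are made up for illustration; production systems typically use far larger datasets and neural models.

```python
import math
from collections import Counter, defaultdict

# Tiny, made-up labeled dataset of (user query, intent).
TRAIN = [
    ("where is my order", "track_order"),
    ("track my package", "track_order"),
    ("has my order shipped", "track_order"),
    ("i want a refund", "refund"),
    ("refund my money please", "refund"),
    ("how do i return this item", "refund"),
]


class NaiveBayesIntent:
    def fit(self, examples):
        """Count words per intent and how often each intent occurs."""
        self.word_counts = defaultdict(Counter)
        self.label_counts = Counter()
        self.vocab = set()
        for text, label in examples:
            words = text.lower().split()
            self.label_counts[label] += 1
            self.word_counts[label].update(words)
            self.vocab.update(words)
        return self

    def predict(self, text):
        """Return the intent with the highest log-posterior for the query."""
        total = sum(self.label_counts.values())
        best_label, best_score = None, -math.inf
        for label, n in self.label_counts.items():
            score = math.log(n / total)  # log prior
            denom = sum(self.word_counts[label].values()) + len(self.vocab)
            for w in text.lower().split():
                # Laplace smoothing keeps unseen words from zeroing the score.
                score += math.log((self.word_counts[label][w] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


model = NaiveBayesIntent().fit(TRAIN)
```

With this toy data, `model.predict("i need a refund")` selects the refund intent even though "need" never appeared in training.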

Based on performance metrics (like accuracy, precision, and recall), the chatbot model is continually refined. New data, including more recent user interactions and feedback, are often incorporated into the training set to keep the chatbot updated with evolving language use and user expectations.
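For reference, the metrics named above can be computed from true and predicted intents as in this short sketch. The label lists are invented, and precision, recall, and F1 are computed for a single intent treated as the positive class.

```python
def precision_recall_f1(y_true, y_pred, positive):
    """Precision, recall, and F1 for one class treated as the positive label."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Made-up evaluation data.
y_true = ["refund", "refund", "track", "track", "refund"]
y_pred = ["refund", "track", "track", "refund", "refund"]

precision, recall, f1 = precision_recall_f1(y_true, y_pred, positive="refund")
acc = accuracy(y_true, y_pred)
```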

5.3. Overcoming Data and Label Scarcity

To further expand chatbot capabilities, learning paradigms beyond the traditional supervised and reinforcement settings are crucial. These techniques encompass semi-supervised and weakly supervised learning, as well as few-shot, zero-shot, and one-shot learning. These approaches address key challenges in chatbot development, such as data scarcity and the need for rapid adaptation to new tasks or domains.

5.3.1. Semi-Supervised Learning

Semi-supervised learning stands as a hybrid model that merges the strengths of both supervised and unsupervised learning [119]. It utilizes a small amount of labeled data alongside a larger volume of unlabeled data. This blend is particularly advantageous for chatbots, as acquiring extensive, well-labeled conversational data can be resource-intensive. In this approach, the chatbot initially learns from the labeled data, gaining a basic understanding of language patterns and user intents. It then extrapolates this knowledge to the broader, unlabeled dataset, enhancing its comprehension and response capabilities.

For chatbots, semi-supervised learning can significantly expedite the training process. The chatbot can develop a preliminary model based on the limited labeled data and refine its understanding through exposure to the more extensive, unlabeled data [120]. This process is particularly effective for understanding the nuances of natural language, which is often too complex to be fully captured in a limited labeled dataset. The method is also beneficial in adapting to new slang, jargon, or evolving language trends, as the unlabeled data can provide a more current snapshot of language use.
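One common way to realize this labeled-then-unlabeled process is self-training with pseudo-labels, sketched below. The word-vote classifier, the 0.8 confidence threshold, and the example queries are assumptions for illustration; this is not the MixUp and consistency-based method of the study discussed next.

```python
from collections import Counter, defaultdict


def train(examples):
    """Count how often each word appears under each label."""
    counts = defaultdict(Counter)
    for text, label in examples:
        counts[label].update(text.lower().split())
    return counts


def predict(counts, text):
    """Return (label, confidence); confidence is the winning share of word votes."""
    votes = {label: sum(c[w] for w in text.lower().split()) for label, c in counts.items()}
    total = sum(votes.values())
    if total == 0:
        return None, 0.0
    label = max(votes, key=votes.get)
    return label, votes[label] / total


def self_train(labeled, unlabeled, threshold=0.8, rounds=3):
    """Repeatedly adopt confident predictions on unlabeled text as pseudo-labels."""
    labeled, pool = list(labeled), list(unlabeled)
    for _ in range(rounds):
        counts = train(labeled)
        confident, rest = [], []
        for text in pool:
            label, conf = predict(counts, text)
            (confident if conf >= threshold else rest).append((text, label))
        if not confident:
            break
        labeled += confident                  # pseudo-labeled examples join the training set
        pool = [text for text, _ in rest]     # low-confidence texts stay unlabeled
    return train(labeled), pool


labeled = [("refund my order", "refund"), ("track my package", "track")]
unlabeled = ["refund please", "track package status", "status please"]
model, leftover = self_train(labeled, unlabeled)
```

Here the word "status" never occurs in the labeled data, yet after self-training the model associates it with the tracking intent because it co-occurred with known tracking words in an unlabeled query.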

For example, a recent study addressed the challenge of automating intent identification in e-commerce chatbots, crucial for enhancing the shopping experience by accurately answering a wide range of pre- and post-purchase user queries [121]. Recognizing the complexity added by code-mixed queries and grammatical inaccuracies from non-English speakers, the study proposed a semi-supervised learning strategy that combines a small, labeled dataset with a larger pool of unlabeled query data to train a transformer model. The approach included supervised MixUp data augmentation for the labeled data and label consistency with dropout noise for the unlabeled data. Testing various pre-trained transformer models, like BERT and sentence-BERT, the study showed significant performance gains over supervised learning baselines, even with limited labeled data. A version of this model has been successfully deployed in a production environment.

5.3.2. Weakly Supervised Learning

Training with imperfect labels. Weakly supervised learning comes into play when the available training data are imperfectly labeled [122]. This might involve labels that are noisy, inaccurate, or too broad. In the context of chatbots, this means training the system with data where the annotations may not precisely match the desired output. Despite the less-than-ideal nature of the training data, this approach can still yield valuable learning outcomes. It allows chatbots to be trained on a more diverse range of data, capturing a wider array of conversational styles and topics.
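A simple form of weak supervision combines several noisy heuristics, often called labeling functions, by majority vote. The sketch below is illustrative only: the four heuristics and intent names are hypothetical, and plain majority voting is used rather than the learned label model of a framework such as Snorkel.

```python
from collections import Counter

ABSTAIN = None


# Hypothetical labeling functions: cheap, noisy heuristics that may abstain.
def lf_refund_keyword(text):
    return "refund" if "refund" in text.lower() else ABSTAIN


def lf_money_back(text):
    return "refund" if "money back" in text.lower() else ABSTAIN


def lf_where_question(text):
    return "track_order" if text.lower().startswith("where") else ABSTAIN


def lf_order_keyword(text):
    return "track_order" if "order" in text.lower() else ABSTAIN


LFS = [lf_refund_keyword, lf_money_back, lf_where_question, lf_order_keyword]


def weak_label(text):
    """Majority vote over non-abstaining labeling functions; None if no vote or a tie."""
    votes = Counter(v for v in (lf(text) for lf in LFS) if v is not ABSTAIN)
    if not votes:
        return None
    ranked = votes.most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return None  # conflicting evidence: leave the message unlabeled
    return ranked[0][0]
```

Messages that receive a label can be added to the training set, accepting some noise, while ties and abstentions are left for later rounds or human annotation.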

Advantages in chatbot development. One of the key benefits of weakly supervised learning is the ability to leverage larger datasets that might otherwise be unusable due to imperfect labeling. This can be particularly useful for developing chatbots designed to operate in specific niches or less common languages, where labeled data are scarce. Additionally, this approach can facilitate quicker iterations in the development cycle of chatbots [123]. It allows for rapid prototyping and testing of chatbot models, with the understanding that these initial models will be refined as more accurate data become available or as the chatbot itself helps to clean and label the data through user interactions.

For example, a study focused on enhancing chatbots for food delivery services tackled the challenge of understanding customer intent, especially when faced with incoherent English and code-mixed language (Hinglish) [122]. Recognizing the high cost of acquiring large volumes of high-quality labeled training data, the research explored weaker forms of supervision to generate training samples more economically, albeit with potential noise. The study addressed the complexity of conversations that could involve multiple messages with diverse intents and proposed the use of lightweight word embeddings and weak supervision techniques to accurately tag individual messages with relevant labels. Additionally, it found that simple augmentation techniques could notably improve the handling of code-mixed messages. Tested on an internal benchmark dataset, the proposed sampling method outperformed traditional random sampling, raw sample usage, and even Snorkel, a leading weak supervision framework, demonstrating a substantial improvement in the F1 score and illustrating the effectiveness of these strategies in a real-world application.

5.3.3. Few-Shot, Zero-Shot, and One-Shot Learning

Few-shot, zero-shot, and one-shot learning stand to revolutionize the training methodologies of chatbots. These paradigms are particularly adept at addressing the challenge of data scarcity and the need for chatbots to adapt to new domains or tasks with minimal examples.

Few-shot learning enables chatbots to grasp new concepts or intents from a very limited set of examples. This approach is instrumental in scenarios where collecting extensive labeled data is impractical, thus significantly speeding up the chatbot’s ability to adapt to new user queries or languages [124].

Zero-shot learning takes this a step further by empowering chatbots to understand and respond to tasks or queries they have never encountered during training [125]. This paradigm leverages generalizable knowledge learned during training, applying it to entirely new contexts without the need for explicit examples. In the context of chatbots, this capability could be transformative, enabling them to provide relevant responses across a broader spectrum of topics without exhaustive domain-specific training.

One-shot learning, on the other hand, focuses on learning from a single example [126]. This method is especially beneficial for personalizing interactions or quickly incorporating user-specific preferences and contexts into the chatbot’s response framework. By effectively learning from a single interaction, chatbots can offer more tailored and relevant responses, enhancing the user experience.
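One way to picture few-shot (and, with a single example per intent, one-shot) classification is nearest-prototype matching, sketched below. The bag-of-words `embed` function is a crude stand-in for a learned sentence encoder, and the support examples and intent names are invented.

```python
import math
from collections import Counter


def embed(text):
    """Bag-of-words stand-in for a learned sentence encoder (an assumption)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_prototypes(support):
    """Sum the embeddings of the few examples given for each intent."""
    protos = {}
    for text, label in support:
        protos.setdefault(label, Counter()).update(embed(text))
    return protos


def classify(protos, text):
    """Assign the query to the intent whose prototype is most similar."""
    q = embed(text)
    return max(protos, key=lambda label: cosine(q, protos[label]))


# Two examples per new intent are the entire "training set".
support = [
    ("cancel my subscription", "cancel"),
    ("please cancel the plan", "cancel"),
    ("upgrade my plan", "upgrade"),
    ("switch to premium", "upgrade"),
]
protos = build_prototypes(support)
```

Adding a new intent only requires adding a few support examples and rebuilding the prototypes; no gradient-based retraining is involved in this sketch.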

The integration of few-shot, zero-shot, and one-shot learning paradigms into chatbot training embodies a transformative approach to enhancing conversational AI’s adaptability and intelligence. Implementing these paradigms necessitates the adoption of advanced algorithms that excel in abstracting learned knowledge and applying it to novel scenarios that the chatbot was not explicitly trained on. In the remainder of this section, we explore in more depth how these advanced learning paradigms can be applied in the context of chatbot training.

Leveraging meta-learning techniques: Meta-learning, or learning to learn, stands at the forefront of enabling few-shot, zero-shot, and one-shot learning in chatbots [127]. By employing meta-learning algorithms, chatbots can generalize their learning from one task to another, facilitating rapid adaptation to new tasks or domains with minimal data. In practical terms, this means a chatbot trained in customer service could quickly adapt to provide support in a different language or domain, using only a few examples to guide the transition. Meta-learning achieves this by optimizing the model’s internal learning process, allowing it to apply abstract concepts learned in one context to another vastly different one.

For example, a recent study introduced D-REPTILE as a meta-learning algorithm to refine dialogue state tracking across various domains by leveraging domain-specific tasks. This method involved selecting multiple domains, using them to iteratively adjust the initial model parameters, and thus creating a base model state optimized for quick adaptation to new, related domains [128]. D-REPTILE stood out for its operational simplicity and efficiency, enabling significant performance boosts in models trained on sparse data by preparing them for effective fine-tuning on target domains not seen during the initial training phase.
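The iterative adjustment of initial parameters described above follows the general pattern of Reptile-style meta-learning, which can be sketched on a toy problem. This is not the D-REPTILE implementation from [128]: here each "domain" is a one-parameter regression task y = slope · x, and the step counts and learning rates are arbitrary assumptions.

```python
def sgd_adapt(w, slope, steps=5, lr=0.1):
    """Inner loop: a few SGD passes fitting y = w * x to a task y = slope * x."""
    xs = [-1.0, -0.5, 0.5, 1.0]
    for _ in range(steps):
        for x in xs:
            grad = 2 * (w * x - slope * x) * x   # d/dw of the squared error
            w -= lr * grad
    return w


def reptile(task_slopes, meta_steps=200, meta_lr=0.1, w0=0.0):
    """Outer loop: move the initialization toward each task's adapted weights."""
    w = w0
    for i in range(meta_steps):
        slope = task_slopes[i % len(task_slopes)]   # cycle through tasks deterministically
        w_task = sgd_adapt(w, slope)
        w += meta_lr * (w_task - w)                 # Reptile-style interpolation update
    return w


# Meta-train on two source "domains" with slopes 1 and 3.
w_init = reptile([1.0, 3.0])

# Adapt to an unseen task (slope 2.5) with a single inner pass from each start.
err_meta = abs(sgd_adapt(w_init, 2.5, steps=1) - 2.5)
err_scratch = abs(sgd_adapt(0.0, 2.5, steps=1) - 2.5)
```

The learned initialization settles between the source tasks, so one adaptation pass on the unseen task leaves a much smaller error than starting from zero, which mirrors the fast fine-tuning on new domains reported for meta-learned dialogue models.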

Another recent study adapted the model-agnostic meta-learning (MAML) approach for personalizing dialogue agents without relying on detailed persona descriptions [127]. Researchers trained the model on a variety of user dialogues, and then fine-tuned it to new personas with just a few samples from specific users. This process allowed the model to quickly adapt its responses to reflect the unique characteristics of each user’s persona, demonstrated by improved fluency and consistency in conversations when evaluated against traditional methods.

Embedding rich contextual and semantic understanding: The core of effectively implementing these learning paradigms lies in infusing chatbots with a deep understanding of language semantics and context. Advanced NLP techniques, such as transformer models [129] and contextual embeddings [130], play a crucial role here. These models can capture the nuances of human language, including idioms, colloquialisms, and varying syntactic structures, making it possible for chatbots to understand and respond to queries accurately, even with minimal prior exposure to similar content. For instance, a chatbot could use one-shot learning to accurately interpret and respond to a user’s unique request after seeing just one example of a similar query, thanks to its deep semantic understanding of the query’s intent and context [131].

For example, a recent study introduced the context-aware self-attentive NLU (CASA-NLU) model, enhancing natural language understanding in dialog systems by incorporating a broader range of contextual signals, including prior intents, slots, dialog acts, and utterances [132]. Unlike traditional NLU systems that handle utterances in isolation and defer context management to dialogue management, the CASA-NLU model integrates these signals directly to improve intent classification and slot labeling. This approach led to a notable performance increase, with up to a 7% gain in intent classification accuracy on conversational datasets. Even without relying on contextual data, it set a new state-of-the-art for the intent classification task on the Snips and ATIS datasets.

Transfer learning: Beyond meta-learning, transfer learning techniques can facilitate the application of few-shot, zero-shot, and one-shot learning by transferring knowledge from data-rich domains to those where data are scarce. Chatbots can leverage pre-trained models on extensive datasets and then fine-tune them with a small subset of domain-specific data [133,134]. This approach significantly reduces the need for large-labeled datasets in every new domain the chatbot encounters, streamlining the process of extending chatbot functionalities across various fields.

For example, an experimental study compared Open Domain Question Answering (ODQA) solutions built with the Haystack framework, particularly for troubleshooting documents [135]. The study explored various combinations of Retriever and Reader components within Haystack to identify the most effective pair in terms of speed and accuracy. A dataset of 1246 question–answer pairs was created and divided into sets for training and validation, employing transfer learning with pre-trained models like BERT and RoBERTa on 724 questions. The performance of ten different Retriever–Reader combinations was assessed after fine-tuning these models. Notably, the combination of BERT Large Uncased with the ElasticSearch Retriever emerged as the most effective, demonstrating superior performance in top-1 answer evaluation metrics.

Domain adaptation: Domain adaptation in chatbots refers to the process of extracting and transferring knowledge from a chatbot experienced in one domain to another domain. For example, a chatbot trained in customer service for telecommunications can transfer some of its learned behaviors to retail customer service, adjusting only for domain-specific knowledge.

In a recent study, a personalized response generation model, PRG-DM, was developed using domain adaptation [136]. The model first learned broad human conversational styles from a large dataset and was then fine-tuned on a smaller personalized dataset with a dual learning mechanism. The study also introduced three rewards for evaluating conversations on personalization, informativeness, and grammar, employing the policy gradient method to optimize for high-quality responses. Experimental results highlighted the model’s ability to produce distinctly better-personalized responses across different users.

Dynamic data augmentation and synthetic data generation: To support these advanced learning paradigms, dynamic data augmentation and synthetic data generation techniques can be utilized to enrich the training data. These methods artificially expand the dataset with new, varied examples derived from the existing data, improving the model’s ability to generalize from limited examples. In the context of chatbots, this could mean generating new user queries and dialogues that simulate potential real-world interactions, thereby providing a richer training environment for the chatbot to learn from [137]. For example, a framework called Chatbot Interaction with AI (CI-AI) was developed to train chatbots for natural human–machine interactions. It utilized artificial paraphrasing with the T5 model to expand its training data, enhancing the effectiveness of transformer-based NLP classification algorithms. This method led to a notable improvement in algorithm performance, with an average accuracy increase of 4.01% across several models. Specifically, the RoBERTa model trained on these augmented data reached an accuracy of 98.96%. By combining the top five performing models into an ensemble, accuracy further increased to 99.59%, illustrating the framework’s capacity to interpret human commands more accurately and make AI more accessible to non-technical users [129].
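As a lightweight illustration of augmenting training queries, the sketch below generates variants by synonym substitution. It is a rule-based stand-in, not the T5 paraphrasing used in the CI-AI framework, and the synonym table is hypothetical.

```python
import itertools

# Hypothetical synonym table; the cited work used a T5 paraphraser instead.
SYNONYMS = {
    "buy": ["purchase", "order"],
    "cheap": ["affordable"],
    "phone": ["smartphone"],
}


def augment(text, max_variants=5):
    """Generate paraphrase-like variants by swapping in synonyms word by word."""
    original = text.lower()
    # For every word, the original word comes first, followed by its synonyms.
    options = [[w] + SYNONYMS.get(w, []) for w in original.split()]
    variants = []
    for combo in itertools.product(*options):
        candidate = " ".join(combo)
        if candidate != original:        # skip the unchanged sentence
            variants.append(candidate)
        if len(variants) == max_variants:
            break
    return variants


variants = augment("buy a cheap phone")
```

Each variant keeps the original intent label, so a single labeled query yields several training examples; a paraphrasing model would produce more varied and more natural rewrites than this word-level substitution.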

Challenges and considerations: While the potential benefits are vast, the practical application of few-shot, zero-shot, and one-shot learning in chatbots comes with a set of challenges. Ensuring the chatbot’s responses remain accurate and relevant in the face of sparse data points necessitates ongoing evaluation and refinement. Moreover, developing models that can effectively leverage these learning paradigms requires a deep understanding of the underlying mechanisms and the ability to translate this knowledge into conversational AI.

5.4. Integrating Human Oversight

Integrating human oversight, commonly referred to as the “human-in-the-loop” approach, is a crucial strategy in the context of chatbot development and error correction. This method involves direct human participation in training, supervising, and refining the AI models that drive chatbots. Here is a detailed exploration of how this approach enhances chatbot functionality:

Direct involvement in training and feedback. In the human-in-the-loop method, human experts actively participate in the chatbot training process [138]. They provide valuable feedback on the chatbot’s responses, guiding and correcting them where necessary. This involvement is particularly beneficial in addressing complex or nuanced queries that the chatbot might struggle with [139]. Human trainers can also help in tagging and labeling data more accurately, which is a vital part of supervised learning. Their expertise ensures high-quality training data, leading to more effective and accurate chatbot responses [140].

Ongoing supervision and refinement. Post-deployment, human supervisors monitor the chatbot’s interactions to ensure it continues to respond appropriately [141]. They intervene when the chatbot fails to answer correctly or encounters unfamiliar scenarios, providing the correct response or action. This ongoing supervision allows for continuous refinement of the chatbot’s algorithms. Human experts can identify and rectify subtle issues, such as context misunderstanding or tone misinterpretation, which might not be evident through automated processes alone [142].

Enhancing personalization and empathy. Human input is instrumental in enhancing the chatbot’s ability to personalize interactions and respond empathetically. Humans can teach the chatbot to recognize and appropriately react to different emotional cues, a nuanced aspect that is challenging to automate [143]. By analyzing and understanding varied emotional responses and conversational styles, human trainers can program the chatbot to adapt its responses accordingly, making the interactions more relatable and engaging for users [144].

Quality control and ethical oversight. Human oversight also plays a crucial role in maintaining quality control, ensuring that the chatbot’s responses meet ethical standards and do not inadvertently cause offense or harm [145]. This aspect is particularly vital in sensitive areas such as healthcare, finance, or legal advice, where inaccurate information or inappropriate language can have serious consequences [146].

Balancing AI and human capabilities. The human-in-the-loop approach effectively balances the strengths of AI with human intuition and understanding. It recognizes that while AI can handle a vast amount of data and provide quick responses, human judgment is essential for nuanced interpretation and ethical decision-making [147,148]. This balanced approach leads to the development of chatbots that are technically proficient and demonstrate a level of understanding and responsiveness that resonates more closely with human users.

6. Case Studies: Error Correction in Chatbots

In this section, we explore case studies in which chatbots that learn from their mistakes have been successfully applied in specific domains. The exploration is split into two parts: first, we present specific chatbots that have shown significant improvement through learning from errors; second, we analyze the strategies employed and the outcomes achieved. Table 3 provides a summary of these case studies.

Table 3. Examples of error correction strategies in chatbots across various domains.

6.1. Effective Chatbot Learning Examples

Several chatbots across different industries have shown remarkable progress in improving their interaction quality by learning from errors. The strategies employed range from data-driven feedback loops to advanced ML models, showcasing the versatility and adaptability of chatbots [155,156,157].

6.1.1. Customer Service Chatbot in E-Commerce

In e-commerce, chatbots are implemented for handling customer queries. Through the integration of a feedback loop, these chatbots start to learn from customer interactions. Over time, they begin to recognize specific customer preferences and context, leading to more accurate product suggestions and higher customer satisfaction rates [158].

In an innovative application within the e-commerce sector, a recent study used an intelligent, knowledge-based conversational agent to enhance a company’s customer service capabilities [149]. The system’s core is a knowledge base (KB) that continuously improves itself to offer better support over time. The KB organizes customer knowledge into six categories: knowledge about the customer, knowledge from the customer, knowledge for the customer, reference information on products (such as comments from social media), confirmed knowledge from reliable sources (like manuals), and pre-confirmed knowledge awaiting human expert review.

A web crawler automatically gathers information from the internet to keep the KB updated with fresh data. An NLP engine analyzes user queries to understand their intent and meaning by recognizing keywords, entities, and grammatical structure. The dialogue module manages conversation flow, using the NLP engine to interpret user queries and retrieve relevant information from the KB. If an answer is found, the reply generator provides a response. Otherwise, the query is handed off to a human customer service representative. A handover module facilitates the transition between the chatbot and human representatives for complex queries, while an adapter allows the chatbot to connect with various online chat platforms.
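The flow described above (interpret the query, look up the KB, reply or hand over) can be illustrated with a minimal sketch. It is not the implementation from [149]: the class `KnowledgeBase`, the functions `detect_intent` and `handle_query`, and the keyword matching that stands in for the NLP engine are all hypothetical simplifications. Only confirmed knowledge is used for replies, while pre-confirmed entries wait for expert approval.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple


@dataclass
class KnowledgeBase:
    # Answers verified by a reliable source or approved by a human expert.
    confirmed: Dict[str, str] = field(default_factory=dict)
    # Candidate answers (e.g., gathered by a crawler) awaiting expert review.
    pre_confirmed: Dict[str, str] = field(default_factory=dict)

    def approve(self, intent: str) -> None:
        """A human expert promotes a candidate answer to confirmed knowledge."""
        self.confirmed[intent] = self.pre_confirmed.pop(intent)


def detect_intent(query: str, keywords: Dict[str, str]) -> Optional[str]:
    """Toy stand-in for the NLP engine: the first matching keyword wins."""
    text = query.lower()
    for keyword, intent in keywords.items():
        if keyword in text:
            return intent
    return None


def handle_query(
    query: str, kb: KnowledgeBase, keywords: Dict[str, str]
) -> Tuple[str, str]:
    """Return (responder, message); hand over when no confirmed answer exists."""
    intent = detect_intent(query, keywords)
    if intent is not None and intent in kb.confirmed:
        return ("bot", kb.confirmed[intent])
    return ("human", "Transferring you to a customer service representative.")


kb = KnowledgeBase(
    confirmed={"returns": "Returns are accepted within 30 days."},
    pre_confirmed={"sizing": "Sizes run small."},
)
keywords = {"return": "returns", "size": "sizing"}
```

In this sketch, a sizing question is routed to a human until an expert calls `kb.approve("sizing")`, after which the chatbot answers it directly. This mirrors, in a simplified way, how a KB can grow through human review.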

A prototype system was implemented for a leading women’s intimate apparel company, with positive results demonstrating increased customer service efficiency, improved customer satisfaction, and an enhanced knowledge base. This intelligent chatbot system offers a valuable tool for customer service applications across various industries, automating routine inquiries, improving customer experience, and freeing up human staff for more strategic tasks.

6.1.2. Healthcare Assistant Chatbot

Healthcare chatbots aim to assist patients with appointment scheduling and medication reminders [159]. Such chatbots may initially face challenges in interpreting patient inputs correctly. By employing supervised learning techniques, where the chatbots are trained with a more extensive set of medical terms and patient interaction scenarios, the accuracy of their responses improves significantly.

A recent study explored the development of an AI-powered chatbot named “Ted”, designed to assist individuals with mental health concerns [150]. The proposed solution aims to address the shortage of mental healthcare providers by using NLP and deep learning techniques to understand user queries and provide supportive responses. The chatbot preprocesses user input with NLP methods such as tokenization, stop word removal, lemmatization, and lowercasing. A neural network with softmax activation is used for intent classification. Initial results report high accuracy (98.13%), suggesting that the proposed approach is effective. This research highlights the potential of AI chatbots to offer accessible mental health support and address the stigma associated with seeking traditional help. Future studies should focus on conducting clinical validation to assess real-world benefits and addressing critical safety and ethical considerations.
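To make the preprocessing and classification steps concrete, the following is a minimal, dependency-free sketch. It is not the model from [150]: the stop word list, the tiny lemma table, and the per-intent weights in `WEIGHTS` are hypothetical stand-ins for a full lemmatizer and a trained neural network. Only the order of the steps and the softmax normalization follow the description above.

```python
import math
import re

# Hypothetical, deliberately tiny resources for illustration only.
STOP_WORDS = {"i", "am", "a", "the", "is", "to", "and", "so", "have", "been"}
LEMMAS = {"feeling": "feel", "felt": "feel", "worried": "worry", "sleeping": "sleep"}

# Hypothetical per-intent token weights standing in for a trained network.
WEIGHTS = {
    "anxiety": {"worry": 2.0, "anxious": 2.0, "feel": 0.5},
    "sleep": {"sleep": 2.0, "tired": 1.5, "feel": 0.5},
    "greeting": {"hello": 2.0, "hi": 2.0},
}


def preprocess(text):
    """Lowercase, tokenize, remove stop words, then lemmatize via lookup."""
    tokens = re.findall(r"[a-z']+", text.lower())
    tokens = [t for t in tokens if t not in STOP_WORDS]
    return [LEMMAS.get(t, t) for t in tokens]


def softmax(scores):
    """Convert raw scores to probabilities (shifted by the max for stability)."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(text):
    """Return a probability for each intent given the preprocessed tokens."""
    tokens = preprocess(text)
    intents = list(WEIGHTS)
    scores = [sum(WEIGHTS[i].get(t, 0.0) for t in tokens) for i in intents]
    return dict(zip(intents, softmax(scores)))


probabilities = classify("I am feeling so worried")
predicted_intent = max(probabilities, key=probabilities.get)
```

The softmax output sums to one across intents, so the largest value can be read as the predicted intent and its magnitude as a rough confidence.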

Focusing on the underexplored area of hypertension self-management, a recent study introduced “Medicagent”, a chatbot developed through a user-centered design process utilizing Google Cloud’s Dialogflow [160]. This chatbot underwent rigorous usability testing with hypertension patients, involving tasks, questionnaires, and interviews, highlighting its potential to enhance self-management behaviors. With an impressive completion rate for tasks and a System Usability Scale (SUS) score of 78.8, indicating acceptable usability, “Medicagent” demonstrated strong potential in patient assistance. However, feedback suggested areas for improvement, including enhanced navigation features and the incorporation of a health professional persona to increase credibility and user satisfaction.
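For readers unfamiliar with how a SUS value such as the one above is obtained, the standard scoring rule can be sketched as follows. The function name `sus_score` and the sample responses are illustrative and are not data from [160]. Each of the ten items is rated from 1 to 5; odd-numbered items contribute the rating minus 1, even-numbered items contribute 5 minus the rating, and the sum is multiplied by 2.5 to give a score between 0 and 100.

```python
def sus_score(responses):
    """Compute one participant's SUS score from ten ratings on a 1-5 scale."""
    if len(responses) != 10 or any(not 1 <= r <= 5 for r in responses):
        raise ValueError("SUS needs exactly ten ratings between 1 and 5")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += rating - 1   # positively worded (odd) items
        else:
            total += 5 - rating   # negatively worded (even) items
    return total * 2.5


# Illustrative ratings from two hypothetical participants.
participants = [
    [4, 2, 4, 2, 4, 2, 4, 2, 4, 2],
    [5, 1, 5, 1, 5, 1, 5, 1, 5, 1],
]
study_score = sum(sus_score(p) for p in participants) / len(participants)
```

A study-level score is typically reported as the mean of the per-participant scores, as computed in `study_score`.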

6.1.3. Banking Support Chatbot

In the banking sector, chatbots can assist customers with account inquiries and transactions. The incorporation of semi-supervised learning, where the bot is trained using a combination of labeled and unlabeled transaction data, enables it to better understand and classify different transaction requests, leading to more efficient customer service [161].

A recent study explored the development of a chatbot designed to enhance customer service efficiency in banking by processing natural language queries and providing timely responses [151]. A notable feature of this chatbot is its ability to learn from its mistakes through a feedback mechanism. When the chatbot fails to deliver a satisfactory answer, users can indicate their dissatisfaction via a Dislike button. Such responses are logged, allowing developers to later refine the chatbot’s database and retrain its classification model with accurate answers, thus progressively improving its performance and dataset accuracy. The study compared seven classification algorithms to identify the most effective for categorizing user queries. The integration of NLP, vectorization, and classification algorithms enables the chatbot to efficiently classify new queries without the need for retraining each time, significantly reducing processing time. Through usability testing, the Random Forest and Support Vector Machine classifiers emerged as the most accurate, informing the final choice for the chatbot’s design. In addition, query mapping and response generation were further refined using cosine similarity to match user queries with the most relevant answers from the dataset. This approach ensured that the chatbot remains domain-specific, with built-in thresholds for cosine similarity to manage out-of-domain queries effectively.
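The two mechanisms described above, similarity-based answer matching with an out-of-domain threshold and logging of disliked responses, can be sketched in a few lines. This is an illustration under stated assumptions rather than the system from [151]: the bag-of-words vectorization, the `FAQ` entries, the threshold value of 0.5, and the names `answer` and `record_dislike` are all hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical question-answer dataset.
FAQ = {
    "how do i check my account balance": "You can view your balance under Accounts.",
    "how do i reset my password": "Use the 'Forgot password' link on the login page.",
}

# Disliked responses are stored here so developers can review them later.
disliked = []


def vectorize(text):
    """Bag-of-words term counts (a simple stand-in for the study's vectorizer)."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items() if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def answer(query, threshold=0.5):
    """Return the best-matching answer, or None if the query looks out of domain."""
    query_vec = vectorize(query)
    best_question = max(FAQ, key=lambda q: cosine(query_vec, vectorize(q)))
    if cosine(query_vec, vectorize(best_question)) < threshold:
        return None
    return FAQ[best_question]


def record_dislike(query, response):
    """Log a response the user marked as unsatisfactory."""
    disliked.append({"query": query, "response": response})
```

Entries collected in `disliked` would then be corrected by developers and added back to the dataset before the classifier is retrained. The threshold trades coverage against the risk of answering out-of-domain queries, so in practice it would need to be tuned on real data.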

6.1.4. Travel Booking Chatbot

Chatbots have proven useful for booking flights and hotels. RL enables these chatbots to improve their performance by rewarding the chatbot for accurately understanding booking details. Additionally, these chatbots can be trained to ask clarifying questions when faced with ambiguous user input, leading to more accurate bookings and an overall enhanced user experience [152,162].
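As a rough illustration of the reward idea, the sketch below trains a one-step value table (a contextual bandit, which is a simplification of full RL) to decide whether to book immediately or ask a clarifying question. It does not reproduce any cited system: the states, actions, reward values, and the simulated 30% success rate for booking on ambiguous input are all assumptions chosen for illustration.

```python
import random

STATES = ["clear", "ambiguous"]
ACTIONS = ["book", "clarify"]


def simulate_reward(state, action, rng):
    """Hypothetical reward model for one interaction."""
    if action == "clarify":
        return 0.5  # correct booking, but at the cost of an extra turn
    if state == "clear":
        return 1.0  # details understood and booked correctly
    # Booking on ambiguous input is assumed to succeed only 30% of the time.
    return 1.0 if rng.random() < 0.3 else -1.0


def train(episodes=5000, alpha=0.05, epsilon=0.1, seed=0):
    """Epsilon-greedy learning of action values from simulated rewards."""
    rng = random.Random(seed)
    q = {s: {a: 0.0 for a in ACTIONS} for s in STATES}
    for _ in range(episodes):
        state = STATES[0] if rng.random() < 0.5 else STATES[1]
        if rng.random() < epsilon:
            action = ACTIONS[int(rng.random() * len(ACTIONS))]  # explore
        else:
            action = max(ACTIONS, key=lambda a: q[state][a])    # exploit
        reward = simulate_reward(state, action, rng)
        # Move the estimate a small step toward the observed reward.
        q[state][action] += alpha * (reward - q[state][action])
    return q


q_values = train()
policy = {s: max(ACTIONS, key=lambda a: q_values[s][a]) for s in STATES}
```

Under these assumed rewards, the learned values favor booking directly when the request is clear and asking a clarifying question when it is ambiguous, because a wrong booking is penalized more heavily than an extra conversational turn.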

For instance, a pioneering study introduced an advanced chatbot system designed for the Echo platform, showcasing a significant leap in enhancing human–machine interactions within the travel industry [152]. This chatbot, developed with the goal of streamlining travel planning, leverages a deep neural network (DNN) approach, specifically employing the Restricted Boltzmann Machine (RBM) combined with Collaborative Filtering techniques. It excels in gathering user preferences to form a comprehensive knowledge base, which in turn facilitates highly personalized travel recommendations.

A key feature of this chatbot is its capacity for learning from user interactions. As travelers interact with the chatbot, providing feedback and preferences, the system fine-tunes its recommendation algorithms. This continuous learning process improves the accuracy of travel suggestions over time and significantly enhances user experience by making interactions more intuitive and responses more relevant to individual needs.

6.1.5. Education

Chatbots are rapidly transforming the educational landscape, offering innovative solutions for personalized learning, 24/7 support, and enhanced engagement. These AI-powered tools can analyze student data to create tailored learning paths, provide instant feedback, and automate administrative tasks, allowing educators to focus on meaningful interactions [163]. Interactive features like quizzes, games, and simulations make learning more enjoyable and effective. Chatbots can also assist with language learning, mental health support, and special needs education, catering to diverse student needs.

For example, Abu-Rasheed et al. presented an LLM-based chatbot designed to support students in understanding and engaging with personalized learning recommendations [153]. Recognizing that student commitment is linked to understanding the rationale behind recommendations, the authors proposed using the chatbot as a mediator for conversational explainability. The system leverages a knowledge graph (KG) as a reliable source of information to guide the LLM’s responses, ensuring accurate and contextually relevant explanations. This approach mitigates the risks associated with uncontrolled LLM output while still benefiting from its generative capabilities. The chatbot also incorporates a group chat feature, allowing students to connect with human mentors when needed or when the chatbot’s capabilities are exceeded. This hybrid approach combines the strengths of both AI and human guidance to provide comprehensive support. The researchers conducted a user study to evaluate the chatbot’s effectiveness, highlighting the potential benefits and limitations of using chatbots for conversational explainability in educational settings. This study serves as a proof-of-concept for future research and development in this area.

Heller et al. (2005) explored the use of “Freudbot,” an AIML-based chatbot emulating Sigmund Freud, to investigate whether a chatbot modeled on a famous person could enhance student engagement with course content in a distance education setting [164]. The study involved 53 psychology students who interacted with Freudbot for 10 minutes and then completed a questionnaire assessing their experience. While student evaluations of the chat experience were neutral, participants expressed enthusiasm for chatbot technology in education and provided insights for future improvement. An analysis of chat logs revealed high levels of on-task behavior, suggesting the potential for chatbots as effective learning tools in online and distance education environments.

6.1.6. Language Learning Assistant

The challenge in the design of language learning chatbots is providing correct grammar explanations. By integrating the human-in-the-loop approach, language experts are able to provide direct feedback and corrections [163]. This human oversight, combined with continuous user interaction data, allows the chatbot to refine its grammar teaching techniques, becoming a more effective learning tool [165].

As an example, during the COVID-19 pandemic, an innovative chatbot was developed to support and motivate second language learners, leveraging Dialogflow for its construction [154]. Designed to complement in-class learning with active, out-of-class interactions, this chatbot adapts to each learner’s unique abilities and learning pace, offering personalized instruction. A significant aspect of its design is the capability to learn from interactions, particularly addressing and correcting language mistakes. By engaging in chats, the chatbot identifies areas of difficulty for learners, adjusting its instructional approach accordingly. Hosted on a language center’s Facebook page, it provides a familiar and accessible learning environment, facilitating 24/7 language practice. This chatbot exemplifies how AI can enhance language learning by adapting to individual learning needs and continuously improving its instructional methods based on learner feedback and errors.

Another example is “Ellie”, a second language (L2) learning chatbot that leverages voice recognition and rule-based response mechanisms to support language acquisition [166]. Developed with a focus on user-centered design, Ellie offers three interactive modes to cater to diverse learning needs. Its use of Dialogflow for NLP enables it to understand and respond to complex queries. The key to Ellie’s design is its capacity for iterative improvement; by analyzing user interactions and feedback, it continuously refines its responses. Piloted among Korean high school students, Ellie demonstrated its potential as an effective educational tool, underscoring the importance of adaptability and personalized learning in language education.

6.2. Strategy Analysis and Outcomes

In examining the case studies of chatbots that have effectively learned from their mistakes, it becomes evident that their success depends on the strategic application of specific error correction methodologies. This analysis dissects the strategies employed and evaluates their outcomes, providing a comprehensive understanding of what works in practical settings.

6.2.1. Feedback Loops and User Engagement