The Eye in the Sky—A Method to Obtain On-Field Locations of Australian Rules Football Athletes

Abstract

1. Introduction

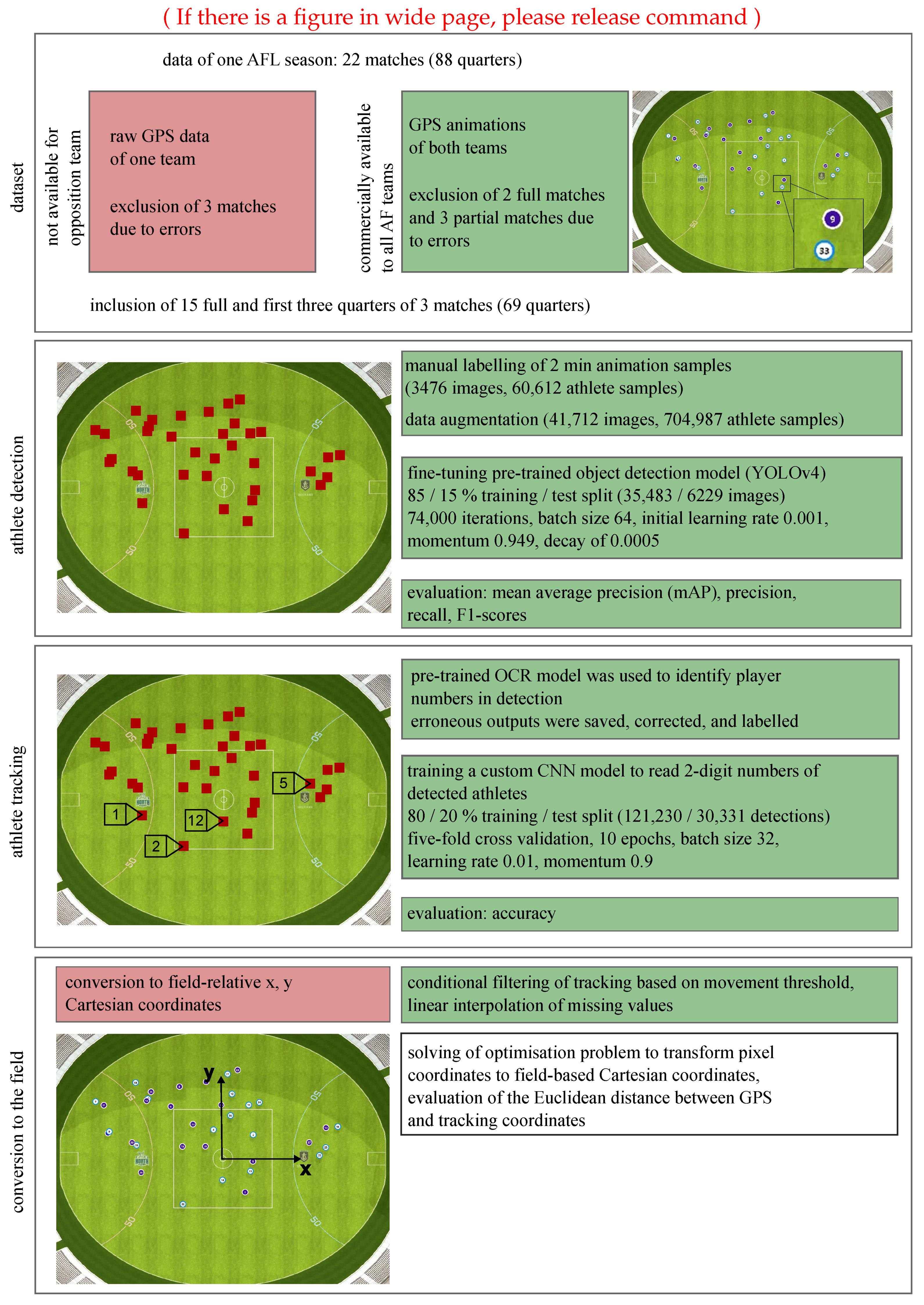

2. Materials and Methods

2.1. Athlete Detection

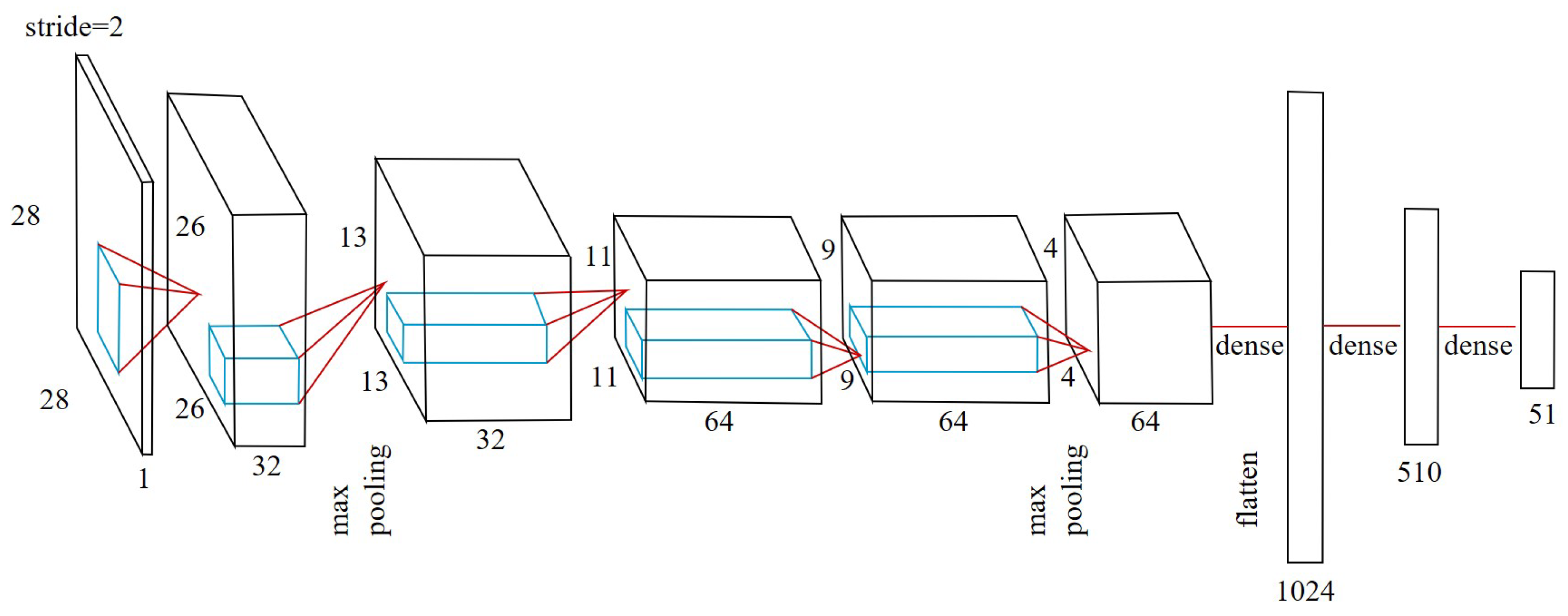

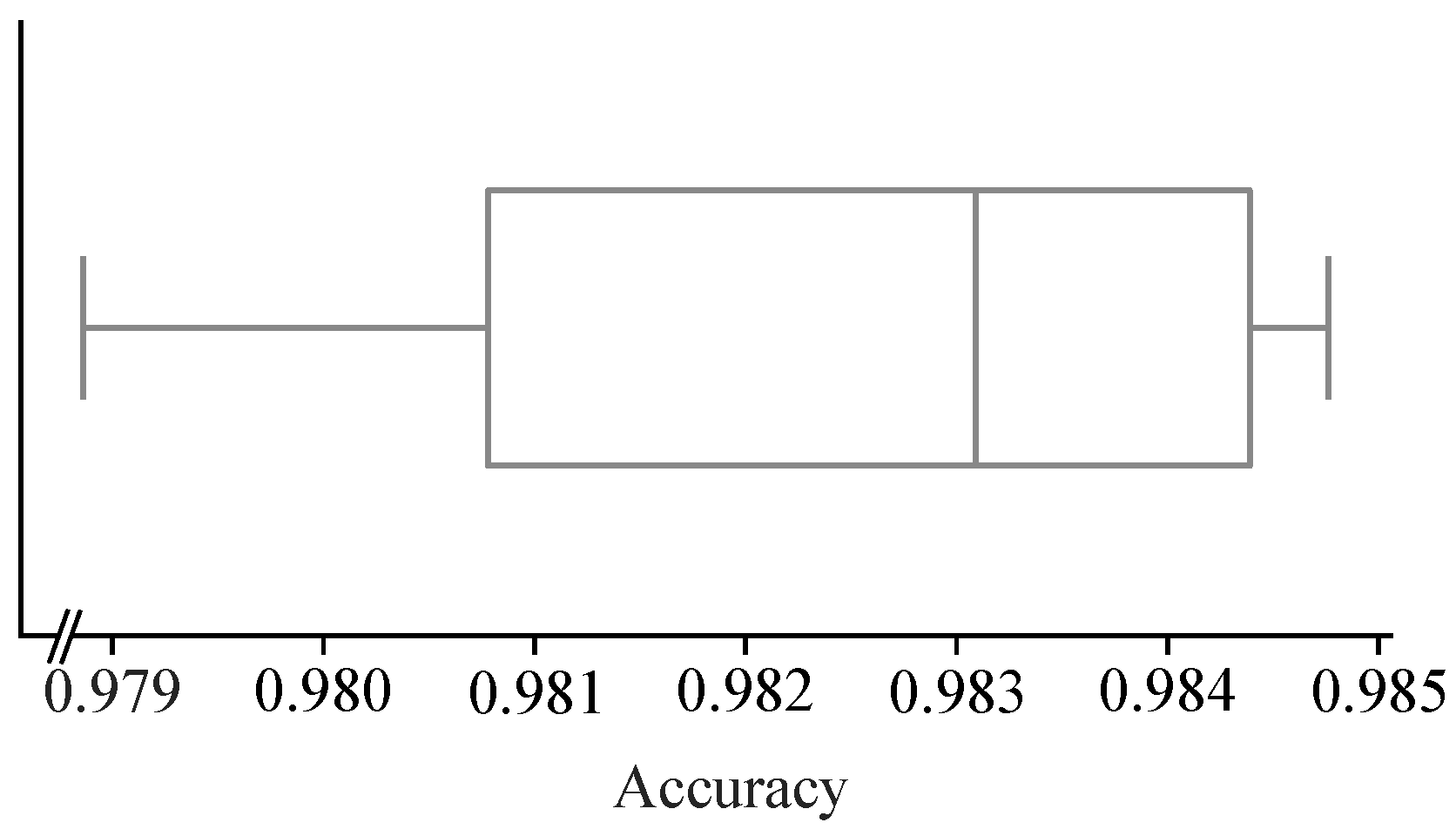

2.2. Athlete Tracking

2.3. Conversion to the Field

2.3.1. GPS Data

2.3.2. Animation Data

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Conversion coefficients m and c (mean ± standard deviation) for the u and v pixel axes at each stadium.

| Stadium (No. Matches) | Pixel Axis | m Coefficient (Mean ± SD) | c Coefficient (Mean ± SD) |
|---|---|---|---|
| Optus Stadium (10) | u | 0.15 ± 4.32 × 10⁻³ | −160.02 ± 3.99 |
| | v | 0.13 ± 3.46 × 10⁻³ | −69.36 ± 2.04 |
| Metricon Stadium (1) | u | 0.13 ± 7.85 × 10⁻⁴ | −129.01 ± 0.62 |
| | v | 0.15 ± 3.76 × 10⁻⁴ | −81.69 ± 0.51 |
| Adelaide Oval (2) | u | 0.12 ± 3.60 × 10⁻⁴ | −117.23 ± 0.40 |
| | v | 0.15 ± 3.96 × 10⁻⁴ | −84.85 ± 0.38 |
| Melbourne Cricket Ground (1.75) | u | 0.18 ± 3.13 × 10⁻⁴ | −172.77 ± 0.24 |
| | v | 0.14 ± 6.11 × 10⁻⁴ | −81.65 ± 0.43 |
| University of Tasmania Stadium (1) | u | 0.14 ± 6.42 × 10⁻⁴ | −131.02 ± 0.52 |
| | v | 0.18 ± 2.22 × 10⁻³ | −101.01 ± 0.91 |
| Manuka Oval (0.75) | u | 0.14 ± 1.17 × 10⁻⁴ | −132.54 ± 0.22 |
| | v | 0.17 ± 1.07 × 10⁻³ | −95.14 ± 0.29 |
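The coefficients above come in (m, c) pairs per pixel axis, which suggests a slope and an intercept. The following minimal Python sketch assumes that each pixel axis maps independently to one field axis through field = m · pixel + c. The model form and the output units (presumably metres) are our assumptions; the table states neither. The sketch applies the tabulated mean coefficients and shows how such a pair could be estimated by ordinary least squares from paired samples. The names `COEFFS`, `pixel_to_field` and `fit_axis` are hypothetical, and the source may have estimated its coefficients differently.

```python
# Mean (m, c) coefficients transcribed from the table: stadium -> axis -> (m, c).
# ASSUMPTION: each pixel axis maps independently to one field axis via
# field = m * pixel + c; the table does not state the model form or output units.
COEFFS = {
    "Optus Stadium": {"u": (0.15, -160.02), "v": (0.13, -69.36)},
    "Metricon Stadium": {"u": (0.13, -129.01), "v": (0.15, -81.69)},
    "Adelaide Oval": {"u": (0.12, -117.23), "v": (0.15, -84.85)},
    "Melbourne Cricket Ground": {"u": (0.18, -172.77), "v": (0.14, -81.65)},
    "University of Tasmania Stadium": {"u": (0.14, -131.02), "v": (0.18, -101.01)},
    "Manuka Oval": {"u": (0.14, -132.54), "v": (0.17, -95.14)},
}


def pixel_to_field(stadium: str, u: float, v: float) -> tuple[float, float]:
    """Convert a pixel location (u, v) to field coordinates (hypothetical helper)."""
    m_u, c_u = COEFFS[stadium]["u"]
    m_v, c_v = COEFFS[stadium]["v"]
    return m_u * u + c_u, m_v * v + c_v


def fit_axis(pixels: list[float], field: list[float]) -> tuple[float, float]:
    """Estimate (m, c) for one axis by ordinary least squares (hypothetical helper).

    `pixels` and `field` are paired samples, e.g. an athlete's pixel coordinate and a
    reference field coordinate (such as one derived from GPS) at the same instant.
    """
    if len(pixels) != len(field) or len(pixels) < 2:
        raise ValueError("need at least two paired samples")
    n = len(pixels)
    p_bar = sum(pixels) / n
    f_bar = sum(field) / n
    sxx = sum((p - p_bar) ** 2 for p in pixels)
    if sxx == 0:
        raise ValueError("pixel values must not all be equal")
    sxy = sum((p - p_bar) * (f - f_bar) for p, f in zip(pixels, field))
    m = sxy / sxx  # slope: field units per pixel
    return m, f_bar - m * p_bar  # intercept follows from the sample means
```

For example, `pixel_to_field("Optus Stadium", 1000, 500)` returns approximately (−10.02, −4.36). The small standard deviations of m in the table suggest that, under this assumed model, a single mean coefficient pair per stadium may be adequate for conversion.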

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Born, Z.; Mundt, M.; Mian, A.; Weber, J.; Alderson, J. The Eye in the Sky—A Method to Obtain On-Field Locations of Australian Rules Football Athletes. AI 2024, 5, 733-745. https://doi.org/10.3390/ai5020038
