Abstract

Situational awareness (SA) is defined as the perception of entities in the environment, comprehension of their meaning, and projection of their status in the near future. From an Air Force perspective, SA refers to the capability to comprehend and project the current and future disposition of red and blue aircraft and surface threats within an airspace. In this article, we propose a model for SA and dynamic decision-making that incorporates artificial intelligence and dynamic data-driven application systems to adapt measurements and resources in accordance with changing situations. We discuss the measurement of SA and the challenges associated with quantifying it. We then elaborate on techniques and technologies that help improve SA, ranging from different modes of intelligence gathering to artificial intelligence and automated vision systems. We then present different application domains of SA, including the battlefield, gray zone warfare, military and air bases, homeland security and defense, and critical infrastructure. Finally, we conclude the article with insights into the future of SA.

1. Introduction

Situational awareness (SA) can be defined as the cognizance of entities in the environment, understanding of their meaning, and the projection of their status in the near future. From an Air Force perspective, SA refers to the capability to comprehend the current and future disposition of red and blue aircraft and surface threats within a volume of space. Endsley’s model of SA [1], which comprises three distinct stages or levels (perception, comprehension, and projection), has been widely adopted. The U.S. Department of Defense (DOD) dictionary of military and associated terms defines space SA as: “The requisite foundational, current, and predictive knowledge and characterization of space objects and the operational environment upon which space operations depend” [2]. SA is often considered to encompass assessment (machine), awareness (user), and understanding (user-machine teaming) [3].

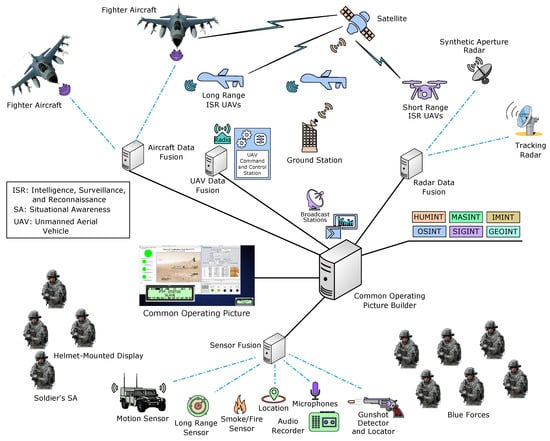

Although SA is needed in many domains, such as emergency and/or disaster response, industrial process control, and infrastructure monitoring, SA is particularly important for the military and Air Force. Figure 1 depicts an overview of SA from a military and Air Force perspective. SA is an integral part of military command and control (C2). The U.S. DOD dictionary of military and associated terms defines C2 as: “The exercise of authority and direction by a properly designated commander over assigned and attached forces in the accomplishment of the mission” [2]. C2 can be regarded as comprising SA, planning, tasking, and control. C2 system design aims to present the situation to the commander selectively, in a way that helps the commander comprehend the situation and then take the best possible action. SA is imperative not only for commanders but also for dismounted operators. To engage efficiently, dismounted operators must not only obtain and comprehend information regarding their environment but also utilize this information to predict events in the near future, and thus plan and adapt their actions accordingly.

Figure 1.

Overview of situational awareness.

SA is imperative for the Air Force and is regarded as the decisive factor in air combat engagements [4]. Survival in a dogfight relies heavily on SA, as it depends on observing the enemy aircraft’s current move and predicting its future action a fraction of a second before the enemy can observe and anticipate one’s own. SA can also be viewed as equivalent to the “observe” and “orient” phases of the observe-orient-decide-act (OODA) loop described by the United States Air Force (USAF) war theorist Colonel John Boyd [5]. The winning strategy in combat is to get inside the opponent’s OODA loop by having better SA than the opponent, and thus not only making one’s own decisions more quickly than the opponent but also potentially changing the situation in a way that the opponent cannot observe or comprehend in the given time. Losing one’s SA is tantamount to being out of the OODA loop. Since pilots deal with many arduous situations, such as higher levels of aviation traffic, inclement weather (e.g., storms, fog), and, recently, unmanned aerial vehicles (UAVs) in the airspace, they need to be equipped with an advanced SA system to cope with these adverse conditions. This article discusses SA from a military and Air Force standpoint. Our main contributions in this article are as follows:

- We define and elaborate SA from a military and Air Force perspective.

- We present a model for SA and dynamic decision-making.

- We discuss measurement of SA, identify metrics for SA assessment, and outline the challenges associated with the quantification of SA.

- We discuss different techniques and technologies that help improve SA.

- We provide an overview of different application domains of SA including battlefield, urban warfare, gray zone warfare, homeland security and defense, disaster response management, and critical infrastructure.

The remainder of this article is organized as follows. Section 2 presents a model for SA and associated decision-making. Section 3 discusses measurement methods of SA and the challenges associated with measuring SA. The techniques and technologies to improve SA are elaborated in Section 4. Section 5 provides an overview of different application domains of SA. Finally, Section 6 concludes this article.

2. SA and Decision Model

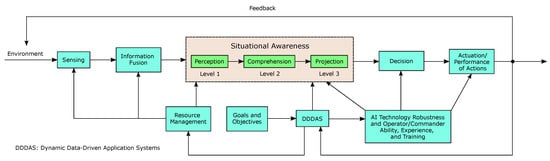

In this section, we present and discuss a model for SA and associated decision-making. Figure 2 depicts our SA and dynamic decision-making model, which is inspired by Endsley’s widely adopted model of SA [6]. The model has an SA core, with sensing and decision-making elements built around it. A multitude of sensors sense the environment to acquire its state. The sensed information is fused to remove redundancies in the sensed data, such as multiple similar views captured by different cameras or quantities sensed by different sensors in close proximity, and also to overcome the shortcomings of data acquired from a single source, such as occlusions, changes in ambient lighting conditions, and/or chaotic elements in the environment. The fused data is then passed to the SA core, which comprises three levels or stages [7].

Figure 2.

Model of SA and dynamic decision-making.

Perception—Level 1 SA: The first stage of attaining SA is the perception of the status, attributes, and dynamics of the entities in the surroundings. For instance, a pilot needs to discern important entities in the environment such as other aircraft, terrain, and warning lights along with their pertinent characteristics.

Comprehension—Level 2 SA: The second stage of SA is the comprehension of the situation, which is based on the integration of disconnected level 1 SA elements. The level 2 SA is a step further than just being aware of elements in the environment as it deals with developing an understanding of the significance of those elements in relation to an operator’s objectives. Concisely, we can state the second stage of SA as understanding of entities in the surroundings, in particular when integrated together, in connection to the operator’s objectives. For instance, a pilot must understand the significance of the perceived elements in relation to each other. An amateur operator may be able to attain the same level 1 SA as more experienced ones, but may flounder to assimilate the perceived elements along with relevant goals to comprehend the situation fully (level 2 SA).

Projection—Level 3 SA: The third level of SA relates to the ability to project the future actions of entities in the environment at least in the near term. This projection is achieved based on the cognizance of status and dynamics of elements in the environment and comprehension of the situation. Succinctly, we can state the third level of SA as prediction or estimation of the status of entities in the surroundings in future, at least in the near future. For example, from the perceived and comprehended information, the experienced pilots/operators predict possible future events (level 3 SA), which provides them knowledge and time to determine the most befitting course of action to achieve their objectives [1].

As shown in Figure 2, the SA core also receives input from the commanders at strategic or operational levels regarding goals or objectives of SA. Our model enhances the SA model from Endsley [7] for perception, comprehension, and projection by adding support for artificial intelligence (AI) assisted decision-making and resource management. Perception is addressed through the standard information fusion and resource management loop. Moreover, to better manage the resources according to changing situations, a dynamic data-driven application systems (DDDAS) module provides input to a resource management module, which manages the sensors sensing the environment and the computing resources in the SA core. DDDAS is a paradigm in which the computation and instrumentation facets of an application system are dynamically assimilated in a feedback control loop such that the instrumentation data can be dynamically fused into the executing model of the application, while the executing model can in turn control the instrumentation [8].

In Figure 2, the DDDAS module helps steer the information fusion process of measurement instrumentation and data augmentation from physical knowledge for improved SA of the entities of interest in the environment. The DDDAS module can help guide and reconfigure sensors to increase the information content of the sensed data for enhancing SA of the activities of interest in the environment [8]. The DDDAS module also helps adapt computing resources by matching the expected entropies of localized information content, and accordingly directs more computation resources to entities that have resulted in high information entropies. For example, in a surveillance application, the existence of objects of interest in a particular locality would require high-resolution sensing and prioritized computations to enable detection and tracking of those objects in real time, whereas areas with no activity of interest would accept coarser sensing and computations without a noticeable deterioration in SA. One example of DDDAS data augmentation with physical knowledge is target tracking that uses road constraints as knowledge [8,9]. Hence, the DDDAS paradigm with the augmented data provides additional opportunities for leveraging deep learning to complement traditional methods of information fusion for various applications of SA, such as autonomous vehicle tracking with GPS data [10], traffic surveillance with image data [11], and transportation scheduling [12].
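The entropy-driven allocation described above can be illustrated with a minimal sketch. It is not the DDDAS module itself: it assumes each locality is summarized by a discrete probability distribution over what the sensors might be observing, and it assumes a simple proportional rule for splitting a compute budget. The names `shannon_entropy`, `allocate_compute`, and the region labels are hypothetical.

```python
import math


def shannon_entropy(probabilities):
    """Shannon entropy (in bits) of a discrete probability distribution."""
    return 0.0 - sum(p * math.log2(p) for p in probabilities if p > 0)


def allocate_compute(region_distributions, total_budget):
    """Split total_budget across regions in proportion to their entropy.

    Assumption: a region whose content is more uncertain (higher entropy)
    deserves more sensing and computation; regions with no uncertainty get none.
    """
    entropies = {name: shannon_entropy(dist)
                 for name, dist in region_distributions.items()}
    total_entropy = sum(entropies.values())
    if total_entropy == 0:
        # Nothing is uncertain anywhere: fall back to an even split.
        even_share = total_budget / len(entropies)
        return {name: even_share for name in entropies}
    return {name: total_budget * h / total_entropy
            for name, h in entropies.items()}


# Hypothetical surveillance scene with three localities.
regions = {
    "gate": [0.5, 0.5],                 # two equally likely hypotheses: 1 bit
    "runway": [0.25, 0.25, 0.25, 0.25],  # four equally likely hypotheses: 2 bits
    "field": [1.0],                     # no activity of interest: 0 bits
}
allocation = allocate_compute(regions, total_budget=90.0)
print(allocation)
```

In this toy scene the runway receives twice the budget of the gate and the empty field receives none, which mirrors the coarser sensing the text allows for areas with no activity of interest.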

Owing to recent advancements, AI has become an integral part of the SA core and dynamic decision-making. AI assists operators/pilots in comprehending the situation (level 2 SA) and then making projections about the future actions of entities in the environment (level 3 SA). Thus, both the robustness of the AI models and the operators’ ability, experience, and training determine the level of comprehension acquired by the operators and the accuracy of future projections. Based on the acquired comprehension and projection, the AI models recommend decisions to the commanders, who then make the appropriate decisions taking into account the input from the AI and the assessed situation. Finally, the decisions are implemented at the tactical level by the operators. The decisions to be implemented span a vast range, including, for example, the positioning of personnel and equipment, firing of weapons, medical evacuation, and logistics support.

3. Measuring Situational Awareness

A fundamental question that arises in designing SA systems is whether the system under design is better than some alternative system in promoting SA. To answer that question, a designer needs a means of evaluating the concepts and technologies that are being developed for enhancing SA. The SA measurement methodology should encompass a multitude of system design concepts, including [13]: (i) display symbolism, (ii) advanced display concepts such as 3-D displays, voice control, flat panel displays, heads-up displays (HUD), and helmet-mounted displays (HMD), (iii) electronics, avionics, and sensing concepts, (iv) information fusion concepts, (v) automation, (vi) integrity, (vii) trustworthiness, and (viii) training approaches. Some of the metrics for SA assessment include: (i) timeliness, (ii) accuracy, (iii) trust, (iv) credibility (can be described by a confusion matrix of probability of detection and probability of false alarm), (v) availability (of information and system), (vi) workload, (vii) cost, (viii) attention, (ix) performance (success in accomplishing the mission; can also be used to assess decisions taken), and (x) scope (local versus global or single-intelligence (single-INT) versus multi-intelligence (multi-INT)).

Uhlarik and Comerford [14] describe the types of constructs that are relevant when assessing the validity of SA measurement approaches:

- Face validity: the degree to which an SA measurement approach appears to measure SA as adjudicated by a subject matter expert.

- Construct validity: the extent to which an SA measurement approach is supported by sound theory or model of SA.

- Predictive validity: the degree to which an SA measurement approach can predict SA.

- Concurrent validity: the extent to which an SA measurement approach correlates with other measures of SA.

3.1. SA Assessment Techniques

Nguyen et al. [15] have presented a detailed review of SA assessment approaches. SA assessment techniques utilize different types of probes to measure SA. These probes include [15]:

Freeze-Probe Techniques: In freeze-probe techniques, the task performed by a subject (pilot) is frozen at random points during flight simulator training. All displays are blanked and a set of queries is presented to the subject. The subject answers the queries based on his/her knowledge and comprehension of the environment at the frozen point. The subject’s responses are recorded and compared against the actual state of the environment at that point to provide an SA score.

Real-Time Probe Techniques: In real-time probe techniques, the queries are presented to the subject at relevant points during task execution without freezing the task. The responses of the subject to the queries along with response time are recorded to determine the SA score.

Post-Trial Self-Rating Techniques: Each subject provides a subjective assessment of his/her own SA on a rating scale after task execution. Since the self-rating is carried out post-trial, self-rating techniques are swift and easy to use, but they suffer from high subjectivity that depends on the subject’s task/mission performance and may not accurately reflect the SA that was actually attained during the mission, because humans often exhibit poor recall of past mental events [15].

Observer-Rating Techniques: Subject matter experts (SMEs) provide an SA rating by observing the subject’s behavior and performance during task execution. These techniques are non-intrusive; however, the SA rating assigned by an observer is ambiguous, as it is not feasible to accurately observe the internal process of SA.

Performance-based Rating Techniques: The SA rating is assigned based on the performance of a subject during a mission/task. Several characteristics of performance during the events of a task are recorded and analyzed to determine SA rating. Performance-based ratings have a drawback as the techniques assume that a subject’s efficient performance corresponds to a good SA, which is not necessarily true because performance also depends on the subject’s experience and skill.

Process Indices-based Rating Techniques: Process indices-based SA rating techniques record, analyze, and rate certain processes that a subject follows to determine SA during task execution. Observing a subject’s eye movements during task execution is one example of a process index. Process indices-based ratings have a drawback: an eye-tracking device may indicate that a subject is focusing on a certain element of the environment even though the subject does not actually perceive that element or the situation.

In this section, we discuss a few of the commonly used SA measurement techniques, viz.: (i) NASA task load index (TLX) [16], (ii) SA global assessment technique (SAGAT) [13], (iii) SA rating technique (SART) [17], and (iv) critical decision method (CDM) [18]. Table 1 provides a comparison of different SA measurement techniques based on the assessment metrics discussed earlier. The techniques are discussed in the following.

Table 1.

Comparison of SA assessment techniques.

3.1.1. NASA TLX

Workload is a cardinal factor in any user’s operations, as there is an optimal point of balance between the mission requirements and the time allotted to carry out various tasks. Hart and Staveland developed the TLX in 1988 [16]. NASA TLX utilizes post-trial self-rating probing techniques. The TLX provides a subjective report from the user regarding SA metrics, which is typically utilized to estimate workload. The user’s answers to the questions on a rating scale give a relative assessment of the mental, physical, and temporal demands of a task. NASA TLX focuses on the timeliness, workload, and performance metrics of SA assessment.

3.1.2. SAGAT

The SAGAT [13] has been proposed as a method for measuring a pilot’s SA. The SAGAT is named as such because the technique distinguishes local SA from global SA. The SAGAT utilizes freeze-probe techniques to determine an SA rating. In SAGAT, a pilot flies a mission scenario using a given aircraft system in a man-in-the-loop simulation. At some random point in time, the simulation is halted and the pilot is given a questionnaire to determine his/her knowledge of the situation at that moment. Since it is infeasible to ask the pilot all the questions regarding his/her SA in one stop, a randomly selected subset of the SA queries is put to the pilot at each stop. This random sampling process is repeated a number of times for several pilots flying the same mission for statistical significance. The random sampling method permits consistency and statistical validity, which enables SA scores across different trials, pilots, systems, and missions to be compared. At the completion of the trial, the query answers are examined against what was actually happening in the simulation. The comparison of the perceived and actual situation provides a measure of the pilot’s SA.
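The freeze-probe procedure above (halt, ask a random subset of queries, compare answers with the simulator state, repeat) can be sketched in a few lines. This is an illustrative simplification rather than the SAGAT scoring procedure itself: it assumes answers are scored by exact match, and the query names, the `score_freeze` and `freeze_probe_score` helpers, and the fixed random seed are all hypothetical.

```python
import random


def score_freeze(asked, ground_truth, answers):
    """Fraction of the asked queries answered in agreement with the simulator."""
    correct = sum(1 for query in asked if answers.get(query) == ground_truth[query])
    return correct / len(asked)


def freeze_probe_score(freezes, n_queries, seed=0):
    """Average SA score over several freezes.

    freezes: list of (ground_truth, answers) dict pairs, one pair per halt.
    At each halt only a random subset of n_queries queries is asked.
    """
    rng = random.Random(seed)  # seeded so that a run can be reproduced
    scores = []
    for ground_truth, answers in freezes:
        asked = rng.sample(sorted(ground_truth), n_queries)
        scores.append(score_freeze(asked, ground_truth, answers))
    return sum(scores) / len(scores)


# Hypothetical simulator state at one freeze and two pilots' answers.
truth = {
    "bogey_count": 2,
    "own_altitude_band": "high",
    "nearest_threat_bearing": "north",
    "fuel_state": "ok",
}
perfect_pilot = dict(truth)
silent_pilot = {}

print(freeze_probe_score([(truth, perfect_pilot)], n_queries=2))
print(freeze_probe_score([(truth, silent_pilot)], n_queries=2))
```

Averaging over many freezes and several pilots, as the text describes, is what makes scores comparable across trials; a single freeze with two queries is far too small a sample for that.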

The SAGAT score is characterized for three zones: immediate, intermediate, and long range. The SAGAT is also able to assess displays’ symbolisms and conceptions. Certain displays capture local SA only whereas other displays present global SA. SAGAT would determine whether the user/pilot was fixated on a single intelligence product or was taking advantage of the fusion of information from multi-intelligence displays for answering the queries from the probes. An example is single target tracking or group tracking from imagery intelligence. Single target tracking from full motion video (FMV) might not capture the global situation information that can be acquired from higher altitude viewpoints such as wide area motion imagery (WAMI). The SAGAT focuses on accuracy, credibility, cost, and performance metrics of SA assessment.

The main limitation of SAGAT is that the simulation must be halted to obtain the pilot’s perceived SA data. Furthermore, SAGAT was developed for air-to-air fighter missions, and no SA measurement tools exist for evaluating SA as a global construct that encompasses air-to-air, air-to-ground, and air-to-sea scenarios.

3.1.3. SART

The SART questionnaire was developed by Taylor in 1990 [17]. The SART utilizes post-trial self-rating probing techniques. The SART provides a subjective opinion of a pilot’s/user’s attention or awareness during performance. The SART questionnaire encompasses elements from situation stability/instability, situation complexity, situation variability, alertness to the situation, attention concentration, attention division, spare mental capacity, information quantity, and situation familiarity. The metrics of trust, credibility, workload, attention, and performance can be assessed from the SART.

We note that attention, workload, and trust assessed from the SART can evolve as the user becomes acquainted with the tools utilized for the mission. Attention division in the SART relates to single-intelligence (single-INT) diagnostics versus combined multi-intelligence (multi-INT) presentation. To acquire multi-INT, an SA system should not require a user to switch between single INTs, as the switching can increase workload, require additional concentration, and even create instability or misperceptions. Multi-INT fusion displays are preferred for enhanced SA because they can reduce data overload, increase attention, and support comprehension. The elements most relevant to workload assessment in the SART are spare mental capacity and information quantity. The spare mental capacity questionnaire examines whether the user could focus only on a single event/variable or could track multiple events/variables utilizing multi-INT presentation. The information quantity questionnaire checks the amount of information gained by the user using the SA system. This questionnaire also inspects whether the user is provided with timely and actionable data when and where needed. The quantity of information should include the pertinent information and provide knowledge of the situation. Information quantity comprises the types, quality, and relevance of the data presented to the user, as excessive data can overburden the user and add confusion to the situation analysis. Finally, the user’s trust in an SA system can be evaluated from the overall responses that capture the extent of information acquired regarding the situation by utilizing the SA system.

3.1.4. CDM

The CDM was developed by O’Hare et al. in 1998 [18]. The CDM utilizes post-trial self-rating probing techniques. The CDM has been used to determine what decision points were used during the performance. The CDM questionnaire incorporates elements regarding goal specification, cue identification, decision expectation, decision confidence, information reliability, information integration, information availability, information completeness, decision alternatives, decision blocking, decision rule, and decision analogy. The goal specification probe checks the specific goals of the user at various decision points. The cue identification probe examines the features a user was looking for when formulating the decision. The decision expectation probe checks whether the decision that was made was an expected decision during the course of the event/mission. The decision confidence probe assesses the confidence in the decision made and whether the decision could have been different in some altered situation. The information reliability probe analyzes whether the user was dubious of the reliability or the relevance of the available information. The information integration probe checks whether the available information was presented in an integrated manner and whether important entities, relations between those entities, and events were highlighted. The information availability probe verifies the availability of adequate information at the time of decision. The information completeness probe analyzes whether the information presented to the user was complete at the time of decision and whether any additional information would have assisted the user in formulating the decision. The decision alternatives probe examines whether any alternative decisions were possible or under consideration other than the decision that was made. The decision blocking probe examines whether, at any stage during the decision-making process, the user found it difficult to process and/or integrate the available information. The decision rule probe examines whether a user could develop a rule based on his/her experience that could assist another person to make the same decision successfully. Finally, the decision analogy probe analyzes whether the user could relate the decision-making process to previous experiences in which a similar/different decision was made. The metrics of timeliness, accuracy, trust, credibility, availability, and performance can be assessed from the CDM.

3.2. Challenges in Measuring SA

SA can be measured by empirically assessing an operator’s performance via quantifiable metrics, such as time and accuracy, and comparing these to the results of similar tests without SA assistance. Nevertheless, little work exists on quantifying SA [9,19]. Most cockpit designers rely on speculation to estimate the SA provided by alternative designs. Most of the existing methods for measuring SA suffer from various shortcomings, including but not limited to:

Subjectivity: A technique for measuring SA can ask a commander, operator, and/or pilot to rate his/her SA (e.g., on a scale from 1 to 10). This approach has severe limitations, as the subject may not be aware of what is actually going on in the environment and his/her ability to rate his/her own SA is subjective [20]. Additionally, a subject’s rating may be influenced by the outcome of the mission: when the mission is successful, whether through a fluke or good SA, the subject will most likely report good SA, and vice versa. Furthermore, if the assessment is carried out by different pilots, operators, or commanders, there can be marked differences in the SA assessment of a given system.

Physiology: It is challenging to obtain an exact picture of a subject’s mind to estimate SA at all times. The P300 (P3) and electroencephalography (EEG) measurements show promise for measuring cognitive functions but do not determine the subject’s comprehension of elements in the environment. Biological measurements serve as an indirect measure of “stress” and can be used alongside performance measures; however, the correspondence between physiological measurements and mental performance is still not well understood [21].

Surveying: A detailed questionnaire can be administered to the subjects regarding the SA they obtained through an SA system. However, people’s recall is affected by the amount of time and the intermediate events between the activities of interest and the administration of the questionnaire [13,20]. This deficiency can be overcome by asking subjects about their SA while they are using the SA simulator. However, this approach also has drawbacks. First, in a situation of interest, the subject is under a heavy workload, which may prevent him/her from answering the questions. Second, the questions can cue the subject to attend to the requested information on the display, thus changing his/her true SA.

Limitedness: Many methods aim to evaluate a single design issue at a time [19]. In such a situation, a subject may unintentionally bias his/her attention toward the issue under evaluation, which misrepresents the impact of that issue on the subject’s SA. Since SA by its nature is a global construct, a global measure of SA is required if designers are to sufficiently address the subject’s (e.g., commander, operator, pilot) SA needs.

Coverage: Table 1 indicates that contemporary SA measurement techniques typically focus on a few assessment metrics. For example, NASA TLX focuses on timeliness and workload; SAGAT emphasizes accuracy, credibility, and cost; SART targets throughput; and CDM focuses on credibility. Hence, the challenge is to develop SA assessment techniques that cover more metrics than the existing measurement techniques.

3.3. SA Metrics

Various metrics have been developed to evaluate the worthiness of an SA system. These metrics can be classified into five categories or dimensions [19]: (i) confidence, (ii) accuracy/purity, (iii) timeliness, (iv) throughput, and (v) cost. Table 2 summarizes the metrics for SA systems.

Table 2.

Situational awareness system metrics.

Confidence is a measure of how well the system detects the true activities and is typically reported as a probability. There are three metrics that are used to quantify confidence: (a) precision, (b) recall, and (c) fragmentation.

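Precision is the percentage of correct detections/predictions the SA system makes in relation to the total number of detected activities. Precision can be given as:

$$\text{Precision} = \frac{\text{true detections}}{\text{true detections} + \text{false detections}}$$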

where true detections mean that activities are detected and are true or correct detections, and false detections mean that activities are detected, but are incorrect detections/predictions.

Recall is the percentage of activities correctly identified by the SA system in relation to the total number of known activities as defined by the ground truth. Recall can be given as:

$$\text{Recall} = \frac{\text{true detections}}{\text{true detections} + \text{false negatives}}$$

where false negatives mean that activities are predicted as negative but are actually positives or alternatively ground truth activities are not detected correctly.

Fragmentation is the percentage of activities reported as multiple activities that should have been reported as a single activity. For example, in an island-hopping attack, a targeted computer is compromised, which is then used to launch attacks on other computers. For correct detection of this attack, all instances when a target becomes an attacker should be included in the same track as that of the original attacker through the island. Many times a fusion engine in an SA system will not be able to correctly associate the subsequent evidence with the original attack, and thus report the attacks as two or more attack tracks. We note that an attack track refers to the evidence (i.e., events generated from raw sensor data) from multiple data streams that is fused together to identify a potential attack [22]. Fragmentation gives the semblance of a false positive, because an SA system with fragmentation identifies an existing activity as a new activity rather than associating that existing activity with a complex activity. Fragmentation can be given as:
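A form consistent with this definition is sketched here. Taking the total number of reported activity tracks as the denominator is an assumption, since the definition fixes only the numerator:

$$\text{Fragmentation} = \frac{\text{number of reported tracks that are fragments of an existing activity}}{\text{total number of reported tracks}}$$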

Accuracy/purity refers to the quality of the predicted/detected activities, that is, if the observations were correctly matched and associated to the right activity track. Two metrics are used to quantify purity: (a) misassignment rate, and (b) evidence recall. Misassignment rate is defined as the percentage of evidence or observations that are incorrectly assigned to a given activity. Misassignment rate can be given as:
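Read directly from the definition, and assuming the percentage is taken over all evidence assigned to the activity track in question, one way to write it is:

$$\text{Misassignment rate} = \frac{\text{evidence incorrectly assigned to the activity}}{\text{total evidence assigned to the activity}}$$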

Misassignment rate helps to assess whether the SA system is assigning evidence to an activity track that is not relevant or whether the SA system is assigning only directly useful evidence to an activity track.

Evidence recall is the percentage of evidence or alerts detected in relation to the total known or ground truth events. Evidence recall can be given as:
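Following the wording of the definition, with the ground-truth events assumed to be the full set of evidence that could have been detected:

$$\text{Evidence recall} = \frac{\text{evidence detected}}{\text{total ground-truth evidence}}$$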

Evidence recall quantifies how much of the available evidence is truly being utilized.

It has been observed that in some SA domains, such as cyber SA systems, purity metrics have not proved very helpful. If the misassignment rate is high, it indicates an incorrect correlation or association of the underlying data; however, it does not indicate much about the quality of detected attacks [22]. Regarding evidence recall metric, intuitively one would think that the attack detection would be more accurate with high confidence (high precision and high recall) if more evidence is used; however, empirically no relation between the amount of evidence and quality of detections in cyber SA systems was observed by [22]. On the contrary, sometimes less evidence leads to better detections. This implies that there are only a few truly relevant events that connote attacks.
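The confidence and purity metrics defined in this section reduce to simple ratios of counts, which the following sketch computes. It is an illustration only: the function names and the example counts are hypothetical, and the denominators used for fragmentation and misassignment rate (all reported tracks, and all evidence assigned to a track) are assumptions, because the prose definitions state only what is being counted.

```python
def ratio(numerator, denominator):
    """Safe ratio that returns 0.0 when nothing was counted in the denominator."""
    return numerator / denominator if denominator else 0.0


def precision(true_detections, false_detections):
    # Correct detections relative to everything the system reported.
    return ratio(true_detections, true_detections + false_detections)


def recall(true_detections, false_negatives):
    # Correct detections relative to all activities in the ground truth.
    return ratio(true_detections, true_detections + false_negatives)


def fragmentation(fragment_tracks, reported_tracks):
    # Assumed denominator: all tracks the system reported.
    return ratio(fragment_tracks, reported_tracks)


def misassignment_rate(misassigned_evidence, assigned_evidence):
    # Assumed denominator: all evidence assigned to the activity track.
    return ratio(misassigned_evidence, assigned_evidence)


def evidence_recall(detected_evidence, ground_truth_evidence):
    return ratio(detected_evidence, ground_truth_evidence)


# Hypothetical counts from one evaluation run against ground truth.
metrics = {
    "precision": precision(true_detections=6, false_detections=2),
    "recall": recall(true_detections=6, false_negatives=2),
    "fragmentation": fragmentation(fragment_tracks=1, reported_tracks=8),
    "misassignment_rate": misassignment_rate(misassigned_evidence=5, assigned_evidence=40),
    "evidence_recall": evidence_recall(detected_evidence=40, ground_truth_evidence=100),
}
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Reporting all five side by side makes the observation above easy to check on one's own data: evidence recall can be low while precision and recall remain high.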

Timeliness assesses the ability of an SA system to respond within the time requirements of a particular SA domain. Timeliness is often measured through a delay metric. Delay typically refers to the time between the occurrence of an event and the corresponding alert issued by an SA system. The timeliness dimension is important for assessing whether an SA system not only identifies an activity quickly, but also leaves sufficient time to take an action (if needed) on that activity.

Throughput measures the amount of work (e.g., tasks, transactions) done per unit time. In the SA context, throughput refers to the number of events detected per unit time.
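The delay and throughput metrics described above can be sketched as follows. The event layout (pairs of occurrence and alert timestamps in seconds), the deadline parameter, and the function names are assumptions introduced for this example.

```python
def delays(events):
    """Delay of each alert: alert time minus event occurrence time (seconds)."""
    return [alerted - occurred for occurred, alerted in events]


def fraction_on_time(events, deadline_s):
    """Share of events whose alert arrived within the domain's time requirement."""
    d = delays(events)
    if not d:
        return 1.0
    return sum(1 for x in d if x <= deadline_s) / len(d)


def throughput(events, window_s):
    """Number of events detected per second over an observation window."""
    return len(events) / window_s


# (occurred_at, alerted_at) pairs observed during a 60-second window.
events = [(0.0, 1.5), (10.0, 12.0), (20.0, 26.0)]
print(delays(events))
print(fraction_on_time(events, deadline_s=5.0))
print(throughput(events, window_s=60.0))
```

With a 5-second requirement, two of the three alerts in this example are timely.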

Cost is often measured in terms of two metrics: (a) cost utility, and (b) weighted cost. Cost utility estimates the utility for the invested cost on an SA system. As limited resources are utilized on a given SA system, there is an “opportunity cost” of the best alternative activity forgone because of the investment on a given SA system. Weighted cost metric is intended to capture the usefulness of an SA system by considering the types of activities or attacks detected with a positive weight, while penalizing the system for false positives with a negative weight. Different weights are assigned to different categories of activities or attacks. Weighted cost is then the ratio of the sum of values (weights) assigned to the activities or attacks detected to the sum of values of the activity/attack tracks in the ground truth.
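A minimal sketch of the weighted cost ratio described above is given below. The category names, the weight values, and the single negative weight used for every false positive are hypothetical; the source only states that detections carry positive weights, false positives carry a negative weight, and the sum is divided by the total weight of the ground-truth tracks.

```python
def weighted_cost(detections, ground_truth, weights, false_positive_weight):
    """Ratio of the weighted value of detections to the weighted ground truth.

    detections: list of (category, is_correct) pairs reported by the SA system.
    ground_truth: list of categories of the true activity/attack tracks.
    """
    truth_total = sum(weights[category] for category in ground_truth)
    if truth_total == 0:
        raise ValueError("ground truth carries no weight")
    score = sum(
        weights[category] if is_correct else false_positive_weight
        for category, is_correct in detections
    )
    return score / truth_total


weights = {"scan": 1.0, "intrusion": 5.0, "exfiltration": 10.0}
ground_truth = ["scan", "intrusion", "exfiltration"]
detections = [("intrusion", True), ("exfiltration", True), ("scan", False)]
print(weighted_cost(detections, ground_truth, weights, false_positive_weight=-1.0))
```

In this example the system earns 5 + 10 for two correct detections, loses 1 for a false positive, and is scored against a ground-truth total of 16.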

4. Techniques and Technologies to Improve Situational Awareness

Improving SA has been at the forefront of military technological advancements, and the military has exploited various technological innovations to improve SA. While it is not possible to cover all techniques and technologies that have been utilized to improve SA, this section outlines a few of these technological advances that either are under consideration or have already been adopted by the military and/or Air Force to enhance SA.

4.1. Intelligence Gathering Modes

SA relies upon intelligence gathering from multiple sources, such as humans, signals, data (e.g., text, audio, video), and social media. To obtain better SA and to improve the accuracy of the acquired SA, intelligence from multiple sources is required to filter out the discrepancies reported by any particular intelligence source. Different sources of intelligence that help improve SA include human intelligence (HUMINT), open-source intelligence (OSINT), measurement and signature intelligence (MASINT), signals intelligence (SIGINT), imagery intelligence (IMINT), and geospatial intelligence (GEOINT).

4.2. Sensors and Sensor Networks

Technological advancements have led to the development of a multitude of sensors, many of which have found applications in surveillance and SA systems. Sensors that are often employed to enhance SA include location sensors, visible-light (red, green, blue (RGB)) camera sensors, night vision camera sensors, infrared image sensors, ultraviolet image sensors, motion sensors, proximity sensors, smoke/fire sensors, gunshot detectors and locators, long range sensors, and synthetic aperture radars. These sensors are connected together, often wirelessly, to provide surveillance coverage for a given area. Geolocation systems are an example of sensor-based systems that are frequently a part of surveillance and SA applications. Geolocation systems for the military aim to overcome multipath and provide a ranging accuracy of less than 1 m in open terrain and less than 2 m inside buildings. To attain these objectives, geolocation systems for military equipment rely on a variety of sensors. Many of the geolocation systems utilized for military equipment integrate measurements from complementary sensors, such as global positioning system (GPS), inertial measurement unit, time of arrival, barometric sensors, and magnetic compasses, to provide a fused solution that is more precise than any individual sensor.
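The claim that a fused solution can be more precise than any individual sensor can be illustrated with inverse-variance weighting, a standard textbook technique that is used here only as an example and is not necessarily the method employed by fielded geolocation systems. The sketch assumes independent, unbiased sensor errors with known variances; the numeric values are made up.

```python
def fuse(estimates):
    """Inverse-variance weighted fusion of independent scalar estimates.

    estimates: list of (value, variance) pairs, one per sensor.
    Returns the fused value and its variance.
    """
    weights = [1.0 / variance for _value, variance in estimates]
    total = sum(weights)
    fused_value = sum(w * value for w, (value, _var) in zip(weights, estimates)) / total
    return fused_value, 1.0 / total


# Two hypothetical range estimates in meters: (value, variance).
gps_range = (102.0, 4.0)
time_of_arrival_range = (100.0, 1.0)
value, variance = fuse([gps_range, time_of_arrival_range])
print(value, variance)
```

Under these assumptions the fused variance (0.8) is smaller than the variance of either input, and the fused value lies closer to the more reliable sensor.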

The data collected by a multitude of intelligence, surveillance, and reconnaissance (ISR) sensors enhance the SA of decision makers and help them to better understand their environment and threats. However, a variety of factors hinder this enhancement of SA for end users including incompatible data formats, bandwidth limitations, sensor persistence (ability of a sensor to sense continuously), sensor revisit rates (the rate at which a sensor observes the same geographic point; the term is mostly used for mobile sensors, such as satellite or UAV sensors), and multi-level security [23]. Furthermore, with the increasing amount of sensor data, the challenge is to identify the most significant pieces of information, fuse that information, and then present it to the end user in a suitable format.

In the military and Air Force, the number of end users requiring access to sensor information is continuously increasing. For example, the end user of this information could be a pilot of a fighter aircraft (e.g., F-16 Fighting Falcon, F-35 Lightning II) making a targeting decision, a military commander securing a city, an Army squad leader trying to suppress a civil commotion, or members of the strategic team of the Combined Air Operations Center (CAOC) developing a strategic plan for air operations [23]. Although the specific information requirement, security level, and bandwidth constraint will vary among these end users, the overall need for timely, accurate, relevant, and trustworthy information to achieve and maintain SA remains the same. The concept of layered sensing has been envisioned by the Air Force Research Laboratory (AFRL) Sensors Directorate to deliver fused, multi-source, multi-dimensional, and multi-spectral sensor data to decision makers in accordance with their needs and irrespective of their location, with the goal of improving their SA.

4.3. Software-Defined Radio

Software-defined radio (SDR) is a radio communication system that implements many of the radio components, such as mixers, modulators, demodulators, error correction, and encryption, in software instead of hardware, and thus permits easier reconfiguration and adaptability to different communication situations. The Joint Tactical Radio System (JTRS) program of the U.S. military was tasked to replace existing military radios with a single set of SDRs that could work at new frequencies and modes (waveforms) through a simple software update, instead of requiring multiple radios for different frequencies and modes and circuit board replacements for upgrades [24]. JTRS was later transitioned into the Joint Tactical Networking Center (JTNC) [25]. SDR has often been utilized in small unit operators’ SA systems to provide warfighters with reliable and flexible communications in restrictive environments, particularly urban ones. The SDR offers a wide tuning range (e.g., from 20 MHz to 2500 MHz [26]) to enable the operators’ SA system to select the optimal frequency band to maintain link connectivity in diverse and restricted terrains. Furthermore, the SDR permits an adaptable direct sequence spread spectrum waveform (e.g., from 0.5 MHz to 32 MHz [26]) for modulation. Other potential tunable parameters of SDR include radio settings (e.g., transmission power, frequency, antenna gain, modulation, coding, baseband filtering, signal gain control, sampling and quantization), data link layer parameters (e.g., channel monitoring and association schemes, transmission and sleep scheduling, transmission rate, and error checking), and network layer parameters (e.g., routing, quality of service management, and topology control) [27].

4.4. Artificial Intelligence

Advancements in AI have a significant impact on SA. AI can tremendously enhance the environmental awareness of dismounted operators, soldiers, and pilots, as AI-based applications can notify soldiers of the presence and movement of red forces and thus assist in threat identification and mitigation. AI can be particularly helpful in the “projection” stage (level 3 SA) of SA. AI can help with real-time analysis and prediction for improving SA. For military applications, AI can provide dismounted operators on the ground and pilots with actionable intelligence and assistance in decision-making. AI can also facilitate logistics by enabling predictive maintenance, enhancing the safety of operating equipment, and reducing the operational cost. Furthermore, AI can promote the readiness of troops by tracking military ammunition and resupplying it before it is depleted. AI is also integral to human-machine teaming efforts that aim to promote efficient and effective integration of humans with complex machines, which extends beyond the usage of standard graphical user interfaces, computer keyboard, and mouse, and focuses on multimodal interaction capabilities, such as eyeglass displays, speech, gesture recognition, and other innovative interfaces. AI is also suitable for accomplishing military jobs that are dull, dangerous, or dirty, and can thus reinforce military strength and reduce casualties.

4.5. Unmanned Aerial Vehicles

Unmanned aerial vehicles (UAVs) can tremendously help in improving SA as UAVs are suitable for gathering intelligence from environments which are considered dull, dirty, or dangerous. With the advancements in technology, the next generation of UAVs will not only collect data but will also be able to perform on-board data processing, fusion, and analytics. Context-aware UAVs outfitted with a camera can generate a high-level description of the scene observed in the video, and identify potential critical situations. Semantic technologies can be leveraged to identify objects and their interactions in the scene [28]. Cognitive capabilities can be added to the UAVs by using fuzzy cognitive map reasoning models, deep neural networks, or other machine learning models to make the UAVs aware of the evolving situations in the scene and identify precarious situations. Furthermore, UAVs can play a pivotal role in edge SA by acting as flying fogs and thus as a sink for data from the ground sensors as the UAV navigates above the sensors. An ideal characteristic for the UAV flying fog will be the ability to process, fuse, and analyze the data on-board as well as transfer the data (whether in original or processed form depending on the nature, complexity, and context) to the ground station and/or cloud for archival and detailed analysis. The UAVs can also employ DDDAS methods for enabling UAVs to adapt their sensing, processing, and navigation (routing) dynamically based on live sensor data for better monitoring and tracking of the target.

4.6. Autonomous Vehicles

Although autonomous vehicles (AVs) are primarily targeted towards the consumer market, AVs can also benefit SA. Since AVs embed a plethora of sensors, AVs are capable of always-on monitoring during operation. A network of AVs, for example, can enable mass surveillance in the form of comprehensive, detailed tracking of all AVs and their users at all times and places [29]. The information retrieved from different sensors on-board AVs can be collected and centrally stored. The detailed analytics of that stored information can be used to provide SA insights.

4.7. Gunshot Location Systems

Gunshot location systems serve as an important tool for acoustic surveillance against the illegitimate use of firearms in urban areas, and for locating the origin of artillery fire, bullets, and snipers in urban warfare and battlefields. Acoustic SA can be interpreted as the activity and means used to observe acoustical components of the environment at all times and in all weather conditions. Target acquisition in acoustic SA refers to characterizing acoustical sources that constitute environmental noise by detection, spatial localization, reconnaissance, and identification of one or more previously identified targets. Modern gunshot location systems owe their origin to the transfer of military sniper detection technology that was developed to counter sniper operations in the battlefield. The operation of gunshot location systems can be explained by fluid dynamics modeling, acoustic sensing technology, and seismic techniques. Physically, gunfire consists of two principal phenomena: a shock-induced chemical reaction of the ammunition propellant inside the gun barrel, and the dynamics of the projectile discharged into the air from the open end of the barrel [30]. The shock-induced chemical reaction of the ammunition propellant has two phases: in the first phase, the bullet propellant is ignited and heat is expelled violently through the gun barrel, and in the second phase, the unburned propellant is re-ignited and combustion products are released into the air just outside the muzzle.

The most crucial fluid dynamics process pertinent to the shock-induced chemical reaction is the muzzle blast flow of the discharging plasma from the open end of the barrel. This rapid exit of high-temperature and high-pressure plasma creates a muzzle blast wave, which radiates spherically from the muzzle. Projectile dynamics involves three relevant fluid dynamics phenomena [30]. First, the piston-like movement of the projectile nose leaving the barrel induces a shock wave. Second, vortex shedding by the projectile in motion creates a disturbance along the trajectory, which produces Aeolian tones. Third, if the projectile velocity is transonic or higher, it creates a ballistic shock wave or Mach cone following the bullet in flight, whose pressure amplitude has an N-wave profile. Further, different types of weapons can be distinguished based on their acoustical signature. Shadowgraphy has been used to distinguish between the fire of different weapons.

Electro-acoustic sensors are often used to detect, localize, and identify gunfire or shooting noise. An electro-acoustic sensor consists of a piezoelectric substrate and a low-cost condenser-type acoustic transducer that is sensitive to acoustic pressure and has an omnidirectional pickup pattern. These electro-acoustic sensors process the information embedded in the acoustical emissions of gunfire events to estimate the spatial coordinates (i.e., azimuth, elevation, range) of the gunfire origin as well as other relevant ballistic features. The electro-acoustic sensors can transmit the data using either wireless communication or wired communication (e.g., dedicated telephone lines). To conduct acoustic surveillance, acoustic sensors are distributed in the area of interest to form an acoustic sensor network. The acoustic sensors are typically installed at elevated locations, such as lighting poles, high buildings, and cellular base stations. The coverage of an acoustic sensor network depends on the environment and on the sensitivity of the acoustic sensors in picking up the muzzle blast waveform. In open air conditions and in the absence of background noise, the muzzle blast wave can be perceived 600 m (0.373 miles) or more from the firearm. In urban environments, the acoustic multipath introduced by buildings and the high background noise markedly deteriorate the quality of the propagating acoustic signal.

Modern gunshot location systems are able to assess the azimuth and elevation of the shooter, the range to the shooter, the trajectory and caliber of the projectile, and the muzzle velocity. The direction of arrival of the projectile is estimated from the time delay of arrival of each perturbation of the ballistic shock wave at a pair of acoustic sensors. The arrival time delay of the shock wave between a pair of transducers is used to estimate the velocity of the projectile. The projectile caliber can be inferred from the N-wave analysis. The range to the shooter is determined by analyzing the curvature of the muzzle blast wave front using microphone arrays and triangulation algorithms. Gunshot location systems typically display the gunshot localization and identification results within a few seconds after a fired shot on a user interface that is usually integrated into a geographical information system [30]. This geographical information system serves as one input of the acoustic SA to the commander or law-enforcement officials.
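The use of an arrival time delay at a pair of sensors can be sketched with the textbook far-field relation sin(θ) = cΔt/d, where θ is measured from the broadside of the sensor pair. This is a simplified illustration that assumes a plane acoustic wavefront and a fixed speed of sound; it does not model the Mach cone geometry of the ballistic shock wave, and the constant and function name are assumptions of this example.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed value for dry air at about 20 degrees Celsius


def direction_of_arrival(delay_s, spacing_m, c=SPEED_OF_SOUND_M_S):
    """Arrival angle in degrees, measured from the broadside of a two-sensor pair.

    delay_s: arrival time at sensor B minus arrival time at sensor A.
    spacing_m: distance between the two sensors.
    """
    ratio = c * delay_s / spacing_m
    if abs(ratio) > 1.0:
        raise ValueError("delay is larger than the sensor spacing allows")
    return math.degrees(math.asin(ratio))


# A wavefront that reaches sensor B about 1.46 ms after sensor A, sensors 1 m apart.
print(direction_of_arrival(delay_s=0.5 / 343.0, spacing_m=1.0))
```

A single pair only resolves one angle; combining several pairs, as in the microphone arrays mentioned above, is what allows azimuth and elevation to be estimated together.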

4.8. Internet of Things

Internet of things (IoT) can immensely enhance SA through its integration with military and Air Force personnel. The operators/ground soldiers are assets in the battlefield and are crucial for implementing tactical decisions. An increasing number of ubiquitous sensing and computing devices are worn by military personnel and are embedded within military equipment (e.g., combat suits, instrumented helmets, weapon systems, etc.). These sensing and computing devices are networked together to form an Internet of military things (IoMT) or an Internet of battlefield things (IoBT) [31]. The IoMT/IoBT are capable of acquiring a variety of static and dynamic biometrics (e.g., face, iris, periocular, fingerprints, gait, gestures, and facial expressions), which can be used to carry out context-adaptive continuous monitoring of soldiers’ psychophysical and emotional conditions in the field. The IoMT/IoBT can also help in capturing soldiers’ vital health parameters (e.g., heart rate, electrocardiogram (ECG), sugar level, body temperature, blood pressure) in addition to monitoring soldiers’ weapons, ammunition, and location. With IoMT/IoBT, military and Air Force personnel are able to acquire tactical SA and thus gain perception of the enemy as well as of the advances of friendly forces. The variety of statistics and parameters gathered by IoMT/IoBT have significant value for the commanders in the command and control center. The acquired data is sent to edge servers or the cloud, where information fusion and big data analytics can be applied for SA, situation evaluation, decisional activity, and real-time support to soldiers in the battlefield. The commander can become aware of blue forces’ health parameters, weapon parameters, equipment parameters, and ammunition parameters and thus can place orders for providing appropriate assistance.

4.9. Graph Databases

Recently, it has been proposed in [32] to combine IoT (IoMT/IoBT) with graph databases for providing SA about every parameter of the soldiers in the battlefield and thus enable a better decision support system. Graph databases are a new paradigm where data is represented in the form of a graph, which makes searching and traversing relatively easier than in traditional databases. In a graph database, data is stored in the form of nodes that are connected to each other via edges, which represent a relationship between the connected nodes. Graph databases are useful when attributes of the data/node are important. For example, a soldier can be a main node with different attributes, such as equipment, ammunition, weapons, and body sensor parameters. Since IoT has a lot of interconnections with other nodes and data, graph databases can be used to store and retrieve that information. The graph representation and storage facilitates querying of such complex data. The standard algorithms of graph theory (e.g., shortest path, clustering, community detection, etc.) as well as machine learning methods can be used on graph databases to facilitate retrieval of the required information [32]. The use of a graph database can improve the visualization and SA for the commander. Information presented in a flat form is often not adequate for developing SA in a clear manner. The information from various sources stored in a graph database can be retrieved graphically, which helps in better understanding the relation between the attributes of different nodes in the graph. With the transition from traditional map-based and phone-based SA in the IoT era, the commander is able to access all the information remotely on an IoT-enabled device. Graph databases can be implemented at the fog/cloud level so that visual results of a query can provide useful data on the situation to assist the commander in tactical decisions. For instance, the IoMT/IoBT can collect the vital parameters of soldiers and their equipment in the battlefield along with their geographical location, which can be stored in graph databases that would enable the commander to visualize this information to better gauge the health of the military assets in the battlefield.
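The node, attribute, and edge model described above can be sketched with plain in-memory Python structures. This is not the API of any particular graph database product, and all node names, attribute names, relationship labels, and values are hypothetical; the sketch only shows an attribute query and a shortest-path traversal of the kind mentioned in the text.

```python
from collections import deque

# Nodes with attributes (all values are made up for illustration).
nodes = {
    "squad:alpha": {},
    "soldier:1": {"heart_rate": 88, "ammo": 120},
    "soldier:2": {"heart_rate": 131, "ammo": 15},
    "radio:7": {"battery_pct": 64},
}
# Edges as (source, relationship, destination) triples.
edges = [
    ("squad:alpha", "HAS_MEMBER", "soldier:1"),
    ("squad:alpha", "HAS_MEMBER", "soldier:2"),
    ("soldier:1", "CARRIES", "radio:7"),
]


def neighbors(node):
    """Yield nodes connected to `node`, ignoring edge direction."""
    for src, _rel, dst in edges:
        if src == node:
            yield dst
        elif dst == node:
            yield src


def shortest_path(start, goal):
    """Breadth-first search for a path with the fewest edges."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for nxt in neighbors(path[-1]):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None


def members_low_on_ammo(squad, threshold):
    """Squad members whose 'ammo' attribute is below the threshold."""
    return [
        dst for src, rel, dst in edges
        if src == squad and rel == "HAS_MEMBER"
        and nodes[dst].get("ammo", 0) < threshold
    ]


print(members_low_on_ammo("squad:alpha", threshold=30))
print(shortest_path("soldier:2", "radio:7"))
```

A dedicated graph database would add persistence, indexing, and a query language on top of this model, but the underlying traversal idea is the same.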

4.10. Fog/Edge Computing

Fog computing or edge computing is a novel trend in computing that pushes applications, services, data, computing power, knowledge generation, and decision making away from the centralized nodes to the logical extremes of a network. Edge computing and fog computing are similar in essence and have some subtle differences that have been outlined in our prior work [33]. Existing surveillance systems struggle to detect, identify, and track targets in real-time mainly due to the latency involved in transmitting the raw data from sensors, performing the information fusion, computation, and analytics at a distant central platform (e.g., cloud), and sending the commands back to the actuators for implementing control decisions. Fog/edge computing enables computations near the edge of the network and helps in reducing the communication burden on the core network. Fog/edge computing also enables location-based services and local analytics, and can help improve the real-time responsiveness of an SA system [34,35]. Using biometrics, environmental sensors, and other connected IoMT/IoBT devices to send and receive data quickly by leveraging fog/edge computing will not only help improve command and control operations but will also permit military personnel to respond in a timely manner to potentially dangerous situations on the battlefield.

4.11. Information Fusion

Surveillance applications employ a multitude of sensors, such as motion detectors, proximity sensors, biometric sensors, and a variety of cameras including color cameras, night vision imaging cameras, and thermal imaging cameras, that observe the targets from different viewpoints and at different resolutions. Information fusion plays an important role in SA by assisting in deriving valuable insights from the sensed data. SA incorporates low-level information fusion (tracking and identification), high-level information fusion (threat- and scenario-based assessment), and user refinement (physical, cognitive, and information tasks) [3]. Information fusion minimizes redundancy between the data captured by different sensors, such as the same or similar views captured by different cameras. Furthermore, information fusion also assists in performing handoff between cameras when an object being tracked by one of the cameras in the system moves out of its field of view and enters the field of view of another camera. In the fog/edge computing paradigm, information fusion at sensor/IoT nodes reduces the data that need to be transmitted to the edge servers, which conserves the energy of IoT devices and thus helps prolong their battery life. Similarly, information fusion at the edge servers/fog nodes lessens the data that need to be transmitted to the cloud and thus helps alleviate the burden on the core network. Advanced computing techniques, such as parallel computing and reconfigurable computing, can be leveraged for real-time information fusion to provide real-time SA at IoT and edge/fog nodes.

4.12. Automatic Event Recognition in Video Streams

Video streams from UAVs, IoT devices, and closed-circuit television (CCTV) cameras are important sources for surveillance and SA. Traditionally, multiple operators are required to watch these video streams, and then pass the information on events of interest to the commander for improving SA. The increasing number of video streams gathered from a plethora of UAVs and IoT devices requires an increasing number of operators. Furthermore, during periods of high activity, operators may get overloaded and thus may not be able to track all events of interest. Automatic analysis of video data from various sources, such as UAVs and IoT devices, can curtail the number of operators required by providing a notification of events or activities of interest. Automatic event recognition requires improvements over basic object recognition techniques, as not only the relationships between objects (the situation) need to be recognized but also how these relationships evolve over time (i.e., the occurrence of events) [36]. An event can be defined as the change in relationship between objects that occurs over a period of time. An interesting example of automatic event recognition using UAVs is convoy surveillance. A small UAV, such as ScanEagle, can be utilized to provide surveillance for the convoy, where the UAV flying over the convoy will not only detect and track the entities in the convoy but also other vehicles and objects in the vicinity of the convoy that might pose a threat.

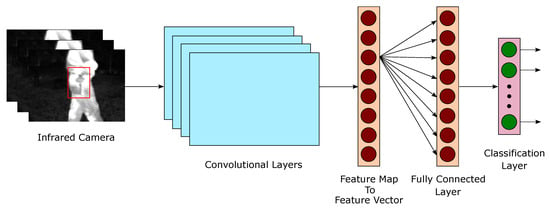

A variety of approaches have been used for event recognition, such as Bayesian networks, hidden Markov models (HMM), and neural networks [36]. Particularly, with recent advancements in deep learning, convolutional neural networks (CNNs) have become very popular for automatic object detection, classification, and recognition in video frames. Figure 3 presents a CNN architecture for automatic object detection and classification. A CNN architecture typically comprises an input layer, an output layer, and multiple hidden layers. The hidden layers of a CNN mainly comprise multiple convolutional layers, fully connected layers, and optional non-linearity, pooling, and normalization layers. In Figure 3, input from an infrared camera is fed to the CNN architecture, which processes the input through multiple convolutional, non-linearity, pooling, normalization (note that the non-linearity, pooling, and normalization layers are not shown in Figure 3 for simplicity), and fully connected layers, and produces an output of object detection and classification at the output/classification layer. Figure 3 shows the CNN architecture detecting a pistol from an infrared video input.

Figure 3.

A convolutional neural network (CNN) architecture for automatic object detection and classification.

We note that HMMs and neural networks require a large amount of training data for configuring the network to recognize events. Since many events of interest are aberrant events for which there is a scarcity of video data for training, Bayesian networks can be utilized for event recognition when training video data is scarce [36]. Bayesian networks also incorporate uncertainty in the reasoning and provide an output indicating the likelihood that the event is true, or for multi-state events, the probability that the event is in a particular state.
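The way a Bayesian approach turns uncertain observations into a likelihood that an event is true can be illustrated with the simplest possible network: one binary event node with observation nodes that are assumed conditionally independent given the event. The probabilities below are made up for illustration and are not taken from [36].

```python
def posterior(prior, p_obs_given_event, p_obs_given_no_event):
    """Bayes' rule: probability of the event after one positive observation."""
    evidence = p_obs_given_event * prior + p_obs_given_no_event * (1.0 - prior)
    return p_obs_given_event * prior / evidence


def update(prior, observations):
    """Apply Bayes' rule once per observation (conditional independence assumed)."""
    belief = prior
    for p_given_event, p_given_no_event in observations:
        belief = posterior(belief, p_given_event, p_given_no_event)
    return belief


# Hypothetical rare event (prior 1%) with two supporting detections, each given
# as (P(observation | event), P(observation | no event)).
observations = [(0.9, 0.1), (0.8, 0.2)]
print(update(0.01, observations))
```

Because the conditional probabilities can be specified from expert knowledge rather than learned, this style of reasoning remains usable when training video is scarce.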

Although automatic event recognition has an immense value for SA, it is a challenging problem and the focus of recent research. Other relevant research problems that are critical for SA and are subject of recent research are object detection and identification, recognition of relationship between objects, and activity recognition.

4.13. Enhanced Vision System (EVS)

An enhanced vision system (EVS) or enhanced flight vision system (EFVS) enhances the pilot’s visibility and SA through active and passive sensors that penetrate weather phenomena such as storms, fog, haze, darkness, rain, and snow. Modern military aircraft have been outfitted with enhanced vision systems; however, these systems are not common on commercial aircraft due to cost, complexity, and technicality. The performance of enhanced vision sensors depends upon the sensor characteristics and the external environment. For example, the range performance of high-frequency radars (e.g., 94 GHz) and infrared sensors degrades in heavy precipitation and certain fog types [37]. On the contrary, low-frequency (e.g., 9.6 GHz) and mid-frequency (e.g., 35 GHz) radars have improved range but poor display resolution. Furthermore, active radar sensors can experience mutual interference when multiple active sensors are in close proximity. Although enhanced vision systems have been heavily utilized in the military and Air Force to improve SA, contemporary enhanced vision systems do not extract color features, which may produce misleading visual artifacts under particular temperature or radar reflective conditions [37]. Examples of EVS/EFVS include Collins Aerospace’s EFVS-4860 [38] and Collins Aerospace’s EVS-3600 [39], which use a combination of short-wave infrared, long-wave infrared, and a visible camera to enhance pilot visibility. Another example of EVS is Elbit Systems’ ClearVision [40], which combines a visual camera, a near infrared sensor, and a long-wave infrared sensor to provide improved SA.

4.14. Synthetic Vision System (SVS)

Synthetic vision systems are in essence weather-immune displays that permit an operator and/or pilot to see the world as it would be in perfect weather conditions at all times. Synthetic vision systems use a combination of sensors, global positioning system (GPS) satellite signals, inertial reference systems, and an internal database to provide pilots a synthetic view of the world around them. Synthetic vision systems replace the old attitude indicators (i.e., the blue-over-brown attitude indicator that indicates where the horizon is) in aircraft with an augmented reality (AR) system that superimposes a real-world image on the attitude reference system. AR-based SA systems, such as NASA’s synthetic vision system, can improve aviation safety and enhance the efficiency of aircraft operations. The SVS provides various benefits such as enhanced safety, improved SA, reduced pilot workload, reduced technical flight errors, and color coding for absolute altitude terrain.

Collins Aerospace’s SVS provides weather independent, high-definition image displays on HUDs with three layers of information—terrain, obstacle, and airports and runways—to render a complete picture of the environment [39]. Collins Aerospace’s SVS integrates sensors with a worldwide database that provides terrain contours, mile markers, runway highlights, and airport domes. Another example of SVS is Honeywell’s SmartView [41], which synthesizes flight information from various onboard databases, GPS and inertial reference systems into a comprehensive, easy-to-comprehend 3-D rendering of the forward terrain.

4.15. Combined Vision System (CVS)

Synthetic vision systems provide the pilots with a clear view of the world outside the window in all weather conditions. These vision systems are a step towards a future vision system, also known as combined vision system (CVS), that would provide pilots vision far beyond what the eye can see. A CVS is a combination of synthetic vision (i.e., systems that create rendered environments in real-time based on sensors and stored database information) and enhanced flight vision systems (i.e., displays that utilize forward-looking infrared systems and millimeter wave radar to provide pilots a live view of the world around the aircraft) [42]. Collins Aerospace’s CVS provides a clear view to the pilots by combining their EVS and SVS into one dynamic image as depicted in Figure 4. The CVS algorithms utilize the overlapping fields of vision from EVS and SVS to detect, extract, and display content from both sources. For instance, CVS provides thermal imagery of terrain at night, weather-independent virtual terrain, and the quick detection of runway and approach lighting integrated in a single system.

Figure 4.

Collins Aerospace’s Combined Vision System [43].

4.16. Augmented Reality

With the increasing computing capability of mobile devices that can be carried by dismounted operators in multiple segments (e.g., military, civilian, law enforcement), AR provides a promising means to enhance SA. Since the dismounted operators tend to deal with a large amount of information that may or may not be relevant, an AR system can help improve the SA by filtering, organizing, and displaying the information in a discernible manner [44]. AR systems need to identify the elements of interest to be displayed and augmented in HUDs of the operators. These elements include map of the terrain and indication of blue and red forces. Map of the terrain can be displayed using a radial mini-map centered on the operator which shows the operator’s position in real-time along with the position of allies and foes and points of interest. Navigation data can be integrated with the terrain map by shading of the paths using AR techniques and thus superimposing the maps and navigation path on the view of the operator. AR is also able to help in situations where it may be difficult for the operators to maneuver their equipment and/or weapons, such as in close quarters combat or in patrol, where the weapon is often at rest at the hip level. An AR crosshair pointing to the direction where the operator’s weapon is currently engaging can improve the accuracy and response time for the operator [44]. The effectiveness of this AR crosshair can be empirically measured by comparing the response time of the operator during an engagement with or without the crosshair. In summary, the usage of AR in military and Air Force equipment has been continuously increasing with the technological advancements in AR.
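The operator-centered radial mini-map described above amounts to converting each entity position into a range and a bearing relative to the operator's heading. The sketch below assumes a flat local coordinate frame (x east, y north, in meters) and a heading measured clockwise from north; these conventions, the function name, and the sample positions are assumptions of this example rather than details from [44].

```python
import math


def to_minimap(operator_xy, heading_deg, target_xy, map_radius_m):
    """Return (range_m, relative_bearing_deg) of a target for a radial mini-map.

    The relative bearing is 0 straight ahead and increases clockwise.
    Returns None when the target lies outside the mini-map radius.
    """
    dx = target_xy[0] - operator_xy[0]
    dy = target_xy[1] - operator_xy[1]
    range_m = math.hypot(dx, dy)
    if range_m > map_radius_m:
        return None
    bearing_deg = math.degrees(math.atan2(dx, dy))  # clockwise from north
    return range_m, (bearing_deg - heading_deg) % 360.0


# Operator at the origin facing east; an ally 50 m to the north appears on the left.
print(to_minimap((0.0, 0.0), 90.0, (0.0, 50.0), map_radius_m=200.0))
# An entity 500 m away falls outside a 200 m mini-map.
print(to_minimap((0.0, 0.0), 90.0, (300.0, 400.0), map_radius_m=200.0))
```

Filtering by radius is one simple way to keep the display limited to nearby, relevant elements.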

5. Application Domains of Situational Awareness

SA is needed in various domains such as the battlefield, military- and air-bases, aviation, air traffic control, emergency and/or disaster response, industrial process management, urban areas, and critical infrastructure, as depicted in Figure 5. In this section, we provide an overview of a few of these domains. Inadequate SA has often been associated with human errors that lead to adverse outcomes, such as military losses in warfare, loss of civilians’ and first responders’ lives in emergency and disaster response, and loss of revenue in industrial control.

Figure 5.

Domains of situational awareness.

5.1. Battlefield

SA is imperative for an informed and reliable battlefield C2 system. SA enhanced by state-of-the-art technologies, such as IoBT/IoMT and fog computing, can help the military and Air Force fully exploit the information gathered by a wide set of heterogeneous IoBT/IoMT devices deployed in the (future) battlefield and can give the military/Air Force a strategic advantage over adversaries. SA in the battlefield can be provided at different levels: (i) commanders who oversee the battlefield operation, (ii) dismounted soldiers who carry out the mission at the tactical level, and (iii) pilots who provide close air support to soldiers. Recent advancements in SA technology can help soldiers identify the enemy, access devices and weapon systems with low latency, and improve their SA and safety.

5.2. Urban Warfare

Maintaining SA for military and Air Force units in urban environments is more challenging than in rural and open terrain. The dynamics of the battlespace in an urban environment change significantly, from a traditional two-dimensional space with the enemy mainly in front to a three-dimensional maze where the enemy can launch an offensive from any direction and from multiple directions simultaneously [26]. The urban battlespace is further complicated by the increasing presence of non-combatants. Navigation aids such as GPS are not as reliable in urban environments as in open terrain because line of sight to the satellites is difficult to attain. Hence, sharing information about the urban battlespace to obtain a common operating picture (COP) requires a communication system/network that can maintain a link in any environment (i.e., in the presence of multipath and radio interference). The U.S. DOD dictionary of military and associated terms defines COP as: “A single identical display of relevant information shared by more than one command that facilitates collaborative planning and assists all echelons to achieve situational awareness” [2].

5.3. Gray Zone Warfare

SA is particularly needed in asymmetric warfare or gray zone warfare [45], where it is not always straightforward to identify enemy combatants; for example, enemies can appear as civilians or access restricted military bases with a stolen badge. To provide SA in gray zone situations, biometric sensors can scan irises, fingerprints, and other biometric data to identify individuals who might pose a danger. The use of DDDAS methods in SA systems can then help in targeted collection of data on identified individuals and can also mobilize the responding units (e.g., snipers) to defuse the threat. SA systems can provide real-time monitoring of sites and potential gray zone actors to help prevent or mitigate the negative effects of gray zone warfare, such as misinformation and disruptions of services and critical infrastructures.

5.4. Military- and Air-Bases

SA is needed for the safety and security of military- and air-bases. Since enemies can try to infiltrate the bases with stolen badges/identities, continuous surveillance of military- and air-bases is needed. SA systems can detect suspicious individuals and unauthorized personnel in the bases so that the authorities can take appropriate action.

5.5. Homeland Security and Defense

SA systems are required for enhancing homeland security and defense. Real-time surveillance can help in providing timely detection and response against both natural disasters (e.g., hurricanes, floods) and man-made events (e.g., terrorism). Furthermore, SA systems can assist law enforcement officials in counter-terrorism, anti-smuggling efforts, and apprehending fugitives.

5.6. Disaster Response Management

Disaster recovery operations are challenging and require support from multiple agencies including local and international emergency response personnel, non-governmental organizations, and the military. In the immediate aftermath of a disaster, the most compelling requirement is SA so that resources, including personnel and supplies, can be prioritized and directed based on the impact and the need for disaster relief. Furthermore, in disaster situations, SA needs to be continuously updated based on the changing conditions as the recovery efforts continue. Traditional sources of information for SA in disaster situations include reporting by the victims of the disaster; however, reporting by victims may not be viable in all circumstances, as communication means could be disrupted by the disaster. Thus, SA systems are required for disaster monitoring and reporting and, hence, help provide an appropriate response to the disaster. SA systems are also an integral part of early warning systems and can help enhance the safety and sustainability of cities by facilitating quick detection of and response to emergency situations, such as fires, floods, bridge collapses, water supply disruptions, earthquakes, volcanic eruptions, and tornadoes.
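
The prioritization of resources by impact and need, updated as conditions change, can be illustrated with a small Python sketch. The scoring rule used here (impact multiplied by unmet need), the `SiteReport` fields, and the example sites are hypothetical and serve only to show the idea of re-ranking as new reports arrive; they do not represent an established relief-allocation method.

```python
from dataclasses import dataclass


@dataclass
class SiteReport:
    name: str
    impact: float      # hypothetical severity estimate in [0, 1]
    unmet_need: float  # hypothetical share of relief need still unmet, in [0, 1]


def prioritize(reports):
    """Return site names ordered from highest to lowest priority."""
    ranked = sorted(reports, key=lambda r: r.impact * r.unmet_need, reverse=True)
    return [r.name for r in ranked]


reports = [
    SiteReport("bridge", impact=0.9, unmet_need=0.2),
    SiteReport("shelter", impact=0.6, unmet_need=0.8),
    SiteReport("clinic", impact=0.3, unmet_need=0.5),
]
first_ranking = prioritize(reports)

# Conditions change: supplies at the bridge run out, so its unmet need rises
# and the ranking is recomputed from the updated reports.
reports[0].unmet_need = 0.9
updated_ranking = prioritize(reports)
```

In this example the shelter is ranked first initially, and the bridge moves to the top once its unmet need increases, which mirrors the requirement that SA be refreshed as the recovery effort evolves.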

5.7. Critical Infrastructure

SA is needed for the safety of critical infrastructures, such as bridges, electricity generation and distribution networks, water supply networks, telecommunication networks, and transportation networks. We illustrate the need for SA for critical infrastructure with a few examples from road networks, which are an essential part of transportation networks. Road SA requires perceiving adverse road conditions (e.g., potholes), motorists, pedestrians, and traffic conditions [10]. Traffic monitoring and detection of suspicious vehicles afforded by road SA can help reduce crime, accidents, and fatalities. Advances in deep learning have helped immensely to enhance road SA. CNNs can be used to perceive vehicle category and location in road image data, and thus help with the situational element identification problem in road traffic SA systems [11]. Monitoring of critical infrastructures becomes more important in the era of gray zone warfare, as enemies often aim to create disruptions in critical infrastructure to generate favorable conditions for military engagement.

6. Conclusions

In this article, we have defined and explained situational awareness (SA) from a military and Air Force perspective. We have presented a model for SA and dynamic decision-making. The proposed model incorporates artificial intelligence and dynamic data-driven application systems to help steer the measurement and resources according to changing situations as perceived and projected by the SA core. We have discussed measurement of SA and have identified the metrics for SA assessment. We have also outlined the challenges associated with measuring SA. We have discussed different techniques and technologies that can enhance SA, such as different modes of intelligence gathering, sensors and sensor networks, software-defined radio, artificial intelligence, unmanned aerial vehicles, autonomous vehicles, gunshot location systems, Internet of Things, fog/edge computing, information fusion, automatic event recognition in video streams, enhanced vision systems, synthetic vision systems, combined vision systems, and augmented reality. Finally, we have presented different domains or application areas where having SA is imperative, such as battlefield, urban warfare, gray zone warfare, military- and air-bases, homeland security and defense, disaster response management, and critical infrastructure.

As a future outlook, SA techniques and methods will continue to improve with technological advancements. There are many areas that require active research to enhance SA. For instance, the military is still in quest of ideal radios based on software-defined radio (SDR) that can attain secure, interoperable, and resilient multi-channel communication capable of working with different modes/waveforms. Automatic event detection and recognition is another area pertinent to SA that requires active research. In particular, real-time automatic event detection and recognition is a challenging problem that requires not only advances in AI but also advanced computing techniques, such as parallel computing and reconfigurable computing. Furthermore, hardware accelerators can be expedient for enabling real-time automatic event detection and recognition. Another area where active research is needed is safe and secure autonomous navigation of unmanned aerial vehicles (UAVs) so that UAVs or swarms of UAVs can accomplish missions autonomously without requiring active remote piloting. Further research in the Internet of battlefield/military things (IoBT/IoMT) is warranted to sense, integrate, and process a multitude of parameters related to soldiers’ health and psychophysical conditions, soldiers’ weapons, ammunition, and location on the field, as well as information about red forces in the regions of interest. Further research in fog/edge computing can ensure the real-time processing and analytics of these sensed parameters to help enable real-time assessment and command and control decisions that respond to evolving situations. Enhanced vision systems for soldiers and pilots need continuous improvement with the innovations in sensing and augmented reality (AR) technologies. New visualization techniques need to be developed based on multi-intelligence fusion results that will allow a user to view a reconfigurable user-defined operating picture. Regarding SA quantification, new SA measurement techniques need to be developed that can cover more assessment metrics. Finally, there is a pressing need for new SA assessment tools so that SA can be quantified and further enhancements can be made according to the SA requirements of a given application domain.

Author Contributions

Conceptualization, A.M.; methodology, A.M.; investigation, A.M.; resources, A.M.; writing—original draft preparation, A.M.; writing—review and editing, A.M., A.A. and E.B.; supervision, A.M.; funding acquisition, A.A. and E.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported in part by the Air Force Research Laboratory (AFRL) Information Directorate (RI), through the Air Force Office of Scientific Research (AFOSR) Summer Faculty Fellowship Program®, Contract Numbers FA8750-15-3-6003, FA9550-15-0001 and FA9550-20-F-0005. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the AFRL and AFOSR. The APC was funded by Kansas State University.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study can be made available on request from the corresponding author. The data are not publicly available due to proprietary restrictions.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Endsley, M.R. Designing for Situation Awareness in Complex System. In Proceedings of the Second International Workshop on Symbiosis of Humans, Artifacts and Environment; 2001. Available online: https://www.researchgate.net/profile/Mica-Endsley/publication/238653506_Designing_for_situation_awareness_in_complex_system/links/542b1ada0cf29bbc126a7f35/Designing-for-situation-awareness-in-complex-system.pdf (accessed on 13 December 2021).

- DOD. DOD Dictionary of Military and Associated Terms. 2019. Available online: https://www.jcs.mil/Portals/36/Documents/Doctrine/pubs/dictionary.pdf (accessed on 16 August 2019).

- Blasch, E. Multi-Intelligence Critical Rating Assessment of Fusion Techniques (MiCRAFT). In Proceedings of the SPIE, Signal Processing, Sensor/Information Fusion, and Target Recognition XXIV; 2015; Volume 9474. Available online: https://www.spiedigitallibrary.org/conference-proceedings-of-spie/9474/94740R/Multi-intelligence-critical-rating-assessment-of-fusion-techniques-MiCRAFT/10.1117/12.2177539.short?SSO=1 (accessed on 13 December 2021).

- Spick, M. The Ace Factor: Air Combat and the Role of Situational Awareness; Naval Institute Press: Annapolis, MD, USA, 1988.

- McKay, B.; McKay, K. The Tao of Boyd: How to Master the OODA Loop. 2019. Available online: https://www.artofmanliness.com/articles/ooda-loop/ (accessed on 14 August 2019).

- Endsley, M.R. Toward a Theory of Situation Awareness in Dynamic Systems. Hum. Factors J. Hum. Factors Ergon. Soc. 1995, 37, 32–64.

- Endsley, M.R. Situation Awareness in Aviation Systems. In Handbook of Aviation Human Factors; Garland, D.J., Wise, J.A., Hopkin, V.D., Eds.; Lawrence Erlbaum Associates Publishers: Mahwah, NJ, USA, 1999; pp. 257–276.

- Blasch, E.; Ravela, S.; Aved, A. (Eds.) Handbook of Dynamic Data Driven Applications Systems; Springer: Berlin/Heidelberg, Germany, 2018.