1. Introduction

The coronavirus disease 2019 (COVID-19) has affected many lives and changed the world drastically. The World Health Organization declared COVID-19 a global pandemic on 11 March 2020 [1]. By the end of October 2020, more than 43.3 million cases had been confirmed globally, with over 1.15 million deaths related to the novel virus reported [2]. Reported symptoms in infected people include fever, shortness of breath, dry cough, and loss of taste and smell. According to the Centers for Disease Control and Prevention, many people with underlying conditions (particularly heart and lung diseases) appear to be at higher risk of developing serious complications. Many researchers are currently seeking early detection methods for COVID-19 to help reduce the spread of the disease [3,4].

Several testing methods are being used for diagnostic purposes, including Reverse-Transcription Polymerase Chain Reaction (RT-PCR). These tests have high specificity but variable sensitivity for detecting the disease [3,4]. Unfortunately, obtaining the results takes about 24 h or more, and test kits are in limited supply across the globe [4]. The world therefore needs rapid solutions that can assist radiologists and doctors in identifying COVID-19.

Patients with COVID-19 often develop pneumonia, which appears as opacities in the lungs. Thus, another strategy is to detect this form of pneumonia in chest radiographs and Computed Tomography (CT) scans. Both are widely used imaging modalities for diseases of the lungs. In this paper, we focus solely on chest radiographs because they are more widely available than CT scans and require far less memory, disk space and processing time. Furthermore, they deliver a much lower radiation dose than CT scans [5]. Hence, a Computer Aided Detection (CAD) system that detects COVID-19 on chest radiographs would be a valuable tool for enhancing the workflow of radiologists, providing a rapid and objective second opinion. We believe this could be particularly helpful in areas with a scarcity of COVID-19 test kits. CAD tools have attracted great research interest over the past decade [5–30]. Computer-vision based machine learning and deep learning approaches have been proposed for various applications on chest radiographs, including lung cancer, pneumonia, and tuberculosis detection, as well as pneumonia diagnosis.

In this paper, we present three training methodologies that use deep learning to detect COVID-19 on chest radiographs without any traditional data augmentation:

- (i) Baseline method: traditional transfer learning using four established Convolutional Neural Networks (CNNs).

- (ii) Enhanced baseline method: the baseline method with added preprocessing and lung segmentation.

- (iii) Recommended system: the enhanced baseline method with our novel transfer-to-transfer learning approach added to mitigate class imbalance.

For the enhanced baseline method and the recommended system, we preprocess the chest radiographs to enhance contrast and then segment the lungs. In both methods, only the segmented lung region is passed to the classification architecture.
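The preprocessing step above can be illustrated with a small sketch. The exact contrast enhancement used here is not specified in this passage. The code therefore assumes one common form of local contrast enhancement: each pixel's deviation from the mean of its neighbourhood is amplified by a gain, and the result is clipped to [0, 1]. A binary lung mask is then applied so that only lung pixels reach the classifier. The function names, window radius and gain are hypothetical illustration choices.

```python
from typing import List

Image = List[List[float]]  # grayscale intensities in [0, 1]
Mask = List[List[int]]     # 1 = lung pixel, 0 = background


def local_mean(img: Image, radius: int) -> Image:
    """Box-filter mean over a (2*radius+1)^2 window, truncated at the borders."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            vals = [img[i][j]
                    for i in range(max(0, r - radius), min(h, r + radius + 1))
                    for j in range(max(0, c - radius), min(w, c + radius + 1))]
            out[r][c] = sum(vals) / len(vals)
    return out


def local_contrast_enhance(img: Image, radius: int = 1, gain: float = 2.0) -> Image:
    """Hypothetical LCE: amplify deviation from the local mean, then clip to [0, 1]."""
    mean = local_mean(img, radius)
    return [[min(1.0, max(0.0, m + gain * (p - m))) for p, m in zip(row, mrow)]
            for row, mrow in zip(img, mean)]


def apply_lung_mask(img: Image, mask: Mask) -> Image:
    """Zero every pixel outside the lung mask."""
    return [[p if k else 0.0 for p, k in zip(row, mrow)]
            for row, mrow in zip(img, mask)]


def preprocess(img: Image, mask: Mask, radius: int = 1, gain: float = 2.0) -> Image:
    """Contrast enhancement followed by lung masking."""
    return apply_lung_mask(local_contrast_enhance(img, radius, gain), mask)
```

A uniform image is left unchanged, because every pixel equals its local mean. An isolated bright pixel becomes brighter and its neighbours darker. In practice a vectorised image library would replace these loops.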

The training dataset used in this research suffers from class imbalance. Traditionally, this issue is tackled by data augmentation, by weighted cross entropy loss, or by removing samples from the majority class to match the quantity of the minority class. Data augmentation is not a preferred approach for medical imaging applications. Randomly removing samples from the majority class (i.e., the non-COVID-19 class) in this scenario might lead to the loss of critical information. The weighted cross entropy loss approach has not been effective for training datasets with a high class imbalance ratio. Therefore, to overcome class imbalance, we introduce a novel transfer-to-transfer learning approach. In our recommended system, we split the training dataset into mini-sets. Each mini training set contains the entire training suite of COVID-19 images and approximately the same number of non-COVID-19 images. The weights learned on one mini-set are transferred to initialize training on the next. This process is repeated until every non-COVID-19 image in the training dataset has been processed. We compared the performance of the recommended system with that of the baseline and enhanced baseline methods using four established CNNs: ResNet50 [31], Xception [32], Inception-v3 [33,34], and DenseNet201 [35].
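The mini-set construction and weight hand-off described above can be sketched in a framework-agnostic way. The sketch rests on several assumptions. The majority class is shuffled and dealt into disjoint chunks whose count is the rounded majority/minority ratio. Each mini-set pairs the full minority class with one chunk. `train_on` is a hypothetical callable standing in for one round of CNN fine-tuning that returns updated weights. None of these names come from the original implementation.

```python
import random
from typing import Callable, List, Sequence, Tuple, TypeVar

Sample = TypeVar("Sample")
Weights = TypeVar("Weights")
MiniSet = Tuple[List[Sample], List[Sample]]


def make_mini_sets(minority: Sequence[Sample], majority: Sequence[Sample],
                   seed: int = 0) -> List[MiniSet]:
    """Pair the full minority class with disjoint majority chunks of similar size."""
    shuffled = list(majority)
    random.Random(seed).shuffle(shuffled)
    # Choose the number of mini-sets so each chunk holds about len(minority) samples.
    n_sets = max(1, round(len(shuffled) / len(minority)))
    # Deal samples round-robin so every majority sample lands in exactly one chunk.
    chunks = [shuffled[i::n_sets] for i in range(n_sets)]
    return [(list(minority), chunk) for chunk in chunks]


def transfer_to_transfer(initial: Weights, mini_sets: List[MiniSet],
                         train_on: Callable[[Weights, list, list], Weights]) -> Weights:
    """Train on each mini-set in turn, starting from the previous mini-set's weights."""
    weights = initial
    for minority, majority_chunk in mini_sets:
        weights = train_on(weights, minority, majority_chunk)
    return weights


# Usage with placeholder file names and a dummy trainer that only counts mini-sets.
covid = [f"covid_{i}.png" for i in range(3)]
non_covid = [f"other_{i}.png" for i in range(10)]
mini_sets = make_mini_sets(covid, non_covid)
# Three mini-sets; the majority chunks hold 4, 3 and 3 images.
final = transfer_to_transfer(0, mini_sets, lambda w, pos, neg: w + 1)
```

In a real pipeline, `Weights` would be a model checkpoint and `train_on` would fine-tune that model for some epochs on the balanced mini-set. The sketch assumes a non-empty minority class.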

The primary novel contribution of this research is a computationally efficient transfer-to-transfer training mechanism to combat class imbalance, together with a demonstration of its efficacy for COVID-19 detection in chest radiographs. Our study also demonstrates the importance of preprocessing and lung segmentation for COVID-19 detection. We report results in terms of classification accuracy, sensitivity (recall), specificity, precision, F1 score, and Area under the Receiver Operating Characteristic curve (AUC). We also present class activation mapping results to show the key image structures influencing the decision making of each method. We evaluated the proposed approaches using both 10-fold cross validation and hold-out validation.
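For reference, the reported metrics follow the standard confusion-matrix definitions. The sketch below treats COVID-19 as the positive class (label 1). Sensitivity and recall are the same quantity, TP/(TP+FN). AUC is computed as the probability that a randomly chosen positive receives a higher score than a randomly chosen negative, with ties counted as one half. The function names are hypothetical.

```python
from typing import Dict, Sequence


def classification_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Binary metrics with COVID-19 = 1 as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    sensitivity = tp / (tp + fn) if tp + fn else 0.0  # identical to recall
    specificity = tn / (tn + fp) if tn + fp else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    f1 = (2 * precision * sensitivity / (precision + sensitivity)
          if precision + sensitivity else 0.0)
    return {"accuracy": (tp + tn) / len(pairs), "sensitivity": sensitivity,
            "specificity": specificity, "precision": precision, "f1": f1}


def auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """ROC AUC as P(score of a random positive > score of a random negative)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))
```

The pairwise AUC is quadratic in the number of images. For large test sets, a rank-based or library implementation is preferable.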

The remainder of this paper is organized as follows. Section 2 presents a brief background on CAD tools. Section 3 presents the datasets utilized for this research. Section 4 describes the three CAD systems studied here for COVID-19 detection on chest radiographs. Section 5 presents the experimental results. In Section 6, a discussion of the results is presented. Finally, conclusions are offered in Section 7.

2. Background and Related Works

Research on the diagnosis and treatment of the novel coronavirus is in high demand in both the medical imaging and machine learning fields. However, because of the virus's sudden emergence and the limited publicly available data for developing machine learning models, COVID-19 detection is a challenging problem. Fortunately, several CAD tools have been developed for chest radiographs, and we build on those approaches to detect COVID-19.

In [5], chest radiographs are preprocessed using Local Contrast Enhancement (LCE) and segmented using an Active Shape Model. Next, a set of 114 handcrafted features is computed, and a Fisher linear discriminant classifier is used to detect lung nodules on chest radiographs. A novel optimized set of features for both clustering and classification of lung nodules on chest radiographs is presented in [6]. The ‘N-Quoit’ filter is studied for CAD of lung nodules on chest radiographs in [7]. A set of classification approaches for the detection of lung nodules is studied and compared in [8]. A U-Net architecture for automated lung segmentation in chest radiographs is presented in [9]. In [10], a novel two-stage architecture to detect and diagnose pneumonia is presented using transfer learning approaches. Independent architectures are used for pneumonia detection and diagnosis. Detection is implemented using established transfer learning approaches. Lung regions are then segmented using a U-Net architecture before being passed to a classification architecture that diagnoses pneumonia as bacterial or viral [10]. A CAD tool based on wavelet transforms is presented to detect pneumonia in [11]. Multiple CAD tools for various diseases, and the ways in which chest radiography can be used to detect them, are discussed in [12,13]. A CAD algorithm to detect interstitial opacities in chest radiographs is presented in [14]. A radiologist-level deep learning CAD algorithm to detect pneumonia is presented in [15], together with a gradient-based visualization method to localize the region of interest. An attention guided mask algorithm to locate the region of interest for pneumonia detection is presented in [16]. Several CNN-based approaches for the detection and diagnosis of pneumonia are studied in [17]. Transfer learning-based approaches using established networks such as GoogLeNet [36] and ResNet50 [31] are studied to detect tuberculosis, along with class activation mapping results, in [18]. Other notable related CAD papers include [19–30].

Recent research clearly indicates that deep learning has proven highly effective for CAD tools in chest radiographs [3,9,10,15,16,17,18]. However, balanced sets of chest radiographs with COVID-19 markings are available only in limited quantity, making the problem difficult to address with traditional deep learning approaches. Data augmentation is one way to overcome the limited quantity of images. However, traditional augmentation techniques such as flipping, rotating, color jittering, and random cropping [37] are poorly suited to chest radiographs. For example, a horizontal flip places the heart on the wrong side of the chest. Moreover, medical imaging applications tend to have different visual characteristics exhibiting high inter-class variability, and hence traditional augmentation methods are found to be less effective [38]. Generative Adversarial Networks (GANs) are often used for synthetic data generation [39]. However, these models are computationally complex and, to date, have not proven efficacious in medical imaging. Moreover, GAN models are highly sensitive to the choice of architecture and parameters, and the synthetic data they create might even degrade classification performance. In [40], a COVID-19 detection study is conducted using traditional CNN approaches after segmenting the lungs. A novel CNN architecture for the detection of COVID-19 in chest radiographs is presented in [41]. Its authors use a Projection-Expansion-Projection technique to distinguish COVID-19 from pneumonia and normal cases. In this paper, we propose a novel training mechanism based on transfer learning to mitigate class imbalance with limited training images.

3. Materials and Methods

In this section, we present the publicly available datasets used in this study. In total, we used six different datasets, which are described in Table 1. Two of the datasets contained COVID-19 cases. The other four were previously established datasets of non-COVID-19 cases. These included cases marked by radiologists for various other lung disorders (lung cancer, tuberculosis, bacterial pneumonia and viral pneumonia) as well as cases marked as normal (i.e., free of these diseases). We included these cases so that the algorithm would learn to distinguish COVID-19 from other lung diseases.

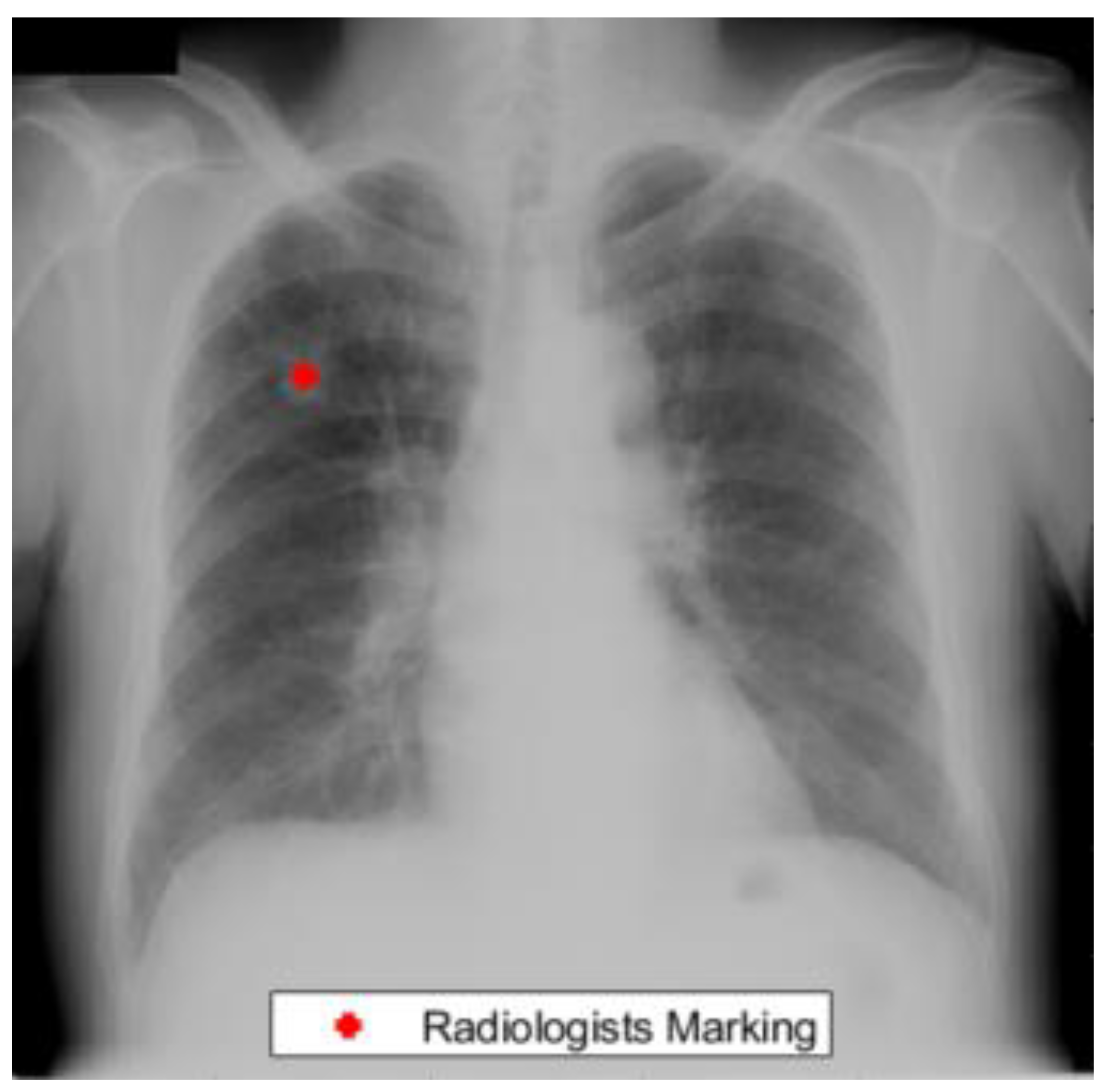

The Japanese Society of Radiological Technology (JSRT) dataset was marked by radiologists for the detection of lung nodules [42]. This dataset contained 247 chest radiographs, of which 154 contained nodule markings. In addition, lung masks provided by [43] can be used to evaluate the performance of our lung segmentation algorithm.

Figure 1 presents an example from the JSRT dataset with a radiologist’s lung nodule marking.

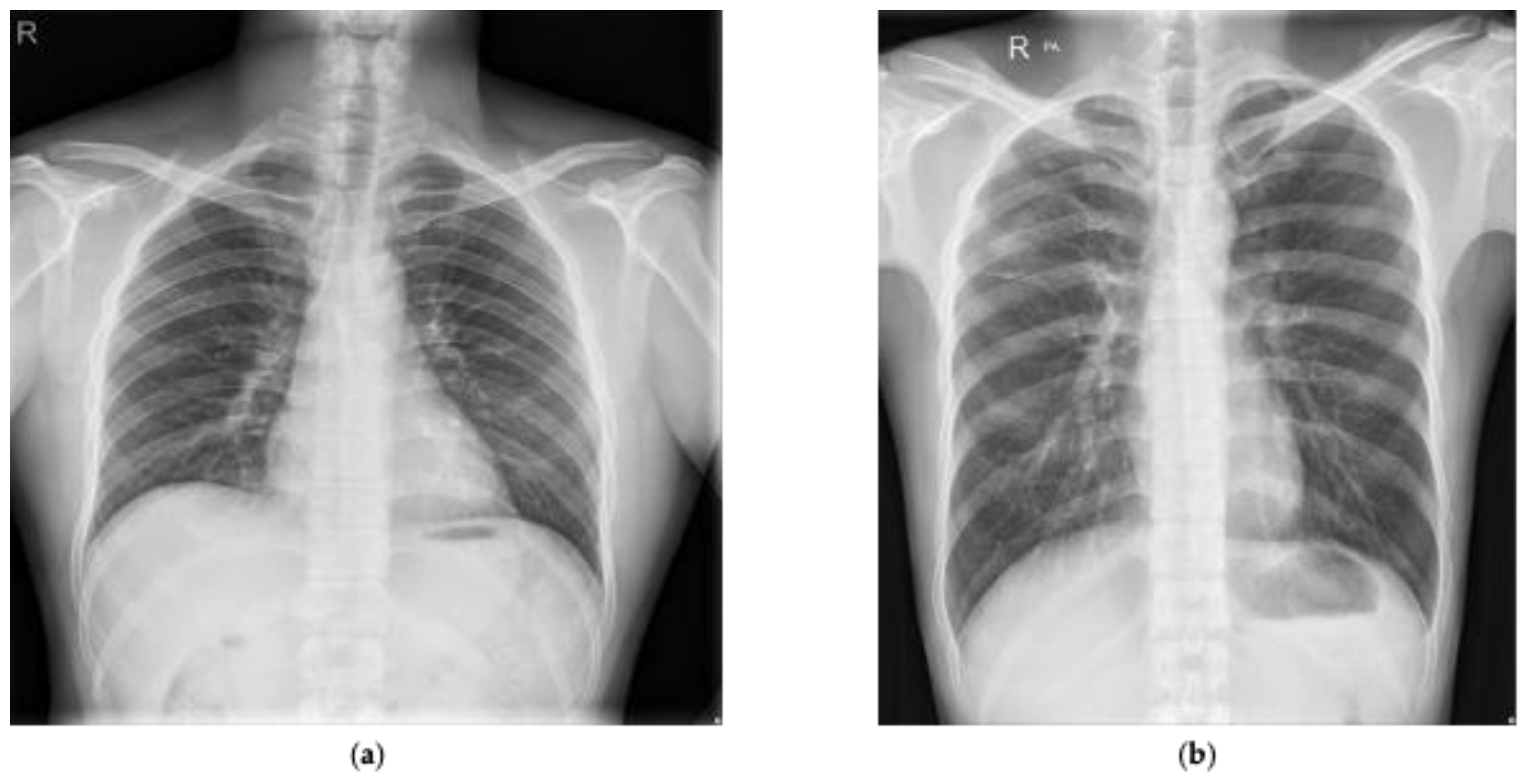

We also used the publicly available Shenzhen dataset, marked by radiologists for the detection of tuberculosis [44,45,46,47]. This dataset was composed of 662 annotated chest radiographs, with 336 marked as tuberculosis and the rest marked as normal.

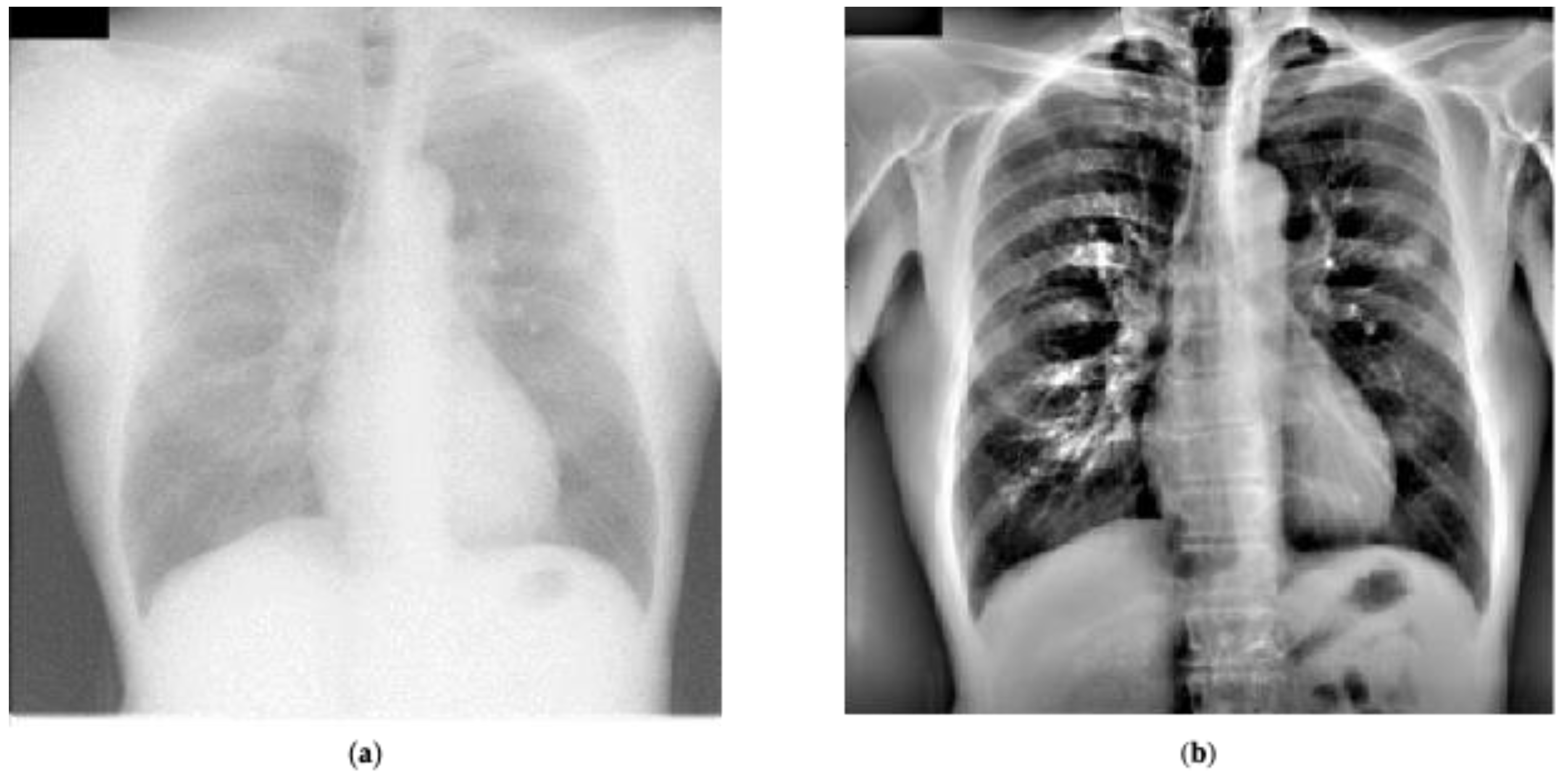

Figure 2 presents two examples from the Shenzhen dataset that radiologists marked as normal and tuberculosis, respectively. Manual lung masks are publicly available for 566 cases in the Shenzhen dataset, and we included only those 566 cases in this research.

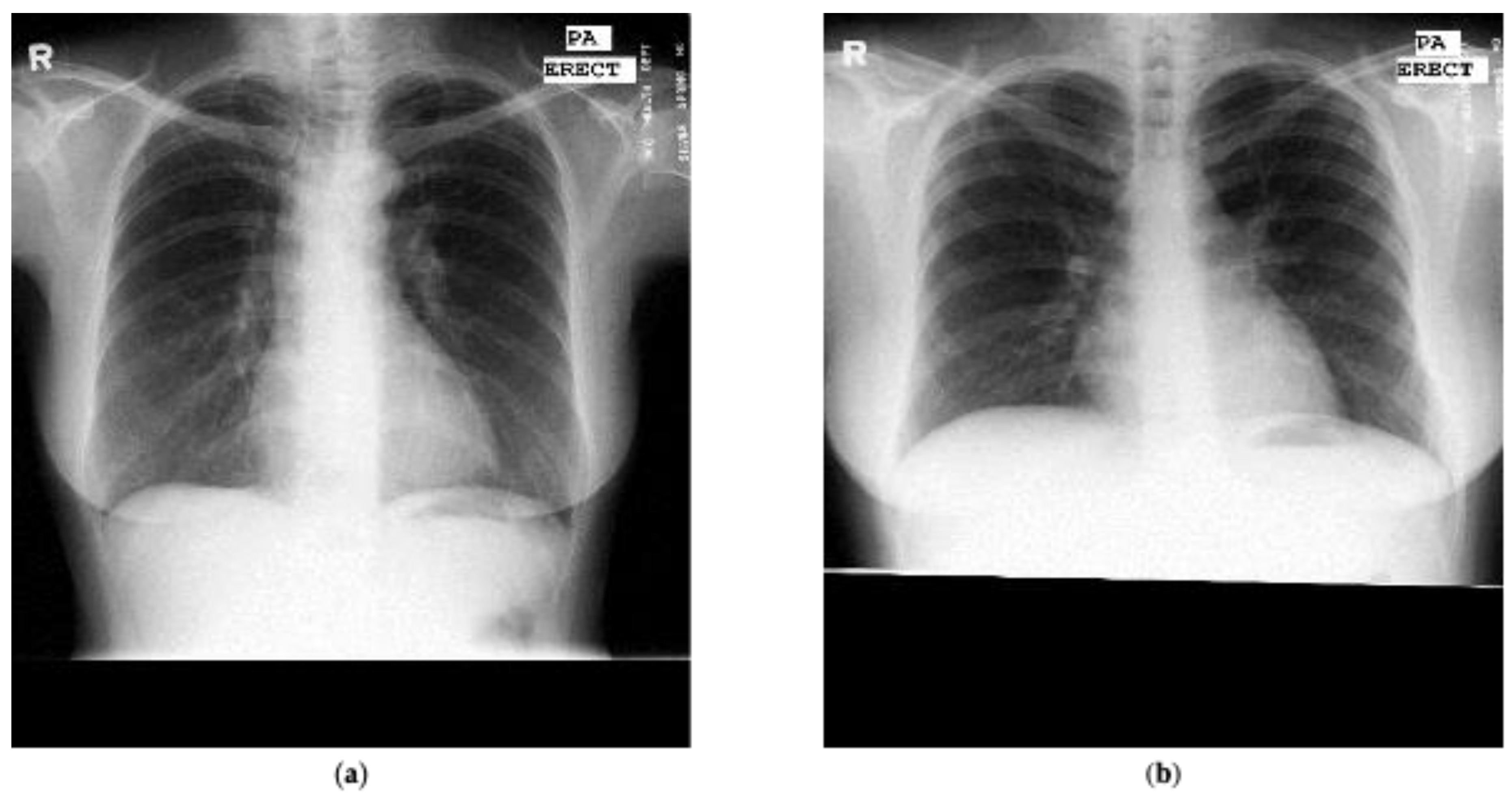

The Montgomery dataset [45,46] was marked by radiologists for tuberculosis detection and comprised 80 chest radiographs marked as normal and 58 marked as tuberculosis. As with the Shenzhen dataset, the radiologist-marked lung masks are publicly available.

Figure 3 presents one example marked as normal and one example marked as tuberculosis from the Montgomery dataset.

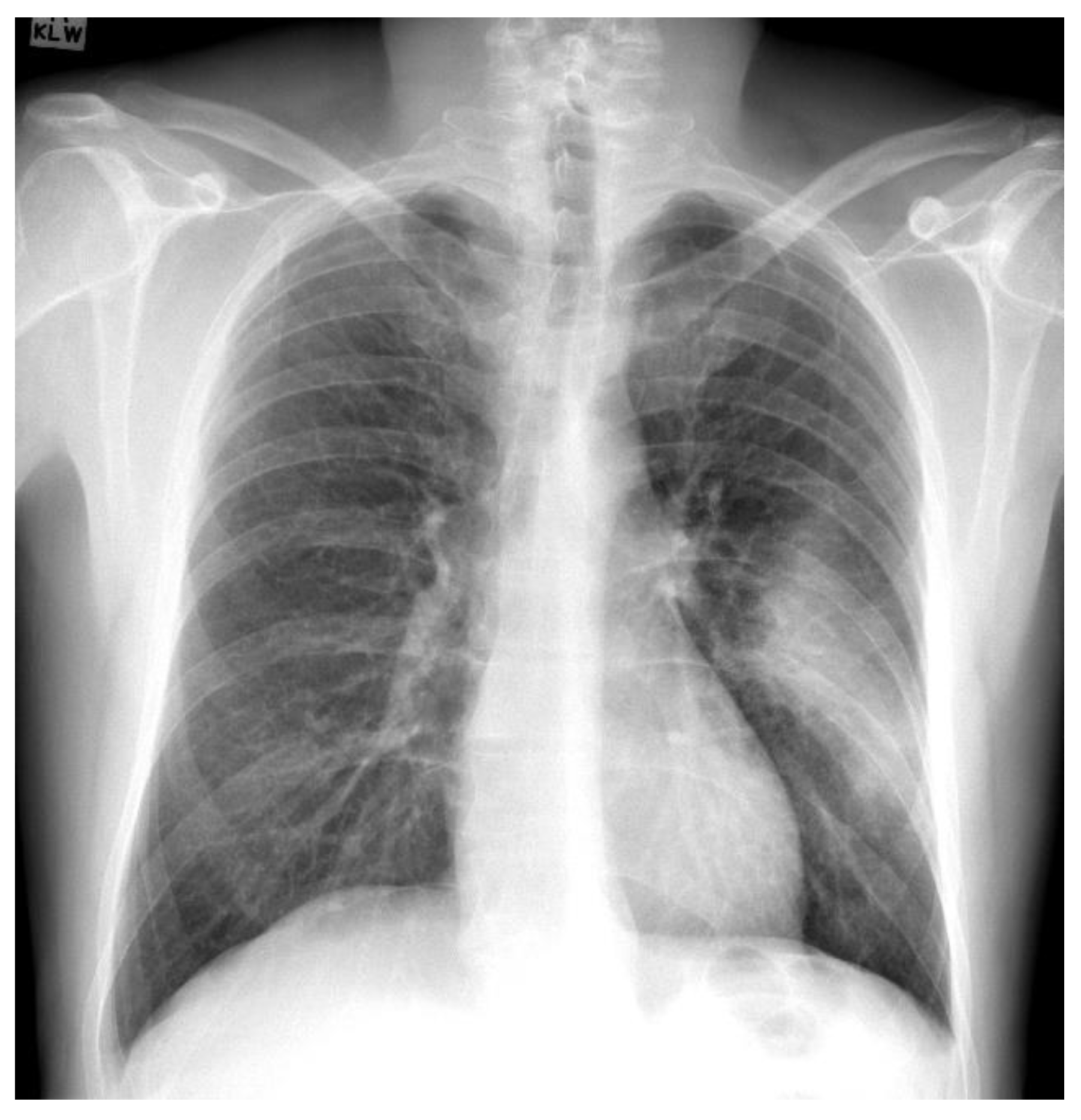

Another publicly available dataset, which we refer to as the pneumonia dataset, contained chest radiographs marked for pneumonia detection [48]. It contained 5856 chest radiographs manually annotated by radiologists as normal, bacterial pneumonia or viral pneumonia.

Table 2 presents the composition of the pneumonia dataset.

Figure 4 presents sample chest radiographs from the dataset.

Finally, for COVID-19 cases, we used the publicly available datasets in [49,50]. The dataset presented in [49] was curated by Dr. Joseph Cohen, a postdoctoral fellow at the University of Montreal, and contained images marked as COVID-19 by radiologists. From this dataset, we used only the 239 frontal-view chest radiographs marked as COVID-19. Note that the dataset used for this paper was downloaded on 16 May 2020. Because the dataset is frequently updated, the number of images might differ.

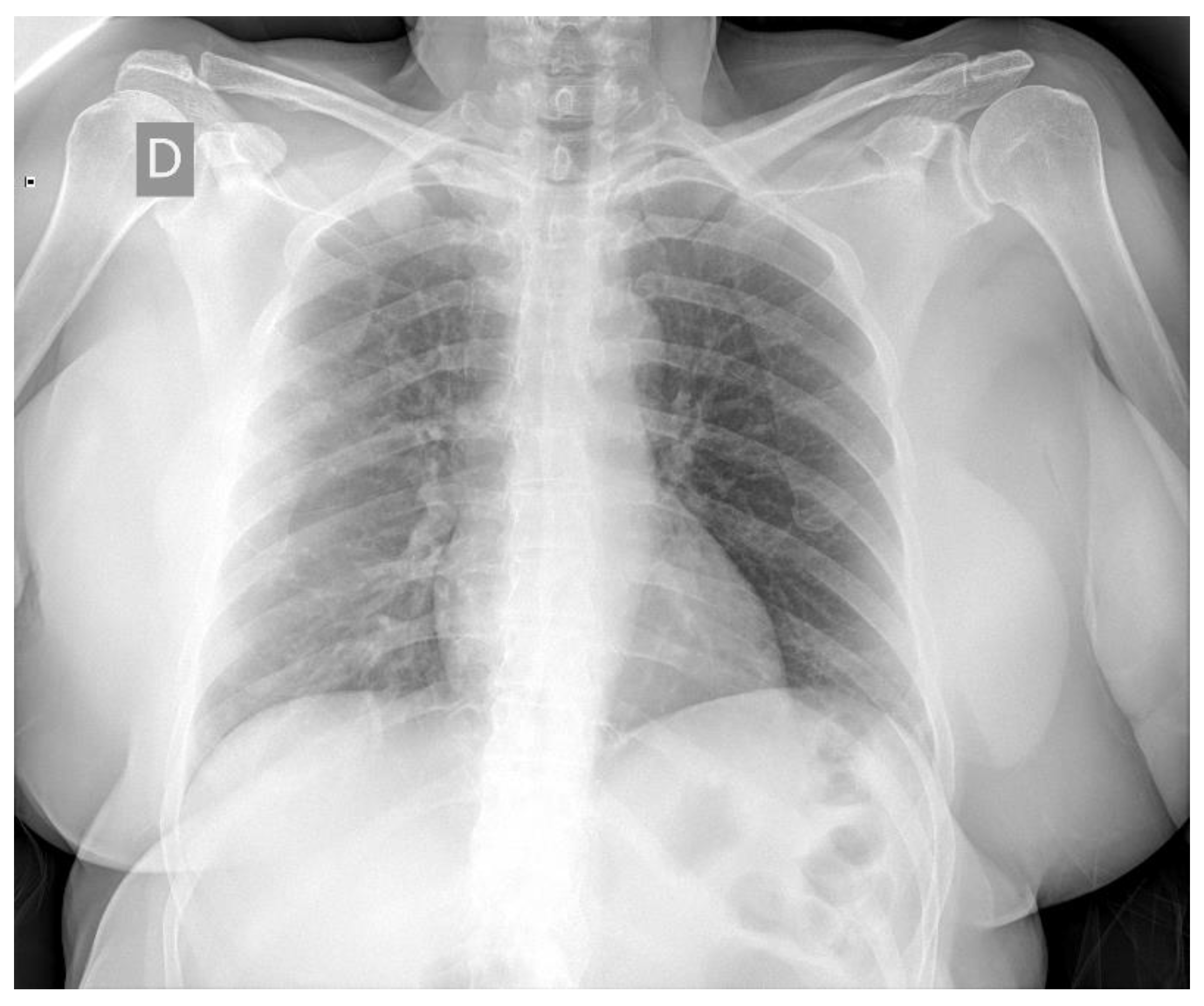

Figure 5 presents an example from this dataset marked as COVID-19 by radiologists. In [50], a large dataset from the Valencian Region Medical ImageBank (BIMCV) was introduced, containing chest radiographs of COVID-19 patients marked by expert readers. This dataset contained radiological findings and locations, pathologies, radiological reports, and DICOM metadata for 2265 chest radiographs belonging to 1311 patients. To the best of our knowledge, this is the largest publicly available COVID-19 dataset of chest radiographs. Note, however, that it contains no normal cases. As reported in the paper associated with the dataset [50], all studies of patients in the dataset had at least one positive RT-PCR test, so we considered all images in the BIMCV dataset to be COVID-19 cases.

Figure 6 presents an example from this dataset marked as COVID-19 by radiologists. We used all of the datasets except BIMCV for 10-fold cross validation, and we used the BIMCV COVID-19 dataset for a separate sensitivity analysis experiment. We also conducted a thorough hold-out validation experiment in which all of the available datasets were split into training and testing sets.
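The counts in this section indicate how severe the class imbalance is. The arithmetic below makes two simplifying assumptions. First, every non-COVID-19 image listed above enters one training pool before any fold split, with BIMCV excluded. Second, only the 239 COVID-19 radiographs from [49] form the minority class. Actual per-fold training counts under 10-fold cross validation are smaller. Rounding the ratio gives an approximate number of mini-sets for a scheme that pairs all COVID-19 images with a similar number of non-COVID-19 images.

```python
# Non-COVID-19 image counts as described in this section.
non_covid_counts = {
    "nodule dataset [42]": 247,
    "Shenzhen (cases with lung masks)": 566,
    "Montgomery (normal + tuberculosis)": 80 + 58,
    "pneumonia dataset [48]": 5856,
}
covid_count = 239  # frontal-view COVID-19 radiographs from [49]

total_non_covid = sum(non_covid_counts.values())
imbalance_ratio = total_non_covid / covid_count
n_mini_sets = round(imbalance_ratio)
print(total_non_covid, round(imbalance_ratio, 1), n_mini_sets)  # 6807 28.5 28
```

Under these assumptions there are roughly 28 non-COVID-19 images for every COVID-19 image.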

6. Discussion

Lung segmentation results presented in Table 3 indicate that our proposed U-Net architecture performed well across two test datasets, with IoU values of 0.95 and 0.96. Adding these lung masks to the preprocessing used by the enhanced baseline system and the recommended system improved performance by excluding irrelevant spatial information from the features generated by the CNNs. Classification results presented in Table 5, Table 6, Table 7 and Table 8 clearly indicate that preprocessing, which includes lung segmentation, significantly improves system performance in terms of sensitivity and precision. In the 10-fold cross validation study, sensitivity improved by 11–22% for all the networks, and precision improved by at least 25% across all networks. It must also be noted that the chest radiographs used in this research were acquired with different projections: Posterior Anterior (PA), Anterior Posterior (AP) and AP-Supine. These projections differ in patient positioning and in the distance from the patient at which the radiograph is taken. Segmenting the lung region therefore helps the classification architectures overcome bias arising from these acquisition differences, further improving performance.
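The IoU values cited above compare a predicted lung mask P with a reference mask G as |P ∩ G| / |P ∪ G|. The minimal sketch below computes this for binary masks stored as nested lists. Returning 1.0 when both masks are empty is a convention assumed here, not one stated in the text, and the function name is hypothetical.

```python
from typing import List

Mask = List[List[int]]  # 1 = lung, 0 = background


def iou(pred: Mask, truth: Mask) -> float:
    """Intersection over Union of two equally shaped binary masks."""
    inter = union = 0
    for prow, trow in zip(pred, truth):
        for p, t in zip(prow, trow):
            inter += bool(p) and bool(t)  # pixel in both masks
            union += bool(p) or bool(t)   # pixel in either mask
    return inter / union if union else 1.0
```

An IoU of 0.95 therefore means that the overlap covers 95% of the combined area of the predicted and reference lung regions.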

Table 9, Table 10 and Table 12 clearly indicate that the recommended system using the transfer-to-transfer learning mechanism provided the best performance of all methods studied in this paper, for all performance metrics. There is a significant boost in sensitivity (about 10%) for all the networks. This training procedure addressed the class imbalance because the network weights were adjusted on each mini training set in turn, without any data augmentation. Starting from the recommended system, we removed the transfer-to-transfer learning to obtain the enhanced baseline. We then further removed the preprocessing and lung segmentation to obtain the baseline system. Thus, we were able to show the benefits of these algorithm components by ablation. We elected to preserve the standard CNN architectures and not perform any layer ablation, in order to preserve their original integrity. The main emphasis of this paper is on training mechanisms that combat class imbalance and aid the detection of COVID-19 on chest radiographs.

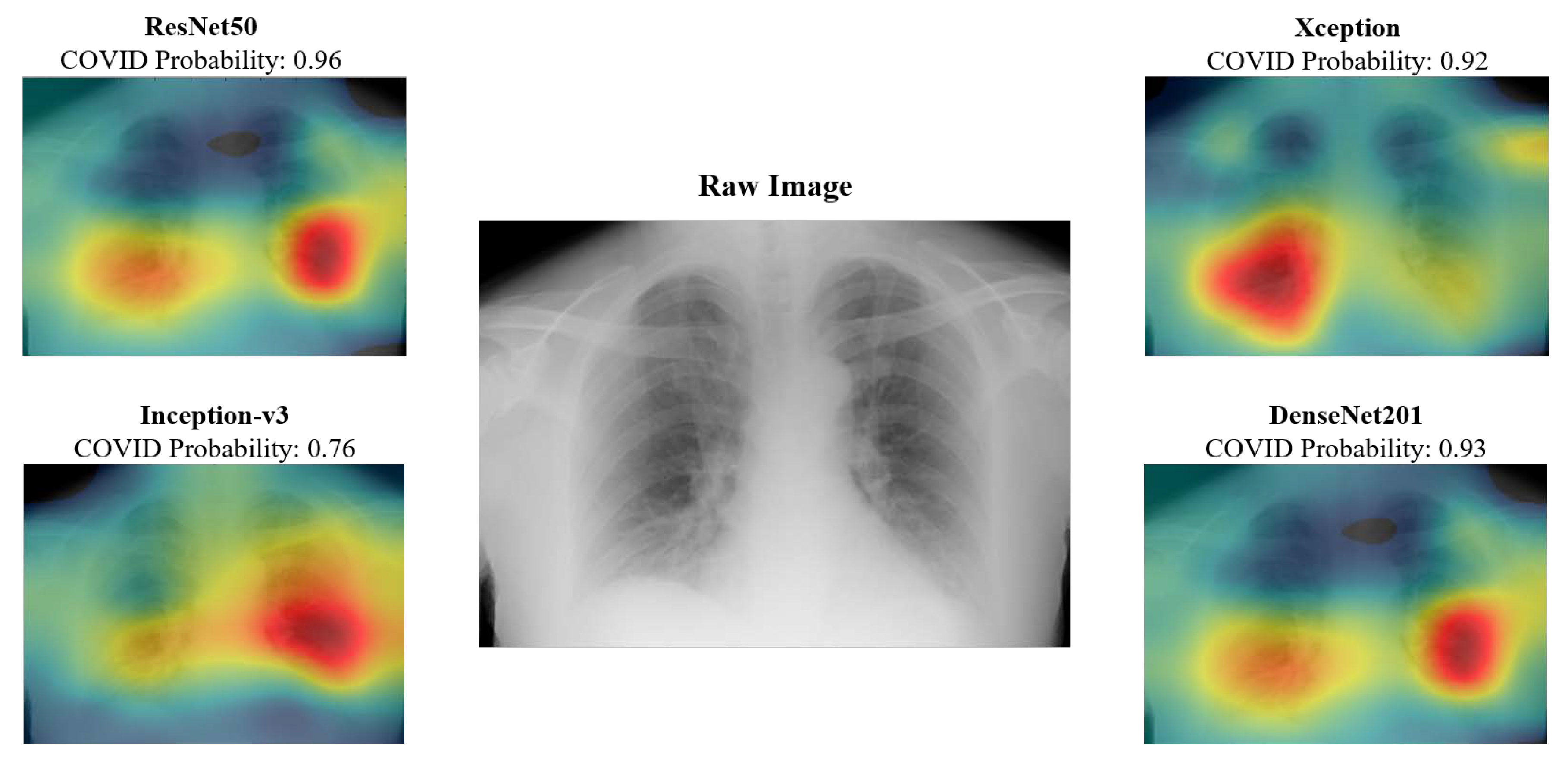

The class activation maps provide radiologists with insight into the anatomical structures that the various networks key in on. For instance, the maps presented in Figure 10 indicate that the lower right lung region of this patient contributed the most to the Xception network output, whereas for Inception-v3 it is the lower left lung region. These visualizations could give radiologists insight and serve as a valuable second opinion. CAD results could be provided for every model. Radiologists could then accept or reject each result based on whether they believed the class activation map was highlighting the medically relevant areas of the lung for a given patient.
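The text does not state which class activation mapping variant produced these maps. The sketch below therefore assumes the original CAM formulation, which applies to networks that end in global average pooling followed by a fully connected layer. Under that formulation, the map for class c is the weighted sum of the last convolutional feature maps A_k, using that layer's weights w_k for class c, followed here by a ReLU and scaling to [0, 1]. Upsampling the map to the radiograph's resolution is omitted, and the function name is hypothetical.

```python
from typing import List, Sequence

Map2D = List[List[float]]


def class_activation_map(feature_maps: Sequence[Map2D],
                         class_weights: Sequence[float]) -> Map2D:
    """CAM for one class: sum_k w_k * A_k, clipped at zero and scaled to [0, 1]."""
    h, w = len(feature_maps[0]), len(feature_maps[0][0])
    cam = [[sum(wk * fmap[r][c] for wk, fmap in zip(class_weights, feature_maps))
            for c in range(w)] for r in range(h)]
    cam = [[max(0.0, v) for v in row] for row in cam]  # keep positive evidence only
    peak = max(max(row) for row in cam)
    return [[v / peak for v in row] for row in cam] if peak > 0 else cam
```

Regions where the map approaches 1 are those that contributed most strongly to the class score. These are the areas a radiologist would inspect when judging whether a network focused on medically relevant parts of the lung.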

Table 7, Table 8 and Table 10 present the results for the BIMCV COVID-19 dataset. Because all the patients in this dataset were diagnosed with COVID-19, performance was studied in terms of true positives, false negatives, and sensitivity. Results indicate that our recommended system significantly outperforms the other approaches, achieving a sensitivity of 0.94 in both hold-out validation experiments. These experiments contained 2265 and 2139 COVID-19 chest radiographs for testing, respectively. Note that these results were achieved using a limited set of COVID-19 training images (239 and 365, respectively), which demonstrates the efficacy of the proposed approach.

Table 12 compares the performance of ‘ResNet50’ under the different training methodologies when testing on equal numbers of COVID-19 and non-COVID-19 images. Note that this experiment was still conducted with an imbalanced training dataset. Results clearly indicate that our recommended system performed best on all performance metrics studied in this paper. We did not run experiments on the newly released COVID-19 negative BIMCV dataset because its associated publication is not yet available. We would like to better understand how its cases were marked before using them for training or testing, to avoid introducing any bias. Studying the algorithm’s performance on cases marked as COVID-19 positive and negative from the same set of hospitals would provide better insight into its performance.

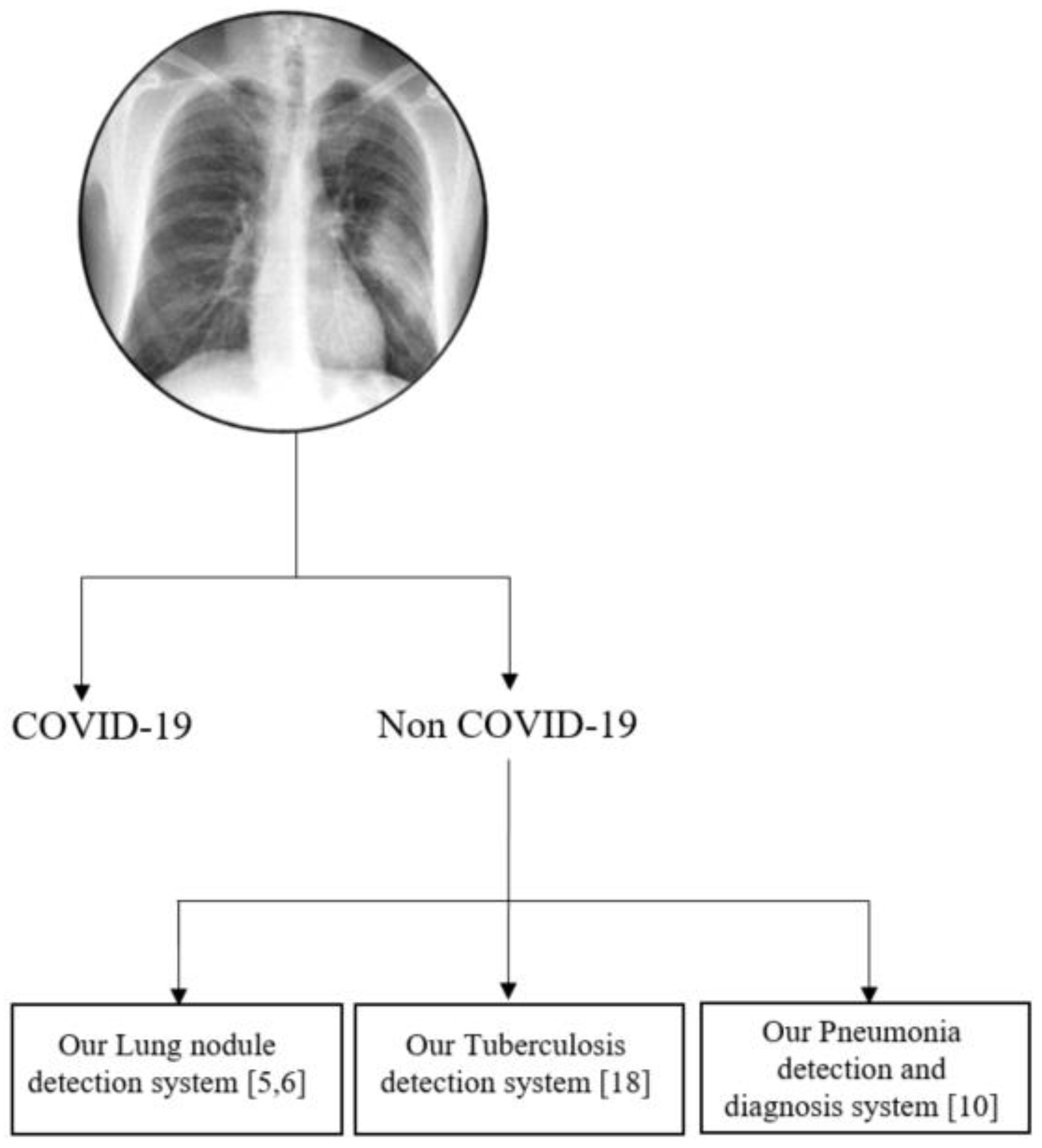

Figure 11 presents the roadmap of our integrated CAD system for disparate applications in chest radiographs. We intend to integrate the proposed COVID-19 detection capability into our existing CAD systems for various lung diseases in chest radiographs. Our CAD system for the detection of tuberculosis achieved an overall accuracy of 91% on the Shenzhen dataset [18]. In [10], our CAD system achieved overall accuracies of 98.9% and 97.9% for pneumonia detection and diagnosis, respectively, using the pneumonia dataset in Table 1. In upcoming flu seasons, the proposed technology could be even more diagnostically important for distinguishing seasonal influenza cases with pneumonia from COVID-19.

7. Conclusions

CAD algorithms for COVID-19 detection using chest radiographs could be a valuable new tool in the fight against this disease. Such algorithms could potentially provide fast and valuable information regarding a patient’s diagnosis. In this research, we have proposed a novel approach for CAD of COVID-19 in chest radiographs that is robust to class imbalance in training data. We believe such robustness is important because class imbalance is a frequent problem in medical image analysis and classification. This is particularly true for contemporary COVID-19 detection.

Table 9, Table 10 and Table 12 indicate that our recommended system using the transfer-to-transfer learning methodology significantly outperforms the traditional approaches. In fact, the minimum sensitivity across all networks and testing conditions is 88%, despite class imbalance. Our results also showed that selected preprocessing and lung segmentation provided a significant boost in performance in this application. Class activation maps can be readily generated along with the CAD output to give radiologists insight into the spatial areas that are most important in the CAD classification. This could be important for COVID-19 detection, where radiologists may be looking for specific abnormalities appearing in some areas of the lungs.

We believe that our transfer-to-transfer learning approach helps maximize inter-class variance, as every minority class image is trained alongside batches of majority class images. We acknowledge that our proposed approach is similar to duplicating minority class images to match the number of majority class images. However, our approach is memory efficient, and each majority class image is seen only once by the network, thereby reducing training time significantly. Furthermore, this CAD system can easily be “transfer-learned” further as new labeled data become available. This approach would let us update the network from its current state as more chest radiographs become accessible, instead of retraining from scratch as in a traditional approach.

Because these results were obtained on publicly available datasets, they set a benchmark for future research efforts. First, we studied the performance of traditional transfer learning-based approaches using the ResNet50, DenseNet201, Inception-v3, and Xception networks. These results were significantly improved by applying LCE and lung segmentation before traditional transfer learning.