1. Introduction

Peptide-mediated biomineralization is a promising bio-inspired technique for creating inorganic nanomaterials that possess functional properties [1]. This biomimetic technique has produced nanomaterials for a wide array of applications, such as highly efficient catalysts [2], plasmonic materials with tailorable optical properties [3], and bimetallic nanoparticles for electrooxidation [4], among others. These materials could be produced because the biomineralization peptide regulates the size, shape, and morphology of the nanomaterial. This level of control arises from the binding affinity of the biomineralization peptide towards a particular material surface, which leads to selective binding of the peptide at specific faces of the growing nanomaterial [5,6]. The binding affinity of the peptide is known to be affected by peptide properties such as the oligomerization state [7], conformation [8], and sequence [9].

To broaden the utility of peptide-mediated biomineralization as an effective platform for nanomaterial synthesis, a method for the quick identification of biomineralization peptide sequences should be developed. Currently, the discovery of biomineralization peptides depends mainly on tedious laboratory assays that are combinatorial in nature. Since acquisition of experimental data is costly, typically only small data sets are available in this domain [10]. Modern computational tools such as artificial intelligence (AI), data mining, and machine learning (ML) therefore present significant potential to address this problem, since these tools have been used for the discovery of novel materials [11,12,13] and their properties [14,15,16]. Data from both experimental results and theoretical simulations can be used to train ML models [17,18]. ML may therefore accelerate the discovery of novel biomineralization peptide sequences, which will lower the costs of producing nanomaterials through biomineralization. However, mainstream ML tools used for Big Data are not optimized for dealing with small data sets [19]. Interactive ML techniques that combine expert knowledge with information from these small data sets are more appropriate for such applications [20].

Past work on classifying biomineralization peptide binding affinity has reported models that classify biomineralization peptide sequences into strong and weak binders. These models used the Needleman–Wunsch algorithm [21], graph theory [22], and support vector machines (SVMs) [23] for the classification task. While these classification algorithms demonstrate satisfactory predictive ability, the impact of the variables on the classification task is not clearly interpretable. This characteristic limits the practicality of the classification model and makes the results difficult for material scientists to interpret. Thus, a rule-based algorithm is more appropriate for this kind of application. A rule-based algorithm clearly articulates the classification process through a set of rules extracted from the data set, leading to transparent and unambiguous decision-making. Predictive models that rely on the generation of rules, such as rough sets and decision trees, can provide effective decision support for material scientists via case-based reasoning [24].

In this work, we develop a rule-based classification model built using the hyperbox algorithm for predicting biomineralization peptide binding class. The rest of this article is organized as follows. Section 2 describes the methodology. Section 3 applies the classifier model to this nanomaterial discovery problem. Section 4 gives conclusions and discusses prospects for future work.

2. Methodology

The hyperbox model developed here is an extension of the work of Xu and Papageorgiou [25]. Their original approach used a mixed integer linear programming (MILP) model to generate non-overlapping hyperboxes to enclose clusters of data; additional hyperboxes can be determined iteratively to improve classification performance. Further algorithmic improvements were reported by Maskooki [26] and Yang et al. [27]. The presence of user-defined parameters makes the training of hyperbox models highly interactive. Expert inputs can be integrated into the procedure to augment small data sets with mechanistic domain knowledge. The resulting optimized hyperboxes constitute a rule-based model that can be used as a classification model. One of the key advantages of this technique is that the hyperboxes can be readily interpreted as IF–THEN rules, which can be used effectively and intuitively to support decisions [27].

The main features of the model extension developed here are as follows:

The model is a binary classifier in which a pre-defined number of hyperboxes are meant to enclose samples that belong to the positive group and to exclude samples that do not. The initial default assumption is that of negative classification, but the activation of at least one rule results in a positive classification. This approach eliminates the need for negative classification rules. The number of hyperboxes is user-defined, and the training is done interactively with expert knowledge inputs to augment the small data sets that are typical in this domain [19,20]. This consideration makes it possible to identify alternative rule sets which may make more sense to the expert or may improve the performance of the model. However, a balance should be struck between generalizability and over-fitting.

A user-defined margin is utilized as the minimum distance needed to separate the negative samples from the boundaries of the resulting hyperboxes. Thus, each hyperbox actually consists of concentric inner and outer hyperboxes separated by the said margin. This feature serves the same purpose as the gap between parallel hyperplanes in SVMs.

The model accounts for Type I (number of false positives) and Type II (number of false negatives) errors such that a user may select which type of error should be minimized while indicating an upper limit for the other. This feature was introduced in an extension of the hyperbox model by Yang et al. [27].

The model defines the dimensions of the hyperboxes needed to adequately classify the training data. These dimensions may extend to infinity, rendering some attributes insignificant for classification. This feature is achieved by enabling the model to remove the lower bound and/or upper bound of each hyperbox along each dimension, as needed. Reduction of attributes can result in more parsimonious ML models and is thus regarded as an important feature during training [28].

The overall objective of the model is to minimize Type I errors, α, while ensuring that Type II errors, β, are kept below a defined threshold ε. Type I (α) and Type II (β) errors are then defined, where j represents a sample in the training set ST.
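As a rough illustration of how the two error counts could be tallied, the sketch below counts false positives (α) and false negatives (β) from true and predicted labels and checks β against the threshold ε. The function names `count_errors` and `is_feasible`, the boolean label encoding (True for the positive class), the reading of the threshold as "does not exceed ε", and the example labels are all assumptions made for illustration; they are not taken from the original MILP formulation.

```python
from typing import Sequence, Tuple


def count_errors(y_true: Sequence[bool], y_pred: Sequence[bool]) -> Tuple[int, int]:
    """Return (alpha, beta): the numbers of false positives and false negatives."""
    if len(y_true) != len(y_pred):
        raise ValueError("label sequences must have the same length")
    # Type I error: predicted positive although the sample is negative.
    alpha = sum(1 for t, p in zip(y_true, y_pred) if p and not t)
    # Type II error: predicted negative although the sample is positive.
    beta = sum(1 for t, p in zip(y_true, y_pred) if t and not p)
    return alpha, beta


def is_feasible(beta: int, epsilon: int) -> bool:
    """Assumed reading of the threshold: beta must not exceed epsilon."""
    return beta <= epsilon


# Hypothetical labels for five samples (True = positive class).
example_true = [True, True, False, False, True]
example_pred = [True, False, False, True, True]
example_alpha, example_beta = count_errors(example_true, example_pred)
```

In this toy case one negative sample is predicted positive and one positive sample is missed, so both counts equal 1.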

Each sample j in the training data has a performance level in each attribute i. The lower and upper limits along dimension i for hyperbox k are determined to enclose as many positive samples as possible. The dimensions of the outer hyperbox and the dimensions of the inner hyperbox are separated by the user-defined margin Δ.

The possibility of semi-infinite dimensions (i.e., having no lower limit or no upper limit) or infinite dimensions (i.e., having neither a lower nor an upper limit) for the hyperbox is also considered. In the latter case, the absence of lower and upper limits allows the hyperbox to be projected to a lower dimensional space, and removes the corresponding attribute from the associated decision rule.
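The effect of semi-infinite and infinite dimensions on the resulting decision rule can be sketched as follows. This is only an illustrative helper, not part of the original model: the function name `rule_to_text`, the use of `math.inf` to mark a removed bound, the exact wording of the rendered rule, and the example bounds are all assumptions.

```python
import math
from typing import Dict, Tuple

Bounds = Dict[str, Tuple[float, float]]  # attribute name -> (lower, upper)


def rule_to_text(box: Bounds, label: str = "Strong") -> str:
    """Render one hyperbox as an IF-THEN rule, skipping unbounded sides."""
    conditions = []
    for name, (lower, upper) in box.items():
        has_lower = not math.isinf(lower)
        has_upper = not math.isinf(upper)
        if has_lower and has_upper:
            conditions.append(f"{lower} <= {name} <= {upper}")
        elif has_lower:
            conditions.append(f"{name} >= {lower}")
        elif has_upper:
            conditions.append(f"{name} <= {upper}")
        # Both sides unbounded: the attribute drops out of the rule.
    if not conditions:
        return f"binding is {label}"
    return "IF " + " and ".join(conditions) + f" THEN binding is {label}"


# Hypothetical bounds on normalized attributes, chosen only for illustration.
example_box = {
    "K3": (-math.inf, 0.6),       # semi-infinite: no lower limit
    "K4": (-math.inf, math.inf),  # infinite: attribute is removed
    "K10": (0.2, 0.8),            # bounded on both sides
}
example_rule = rule_to_text(example_box)
```

Here the attribute with no limits on either side does not appear in the rendered rule at all, which mirrors the projection to a lower dimensional space described above.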

When sample j lies outside the boundaries of the hyperbox, it may lie below the lower limit of hyperbox k in dimension i by more than the user-defined margin, as in (11), or it may lie above the upper limit of hyperbox k in dimension i by more than the user-defined margin, as in (12). If the sample satisfies either of the two conditions, then the sample is outside the hyperbox and the binary variable associated with the violated limit will be equal to 1. Consequently, in such instances, the sample should be considered a negative sample.

In instances in which there are multiple hyperboxes, a sample is said to be a positive sample,

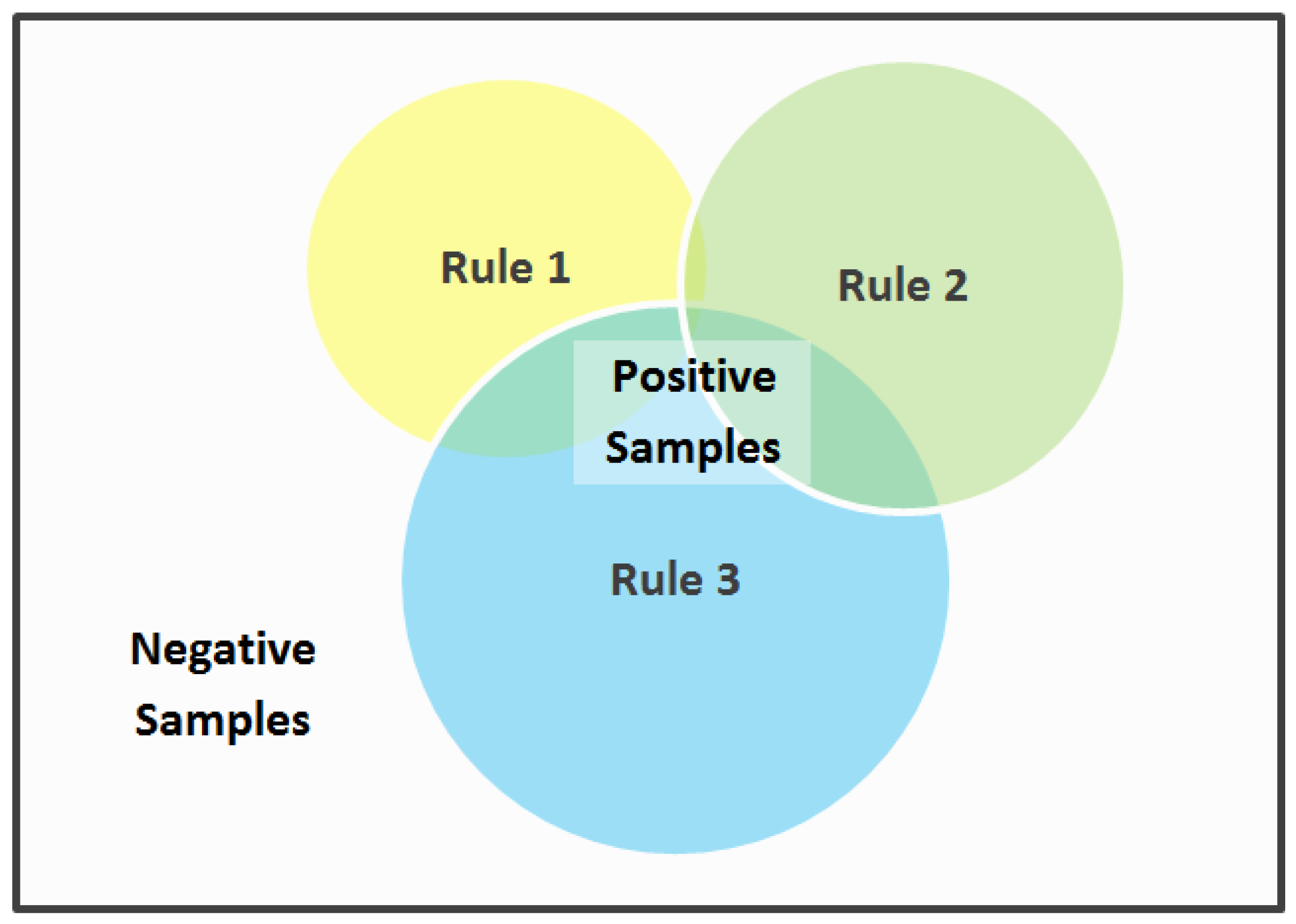

, if it is enclosed by at least one hyperbox. Thus, each hyperbox corresponds to one rule, and the resulting classifier consists of a set of disjunctive rules. The relationship among a set of such rules can be represented by a Venn diagram as shown in

Figure 1. Samples that do not activate any of the rules (i.e., are not enclosed by any of the hyperboxes) are classified as negative by default.
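The disjunctive logic and the role of the margin can be sketched in a few lines. This is a minimal sketch under stated assumptions rather than the trained model: the names `inside`, `clearly_outside`, and `classify`, the dictionary representation of a hyperbox, and the example boxes and samples are hypothetical, and the stored limits are assumed to be those of the inner hyperbox.

```python
import math
from typing import Dict, List, Tuple

Bounds = Dict[str, Tuple[float, float]]  # attribute name -> (lower, upper)
Sample = Dict[str, float]


def inside(sample: Sample, box: Bounds) -> bool:
    """True if the sample lies within the box along every bounded dimension."""
    return all(lower <= sample[name] <= upper for name, (lower, upper) in box.items())


def clearly_outside(sample: Sample, box: Bounds, margin: float) -> bool:
    """True if the sample is beyond some limit by more than the margin."""
    return any(
        sample[name] < lower - margin or sample[name] > upper + margin
        for name, (lower, upper) in box.items()
    )


def classify(sample: Sample, boxes: List[Bounds]) -> bool:
    """Disjunctive rule set: positive if at least one hyperbox encloses the sample."""
    return any(inside(sample, box) for box in boxes)


# Hypothetical rule set with two hyperboxes on normalized attributes.
example_boxes = [
    {"K3": (-math.inf, 0.5), "K10": (0.2, 0.8)},
    {"K6": (0.7, math.inf)},
]
sample_a = {"K3": 0.4, "K6": 0.1, "K10": 0.5}  # enclosed by the first box
sample_b = {"K3": 0.9, "K6": 0.9, "K10": 0.1}  # enclosed by the second box
sample_c = {"K3": 0.9, "K6": 0.1, "K10": 0.5}  # enclosed by neither box
```

A sample such as `sample_c` activates no rule and therefore falls back to the default negative classification; `clearly_outside` checks the stricter condition that a negative training sample is separated from a box by more than the margin.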

Finally, the binary variables are as follows:

This MILP model can be solved to global optimality using the branch-and-bound algorithm, which is available as a standard feature in many commercial optimization software packages. Alternative solutions (rule sets) can be determined for any given MILP using standard integer-cut features in such software; additional solutions can also be found by adjusting the model parameters.
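For reference, one common form of integer cut for binary variables is shown below. It is a generic textbook construction and not necessarily the exact cut applied by the software used here; the symbols $y_m$, $B$, and $N$ are introduced only for this illustration. If $y_m^{*}$ is a previously found solution, with $B = \{m : y_m^{*} = 1\}$ and $N = \{m : y_m^{*} = 0\}$, then adding the following constraint excludes that solution while leaving every other binary assignment feasible:

```latex
\sum_{m \in B} y_m - \sum_{m \in N} y_m \le |B| - 1
```

Re-solving the MILP with this constraint added yields the next-best rule set, and repeating the step enumerates further alternatives.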

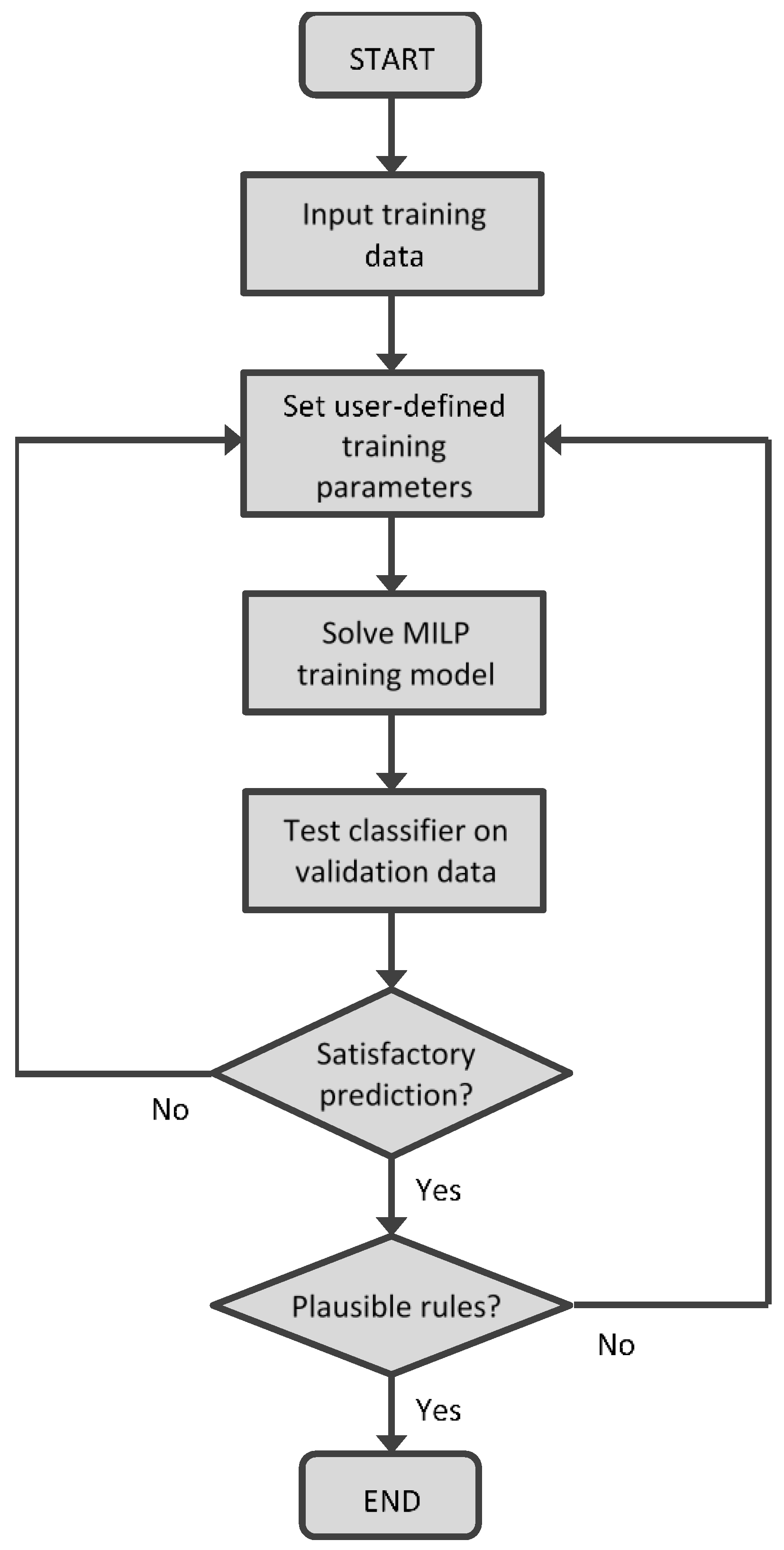

Figure 2 shows the interactive training procedure for the hyperbox classifier.

The case study utilizes data from the work of Janairo [23], which examined the classification of biomineralization peptide binding affinity using SVM. There are 31 cases, each representing a biomineralization peptide sequence. These sequences are characterized by 10 parameters, called the Kidera factors, and the peptides are classified into two categories of either weak or strong binding affinity. Kidera factors are descriptors related to the structure and physico-chemical properties of proteins derived from rigorous statistical analyses [29]. The 10 Kidera factors are K1: helix/bend preference, K2: side-chain size, K3: extended structure preference, K4: hydrophobicity, K5: double-bend preference, K6: partial specific volume, K7: flat extended preference, K8: occurrence in alpha region, K9: pK-C, and K10: surrounding hydrophobicity. The datapoints were randomized and divided into two sets, with 20 datapoints used for training and the remaining 11 used for validation. The data were initially normalized along each dimension i using the lowest and the largest values observed in that dimension among all samples j. The raw data for all 31 datapoints are included in Table S1 of the supplementary material.
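The data preparation described above can be sketched as follows. The exact normalization formula did not survive in this text, so the sketch assumes conventional min-max scaling to the range [0, 1]; the function names `min_max_normalize` and `split_indices`, the fixed random seed, and the toy data are likewise assumptions made for illustration.

```python
import random
from typing import List, Tuple


def min_max_normalize(rows: List[List[float]]) -> List[List[float]]:
    """Rescale each column to [0, 1] using its lowest and largest values."""
    columns = list(zip(*rows))
    lows = [min(col) for col in columns]
    highs = [max(col) for col in columns]
    normalized = []
    for row in rows:
        scaled = []
        for value, low, high in zip(row, lows, highs):
            span = high - low
            # A constant column carries no information; map it to 0.0.
            scaled.append((value - low) / span if span else 0.0)
        normalized.append(scaled)
    return normalized


def split_indices(n_samples: int, n_train: int, seed: int = 0) -> Tuple[List[int], List[int]]:
    """Shuffle sample indices and split them into training and validation sets."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    return indices[:n_train], indices[n_train:]


# 31 peptides split into 20 training and 11 validation samples, as in the text.
train_idx, valid_idx = split_indices(31, 20)

# Hypothetical toy data (three samples, two attributes), not the Kidera factors.
toy = min_max_normalize([[1.0, 10.0], [2.0, 30.0], [3.0, 20.0]])
```

Changing the seed gives a different random partition, which is one way the repeated sampling of training and validation data could be reproduced.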

The training was done by solving (1) subject to the constraints defined in (2) to (17) with Δ = 0.05, $Z^{L}_{ik} = -50.00$, and $Z^{U}_{ik} = 50.00$. The model was implemented in LINGO 18.0 and solved on a laptop with an Intel® Core™ i7-6500U 2.5 GHz CPU and 8.0 GB of RAM.

3. Case Study

Using just one hyperbox and a constraint that at least one attribute can be removed, the optimal solution was obtained in 0.20 s. The optimal solution correctly classified all training data, so that no Type I or Type II errors were incurred. The resulting dimensions for the 10 attributes considered are shown in Table 1, where shaded entries indicate that there is no limit for the lower or upper bound of the corresponding attribute.

Table 1 can be translated into IF–THEN rules as follows. Only five attributes remained relevant—K3, K6, K7, K9, and K10—with K3, K6, K7, and K9 having one-sided limits and K10 being the only attribute bound by an upper and a lower limit.

Using this rule to classify the validation data resulted in all eight weak-binding samples and all three strong-binding samples in the 11-sample validation set being correctly classified. The confusion matrix for this optimal rule is summarized in Table 2. The previous SVM classifier also identified K3, K6, K7, and K9 as significant descriptors for the prediction of the biomineralization peptide binding affinity class [23]. However, the SVM classifier arrived at this model after 12 optimization steps, as opposed to the quick and straightforward manner of the present hyperbox model. Moreover, using the same set of descriptors, the hyperbox model outperforms the SVM classifier, whose prediction accuracy was 92%.

It is also possible to identify alternative sets of rules from degenerate solutions or near-optimal solutions. An example is given in Table 3, which corresponds to the 10th solution for the problem considered. The rule generated is more complex than Rule 1 because seven attributes (i.e., K2, K5, K6, K7, K8, K9, and K10) are needed to predict peptide binding affinity.

This rule was able to classify the training data correctly, with α and β still equal to 0. Similarly, it was effective in classifying all 11 samples in the validation data, as summarized in Table 4.

The model is then extended to consider five hyperboxes. The consideration of additional hyperboxes makes it possible to identify alternative rule sets which can be used to classify data and potentially improve the performance of the model. Five hyperboxes, for example, translate to five different rules for classifying objects. Additional constraints to limit attribute overlaps between the boxes have been added, as indicated in (19) to (21).

Optimizing this model results in the boundaries summarized in Table 5. The optimal solution was obtained in 5 s of computational time and correctly classified seven out of nine positive samples and 11 out of 11 negative samples from the training data. The rules are disjunctive, and can be summarized as follows:

Rule 3a: IF and IF and IF and IF and IF and IF and IF THEN binding is Strong.

or

Rule 3b: IF and IF THEN binding is Strong.

or

Rule 3c: IF and IF and IF and IF THEN binding is Strong.

or

Rule 3d: IF and IF and IF and IF and IF and IF THEN binding is Strong.

or

Rule 3e: IF and IF and IF and IF and IF and IF THEN binding is Strong.

If a sample meets at least one of these rules, then the sample can be considered to have Strong binding. The rules were then used to evaluate the validation data; their performance is summarized in Table 6.

Again, an alternative set of rules (the fifth near-optimal solution) is explored, and the results are shown in Table 7. These can be translated into the following:

Rule 4a: IF and IF and IF and IF and IF THEN binding is Strong.

or

Rule 4b: IF and IF and IF and IF THEN binding is Strong.

or

Rule 4c: IF and IF and IF and IF THEN binding is Strong.

or

Rule 4d: IF and IF and IF THEN binding is Strong.

or

Rule 4e: IF and IF and IF and IF THEN binding is Strong.

This alternative solution was able to classify eight out of nine positive samples and 11 out of 11 negative samples, resulting in α = 0 and β = 1 for the training data set. The rules were then used for the validation data set, and the confusion matrix is shown in Table 8.
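The training counts reported above for this alternative rule set (eight of nine positive and 11 of 11 negative samples correctly classified) can be converted into the usual summary metrics. The sketch below is illustrative only: the function name `summarize` and the dictionary layout are assumptions, and it presumes that at least one positive and one negative sample are present.

```python
from typing import Dict


def summarize(tp: int, fn: int, tn: int, fp: int) -> Dict[str, float]:
    """Compute accuracy, sensitivity, and specificity from confusion-matrix counts."""
    total = tp + fn + tn + fp
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": tp / (tp + fn),  # share of positive samples recovered
        "specificity": tn / (tn + fp),  # share of negative samples recovered
    }


# Training counts taken from the text: 8 of 9 positives, 11 of 11 negatives.
training_metrics = summarize(tp=8, fn=1, tn=11, fp=0)
```

With these counts, the single false negative lowers sensitivity to 8/9 while specificity stays at 1, giving a training accuracy of 19/20.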

The performance of the algorithm was further tested by performing the procedure five more times using a different sampling of training and validation data each time. The k-fold validation was completed in 1 min and 38 s. The performance of the rules for training and validation data is summarized in Table 9.

The variables that the enhanced hyperbox algorithm automatically determined to be significant in predicting the biomineralization peptide binding class were K3 (extended structure preference), K6 (partial specific volume), K7 (flat extended preference), K9 (pK-C), and K10 (surrounding hydrophobicity). The inclusion of these variables affirms and reinforces the findings of past studies which systematically analyzed the factors that govern peptide binding to surfaces. The inclusion of the variables that relate to peptide conformation (K3 and K7) and protonation state (K9) is consistent with the findings of Hughes et al., who concluded that peptide conformation is a major feature that influences the size, shape, and stability of peptide-capped materials [30]. In addition, the incorporation of peptide variables related to water interaction, such as K6 and K10, likewise supports the results of the atomistic simulations of Verde et al., who reported how peptide solvation influences structural flexibility and surface adsorption [31]. Thus, the presented enhanced hyperbox model has formalized these associations into a concise classifier with a clearly articulated set of rules. Aside from transparency on how the decision was reached through rule generation, another major advantage of the enhanced hyperbox model is its accuracy, as shown in Table 10. The present model outperforms common machine learning algorithms, which were simulated in R [32], in terms of accuracy, sensitivity, and specificity.