Evaluation of the Quality of ChatGPT’s Responses to Top 20 Questions about Robotic Hip and Knee Arthroplasty: Findings, Perspectives and Critical Remarks on Healthcare Education

Abstract

1. Introduction

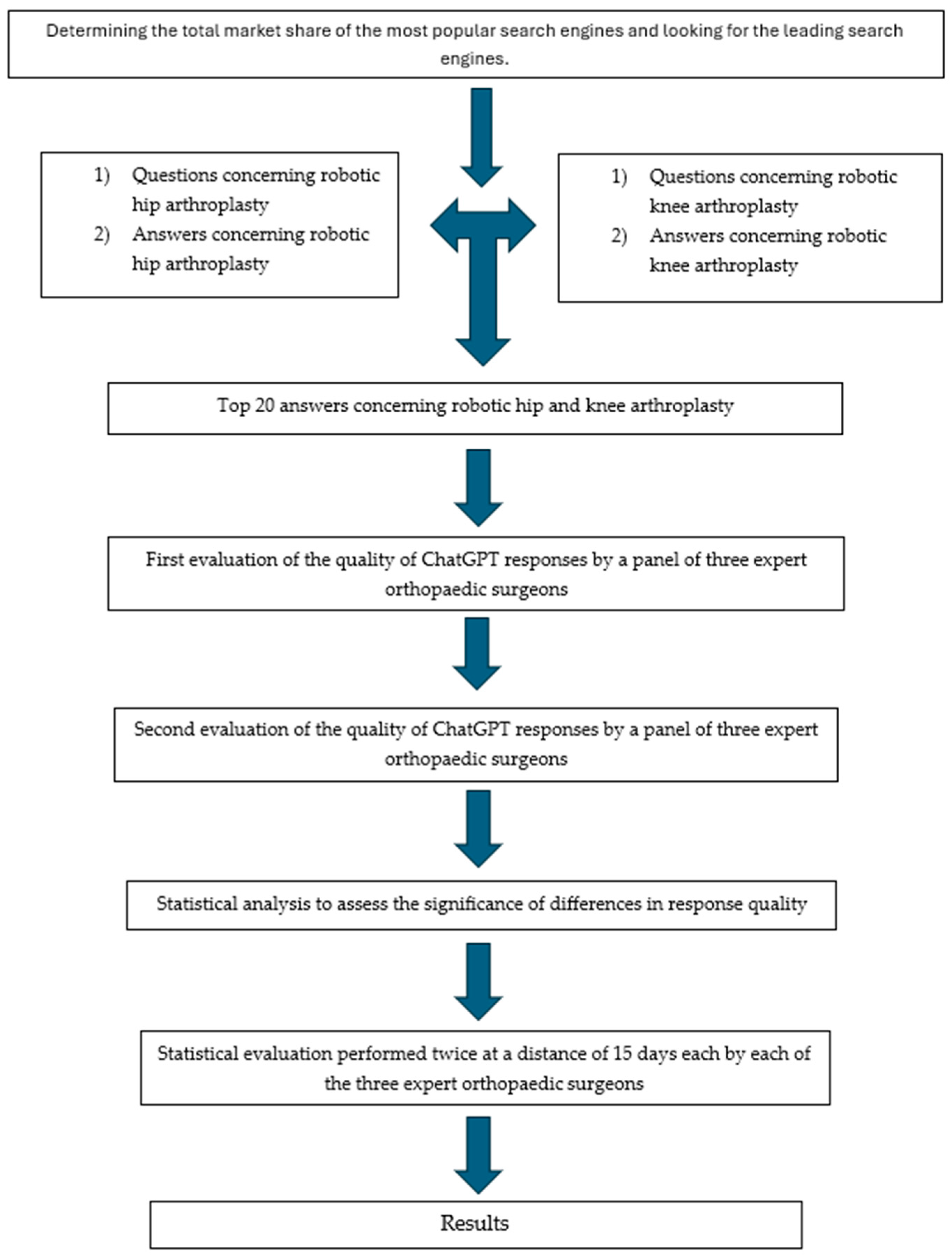

2. Materials and Methods

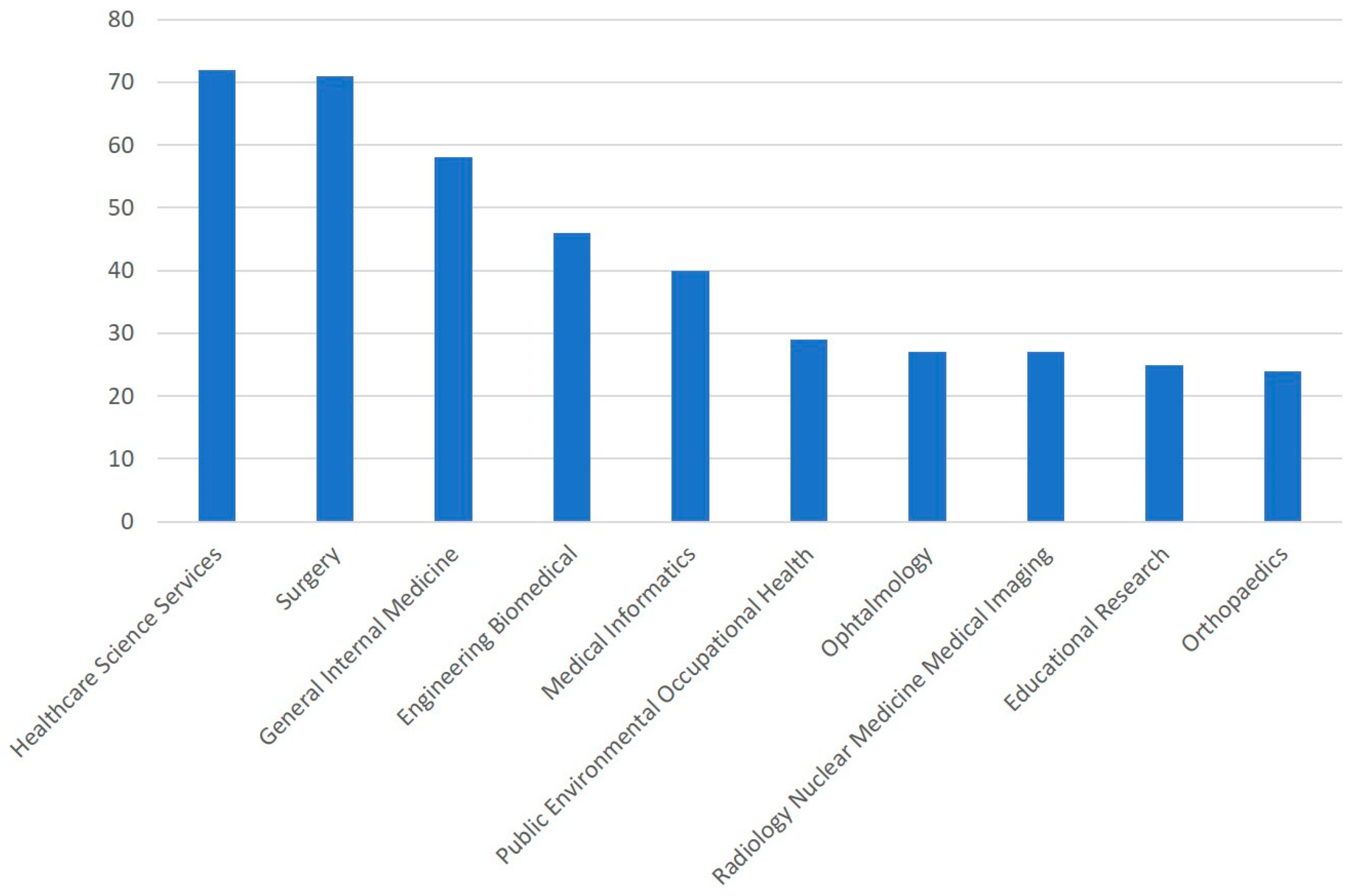

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Prompt | A. Exact wording of the prompt | B. Objectives of the prompt |
|---|---|---|
| First prompt utilized | “Write the top 20 questions performed on Google concerning robotic-assisted hip and knee arthroplasty” | 1. To gather and analyze the most common inquiries from the public; 2. To gain insights into public interest and concerns regarding the procedure |
| Second prompt utilized | “Now, please, provide detailed, complete and exhaustive answers to each of these questions concerning robotic hip and knee arthroplasty” | 1. To ensure thorough responses to the identified top 20 questions; 2. To enhance public understanding and address common concerns comprehensively |
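Read literally, the second prompt only works as a follow-up: it asks for answers to “these questions”, so the list produced by the first prompt must still be in the conversation. The sketch below records the two-step protocol as an ordered list of chat turns. It is an illustration under stated assumptions, not the authors' tooling: `build_protocol` is a hypothetical helper, the `role`/`content` dictionaries follow a common chat-message convention rather than anything described in the study, and no model is actually called.

```python
from typing import Dict, List

# Prompt wording copied verbatim from the prompt table (outer quotes dropped).
FIRST_PROMPT = (
    "Write the top 20 questions performed on Google concerning "
    "robotic-assisted hip and knee arthroplasty"
)
SECOND_PROMPT = (
    "Now, please, provide detailed, complete and exhaustive answers to each "
    "of these questions concerning robotic hip and knee arthroplasty"
)


def build_protocol(first_reply: str) -> List[Dict[str, str]]:
    """Return the two-prompt exchange as an ordered list of chat turns.

    Hypothetical helper: the role/content dictionaries follow a common
    chat-message convention and are an assumption, not the study's tooling.
    The model's first reply is kept so that "these questions" in the
    second prompt has something to refer to.
    """
    if not first_reply.strip():
        raise ValueError("first_reply (the list of 20 questions) is empty")
    return [
        {"role": "user", "content": FIRST_PROMPT},
        {"role": "assistant", "content": first_reply},
        {"role": "user", "content": SECOND_PROMPT},
    ]


if __name__ == "__main__":
    # Placeholder text standing in for ChatGPT's actual list of questions.
    history = build_protocol("<ChatGPT's list of 20 questions>")
    for turn in history:
        print(turn["role"], "->", turn["content"][:40])
```

Keeping both prompts in one conversation, rather than sending the second prompt on its own, is what lets the follow-up request resolve to the 20 questions generated in the first step.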

| | | Accuracy, hip | Accuracy, knee | Completeness, hip | Completeness, knee |
|---|---|---|---|---|---|
| Surgeon 1 | t1 | 4.4 (0.94) | 4.65 (0.59) | 1.95 (0.39) | 2 (0.32) |
| | t2 | 4.4 (0.94) | 4.65 (0.59) | 1.95 (0.39) | 2 (0.32) |
| | Within-surgeon p | 1 | 1 | 1 | 1 |
| Surgeon 2 | t1 | 4.3 (0.92) | 4.5 (0.61) | 2.15 (0.42) | 2.05 (0.39) |
| | t2 | 4.4 (0.94) | 4.65 (0.59) | 2.0 (0.32) | 2.1 (0.45) |
| | Within-surgeon p | 0.35 | 0.15 | 0.23 | 0.77 |
| Surgeon 3 | t1 | 4.3 (0.86) | 4.65 (0.59) | 2.05 (0.51) | 2.1 (0.45) |
| | t2 | 4.4 (0.82) | 4.5 (0.61) | 2.05 (0.39) | 2.15 (0.37) |
| | Within-surgeon p | 0.35 | 0.15 | 1 | 0.77 |
| Between-surgeon p | t1 | 1 | 1 | 0.49 | 1 |
| | t2 | 1 | 1 | 1 | 0.56 |
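As a worked check on the results table above, the sketch below transcribes the reported mean scores (the values in parentheses are left out) and computes, for each surgeon, the absolute change between t1 and t2 in every column. It does not reproduce the p-values, because the statistical test behind them is not described in this section; `MEANS`, `COLUMNS` and `mean_shifts` are hypothetical names introduced for illustration.

```python
from typing import Dict, Tuple

# Column order for every score tuple below.
COLUMNS = ("accuracy hip", "accuracy knee", "completeness hip", "completeness knee")

# Mean scores transcribed from the results table (parenthesized values omitted).
MEANS: Dict[str, Dict[str, Tuple[float, float, float, float]]] = {
    "Surgeon 1": {"t1": (4.4, 4.65, 1.95, 2.0), "t2": (4.4, 4.65, 1.95, 2.0)},
    "Surgeon 2": {"t1": (4.3, 4.5, 2.15, 2.05), "t2": (4.4, 4.65, 2.0, 2.1)},
    "Surgeon 3": {"t1": (4.3, 4.65, 2.05, 2.1), "t2": (4.4, 4.5, 2.05, 2.15)},
}


def mean_shifts(means) -> Dict[str, Dict[str, float]]:
    """Absolute t1-to-t2 change in mean score, per surgeon and column.

    Rounded to two decimals to remove floating-point noise, matching the
    precision of the transcribed means.
    """
    return {
        surgeon: {
            col: round(abs(t2 - t1), 2)
            for col, t1, t2 in zip(COLUMNS, scores["t1"], scores["t2"])
        }
        for surgeon, scores in means.items()
    }


if __name__ == "__main__":
    for surgeon, shifts in mean_shifts(MEANS).items():
        # Largest change in any column for this surgeon.
        print(surgeon, max(shifts.values()))
```

Run as a script, it prints a largest shift of 0.0 for Surgeon 1 and 0.15 for Surgeons 2 and 3, small changes that sit alongside within-surgeon p-values no lower than 0.15 in the table.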

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

How to Cite

Venosa, M.; Calvisi, V.; Iademarco, G.; Romanini, E.; Ciminello, E.; Cerciello, S.; Logroscino, G. Evaluation of the Quality of ChatGPT’s Responses to Top 20 Questions about Robotic Hip and Knee Arthroplasty: Findings, Perspectives and Critical Remarks on Healthcare Education. Prosthesis 2024, 6, 913-922. https://doi.org/10.3390/prosthesis6040066

Venosa M, Calvisi V, Iademarco G, Romanini E, Ciminello E, Cerciello S, Logroscino G. Evaluation of the Quality of ChatGPT’s Responses to Top 20 Questions about Robotic Hip and Knee Arthroplasty: Findings, Perspectives and Critical Remarks on Healthcare Education. Prosthesis. 2024; 6(4):913-922. https://doi.org/10.3390/prosthesis6040066

Chicago/Turabian Style: Venosa, Michele, Vittorio Calvisi, Giulio Iademarco, Emilio Romanini, Enrico Ciminello, Simone Cerciello, and Giandomenico Logroscino. 2024. "Evaluation of the Quality of ChatGPT’s Responses to Top 20 Questions about Robotic Hip and Knee Arthroplasty: Findings, Perspectives and Critical Remarks on Healthcare Education." Prosthesis 6, no. 4: 913-922. https://doi.org/10.3390/prosthesis6040066

APA Style: Venosa, M., Calvisi, V., Iademarco, G., Romanini, E., Ciminello, E., Cerciello, S., & Logroscino, G. (2024). Evaluation of the Quality of ChatGPT’s Responses to Top 20 Questions about Robotic Hip and Knee Arthroplasty: Findings, Perspectives and Critical Remarks on Healthcare Education. Prosthesis, 6(4), 913-922. https://doi.org/10.3390/prosthesis6040066