1. Introduction

Autonomous vehicle (AV) navigation comprises several tasks, including perception, planning, and control. Obstacle detection, distance estimation, and tracking of the obstacle with respect to the vehicle (called the ego vehicle) are crucial steps of the perception task. These can be accomplished with perception sensors such as a camera and a Light Detection and Ranging (LiDAR) sensor or Laser Range Finder (LRF). In general, the camera image provides texture and color information about the object at a higher spatial resolution. On the other hand, a 3D/2D LiDAR generates accurate point cloud data, but at a lower spatial resolution. By fusing the data from the LiDAR and the camera, the accuracy of object detection and range estimation can be improved. Hence, the primary objective of this work is to develop a low-cost hybrid perception system (HPS), which consists of a camera and a 2D LiDAR along with the data fusion algorithm (i.e., extrinsic calibration).

The first step in the development of the HPS is the calibration of sensors, essentially to establish the relationship between the sensor modalities (in the present case, 2D LiDAR and camera). The various calibration methods widely used can be broadly classified based on the type of LiDAR and camera combination, i.e., 2D LiDAR–camera [1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18] and 3D LiDAR–camera [19,20,21,22,23]. Furthermore, to perform the calibration, the corresponding points from the LiDAR–camera have to be obtained. Hence, based on the technique used to obtain these corresponding points, the calibration approaches can be classified as target [1,2,3,4,5,6,7,9,10,11,12,13,14,15,16,17,18,19,22,23] and targetless [8,20,21].

In target-based methods, a calibration pattern is used to obtain the corresponding points and set up the constraint equations from which the transformation matrix (i.e., the rotation and translation, called the extrinsic parameters) is computed. Commonly used calibration patterns to obtain the corresponding points between LiDAR–camera systems are the planar board [5,6,7,12,15,17], the V-shaped board [4,9,10,14], and the triangular board [1,11]. In [1], Li used a triangular checkerboard to compute the extrinsic parameters by minimizing the distance between the calculated projection and the real projection of the edges of the calibration pattern. In [4], Kwak manually selected the line features between the LiDAR–camera system and obtained the transformation matrix by minimizing the distance between line features using the Huber cost function. Chao provided an analytical least squares method to estimate the 6 degrees-of-freedom LiDAR–camera transformation in [5]. However, the root-mean-square error (RMSE) of this method was not within the acceptable range. Using plane-to-line correspondence, the authors of [6,7] first computed the rotation matrix using singular value decomposition (SVD); subsequently, using that rotation matrix, a set of linear equations was framed to obtain the translation vector. Dong in [9] computed the relative pose between the LiDAR–camera from point-to-plane constraints by using the V-shaped triangular pattern. Itami in [10] used a point-like target to obtain the point-to-point correspondence. The extrinsic parameters were computed by minimizing the difference between the reprojected points and the actual image points. Unlike [4], the authors of [11] obtained the line features automatically on the LiDAR point cloud and camera image by considering the triangular calibration pattern. Furthermore, the calibration parameters were estimated using a non-linear least squares approach. The same triangular pattern-based calibration approach was used by Chu in [12]. The initial calibration matrix was computed using SVD on the point-line correspondence points. Later, optimization was carried out to minimize the reprojection error. Kim [14] used the V-shaped pattern to obtain the LiDAR control points (three points) by tracing the LiDAR trajectory using an infrared (IR) camera. Furthermore, using a non-linear optimization technique, the calibration parameters were estimated. The authors of [10] extended their work by obtaining the corresponding points for two different orientations (0° and 90°) in [15]. This modified approach strengthened the point-to-plane correspondence and thus yielded reasonable results.

Abanay [17] developed an approach to compute the plane-to-plane homography matrix using manually selected image points obtained with the help of the IR image of the dual planar calibration patterns. The authors used the SVD method to compute the homography matrix; however, the reprojection error was higher in this approach. The calibration accuracy heavily depends on the potential corresponding points between the two sensor modalities. Obtaining corresponding points only with a simple planar type of calibration pattern is not sufficient. Hence, to improve the correspondence, some approaches have used a special calibration pattern. In [2], Willis used a sequence of four black and white boxes touching at the corners to obtain the corresponding points; then, the system of equations was solved through SVD. To take advantage of the LiDAR intensity, in [3], Meng used a planar board with a black-and-white color pattern to calibrate a dual 2D laser range sensor against the camera. Multiple spherical balls were used by Palmer in [13] to compute the calibration parameters by minimizing the reprojection error. However, this approach required the camera to be placed at the same height as the LiDAR plane. The corners of a building have been used as a target, called an arbitrary trihedron [16], to set up the equations to be solved for the calibration matrix. Recently, Manouchehri in [18] used ping pong balls hung in front of the target board to calibrate the 2D LiDAR and stereo camera. Beltran in [19] presented a planar calibration pattern with four holes to obtain accurate correspondence. The holes detected in the LiDAR point cloud and camera image are fitted together to estimate the extrinsic parameters. A pyramidal-shaped calibration pattern was used by Zhang [22], who estimated the calibration parameters by aligning the feature points from the 3D LiDAR–camera using the iterative closest point (ICP) algorithm. Fan [23] used a new calibration target (planar board with a sphere at the center) to compute the rotation and translation parameters by solving a non-convex optimization problem that yielded a 0.5-pixel reprojection error.

In targetless approaches, the correspondence is obtained without using any calibration patterns. Ahmad [8] used the building corners to establish the point-to-line correspondence to be solved for rotation and translation parameters. Initially, a non-linear least squares method was used to compute the initial approximate solution. Later, the approximate solution was revised based on the geometry of the scene (building corners), which is easier to establish. On the downside, the constraint equation was still based on the line features. In [20], using a cross-model object matching technique, the real-time accurate LiDAR–camera calibration was carried out by calibrating the 3D LiDAR against the 2D bounding box of the road participants (i.e., car) obtained from the camera image. Considering the mutual information between the LiDAR–camera data, based on the statistical dependence, the corresponding points were established in [21]. This method used normalized information distance constraint with cross-model-based mutual information to refine the initial estimation.

Accurate calibration matrix estimation demands the best correspondence between the LiDAR–camera system. The corresponding points obtained using the available approaches (target and targetless) are well suited for the 2D/3D LiDAR that has a constant horizontal angular resolution with a higher distance accuracy, and these LiDARs are expensive. However, if a LiDAR with lower distance accuracy and varying horizontal angular resolution is used (similar to HPS), it leads to an uncertain/sparse LiDAR point cloud. In such conditions, precise calibration is needed using the available correspondence between the sensors.

The present work addresses the above problem by considering the corresponding points from a movable and static pattern for homography matrix estimation. The key contributions of this paper are mainly divided into three aspects:

- (1) This paper introduces a low-cost hybrid perception system (HPS) that fuses the camera image and 2D LiDAR point cloud for better environmental perception.

- (2) The corresponding points between the camera and LiDAR are established through a semi-automated approach, and the initial homography is estimated by solving the system of equations using SVD. Then, the estimated homography matrix is optimized using the Levenberg–Marquardt optimization method, which reduces the rotational error.

- (3) Finally, Procrustes analysis-based translation error minimization is proposed and evaluated through experiments (varying distance and varying orientation).

It should be noted that this new approach can be used to calibrate any low-resolution 2D LiDAR and monocular camera. The remainder of this paper is organized as follows. Section 2 describes the materials and methods. In Section 3, experimental results are discussed. Finally, Section 4 concludes the work and presents future research directions.

2. Materials and Methods

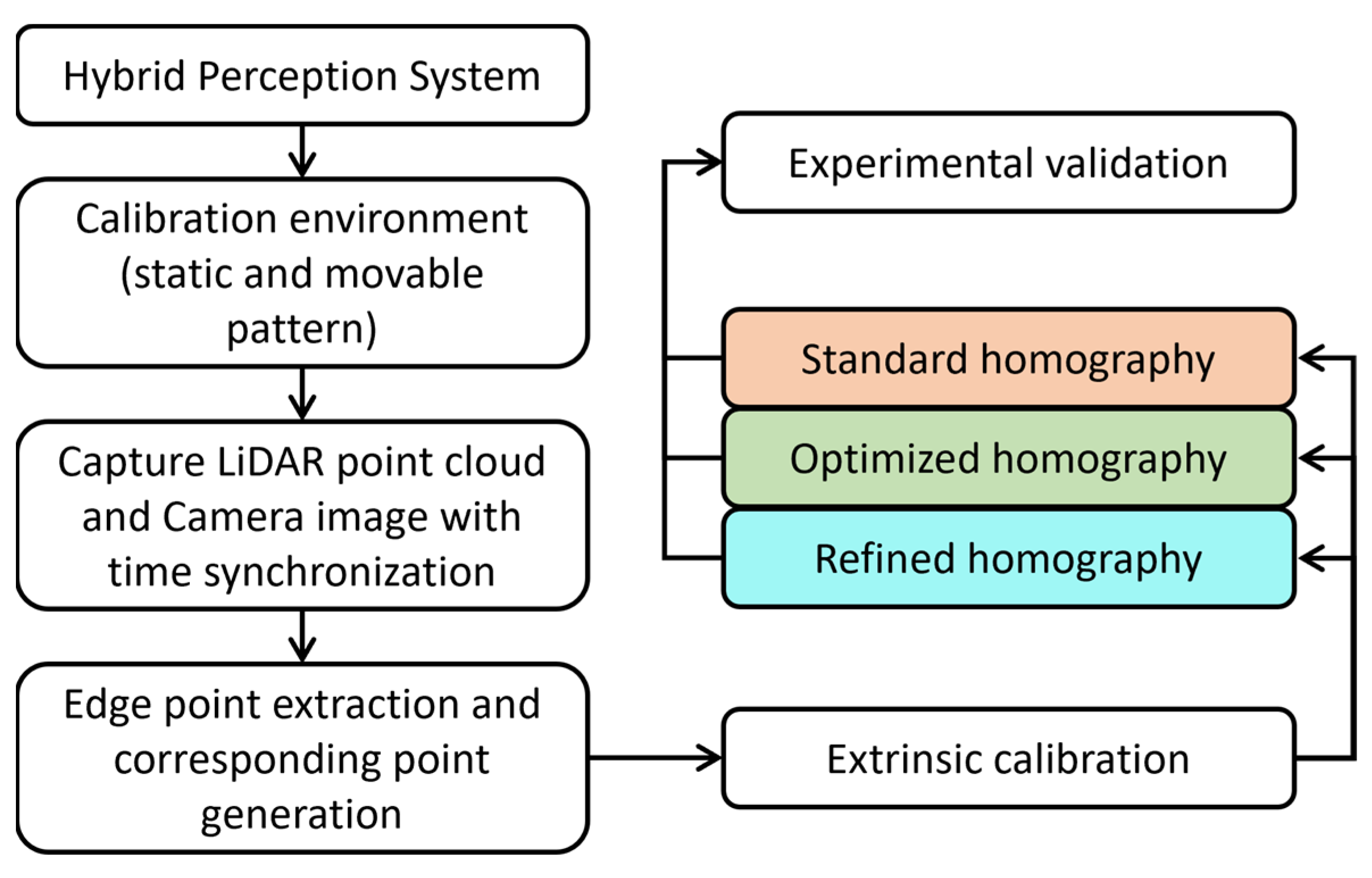

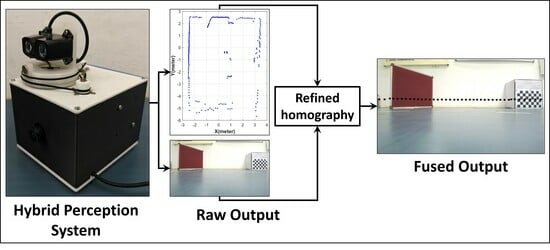

This section describes the development of an HPS, which consists of a rotating 2D LiDAR and a monocular camera. Subsequently, the developed calibration technique for fusing the data from both sensors is discussed. The overview of the developed calibration technique for the HPS is illustrated in Figure 1.

2.1. Hybrid Perception System (HPS)

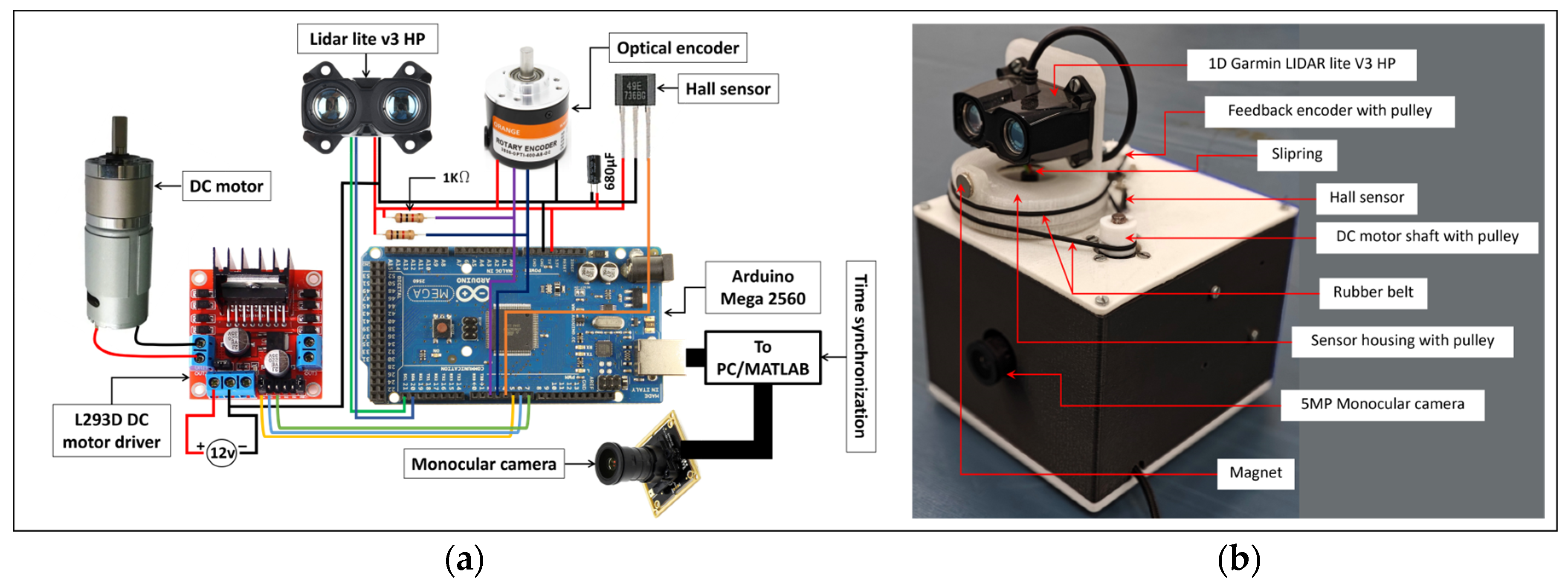

The developed HPS uses a Garmin 1D LiDAR (Model: LiDAR lite V3 HP, an IR LiDAR manufactured by Garmin, Olathe, KS, USA) and a 5 MP USB monocular camera (IMX335 sourced from Evelta, Navi Mumbai, India). The selected LiDAR has an operating range of 40 m with a resolution of 1 cm and an accuracy of cm. The operating voltage of the LiDAR is 5 V and the current consumption is 85 mA. This 1D LiDAR is converted into a 2D LiDAR by rotating it continuously with a DC motor, and the LiDAR rotation angle is obtained by attaching a rotary optical encoder to the motor shaft. An Arduino Mega 2560 microcontroller receives the LiDAR angular position from the feedback encoder (600 counts per revolution) and the distance from the 1D LiDAR at each angular position, and computes the 2D Cartesian coordinates. The HPS housing was designed and fabricated using the fused filament fabrication (FFF) process.

Figure 2 shows the construction of the HPS. A 438 RPM DC motor is used to provide continuous rotation to the LiDAR. The DC motor is driven by the L293D motor driver, which is powered by a 12 V power supply. The speed of the motor is controlled by the PWM signal from the Arduino Mega 2560 microcontroller. The LiDAR is fixed on the sensor housing with a pulley (as shown in Figure 2b), which is attached to the motor through a rubber belt (nitrile O-ring). Since the LiDAR rotates, it is connected to the microcontroller through the slip ring (used to make a connection between static and rotary parts). To obtain the angular position of the rotating sensor housing, an optical rotary encoder, which provides 600 counts per revolution, was used. An additional Hall sensor was used to track the completion of each revolution of the rotating LiDAR. The microcontroller reads the distance data from the LiDAR, the position counts from the encoder, and the Hall sensor output. Furthermore, the monocular camera of the HPS is interfaced with the PC to capture the streamed images and process them using the algorithm developed with the MATLAB R2022a software. This algorithm captures the camera image immediately after receiving the LiDAR scan from the microcontroller through serial communication on MATLAB. The camera resolution was set to 720 × 1280 pixels with other parameters such as brightness = 32, contrast = 25, and backlight compensation = 1.

Considering the sensor housing design parameters, such as the motor–sensor housing pulley ratio (1:5.75) and the encoder–sensor housing pulley ratio (1:6), the angular position count for one revolution of the sensor housing was computed as 6 × 600 = 3600 counts. When the angular position reaches 3600 counts, one LiDAR scan is complete. However, since the sensor housing is an additively manufactured component, there is a deviation between design and fabrication. Hence, the encoder–sensor housing pulley ratio was manually estimated to be 1:5, and the maximum angular position for a single LiDAR scan was set to 3000 counts. The 2D LiDAR scans (ten consecutive scans) obtained using this approach are shown in Figure 3a. From Figure 3a, it can be noticed that, even if the HPS is kept static, the LiDAR scans drift angularly over time, because the maximum angular position count is set from the encoder count and the estimated encoder–sensor housing pulley ratio. Moreover, with this problem, it is impossible to estimate the pose of the ego vehicle with LiDAR using any scan matching algorithm (i.e., even if the ego vehicle is static, the LiDAR scans will show angular deviation). To overcome this issue, a Hall sensor with a magnet was used to detect the closing angle of 0° or 360° for every LiDAR scan.

The procedure to obtain the closing angle with the Hall sensor is discussed below. Generally, the Hall sensor outputs “zero” if the magnet is detected and “one” if it is not, or vice versa. In this work, the accurate closing angle was computed from the Hall sensor output. Figure 3b shows the output of the Hall sensor; the closing angle lies exactly at the center of the magnet, i.e., at the center of the run of zeros (Meanpeak). To find this Meanpeak, it is essential to find the one-to-zero (Endpeak) and zero-to-one (Startpeak) transitions, which are depicted as a RED point and a BLUE point, respectively, in Figure 3b. The Meanpeak (GREEN point) is obtained for consecutive LiDAR scans, and the encoder counts are separated for each LiDAR scan. However, the encoder is an incremental encoder, i.e., for the first scan, it counts from 0 to 3000, and for the second scan, the count value increases from 3001 to 6000, and so on. Hence, to obtain the absolute value of the encoder count for each scan, the first encoder count of that scan has to be detected (i.e., 0 and 3001) and then subtracted from all the encoder counts of the scan. After this step, all the LiDAR scans have encoder count values between 0 and 3000. Subsequently, the encoder count can be converted into an angular position using Equation (1).

Equation (1) involves the last value of the encoder count for each LiDAR scan, the angular position, which ranges between 0° and 360° (or from 0 to 2π), and the number of samples present in a single LiDAR scan. The angular deviation-corrected 2D LiDAR scan is shown in Figure 3c. The 2D Cartesian coordinates can be computed using Equation (2). One problem with this approach is that the angular resolution is not a fixed value (as it is for an industrial LiDAR sensor), which leads to inconsistent or uncertain LiDAR points and therefore demands a new calibration technique.
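The closing-angle detection and the count-to-angle conversion described above can be sketched in Python as follows. This is a minimal illustration rather than the MATLAB implementation used in this work: the function names are hypothetical, the Hall output is assumed to read 0 while the magnet is detected, and the linear mapping from encoder count to angle (count divided by the last count of the scan, times 2π) is an assumed reading of Equation (1). The polar-to-Cartesian step stands in for Equation (2).

```python
import math


def mean_peak_indices(hall):
    """Return the centre index of every completed run of zeros (magnet detected)."""
    centres = []
    start = None
    for i, value in enumerate(hall):
        if value == 0 and start is None:
            start = i  # one-to-zero transition: the run of zeros begins
        elif value != 0 and start is not None:
            # zero-to-one transition: the run covered indices start .. i-1
            centres.append((start + i - 1) // 2)
            start = None
    return centres


def scan_to_cartesian(counts, distances):
    """Convert one scan's incremental encoder counts and ranges to (x, y) points."""
    first = counts[0]
    relative = [c - first for c in counts]  # counts of this scan now start at 0
    last = relative[-1]
    if last <= 0:
        raise ValueError("a scan needs increasing encoder counts")
    points = []
    for count, dist in zip(relative, distances):
        theta = 2.0 * math.pi * count / last  # assumed form of Equation (1)
        points.append((dist * math.cos(theta), dist * math.sin(theta)))
    return points
```

Because the angle is normalized by the last count of each scan, every scan closes at exactly 2π even when the number of counts per revolution differs slightly from the nominal 3000.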

Moreover, it should be noted that the developed HPS is a cost-effective alternative to the existing 2D LiDAR systems. The cost of the developed HPS prototype is approximately USD 290, whereas established 2D LiDAR systems, such as the RP LiDAR A3 (USD 780), RP LiDAR S2E (USD 516), YD LiDAR G4 (USD 504), and YD LiDAR TG15 (USD 624), cost considerably more. Furthermore, the LiDAR used in the HPS offers a 40 m range, whereas the above-mentioned sensors offer a maximum range of 30 m. Also, the existing 2D LiDARs cannot perceive spatial information, which is a built-in feature of the developed HPS. Hence, an additional camera needs to be integrated with the existing 2D LiDARs to achieve the same capability as the HPS, thereby increasing the cost. The major limitation of the developed HPS is that the sampling frequency of the LiDAR used is 1 kHz, whereas the existing LiDARs have a minimum sampling frequency of 2 kHz. This lower sampling frequency is due to the limited frequency at which laser pulses are generated in the LiDAR lite V3 HP. If the laser pulse width and frequency were controlled externally, the sampling frequency of the HPS could be improved, which is part of our future work.

2.2. Software Time Synchronization

Once the LiDAR points are obtained, the camera image has to be captured at that time instant. The present work utilized software time synchronization to capture the LiDAR scans and camera image frames at the same time. Since both the 2D LiDAR and the camera are connected to the PC with MATLAB, as shown in Figure 2, the multi-sensor data acquisition system acquires the camera image immediately after receiving the LiDAR points. The camera used in the present work acquires image frames at 30 Hz (i.e., one image can be captured every 33 milliseconds), which is higher than the scan rate of the LiDAR. The image that represents the same scene should therefore be acquired within 33 milliseconds of the obtained 2D LiDAR scan. Following [24], the present work considers the time taken to capture one LiDAR scan and the time taken to capture a camera image, together with the image frames captured for a given number of LiDAR scans. For time synchronization, the time difference between the LiDAR and camera data should be less than a bound set by the frequency of the camera images (i.e., 30 Hz) and the number of images. In the present work, the multi-sensor data acquisition system shows an average time difference of 1.75 milliseconds, which is lower than 33 milliseconds. Hence, the captured LiDAR and camera data are well synchronized and represent the same scene point accurately in both sensor modalities.
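The synchronization criterion can be illustrated with a short Python check. This is only a sketch under stated assumptions: the function name and the timestamp lists are hypothetical, each LiDAR scan is assumed to be paired with the image captured right after it, and the bound is taken as one camera frame period (1/30 s ≈ 33 ms), which is how the 33 ms figure in the text is read here.

```python
def check_synchronization(lidar_stamps, image_stamps, camera_hz=30.0):
    """Return (mean time gap in seconds, True if the gap is below one frame period)."""
    if len(lidar_stamps) != len(image_stamps) or not lidar_stamps:
        raise ValueError("need one image timestamp per LiDAR scan")
    frame_period = 1.0 / camera_hz  # about 33 ms at 30 Hz
    gaps = [abs(t_img - t_scan) for t_scan, t_img in zip(lidar_stamps, image_stamps)]
    mean_gap = sum(gaps) / len(gaps)
    return mean_gap, mean_gap < frame_period
```

With timestamps whose gaps average 1.75 ms, as reported above, the check passes with a wide margin.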

2.3. Extraction of LiDAR–Camera Corresponding Points

The LiDAR–camera calibration setup is shown in Figure 4. This work utilized a static pattern and a movable planar board to obtain the corresponding points between the two sensors to estimate the homography matrix (i.e., a 3 × 3 matrix). Generally, estimating a homography requires four or more corresponding points with no more than two collinear points [17]. To satisfy this condition, the edges of the calibration pattern, called edge points (five labeled edges), were considered as the correspondence points. These edge points were marked as BLACK-colored dots, as shown in Figure 4, and from these dots, the feature points on the camera image could be obtained.

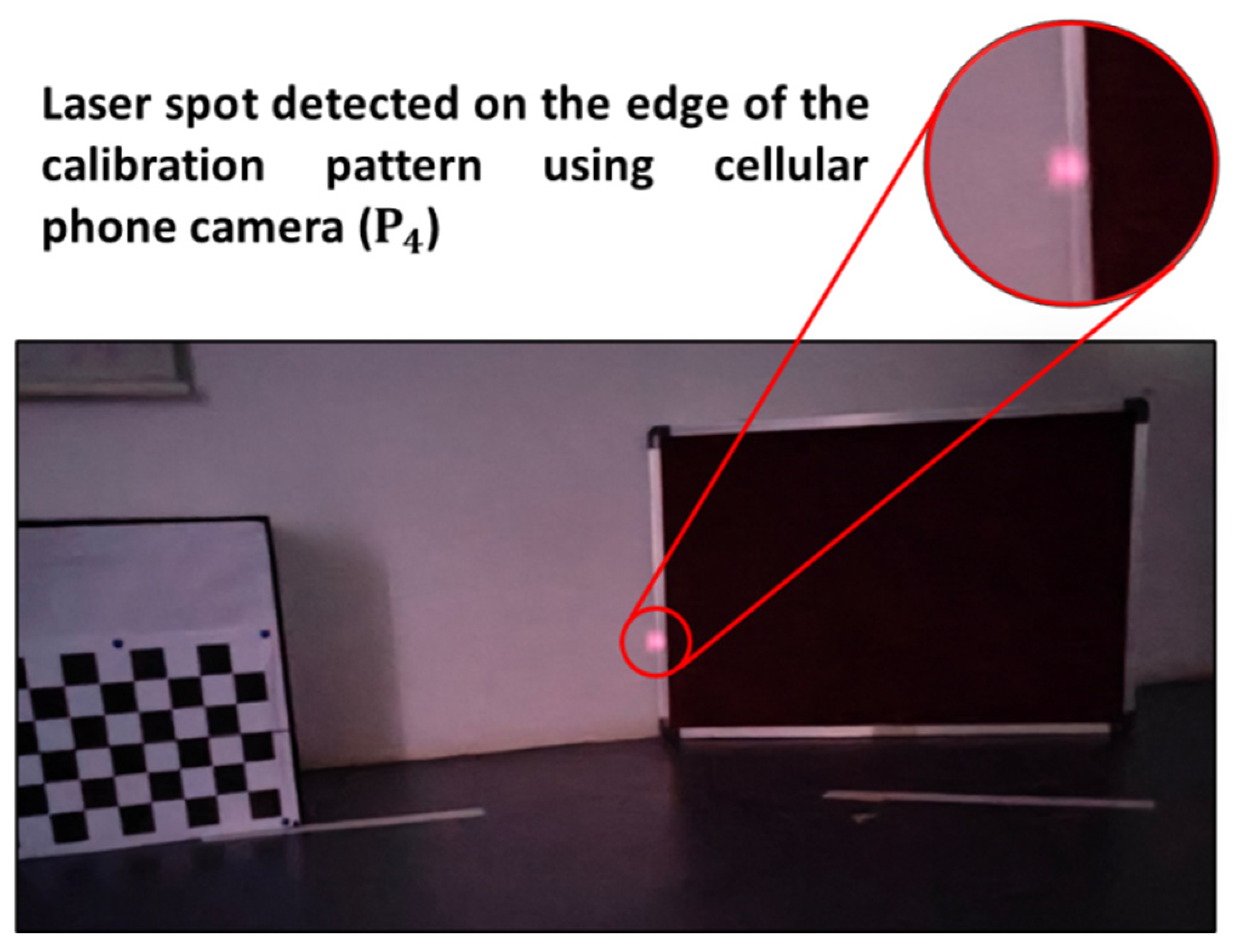

It can be said that this plane (RED/BLUE line from

Figure 4) is the LiDAR plane, where

. However, to find these points on the 2D image, an IR camera was used to identify the LASER spots at the edges of the calibration pattern, as shown in

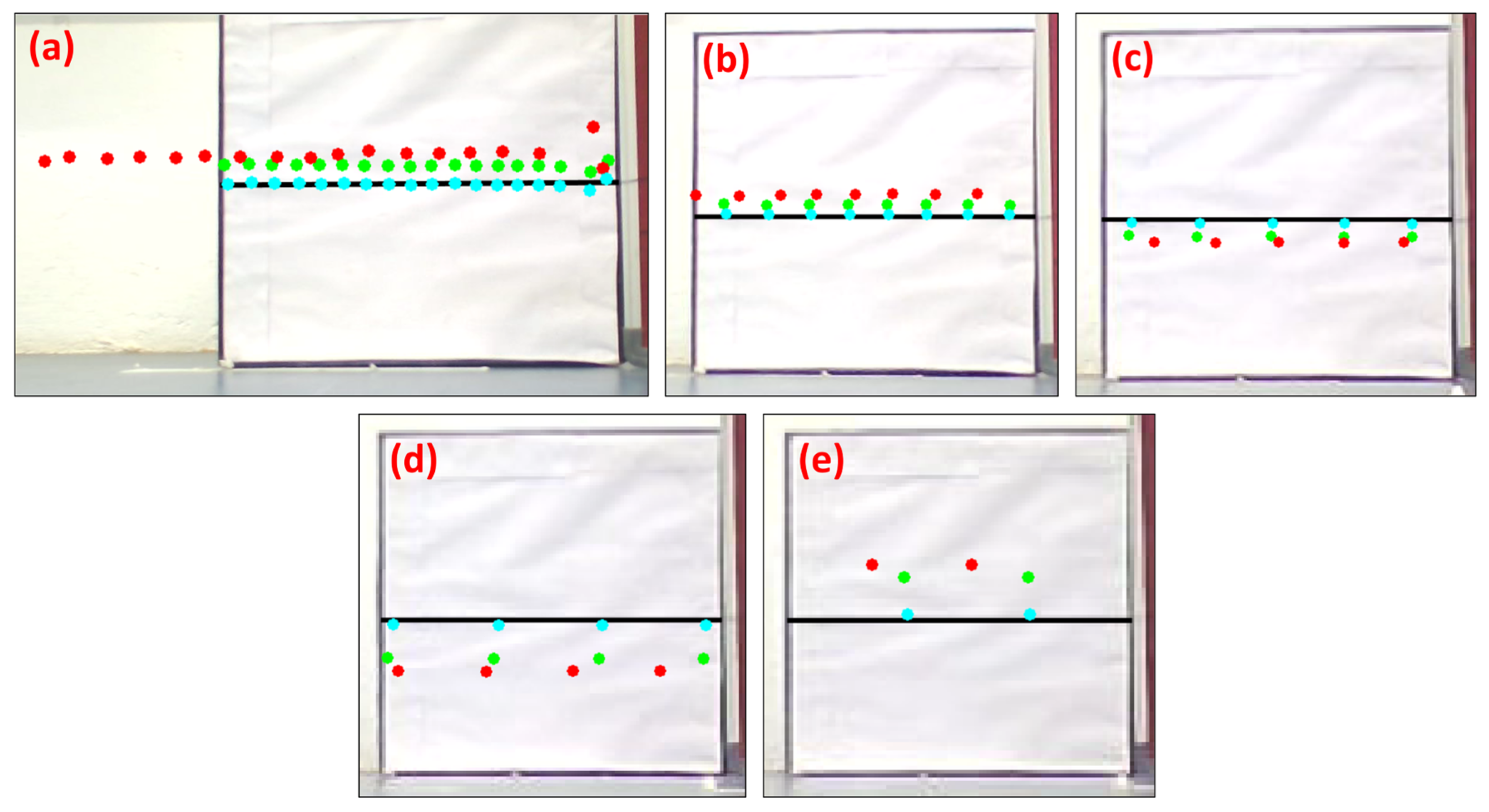

Figure 5. However, finding the respective edge points in the LiDAR scan is difficult, since the LiDAR points may not be obtained at the edges due to their angular resolution.

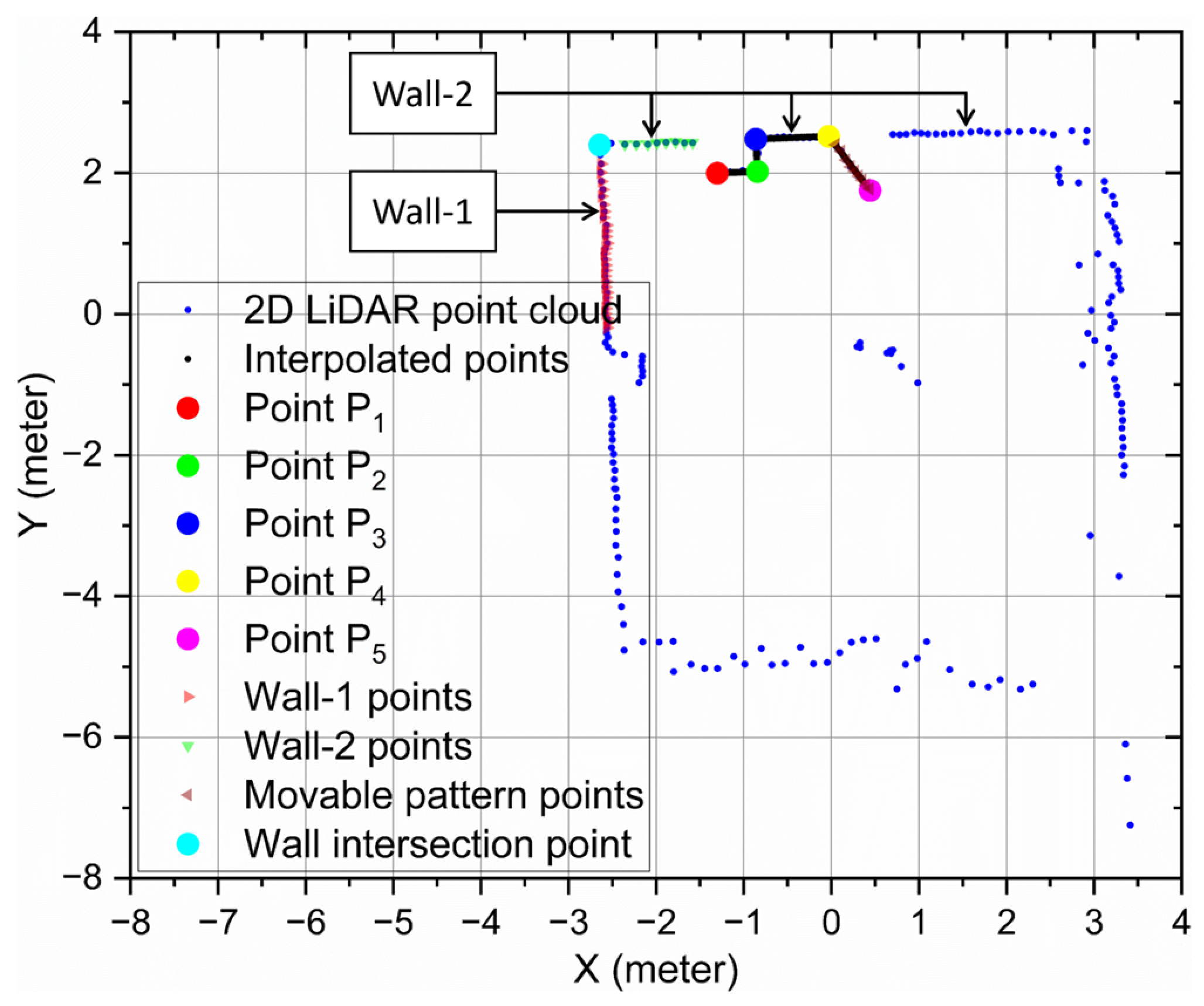

For a given LiDAR point cloud, the edge points are extracted using a semi-automated approach, implemented as a separate edge point extraction algorithm coded in MATLAB. In this algorithm, the Wall-1 and Wall-2 points (RED and GREEN triangle points shown in Figure 6) are first selected using the MATLAB ‘ginput’ function, which returns the coordinates of the cursor at each click. For example, if ten points lie on a line segment AB and the cursor is clicked at A and B, the points lying between A and B can be extracted. In the present case, as shown in Figure 6, the cursor is clicked twice (starting and ending point) on the Wall-1 LiDAR points (RED triangle), twice on the Wall-2 points (GREEN triangle), and twice on the movable pattern (MAROON triangle) to extract the LiDAR points lying between each pair of locations. Since the dimensions of the static and movable patterns are known (as is the location of the static pattern), once the intersection of the Wall-1 and Wall-2 points is obtained, the locations of the edge points can be computed automatically using only the movable pattern LiDAR points. To obtain the Wall-1 and Wall-2 intersection point, the slopes along the x-axis and y-axis are considered, as shown in Equation (3) and Equation (4), respectively. Using these two slopes, the Wall-1 and Wall-2 points can be separated and each set fitted with a line using the least squares method. From the coefficients of the two fitted lines, the wall intersection point can be obtained from Equation (5).
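The wall fitting and intersection step can be sketched in Python as follows. The exact fitting equations are not reproduced here, so this sketch makes its own assumptions: Wall-1 is taken to run roughly along the x-axis and is fitted as y = a1·x + b1, Wall-2 is taken to run roughly along the y-axis and is fitted as x = a2·y + b2 (which avoids an infinite slope for a near-vertical wall), and the function names are hypothetical.

```python
def fit_line(us, vs):
    """Ordinary least-squares fit of v = a*u + b; returns (a, b)."""
    n = len(us)
    mean_u = sum(us) / n
    mean_v = sum(vs) / n
    s_uu = sum((u - mean_u) ** 2 for u in us)
    s_uv = sum((u - mean_u) * (v - mean_v) for u, v in zip(us, vs))
    a = s_uv / s_uu
    return a, mean_v - a * mean_u


def wall_intersection(wall1_points, wall2_points):
    """Intersect the lines fitted to the Wall-1 and Wall-2 LiDAR points."""
    # Wall-1 (assumed roughly along x): y = a1*x + b1
    a1, b1 = fit_line([p[0] for p in wall1_points], [p[1] for p in wall1_points])
    # Wall-2 (assumed roughly along y): x = a2*y + b2
    a2, b2 = fit_line([p[1] for p in wall2_points], [p[0] for p in wall2_points])
    # Substitute x = a2*y + b2 into y = a1*x + b1 and solve for y
    y = (a1 * b2 + b1) / (1.0 - a1 * a2)
    x = a2 * y + b2
    return x, y
```

The same routine can be reused for the intersection of Wall-2 with the movable pattern by passing the movable-pattern points as the first argument, provided the pattern is not parallel to Wall-2.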

Using the known location of the static pattern and the wall intersection point, one edge point is computed as a point located at a known distance from the wall intersection point along the x-axis. Subsequently, with the known dimensions of the static pattern, two further edge points are obtained. Furthermore, with the LiDAR points obtained on the movable pattern (MAROON triangle), the intersection (Equation (5)) of Wall-2 and the movable pattern gives the next edge point. Finally, with the known dimension of the movable pattern, the final edge point is obtained. Thus, a total of five edge points (corresponding points) are obtained. Since more correspondence points improve the HPS calibration accuracy, additional correspondence points were generated between the detected edge points using 1D interpolation. The interpolation was conducted between pairs of edge points, and between each pair, 25 equally spaced points were taken. With four point pairs available per LiDAR scan/camera image pair, this gives 4 × 25 = 100 interpolated corresponding points for each LiDAR scan/camera image pair. The corresponding points obtained from the image and the LiDAR scan are shown in Figure 6 and Figure 7, respectively.
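The interpolation step can be sketched in Python as follows. The pairing of edge points is an assumption of this sketch: the five edge points are taken in order and interpolated between consecutive neighbors, which yields the four pairs mentioned in the text. The function name and the sample coordinates in the usage note are hypothetical.

```python
def interpolate_edge_points(edge_points, points_per_pair=25):
    """Place equally spaced points between consecutive edge points (ends included)."""
    if points_per_pair < 2:
        raise ValueError("need at least two points per pair")
    dense = []
    for (x0, y0), (x1, y1) in zip(edge_points, edge_points[1:]):
        for k in range(points_per_pair):
            t = k / (points_per_pair - 1)  # t runs from 0 to 1 inclusive
            dense.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    return dense
```

For five edge points, for example `[(0, 0), (1, 0), (2, 0.5), (3, 0.5), (4, 1)]`, the function returns 4 × 25 = 100 points. Applying the same routine to the LiDAR edge points and to the image edge points keeps the two sets in one-to-one correspondence. Because the end points are included, each interior edge point appears twice in the output.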

2.4. Standard Homography Estimation

Generally, any LiDAR point can be projected onto the camera image using a 3 × 3 homography matrix, as shown in Equation (6). The standard homography matrix can be computed using the singular value decomposition (SVD) or direct linear transform (DLT) method by solving the system of equations.

The system of equations can be expressed as in Equation (7).

The image pixel coordinates can be obtained using the following Equation (8).

In these equations, the actual 2D camera image point of a 3D scene point is written in its homogeneous representation, the corresponding LiDAR point is given by its 2D LiDAR coordinate, and a scale factor relates the two. The standard homography matrix is computed by establishing the system of equations with the corresponding points. However, reprojection using the standard homography matrix exhibits a higher rotation error, which increases the reprojection error.
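A standard DLT estimate of the homography via SVD can be sketched in Python with NumPy as follows. This is a textbook formulation offered as an illustration, not necessarily the exact system of equations used in this work: the function names are hypothetical, the LiDAR points are treated as 2D points (x, y) in the scan plane, and no coordinate normalization is applied.

```python
import numpy as np


def estimate_homography(lidar_xy, image_uv):
    """Estimate the 3x3 homography mapping LiDAR (x, y) to image (u, v) by DLT."""
    rows = []
    for (x, y), (u, v) in zip(lidar_xy, image_uv):
        # Each correspondence contributes two linear equations in the 9 unknowns
        rows.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y, -u])
        rows.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y, -v])
    A = np.asarray(rows, dtype=float)
    # The solution is the right singular vector of the smallest singular value
    _, _, vt = np.linalg.svd(A)
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]  # fix the free scale so that H[2, 2] = 1


def project_points(H, lidar_xy):
    """Project LiDAR (x, y) points onto the image and divide by the scale factor."""
    pts = np.column_stack([np.asarray(lidar_xy, dtype=float), np.ones(len(lidar_xy))])
    projected = pts @ np.asarray(H, dtype=float).T
    return projected[:, :2] / projected[:, 2:3]
```

At least four correspondences in general position are needed; with the 100 interpolated correspondences per scan/image pair, the system is overdetermined and the SVD gives the least-squares solution in the algebraic sense.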

2.5. Optimized Homography Matrix

Hence, to minimize the rotation error, non-linear least squares optimization was performed using the Levenberg–Marquardt (LM) algorithm. LM optimization is an iterative method that finds the optimal homography matrix by minimizing the error between the real image points and projected LiDAR points onto the image. The homography matrix computed using SVD is introduced as the initial guess to the LM algorithm for quicker convergence.

The error function can be expressed as in Equation (9).

The optimized homography matrix can be obtained by minimizing the error function using the LM technique, as shown in Equation (10).

With the optimized homography matrix, the rotation error is minimized. However, because the developed HPS produces inconsistent LiDAR points due to the varying angular resolution, the LM method, given the established corresponding points, converges only locally and can become trapped in a local minimum. In other words, the translation (distance) error is not minimized.
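The LM refinement can be illustrated with a small self-contained Python sketch. It is a simplified Levenberg–Marquardt loop written for illustration, not the optimizer used in this work: the function names are hypothetical, the Jacobian is approximated by forward finite differences, the last entry of the homography is fixed to 1 so that eight parameters are optimized, and the error is assumed to be the sum of squared distances between the actual image points and the projected LiDAR points.

```python
import numpy as np


def _project(h8, lidar_xy):
    """Project LiDAR points with the homography whose first 8 entries are h8."""
    H = np.append(h8, 1.0).reshape(3, 3)
    pts = np.column_stack([lidar_xy, np.ones(len(lidar_xy))])
    projected = pts @ H.T
    return projected[:, :2] / projected[:, 2:3]


def reprojection_residuals(h8, lidar_xy, image_uv):
    """Stacked differences between projected LiDAR points and image points."""
    return (_project(h8, lidar_xy) - image_uv).ravel()


def refine_homography(H0, lidar_xy, image_uv, iterations=100, damping=1e-3):
    """Refine an initial homography H0; returns (refined H, final squared error)."""
    lidar_xy = np.asarray(lidar_xy, dtype=float)
    image_uv = np.asarray(image_uv, dtype=float)
    H0 = np.asarray(H0, dtype=float)
    h = (H0 / H0[2, 2]).ravel()[:8]
    r = reprojection_residuals(h, lidar_xy, image_uv)
    cost = float(r @ r)
    for _ in range(iterations):
        # Forward-difference Jacobian of the residuals
        J = np.empty((r.size, 8))
        for j in range(8):
            step = 1e-6 * max(1.0, abs(h[j]))
            h_step = h.copy()
            h_step[j] += step
            J[:, j] = (reprojection_residuals(h_step, lidar_xy, image_uv) - r) / step
        JtJ = J.T @ J
        # Damped normal equations with Marquardt's diagonal scaling
        A = JtJ + damping * (np.diag(np.diag(JtJ)) + 1e-12 * np.eye(8))
        delta = np.linalg.solve(A, -(J.T @ r))
        r_new = reprojection_residuals(h + delta, lidar_xy, image_uv)
        cost_new = float(r_new @ r_new)
        if cost_new < cost:  # accept the step and trust the model more
            h, r, cost = h + delta, r_new, cost_new
            damping = max(damping / 10.0, 1e-12)
        else:  # reject the step and increase the damping
            damping = min(damping * 10.0, 1e12)
    return np.append(h, 1.0).reshape(3, 3), cost
```

Starting from the SVD estimate, as described above, keeps the initial error small. Since a step is accepted only when it lowers the error, the loop never returns a worse homography than its initial guess, but it can still stop in a local minimum.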

2.6. Refined Homography Using Procrustes Analysis

To minimize the translation error with the optimized homography matrix, a Procrustes analysis was performed. Procrustes analysis is a technique used to compare and align two sets of points or shapes by finding an optimal transformation that minimizes the differences between them. Here, the actual 2D image points are compared to the projected LiDAR points obtained using the optimized homography matrix.

In Equation (11), the result is the near-accurate projection of the LiDAR point onto the image, obtained by applying a scale factor, a transformation matrix, and a translation vector. If the scale factor is less than one, the distance between the projected points is reduced; if it is greater than one, the points are stretched; and if it is equal to one, the points are projected at the expected location on the image. The transformation matrix is either a reflection matrix or a rotation matrix, depending on its determinant (−1 for a reflection and +1 for a rotation). In 2D space, both the reflection matrix and the rotation matrix are parameterized by a single angle; in both cases, this angle has to be computed, and it can be computed using Equation (12).

Furthermore, the refined translation vector can be obtained as the mean of the predicted points, and the scale factor can be obtained as the root-mean-square distance over the number of points. By substituting all the parameters in Equation (11), the refined reprojected LiDAR points onto the image can be computed. The next section discusses the performance of the proposed calibration approach through various experiments.
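An ordinary Procrustes alignment of the projected LiDAR points to the actual image points can be sketched in Python with NumPy as follows. This is the textbook formulation (centering, normalization, and an SVD of the cross-covariance) and is only assumed to match the form of Equation (11); the function name, the row-vector convention, and the way scale and translation are derived from the centroids and norms are choices of this sketch.

```python
import numpy as np


def procrustes_align(image_points, projected_points):
    """Find scale b, orthogonal matrix T, and shift c so that
    b * projected_points @ T + c best matches image_points (least squares)."""
    X = np.asarray(image_points, dtype=float)      # target: actual image points
    Y = np.asarray(projected_points, dtype=float)  # source: projected LiDAR points
    mean_x, mean_y = X.mean(axis=0), Y.mean(axis=0)
    X0, Y0 = X - mean_x, Y - mean_y                # remove the translation
    norm_x = np.sqrt((X0 ** 2).sum())              # overall size of each point set
    norm_y = np.sqrt((Y0 ** 2).sum())
    # Optimal rotation/reflection from the SVD of the cross-covariance
    U, S, Vt = np.linalg.svd((X0 / norm_x).T @ (Y0 / norm_y))
    T = Vt.T @ U.T
    b = S.sum() * norm_x / norm_y                  # optimal scale factor
    c = mean_x - b * mean_y @ T                    # optimal translation
    refined = b * Y @ T + c
    return b, T, c, refined
```

The sign of `np.linalg.det(T)` indicates whether the recovered matrix is a rotation (+1) or a reflection (−1), and a scale factor `b` close to one means the projected points already have the expected spacing on the image.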