An IoT-Enabled Digital Twin Architecture with Feature-Optimized Transformer-Based Triage Classifier on a Cloud Platform

Abstract

1. Introduction

2. Related Work

3. Methodology

3.1. System Design Considerations

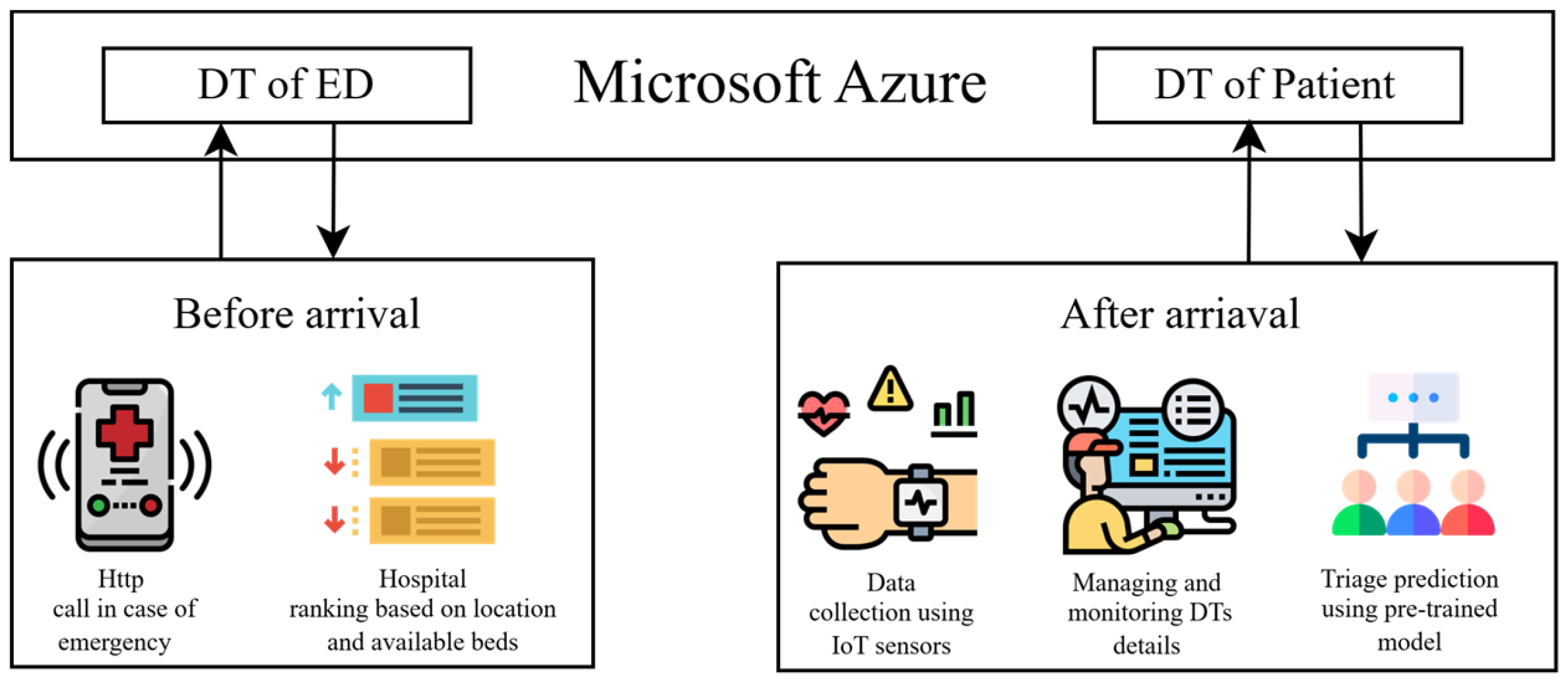

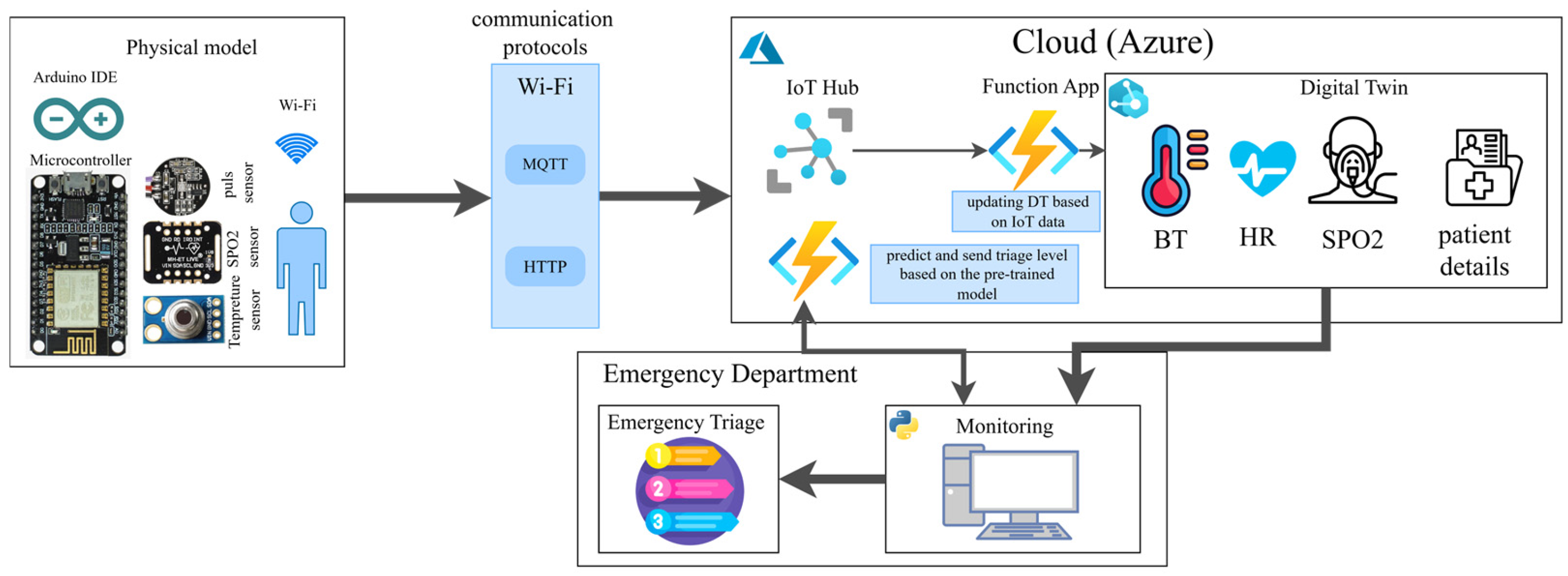

3.2. System Overview

3.3. DT Modeling

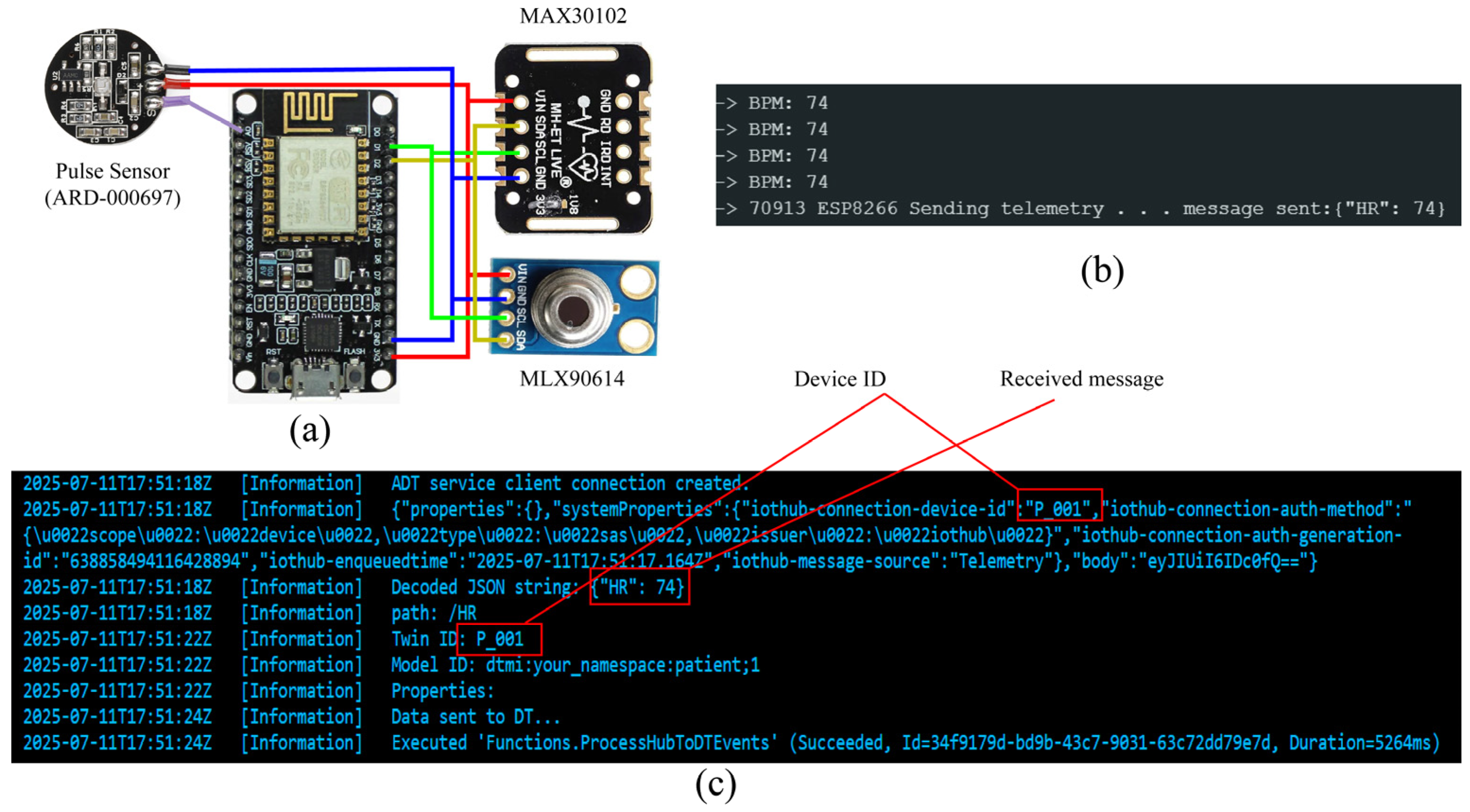
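The twin models are described in DTDL (see the Abbreviations list). As a minimal sketch of how a patient twin could be written in that language, the snippet below builds a DTDL v2 Interface in Python and serializes it to JSON. It assumes a single patient twin that holds the three vital signs handled by Algorithm 1 (HR, temperature, SpO2). The model ID `dtmi:example:emergency:Patient;1` and the property names are hypothetical and are not taken from the authors' model. The vitals are declared as Properties rather than Telemetry because Azure Digital Twins keeps the latest property values on the twin, whereas telemetry is only routed as events.

```python
import json
from typing import Dict, List

# Hypothetical model ID; the authors' DTMI is not given in the text.
PATIENT_DTMI = "dtmi:example:emergency:Patient;1"


def vital_property(name: str, display_name: str) -> Dict[str, object]:
    """A DTDL Property that holds the latest value of one vital sign."""
    return {
        "@type": "Property",
        "name": name,
        "displayName": display_name,
        "schema": "double",
        "writable": True,
    }


def patient_interface() -> Dict[str, object]:
    """Minimal DTDL v2 Interface for a patient twin with three vital signs."""
    contents: List[Dict[str, object]] = [
        vital_property("heartRate", "Heart rate (bpm)"),
        vital_property("temperature", "Body temperature (°C)"),
        vital_property("spo2", "Oxygen saturation (%)"),
    ]
    return {
        "@context": "dtmi:dtdl:context;2",
        "@id": PATIENT_DTMI,
        "@type": "Interface",
        "displayName": "Patient",
        "contents": contents,
    }


if __name__ == "__main__":
    print(json.dumps(patient_interface(), indent=2, ensure_ascii=False))
```

The resulting JSON could be uploaded as a model to an Azure Digital Twins instance, after which twins created from it can have their properties updated as new readings arrive.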

3.4. IoT Devices

Algorithm 1. Data transmission using IoT devices

Input: Wi-Fi information (SSID, password), device key

Output: data sent to IoT Hub

| Line | Statement | Comment |
|---|---|---|
| 1 | Initialize connection: initialize the Wi-Fi and device-key connection | |
| 2 | States ← {HR, Temperature, SpO2} | // each sensor has its own state |
| 3 | Duration_start_time ← Current_time | |
| 4 | Duration_time ← 15000 ms | // set the duration of each interval to 15 s |
| 5 | While True: | |
| 6 | If Current_time − Duration_start_time > Duration_time | // end of the interval |
| 7 | Send data (state) to IoT Hub | // send the data at the end of the interval |
| 8 | Next state | |
| 9 | Duration_start_time ← Current_time | |
| 10 | End if | |
| 11 | Switch state: | |
| 12 | Case (HR): | |
| 13 | HR ← pulse sensor | // read data from the sensor |
| 14 | Case (Temperature): | |
| 15 | Temperature ← MLX90614 | // read data from the sensor |
| 16 | Case (SpO2): | |
| 17 | SpO2 ← MAX30102 | // read data from the sensor |
| 18 | End switch | |
| 19 | End while | |
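The loop in Algorithm 1 can be sketched in Python as follows. The sketch assumes that each iteration reads only the sensor of the active state and that, when the 15 s interval ends, the latest reading of that sensor is sent as a small JSON message before moving to the next state. The hooks `now_ms`, `readers` and `send`, the JSON field names and the `max_steps` bound are hypothetical stand-ins for the device clock, the pulse/MLX90614/MAX30102 drivers and an IoT Hub client library; they are not the authors' firmware.

```python
import json
from itertools import cycle
from typing import Callable, Dict

DURATION_MS = 15_000                       # line 4: 15 s per interval
STATES = ("HR", "Temperature", "SpO2")     # line 2: one state per sensor


def transmit_loop(now_ms: Callable[[], int],
                  readers: Dict[str, Callable[[], float]],
                  send: Callable[[str], None],
                  max_steps: int) -> None:
    """Round-robin sensing/transmission loop following Algorithm 1.

    now_ms, readers and send are hypothetical hooks for the device clock,
    the sensor drivers and the IoT Hub client; max_steps bounds the loop
    so it can be tested (the device itself loops forever).
    """
    states = cycle(STATES)
    state = next(states)
    latest: Dict[str, float] = {}
    start = now_ms()                                # line 3
    for _ in range(max_steps):                      # line 5
        t = now_ms()
        if t - start > DURATION_MS:                 # line 6: interval finished
            if state in latest:                     # line 7: send the last reading
                send(json.dumps({"sensor": state, "value": latest[state]}))
            state = next(states)                    # line 8
            start = t                               # line 9
        latest[state] = readers[state]()            # lines 11-18: read active sensor


def make_fake_clock(step_ms: int) -> Callable[[], int]:
    """Deterministic test clock: returns 0 first, then advances by step_ms per call."""
    current = [-step_ms]

    def now() -> int:
        current[0] += step_ms
        return current[0]

    return now


if __name__ == "__main__":
    sent = []
    transmit_loop(make_fake_clock(1000),
                  {"HR": lambda: 72.0, "Temperature": lambda: 36.6, "SpO2": lambda: 98.0},
                  sent.append, max_steps=48)
    print(sent)
```

Injecting the clock and the sensor readers keeps the state machine testable off-device: with a fake clock advancing 1 s per call, 48 iterations produce exactly one message per sensor, in the order HR, Temperature, SpO2.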

3.5. Cloud Computation Infrastructure

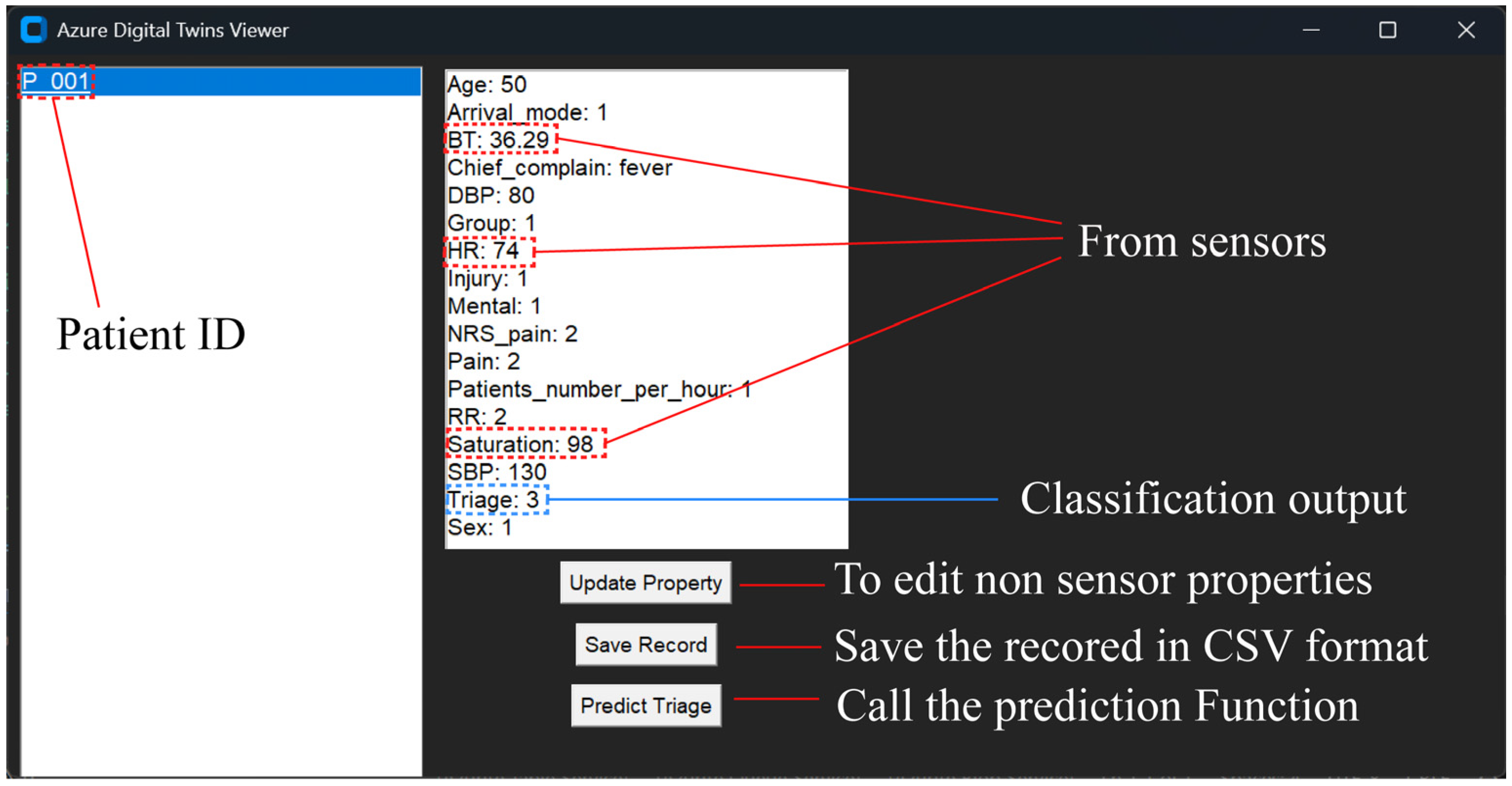

3.6. End User Interface

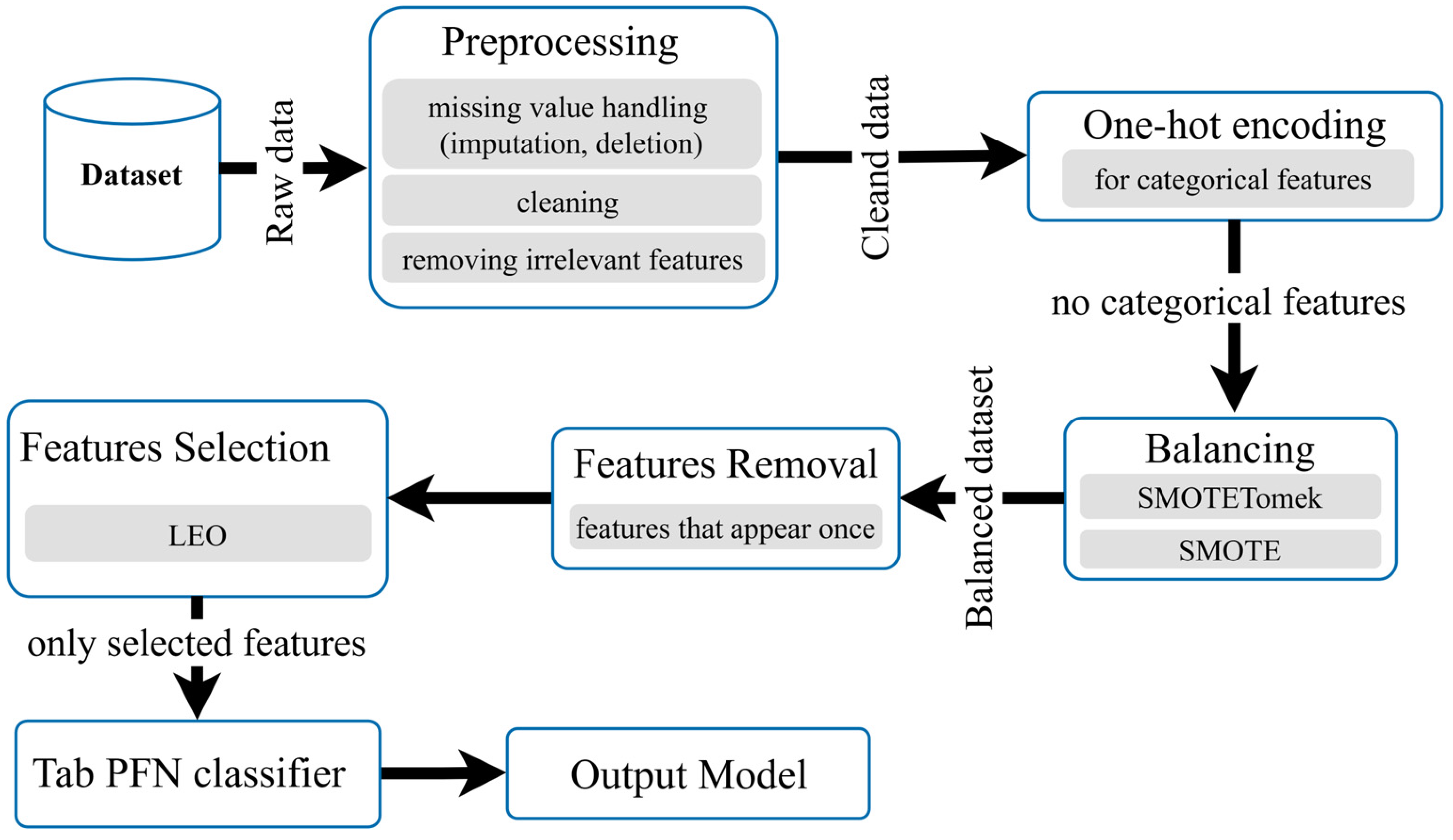

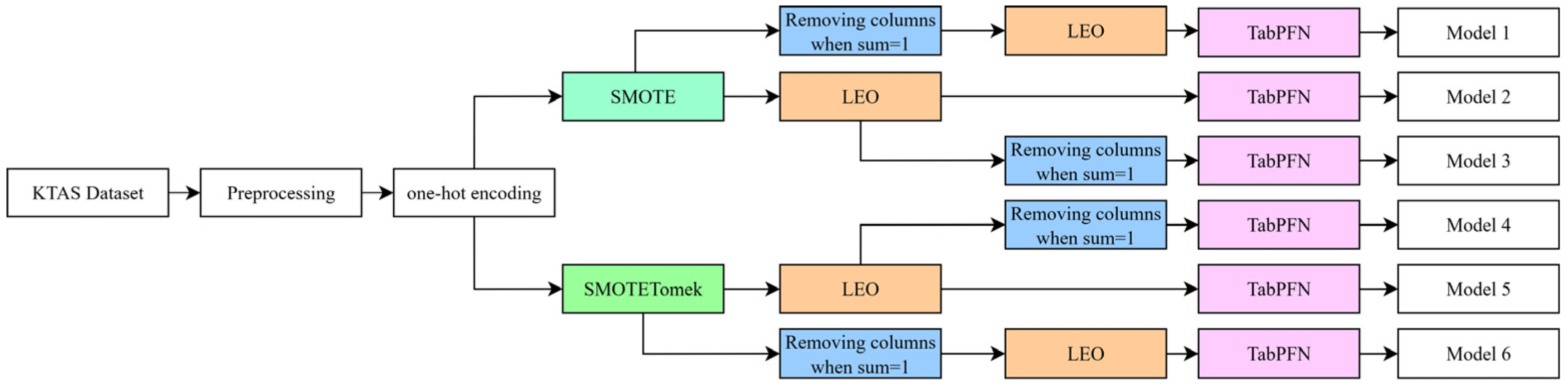

3.7. Classification Model

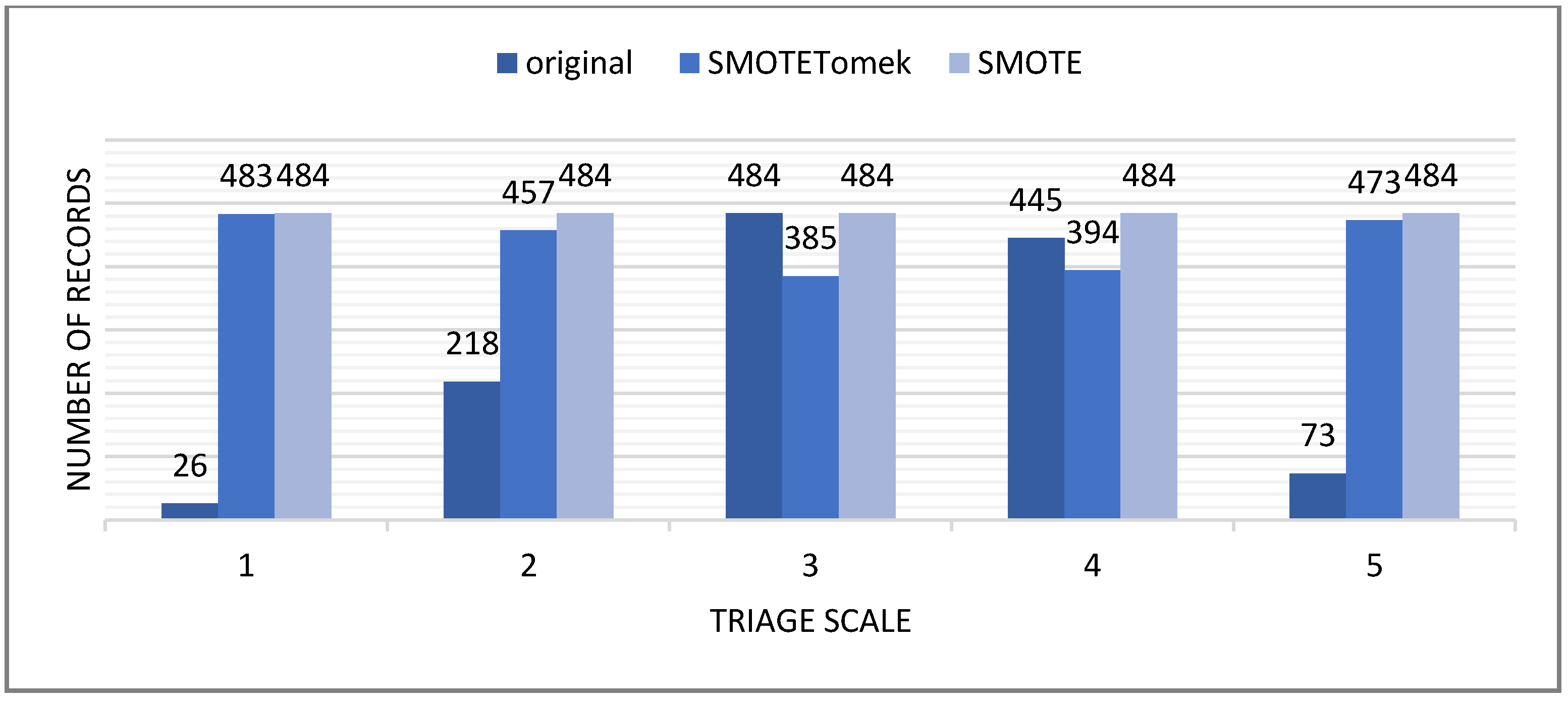

3.7.1. Data Balancing

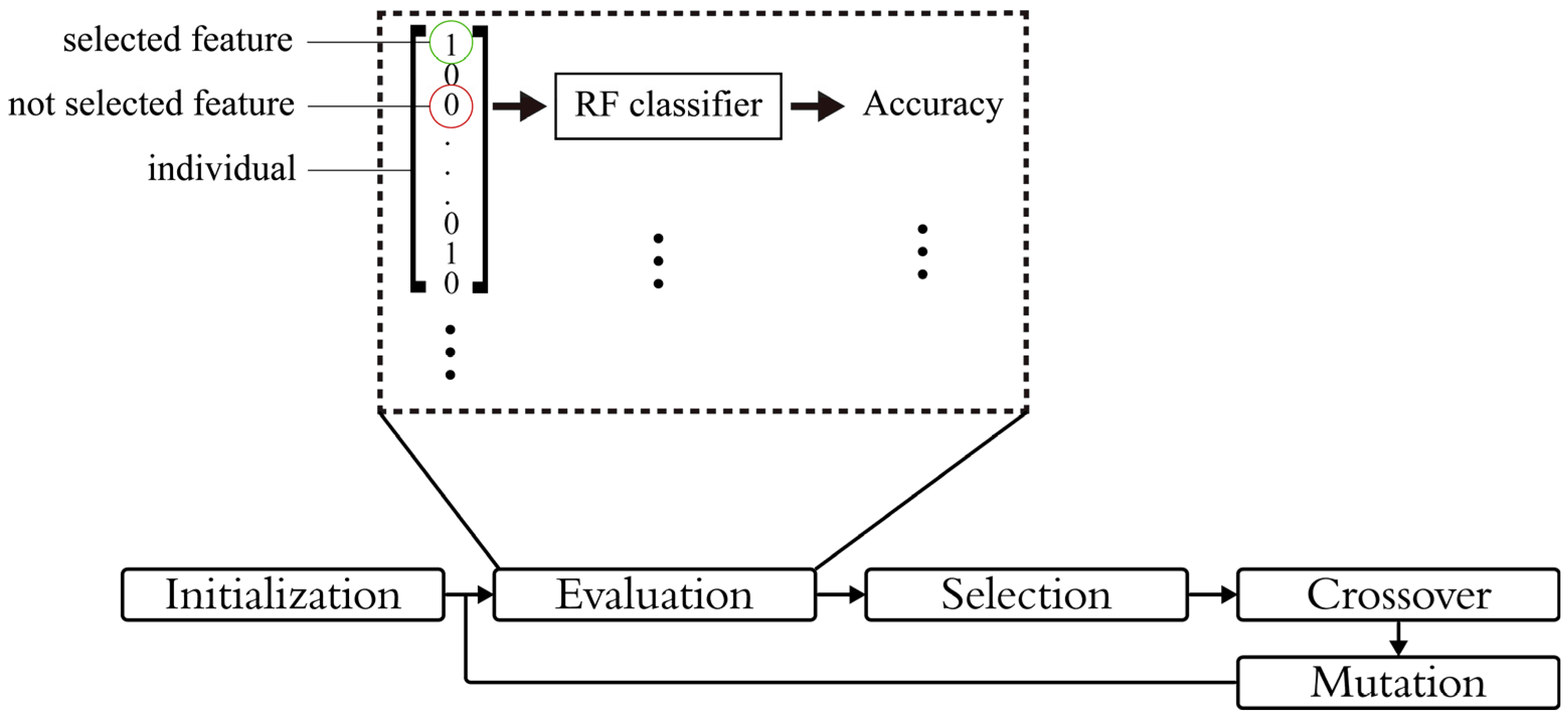
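The Abbreviations list defines Ssyn, r, SKNN and Sf, which are the symbols of the standard SMOTE interpolation Ssyn = Sf + r × (SKNN − Sf): a synthetic sample is placed at a random point on the segment between a minority sample Sf and one of its k nearest minority neighbours SKNN. SMOTETomek then removes Tomek links, i.e., pairs of opposite-class samples that are each other's nearest neighbour. The pure-Python sketch below assumes numeric features, Euclidean distance, k = 5 and removal of both members of each link (other variants drop only the majority-class member); the function names are illustrative, and in practice a library such as imbalanced-learn (`SMOTE`, `SMOTETomek`) would normally be used.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, ...]


def _knn(i: int, pool: Sequence[Point], k: int) -> List[int]:
    """Indices of the k nearest neighbours of pool[i] (Euclidean), excluding i itself."""
    order = sorted(range(len(pool)), key=lambda j: math.dist(pool[i], pool[j]))
    return [j for j in order if j != i][:k]


def smote(minority: Sequence[Point], n_new: int, k: int = 5,
          rng: Optional[random.Random] = None) -> List[Point]:
    """Generate n_new synthetic minority samples with Ssyn = Sf + r * (SKNN - Sf)."""
    if len(minority) < 2:
        raise ValueError("SMOTE needs at least two minority samples")
    rng = rng if rng is not None else random.Random(0)
    k = min(k, len(minority) - 1)
    synthetic: List[Point] = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        s_f = minority[i]                                    # Sf: a minority sample
        s_knn = minority[rng.choice(_knn(i, minority, k))]   # SKNN: one of its k neighbours
        r = rng.random()                                     # r: uniform in [0, 1)
        synthetic.append(tuple(f + r * (n - f) for f, n in zip(s_f, s_knn)))
    return synthetic


def tomek_links(X: Sequence[Point], y: Sequence[int]) -> List[Tuple[int, int]]:
    """Pairs (i, j) of opposite-class samples that are each other's nearest neighbour."""
    nn = [_knn(i, X, 1)[0] for i in range(len(X))]
    return [(i, j) for i, j in enumerate(nn)
            if i < j and nn[j] == i and y[i] != y[j]]


def smote_tomek(X: Sequence[Point], y: Sequence[int], minority_label: int,
                n_new: int, k: int = 5, seed: int = 0) -> Tuple[List[Point], List[int]]:
    """Oversample one class with SMOTE, then drop both members of every Tomek link."""
    rng = random.Random(seed)
    minority = [x for x, label in zip(X, y) if label == minority_label]
    new = smote(minority, n_new, k, rng)
    X_all = list(X) + new
    y_all = list(y) + [minority_label] * len(new)
    drop = {idx for pair in tomek_links(X_all, y_all) for idx in pair}
    keep = [idx for idx in range(len(X_all)) if idx not in drop]
    return [X_all[idx] for idx in keep], [y_all[idx] for idx in keep]
```

Because every synthetic point lies on a segment between two real minority samples, SMOTE never leaves the range spanned by the minority class; the Tomek step then cleans the class boundary where oversampling may have pushed samples into majority regions.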

3.7.2. Feature Selection and Optimization

Algorithm 2. LEO-based feature selection

Input: dataset, features, population size, maximum iterations, crossover pressure, mutation probability

Output: best feature subset

| Line | Statement |
|---|---|
| 1 | Representation: each individual is a binary vector |
| 2 | Initialization: generate an initial population of random binary vectors |
| 3 | While the stopping condition is not met |
| 4 | Fitness evaluation: based on a lightweight classifier (RF) |
| 5 | Selection: |
| 6 | Sort the population by fitness |
| 7 | Select the first half and divide it into two groups, fd and sd |
| 8 | If fd includes the parents |
| 9 | If population highest fitness ≤ fd highest fitness |
| 10 | New population = fd |
| 11 | Else |
| 12 | New population = sd |
| 13 | End if |
| 14 | Else |
| 15 | New population = sd |
| 16 | End if |
| 17 | Crossover: implementation of Equations (2) and (3) |
| 18 | Mutation: implementation of Equation (4) |
| 19 | End while |
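The overall wrapper structure of Algorithm 2 (binary masks, classifier-based fitness, iteration until a stopping condition) can be sketched as below. This sketch is not LEO: the fd/sd selection rule and the crossover and mutation of Equations (2)-(4) are not reproduced. It substitutes truncation selection (keep the fitter half), uniform crossover and bit-flip mutation, with a fixed iteration budget as the stopping condition. The `fitness` callable is where the lightweight RF classifier would be evaluated on the selected columns; the function and parameter names are illustrative.

```python
import random
from typing import Callable, Dict, List, Tuple

Mask = List[int]  # 1 = feature kept, 0 = feature dropped


def evolve_feature_mask(n_features: int,
                        fitness: Callable[[Mask], float],
                        pop_size: int = 20,
                        max_iter: int = 50,
                        mutation_prob: float = 0.05,
                        seed: int = 0) -> Mask:
    """Wrapper feature selection over binary masks (structure of Algorithm 2).

    Selection, crossover and mutation are generic substitutes, NOT the LEO
    rules (fd/sd selection, Equations (2)-(4)).
    """
    if pop_size < 4:
        raise ValueError("pop_size must be at least 4")
    rng = random.Random(seed)
    cache: Dict[Tuple[int, ...], float] = {}

    def score(mask: Mask) -> float:
        key = tuple(mask)
        if key not in cache:           # each distinct subset is evaluated once (one RF fit)
            cache[key] = fitness(mask)
        return cache[key]

    def non_empty(mask: Mask) -> Mask:
        if not any(mask):              # an empty feature subset cannot be scored
            mask[rng.randrange(n_features)] = 1
        return mask

    # Lines 1-2: initial population of random binary vectors.
    pop = [non_empty([rng.randint(0, 1) for _ in range(n_features)])
           for _ in range(pop_size)]
    best = max(pop, key=score)
    for _ in range(max_iter):                              # line 3: iteration budget
        ranked = sorted(pop, key=score, reverse=True)      # lines 4-6
        parents = ranked[: pop_size // 2]                  # substitute: keep the fitter half
        children: List[Mask] = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            child = [x if rng.random() < 0.5 else z for x, z in zip(a, b)]        # uniform crossover
            child = [1 - g if rng.random() < mutation_prob else g for g in child]  # bit-flip mutation
            children.append(non_empty(child))
        pop = parents + children
        best = max([best] + pop, key=score)
    return best


if __name__ == "__main__":
    target = [1, 0, 1, 1, 0]   # toy "informative features" for demonstration only
    toy_fitness = lambda m: sum(int(a == b) for a, b in zip(m, target))
    print(evolve_feature_mask(len(target), toy_fitness))
```

With scikit-learn, one possible fitness is `lambda m: cross_val_score(RandomForestClassifier(), X[:, np.flatnonzero(m)], y).mean()`. The cache ensures that each distinct mask triggers only one RF fit, which matters because fitness evaluation dominates the cost of wrapper feature selection.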

3.7.3. Classification

4. Data Collection and Preprocessing

5. System Evaluation

6. Results

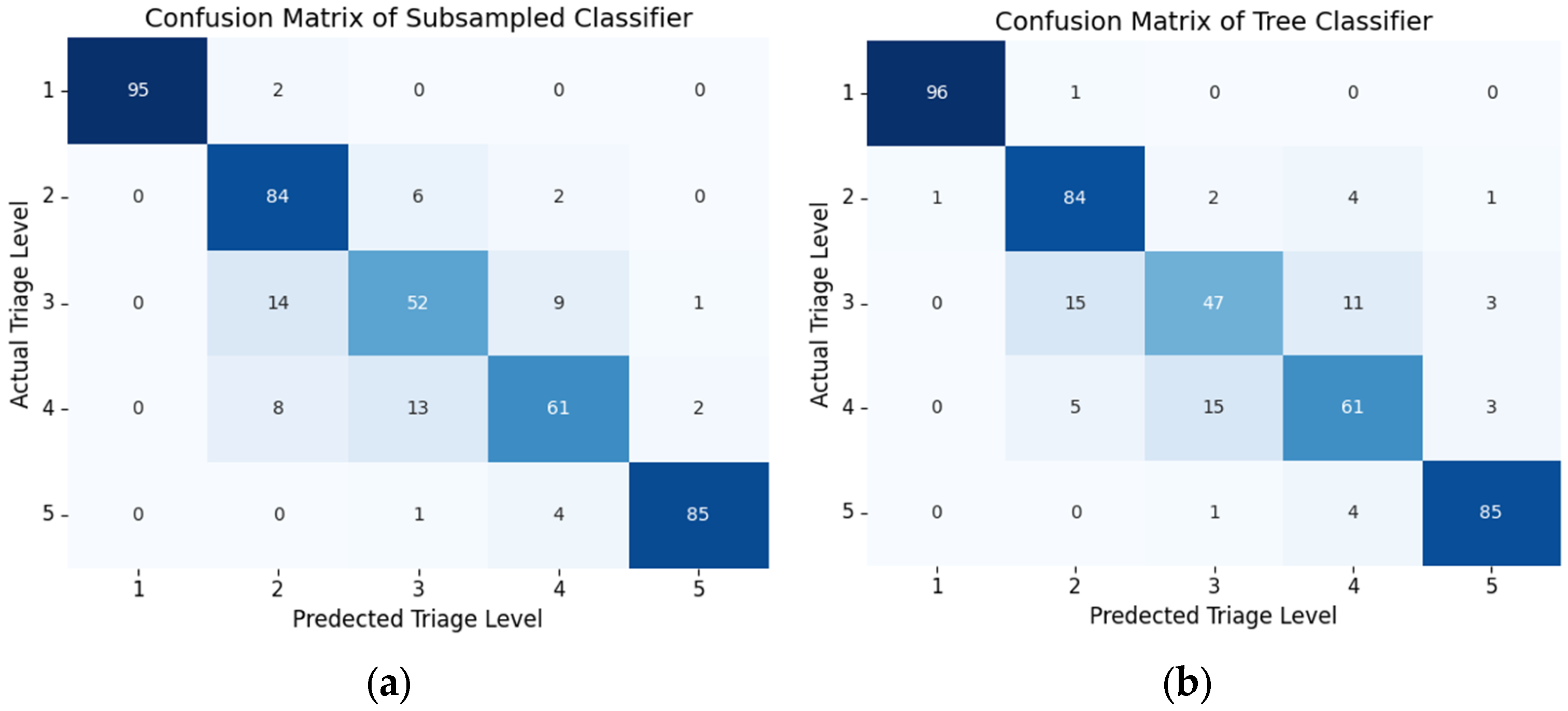

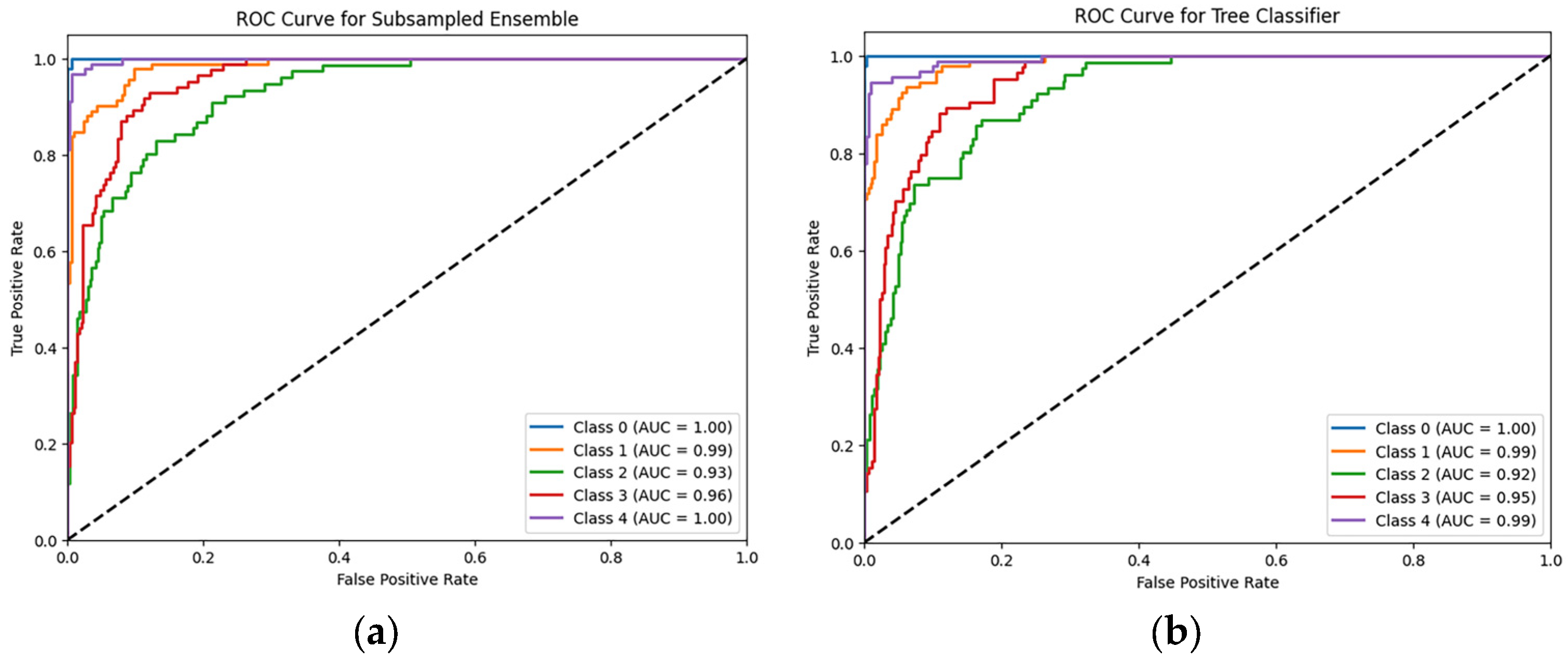

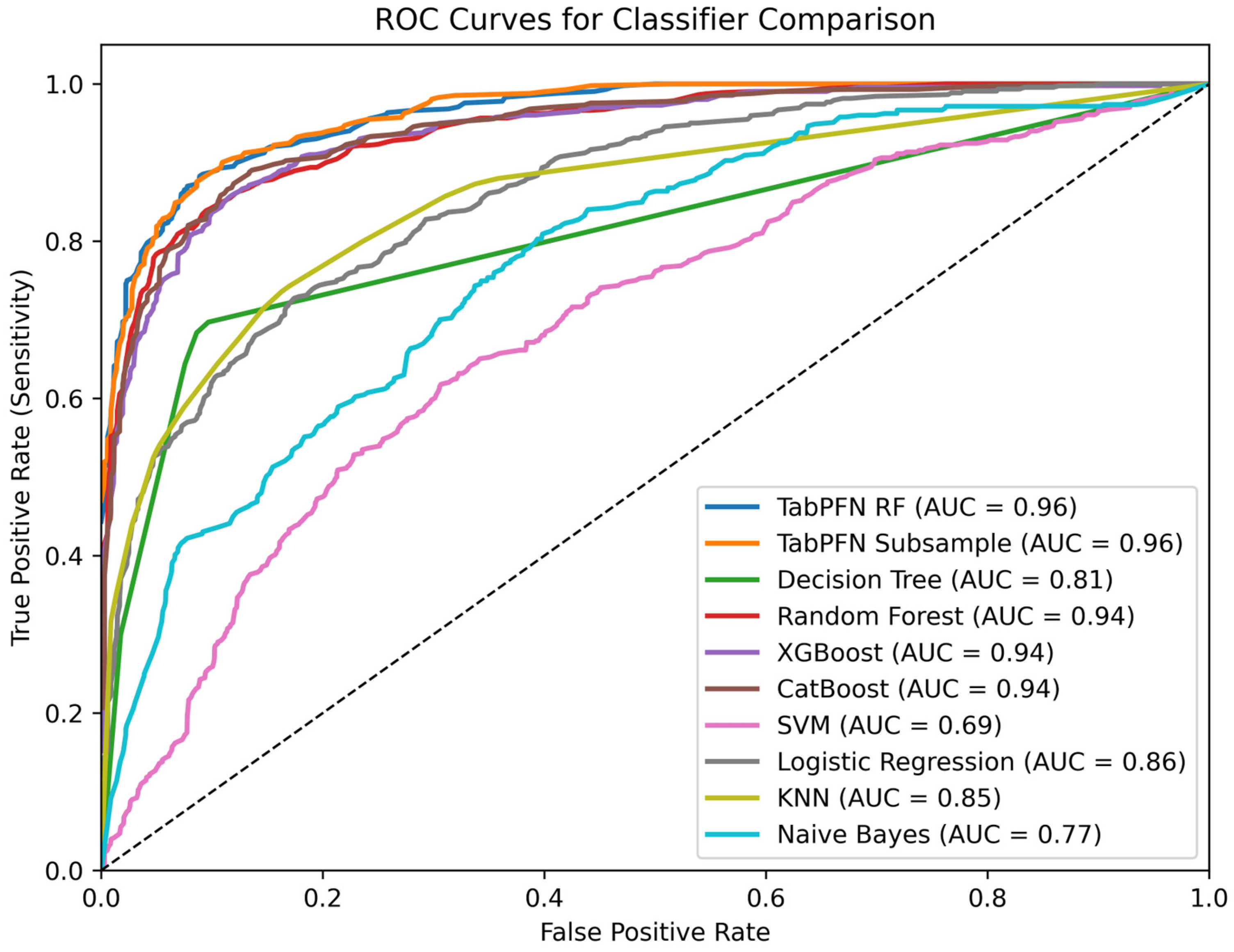

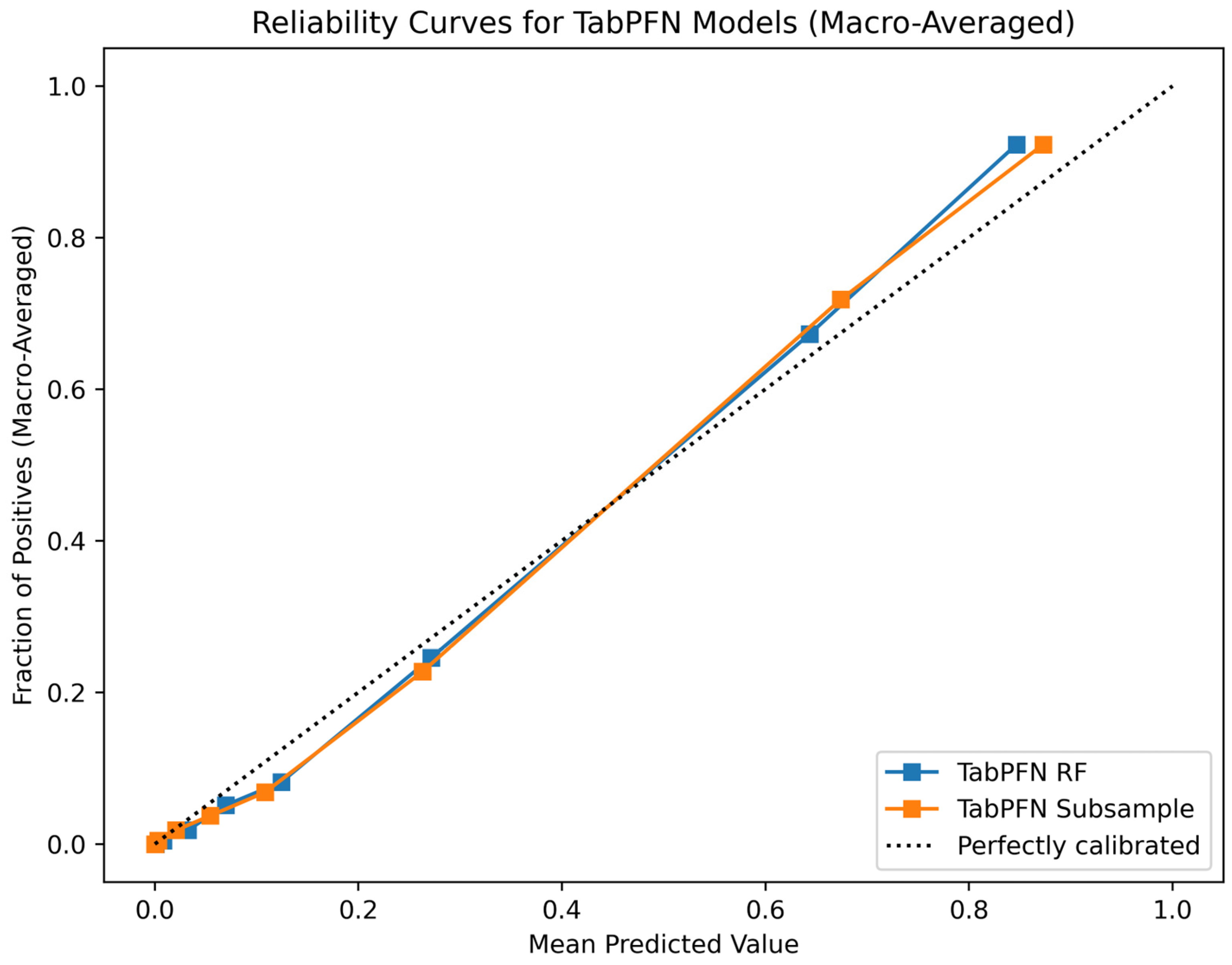

6.1. Classification Model Performance

6.2. IoT System Performance

7. Discussion

System Limitations and Challenges

8. Conclusion and Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SMOTE | Synthetic Minority Oversampling Technique |
| ED | Emergency Department |
| DT | Digital Twin |
| IoT | Internet of Things |
| AI | Artificial Intelligence |
| ML | Machine Learning |
| ATS | Australasian Triage Scale |
| CTAS | Canadian Triage and Acuity Scale |
| MTS | Manchester Triage System |
| ESI | Emergency Severity Index |
| SATS | South African Triage Scale |
| KTAS | Korean Triage and Acuity Scale |
| DTDL | Digital Twin Definition Language |
| SMOTETomek | Synthetic Minority Oversampling Technique Combined with Tomek Links |
| LEO | Lagrange Elementary Optimization |
| TabPFN | Tabular Prior-Data Fitted Network |
| MQTT | Message Queuing Telemetry Transport |
| HTTP | Hypertext Transfer Protocol |
| HR | Heart Rate |
| SpO2 | Oxygen Saturation |
| I2C | Inter-Integrated Circuit |
| SDK | Software Development Kit |
| UI | User Interface |
| CSV | Comma-Separated Values |
| Ssyn | Generated Samples |
| r | Random Number between 0 and 1 |
| SKNN | k-Nearest Neighbor Samples |
| Sf | Feature Samples |
| ROC | Receiver Operating Characteristic |
| AUC | Area Under the Curve |

References

- Samadbeik, M.; Staib, A.; Boyle, J.; Khanna, S.; Bosley, E.; Bodnar, D.; Lind, J.; Austin, J.A.; Tanner, S.; Meshkat, Y.; et al. Patient flow in emergency departments: A comprehensive umbrella review of solutions and challenges across the health system. BMC Health Serv. Res. 2024, 24, 274.

- Abu-Alsaad, H.A.; Al-Taie, R.R.K. NLP analysis in social media using big data technologies. TELKOMNIKA (Telecommun. Comput. Electron. Control) 2021, 19, 1840.

- Suamchaiyaphum, K.; Jones, A.R.; Markaki, A. Triage Accuracy of Emergency Nurses: An Evidence-Based Review. J. Emerg. Nurs. 2024, 50, 44–54.

- Suamchaiyaphum, K.; Jones, A.R.; Polancich, S. The accuracy of triage classification using Emergency Severity Index. Int. Emerg. Nurs. 2024, 77, 101537.

- Singh, M.; Fuenmayor, E.; Hinchy, E.P.; Qiao, Y.; Murray, N.; Devine, D. Digital twin: Origin to future. Appl. Syst. Innov. 2021, 4, 36.

- Siam, A.I.; Almaiah, M.A.; Al-Zahrani, A.; Elazm, A.A.; El Banby, G.M.; El-Shafai, W.; El-Samie, F.E.A.; El-Bahnasawy, N.A. Secure Health Monitoring Communication Systems Based on IoT and Cloud Computing for Medical Emergency Applications. Comput. Intell. Neurosci. 2021, 2021, 8016525.

- Georgieva-Tsaneva, G.; Cheshmedzhiev, K.; Tsanev, Y.-A.; Dechev, M.; Popovska, E. Healthcare Monitoring Using an Internet of Things-Based Cardio System. IoT 2025, 6, 10.

- Da’Costa, A.; Teke, J.; Origbo, J.E.; Osonuga, A.; Egbon, E.; Olawade, D.B. AI-driven triage in emergency departments: A review of benefits, challenges, and future directions. Int. J. Med. Inform. 2025, 197, 105838.

- Saleh, B.J.; Al_Taie, R.R.K.; Mhawes, A.A. Machine Learning Architecture for Heart Disease Detection: A Case Study in Iraq. Int. J. Online Biomed. Eng. (iJOE) 2022, 18, 141–153.

- Morais, D.; Pinto, F.G.; Pires, I.M.; Garcia, N.M.; Gouveia, A.J. The influence of cloud computing on the healthcare industry: A review of applications, opportunities, and challenges for the CIO. Procedia Comput. Sci. 2022, 203, 714–720.

- Mutashar, H.Q.; Mahmoud, S.M.; Abu-Alsaad, H.A. Cloud-based Digital Twin Framework and IoT for Smart Emergency Departments in Hospitals. Eng. Technol. Appl. Sci. Res. 2025, 15, 22269–22277.

- Moon, S.H.; Shim, J.L.; Park, K.S.; Park, C.S. Triage accuracy and causes of mistriage using the Korean Triage and Acuity Scale. PLoS ONE Public Libr. Sci. 2019, 14, e0216972.

- DTDL Models-Azure Digital Twins|Microsoft Learn [Internet]. Available online: https://learn.microsoft.com/en-us/azure/digital-twins/concepts-models (accessed on 17 July 2025).

- Cloud Computing Services|Microsoft Azure [Internet]. Available online: https://azure.microsoft.com/en-us/ (accessed on 17 July 2025).

- Azure IoT Hub Documentation|Microsoft Learn [Internet]. Available online: https://learn.microsoft.com/en-us/azure/iot-hub/ (accessed on 14 October 2024).

- Azure Functions Documentation|Microsoft Learn [Internet]. Available online: https://learn.microsoft.com/en-us/azure/azure-functions/ (accessed on 26 October 2024).

- What is Azure Digital Twins?-Azure Digital Twins|Microsoft Learn [Internet]. Available online: https://learn.microsoft.com/en-us/azure/digital-twins/overview (accessed on 17 July 2025).

- Aladdin, A.M.; Rashid, T.A. LEO: Lagrange elementary optimization. Neural. Comput. Appl. 2025, 37, 14365–14397.

- Hollmann, N.; Müller, S.; Eggensperger, K.; Hutter, F. TabPFN: A Transformer That Solves Small Tabular Classification Problems in a Second. arXiv 2023, arXiv:2207.01848.

- Alshathri, S.; El-Din Hemdan, E.; El-Shafai, W.; Sayed, A. Digital Twin-Based Automated Fault Diagnosis in Industrial IoT Applications. Comput. Mater. Contin. 2023, 75, 183–196.

- Lu, Q.; Shen, X.; Zhou, J.; Li, M. MBD-Enhanced Asset Administration Shell for Generic Production Line Design. IEEE Trans. Syst. Man Cybern. Syst. 2024, 54, 5593–5605.

- Wu, Z.; Ma, C.; Zhang, L.; Gui, H.; Liu, J.; Liu, Z. Predicting and compensating for small-sample thermal information data in precision machine tools: A spatial-temporal interactive integration network and digital twin system approach. Appl. Soft Comput. 2024, 161, 111760.

- Wang, E.; Tayebi, P.; Song, Y.-T. Cloud-Based Digital Twins’ Storage in Emergency Healthcare. Int. J. Networked Distrib. Comput. 2023, 11, 75–87.

- Corral-Acero, J.; Margara, F.; Marciniak, M.; Rodero, C.; Loncaric, F.; Feng, Y.; Gilbert, A.; Fernandes, J.F.; Bukhari, H.A.; Wajdan, A.; et al. The “Digital Twin” to enable the vision of precision cardiology. Eur. Heart J. 2020, 41, 4556–4564.

- Jameil, A.K.; Al-Raweshidy, H. A digital twin framework for real-time healthcare monitoring: Leveraging AI and secure systems for enhanced patient outcomes. Discov. Internet Things 2025, 5, 37.

- Chen, J.; Yi, C.; Du, H.; Niyato, D.; Kang, J.; Cai, J.; Shen, X. A Revolution of Personalized Healthcare: Enabling Human Digital Twin with Mobile AIGC. Available online: https://haptx.com (accessed on 6 January 2025).

- Ramya, M.C.; Elamathi, S.; Nithya, D.; Priyadarshini, K.; Suganthini, S. IoT-Powered Digital Twin Solution for Real-Time Health Monitoring [Internet]. Available online: www.ijert.org (accessed on 1 July 2025).

- Kang, H.Y.J.; Ko, M.; Ryu, K.S. Tabular transformer generative adversarial network for heterogeneous distribution in healthcare. Sci. Rep. 2025, 15, 10254.

- Hall, J.N.; Galaev, R.; Gavrilov, M.; Mondoux, S. Development of a machine learning-based acuity score prediction model for virtual care settings. BMC Med. Inf. Decis. Mak. 2023, 23, 200.

- Xiao, Y.; Zhang, J.; Chi, C.; Ma, Y.; Song, A. Criticality and clinical department prediction of ED patients using machine learning based on heterogeneous medical data. Comput. Biol. Med. 2023, 165, 107390.

- Wang, B.; Li, W.; Bradlow, A.; Bazuaye, E.; Chan, A.T.Y. Improving triaging from primary care into secondary care using heterogeneous data-driven hybrid machine learning. Decis. Support Syst. 2023, 166, 113899.

- Feretzakis, G.; Sakagianni, A.; Anastasiou, A.; Kapogianni, I.; Tsoni, R.; Koufopoulou, C.; Karapiperis, D.; Kaldis, V.; Kalles, D.; Verykios, V.S. Machine Learning in Medical Triage: A Predictive Model for Emergency Department Disposition. Appl. Sci. 2024, 14, 6623.

- Feretzakis, G.; Karlis, G.; Loupelis, E.; Kalles, D.; Chatzikyriakou, R.; Trakas, N.; Karakou, E.; Sakagianni, A.; Tzelves, L.; Petropoulou, S.; et al. Using Machine Learning Techniques to Predict Hospital Admission at the Emergency Department. J. Crit. Care Med. 2022, 8, 107–116.

- Jiang, H.; Mao, H.; Lu, H.; Lin, P.; Garry, W.; Lu, H.; Yang, G.; Rainer, T.H.; Chen, X. Machine learning-based models to support decision-making in emergency department triage for patients with suspected cardiovascular disease. Int. J. Med. Inform. 2021, 145, 104326.

- Araouchi, Z.; Adda, M. TriageIntelli: AI-Assisted Multimodal Triage System for Health Centers. Procedia Comput. Sci. 2024, 251, 430–437.

- Mebrahtu, T.F.; Skyrme, S.; Randell, R.; Keenan, A.-M.; Bloor, K.; Yang, H.; Andre, D.; Ledward, A.; King, H.; Thompson, C. Effects of computerised clinical decision support systems (CDSS) on nursing and allied health professional performance and patient outcomes: A systematic review of experimental and observational studies. BMJ Open 2021, 11, e053886.

- Ahmed Abdalhalim, A.Z.; Nureldaim Ahmed, S.N.; Dawoud Ezzelarab, A.M.; Mustafa, M.; Ali Albasheer, M.G.; Abdelgadir Ahmed, R.E.; Galal Eldin Elsayed, M.B. Clinical Impact of Artificial Intelligence-Based Triage Systems in Emergency Departments: A Systematic Review. Cureus 2025, 17, e85667.

- Stone, E.L. Clinical Decision Support Systems in the Emergency Department: Opportunities to Improve Triage Accuracy. J. Emerg. Nurs. 2019, 45, 220–222.

- Alasmary, H. ScalableDigitalHealth (SDH): An IoT-Based Scalable Framework for Remote Patient Monitoring. Sensors 2024, 24, 1346.

- Yew, H.T.; Wong, G.X.; Wong, F.; Mamat, M.; Chung, S.K. IoT-Based Patient Monitoring System. Int. J. Comp. Commun. Instrum. Engg. 2024, 4, 19–43.

- MLX90614 family Single and Dual Zone Infra Red Thermometer in TO-39 Features and Benefits. 2013. Available online: https://www.alldatasheet.com/datasheet-pdf/pdf/859400/MAXIM/MAX30102.html (accessed on 1 July 2025).

- Akintade, O.O.; Yesufu, T.K.; Kehinde, L.O. Development of Power Consumption Models for ESP8266-Enabled Low-Cost IoT Monitoring Nodes. Adv. Internet Things 2019, 09, 1–14.

- Jang, D.-H.; Cho, S. A 43.4μW photoplethysmogram-based heart-rate sensor using heart-beat-locked loop. In Proceedings of the 2018 IEEE International Solid-State Circuits Conference-(ISSCC), San Francisco, CA, USA, 11–15 February 2018; IEEE: New York, NY, USA, 2018; pp. 474–476.

- Yu, L.; Zhou, R.; Chen, R.; Lai, K.K. Missing Data Preprocessing in Credit Classification: One-Hot Encoding or Imputation? Emerg. Mark. Financ. Trade 2022, 58, 472–482.

- Pradipta, G.A.; Wardoyo, R.; Musdholifah, A.; Sanjaya, I.N.H.; Ismail, M. SMOTE for Handling Imbalanced Data Problem: A Review. In Proceedings of the 2021 Sixth International Conference on Informatics and Computing (ICIC), Jakarta, Indonesia, 3–4 November 2021; IEEE: New York, NY, USA, 2021; pp. 1–8.

- Swana, E.F.; Doorsamy, W.; Bokoro, P. Tomek Link and SMOTE Approaches for Machine Fault Classification with an Imbalanced Dataset. Sensors 2022, 22, 3246.

- Tuysuzoglu, G.; Dogan, Y.; Ozturk Kiyak, E.; Ersahin, M.; Ghasemkhani, B.; Ulas Birant, K.; Birant, D. Joint Tomek Links (JTL): An Innovative Approach to Noise Reduction for Enhanced Classification Performance. IEEE Access 2025, 13, 123059–123082.

- Shadeed, G.A.; Tawfeeq, M.A.; Mahmoud, S.M. Deep learning model for thorax diseases detection. TELKOMNIKA (Telecommun. Comput. Electron. Control) 2020, 18, 441.

- García-Valls, M.; Palomar-Cosín, E. An Evaluation Process for IoT Platforms in Time-Sensitive Domains. Sensors 2022, 22, 9501.

| Feature | Feature Description |
|---|---|
| Group | ED type [1 = local ED, 2 = regional ED] |
| Sex | Patient's sex, 1 for male and 2 for female |
| Age | Patient's age |
| Patients number per hour | Number of patients per hour |
| Arrival mode | 1 for walking, 2 for public ambulance, 3 for private vehicle, 4 for private ambulance, and 5, 6, 7 for other |
| Injury | Whether the visit is injury-related, 1 for No and 2 for Yes |
| Chief_complain | The patient's chief complaint |
| Mental | Mental state, 1 for alert, 2 for verbal response, 3 for pain response, and 4 for unresponsive |
| Pain | Patient has pain, 1 for Yes and 0 for No |
| NRS_pain | Pain score on the Numeric Rating Scale, as assessed by nurses [1–10] |
| SBP | Systolic blood pressure in mmHg |
| DBP | Diastolic blood pressure in mmHg |
| HR | Heart rate in beats per minute (bpm) |
| RR | Respiratory rate in breaths per minute (breaths/min) |
| BT | Body temperature in °C |
| Saturation | Blood oxygen saturation (SpO2) in % |
| KTAS_expert | KTAS triage level [1, 2, 3 = emergency; 4, 5 = non-emergency] |
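The KTAS_expert row of the feature table groups levels 1-3 as emergency and levels 4-5 as non-emergency. A small sketch of that mapping, together with a lookup for the coded Mental feature, is shown below. It assumes integer-coded columns exactly as listed in the table; the constant and function names are illustrative.

```python
from typing import Dict

# Grouping taken from the KTAS_expert row of the feature table.
EMERGENCY = {1, 2, 3}
NON_EMERGENCY = {4, 5}

# Codes of the Mental feature, as listed in the table.
MENTAL_STATE: Dict[int, str] = {
    1: "alert",
    2: "verbal response",
    3: "pain response",
    4: "unresponsive",
}


def ktas_to_binary(level: int) -> int:
    """Map a KTAS level to 1 (emergency, levels 1-3) or 0 (non-emergency, levels 4-5)."""
    if level in EMERGENCY:
        return 1
    if level in NON_EMERGENCY:
        return 0
    raise ValueError(f"KTAS level must be between 1 and 5, got {level!r}")


if __name__ == "__main__":
    print([ktas_to_binary(level) for level in range(1, 6)])
```

Raising on out-of-range codes, rather than silently mapping them, surfaces data-entry errors during preprocessing instead of letting them leak into the class labels.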

| Method | Accuracy % | Recall % | Precision % | F1-Score % |
|---|---|---|---|---|
| Decision tree | 57 ± 1.87 | 57 ± 1.78 | 57 ± 1.64 | 57 ± 1.64 |
| RF | 57 ± 1.93 | 57 ± 1.57 | 57 ± 1.84 | 57 ± 1.66 |
| XGBoost | 66 ± 2.32 | 66 ± 1.8 | 67 ± 1.89 | 65 ± 1.87 |
| SVM | 54 ± 3.69 | 54 ± 2.22 | 56 ± 4.39 | 51 ± 2.93 |
| LR | 66 ± 2.65 | 66 ± 2.66 | 69 ± 2.79 | 66 ± 2.77 |
| KNN | 60 ± 2.73 | 60 ± 3.35 | 63 ± 3.93 | 57 ± 3.58 |
| NB | 28 ± 6.03 | 28 ± 5.45 | 55 ± 3.93 | 31 ± 3.58 |
| Stacked model [35] | 80.05 | 73.26 | 80.27 | 74.41 |
| Subsampled PFN | 85.88 ± 2.42 | 86 ± 2.22 | 86 ± 2.41 | 86 ± 2.28 |
| Tree PFN | 84.97 ± 2.12 | 85 ± 1.86 | 85 ± 2 | 85 ± 1.97 |
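In the comparison table, Accuracy and Recall agree to within rounding for every method evaluated in this work (only the stacked model quoted from [35] differs). This is what support-weighted averaging over classes would produce, because weighted recall is Σ_c (n_c/N)·(TP_c/n_c) = Σ_c TP_c/N, which is exactly the accuracy. The sketch below therefore assumes weighted precision, recall and F1, and reports each metric as mean ± sample standard deviation across folds; neither the averaging mode nor the type of standard deviation is stated in the tables, so both are assumptions.

```python
import statistics
from collections import Counter
from typing import Dict, List, Sequence, Tuple


def weighted_scores(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy plus support-weighted precision, recall and F1 for one fold."""
    n = len(y_true)
    scores = {"accuracy": sum(t == p for t, p in zip(y_true, y_pred)) / n,
              "precision": 0.0, "recall": 0.0, "f1": 0.0}
    for c, n_c in Counter(y_true).items():
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        n_pred = sum(p == c for p in y_pred)
        prec = tp / n_pred if n_pred else 0.0   # undefined precision counted as 0
        rec = tp / n_c
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        weight = n_c / n                         # class support / total samples
        scores["precision"] += weight * prec
        scores["recall"] += weight * rec
        scores["f1"] += weight * f1
    return scores


def summarize(folds: List[Tuple[Sequence[int], Sequence[int]]]) -> Dict[str, Tuple[float, float]]:
    """Mean and sample standard deviation (in %) of each metric across at least two folds."""
    per_fold = [weighted_scores(t, p) for t, p in folds]
    summary = {}
    for metric in per_fold[0]:
        values = [100 * fold[metric] for fold in per_fold]
        summary[metric] = (statistics.mean(values), statistics.stdev(values))
    return summary


if __name__ == "__main__":
    folds = [([0, 0, 1, 1], [0, 1, 1, 1]), ([0, 1], [0, 1])]
    for name, (mean, sd) in summarize(folds).items():
        print(f"{name}: {mean:.2f} ± {sd:.2f}")
```

If the published numbers were produced with scikit-learn, the equivalent per-fold call would be `precision_recall_fscore_support(y_true, y_pred, average="weighted")`; the identity between weighted recall and accuracy holds regardless of the library.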

| Configuration | Classifier | Accuracy % | Recall % | Precision % | F1-Score % |
|---|---|---|---|---|---|
| Preprocessing only | Subsampled PFN | 70.8 ± 2.28 | 71 ± 4.85 | 74 ± 9.73 | 70 ± 4.58 |
| | Tree PFN | 70.8 ± 1.67 | 71 ± 3.11 | 72 ± 6.57 | 70 ± 2.29 |
| Without data balancing | Subsampled PFN | 71.2 ± 2.13 | 71 ± 3.18 | 73 ± 7.73 | 70 ± 2.48 |
| | Tree PFN | 71.6 ± 3.35 | 72 ± 3.25 | 71 ± 9.8 | 70 ± 2.81 |
| SMOTE only | | | | | |
| Model 1 | Subsampled PFN | 83.26 ± 1.42 | 84 ± 1.45 | 83 ± 1.43 | 83 ± 1.45 |
| | Tree PFN | 79.55 ± 0.87 | 80 ± 0.86 | 80 ± 0.71 | 79 ± 0.77 |
| Model 2 | Subsampled PFN | 84.09 ± 1.17 | 85 ± 1.24 | 84 ± 1.16 | 84 ± 1.21 |
| | Tree PFN | 81.40 ± 0.82 | 82 ± 0.94 | 81 ± 0.91 | 81 ± 0.95 |
| Model 3 | Subsampled PFN | 84.09 ± 1.48 | 85 ± 1.57 | 84 ± 1.54 | 84 ± 1.57 |
| | Tree PFN | 80.58 ± 1.6 | 81 ± 1.65 | 81 ± 1.79 | 81 ± 1.72 |
| Without LEO | Subsampled PFN | 82.23 ± 1.52 | 82 ± 1.46 | 82 ± 1.63 | 82 ± 1.56 |
| | Tree PFN | 79.13 ± 1.21 | 79 ± 1.14 | 79 ± 1.28 | 79 ± 1.18 |
| SMOTE and Tomek | | | | | |
| Model 4 | Subsampled PFN | 84.51 ± 1.94 | 84 ± 1.53 | 85 ± 1.72 | 84 ± 1.66 |
| | Tree PFN | 84.28 ± 1.67 | 84 ± 1.35 | 84 ± 1.45 | 84 ± 1.48 |
| Model 5 | Subsampled PFN | 84.97 ± 1.9 | 85 ± 1.41 | 85 ± 1.51 | 85 ± 1.48 |
| | Tree PFN | 84.28 ± 1.69 | 84 ± 1.29 | 84 ± 1.52 | 84 ± 1.43 |
| Model 6 | Subsampled PFN | 85.88 ± 2.42 | 86 ± 2.22 | 86 ± 2.41 | 86 ± 2.28 |
| | Tree PFN | 84.97 ± 2.12 | 85 ± 1.86 | 85 ± 2 | 85 ± 1.97 |
| Without LEO | Subsampled PFN | 80.87 ± 1.94 | 81 ± 1.46 | 81 ± 1.59 | 81 ± 1.51 |
| | Tree PFN | 78.59 ± 2.05 | 78 ± 2.12 | 79 ± 2.21 | 78 ± 2.29 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mutashar, H.Q.; Abu-Alsaad, H.A.; Mahmoud, S.M. An IoT-Enabled Digital Twin Architecture with Feature-Optimized Transformer-Based Triage Classifier on a Cloud Platform. IoT 2025, 6, 73. https://doi.org/10.3390/iot6040073

Mutashar HQ, Abu-Alsaad HA, Mahmoud SM. An IoT-Enabled Digital Twin Architecture with Feature-Optimized Transformer-Based Triage Classifier on a Cloud Platform. IoT. 2025; 6(4):73. https://doi.org/10.3390/iot6040073

Chicago/Turabian Style: Mutashar, Haider Q., Hiba A. Abu-Alsaad, and Sawsan M. Mahmoud. 2025. "An IoT-Enabled Digital Twin Architecture with Feature-Optimized Transformer-Based Triage Classifier on a Cloud Platform" IoT 6, no. 4: 73. https://doi.org/10.3390/iot6040073

APA Style: Mutashar, H. Q., Abu-Alsaad, H. A., & Mahmoud, S. M. (2025). An IoT-Enabled Digital Twin Architecture with Feature-Optimized Transformer-Based Triage Classifier on a Cloud Platform. IoT, 6(4), 73. https://doi.org/10.3390/iot6040073