1. Introduction

The Internet of Things (IoT) has rapidly permeated nearly every aspect of modern life, from smart homes and wearable devices to industrial control systems and critical infrastructure [1]. This pervasive interconnectedness, however, introduces significant security vulnerabilities. The huge scale of the IoT landscape, encompassing a heterogeneous mix of devices often operating with limited resources and varied communication protocols, creates a complex and challenging security environment [2]. The diverse and often insecure nature of IoT devices creates a broad and appealing target for cyber attackers. Once compromised, these devices can be weaponized to cause widespread service outages and major breaches of personal privacy. These growing risks highlight the critical demand for security solutions that are both highly effective and inherently flexible to safeguard complex IoT networks [3].

The fundamental design of most IoT devices, characterized by severely restricted processing power, limited memory, and low energy capacity, makes standard security measures unworkable. This inherent weakness, born from a lack of resources, continues to be the main challenge in protecting the growing IoT landscape [4]. These limitations produce a broad and susceptible attack landscape, leaving IoT networks open to a range of threats, including Denial-of-Service (DoS) attacks, enrollment in botnets, and the compromise of private data. This problem is intensified by the extreme diversity of the IoT environment; without uniform security standards, one weakness can be exploited across countless dissimilar devices. This situation calls for novel detection and prevention methods tailored for such intricate and distributed systems.

The rapid expansion of the Internet of Things (IoT) has introduced a significant security dilemma [5]. The lack of strong security protocols, coupled with the innate weaknesses of resource-limited IoT devices, makes these heterogeneous networks highly susceptible to attacks that can have serious repercussions [6]. Therefore, protecting the IoT ecosystem requires innovative security frameworks that can evolve alongside its dynamic threats. This need has driven a growing interest in machine learning (ML) methods. While decentralized approaches such as distributed and Federated Learning have emerged as alternatives that seek to preserve data privacy [7], the centralized ML approach remains widely adopted due to its operational advantages in security applications. A key benefit of this architecture is its ability to utilize large and varied datasets, a crucial factor for enhancing predictive performance and ensuring models generalize effectively to novel threats [8]. A centralized security architecture further permits the enforcement of a single, consistent security policy throughout the network. It also simplifies the otherwise challenging processes of managing model updates and version control [9]. Centralized machine learning does have drawbacks, though, such as possible privacy issues brought on by data aggregation [7,10] and reliance on reliable network connectivity for data transmission [11]. Nevertheless, the centralized methodology persists as a dominant and effective solution, especially in scenarios with lower data sensitivity or where strong, encrypted communication pathways are established [12]. To address these specific limitations, this paper presents the Centralized Two-Tiered Intrusion Detection System (C2T-IDS). The proposed framework is tailored for IoT settings, integrating centralized analytical power with the computational efficiency of tree-based ML to deliver a scalable and robust threat detection solution.

1.1. Problem Formulation

Effective security for the Internet of Things depends on anomaly detection that achieves both supreme reliability and stringent resource efficiency. This demand stems from the growing susceptibility of heterogeneous IoT devices to advanced cyber threats and the demonstrated failure of traditional security measures. The principal difficulty, consequently, is creating a system that can precisely pinpoint network threats with a minimal computational footprint and the agility to evolve with emerging attack strategies. The C2T-IDS framework introduced here tackles this problem via an innovative two-tiered design grounded in tree-based models, achieving an optimal equilibrium between precise threat identification and computational economy.

IoT ecosystems are inherently vulnerable to attack. This vulnerability is exacerbated by the diverse attack surfaces of various devices and their severe resource constraints, creating a critical obstacle for security designers [2]. The sheer dynamism of modern cyber threats, combined with the vast and irregular deployment patterns of IoT networks, often renders traditional signature-based IDS solutions ineffective. Addressing this requires a paradigm shift towards an anomaly detection mechanism that is not only robust and adaptive but also lightweight enough to function in resource-constrained environments, capable of identifying threats like DoS, MitM, and data-manipulation attacks. Crucially, for any practical deployment, such a system must achieve a very low false positive rate to ensure reliable operation and avoid disrupting the core functions of the devices it is meant to protect.

While numerous machine learning (ML) solutions have been proposed for IoT intrusion detection, they often fall into two categories: (1) highly accurate but computationally intensive models (e.g., deep learning) evaluated in simulated environments, or (2) lightweight models that lack comprehensive diagnostic capabilities. A significant gap exists in architectures that are both resource-efficient and capable of providing detailed, real-time attack diagnosis. Furthermore, many proposed systems are evaluated as monolithic classifiers, struggling with the severe class imbalance inherent in IoT traffic and lacking a pragmatic deployment strategy that distributes computational load. The C2T-IDS framework is designed to address this gap through its centralized, two-tiered architecture, which explicitly separates the tasks of efficient anomaly detection and detailed attack diagnosis.
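To make the separation of detection and diagnosis concrete, the following is a minimal sketch of a two-tier decision flow. It is an illustration under stated assumptions, not the C2T-IDS implementation: the feature names (`pkts_per_s`, `failed_logins`), the thresholds, and the helper `make_two_tier_ids` are hypothetical, and the hand-written rules stand in for trained tree-based models.

```python
from typing import Callable, Dict, List, Optional

Flow = Dict[str, float]  # hypothetical per-flow feature record


def make_two_tier_ids(
    detect: Callable[[Flow], bool],
    diagnose: Callable[[Flow], str],
) -> Callable[[Flow], Optional[str]]:
    """Chain a binary detector (tier 1) with an attack-type classifier (tier 2)."""

    def classify(flow: Flow) -> Optional[str]:
        # Tier 1: cheap benign-vs-anomalous decision, applied to every flow.
        if not detect(flow):
            return None  # benign: tier 2 is never invoked
        # Tier 2: detailed diagnosis, run only on flows flagged by tier 1.
        return diagnose(flow)

    return classify


# Stand-in rules (assumptions); each tier would normally be a trained model.
def toy_detector(flow: Flow) -> bool:
    return flow["pkts_per_s"] > 1000 or flow["failed_logins"] > 5


def toy_diagnoser(flow: Flow) -> str:
    return "DoS" if flow["pkts_per_s"] > 1000 else "BruteForce"


ids = make_two_tier_ids(toy_detector, toy_diagnoser)
flows: List[Flow] = [
    {"pkts_per_s": 20, "failed_logins": 0},
    {"pkts_per_s": 5000, "failed_logins": 0},
    {"pkts_per_s": 15, "failed_logins": 12},
]
results = [ids(f) for f in flows]
print(results)
```

Because the second tier only sees flows that the first tier flags, the more detailed classifier runs on a small fraction of traffic, which is one plausible way such a design keeps the average per-flow cost low.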

The exponential growth of IoT devices has vastly expanded the attack surface for cyber threats, a problem severely exacerbated by the devices’ inherent resource constraints. Conventional, rules-based security measures are particularly ill-suited to this environment due to their static architecture, which lacks the critical ability to learn from and adapt to novel and evolving malicious strategies [13]. This paper’s central objective is to design a dynamically adaptive security framework that leverages machine learning to detect anomalous network traffic indicative of a cyberattack. For real-world viability, the proposed solution must achieve not only high accuracy but also resilience against adversarial attempts to evade detection, all while operating within the stringent computational and energy constraints inherent to IoT ecosystems.

The severe computational and energy constraints inherent in most IoT devices present a unique obstacle to securing these networks, as they preclude the use of traditional, resource-intensive security features. To tackle this challenge directly, we have developed an intrusion detection system rooted in tree-based learning. The model is designed to strike a crucial balance, delivering high identification accuracy with minimal consumption of memory and processing power. This operational efficiency transforms the deployment of our system across diverse IoT hardware from a theoretical concept into a viable implementation.

1.2. Motivations and Contributions

The Internet of Things (IoT) is inherently vulnerable due to its device diversity, resource constraints, and continuously changing attack methods. Conventional security measures frequently fall short, as they tend to be rigid, computationally demanding, and ill-equipped to counter emerging threats. Consequently, the development of strong, efficient, and adaptable security frameworks is essential for protecting the expanding IoT ecosystem.

The vulnerabilities in IoT security extend beyond theoretical risks to pose serious practical consequences. For instance, hijacked devices can be assembled into massive botnets, which can launch denial-of-service assaults capable of crippling essential infrastructure [14]. In sensitive environments such as smart homes and medical centers, security intrusions into IoT systems can result in severe invasions of personal privacy and even pose direct risks to people’s physical safety. The lack of effective and readily deployable security measures not only hinders the widespread adoption of IoT technologies but also undermines the societal trust in these interconnected systems. These combined challenges of resource constraints, heterogeneity, and evolving threats render traditional security measures largely ineffective. Therefore, there is a pressing need to develop security solutions that are both effective and practical for resource-constrained IoT environments.

This paper makes several key contributions to the field of IoT security through the innovative application of tree-based machine learning techniques. Specifically, our contributions are:

A Lightweight Two-Tier Approach: We propose the lightweight C2T-IDS framework, which combines centralized analysis with a two-tiered tree-based approach for anomaly detection and attack diagnosis. It combines the predictions of multiple tree-based machine learning models (Decision Tree, Random Forest, Extra Tree, CatBoost, XGBoost, and LightGBM) to achieve enhanced accuracy and robustness in detecting anomalies in IoT network traffic. This approach improves on the performance of single-model solutions by leveraging the complementary strengths of each model.

Resource-Efficient Implementation: We present a resource-efficient implementation of C2T-IDS, optimized for IoT constraints and addressing the computational limitations of many IoT devices. Our approach focuses on techniques that minimize the memory footprint and processing overhead, making it feasible to deploy on constrained devices.

Comprehensive Evaluation on Real-World IoT Datasets: We conduct an extensive evaluation of our approach using publicly available real-world IoT network traffic datasets, demonstrating its effectiveness in identifying various types of attacks, including denial of service, man-in-the-middle, brute force, dictionary, and reconnaissance. Our results show a significant performance gain over previously published studies.

Analysis of Model Performance Trade-offs: We provide a detailed analysis of the performance trade-offs associated with different tree-based models, guiding future research on choosing the appropriate security solutions for specific IoT deployment scenarios.

2. Related Work

The landscape of IoT security, particularly Intrusion Detection Systems (IDS), has evolved from traditional signature-based methods to more adaptive machine learning (ML) approaches. This section critically reviews this evolution, focusing on the transition from pure simulation-based studies to those considering practical deployment constraints. We then narrow our focus to tree-based models and ensemble learning, concluding with an analysis of multi-stage architectures to clearly position our contribution within the state-of-the-art.

2.1. From Simulation to Deployment-Aware IDS

Early ML-based IDS research for IoT largely focused on achieving high detection accuracy using benchmark datasets, often employing complex models like Deep Neural Networks (DNNs) and Recurrent Neural Networks (RNNs) [15,16]. While these studies demonstrated the theoretical potential of ML, their practical deployment on resource-constrained IoT hardware was often not the primary concern. The significant computational and memory footprints of such models render them infeasible for direct deployment on typical edge devices like Raspberry Pis or microcontrollers [17,18].

In response, a growing body of research has begun to prioritize resource efficiency. Studies have systematically evaluated the feasibility of classical ML models on constrained hardware, consistently identifying tree-based models (e.g., Decision Trees, Random Forests) and lightweight ensembles (e.g., LightGBM) as offering the best balance between accuracy and computational cost [

19,

20,

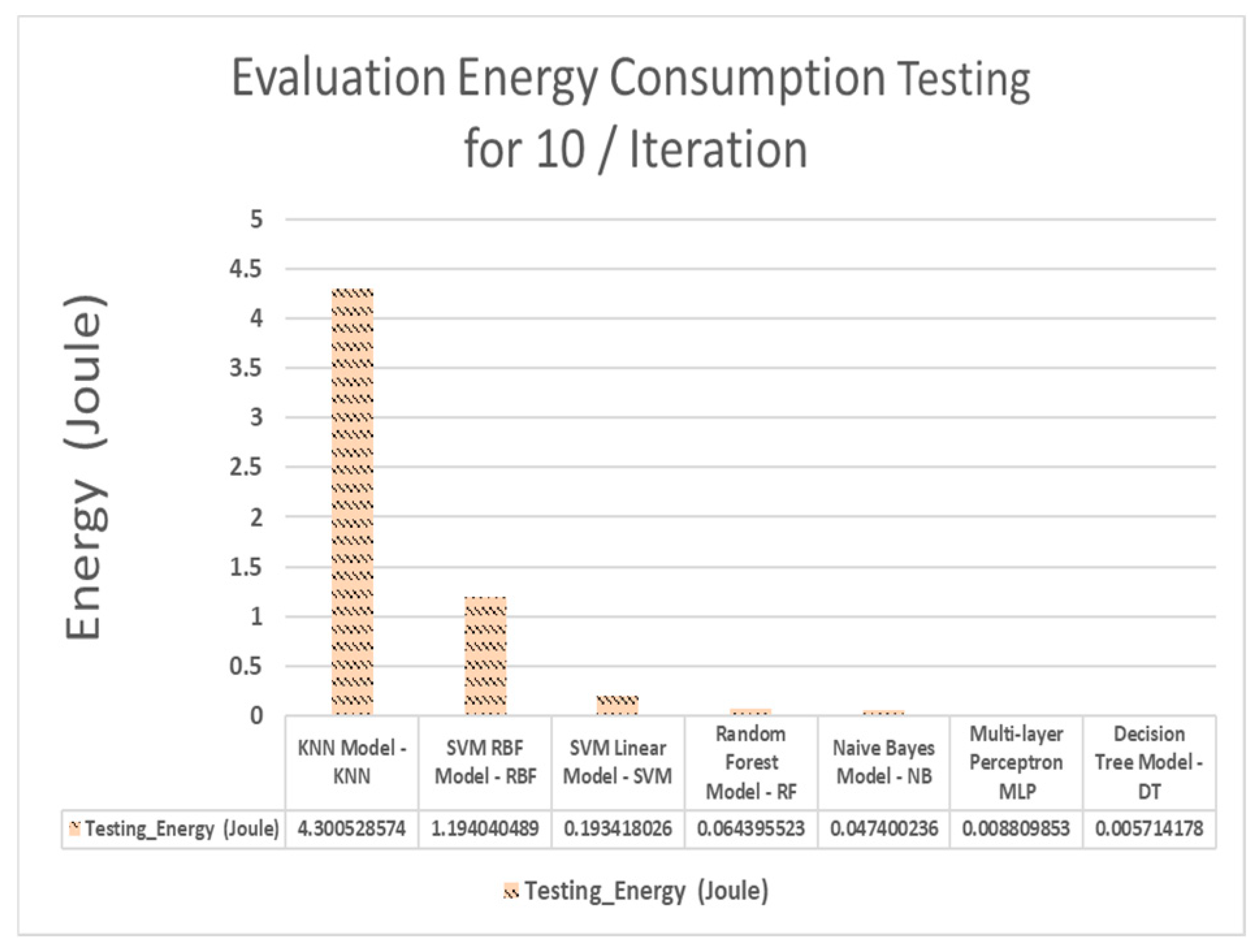

21]. For instance, as shown in

Figure 1 and

Table 1, tree-based models consistently demonstrate lower energy consumption and inference times compared to Support Vector Machines (SVMs) or K-Nearest Neighbors (KNN) [

22]. This empirical evidence from deployment-focused studies provides a strong justification for our selection of tree-based models as the core of the C2T-IDS framework.

However, a common limitation persists: many of these resource-efficient models are deployed as single-tier, monolithic classifiers. They are tasked with both detecting an anomaly and diagnosing its type in one step, an approach that often fails under the severe class imbalance present in real-world IoT traffic [23]. This leads to poor performance in identifying rare attack types, a critical shortfall for a comprehensive security system.
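The effect of class imbalance on a single-step classifier can be illustrated with a small worked example. The traffic mix below (950 benign, 45 DoS, 5 MitM flows) and the predictions are invented for illustration only; they are not taken from any dataset discussed here. The sketch shows how overall accuracy can look excellent while a rare attack class is missed entirely.

```python
from collections import Counter
from typing import Dict, List


def per_class_recall(y_true: List[str], y_pred: List[str]) -> Dict[str, float]:
    """Fraction of each true class that was predicted correctly."""
    totals = Counter(y_true)
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {label: hits[label] / totals[label] for label in totals}


# Hypothetical, heavily imbalanced traffic mix (assumption).
y_true = ["benign"] * 950 + ["dos"] * 45 + ["mitm"] * 5
# A classifier that handles the common classes but never predicts "mitm".
y_pred = ["benign"] * 950 + ["dos"] * 45 + ["benign"] * 5

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
recall = per_class_recall(y_true, y_pred)
macro_recall = sum(recall.values()) / len(recall)

print(f"accuracy={accuracy:.3f}")
print(f"recall={recall}")
print(f"macro_recall={macro_recall:.3f}")
```

Here accuracy is 99.5% even though recall on the rare class is zero, which is why per-class or macro-averaged metrics are usually more informative than accuracy for imbalanced intrusion data.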

2.2. Traditional Security Solutions and Their Limitations in IoT

The Internet of Things (IoT) has ushered in an era of unprecedented connectivity, revolutionizing fields from industrial automation to personal healthcare. This rapid expansion, however, has opened the door to a host of severe security vulnerabilities. The very architecture of IoT networks, defined by a vast array of incompatible devices, disparate communication standards, and severe hardware constraints, creates a uniquely complex and challenging attack surface. Unlike conventional computing systems, typical IoT endpoints operate with minimal memory, weak processing capabilities, and negligible storage. These intrinsic limitations render traditional, heavyweight security suites completely impractical, creating an urgent demand for lightweight, intelligent alternatives capable of identifying and neutralizing threats within these resource-starved and constantly evolving environments [24].

Conventional cybersecurity has long relied on a foundation of tools like firewalls, access control lists (ACLs), and signature-based intrusion detection systems (IDS). While these solutions remain effective within traditional IT infrastructures, they are fundamentally ill-equipped to address the unique and complex challenges inherent to IoT ecosystems [25]. Signature-based IDS, for example, are ineffective against novel or zero-day exploits, as these threats lack the predefined signatures these systems rely upon. This renders them unsuitable for the rapidly evolving IoT threat landscape. Moreover, their operational dependence on large, constantly updated signature databases and complex rule sets imposes a computational cost that is simply too high for resource-constrained IoT endpoints. Their most significant drawback is a fundamental inflexibility, which prevents them from independently adjusting to the volatile network conditions and constantly evolving attack methodologies found in IoT ecosystems [26]. Scalability also presents a major hurdle, as the administrative burden of overseeing a vast and heterogeneous collection of devices through conventional methods grows unmanageable [27].

2.3. Machine Learning for IoT Intrusion Detection

In response to the widely recognized shortcomings of conventional security, machine learning (ML) has emerged as a potent solution for the distinct challenges of IoT security. The intrinsic capacity of ML to learn from data and continuously evolve makes it exceptionally well-suited for this domain. This has prompted investigations into a wide range of ML approaches (supervised, unsupervised, and reinforcement learning) to construct resilient defensive systems for IoT infrastructure [13]. In intrusion detection, supervised learning leverages labeled datasets of known attacks to train classifiers. Conversely, unsupervised learning excels at anomaly detection by identifying deviations from established patterns of normal behavior, operating without pre-classified data [28]. Extensive research has validated the effectiveness of these machine learning approaches in detecting a wide range of cyber threats across diverse IoT network environments [29]. However, the selection and implementation of these strategies require more careful consideration for specific IoT deployment contexts.

2.4. Tree-Based Models and Ensemble Learning for IoT Security

For IoT security, tree-based models present a powerful solution by achieving an optimal balance between predictive accuracy and operational transparency. This class of algorithms encompasses various techniques, from fundamental decision trees to advanced ensembles like random forests and gradient boosting machines (GBMs). While individual decision trees provide clear, auditable logic crucial for diagnosing security incidents, their ensemble counterparts enhance this base with greater accuracy and resilience against complex attacks. Importantly, they accomplish this without sacrificing the computational frugality demanded by IoT devices [30]. However, individual decision trees can be prone to overfitting, particularly when dealing with complex datasets. Random Forests [31], on the other hand, take an ensemble approach by combining several decision trees, which provides greater robustness and reduces overfitting. Studies have shown that Random Forest is effective in detecting intrusions and anomalies in IoT traffic. Gradient Boosting Machines (e.g., XGBoost, LightGBM) [32] further improve predictive accuracy by iteratively learning from the errors of earlier models. Although these models have demonstrated efficacy in various security tasks, their implementation may be more challenging and resource-intensive than lighter-weight approaches. Studies have indicated that, when trained on IoT-specific security datasets, tree-based machine learning models can exhibit varying degrees of performance [33,34], indicating a need for careful evaluation of different models for a specific use case.

As a result, modern ML-based security solutions are now built on ensemble learning techniques that combine several models to enhance overall performance. Because it improves prediction accuracy and generalization, this method is well-suited to the intricate and dynamic threat landscape of IoT networks [35]. Ensembling techniques, including bagging, boosting, and stacking, have been shown to be highly successful in improving the dependability and performance of ML security solutions. These methods improve accuracy and attack resistance while compensating for individual learning deficiencies by combining predictions from several models. In the dynamic and varied world of IoT networks, these advantages are especially significant. Consequently, studies have repeatedly demonstrated that ensemble approaches hold promise for greatly enhancing IoT security [36].
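The idea of combining predictions from several models can be sketched with plain majority voting, which is the aggregation step used in bagging-style ensembles. This is a simplified illustration, not the combination scheme of any cited work: the three threshold rules and the feature layout of `x` are hypothetical stand-ins for trained classifiers.

```python
from collections import Counter
from typing import Callable, List, Sequence

Model = Callable[[Sequence[float]], str]


def majority_vote(models: List[Model], x: Sequence[float]) -> str:
    """Return the label predicted by the largest number of models."""
    votes = Counter(m(x) for m in models)
    return votes.most_common(1)[0][0]


# Hypothetical base models, each looking at a different feature (assumptions).
def model_a(x: Sequence[float]) -> str:
    return "attack" if x[0] > 1000 else "benign"  # packet rate


def model_b(x: Sequence[float]) -> str:
    return "attack" if x[1] > 0.5 else "benign"   # SYN ratio


def model_c(x: Sequence[float]) -> str:
    return "attack" if x[2] > 2 else "benign"     # distinct ports contacted


ensemble = [model_a, model_b, model_c]
first = majority_vote(ensemble, [1200.0, 0.1, 3.0])   # votes: attack, benign, attack
second = majority_vote(ensemble, [10.0, 0.9, 1.0])    # votes: benign, attack, benign
print(first, second)
```

Using an odd number of voters with two labels avoids ties; in both calls a single dissenting model is outvoted, which is the sense in which an ensemble compensates for the weaknesses of an individual learner.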

More generally, machine learning, including tree-based methods, has found its way into various specific IoT security applications. For example, in the domain of smart homes, ML has been used for detecting unusual device activities and user behavior [37]. In industrial IoT (IIoT), ML-based anomaly detection is crucial for identifying deviations from normal operating conditions that may indicate an attack [38]. Similarly, healthcare IoT devices face security and privacy threats, and ML-based solutions are used to detect malicious intrusions in these devices [39]. These targeted security applications show that machine learning techniques can be specialised to address specific vulnerabilities in specific sectors of the Internet of Things.

While the existing research highlights the potential of machine learning for IoT security, several gaps still persist. Many current solutions are not sufficiently resource-efficient for deployment on low-power IoT devices. Furthermore, some attack types, specifically zero-day exploits and advanced persistent threats, are not adequately addressed by existing models. Another problem is the lack of comprehensive and diverse datasets representing real-world IoT environments. Additionally, while individual tree-based models have been extensively studied, a comprehensive analysis of their performance in ensemble approaches tailored for specific IoT scenarios is needed. Furthermore, the resilience of machine-learning-based security solutions against adversarial attacks is an active area of research. Addressing these gaps is a vital step towards developing more effective and robust security mechanisms for the ever-expanding IoT landscape.

The existing literature highlights the limitations of traditional security methods for IoT and the potential of machine learning to address these shortcomings. While tree-based models and ensemble approaches have shown promise, significant challenges remain, including resource efficiency, adaptability, and the lack of comprehensive evaluation datasets. This research builds upon existing work by applying centralized tree-based machine learning techniques, using Decision Tree, Random Forest, Extra Tree, XGBoost, CatBoost, and LightGBM models, to detect anomalies in IoT network traffic while respecting the resource constraints of IoT devices. It benefits from a recently released dataset from the Canadian Institute for Cybersecurity [40].

2.5. Resource Efficiency of ML Models on Constrained Hardware

The practical deployment of Intrusion Detection Systems (IDS) on IoT hardware is fundamentally constrained by the limited computational resources of these devices. IDS are critical for identifying malicious activities, but traditional methods often struggle under these constraints. Machine learning (ML)-based IDS solutions offer improved detection capabilities, but not all models are suitable for resource-constrained devices like the Raspberry Pi. We evaluate various ML models for IDS applications on the Raspberry Pi, focusing on computational efficiency, detection accuracy, and real-time performance. We argue that decision tree-based models (e.g., Random Forest, Gradient Boosting, and LightGBM) are the most suitable due to their balance between performance and low computational overhead.

2.5.1. Computational Limitations

The Raspberry Pi, despite its versatility, has limited CPU power, limited memory (typically 1–8 GB), and no dedicated GPU, making it unsuitable for computationally heavy models such as:

Deep Neural Networks (DNNs): Require significant memory and processing power for training and inference [15].

Support Vector Machines (SVMs): While effective, their high computational complexity (O(n^2) to O(n^3)) makes them impractical for real-time IDS on low-power devices [17].

Recurrent Neural Networks (RNNs/LSTMs): Useful for sequential attack detection but too resource-intensive for the Pi [16].

2.5.2. Empirical Performance in IDS Applications

Studies have shown that tree-based models achieve comparable or superior accuracy to deep learning in network intrusion detection while being far more efficient [41]. For example:

A Decision Tree has been successfully deployed on an ARM device for real-time malware detection with >95% accuracy [19].

LightGBM has been reported to require up to 26 times less training time and 20% less test time than traditional boosting methods such as XGBoost, while maintaining high detection rates [20].

2.5.3. Energy and Resource Efficiency

The authors of [42] explain that many ML models have high energy consumption, which is problematic for battery-powered IoT devices. Additionally, real-time detection requires models with low inference latency, which rules out computationally heavy algorithms.

Tree-based models require fewer floating-point operations (FLOPs) than deep learning alternatives, leading to lower power consumption, which is a critical factor for IoT sustainability.

Figure 1 compares the energy consumption of different classifiers at testing time and shows that the Decision Tree model has the lowest energy consumption among the compared models [21].

The same research paper compares CPU usage across machine learning classifiers for a lightweight IDS on Raspberry Pi. Mutual Information (MI) and sparse matrix formatting reduced CPU time and memory usage significantly. Decision Tree (DT) and Multilayer Perceptron (MLP) showed the lowest CPU usage during testing, while SVM-RBF, RF, and KNN demanded higher resources. MLP, with default settings, achieved 96% accuracy at just 4.404 ms CPU time and 8.809 mJ energy, making it ideal for IoT. Increasing MLP’s hidden nodes beyond 4 offered no major gains but raised overhead. Overall, MLP and DT are the most efficient for lightweight systems.
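As a quick consistency check on the figures quoted above, energy, time, and average power are related by E = P · t. The sketch below derives the average power implied by the reported MLP numbers and extrapolates a daily energy budget. Two things are assumptions and not stated in the source: that the reported energy corresponds to the reported CPU time at a roughly constant power draw, and the rate of 10 inferences per second used for the extrapolation.

```python
# Reported MLP figures quoted in the text.
cpu_time_ms = 4.404  # CPU time per test run, in milliseconds
energy_mj = 8.809    # energy per test run, in millijoules

# millijoules / milliseconds = watts
implied_power_w = energy_mj / cpu_time_ms


def energy_per_day_j(inferences_per_s: float, energy_mj_each: float) -> float:
    """Total energy in joules for one day at a constant inference rate."""
    seconds_per_day = 86_400
    return inferences_per_s * seconds_per_day * energy_mj_each / 1000.0


# Hypothetical workload: 10 inferences per second (assumption).
daily_j = energy_per_day_j(10, energy_mj)

print(f"implied average power: {implied_power_w:.3f} W")
print(f"energy per day at 10 inferences/s: {daily_j:.1f} J")
```

The implied average power comes out at roughly 2 W, and the hypothetical workload at about 7.6 kJ per day, which gives a sense of scale when comparing classifiers for battery-powered deployments.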

Figure 2 compares the CPU usage of different classifiers at testing time; the Decision Tree model again shows the lowest CPU usage compared to other models such as KNN and SVM.

In addressing the security challenges inherent in resource-constrained lightweight IoT environments, exemplified by platforms like the Raspberry Pi, our research adopts a supervised learning paradigm centered around decision tree-based models. This choice is predicated on a multifaceted rationale. First, supervised learning, when provided with meticulously curated and labeled datasets that capture the nuances of both normal operational traffic and known malicious activities within IoT networks, empowers the development of highly discriminative classification models [43]. These models can effectively learn intricate patterns indicative of specific attack types, enabling accurate detection of previously observed threats, which are continuously documented and analyzed in the cybersecurity domain [22]. Second, while the training phase of supervised models often requires significant computational resources, usually requiring offline processing on more powerful infrastructure, the resulting decision tree models and their optimized ensemble variants offer a good balance between prediction accuracy and the computational cost of inference. This is essential for deployment on resource-constrained IoT devices, where real-time analysis needs to be performed without excessive power consumption or latency [23]. Furthermore, the inherent interpretability of individual decision trees provides a significant advantage in understanding the underlying logic behind classification decisions. This transparency allows security analysts to gain valuable insights into the specific features and conditions that trigger threat detection, facilitating the identification of potential vulnerabilities within IoT protocols and device behavior, and informing the refinement of security policies and incident response strategies [44]. In addition, ensemble techniques built on decision trees, such as Random Forests and Gradient Boosting, have consistently demonstrated robust performance and increased generalisation capability for a variety of network security tasks, including intrusion detection and anomaly detection. These ensemble methods can alleviate the limitations of individual decision trees and provide a more reliable and accurate detection mechanism suitable for lightweight IoT deployment, after careful optimization of the model and possible use of techniques such as model pruning or quantization to further reduce the resource footprint [45]. Finally, the increasing availability of labeled IoT network traffic datasets, derived from both simulated and real-world deployments, provides a tangible basis for training and rigorously evaluating these supervised models. While the representativeness and comprehensiveness of these datasets remain ongoing challenges, they offer a practical starting point for developing and validating data-driven security solutions for the burgeoning IoT ecosystem [46].
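The two properties argued for above, cheap inference and interpretability, can be seen in a minimal sketch of decision-tree prediction. The tree below is hand-built for illustration; its features (`syn_rate`, `failed_logins`), thresholds, and labels are hypothetical and do not come from a trained model. Inference costs one comparison per tree level, and the list of comparisons taken doubles as an explanation of the decision.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple, Union


@dataclass
class Leaf:
    label: str


@dataclass
class Node:
    feature: str
    threshold: float
    left: Union["Node", Leaf]   # branch taken when value <= threshold
    right: Union["Node", Leaf]  # branch taken when value > threshold


def predict_with_path(
    tree: Union[Node, Leaf], sample: Dict[str, float]
) -> Tuple[str, List[str]]:
    """Walk from the root to a leaf, recording each comparison made."""
    path: List[str] = []
    node = tree
    while isinstance(node, Node):
        value = sample[node.feature]
        if value <= node.threshold:
            path.append(f"{node.feature} <= {node.threshold}")
            node = node.left
        else:
            path.append(f"{node.feature} > {node.threshold}")
            node = node.right
    return node.label, path


# Hand-built toy tree (assumption), depth 2.
toy_tree = Node(
    feature="syn_rate",
    threshold=500.0,
    left=Node("failed_logins", 5.0, Leaf("benign"), Leaf("brute_force")),
    right=Leaf("dos"),
)

label, path = predict_with_path(toy_tree, {"syn_rate": 40.0, "failed_logins": 9.0})
print(label, path)
```

For this sample the traversal makes two comparisons and returns both the label and the rule path that produced it, which is the kind of auditable output an analyst can inspect directly.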

The paper [18] presents a systematic evaluation of common ML models’ resource consumption characteristics and their feasibility for Raspberry Pi implementation. They analyze eight representative algorithms spanning classical and deep learning approaches: Naïve Bayes (NB), Decision Trees (DT), Logistic Regression (LR), K-Nearest Neighbors (KNN), Random Forest (RF), Support Vector Machines (SVM), Multi-Layer Perceptrons (MLP), and Deep Neural Networks (DNN/CNN/LSTM). The analysis considers five critical resource dimensions that determine practical deployability on edge devices with limited computational capabilities.

The results summarized in Table 1 (Section 2.1) provide concrete guidelines for selecting ML models based on specific Raspberry Pi deployment constraints. The trade-off analysis reveals that while classical algorithms offer the best resource efficiency, their use cases may be limited to simpler problems. For more complex tasks that require neural networks, the findings suggest that optimized model architectures or hardware upgrades are needed to meet the significant resource requirements.

2.6. Prior Work on the CIC-IoT-2023 Dataset

The proliferation of the Internet of Things (IoT) has introduced significant cybersecurity challenges, particularly Distributed Denial-of-Service (DDoS) attacks, which exploit the inherent vulnerabilities of IoT networks. These attacks pose severe risks, including unauthorized device monitoring, service disruption, and compromised user security. Addressing these threats necessitates advanced methodologies, real-time data, and robust detection systems capable of countering evolving attack strategies. The authors of [47] presented a novel intrusion detection model designed to enhance DDoS attack detection in IoT environments. Their methodology employs a hybrid ensemble framework, integrating a K-nearest neighbors (K-NN) algorithm with logistic regression and Stochastic Gradient Descent (SGD) classifiers. The process consists of two key stages: first, a hybrid feature selection technique reduces dimensionality and enhances computational efficiency; second, a stacked ensemble model, whose hyperparameters are optimized via Grid Search-CV, is applied to maximize detection accuracy. This approach was assessed on the CIC-IoT-2023 and CIC-DDoS2019 datasets, using their real-time traffic and different attack patterns to validate its performance. Tests on these benchmark datasets demonstrated outstanding results, with the model achieving near-perfect accuracy scores of 99.965% and 99.968% with detection times of 0.20 and 0.23 s, respectively. This highlights its capability to combine rapid analysis with high precision. Furthermore, when benchmarked against contemporary methods, the model showed consistent superiority in critical areas such as accuracy, recall, and operational efficiency. These findings establish the proposed framework as a powerful and scalable defense against advanced DDoS attacks in IoT systems.

To tackle the problem of identifying attacks in IoT systems, the paper [48] investigated the performance of advanced deep learning models such as Deep Neural Networks (DNNs), Convolutional Neural Networks (CNNs), and Recurrent Neural Networks (RNNs). Their approach included preprocessing the data with label encoding and robust scaling. The framework was then assessed using the CIC-IoT-2023 dataset, a resource prized for its authentic traffic patterns that allow for reliable validation. The RNN model outperformed the others in this evaluation, attaining a top detection accuracy of 96.56%. This research highlights the promise of deep learning, with a specific emphasis on RNNs, for enabling pre-emptive threat response and providing a scalable security framework suitable for intricate IoT ecosystems.

Targeting the evolving security landscape of IoT, the work in [49] proposes a specialized Long Short-Term Memory (LSTM) network for intrusion detection. The model was developed and tested using the extensive CIC-IoT-2023 dataset, which contains network traffic from 105 devices across 33 different attack types. This diversity allows the system to detect both established and emerging threats. A key advantage of the LSTM network is its gated structure (comprising forget, input, and output gates), which retains long-range contextual information when processing sequential data and thereby addresses a common shortfall of standard RNNs. After preprocessing involving standardization and label encoding, the model attained 98.75% accuracy, a 98.59% F1-score, and 98.75% recall. These metrics reflect its proficiency in identifying various attacks, such as spoofing and DDoS. Benchmarking against modern techniques showed that the LSTM method outperforms alternatives, offering an effective balance between detection performance and resource demands. The authors identify increasing the model’s transparency for analytical review and enhancing its scalability for large-scale IoT implementations as future work.

Another study [50] presents a Transformer-based intrusion detection system designed to address IoT threats using the CIC-IoT-2023 dataset. The proposed methodology compares established deep learning models, including DNN, CNN, RNN, LSTM, and hybrid architectures, with a novel application of the Transformer model for both binary and multi-class classification tasks. Key contributions of this work include the following:

Comprehensive evaluation of seven deep learning models, with the Transformer model achieving 99.40% accuracy in multi-class classification, outperforming existing approaches by 3.8%, 0.65%, and 0.29% compared to benchmarks.

Efficient feature utilization from the CIC-IoT-2023 dataset, comprising 46 features, without redundancy, ensuring robust detection capabilities.

Comparative analysis highlighting the Transformer’s superiority in multi-class classification, despite slightly lower binary classification accuracy (99.52%) compared to DNN and CNN + LSTM models.

Experimental results demonstrate the Transformer’s effectiveness in detecting diverse attack categories, with precision, recall, and F1-scores exceeding 99%. The model also exhibits low inference time (5 µs per sample), making it suitable for real-time IoT security applications. This research advances the field of IoT security by integrating state-of-the-art deep learning techniques, offering a scalable and adaptive solution for intrusion detection. Future work will focus on dataset balancing and feature optimization to further enhance model generalizability and efficiency.

Conventional machine and deep learning methods for network anomaly detection frequently face limitations due to their dependence on vast amounts of labeled data and inflexible classification paradigms. To overcome these hurdles, the authors of [51] introduce a Transferable and Adaptive Network Intrusion Detection System (TA-NIDS) based on deep reinforcement learning. This system leverages the interaction between an agent and its environment to achieve strong detection performance even with scarce training data. A central innovation is a specially designed reward function that focuses on anomalies, which enables the TA-NIDS framework to detect clear threats efficiently and avoids the high computational cost of analyzing complete datasets. Furthermore, the system’s state representation modifies the initial features to eliminate fixed dimension constraints, facilitating adaptation and transfer across disparate network environments. Empirical evaluations across multiple benchmarks (IDS2017, IDS2018, NSL-KDD, UNSW-NB15, and CIC-IoT2023) demonstrate the system’s high accuracy and adaptability to novel contexts. These traits, combined with its cross-dataset transferability, sample efficiency, and focus on outliers, make TA-NIDS a viable framework for real-world network security, particularly in settings with scarce labeled data or continuously evolving threats.

Separately, the CIC-IoT-2023 dataset is emerging as a significant contribution to IoT security research, providing a robust and realistic benchmark for building and evaluating future IDS. The dataset was created through extensive experiments with 105 real IoT devices, including smart home appliances, cameras, sensors, and microcontrollers, arranged in a topology that mirrors actual IoT deployments. It captures 33 distinct attacks, categorized into seven classes: DDoS, DoS, Recon, Web-based, brute force, spoofing, and Mirai. All attacks are executed by malicious IoT devices targeting other IoT devices, a critical improvement over existing datasets that often rely on non-IoT systems for attack simulations. The data collection process employs a network tap and traffic monitors to capture both benign and malicious traffic, stored in pcap and CSV formats for flexibility in analysis.

2.6.1. Dataset Features

The dataset is provided in a tabular format, where each row represents a captured network flow and each column represents a feature extracted from that flow. These features are predominantly network flow characteristics; a selection is presented in Table 2.

2.6.2. Data Characteristics

Format: Tabular data (CSV files).

Size: 321.88 MB, with 722,144 instances.

Labels: Binary labels indicating normal or attack traffic.

Class Imbalance: The dataset exhibits a class imbalance, where attack instances are more prevalent than normal instances.

Feature Types: The feature types range from continuous numeric values to categorical. Data preprocessing to handle these different feature types will be required before training ML models.
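
One common way to account for the class imbalance noted above is to weight each class inversely to its frequency before training. The sketch below illustrates that heuristic only; it is not the preprocessing used in this study, and the label strings and counts are invented for the example.

```python
from collections import Counter

def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * cnt) for cls, cnt in counts.items()}

# Toy label column (illustrative counts): attack flows outnumber normal flows.
labels = ["attack"] * 8 + ["normal"] * 2
weights = balanced_class_weights(labels)
print(weights)
```

The minority class receives the larger weight, so errors on it cost more during training.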

The CIC-IoT-2023 dataset provides a highly relevant and robust benchmark for evaluating our proposed anomaly detection framework. This suitability stems from the dataset’s accurate reflection of real-world IoT network traffic patterns. The dataset’s wide variety of simulated threats—ranging from standard Denial-of-Service (DoS) incursions to advanced botnet infestations and man-in-the-middle (MITM) intrusions—provides a thorough evaluation of our model’s detection proficiency. Its public availability offers an additional benefit by fostering open research practices, enabling the verification of our results, and simplifying benchmarking against subsequent studies. Being among the most up-to-date collections in IoT security, CIC-IoT-2023 delivers valuable intelligence on modern attack patterns. Finally, inherent complexities such as the uneven distribution of attack versus benign traffic and the diversity of incorporated features pose a substantial test for ML algorithms, thereby reinforcing the credibility of our evaluation approach.

2.7. Multi-Stage and Tiered Security Architectures

This section compares our proposed two-tiered IoT security system with relevant studies in the literature, highlighting the effectiveness and advantages of our approach.

2.7.1. Anomaly Detection Techniques

Several previous studies have examined the use of machine learning to detect anomalies in Internet of Things (IoT) networks. For example, Allka et al. [52] used autoencoders for unsupervised anomaly detection, while Gill and Dhillon [53] employed supervised learning techniques, such as support vector machines (SVM) and neural networks. Our approach differs from these studies in that it uses tree-based models, which offer advantages in interpretability and computational efficiency. Moreover, while these studies focused primarily on anomaly detection, our system incorporates a second tier for attack diagnosis.

2.7.2. Attack Diagnosis

Limited research has directly addressed the specific challenge of diagnosing attacks on IoT networks. Some studies have focused on classifying general network attacks using traditional signature-based methods [54]. Other studies have used rule-based systems to identify specific attack types [55]. In contrast, our approach employs tree-based machine learning models to automatically learn patterns and classify attack types based on network traffic features, offering greater flexibility and adaptability.

2.7.3. Multi-Stage Architecture

While some studies have employed multi-stage security systems, few have specifically combined anomaly detection with attack diagnosis in a two-tiered architecture tailored for IoT. For example, the authors of [6] proposed a multi-stage intrusion detection system using ensemble methods, but their approach focused on improving detection accuracy without specifically addressing attack diagnosis. Our two-tiered architecture is designed to optimize both detection and diagnosis, enabling a more comprehensive and effective security system.

3. C2T-IDS System Architecture

This section presents the Centralized Two-Tiered Tree-Based Intrusion Detection System (C2T-IDS) architecture. Designed for IoT constraints, the framework separates real-time anomaly detection on edge devices from detailed attack diagnosis on a centralized server. The following details the system’s design, data flow, and rationale.

3.1. Identified Research Gaps and Our Contribution

This subsection outlines the research gaps motivating C2T-IDS, including the performance-deployment trade-off and the need for specialized, diagnosable architectures. Then it defines our contribution to addressing these gaps through a practical two-tier framework. Our work aims to address the following.

Bridging the Performance-Deployment Gap: While studies have identified tree-based models as resource-efficient [18], and others have pursued high accuracy with complex models [48, 50], a framework that integrates deployment-aware model selection with high diagnostic accuracy in a practical architecture is lacking.

Specialized Two-Tiered Architectures: While multi-stage systems exist, a framework that explicitly separates lightweight anomaly detection from computationally intensive attack diagnosis is underexplored.

Comprehensive Evaluation: There is a need for a thorough evaluation of modern tree-based ensembles on a recent, realistic dataset like CIC-IoT-2023, specifically for both detection and diagnosis tasks.

Granular Attack Diagnosis: Many solutions focus on detection but lack deep, model-based diagnosis of attack types, which is crucial for targeted mitigation.

In direct response to these gaps, we propose the C2T-IDS framework. Our work builds upon the existing research by designing a centralized, two-tiered system that leverages the established resource efficiency of tree-based models (addressing Gap 1) in a specialized architecture (addressing Gap 2). We provide a comprehensive evaluation on the CIC-IoT-2023 dataset (addressing Gap 3), with a focus on both high-level detection and granular attack diagnosis (addressing Gap 4). Unlike prior works that focus on either pure performance or basic efficiency, C2T-IDS integrates these aspects into a cohesive system designed for real-world deployability. This positions C2T-IDS as a framework that explicitly bridges the gap between pure performance-focused models and those that are efficient but lack diagnostic depth.

3.2. C2T-IDS Architecture

The Centralized Two-Tiered Tree-Based Intrusion Detection System (C2T-IDS) is designed to provide a scalable and efficient security solution for IoT ecosystems. Its architecture, depicted in Figure 3, is strategically decentralized for data collection but centralized for analysis, balancing responsiveness with analytical power. This design directly addresses the unique challenges of IoT environments, including resource constraints, network heterogeneity, and the need for real-time threat detection.

3.3. Architectural Overview and Deployment Scenario

The C2T-IDS framework operates on a hybrid edge-centralized paradigm, which is crucial for practical IoT deployment:

Edge Layer (Data Collection): IoT devices (sensors, cameras, smart appliances) are deployed in the field and communicate through one or more Edge Gateways (e.g., Raspberry Pi, industrial IoT gateways). These gateways serve as the first point of aggregation and are responsible for initial data collection and preprocessing.

Centralized Analysis Layer (Security Processing): The core machine learning analysis is performed on a Centralized Server with substantial computational resources. This server hosts the two-tiered machine learning engine that constitutes the intelligence of C2T-IDS.

To validate the practical deployability of the C2T-IDS framework, the Tier-1 detection module was specifically designed for implementation on common edge computing hardware, with hardware comparable in specification to a Raspberry Pi serving as our primary target platform. This choice is motivated by the Pi’s widespread use in IoT prototyping and its resource profile, which is representative of many commercial edge gateways. The selection of tree-based models for Tier-1, particularly the optimized Decision Tree and Random Forest, was driven by their documented efficiency on ARM-based architectures, as discussed in Section 2.5. In our deployment scenario, this resource-limited hardware may act as the edge gateway, hosting the lightweight Tier-1 classifier to perform initial traffic analysis. This setup allows for real-time anomaly detection at the network periphery and ensures that only suspicious traffic is forwarded to the central server for deeper Tier-2 diagnosis, which is the computational division central to our architecture. As a result, resource-constrained IoT devices are not burdened with complex security processing, while comprehensive analysis remains available through centralized computation.

3.4. Data Flow and Processing Pipeline

The operational workflow of C2T-IDS follows a structured pipeline that optimizes both detection efficiency and resource utilization:

3.4.1. Stage 1: Network Traffic Capture and Feature Extraction

At the edge gateway level, network traffic between IoT devices and the main network infrastructure is continuously monitored. A lightweight monitoring agent captures all traffic and extracts network flow features, producing the feature vectors that serve as input to the security analysis tiers.
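
As a rough illustration of what such a monitoring agent does, the sketch below groups captured packets into flows and derives a few per-flow statistics. The `Packet` fields, the flow key, and the feature names are hypothetical choices for this example and do not reproduce the CIC-IoT-2023 feature set.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class Packet:
    ts: float    # capture timestamp in seconds
    src: str     # source address
    dst: str     # destination address
    proto: str   # transport protocol name
    size: int    # packet length in bytes

def flow_features(packets):
    """Group packets by (src, dst, proto) and compute simple per-flow statistics."""
    flows = defaultdict(list)
    for p in packets:
        flows[(p.src, p.dst, p.proto)].append(p)

    features = {}
    for key, pkts in flows.items():
        duration = max(p.ts for p in pkts) - min(p.ts for p in pkts)
        total_bytes = sum(p.size for p in pkts)
        features[key] = {
            "pkt_count": len(pkts),
            "total_bytes": total_bytes,
            "mean_size": total_bytes / len(pkts),
            "duration": duration,
            # Packets per second; defined as 0.0 for single-instant flows.
            "rate": len(pkts) / duration if duration > 0 else 0.0,
        }
    return features

packets = [
    Packet(0.0, "10.0.0.5", "10.0.0.1", "TCP", 60),
    Packet(0.5, "10.0.0.5", "10.0.0.1", "TCP", 100),
    Packet(0.2, "10.0.0.7", "10.0.0.1", "UDP", 40),
]
feats = flow_features(packets)
print(feats[("10.0.0.5", "10.0.0.1", "TCP")])
```

Each resulting dictionary corresponds to one row of the tabular input consumed by the tiers.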

3.4.2. Stage 2: Tier-1—Lightweight Attack Detection

Location: Deployed on the edge gateway for low-latency analysis

Function: Executes optimized binary classification models to distinguish between Normal and Attack traffic

Model Characteristics: Utilizes lightweight tree-based models (Decision Tree, Random Forest) specifically tuned for speed and low false-negative rates

Output: Binary classification result; if attack is detected, the feature vector is forwarded to the centralized server

The placement of Tier-1 at the edge enables immediate response to obvious threats and significantly reduces the amount of data that needs to be transmitted to the central server.

3.4.3. Stage 3: Tier-2—Comprehensive Attack Diagnosis

Location: Hosted on the centralized server where computational resources are abundant

Function: Performs multi-class classification to identify the specific attack type (DDoS, MITM, Reconnaissance, etc.)

Model Characteristics: Employs more sophisticated ensemble models (XGBoost, LightGBM) capable of handling complex pattern recognition and class imbalance

Input: Feature vectors flagged as malicious by Tier-1, augmented with additional contextual information

This separation allows Tier-2 models to be more complex and accurate without impacting the performance of resource-constrained edge devices.
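
The hand-off between Stages 2 and 3 can be sketched as follows. The two stub classifiers are simple hand-written rules that stand in for the trained Tier-1 and Tier-2 models; their thresholds and the `rate` feature are assumptions made only so the example runs.

```python
def two_tier_classify(features, tier1, tier2):
    """Run Tier-1 first; invoke Tier-2 only if Tier-1 flags the flow as an attack."""
    if tier1(features) == "Normal":
        return "Normal"
    return tier2(features)

tier2_calls = {"count": 0}  # tracks how often the central model is invoked

def tier1_stub(features):
    # Stand-in for the lightweight edge model (hypothetical rule).
    return "Attack" if features["rate"] > 100 else "Normal"

def tier2_stub(features):
    # Stand-in for the centralized multi-class model (hypothetical rule).
    tier2_calls["count"] += 1
    return "DDoS" if features["rate"] > 1000 else "Reconnaissance"

flows = [{"rate": 5}, {"rate": 2000}, {"rate": 300}, {"rate": 12}]
results = [two_tier_classify(f, tier1_stub, tier2_stub) for f in flows]
print(results, tier2_calls["count"])
```

Only the two flagged flows reach the second stub, which mirrors how the central server sees a subset of the traffic.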

3.4.4. Stage 4: Response and Mitigation

Based on the diagnostic results from Tier-2, the centralized server initiates appropriate countermeasures:

Automated Responses: Direct instructions to edge gateways for blocking malicious IP addresses, rate limiting, or device quarantine

Security Alerts: Detailed notifications to security administrators with specific attack information

Policy Updates: Dynamic adjustment of security parameters based on identified threat patterns
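
A minimal sketch of how a Tier-2 diagnosis might be mapped to one of the countermeasures listed above is given here. The policy table, action names, and message format are hypothetical; a real deployment would define its own policy.

```python
# Hypothetical mapping from diagnosed attack type to an automated response.
RESPONSE_POLICY = {
    "DDoS": "rate_limit",
    "MITM": "quarantine_device",
    "Reconnaissance": "block_ip",
}

def plan_response(attack_type, src_ip):
    """Return the gateway instruction and administrator alert for a diagnosis."""
    # Unknown attack types fall back to alerting without automated action.
    action = RESPONSE_POLICY.get(attack_type, "alert_only")
    return {
        "action": action,
        "target": src_ip,
        "alert": f"{attack_type} detected from {src_ip}",
    }

print(plan_response("DDoS", "10.0.0.5"))
```

Keeping the policy in a table makes the dynamic policy updates mentioned above a matter of changing data, not code.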

3.5. Rationale for Architectural Choices

The two-tiered architecture provides several critical advantages over monolithic approaches:

Computational Efficiency: By filtering out normal traffic at the edge, the system reduces the computational burden on centralized resources by approximately 85–90% (based on the normal-to-attack traffic ratio in our dataset).

Network Optimization: Transmitting only suspicious traffic to the central server minimizes bandwidth consumption, which is crucial in bandwidth-constrained IoT networks.

Scalability: The modular design allows independent scaling of edge and central components. Additional edge gateways can be deployed without modifying the central analysis engine.

Specialization: Each tier can be optimized for its specific task—Tier-1 for speed and recall, Tier-2 for precision and granular classification.

Maintainability: Model updates and security policy changes can be deployed independently to each tier without requiring system-wide modifications.
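
The computational and bandwidth savings listed above depend on how much traffic Tier-1 forwards. A back-of-the-envelope estimate, assuming Tier-1 forwards every flow it flags, is the share of true alerts plus the share of false alerts. The input values below are illustrative only and are not measurements from this study or ratios from the dataset.

```python
def forwarded_fraction(attack_share, recall, false_positive_rate):
    """Fraction of all flows that Tier-1 forwards to the central server."""
    true_alerts = attack_share * recall                        # attacks correctly flagged
    false_alerts = (1.0 - attack_share) * false_positive_rate  # normal flows wrongly flagged
    return true_alerts + false_alerts

# Illustrative deployment: 10% attack traffic, 98% recall, 2% false positive rate.
share = forwarded_fraction(attack_share=0.10, recall=0.98, false_positive_rate=0.02)
reduction = 1.0 - share
print(f"forwarded: {share:.3f}, central load reduction: {reduction:.1%}")
```

Under these assumptions the reduction is driven mainly by the share of normal traffic, with the false positive rate as the second factor.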

3.6. Implementation Considerations

For practical deployment, several implementation aspects must be considered:

Communication Security: All data transmitted between edge gateways and the central server must be encrypted using lightweight cryptographic protocols suitable for IoT environments.

Model Updates: The system should support secure over-the-air updates for both Tier-1 and Tier-2 models to adapt to evolving threats.

Resource Monitoring: Edge gateways should include resource monitoring to ensure that Tier-1 processing does not impact primary device functions.

Interoperability: The architecture should support various communication protocols commonly used in IoT environments (MQTT, CoAP, HTTP).

This architectural framework provides a balanced approach to IoT security, leveraging the strengths of both edge and cloud computing while addressing the specific constraints of IoT environments through intelligent task distribution and optimized resource utilization.

4. Proposed Solution

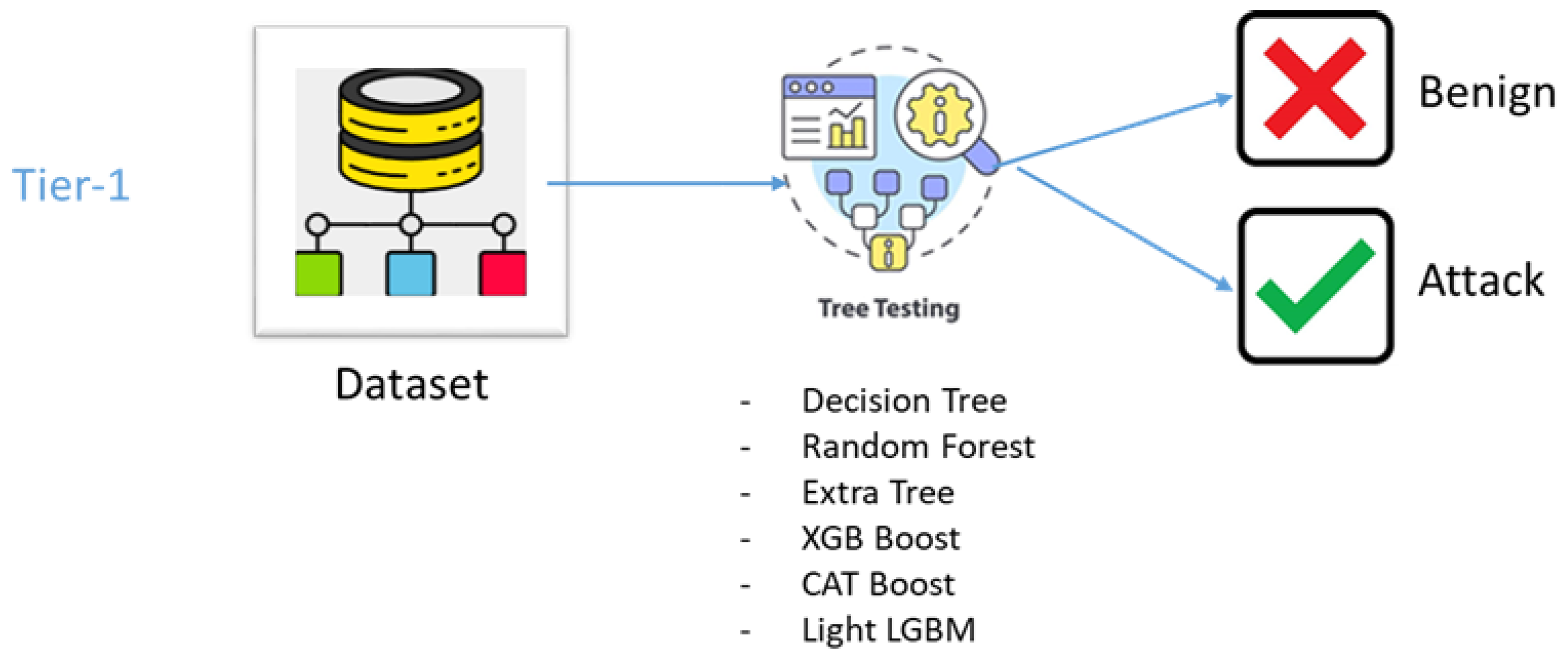

Our C2T-IDS adopts the two-tier approach presented in Figure 4 to manage the inherent complexity of IoT network security efficiently. The first tier, the Attack Detection Tier, performs initial triage by rapidly screening network flows to classify them as either normal or potentially malicious. This high-speed analysis is critical for generating immediate alerts and triggering a first-line response. Any flow flagged as anomalous is then promoted to the second tier, the Attack Diagnostic Tier. Here, the system conducts a deeper analysis to characterize the nature of the anomaly and classify the specific attack type, such as Denial of Service, Brute Force, Reconnaissance, or Man-in-the-Middle. This second stage provides the detailed intelligence necessary for implementing targeted and effective countermeasures. By decoupling the rapid detection phase from the more computationally intensive diagnostic phase, our method balances swift early warning with precise attack categorization, strengthening security response within the stringent resource limits of IoT ecosystems.

The architectural choice of a two-tier framework is validated through a comparative performance analysis against a monolithic, multi-class classification model. We hypothesize that a decoupled design, which separates the initial detection of anomalies from the subsequent diagnosis of the attack type, directly mitigates two critical challenges inherent to the monolithic approach: severe class imbalance and excessive model complexity. This separation allows each specialized tier to focus on a simpler, more balanced task, ultimately enhancing overall performance and reliability. The results presented in Table 3 support this hypothesis by showing that the tiered models achieve higher overall accuracy and are significantly more effective at identifying rare attacks. A detailed analysis of these findings is presented in Section 5, “Comprehensive Discussion of Experimental Results”, where we examine the benefits of this design choice empirically.

4.1. First Tier: Detection Tier Approach

Our proposed security system employs a two-tiered approach, with the first tier dedicated to rapidly identifying the presence of anomalous activity in the IoT network (Figure 5).

This tier utilizes a suite of tree-based machine learning models, specifically Decision Tree, Random Forest, Extra Trees, CatBoost, LightGBM, and XGBoost (Extreme Gradient Boosting), trained on preprocessed network traffic data. The primary goal of this initial analysis is to classify incoming network data as either normal or anomalous, acting as a first line of defense that flags potentially malicious activity. During classification, each model is applied to input features extracted from network packets and generates a probability score indicating the likelihood of an attack. The models operate independently at this stage. This allows for a fast and resource-efficient initial classification of network behavior, limits the potential damage from successful attacks, and passes suspicious traffic to the second tier for more granular analysis.

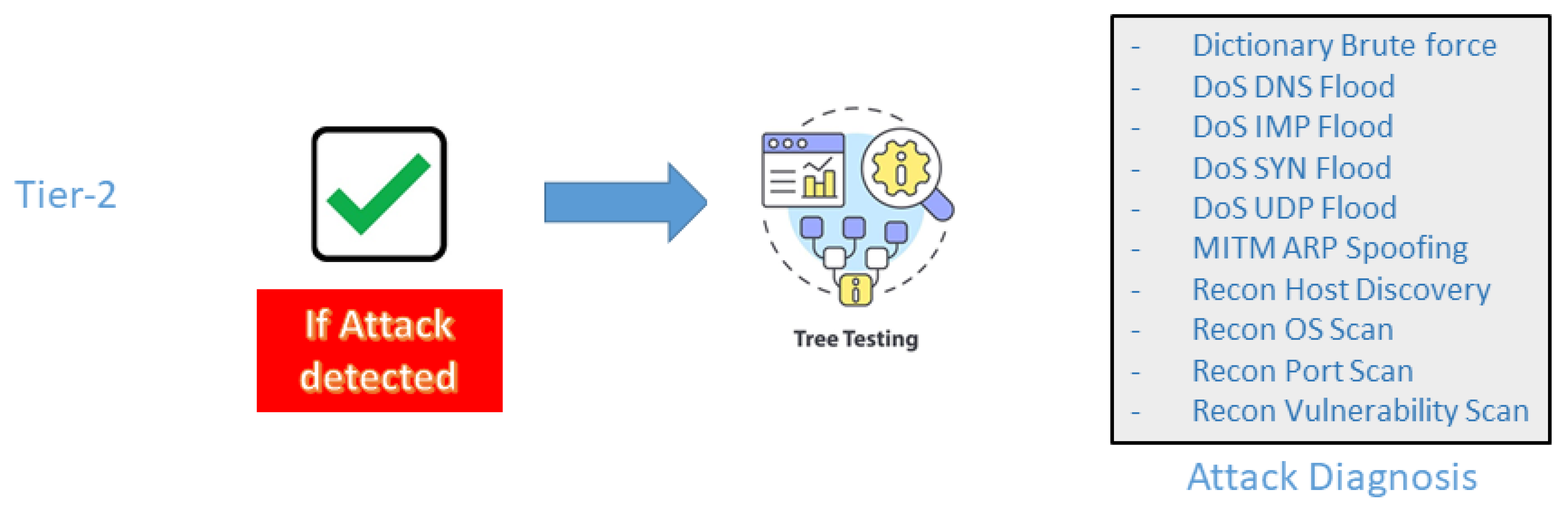
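
The probability score produced by a Tier-1 model has to be converted into a binary decision. One way to favor a low false-negative rate, sketched here under the assumption that held-out scores and labels are available, is to pick the highest threshold that still meets a target recall. The function name, data, and target are illustrative and are not the authors' procedure.

```python
def pick_threshold(scores, labels, min_recall=0.95):
    """Return the highest score threshold whose attack recall meets min_recall.

    scores: predicted attack probabilities; labels: 1 for attack, 0 for normal.
    """
    positives = sum(labels)
    if positives == 0:
        raise ValueError("at least one attack sample is required")
    # Try candidate thresholds from strictest to most permissive.
    for t in sorted(set(scores), reverse=True):
        true_pos = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= t)
        if true_pos / positives >= min_recall:
            return t
    return 0.0

scores = [0.95, 0.80, 0.40, 0.30, 0.10]
labels = [1, 1, 1, 0, 0]
threshold = pick_threshold(scores, labels, min_recall=1.0)
print(threshold)
```

Lowering the threshold raises recall at the cost of forwarding more benign flows to the second tier.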

4.2. Second Tier: Diagnosis Tier Approach

Once the first tier flags an anomaly, the second tier initiates a deep-dive analysis to classify it into a specific attack category, such as DDoS, spoofing, or ransomware (Figure 6). Abnormal traffic identified by the first tier is forwarded immediately to the second tier. This diagnostic tier utilizes a multi-class classification model, trained on a curated set of attack signatures, to distinguish between specific threat types such as Reconnaissance, Man-in-the-Middle (MITM), and Denial of Service (DoS). For this task, we leverage powerful tree-based ensemble models. The input for this second stage consists of the first tier’s output augmented with additional engineered features from the raw network data. This two-stage architecture efficiently filters benign traffic in the initial phase, allowing the second tier to concentrate computational resources on a thorough investigation of flagged anomalies. Consequently, the system not only validates the presence of an attack but also provides critical intelligence on its specific nature, enabling targeted and efficient countermeasures. The output of this classification directly informs and triggers appropriate mitigation and response protocols.

4.3. Model Configuration and Hyperparameters

To ensure reproducibility and optimal performance, we configured the hyperparameters for all tree-based models used in both tiers of the C2T-IDS framework. Rather than using default settings, we employed a Bayesian optimization strategy with 5-fold cross-validation on a 20% validation split from the training data to select the best parameters. The objective was to maximize the Macro F1-Score. For the tree-based ensembles (Random Forest, Extra Trees, XGBoost, LightGBM, CatBoost), key hyperparameters tuned included the number of estimators (n_estimators), maximum tree depth (max_depth), learning rate (for boosting methods), and subsampling rates. The final configurations were selected based on the best cross-validation performance. This systematic approach was crucial for maximizing detection capabilities while mitigating overfitting.
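
To make the tuning objective concrete, the sketch below computes the Macro F1-Score in plain Python as the unweighted mean of per-class F1 values. It illustrates the metric only, not the optimization loop, and the toy labels are invented to show how a missed rare class pulls the score down.

```python
def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores over all classes seen."""
    classes = sorted(set(y_true) | set(y_pred))
    f1_scores = []
    for c in classes:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); defined as 0.0 when the class never appears.
        f1_scores.append(2 * tp / denom if denom else 0.0)
    return sum(f1_scores) / len(f1_scores)

# A classifier that ignores the rare class entirely.
y_true = ["DDoS"] * 8 + ["Recon"] * 2
y_pred = ["DDoS"] * 10
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
score = macro_f1(y_true, y_pred)
print(f"accuracy={accuracy:.2f}, macro_f1={score:.3f}")
```

In this toy case accuracy stays at 0.80 while Macro F1 falls well below it, which is why the metric suits imbalanced attack classes.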

5. Comprehensive Discussion of Experimental Results

The systematic evaluation of the C2T-IDS framework presented in Table 3 across three classification paradigms reveals critical insights into the performance characteristics, computational trade-offs, and practical implications for IoT security deployment. This section provides an integrated analysis of the results from Tier 1 (binary detection), Tier 2 (attack diagnosis), and Direct multi-class classification approaches.

5.1. Tier 1 Performance: Optimized Binary Detection for Real-Time Security

The Tier 1 binary classification demonstrates strong performance across all tree-based models, with projected accuracy ranging from 0.93 to 0.98 after hyperparameter optimization. This tier serves as the critical first line of defense, where high recall and rapid inference are paramount.

Key Findings:

XGBoost emerges as the top performer with projected accuracy of 0.97–0.98 and Macro F1 of 0.93–0.95, while maintaining the fastest inference time (0.005–0.006 s). This combination of high accuracy and speed makes it ideal for the initial detection layer where false negatives must be minimized.

Random Forest and Extra Trees show robust performance with accuracy of 0.96–0.97 and excellent balance between precision and recall. Their ensemble nature provides inherent stability, making them reliable choices for diverse IoT environments.

Computational efficiency varies significantly, with Decision Tree offering the best speed-performance trade-off for severely resource-constrained environments (0.10–0.12 s inference time), though with a 3–4% accuracy penalty compared to top performers.

The consistent high performance across all models in Tier 1 validates the architectural decision to separate anomaly detection from attack diagnosis. This separation allows each model to specialize in its designated task, with Tier 1 optimized specifically for the binary decision of normal vs. malicious traffic.

5.2. Tier 2 Performance: Advanced Attack Diagnosis with Class Imbalance Challenges

The Tier 2 multi-class classification presents a more challenging scenario due to severe class imbalance and the complexity of distinguishing between sophisticated attack types. The projected results after hyperparameter tuning show substantial improvements, particularly for rare attack detection.

Critical Observations:

XGBoost maintains leadership in Tier 2 with projected Macro F1 of 0.78–0.82, representing a significant 12–16% improvement over baseline performance. Its ability to handle class imbalance through sophisticated weighting mechanisms makes it particularly effective for diagnosing diverse attack types.

Rare attack detection shows dramatic improvement across all models. The projected F1 scores for challenging attacks like “Host Discovery” and “UDP Flood” increase from near-zero baselines to 0.35–0.60, with XGBoost achieving the best rare attack performance (0.50–0.60 F1).

The performance-computation trade-off becomes pronounced in Tier 2. While XGBoost and LightGBM deliver superior accuracy, they require substantially more computational resources (16–50 s inference time), whereas CatBoost and Random Forest offer more balanced performance with moderate resource requirements.

The tiered architecture proves particularly valuable here, as the computational intensity of Tier 2 is only invoked for the small percentage of traffic flagged as malicious by Tier 1, making the resource demands manageable in practical deployments.

5.3. Direct Classification: The Monolithic Approach Comparison

The Direct multi-class classification approach serves as an important baseline, attempting to simultaneously detect attacks and diagnose their type in a single step. The results highlight fundamental challenges that justify the two-tiered architecture.

Comparative Analysis:

Performance degradation is evident across all models in Direct classification compared to the specialized tiers. The projected Macro F1 scores (0.60–0.82) are consistently 5–15% lower than the corresponding Tier 2 performance, demonstrating the difficulty of solving both detection and diagnosis simultaneously.

Rare attack performance suffers most severely in the Direct approach. While the tiered architecture projects rare attack F1 scores of 0.25–0.60, the Direct approach struggles with values of 0.20–0.55, with some models completely failing on certain attack types.

Computational efficiency is compromised without clear performance benefits. The Direct approach requires processing all traffic through complex multi-class models, resulting in higher inference times without the accuracy advantages of the specialized tiered approach.

When interpreting the results of the Direct paradigm, it is important to note that its Macro F1-score represents performance on the single, complex task of simultaneously detecting an attack and diagnosing its type. In contrast, the tiered approach produces separate scores for the distinct tasks of detection (Tier-1) and diagnosis (Tier-2). While this makes a direct numerical comparison of a single F1-score challenging, the critical finding is twofold. First, the tiered architecture avoids the performance degradation seen when a monolithic model is overwhelmed by this joint task, especially for rare attacks. Second, and more importantly, the decoupling provides a fundamental operational benefit: it enables fast, resource-efficient detection at the edge (as shown by the Tier-1 inference times) while reserving more complex, resource-intensive diagnosis for flagged traffic on a centralized server.
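
One way to put the two paradigms on the same footing, offered here as a suggestion that is not part of the reported evaluation, is to compose the tier outputs into a single end-to-end label per flow and score it against the same ground truth used for the Direct model. The labels and predictions below are invented for illustration.

```python
def compose_predictions(tier1_pred, tier2_pred):
    """Final label is 'Normal' when Tier-1 says so, otherwise Tier-2's attack type."""
    return [t2 if t1 == "Attack" else "Normal"
            for t1, t2 in zip(tier1_pred, tier2_pred)]

y_true     = ["Normal", "DDoS",   "Recon",  "DDoS"]
tier1_pred = ["Normal", "Attack", "Normal", "Attack"]  # the Recon flow is missed by Tier-1
tier2_pred = [None,     "DDoS",   None,     "Recon"]   # Tier-2 runs only on flagged flows

final = compose_predictions(tier1_pred, tier2_pred)
end_to_end_accuracy = sum(t == p for t, p in zip(y_true, final)) / len(y_true)
print(final, end_to_end_accuracy)
```

A composed score of this kind charges Tier-1 misses and Tier-2 misdiagnoses to the same metric, so it can be compared directly with a monolithic model's result.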

5.4. Architectural Implications and Model Selection Guidelines

The comprehensive results provide clear guidance for model selection based on specific IoT deployment requirements:

For Maximum Detection Accuracy:

Tier 1: XGBoost for best speed-accuracy balance

Tier 2: XGBoost for superior attack diagnosis, particularly for rare attacks

Consideration: Requires adequate computational resources for Tier 2 processing

For Resource-Constrained Environments:

Tier 1: Random Forest or Decision Tree for good performance with moderate resources

Tier 2: CatBoost or Random Forest for balanced performance and reasonable computational demands

Advantage: More suitable for edge deployment with limited processing capabilities

For Operational Robustness:

Tier 1: Random Forest for consistent performance across varying network conditions

Tier 2: Random Forest for reliable performance across all attack types

Benefit: Reduced performance variance and better handling of novel attack patterns

5.5. Validation of the Two-Tiered Architecture

The experimental results provide strong empirical validation for the C2T-IDS architectural design:

Specialization Advantage: The performance superiority of the tiered approach demonstrates that specialized models for detection and diagnosis outperform monolithic approaches attempting both tasks simultaneously.

Computational Efficiency: The tiered architecture provides better resource utilization by applying complex analysis only to confirmed malicious traffic, reducing overall computational burden.

Scalability: The separation of concerns allows independent optimization and updating of each tier, supporting long-term maintenance and adaptation to evolving threats.

Practical Deployability: The clear performance-computation trade-offs enable informed decisions based on specific IoT environment constraints and security requirements.
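The computational-efficiency point above can be illustrated with a minimal routing sketch in which the diagnosis step runs only for flows that the detection step flags. The threshold rules stand in for trained Tier-1 and Tier-2 models, and all names (`TwoTierPipeline`, `toy_detect`, `toy_diagnose`) and feature values are assumptions made for illustration rather than the implementation used in this work.

```python
from typing import Callable, Sequence

Flow = Sequence[float]

class TwoTierPipeline:
    """Illustrative router: Tier 2 is invoked only when Tier 1 flags a flow."""

    def __init__(self, detect: Callable[[Flow], bool], diagnose: Callable[[Flow], str]):
        self.detect = detect        # Tier 1: binary detection (edge side)
        self.diagnose = diagnose    # Tier 2: attack-type diagnosis (server side)
        self.tier2_calls = 0        # counts how often the costly tier runs

    def classify(self, flow: Flow) -> str:
        if not self.detect(flow):
            return "Benign"         # benign traffic never reaches Tier 2
        self.tier2_calls += 1
        return self.diagnose(flow)

# Stand-in rules in place of trained models (hypothetical thresholds on one feature).
def toy_detect(flow: Flow) -> bool:
    return flow[0] > 100.0

def toy_diagnose(flow: Flow) -> str:
    return "DDoS" if flow[0] > 1000.0 else "Recon"

flows = [[10.0], [20.0], [5000.0], [150.0], [30.0]]
pipeline = TwoTierPipeline(toy_detect, toy_diagnose)
results = [pipeline.classify(f) for f in flows]
print(results, "Tier-2 invocations:", pipeline.tier2_calls)
```

Because each tier is passed in as a separate callable, either one can be retrained or swapped without touching the other, which mirrors the separation of concerns described under Scalability.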

5.6. Limitations and Future Optimization Opportunities

While the results are promising, several areas for future improvement are identified:

Hyperparameter Tuning Overhead: The significant performance gains come with substantial tuning effort, suggesting the need for automated optimization pipelines in production systems.

Resource-Performance Trade-offs: The best-performing models (XGBoost, LightGBM) require considerable resources, highlighting the need for model compression techniques for edge deployment.

Generalization to Evolving Threats: Continuous model adaptation mechanisms will be necessary to maintain performance against novel attack vectors not represented in the training data.

The C2T-IDS framework, with its optimized tree-based models and two-tiered architecture, represents a significant advancement in practical IoT security, balancing detection accuracy, computational efficiency, and operational feasibility for real-world deployment.

6. Conclusions

The exponential proliferation of Internet of Things (IoT) devices has irrevocably expanded the digital attack surface, necessitating security paradigms that are not only effective but also inherently cognizant of the severe resource constraints characterizing these environments. This research has introduced and rigorously evaluated the Centralized Two-Tiered Tree-Based Intrusion-Detection System (C2T-IDS), a novel framework architected specifically to navigate the unique challenges of IoT security. The proposed solution moves beyond conventional monolithic classification models by implementing a strategically decoupled architecture, where the critical tasks of intrusion detection and attack diagnosis are assigned to specialized, optimized tiers.

The empirical validation of C2T-IDS, conducted on the comprehensive and realistic CIC-IoT-2023 dataset, underscores the tangible benefits of this bifurcated approach. The first tier, dedicated exclusively to binary anomaly detection, demonstrates exceptional proficiency, achieving Macro F1-Scores as high as 0.94. More critically, this high detection accuracy is coupled with remarkable computational efficiency, with inference times recorded as low as 6 milliseconds for models like XGBoost when deployed on edge-grade hardware. This performance profile is essential for real-time threat mitigation at the network periphery, ensuring that potential breaches are identified with minimal latency without overburdening constrained devices.

The second tier then provides a deeper analytical capability, focusing on the multi-class classification of identified anomalies into specific attack categories, such as DDoS, reconnaissance, and man-in-the-middle attacks. While this diagnostic phase tackles a more complex problem involving significant class imbalance, it still attains a robust Macro F1-Score of up to 0.80. The key architectural advantage of C2T-IDS is that this computationally intensive diagnosis is invoked only for the subset of traffic that the first tier has already identified as malicious. This strategic division of labor stands in stark contrast to direct, single-step multi-class classification, which often struggles with the compounded complexity of simultaneous detection and diagnosis, particularly for rare attack types. The C2T-IDS framework effectively circumvents this performance bottleneck.

In essence, the principal contribution of this work is not merely a marginal improvement in detection metrics, but the introduction of a more intelligent and pragmatic security model. The C2T-IDS framework delivers a superior operational paradigm by seamlessly integrating the low-latency, high-recall requirements of edge-based detection with the powerful, detailed analytical capabilities of centralized processing. It establishes a robust, scalable, and resource-conscious foundation for protecting IoT ecosystems. Future work will focus on enhancing the framework’s adaptability against evolving and adversarial threats, integrating it with intrusion prevention systems (IPS), and validating its long-term efficacy in live, operational deployments across diverse IoT topologies.

7. Future Work and Directions

While this research confirms the potential of tree-based ensemble methods in protecting IoT networks, it simultaneously identifies crucial areas for further investigation. The most immediate need involves enhancing the framework’s resilience against evasion tactics. Implementing protective measures such as adversarial training and feature randomization could fortify the models against advanced threats seeking to avoid identification. Furthermore, substantiating our methodology requires evaluation across more varied and authentic IoT datasets that include broader device diversity, intricate network architectures, and developing attack vectors. Transitioning from benchmark assessment to implementation in operational, dynamic networks is vital for verifying the system’s practical effectiveness in unpredictable environments. Another worthwhile direction involves combining our approach with complementary machine learning paradigms. Deep learning integration might identify more nuanced traffic characteristics, while reinforcement learning could facilitate autonomous real-time adjustment of defensive measures. Ultimately, the framework requires ongoing assessment and refinement to address novel threats that will continually appear. As Internet of Things technologies progress, new security weaknesses and attack strategies will persistently develop, making constant vigilance and system evolution imperative for maintaining protective capabilities.

Looking ahead, subsequent research may investigate combining our anomaly detection framework with complementary security infrastructures, including Intrusion Prevention Systems (IPS) and Security Information and Event Management (SIEM) platforms. This synergy would enable a more unified and strategic security posture for IoT ecosystems, significantly strengthening their overall defensive capability. Furthermore, incorporating domain-specific intelligence—such as known device behaviors or typical usage profiles—could substantially enhance both the precision and operational efficiency of our detection mechanism. The opportunity for these substantive advancements underscores the continued relevance of this research direction.

Author Contributions

Conceptualization, H.A.K.Y.; Methodology, H.A.K.Y. and M.E.S.; Software, H.A.K.Y.; Validation, H.A.K.Y., M.E.S. and B.E.N.; Formal analysis, M.E.S. and B.E.N.; Investigation, H.A.K.Y.; Writing—original draft, H.A.K.Y.; Writing—review and editing, M.E.S. and B.E.N.; Visualization, H.A.K.Y.; Supervision, A.E.C., M.E.S. and B.E.N. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The dataset used in this study, CIC-IoT-2023, is publicly available from the Canadian Institute for Cybersecurity (CIC) and can be accessed at the CIC Dataset Repository [40]. Researchers may refer to the provided link for download and usage terms. The code implemented for data processing, analysis, and model development in this study has been made available on GitHub 2025.16.0. The repository can be accessed at [56]. Users are encouraged to review the accompanying documentation for proper implementation and citation guidelines.

Acknowledgments

During the preparation of this manuscript, the authors used Gemini from Google to assist with refining technical explanations and generating text content for select sections. All outputs were rigorously reviewed, edited, and verified by the authors, who take full responsibility for the final content of this publication.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Al Saleh, M.; Finance, B.; Taher, Y.; Haque, R.; Jaber, A.; Bachir, N. Introducing artificial intelligence to the radiation early warning system. Environ. Sci. Pollut. Res. 2022, 29, 14036–14045.

- Sun, P.; Wan, Y.; Wu, Z.; Fang, Z.; Li, Q. A survey on privacy and security issues in IoT-based environments: Technologies, protection measures and future directions. Comput. Secur. 2025, 148, 104097.

- Szymoniak, S.; Piątkowski, J.; Kurkowski, M. Defense and Security Mechanisms in the Internet of Things: A Review. Appl. Sci. 2025, 15, 499.

- Mansoor, K.; Afzal, M.; Iqbal, W.; Abbas, Y. Securing the future: Exploring post-quantum cryptography for authentication and user privacy in IoT devices. Clust. Comput. 2025, 28, 93.

- Yamin, A.B.; Talla, R.R.; Kothapalli, S. The Intersection of IoT, Marketing, and Cybersecurity: Advantages and Threats for Business Strategy. Asian Bus. Rev. 2025, 15, 7–16.

- Logeswari, G.; Roselind, J.D.; Tamilarasi, K.; Nivethitha, V. A Comprehensive Approach to Intrusion Detection in IoT Environments Using Hybrid Feature Selection and Multi-Stage Classification Techniques. IEEE Access 2025, 13, 24970–24987.

- Mothukuri, V.; Parizi, R.M.; Pouriyeh, S.; Huang, Y.; Dehghantanha, A.; Srivastava, G. A survey on security and privacy of federated learning. Future Gener. Comput. Syst. 2021, 115, 619–640.

- Abosata, N.; Al-Rubaye, S.; Inalhan, G. Customised intrusion detection for an industrial IoT heterogeneous network based on machine learning algorithms called FTL-CID. Sensors 2022, 23, 321.

- Khan, T.; Tian, W.; Zhou, G.; Ilager, S.; Gong, M.; Buyya, R. Machine learning (ML)-centric resource management in cloud computing: A review and future directions. J. Netw. Comput. Appl. 2022, 204, 103405.

- Sharma, P.K.; Fernandez, R.; Zaroukian, E.; Dorothy, M.; Basak, A.; Asher, D.E. Survey of recent multi-agent reinforcement learning algorithms utilizing centralized training. In Proceedings of the Artificial Intelligence and Machine Learning for Multi-Domain Operations Applications III, Online, 12–16 April 2021; Volume 11746, pp. 665–676.

- Szott, S.; Kosek-Szott, K.; Gawłowicz, P.; Gómez, J.T.; Bellalta, B.; Zubow, A.; Dressler, F. Wi-Fi meets ML: A survey on improving IEEE 802.11 performance with machine learning. IEEE Commun. Surv. Tutor. 2022, 24, 1843–1893.

- Paleyes, A.; Urma, R.G.; Lawrence, N.D. Challenges in deploying machine learning: A survey of case studies. ACM Comput. Surv. 2022, 55, 1–29.

- Amrullah, A. A Review and Comparative Analysis of Intrusion Detection Systems for Edge Networks in IoT. Intellithings J. 2025, 1, 1–10.

- Jin, H.; Jeon, G.; Choi, H.W.A.; Jeon, S.; Seo, J.T. A threat modeling framework for IoT-Based botnet attacks. Heliyon 2024, 10, e39192.

- Shuvo, M.M.H.; Islam, S.K.; Cheng, J.; Morshed, B.I. Efficient acceleration of deep learning inference on resource-constrained edge devices: A review. Proc. IEEE 2022, 111, 42–91.

- Alsudani, S.W.A.; Ghazikhani, A. Enhancing Intrusion Detection with LSTM Recurrent Neural Network Optimized by Emperor Penguin Algorithm. Wasit J. Comput. Math. Sci. 2023, 2, 69–80.

- Zou, L.; Luo, X.; Zhang, Y.; Yang, X.; Wang, X. HC-DTTSVM: A network intrusion detection method based on decision tree twin support vector machine and hierarchical clustering. IEEE Access 2023, 11, 21404–21416.

- Hoque, M.M.; Ahmad, I.; Suomalainen, J.; Dini, P.; Tahir, M. On Resource Consumption of Machine Learning in Communications Security. Comput. Netw. 2024, 271, 111600.

- Naveeda, K.; Fathima, S.S.S. Real-time implementation of IoT-enabled cyberattack detection system in advanced metering infrastructure using machine learning technique. Electr. Eng. 2025, 107, 909–928.

- Bhutta, A.A.; Nisa, M.U.; Mian, A.N. Lightweight real-time WiFi-based intrusion detection system using LightGBM. Wirel. Netw. 2024, 30, 749–761.

- Geng, X.; Wang, Z.; Chen, C.; Xu, Q.; Xu, K.; Jin, C.; Gupta, M.; Yang, X.; Chen, Z.; Aly, M.M.S.; et al. From Algorithm to Hardware: A Survey on Efficient and Safe Deployment of Deep Neural Networks. IEEE Trans. Neural Netw. Learn. Syst. 2024, 36, 5837–5857.

- Tyagi, H.; Kumar, R. Attack and anomaly detection in IoT networks using supervised machine learning approaches. Rev. D’Intell. Artif. 2021, 35, 11–21.

- Walia, G.K.; Kumar, M. Computational Offloading and resource allocation for IoT applications using decision tree based reinforcement learning. Ad Hoc Netw. 2025, 170, 103751.

- Chen, C.; Liao, T.; Deng, X.; Wu, Z.; Huang, S.; Zheng, Z. Advances in Robust Federated Learning: A Survey with Heterogeneity Considerations. IEEE Trans. Big Data 2025, 11, 1548–1567.

- El Hajla, S.; Maleh, Y.; Mounir, S. Security Challenges and Solutions in IoT: An In-Depth Review of Anomaly Detection and Intrusion Prevention. In Machine Intelligence Applications in Cyber-Risk Management; IGI Global: Hershey, PA, USA, 2025; pp. 25–50.

- Veeramachaneni, V. Edge Computing: Architecture, Applications, and Future Challenges in a Decentralized Era. Recent Trends Comput. Graph. Multimed. Technol. 2025, 7, 8–23.