Case-Based Data Quality Management for IoT Logs: A Case Study Focusing on Detection of Data Quality Issues

Abstract

1. Introduction

2. Foundations

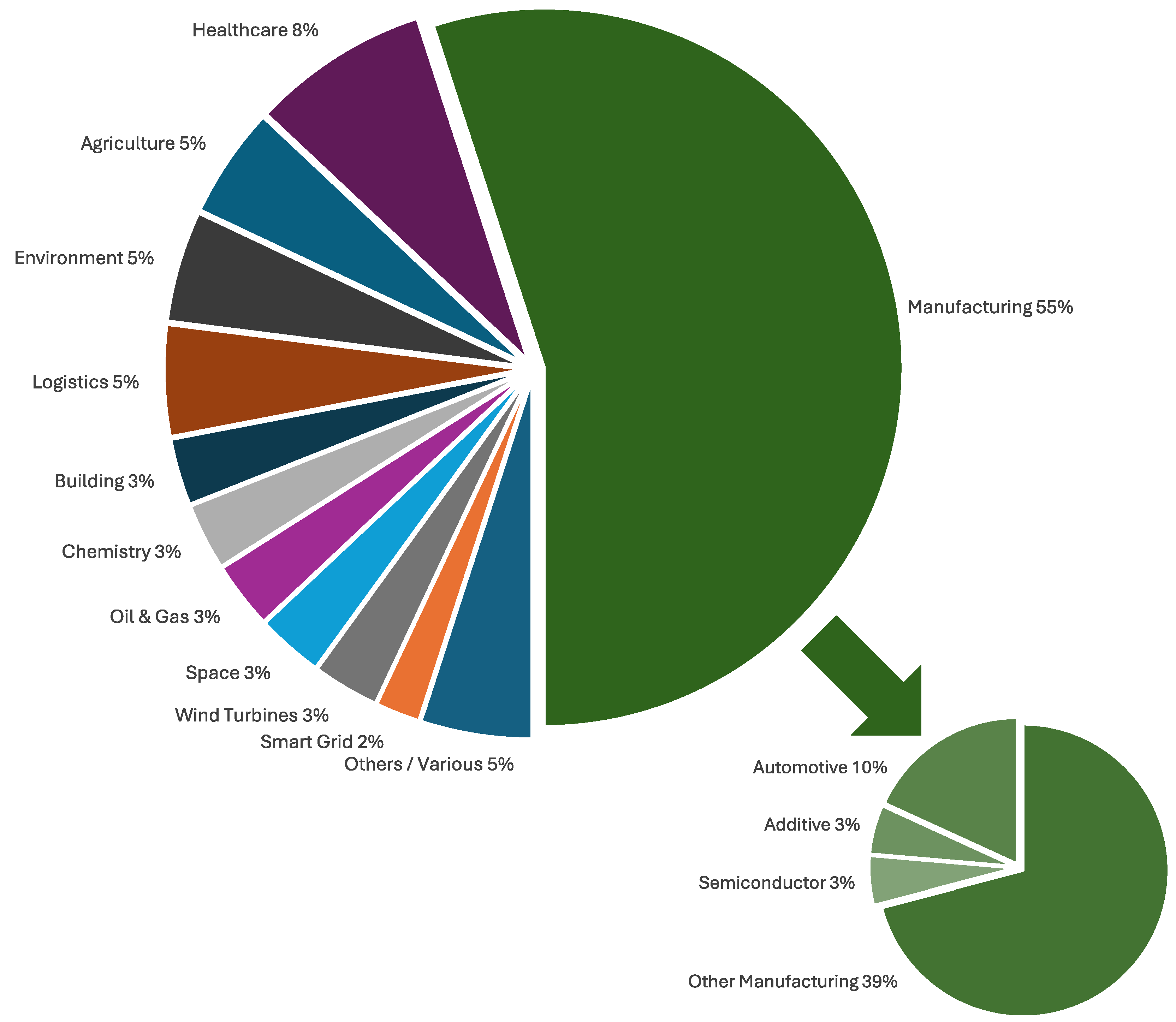

2.1. Industrial IoT for Business Process Management

- (1) The IIoT typically focuses on large, complex assets such as machines or production lines.

- (2) IIoT systems can perform autonomous adaptation of system behavior without human intervention.

- (3) IIoT systems enable real-time monitoring of the operational processes they support.

- (4) The IIoT enables the explicit pursuit of economic value, e.g., higher productivity or lower energy consumption.

2.2. Data Quality Issues in the Industrial IoT

2.3. Patterns of Data Quality Issues in Business Process Management

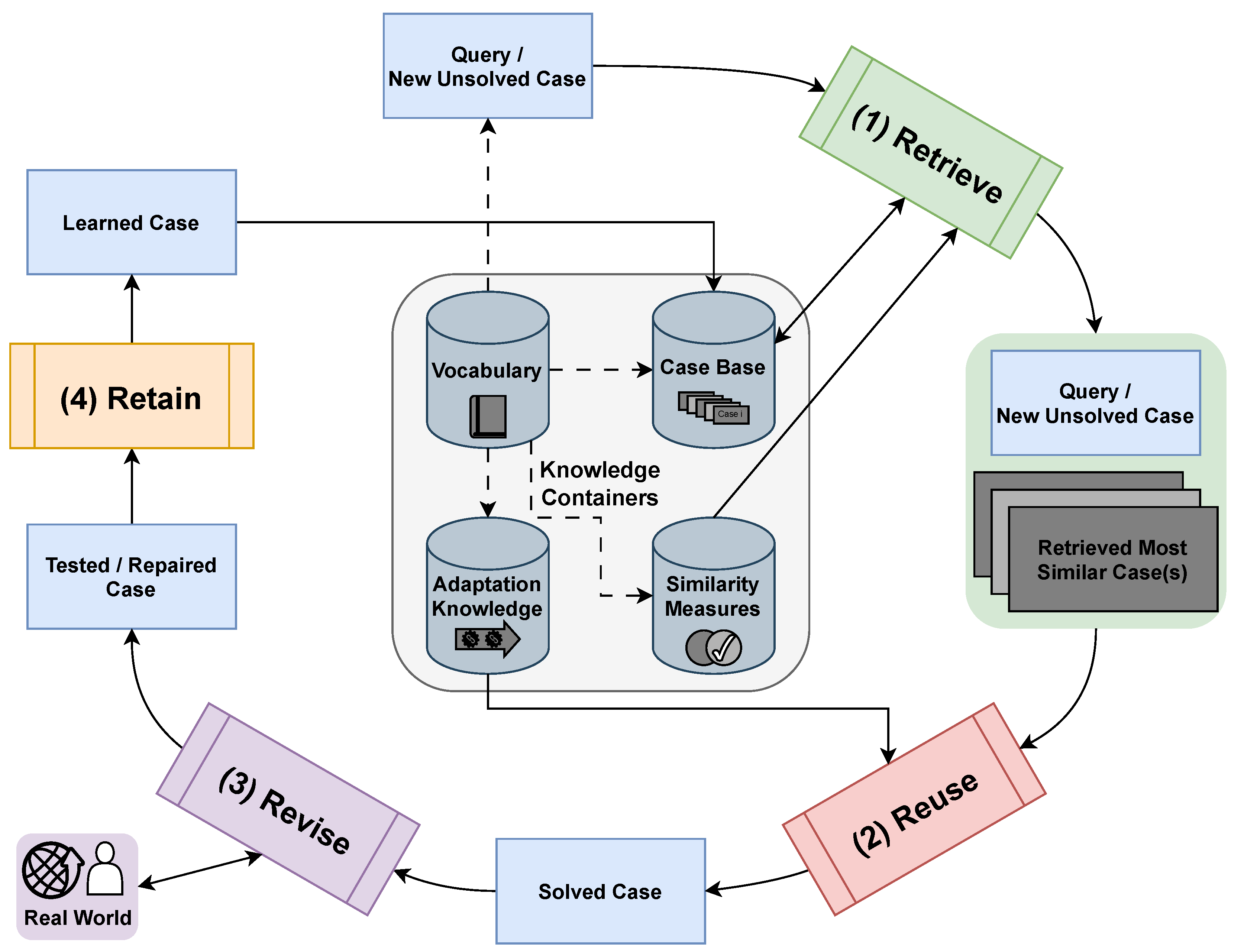

2.4. Case-Based Reasoning

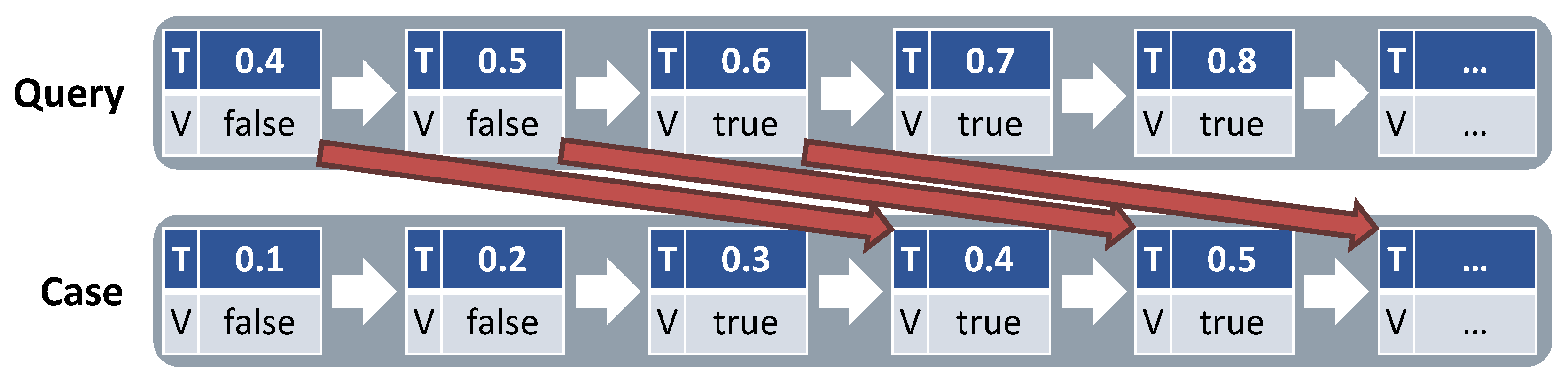

2.5. Temporal Case-Based Reasoning

3. Related Work

3.1. Data Quality Management for IoT Systems

- (1) Identify target profiles to manage sensor data faults;

- (2) Monitor the conditions of connected products and detect abnormal conditions;

- (3) Identify sensor data faults;

- (4) Determine the causes of the identified sensor data faults; and

- (5) Remove the determined causes.

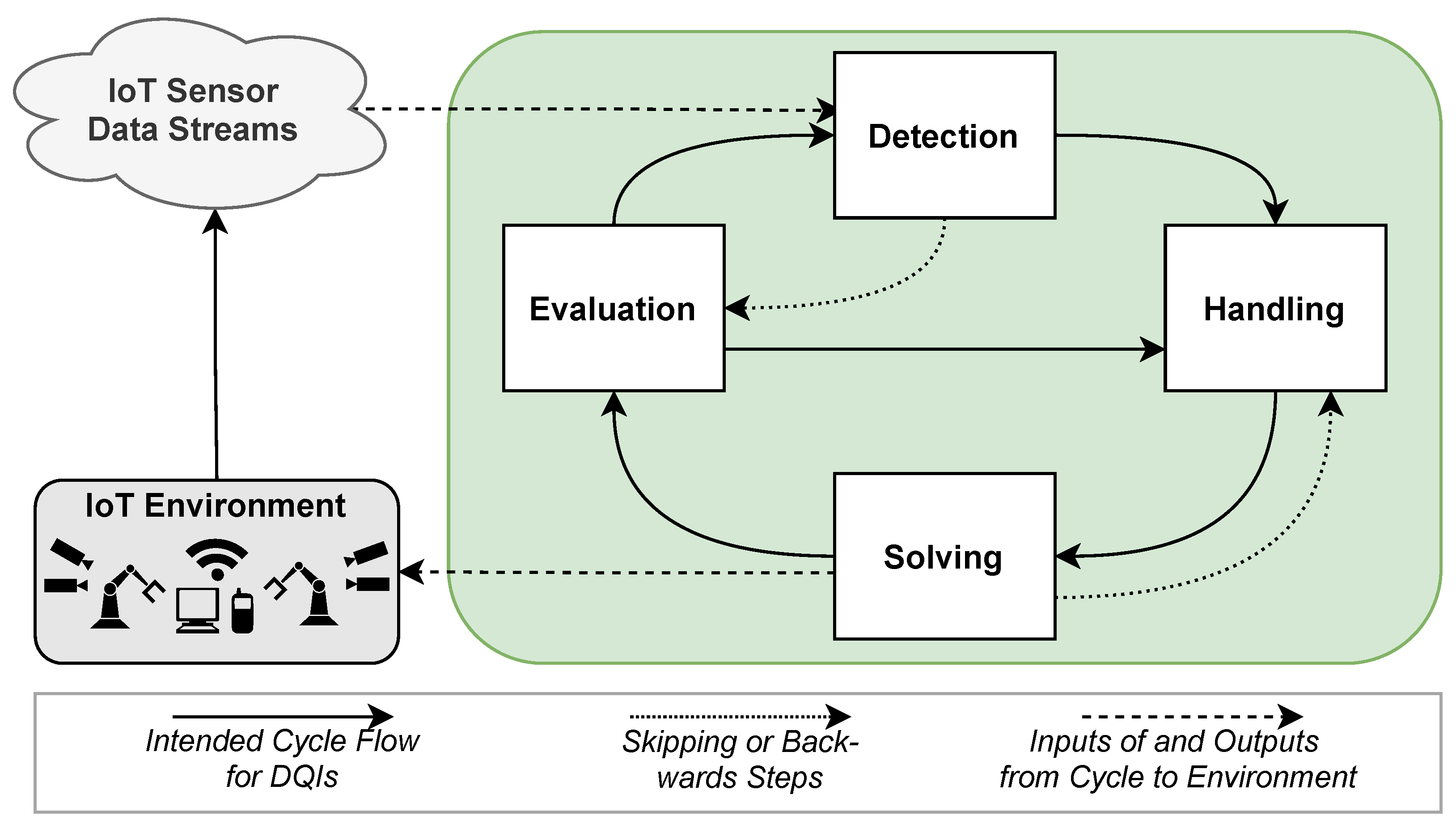

3.2. Approaches for Data Quality Management in IoT Business Process Management

1. The Detection phase involves monitoring incoming sensor data to identify and classify potential DQIs. This step can use techniques for DQI detection mentioned earlier, such as local outlier factor [13] or isolation forests [12]. When issues are detected, the data is forwarded to the handling phase for further processing.

2. In the Handling phase, action recommendations are developed based on the previously classified DQIs. These recommendations aim to address both the underlying hardware components causing the issues and the necessary event log cleaning procedures, such as smoothing for denoising.

3. The Solving phase prioritizes and schedules the recommended actions through a process that identifies duplications and interdependencies [65]. Implementation may occur automatically, semi-automatically, or manually. If an action proves unfeasible, the issue is redirected to the handling task.

4. Finally, the Evaluation phase verifies whether the implemented solutions have successfully resolved the DQIs and thoroughly cleaned the log. This stage also examines whether new DQIs have emerged due to the applied data cleaning techniques. If quality remains insufficient, the process evaluates whether the initial detection and classification were accurate and whether the executed actions effectively addressed the problems. When necessary, the detection and handling tasks may be reinitiated.
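The control flow between the four phases can be sketched as a small state machine. This is an illustration only, not the authors' implementation: the names `Phase` and `next_phase` and the three outcome flags are hypothetical, a query without a detected issue is assumed to end the cycle, and an insufficient evaluation is simplified to a restart at detection (the text also allows re-entering handling).

```python
from enum import Enum


class Phase(Enum):
    DETECTION = "detection"
    HANDLING = "handling"
    SOLVING = "solving"
    EVALUATION = "evaluation"
    DONE = "done"


def next_phase(phase: Phase, *, issue_found: bool = False,
               action_feasible: bool = True,
               quality_sufficient: bool = True) -> Phase:
    """Return the phase that follows `phase` for the given outcome flags."""
    if phase is Phase.DETECTION:
        # Only data with a detected DQI is forwarded to handling
        # (ending the cycle otherwise is an assumption of this sketch).
        return Phase.HANDLING if issue_found else Phase.DONE
    if phase is Phase.HANDLING:
        # Recommendations are always passed on for scheduling.
        return Phase.SOLVING
    if phase is Phase.SOLVING:
        # An action that cannot be carried out goes back to handling.
        return Phase.EVALUATION if action_feasible else Phase.HANDLING
    if phase is Phase.EVALUATION:
        # Insufficient quality restarts the cycle (simplified to detection).
        return Phase.DONE if quality_sufficient else Phase.DETECTION
    return Phase.DONE


# Walk one issue through the cycle with all default (successful) outcomes.
phase = next_phase(Phase.DETECTION, issue_found=True)
trace = [Phase.DETECTION, phase]
while phase is not Phase.DONE:
    phase = next_phase(phase)
    trace.append(phase)
print([p.value for p in trace])
```

Keeping the transitions in one pure function makes the two feedback loops (solving back to handling, evaluation back to detection) explicit and easy to test in isolation.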

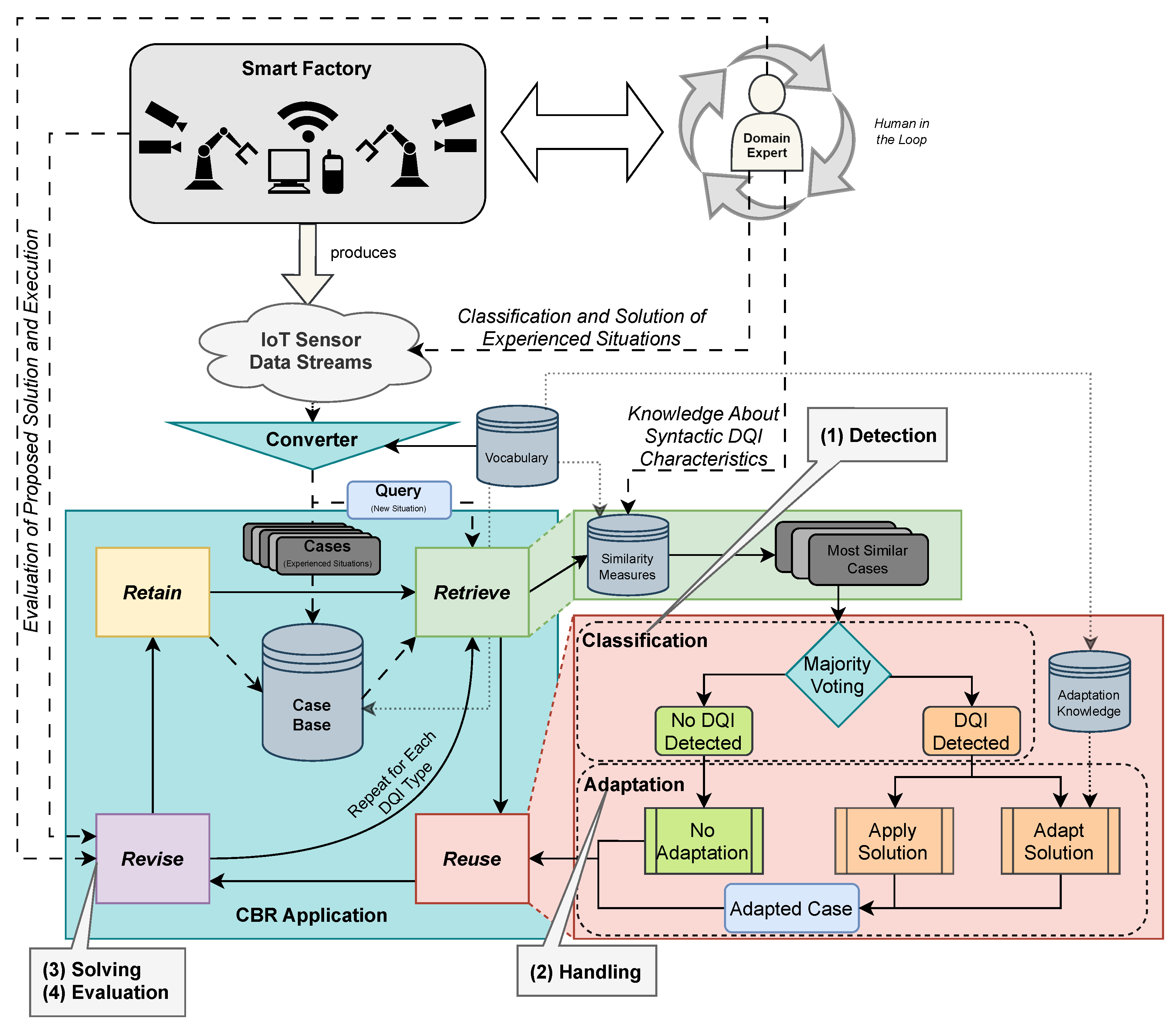

4. Case-Based Detection of Data Quality Issues

4.1. Procedure of Using Case-Based Reasoning for DQI Detection

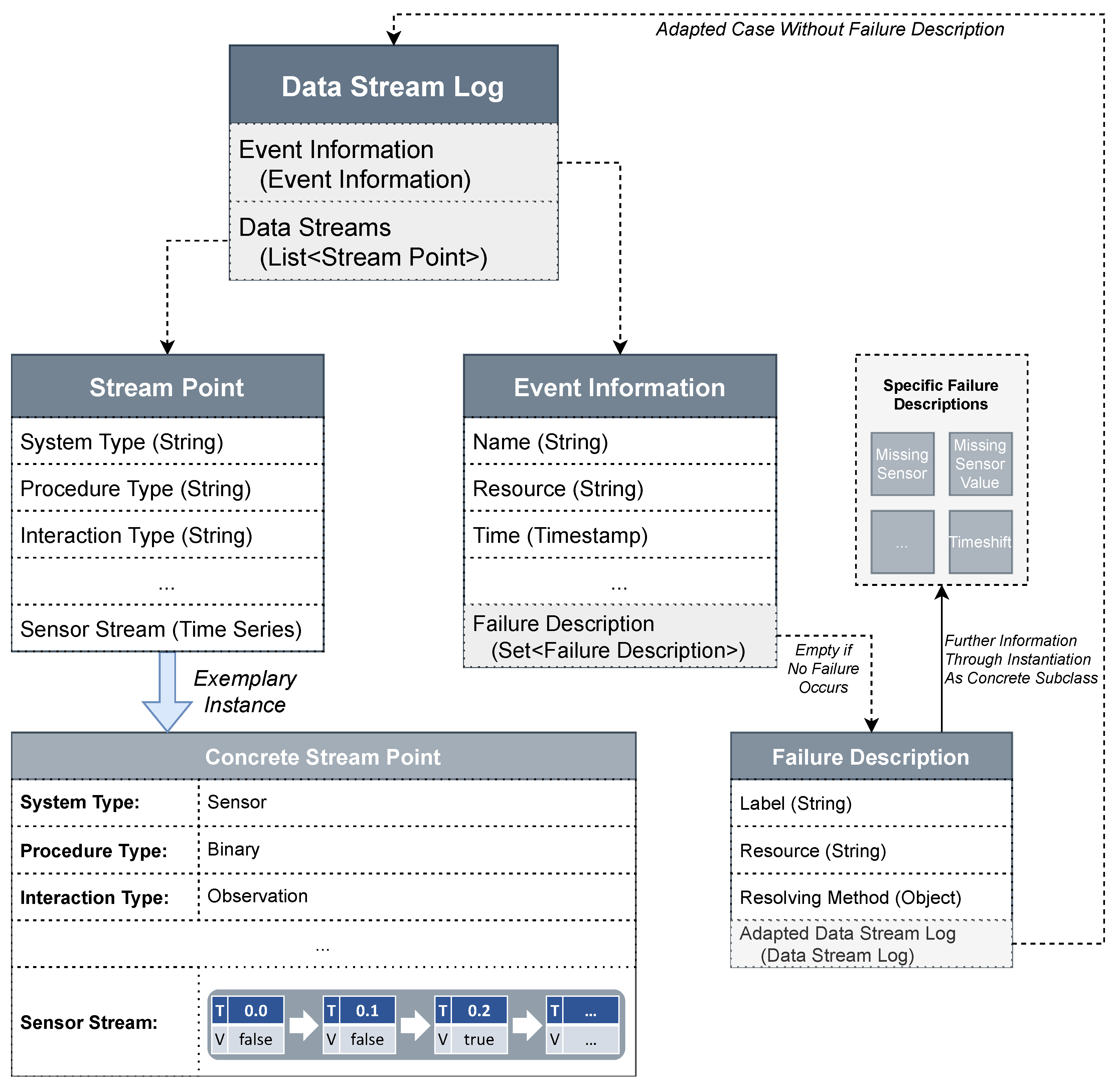

4.2. Vocabulary for Case Description

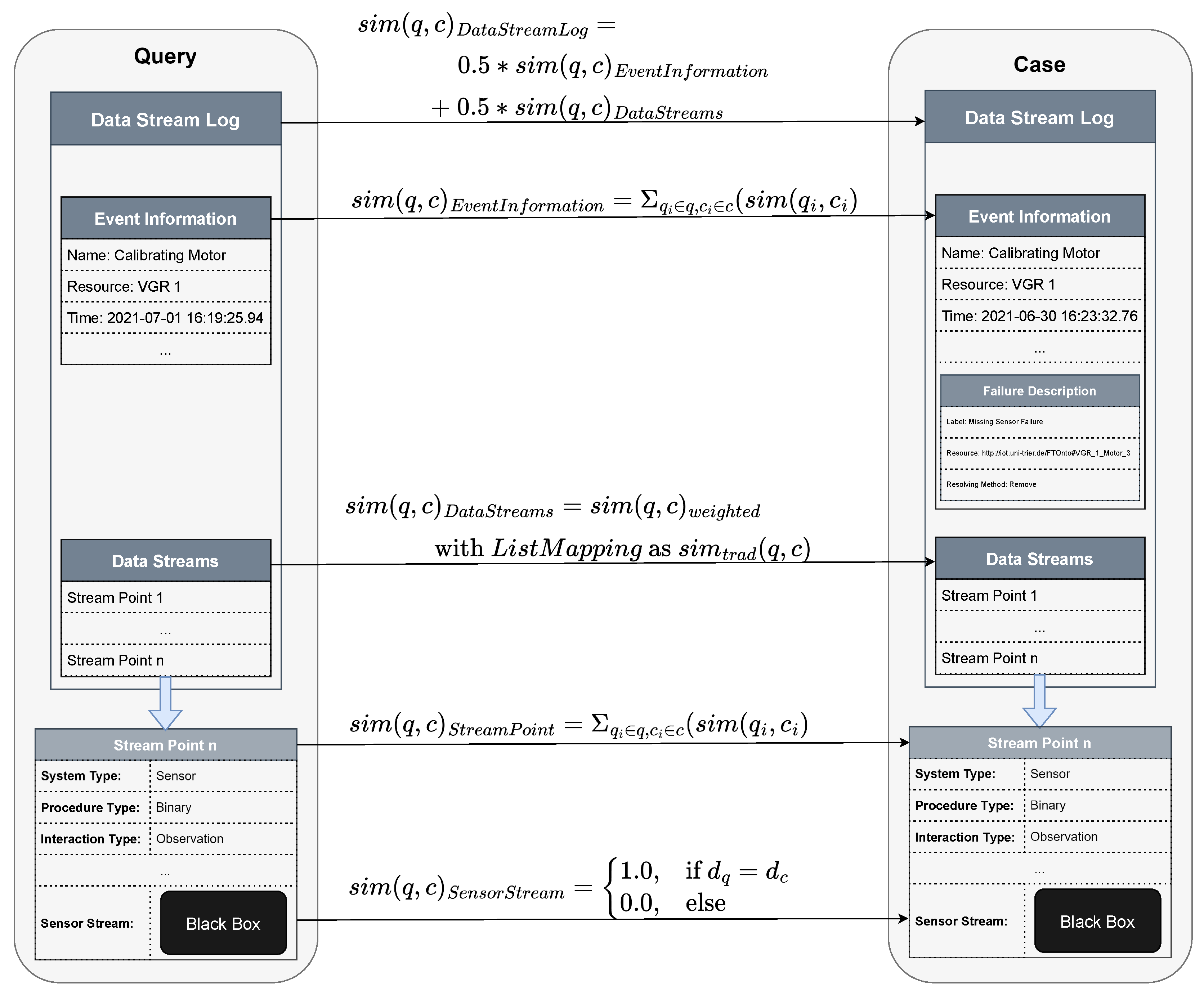

4.3. Similarity Measures for Data Quality Issues

4.3.1. Missing Sensor Values

4.3.2. Missing Sensors

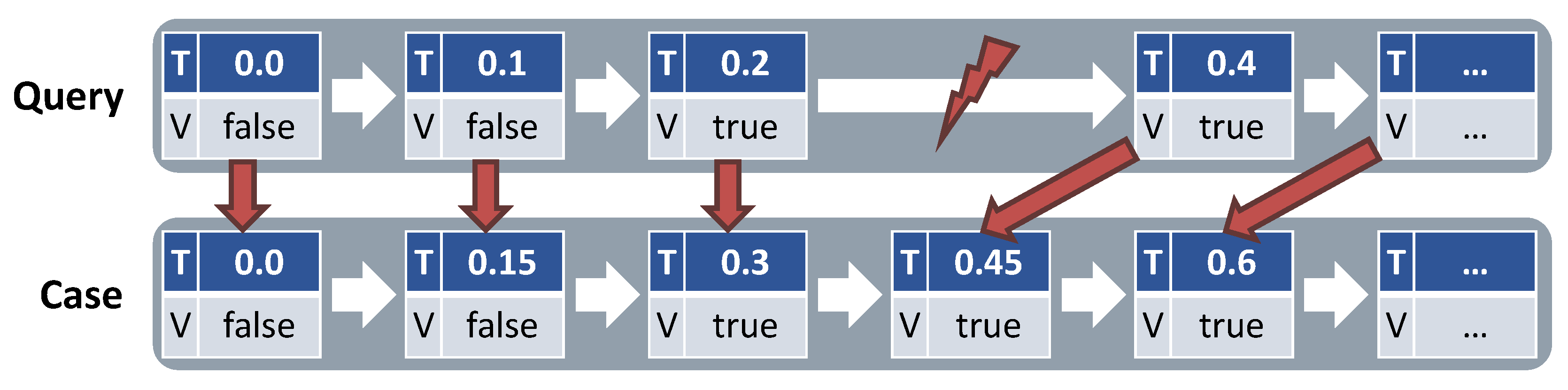

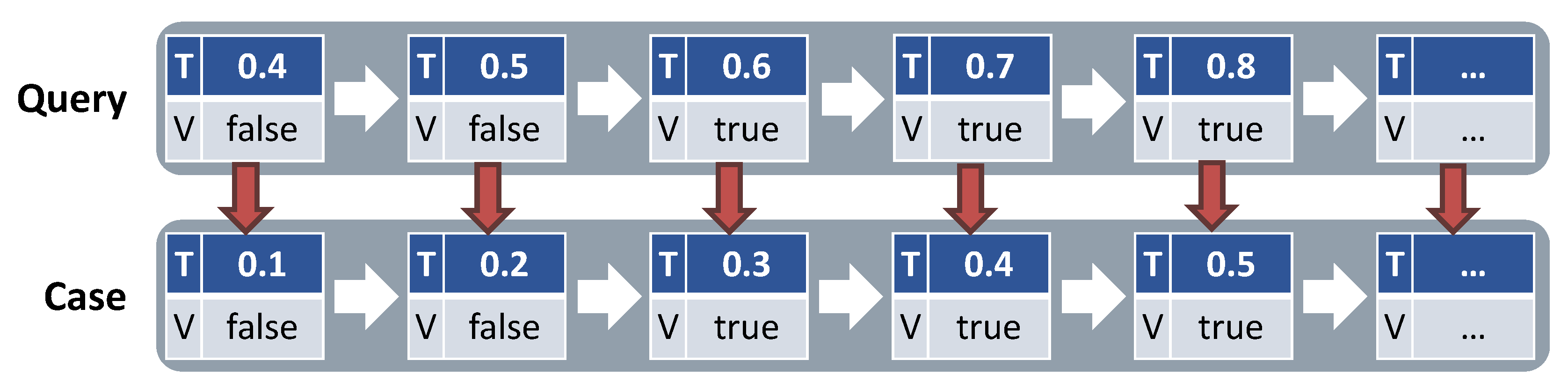

4.3.3. Time Shifts

4.4. Detection and Case-Based Solving of Data Quality Issues

4.4.1. Classification of Data Quality Issues

4.4.2. Solution Adaptation

5. Case Study

5.1. Setup

5.2. Results

5.3. Discussion

5.4. Lessons Learned and Methodological Implications

- LL1: Expert-only Similarity Measures Are Fragile. Our retrieval pipeline achieved only recall at false-positives when the similarity assessment is based on the weights obtained in the expert workshops. Manual heuristics did not capture cross-sensor dependencies (e.g., pressure–temperature drift) or temporal motifs (intermittent spikes).

- LL2: Naïve Retrieval Does Not Scale. Similarity computations for two DQI types exhausted 400 GB of RAM and averaged 29 min per query, forcing 157 of 500 retrievals to abort. This makes real-time or edge deployment impracticable. Implication: More efficient similarity-based retrieval techniques (e.g., MAC/FAC retrieval [77], index-based case base structures [78], and GPU-accelerated retrieval [79]) and leaner case representations (temporal abstraction [48,80] and constant-value pruning [47]) are mandatory before the approach can be used in practice.

- LL3: One-Size-Fits-All Similarity Measure Fails Across DQI Types. For Missing Sensors and Time Shift failures, the system produced recall, despite identical case representation and training effort. This indicates that each DQI type manifests a distinct failure signature that the current uniform similarity function cannot generalize over. Implication: Adopt failure-specific similarity measures or a cascading architecture in which a lightweight detector first routes the query to a tailored preprocessing step in which cases are enriched with structural and temporal context (e.g., sensor topology or process phase) to boost discriminative power.
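To make the MAC/FAC idea [77] named in the second lesson concrete, the following minimal sketch filters the case base with a cheap signature before the expensive similarity measure is evaluated. It is an illustration under stated assumptions, not the retrieval used in the case study: the function names, the signature (series length and mean), and the parameters `k_mac` and `k_fac` are hypothetical, and the pointwise similarity is only a stand-in for a costly measure.

```python
from typing import Callable, List, Sequence, Tuple

Series = Sequence[float]


def cheap_signature(series: Series) -> Tuple[float, float]:
    """MAC-stage features: length and mean (a hypothetical choice)."""
    return float(len(series)), sum(series) / len(series)


def mac_fac_retrieve(query: Series, case_base: List[Series],
                     expensive_sim: Callable[[Series, Series], float],
                     k_mac: int = 3, k_fac: int = 1) -> List[Series]:
    """Two-stage retrieval: cheap pre-filter (MAC), then exact ranking (FAC)."""
    q_len, q_mean = cheap_signature(query)

    def mac_distance(case: Series) -> float:
        c_len, c_mean = cheap_signature(case)
        return abs(q_len - c_len) + abs(q_mean - c_mean)

    # MAC: keep only the k_mac cases whose signature is closest to the query.
    candidates = sorted(case_base, key=mac_distance)[:k_mac]
    # FAC: apply the expensive measure to the surviving candidates only.
    ranked = sorted(candidates, key=lambda c: expensive_sim(query, c),
                    reverse=True)
    return ranked[:k_fac]


def pointwise_sim(a: Series, b: Series) -> float:
    """Stand-in for an expensive measure: similarity in (0, 1]."""
    n = min(len(a), len(b))
    return 1.0 / (1.0 + sum(abs(x - y) for x, y in zip(a, b)) / n)


case_base = [[1.0, 2.0, 3.0], [1.0, 2.0, 4.0], [10.0, 11.0, 12.0],
             [50.0, 50.0, 50.0], [0.0, 0.0]]
best = mac_fac_retrieve([1.0, 2.0, 3.2], case_base, pointwise_sim, k_mac=2)
print(best)
```

With `k_mac=2`, the expensive measure is evaluated for two of the five cases. The trade-off is that a poorly chosen signature can discard the truly most similar case in the first stage.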

6. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| AI | Artificial Intelligence |

| ANN | Artificial Neural Network |

| BPM | Business Process Management |

| CBR | Case-Based Reasoning |

| DL | Deep Learning |

| DQI | Data Quality Issue |

| DQMC | Data Quality Management Cycle |

| DTW | Dynamic Time Warping |

| IIoT | Industrial Internet of Things |

| IoT | Internet of Things |

| ML | Machine Learning |

| PM | Process Mining |

| PredM | Predictive Maintenance |

| RAM | Random-Access Memory |

| TCBR | Temporal Case-Based Reasoning |

References

1. Gilchrist, A. Industry 4.0: The Industrial Internet of Things; Apress: New York, NY, USA, 2016.

2. Tran, K.P. Artificial Intelligence for Smart Manufacturing: Methods and Applications. Sensors 2021, 21, 5584.

3. Zonta, T.; Da Costa, C.A.; da Rosa Righi, R.; de Lima, M.J.; da Trindade, E.S.; Li, G.P. Predictive maintenance in the Industry 4.0: A systematic literature review. Comput. Ind. Eng. 2020, 150, 106889.

4. Klein, P. Combining Expert Knowledge and Deep Learning with Case-Based Reasoning for Predictive Maintenance, 1st ed.; Springer Vieweg: Wiesbaden, Germany, 2025.

5. Karkouch, A.; Mousannif, H.; Al Moatassime, H.; Noel, T. Data Quality in Internet of Things: A state-of-the-art survey. J. Netw. Comput. Appl. 2016, 73, 57–81.

6. Teh, H.Y.; Kempa-Liehr, A.W.; Wang, K.I.K. Sensor data quality: A systematic review. J. Big Data 2020, 7, 11.

7. Goknil, A.; Nguyen, P.; Sen, S.; Politaki, D.; Niavis, H.; Pedersen, K.J.; Suyuthi, A.; Anand, A.; Ziegenbein, A. A Systematic Review of Data Quality in CPS and IoT for Industry 4.0. ACM Comput. Surv. 2023, 55, 1–38.

8. van der Aalst, W.M.P. Process Mining—Data Science in Action, 2nd ed.; Springer: Berlin/Heidelberg, Germany, 2016.

9. Rangineni, S. An Analysis of Data Quality Requirements for Machine Learning Development Pipelines Frameworks. Int. J. Comput. Trends Technol. 2023, 71, 16–27.

10. Mangler, J.; Seiger, R.; Benzin, J.; Grüger, J.; Kirikkayis, Y.; Gallik, F.; Malburg, L.; Ehrendorfer, M.; Bertrand, Y.; Franceschetti, M.; et al. From Internet of Things Data to Business Processes: Challenges and a Framework. arXiv 2024, arXiv:2405.08528.

11. Bertrand, Y.; Schultheis, A.; Malburg, L.; Grüger, J.; Serral Asensio, E.; Bergmann, R. Challenges in Data Quality Management for IoT-Enhanced Event Logs. In International Conference on Research Challenges in Information Science; Springer: Berlin/Heidelberg, Germany, 2025; pp. 20–36.

12. Liu, F.T.; Ting, K.M.; Zhou, Z.H. Isolation-based anomaly detection. ACM Trans. Knowl. Discov. Data 2012, 6, 1–39.

13. Breunig, M.M.; Kriegel, H.; Ng, R.T.; Sander, J. LOF: Identifying Density-Based Local Outliers. In Proceedings of the 2000 ACM SIGMOD International Conference on Management of Data, Dallas, TX, USA, 16–18 May 2000; pp. 93–104.

14. Jäger, G.; Zug, S.; Brade, T.; Dietrich, A.; Steup, C.; Moewes, C.; Cretu, A.M. Assessing neural networks for sensor fault detection. In Proceedings of the 2014 CIVEMSA, Ottawa, ON, Canada, 5–7 May 2014; pp. 70–75.

15. D’Aniello, G.; Gaeta, M.; Hong, T.P. Effective Quality-Aware Sensor Data Management. IEEE Trans. Emerg. Top. Comput. Intell. 2017, 2, 65–77.

16. Liu, Y.; Chen, J.; Sun, Z.; Li, Y.; Huang, D. A probabilistic self-validating soft-sensor with application to wastewater treatment. Comput. Chem. Eng. 2014, 71, 263–280.

17. Hou, Z.; Lian, Z.; Yao, Y.; Yuan, X. Data mining based sensor fault diagnosis and validation for building air conditioning system. Energy Convers. Manag. 2006, 47, 2479–2490.

18. Grüger, J.; Malburg, L.; Bergmann, R. IoT-enriched event log generation and quality analytics: A case study. it-Inf. Technol. 2023, 65, 128–138.

19. Mitchell, T.M. Machine Learning; McGraw-Hill: New York, NY, USA, 1997.

20. Power, D.J. Decision Support Systems: Concepts and Resources for Managers; Quorum Books: Westport, CT, USA, 2002.

21. Aamodt, A.; Plaza, E. Case-Based Reasoning: Foundational Issues, Methodological Variations, and System Approaches. AI Commun. 1994, 7, 39–59.

22. Bergmann, R. Experience Management: Foundations, Development Methodology, and Internet-Based Applications; LNCS; Springer: Berlin/Heidelberg, Germany, 2003; Volume 2432.

23. Sørmo, F.; Cassens, J.; Aamodt, A. Explanation in Case-Based Reasoning—Perspectives and Goals. Artif. Intell. Rev. 2005, 24, 109–143.

24. Zanzotto, F.M. Viewpoint: Human-in-the-loop Artificial Intelligence. J. Artif. Intell. Res. 2019, 64, 243–252.

25. Richter, M.M. Knowledge Containers. In Readings in Case-Based Reasoning; Morgan Kaufmann Publishers: Cambridge, MA, USA, 2003.

26. Dorsemaine, B.; Gaulier, J.P.; Wary, J.P.; Kheir, N.; Urien, P. Internet of Things: A Definition & Taxonomy. In Proceedings of the 2015 9th International Conference on Next Generation Mobile Applications, Services and Technologies, Cambridge, UK, 9–11 September 2015; pp. 72–77.

27. Radziwon, A.; Bilberg, A.; Bogers, M.; Madsen, E.S. The Smart Factory: Exploring Adaptive and Flexible Manufacturing Solutions. Procedia Eng. 2014, 69, 1184–1190.

28. Hehenberger, P.; Vogel-Heuser, B.; Bradley, D.; Eynard, B.; Tomiyama, T.; Achiche, S. Design, modelling, simulation and integration of cyber physical systems: Methods and applications. Comput. Ind. 2016, 82, 273–289.

29. Boyes, H.; Hallaq, B.; Cunningham, J.; Watson, T. The industrial internet of things (IIoT): An analysis framework. Comput. Ind. 2018, 101, 1–12.

30. Vijayaraghavan, V.; Rian Leevinson, J. Internet of Things Applications and Use Cases in the Era of Industry 4.0. In The Internet of Things in the Industrial Sector: Security and Device Connectivity, Smart Environments, and Industry 4.0; Springer International Publishing: Cham, Switzerland, 2019; pp. 279–298.

31. Janiesch, C.; Koschmider, A.; Mecella, M.; Weber, B.; Burattin, A.; Ciccio, C.D.; Gal, A.; Kannengiesser, U.; Mannhardt, F.; Mendling, J.; et al. The Internet-of-Things Meets Business Process Management. A Manifesto. IEEE Syst. Man Cybern. Mag. 2020, 6, 34–44.

32. Schultheis, A.; Jilg, D.; Malburg, L.; Bergweiler, S.; Bergmann, R. Towards Flexible Control of Production Processes: A Requirements Analysis for Adaptive Workflow Management and Evaluation of Suitable Process Modeling Languages. Processes 2024, 12, 2714.

33. Scheibel, B.; Rinderle-Ma, S. Online Decision Mining and Monitoring in Process-Aware Information Systems. In International Conference on Conceptual Modeling; LNCS; Springer: Berlin/Heidelberg, Germany, 2022; Volume 13607, pp. 271–280.

34. Rodríguez-Fernández, V.; Trzcionkowska, A.; González-Pardo, A.; Brzychczy, E.; Nalepa, G.J.; Camacho, D. Conformance Checking for Time-Series-Aware Processes. IEEE Trans. Ind. Inform. 2021, 17, 871–881.

35. Ehrendorfer, M.; Mangler, J.; Rinderle-Ma, S. Assessing the Impact of Context Data on Process Outcomes During Runtime. In International Conference on Service-Oriented Computing 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 3–18.

36. Bertrand, Y.; Belle, R.V.; Weerdt, J.D.; Serral, E. Defining Data Quality Issues in Process Mining with IoT Data. In International Conference on Process Mining 2022; LNBIP; Springer: Berlin/Heidelberg, Germany, 2022; Volume 468, pp. 422–434.

37. Bose, J.C.J.C.; Mans, R.S.; van der Aalst, W.M.P. Wanna improve process mining results? In Proceedings of the CIDM, Singapore, 16–19 April 2013; pp. 127–134.

38. Verhulst, R. Evaluating Quality of Event Data within Event Logs: An Extensible Framework. Master’s Thesis, Eindhoven University of Technology, Eindhoven, The Netherlands, 2016.

39. Kuemper, D.; Iggena, T.; Toenjes, R.; Pulvermüller, E. Valid.IoT: A framework for sensor data quality analysis and interpolation. In Proceedings of the 9th ACM Multimedia Systems Conference, Amsterdam, The Netherlands, 12–15 June 2018; pp. 294–303.

40. Reinkemeyer, L. Process Mining in a Nutshell. In Process Mining in Action: Principles, Use Cases and Outlook; Springer: Berlin/Heidelberg, Germany, 2020; pp. 3–10.

41. Suriadi, S.; Andrews, R.; ter Hofstede, A.H.M.; Wynn, M.T. Event log imperfection patterns for process mining: Towards a systematic approach to cleaning event logs. Inf. Syst. 2017, 64, 132–150.

42. Brzychczy, E.; Trzcionkowska, A. Creation of an Event Log From a Low-Level Machinery Monitoring System for Process Mining Purposes. In International Conference on Intelligent Data Engineering and Automated Learning; Springer: Berlin/Heidelberg, Germany, 2018; pp. 54–63.

43. Watson, I. Case-based reasoning is a methodology not a technology. Knowl. Based Syst. 1999, 12, 303–308.

44. Kolodner, J.L. Case-Based Reasoning; Morgan Kaufmann: Cambridge, MA, USA, 1993.

45. Jære, M.D.; Aamodt, A.; Skalle, P. Representing Temporal Knowledge for Case-Based Prediction. In European Conference on Case-Based Reasoning 2002; LNCS; Springer: Berlin/Heidelberg, Germany, 2002; Volume 2416, pp. 174–188.

46. López, B. Case-Based Reasoning: A Concise Introduction; SLAIML; Springer: Berlin/Heidelberg, Germany, 2013.

47. Malburg, L.; Schultheis, A.; Bergmann, R. Modeling and Using Complex IoT Time Series Data in Case-Based Reasoning: From Application Scenarios to Implementations. In ICCBR Workshops; Springer: Cham, Switzerland, 2023; Volume 3438, pp. 81–96.

48. Shahar, Y. A Framework for Knowledge-Based Temporal Abstraction. Artif. Intell. 1997, 90, 79–133.

49. Schultheis, A.; Malburg, L.; Grüger, J.; Weich, J.; Bertrand, Y.; Bergmann, R.; Serral Asensio, E. Identifying Missing Sensor Values in IoT Time Series Data: A Weight-Based Extension of Similarity Measures for Smart Manufacturing. In International Conference on Case-Based Reasoning 2024; LNCS; Springer: Berlin/Heidelberg, Germany, 2024; Volume 14775, pp. 240–257.

50. Stahl, A. Learning of Knowledge-Intensive Similarity Measures in Case-Based Reasoning. Ph.D. Thesis, University of Kaiserslautern, der Pfalz, Germany, 2004.

51. Nakanishi, T. Semantic Waveform Model for Similarity Measure by Time-series Variation in Meaning. In Proceedings of the 2021 10th International Congress on Advanced Applied Informatics (IIAI-AAI), Online, 11–16 July 2021; pp. 382–387.

52. Corchado, J.M.; Lees, B. Adaptation of Cases for Case Based Forecasting with Neural Network Support. In Soft Computing in CBR; Springer: Berlin/Heidelberg, Germany, 2001; pp. 293–319.

53. Smith, T.F.; Waterman, M.S. Identification of Common Molecular Subsequences. J. Mol. Biol. 1981, 147, 195–197.

54. Sakoe, H.; Chiba, S. Dynamic Programming Algorithm Optimization for Spoken Word Recognition. IEEE Trans. Acoust. Speech, Signal Process. 1978, 26, 43–49.

55. Zhang, L.; Jeong, D.; Lee, S. Data Quality Management in the Internet of Things. Sensors 2021, 21, 5834.

56. Wang, R.Y.; Strong, D.M. Beyond Accuracy: What Data Quality Means to Data Consumers. J. Manag. Inf. Syst. 1996, 12, 5–33.

57. Wang, R.Y. A product perspective on total data quality management. Commun. ACM 1998, 41, 58–65.

58. English, L.P. Improving Data Warehouse and Business Information Quality: Methods for Reducing Costs and Increasing Profits; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 1999.

59. Geisler, S.; Quix, C.; Weber, S.; Jarke, M. Ontology-Based Data Quality Management for Data Streams. J. Data Inf. Qual. 2016, 7, 1–34.

60. Perez-Castillo, R.; Carretero, A.G.; Caballero, I.; Rodriguez, M.; Piattini, M.; Mate, A.; Kim, S.; Lee, D. DAQUA-MASS: An ISO 8000-61 Based Data Quality Management Methodology for Sensor Data. Sensors 2018, 18, 3105.

61. Moen, R.; Norman, C. Evolution of the PDCA Cycle, 2006. In Proceedings of the 7th ANQ Congress, Tokyo, Japan, 17 September 2017.

62. Perez-Castillo, R.; Carretero, A.G.; Rodriguez, M.; Caballero, I.; Piattini, M.; Mate, A.; Kim, S.; Lee, D. Data Quality Best Practices in IoT Environments. In Proceedings of the 2018 11th International Conference on the Quality of Information and Communications Technology (QUATIC), Coimbra, Portugal, 4–7 September 2018; pp. 272–275.

63. Chakraborty, N.; Sharma, A.; Dutta, J.; Kumar, H.D. Privacy-Preserving Data Quality Assessment for Time-Series IoT Sensors. In Proceedings of the 2024 IEEE International Conference on Internet of Things and Intelligence Systems (IoTaIS), Bali, Indonesia, 28–30 November 2024; pp. 51–57.

64. Kim, S.; Del Castillo, R.P.; Caballero, I.; Lee, J.; Lee, C.; Lee, D.; Lee, S.; Mate, A. Extending Data Quality Management for Smart Connected Product Operations. IEEE Access 2019, 7, 144663–144678.

65. Ehrlinger, L.; Wöß, W. Automated Data Quality Monitoring. In Proceedings of the 22nd ICIQ, Little Rock, AR, USA, 6–7 October 2017.

66. Seiger, R.; Schultheis, A.; Bergmann, R. Case-Based Activity Detection from Segmented Internet of Things Data. In International Conference on Case-Based Reasoning; LNCS; Springer: Berlin/Heidelberg, Germany, 2025; Volume 15662, pp. 438–453.

67. Corrales, D.C.; Ledezma, A.; Corrales, J.C. A case-based reasoning system for recommendation of data cleaning algorithms in classification and regression tasks. Appl. Soft Comput. 2020, 90, 106180.

68. Mangler, J.; Grüger, J.; Malburg, L.; Ehrendorfer, M.; Bertrand, Y.; Benzin, J.V.; Rinderle-Ma, S.; Serral Asensio, E.; Bergmann, R. DataStream XES Extension: Embedding IoT Sensor Data into Extensible Event Stream Logs. Future Internet 2023, 15, 109.

69. IEEE Std 1849-2016; IEEE Standard for eXtensible Event Stream (XES) for Achieving Interoperability in Event Logs and Event Streams. IEEE: New York, NY, USA, 2016.

70. IEEE Std 1849-2023 (Revision of IEEE Std 1849-2016); IEEE Standard for eXtensible Event Stream (XES) for Achieving Interoperability in Event Logs and Event Streams. IEEE: New York, NY, USA, 2023.

71. Stekhoven, D.J.; Bühlmann, P. MissForest—Non-parametric missing value imputation for mixed-type data. Bioinformatics 2012, 28, 112–118.

72. Wang, J.; Du, W.; Cao, W.; Zhang, K.; Wang, W.; Liang, Y.; Wen, Q. Deep Learning for Multivariate Time Series Imputation: A Survey. arXiv 2024, arXiv:2402.04059.

73. Levenshtein, V.I. Binary Codes Capable of Correcting Deletions, Insertions and Reversals. In Soviet Physics-Doklady; Soviet Union: Moscow, Russia, 1966; Volume 10, pp. 707–710.

74. Bergmann, R.; Grumbach, L.; Malburg, L.; Zeyen, C. ProCAKE: A Process-Oriented Case-Based Reasoning Framework. In Proceedings of the 27th ICCBR Workshop, Otzenhausen, Germany, 8–12 September 2019; Volume 2567, pp. 156–161.

75. Mahalanobis, P.C. On the Generalized Distance in Statistics. Proc. Natl. Inst. Sci. India 1936, 2, 49–55, reprinted in Sankhyā: Indian J. Stat. 2018, 80-A (Suppl. 1), S1–S7.

76. Chicco, D. Siamese Neural Networks: An Overview. In Artificial Neural Networks, 3rd ed.; Methods in Molecular Biology; Springer: Berlin/Heidelberg, Germany, 2021; Volume 2190, pp. 73–94.

77. Forbus, K.D.; Gentner, D.; Law, K. MAC/FAC: A Model of Similarity-Based Retrieval. Cogn. Sci. 1995, 19, 141–205.

78. Craw, S.; Jarmulak, J.; Rowe, R. Maintaining Retrieval Knowledge in a Case-Based Reasoning System. Comput. Intell. 2001, 17, 346–363.

79. Malburg, L.; Hoffmann, M.; Trumm, S.; Bergmann, R. Improving Similarity-Based Retrieval Efficiency by Using Graphic Processing Units in Case-Based Reasoning. In Proceedings of the International FLAIRS Conference Proceedings, North Miami Beach, FL, USA, 19–21 May 2021.

80. Allen, J.F. Maintaining Knowledge about Temporal Intervals. Commun. ACM 1983, 26, 832–843.

81. Weich, J.; Schultheis, A.; Hoffmann, M.; Bergmann, R. Integration of Time Series Embedding for Efficient Retrieval in Case-Based Reasoning. In International Conference on Case-Based Reasoning; LNCS; Springer: Berlin/Heidelberg, Germany, 2025; Volume 15662, pp. 328–344.

82. Hoffmann, M. Hybrid AI for Process Management: Improving Similarity Assessment in Process-Oriented Case-Based Reasoning via Deep Learning. Ph.D. Thesis, Trier University, Trier, Germany, 2025.

83. West, N.; Deuse, J. Industrial Screw Driving Dataset Collection: Time Series Data for Process Monitoring and Anomaly Detection. 2025. Available online: https://zenodo.org/records/14860571 (accessed on 2 July 2025).

84. Augusti, F.; Albertini, D.; Esmer, K.; Sannino, R.; Bernardini, A. IMAD-DS: A Dataset for Industrial Multi-Sensor Anomaly Detection Under Domain Shift Conditions. In Proceedings of the Detection and Classification of Acoustic Scenes and Events 2024, Tokyo, Japan, 23–25 October 2024.

85. Ehrendorfer, M.; Mangler, J.; Rinderle-Ma, S. Clustering Raw Sensor Data in Process Logs to Detect Data Streams. In International Conference on Cooperative Information Systems 2023; LNCS; Springer: Berlin/Heidelberg, Germany, 2023; Volume 14353, pp. 438–447.

86. Schmidt, R.; Gierl, L. A prognostic model for temporal courses that combines temporal abstraction and case-based reasoning. Int. J. Med. Inform. 2005, 74, 307–315.

87. Plaza, E.; McGinty, L. Distributed case-based reasoning. Knowl. Eng. Rev. 2005, 20, 261–265.

88. Shi, W.; Cao, J.; Zhang, Q.; Li, Y.; Xu, L. Edge Computing: Vision and Challenges. IEEE Internet Things J. 2016, 3, 637–646.

89. Schultheis, A.; Alt, B.; Bast, S.; Guldner, A.; Jilg, D.; Katic, D.; Mundorf, J.; Schlagenhauf, T.; Weber, S.; Bergmann, R.; et al. EASY: Energy-Efficient Analysis and Control Processes in the Dynamic Edge-Cloud Continuum for Industrial Manufacturing. Künstliche Intell. 2024, 39, 161–166.

90. Chebel-Morello, B.; Haouchine, M.K.; Zerhouni, N. Case-based maintenance: Structuring and incrementing the case base. Knowl. Based Syst. 2015, 88, 165–183.

91. Smyth, B.; Keane, M.T. Remembering To Forget: A Competence-Preserving Case Deletion Policy for Case-Based Reasoning Systems. In Proceedings of the 14th IJCAI, Montreal, QC, Canada, 20–25 August 1995; Morgan Kaufmann: Cambridge, MA, USA, 1995; pp. 377–383.

92. Buduma, N. Fundamentals of Deep Learning: Designing Next-Generation Machine Intelligence Algorithms, 1st ed.; O’Reilly: Sebastopol, CA, USA, 2017.

| Category 1 | Category 2 | Category 3 |
|---|---|---|
| Similarity measures that can only be applied to time series of the same length. These compare only the values at the corresponding times. | Similarity measures that can be applied to time series of different lengths and consider not only the values but also the time points themselves. | Similarity measures like those in Category 2 that can additionally detect stretching and compression. |
| List Mapping [47] | Smith–Waterman Algorithm (SWA) [53] | Dynamic Time Warping (DTW) [54] |
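The difference between Category 1 and Category 3 can be illustrated with a minimal sketch. It assumes plain lists of floats as time series and an absolute difference as local cost. `pointwise_distance` is only a simplified stand-in for an equal-length comparison in the spirit of Category 1, and `dtw_distance` is the textbook dynamic-programming formulation of DTW [54], not the weighted variant used in this work.

```python
from typing import Sequence


def pointwise_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Category 1 style: compare values at corresponding positions only."""
    if len(a) != len(b):
        raise ValueError("pointwise comparison needs series of equal length")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def dtw_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Category 3 style: classic DTW, tolerant of stretching/compression."""
    inf = float("inf")
    n, m = len(a), len(b)
    # cost[i][j] = cheapest alignment of the first i points of a
    # with the first j points of b.
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            cost[i][j] = local + min(cost[i - 1][j],      # a[i-1] repeated
                                     cost[i][j - 1],      # b[j-1] repeated
                                     cost[i - 1][j - 1])  # one-to-one step
    return cost[n][m]


original = [0.0, 1.0, 2.0, 1.0, 0.0]
stretched = [0.0, 1.0, 1.0, 2.0, 2.0, 1.0, 0.0]  # same shape, slower

print(dtw_distance(original, stretched))
try:
    pointwise_distance(original, stretched)
except ValueError as err:
    print("pointwise comparison not applicable:", err)
```

The stretched series has the same shape as the original, so DTW can align the two without cost, whereas the pointwise comparison cannot be applied to series of different lengths at all.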

| | Missing Sensor Values (Section 4.3.1) | Missing Sensors (Section 4.3.2) | Time Shift (Section 4.3.3) |
|---|---|---|---|
| Categorization (Goknil et al. [7]) | Missing Data | Missing Data | Noise |
| Issue Type (Verhulst [38]) | Completeness | Completeness | Correctness |
| Key Challenge | Individual values are missing, but values are not always logged continuously, making it difficult to determine whether a failure has occurred. | Unclear whether sensor time series are intentionally excluded or unintentionally missing. | Time shifts are not reliably detected by common measures like DTW. |
| Solution Approach | Use of a weighted DTW-based similarity measure that selectively identifies deviations in time series. | Comparison on stream level, with strong penalties for missing mappings when metadata matches. | Increased weighting of the timestamp to explicitly penalize time-based deviations. |
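The Time Shift column can be illustrated with a small sketch of a local distance in which the timestamp carries its own weight. This is not the similarity measure defined in Section 4.3.3. It assumes series of `(timestamp, value)` pairs with equal length, and the names `weighted_point_distance`, `series_distance`, `w_value`, and `w_time` are hypothetical.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]  # (timestamp, value)


def weighted_point_distance(p: Point, q: Point,
                            w_value: float = 1.0,
                            w_time: float = 1.0) -> float:
    """Local distance combining value and timestamp deviations."""
    (t1, v1), (t2, v2) = p, q
    return w_value * abs(v1 - v2) + w_time * abs(t1 - t2)


def series_distance(a: Sequence[Point], b: Sequence[Point],
                    w_value: float = 1.0, w_time: float = 1.0) -> float:
    """Mean local distance over index-aligned points (equal length assumed)."""
    if len(a) != len(b):
        raise ValueError("this sketch only aligns series of equal length")
    total = sum(weighted_point_distance(p, q, w_value, w_time)
                for p, q in zip(a, b))
    return total / len(a)


reference = [(0.0, 20.0), (1.0, 20.5), (2.0, 21.0)]
shifted = [(2.0, 20.0), (3.0, 20.5), (4.0, 21.0)]  # same values, 2 units late

for w_time in (0.0, 1.0, 5.0):
    print(w_time, series_distance(reference, shifted, w_time=w_time))
```

With `w_time = 0.0` the shifted series is indistinguishable from the reference because only values are compared. Raising the timestamp weight makes the same shift increasingly expensive, which is the effect the solution approach in the table aims for.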

| | Actual Positive | Actual Negative |
|---|---|---|
| Predicted Positive | 5 | 3 |
| Predicted Negative | 431 | 3 |

| | Actual Positive | Actual Negative |
|---|---|---|
| Predicted Positive | 0 | 0 |
| Predicted Negative | 25 | 475 |

| | Actual Positive | Actual Negative |
|---|---|---|
| Predicted Positive | 0 | 1 |
| Predicted Negative | 15 | 368 |

| Performance Measure | Missing Sensor Value | Missing Sensor | Time Shift | Average Value for Overall CBR Approach |
|---|---|---|---|---|
| Accuracy | | | | |
| Precision | | | | |
| Recall | | | | |
| Specificity | | | | |
| F1-Score | | | | |
| False Positive Rate | | | | |
| False Negative Rate | | | | |
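The performance measures named in the table follow from the four cells of a confusion matrix by their standard definitions. The sketch below shows the arithmetic using the cell counts of the first two confusion matrices listed above as input. The function name `confusion_metrics` is hypothetical, and undefined ratios (division by zero) are returned as `None` rather than guessed.

```python
from typing import Dict, Optional


def confusion_metrics(tp: int, fp: int, fn: int,
                      tn: int) -> Dict[str, Optional[float]]:
    """Standard binary classification measures from confusion-matrix counts."""

    def ratio(num: int, den: int) -> Optional[float]:
        # A measure is undefined when its denominator is zero.
        return num / den if den else None

    return {
        "accuracy": ratio(tp + tn, tp + fp + fn + tn),
        "precision": ratio(tp, tp + fp),
        "recall": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "f1_score": ratio(2 * tp, 2 * tp + fp + fn),
        "false_positive_rate": ratio(fp, fp + tn),
        "false_negative_rate": ratio(fn, fn + tp),
    }


# Counts read row-wise from the first two confusion matrices:
# "Predicted Positive" holds (TP, FP), "Predicted Negative" holds (FN, TN).
first = confusion_metrics(tp=5, fp=3, fn=431, tn=3)
second = confusion_metrics(tp=0, fp=0, fn=25, tn=475)

for name, value in first.items():
    print(f"{name}: {value}")
```

For the second matrix, precision is undefined because no query was predicted positive, which is why the sketch reports `None` instead of forcing a numeric value.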

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Schultheis, A.; Bertrand, Y.; Grüger, J.; Malburg, L.; Bergmann, R.; Serral Asensio, E. Case-Based Data Quality Management for IoT Logs: A Case Study Focusing on Detection of Data Quality Issues. IoT 2025, 6, 63. https://doi.org/10.3390/iot6040063
