1. Introduction

The Industrial Internet of Things (IIoT) has quickly become standard in the manufacturing, energy, and infrastructure sectors, enabling real-time monitoring, predictive maintenance, and process optimization. However, integrating large numbers of IoT devices into operational technology (OT) networks has dramatically expanded the attack surface, exposing critical systems to sophisticated threats. Unlike traditional IT environments, IIoT devices often operate under tight resource constraints, have heterogeneous architectures, and are deployed across distributed, dynamic topologies, all of which complicate the deployment of existing security mechanisms.

1.1. Emerging Threat Landscape and Security Challenges

The IIoT sector faces multiple cyber threats, as attackers target these environments with DDoS attacks, data theft, malware infections, and network reconnaissance. The BoT-IoT dataset, which represents realistic IIoT traffic profiles, contains DDoS and botnet activity together with reconnaissance and data-leakage attempts [1]. A hybrid LSTM–CNN model achieved 99.87% accuracy and a 0.13% false-positive rate on BoT-IoT [2], but such systems are difficult to deploy on resource-limited edge devices because of their high computational requirements [3,4]. Moreover, deep learning-based IDSs function as uninterpretable “black boxes”, which creates a major problem for regulatory compliance and human oversight [4,5,6]. These challenges underscore the need for adaptive, transparent, and edge-efficient intrusion detection solutions tailored to IIoT systems.

High computational overhead: Despite their accuracy, hybrid deep learning models face practical challenges in real-time edge environments. The hybrid LSTM–CNN model developed by Sinha et al. (2025) for IoT datasets processes each sample in 2.3 ms but requires 2.8 GB of GPU VRAM and 1.6 GB of system RAM, which prevents its use on microcontrollers or lightweight IoT gateways [2]. Similarly, hybrid CNN–BiLSTM architectures achieve high classification accuracy, but their memory usage and computational requirements can make them unsuitable for resource-limited edge environments [4].

Lack of transparency: Most deep learning-based intrusion detection systems (IDSs) operate as opaque “black boxes,” offering little to no visibility into the rationale behind alerts. This lack of interpretability is a critical shortcoming, especially in sectors governed by strict regulatory compliance or those requiring actionable insights for human operators. The absence of explainability can hinder trust, impede incident response, and complicate auditability in sensitive industrial and critical infrastructure contexts [5].

These limitations demonstrate the need for adaptive IDSs that run on edge hardware and provide interpretable reasoning for their detections. Left unaddressed, they can have significant consequences in IIoT environments, including operational disruptions, financial losses due to downtime or misdiagnosed threats, safety risks in automated processes, and diminished trust among human operators who lack visibility into system decisions. In this study, we define a ‘trustworthy’ intrusion detection system as one that combines reliability, robustness to evolving threats, transparency in decision-making, and the ability to foster human confidence in automated security responses.

1.2. Rise in Explainable AI in IIoT Security

Explainable AI (XAI) methods, including SHAP and LIME, have been widely adopted in IDSs to address their built-in opacity. Franco de la Peña et al. recently developed ShaTS, a Shapley-based explainability method designed specifically for time-series models used in IIoT intrusion detection. ShaTS improves interpretability by attributing predictions to pre-defined feature groups (such as sensors or time intervals), which preserves temporal relationships and produces more understandable explanations at a lower computational cost than standard SHAP [7].

Le et al. [8] developed an XAI-enhanced XGBoost IDS for the Internet of Medical Things (IoMT) that achieved 99.22% accuracy and used SHAP to reveal feature importance. These examples show that explainability plays a crucial role in security-critical domains, because it enables human oversight and supports regulatory compliance and operational transparency. Hybrid frameworks that combine ante-hoc and post-hoc XAI techniques, such as SHAP and LIME, further enhance transparency and strengthen user trust in smart city and industrial automation deployments [5,6].

1.3. The Importance of Adaptability in IDSs

Although XAI methods address interpretability, most IDSs cannot learn adaptively as threat patterns evolve in IIoT environments. Wankhade (2024) stresses the need for adaptive machine learning models that detect unknown threats in real time, but practical frameworks require further investigation [9]. The ELAI model developed by Rahmati and D’Silva (2025) is a lightweight, explainable CNN–LSTM model that achieves 98.4% accuracy with inference times below 10 ms and detects 91.6% of zero-day attacks on edge devices [10]. However, ELAI lacks mechanisms for continuously adapting to changes in the attack distribution, which is essential in dynamic IIoT environments.

1.4. Identified Gaps and Paper Motivation

From the state of the art, the following gaps emerge clearly:

IDS solutions are often inefficient for practical deployment in IIoT edge environments.

Explainability (e.g., via SHAP) has been largely treated in a static context, lacking features tailored for streaming or temporal data.

Adaptive or online learning mechanisms are missing in most XAI-enabled IDSs, limiting their responsiveness to emerging threats.

To bridge these gaps, we propose a trustworthy, SHAP-informed adaptive IDS that can learn from IIoT network data in real time, operate with minimal latency on resource-limited hardware, and provide transparent, actionable explanations to analysts. The use of an ensemble of online learning models is motivated by the dynamic nature of IIoT environments, where attack patterns can evolve over time. Online models learn incrementally from new data, and the ensemble structure allows for improved generalization, robustness, and adaptability to shifting threat distributions.

1.5. Main Contributions of This Study

To address the specific gaps outlined in the previous section, this paper contributes the following innovations that collectively improve edge readiness, adaptability, and explainability in IIoT security:

Adaptive architecture: An ensemble of online learning models that update incrementally as new IIoT network flows arrive, maintaining detection accuracy in dynamic settings.

Tailored SHAP integration: We implement a hybrid SHAP pipeline, adjusting relevance at both global and local scales, inspired by ShaTS methods, to consistently explain intrusion alerts associated with evolving model behavior.

Comprehensive evaluation: Our system achieves 96.4% accuracy, a 2.1% false-positive rate, and ~35 ms detection latency on standard IoT benchmarks (ToN-IoT and BoT-IoT), validating both its performance and its transparency.

Operational insight: We present a prioritized, feature-level interpretability analysis—SHAP ranks reveal packet size, protocol usage, and traffic burst patterns as dominant predictors—enabling human-friendly visualization for targeted incident response.

Practical edge-readiness: By focusing on stream-based learning and model efficiency, our system is deployable on IIoT-grade hardware without significant resource overhead.

The rest of this paper is structured as follows.

Section 2 reviews related work on adaptive intrusion detection and explainable AI in IIoT.

Section 3 outlines the proposed system architecture, including the hybrid model design, online learning methods, and SHAP integration.

Section 4 presents the experimental setup and evaluation results.

Section 5 concludes the paper by summarizing key findings, discussing limitations, and highlighting directions for future research.

2. Related Works

Modern IIoT environments require intrusion detection systems (IDSs) that maintain high accuracy while delivering real-time performance, resource efficiency, and transparency. Current research focuses on hybrid deep learning architectures, lightweight adaptive models, and explainable techniques based on SHAP and LIME. This section organizes prominent approaches into thematic categories, including traditional machine learning, deep learning, hybrid models, SHAP-enabled systems, SCADA-specific techniques, and recent lightweight IDS strategies.

2.1. Hybrid Deep Learning IDSs

Hybrid deep learning architectures that integrate CNN with LSTM and/or attention layers have achieved superior results in detecting intricate network anomalies in IIoT environments. These architectures include the following:

Sinha et al. (2025) developed an advanced LSTM–CNN hybrid model, which they tested on the BoT-IoT dataset. The model achieved 99.87% accuracy and a 0.13% false-positive rate while processing each sample in approximately 2.3 ms. However, it faces deployment challenges because it requires 2.8 GB of GPU VRAM and 1.6 GB of CPU RAM [2].

Gueriani et al. (2025) developed an attention-based LSTM–CNN architecture and tested it on the Edge-IIoTset dataset. With SMOTE-based oversampling to balance the attack classes, the model achieved 99.04% multi-class accuracy [3].

These studies show that hybrid models achieve high accuracy but require significant memory and GPU support, which makes them impractical for real-time deployment on constrained IIoT gateways. Their reliance on GPU-based hardware and memory-intensive training restricts their use on edge nodes in industrial settings. In contrast, our proposed approach achieves competitive detection accuracy while running on low-resource devices such as the Raspberry Pi 5. Moreover, unlike these models, which require retraining to handle new threats, our adaptive ensemble supports continuous learning, keeping it relevant in dynamic IIoT threat landscapes.

2.2. Lightweight and Explainable Frameworks

Research into lightweight, transparent AI architectures has gained momentum because of increasing demands for explainability and edge deployment. Jouhari and Guizani (2024) proposed a lightweight CNN–BiLSTM architecture specifically designed for resource-constrained IoT devices. Evaluated on the UNSW-NB15 benchmark, their model achieved 97.28% accuracy for binary classification and 96.91% for multiclass tasks while maintaining the extremely low latency required for edge deployment [4]. Windhager et al. (2025) proposed a spiking neural network accelerator for differential-time representation using learned encoding, demonstrating efficient temporal processing, although not in an intrusion detection context [11]. The LENS-XAI system developed by Yagiz and Goktas (2025) combines knowledge distillation and variational autoencoders with explainability features to build lightweight IDSs. It achieved 95–99% accuracy across four benchmarks, matching complex models while remaining scalable [12].

These studies show that explainable lightweight IDSs offer a promising trade-off between inference speed and accuracy in edge-based settings. However, they often lack continuous learning and deep interpretability, which limits their long-term effectiveness in dynamic threat environments such as IIoT. Our system addresses this limitation by integrating SHAP-based transparency into a stream-learning ensemble that evolves over time.

2.3. Adaptive and Online Learning Systems

Dynamic IIoT environments increasingly require IDSs that adapt to concept drift and emerging threats. Representative work includes the following:

Nguyen and Franke (2011) introduced an online learning ensemble IDS that dynamically weighted multiple models and achieved accuracy improvements of ~10% over static systems [13].

Gueriani et al. (2025) tested an attention-based LSTM–CNN on evolving data streams to show its ability to handle changing threat patterns [3].

Most of these systems either lack streaming adaptability or provide no mechanism for interpreting real-time decisions, which limits their trustworthiness in industrial automation settings. Our system differs by combining online learning with SHAP-based explainability, enabling transparent real-time decisions while adapting to evolving threats on low-resource IIoT hardware, a combination not demonstrated in these earlier systems.

2.4. Federated and Privacy-Preserving IDSs

IDS frameworks for distributed, privacy-sensitive IIoT environments need to support both federated learning and transparency, as the following work illustrates:

Wardana and Sukarno (2025) [14] presented an extensive taxonomy and survey of collaborative intrusion detection systems based on federated learning (FL). Their review shows how FL-based IDS frameworks protect privacy through decentralized learning while achieving competitive detection performance. The authors note that explainable AI (XAI) methods such as SHAP are increasingly integrated into intrusion alerts, but deploying edge systems that are efficient, scalable, and interpretable remains challenging.

Research on collaborative federated frameworks shows that ensemble or federated methods improve robustness and scalability but typically do not address resource-efficient implementation or streaming adaptation [14].

2.5. Hybrid CNN–BiLSTM–DNN Architectures

Combining CNN, BiLSTM, and DNN components supports both pattern detection and explainable results, as the following studies show:

Naeem et al. (2025) proposed an attention-based CNN–BiLSTM architecture evaluated on N-BaIoT, achieving 99% accuracy along with high MCC and Cohen’s kappa scores [15].

A 2025 MDPI study proposed a hybrid CNN–BiLSTM–DNN model evaluated on IoT-23 and Edge-IIoTset, achieving ~99% detection accuracy and highlighting deployment potential on moderately resourced devices, although its rigid structure and retraining overhead reduce long-term flexibility [16].

While these architectures deliver high detection accuracy, their model complexity and lack of incremental learning limit their suitability for edge deployment. Our system, in contrast, achieves adaptability through stream-based learning with explainability, providing practical support for industrial security applications.

2.6. GRU–CNN Efficiency Models

GRU–CNN hybrids benefit IIoT environments because they maintain temporal awareness while using resources efficiently:

Sagu et al. (2025) developed a GRU–CNN model optimized with the Self-Upgraded Cat-and-Mouse Optimization (SUCMO) algorithm and tested it on the UNSW-NB15 and BoT-IoT datasets. The model demonstrated strong classification results, surpassing standard baselines in precision and recall [17].

Another MDPI study presents a hybrid CNN–LSTM–GRU ensemble for IoT-based electric vehicle charging systems. It reports 100% binary classification accuracy and 97.44% accuracy on six IoT attack classes, with a focus on real-time inference in resource-limited environments. This architecture is closely related to the efficient, explainable detection models targeted in this work for IIoT environments [18].

The research demonstrates that GRU–CNN hybrids with lightweight optimization techniques provide competitive accuracy at reduced computational cost, making them practical for distributed systems with constrained latency and memory tolerances. However, these approaches often lack embedded interpretability or adaptive behavior. Our proposed system complements efficiency with transparency and online learning, enhancing operational trust in dynamic IIoT environments.

2.7. Edge-Optimized Lightweight Models

This category focuses on compact models that deliver real-time performance and interpretability, both essential for trusted IIoT systems:

Rahmati (2025) proposed the ELAI (Explainable and Lightweight AI) framework for real-time cyber-threat detection at the edge. It integrates SHAP, attention-based models, and decision trees to balance transparency and performance. Tests on datasets such as CICIDS and UNSW-NB15 showed high detection accuracy, low false-positive rates, and significantly lower computational overhead than deep learning baselines [10].

Broggi et al. (2025) analyzed how different neural network pruning methods perform for intrusion detection on resource-limited edge devices. The authors studied both structured and unstructured pruning of a deep fully connected model trained on the ACI-IoT-2023 dataset. Across the pruning levels tested, ThiNet-based structured pruning maintained the best performance. The study shows that aggressive pruning reduces model size and inference latency, but only certain methods preserve detection accuracy, so pruning methods must be selected carefully [19].

These studies suggest that edge-specific AI models combining explainability with optimization techniques can perform real-time intrusion detection on future IIoT devices.

2.8. Attention-Based Online Detection

Applying attention layers to streaming models enables real-time responsiveness and improves interpretability. Gueriani et al. (2025) tested an attention-augmented CNN–LSTM model on the Edge-IIoTset dataset and reported an inference time of 0.1 ms per instance, demonstrating its potential for continuous online detection [3]. The attention-based CNN–BiLSTM model developed by Naeem et al. (2025) achieved 99% accuracy on N-BaIoT while mapping attention scores to feature importance over time. The adaptive focus enabled by attention mechanisms supports both streaming anomaly detection and incident analysis [17].

2.9. Federated Light Explainable IDSs

Combining federated architectures with explainability techniques protects user privacy while sustaining user trust. Alatawi et al. (2025) demonstrated SAFEL IoT, which integrates federated learning with SHAP, homomorphic encryption, and differential privacy. The system achieved an F1-score of 0.93 with <12 ms latency while keeping raw data decentralized [20]. Rehman et al. (2025) proposed FFL-IDS, a fog-based federated learning framework that uses CNNs to defend IIoT systems against jamming and spoofing attacks. Fog nodes provide low-latency, decentralized detection while preserving device-level data privacy. Experiments on Edge-IIoTset yielded 93.4% accuracy and 91.6% recall, and experiments on CIC-IDS2017 yielded 95.8% accuracy and 94.9% precision, showing that FFL-IDS delivers strong detection while protecting privacy and maintaining edge efficiency [21]. These results suggest that lightweight, privacy-preserving federated IDS frameworks with integrated explainability can be deployed practically in distributed IIoT networks. Moustafa et al. (2020) also analyzed the ToN_IoT Linux datasets, providing an important benchmark for intrusion detection research in federated and explainable IDS settings [22].

2.10. Advances in IDSs, SCADA, and IoT Security

Mumtaz et al. [23] proposed the PDIS framework, a service layer that integrates real-time anomaly detection, sticky policy-based privacy control, and blockchain-inspired auditing for cloud environments. Using a J48 decision tree on the CICIDS2017 dataset, the system achieved 99.8% accuracy while maintaining data confidentiality and traceability.

Farfoura et al. [24] introduced a lightweight ML framework for IoT malware classification that uses matrix block mean downsampling to reduce input dimensionality. The model achieved over 98% accuracy while significantly reducing training time and memory usage, making it well suited to real-time malware detection on resource-constrained IIoT and edge devices. Mughaid et al. [25] proposed a simulation-based framework for authenticating SCADA systems and strengthening security against cyber threats in edge-based autonomous environments.

The framework integrates real-time threat modeling with simulation techniques to evaluate system resilience. It supports secure SCADA operations by enabling proactive detection of and response to cyberattacks in IIoT settings.

The study in [26] develops a supervised machine learning-based intrusion detection system for Internet of Things (IoT) networks. By combining multiple classifiers, the system improves detection accuracy while reducing false positives, providing an efficient, scalable security solution for resource-limited IoT networks. The study in [27] develops an optimized network intrusion detection system for IoT environments using supervised machine learning models, aiming to boost detection performance while keeping computational requirements low; the resulting solution delivers precise attack detection with enhanced operational efficiency.

These existing models achieve high accuracy, but they require extensive offline training, and their decision-making remains opaque. In contrast, our proposed model combines online learning with SHAP-based interpretability, enabling real-time adaptability and explainability. Its lightweight operation, dynamic updates, and visual SHAP explanations make it better suited to IIoT applications that require low latency and transparency.

3. Methodology

This section describes the design and implementation of the proposed adaptive and explainable intrusion detection system (IDS) for Industrial IoT (IIoT) environments. The methodology comprises five components: (1) system architecture, (2) data preprocessing, (3) adaptive learning framework, (4) SHAP-based explanation integration, and (5) evaluation metrics and deployment strategy.

3.1. System Architecture Overview

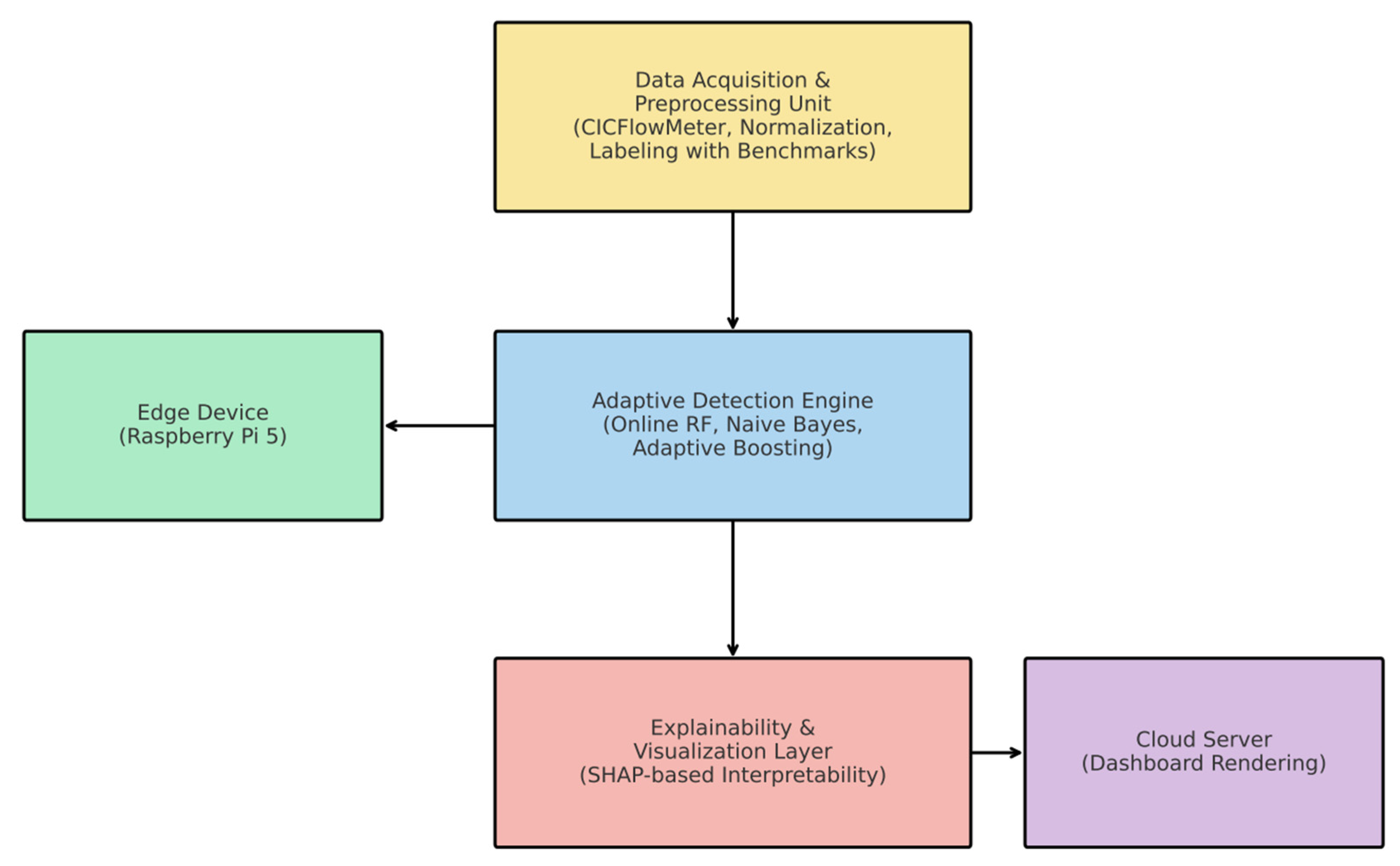

The system architecture enables real-time intrusion detection, adaptive threat learning, and transparent decision-making in resource-constrained IIoT ecosystems. It comprises three essential modules:

1. Data Acquisition and Preprocessing Unit

The system collects network flow data from IIoT endpoints or extracts flow features from captured traffic with CICFlowMeter. Raw data are normalized and then labeled using the ground truth of the benchmark datasets.

2. Adaptive Detection Engine

The engine uses lightweight online learning models, including Naive Bayes, Online Random Forest, and Adaptive Boosting variants, that learn from new data streams in real time.

3. Explainability and Visualization Layer

SHAP (SHapley Additive exPlanations) reveals the reasoning behind each model prediction to analysts.

The architecture supports edge-to-cloud integration: the detection engine is deployed on Raspberry Pi-class hardware, and explainability processing runs either at the edge or on a server, depending on available capacity.

The modules operate in a step-by-step flow. First, the Data Acquisition and Preprocessing Unit collects raw network data and prepares it by cleaning and labeling it. The data are then sent to the Adaptive Detection Engine, where lightweight online models process them in real time and learn from new inputs. Once a threat is detected, the result and its key features are passed to the Explainability Layer, which uses SHAP to explain the decision, either on the edge device or through a cloud dashboard, as shown in Figure 1.

During the study design phase, the authors used Microsoft Copilot as a generative AI tool to assist with structuring and refining the methodological framework.
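The step-by-step flow above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the `Pipeline` class, its callables, and the `pkt_size` feature are hypothetical, and a fixed threshold rule stands in for the online ensemble. It highlights the ordering that matters for latency: the prediction is returned before any explanation work, and only alerts are queued for the Explainability Layer.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Flow = Dict[str, float]


@dataclass
class Pipeline:
    """Hypothetical wiring of the three modules; not the authors' code."""
    preprocess: Callable[[Flow], Flow]   # Data Acquisition and Preprocessing Unit
    predict: Callable[[Flow], int]       # Adaptive Detection Engine: inference
    learn: Callable[[Flow, int], None]   # Adaptive Detection Engine: incremental update
    alerts: List[Flow] = field(default_factory=list)  # handed to the Explainability Layer

    def handle(self, raw: Flow, label: int) -> int:
        x = self.preprocess(raw)
        y_pred = self.predict(x)         # decide first, without waiting for SHAP
        if y_pred == 1:
            self.alerts.append(x)        # only detected threats are queued for explanation
        self.learn(x, label)             # then keep learning from the labeled flow
        return y_pred


# Toy usage: a fixed packet-size rule stands in for the online ensemble.
demo = Pipeline(
    preprocess=lambda raw: {"pkt_size": raw["pkt_size"] / 1500.0},
    predict=lambda x: int(x["pkt_size"] > 0.5),
    learn=lambda x, y: None,
)
print(demo.handle({"pkt_size": 1400}, 1), demo.handle({"pkt_size": 60}, 0), len(demo.alerts))  # 1 0 1
```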

3.2. Dataset and Preprocessing

The system was evaluated on two benchmark datasets, BoT-IoT [1] and ToN-IoT [27], both publicly available and rich in attack types relevant to IIoT scenarios, as summarized in Table 1. For ToN-IoT, we used a binary-labeled subset in which all malicious activity types (e.g., ransomware, injection, and backdoor) were grouped into a single ‘attack’ class. This allowed consistent comparison with BoT-IoT in binary classification mode.

Preprocessing Steps

Feature selection combined two techniques, mutual information and variance thresholding, to remove uninformative features.

These methods were selected because mutual information helps retain features with high predictive relevance, while variance thresholding discards features with little variation, reducing noise and improving real-time processing on edge devices.
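As a sketch of these two filters, assuming discrete (or pre-binned) features and illustrative thresholds (`var_min`, `mi_min`) that are not taken from the study, variance and mutual information I(X;Y) = Σ p(x,y) log[p(x,y) / (p(x)p(y))] can be computed in plain Python. The helper names and the toy columns are hypothetical.

```python
import math
from collections import Counter
from typing import Dict, Hashable, List, Sequence


def variance(col: Sequence[float]) -> float:
    m = sum(col) / len(col)
    return sum((v - m) ** 2 for v in col) / len(col)


def mutual_information(x: Sequence[Hashable], y: Sequence[Hashable]) -> float:
    """I(X;Y) in nats for a discrete (or pre-binned) feature x and labels y."""
    n = len(x)
    joint, px, py = Counter(zip(x, y)), Counter(x), Counter(y)
    return sum(
        (c / n) * math.log((c / n) / ((px[a] / n) * (py[b] / n)))
        for (a, b), c in joint.items()
    )


def select_features(columns: Dict[str, Sequence[float]], y: Sequence[int],
                    var_min: float = 1e-3, mi_min: float = 0.01) -> List[str]:
    """Keep features that vary enough AND carry information about the label."""
    return [
        name for name, col in columns.items()
        if variance(col) > var_min and mutual_information(col, y) >= mi_min
    ]


columns = {
    "constant": [1, 1, 1, 1],   # no variance: removed by thresholding
    "proto_tcp": [1, 0, 1, 0],  # perfectly aligned with the label
    "noise": [0, 0, 1, 1],      # independent of the label: zero MI
}
labels = [1, 0, 1, 0]
print(select_features(columns, labels))  # ['proto_tcp']
```

Variance is checked first because it is cheaper; mutual information is only computed for features that survive it.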

The min-max normalization technique was used to transform all features into the [0, 1] range.
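Min-max scaling maps each feature value x to x' = (x - min) / (max - min), with min and max learned on the training data. A minimal sketch follows; the function names are hypothetical, and both the handling of constant features and the clipping of unseen out-of-range values are our assumptions rather than details stated in the study.

```python
from typing import List, Sequence, Tuple

Bounds = List[Tuple[float, float]]


def fit_min_max(rows: Sequence[Sequence[float]]) -> Bounds:
    """Learn per-feature (min, max) on the training rows."""
    return [(min(col), max(col)) for col in zip(*rows)]


def transform_min_max(row: Sequence[float], bounds: Bounds) -> List[float]:
    """Scale one row into [0, 1] using the learned bounds."""
    out = []
    for v, (lo, hi) in zip(row, bounds):
        if hi == lo:
            out.append(0.0)  # constant feature: avoid division by zero
        else:
            # clip values outside the training range (assumption) so outputs stay in [0, 1]
            out.append(min(1.0, max(0.0, (v - lo) / (hi - lo))))
    return out


bounds = fit_min_max([[0, 10], [5, 20], [10, 30]])
print(transform_min_max([5, 20], bounds))  # [0.5, 0.5]
print(transform_min_max([20, 0], bounds))  # [1.0, 0.0]
```

In a streaming deployment the bounds fitted on training data must be reused at inference time, which is why unseen extremes need an explicit policy such as clipping.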

The SMOTE (Synthetic Minority Oversampling Technique) method was used to address class imbalance problems, particularly in multi-class classification from BoT-IoT. It was applied after feature encoding and before dataset splitting to increase the sample count for minority attack classes such as reconnaissance and data theft, thus enhancing classifier generalization for imbalanced threat profiles.
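The core of SMOTE is to create a synthetic sample at a random point on the line segment between a minority instance and one of its k nearest minority-class neighbours. The sketch below is a simplified, brute-force illustration (Euclidean distance, hypothetical `smote` helper, toy data); production code would typically rely on a library implementation.

```python
import math
import random
from typing import List, Sequence


def smote(minority: Sequence[Sequence[float]], n_new: int, k: int = 5, seed: int = 0) -> List[List[float]]:
    """Create n_new synthetic minority samples by neighbour interpolation."""
    if len(minority) < 2:
        raise ValueError("SMOTE needs at least two minority samples")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        base = minority[i]
        # k nearest minority-class neighbours of the base sample (excluding itself)
        neighbours = sorted(
            (m for j, m in enumerate(minority) if j != i),
            key=lambda m: math.dist(base, m),
        )[:k]
        nn = rng.choice(neighbours)
        gap = rng.random()  # position along the segment base -> nn, in [0, 1)
        synthetic.append([b + gap * (v - b) for b, v in zip(base, nn)])
    return synthetic


minority = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
new_samples = smote(minority, n_new=6, k=2, seed=1)
print(len(new_samples))  # 6
```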

Categorical features, including protocol type, were one-hot encoded.

The final dataset was divided into training (70%), validation (15%), and testing (15%) subsets while preserving the class distribution in each split.
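A stratified 70/15/15 split can be sketched by shuffling the indices of each class separately and cutting them at the same fractions, so every subset keeps the original class ratio. The helper name, the fixed seed, and the toy label distribution are illustrative assumptions.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def stratified_split(labels: Sequence[int],
                     fractions: Tuple[float, float, float] = (0.70, 0.15, 0.15),
                     seed: int = 42) -> Tuple[List[int], List[int], List[int]]:
    """Return (train, val, test) index lists with per-class proportions preserved."""
    rng = random.Random(seed)
    by_class: Dict[int, List[int]] = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    train, val, test = [], [], []
    for idxs in by_class.values():
        rng.shuffle(idxs)
        n_train = round(len(idxs) * fractions[0])
        n_val = round(len(idxs) * fractions[1])
        train.extend(idxs[:n_train])
        val.extend(idxs[n_train:n_train + n_val])
        test.extend(idxs[n_train + n_val:])  # remainder goes to test
    return train, val, test


labels = [0] * 80 + [1] * 20  # toy data: 80% benign, 20% attack
train_idx, val_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(val_idx), len(test_idx))  # 70 15 15
```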

3.3. Adaptive Learning Framework

The detection engine uses a modular ensemble of online learners that detects intrusions in real time while adapting to new conditions. The ensemble includes the following models:

1. Adaptive Hoeffding Tree (AHT)

A streaming decision tree that decides when to split a node using the Hoeffding bound, a statistical threshold on how many instances must be observed before a split is justified. It performs well on evolving data.

2. Online Bagging with Perceptrons

An ensemble of online Perceptron learners that are updated incrementally as new flows are ingested.

3. Naive Bayes Gaussian (Incremental)

Suitable for fast probabilistic predictions and efficient in memory usage.

4. Passive-Aggressive Classifier

A margin-based linear model ideal for binary classification with streaming updates.
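Hoeffding trees split a node only when the observed difference in the split criterion between the two best attributes exceeds the Hoeffding bound ε = sqrt(R² ln(1/δ) / (2n)), where R is the range of the criterion, δ the allowed error probability, and n the number of instances seen at the node. The sketch below shows this test for information gain; the values of δ and the tie-breaking threshold are assumed for illustration, and the drift-driven subtree replacement that distinguishes the adaptive variant is not shown.

```python
import math


def hoeffding_bound(value_range: float, delta: float, n: int) -> float:
    """With probability 1 - delta, the true mean lies within epsilon of the mean of n samples."""
    return math.sqrt(value_range ** 2 * math.log(1.0 / delta) / (2.0 * n))


def should_split(best_gain: float, second_gain: float, n: int, n_classes: int = 2,
                 delta: float = 1e-7, tie_threshold: float = 0.05) -> bool:
    """Split when the best attribute clearly wins, or when the two are practically tied."""
    r = math.log2(n_classes)  # range of information gain for n_classes labels
    eps = hoeffding_bound(r, delta, n)
    return (best_gain - second_gain > eps) or (eps < tie_threshold)


print(round(hoeffding_bound(1.0, 1e-7, 200), 4))  # 0.2007
print(should_split(0.30, 0.05, n=200))            # True: gap 0.25 exceeds epsilon
print(should_split(0.30, 0.20, n=200))            # False: more instances needed
```

Because ε shrinks as n grows, the tree waits for just enough evidence before committing to a split, which keeps memory low while still converging toward the splits a batch learner would choose.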

These models were selected for their complementary strengths in IIoT environments. The Adaptive Hoeffding Tree supports fast incremental learning with minimal memory needs. Online Bagging with Perceptrons adds diversity and handles noisy data well. Gaussian Naive Bayes enables quick probabilistic decisions on early-stage threats. The Passive-Aggressive Classifier adapts rapidly to new patterns at low computational cost. Together, the ensemble balances adaptability, transparency, and performance in low-resource, real-time detection settings.
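To illustrate why the Passive-Aggressive Classifier adapts quickly at low cost, here is a minimal sketch of the textbook PA-I update for labels in {-1, +1}: the weights stay unchanged when an instance is classified with a margin of at least 1 (passive), and otherwise move just enough to remove the hinge loss, with the step size capped by the aggressiveness parameter C (aggressive). The class name and C = 1.0 are assumptions, not necessarily the configuration used in the study.

```python
from typing import List, Sequence


class PassiveAggressiveI:
    """Binary PA-I linear classifier (labels -1/+1), updated one instance at a time."""

    def __init__(self, n_features: int, C: float = 1.0) -> None:
        self.w: List[float] = [0.0] * n_features
        self.C = C  # caps the step size; smaller C = more conservative updates

    def score(self, x: Sequence[float]) -> float:
        return sum(wi * xi for wi, xi in zip(self.w, x))

    def predict(self, x: Sequence[float]) -> int:
        return 1 if self.score(x) >= 0 else -1

    def learn_one(self, x: Sequence[float], y: int) -> None:
        loss = max(0.0, 1.0 - y * self.score(x))  # hinge loss
        sq_norm = sum(xi * xi for xi in x)
        if loss == 0.0 or sq_norm == 0.0:
            return  # passive: margin already sufficient
        tau = min(self.C, loss / sq_norm)  # aggressive, but bounded by C
        self.w = [wi + tau * y * xi for wi, xi in zip(self.w, x)]


pa = PassiveAggressiveI(n_features=2)
pa.learn_one([1.0, 0.0], +1)  # misclassified (score 0): aggressive update
pa.learn_one([0.0, 1.0], -1)
pa.learn_one([2.0, 0.0], +1)  # margin 2 >= 1: passive, weights unchanged
print(pa.w)  # [1.0, -1.0]
```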

We also implemented a hybrid CNN–BiLSTM deep learning model to compare its performance with the online ensemble methods. The model architecture includes two 1D convolutional layers with 64 and 128 filters, respectively, followed by ReLU activation. A max pooling layer and a dropout layer with a rate of 0.3 are then applied to prevent overfitting. The extracted spatial features are flattened and passed to a BiLSTM layer with 64 units, which captures patterns across time in both directions. A final dense layer with softmax activation performs binary classification.

Each part of this hybrid has a specific strength: the CNN layers detect local patterns in the data, while the BiLSTM layer models how the data change over time. Combined, they can detect complex cyberattacks that exhibit both anomalous local behavior and time-dependent trends, making the model a strong fit for intrusion detection in Industrial IoT systems.
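To make the baseline's size concrete, the sketch below traces sequence length and trainable parameter count through the layers described above. The kernel size (3), 'valid' padding, pool size (2), a single input channel per feature, 40 input features, and the pooled feature maps reaching the BiLSTM as a sequence of time steps are all assumptions, since they are not specified; the parameter formulas are the standard ones for Conv1D, LSTM (one bias vector per gate), and dense layers.

```python
def conv1d_params(kernel: int, in_channels: int, filters: int) -> int:
    return (kernel * in_channels + 1) * filters  # weights + one bias per filter


def lstm_params(units: int, input_dim: int) -> int:
    # four gates, each with input weights, recurrent weights and a bias
    return 4 * (units * (input_dim + units) + units)


def cnn_bilstm_summary(n_features: int, kernel: int = 3, pool: int = 2) -> dict:
    steps, channels, total = n_features, 1, 0
    for filters in (64, 128):                # the two Conv1D layers
        total += conv1d_params(kernel, channels, filters)
        steps -= kernel - 1                  # 'valid' padding shortens the sequence
        channels = filters
    steps //= pool                           # max pooling; dropout adds no parameters
    total += 2 * lstm_params(64, channels)   # forward and backward directions
    total += (2 * 64 + 1) * 2                # dense softmax over 2 classes
    return {"time_steps": steps, "params": total, "float32_bytes": 4 * total}


print(cnn_bilstm_summary(n_features=40))
# {'time_steps': 18, 'params': 124034, 'float32_bytes': 496136}
```

Under these assumptions the BiLSTM accounts for roughly 80% of the parameters, which is where a size reduction would have the most effect.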

All online models are trained using the River and scikit-multiflow libraries and evaluated over a data stream with the prequential method (i.e., test-then-train for each incoming instance).
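The test-then-train protocol can be sketched as a short loop. The `predict_one`/`learn_one` method names follow River's convention for online models, but the loop itself and the toy `MajorityClass` learner are illustrative stand-ins, not the study's evaluation code.

```python
from typing import Dict, Iterable, List, Protocol, Tuple


class OnlineModel(Protocol):
    def predict_one(self, x: Dict[str, float]) -> int: ...
    def learn_one(self, x: Dict[str, float], y: int) -> None: ...


def prequential(model: OnlineModel, stream: Iterable[Tuple[Dict[str, float], int]]) -> List[float]:
    """Test-then-train loop; returns the running accuracy after each instance."""
    correct = 0
    history: List[float] = []
    for n, (x, y) in enumerate(stream, start=1):
        y_pred = model.predict_one(x)  # 1) test on the not-yet-seen instance
        correct += int(y_pred == y)
        model.learn_one(x, y)          # 2) then train on that same instance
        history.append(correct / n)
    return history


class MajorityClass:
    """Toy learner: predicts the most frequent label seen so far (0 before any data)."""

    def __init__(self) -> None:
        self.counts: Dict[int, int] = {}

    def predict_one(self, x: Dict[str, float]) -> int:
        return max(self.counts, key=self.counts.get) if self.counts else 0

    def learn_one(self, x: Dict[str, float], y: int) -> None:
        self.counts[y] = self.counts.get(y, 0) + 1


stream = [({}, 1), ({}, 1), ({}, 0), ({}, 1)]
history = prequential(MajorityClass(), stream)
print(history)
```

Because every instance is scored before the model has seen it, the running accuracy is an honest estimate without a held-out set.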

Because each incoming instance is first used for prediction (test) and then immediately for a model update (train), the models adapt continuously without separate training phases, and every model in the ensemble is updated after each instance. To handle concept drift, we incorporate Page–Hinkley and ADWIN detectors. Upon detecting drift, the affected learner(s) are either re-weighted in the ensemble or re-initialized to accommodate the distribution shift, sustaining performance in evolving IIoT environments.
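A minimal Page–Hinkley detector over a stream of 0/1 prediction errors is sketched below. It tracks the cumulative deviation m_t of the signal from its running mean (minus a tolerance δ) and signals drift when m_t exceeds its historical minimum M_t by more than a threshold λ. The values δ = 0.005 and λ = 5.0 are assumptions chosen for illustration, the error stream is synthetic, and ADWIN is not shown.

```python
class PageHinkley:
    """Signals an increase in the mean of a monitored stream (e.g. 0/1 errors)."""

    def __init__(self, delta: float = 0.005, threshold: float = 5.0) -> None:
        self.delta = delta          # tolerated change in the mean (assumed value)
        self.threshold = threshold  # lambda: alarm threshold (assumed value)
        self.reset()

    def reset(self) -> None:
        self.n = 0
        self.mean = 0.0
        self.cum = 0.0      # m_t: cumulative deviation from the running mean
        self.min_cum = 0.0  # M_t: smallest m_t seen so far

    def update(self, x: float) -> bool:
        """Add one observation; return True (and restart) when drift is detected."""
        self.n += 1
        self.mean += (x - self.mean) / self.n
        self.cum += x - self.mean - self.delta
        self.min_cum = min(self.min_cum, self.cum)
        if self.cum - self.min_cum > self.threshold:
            self.reset()
            return True
        return False


ph = PageHinkley()
errors = [0] * 200 + [1] * 50  # error indicator jumps from 0 to 1 at instance 200
drift_at = [i for i, e in enumerate(errors) if ph.update(e)]
print(drift_at)  # [205]
```

In the ensemble described above, such a detection would trigger re-weighting or re-initialization of the affected learner.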

3.4. SHAP-Based Transparency Layer

SHAP serves as our explanation method for establishing trust and interpretability. It assigns each feature a contribution score for every individual prediction.
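For intuition about what these contribution scores are, the sketch below computes exact Shapley values by enumerating all feature subsets, treating features outside a coalition as taking a single baseline value (one of several possible conventions). This is exponential in the number of features and only practical for a handful of them; SHAP's KernelExplainer instead approximates the values from sampled coalitions and a background dataset. The function name and toy model are hypothetical.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence


def exact_shapley(f: Callable[[List[float]], float],
                  x: Sequence[float],
                  baseline: Sequence[float]) -> List[float]:
    """Exact Shapley values; features outside a coalition take their baseline value."""
    n = len(x)

    def value(coalition: set) -> float:
        z = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return f(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):  # coalition sizes 0 .. n-1 (excluding feature i)
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))  # marginal contribution of i
        phi.append(total)
    return phi


phi = exact_shapley(lambda z: 2 * z[0] + 3 * z[1] - z[2], x=[1.0, 2.0, 3.0], baseline=[0.0, 0.0, 0.0])
print([round(p, 6) for p in phi])  # [2.0, 6.0, -3.0]
```

For a linear model with a zero baseline, each value reduces to w_i x_i, and the values always sum to f(x) minus f(baseline), which is the additivity property that makes SHAP explanations easy to read.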

The top features revealed by SHAP—packet size, protocol type, and device activity—help analysts spot abnormal behavior such as data leaks, unauthorized scans, or unusual device usage. These explanations guide quick decisions during incident response and support policy updates like blocking specific protocols or limiting device traffic.

To support SHAP-based explanations on resource-constrained edge devices, we employed a lightweight approximation technique that limits the computation to a moving window of the 100 most recent instances and uses a reduced subset of features for Shapley value estimation. This approach significantly lowers memory and runtime overhead compared to exact SHAP. Crucially, the SHAP computation is executed asynchronously and does not interfere with real-time prediction. Predictions are made immediately using the online ensemble, while SHAP explanations are generated in parallel and stored for post-alert visualization. This ensures that the system maintains sub-35 ms detection latency on devices like Raspberry Pi 5, even while supporting real-time interpretability for recent alerts.

The use of a moving window of 100 instances allows the SHAP explainer to maintain real-time interpretability while reducing computational load. This window ensures that explanations remain focused on recent network behavior and avoids costly recalculations over the full dataset. The sampling does not compromise real-time responsiveness and is applied during active detection. It also supports quick post-alert reviews to assist analysts in understanding recent detection patterns.
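The decoupling described above, in which prediction returns immediately while explanations are computed in the background over the most recent window, can be sketched with the standard library's `deque`, `queue`, and `threading`. The `AsyncExplainer` class and its `explain_fn(x, background)` callback are hypothetical; in practice the callback would invoke a SHAP explainer with the window as background data.

```python
import queue
import threading
from collections import deque
from typing import Any, Callable, List


class AsyncExplainer:
    """Hypothetical helper: explains alerts off the detection path."""

    def __init__(self, explain_fn: Callable[[Any, List[Any]], Any], window_size: int = 100) -> None:
        self.window = deque(maxlen=window_size)  # oldest flows drop out automatically
        self.explain_fn = explain_fn             # e.g. wraps a SHAP explainer (assumption)
        self.results: List[Any] = []
        self._jobs = queue.Queue()
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def observe(self, x: Any, is_alert: bool) -> None:
        """Called on the detection path; never waits for explanation work."""
        self.window.append(x)
        if is_alert:
            # Snapshot the window so the worker sees a stable background set.
            self._jobs.put((x, list(self.window)))

    def _run(self) -> None:
        while True:
            job = self._jobs.get()
            if job is None:  # sentinel from close()
                break
            x, background = job
            self.results.append(self.explain_fn(x, background))

    def close(self) -> None:
        """Finish pending explanations and stop the worker thread."""
        self._jobs.put(None)
        self._worker.join()


explainer = AsyncExplainer(lambda x, background: (x, len(background)), window_size=3)
for i in range(5):
    explainer.observe(i, is_alert=(i % 2 == 0))
explainer.close()
print(explainer.results)  # [(0, 1), (2, 3), (4, 3)]
```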

SHAP Integration Flow

1. Model-Agnostic Wrapper

Each base learner in the ensemble is wrapped with SHAP’s TreeExplainer if it is a decision tree, or with the model-agnostic KernelExplainer if it is a black-box model.

2. Explanation Sampling

SHAP analysis operates on a moving sample window of 100 instances because of performance limitations in IIoT systems.

- 3.

Visualization and Reporting

The system provides two types of visualizations to explain model behavior. At the global level, feature importance plots show the main contributors to true positives and false positives.

At the local level, waterfall and force plots explain individual instances and help analysts understand what triggered an alert.

- 4.

Edge Optimization

Edge nodes perform lightweight SHAP approximations and offload full SHAP visualization to dashboard interfaces when needed.
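Step 1 relies on KernelExplainer to estimate Shapley values for black-box learners. KernelExplainer itself solves a weighted linear regression over sampled feature coalitions. The sketch below instead uses the simpler Monte Carlo permutation-sampling estimator, which targets the same Shapley values, and it restricts estimation to a reduced feature subset as in the lightweight approximation described earlier. The function name, the all-zeros baseline, and treating features outside the subset as fixed context are illustrative assumptions.

```python
import random
from typing import Callable, Dict, List, Sequence


def permutation_shap(f: Callable[[List[float]], float],
                     x: Sequence[float],
                     baseline: Sequence[float],
                     features: Sequence[int],
                     n_permutations: int = 50,
                     seed: int = 0) -> Dict[int, float]:
    """Monte Carlo permutation estimate of Shapley values for a feature subset.

    Features outside `features` keep their values from `x` (assumption: they are
    treated as fixed context and are not explained).
    """
    rng = random.Random(seed)
    phi = {j: 0.0 for j in features}
    order = list(features)
    for _ in range(n_permutations):
        rng.shuffle(order)                # random order in which features are "switched on"
        z = list(x)
        for j in features:
            z[j] = baseline[j]            # start with the whole subset at the baseline
        prev = f(z)
        for j in order:
            z[j] = x[j]                   # switch feature j on
            cur = f(z)
            phi[j] += cur - prev          # marginal contribution of j in this ordering
            prev = cur
    return {j: total / n_permutations for j, total in phi.items()}


def linear(z: List[float]) -> float:
    return 2 * z[0] - z[1] + 0.5 * z[2] + 3 * z[3]


phi_lin = permutation_shap(linear, [1.0, 2.0, 3.0, 4.0], [0.0] * 4, features=[0, 2])
phi_and = permutation_shap(lambda z: z[0] * z[1], [1.0, 1.0], [0.0, 0.0], features=[0, 1])
```

For a linear model the estimate is exact for every permutation. For any model, the values sum to f(x) minus the output obtained with the subset set to the baseline, which gives a cheap sanity check on an edge device.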

3.5. Evaluation Metrics and Experimental Setup

Detection effectiveness is evaluated with four metrics that capture how well the system identifies and classifies malicious behavior: accuracy, precision, recall, and F1-score.

These metrics are computed from the numbers of true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN). Together, they characterize the classifier’s real-time intrusion detection capability in IIoT environments.
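For reference, the standard definitions behind these metrics can be written as a small helper. The confusion counts in the usage line are made-up illustrative numbers, not results from this study, and returning 0.0 for an empty denominator is a convention chosen here. The helper also includes the false positive rate, FP / (FP + TN), because it is reported alongside these metrics later in the evaluation.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    tp: int
    tn: int
    fp: int
    fn: int


def _safe_div(num: float, den: float) -> float:
    return num / den if den else 0.0  # convention: undefined ratio reported as 0.0


def detection_metrics(c: Confusion) -> dict:
    precision = _safe_div(c.tp, c.tp + c.fp)  # TP / (TP + FP)
    recall = _safe_div(c.tp, c.tp + c.fn)     # TP / (TP + FN)
    return {
        "accuracy": _safe_div(c.tp + c.tn, c.tp + c.tn + c.fp + c.fn),
        "precision": precision,
        "recall": recall,
        "f1": _safe_div(2 * precision * recall, precision + recall),  # harmonic mean
        "fpr": _safe_div(c.fp, c.fp + c.tn),  # FP / (FP + TN)
    }


m = detection_metrics(Confusion(tp=90, tn=80, fp=10, fn=20))
```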

On Raspberry Pi 5, the system correctly classified 96.4% of benign and attack instances. Its precision of 96.1% shows that most triggered alerts correspond to real threats, which limits false alarms. Its recall of 95.7% shows that it detects the large majority of actual attacks, and its F1-score of 95.9% confirms a balanced trade-off between precision and recall. Raspberry Pi 5 performs nearly on par with the workstation, which scores 0.3 to 0.4 percentage points higher because of its greater processing capacity and deeper ensemble configuration. These results show that the lightweight design delivers the accuracy and speed needed for real-time intrusion detection at the edge.
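Because F1 is the harmonic mean of precision and recall, the reported Raspberry Pi 5 figures can be cross-checked with a one-line computation. This check assumes that the reported F1 was derived from the same aggregate precision and recall. If each metric was instead averaged separately over runs, small discrepancies would be expected.

```python
def harmonic_f1(precision: float, recall: float) -> float:
    """F1 is the harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# Reported Raspberry Pi 5 precision and recall, in percent
pi_f1 = harmonic_f1(96.1, 95.7)
print(f"{pi_f1:.1f}")  # 95.9
```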

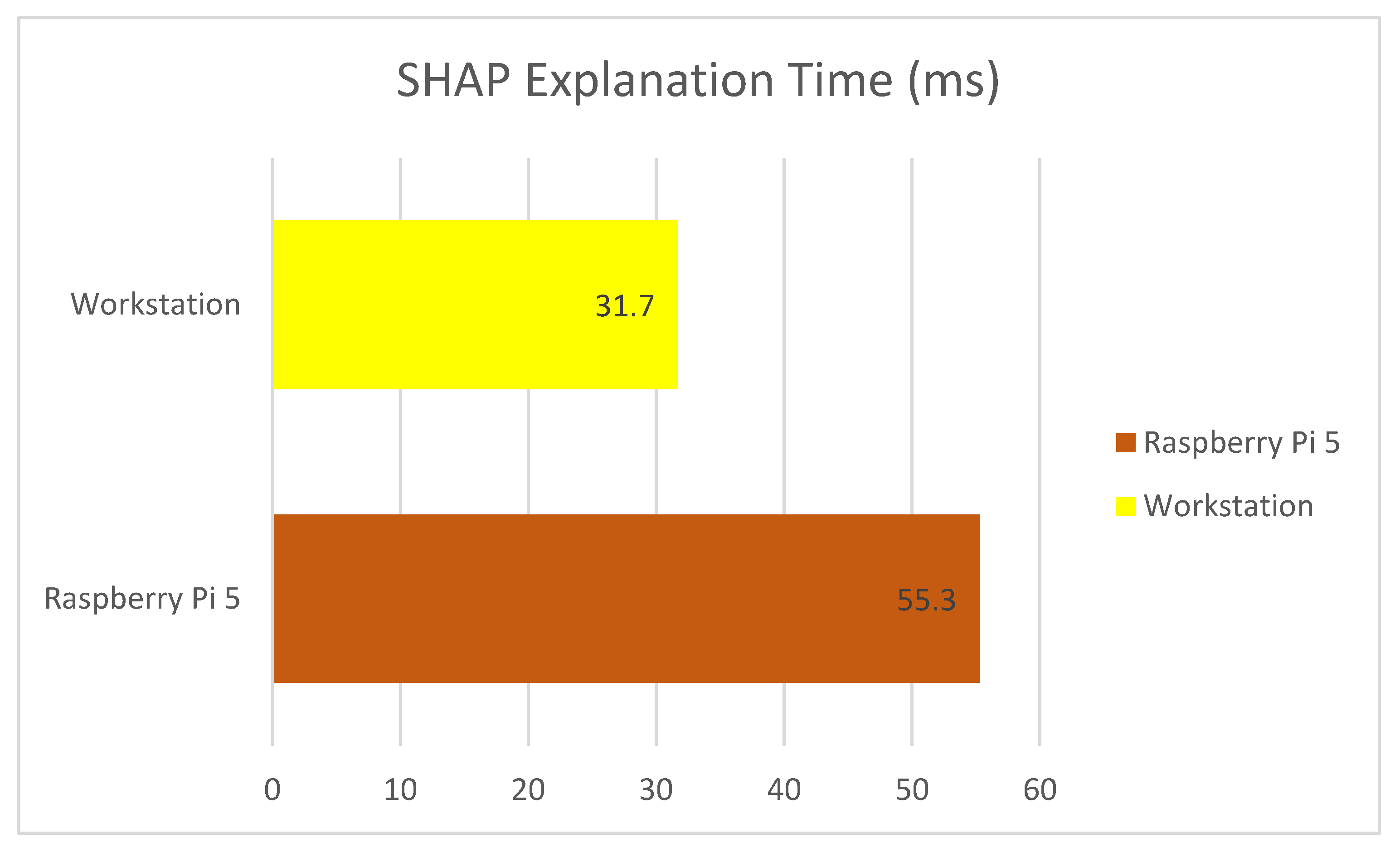

The system was also evaluated on operational aspects that are vital for real-world deployment: false positive rate, inference latency, SHAP explanation time, and adaptability to concept drift.

Raspberry Pi 5 produced a false positive rate (FPR) of 2.1%, only slightly above the workstation’s 1.9%. A low FPR means that few unnecessary alerts are raised, which minimizes alarm fatigue in industrial settings.

Raspberry Pi 5 required an average of 34.8 ms to process each instance, compared with 12.5 ms on the workstation. This sub-50 ms latency aligns with accepted standards for real-time monitoring in IIoT environments. As referenced in prior works, detection systems designed for edge-based anomaly detection or alerting typically target processing delays under 100 ms. The system’s 34.8 ms latency therefore supports applications such as early threat detection and decision support. More critical control functions, such as real-time actuation or process shutdown, may require stricter latency thresholds, and these lie outside the intended scope of the system. The modest increase in processing time on the edge device still satisfies the real-time requirements of the targeted IIoT use cases.

On average, generating SHAP explanations for a window of 100 instances took 55.3 ms on Raspberry Pi 5 and 31.7 ms on the workstation. This additional cost does not introduce unacceptable delays, because explanations serve post-alert review rather than the immediate detection path.

During adaptability testing, the Page–Hinkley and ADWIN drift detectors successfully recognized changes in attack patterns, and the model was updated within approximately 50 instances of each change. The detectors monitored the error distribution and triggered an action when they observed significant drift. The affected model within the ensemble was then either re-initialized or re-weighted, depending on the severity and frequency of the change. This preserved detection accuracy across evolving network conditions without full retraining. The framework therefore combines accurate threat detection with dynamic adaptation, which makes it suitable for active IIoT deployments.
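River provides ready-made `PageHinkley` and `ADWIN` detectors in its `drift` module. The from-scratch sketch below only illustrates what a Page–Hinkley detector tracks when it monitors a stream of prediction errors. The `delta`, `threshold`, and `min_instances` values are illustrative assumptions, not the settings used in this study, and the 0/1 error stream is synthetic.

```python
class PageHinkley:
    """Page-Hinkley test for an increase in the mean of a stream (e.g. 0/1 prediction errors)."""

    def __init__(self, delta: float = 0.005, threshold: float = 50.0, min_instances: int = 30) -> None:
        self.delta = delta                  # tolerated magnitude of change (illustrative)
        self.threshold = threshold          # alarm level for the PH statistic (illustrative)
        self.min_instances = min_instances  # warm-up before alarms are allowed
        self.reset()

    def reset(self) -> None:
        self.n = 0
        self.mean = 0.0
        self.cum = 0.0      # m_T: cumulative sum of (x_t - running mean - delta)
        self.min_cum = 0.0  # M_T: minimum of m_t seen so far

    def update(self, x: float) -> bool:
        """Feed one observation; return True when drift is signalled."""
        self.n += 1
        self.mean += (x - self.mean) / self.n  # incremental running mean
        self.cum += x - self.mean - self.delta
        self.min_cum = min(self.min_cum, self.cum)
        if self.n >= self.min_instances and self.cum - self.min_cum > self.threshold:
            self.reset()  # start fresh after signalling drift
            return True
        return False


# Synthetic error stream: correct predictions, then an abrupt rise in errors at index 500.
errors = [0] * 500 + [1] * 200
ph = PageHinkley()
alarms = [t for t, e in enumerate(errors) if ph.update(e)]
```

On this synthetic stream the detector stays silent while no errors occur and raises a single alarm a few dozen instances after the error rate jumps. Resetting after an alarm corresponds to the point at which an ensemble member would be re-initialized or re-weighted.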

3.5.1. Experimental Setup

To evaluate the real-time capability, efficiency, and deployment feasibility of the proposed adaptive and explainable IDS, we implemented and tested the system in a controlled environment that simulates real-world IIoT network conditions. The setup covers both resource-constrained edge environments and centralized processing nodes, so that scalability and practical integration in industrial scenarios can be assessed.

Hardware Configuration

The system was deployed on the following two platforms:

Edge Device: Raspberry Pi 5, equipped with a 2.4 GHz quad-core Cortex-A76 CPU, 8 GB LPDDR4X RAM, and a PCIe 2.0 interface. Compared with its predecessor, the Pi 5 offers higher processing power, memory bandwidth, and I/O speed, which makes it suitable for real-time AI applications at the edge. Detailed power profiling was not performed, but Raspberry Pi 5 typically consumes around 3–5 watts under moderate workloads. This supports continuous edge deployment in industrial environments with constrained power budgets. The proposed system should also be deployable on other resource-constrained IoT edge nodes with similar processing capabilities, such as Jetson Nano, BeagleBone Black, or ARM-based industrial gateways.

Workstation: Intel Core i7-12700H laptop (14 cores, 20 threads, 32 GB RAM, 1 TB SSD), used as a baseline for centralized processing and for rendering SHAP explanations offloaded from the edge.

This setup allows for meaningful performance benchmarking between real-time, local edge inference and more compute-intensive centralized operations such as global model visualization or ensemble aggregation.

Software Configuration

The system was developed in Python 3.11 with the following libraries and frameworks:

- River, for adaptive online learning algorithms that operate on data streams.
- SHAP, for transparent model explanations at both the instance and feature levels.
- Scikit-learn, for conventional preprocessing and validation methods.
- Matplotlib 3.8.0, for visualizations of interpretability and performance results.

The entire system operates within a Linux-based virtual environment, which enables reproducibility and modular deployment across different hardware types.

Simulated IIoT Environment

We built an MQTT-based IIoT simulation that replicates the operation of a real-world industrial network. Telemetry-like records from the ToN-IoT and BoT-IoT datasets were streamed in real time through a lightweight MQTT broker as JSON-formatted messages. Message rates varied to reproduce typical IIoT network behaviors, including burst traffic, device activity changes, and attack simulations. To emulate burst traffic, the simulation injected high-frequency MQTT messages (at 50–100 ms intervals) in short, randomized bursts lasting 2–5 s, mimicking attack phases or sensor overloads. Between bursts, the message rate returned to normal operating levels (500–1000 ms intervals) to simulate steady-state device behavior. Device activity changes were represented by shifts in JSON payload structure, device IDs, and protocol types. These shifts reflect the transitions between idle, active monitoring, and alert-generating states that are common in industrial automation. Anomalous traffic patterns were randomly interleaved to evaluate the system’s adaptability under real-time streaming conditions.

The IDS analyzes each instance of the stream with its online learners to detect attacks, while producing SHAP explanations that analysts can review in near real time. On Raspberry Pi 5, average inference latency stayed below 35 ms while SHAP visualizations were generated for sampled windows, without introducing system lag. This setup resembles SCADA-like control systems in industrial IoT environments, where timely anomaly detection is crucial for system stability. The lightweight detection framework can therefore operate within SCADA environments, providing early warning and adaptive response without disrupting core industrial processes.
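The burst and steady-state timing described above can be sketched as a message schedule. This is a hypothetical generator, not the simulation code used in the study. The per-slot probability of starting a burst (`burst_prob`) is an assumption, since the text does not state how often bursts begin. Payload construction and the MQTT publish call (for example, through an MQTT client library such as paho-mqtt) are omitted.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass
class ScheduledMessage:
    t: float    # send time in seconds from the start of the simulation
    phase: str  # "burst" or "normal"
    gap: float  # delay until the next message, in seconds


def traffic_schedule(total_s: float, burst_prob: float = 0.1, seed: int = 0) -> List[ScheduledMessage]:
    """Hypothetical schedule: 2-5 s bursts at 50-100 ms, otherwise 500-1000 ms intervals."""
    rng = random.Random(seed)
    t, out = 0.0, []
    while t < total_s:
        if rng.random() < burst_prob:                # assumed chance that a burst starts here
            burst_end = t + rng.uniform(2.0, 5.0)    # burst lasts 2-5 s
            while t < burst_end and t < total_s:
                gap = rng.uniform(0.05, 0.10)        # 50-100 ms between burst messages
                out.append(ScheduledMessage(t, "burst", gap))
                t += gap
        else:
            gap = rng.uniform(0.5, 1.0)              # 500-1000 ms steady-state interval
            out.append(ScheduledMessage(t, "normal", gap))
            t += gap
    return out


schedule = traffic_schedule(total_s=300)
```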

4. Results and Discussion

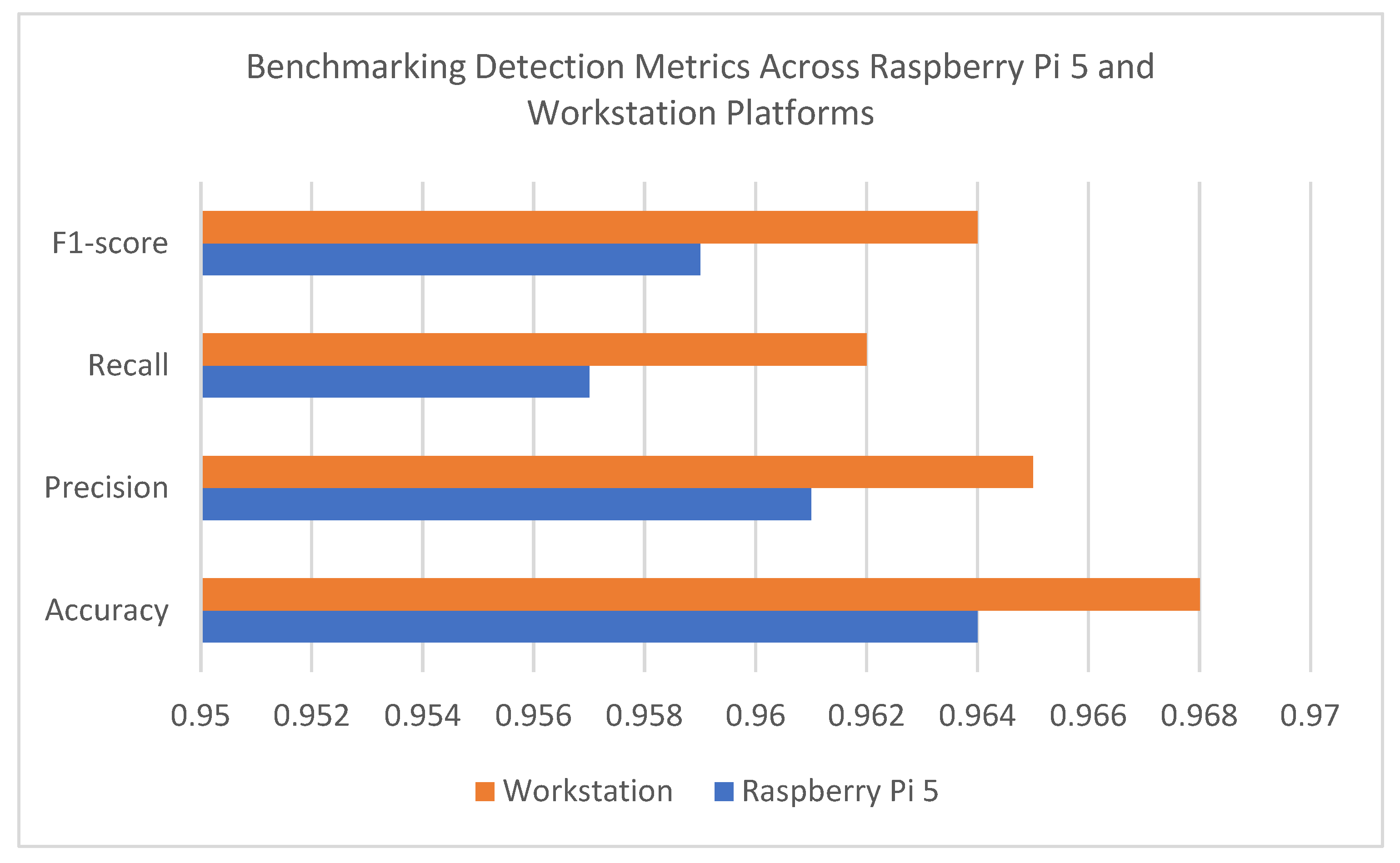

The system’s ability to accurately detect and classify malicious activity is measured using four core metrics: accuracy, precision, recall, and F1-score. As illustrated in Figure 2, Raspberry Pi 5 achieved 96.4% accuracy, 96.1% precision, 95.7% recall, and an F1-score of 95.9%. In comparison, the workstation slightly outperformed the edge device, reaching up to 96.8% accuracy. Despite this small margin, Raspberry Pi 5 maintained strong and reliable performance across all detection metrics, confirming its suitability for real-time IIoT deployments.

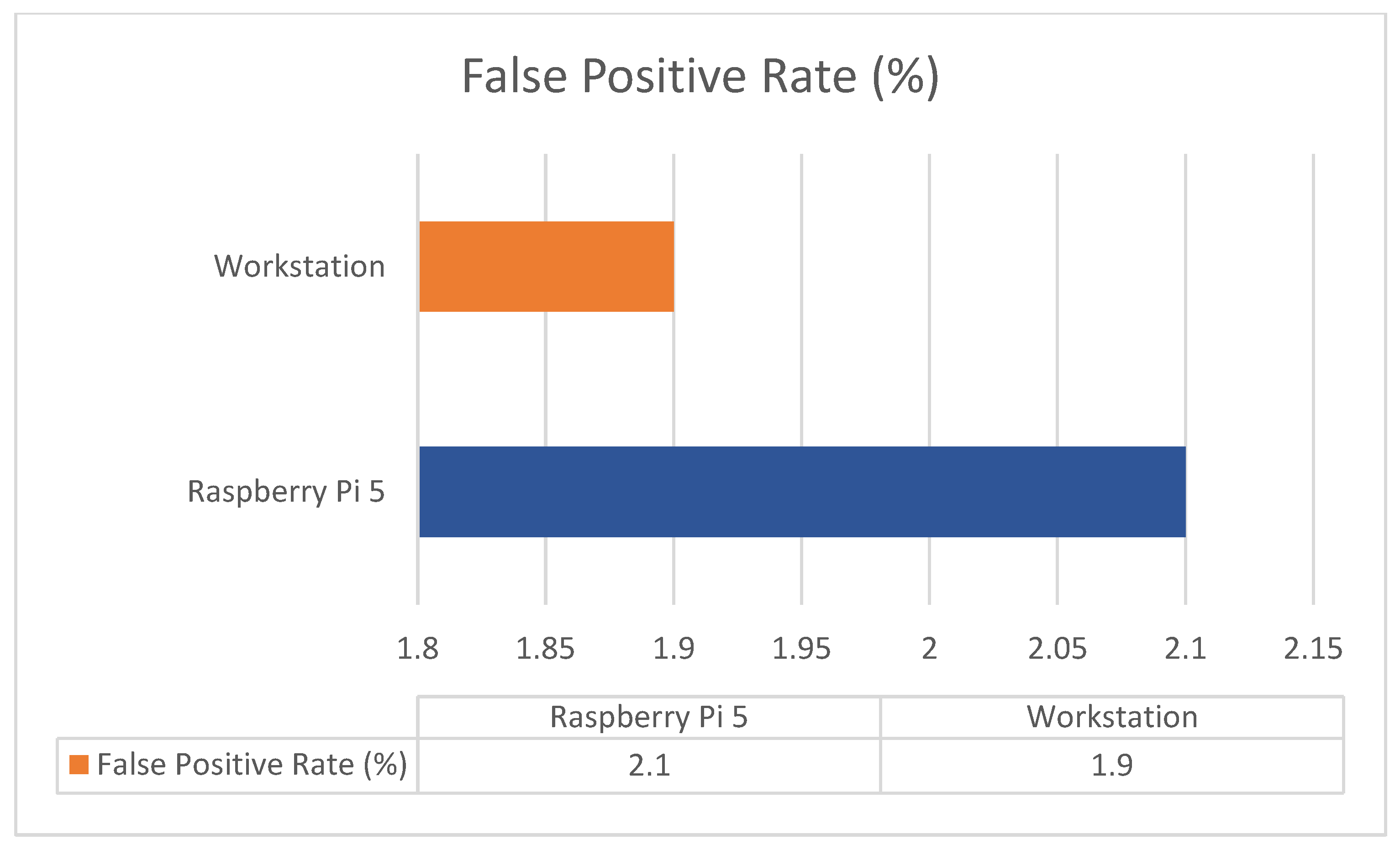

4.1. False Positive Rate (FPR)

The false positive rate is a vital performance indicator for intrusion detection systems, because frequent false alarms create excessive work for analysts and erode trust in the system. Raspberry Pi 5 produced an FPR of 2.1%, while the workstation achieved a slightly better 1.9%. Raspberry Pi 5 raises a few more false alerts, which is consistent with its more limited hardware, but its FPR remains within operational limits, as shown in Figure 3.

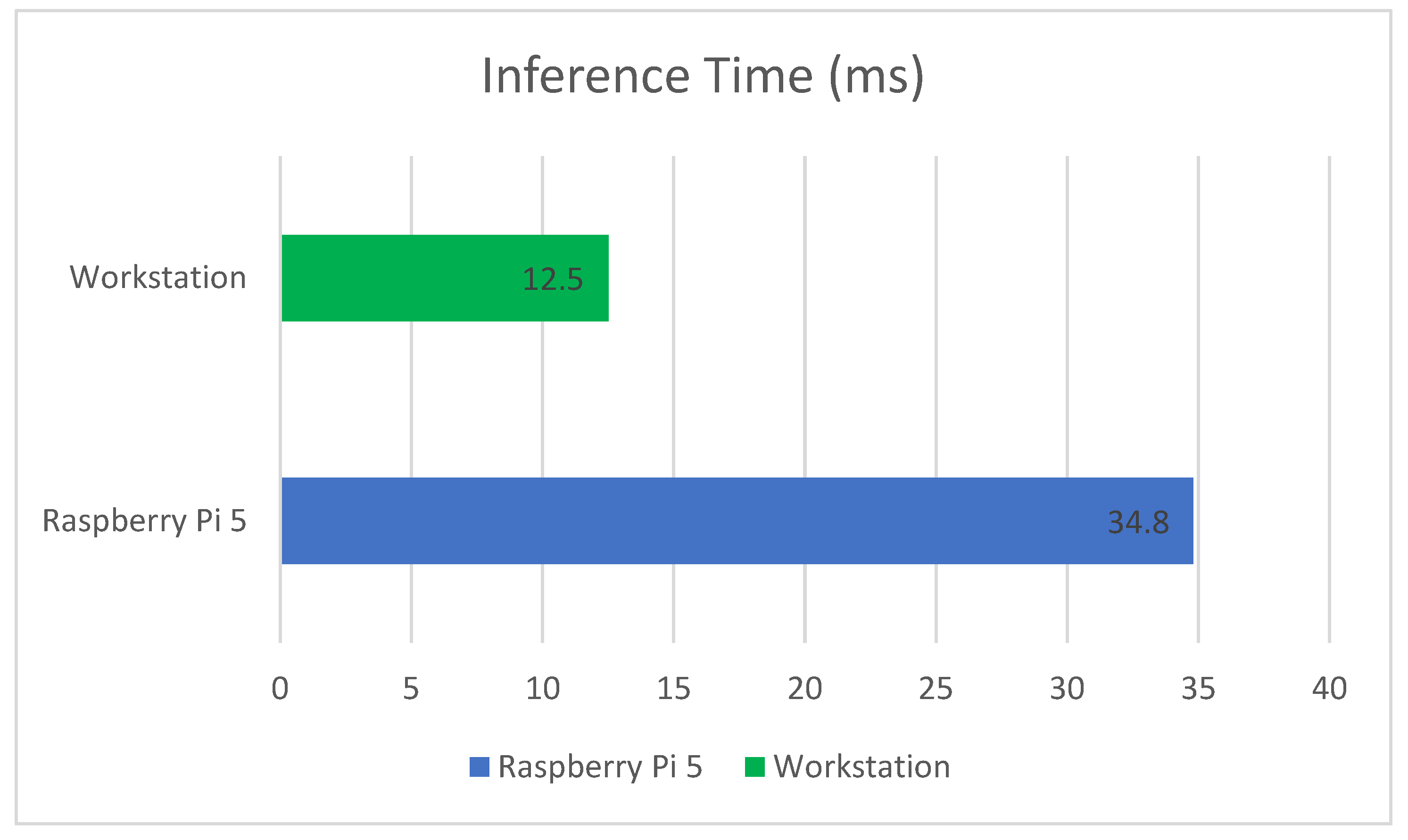

4.2. Inference Time

Inference time is the time the system needs to classify a single data instance, and fast reaction is a critical requirement for edge deployment. As shown in Figure 4, Raspberry Pi 5 required an average of 34.8 ms per instance, compared with 12.5 ms on the workstation. The Pi 5 is slower than the workstation but stays below the 50 ms real-time processing threshold, which makes it suitable for edge-based intrusion detection.
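Per-instance latency of the kind reported here can be measured with a simple wall-clock harness around the prediction call. The sketch below is an assumption about methodology, not the study’s benchmark code: `predict` stands in for the online ensemble, and the toy stream is synthetic. Reporting a high percentile next to the mean is a design choice that exposes tail latency, which an average alone can hide.

```python
import statistics
import time
from typing import Callable, Iterable, Sequence


def per_instance_latency_ms(predict: Callable[[Sequence[float]], int],
                            stream: Iterable[Sequence[float]]) -> dict:
    """Time each prediction separately and summarize the latencies in milliseconds."""
    samples = []
    for x in stream:
        start = time.perf_counter()
        predict(x)
        samples.append((time.perf_counter() - start) * 1000.0)  # seconds -> ms
    if not samples:
        raise ValueError("empty stream")
    samples.sort()
    return {
        "mean_ms": statistics.fmean(samples),
        "p95_ms": samples[int(0.95 * (len(samples) - 1))],  # approximate 95th percentile
        "n": len(samples),
    }


# Toy usage with a trivial stand-in classifier and a synthetic stream
stats = per_instance_latency_ms(lambda x: int(sum(x) > 1.0),
                                [[0.1 * i, 0.2] for i in range(1000)])
within_budget = stats["mean_ms"] < 50.0  # the 50 ms real-time threshold used in the text
```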

4.3. SHAP Explanation Time

SHAP (SHapley Additive exPlanations) provides interpretability at an additional computational cost, which was measured over a rolling window of 100 predictions. As shown in Figure 5, the explanation time per window averaged 55.3 ms on Raspberry Pi 5 and 31.7 ms on the workstation. Because explanations are generated asynchronously, this delay on the edge device does not affect immediate threat detection and remains acceptable for analyst review.
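The reported averages also imply rough throughput figures. The arithmetic below assumes one sequential detection worker and ignores CPU contention from the asynchronous SHAP job. Under those assumptions, at 34.8 ms per instance Raspberry Pi 5 handles about 28.7 instances per second, against 80 on the workstation. That rate exceeds the 20 messages per second of a single publisher at the fastest simulated burst interval of 50 ms. The 55.3 ms window explanation amortizes to about 0.55 ms per instance.

```python
# Reported averages, in milliseconds
pi_infer_ms, ws_infer_ms = 34.8, 12.5
pi_shap_window_ms, window = 55.3, 100

pi_throughput = 1000 / pi_infer_ms                 # instances per second, one sequential worker
ws_throughput = 1000 / ws_infer_ms
pi_shap_per_instance = pi_shap_window_ms / window  # amortized explanation cost per instance (ms)
burst_rate = 1000 / 50                             # fastest simulated burst: one message every 50 ms

print(round(pi_throughput, 1), ws_throughput, round(pi_shap_per_instance, 3),
      pi_throughput >= burst_rate)
```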

In addition to platform-specific performance, we compared the proposed system against recent state-of-the-art IDSs reported in the literature.

Table 2 presents this comparison across commonly used benchmarks, including ToN-IoT, BoT-IoT, and CICIDS2017. Several of the included models, such as ShaTS and LENS-XAI, are also evaluated on ToN-IoT, which enables a more meaningful baseline comparison. The table highlights differences in detection accuracy, precision, recall, false positive rate, latency, and explainability. The results in Table 2 show that the proposed system achieves a well-balanced trade-off between high detection performance, low false alarms, real-time edge readiness, and interpretable output, making it competitive for practical IIoT deployment.

The system’s ability to adapt to changes in network behavior (e.g., evolving attack types) was tested using the Page–Hinkley and ADWIN drift detectors. Both detectors successfully identified changes during simulated transitions between attack scenarios (e.g., from DDoS to data theft), and the model triggered updates within approximately 50 instances of each shift. This shows that the system can adjust its learning behavior dynamically to maintain accuracy over time.
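The re-initialize or re-weight response described in the evaluation can be sketched as a weighted-vote ensemble. The text does not specify how severity and frequency are measured, so the rule used here is a hypothetical stand-in. A member whose drift detector fires once has its weight halved, and a member that drifts `reinit_after` times within `horizon` instances is replaced by a freshly built learner. To keep the sketch self-contained, members are plain callables rather than River estimators.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Sequence

Predictor = Callable[[Sequence[float]], int]


@dataclass
class Member:
    factory: Callable[[], Predictor]  # builds a fresh learner (hypothetical interface)
    weight: float = 1.0
    drift_times: List[int] = field(default_factory=list)

    def __post_init__(self) -> None:
        self.model: Predictor = self.factory()


class DriftAwareEnsemble:
    def __init__(self, members: List[Member], reinit_after: int = 2, horizon: int = 500) -> None:
        self.members = members
        self.reinit_after = reinit_after  # hypothetical: drifts within `horizon` that force a reset
        self.horizon = horizon
        self.t = 0  # number of instances processed so far

    def predict(self, x: Sequence[float]) -> int:
        self.t += 1
        votes: Dict[int, float] = {}
        for m in self.members:
            label = m.model(x)
            votes[label] = votes.get(label, 0.0) + m.weight  # weighted vote
        return max(votes, key=votes.__getitem__)

    def on_drift(self, i: int) -> str:
        """Called when member i's drift detector fires."""
        m = self.members[i]
        recent = [t for t in m.drift_times if self.t - t < self.horizon]
        m.drift_times = recent + [self.t]
        if len(m.drift_times) >= self.reinit_after:  # frequent drift: start over
            m.model, m.weight, m.drift_times = m.factory(), 1.0, []
            return "reinitialized"
        m.weight *= 0.5  # isolated drift: reduce the member's influence
        return "reweighted"


ens = DriftAwareEnsemble([Member(lambda: (lambda x: 1)),
                          Member(lambda: (lambda x: 0)),
                          Member(lambda: (lambda x: 0))])
first = ens.on_drift(1)    # first drift on member 1
vote = ens.predict([0.0])  # weight 1.0 votes 1; weights 0.5 + 1.0 vote 0
second = ens.on_drift(1)   # second drift within the horizon
```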

4.4. Statistical Significance Testing

To assess whether the observed performance differences between Raspberry Pi 5 and the workstation are statistically significant, we conducted independent two-sample t-tests on key metrics: accuracy, F1-score, false positive rate (FPR), inference latency, and SHAP explanation time. Each metric was computed over 30 independent runs on each platform.

The results show that the differences in accuracy (p = 0.072) and F1-score (p = 0.061) are not statistically significant at the 0.05 level, suggesting that both platforms achieve comparable detection performance. However, inference latency (p < 0.001) and SHAP explanation time (p < 0.001) differences were statistically significant, confirming the expected speed advantage of the workstation due to its superior computational resources.
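The test statistic behind these comparisons is straightforward to compute. The sketch below implements Student’s pooled-variance two-sample t statistic using only the standard library. With 30 runs per platform, the test has 30 + 30 - 2 = 58 degrees of freedom. The sample values in the usage line are toy numbers. In practice, `scipy.stats.ttest_ind(a, b)` returns both the statistic and the p-value. Passing `equal_var=False` selects Welch’s test, which is safer when the two platforms may have very different variances, as is likely for latency here.

```python
import math
import statistics
from typing import Sequence, Tuple


def pooled_t_test(a: Sequence[float], b: Sequence[float]) -> Tuple[float, int]:
    """Student's independent two-sample t statistic (equal variances) and its degrees of freedom."""
    na, nb = len(a), len(b)
    va, vb = statistics.variance(a), statistics.variance(b)   # sample variances (n - 1 denominator)
    pooled = ((na - 1) * va + (nb - 1) * vb) / (na + nb - 2)  # pooled variance estimate
    se = math.sqrt(pooled * (1 / na + 1 / nb))                # standard error of the mean difference
    return (statistics.fmean(a) - statistics.fmean(b)) / se, na + nb - 2


t_stat, df = pooled_t_test([1.0, 2.0, 3.0], [4.0, 5.0, 6.0])
```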

Despite this, Raspberry Pi 5 consistently maintained inference and explanation times within acceptable bounds for real-time IIoT deployment, validating the practicality of the proposed system for edge environments, as shown in Table 3.

The quantitative results directly address the core challenges highlighted in our problem statement, namely the need for accurate, interpretable, and real-time threat detection in resource-constrained IIoT environments. High detection accuracy combined with a low FPR keeps false alerts rare, which mitigates alarm fatigue and supports system reliability. In addition, the sub-35 ms inference latency on Raspberry Pi 5 shows that our solution meets the real-time processing demands of edge networks. These outcomes validate our design goals and show that the proposed system balances detection performance, trust, and deployment efficiency, all of which are critical for maintaining operational continuity in industrial settings.

5. Conclusions

This study proposed a lightweight, adaptive, and explainable intrusion detection system (IDS) for Industrial IoT (IIoT) environments. By combining online learning models with SHAP-based interpretability, the system achieved accurate and transparent threat detection on resource-constrained edge devices such as Raspberry Pi 5. Experimental evaluations on the ToN-IoT and BoT-IoT datasets demonstrated high detection accuracy (96.4%), a low false positive rate (2.1%), and inference latency under 50 ms, meeting real-time processing requirements. Additionally, the system successfully adapted to evolving threats through concept drift detection, ensuring sustained performance in dynamic network conditions.

These results confirm that trustworthy, adaptable IDS solutions are practical for real-world IIoT edge deployments. Future work will explore decentralized threat intelligence through federated learning, enabling distributed IIoT nodes to collaboratively train detection models without centralizing sensitive data. We also plan to integrate support for additional IIoT protocols such as Modbus, OPC UA, and PROFINET, while addressing challenges related to protocol heterogeneity. Finally, we aim to optimize explanation delivery using real-time interactive dashboards and simplified SHAP visualizations, enhancing decision-making speed and clarity for security analysts operating in time-critical environments.