1. Introduction

Traditional door-locking mechanisms face challenges such as lost keys, duplication risks, and unauthorized access. Facial recognition offers a secure and user-friendly alternative by leveraging unique biological features [1]. With advancements in artificial intelligence (AI) and computer vision, facial recognition is now widely adopted in banking, healthcare, and public security, making it a crucial component of smart access control systems.

Facial recognition systems integrate computer vision and AI to enable real-time detection and authentication. Computer vision extracts facial features, while AI continuously improves accuracy through adaptive learning. These technologies create a robust and scalable security solution that is capable of adapting to environmental changes, enhancing its overall reliability.

This paper presents a Raspberry Pi-based face recognition door lock system (Raspberry Pi Ltd., Cambridge, UK), offering affordability, scalability, and advanced technological capabilities. The system employs a camera module for real-time face detection, a solenoid lock for secure access, and software optimizations for improved performance. Adaptive learning allows the system to update facial data dynamically, accommodating variations in appearance due to aging, hairstyles, or accessories without requiring manual retraining. Preprocessing techniques, including lighting normalization and feature extraction, ensure consistent performance across different environmental conditions, making the system effective even in low-light settings.

An additional feature, emotion detection, enhances user interaction by recognizing emotional states such as happiness, anger, or surprise, extending the system’s applications beyond security to workplaces, educational institutions, and public spaces. Lighting condition adaptation ensures reliable operation under varying illumination conditions, reducing the impact of shadows or glare on recognition accuracy. Scaling accuracy further ensures that users can be identified at different distances and angles, increasing flexibility and usability.

The system also integrates real-time notifications, allowing the Raspberry Pi to communicate with connected devices without requiring an app. Recognized users receive voice feedback indicating access, while unauthorized detections trigger alerts, ensuring enhanced security and user awareness. This feature extends the system’s usability to scenarios requiring real-time monitoring, such as office environments and restricted facilities. Multiple authorized face recognition further allows for seamless multi-user support without requiring separate hardware configurations.

By combining adaptive learning, real-time processing, and smart security features, this study delivers a practical, scalable, and efficient solution to modern access control challenges. The proposed system addresses key limitations of existing solutions by enhancing recognition accuracy, improving usability, and enabling real-time adaptability to changing conditions.

This paper presents a Raspberry Pi-based facial recognition door lock system that balances affordability, scalability, and advanced technology. By leveraging the Raspberry Pi’s processing power, the system integrates real-time facial recognition with adaptive learning, refining its accuracy over time. Robust preprocessing techniques mitigate environmental factors like poor lighting and variable distances, ensuring consistent performance. Beyond its technical capabilities, this system offers operational advantages such as real-time notifications for access attempts, keeping users informed and in control. Multi-user management without retraining simplifies access control, making it ideal for shared environments like offices, schools, and multi-tenant buildings. This paper delivers a comprehensive, efficient, and reliable access management solution by addressing limitations of traditional locks and biometric systems. To achieve this, the system integrates a Raspberry Pi, camera module, relay module, and solenoid lock for real-time operation. The software development phase introduces adaptive face recognition, emotion detection, and preprocessing for lighting adaptation, ensuring reliable performance in diverse conditions while maintaining high accuracy and efficiency.

Low cost and standalone design:

Real-time face recognition:

Emotion detection enhancement:

Secure and automated door lock system:

Recognized faces trigger an automated unlocking mechanism.

Unrecognized faces keep the door locked, enhancing security.

Scalability and customization:

2. Related Works

This review assesses research on facial recognition-based access control systems, particularly those utilizing Raspberry Pi for cost-effective implementation. Various approaches integrating machine learning, edge computing, and preprocessing techniques have been explored to enhance recognition accuracy and system adaptability.

Nasreen Dakhil Hasan and Adnan M. Abdulazeez [2] conducted a comprehensive review of deep learning-based facial recognition techniques. Their study highlights the advancements in convolutional neural networks (CNNs) and autoencoders for improving accuracy across various conditions, including lighting changes, occlusions, and facial expressions. The review also discusses emerging technologies such as 3D facial reconstruction and multimodal biometrics, emphasizing ethical concerns like privacy and bias in AI-driven facial recognition. While their work provides valuable insights into the broader landscape of deep learning applications in face recognition, our study focuses on optimizing real-time performance for Raspberry Pi while maintaining efficiency and adaptability.

M. Alshar’e et al. [3] proposed a deep learning-based home security system using MobileNet and AlexNet for biometric verification. While their CNN-based hierarchical feature extraction improves recognition under controlled conditions, the computational complexity limits its real-time application on Raspberry Pi. Privacy concerns regarding biometric data storage were also highlighted, advocating for on-device processing and encryption. Our study optimizes recognition efficiency for Raspberry Pi while incorporating adaptive learning and preprocessing enhancements.

A. Jha et al. [4] developed a low-cost access control system using the Haar cascade classifier on Raspberry Pi. While their approach is efficient for resource-constrained devices, its limitations include poor performance under low lighting, rigid facial angles, and a static face dataset requiring manual updates. Our study addresses these challenges through advanced preprocessing, dynamic face database updates, and real-time notifications for improved scalability and adaptability.

A. D. Singh et al. [5] implemented a Haar-cascade-based facial recognition system for residential access control, emphasizing affordability and simplicity. However, accuracy issues under varying lighting conditions and the lack of dynamic database updates restricted its effectiveness. We enhance this framework by integrating adaptive learning, robust preprocessing, and real-time notifications for improved usability and security.

D. G. Padhan et al. [6] utilized the HOG algorithm with IoT integration for facial recognition-based home security. While computationally efficient, this system struggles in low-light conditions and requires manual database updates, limiting its scalability. Our work improves recognition accuracy through preprocessing techniques such as illumination normalization and gamma correction, while adaptive learning automates database updates.

N. F. Nkem et al. [7] explored PCA-based facial recognition for low-power security applications, emphasizing dimensionality reduction. However, PCA’s sensitivity to lighting and facial orientation and its static database restrict its real-world applicability. Our system overcomes these limitations by integrating adaptive learning and preprocessing techniques, ensuring higher accuracy and robustness.

In summary, prior studies have laid the foundation for Raspberry Pi-based facial recognition systems but often lacked adaptability, real-time learning, and robust preprocessing for varying environmental conditions. Our work builds on these findings by addressing computational constraints, enhancing scalability, and integrating real-time security features to create a more efficient and practical access control system.

3. Design Methodology

Building the Raspberry Pi-based facial recognition door lock required hardware integration, adaptive facial recognition software development, and rigorous system testing to verify performance. The implementation process prioritizes safety, reliability, scalability, and usability. It required careful integration of hardware and software, attention to the Raspberry Pi’s processor limitations, and handling of environmental variables.

The HOG algorithm segments an image into small cells, calculates a Histogram of Oriented Gradients for each cell, and then normalizes local contrast over overlapping blocks. It is typically combined with machine learning methods such as Support Vector Machines (SVMs) for object detection.
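The cell and block steps described above can be sketched in a few lines of NumPy. This is an illustrative sketch only, not the detector used in the system: the 8 × 8 cell size, the 9 unsigned orientation bins, the 2 × 2 block size, and the function names `cell_histograms` and `normalize_blocks` are all assumptions chosen for the example.

```python
import numpy as np

def cell_histograms(gray, cell=8, bins=9):
    """Return a (rows, cols, bins) array of gradient-orientation histograms."""
    gray = np.asarray(gray, dtype=np.float64)
    gy, gx = np.gradient(gray)                      # gradients along rows (y) and columns (x)
    magnitude = np.hypot(gx, gy)
    angle = np.degrees(np.arctan2(gy, gx)) % 180.0  # unsigned orientation in [0, 180)
    rows, cols = gray.shape[0] // cell, gray.shape[1] // cell
    # Assign every pixel to one orientation bin (hard binning, no interpolation).
    bin_index = np.minimum((angle / (180.0 / bins)).astype(int), bins - 1)
    hist = np.zeros((rows, cols, bins))
    for r in range(rows):
        for c in range(cols):
            window = (slice(r * cell, (r + 1) * cell),
                      slice(c * cell, (c + 1) * cell))
            # Each pixel votes for its bin, weighted by gradient magnitude.
            hist[r, c] = np.bincount(bin_index[window].ravel(),
                                     weights=magnitude[window].ravel(),
                                     minlength=bins)
    return hist

def normalize_blocks(hist, eps=1e-6):
    """L2-normalize overlapping 2x2 blocks of cells and concatenate them."""
    rows, cols, _ = hist.shape
    blocks = []
    for r in range(rows - 1):
        for c in range(cols - 1):
            v = hist[r:r + 2, c:c + 2].ravel()
            blocks.append(v / np.sqrt(np.sum(v ** 2) + eps ** 2))
    return np.concatenate(blocks)
```

The resulting descriptor is what a classifier such as an SVM would receive as input. Production HOG implementations usually add bin interpolation, which this sketch omits for brevity.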

Adaptive learning, in its original educational sense, personalizes learning experiences according to each individual’s requirements and pace, using technology to deliver customized information, evaluations, and feedback, and it improves results by addressing each learner’s distinct strengths and limitations. This work applies the same idea of personalization to face recognition: the stored facial data for each user are updated over time so that recognition follows that user’s changing appearance.

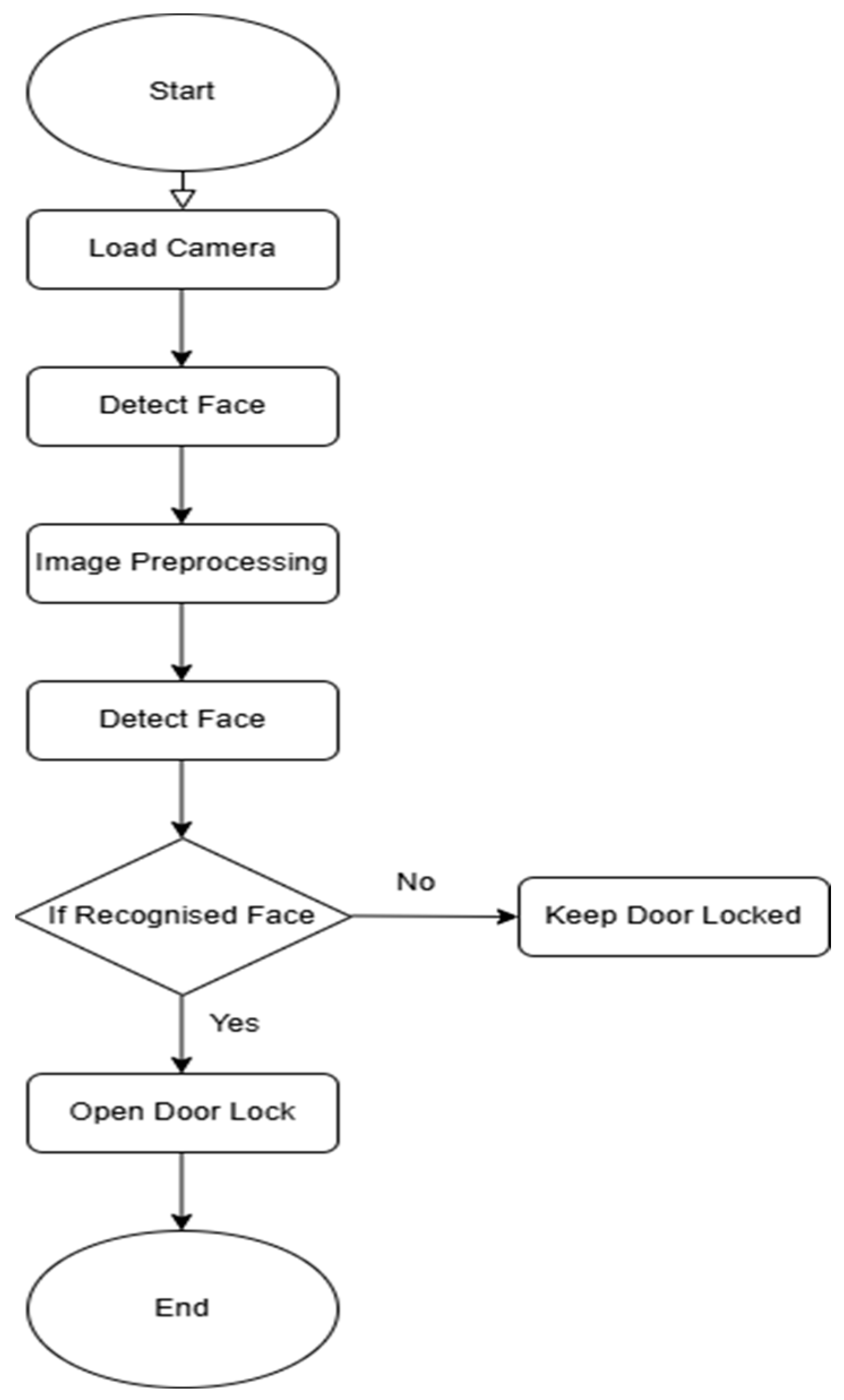

Figure 1 illustrates the system’s operational workflow, from camera initialization to final authentication, providing a structured approach to secure access control.

Each block in Figure 1 represents a key process. The system is powered on, initialized, and prepared for operation. The local camera is then ready to receive input from a person at the door. When the Raspberry Pi receives this input, it activates the system and switches to camera mode. The captured frame is preprocessed, and the face in it is detected and encoded. The system then compares the detected face with the stored encodings in its library. If the face matches a registered user, the system unlocks the door. Otherwise, the door remains locked and access is denied.

The system’s main processor, the Raspberry Pi, is connected to a camera module for real-time video and to a relay module that drives a solenoid lock for physical access. Each hardware component was selected for compatibility, cost, and low power consumption. Careful GPIO wiring during assembly ensured reliable communication between the Raspberry Pi and its peripheral devices. The software is written in Python 3.12.6 and uses the OpenCV and DeepFace libraries for facial recognition and emotion detection. Adaptive learning dynamically updates the authorized face database, preprocessing adjusts to environmental conditions, and real-time notifications inform users of access events. The code coordinates hardware and software so that the system responds smoothly.

System stability and scalability require extensive testing and validation. The system was evaluated under various conditions, including variations in lighting, distances, and facial orientations, to assess its performance. Metrics such as detection accuracy, processing time, and emotion detection effectiveness were analyzed to ensure robustness. As highlighted in [8], implementing an embedded facial recognition system on Raspberry Pi requires balancing computational efficiency, image processing techniques, and algorithm selection to achieve optimal performance under real-world conditions. Testing insights led to iterative system modifications, further enhancing its functionality and reliability.

3.1. Hardware Implementation

The hardware implementation of the Raspberry Pi-based facial recognition door lock system ensures smooth operation and reliability. This section details the setup, including components, roles, and connections. The Raspberry Pi Model 3B serves as the central processor, handling facial recognition, emotion detection, and device connectivity. It interfaces with a camera module for real-time video input and a relay module controlling the solenoid lock for secure access.

Supporting components such as DC barrel jacks, a microSD card, jumper wires, and power supplies ensure stable connections. The relay module lets the Raspberry Pi’s low-voltage GPIO output safely switch the 12 V solenoid lock circuit, while separate 5 V and 12 V power supplies support system reliability. The Raspberry Pi stores the operating system and scripts on the microSD card.

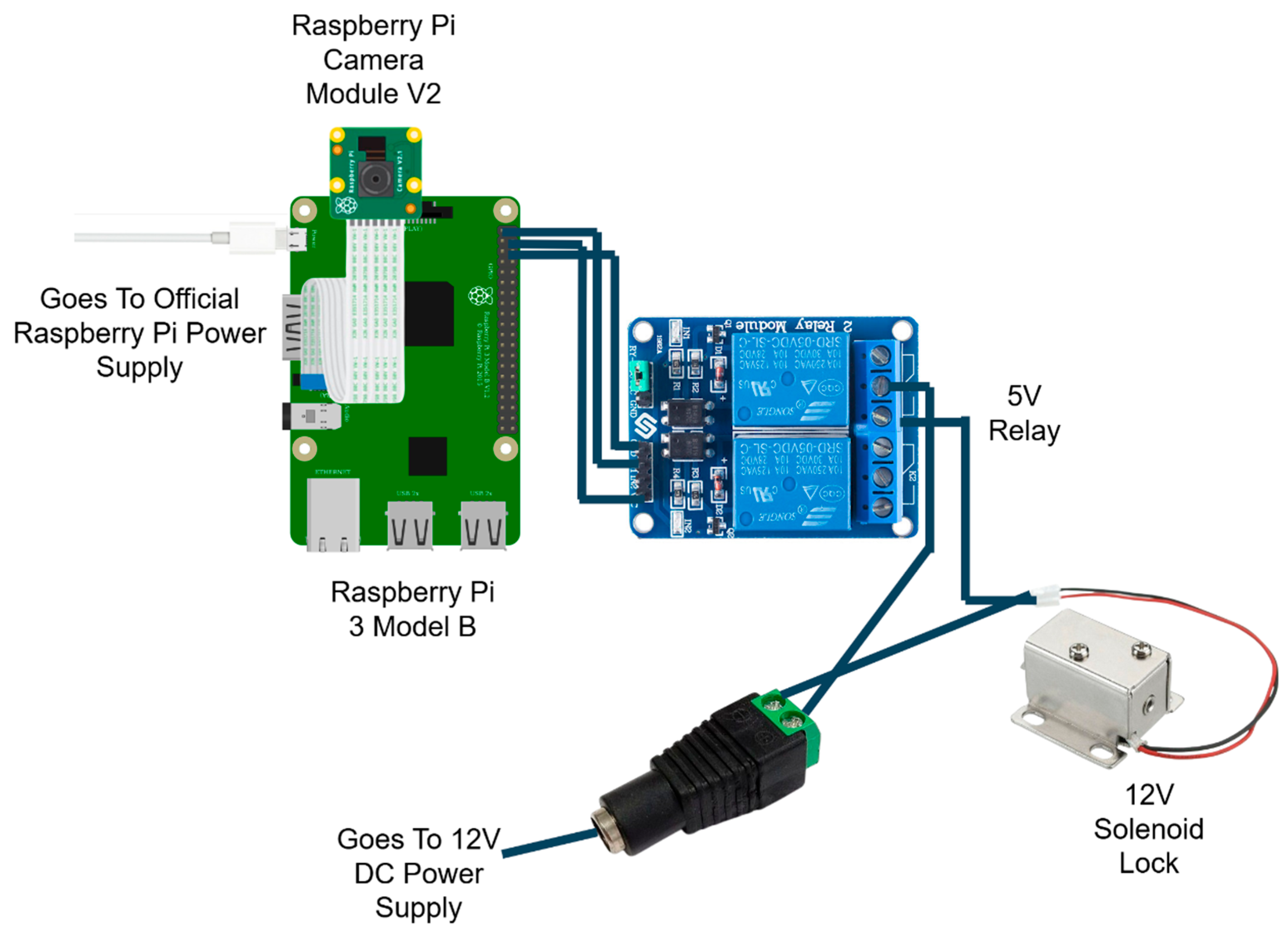

Figure 2 presents a circuit schematic illustrating the interactions between the hardware components, demonstrating the system’s connectivity and functionality.

This diagram visually explains the flow of power and control signals within the system, offering clarity on the role and connectivity of each component. It simplifies the understanding of how the Raspberry Pi interacts with the solenoid lock through the relay module while maintaining separate power supplies for different components.

3.2. Hardware Assembly

This section describes the process of assembling the hardware components of the Raspberry Pi-based face recognition door lock system. The assembly integrates the Raspberry Pi, camera module, relay module, solenoid lock, and other necessary components into a functional setup. The objective is to ensure secure connections, operational reliability, and a well-organized layout for easy maintenance.

The preloaded microSD card containing the operating system and software is inserted into the Raspberry Pi Model 3B’s slot. The Raspberry Pi Camera Module is connected to the CSI (Camera Serial Interface) port, ensuring that the metal contacts on the ribbon cable face the contacts of the CSI port for proper connectivity. The Raspberry Pi is secured on a stable platform or within a protective case to prevent damage during assembly. The relay module is positioned close to the Raspberry Pi for tidy and secure wiring. The positive terminal of the 12 V solenoid lock is connected to the relay’s NO (Normally Open) terminal. Basic GPIO scripts are then run on the Raspberry Pi to test the functionality of the relay and solenoid lock, ensuring that the relay triggers correctly and the solenoid lock responds as intended.

The hardware implementation forms the backbone of the system, integrating multiple components into a cohesive and functional setup. Each component, from the Raspberry Pi Model 3B to the solenoid lock and relay module, is vital in ensuring secure and reliable access control. The carefully designed circuit connections and the logical assembly process provide a robust foundation for the system, and the power supply arrangement and adaptable configuration further enhance its efficiency and scalability. This hardware platform sets the stage for integration with the software and testing phases.
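A relay test of the kind mentioned in this section might look like the following minimal sketch. It is not the script used in this work. The function name `pulse_relay`, the 3-second hold time, and the active-high relay input are assumptions. To keep the logic testable off the device, the pin write is passed in as a callable; on the Raspberry Pi this callable would wrap a GPIO library call for the pin wired to the relay input.

```python
import time

def pulse_relay(set_pin, hold_seconds=3.0, active_high=True, sleep=time.sleep):
    """Energize the relay, hold it, then release it so the lock re-engages.

    set_pin      -- callable taking 1 or 0; on the Pi it would drive the relay pin
    active_high  -- assumption: many relay boards are active-low instead
    """
    on, off = (1, 0) if active_high else (0, 1)
    set_pin(on)            # relay closes the NO contact, powering the solenoid
    try:
        sleep(hold_seconds)
    finally:
        set_pin(off)       # always release, even if the wait is interrupted

# Dry run without hardware: record the pin writes instead of driving a pin.
writes = []
pulse_relay(writes.append, hold_seconds=0.0)
```

Because the solenoid is wired to the relay’s NO terminal, the lock is only powered while the relay is energized, so releasing the pin in the `finally` clause returns the door to its locked state.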

3.3. Software Implementation

The software implementation of the Raspberry Pi-based face recognition door lock system involves an intricate combination of algorithms and processes designed for efficiency, accuracy, and adaptability. The development began with a simulation phase, where the feasibility of the face recognition algorithm was tested using a laptop and a pre-trained CNN model. This simulation provided valuable insights into the algorithm’s performance, forming the foundation for the subsequent hardware integration.

Building on this groundwork, the system was implemented on the Raspberry Pi to achieve real-time face detection, recognition, and dynamic adaptability. The system detects faces, recognizes authorized individuals, adapts to changing conditions, and notifies remote devices in real time. The following subsections outline the implementation of key features, including the facial recognition algorithm, the notification system, emotion detection, and the dynamic addition of unknown faces to the authorized list.

The software components were developed with Python, leveraging libraries such as face_recognition, cv2, DeepFace, and others. Advanced preprocessing techniques, such as lighting normalization and gamma correction, ensure reliable performance under varying environmental conditions. These components collectively create a robust and efficient system that is capable of handling diverse use cases, such as recognizing faces at different distances, adapting to dynamic lighting conditions, and processing real-time notifications on remote devices. The project utilizes several essential Python libraries for face recognition, GPIO control, and notification handling.


3.4. Simulation and Preliminary Testing

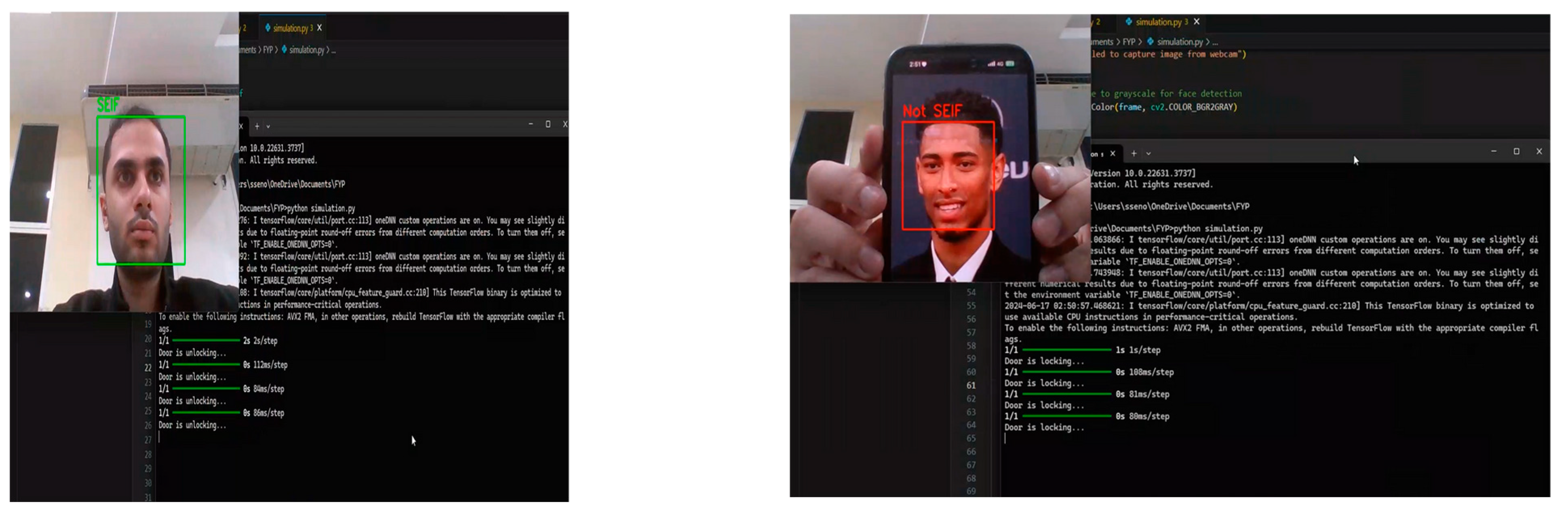

A simulation of the facial recognition system tested the algorithm’s viability and real-time processing. It included simulated door lock and unlock functionality driven by the recognition results. The setup used the Haar cascade classifier for face detection on the webcam stream and a pre-trained CNN model to classify detected faces as authorized (e.g., “SEIF”) or unauthorized (“NOT SEIF”). Frames were processed in real time from the laptop webcam, with OpenCV and TensorFlow as the core libraries for video processing and model execution.

The process consisted of three steps. First, the Haar cascade classifier detected faces in the video stream, and each face was cropped and preprocessed for recognition: face images were resized to 224 × 224 pixels to meet the model’s input requirements, then normalized and expanded. Second, the pre-trained CNN model classified each face as SEIF (authorized) or NOT SEIF. Third, the system simulated the door action: if SEIF was recognized, it displayed “Door is unlocking…”, and for unknown faces it displayed “Door is locking…”.
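The crop, resize, normalize, and expand steps of the simulation can be illustrated as follows. This is a sketch under stated assumptions rather than the simulation code: it assumes an 8-bit image, a face box in (x, y, width, height) order, normalization to the range [0, 1], and that "expanded" means adding a leading batch dimension. A nearest-neighbour resize written in NumPy stands in for the library resize so that the example has no dependencies beyond NumPy; `prepare_face` is a hypothetical name.

```python
import numpy as np

def prepare_face(frame, box, size=224):
    """Crop a detected face and shape it into a (1, size, size, channels) batch."""
    x, y, w, h = box
    face = frame[y:y + h, x:x + w]
    # Nearest-neighbour resize: pick the source row/column for each target pixel.
    rows = np.arange(size) * face.shape[0] // size
    cols = np.arange(size) * face.shape[1] // size
    resized = face[rows][:, cols]
    scaled = resized.astype(np.float32) / 255.0   # normalize 8-bit values to [0, 1]
    return np.expand_dims(scaled, axis=0)         # add the batch dimension

# Example with a synthetic 640x480 white frame and a hypothetical face box.
frame = np.full((480, 640, 3), 255, dtype=np.uint8)
batch = prepare_face(frame, (100, 50, 120, 160))
```

The resulting array has the shape a 224 × 224 image classifier would expect for a single input image.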

During the simulation, the system successfully detected and recognized faces in real time, as illustrated in Figure 3.

3.5. Facial Recognition Algorithm

Face recognition systems deployed on edge devices, such as the Raspberry Pi, must balance computational efficiency with recognition accuracy. Traditional deep learning models, such as CNN-based approaches, offer high accuracy but are computationally expensive, making them impractical for real-time processing on low-power devices. In contrast, lightweight algorithms such as the Histogram of Oriented Gradients (HOG) have demonstrated their effectiveness in edge computing scenarios, enabling efficient face detection without requiring GPU acceleration [9].

The HOG algorithm has been widely used for efficient face detection [10]. This method provides real-time performance while maintaining accuracy, making it suitable for resource-constrained environments. HOG-based detection extracts key facial features using gradient orientation patterns, ensuring robustness in different environmental conditions. Additionally, its computational simplicity allows it to outperform more complex deep learning-based approaches in terms of processing speed on embedded hardware.

3.6. System Setup for Facial Recognition

Before delving into the algorithm’s details, it is essential to understand how the system is prepared to execute facial recognition effectively:

Virtual environment: The facial recognition script is executed within a Python virtual environment on the Raspberry Pi. This ensures that all dependencies, such as the OpenCV, DeepFace, and face_recognition libraries, are correctly managed and isolated from the base system. During the system setup, the script initializes the camera module and loads the necessary libraries. The Raspberry Pi terminal logs provide a detailed summary of these initialization steps.

Dataset of authorized faces: A preloaded dataset of photos of authorized individuals is stored on the Raspberry Pi. This dataset is processed during initialization to generate 128-dimensional encodings, which are stored in the encodings file. These encodings are later used for face matching during runtime.

RealVNC Viewer: RealVNC Viewer was utilized during development and testing to provide remote access to the Raspberry Pi. This tool allowed for effective debugging, script execution monitoring, and system setup adjustments.

Compared with Raspberry Pi Face Recognition by Adrian Rosebrock [11], which also uses deep learning techniques for face recognition on the Raspberry Pi, this paper’s implementation distinguishes itself by incorporating emotion detection and adaptive learning capabilities, offering a more comprehensive analysis of facial features and expressions.

3.7. Process Overview

The face recognition process begins with face detection, where the HOG model identifies key facial landmarks such as the eyes, nose, and mouth, ensuring real-time performance on the Raspberry Pi 3B. Once a face is detected, it undergoes face encoding, converting it into a unique 128-dimensional vector that acts as a biometric fingerprint. These encodings are pre-stored in the system’s encodings_file, containing authorized individuals’ facial data. During face matching, the system compares the newly detected encoding with stored ones using Euclidean distance, recognizing a face if the distance falls below a 0.4 threshold, and granting access accordingly.
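The matching step can be expressed compactly. The following is a minimal sketch assuming the stored encodings are available as a list of 128-dimensional vectors with a parallel list of names; `match_face` and the second user name are hypothetical. The face_recognition library provides a `face_distance` helper that computes the same Euclidean distances.

```python
import numpy as np

def match_face(candidate, known_encodings, known_names, threshold=0.4):
    """Return (name, distance) of the closest stored face, or ("Unknown", distance)."""
    if len(known_encodings) == 0:
        return "Unknown", None
    # Euclidean distance between the candidate and every stored encoding.
    distances = np.linalg.norm(np.asarray(known_encodings) - np.asarray(candidate), axis=1)
    best = int(np.argmin(distances))
    if distances[best] < threshold:
        return known_names[best], float(distances[best])
    return "Unknown", float(distances[best])

# Two synthetic 128-dimensional encodings that differ in one component.
seif = np.zeros(128)
guest = np.zeros(128)
guest[0] = 1.0
probe = np.zeros(128)
probe[0] = 0.1
name, distance = match_face(probe, [seif, guest], ["SEIF", "GUEST"])
```

A lower threshold makes matching stricter: fewer false acceptances at the cost of more rejected genuine users. The value 0.4 used here is the one stated above.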

As the system processes video frames, it detects and recognizes faces in real time.

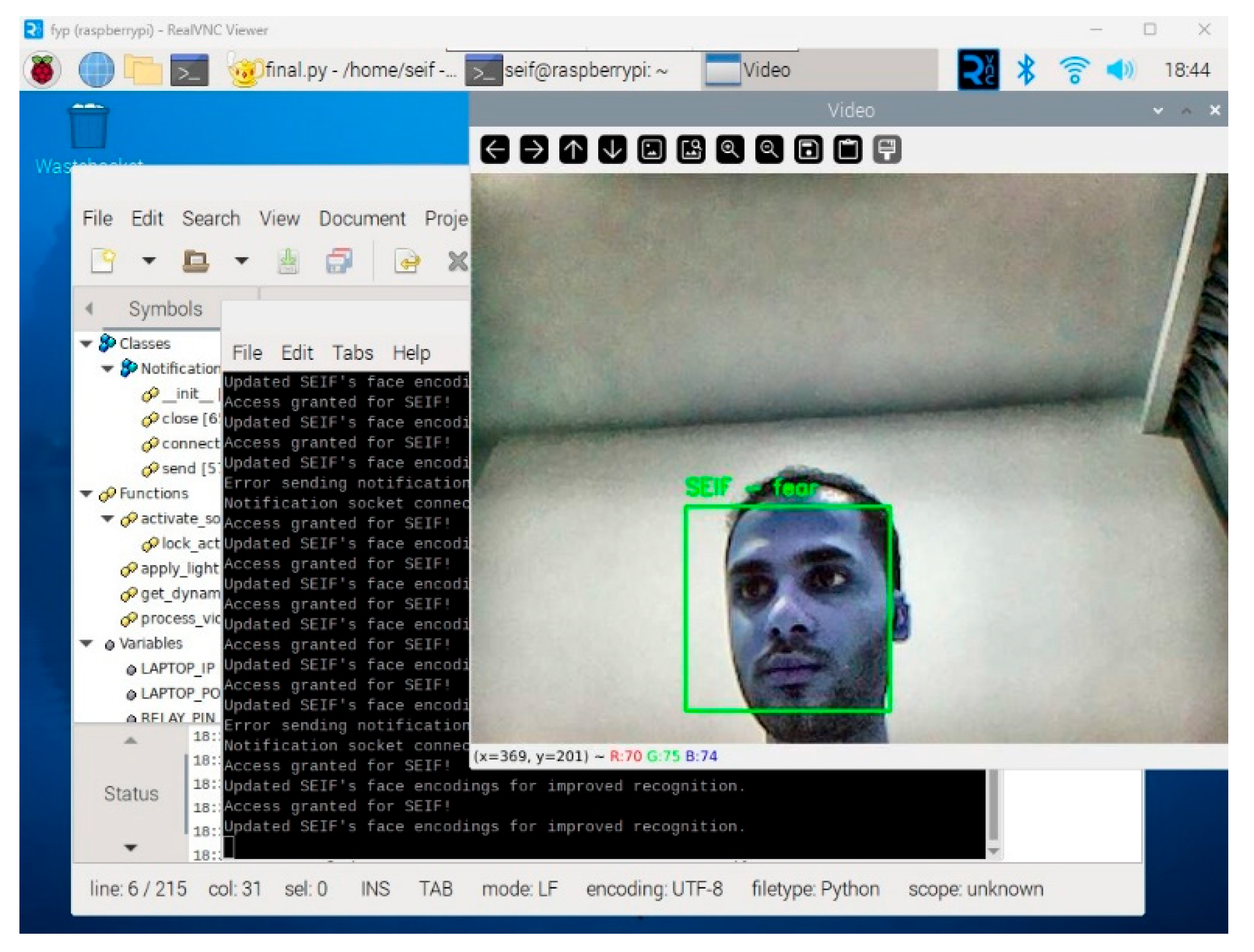

Figure 4 demonstrates the real-time face recognition process, displaying the detected face along with the identified emotion and access status.

To provide a structured overview of the facial recognition process, Table 1 summarizes the key steps involved in the algorithm. This table outlines the sequential operations, starting from capturing the frame to displaying the recognition results and triggering corresponding actions.

3.8. Lighting Normalization and Gamma Correction

Lighting inconsistencies, such as low-light conditions or bright backgrounds, can negatively impact face detection and recognition accuracy. To address this, the system implements two enhancements. Contrast-Limited Adaptive Histogram Equalization (CLAHE) enhances image contrast by redistributing the intensity values across the image, ensuring uniform brightness. This is particularly useful in dim or uneven lighting environments. Gamma correction adjusts the brightness dynamically, enhancing darker regions of the frame to reveal facial features. For 8-bit intensities, the gamma correction formula is as follows:

$$I_{\text{out}} = 255 \times \left(\frac{I_{\text{in}}}{255}\right)^{1/\gamma}$$

where

$I_{\text{in}}$ is the original pixel intensity.

γ is the gamma adjustment factor (e.g., γ = 1.2).

$I_{\text{out}}$ is the brightness-adjusted intensity.
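Gamma correction is commonly implemented with a 256-entry lookup table so that the power function is evaluated once per intensity level rather than once per pixel. The sketch below assumes 8-bit images and the common power-law form with exponent 1/γ, so that γ > 1 brightens dark regions; the function names are hypothetical, and this is not necessarily how the system’s own lighting adaptation is written.

```python
import numpy as np

def gamma_lut(gamma=1.2):
    """Build a lookup table mapping each 8-bit level to its corrected value."""
    levels = np.arange(256, dtype=np.float64) / 255.0
    return np.round(255.0 * levels ** (1.0 / gamma)).astype(np.uint8)

def apply_gamma(image, gamma=1.2):
    """Apply gamma correction to an 8-bit image by indexing the lookup table."""
    return gamma_lut(gamma)[np.asarray(image, dtype=np.uint8)]

# A dark synthetic frame becomes brighter while black and white stay fixed.
dark = np.full((4, 4), 64, dtype=np.uint8)
brighter = apply_gamma(dark, gamma=1.2)
```

With γ = 1.2 the mid-to-dark levels are lifted while the end points 0 and 255 are unchanged, which matches the goal of revealing facial features in darker regions without saturating bright ones.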

Lighting conditions significantly impact face detection accuracy.

Figure 5 illustrates how the implemented lighting normalization technique enhances visibility and ensures consistent facial recognition even in low-light environments.

3.9. Integration into the System

The algorithm is integrated into the system via a Python script that processes each video frame captured by the Raspberry Pi Camera Module V2. It dynamically adjusts the brightness using the apply_lighting_adaptation function, which incorporates CLAHE and gamma correction. To ensure real-time performance, frames are resized to 25% of their original size for detection, which reduces the computational load, and the detected face locations are then scaled back to the original dimensions for precise matching and visualization. Additionally, the system employs dynamic frame skipping, adjusting the number of frames processed based on CPU usage to avoid overloading the Raspberry Pi.
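The coordinate scaling and frame-skipping logic can be sketched as below. The helper names, the 80% CPU threshold, and the (top, right, bottom, left) box order are assumptions for illustration; the every-10th-frame interval follows the example this paper gives for emotion detection under high CPU load. The CPU reading itself would come from a system monitor and is passed in here as a plain number.

```python
def scale_box(box, scale=0.25):
    """Map a (top, right, bottom, left) box from the reduced frame to full size."""
    return tuple(int(round(v / scale)) for v in box)

def processing_interval(cpu_percent, busy_threshold=80.0, busy_interval=10, idle_interval=1):
    """Return N, meaning 'process every Nth frame', based on the current CPU load."""
    return busy_interval if cpu_percent >= busy_threshold else idle_interval

def should_process(frame_index, interval):
    """True for the frames that should go through detection."""
    return frame_index % interval == 0

# A box found at quarter resolution, mapped back to the original frame.
full_box = scale_box((10, 50, 40, 20))
# Under heavy load only frames 0, 10, 20, ... are processed.
processed = [i for i in range(25) if should_process(i, processing_interval(95.0))]
```

Detecting on the reduced frame cuts the pixel count by a factor of 16, which is where most of the saving comes from; the skipped frames can still be displayed with the most recent detection result.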

3.10. Adaptive Learning and Unknown Face Addition

The Raspberry Pi-based facial recognition system’s capacity to dynamically adapt to new inputs is a major improvement. Unlike static face recognition systems, this system uses adaptive learning to update face encodings over time [12]. This feature improves recognition accuracy by learning from repeated interactions and evolving with the user. Traditional facial recognition systems employ manually updated databases that must be retrained for new users. This work uses adaptive learning to dynamically update facial encodings and recognize new users without retraining. Incremental learning improves facial recognition accuracy by continuously revising facial encodings based on new observations [13]. This section details these features’ design, implementation, and significance, showing how adaptive learning improves usability, scalability, and reliability.

Adaptive learning: recognition improvement over time. Every interaction improves the system’s knowledge of authorized users through adaptive learning. This feature overcomes common facial recognition issues, such as modest appearance differences due to the following:

Lighting: Changes in illumination can affect how facial features appear.

Aging: Subtle changes in facial contours over time.

Dynamic factors: Accessories like glasses or caps.

The system updates its stored encoding whenever it detects a known face. During each identification cycle, the system compares the detected face’s encoding with the stored ones. A match replaces the stored encoding with the new one. This captures small facial changes over time, reducing false negatives and improving recognition reliability. The benefits of adaptive learning are sustained recognition accuracy without manual updates, adaptability to natural changes in user appearance, and efficiency, since retraining is kept to a minimum.
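The update rule described above can be sketched as follows, assuming the stored encodings are kept in a dictionary keyed by user name. The `blend` parameter is an addition made for this illustration and is not part of the described system: at its default of 1.0 the stored encoding is simply replaced by the new one, as stated in the text, while smaller values would average the old and new encodings.

```python
import numpy as np

def adapt_encoding(database, name, observed, blend=1.0):
    """Update the stored encoding for a matched user with the newly observed one.

    blend=1.0 replaces the stored encoding outright (the behaviour described above);
    0 < blend < 1 is a hypothetical variant that moves only part of the way.
    """
    observed = np.asarray(observed, dtype=np.float64)
    stored = np.asarray(database[name], dtype=np.float64)
    database[name] = (1.0 - blend) * stored + blend * observed
    return database[name]

# After a successful match for "SEIF", store the latest observation.
db = {"SEIF": np.zeros(128)}
latest = np.full(128, 0.2)
adapt_encoding(db, "SEIF", latest)
```

One design consideration is that an update should only follow a confident match; otherwise a borderline false match could gradually pull a stored encoding toward the wrong face.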

3.11. Unknown Face Detection and Dynamic Addition

The system identifies faces that do not match stored encodings as “Unknown”. This triggers a prompt to the user, allowing them to dynamically add new individuals to the list of authorized faces. This feature ensures scalability and eliminates the need for preloading datasets for every user. When an unrecognized face is detected, the system displays a real-time prompt on the Raspberry Pi terminal, requesting the user to decide whether to authorize the face. The prompt includes the following options:

This functionality is implemented in the Python script, where the system identifies faces as “Unknown” when no match is found in the encodings_file. Upon receiving the user input to authorize the face, the system saves the corresponding 128-dimensional face encoding along with the name provided by the user. This interactive feature facilitates adaptive learning.

3.12. Storing New Face Encodings

Once a face is authorized, the system dynamically updates its dataset. The 128-dimensional encoding of the newly detected face, along with the name entered by the user, is stored in the encodings_file. This ensures that the face will be recognized in subsequent interactions without requiring a complete system restart or retraining process. This dynamic addition process is efficient and secure, maintaining the robustness of the face recognition system while adapting to new users.
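A minimal sketch of this storage step is given below. The on-disk format of the encodings file is not specified in this paper, so the pickle format, the `names` and `encodings` keys, and the function names are all assumptions made for illustration. The file is written to a temporary path and then renamed, so that an interrupted write does not leave a corrupted file.

```python
import os
import pickle
import tempfile

def load_encodings(path):
    """Load stored names and encodings, or start empty if the file does not exist."""
    if not os.path.exists(path):
        return {"names": [], "encodings": []}
    with open(path, "rb") as fh:
        return pickle.load(fh)

def add_authorized_face(path, name, encoding):
    """Append a newly authorized face and rewrite the file atomically."""
    data = load_encodings(path)
    data["names"].append(name)
    data["encodings"].append(list(encoding))
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "wb") as fh:
        pickle.dump(data, fh)
    os.replace(tmp_path, path)   # atomic rename on the same filesystem
    return data

# Example: register two users in a scratch directory.
scratch = tempfile.mkdtemp()
encodings_path = os.path.join(scratch, "encodings.pickle")
add_authorized_face(encodings_path, "SEIF", [0.0] * 128)
add_authorized_face(encodings_path, "GUEST", [0.1] * 128)
```

Since the file holds biometric data, restricting its file permissions or encrypting it would be a sensible addition in a deployed system.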

3.13. Recognizing Newly Added Faces

After adding a new face, the system immediately integrates the updated encoding into the recognition pipeline. Subsequent frames demonstrate the system’s ability to correctly identify the newly authorized individual by displaying their name and emotional state on the video stream. This step confirms the success of the adaptive learning feature and showcases the system’s ability to evolve dynamically.

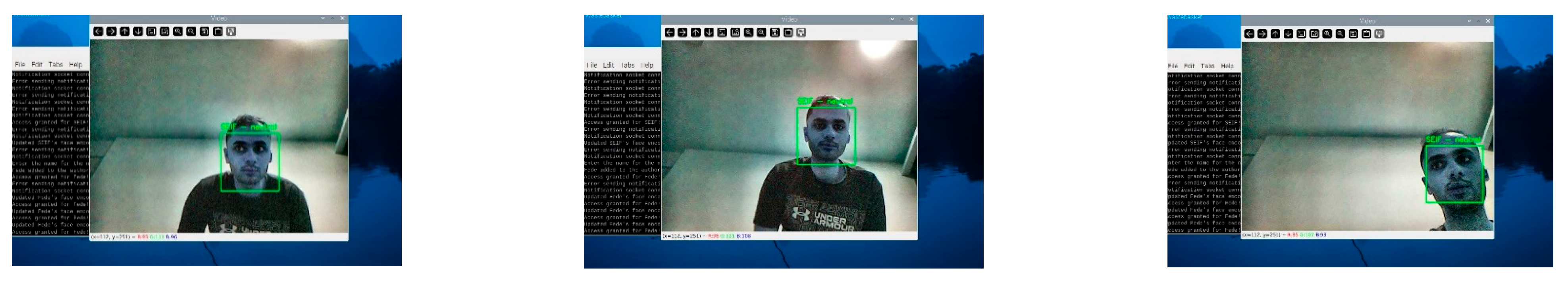

Once the unknown face is authorized and added to the system, it is successfully recognized in subsequent detections, as demonstrated in Figure 6.

3.14. Integration into the Recognition Workflow

The recognition workflow naturally incorporates the adaptive learning and dynamic addition features:

Detect a face: The system identifies a face within the frame and calculates its encoding.

Match with stored encodings: If the face matches an authorized individual, the system updates the stored encoding for that individual. If no match is found, the face is classified as “Unknown”.

Prompt for unknown faces: The “Unknown” classification prompts the user to authorize the face and assign it a name.

Store new encodings: The new encoding is saved permanently with its name for future identification.

Adding individuals dynamically keeps the system adaptable to changing user needs. Prompting the user before authorizing unidentified individuals gives them full control over access rights. Adaptive learning keeps the system accurate and relevant as users’ appearances evolve. Together, these features make the facial recognition door lock system more flexible, scalable, and easy to use: there is no need to retrain or update datasets by hand, and the system grows dynamically while remaining a robust, user-friendly access control solution.

3.15. Emotion Detection

Emotion detection is a critical feature of the system, enhancing its capabilities beyond basic facial recognition. By analyzing facial expressions in real time, the system determines the dominant emotion displayed by a recognized or unknown individual. This functionality improves both security monitoring and user experience customization.

In terms of security, the system can flag unusual emotional states such as fear, anger, or anxiety in unrecognized individuals. The system also logs emotion data, which can be analyzed to identify potential security risks over time.

Beyond security, emotion detection enhances the overall user experience by enabling personalized responses. In a smart-home setting, future improvements could enable the system to adjust environmental factors such as lighting or music based on the detected mood of the resident. If stress is detected, calming music or dim lighting could be activated to create a more comfortable atmosphere. Emotion detection also has applications in accessibility, as it can assist individuals with disabilities by providing adaptive responses based on their emotional cues. In workplace environments, detecting emotional distress in employees entering restricted areas may help prevent security incidents or unauthorized access.

For enhanced facial recognition and emotion detection, the system integrates DeepFace, a deep learning-based facial analysis library that is capable of performing real-time facial verification and emotion classification [14]. DeepFace employs a convolutional neural network (CNN) model pre-trained on large datasets, ensuring high accuracy in recognizing user expressions such as happiness, sadness, and anger. Recent studies highlight the effectiveness of CNN-based emotion recognition models, demonstrating their ability to extract subtle facial features that contribute to accurate classification [15]. The DeepFace library provides pre-trained models that classify facial expressions into predefined emotional categories: happiness, sadness, anger, surprise, fear, disgust, and neutrality. The library was selected due to its high accuracy and efficient integration with Python, making it suitable for real-time processing on the Raspberry Pi. The system captures frames from the Raspberry Pi Camera Module V2. Once a face is detected, the bounding box of the detected face is used to isolate the facial region.

Integrating an emotion recognition module into a door lock system allows it to determine both a person’s identity and their emotional state, which can improve security, usability, and personalization. The technology uses AI, machine learning algorithms, and sensor data to authenticate users and respond to their emotions, boosting safety and convenience. However, emotional data must be handled ethically and with due regard for privacy.

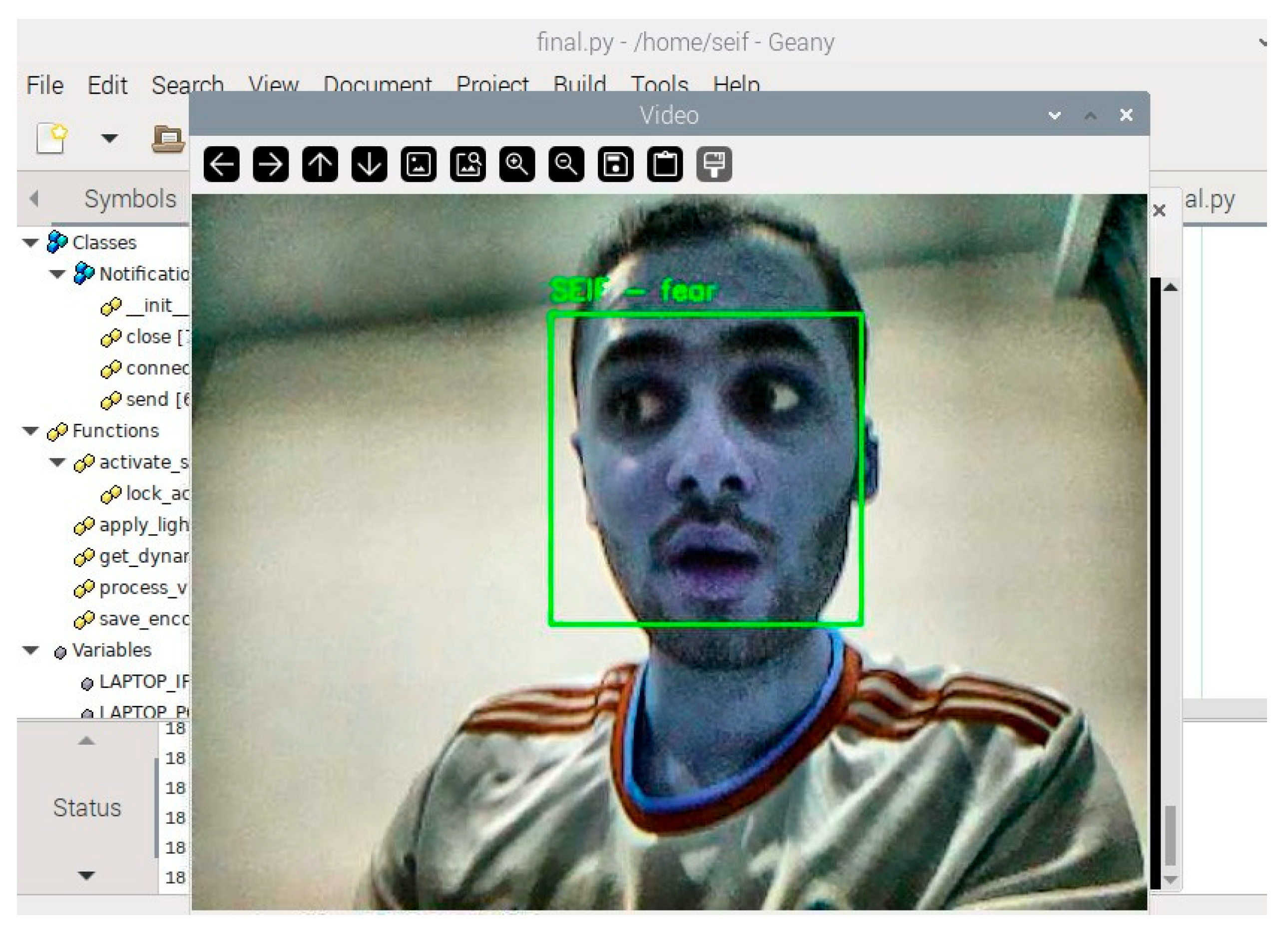

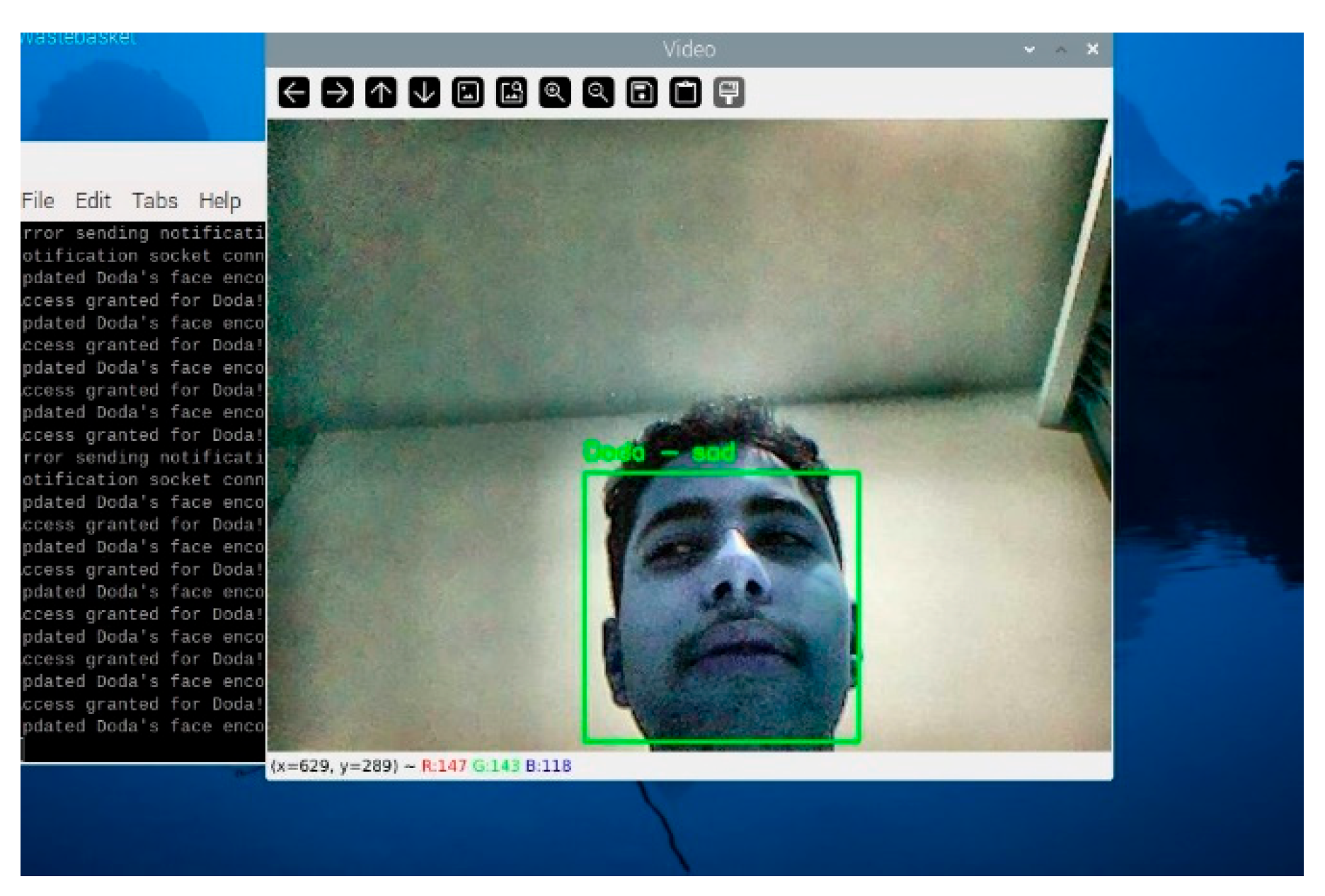

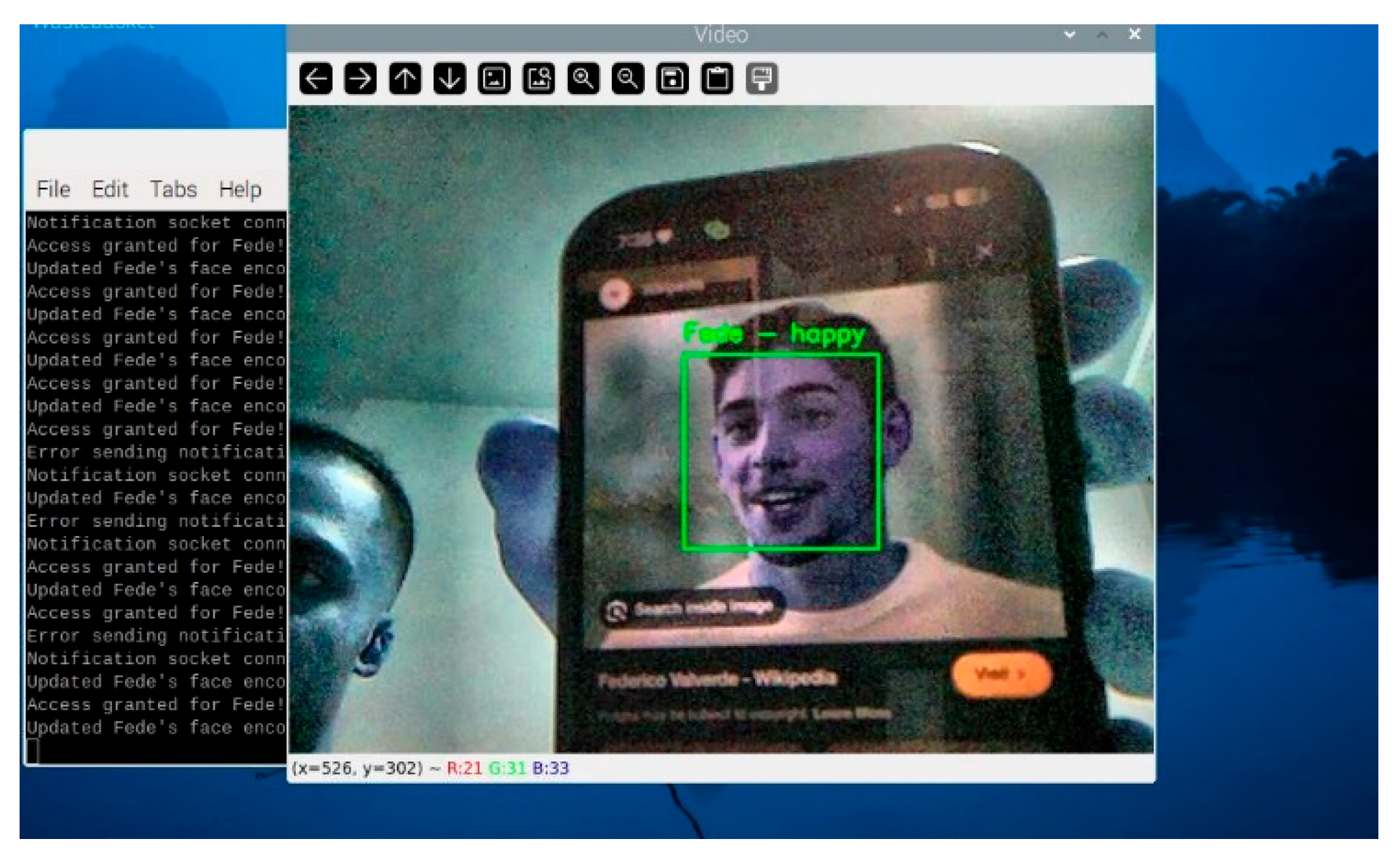

The extracted face region is passed to DeepFace’s emotion recognition module. This module outputs the probabilities for each emotion and identifies the dominant emotion. The detected emotion is displayed on the live video feed, as shown in Figure 7, and sent to the connected notification system. For example, if the dominant emotion detected for a user is “fear”, the system sends a notification stating “Detected emotion: Fear”.
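The step from per-emotion scores to a notification string can be sketched as below. This is an illustrative outline rather than the authors' code: the helper names are hypothetical, and `analyze_face` assumes the `deepface` package is installed and that the input is an already-cropped face image as a NumPy array. Passing `enforce_detection=False` is an assumption made here because the face has already been located upstream.

```python
def dominant_emotion(scores):
    """Return the label with the highest score from a label -> score mapping."""
    return max(scores, key=scores.get)


def emotion_message(scores):
    """Build the notification text for the dominant emotion."""
    return f"Detected emotion: {dominant_emotion(scores).capitalize()}"


def analyze_face(face_image):
    """Return DeepFace's per-emotion scores for a cropped face image."""
    from deepface import DeepFace  # imported lazily; requires the deepface package

    # The face was cropped from its bounding box already, so do not fail
    # if DeepFace's own detector finds nothing in the crop.
    result = DeepFace.analyze(face_image, actions=["emotion"],
                              enforce_detection=False)
    # Recent DeepFace versions return a list (one entry per face);
    # older versions return a single dict.
    first = result[0] if isinstance(result, list) else result
    return first["emotion"]
```

With scores such as `{"happy": 1.2, "fear": 93.5, "neutral": 5.3}`, `emotion_message` produces the text “Detected emotion: Fear” used in the example above.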

3.16. Enhancements for Real-Time Performance

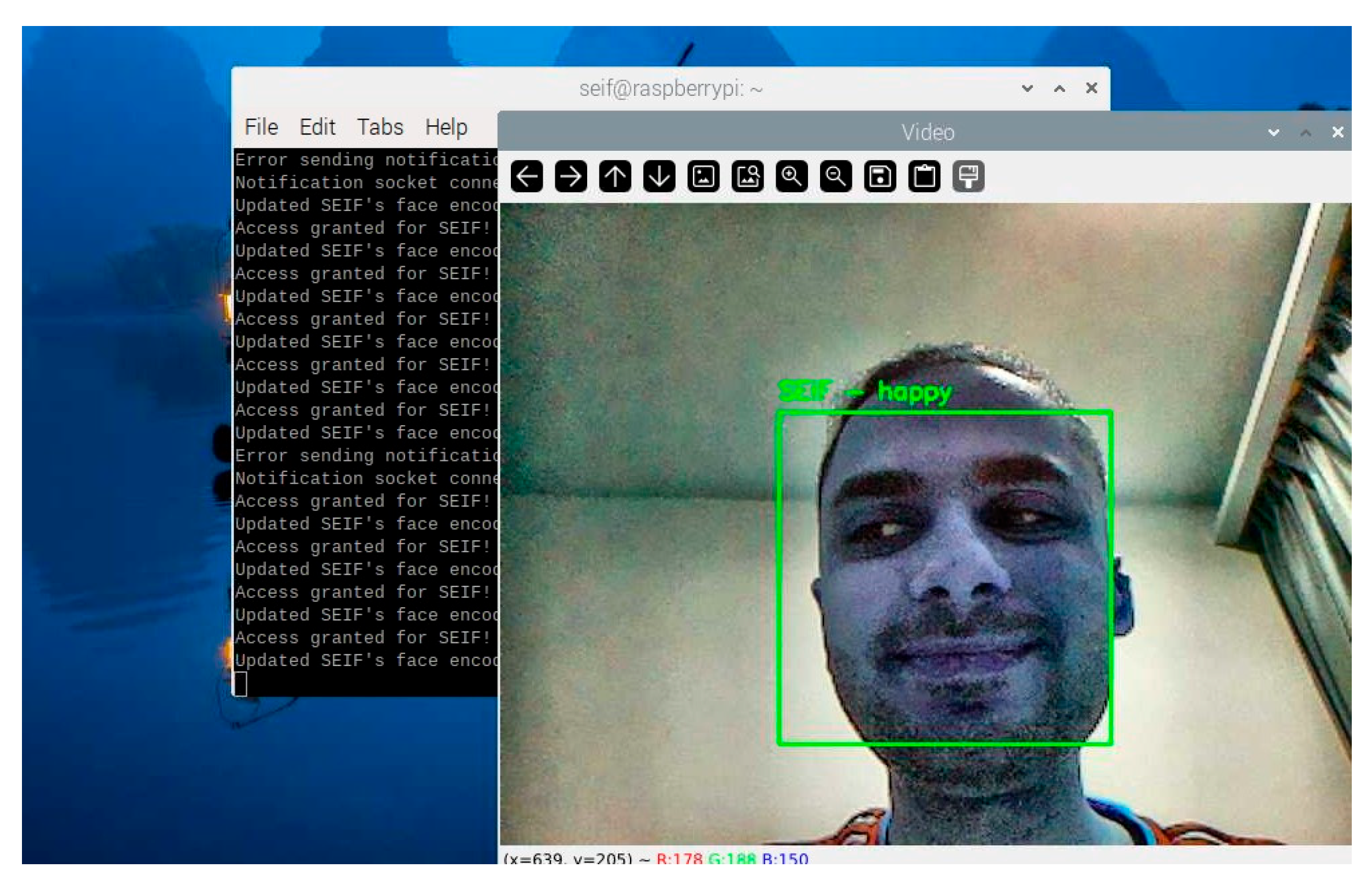

To ensure that the emotion detection module could operate efficiently on the Raspberry Pi, several optimizations were implemented. Emotion detection is computationally intensive. To balance performance and responsiveness, the system skips a configurable number of frames during processing, based on CPU usage. For example, during high CPU loads, emotion detection is performed every 10th frame instead of every frame. The bounding box containing the facial region is resized to a fixed resolution before passing it to DeepFace. This minimizes computation while preserving recognition accuracy. Detected emotions are displayed alongside the recognized name in the live video feed. For example, “SEIF—Happy” is overlaid on the bounding box of a recognized individual, as shown in Figure 8.
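The CPU-based frame-skipping policy can be illustrated with a small sketch. The 80% threshold and the function names are assumptions chosen for illustration; only the "every 10th frame under high load" figure comes from the description above. The CPU reading is passed in as a parameter so that the policy stays independent of how the load is measured (for instance, `psutil.cpu_percent()` would be one option if `psutil` is available).

```python
def detection_interval(cpu_percent, busy_threshold=80.0, busy_interval=10):
    """Return how many frames apart emotion detection should run.

    busy_threshold -- assumed CPU load (%) at which frames start being skipped
    busy_interval  -- run detection every Nth frame while the CPU is busy
    """
    return busy_interval if cpu_percent >= busy_threshold else 1


def should_run_emotion(frame_index, cpu_percent):
    """Decide whether emotion detection runs on this frame."""
    return frame_index % detection_interval(cpu_percent) == 0
```

In a capture loop, `should_run_emotion` would be checked once per frame, and the last detected emotion would continue to be drawn on skipped frames so that the overlay does not flicker.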

3.17. Notification System

The notification system is essential to the facial recognition door lock system, providing real-time feedback on access events. The system sends textual and auditory notifications for recognized faces, unknown faces, and emotions observed during recognition. It uses a socket-based client–server communication mechanism that requires no additional hardware or mobile apps. Real-time notifications using IoT protocols improve face recognition systems by logging access events and delivering immediate alerts [16]. Real-time updates give swift feedback on successful recognition, unknown faces, and detected emotions. For improved usability, Text-to-Speech (TTS) on the server (laptop) delivers notifications in both text and audio formats. The design is simple and efficient, requiring neither a smartphone app nor complex configuration. The Raspberry Pi client sends messages to the laptop server over a TCP socket. The server processes these alerts, presents them on the terminal, and sounds a TTS alert. This keeps users informed of system activities, whether they are nearby or remote.

Notifications are triggered at specific phases of the system workflow: when the system detects an authorized face, an unknown face, or an emotion. Each message includes the recognized person’s name and the detected emotion. On the client side, the Raspberry Pi connects to the server via TCP and transmits the notification. On the server side, the laptop logs the notification in the terminal and reads it aloud using TTS. The user thus receives real-time updates on system activity and can either act on them (e.g., authorize an unfamiliar face) or simply stay informed. The socket-based architecture provides low-latency communication, while TTS provides intuitive auditory notifications. This approach meets this paper’s simplicity and accessibility goals by not requiring extra programs or hardware. In summary, the notification system reports access by authorized users, alerts about unknown faces, and observed emotions.
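The client–server exchange described above can be sketched with Python's standard `socket` module. This is a minimal outline under stated assumptions, not the paper's implementation: the function names, the one-message-per-connection framing, and the message text are hypothetical. The TTS engine is not specified here, so the server takes a `handle` callback that could print the message and pass it to whichever TTS library is used.

```python
import socket
import threading


def send_notification(message, host, port, timeout=5.0):
    """Client side (Raspberry Pi): send one UTF-8 message, then close."""
    with socket.create_connection((host, port), timeout=timeout) as conn:
        conn.sendall(message.encode("utf-8"))


def serve_notifications(server_sock, handle, max_messages=None):
    """Server side (laptop): accept connections and pass each message to handle."""
    received = 0
    while max_messages is None or received < max_messages:
        conn, _addr = server_sock.accept()
        with conn:
            chunks = []
            while True:
                data = conn.recv(1024)
                if not data:  # client closed the connection: message complete
                    break
                chunks.append(data)
        handle(b"".join(chunks).decode("utf-8"))
        received += 1


def start_server(handle, host="127.0.0.1", port=0, max_messages=None):
    """Start the server in a background thread; port=0 picks a free port."""
    server_sock = socket.create_server((host, port))
    thread = threading.Thread(target=serve_notifications,
                              args=(server_sock, handle, max_messages),
                              daemon=True)
    thread.start()
    return server_sock, thread
```

For a quick local check, `start_server(print)` followed by `send_notification("Detected emotion: Fear", "127.0.0.1", port)`, with `port` read from `server_sock.getsockname()[1]`, prints the message on the server side. On a real deployment the host would be the laptop's address on the local network.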