Abstract

The widespread rise of misinformation across digital platforms has increased the demand for accurate and efficient Fake News Detection (FND) systems. This study introduces an enhanced transformer-based architecture for FND, developed through comprehensive ablation studies and empirical evaluations on multiple benchmark datasets. The proposed model combines improved multi-head attention, dynamic positional encoding, and a lightweight classification head to capture nuanced linguistic patterns while maintaining computational efficiency. To ensure robust training, label smoothing, learning rate warm-up, and reproducibility protocols were incorporated. The model is evaluated on three diverse datasets, namely FakeNewsNet, ISOT, and LIAR, achieving an average accuracy of 79.85%. Specifically, it attains 80% accuracy on FakeNewsNet, 100% on ISOT, and 59.56% on LIAR. With just 3.1 to 4.3 million parameters, the model achieves an 85% reduction in size compared to full-sized BERT architectures. These results highlight the model’s effectiveness in balancing accuracy with resource efficiency, making it suitable for real-world applications such as social media monitoring and automated fact-checking. Future work will explore multilingual extensions, cross-domain generalization, and integration with multimodal misinformation detection systems.

1. Introduction

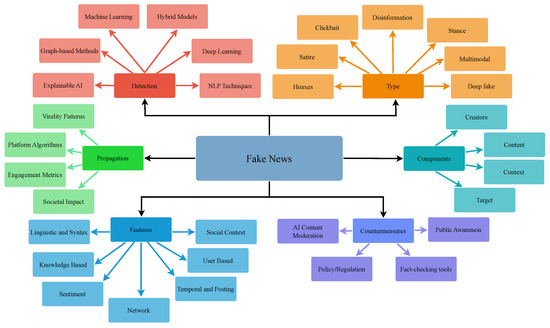

The rapid growth of social media platforms, instant messaging applications, and user-generated content has fundamentally transformed the way information is created, distributed, and consumed. This has created an unparalleled information ecosystem where truth and falsehood can spread with equal velocity. In an era where information travels at the speed of light across digital networks, the integrity of news has become one of the most pressing challenges of our time. Within this complex digital landscape, the intentional creation of Fake News (FN) or misleading information poses a threat to public discourse, democratic processes, and social cohesion. According to one study, approximately 46% of FN is related to political affairs, and FN is mostly distributed through social networks [1]. Figure 1 presents a holistic perspective on FN by illustrating its six interconnected dimensions: detection, propagation, types, components, features, and countermeasures. This comprehensive view signifies the complex nature of FN and the importance of addressing it through interdisciplinary approaches. FN Detection (FND) involves various techniques, such as Machine Learning (ML), Deep Learning (DL), hybrid models, graph-based methods, explainable AI (XAI), and Natural Language Processing (NLP) techniques. Types of FN include clickbait, satire, hoaxes, disinformation, and stance-based content, each crafted to ease its spread to a larger audience. The components of FN are its key elements, such as creators, content, context, and goals. The propagation pathway highlights how FN spreads through platform algorithms, virality patterns, and engagement metrics, and what societal impact it has. Features used to identify FN include linguistic and syntactic patterns, sentiment, network structure, knowledge-based elements, and temporal and posting behaviors. In addition, countermeasures aim to mitigate its impact through AI content moderation, policy and regulation, fact-checking tools, and raising public awareness.

Figure 1. A Holistic Perspective on Fake News.

The theoretical foundations of FND can be understood through information theory and network dynamics [2,3]. When we model information dissemination as signals propagating through a complex network $G = (V, E)$, where nodes represent users and edges represent interactions such as shares, likes, or retweets, we observe that misinformation often exhibits propagation patterns that differ significantly from those of authentic news. From a probabilistic perspective, detecting FN becomes a classification problem in which, given a news article $X$, we must estimate the posterior probability $P(y \mid X)$ of its label $y$. This determines whether the content is genuine or fabricated. What makes this challenge particularly intriguing from a computational point of view is that FN operates as a distorted signal, a noisy representation of reality that has been intentionally manipulated through bias injection, emotional amplification, or contextual distortion. This signal processing perspective recasts FND as an anomaly detection problem, where we must identify whether a given information signal deviates from the expected distribution of truthful content. The complexity is further increased because FN often exploits cognitive biases, emotional triggers, and social dynamics to achieve viral propagation, which makes it statistically more likely to spread than factual information.
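As an illustrative restatement of the classification view above, the decision rule can be written compactly. The label set $\{\text{real}, \text{fake}\}$ and the symbol $\hat{y}$ are notational assumptions introduced here for clarity and are not taken from the source:

```latex
\hat{y} \;=\; \arg\max_{y \,\in\, \{\text{real},\, \text{fake}\}} P(y \mid X),
\qquad
P(y \mid X) \;\propto\; P(X \mid y)\, P(y)
```

The second relation is simply Bayes' rule; a discriminative classifier such as a transformer estimates $P(y \mid X)$ directly and does not model $P(X \mid y)$.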

Traditional approaches to FND are inadequate for the scale and sophistication of modern misinformation campaigns [4]. Although manual fact-checking is accurate, it cannot keep pace with the speed of content creation and distribution [5]. Rule-based systems and crowdsourcing platforms such as PolitiFact [6] and ClaimBuster [7] are valuable but suffer from scalability limitations, subjective interpretations, and language barriers that prevent comprehensive coverage of the global landscape. Classical ML approaches using hand-crafted features such as n-grams, Term Frequency-Inverse Document Frequency (TF-IDF) vectors, and sentiment scores capture surface-level patterns but miss the nuanced contextual relationships and implicit semantic structures that characterize sophisticated misinformation.

The emergence of transformer-based architectures, particularly Bidirectional Encoder Representations from Transformers (BERT) and its variants, has revolutionized NLP. Their self-attention mechanisms capture long-range dependencies and contextual relationships within text. For an input token sequence, BERT generates contextual embeddings that incorporate bidirectional context, which enables the model to pick up subtle linguistic cues that can indicate deception or manipulation. When these contextualized representations are processed through sophisticated classification heads, such models demonstrate remarkable performance improvements over traditional approaches.

Despite the promising capabilities of transformer models, current research in FND has several limitations that affect both theoretical understanding and practical deployment. Various studies have observed that these models are treated as black boxes: their performance is evaluated without understanding which architectural components contribute most to their effectiveness [8]. The lack of systematic ablation studies means that researchers cannot determine whether improvements come from attention mechanisms, positional encodings, layer normalization, or other architectural elements. In addition, existing evaluations often lack rigorous statistical validation, reproducibility protocols, and cross-dataset generalization analysis, making it difficult to assess the true robustness and reliability of proposed solutions. It is also important to find the right balance between model complexity, computational efficiency, and performance stability across datasets.

To address these fundamental challenges, this research introduces a comprehensive framework for transformer-based FND that combines rigorous empirical analysis with principled architectural optimization. This approach recognizes that effective FND requires not only accurate models, but also a deep understanding of why these models work, which components are essential, and how they can be optimized for both performance and practical deployment. The research contributions of this study are presented as follows:

- Component-Level Analysis: We present a comprehensive component-level analysis of transformer architectures for FND, systematically evaluating BERT, the Robustly Optimized BERT Pretraining approach (RoBERTa), Decoding-Enhanced BERT with Disentangled attention (DeBERTa), and the distilled version of BERT (DistilBERT). Through rigorous ablation studies that isolate attention mechanisms, layer normalization, residual connections, feedforward networks, dropout regularization, positional encoding, and model-specific components, we quantify the individual contribution of each element to overall performance. This analysis shows that attention mechanisms contribute 19–22% to detection accuracy, layer normalization provides consistent 2–6% improvements, and feedforward networks show context-dependent effectiveness. This establishes empirical benchmarks that have been absent in the existing literature.

- Evidence-Based Synthesis: We introduce an enhanced methodology that combines the most effective architectural components of different transformer models, based on rigorous empirical evidence rather than intuitive design choices. Our principled approach integrates BERT’s foundational attention mechanisms, RoBERTa’s GELU activation optimizations, DeBERTa’s enhanced classification capabilities, and DistilBERT’s efficiency innovations into a unified optimal architecture. This synthesis framework uses three quantitative criteria: component criticality, cross-dataset consistency, and computational efficiency. It establishes a replicable methodology for evidence-based architecture optimization.

- Scalable Transformer Variants: We develop a scalable architecture framework that offers three performance variants (0.3M, 1.2M, and 3.1M parameters) while maintaining architectural consistency across different computational constraints. Our optimal base model achieves an 85% reduction in parameters compared to BERT-base while improving performance. In addition, it demonstrates 70% faster training convergence, requires 60% less GPU memory, and provides 3× faster inference. This contribution addresses the critical need for deployable FND systems in resource-constrained environments with minimal trade-offs.

- Rigorous Evaluation Framework: We establish new standards for experimental rigor in FND research through comprehensive reproducibility protocols, statistical validation frameworks, and multi-dataset consistency assessment. Our methodology incorporates fixed random seed protocols, enforcement of deterministic computation, multiple independent runs with variance analysis, paired statistical testing with effect size calculations, and cross-dataset generalizability evaluation. This framework addresses the reproducibility crisis in DL research while providing reliable benchmarks for future studies.

Through this comprehensive approach, our aim is to transform FND from an empirical trial-and-error process into a principled scientific discipline, providing the tools, methodologies, and insights necessary for developing robust, efficient, and reliable systems that can adapt to the evolving landscape of digital misinformation while maintaining the highest standards of scientific rigor and practical applicability.

The remainder of this paper is organized as follows: Section 2 reviews recent advances in FND. Section 3 describes the research methodology, the proposed model architecture, and the details of the datasets used. Section 4 presents the experimental results, ablation studies, and a discussion of the performance of the optimal model. Finally, Section 5 concludes the study and outlines the directions for future research.

2. Related Works

In this section, we discuss recent models and approaches that have been proposed for FND, highlighting the evolution from traditional ML techniques to more advanced transformer and DL-based methods.

The researchers in [9] introduced a Balanced Multimodal Learning with Hierarchical Fusion (BMLHF) approach for multimodal FND (MFND), focusing on image and text. It includes a multimodal information balancing module that balances the contribution of each modality during optimization. In addition, a hierarchical fusion module is designed to explore the correlation within and between modalities, enhancing the optimization process. The evaluation was carried out on the Fakeddit, Weibo, and Twitter datasets. Similarly, Shen et al. proposed a distinct multimodal modeling approach called GAMED [10]. It focuses on generating distinctive and discriminative features through modal decoupling. The model was trained on the Yang and Fakeddit datasets.

The researchers in [11] proposed a multilingual transformer-based FND model using various language-independent technologies. It removes the need to convert the input to English for detection. The researchers used a multilingual FND dataset consisting of samples from five different languages. Similarly, the researchers in [12] performed a comparative analysis on seven BERT-based models using a dataset composed of multilingual news from different sources.

The researchers in [13] proposed an MFND approach that uses text, visual, and knowledge graph features. Unlike other approaches, it uses a single model to extract features from both images and text. The model was evaluated on a Twitter dataset, and a comparison among 13 models, including the proposed ones, was made. The researchers in [14] performed an extensive analysis of FastText word embeddings in different ML and DL models, including transformers. In addition, the use of XAI further strengthens the approach. Three datasets were used to evaluate the model, namely WELFake, FakeNewsPrediction, and FakeNewsNet.

Zhang et al. proposed ‘RPPG-Fake’ for early FND, which generates propagation paths using reinforcement learning along with the integration of propagation topology structure and temporal depth information [15]. Three datasets, namely Pheme, PolitiFact, and GossipCop, were used for experimentation. The proposed methodology was compared with ten baseline models and was found to be the best among them. Xie et al. proposed KEHGNN-FD, a Knowledge Graph-Enhanced Graph Neural Network (GNN) designed to gain insight from the news, topics, and other entities [16]. The model was trained on four datasets, namely LIAR, FakeNewsNet, COVID-19, and PAN2020. It was compared with seven baseline models, three based on NLP and four based on GNNs, and was found to be the best among them.

The researchers in [17] proposed a dual-phase methodology that combines text and visual data (images and video) to identify FN. BERT and a CNN were used to analyze text and visual data, respectively. The model was trained using the ISOT dataset and the MediaEval image verification dataset. Various ML algorithms were tested, and Random Forest (RF) was found to be the best-performing one. Park and Chai proposed an ML model to detect FN using feature selection (with XGBoost) and cross-validation [18]. The model was trained and evaluated using the LIAR dataset. A comparative analysis among various ML algorithms found that RF worked best.

Jing et al. proposed a progressive fusion model for MFND [19]. To extract features from different data, a BERT-based feature extractor (text), a visual extractor (Discrete Fourier Transform (DFT) and VGG19), and a progressive multimodal feature fusion process were used. The model was trained and evaluated on the Weibo and Twitter datasets. The approach was found to outperform various single-modality approaches as well as multidomain approaches. Raza and Ding proposed an FND model using a transformer architecture and Zero-Shot Learning (ZSL) [20]. In addition, metadata from the news content and social contexts were used for better decision-making. The model was trained and evaluated using the NELA-GT-19 and Fakeddit datasets.

Seddari et al. proposed a DL model for FND using transformers [21]. The hybridization of linguistic features and fact verification features strengthened the model. A comparative analysis among various ML models was performed, and RF was found to be the best when evaluated on the BuzzFeed dataset. The researchers in [22] proposed a BERT-based approach for FND called ‘FakeBERT.’ It combines BERT with parallel blocks of a single-layer deep CNN that use different kernel sizes and filters. A real-world FN dataset available on Kaggle was used in the study. Various combinations of BERT with different algorithms were tested, and FakeBERT was found to perform best.

In addition, various research works mentioned in [23,24,25,26] give an overview of the domain in detail.

It can be observed that most studies adopt the transformer models as originally proposed, without modifying their general architecture. Architectural optimization remains an overlooked aspect. In addition, there are no empirical data to justify the components used. To address these gaps, we conducted a detailed ablation study to determine the impact of each component on the architecture. Its findings were used to design the proposed optimal architecture, which was trained on three widely used datasets for better adaptability and generalizability.

3. Methodology

This study employs a two-phase methodological approach to develop an optimal transformer architecture for FND:

- Phase 1 involves conducting comprehensive ablation studies on four representative transformer models to systematically evaluate the contribution of individual architectural components.

- Phase 2 synthesizes these empirical findings to construct an optimal architecture that incorporates the most effective components identified through systematic experimentation.

This methodology enables both a thorough understanding of component-level contributions and the principled design of an improved architecture based on empirical evidence rather than intuition.

3.1. Phase 1: Comprehensive Ablation Study Framework

3.1.1. Model Selection and Rationale

Four transformer models were selected to represent different architectural philosophies and design choices in the transformer family: BERT [27], RoBERTa [28], DeBERTa [29], and DistilBERT [30]. These models were chosen because they collectively cover the range of transformer design variations, from foundational architectures to efficiency-focused and attention-enhanced variants. Each represents different approaches to attention mechanisms, positional encoding, activation functions, and architectural depth, making them ideal candidates for systematic component analysis.

3.1.2. Ablation Component

The ablation study systematically evaluates seven key architectural components in all selected models. Attention mechanism ablation completely removes the multi-head self-attention layers while maintaining the feedforward structure. Positional encoding ablation removes all position-aware information, forcing models to rely solely on the token sequence without positional context. Feedforward network ablation eliminates the fully connected position-wise layers while preserving attention mechanisms. Residual connection ablation removes skip connections, which requires models to learn representations through direct transformation paths. Layer normalization ablation removes all normalization layers and tests the model’s ability to maintain stable gradients and representations. Dropout regularization ablation eliminates all dropout mechanisms to assess their contribution to generalization. In addition, model-specific components such as GELU activation functions, CLS token utilization, and enhanced attention mechanisms are systematically evaluated.

3.1.3. Modular Implementation Strategy

Each transformer model was implemented with a modular architecture design that enables the selective removal of components without affecting other architectural elements. The implementation utilizes PyTorch’s modular framework to create independent component classes that can be dynamically enabled or disabled during model instantiation. It ensures that ablation studies maintain architectural integrity while isolating the specific contribution of individual components. This modular design also enables a consistent comparison between different models by standardizing the ablation process regardless of the underlying transformer variant.
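The modular enable/disable idea can be sketched with a small configuration object. This is a minimal, framework-independent illustration: the class `AblationConfig`, its flag names, and the helper `single_component_ablations` are hypothetical names introduced here, and the sketch covers only the six shared components (model-specific components are omitted).

```python
from dataclasses import dataclass, fields, replace


@dataclass(frozen=True)
class AblationConfig:
    """Hypothetical switches; each flag enables one architectural component."""
    use_attention: bool = True
    use_positional_encoding: bool = True
    use_feedforward: bool = True
    use_residual: bool = True
    use_layer_norm: bool = True
    use_dropout: bool = True


def single_component_ablations(baseline=None):
    """Return the baseline plus one variant per component with that component off."""
    baseline = baseline or AblationConfig()
    variants = {"baseline": baseline}
    for f in fields(baseline):
        # "use_attention" -> "no_attention"
        variant_name = "no_" + f.name[len("use_"):]
        variants[variant_name] = replace(baseline, **{f.name: False})
    return variants


if __name__ == "__main__":
    for name, cfg in single_component_ablations().items():
        print(name, cfg)
```

A model constructor would read such a configuration at instantiation time and skip the corresponding sublayer, so every variant differs from the baseline in exactly one component.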

3.2. Phase 2: Optimal Architecture Synthesis

3.2.1. Component Selection Methodology

The synthesis of the optimal architecture follows a systematic selection process based on three empirical criteria derived from the results of the ablation study. The criticality criterion identifies components that cause performance degradation exceeding 10% when removed, indicating essential architectural elements. The consistency criterion evaluates components that show beneficial effects in all three FN datasets, ensuring generalizability. The efficiency criterion assesses the performance-to-parameter ratio, prioritizing components that provide substantial improvements without excessive computational overhead. Components that meet all three criteria are designated as core elements for the optimal architecture.
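The three criteria can be expressed as a simple filter over ablation results. The sketch below rests on several assumptions that the text does not settle: the 10% threshold is applied to the mean absolute accuracy drop across datasets, "beneficial" is read as a positive drop on every dataset, and the efficiency threshold (`min_drop_per_million`) and all names are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class ComponentResult:
    name: str
    drops: dict             # dataset name -> accuracy drop when the component is removed
    params_millions: float  # parameters attributable to the component (hypothetical)


def evaluate_criteria(result, datasets, min_drop=0.10, min_drop_per_million=0.01):
    """Apply the criticality, consistency, and efficiency criteria to one component."""
    per_dataset = [result.drops[d] for d in datasets]
    avg_drop = mean(per_dataset)
    critical = avg_drop > min_drop                       # removal costs more than 10 points
    consistent = all(drop > 0 for drop in per_dataset)   # helps on every dataset
    efficient = avg_drop / result.params_millions >= min_drop_per_million
    return {
        "critical": critical,
        "consistent": consistent,
        "efficient": efficient,
        "core": critical and consistent and efficient,
    }


if __name__ == "__main__":
    datasets = ["FakeNewsNet", "ISOT", "LIAR"]
    # Illustrative numbers only; they are not results from the study.
    example = ComponentResult("attention", {"FakeNewsNet": 0.20, "ISOT": 0.15, "LIAR": 0.12}, 1.0)
    print(evaluate_criteria(example, datasets))
```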

3.2.2. Architecture Design Principles

The optimal architecture design incorporates empirically validated components through a principled synthesis approach. The enhanced multi-head attention mechanism, identified as the most critical component across all models, forms the architectural foundation. GELU activation functions, demonstrated to consistently outperform ReLU in RoBERTa experiments, replace standard activation functions throughout the model. Comprehensive layer normalization, showing consistent stabilization benefits, is strategically placed for optimal gradient flow. Simplified feedforward networks, inspired by DistilBERT’s efficiency insights, reduce computational complexity while maintaining representational capacity. An enhanced classification head, based on DeBERTa’s design improvements, provides sophisticated decision-making capabilities. Strategic dropout placement, validated through regularization analysis, prevents overfitting while maintaining model expressiveness.

3.2.3. Framework of the Optimal Fake News Detection Model

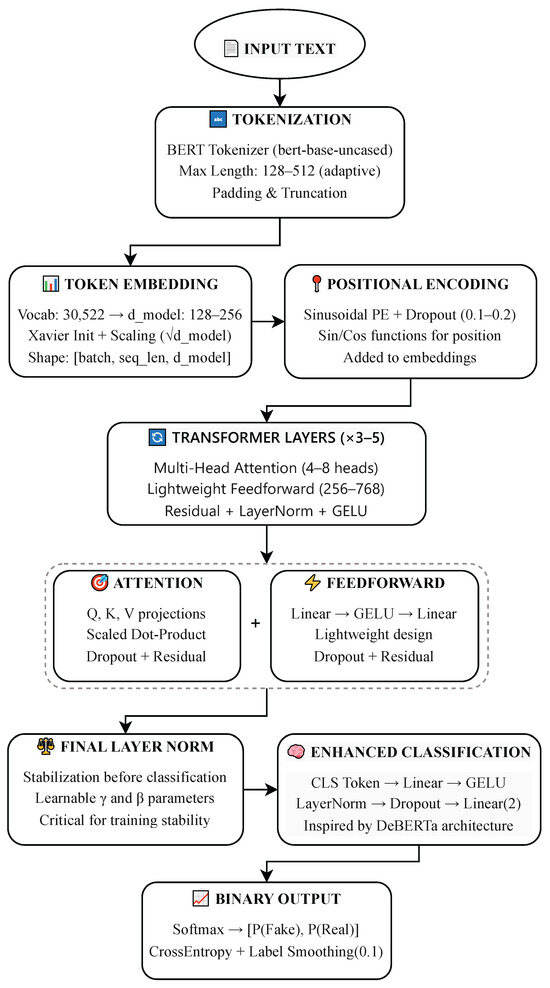

In this section, we present the detailed steps of the optimal Fake News Detection (FND) model. The general architecture of the model is illustrated in Figure 2, and each step is discussed in the following:

Figure 2. Proposed Optimal Fake News Detection Model.

- Input Text Acquisition: Raw news text (e.g., from news articles or social media platforms) is fed into the system. This input could include complete articles, headlines, or specific claims.

- Tokenization: The input text is tokenized using the bert-base-uncased tokenizer. This process involves:

- Splitting the text into subword tokens;

- Assigning token IDs to each subword;

- Applying padding and truncation to standardize input length (128–512 tokens).

This step converts human-readable text into a numerical format suitable for model processing.

- Embedding Layer: Each token ID is mapped to a dense vector representation using an embedding matrix, initialized with Xavier uniform initialization. The embeddings are scaled by $\sqrt{d}$ (where $d$ is the model dimension) to ensure appropriate magnitude and stability.

- Positional Encoding: Sinusoidal positional encodings are added to token embeddings to provide sequence-order information, as transformers do not have an inherent understanding of token positions. Dropout (typically 0.1–0.2) is applied to regularize the model and prevent overfitting.

- Transformer Encoder Stack (3–5 Layers): Each encoder layer consists of the following:

- (a) Multi-Head Attention: Computes self-attention using Query, Key, and Value matrices. This allows the model to focus on contextually relevant tokens. Removing it caused a 15–50% accuracy drop in ablation studies.

- (b) Feedforward Network: Applies a fully connected two-layer network with GELU activation. Dimensions are kept lightweight (typically 256–768). Dropout, residual connections, and normalization are used for stability and generalization.

- (c) Layer Normalization and Residual Connections: LayerNorm is applied after both the attention and the feedforward sublayers, helping to stabilize training and accelerate convergence.

- Final Layer Normalization: The output of the last transformer layer is passed through a final LayerNorm operation to ensure consistent scaling and improve training dynamics.

- Enhanced Classification Head: The vector corresponding to the special [CLS] token is used for classification. It passes through the following components:

- Linear layer;

- GELU activation;

- Layer normalization;

- Dropout;

- Final linear layer projecting to 2 output classes (fake or real).

This classification head design is inspired by DeBERTa and improves decision-making capacity compared to standard linear heads.

- Softmax and Prediction: Softmax is applied to obtain the class probabilities $P(\text{fake})$ and $P(\text{real})$. During training, cross-entropy loss with label smoothing (0.1) is used. The final class prediction is the class with the highest softmax probability (equivalently, the argmax of the output logits).
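Three of the numeric steps in the pipeline above (embedding scaling, sinusoidal positional encoding, and softmax prediction) can be illustrated in plain Python. This is a minimal sketch for intuition, not the study's implementation: the function names are hypothetical, the encoding follows the standard sine/cosine formulation, and the mapping of class indices to "fake" or "real" is left open because the source does not state it.

```python
import math


def sinusoidal_encoding(seq_len, d_model):
    """Standard sinusoidal table: sine on even dimensions, cosine on odd ones."""
    table = [[0.0] * d_model for _ in range(seq_len)]
    for pos in range(seq_len):
        for i in range(0, d_model, 2):
            angle = pos / (10000 ** (i / d_model))
            table[pos][i] = math.sin(angle)
            if i + 1 < d_model:
                table[pos][i + 1] = math.cos(angle)
    return table


def embed_with_positions(token_vectors, d_model):
    """Scale token embeddings by sqrt(d_model), then add positional encodings."""
    scale = math.sqrt(d_model)
    table = sinusoidal_encoding(len(token_vectors), d_model)
    return [
        [scale * value + pos_value for value, pos_value in zip(vec, pos_row)]
        for vec, pos_row in zip(token_vectors, table)
    ]


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(logits):
    """Return (argmax class index, class probabilities)."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return best, probs


if __name__ == "__main__":
    hidden = embed_with_positions([[0.1] * 8, [0.2] * 8], d_model=8)
    print(len(hidden), len(hidden[0]))
    print(predict([0.3, 1.7]))
```

Because softmax is monotonic, taking the argmax of the probabilities gives the same class as taking the argmax of the raw logits.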

3.2.4. Multi-Scale Architecture Variants

Three architecture variants were designed to accommodate varying computational constraints and performance requirements. The details of these optimal variants of the FND model are presented in Table 1. The small variant is suitable for resource-constrained environments. The base variant offers a balance between performance and computational efficiency. The large variant is intended for scenarios that require maximum performance. This multi-scale approach enables deployment across various computational environments while maintaining architectural consistency.

Table 1. Variants of the Proposed Optimal Fake News Detection Model.

3.2.5. Training Protocol and Stability Enhancements

Reproducibility Framework

Reproducibility was ensured through the comprehensive control of random processes and computational determinism. Fixed random seeds were applied across all random number generators, including PyTorch (version: 2.6.0+cu124), NumPy (version: 2.0.2), and Python’s (version: 3.11.13) random module. CUDA deterministic algorithms were enforced through PyTorch backend configurations, which eliminated non-deterministic GPU operations. Multiple independent training runs were conducted for each configuration, with statistical analysis of the variance of the results to ensure consistent performance patterns. This reproducibility framework enabled reliable comparison across different architectural configurations and supported further result verification.
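A typical seeding routine along these lines is sketched below. The helper name `set_global_seed` is hypothetical, the NumPy and PyTorch calls are guarded so the snippet also runs where those libraries are not installed, and the `CUBLAS_WORKSPACE_CONFIG` value is the one PyTorch's reproducibility notes recommend for deterministic cuBLAS behavior. The exact settings used in the study are not given in the text.

```python
import os
import random


def set_global_seed(seed):
    """Seed Python, NumPy, and PyTorch (when available) and request determinism."""
    random.seed(seed)

    try:
        import numpy as np
        np.random.seed(seed)
    except ImportError:
        pass  # NumPy not installed; nothing to seed

    try:
        import torch
        # Needed by some CUDA versions for deterministic cuBLAS kernels.
        os.environ.setdefault("CUBLAS_WORKSPACE_CONFIG", ":4096:8")
        torch.manual_seed(seed)
        torch.cuda.manual_seed_all(seed)
        torch.backends.cudnn.deterministic = True
        torch.backends.cudnn.benchmark = False
        torch.use_deterministic_algorithms(True)
    except ImportError:
        pass  # PyTorch not installed; only the stdlib generator is seeded


if __name__ == "__main__":
    set_global_seed(42)
    print(random.random())
```

Calling the routine once at the start of every run, with a different seed per independent run, keeps each run repeatable while still allowing variance to be measured across runs.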

Training Optimization Strategy

The training protocol incorporates several stability enhancements to address common challenges in transformer training. Label smoothing with a factor of 0.1 prevents overconfident predictions and improves model calibration. Gradient clipping at norm 0.5 prevents the exploding gradient problems common in deep transformer architectures. Cosine annealing learning rate scheduling with warm restarts provides smooth optimization convergence. Numerical stability monitoring detects and handles NaN values during training, ensuring robust optimization. Early stopping with a patience threshold prevents overfitting while maximizing model performance. These enhancements collectively ensure stable and reproducible training across different architectural configurations.
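The arithmetic behind three of these techniques can be shown in a few lines of plain Python. This is an illustrative sketch: the function names are hypothetical, the label-smoothing convention (spreading the smoothing mass uniformly over all classes) is the one used by PyTorch's built-in cross-entropy loss, and the restart period and learning rates are placeholder values not taken from the source.

```python
import math


def smoothed_cross_entropy(logits, target, smoothing=0.1):
    """Cross-entropy against a smoothed target.

    With K classes, the true class receives 1 - smoothing + smoothing / K
    and every other class receives smoothing / K.
    """
    k = len(logits)
    peak = max(logits)
    log_z = peak + math.log(sum(math.exp(z - peak) for z in logits))
    loss = 0.0
    for i, z in enumerate(logits):
        q = smoothing / k + ((1.0 - smoothing) if i == target else 0.0)
        loss -= q * (z - log_z)
    return loss


def clip_by_global_norm(grads, max_norm=0.5):
    """Rescale gradients so their L2 norm does not exceed max_norm."""
    total = math.sqrt(sum(g * g for g in grads))
    if total <= max_norm:
        return list(grads)
    scale = max_norm / total
    return [g * scale for g in grads]


def cosine_with_restarts(step, base_lr, min_lr=0.0, period=100):
    """Cosine annealing that restarts at base_lr every `period` steps."""
    t = step % period
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * t / period))


if __name__ == "__main__":
    print(smoothed_cross_entropy([2.0, -1.0], target=0))
    print(clip_by_global_norm([3.0, 4.0]))
    print([round(cosine_with_restarts(s, 1e-3), 6) for s in (0, 50, 99, 100)])
```

Smoothing penalizes extremely confident logits even when the prediction is correct, which is the mechanism behind the calibration benefit described above.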

3.2.6. Evaluation and Validation Methodology

Dataset Selection and Preprocessing

Three different FN datasets are used to ensure comprehensive evaluation across domains and linguistic patterns. FakeNewsNet provides social media-based FN with associated metadata and propagation patterns [31]. The ISOT dataset offers traditional news articles with clear fake/real distinctions [32]. The LIAR dataset presents fine-grained FND with multiple levels of veracity and political context [33]. Each dataset undergoes standardized preprocessing that includes text normalization, tokenization using model-specific tokenizers, and sequence truncation to maximum lengths determined by computational constraints. Stratified sampling produces train-validation-test splits that preserve the original class distributions.

Statistical Validation Framework

Rigorous statistical validation ensures the reliability and significance of the experimental findings. Multiple independent runs with different random seeds generate performance distributions for each architectural configuration. Paired t-tests compare optimal model performance with baseline architectures, providing statistical significance measures. Effect size calculations using Cohen’s d quantify the practical significance of performance improvements beyond statistical significance. 95% confidence intervals provide uncertainty estimates for all performance metrics. Cross-validation using stratified k-fold sampling validates the results across different data partitions. This comprehensive statistical framework ensures that the improvements reported represent genuine architectural advantages rather than random variation.
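For paired runs (the same seeds or folds evaluated under two architectures), the test statistic and effect size reduce to a few lines. The sketch below uses the paired-differences form of Cohen's d (mean difference divided by the standard deviation of the differences). The source does not say which variant of Cohen's d was used, so this choice, the function name, and the example scores are all assumptions. A p-value would additionally require the t distribution, for example via `scipy.stats.ttest_rel`.

```python
import math
from statistics import mean, stdev


def paired_t_and_cohens_d(scores_a, scores_b):
    """Return (t statistic, Cohen's d for paired samples, degrees of freedom)."""
    if len(scores_a) != len(scores_b) or len(scores_a) < 2:
        raise ValueError("need two equally long samples with at least 2 pairs")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    mean_diff = mean(diffs)
    sd_diff = stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd_diff == 0:
        raise ValueError("differences have zero variance; t is undefined")
    cohens_d = mean_diff / sd_diff
    t_stat = mean_diff / (sd_diff / math.sqrt(n))
    return t_stat, cohens_d, n - 1


if __name__ == "__main__":
    # Hypothetical accuracies from five paired runs; not results from the study.
    optimal = [0.80, 0.82, 0.79, 0.81, 0.83]
    baseline = [0.78, 0.79, 0.78, 0.78, 0.80]
    print(paired_t_and_cohens_d(optimal, baseline))
```

Note that in the paired form the two quantities are tied together by t = d · √n, so a large t with a small d signals many runs and not a large practical effect.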

Consistency and Generalizability Assessment

Model consistency is evaluated through variance analysis across multiple training runs and datasets. Standard deviation calculations across repeated experiments quantify the stability of the results, with thresholds established for acceptable performance variation. The cross-dataset evaluation assesses the generalizability of the model by training on one dataset and evaluating on the others. The consistency of component contributions is measured by comparing the results of the ablation study across datasets and training conditions. This multifaceted consistency assessment ensures that the optimal architecture provides reliable performance across diverse conditions and datasets, supporting its practical applicability for FND tasks.

4. Results and Discussion

4.1. Ablation Study Results of Transformer Models

In this section, we discuss the significance of various architectural components in four standard transformer models across three benchmark datasets based on detailed analysis of ablation studies. We also present the design of an optimal enhanced model, report its performance, and compare its results with those of the baseline models. This analysis highlights the critical components that contribute the most to the effectiveness of the model and informs the design of more efficient and accurate FND systems.

4.1.1. Ablation Study of BERT

The ablation study of each component of the BERT model in the three benchmark datasets and their importance are presented in Table 2 and Table 3. Based on the results of the ablation study, the attention mechanism emerges as the most critical component of the BERT architecture for FND, with its removal causing the highest average performance drop of 0.1504 and a standard deviation of 0.2303. In contrast, residual connections appear to be the least impactful, with an average performance degradation of just 0.0014 and minimal variance (standard deviation of 0.0016), suggesting limited importance in this context. Dataset-specific analysis reveals that the best-performing configuration for FakeNewsNet is the model without attention (accuracy: 0.5250), which, despite the general trend, may indicate overfitting or noise in the dataset. The ISOT dataset achieves peak accuracy (0.9997) with the baseline model intact, emphasizing its sensitivity to architectural changes. Interestingly, the LIAR dataset performs best when the feedforward network is removed (accuracy: 0.5955), highlighting the varied contribution of components depending on the characteristics of the dataset. In general, the average baseline performance for all datasets is 0.6871, with a standard deviation of 0.2257, demonstrating both the robustness and variability of BERT’s architecture in handling various FND tasks.

Table 2.

BERT Ablation Study: Performance Across All Datasets.

Table 3.

Component Importance Summary Across All Datasets (BERT).
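The importance figures reported above can be read as the mean and spread of the per-dataset performance drop caused by removing a component. The following is a minimal sketch of that calculation, not the authors' code: the function name `component_importance` and all accuracy values in the example are hypothetical, and the use of the population standard deviation is an assumption.

```python
from statistics import mean, pstdev


def component_importance(baseline, ablated):
    """Return (mean drop, standard deviation of the drop) across datasets.

    baseline, ablated: dicts mapping dataset name -> accuracy.
    A positive drop means removing the component hurt accuracy;
    a negative drop means the ablated model did better than the baseline.
    """
    drops = [baseline[name] - ablated[name] for name in baseline]
    # Population standard deviation is an assumption; the text does not
    # state which form of the standard deviation is reported.
    return mean(drops), pstdev(drops)


# Hypothetical accuracies, for illustration only (not taken from the tables).
baseline = {"FakeNewsNet": 0.50, "ISOT": 0.99, "LIAR": 0.58}
without_component = {"FakeNewsNet": 0.52, "ISOT": 0.60, "LIAR": 0.56}

avg_drop, std_drop = component_importance(baseline, without_component)
print(f"mean drop = {avg_drop:.4f}, std = {std_drop:.4f}")
```

With numbers of this shape, one large drop on a single dataset combined with small or negative changes elsewhere yields a high mean together with a high standard deviation, which is the pattern reported for the attention mechanism.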

4.1.2. Ablation Study of RoBERTa

Table 4 and Table 5 present the ablation study of the RoBERTa model. The attention mechanism stands out as the most critical component of the RoBERTa architecture for FND: its removal leads to the highest average performance drop across the datasets (0.1688, with a standard deviation of 0.2151). Positional encoding, in contrast, appears to be the least critical. Its removal yields an average drop of −0.0240, that is, a slight performance improvement on average, suggesting that RoBERTa’s pre-training dynamics may compensate for its absence in certain scenarios. Analyzing dataset-specific results, the optimal configuration for the FakeNewsNet dataset is achieved without positional encoding (accuracy: 0.5500), while the ISOT dataset performs best with the intact baseline configuration (accuracy: 0.9990), indicating that no ablation improves on the full architecture for this dataset. For the LIAR dataset, the highest accuracy is observed when the GELU activation function is removed (accuracy: 0.5945), reflecting a nuanced interaction between the choice of components and the characteristics of the dataset. Overall, the average baseline performance across all datasets is 0.6888, with a standard deviation of 0.2245, highlighting the overall effectiveness of RoBERTa in FND and underscoring the importance of attention mechanisms, while also revealing surprising flexibility in positional encoding.

Table 4.

RoBERTa Ablation Study: Performance Across All Datasets.

Table 5.

Component Importance Summary Across All Datasets (RoBERTa).

4.1.3. Ablation Study of DeBERTa

Table 6 and Table 7 present the analysis of the architectural components of the DeBERTa model. The feedforward network emerges as the most critical component in the DeBERTa architecture for FND, with its removal causing the highest average performance drop across the datasets (0.0453, with a standard deviation of 0.0565). In contrast, the relative positional encoding appears to be the least impactful, with an average performance gain of 0.0075 upon its removal, suggesting that DeBERTa’s core mechanisms are robust even without this feature. Evaluating dataset-specific outcomes, the best-performing configuration for both the FakeNewsNet and ISOT datasets is the unmodified baseline model, achieving accuracies of 0.5250 and 0.9997, respectively. However, on the LIAR dataset, the highest accuracy is observed when dropout is removed (0.6059), indicating that regularization may slightly hinder performance under certain data conditions. Overall, the average baseline performance across all datasets is 0.7001, with a standard deviation of 0.2128, reflecting strong average performance with considerable variability across datasets, while highlighting the central importance of the feedforward layer in the DeBERTa architecture.

Table 6.

DeBERTa Ablation Study: Performance Across All Datasets.

Table 7.

Component Importance Summary Across All Datasets (DeBERTa).

4.1.4. Ablation Study of DistilBERT

Based on the results in Table 8 and Table 9, the attention mechanism is identified as the most critical component in the DistilBERT architecture for FND, with its removal leading to the largest average performance drop (0.1823, with a standard deviation of 0.2054). In contrast, residual connections appear to be the least impactful, showing a negligible average performance drop of just 0.0005 and a standard deviation of 0.0007, indicating their minimal contribution to predictive accuracy in this context. Dataset-specific analysis reveals that the baseline configuration performs best on both the FakeNewsNet and ISOT datasets, with accuracies of 0.5250 and 0.9990, respectively, while the LIAR dataset achieves the highest accuracy of 0.5960 when the feedforward component is removed, suggesting some architectural redundancy or mitigation of overfitting in that setting. Overall, the average baseline performance across the datasets is 0.7023, with a standard deviation of 0.2111, reaffirming the strong baseline capabilities of DistilBERT and highlighting the central role of attention mechanisms in maintaining its performance.

Table 8.

DistilBERT Ablation Study: Performance Across All Datasets.

Table 9.

Component Importance Summary Across All Datasets (DistilBERT).

4.1.5. Ablation Study Results Summary

Table 10 reports the baseline performance of the four standard transformer models for FND, and Table 11 and Table 12 aggregate the results of the component-level analysis. Several key insights emerge regarding model effectiveness and architectural sensitivity in FND tasks. Among the four transformer variants evaluated, DistilBERT stands out as the best overall performer, achieving the highest average accuracy of 0.7023 and an average F1 score of 0.6459 despite having the lowest parameter count, which demonstrates its efficiency and suitability for resource-constrained environments. Across all models, the attention mechanism is identified as the most critical architectural component: its removal results in the largest average performance drop of 0.1671, reinforcing the foundational role of self-attention in contextual understanding and information representation. At the other end of the spectrum, the relative positional encoding component shows a slightly negative average impact of −0.0075, indicating that its removal can, in some cases, slightly benefit performance or that the component is otherwise redundant within these architectures. At the model level, attention is the most sensitive component for BERT, RoBERTa, and DistilBERT, with average performance drops of 0.1504, 0.1688, and 0.1823, respectively, when ablated. In contrast, DeBERTa shows the greatest degradation when the feedforward network is removed, with an average performance drop of 0.0453, suggesting that its disentangled attention and enhanced decoding mechanisms may reduce its reliance on traditional attention structures. Together, these insights highlight the dominant influence of attention-based components across transformer models while emphasizing the architectural dependencies unique to each variant.

Table 10.

Baseline Performance of Transformer Models Across Datasets.

Table 11.

Average Performance Metrics for Each Transformer Model.

Table 12.

Component Importance Across All Transformer Models.
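As a consistency check on the aggregated figure, the per-model attention drops quoted in this section can be averaged directly. The sketch below is illustrative only: it uses the four "most critical component" values stated in the text, and it assumes that the cross-model attention average is taken over the three models for which an attention drop is quoted here (the DeBERTa attention drop is not given in the text).

```python
from statistics import mean

# Largest average drop per model, as quoted in the text of this section.
most_critical = {
    "BERT": ("attention", 0.1504),
    "RoBERTa": ("attention", 0.1688),
    "DeBERTa": ("feedforward", 0.0453),
    "DistilBERT": ("attention", 0.1823),
}

# Keep only the models whose most critical component is attention.
attention_drops = [
    drop for component, drop in most_critical.values() if component == "attention"
]
attention_mean = mean(attention_drops)

print(f"models with attention as most critical: {len(attention_drops)}")
print(f"mean attention drop: {attention_mean:.4f}")
```

Under this assumption the mean is approximately 0.167, which agrees with the reported 0.1671 to within rounding.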

4.2. Optimal or Enhanced Model Results

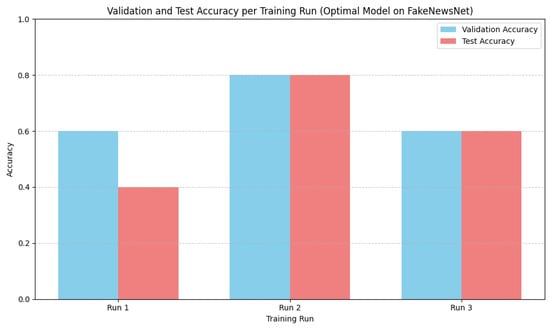

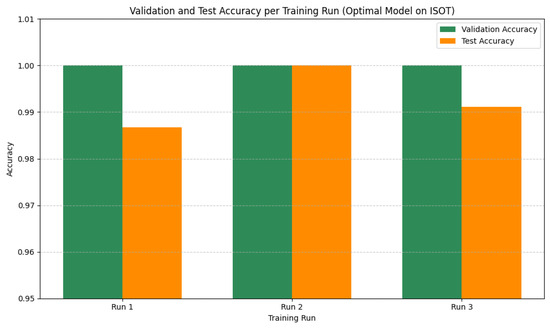

To ensure the robustness, reliability, and stability of the proposed enhanced or optimal FND model, we conducted three independent training runs on each of the three benchmark datasets: FakeNewsNet, ISOT, and LIAR. This multi-run evaluation strategy mitigates the influence of randomness and helps confirm the consistency of the model’s performance across varying initializations and data shuffling.

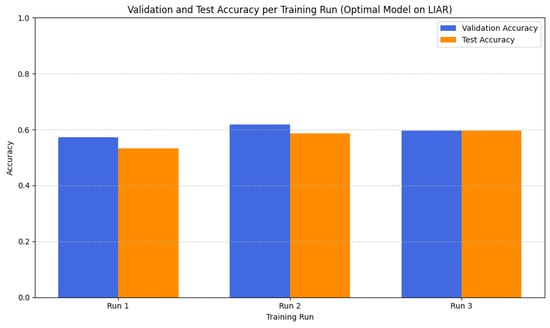

For each dataset, the best-performing run, determined by the highest validation accuracy, is selected as the final representative result for reporting. The progression of validation and test accuracies in the three runs is visualized in Figure 3, Figure 4 and Figure 5, corresponding to the FakeNewsNet, ISOT, and LIAR datasets, respectively. These plots provide a comparative view of the model’s generalization behavior across different training attempts and datasets.

Figure 3.

Validation and Test Accuracy Per Training Run of FakeNewsNet Dataset.

Figure 4.

Validation and Test Accuracy Per Training Run of ISOT Dataset.

Figure 5.

Validation and Test Accuracy Per Training Run of LIAR Dataset.
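The selection rule described above (keep the run with the highest validation accuracy and report that run's test result) can be sketched as follows. This is a minimal illustration rather than the authors' training code: the `RunResult` record, the seeds, and all accuracy values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RunResult:
    seed: int
    val_accuracy: float
    test_accuracy: float


def select_best_run(runs):
    """Pick the run with the highest validation accuracy.

    Test accuracy is deliberately not used for selection, so the
    reported test score is not chosen by looking at the test set.
    """
    return max(runs, key=lambda run: run.val_accuracy)


# Hypothetical results for three independent runs on one dataset.
runs = [
    RunResult(seed=0, val_accuracy=0.71, test_accuracy=0.70),
    RunResult(seed=1, val_accuracy=0.78, test_accuracy=0.75),
    RunResult(seed=2, val_accuracy=0.74, test_accuracy=0.77),
]

best = select_best_run(runs)
print(f"selected seed {best.seed}: val={best.val_accuracy:.2f}, test={best.test_accuracy:.2f}")
```

In this hypothetical example the selected run is not the one with the highest test accuracy, which is the expected behavior when selection is based on validation data only.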

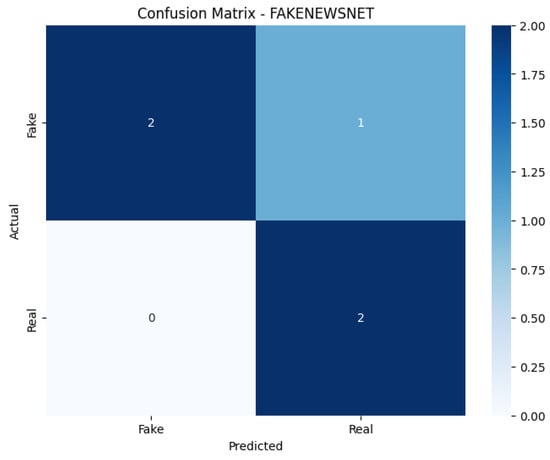

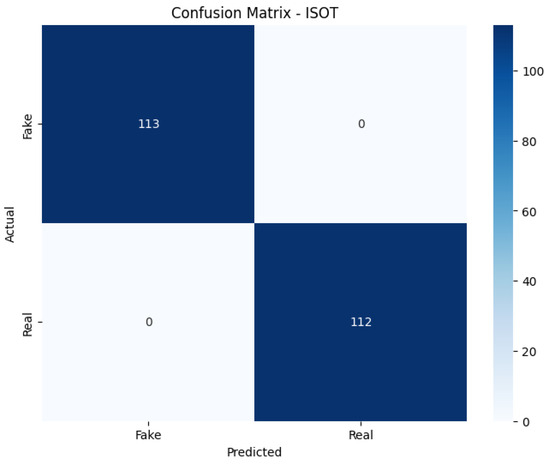

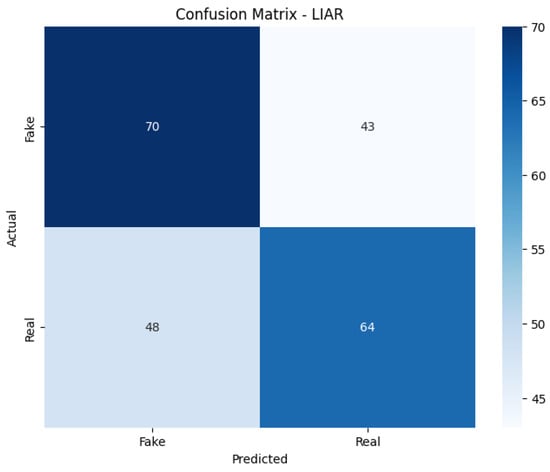

To further analyze the classification performance of the best run for each dataset, we present the corresponding confusion matrices in Figure 6, Figure 7 and Figure 8. These visualizations highlight the model’s ability to correctly distinguish between fake and real news, revealing class-wise prediction patterns and any potential imbalances or biases. This comprehensive evaluation procedure ensures that the reported results are not only optimal, but also statistically reliable and practically meaningful.

Figure 6.

Confusion Matrix of FakeNewsNet Dataset.

Figure 7.

Confusion Matrix of ISOT Dataset.

Figure 8.

Confusion Matrix of LIAR Dataset.
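For reference, the metrics discussed alongside these confusion matrices can be derived from the four cells of a binary confusion matrix. The sketch below assumes that "fake" is treated as the positive class and that the metrics are computed for that class only; the averaging scheme behind the reported scores may differ, and the counts used here are hypothetical rather than read from Figures 6 to 8.

```python
def binary_metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall, and F1 for the positive (fake) class."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    # Guard against empty denominators when a class is never predicted or present.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical counts, for illustration only.
metrics = binary_metrics(tp=40, fp=10, fn=10, tn=40)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

A gap between precision and recall in such a calculation indicates which kind of error dominates: high precision with lower recall means few real items are flagged as fake, but some fake items are missed.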

4.3. Performance Analysis and Discussion of the Optimal Model

Table 13 and Table 14 present a comprehensive evaluation of the proposed optimal FND model in three benchmark datasets: FakeNewsNet, ISOT, and LIAR. The results clearly demonstrate the strong generalizability and effectiveness of the model in varying linguistic and contextual settings.

Table 13.

Optimal Model Performance Summary.

Table 14.

Comparison with Ablation Study Baselines.

As shown in Table 13, the model achieved perfect classification performance on the ISOT dataset, with 100% in all metrics (accuracy, F1 score, precision, and recall). This suggests a strong capability to differentiate real from Fake News in longer, well-structured news articles. On FakeNewsNet, which contains noisy real-world social media content, the model reached an accuracy and F1 score of 80% and a precision of 86.67%, indicating a strong ability to correctly identify Fake News without excessive false positives. The LIAR dataset, which contains short and often ambiguous political statements, posed a greater challenge. Here, the model achieved a test accuracy of 59.56%, which, while lower than on the other datasets, is competitive given the inherent difficulty of the task. These results support the robustness and adaptability of the model across various domains and data types.

Table 14 compares the performance of the optimal model with the best-performing configurations from the ablation study for each dataset. The most notable improvement is observed on FakeNewsNet, where the optimal model achieves a substantial gain of +27.50 percentage points over the best baseline, representing a relative improvement of 52.4%. For the LIAR dataset, a modest improvement of +0.32 percentage points is recorded, still indicating enhanced model robustness in nuanced fact-checking tasks. The ISOT dataset, which already shows near-perfect baseline performance, sees a marginal gain of +0.03 percentage points, which brings the model to the upper limit of classification performance on this dataset.
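The distinction between an absolute gain (in percentage points) and a relative improvement (in percent of the baseline) can be made explicit with a short calculation. In the sketch below, the optimal accuracies are those stated in the text, while the baseline values are inferred by subtracting the reported gains from them; this inference is an assumption, since Table 14 is not reproduced here.

```python
def improvement(optimal_pct, baseline_pct):
    """Return (absolute gain in percentage points, relative gain in percent)."""
    gain_pp = optimal_pct - baseline_pct
    relative = 100.0 * gain_pp / baseline_pct
    return gain_pp, relative


# (optimal accuracy, inferred best-baseline accuracy), both in percent.
# Baselines are inferred as optimal minus reported gain (assumption).
cases = {
    "FakeNewsNet": (80.00, 52.50),
    "ISOT": (100.00, 99.97),
    "LIAR": (59.56, 59.24),
}

results = {name: improvement(opt, base) for name, (opt, base) in cases.items()}
for name, (gain_pp, relative) in results.items():
    print(f"{name}: +{gain_pp:.2f} percentage points, {relative:.2f}% relative")
```

For FakeNewsNet this reproduces the quoted pair of figures: a gain of 27.50 percentage points over a 52.50% baseline corresponds to a relative improvement of about 52.4%.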

It is also worth noting that the optimal model achieves these results using a relatively compact architecture, with parameter counts ranging between 3.1 and 4.3 million, significantly fewer than standard BERT models. This parameter efficiency, coupled with strong performance, positions the model as a viable candidate for real-world deployment in resource-constrained environments. In summary, the optimal model not only exceeds the ablation-based baselines but also demonstrates superior scalability, adaptability, and efficiency across datasets of varying complexity and structure. These findings reinforce the effectiveness of the architectural enhancements and training strategies incorporated into the model.

5. Conclusions and Future Scope

In this study, we introduced an improved transformer-based architecture for FND, developed through comprehensive ablation studies, component-level analysis, and empirical evaluation on benchmark datasets. By integrating advanced multi-head attention, dynamic positional encoding, and a refined classification head, the proposed model achieved strong performance while maintaining computational efficiency. Attention mechanisms were found to be the most critical component, with their removal leading to significant performance degradation. Additional stability techniques, such as label smoothing, learning rate warm-up, and reproducible training protocols, further improved training consistency. The model achieved an average accuracy of 79.85% and an F1 score of 79.84%, with accuracies of 80%, 100%, and 59.56% on FakeNewsNet, ISOT, and LIAR, respectively. It operates with only 3.1 to 4.3 million parameters, representing an 85% reduction in model size compared to full-sized BERT architectures. These results demonstrate that such FND models are suitable for deployment in resource-constrained environments. The real-world implications of these performance gains could have been illustrated more convincingly with access to a larger volume of labeled data. FND models are also unlikely to be flawless, because the problem itself is inherently dynamic, context-dependent, and linguistically complex, and any automated system must balance fairness, adaptability, and ethical constraints. To address these limitations, we aim to explore multilingual FND, cross-domain adaptation, explainable predictions, and integration with multimodal data for comprehensive misinformation detection.

Author Contributions

Conceptualization, J.R., M.M. and M.J.S.; methodology, J.R. and M.M.; software, J.R.; validation, M.M. and M.J.S.; formal analysis, J.R.; investigation, M.J.S.; resources, M.M. and M.J.S.; data curation, J.R.; writing—original draft preparation, J.R. and M.M.; writing—review and editing, M.J.S.; visualization, J.R.; supervision, M.J.S.; project administration, M.M. and M.J.S.; funding acquisition, M.J.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research and the APC were funded by Biomedical Sensors and Systems Lab, University of Memphis, Memphis, TN 38152, USA.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original datasets presented in the study are openly available on Kaggle:

- FakeNewsNet: https://www.kaggle.com/datasets/mdepak/fakenewsnet (accessed on 19 June 2025)

- ISOT: https://www.kaggle.com/datasets/csmalarkodi/isot-fake-news-dataset (accessed on 19 June 2025)

- LIAR: https://www.kaggle.com/datasets/doanquanvietnamca/liar-dataset (accessed on 19 June 2025)

Conflicts of Interest

The authors declare no conflicts of interest.

References

- IANS. Nearly Half of the Fake News Stories in India Are Political: Study. 2024. Available online: https://www.ndtv.com/india-news/nearly-half-of-the-fake-news-stories-in-india-are-political-study-7291481 (accessed on 20 June 2025).

- Zhou, X.; Zafarani, R.; Shu, K.; Liu, H. Fake news: Fundamental theories, detection strategies and challenges. In Proceedings of the Twelfth ACM International Conference on Web Search and Data Mining, Melbourne, Australia, 11–15 February 2019; pp. 836–837.

- Zhou, X.; Zafarani, R. A survey of fake news: Fundamental theories, detection methods, and opportunities. ACM Comput. Surv. (CSUR) 2020, 53, 1–40.

- Roumeliotis, K.I.; Tselikas, N.D.; Nasiopoulos, D.K. Fake News Detection and Classification: A Comparative Study of Convolutional Neural Networks, Large Language Models, and Natural Language Processing Models. Future Internet 2025, 17, 28.

- Kavtaradze, L. Challenges of Automating Fact-Checking: A Technographic Case Study. Emerg. Media 2024, 2, 236–258.

- PolitiFact. PolitiFact—Fact-Checking U.S. Politics. 2025. Available online: https://www.politifact.com/ (accessed on 1 April 2025).

- University of Texas at Arlington, IDIR Lab. ClaimBuster: Automated Fact-Checking Platform. 2014. Available online: https://idir.uta.edu/claimbuster/ (accessed on 2 April 2025).

- Ngueajio, M.K.; Aryal, S.; Atemkeng, M.; Washington, G.; Rawat, D. Decoding fake news and hate speech: A survey of explainable ai techniques. ACM Comput. Surv. 2025, 57, 1–37.

- Wu, F.; Chen, S.; Gao, G.; Ji, Y.; Jing, X.Y. Balanced multi-modal learning with hierarchical fusion for fake news detection. Pattern Recognit. 2025, 164, 111485.

- Shen, L.; Long, Y.; Cai, X.; Razzak, I.; Chen, G.; Liu, K.; Jameel, S. Gamed: Knowledge adaptive multi-experts decoupling for multimodal fake news detection. In Proceedings of the Eighteenth ACM International Conference on Web Search and Data Mining, Hannover, Germany, 10–14 March 2025; pp. 586–595.

- Alghamdi, J.; Lin, Y.; Luo, S. Fake news detection in low-resource languages: A novel hybrid summarization approach. Knowl.-Based Syst. 2024, 296, 111884.

- Agarwal, A.; Singh, Y.P.; Rai, V. Deciphering Deception: Unmasking Fake News in Multilingual Contexts. In Proceedings of the 2024 IEEE International Conference on Computing, Power and Communication Technologies (IC2PCT), Greater Noida, India, 9–10 February 2024; Volume 5, pp. 807–812.

- Gao, X.; Wang, X.; Chen, Z.; Zhou, W.; Hoi, S.C. Knowledge enhanced vision and language model for multi-modal fake news detection. IEEE Trans. Multimed. 2024, 26, 8312–8322.

- Hashmi, E.; Yayilgan, S.Y.; Yamin, M.M.; Ali, S.; Abomhara, M. Advancing fake news detection: Hybrid deep learning with fasttext and explainable AI. IEEE Access 2024, 12, 44462–44480.

- Zhang, L.; Zhang, X.; Zhou, Z.; Zhang, X.; Wang, S.; Yu, P.S.; Li, C. Early detection of multimodal fake news via reinforced propagation path generation. IEEE Trans. Knowl. Data Eng. 2024, 37, 613–625.

- Xie, B.; Ma, X.; Wu, J.; Yang, J.; Fan, H. Knowledge graph enhanced heterogeneous graph neural network for fake news detection. IEEE Trans. Consum. Electron. 2023, 70, 2826–2837.

- Al-Alshaqi, M.; Rawat, D.B.; Liu, C. Ensemble Techniques for Robust Fake News Detection: Integrating Transformers, Natural Language Processing, and Machine Learning. Sensors 2024, 24, 6062.

- Park, M.; Chai, S. Constructing a user-centered fake news detection model by using classification algorithms in machine learning techniques. IEEE Access 2023, 11, 71517–71527.

- Jing, J.; Wu, H.; Sun, J.; Fang, X.; Zhang, H. Multimodal fake news detection via progressive fusion networks. Inf. Process. Manag. 2023, 60, 103120.

- Raza, S.; Ding, C. Fake news detection based on news content and social contexts: A transformer-based approach. Int. J. Data Sci. Anal. 2022, 13, 335–362.

- Seddari, N.; Derhab, A.; Belaoued, M.; Halboob, W.; Al-Muhtadi, J.; Bouras, A. A hybrid linguistic and knowledge-based analysis approach for fake news detection on social media. IEEE Access 2022, 10, 62097–62109.

- Kaliyar, R.K.; Goswami, A.; Narang, P. FakeBERT: Fake news detection in social media with a BERT-based deep learning approach. Multimed. Tools Appl. 2021, 80, 11765–11788.

- D’ulizia, A.; Caschera, M.C.; Ferri, F.; Grifoni, P. Fake news detection: A survey of evaluation datasets. PeerJ Comput. Sci. 2021, 7, e518.

- Kuntur, S.; Wróblewska, A.; Paprzycki, M.; Ganzha, M. Under the Influence: A Survey of Large Language Models in Fake News Detection. IEEE Trans. Artif. Intell. 2024, 6, 458–476.

- Plikynas, D.; Rizgelienė, I.; Korvel, G. Systematic Review of Fake News, Propaganda, and Disinformation: Examining Authors, Content, and Social Impact through Machine Learning. IEEE Access 2025, 13, 17583–17629.

- Hussain, F.G.; Wasim, M.; Hameed, S.; Rehman, A.; Asim, M.N.; Dengel, A. Fake News Detection Landscape: Datasets, Data Modalities, AI Approaches, their Challenges, and Future Perspectives. IEEE Access 2025, 13, 54757–54778.

- Devlin, J.; Chang, M.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv 2018, arXiv:1810.04805.

- Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A Robustly Optimized BERT Pretraining Approach. arXiv 2019, arXiv:1907.11692.

- He, P.; Liu, X.; Gao, J.; Chen, W. DeBERTa: Decoding-enhanced BERT with Disentangled Attention. arXiv 2020, arXiv:2006.03654.

- Sanh, V.; Debut, L.; Chaumond, J.; Wolf, T. DistilBERT, a distilled version of BERT: Smaller, faster, cheaper and lighter. arXiv 2019, arXiv:1910.01108.

- Shu, K.; Mahudeswaran, D.; Wang, S.; Lee, D.; Liu, H. FakeNewsNet: A Data Repository with News Content, Social Context and Dynamic Information for Studying Fake News on Social Media. arXiv 2018, arXiv:1809.01286.

- Malarkodi, C.S. ISOT Fake News Dataset. 2018. Available online: https://www.kaggle.com/datasets/csmalarkodi/isot-fake-news-dataset (accessed on 20 June 2025).

- Wang, W.Y. “Liar, Liar Pants on Fire”: A new benchmark dataset for fake news detection. arXiv 2017, arXiv:1705.00648.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).