1. Introduction

We live in a deeply interconnected society where aspects of someone’s personal and social life, professional affiliations, hobbies, and interests become part of a public profile. A notable example where different facets of a person’s life become publicized is their social network profiles or digital identities. The intricate relationships between online personalities and our physical world have useful applications in the areas of decision making, information fusion, artificial intelligence, pattern recognition, and biometrics. Extensive studies have evaluated intelligent methods and information fusion techniques in the information security domain [1,2]. Recent advancements in machine learning and deep learning present new opportunities to extract new knowledge from the publicly available data [3] and, thus, pose new threats to user privacy. This review article examines how integrating de-identification with other types of auxiliary information, which may be available directly or indirectly, can impact the performance of existing biometric identification systems. Analytical discussions on the de-identification of biometric data to protect user privacy are presented. This article also provides insights into the current and emerging research in the biometric domain and poses some open questions that are of prime import to information privacy and security researchers. The answers to these questions can assist the development of new methods for biometric security and privacy preservation in an increasingly connected society.

Privacy is an essential social and political issue, characterized by a wide range of enabling and supporting technologies and systems [4]. Amongst these are multimedia, big data, communications, data mining, social networks, and audio-video surveillance [5,6]. Along with classical methods of encryption and discretionary access controls, de-identification became one of the primary methods for protecting the privacy of multimedia content [7]. De-identification is defined as a process of removing personal identifiers by modifying or replacing them to conceal some information from public view [8]. However, de-identification has not been a primary focus of biometric research despite the pressing need for methodologies to protect personal privacy while ensuring adequate biometric trait recognition.

The literature offers no single agreed-upon definition of de-identification. For instance, Meden et al. [9] defined de-identification as follows: “The process of concealing personal identifiers or replacing them with suitable surrogates in personal information to prevent the disclosure and use of data for purposes unrelated to the purpose for which the data were originally collected”. However, Nelson et al. [10] proposed the following definition: “De-identification refers to the reversible process of removing or obscuring any personally identifiable information from individual records in a way that minimizes the risk of unintended disclosure of the identity of individuals and information about them. It involves the provision of additional information to enable the extraction of the original identifiers by, for instance, an authorized body”. While the primary goal of de-identification is to protect user data privacy, its implementation differs strikingly depending on the application domain or the commercial value of the designed system. In the subsequent sections, we explore the differences among de-identification methodologies in depth, create a taxonomy of de-identification methods, and introduce new types of de-identification based on auxiliary biometric features.

This article summarizes fragmented research on biometric de-identification and provides a unique classification based on the mechanisms that achieve de-identification. Thus, it makes the following contributions:

For the first time, a systematic review is presented with a circumspect categorization of all de-identification methodologies based on the modalities employed and the types of biometric traits preserved after de-identification.

Four new types of emerging modalities are presented where de-identification is desirable and beneficial, namely sensor-based, emotion-based, social behavioral biometrics-based, and psychological traits-based de-identification.

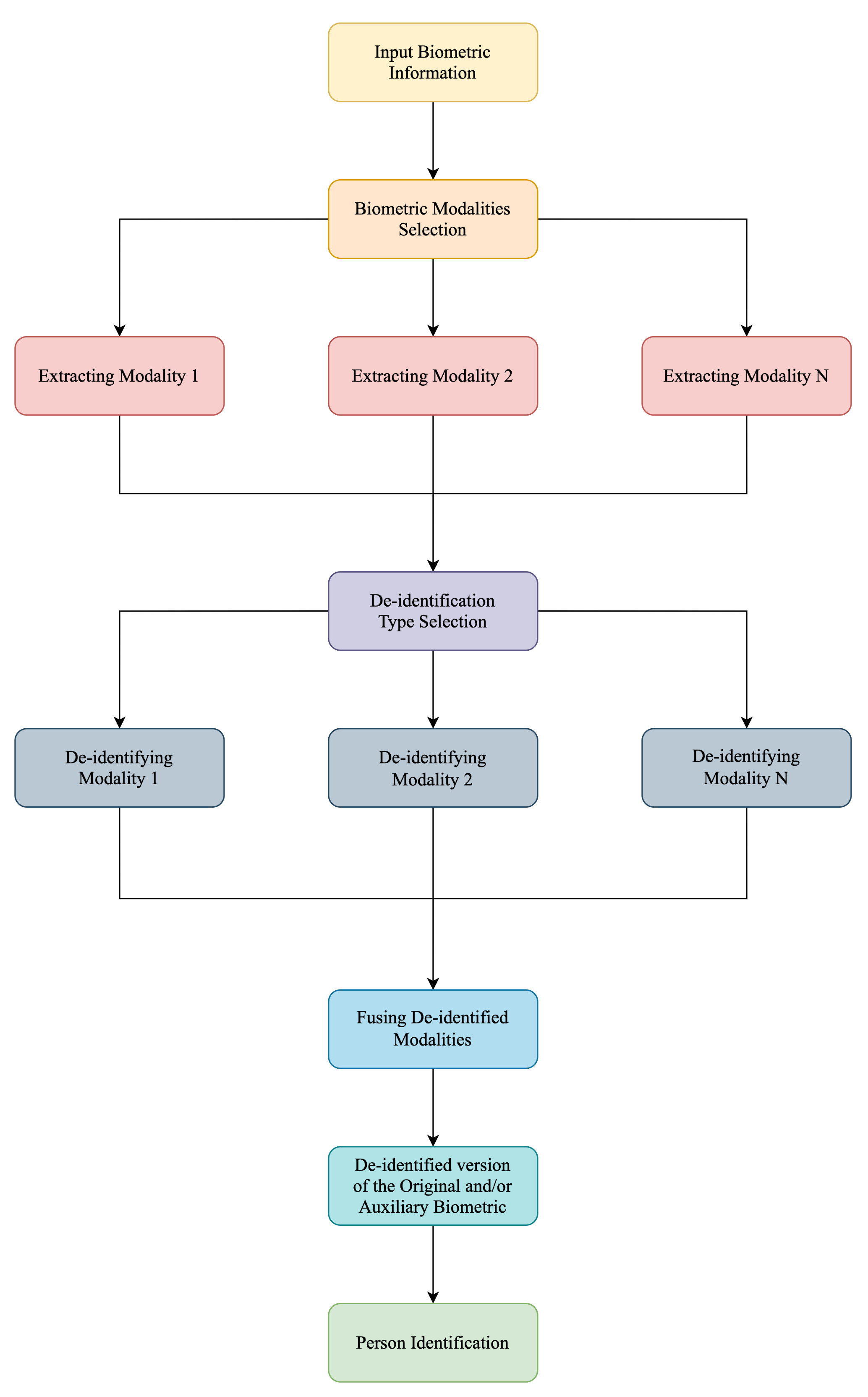

A new paradigm for the design and implementation of multi-modal de-identification is proposed by considering the categories of traditional, soft, and auxiliary biometric traits and their de-identification.

A list of applications in the domains of cybersecurity, surveillance, risk analysis, mental health, and consumer applications is presented, where de-identification can be of critical importance in securing the privacy of biometric data.

2. Taxonomy of Biometric De-Identification

We start by introducing the definitions of biometrics, traditional biometrics, soft biometrics, social behavioral biometrics, and emerging biometrics.

Definition 1. Biometrics: Biometrics are human characteristics that can be used to digitally identify a person to grant access to systems, devices, or data [11].

Definition 2. Traditional Biometrics: Traditional biometrics are defined as well-established biometrics that are categorized as either physiological (face, fingerprint, and iris) or behavioral (gait, voice, and signature) [11].

Definition 3. Soft Biometrics: The term soft biometrics is used to describe traits such as age, gender, ethnicity, height, weight, emotion, body shape, hair color, facial expression, linguistic and paralinguistic features, and tattoos that possess significantly lower uniqueness than traditional biometric traits [12].

Definition 4. Social Behavioral Biometrics: Social Behavioral Biometrics (SBB) is the identification of an actor (person or avatar) based on their social interactions and communication in different social settings [13].

Definition 5. Emerging Biometrics: Emerging biometrics are new biometric measures that have shown the prospect of enhancing the performance of traditional biometrics when these new modalities are fused with established ones [14].

Thus, social behavioral biometrics can be considered one example of emerging biometrics. Sensor-based, emotion-based, and psychological traits-based user identification are other examples of new identification types.

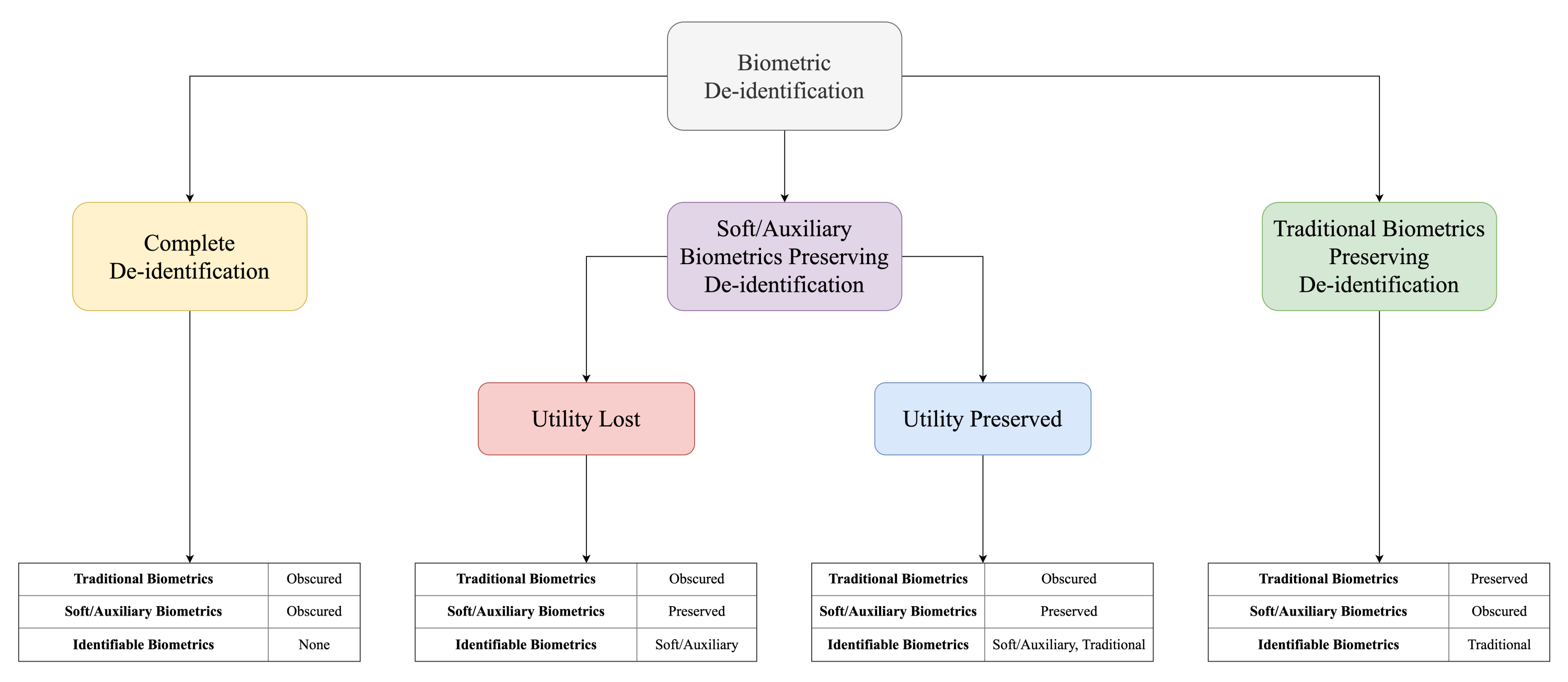

Current research into de-identification is highly dispersed. Hence, there has been no consistent method of classifying different approaches and reconciling various definitions of de-identification. In this review article, we categorize biometric de-identification into three classes based on the biometric type and the ability of a biometric system to identify a subject. The categories are as follows:

- Complete de-identification;
- Soft biometric preserving de-identification;
  - (a) Utility lost de-identification;
  - (b) Utility retained de-identification;
- Traditional biometric preserving de-identification.

The proposed classifications of de-identification are discussed below.

Complete De-identification:

Complete de-identification is the first category of de-identification research. The known problem of pair-wise constraint identification refers to a situation where a system can determine that two de-identified faces in a video belong to the same individual by using hairstyle, clothing, dressing style, or other soft biometric features [5]. Thus, in addition to traditional biometric de-identification, soft biometric de-identification is also necessary. We define complete de-identification as a process where the biometric modality of a person is entirely de-identified, for instance, by being fully masked or obscured. Neither the identity of a person based on this biometric modality nor the soft biometrics of the de-identified person can be recognized. This is true for human identification through visual inspection, as well as for a more common computer-based biometric system. Complete de-identification is used in mass media or police video footage, where sensitive information needs to be hidden [15].

Soft Biometric Preserving De-identification:

Soft biometric preserving de-identification is the second proposed category. It is a process of de-identifying a particular traditional biometric trait, while the soft biometric traits remain distinguishable. The purpose of such de-identification methods is to remove the ability to identify a person using the given biometric, while still retaining soft biometric traits. For example, this type of de-identification would prevent face recognition technologies from identifying an individual, while still retaining their gender or age information [16], making it possible for a user to post a natural-looking video message on a public forum anonymously.

We further subdivide this category into utility lost and utility retained de-identification. As established above, in this group of methods, soft biometric traits are preserved, while the key traditional biometric trait or traits are obscured. The main difference is that in utility retained de-identification, the biometric system is able to establish the identity of a person using the obscured key traditional biometric. In utility lost de-identification, this is no longer possible for a human observer or for a computer [17].

Traditional Biometric Preserving De-identification:

Traditional biometric preserving de-identification is the third proposed category. It encompasses methods where only the soft biometric traits are obscured, while the key traditional biometric traits are preserved. Both human inspectors and biometric recognition systems are able to identify an individual based on their key biometric trait, whereas the soft biometric traits, such as height or hair color, are rendered non-identifiable [18]. For example, the face of an individual remains the same, while the height or hair color is changed.

Figure 1 depicts the proposed classification of biometric de-identification.

Finally, it is worth noting that a traditional multi-modal biometric identification system can also be described within the above taxonomy: key traditional biometrics are preserved, soft biometrics are preserved, and identification can be performed from both traditional and soft biometrics.
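As an illustration only, the categories above can be encoded as a small decision function. The following is a minimal sketch, assuming three hypothetical boolean flags (`traditional_obscured`, `soft_traits_recognizable`, `identity_recoverable`) that are not part of the original taxonomy but summarize the properties it distinguishes.

```python
from dataclasses import dataclass
from enum import Enum


class Category(Enum):
    COMPLETE = "complete de-identification"
    SOFT_PRESERVING_UTILITY_LOST = "soft biometric preserving, utility lost"
    SOFT_PRESERVING_UTILITY_RETAINED = "soft biometric preserving, utility retained"
    TRADITIONAL_PRESERVING = "traditional biometric preserving"
    NOT_DE_IDENTIFIED = "no de-identification (ordinary biometric system)"


@dataclass(frozen=True)
class Outcome:
    """Hypothetical description of the data after processing."""
    traditional_obscured: bool       # key trait (e.g., face) was modified
    soft_traits_recognizable: bool   # age, gender, clothing, etc. still visible
    identity_recoverable: bool       # a matcher can still identify the key trait


def classify(outcome: Outcome) -> Category:
    """Map an outcome onto the taxonomy categories described in the text."""
    if not outcome.traditional_obscured:
        # Key trait untouched: either nothing was hidden, or only soft traits were.
        if outcome.soft_traits_recognizable:
            return Category.NOT_DE_IDENTIFIED
        return Category.TRADITIONAL_PRESERVING
    if not outcome.soft_traits_recognizable:
        if outcome.identity_recoverable:
            # Assumption: this combination is not named in the taxonomy.
            raise ValueError("combination not covered by the taxonomy")
        return Category.COMPLETE
    if outcome.identity_recoverable:
        return Category.SOFT_PRESERVING_UTILITY_RETAINED
    return Category.SOFT_PRESERVING_UTILITY_LOST
```

For example, `classify(Outcome(True, True, False))` yields the utility lost sub-category, whereas an unmodified multi-modal system corresponds to `Outcome(False, True, True)`.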

In addition to the above-mentioned categories, the de-identification methods can be either reversible or non-reversible [6].

Definition 6. Reversible De-identification: In reversible de-identification, the system is developed such that the modified biometric traits can be reversed back to their original form [6].

Definition 7. Irreversible De-identification: In irreversible de-identification, the transformation is intentionally developed not to be reversible [6].

Recent developments have expanded our traditional understanding of biometric traits from physiological and behavioral to social, temporal, emotional, sensor-based, and other auxiliary traits [19].
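The distinction drawn in Definitions 6 and 7 can be made concrete with a toy example. The sketch below is an assumption-laden illustration, not the method of any cited work: it scrambles a flat list of pixel values with a keyed permutation, which an authorized party holding the key can invert. The function names are hypothetical, and Python's `random.Random` is used only for brevity; it is not cryptographically secure.

```python
import random


def _permutation(n: int, key: int) -> list[int]:
    """Derive a repeatable permutation of range(n) from an integer key."""
    order = list(range(n))
    random.Random(key).shuffle(order)  # illustrative only, not secure
    return order


def scramble(pixels: list[int], key: int) -> list[int]:
    """Reversible de-identification: reorder pixels with a keyed permutation."""
    order = _permutation(len(pixels), key)
    return [pixels[source] for source in order]


def unscramble(scrambled: list[int], key: int) -> list[int]:
    """Invert scramble() when the same key is available."""
    order = _permutation(len(scrambled), key)
    original = [0] * len(scrambled)
    for position, source in enumerate(order):
        original[source] = scrambled[position]
    return original
```

An irreversible transformation, by contrast, would discard information (for example, by averaging or masking) so that no key could restore the original values.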

Definition 8. Auxiliary Biometric Trait: All biometric traits that are not unique enough on their own for person identification can be considered auxiliary biometric traits. Thus, spatio-temporal patterns, idiosyncratic communication styles, personality types, emotions, age, gender, clothing, and social network connectivity are all examples of auxiliary biometric traits [19].

For example, a person’s emotion can be considered an auxiliary trait, while their face or gait is treated as a traditional biometric trait. Either emotion or a key trait (or both) can be obscured in order to retain user privacy, based on the application domain and the main purpose of the biometric system.

Based on the above discussion, we propose an expansion of the taxonomy of biometric de-identification to include the aforementioned auxiliary categories of emerging biometric traits. The category definitions include the previously introduced taxonomy, where the notion of soft biometrics is expanded to include emerging auxiliary traits:

- Complete de-identification;
- Auxiliary biometric preserving de-identification;
  - (a) Utility lost de-identification;
  - (b) Utility retained de-identification;
- Traditional biometric preserving de-identification.

This classification is reflected in Figure 1.

3. Comprehensive Classification of Existing De-Identification Methods

Privacy of biometric data is of paramount importance [20]. The research on biometric de-identification originated about a decade ago, with earlier works considering complete de-identification as the primary method of ensuring user privacy. This section summarizes key findings in the domains of traditional and soft biometric de-identification and classifies existing research studies into the proposed de-identification categories.

Complete de-identification refers to the modification of the original biometric trait such that the identifying information is lost. A comprehensive review of methods for visual data concealment is found in the work by Padilla-Lopez et al. [21]. These methods include filtering, encryption, reduction through k-same methods or object removal, visual abstraction, and data hiding. Korshunov et al. [22] used blurring techniques for complete face de-identification. Their process applied a blurring filter on the localized portion of a face. They also applied pixelization and masking to measure their impact on face de-identification. Subsequently, they performed a masking operation on a face image to fully prevent a biometric recognition system from identifying a face. Another work by Cichowski et al. [23] proposed a reversible complete de-identification method based on reallocating pixels in an original biometric. Recently, a complete generative de-identification system for full body and face was developed, utilizing an adaptive approach to de-identification [24]. In 2020, an interesting study [25] considered the effect of video data reduction on user awareness of privacy. Chriskos et al. [26] used hypersphere projection and singular value decomposition (SVD) to perform de-identification.
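To illustrate the kind of filtering operation mentioned above, the following minimal sketch pixelates a grayscale image by replacing each tile with its mean intensity. It is a generic example and not the implementation of any cited work; the image representation (a list of rows of integers) and the `block` parameter are assumptions made for illustration.

```python
def pixelate(image: list[list[int]], block: int) -> list[list[int]]:
    """Replace each block x block tile of a grayscale image with its mean."""
    if block < 1:
        raise ValueError("block must be a positive integer")
    height = len(image)
    width = len(image[0]) if height else 0
    out = [row[:] for row in image]  # copy so the input is left untouched
    for top in range(0, height, block):
        for left in range(0, width, block):
            rows = range(top, min(top + block, height))
            cols = range(left, min(left + block, width))
            total = sum(image[r][c] for r in rows for c in cols)
            mean = total // (len(rows) * len(cols))  # integer mean of the tile
            for r in rows:
                for c in cols:
                    out[r][c] = mean
    return out
```

Larger values of `block` remove more detail. In practice, such a filter would typically be applied only to a detected face region rather than to the whole frame.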

Behavioral biometric de-identification is also a popular topic. In one of the earlier works on speaker anonymization, Jin et al. [

27] proposed a speaker de-identification system to prevent revealing the identity of the speaker to unauthorized listeners. The authors used a Gaussian Mixture Model (GMM) and a Phonetic approach for voice transformation and compared the performance. Magarinos et al. [

28] suggested a speaker de-identification and re-identification model to secure the identity of the speaker from unauthorized listeners. In [

29], the authors developed a speaker anonymization system by synthesizing the linguistic and speaker identity features from speech using neural acoustic and waveform models. Patino et al. [

30] designed an irreversible speaker anonymization system using the McAdams coefficient to convert the spectral envelope of voice signals. Most recently, Turner et al. [

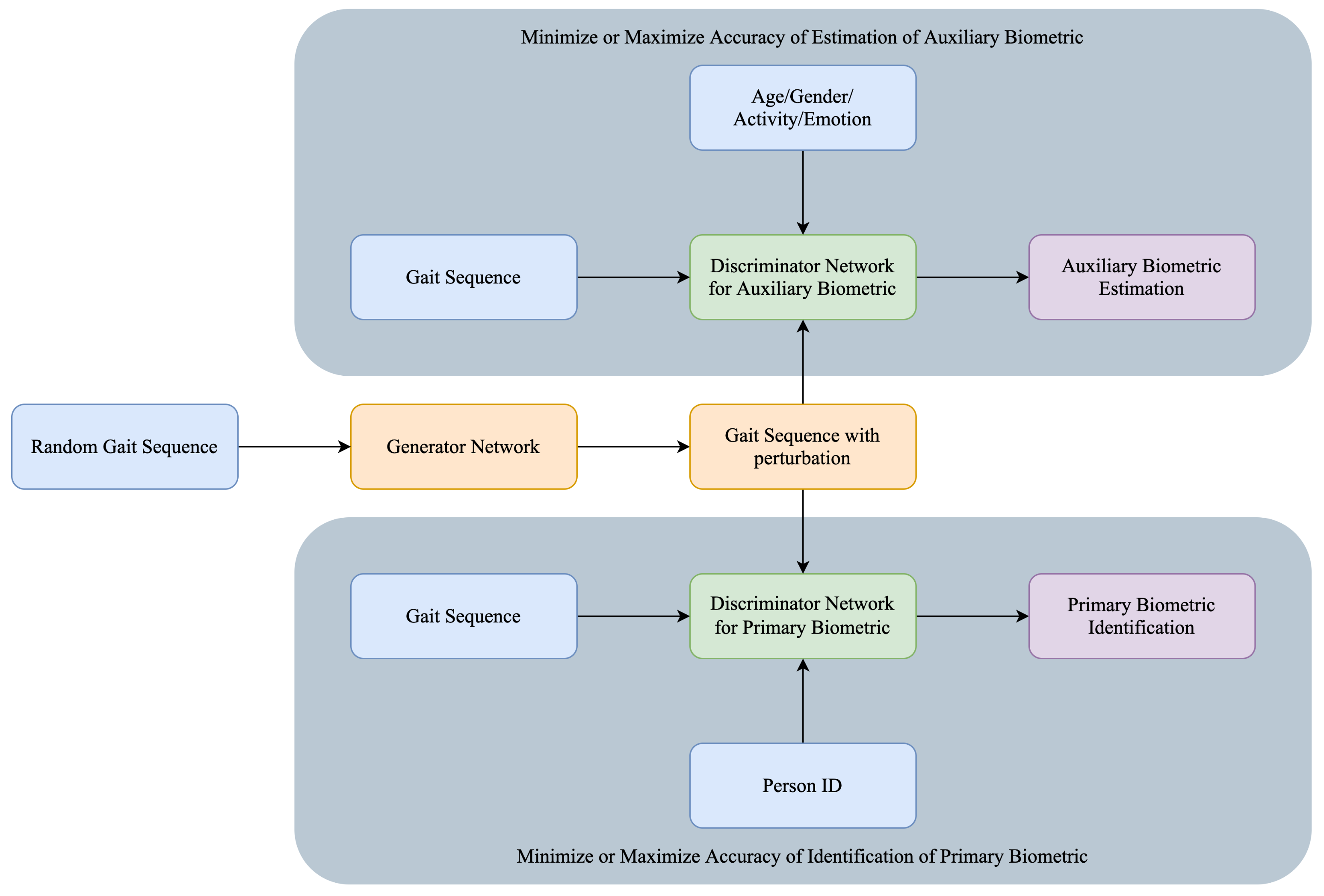

31] presented a voice anonymization system that improved the anonymity of the existing x-vector by learning the distributional properties of the vector space. The generated anonymous voices were highly dissimilar and diverse from the original speakers while preserving the intra-similarity distribution. One of the most recent works on gait de-identification was carried out in 2019 by Tieu et al. [

32]. They developed a gait de-identification system based on Spatio-Temporal Generative Adversarial Network (ST-GAN). The network incorporated noise in the gait distribution to synthesize the gait sequences for anonymization. In [

33], the authors proposed a method that produced fully anonymized speech by adopting many-to-many voice transformation techniques based on variational autoencoders (VAEs). The method changed speaker’s identity vectors of the VAE input in order to anonymize the speech data. The summary of complete de-identification research is presented in

Table 1.


Soft biometrics preserving de-identification aims to remove traditional biometric traits from the data while retaining the soft or auxiliary traits. For example, in gait recognition, clothing information can be retained, while gait patterns are rendered unrecognizable. The majority of research in this category has been performed on face biometrics.

Table 2 summarizes research focusing on soft biometric preserving utility lost de-identification, and Table 3 summarizes research focusing on soft biometric preserving utility retained de-identification.

The k-Same technique is a commonly used method for soft biometrics preserving utility lost de-identification [16]. This method determines the similarity between faces based on a distance metric and creates new faces by averaging image components, which may be the original image pixels or eigenvectors, and is shown to be more effective than pixelation or blurring. Gross et al. [34] proposed the k-Same-M approach for face de-identification while preserving facial expression as soft biometrics by incorporating the Active Appearance Model (AAM). An active appearance model is a computer vision algorithm for matching a statistical model of object shape and appearance to a new image. Meden et al. [35] advanced this research further by proposing the k-Same-Net, which combined the k-anonymity algorithm with a generative neural network architecture. Their method could de-identify the face while preserving its utility, natural appearance, and emotions. Du et al. [36] explicitly preserved race, gender, and age attributes in face de-identification. Given a test image, the authors computed the attributes and selected the corresponding attribute-specific AAMs. Meng et al. [37] adopted a model-based approach, representing faces as AAM features to avoid ghosting artifacts. Their approach identified k faces that were furthest away from a given probe face image. The algorithm calculated the average of the k furthest faces and returned it as a de-identified face, keeping the facial expression unchanged. Wang et al. [38] proposed a face de-identification method using multi-mode discriminant analysis with AAM. By using orthogonal decomposition of the multi-attribute face image, they established the independent subspace of each attribute, obtained the corresponding parameter, and selectively changed the parameters of other attributes in addition to the expression. Their system only preserves the facial expression and obscures all the other attributes. The k-Same furthest algorithm guarantees that the face de-identified by it can never be recognized as the original face, as long as the identity distance measure used to recognize the de-identified faces is the same as that used by the k-Same furthest method.
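To make the averaging step of the k-Same family concrete, the sketch below groups face feature vectors into clusters of at least k members and replaces every member with the cluster mean, so that each surrogate corresponds to at least k originals. It is a simplified illustration under stated assumptions: faces are plain lists of floats (pixels or model coefficients), Euclidean distance is the similarity measure, and the function name `k_same` is chosen here for illustration. It is not the exact procedure of [16] or of any variant discussed above.

```python
import math


def k_same(faces: list[list[float]], k: int) -> list[list[float]]:
    """Replace each face vector with the mean of a cluster of >= k faces."""
    if k < 1 or len(faces) < k:
        raise ValueError("need at least k faces and k >= 1")
    remaining = list(range(len(faces)))
    result: list[list[float]] = [[] for _ in faces]
    while remaining:
        if len(remaining) < 2 * k:
            # Too few faces left for two clusters: merge them all into one.
            cluster = remaining
        else:
            seed = remaining[0]
            by_distance = sorted(
                remaining, key=lambda i: math.dist(faces[seed], faces[i])
            )
            cluster = by_distance[:k]  # seed plus its k-1 nearest neighbours
        dim = len(faces[cluster[0]])
        mean = [sum(faces[i][d] for i in cluster) / len(cluster) for d in range(dim)]
        for i in cluster:
            result[i] = list(mean)
        remaining = [i for i in remaining if i not in cluster]
    return result
```

Because every surrogate is shared by at least k inputs, a matcher that links a surrogate back to its sources cannot single out one of them with probability better than 1/k under this model. Selecting the k furthest faces instead of the k nearest would move the sketch toward the k-Same furthest idea described above.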

Apart from the k-same based methods, several other approaches were proposed for soft biometrics preserving de-identification. Bitouk et al. [39] introduced an interesting idea of face-swapping, where a new face was blended with an original face and then lighting and contrast were adjusted to create a natural-looking de-identified face. The system preserved the body shape and pose of the person. Li and Lyu [40] used a neural style transfer method for face-to-face attribute transfer. The target was to preserve the consistency of non-identity attributes between the input and anonymized data while keeping the soft biometrics unaffected by this transfer. Brkic et al. [41] adopted a neural style transfer technique for face, hairstyle, and clothing de-identification, keeping the body shape preserved. Another work by the same authors focused on transferring the style content of an input image to the target image and performed full-body and face obfuscation, while the shape of the subject remained identifiable [42]. Yang et al. [43] proposed an identity concealing method that preserved the pose, hair color, and facial expression. Their method added an adversarial identity mask to the original face image to remove the identity. Chi and Hu [44] used Facial Identity Preserving (FIP) features to preserve the aesthetics of the original images, while still achieving k-anonymity-based facial image de-identification. The main characteristic of the FIP features was that, unlike conventional face descriptors, they significantly reduced intra-identity variances while maintaining inter-identity distinctions. In [45], the authors designed a gait de-identification system by using 2D Gaussian filtering to blur the human body silhouettes in order to conceal human body posture information while preserving the activity information. Not all work on de-identification involved videos and facial images. Malhotra et al. [46] proposed an adversarial learning-based perturbation algorithm, which modified the fingerprint of the finger-selfie. The algorithm prevented the finger-selfie from being used to identify the person without authorization, while entirely retaining the visual quality. Zhang et al. [47] proposed an iris de-identification system that prevented iris-based identification while fully preserving the biological features of the iris in the eye image. The iris area was detected based on the Hough transform, and the transformation of iris information was performed by using the adopted polar coordinate transform. In [48], the authors designed a de-identification system using a face-swapping technique, Deepfake. The system kept the body and face key points almost unchanged, which is useful for medical purposes. Aggarwal et al. [49] proposed an architecture for face de-identification using conditional generative adversarial networks. This proposed method successfully preserved emotion while obscuring the identifiable characteristics in a given face image.

Soft biometric preserving utility retained de-identification aims to preserve a person’s soft biometric traits and conceal the primary biometric traits while retaining some face identification ability. While soft biometrics and other auxiliary biometrics are preserved, key biometric recognition is still possible based on the modified primary traits. Yu et al. [15] utilized several abstract operations to de-identify a person’s body shape. Recent methods demonstrated that new approaches comprising advanced image processing techniques can be superior to pixelation and blurring. The authors proposed another method to hide the subject’s identity while preserving their facial expression. Jourabloo et al. [50] proposed a joint model for face de-identification and attribute preservation of facial images by using the active appearance model (AAM) and the k-same algorithm. The authors estimated the optimal weights for k images instead of taking the average. Hao et al. [51] proposed the Utility-Preserving Generative Adversarial Network (UP-GAN), which aimed to provide significant obscuration by generating faces that only depended on the non-identifiable facial features. Nousi et al. [17] proposed an autoencoder-based method for de-identifying face attributes while keeping the soft biometrics (age and gender) unchanged. This method obscures the face attributes; however, they can still be identified by a face recognition system.

With the widespread use of video surveillance systems, the need for better privacy protection of individuals whose images were recorded increased. Thus, in 2011, Agrawal et al. [52] developed a full-body obscuring video de-identification system. In their work, they preserved the activity, gender, and race of an individual. Meng et al. [53] used the concept of k-anonymity to de-identify faces while preserving the facial attributes for the face recognition system. In addition, emotion was also preserved in the de-identified image. Their method was also applicable to video de-identification. Bahmaninezhad et al. [54] proposed a voice de-identification system to preserve the speaker’s identity by modifying speech signals. The authors used a convolutional neural network model to map the voice signal, keeping the linguistic and paralinguistic features unchanged. Gafni et al. [55] proposed a live face de-identification method that automated video modification at high frame rates. Chuanlu et al. [56] proposed a utility preserving facial image de-identification method using appearance subspace decomposition. They showed that the de-identified faces preserved expressions of the original images while preventing face identity recognition. The system kept perceptual attributes such as pose, expression, lip articulation, illumination, and skin tone identical. The summary of the above discussion is presented in Table 3.

Traditional biometrics preserving de-identification focuses on de-identifying soft biometrics such as gender, age, race, and ethnicity while preserving as much utility as possible from a traditional biometric trait (such as face, iris, and voice). Othman and Ross [57] introduced a method in which a face image was modified but remained recognizable, while the gender information was concealed. Lugini et al. [58] devised an ad-hoc image filtering-based method for eliminating gender information from the fingerprint images of the subjects while retaining the matching performance. In [59], the authors proposed an automatic hair color de-identification system preserving face biometrics. The system segmented the hair area of the image and altered the basic hair color to produce natural-looking de-identified images.

Mirjalili and Ross [60] proposed a technique that perturbed a face image so that the gender assigned by a gender classifier was changed while the face recognition capacity was preserved. They used a warping technique to simultaneously modify a group of pixels by applying Delaunay triangulation to facial landmark points. The authors evaluated the system using two gender classifiers, namely IntraFace [61] and a Commercial-off-The-Shelf (G-COTS) software. Mirjalili et al. [62] extended this idea by putting forward a convolutional autoencoder, which could modify an input face image to protect the privacy of a subject. They suggested an adversarial training scheme that was expedited by connecting a semi-adversarial module of a supplementary gender classifier and a face matcher to an autoencoder. The authors further tackled the generalizability of the proposed Semi Adversarial Networks (SANs) through arbitrary gender classifiers via the establishment of an ensemble SAN model, which generates a different set of modified outputs for an input face image. Later, in 2020, Mirjalili et al. [18] proposed a GAN-based SAN model, called PrivacyNet, which was extended to impart selective soft biometric privacy to several soft biometric attributes such as gender, age, and race. They showed that PrivacyNet allows users to decide which attributes should be obfuscated and which ones should remain unchanged. Chhabra et al. [63] proposed an adversarial perturbation-based de-identification algorithm, which anonymized k facial attributes to remove gender, race, sexual orientation, and age information, preserving the identification ability of the face biometrics. Terhörst et al. [64] proposed a soft biometrics privacy-preserving system to hide binary, categorical, and continuous attributes from face biometric templates using an incremental variable elimination algorithm. Wang [65] applied face morphing to remove the gender identification attributes from face images. The identification ability of the face images as face biometrics was preserved.

An interesting direction of research is focused on tattoo de-identification. A unique tattoo in this case can be considered a form of soft biometric. In [66], the authors created a system to detect tattoos and de-identify them for privacy protection in still images. Hrkać et al. [67] designed a system to distinguish between tattooed and non-tattooed areas using a deep convolutional neural network. The neural network grouped the patches into blobs and replaced the pixel color inside the tattoo blob with the surrounding skin color to de-identify it. Another interesting type of soft biometric is clothing color. In 2018, Prinosil [68] proposed a method to de-identify clothing color as soft biometrics, keeping the traditional biometrics preserved. The system used silhouette splitting and clothing color segmentation algorithms. The components of the HSV color space of the segmented clothing were modified for de-identification. Pena et al. [69] proposed a method for two face representations that obscured facial expressions associated with emotional responses while preserving face identification. A summary of these methods is presented in Table 4.
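As a small illustration of modifying HSV components of segmented clothing pixels, the sketch below rotates only the hue channel of the pixels selected by a mask. This is a generic example and not the implementation of [68]: the choice to alter hue alone, the `degrees` parameter, and the boolean `mask` standing in for a segmentation result are all assumptions made for illustration.

```python
import colorsys

RGB = tuple[int, int, int]


def shift_hue(rgb: RGB, degrees: float) -> RGB:
    """Rotate the hue of one 8-bit RGB pixel, keeping saturation and value."""
    r, g, b = (channel / 255.0 for channel in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    h = (h + degrees / 360.0) % 1.0
    r2, g2, b2 = colorsys.hsv_to_rgb(h, s, v)
    return (round(r2 * 255), round(g2 * 255), round(b2 * 255))


def recolor_clothing(pixels: list[RGB], mask: list[bool], degrees: float) -> list[RGB]:
    """Apply the hue shift only where the (hypothetical) clothing mask is True."""
    return [shift_hue(p, degrees) if m else p for p, m in zip(pixels, mask)]
```

Pixels outside the mask, such as the face, are left untouched, which mirrors the goal of hiding a soft trait while keeping the traditional biometric intact.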

For all discussed categories, the evaluation protocols for de-identification systems consist of human evaluation, re-identification, and diversity methods.

Definition 9. Human Evaluation: Typically, in this evaluation method, experts are asked to recognize the de-identified person by performing a visual inspection [15].

Definition 10. Re-identification: Re-identification refers to identifying a particular person by using a classification method. Before performing de-identification, a classification method is used to classify the biometric data (images, videos, and signals) that will be de-identified. After performing the de-identification, the same method is used to check whether it can re-identify the data successfully [9].

Definition 11. Diversity: This evaluation protocol is used to show how diverse the de-identified face images are from the enrolled template database. As some of the above-mentioned methods use existing face images to de-identify a sample face image, this evaluation protocol determines how likely it is for a biometric recognition software to falsely match the de-identified version of the image with another identity that exists in the template database [17].

One should keep in mind that attacks against de-identified systems are still possible. Thus, biometric systems must be tested against a possible attacker that can attempt to match de-identified images to originals. According to Newton et al. [16], there are three types of attacks and corresponding protocols to test whether de-identification of biometric information is effective in retaining data privacy. The attacks are as follows:

- Matching original images to de-identified images;
- Matching de-identified images to original images;
- Matching de-identified images to de-identified images.

In the first protocol, the attacker tries to match the original images with de-identified images, and this protocol is named naive recognition [16]. The gallery set in the naive recognition protocol only includes the original images, and the probe set includes the de-identified images. The de-identified images are compared with the original images using standard face recognition software. No significant modification is performed on the original face set by the attacker in naive recognition.

In the second protocol, de-identified images are matched with the original images by the attacker, and this protocol is named reverse recognition [16]. In this protocol, it is assumed that the attacker has access to the original face images that were de-identified. The attacker’s goal is to match each de-identified image one-to-one with an image in the original image set. Using principal component analysis, it is possible for an attacker to determine a one-to-one similarity between the de-identified image and the original image.

In the third protocol, the attacker tries to match the de-identified images to de-identified images, and this method is called parrot recognition [16]. Consider a scenario where the attacker already has the original face set of the de-identified images. In parrot recognition, the same distortion or modification is made to the original images as to the de-identified images. For instance, if blurring or pixelation is used for de-identifying an image set, the attacker can perform the same blurring or pixelation technique on the gallery set.

Thus, to fully validate the effectiveness of de-identification, all three types of attacks should be investigated, and the performance of the de-identified system should be tested against them.
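The three protocols differ only in which image sets play the roles of gallery and probe, which a short script can make concrete. The following is a minimal sketch and not the evaluation code of Newton et al.: it assumes flattened grayscale images represented as lists of floats, uses block averaging as a stand-in for pixelation, and uses a nearest-neighbor matcher in place of face recognition software. All function names (`pixelate`, `rank1_rate`, `make_subjects`, `evaluate_protocols`) and the synthetic data are hypothetical. The reverse protocol is modeled here as original probes matched against a de-identified gallery, which is one reading of the description above.

```python
import random


def pixelate(image, block):
    """De-identify a flattened image by replacing each block of pixels with its mean."""
    out = []
    for start in range(0, len(image), block):
        chunk = image[start:start + block]
        mean = sum(chunk) / len(chunk)
        out.extend([mean] * len(chunk))
    return out


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length images."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def rank1_rate(gallery, probes):
    """Fraction of probes whose nearest gallery image carries the same identity.

    Both arguments are lists of (identity, image) pairs.
    """
    hits = 0
    for true_id, probe in probes:
        best_id, _ = min(gallery, key=lambda item: squared_distance(item[1], probe))
        hits += best_id == true_id
    return hits / len(probes)


def make_subjects(n_subjects, n_pixels, noise, rng):
    """Synthetic stand-in for a face set: two noisy images per identity."""
    gallery, probes = [], []
    for identity in range(n_subjects):
        base = [rng.random() for _ in range(n_pixels)]
        gallery.append((identity, [p + rng.gauss(0, noise) for p in base]))
        probes.append((identity, [p + rng.gauss(0, noise) for p in base]))
    return gallery, probes


def deidentify(images, block):
    """Apply the same pixelation to every (identity, image) pair."""
    return [(identity, pixelate(image, block)) for identity, image in images]


def evaluate_protocols(gallery, probes, block):
    """Rank-1 recognition rate under each of the three attack protocols."""
    deid_gallery = deidentify(gallery, block)
    deid_probes = deidentify(probes, block)
    return {
        # Naive: untouched original gallery, de-identified probes.
        "naive": rank1_rate(gallery, deid_probes),
        # Reverse (assumed reading): original probes against a de-identified gallery.
        "reverse": rank1_rate(deid_gallery, probes),
        # Parrot: attacker applies the same distortion to the gallery.
        "parrot": rank1_rate(deid_gallery, deid_probes),
    }


if __name__ == "__main__":
    rng = random.Random(0)
    gallery, probes = make_subjects(n_subjects=20, n_pixels=64, noise=0.05, rng=rng)
    for name, rate in evaluate_protocols(gallery, probes, block=8).items():
        print(f"{name}: rank-1 rate = {rate:.2f}")
```

A lower rank-1 rate means the de-identification resisted that attack better. Reporting all three rates side by side, rather than the naive rate alone, exposes methods that look private only because the attacker was not allowed to imitate the distortion.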

5. Application Domains

This section summarizes the above discussion by providing a gamut of applications of emerging de-identification research.

Cybersecurity: Gathering intelligence by surveilling suspects in cyberspace is necessary to maintain a secure internet [111]. Government-authorized agents have been known to surveil social networks, disguising themselves among malicious users. Social behavioral biometrics-based de-identification can aid security agents in the covert observation and anonymous moderation of cyberspaces.

Continuous Authentication: Continuous authentication refers to a technology that verifies users on an ongoing basis to provide identity confirmation and cybersecurity protection [112]. Social behavioral biometrics (SBB) authenticates users on social networking sites continuously without any active participation of the users. The templates of users’ writing patterns and acquaintance network information must be stored in the database for SBB authentication. Instead of storing the identifying templates directly, SBB-based de-identification techniques can be applied to the templates to ensure account security and user privacy.

Protecting Anonymity: Authorized officials often publish case studies and written content of cybercrime victims to create public awareness [113]. In such cases, social networking portals and blogs are used as convenient media to disseminate information. Typically, the identities of the victims are kept anonymous. However, the content written by the victim and their social behavioral patterns may still contain identifying information. Therefore, de-identification of these published materials helps protect user anonymity when their identity must be kept confidential.

Multi-Factor Authentication: Leveraging the discriminative ability of an individual’s social data and psychological information, a multi-factor authentication system can be implemented [114]. As a remote and accessible biometric, aesthetic identification can also provide additional security if the primary modality is suspected to be compromised. De-identification in this context would preserve the security of the system when storing a user’s preference template.

Video Surveillance: Anonymization of primary or auxiliary biometric data protects the privacy of the subjects. If the original biometric is perturbed such that primary biometric identification is successful while the auxiliary biometric traits are not easily recognizable, or vice versa, this solution can be integrated with surveillance methods [115]. In such a situation, the de-identification of the primary biometric can ensure the data privacy of individuals who appear in the footage but are not persons of interest.

Risk Analysis: The ability to estimate a person’s emotional state using the facial biometric or gait analysis finds potential applications in threat-assessment and risk analysis [116]. Analysis of emotional state can be applied in the surveillance of public places in order to estimate the threat posed by an individual based on continuous monitoring of their emotional state. Based on the necessity of data protection, primary biometrics can be obscured while preserving the auxiliary information about emotions.

Health Care: Individuals can exhibit postural problems that could be diagnosed through static posture and gait analysis [117]. In such a case, the primary gait biometric can be readily de-identified while preserving auxiliary biometric traits, such as age, gender, activity, and emotion.

Mental Illness: Many applications predict and identify mental and/or physical illnesses by monitoring user emotions [74]. De-identifying any sensitive patient biometric data using the methods in the applications discussed above would ensure patient privacy, which could increase patients’ willingness to opt in to such services.

Adaptive Caregiving: The ability of an intelligent system to analyze user emotion information and exhibit realistic interactions has high potential [74]. De-identifying clients while still recognizing their emotions can preserve client privacy.

Advertisement: One reason why many social media companies mine their users’ data is to identify customer interests and gain insights that can drive sales [118]. Naturally, this raises concerns with regard to user data ownership and privacy. De-identifying the corresponding sensitive data while still understanding users’ preferences towards certain products can supplement data mining.

Entertainment: Another possible usage of social behavioral information is adaptive entertainment experiences [119]. For instance, movies and/or video games that change the narrative based on the user’s emotional responses can be created. However, such applications require the storage and analysis of user information. Users might be more willing to participate when user data are protected and anonymized.

Psychology: Personality traits can be revealed from the digital footprints of the users [19]. A personality trait de-identification system can be used to protect sensitive user information and implement privacy-preserving user identification systems. Furthermore, this concept can be applied in user behavior modeling problems such as predicting the likelihood of taking a particular action, for example, clicking on a particular ad. Moreover, personality traits-based de-identification can be used in conjunction with other privacy-preserving measures such as data anonymization to further ensure user privacy protection within OSNs.

Consumer Services: Replacement of traditional identification cards by biometrics is the future of many establishments, such as driver license offices or financial services. De-identification of some real-time information obtained by security cameras for identity verification would ensure additional protection for sensitive user data [120].

6. Open Problems

The domain of biometric de-identification remains largely unexplored and has many promising avenues for further research. The impact of perturbing the original data on the identification of the primary biometric and the estimation of auxiliary biometrics can be further investigated. Moreover, the design of innovative deep learning architectures for sensor-based biometric de-identification can result in the development of practical solutions for privacy-preserving video surveillance systems. The acceptable degree to which biometric data can be obscured while other biometrics are preserved is open to discussion. Since certain behavioral biometrics may change over time, the procedure for adapting biometric de-identification to updated behavioral biometrics requires further analysis.

De-identification approaches for gait and gesture rely heavily on the blurring technique. In this scenario, retaining the naturalness of the de-identified video after the individual’s characteristic walking patterns are obscured is one of the main challenges, and an interesting open problem, in gait and gesture de-identification.

Research in emotion-preserving de-identification has been more prevalent with faces than with any other biometric. For gait, EEG, and ECG, the features that are most significant for person identification are unknown. Hence, the first step with these biometrics will be to identify the biometric features that are crucial for personal identification. Consequently, methods must be developed to obscure any personally identifiable information while retaining the features that represent the subject’s emotion in the data. Additionally, face emotion-based de-identification research has produced some promising results. Hence, increasing person identification error is a likely future research direction for emotion preservation-based facial emotion recognition systems.

In the domain of social behavioral biometrics, de-identifying friendship and acquaintance networks is an open problem. The technique of changing the linguistic patterns of social media posts, such as tweets, while preserving emotions and information, and vice versa, has not been explored previously. The reversibility of de-identified SBB traits to their original form, and subsequent measures to increase the difficulty of reverse-engineering those traits, are other interesting problems to explore.

There are many open problems in applying the concept of psychological trait-based de-identification within the domain of privacy-preserving social behavioral biometrics. While clinical research indicates the permanence of psychological traits among adults, they change over time due to significant life events and circumstances. Considering time dependencies and their effect on data preservation is another interesting open problem.

Psychological traits factorize a wide range of human behaviors into a fixed number of labels. Therefore, any de-identification of psychological traits may result in the loss of a nuanced representation of user-generated content. This loss of information may reduce the accuracy of the downstream prediction task. Mitigating this unwanted effect is one open problem. A second is that the degree to which a dataset is de-identified may not be directly measurable. As humans may not be capable of inferring psychological traits from user content, it is difficult to ascertain whether the information regarding psychological traits is truly obfuscated from automated systems. This is another interesting problem that should be investigated further.

Finally, multi-modal biometric de-identification has not been explored before. Common multi-modal biometric authentication systems involve combining traditional biometric traits with emerging biometric traits using information fusion. One potential open problem is to design a multi-modal de-identification system that conceals soft biometric traits. As there can be several fusion methods for combining biometric modalities, experiments aimed at finding the most suitable architecture in the context of an applied problem are needed. For multi-modal de-identification, some applications may require all the biometric traits to be obscured, while some may need only particular traits to be modified. Formalizing the underlying principles for the optimal design of multi-modal biometric systems offers a rich avenue for future investigations.