1. Introduction

According to the Food and Agriculture Organization of the United Nations (FAO), over 1600 chicken breeds are recognized globally, comprising about 63% of all poultry breeds [1,2]. These breeds exhibit significant diversity in morphology, physiology, and behavior [3], which enables farmers to select breeds that meet specific production requirements. The varying breeding challenges and distinct economic value associated with different chicken breeds necessitate precise breed identification for effective farm management. For instance, the broiler chicken industry typically selects breeds with rapid growth and superior meat quality [4], while the laying hen industry prefers breeds known for high egg production rates and robust adaptability [5]. Such precise selection is crucial for large-scale breeding operations to avoid hybridization at the farm level [6]. Additionally, gender determination is vital for managing poultry populations, as it ensures an optimal gender ratio within flocks. This is essential for improving production efficiency, promoting healthy growth, and increasing economic returns [2]. Therefore, precise species and gender identification is fundamental to boosting the overall efficiency of the poultry farming industry [7].

The diversity of poultry breeds and the high similarity among certain breeds create significant challenges for effective classification. In addition, the nuanced variations in physical characteristics and behavioral traits complicate accurate identification. Traditional manual classification methods require extensive experience and suffer from inefficiency and high costs, making them unsuitable for modern large-scale breeding operations [8,9]. In addition, prolonged close contact between breeders and chickens poses a significant biosecurity risk [10].

In recent years, with the development of artificial intelligence and computer technology, an increasing number of modern technologies have been applied to agricultural production [11,12,13,14]. These applications have significantly accelerated the adoption of intelligent automation in related industrial tasks [15,16]. By fine-tuning the YOLOv4 (You Only Look Once version 4) target detection algorithm, Gupta et al. [17] achieved recognition of multiple cow breeds with an accuracy of 81.07%. In addition, Jwade et al. [18] applied transfer learning to the VGG-19 model, fine-tuning it for downstream tasks, and established a sheep breed classifier with an average accuracy of 95.8%. For poultry breed and gender classification, after constructing a dataset containing images of four significant pigeon breeds, Rahman et al. [19] performed analysis using a convolutional neural network (CNN)-based architecture with transfer learning, achieving a final recognition accuracy of 95.33% during testing. Furthermore, Wu et al. [20] introduced SE attention to enhance the residual unit of ResNet-50, combining it with an improved activation function and optimizer to boost model performance. The final result showed an accuracy of 98.42% for chicken breed detection. To provide a comprehensive comparison of the advantages and disadvantages of existing intelligent methods, several major approaches are summarized in Table 1.

After a systematic analysis of the model’s potential advantages for this task and the experimental results, we developed a new image classification system based on the Swin Transformer [21] to address the above problems and to identify chicken breed and gender accurately and efficiently. Our dataset was collected in real production scenarios. We compared various classic image classification models and integrated other computer vision techniques, such as object detection and data augmentation, to enhance the performance of the classification system.

Deep learning models are typical black-box models, and their decision mechanisms are often difficult to understand intuitively [22]. The emergence of interpretable analysis techniques, such as Grad-CAM (Gradient-weighted Class Activation Mapping) [23], SHAP (SHapley Additive exPlanations) [24], and LIME (Local Interpretable Model-Agnostic Explanations) [25], has alleviated this problem to some extent. These methods offer significant advantages, including enhanced transparency by revealing which parts of the input data influence the model’s decisions, increased trustworthiness by allowing us to understand and verify the model’s behavior, and the ability to identify and mitigate potential biases or errors within the model. Consequently, these methods can assist the application of deep learning techniques in agriculture. We also applied interpretable analysis techniques to the proposed classification system, and the results support, to some extent, the effectiveness of the system for classification.

This study presents a novel image classification system for chicken breed and gender classification, utilizing deep learning models and computer vision techniques. The main contributions include the creation of a high-resolution image dataset containing multiple native Chinese chicken breeds and the combination of the Swin Transformer model with target detection and data augmentation to improve classification performance. Additionally, interpretability analysis methods, such as Grad-CAM, were employed to improve the model’s transparency and trustworthiness. The complete source code is available on GitHub (https://github.com/quietbamboo/breed-classification, accessed on 15 June 2025). The proposed system outperforms traditional models in real-world agricultural scenarios, offering an efficient and reliable solution for chicken breed and gender classification, with potential benefits for preserving local breeds and advancing the poultry industry.

2. Materials and Methods

2.1. Materials

2.1.1. Data Collection

In this study, we developed a high-resolution image dataset comprising 10,482 images from 13 commonly recognized native Chinese chicken breeds, each with a resolution of 2160 × 3840 pixels. Furthermore, we considered breed and gender as separate distinguishing factors for classification. Each breed was categorized into two distinct groups based on gender, resulting in a total of 26 categories across the 13 breeds. During image collection, approximately 30 individual chickens of each gender were photographed for each breed, with the number of images collected per individual being consistent. However, there were variations in the number of individuals across some of the breeds, resulting in a dataset that was not balanced. Additionally, the backgrounds, angles, lighting conditions, and postures of the chickens were randomly set for each image, aiming to introduce diversity and ensure the algorithm’s applicability in real-world scenarios.

Table 2 provides a detailed illustration of the distribution of individuals and image samples in this dataset.

2.1.2. Dataset Splitting

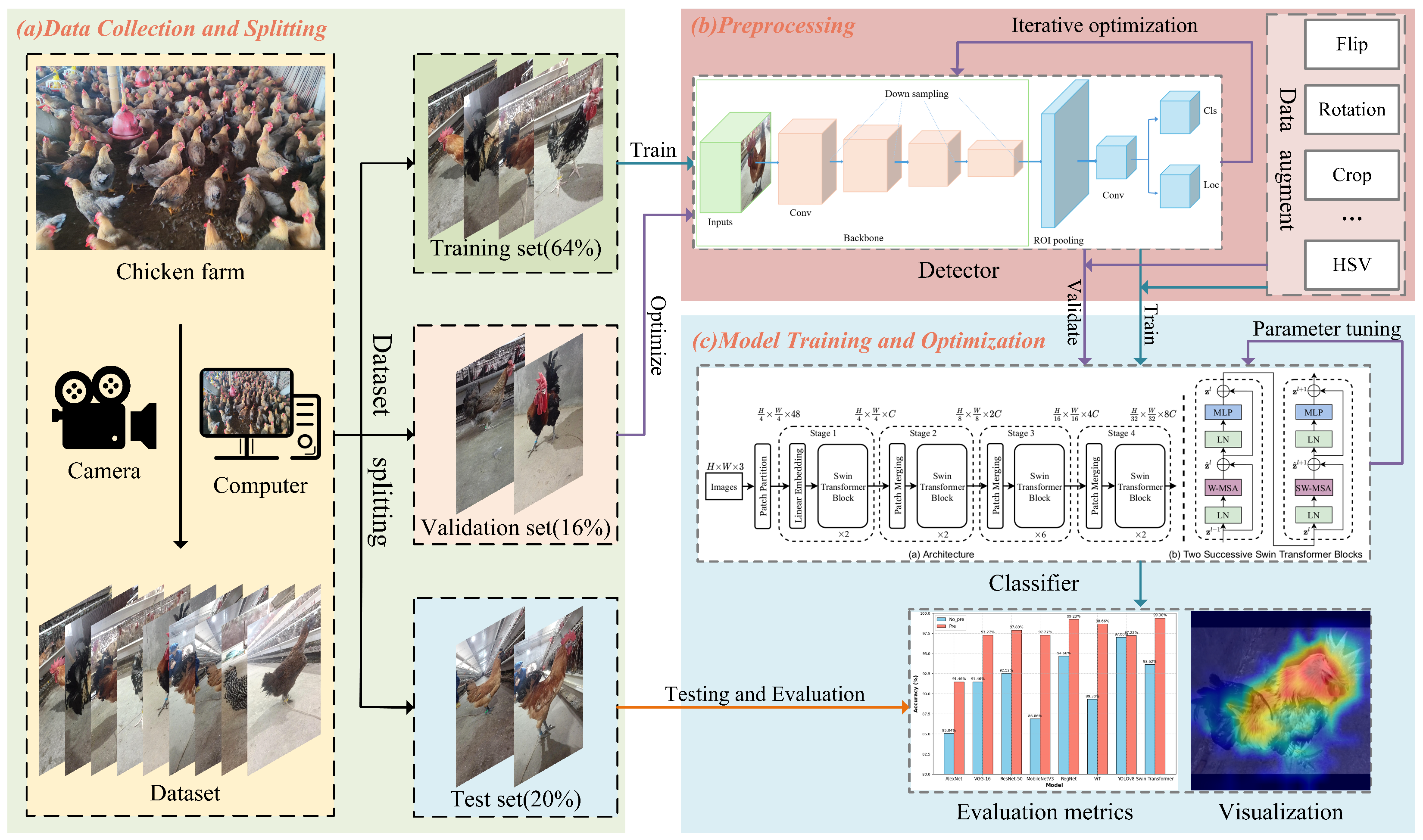

In this study, as illustrated in Figure 1a, the dataset was divided into training, validation, and test sets in a ratio of 64:16:20 to accurately evaluate the models’ performance. Because multiple images collected from the same chicken can be highly similar, all images from a single individual were treated as an independent entity in data partitioning to prevent data leakage. In other words, no two subsets contained images collected from the same chicken. This division ensured that each subset represented the overall data distribution and included images from each category, thereby facilitating comprehensive training and rigorous evaluation of the model. Additionally, fixed seeds were utilized during the data splitting process to partition the dataset consistently, ensuring that each experiment was conducted under identical data conditions. This keeps the comparative analyses fair.
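The individual-level partitioning described above can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the record layout `(image, chicken_id, category)`, the function name `split_by_individual`, the rounding rule, and the seed value used for splitting are all assumptions.

```python
import random
from collections import defaultdict


def split_by_individual(records, ratios=(0.64, 0.16, 0.20), seed=123):
    """Split images into train/val/test so that no chicken spans two subsets.

    records: iterable of (image, chicken_id, category) tuples (assumed layout).
    The split is done per category so every subset contains every category.
    """
    # Collect the individuals that belong to each category.
    individuals = defaultdict(set)
    for _image, chicken_id, category in records:
        individuals[category].add(chicken_id)

    rng = random.Random(seed)  # fixed seed makes the partition reproducible
    subset_of = {}
    for category in sorted(individuals):
        ids = sorted(individuals[category])
        rng.shuffle(ids)
        n_train = round(len(ids) * ratios[0])
        n_val = round(len(ids) * ratios[1])
        for position, chicken_id in enumerate(ids):
            if position < n_train:
                subset_of[(category, chicken_id)] = "train"
            elif position < n_train + n_val:
                subset_of[(category, chicken_id)] = "val"
            else:
                subset_of[(category, chicken_id)] = "test"

    # Every image follows the subset of the individual it was taken from.
    splits = {"train": [], "val": [], "test": []}
    for image, chicken_id, category in records:
        splits[subset_of[(category, chicken_id)]].append(image)
    return splits
```

Because whole individuals are assigned to a subset, the image-level ratio only approximates 64:16:20 when individuals contribute different numbers of images.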

2.2. Preprocessing

Images captured in real-world scenarios frequently exhibit various uncertainties, such as complex backgrounds, lighting variations, different shooting angles, and scale changes. These factors may significantly impact the results of experiments. To address these issues, a series of preprocessing operations, including object detection and multiple data augmentation techniques, were implemented to standardize the characteristics and quality of images within the dataset, as shown in Figure 1b.

2.2.1. Object Detection

As shown in Figure 2, the images in the dataset were derived from real breeding environments, which may have resulted in the presence of distracting elements such as other chickens or the background of the coop. Therefore, we used an object detection method based on YOLOv8 (https://github.com/ultralytics/ultralytics, accessed on 10 September 2024) to accurately localize the target subject. This algorithm is particularly well suited to real-time object detection in complex images [26] due to its rapid processing speed and high accuracy.

Relevant hyperparameters were iteratively optimized over multiple epochs, and appropriate data augmentation parameters were selected to further enhance model training. Upon completion of training the object detection model, the trained model was employed to extract targets from images across the entire dataset. Specifically, the bounding rectangle of the chicken in the original image was inferred by the trained object detection model, and a new image was then cropped based on this rectangle, effectively excluding complex background information. Additionally, to meet the requirement of square images for subsequent input layers, edge zero-padding was applied to the segmented images, ensuring no distortion of the chicken’s features. This approach also improves computational efficiency, as padding with zero values prevents activation of units in the following layers, reducing unnecessary computations [27].
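The crop-and-pad step can be illustrated with a short sketch on a plain nested-list image. It assumes that the bounding box is already available as integer pixel coordinates `(x1, y1, x2, y2)` with exclusive upper bounds and that the padding is split evenly between the two sides; the text only states that edge zero-padding was used, so the centering and the function name `crop_and_pad_square` are assumptions.

```python
def crop_and_pad_square(image, box):
    """Crop a detected region and zero-pad it to a square without resizing.

    image: list of rows, each row a list of pixel values.
    box: (x1, y1, x2, y2) in pixels, upper bounds exclusive (assumed format).
    """
    x1, y1, x2, y2 = box
    crop = [row[x1:x2] for row in image[y1:y2]]
    height, width = len(crop), len(crop[0])

    # The square side is the longer edge, so the aspect ratio is preserved.
    side = max(height, width)
    top = (side - height) // 2
    left = (side - width) // 2

    # Start from an all-zero canvas and paste the crop into it.
    padded = [[0] * side for _ in range(side)]
    for r, row in enumerate(crop):
        padded[top + r][left:left + width] = row
    return padded
```

Padding instead of stretching keeps the proportions of the chicken unchanged, which is the property the text emphasizes.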

2.2.2. Data Augmentation

We applied a series of data augmentation techniques to increase the variety and diversity of the training dataset, which helped the model generalize better and reduced the risk of overfitting [28]. These techniques included randomly cropping and resizing images (crop scale range: 0.6–1.0), random horizontal flipping (flip probability: 0.5), random angle rotation (rotation angle range: ±180°), and adjustments to brightness, contrast, saturation, and hue (jitter range for the four image attributes: 0.8–1.2). The technique of randomly cropping and resizing adjusts each image to a predetermined pixel dimension while preserving the flexibility of essential features, thereby enhancing the model’s capacity to capture significant feature information. The methods of random horizontal flipping and random angle rotation introduce variability by flipping images horizontally and rotating them at random angles, respectively, thereby reducing the model’s dependency on specific image orientations or configurations. Adjustments to brightness, contrast, saturation, and hue simulate different lighting and color conditions, aiding the model’s performance in diverse real-world environments.
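To make the stated ranges concrete, the sketch below draws one set of augmentation parameters per image. It only samples the parameters and does not transform pixels; the function name, the dictionary keys, and the choice of uniform sampling are assumptions, and how the 0.8–1.2 range maps onto a particular library's hue argument is not specified in the text.

```python
import random


def sample_augmentation(rng):
    """Draw one random augmentation configuration using the ranges in the text."""
    return {
        # Fraction of the image area kept by the random crop before resizing.
        "crop_scale": rng.uniform(0.6, 1.0),
        # Horizontal flip applied with probability 0.5.
        "flip": rng.random() < 0.5,
        # Rotation angle in degrees, anywhere in the full circle.
        "angle_deg": rng.uniform(-180.0, 180.0),
        # Multiplicative jitter factors for the four color attributes.
        "brightness": rng.uniform(0.8, 1.2),
        "contrast": rng.uniform(0.8, 1.2),
        "saturation": rng.uniform(0.8, 1.2),
        "hue": rng.uniform(0.8, 1.2),
    }
```

Passing a seeded `random.Random` instance keeps the sampled configurations reproducible across runs.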

2.3. Model

In recent years, deep learning networks have been extensively applied in various image processing tasks due to their unique structures and outstanding performance. This study compares eight representative image classification models: AlexNet [29], VGG-16 [30], ResNet-50 [31], MobileNetV3 [32], RegNet [33], Vision Transformer (ViT) [34], Swin Transformer [21], and YOLOv8. AlexNet is one of the earliest convolutional neural networks and marked a major breakthrough in image recognition in 2012 by adopting ReLU activation and multi-GPU training. VGG-16 builds on this by stacking small 3 × 3 convolutional filters, enabling deeper networks with improved feature extraction. However, increasing depth led to the problem of vanishing or exploding gradients [35,36], which ResNet-50 addressed by introducing residual connections, allowing deeper architectures to train effectively. MobileNetV3 is a lightweight network optimized through neural architecture search for efficient deployment on mobile and edge devices. RegNet was introduced in 2020 and provides a systematic and scalable framework for model design, balancing accuracy and efficiency across various settings. That same year, Vision Transformer (ViT) pioneered the use of self-attention mechanisms on image patches, capturing global context and showing strong performance on large-scale datasets. Building on ViT, Swin Transformer introduces a hierarchical design with shifted windows to enable efficient self-attention over local regions. This reduces computation and allows effective processing of high-resolution images. As a result, it scales well across tasks from classification to detection, segmentation, and 3D scene understanding [37,38,39]. YOLOv8 integrates advanced detection techniques like anchor-free prediction and improved feature pyramids, delivering both high speed and accuracy for real-time vision applications.
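The computational benefit of window-based self-attention can be made concrete with the complexity expressions given in the Swin Transformer paper: global multi-head self-attention over an h × w token map with C channels costs 4hwC² + 2(hw)²C, whereas attention restricted to M × M windows costs 4hwC² + 2M²hwC. The sketch below evaluates both; the function names and the example sizes (a 56 × 56 token map, C = 96, M = 7) are illustrative choices, not settings reported in this study.

```python
def global_attention_cost(h, w, c):
    """Cost of global self-attention: quadratic in the number of tokens h*w."""
    return 4 * h * w * c ** 2 + 2 * (h * w) ** 2 * c


def window_attention_cost(h, w, c, m):
    """Cost of attention inside m x m windows: linear in the number of tokens."""
    return 4 * h * w * c ** 2 + 2 * m ** 2 * h * w * c


if __name__ == "__main__":
    h = w = 56  # token map of a 224 x 224 image with 4 x 4 patches
    ratio = global_attention_cost(h, w, 96) / window_attention_cost(h, w, 96, 7)
    print(f"global / windowed cost ratio: {ratio:.1f}")
```

Since only the second term depends on the attention pattern, the gap widens as the input resolution grows, which is why the windowed design suits high-resolution images.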

We chose these specific models to ensure that our comparison reflects a wide range of image classification methods while maintaining a balance between model novelty, efficiency, and architectural diversity. Other well-known models, such as DenseNet, while very effective, are not included because the models we selected generally cover their design principles. These eight models are evaluated in the classifier component, as illustrated in Figure 1c, to develop an effective system for chicken breed and gender classification.

This study aims to identify the most effective and comprehensive model for breed and gender classification in chickens. To this end, a series of optimization strategies were employed to enhance model performance and better adapt to the classification task. First, the output layer of each model was adjusted to match the number of target categories in this task. Second, we conducted extensive comparative experiments on the key hyperparameters, as these parameters significantly impact model performance [40]. All models were tuned toward their optimal hyperparameter settings to the best of our ability before comparison. The tuned parameters included input size, batch size, learning rate (lr), optimizer, and the number of training epochs. Third, because pre-training can usually accelerate model convergence and significantly impact the final performance of models [29,41], we performed ablation experiments to assess the contribution of pre-trained weights. Similarly, we designed comparative experiments to evaluate the effectiveness of the preprocessing techniques used. In addition, an early stopping mechanism was introduced to prevent model overfitting. Specifically, training was terminated if the validation accuracy did not improve over 100 consecutive epochs, indicating that the model had likely reached its optimal performance. Finally, we applied visualization techniques to the two best-performing models to analyze and interpret their classification processes, providing further insights into their decision-making mechanisms.
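The early stopping rule described above (stop once the validation accuracy has not improved for 100 consecutive epochs) can be sketched as a small helper. The class name `EarlyStopping`, its interface, and the use of a strict improvement test are assumptions made for illustration.

```python
class EarlyStopping:
    """Signal a stop when validation accuracy has not improved for `patience` epochs."""

    def __init__(self, patience=100):
        self.patience = patience
        self.best_accuracy = float("-inf")
        self.epochs_without_improvement = 0

    def update(self, validation_accuracy):
        """Record one epoch and return True when training should stop."""
        if validation_accuracy > self.best_accuracy:
            # A new best resets the counter.
            self.best_accuracy = validation_accuracy
            self.epochs_without_improvement = 0
        else:
            self.epochs_without_improvement += 1
        return self.epochs_without_improvement >= self.patience
```

In a training loop, `update` would be called once per epoch with the validation accuracy, and the loop would break as soon as it returns `True` or the epoch limit is reached.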

After completing hyperparameter tuning, we identified a configuration set that likely leads to optimal model performance. Specifically, all models were trained using the cross-entropy loss function and the Adam optimizer, with a random seed fixed at 123, a batch size of 32, a learning rate of , and a maximum of 1000 training epochs. In addition, we explored the use of a criterion with weighted coefficients and designed a comparative experiment to evaluate its effect on the model’s performance. All models were initialized with ImageNet-1K pre-trained weights released by the original authors. Batch normalization was applied in all models to stabilize training by normalizing the activations of each layer, improving convergence. However, dropout was not used in the models, as its regularization effect was not deemed necessary given the specific characteristics of the dataset. For the Swin Transformer model, the configuration included a patch size of 4, an embedding dimension of 96, layer depths of (2, 2, 6, 2), attention heads of (3, 6, 12, 24), a window size of 7, an MLP ratio of 4, and a stochastic depth rate of 0.1.

2.4. Evaluation Metrics

Four evaluation metrics suitable for multi-class classification tasks were selected to comprehensively evaluate the model’s performance. The calculation formulas are as follows. In these formulas, TP (True Positive) represents the number of samples correctly predicted as positive, while TN (True Negative), FP (False Positive), and FN (False Negative) are defined analogously. Additionally, n is the total number of sample categories, and the weight of the i-th category is calculated by dividing the actual number of samples in that category by the total number of test samples.

Accuracy (ACC) represents the proportion of samples whose predicted results match the actual labels; recall rate indicates the proportion of samples correctly identified as class i out of the total actual samples of that class. The calculation formula for ACC is Equation (1). In the formula, the two terms are elements of the confusion matrix, representing the number of correct predictions for each category (diagonal elements) and the number of samples whose actual category is i and predicted category is j (general elements).

Weighted Recall (WR) is the recall rate weighted by the proportion of each category in the total sample. This can be calculated using Equations (2) and (3). Equations (1) and (3) yield equivalent results, meaning that ACC and WR have the same value in the current multi-class task. Precision indicates the proportion of correctly predicted positive samples out of all predicted positive samples, and Weighted Precision (WP) is the precision weighted by category weights, as shown in Equations (4) and (5).

The F1 score is the harmonic mean of precision and recall, used to measure the balanced classification performance of a model for a single category. The Weighted F1 score [42] is an overall metric obtained by weighting the F1 scores of each category by their sample size, used to measure the model performance on imbalanced datasets. The calculation formulas are illustrated in Equations (6) and (7).
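One consistent way to write Equations (1)–(7) from the definitions above is given below. The notation is assumed for illustration: $c_{ij}$ is the confusion-matrix entry for actual class $i$ and predicted class $j$, $w_i$ is the weight of class $i$, and $R_i$, $P_i$, and $F1_i$ are the per-class recall, precision, and F1 score; the authors' own symbols may differ.

```latex
\begin{align}
\mathrm{ACC} &= \frac{\sum_{i=1}^{n} c_{ii}}{\sum_{i=1}^{n}\sum_{j=1}^{n} c_{ij}} \tag{1}\\
R_i &= \frac{TP_i}{TP_i + FN_i} \tag{2}\\
\mathrm{WR} &= \sum_{i=1}^{n} w_i R_i \tag{3}\\
P_i &= \frac{TP_i}{TP_i + FP_i} \tag{4}\\
\mathrm{WP} &= \sum_{i=1}^{n} w_i P_i \tag{5}\\
F1_i &= \frac{2 P_i R_i}{P_i + R_i} \tag{6}\\
\mathrm{WF1} &= \sum_{i=1}^{n} w_i\, F1_i \tag{7}
\end{align}
```

With $w_i = \sum_j c_{ij} / \sum_{i}\sum_{j} c_{ij}$ and $R_i = c_{ii} / \sum_j c_{ij}$, the product $w_i R_i$ reduces to $c_{ii} / \sum_{i}\sum_{j} c_{ij}$, so summing over $i$ in Equation (3) reproduces Equation (1). This is why ACC and WR coincide.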

The Matthews Correlation Coefficient (MCC) [43] is a composite indicator for evaluating overall model performance, considering the four categories in the confusion matrix (TN/TP/FN/FP), and its calculation formula is shown in Equation (8). In the formula, c represents the number of all correct predictions and s represents the total number of samples in the test set; the two remaining per-class terms are the total number of samples predicted to be of class i and the total number of samples actually of class i.
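The metrics in this section can all be computed from a single confusion matrix, as the following sketch shows. It is an illustration under assumptions rather than the authors' evaluation code: rows are taken to be actual classes and columns predicted classes, the names `p` and `t` for the per-class predicted and actual totals are assumed, and the multi-class MCC is written in the commonly used form (c·s − Σ pᵢtᵢ) / sqrt((s² − Σ pᵢ²)(s² − Σ tᵢ²)), which matches the quantities described in the text.

```python
import math


def classification_metrics(confusion):
    """Compute ACC, WP, WR, weighted F1, and MCC from a square confusion matrix.

    confusion[i][j] = number of samples with actual class i predicted as class j.
    """
    n = len(confusion)
    s = sum(sum(row) for row in confusion)       # total number of samples
    c = sum(confusion[i][i] for i in range(n))   # all correct predictions
    t = [sum(confusion[i]) for i in range(n)]    # actual samples per class
    p = [sum(confusion[i][j] for i in range(n)) for j in range(n)]  # predicted per class

    wp = wr = wf1 = 0.0
    for i in range(n):
        tp = confusion[i][i]
        precision = tp / p[i] if p[i] else 0.0
        recall = tp / t[i] if t[i] else 0.0
        total_pr = precision + recall
        f1 = 2 * precision * recall / total_pr if total_pr else 0.0
        weight = t[i] / s  # share of class i among the test samples
        wp += weight * precision
        wr += weight * recall
        wf1 += weight * f1

    numerator = c * s - sum(pi * ti for pi, ti in zip(p, t))
    denominator = math.sqrt(
        (s ** 2 - sum(pi ** 2 for pi in p)) * (s ** 2 - sum(ti ** 2 for ti in t))
    )
    mcc = numerator / denominator if denominator else 0.0
    return {"ACC": c / s, "WP": wp, "WR": wr, "WF1": wf1, "MCC": mcc}
```

On any confusion matrix the returned `ACC` and `WR` agree up to floating-point rounding, in line with the equivalence noted above.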

2.5. Development Environment

The development and testing platform for the algorithms involved in this study was a high-performance computing server equipped with an AMD EPYC 7742 64-Core Central Processing Unit (CPU), 86 GB of runtime memory, 350 GB of hard disk capacity, an NVIDIA A800 Graphics Processing Unit (GPU), and Ubuntu 22.04. The programming environment includes OpenCV 4.9.0, Python 3.8.0, Torch 1.8.0, Torchvision 0.9.0, and Ultralytics 8.2.27. The labeling software tool used for annotating the chicken’s bounding box in the images was AnyLabeling (https://github.com/vietanhdev/anylabeling, accessed on 1 August 2024).

3. Results and Discussion

3.1. Swin Transformer Achieves Superior Accuracy and Robustness in Comparative Model Evaluation

In this study, we compared eight representative deep learning models for image classification (AlexNet, VGG-16, ResNet-50, MobileNetV3, RegNet, ViT, YOLOv8, and Swin Transformer), aiming to develop a more accurate and efficient system for chicken breed and gender classification. In the experiment, the key hyperparameters mentioned in Section 2.3 were optimized for each model, and the best results obtained after tuning are shown in Table 3.

The evaluation results indicate that after targeted tuning, all models achieved test accuracies above 90%. These results validate the effectiveness of the data collected in real scenarios and support the feasibility of using deep learning models for this task. The comprehensive performance of Swin Transformer was better than that of the other models. Specifically, the Swin Transformer achieved test results of 99.38% for ACC, 99.39% for WP, 99.37% for Weighted F1, and 99.34% for MCC. The Swin Transformer also improved on ViT across multiple accuracy metrics. Notably, the ACC of the Swin Transformer surpassed that of ViT by 0.72 percentage points. We hypothesize that this improvement is primarily due to the Swin Transformer’s shifted-window self-attention mechanism, which better captures both local details and global context in images, leading to more precise handling of complex image tasks.

Furthermore, compared to CNN-based models, the Swin Transformer also exhibited superior performance across various metrics. For instance, the ACC of the Swin Transformer was 0.96 percentage points higher than that of ResNet-50. This performance enhancement can likely be attributed to the Swin Transformer’s multi-scale feature fusion strategy. This strategy effectively integrates local and global feature information, thereby improving its ability to process visual features at different scales. To further illustrate the training dynamics of the Swin Transformer, Figure A1 (see Appendix A) presents the accuracy and loss curves for each training epoch, indicating stable convergence and continued performance improvement. In addition, Figure A2 (see Appendix A) presents the confusion matrix of the Swin Transformer on the test set, showing strong diagonal dominance. This reflects consistently high accuracy across all chicken breed and gender categories, with no evident class bias. These results support the model’s robustness, fairness, and strong generalization in fine-grained classification.

In consideration of the needs of real-world agricultural scenarios, we also added indicators such as GPU inference time, CPU inference time, maximum GPU memory usage, and model parameter quantity. The comparison results show that the Swin Transformer had a disadvantage in terms of the number of Parameters, Inference Time, and Max GPU Usage. Despite these drawbacks, the developed classification systems must be implemented in real-world agricultural scenarios, where misclassification of breed and gender could result in severe consequences, such as improper allocation of resources. In this task, we selected Swin Transformer due to its excellent comprehensive performance.

In the current farm environment, we have sufficient computing resources to support its use. However, when resources are limited, opting for a lightweight model such as MobileNetV3 or reducing the image resolution would be a good alternative. All models were trained within 10 h and are suitable for deployment in practical applications. To leverage the full potential of Swin Transformer, the model used in the experiment is the Large version, pre-trained on images with a resolution of 384 × 384 pixels and 22,000 categories. The images are divided into 4 × 4 patches, and the window size for attention computation is set to 12 × 12. Additionally, to support a more comprehensive performance comparison, Table A1 (see Appendix A) presents the results of various image classification models evaluated on input images resized to 512 × 512 pixels, enabling further analysis of resolution effects and model differences.

3.2. Target Detection and Data Augmentation Enhance Performance in Chicken Breed and Gender Classification

In this study, we conducted several experiments to analyze the impact of target detection and data augmentation on chicken breed and gender classification tasks, aiming to explore the effectiveness of these preprocessing techniques in improving model performance. The results of these experiments are illustrated in Table 4.

The effectiveness of the target detection module was validated by replacing the original data, which included complex environmental information, with data identified and cropped through target detection, serving as the model input. As illustrated in Table 4, the accuracy reached 98.81%, an increase of 1.64 percentage points after applying target detection. This improvement can likely be attributed to the ability of target detection techniques to better localize and identify relevant regions of interest within the images. By focusing on these critical areas, the model can reduce noise and irrelevant background information, leading to more accurate predictions.

We incorporated the data augmentation process described above into the data preprocessing stage to evaluate the effectiveness of the data augmentation module. As shown in Table 4, the accuracy reached 97.84%, an improvement of 0.67 percentage points after applying data augmentation. This improvement is likely due to the ability of data augmentation to increase the diversity of the training dataset by introducing various transformations such as random cropping, rotation, and flipping. By exposing the model to a wider range of variations in the training data, data augmentation helps the model generalize better to unseen data, thereby reducing overfitting and improving its overall predictive accuracy. When both target detection and data augmentation were applied simultaneously in the data preprocessing, a final accuracy of 99.38% was achieved. These results demonstrate that applying target detection and data augmentation to chicken breed and gender classification tasks is both effective and reliable. In addition, to verify the effectiveness of the weighted coefficient strategy, we similarly designed a comparative experiment. As indicated in Table 4, the accuracy decreased slightly from 99.38% to 99.19% after incorporating the weighted coefficients. A possible reason is that, although the categories are not perfectly balanced, the imbalance is not severe enough to necessitate special handling. As a result, the effect of the weighting coefficient on improving the model’s performance is limited in this case.

3.3. Appropriate Image Input Size Improves the Accuracy of the Classification Model

The input size of the model represents the resolution of the images used for training and validation, which often has a significant impact on the model’s performance [44,45]. Higher resolution images can provide more information but also increase computational complexity and the impact of noise. Currently, most models used for image classification adopt an input size of 224 × 224 for training and validation. However, this standardized size may limit model performance for tasks that require capturing fine-grained image details. In these tasks, the differences between samples are subtle, and these details are crucial for enhancing the classification model’s performance. Smaller image sizes might lead to the loss of these crucial details. To evaluate the potential impact of this issue, we conducted tests using three different input sizes: 224 × 224, 512 × 512, and 1024 × 1024. For each model, three sets of comparative experiments were conducted to evaluate the effect of input size on model performance and to determine the optimal size. The results are presented in Table 5.

Table 5 shows that increasing the input size from 224 × 224 to 512 × 512 improves the classification accuracy across different models to varying degrees. Notably, Swin Transformer achieved the highest accuracy of 99.38% after an increase of 1.49%. This general improvement in accuracy may be attributed to the higher resolution of input images providing more detailed information, enabling the models to make more precise predictions. However, when the input size was further increased to 1024 × 1024, the models’ performance diverged. Specifically, ResNet-50, MobileNetV3, and YOLOv8 continued to improve their accuracy, reaching 98.42%, 97.79%, and 98.27%, respectively. In contrast, other models experienced varying degrees of accuracy decline with this adjustment. In summary, Swin Transformer performed best at a resolution of 512 × 512, achieving 99.38% accuracy, and maintained high accuracy even at 1024 × 1024, demonstrating good adaptability to different resolutions.

The evaluation results indicate that subtle differences between similar samples may be crucial for the accurate classification of chicken breed and gender. This also partially explains why the classification accuracy improves with an increase in input size. However, the results also demonstrate that an excessively large input size may amplify the impact of noise or features with limited generalization capability, leading to a decline in model performance. As the classification system proposed in this study was developed using Swin Transformer, the input size was set to 512 × 512 to better meet the requirements of this specific task.

3.4. Application of Pre-Trained Weights Enhances Classification Model Performance

Benefiting from the strong visual knowledge contained in large image datasets, pre-trained models that are fine-tuned with task-specific data tend to perform better on downstream tasks than models without pre-trained weights [35]. Additionally, utilizing pre-trained weights can enhance model robustness and expedite convergence [41]. Therefore, to further explore the impact of pre-trained weights on model performance, we compared the performance of the eight image classification models with and without pre-trained weights. The results are illustrated in Figure 3.

The results illustrated in the bar chart indicate that the application of pre-trained weights improved the prediction accuracy across the eight models used in the experiment to varying degrees. Notably, the lightweight network MobileNet-V3 experienced a 10.41% increase in accuracy. Overall, these results suggest that pre-trained weights can effectively enhance the performance of various models in the current task. The improvement in pre-trained model performance is likely attributable to the extensive visual features acquired from large-scale image datasets during the pre-training phase, enabling the models to adapt more effectively to the specific task. Consequently, we opted to use pre-trained weights to further enhance the model’s classification performance.

3.5. Swin Transformer Exhibits Superior Performance in ROI Visualization, Supporting Previous Experimental Results

In this study, we applied multiple deep learning models to the classification of chicken breeds and genders. Many models demonstrated exceptional performance, comparable to human experts specialized in chicken breeds. Additionally, the evaluation results revealed that all eight models showed accuracy improvements after increasing the image input size from 224 × 224 to 512 × 512. However, deep learning models are typical black-box models, and their prediction results are often difficult to interpret intuitively.

Therefore, an interpretability analysis was conducted to provide an intuitive understanding of the models’ classification behavior and the effect of image input size on model performance. This analysis visualized the feature information extracted by the deep learning models, highlighting the contribution of different body parts of the chickens to the model’s decisions. The Grad-CAM [23] technique was used to visualize the ROI (region of interest) identified by the model, with the results shown in Figure 4. The varying shades of colors indicate the extent of the region’s contribution to the classification decision: redder colors denote greater contributions, while bluer colors denote lower ones.
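The core Grad-CAM computation behind such heatmaps is compact: each feature channel is weighted by the spatial average of the gradient of the class score with respect to that channel, the weighted channels are summed, and negative values are clipped. The sketch below works on small nested lists to show only this step; obtaining the activations and gradients from a trained network, upsampling to the image size, and the final peak normalization are outside the text and are assumptions of this illustration.

```python
def grad_cam(activations, gradients):
    """Combine feature maps into a class activation map.

    activations, gradients: nested lists shaped [channel][row][col], where
    gradients holds d(class score)/d(activation) for the same layer.
    """
    channels = len(activations)
    rows, cols = len(activations[0]), len(activations[0][0])

    # Channel importance = global average of that channel's gradient map.
    weights = [
        sum(sum(row) for row in gradients[k]) / (rows * cols)
        for k in range(channels)
    ]

    # Weighted sum over channels followed by ReLU keeps positive evidence only.
    cam = [
        [
            max(0.0, sum(weights[k] * activations[k][r][c] for k in range(channels)))
            for c in range(cols)
        ]
        for r in range(rows)
    ]

    # Scale to [0, 1] for display (assumed convention; high values map to red).
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[value / peak for value in row] for row in cam]
    return cam
```

Regions whose channels carry positive gradients for the predicted class end up with values near 1, which correspond to the red areas in heatmaps of this kind.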

The ROIs of RegNet and Swin Transformer were visualized for image input sizes of 224 × 224 and 512 × 512, and four sets of comparative experiments were conducted on multi-class samples. The results show that all four model configurations (two models at two input sizes) exhibit clearly different ROIs for different chicken breeds and genders, and that these ROIs are closely related to the areas humans focus on during classification. This finding supports the effectiveness of these models in recognizing visual features specific to breeds and genders. At an input size of 512 × 512, the models extract more key features, and extract them more accurately, than at an input size of 224 × 224. This suggests that appropriately increasing the image input resolution provides the model with finer features, thus facilitating more accurate and effective classification to some extent. Additionally, at both input resolutions, the Swin Transformer produces more accurate and effective ROIs on the chickens than RegNet, which is consistent with the results in Table 5.

Furthermore, the ROI results for the fourth group of samples in Figure 4 indicate that after increasing the model’s input size, local features are further refined, while background information also has some influence on the model’s classification behavior. A possible reason is that, although the influence of the background was somewhat mitigated by dividing the dataset at the level of individual chickens and by the target detection strategy, the impact of the background on image classification remains non-negligible for some samples. Therefore, we aim to explore techniques such as image segmentation to further isolate the effect of the background in our future work.

4. Conclusions

This study constructed a high-resolution image dataset of 13 common Chinese native chicken breeds based on real production scenarios. A classification system was developed using Swin Transformer, incorporating optimization strategies such as data preprocessing, object detection, and data augmentation to maximize performance. With these strategies, the system achieved a test accuracy of 99.38%, with the Swin Transformer outperforming the other baseline models and demonstrating its suitability for practical classification tasks. Although the model has some limitations in computational cost and inference speed, these do not significantly affect its overall effectiveness. Furthermore, interpretability analysis was applied to better understand the model’s decision-making mechanisms, revealing that the model focused on distinct regions specific to each breed, which further supports the reliability of its classification decisions. In conclusion, the developed system offers an effective solution for enhancing the economic benefits of the poultry industry and preserving local breeds, providing a valuable tool for the precise classification of poultry breeds and genders.