1. Introduction

Climate change, which often manifests as extreme weather or prolonged droughts, and the increasing complexity of weed and disease management can reduce crop output [1]. Consumers are becoming increasingly aware of the problems that excessive fertiliser and pesticide use poses for agricultural sustainability [2,3].

Over the decades, a series of technical and technological innovations have radically transformed modern agriculture [4]. Agricultural mechanisation, in particular, has enabled a dramatic increase in the productive efficiency of agroecosystems. Mechanised systems have reduced manual labour and improved the precision of agricultural operations [5]. Modern mechanisation challenges are related to operational efficiency, precision, and the implementation of technologically innovative equipment [6]. A major limitation of mechanisation is the inability of these machines to dynamically adapt to variable field conditions and the specific needs of crops [7]. In this context, the implementation of artificial intelligence systems plays a decisive role in shaping the research and development lines in the sector. Specifically, machine learning (ML) represents one of the most promising technologies for overcoming the typical limitations of traditional mechanised systems [8]. ML algorithms enable agricultural machines to dynamically learn new operational modes through data analysis, adapting with high precision to field variables and thus optimising agricultural operations in real time [9]. In agriculture, ML is proving essential for optimising crop management, reducing waste, and increasing sustainability. Owing to its ability to analyse large amounts of heterogeneous data, ML can enhance the effectiveness of mechanised systems. The main lines of development and implementation of these systems include the following:

Detection of weeds, diseases, and crop stress: through computer vision algorithms, images or data collected by drones, onboard sensors, satellites, etc., can be analysed to proactively identify and assess the presence of weeds, diseases, or stress conditions (e.g., water stress) [10];

Optimisation in the application of agronomic resources [11];

Automation of guidance systems [12];

Predictive analysis for strategic crop management [13].

The combination of agricultural mechanisation and machine learning has led to the development of intelligent, integrated systems, representing the future of crop management. This synergy offers unprecedented advantages, making agriculture more resilient, sustainable, and productive. Practical examples of this integration include autonomous robots with computer vision [14], intelligent tractors [15], drones for monitoring and spraying [16], intelligent sprayers with multispectral sensors [17], automated harvesting [18], digital precision irrigation [19,20], intelligent automated planning of management operations [21], and predictive diagnostics for machinery maintenance [22].

In this highly dynamic scenario, the technological innovations driving digital precision agriculture (DPA) play a central role, optimising strategic resource management and improving production sustainability [23]. These technologies are particularly useful for managing the main operational issues of various crops (e.g., fungal diseases, fertiliser management, and phytosanitary product management).

The purpose of this study is to present an organised overview of the latest advances and potential directions in the use of ML in agricultural mechanised systems for autonomous spraying. This strategy is appropriate given the increasing need to improve agricultural methods in order to solve issues with food security, environmental sustainability, and production efficiency.

This paper also seeks to contribute to the understanding of the transformative potential of machine learning in the mechanisation of agricultural spraying systems. In this landscape of practical applications, autonomous spraying [24] represents one of the most advanced and promising areas in precision agriculture, combining mechanical technologies and artificial intelligence to optimise the application of phytosanitary products, fertilisers, and other agronomic resources. In autonomous sprayer operation, it is essential to define an accurate knowledge representation (KR), so that the state function is referenced in real time through data from sensors and communication systems and artificial intelligence methods can make decisions [25].

Three main types of autonomous spraying systems are available: drones [26], ground robots [27], and tractor-mounted sprayers [28]. Drones are cutting-edge autonomous spraying devices that work especially well in challenging terrain. They provide remote sensing via multispectral or thermal cameras, quick coverage, and accurate application using GPS and sophisticated sensors. When combined with machine learning algorithms, they optimise application techniques, cutting waste and enhancing sustainability. Ground robots are self-driving vehicles with sophisticated sensors (thermal, LiDAR, and multispectral), self-navigating systems, and nozzles that can be adjusted to precisely manage crops. By using computer vision to analyse real-time images and identify diseases or pests, they can administer tailored treatments and cut down on waste. Finally, sprayers mounted on tractors represent a traditional and widely used solution in precision mechanisation, yet in recent years, they have been significantly improved through the integration of advanced technologies, such as field harvesters [29]. Equipped with multispectral sensors, assisted or autonomous guidance systems, and variable control nozzles, these sprayers optimise the application of products, reducing waste and ensuring precise operation even on uneven terrain.

2. Research Methodology

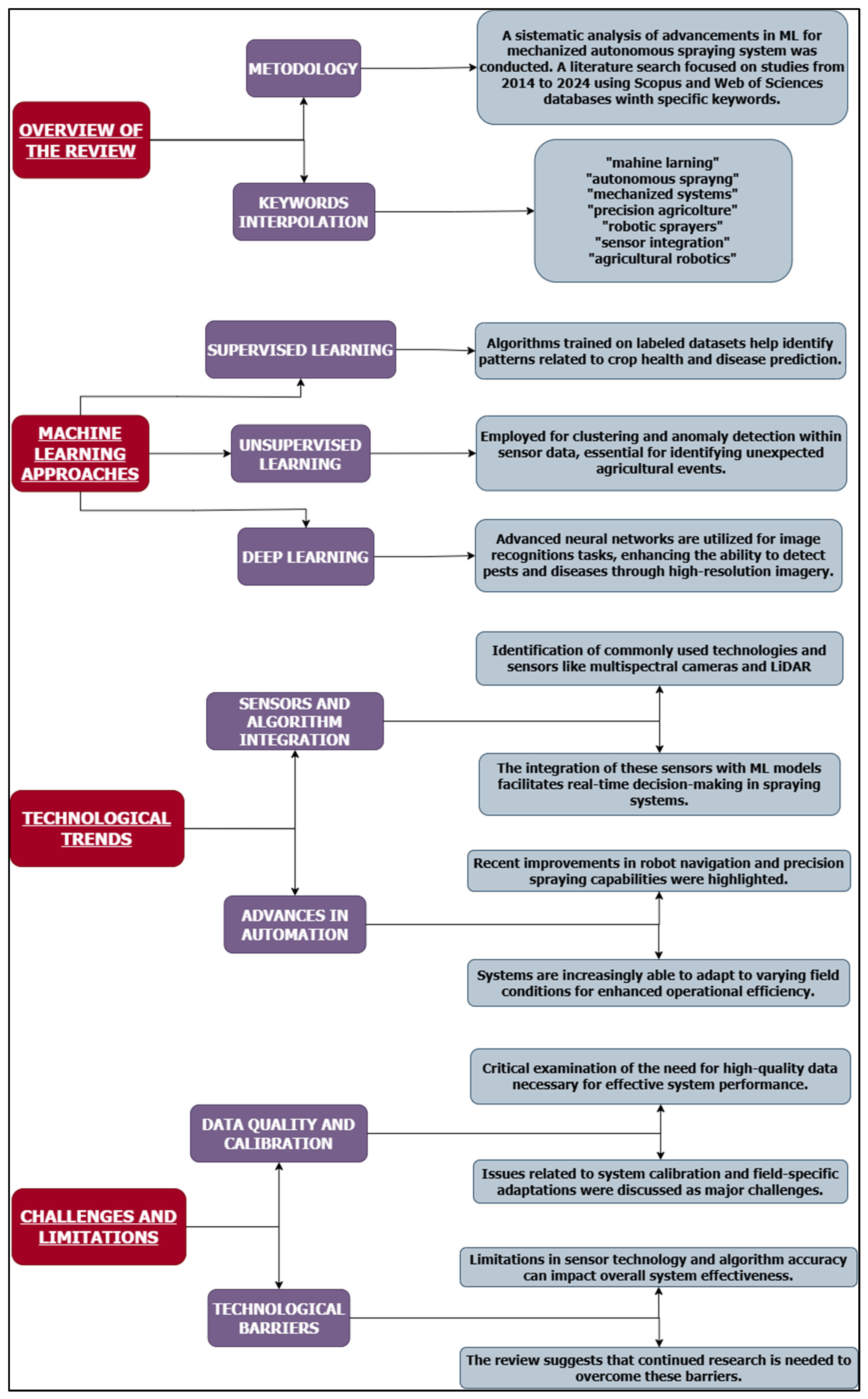

In this brief review, the state of the art regarding the integration of ML in mechanised autonomous spraying systems was analysed. This review was conducted through a systematic examination of recent progress in the field. A comprehensive literature review was carried out, with particular emphasis on the last 10 years (2014–2024), in order to locate the most relevant studies that had been published. The two main databases used for the search were Web of Science and Scopus. The search terms included combinations of keywords such as “machine learning”, “autonomous spraying”, “mechanized systems”, “precision agriculture”, “robotic sprayers”, “sensor integration”, and “agricultural robotics”. Scientific articles, conference papers, and book chapters were considered, and the findings of the latest available reviews in the literature were also analysed.

Following the identification of pertinent contributions, an examination was conducted of the technological elements (sensors, machine learning algorithms, and mechanised systems), the effectiveness, precision, and environmental impact of autonomous spraying systems, as well as particular machine learning applications like disease prediction, pest detection, and resource optimisation.

Inclusion criteria were defined to select peer-reviewed studies focusing on the application of machine learning techniques within autonomous or semi-autonomous spraying systems in agricultural settings. Exclusion criteria involved papers that addressed the general uses of machine learning in agriculture without specific reference to mechanised spraying, studies lacking empirical validation, and works published in non-English languages or not accessible through institutional databases.

Qualitative analysis was used to evaluate the research:

Technological Trends: The most widely utilised machine learning algorithms, sensors, and technology in autonomous spraying systems were determined. The integration of sensors (such as LiDAR and multispectral cameras) with machine learning models for real-time decision-making processes received particular attention.

Progress in Automation: Recent developments in autonomous systems, such as enhanced robot navigation, precise spraying, and the systems’ capacity to adjust to changing field circumstances, were the main emphasis of this review.

Effectiveness and Impact: These systems’ performance was assessed in terms of environmental sustainability, resource conservation, agricultural production enhancement, and operating efficiency.

Challenges and Limitations: The limitations and challenges highlighted in the studies, such as the need for high-quality data, system calibration, or field-specific adaptations, were critically analysed.

This scientific review’s main goal was to comprehend and precisely characterise the ways in which machine learning is combined with sensor technologies in autonomous spraying systems.

Various machine learning model types that fall into three groups were examined and explored in this context:

Supervised Learning: Algorithms trained on labelled datasets to identify patterns and make predictions on crop health, pest infestations, or diseases.

Unsupervised Learning: Algorithms for detecting anomalies and grouping in sensor data, especially to find unanticipated occurrences like fungal infections or water stress.

Deep Learning: High-resolution photographs taken by drones or ground robots can be utilised to diagnose illnesses or infestations using sophisticated neural networks for image identification and analysis.
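As a minimal illustration of the supervised case, the sketch below labels a plant sample as healthy or stressed by a k-nearest-neighbour vote. The feature names (`ndvi`, `canopy_temp_c`), the toy values, the temperature scaling, and the function `knn_predict` are all assumptions made for illustration; none of them are taken from the reviewed studies.

```python
import math
from collections import Counter

# Hypothetical labelled samples: (ndvi, canopy_temp_c) -> label.
# The numbers are invented for illustration only.
TRAINING = [
    ((0.82, 24.0), "healthy"),
    ((0.78, 25.5), "healthy"),
    ((0.80, 23.0), "healthy"),
    ((0.45, 31.0), "stressed"),
    ((0.50, 30.0), "stressed"),
    ((0.40, 32.5), "stressed"),
]


def knn_predict(sample, training=TRAINING, k=3):
    """Return the majority label among the k nearest training samples."""

    def distance(a, b):
        # Temperature is divided by 10 so that both features contribute
        # on a comparable scale (an assumed, ad hoc normalisation).
        return math.hypot(a[0] - b[0], (a[1] - b[1]) / 10.0)

    nearest = sorted(training, key=lambda item: distance(sample, item[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]
```

In practice the labelled set would come from annotated field data, and the quality of that annotation largely determines how well such a classifier generalises.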

To provide a structured overview of the key aspects covered in this study, Figure 1 presents a conceptual map outlining the main themes explored, including machine learning approaches, technological trends, and existing challenges in autonomous spraying systems.

It should be mentioned that although this study offered a thorough examination of the most recent advances in the field, its focus was restricted to research conducted in the context of autonomous spraying. Developing control architectures for sprayers based on behaviours adapted to the unstructured agricultural environment leads directly to the need for machine learning methods that ‘decompose’ behaviour into small sub-behaviours that can be modelled in supervised or unsupervised networks [25]. Crop management techniques other than mechanised spraying and general uses of machine learning in agriculture were excluded. This review’s methodological approach is intended to provide a systematic and comprehensive overview of the current state of research, pointing out gaps in the literature and offering suggestions for future advancements in the incorporation of machine learning into autonomous spraying systems.

3. Results

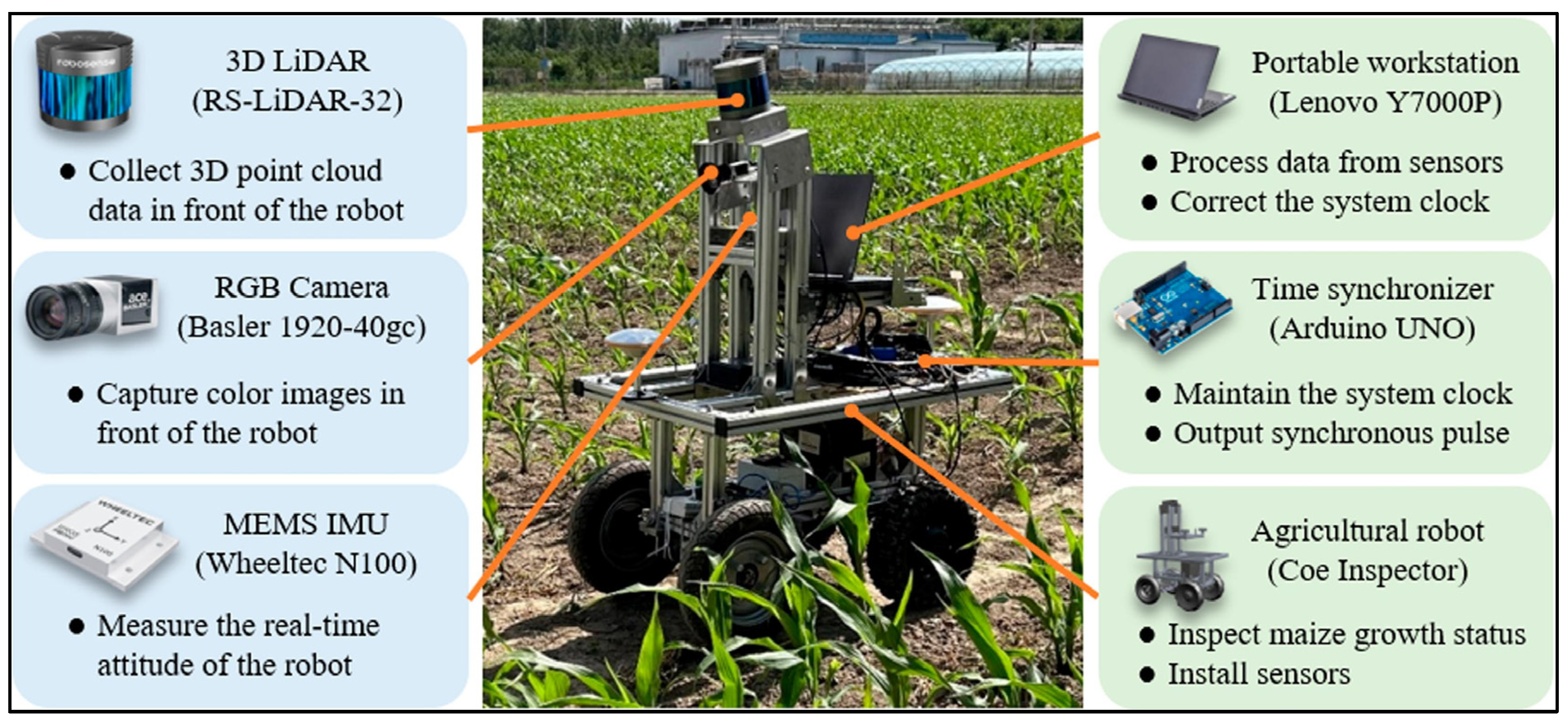

The integration of ML in mechanised autonomous spraying systems has made significant progress in recent years, with a growing focus on the use of advanced sensors and machine learning algorithms. The fusion of data from multiple sensors is crucial for enhancing the accuracy of autonomous spraying systems. The integration of RGB (red, green, and blue) cameras, LiDAR, and multispectral sensors provides a more comprehensive understanding of the agricultural environment. For example, Ban et al. [30] developed a method based on the fusion of data from a monocular RGB camera, a 3D LiDAR, and an inertial measurement unit (IMU) for the real-time extraction of navigation lines between corn rows (Figure 2). Using an advanced algorithm for creating maps of green features and filtering LiDAR data, the system achieved a 90% accuracy rate and an average angular error of 1.84°, significantly improving the navigation of agricultural robots in complex environments.
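The review does not state how the average angular error was computed. One common way to obtain such a figure, sketched here under that assumption, is to average the smallest angle between each extracted navigation line and its reference line. The function names and the sample headings are hypothetical.

```python
def line_angle_error_deg(predicted_deg, reference_deg):
    """Smallest angle between two undirected lines, in degrees (0 to 90)."""
    # A line has no direction, so headings that differ by 180 degrees
    # describe the same line; fold the difference accordingly.
    diff = abs(predicted_deg - reference_deg) % 180.0
    return min(diff, 180.0 - diff)


def mean_angular_error_deg(predicted, reference):
    """Average the per-frame angular error over paired heading lists."""
    if len(predicted) != len(reference) or not predicted:
        raise ValueError("heading lists must be non-empty and of equal length")
    errors = [line_angle_error_deg(p, r) for p, r in zip(predicted, reference)]
    return sum(errors) / len(errors)
```

For example, `mean_angular_error_deg([90.0, 92.0], [91.0, 90.0])` averages errors of 1° and 2°.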

An advanced system based on fully convolutional networks (FCNs) was designed by Lottes et al. [

31] for stem detection and pixel-wise classification of crops and weeds. By using RGB and NIR (near infrared) images, the model demonstrated a high level of generalisation, even on fields not included in the training phase. Additionally, Shi et al. [

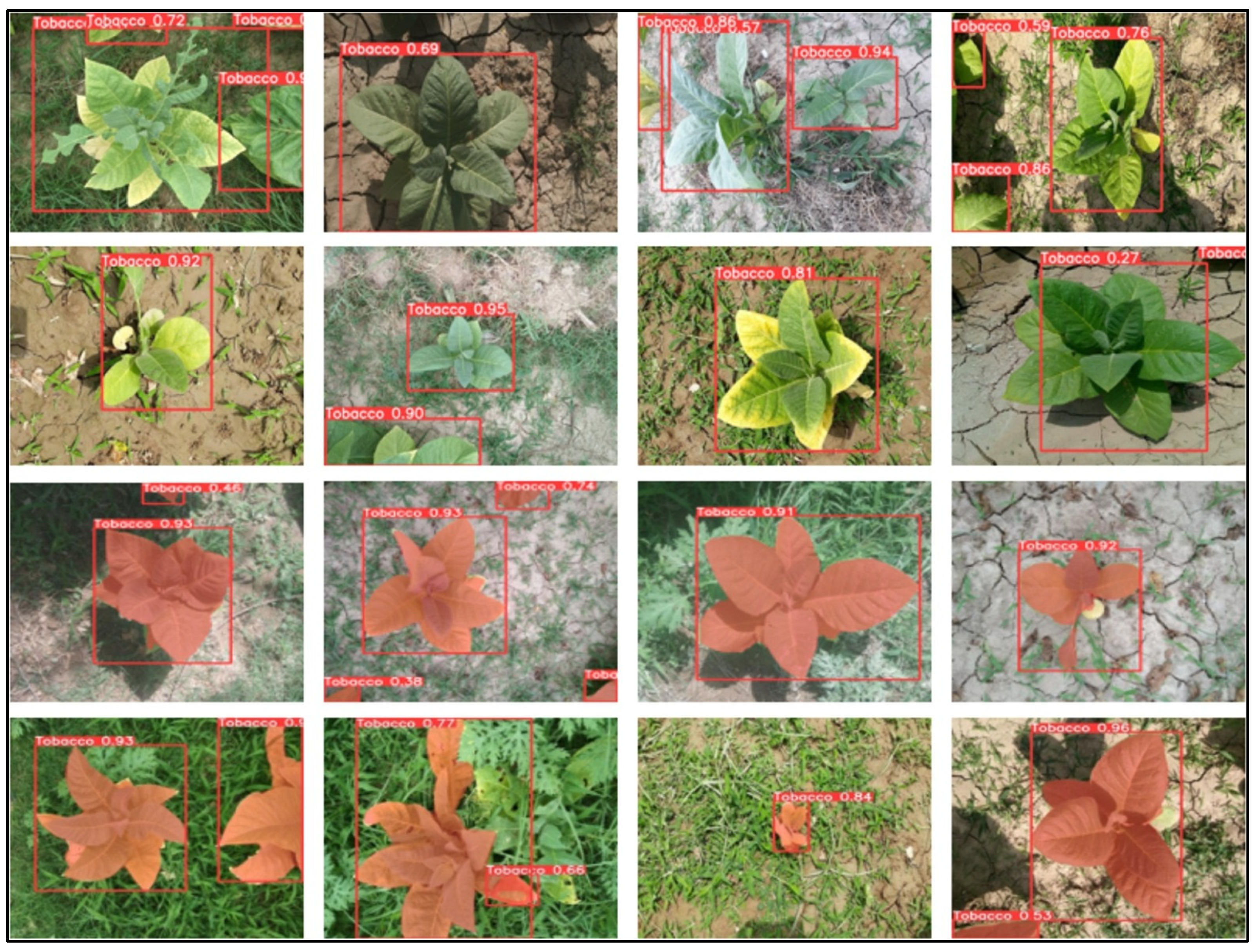

32] analysed advanced techniques for crop row detection, utilising sensors such as monocular, stereo cameras, and LiDAR, combined with deep learning algorithms like Faster R-CNN and YOLO. The multi-sensor fusion enhanced the robustness and accuracy of autonomous navigation in agricultural fields. In their paper, Arsalan et al. [

33] compared the YOLOv5 and YOLOv6 models for tobacco plant detection and segmentation, using the TobSet dataset (

Figure 3).

Aerial photos taken with a Mavic Mini drone were used to assess the models. While YOLOv5-seg fared better with smaller and overlapping plants, YOLOv6 showed higher accuracy and quicker recognition than YOLOv5. Later, Khan et al. [

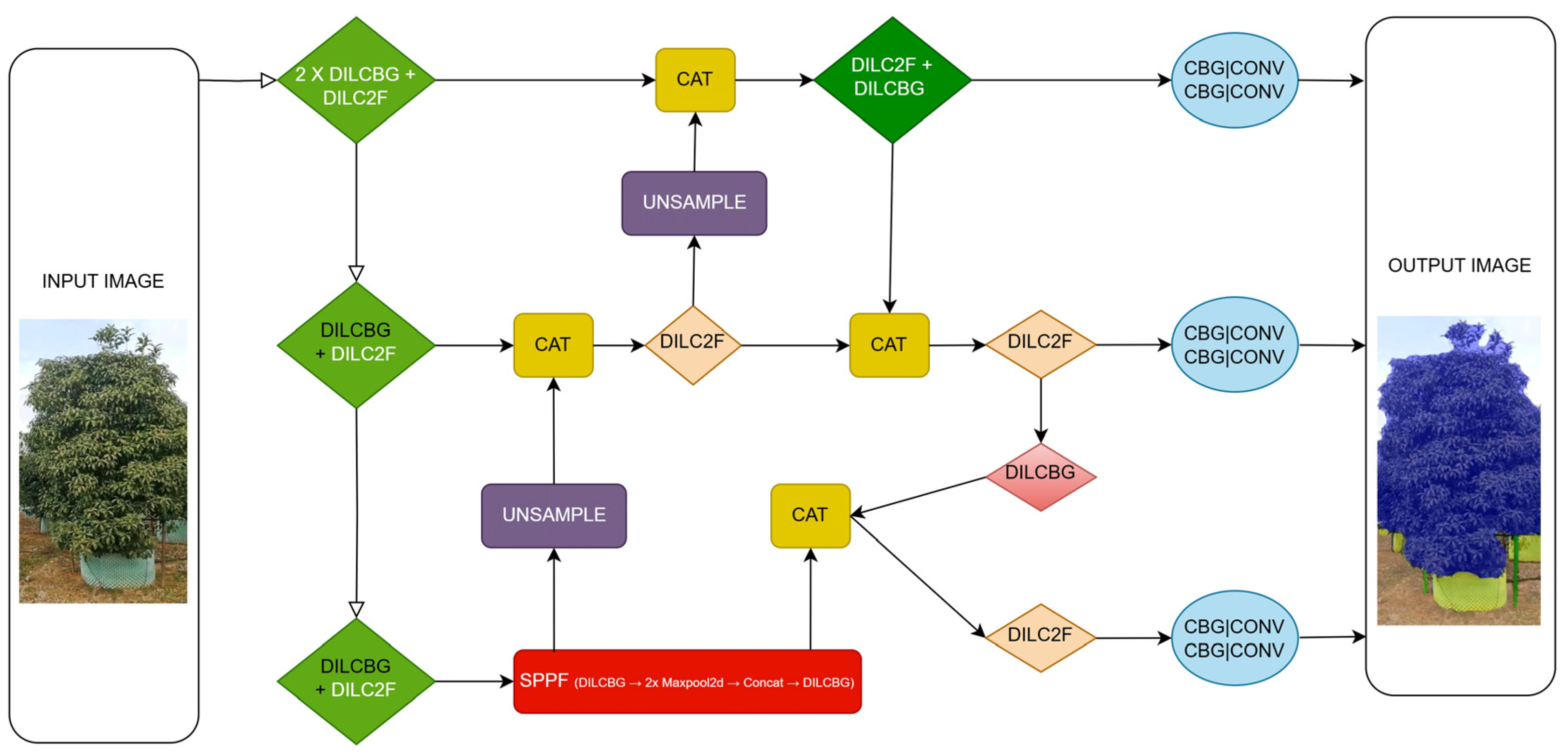

34] used sophisticated methods such dilated convolutions and the adaptive gradient algorithm to enhance the YOLOv8 algorithm for real-time segmentation of fruit tree canopies (

Figure 4).

The study’s average accuracy of 93.3% and the 40% reduction in spraying waste demonstrated the model’s effectiveness in precision spraying for complex agricultural environments. In the study conducted by Jin et al. [

35], the efficacy of deep convolutional neural networks (DCNNs) in recognising and distinguishing turfgrass weeds based on their herbicide sensitivity was demonstrated. The study looked at models like VGG-Net, ShuffleNet-v2, MobileNet-v3, and GoogLeNet. Similarly, a deep (CNN)-based method for weed identification and classification in agricultural environments was developed and assessed by Pattanik et al. [

36]. The work focussed on using sophisticated deep learning methods, including CNNs, rectified linear units (ReLUs), and the SoftMax classifier, to analyse images and differentiate between weeds and crops. To improve crop-weed classification accuracy, the proposed approach makes use of machine learning techniques including scale-invariant feature transform (SIFT) and speeded-up robust features (SURFs) as well as artificial visual analysis systems. When these technologies were combined with neural networks and ensemble learning techniques, it was shown that crop photos could be accurately classified. As an alternative, Huynh and Nguyen [

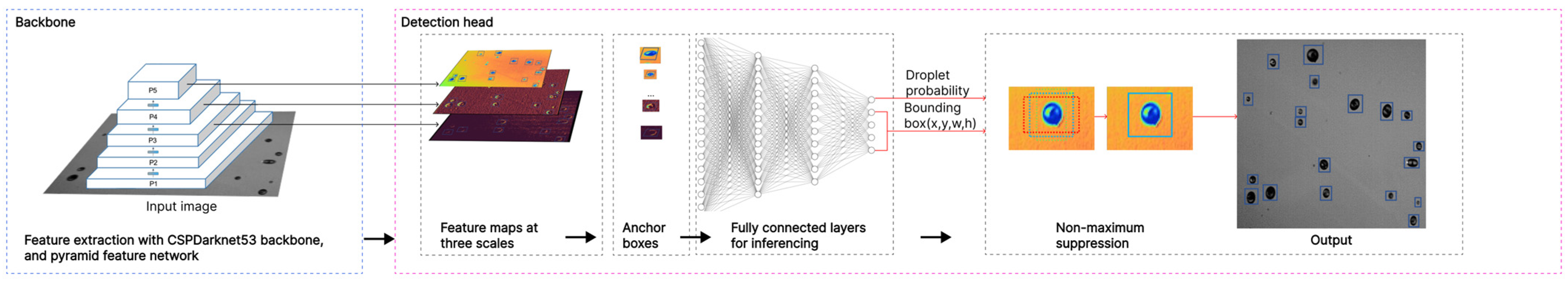

37] provided a real-time droplet identification system for agricultural spraying systems that used a CNN for video input (

Figure 5).

The technology, which is intended for embedded devices with constrained resources, provides excellent droplet detection precision even in the presence of fast movement or low light levels. The system’s capacity to maximise fertiliser and pesticide use, improving spraying operations’ sustainability and efficiency, is highlighted in the results. Modi et al. [38] created a deep learning-based system for the autonomous identification of weeds in sugarcane fields (Figure 6) using a dataset of more than 5600 photos and testing models like DarkNet53 and ResNet50. The system achieved an accuracy of 96.6% and an F1 score higher than 99%, demonstrating the potential of deep learning in precise weed management.
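For reference, accuracy and F1 score are built from different combinations of the confusion-matrix counts, which is why the two figures reported for a detector need not coincide. With $TP$, $FP$, $TN$, and $FN$ denoting true positives, false positives, true negatives, and false negatives, the standard definitions are:

```latex
\begin{aligned}
\text{Accuracy}  &= \frac{TP + TN}{TP + FP + TN + FN}, \\
\text{Precision} &= \frac{TP}{TP + FP}, \qquad
\text{Recall} = \frac{TP}{TP + FN}, \\
F_1 &= \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}
     = \frac{2\,TP}{2\,TP + FP + FN}.
\end{aligned}
```

How the cited study aggregated these quantities across classes or models is not stated in this review, so the definitions above are given only as general background.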

Furthermore, Gao et al. [39] used a machine learning approach based on the mutual subspace method (MSM) to create a recognition system to detect spraying locations utilising unmanned aerial vehicles (UAVs). By efficiently differentiating between areas that need to be sprayed and those that do not, this system seeks to improve precision pesticide spraying while providing high computing speed and accuracy.

The automation of spraying systems has benefited from the use of autonomous vehicles, drones, and agricultural robots. Today, real-time agricultural monitoring systems based on deep learning and wireless sensors are available [40]. An autonomous unit detects weeds through a camera, transmits the images to a deep learning model, and activates targeted herbicide spraying. In their article, “The Deployment of Machine Learning and On-Board Vision Systems for an Unmanned Aerial Sprayer for Pesticides”, Mohsin et al. [41] presented the development of an autonomous pesticide spraying system integrating machine learning and computer vision technologies. Computer vision allows the drone to recognise and locate target regions, while machine learning algorithms evaluate the environmental data and optimise spraying parameters. By enhancing pesticide application efficiency and accuracy, this combination of technologies seeks to minimise environmental effects and chemical consumption. The suggested strategy provides a novel approach to agricultural management, encouraging more focused and sustainable methods.
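The detect-then-spray loop described above can be sketched as a small decision step that turns the detections for one camera frame into the set of nozzles to open. This is a simplified sketch rather than the design of any cited system: the `Detection` fields, the confidence threshold, the number of nozzles, and the mapping from image position to nozzle index are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str         # e.g. "weed" or "crop" (hypothetical class names)
    confidence: float  # model score in [0, 1]
    x_center: float    # horizontal position in the frame, normalised to [0, 1]


def plan_spray(detections, num_nozzles=4, min_confidence=0.6):
    """Return the sorted nozzle indices to open for one camera frame."""
    nozzles = set()
    for det in detections:
        # Ignore crops and low-confidence weed detections.
        if det.label != "weed" or det.confidence < min_confidence:
            continue
        # Map the normalised x position to a nozzle index across the boom,
        # clamping x_center == 1.0 to the last nozzle.
        index = min(int(det.x_center * num_nozzles), num_nozzles - 1)
        nozzles.add(index)
    return sorted(nozzles)
```

Raising `min_confidence` trades missed weeds for less chemical use, so the threshold would need to be tuned against field data.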

Kameswari et al. [42] presented an autonomous pesticide-spraying robot based on machine learning techniques, with the goal of increasing crop output while lowering labour needs. The system employs a support vector machine (SVM) image classifier to automatically detect the path, operating with a supervised learning approach. As the robot moves, it captures images with a Pi-Camera, analyses them to identify the path, and sprays water or pesticides as needed. Additionally, it uses the Plantix app to monitor plant health by analysing real-time photographs. The Raspberry Pi 3 CPU controls the complete system and gives the motor driver instructions to manoeuvre the robot. This project is an innovative example of agricultural automation, utilising machine learning and robotics to boost the productivity of agricultural operations. Also, Wijesundara et al. [43] described the development of an autonomous agricultural robot designed for precise pesticide application. The system uses state-of-the-art computer vision technologies for accurate plant identification and RTK-GPS technology for navigation along preset paths. Positive outcomes from tests carried out in a field of potted plants demonstrated how well the robot works to increase agricultural productivity and encourage more environmentally friendly methods. Similarly, Khan et al. [44] demonstrated a GPS-guided autonomous agricultural robot equipped with an automatic weeding and fertilisation system. This system detects weeds using machine learning techniques and applies pesticides selectively. It combines Raspberry Pi, Arduino, and RTK GPS for precise navigation. Although weed recognition could be improved and more sophisticated sensors added, experimental testing validated the robot’s capacity to function independently in fields. Huynh et al. [45] described an autonomous agricultural robot that combines a deep learning algorithm for targeted plant spraying with a sophisticated navigation system. Using sensors to identify obstacles and optimise routes, the robot can move around the field at a steady pace and on its own. By identifying plants with high accuracy, the deep learning system increased spraying efficiency and decreased the usage of pesticides. The experimental results support the system’s efficacy and suggest that it might improve agricultural productivity and sustainability.

Faikal et al. [46], on the other hand, created autonomous UAVs for pesticide spraying by combining artificial neural networks with particle swarm optimisation (PSO-ANN). The technology provided an efficient and long-lasting solution for autonomous crop management by increasing spraying accuracy and minimising environmental impact. Göktoğan and Sukkarieh [47] investigated UAV systems further, using them to monitor and control weeds in challenging regions. They tested UAVs with multispectral sensors to create maps of infestations and automatically administer herbicides in different parts of Australia. The solution reduced costs and operational risks, demonstrating the effectiveness of UAVs in precision agriculture management.

Significant gains in sustainability and operating efficiency have been demonstrated by autonomous spraying systems. Using a 2D LiDAR system to identify tree trunks and move between rows, Jiang et al. [48] created an autonomous robot for orchard spraying (Figure 7).

The system processes point clouds and plans the robot’s path using algorithms including DBSCAN, K-means, and RANSAC. With mean positioning errors varying from 11.4 cm to 15.5 cm depending on the terrain and turning orientation, field testing has shown that the robot can safely and efficiently perform spraying duties while calculating its path in real time.

Researchers have studied the development of vision-based autonomous vehicles for agricultural applications in great detail. The use of machine learning algorithms including regressions, SVM, and KNN was studied by Thakur et al. [49]. The use of ensemble methods and hybrid algorithms, which integrate many base estimators to improve accuracy and resilience, is growing in popularity. These methods, which are mostly used with Python and R, increase the processing speed for big datasets and optimise farming operations like harvesting and spraying. When Balasingham et al. [50] created SPARROW, an autonomous agricultural robot for weed elimination, they integrated a targeted spraying algorithm to autonomously recognise weeds and orient the nozzle, confirming the effectiveness of robot spraying. The robot can accurately follow crop rows by using a vision-based navigation system that synchronises with the spraying algorithm. This inexpensive approach seeks to minimise environmental impact, increase efficiency, and decrease the usage of herbicides. Additionally, Ji et al. [51] created an autonomous indoor irrigation robot by combining cutting-edge sensors and navigation algorithms to guarantee accurate and consistent watering. Because it travels along the Y-axis while the frame stays still, the robot can spray a wide range of wall surfaces. Its sensors enable accurate and autonomous navigation by tracking position and monitoring ambient variables, and the spraying system distributes liquid uniformly across target surfaces. The robot’s mechanical design increases its adaptability in interior areas by allowing it to adjust to different ambient conditions. Its autonomy has led to more efficient irrigation management, reducing human intervention as well as the time and resources required for operation.

Despite progress, a number of obstacles still stand in the way of these technologies’ widespread deployment. As noted in 2020 by Danton et al. [52], one of the main challenges in the creation of an autonomous agricultural robot intended for the accurate delivery of fertiliser is the requirement for high-quality data to train machine learning algorithms. The device incorporates sensors to track soil conditions, actuators to distribute fertiliser precisely, and GPS for autonomous navigation. Robot control is made simpler by the user interface and navigation algorithms. Adoption of such technology can encourage more sustainable farming practices, since field testing has shown effective waste reduction and increased agricultural efficiency. Moreover, in 2024, Alshbatat et al. [53] emphasised the difficulties in adapting these systems to variable environmental conditions, especially for early disease detection in medicinal herb plants. However, the authors succeeded in developing an intelligent autonomous agricultural robot to detect and treat diseases in medicinal herb plants. With the use of artificial intelligence and Internet of Things technology, the robot can identify problems like leaf yellowing early and halt movement to administer preventative treatments. The system’s deep learning algorithms and Pixy camera allow producers to make quick decisions, increasing greenhouse plant disease management effectiveness. Lastly, Singh et al. [54] described the creation and experimental verification of an agricultural intelligent drone that uses machine learning and image processing methods to spray crops. By using machine learning algorithms to evaluate photos taken by onboard drone sensors, the suggested solution makes it possible to precisely identify regions that require treatment and apply fertiliser or pesticide where it is needed. By reducing excessive chemical use, this system seeks to minimise environmental damage and encourage more sustainable agriculture practices. The drone’s ability to increase spraying efficiency, guarantee consistent treatment distribution, and lower operating costs has been shown through experimental validation.

Practically speaking, the findings and comparative analysis presented in this paper offer concrete recommendations for the selection and implementation of autonomous machine learning (ML)-based spraying systems across different agricultural settings. By analysing ML techniques and sensor integration—together with an evaluation of technological maturity, adaptability, and decision-making autonomy—this review provides practical insights to guide the identification of the most appropriate solutions based on crop type, farm size, and specific operational requirements.

As an example, platforms integrating Camera-LiDAR-IMU fusion with YOLOv8-based segmentation demonstrate strong perceptual performance and a high degree of technological maturity, positioning them as viable options for commercial deployment in structured environments like orchards and vineyards. In contrast, systems still in early development—such as autonomous sprayers for indoor agriculture—are currently better suited for experimental trials or use within controlled cropping systems. This study’s comparative methodology also offers a helpful guide for making investment decisions. Larger operations could profit from more complex platforms combining UAVs, LiDAR, and cutting-edge deep learning techniques, while small and medium-sized farms might find it easier to implement lightweight and affordable solutions (such as CNNs combined with embedded video cameras).

The potential of integrating these technologies into broader digital agricultural platforms and decision support systems is highlighted by their emphasis on flexibility and decision autonomy. It is precisely this integration that allows autonomous spraying systems to be able to react instantaneously to crop conditions, weather patterns or insect outbreaks, thus facilitating more adaptable and sustainable agricultural management techniques.

4. Discussion

Mechanised autonomous spraying systems that use ML have shown notable improvements in accuracy, productivity, and environmental sustainability.

ML offers significant advantages over traditional, classical methods in precision agriculture, particularly in the context of autonomous spraying systems. These systems benefit from enhanced accuracy, efficiency, and sustainability when compared to conventional approaches, which often rely on uniform spraying techniques that can lead to overuse of chemicals and unnecessary environmental impact. ML-based systems, on the other hand, can dynamically adjust to specific environmental conditions, detect plant and weed types more accurately, and target pesticide application more precisely. This precision reduces the quantity of pesticides and fertilisers used, directly supporting sustainability goals by minimising waste and environmental harm. Furthermore, ML systems continuously improve through training, adapting to changing variables in the field—such as crop growth stages, weather conditions, and terrain diversity—enabling better decision-making and real-time adjustments that traditional methods simply cannot match. Nevertheless, in order to facilitate widespread adoption, a number of issues still need to be looked at and resolved. Large amounts of high-quality labelled data are required for ML-based technological solutions in order to guarantee correct generalisation and reduce mistakes throughout the spraying process. In this regard, obtaining good performance in machine learning models depends critically on the calibre and volume of data utilised for training. A fundamental aspect concerns the fusion of data from heterogeneous sensors, such as monocular cameras, LiDAR, and multispectral sensors.

The integration of advanced technologies into autonomous spraying systems is revolutionising precision agriculture by enhancing monitoring capabilities and enabling targeted interventions. Recent studies have highlighted the key role of unmanned aerial vehicles (UAVs) equipped with hyperspectral and multispectral sensors in supporting more accurate agronomic decisions. Sousa et al. [55] demonstrated how push-broom and snapshot sensors mounted on UAVs can optimise targeted spraying in viticulture applications, allowing for more precise pesticide distribution and reduced environmental impact. Similarly, Pádua et al. [56] showed the effectiveness of multi-temporal vegetation monitoring using UAV-based RGB imagery to improve vineyard spraying management, enabling timely and localised interventions. Furthermore, the adoption of machine learning algorithms for data analysis has opened new avenues for intelligent pest control. Guimarães et al. [57] developed a system based on multispectral UAV data and ML classifiers to detect aphids in vineyards, allowing for a more precise application of biological or chemical control agents. These findings confirm that the fusion of machine learning, advanced sensor technology, and UAV systems is one of the most promising strategies for optimising autonomous spraying, ensuring more efficient resource use and greater sustainability in agricultural practices. Although data fusion approaches, like the one suggested by Ban et al. [30], have produced notable results in terms of accuracy and navigation, integrating sensors that operate at different frequencies and provide information at varying levels of detail still presents open challenges. For instance, using RGB and LiDAR sensors together is beneficial for autonomous navigation management; nevertheless, it necessitates sophisticated data processing to synchronise and align data from various devices without sacrificing navigation system accuracy.
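To make the synchronisation problem concrete, the sketch below pairs each camera frame with the closest LiDAR scan in time and discards pairs that are too far apart. It covers temporal matching only; spatial alignment between the two sensors (extrinsic calibration) is a separate step not shown here. The function name, the 50 ms tolerance, and the sample timestamps are assumptions for illustration.

```python
import bisect


def match_nearest(camera_times, lidar_times, max_gap_s=0.05):
    """Pair each camera timestamp with the closest LiDAR timestamp.

    Both lists must be sorted in ascending order (seconds).
    Pairs whose timestamps differ by more than max_gap_s are dropped.
    """
    pairs = []
    for t in camera_times:
        # Index of the first LiDAR timestamp that is >= t.
        i = bisect.bisect_left(lidar_times, t)
        # The nearest scan is either just before or just after t.
        candidates = lidar_times[max(i - 1, 0):i + 1]
        if not candidates:
            continue
        best = min(candidates, key=lambda s: abs(s - t))
        if abs(best - t) <= max_gap_s:
            pairs.append((t, best))
    return pairs
```

With camera frames at 0.00, 0.10, 0.20, and 0.50 s and LiDAR scans at 0.01, 0.11, and 0.26 s, only the first two frames find a scan within the tolerance, so the remaining frames would have to be skipped or interpolated.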

Another area of discussion involves the ability of ML models to adapt to variable conditions, such as terrain diversity, lighting variations, and weather conditions. According to Pattanik et al. [

36], deep learning methods and models like CNNs have been created for weed identification; however, further testing and optimisation are needed to determine how well ML models function in dynamic agricultural situations. In particular, the resilience of the model has to be strengthened to manage situations in which environmental factors, such the presence of clouds or variations in sunshine, might impact the quality of pictures obtained by sensors. Thus, it is crucial to create deep learning algorithms that can adjust to changes in the environment in real time without sacrificing the precision of plant and weed identification. The combination of computer vision and ML algorithms has shown promising results in the automation of targeted spraying. However, interoperability issues between different systems remain. For instance, the deployment of drones and autonomous robots for spraying requires synchronisation between visual sensors, GPS units, and navigation algorithms, as highlighted in the studies by Jiang et al. [

48] and Thakur et al. [

49]. The real-time management of these complex systems, especially in agricultural environments characterised by uneven surfaces and growing plants, requires continuous improvements in localisation and mapping models to minimise positioning errors and ensure precise and effective spraying operations. Choosing the right ML techniques and sensors for detection and navigation is a critical step in the design of autonomous spraying systems. The advantages, limitations, and primary uses of some of the most popular machine learning techniques and sensors are compared in

Table 1. This comparison helps clarify how different combinations of techniques and sensors can be used to improve the efficiency of autonomous systems in agricultural settings.
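The positioning errors mentioned above are often summarised as the cross-track error, that is, the perpendicular distance between the vehicle and the planned path. The following is a minimal sketch under simplifying assumptions: a flat 2D field, a straight crop row, and coordinates in metres. The function names and the sample positions are hypothetical and are not taken from the reviewed studies.

```python
import math


def cross_track_error(point, start, end):
    """Signed perpendicular distance (m) from point to the line start -> end.

    Positive values lie to the left of the direction of travel.
    """
    (px, py), (ax, ay), (bx, by) = point, start, end
    dx, dy = bx - ax, by - ay
    length = math.hypot(dx, dy)
    if length == 0:
        raise ValueError("start and end must be different points")
    # 2D cross product of the path direction and the vector to the point.
    return (dx * (py - ay) - dy * (px - ax)) / length


def rmse(errors):
    """Root-mean-square of a non-empty sequence of errors."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))


# Hypothetical crop row along the x-axis and three logged positions.
row_start, row_end = (0.0, 0.0), (10.0, 0.0)
track = [(1.0, 0.03), (2.0, -0.04), (3.0, 0.0)]
errors = [cross_track_error(p, row_start, row_end) for p in track]
track_rmse = rmse(errors)
```

A single figure such as `track_rmse` makes it easier to compare localisation approaches, although it hides where along the row the deviations occur.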

One of the main advantages of these technologies is reduced pesticide and fertiliser use, which has important implications for sustainability.

Autonomous spraying systems, such as those developed by Khan et al. [

34], identify targets and deliver chemicals more accurately, reducing spraying waste by 40%. In addition to lowering farmers’ operational costs, this promotes more environmentally friendly farming practices.

Significant investments in infrastructure, technology, and training are needed to deploy autonomous agricultural drones and robots. Managing real-time data and providing the computing capacity required for image analysis pose additional difficulties, especially for small and medium-sized farms. However, ongoing developments in machine learning, sensor miniaturisation, and production cost reductions are expected to ease these challenges in the coming years.

To evaluate the state of the art, thirty-four agricultural swarm robotics technologies were assessed against seven key aspects, including:

Technology Readiness Level (TRL): ranging from the basic principles of technology (TRL 1) to fully proven systems in operating environments (TRL 9).

Configurability (Config): the robot’s ability to be configured for specific tasks.

Adaptability (Adapt): how well the system adjusts to different working scenarios.

Perception Ability (Perc): the robot’s capacity to perceive its environment using various sensors.

Decision Autonomy (Decis): the ability of the robot to act autonomously, based on its environment and task requirements.

Each of these aspects was evaluated using a three-level scoring system (3 = fully addressed, 2 = partially addressed, 1 = not addressed), and this helped in drawing comparisons across technologies. This evaluation framework can be integrated with the analysis of machine learning approaches for autonomous spraying systems, as both domains share a focus on improving task execution efficiency and environmental adaptation in agricultural robotics (

Table 2).
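The scoring scheme described above can be represented with a small data structure. The sketch below makes two assumptions that the text does not state: TRL is kept on its native 1 to 9 scale while the other four aspects use the three-level score, and systems are compared by the unweighted mean of those four scores. The class name, the aggregation rule, and the two example systems are hypothetical placeholders and do not reproduce any row of Table 2.

```python
from dataclasses import dataclass

# Aspects scored on the three-level scale (3 = fully, 2 = partially, 1 = not addressed).
ASPECTS = ("config", "adapt", "perc", "decis")


@dataclass(frozen=True)
class Assessment:
    name: str
    trl: int     # Technology Readiness Level, 1..9
    config: int  # Configurability
    adapt: int   # Adaptability
    perc: int    # Perception Ability
    decis: int   # Decision Autonomy

    def __post_init__(self):
        if not 1 <= self.trl <= 9:
            raise ValueError("TRL must be between 1 and 9")
        for aspect in ASPECTS:
            if getattr(self, aspect) not in (1, 2, 3):
                raise ValueError(f"{aspect} must be 1, 2, or 3")

    def mean_score(self):
        """Unweighted mean of the four three-level scores (an assumed aggregate)."""
        return sum(getattr(self, a) for a in ASPECTS) / len(ASPECTS)


# Placeholder entries for illustration only.
systems = [
    Assessment("System B", trl=6, config=2, adapt=2, perc=2, decis=3),
    Assessment("System A", trl=7, config=3, adapt=2, perc=3, decis=2),
]
ranked = sorted(systems, key=lambda s: (s.mean_score(), s.trl), reverse=True)
```

An unweighted mean treats all aspects as equally important; a practitioner who prioritises perception or autonomy would need a weighted variant.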

The assessment of autonomous spraying technologies [

27,

58] based on machine learning reveals significant variations in technology readiness, adaptability, configurability, perception ability, and decision autonomy. These aspects provide insight into the practical implementation and potential improvement of these systems in precision farming. Most of the evaluated systems are at advanced stages of development, with several reaching TRL 6 or 7. This indicates successful demonstration in relevant or operational environments, but also the need for further refinement before large-scale commercial adoption.

High technological maturity, for instance, positions the Camera-LiDAR-IMU Fusion Method (Ban et al. [

30]) and YOLOv8 Instance Segmentation for Orchard Canopies (Khan et al. [

34]) for integration into precision spraying applications. For autonomous spraying systems to be adapted to a variety of agricultural environments, configurability is crucial. Technologies with high configurability, such as deep learning-based weed identification [

35] and row detection-based navigation [

32], can adapt to different crop varieties and field conditions. However, the complexity of combining several sensors and machine learning models is the main reason why certain systems remain difficult to customise. Adaptability is equally important for optimising autonomous spraying systems. While many ML-based approaches show moderate adaptability, enabling them to cope with changes in environmental conditions such as lighting and crop growth stages, further improvements are necessary for optimal performance in dynamic settings [

59]. Improving adaptability would make these systems more effective overall by allowing them to respond more quickly to changes in the agricultural environment. A system’s perception ability strongly affects its capacity to observe its surroundings and make precise spraying decisions. Because they increase the accuracy of object detection and segmentation, multi-sensor configurations that include LiDAR and multispectral cameras are typically associated with high perception scores. However, systems that rely solely on RGB cameras may not perform as well in adverse weather or when targets are occluded by dense foliage.

Lastly, decision autonomy refers to a system’s ability to make decisions on its own. Although several of the technologies evaluated show strong decision-making capabilities, adjusting spraying actions in response to real-time data, some still need to be optimised to reach full autonomy. In practical agricultural applications, system independence and accuracy could be further increased by refining decision-making algorithms and incorporating reinforcement learning. Despite these developments, there are still obstacles to the broad adoption of these technologies. The high cost of sophisticated equipment and the need for suitable infrastructure are two of the primary challenges.

In conclusion, integrating machine learning into autonomous spraying systems is one of the most promising approaches to addressing the challenges of precision agriculture. Although operational and technological issues remain to be resolved, with further research and refinement these systems have the potential to transform agriculture through increased production, reduced environmental impact, and more sustainable farming methods.

5. Future Prospects

Recent advances in agricultural nozzle design and the use of mechatronic spraying systems have greatly increased the accuracy, efficiency, and versatility of pesticide and agrochemical applications. These developments have established a strong foundation for the transition to more sustainable and digitally integrated plant protection methods.

Nonetheless, several key challenges still warrant deeper investigation—particularly when it comes to improving atomisation control in smart farming systems, minimising environmental footprints, and ensuring seamless data interoperability across platforms. Progress in spraying technologies will likely emerge from a multidisciplinary blend of robotics, digital agriculture, fluid mechanics, precision engineering, and artificial intelligence. Computational fluid dynamics (CFD) and additive manufacturing are effective techniques for improving both material properties and nozzle design. These methods help optimise the fit for specific crop structures and canopy types while allowing for the creation of spray patterns that are finely tuned to improve efficiency and precision. As a result, they enhance droplet accuracy, reduce off-target drift, and optimise pesticide application.

More selective and energy-efficient droplet generation may also be facilitated by recent developments in biomimetic nozzle design. The integration of real-time sensing technologies—such as LiDAR, hyperspectral and multispectral imaging, and advanced machine vision systems—with AI-based control algorithms is poised to transform conventional spraying into a dynamic and site-specific process.

Current smart spraying systems are designed to react in real time to changing field conditions, such as abrupt weather changes, insect outbreaks, or signs of crop stress. They can adjust key parameters such as spray pressure, droplet size, and flow rate on the fly, so that treatments remain accurate and efficient in a variety of situations.

Such adaptability is expected to drive the transition from conventional high-volume spraying toward ultra-low-volume (ULV) or micro-dosing techniques, maintaining treatment precision and efficacy while substantially lowering chemical usage and reducing environmental impact.
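The link between flow rate, travel speed, and spray pressure can be made concrete with two standard sprayer calibration relations: the nozzle flow needed for a target application rate, and the square-root dependence of nozzle flow on pressure. The sketch below is illustrative only; the function names and all numerical values (application rate, speed, nozzle spacing, reference nozzle) are assumptions rather than figures from the reviewed systems.

```python
import math


def required_flow_l_min(rate_l_ha, speed_km_h, width_m):
    """Nozzle flow (L/min) needed to apply rate_l_ha at the given speed.

    speed_km_h * width_m / 10 is the area covered in ha/h; dividing by 60
    converts the hourly volume to a per-minute flow, hence the factor 600.
    """
    return rate_l_ha * speed_km_h * width_m / 600.0


def pressure_for_flow(target_flow, ref_flow, ref_pressure):
    """Pressure giving target_flow, assuming flow scales with sqrt(pressure)."""
    if target_flow <= 0 or ref_flow <= 0 or ref_pressure <= 0:
        raise ValueError("flows and pressure must be positive")
    return ref_pressure * (target_flow / ref_flow) ** 2


# Hypothetical setup: 200 L/ha at 6 km/h with nozzles spaced 0.5 m apart.
flow = required_flow_l_min(rate_l_ha=200, speed_km_h=6, width_m=0.5)
# Hypothetical reference nozzle delivering 0.8 L/min at 3 bar.
pressure = pressure_for_flow(flow, ref_flow=0.8, ref_pressure=3.0)
```

Because the required pressure grows with the square of the flow, pressure alone offers a limited control range, and raising it also tends to produce finer droplets that drift more easily. This is one reason why variable-rate systems often combine pressure control with other actuation methods.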

Another significant dimension of future spraying systems is their interoperability and digital traceability. The adoption of Internet of Things (IoT) communication protocols, blockchain infrastructures, and standardised data formats (e.g., ISOBUS) will support the secure, transparent, and tamper-proof recording of spraying data. These characteristics play a crucial role in meeting the demands of tightening regulatory standards, while also strengthening transparency, traceability, and accountability throughout the agri-food supply chain. Future advances in atomisation will be closely tied to the development of autonomous robotic systems that integrate smoothly with intelligent spraying technologies capable of adapting in real time to changing field conditions.

These systems, whether deployed on the ground or from the air, are capable of carrying out targeted interventions with minimal human oversight, delivering reliable results across diverse terrains and crop varieties. Integration with farm management platforms and digital twins could allow for fully autonomous planning, execution, and optimisation of spraying tasks at the field or even plant level.
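The tamper-resistant recording of spraying data discussed above rests on a simple idea that can be sketched without any blockchain infrastructure: each record stores a hash of its predecessor, so a later change to an earlier record becomes detectable. The following hash-chained log is a minimal illustration of that principle only; it is neither a blockchain nor an ISOBUS implementation, and the record fields (`field`, `product`, `volume_l`) are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first record


def _digest(prev_hash, payload):
    """Hash the previous digest together with the record content."""
    body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_record(log, payload):
    """Append a spraying record that is linked to the previous one."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"prev": prev_hash, "payload": payload,
                "hash": _digest(prev_hash, payload)})


def verify(log):
    """Return True if every record matches its hash and links to its predecessor."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["payload"]):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical spraying records.
log = []
append_record(log, {"field": "A1", "product": "example", "volume_l": 12.5})
append_record(log, {"field": "A2", "product": "example", "volume_l": 9.0})
intact = verify(log)
```

Editing any stored payload afterwards causes `verify` to fail. A single party holding the whole log could still rewrite every hash, and truncating the tail of the log goes unnoticed, which is why distributed or externally anchored ledgers are proposed for regulatory use.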

Concurrently, there is an increasing need to understand and mitigate the ecological and toxicological impacts of spraying technology. Future studies should examine how formulation chemistry, droplet size and velocity, and interactions with non-target organisms (such as pollinators, aquatic animals, and soil fauna) affect the ecosystem. It is equally crucial to investigate the cumulative effects of repeated spraying, spray drift, and residue behaviour in complex agroecosystems. Addressing these issues will require long-term, cross-disciplinary research. It will also be necessary to actively involve stakeholders through participatory procedures in order to develop spraying strategies that are both productive and ecologically sensitive.

Table 3 provides a concise overview of these future-oriented approaches, outlining their main areas of emphasis, supporting technologies, and expected advantages.

The future of spraying technologies will depend on integrating advanced nozzle design, real-time sensing and processing, data-driven control, and environmentally sustainable agricultural practices, as well as on lowering the cost of these technologies. These innovations are key to meeting the challenge of increasing food production while minimising the negative effects of spraying on human health and the environment. Achieving the full potential of new-generation spraying systems will require a coordinated, interdisciplinary approach to ensure the development of sustainable and resilient agri-food systems.

6. Conclusions

Significant gains in accuracy, productivity, and environmental sustainability have been demonstrated by the application of ML in automated spraying systems. Precision agriculture has significantly improved with the use of advanced machine learning models and multi-sensor fusion techniques. This has made it possible to identify plants in real time, apply pesticides precisely, and optimise navigation for autonomous agricultural robots.

The studies reviewed highlight the effectiveness of advanced algorithms such as CNNs, YOLO, and FCNs in improving weed detection, crop row identification, and disease recognition. This leads to more sustainable agricultural practices by reducing the dependency on pesticides and herbicides. Furthermore, integrating machine learning into autonomous spraying systems helps minimise pesticide use and runoff, both of which negatively affect neighbouring ecosystems; this increases operational efficiency while promoting environmental sustainability. However, despite these important advances, several key challenges still prevent the widespread adoption of machine learning-based autonomous spraying systems.

Major obstacles include the need for high-quality labelled datasets to train robust models that can generalise across different field conditions and crop varieties. The scalability of such systems is limited by the significant resources needed for data collection and annotation.

Precision farming can be significantly improved by integrating IoT-driven agricultural monitoring networks with machine learning-based autonomous spraying systems. By offering real-time field monitoring, providing farmers with timely data, and optimising resource management, these technologies can enhance both sustainability and productivity. This approach fosters more eco-friendly farming practices, promoting biodiversity conservation and minimising the environmental impact of agricultural activities through waste reduction and better resource utilisation.

To ensure that these technologies are used appropriately, attention must also be paid to ethical and legal considerations, in particular those related to data privacy, environmental impact, and acceptance by farmers (especially in settings with strong cultural traditions).

Moreover, the fusion of heterogeneous sensor data, including RGB cameras, LiDAR, and multispectral imagery, presents synchronisation and alignment complexities that still require continuous refinement to ensure accurate real-time decision-making. The capacity of these systems to adjust to changing environmental factors, such as variations in sunlight, weather, and soil characteristics, is another crucial component. Ensuring dependable performance across diverse agricultural environments remains one of the primary areas of study. Furthermore, real-time applications face difficulties due to the computational demands of deep learning methods, especially on embedded devices with constrained processing capacity. Optimising lightweight machine learning models that preserve high accuracy while cutting inference times should be a key goal of future research. In conclusion, even though ML has transformed autonomous spraying systems by increasing productivity and decreasing reliance on agrochemicals, further developments are needed to address operational and technological issues. To enable the smooth integration of these technologies into contemporary agriculture, future research should focus on enhancing sensor fusion techniques, creating adaptable machine learning models, and investigating energy-efficient computing options.
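One widely used route to lightweight models on embedded hardware is weight quantisation, which stores parameters as 8-bit integers instead of 32-bit floats. The sketch below shows symmetric per-tensor quantisation on a plain list of weights; it is a generic illustration chosen here, not a technique attributed to the reviewed studies, and the weight values are arbitrary.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantisation of floats to the int8 range [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # One scale for the whole tensor; fall back to 1.0 if all weights are zero.
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Map the stored integers back to approximate float weights."""
    return [q * scale for q in quantized]


# Arbitrary example weights.
weights = [0.50, -1.27, 0.01, 0.90]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

Each restored weight differs from the original by at most about half of `scale`, which is the accuracy cost paid for a fourfold reduction in storage. Whether that cost is acceptable for a given detection model has to be checked empirically.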

Author Contributions

Conceptualization, F.T. and P.D.; methodology, F.T., C.F., L.S.S., R.R.M., D.A., and Ř.T.; formal analysis, F.T., R.R.M., P.D., and C.F.; investigation, F.T., C.F., L.S.S., R.R.M., D.A., M.K., and Ř.T.; resources, F.T. and P.D.; data curation, F.T., C.F., L.S.S., R.R.M., D.A., M.K., and Ř.T.; writing—original draft preparation, F.T., R.R.M., P.D., and C.F.; writing—review and editing, F.T., C.F., L.S.S., R.R.M., D.A., P.D., M.K., and Ř.T.; visualization, L.S.S., R.R.M., D.A., P.D., and Ř.T.; supervision, F.T., R.R.M., C.F., and P.D.; project administration, F.T., C.F., and P.D.; funding acquisition, F.T. and P.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

No new data were created or analysed during this study. All supporting data are available upon request from the corresponding author, subject to ethical and privacy restrictions.

Acknowledgments

This work was carried out within the framework of research activities and scientific collaboration between the University of Basilicata and international institutions, including the Agricultural Engineering Department at the Federal University of Vale Jequitinhonha and Mucuri (Brazil), the School of Engineering at the Federal University of Lavras (Brazil), the Faculty of Agricultural Engineering at the State University of Campinas (Brazil), and the Laboratory on Geoinformatics and Cartography at Masaryk University (Czech Republic). This research falls within the scientific-disciplinary fields of agricultural engineering. The authors acknowledge the University of Basilicata and the “Casa delle Tecnologie Emergenti” of Matera, “Laboratorio del Giardino delle Tecnologie Emergenti”—CTEMT; the Smart@Irrifert Project (PSR Basilicata 2014–2020—Sottomisura 16.2, CUP G19J21004870006); and the “Vertical gaRdens with Digital twin of plants” Viridiis Project (PRIN 2022, CUP C53D23000480006) for their support. Martina Klocová was provided with the support for student projects entitled “Dynamics of the natural and social environment in geographical perspective” (MUNI/A/1648/2024).

Conflicts of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as potential conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| ML | Machine Learning |
| DPA | Digital Precision Agriculture |
| RGB | Red, Green, Blue |
| CPU | Central Processing Unit |
| GPS | Global Positioning System |
| LiDAR | Light Detection and Ranging |
| IMU | Inertial Measurement Unit |
| FCN | Fully Convolutional Network |
| VGG | Visual Geometry Group |
| NIR | Near-Infrared |
| YOLO | You Only Look Once |
| DCNN | Deep Convolutional Neural Network |
| CNN | Convolutional Neural Network |
| ReLU | Rectified Linear Unit |
| F1 score | Harmonic mean of precision and recall |
| SIFT | Scale-Invariant Feature Transform |
| SURF | Speeded-Up Robust Features |
| SVM | Support Vector Machine |
| MSM | Mutual Subspace Method |
| UAV | Unmanned Aerial Vehicle |
| RTK-GPS | Real-Time Kinematic-Global Positioning System |
| PSO-ANN | Particle Swarm Optimization–Artificial Neural Network |
| DBSCAN | Density-Based Spatial Clustering of Applications with Noise |
| K-means | A clustering algorithm |
| RANSAC | Random Sample Consensus |
| K-NN | K-Nearest Neighbours |
| TRL | Technology Readiness Level |
| Config | Configurability |
| Adapt | Adaptability |
| Perc | Perception Ability |
| Decis | Decision Autonomy |

References

1. Malhi, G.S.; Kaur, M.; Kaushik, P. Impact of Climate Change on Agriculture and Its Mitigation Strategies: A Review. Sustainability 2021, 13, 1318.

2. Richard, B.; Qi, A.; Fitt, B.D.L. Control of crop diseases through Integrated Crop Management to deliver climate-smart farming systems for low- and high-input crop production. Plant Pathol. 2021, 70, 13493.

3. Jacquet, F.; Jeuffroy, M.-H.; Jouan, J.; Le Cadre, E.; Litrico, I.; Malausa, T.; Reboud, X.; Huyghe, C. Pesticide-free agriculture as a new paradigm for research. Agron. Sustain. Dev. 2022, 42, 8.

4. Khan, N.; Ray, R.L.; Sargani, G.R.; Ihtisham, M.; Khayyam, M.; Ismail, S. Current Progress and Future Prospects of Agriculture Technology: Gateway to Sustainable Agriculture. Sustainability 2021, 13, 4883.

5. Sims, B.; Kienzle, J. Sustainable Agricultural Mechanization for Smallholders: What Is It and How Can We Implement It? Agriculture 2017, 7, 50.

6. Daum, T. Mechanization and sustainable agri-food system transformation in the Global South. A review. Agron. Sustain. Dev. 2023, 43, 16.

7. Emami, M.; Almassi, M.; Bakhoda, H.; Kalantari, I. Agricultural mechanization, a key to food security in developing countries: Strategy formulating for Iran. Agric. Food Secur. 2018, 7, 24.

8. Scolaro, E.; Beligoj, M.; Estevez, M.P.; Alberti, L.; Renzi, M.; Mattetti, M. Electrification of Agricultural Machinery: A Review. IEEE Access 2021, 9, 164520–164541.

9. Liakos, K.G.; Busato, P.; Moshou, D.; Pearson, S.; Bochtis, D. Machine Learning in Agriculture: A Review. Sensors 2018, 18, 2674.

10. Domingues, T.; Brandão, T.; Ferreira, J.C. Machine Learning for Detection and Prediction of Crop Diseases and Pests: A Comprehensive Survey. Agriculture 2022, 12, 1350.

11. Radočaj, D.; Jurišić, M.; Gašparović, M. The Role of Remote Sensing Data and Methods in a Modern Approach to Fertilization in Precision Agriculture. Remote Sens. 2022, 14, 778.

12. D’Antonio, P.; Mehmeti, A.; Toscano, F.; Fiorentino, C. Operating performance of manual, semi-automatic, and automatic tractor guidance systems for precision farming. Res. Agric. Eng. 2023, 69, 179–188.

13. Fiorentino, C.; D’Antonio, P.; Toscano, F.; Capece, N.; Conceição, L.A.; Scalcione, E.; Modugno, F.; Sannino, M.; Colonna, R.; Lacetra, E.; et al. Smart Sensor and AI-Driven Alert System for Optimizing PGI Red Peppers Drying in Southern Italy. Sustainability 2025, 17, 1682.

14. Bai, Y.; Zhang, B.; Xu, N.; Zhou, J.; Shi, J.; Diao, Z. Vision-based Navigation and Guidance for Agricultural Autonomous Vehicles and Robots: A Review. Comput. Electron. Agric. 2022, 192, 107584.

15. Heikkilä, M.; Suomalainen, J.; Saukko, O.; Kippola, T.; Lähetkangas, K.; Koskela, P.; Kalliovaara, J.; Haapala, H.; Pirttiniemi, J.; Yastrebova, A.; et al. Unmanned Agricultural Tractors in Private Mobile Networks. Network 2022, 2, 1–20.

16. Toscano, F.; Fiorentino, C.; Capece, N.; Erra, U.; Travascia, D.; Scopa, A. Unmanned Aerial Vehicle for Precision Agriculture: A Review. IEEE Access 2024, 12, 3401018.

17. Abbas, I.; Liu, J.; Faheem, M.; Noor, R.S.; Shaikh, S.A.; Solangi, K.A.; Raza, S.M. Different Sensor-Based Intelligent Spraying Systems in Agriculture. Sens. Actuators A Phys. 2020, 312, 112265.

18. Kulbacki, M.; Segen, J.; Knieć, W.; Klempous, R.; Kluwak, K.; Kulbacki, J.N.M. Survey of Drones for Agriculture Automation from Planting to Harvest. In Proceedings of the 2018 IEEE 22nd International Conference on Intelligent Engineering Systems (INES), Las Palmas de Gran Canaria, Spain, 21–23 June 2018; pp. 353–358.

19. Alves, R.G.; Maia, R.F.; Lima, F. Development of a Digital Twin for Smart Farming: Irrigation Management System for Water Saving. J. Clean. Prod. 2023, 388, 135920.

20. Obaideen, K.; Yousef, B.A.A.; AlMallahi, M.N.; Tan, Y.C.; Mahmoud, M.; Jaber, H.; Ramadan, M. An Overview of Smart Irrigation Systems Using IoT. Nexus 2022, 7, 100124.

21. Mohamed, E.S.; Belal, A.A.; Abd-Elmabod, S.K.; El-Shirbeny, M.A.; Gad, A.; Zahran, M.B. Smart Farming for Improving Agricultural Management. Eur. J. Remote Sens. 2021, 54, 123–135.

22. Ruiz-Sarmiento, J.-R.; Monroy, J.; Moreno, F.-A.; Galindo, C.; Bonelo, J.-M.; Gonzalez-Jimenez, J. A Predictive Model for the Maintenance of Industrial Machinery in the Context of Industry 4.0. Eng. Appl. Artif. Intell. 2020, 82, 103289.

23. Muhie, S.H. Novel Approaches and Practices to Sustainable Agriculture. J. Agric. Food Res. 2022, 8, 100446.

24. Gonzalez-de-Soto, M.; Emmi, L.; Perez-Ruiz, M.; Aguera, J.; Gonzalez-de-Santos, P. Autonomous Systems for Precise Spraying – Evaluation of a Robotised Patch Sprayer. Biosyst. Eng. 2016, 146, 79–97.

25. Albiero, D.; Garcia, A.P.; Umezu, C.K.; de Paulo, R.L. Swarm Robots in Mechanized Agricultural Operations: A Review about Challenges for Research. Comput. Electron. Agric. 2022, 193, 106608.

26. Shahrooz, M.; Talaeizadeh, A.; Alasty, A. Agricultural Spraying Drones: Advantages and Disadvantages. In Proceedings of the 2020 Virtual Symposium in Plant Omics Sciences (OMICAS), Bogotá, Colombia, 23–27 November 2020; pp. 1–5.

27. Lochan, K.; Khan, A.; Elsayed, I.; Suthar, B.; Seneviratne, L.; Hussain, I. Advancements in Precision Spraying of Agricultural Robots: A Comprehensive Review. IEEE Access 2024, 12, 129447–129483.

28. Honrao, D.V.; Awadhani, L.V. Design and development of agricultural spraying system. Mater. Today Proc. 2023, 77, 734–738.

29. Řezník, T.; Herman, L.; Klocová, M.; Leitner, F.; Pavelka, T.; Leitgeb, Š.; Trojanová, K.; Štampach, R.; Moshou, D.; Mouazen, A.M.; et al. Towards the Development and Verification of a 3D-Based Advanced Optimized Farm Machinery Trajectory Algorithm. Sensors 2021, 21, 2980.

30. Ban, C.; Wang, L.; Chi, R.; Su, T.; Ma, Y. A Camera-LiDAR-IMU Fusion Method for Real-Time Extraction of Navigation Line Between Maize Field Rows. Comput. Electron. Agric. 2024, 223, 109114.

31. Lottes, P.; Behley, J.; Chebrolu, N.; Milioto, A.; Stachniss, C. Robust Joint Stem Detection and Crop-Weed Classification Using Image Sequences for Plant-Specific Treatment in Precision Farming. J. Field Robot. 2019, 37, 20–34.

32. Shi, J.; Bai, Y.; Diao, Z.; Zhou, J.; Yao, X.; Zhang, B. Row Detection BASED Navigation and Guidance for Agricultural Robots and Autonomous Vehicles in Row-Crop Fields: Methods and Applications. Agronomy 2023, 13, 1780.

33. Arsalan, M.; Rashid, A.; Khan, K.; Imran, A.; Khan, F.; Akbar, M.A.; Cheema, H.M. Real-Time Precision Spraying Application for Tobacco Plants. Smart Agric. Technol. 2024, 8, 100497.

34. Khan, Z.; Liu, H.; Shen, Y.; Zeng, X. Deep Learning Improved YOLOv8 Algorithm: Real-Time Precise Instance Segmentation of Crown Region Orchard Canopies in Natural Environment. Comput. Electron. Agric. 2024, 224, 109168.

35. Jin, X.; Liu, T.; Chen, Y.; Yu, J. Deep Learning-Based Weed Detection in Turf: A Review. Agronomy 2022, 12, 3051.

36. Pattanaik, B.; Malibari, A.; Kumarasamy, M.; Nagaraj, V.; Gopikrishnan, M. Design and Evaluation of a Deep CNN Algorithm for Detecting Farm Weeds. Int. J. Environ. Sci. Manag. Syst. 2023, 71, 71–79.

37. Huynh, N.; Nguyen, K.-D. Real-Time Droplet Detection for Agricultural Spraying Systems: A Deep Learning Approach. Mach. Learn. Knowl. Extr. 2024, 6, 259–282.

38. Modi, R.U.; Kancheti, M.; Subeesh, A.; Raj, C.; Singh, A.K.; Chandel, N.S.; Dhimate, A.S.; Singh, M.K.; Singh, S. An Automated Weed Identification Framework for Sugarcane Crop: A Deep Learning Approach. Crop Prot. 2023, 173, 106360.

39. Gao, P.; Zhang, Y.; Zhang, L.; Noguchi, R.; Ahamed, T. Development of a Recognition System for Spraying Areas from Unmanned Aerial Vehicles Using a Machine Learning Approach. Sensors 2019, 19, 313.

40. Mukherjee, D.; Das, A.; Ghosh, N.; Nanda, S. Real Time Agricultural Monitoring with Deep Learning Using Wireless Sensor Framework. In Proceedings of the 2023 International Conference on Electrical, Electronics, Communication and Computers (ELEXCOM), Roorkee, India, 26–27 August 2023; pp. 1–6.

41. Mohsin, K.; Chandravadhana, S.; Chaudhari, V.; Balasaranya, K.; Pari, R.; Srinivasarao, B. The Deployment of Machine Learning and On-Board Vision Systems for an Unmanned Aerial Sprayer for Pesticides. J. Mach. Comput. 2025, 5, 600–610.

42. Kameswari, S.S.D.; Prabhakar, T.; Kishore, K.K. Autonomous Pesticide Spraying Robot Using SVM. In Proceedings of the International Conference on Wireless Communication, Mumbai, India, 8–9 October 2021; Vasudevan, H., Gajic, Z., Deshmukh, A.A., Eds.; Lecture Notes on Data Engineering and Communications Technologies. Springer: Singapore, 2022; Volume 92.

43. Wijesundara, W.M.T.D.; Wanigathunga, T.D.; Waas, M.N.C.; Hithanadura, R.T.; Munasinghe, S.R. Accurate Crop Spraying with RTK and Machine Learning on an Autonomous Field Robot. arXiv 2023.

44. Khan, N.; Medlock, G.; Graves, S.; Anwar, S. GPS Guided Autonomous Navigation of a Small Agricultural Robot with Automated Fertilizing System. In SAE Technical Paper; SAE International: Warrendale, PA, USA, 2018.

45. Huynh, T.N.; Burgers, T.; Nguyen, K.-D. Efficient Real-Time Droplet Tracking in Crop-Spraying Systems. Agriculture 2024, 14, 1735.

46. Faical, B.S.; Ueyama, J.; de Carvalho, A.C.P.L.F. The Use of Autonomous UAVs to Improve Pesticide Application in Crop Fields. In Proceedings of the 2016 17th IEEE International Conference on Mobile Data Management (MDM), Porto, Portugal, 13–16 June 2016; pp. 32–33.

47. Göktogan, A.H.; Sukkarieh, S. Autonomous Remote Sensing of Invasive Species from Robotic Aircraft. In Handbook of Unmanned Aerial Vehicles; Springer: Berlin/Heidelberg, Germany, 2015; pp. 2813–2834.

48. Jiang, A.; Ahamed, T. Navigation of an Autonomous Spraying Robot for Orchard Operations Using LiDAR for Tree Trunk Detection. Sensors 2023, 23, 4808.

49. Thakur, A.; Kumar, A.; Mishra, S.K. Control Techniques for Vision-Based Autonomous Vehicles for Agricultural Applications: A Meta-analytic Review. In Artificial Intelligence: Theory and Applications; Sharma, H., Chakravorty, A., Hussain, S., Kumari, R., Eds.; Lecture Notes in Networks and Systems; Springer: Singapore, 2024; Volume 843.

50. Balasingham, D.; Samarathunga, S.; Arachchige, G.G.; Bandara, A.; Wellalage, S.; Pandithage, D.; Hansika, M.M.D.J.T.; De Silva, R. SPARROW: Smart Precision Agriculture Robot for Ridding of Weeds. In Proceedings of the 2024 5th International Conference for Emerging Technology (INCET), Belgaum, India, 24–26 May 2024; pp. 1–6.

51. Ji, X.; Li, Y.; Hong, K.; Cao, J. Design of a Fully Autonomous Indoor Spray Robot. Int. J. Intell. Robot. Appl. 2023, 7, 763–777.

52. Danton, A.; Roux, J.-C.; Dance, B.; Cariou, C.; Lenain, R. Development of a Spraying Robot for Precision Agriculture: An Edge Following Approach. In Proceedings of the 2020 IEEE Conference on Control Technology and Applications (CCTA), Montreal, QC, Canada, 24–26 August 2020; pp. 267–272.

53. Alshbatat, A.I.N.; Awawdeh, M. On the Development of Intelligent Autonomous Agricultural Robot. In Proceedings of the 2024 Advances in Science and Engineering Technology International Conferences (ASET), Abu Dhabi, United Arab Emirates, 3–5 June 2024; pp. 1–8.

54. Singh, E.; Pratap, A.; Mehta, U.; Azid, S.I. Smart Agriculture Drone for Crop Spraying Using Image-Processing and Machine Learning Techniques: Experimental Validation. IoT 2024, 5, 250–270.

55. Sousa, J.J.; Toscano, P.; Matese, A.; Di Gennaro, S.F.; Berton, A.; Gatti, M.; Poni, S.; Pádua, L.; Hruška, J.; Morais, R.; et al. UAV-Based Hyperspectral Monitoring Using Push-Broom and Snapshot Sensors: A Multisite Assessment for Precision Viticulture Applications. Sensors 2022, 22, 6574.

56. Pádua, L.; Marques, P.; Hruška, J.; Adão, T.; Peres, E.; Morais, R.; Sousa, J.J. Multi-Temporal Vineyard Monitoring through UAV-Based RGB Imagery. Remote Sens. 2018, 10, 1907.

57. Guimarães, N.; Pádua, L.; Sousa, J.J.; Bento, A.; Couto, P. Identification of Aphids Using Machine Learning Classifiers on UAV-Based Multispectral Data. In Proceedings of the 2023 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Pasadena, CA, USA, 16–21 July 2023.

58. Sitompul, E.; Gubarda, M.R.; Sihombing, P.; Simarmata, T.; Turnip, A. Sprayer System on Autonomous Farming Drone Based on Decision Tree. In Proceedings of the 2024 IEEE International Conference on Artificial Intelligence and Mechatronics Systems (AIMS), Bandung, Indonesia, 21–23 February 2024; pp. 1–6.

59. Abioye, A.E.; Larbi, P.A.; Hadwan, A.A.K. Deep Learning Guided Variable Rate Robotic Sprayer Prototype. Smart Agric. Technol. 2024, 9, 100540.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).