1. Introduction

Climate change is increasingly affecting agriculture while the world population continues to grow, so farming must become more efficient and productive. Data-based decision-making therefore plays a crucial role in agriculture, including the management of commercial fruit orchards. In spring, auditing flowering intensity is important for deciding which orchard management actions are needed [1]. Flowering influences the fertilizing and pruning strategy and the need for flower and fruitlet thinning, which in turn affects fruit quality and the year-to-year stability of yield [2,3]. Ultimately, such information makes it possible to be better prepared for harvest time: arranging the workforce, managing storage facilities, planning sales, etc. Therefore, tools are needed already in spring to estimate flowering intensity and to predict fruit yields [1].

Yield prediction can be performed using regression models based on the detected number of flowers, fruitlets, or fruits, depending on the prediction time. Yield prediction therefore depends on the accuracy of object counting, which is performed by artificial intelligence (AI) using photos collected by agrobots and unmanned aerial vehicles (UAVs). However, AI counts only visible objects, while many flowers, fruitlets, and fruits are hidden in the foliage of trees (observational error) or are invisible from the side of image capture, so the real number of objects must be estimated. This can be done with a linear regression model that takes the number of objects detected by computer vision as input. This approach was applied by Lin, J. et al. (2024) [4] to count litchi flowers and by Cai, E. et al. (2021) [5] to count panicles of sorghum plants. Linear regression has also been applied to predict the real number of fruits: Nisar, H. et al. (2015) [6] predicted the yield of dragon fruits, and Brio, D. et al. (2023) [7] predicted the yield of apples and pears.
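
The regression step described above can be illustrated with a minimal sketch. It assumes ordinary least squares with a single predictor (the detected count); the calibration numbers and the function names `fit_count_regression` and `predict_actual` are hypothetical and are not taken from the cited studies.

```python
import numpy as np

def fit_count_regression(detected, actual):
    """Fit actual ~ slope * detected + intercept by ordinary least squares."""
    detected = np.asarray(detected, dtype=float)
    actual = np.asarray(actual, dtype=float)
    # np.polyfit returns coefficients from the highest degree down.
    slope, intercept = np.polyfit(detected, actual, deg=1)
    return float(slope), float(intercept)

def predict_actual(detected, slope, intercept):
    """Estimate the real number of objects from the detected number."""
    return slope * np.asarray(detected, dtype=float) + intercept

# Hypothetical calibration pairs: objects detected in images vs. counted on the tree.
detected_counts = [10, 20, 30, 40]
manual_counts = [25, 45, 65, 85]

slope, intercept = fit_count_regression(detected_counts, manual_counts)
estimate = float(predict_actual(50, slope, intercept))
```

In practice, the calibration pairs would come from trees that were both photographed and counted by hand in the target orchard.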

Brio, D. et al. (2023) [7] completed a comprehensive analysis of fruit counting using computer vision. Their experiment showed that the Pearson correlation between manually counted fruits per tree and the count detected by a deep learning model from images was up to 0.88 for apples and 0.73 for pears. Meanwhile, Farjon, G. et al. (2019) [8] reported a Pearson correlation of 0.78–0.93 for counting apple flowers. Linker, R. (2016) [9] investigated how accuracy depends on using two or six photos per tree captured from different sides and concluded that using images from only one side of the tree does not worsen the results significantly.
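
For readers unfamiliar with the metric, the Pearson correlation between manual and detected counts can be computed as in the following sketch. The per-tree counts are invented for illustration and do not reproduce any of the cited results; `pearson_r` is a hypothetical helper name.

```python
import numpy as np

def pearson_r(manual_counts, detected_counts):
    """Pearson correlation coefficient between two count series."""
    x = np.asarray(manual_counts, dtype=float)
    y = np.asarray(detected_counts, dtype=float)
    # np.corrcoef returns the 2 x 2 correlation matrix; take the off-diagonal entry.
    return float(np.corrcoef(x, y)[0, 1])

# Hypothetical per-tree counts: counted by hand vs. detected in images.
manual = [120, 95, 150, 80, 110]
detected = [70, 60, 85, 50, 66]
r = pearson_r(manual, detected)
```

A value of r close to 1 indicates that the detected count tracks the manual count linearly, even when the detector sees only a fraction of the objects.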

While the observation error depends on environmental conditions and remote sensing, the measurement error depends on the accuracy of AI. Modern AI development relies primarily on data-based methods. For example, Brio, D. et al. (2023) [7] applied YOLOv5s6 and YOLOv7 for apple and pear counting, and Lin, J. et al. (2024) [4] applied YOLACT++ for litchi (Litchi chinensis) flower counting. However, data-based solutions require large amounts of data to train AI, and the annotation of flowers is time-consuming because individual flowers are hard to distinguish in photos due to low image resolution, noise-flowers in the background, and the density of flowers in panicles. Our pilot test showed that annotating one image (320 × 320 px) of apple flowers takes approximately 15 min.

The aim of this study is to develop a simple method to train YOLO models for flowering intensity estimation. The objectives of this study are as follows: (1) to prepare a small dataset of flowering trees; (2) to train YOLO models for flowering intensity evaluation; and (3) to compare the AI-based method with the manual method. The use case of the experiment is flowering apple trees. Two popular object detection architectures were selected for the experiment: YOLOv9 [10] and YOLO11 [11].

In this study, we present a method of flowering intensity estimation using computer vision. The method is based on the annotation of small images (320 × 320 px), which are randomly grouped into 640 × 640 px images by a Python script before YOLO training, because the input layer of the pretrained YOLO models is 640 × 640. The experiment showed that 100 images (320 × 320 px) are enough to achieve an accuracy of 0.995 and 0.975 mAP@50 and 0.974 and 0.977 mAP@50:95 for YOLOv9m and YOLO11m, respectively. After the YOLO models were trained, the accuracies of the manual and AI-based methods were compared using data analysis and a MobileNetV2 classifier as an evaluation model.
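
The grouping of annotated 320 × 320 px tiles into 640 × 640 px training images can be sketched as follows. This is an illustrative reconstruction, not the script used in the study: it assumes a fixed 2 × 2 layout, sampling without replacement within one mosaic, and labels in the normalized YOLO text format (class, x_center, y_center, width, height). The names `make_mosaic` and `random_mosaics` are hypothetical.

```python
import random
import numpy as np

TILE = 320   # side of one annotated tile, px
GRID = 2     # 2 x 2 tiles give a 640 x 640 image

def make_mosaic(tiles, labels):
    """Combine four (320, 320, 3) tiles and their YOLO labels into one sample.

    labels[i] lists (class_id, x_center, y_center, width, height) tuples,
    normalized to tile i.
    """
    assert len(tiles) == GRID * GRID == len(labels)
    canvas = np.zeros((TILE * GRID, TILE * GRID, 3), dtype=np.uint8)
    merged = []
    for idx, (tile, boxes) in enumerate(zip(tiles, labels)):
        row, col = divmod(idx, GRID)
        canvas[row * TILE:(row + 1) * TILE, col * TILE:(col + 1) * TILE] = tile
        for cls, x, y, w, h in boxes:
            # Shift the box into its quadrant and rescale to the larger canvas.
            merged.append((cls, (x + col) / GRID, (y + row) / GRID, w / GRID, h / GRID))
    return canvas, merged

def random_mosaics(dataset, n_mosaics, seed=0):
    """dataset is a list of (tile, boxes); draw four random tiles per mosaic."""
    rng = random.Random(seed)
    mosaics = []
    for _ in range(n_mosaics):
        tiles, labels = zip(*rng.sample(dataset, GRID * GRID))
        mosaics.append(make_mosaic(tiles, labels))
    return mosaics

# Hypothetical demo: four flat-colored tiles, two of them with one box each.
demo_tiles = [np.full((TILE, TILE, 3), v, dtype=np.uint8) for v in (1, 2, 3, 4)]
demo_labels = [[(0, 0.5, 0.5, 0.2, 0.2)], [], [], [(0, 0.5, 0.5, 0.4, 0.4)]]
image, boxes = make_mosaic(demo_tiles, demo_labels)
```

Because each mosaic is drawn at random, a small set of annotated tiles can yield many distinct 640 × 640 training images without additional annotation effort.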

4. Discussion

The number of fruits is correlated with the number of flowers produced by each tree. Therefore, this parameter is important for fruit growers when planning and allocating human and economic resources for the harvesting season [19]. Flowering intensity is also an essential parameter for flower thinning, fruit yield, and fruit quality [3]. Traditionally, flowers are counted manually by fruit growers based on their experience, which requires frequent observation and professional knowledge, is time-consuming, and is prone to errors [4]. Therefore, different authors have studied the possibility of automating flower counting using computer vision [3,5,19,20]. However, it is not a trivial object detection problem, and researchers mention plenty of challenges.

Firstly, flowers grow in tight clusters, which makes it extremely difficult for a model to differentiate individual objects. This is a problem not only for the model: manual annotation of the dataset is also confusing and difficult [20]. Secondly, the presence of background trees and flowers introduces further complexity, as it can confuse the recognition models [19]. Additionally, researchers mention the accuracy limitations of the YOLO architecture in detecting small and high-density objects such as flowers [3,19]. Lin, J. et al. (2024) [4] proposed a special framework with YOLACT++, FlowerNet, and regression models to improve flower counting through Density Map. Meanwhile, Estrada, J.S. et al. (2024) [19] compared the accuracy of YOLOv5, YOLOv7, and YOLOv8 with Density Map, where Density Map showed better results.

Of course, it is important to improve object detection architectures to search small and high-density objects such as flowers. However, there are doubts about AI accuracy. The image depicts only one side of the tree, which can be analyzed by an object detection model. Other flowers must be predicted by a regression model or precise 3D reconstruction of the tree is required. A similar problem was studied by Lin, J. et al. (2024) [

4] and Nisar, H. et al. (2015) [

6], who applied regression models to predict the real number of objects. Meanwhile, Linker, R. (2016) [

9] concluded that the usage of images from only one side of the tree does not worsen the results significantly. Regression models are constructed based on statistical tuples (

x,

y), where

x is the input value, and

y is the predictable value. Therefore, yield predictors must be tuned for each orchard individually to consider the local climate and orchard management. Another important fact is the accuracy of manually counted flowers. For example, Lin, J. et al. (2024) [

4] completed the correlation test between the image flower count and the actual flower count investigating that the actual count of single-panicle flowers is highly positively correlated with both the manually annotated image count (

p < 0.01, r = 0.941) and the predicted flower count from the FlowerNet model (

p < 0.01, r = 0.907). Meanwhile, the annotation of flowers is a subjective process, and a data annotator is restricted to the picture visible on the image. Additionally, if the photo shot is completed from another side, the resulting number of flowers will be different. Considering the linear regression model, the prediction error will be proportional to the input error. For example, Lin, J. et al. (2024) [

4] mentioned that the results obtained from Density Map-based methods can exhibit significant variation when images are captured at different distances. Also, YOLO models work using the parameter “confidence”: the higher the confidence is set, the smaller the number of objects returned by the model. A visual example is presented in

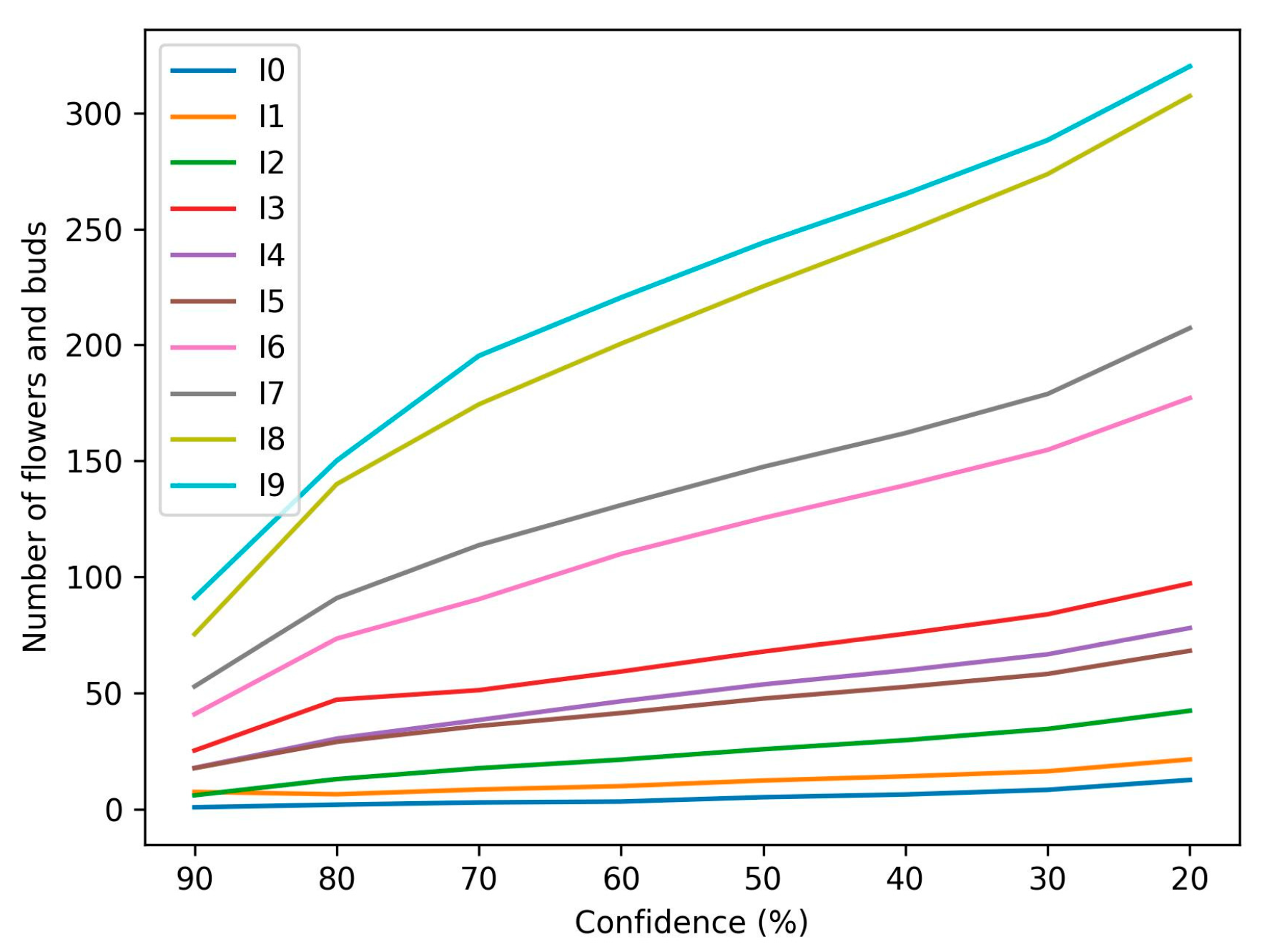

Figure 7 and

Figure 11b. In laboratory conditions, it is simple to find the optimal confidence to obtain minimal errors for a regression model. However, it is not possible in field conditions, where confidence must be strictly defined for a final product.
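
The dependence of the object count on the confidence threshold can be shown with a small sketch. The confidence scores below are hypothetical, and `count_detections` is an illustrative helper rather than part of any YOLO library.

```python
def count_detections(confidences, threshold):
    """Count detections whose confidence score reaches the threshold."""
    return sum(1 for score in confidences if score >= threshold)

# Hypothetical confidence scores returned by a detector for one image.
scores = [0.95, 0.80, 0.62, 0.41, 0.30, 0.12]

# The same image yields a different count for every threshold.
counts = {t: count_detections(scores, t) for t in (0.25, 0.50, 0.75)}
# counts == {0.25: 5, 0.5: 3, 0.75: 2}
```

Since the count is the input of the regression model, a threshold chosen for one dataset shifts every downstream prediction when it is changed, which is why it has to be fixed before deployment.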

Another ambiguity is flowering intensity itself. The flowering intensity must be estimated for each cultivar individually, which is performed based on the experience of fruit growers [4,12]. For example, Chen, Z. et al. (2022) [3] trained a modified YOLOv5 model to detect six stages of apple flower: (1) bud bursts; (2) tight clusters (green bud stage); (3) balloon blossom (most flowers with petals forming a hollow ball, stage 59 on the BBCH scale for pome fruits); (4) king bloom (first flower open, BBCH 60); (5) full bloom, where at least 50% of flowers are open and the first petals are falling (BBCH 65); and (6) petal fall, the end of flowering (BBCH 69). The objective was to identify the peak blooming day, because the timing of flower thinning is generally decided by the ratio of “king bloom” clusters to “full bloom” clusters. Meanwhile, Lin, J. et al. (2024) [4] did not differentiate flower stages; their dataset contained two litchi varieties, Guiwei and Feizi Xiao, with images of first-period male litchi flowers during the early flowering stage. Estrada, J.S. et al. (2024) [19] did not identify different flower stages either. Lee, J. et al. (2022) [20] studied one apple cultivar (Ambrosia) and identified two types of flowers: “Fruit Flower Single” and “Fruit Flower Cluster”. In our study, the fruit growers estimated flowering intensity using a 10-point system (0–9 points). Thus, all developers of flower detectors [3,4,19,20] aimed to improve manual flower counting (subjective visual estimation) by replacing it with a precise tool. However, the developed models use different flowering intensity estimation techniques, which are influenced by local fruit-growing traditions and by how AI developers understand them.

The same initiative motivated our study: to automate flowering intensity estimation and assist fruit growers with a smart tool. However, the annotation of flowers and buds is a time-consuming process. Our pilot test showed that annotating one image (320 × 320 px) of apple flowers takes approximately 15 min. According to Adhikari et al. (2018) [21], manual annotation takes about 15.35 s per bounding box and its correction for indoor scenes. In our dataset, the average number of objects per image was 26.42 and the maximum was 92, which at that rate would correspond to about 6 min 46 s and 23 min 32 s, respectively; however, images of flowers are more complex. Therefore, the roughly doubled time (15 min) is comparable with the results presented by Adhikari et al. (2018) [21]. Because annotation is so time-consuming, we investigated the simple training method (see Figure 4) and achieved excellent accuracy: 0.993–0.995 mAP@50 and 0.967–0.974 mAP@50:95 for YOLOv9m, and 0.992–0.994 mAP@50 and 0.970–0.977 mAP@50:95 for YOLO11m.
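
The annotation-time estimate derived from the per-box rate can be reproduced with a short calculation. The sketch only multiplies the figures quoted in the text (15.35 s per box, 26.42 objects on average, 92 at most); the function name `annotation_time` is hypothetical.

```python
SECONDS_PER_BOX = 15.35  # per-box rate attributed to Adhikari et al. (2018) in the text

def annotation_time(n_boxes, seconds_per_box=SECONDS_PER_BOX):
    """Return the estimated annotation time as (whole minutes, remaining seconds)."""
    total_seconds = n_boxes * seconds_per_box
    minutes, seconds = divmod(total_seconds, 60)
    return int(minutes), seconds

mean_min, mean_sec = annotation_time(26.42)  # average objects per image
max_min, max_sec = annotation_time(92)       # maximum objects per image
# mean: 6 min and about 45.5 s; max: 23 min and about 32.2 s
```

The observed 15 min per image is therefore a little more than twice the estimate for an average image at the indoor-scene rate.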

However, even if an ideal flower counter is developed, knowing the number of flowers is not sufficient for efficient decision-making, because plenty of factors can affect fruit yield, including the orchard system itself. Orchards in uncovered areas are directly exposed to external environmental factors (excessive heat, drought, cold, storms, etc.), which have been increasingly observed in recent years. Risk-based management can be applied in this situation: a risk management plan is developed, and the yield forecast is updated on its basis throughout the season. The risk management plan identifies and classifies possible risks and assesses their impact and likelihood. Most importantly, for this information to be usable in yield forecasting, the impact of each risk on the yield is quantified based on the results of the risk assessment and the actions determined for each risk (mitigation, buffering, acceptance). For example, if the wind speed during a storm is 35 m/s, the yield reduction is 15%. In this case, it is assumed that if this risk occurs, the yield will be 15% lower than initially determined by counting flowers. As mentioned earlier, yield forecasting is essential for making accurate decisions about sales planning, the number of employees needed, etc. Considering the risks, a precise yield prediction cannot be obtained without real-time monitoring. Meanwhile, high precision requires 3D reconstruction of flowering trees, which is challenging and costly for modern industry. Zhang et al. (2023) [22] presented a solution based on UAVs, in which flowering intensity is estimated from an image captured from above, tree segmentation, and the calculation of flower pixels. This solution provides the following advantages: (1) it automates yield monitoring by using UAVs that fly over trees; (2) the images do not have noise-flowers in the background; and (3) considering Linker, R. (2016) [9], using images from only one side of the tree does not worsen the prediction results, and linear regression models can be constructed based on pixel indices. However, this approach has disadvantages too: the monitoring distance prevents capturing flower buds and recognizing flower stages. Zhang et al. (2023) [22] tested the accuracy of their method against manual flowering intensity estimation (1–9 points). As shown above, manual evaluation is subjective and introduces perception error. Therefore, the two technologies must be developed together. Replacing manual flowering intensity estimation with computer vision will make it possible to develop more precise linear regression models for UAV solutions. Also, YOLO models can be embedded into agrobots for automatic flower thinning, which is another function and service for horticulture.
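
The risk-based adjustment of a flower-count forecast described in this section can be sketched as follows. The 15% reduction is the storm example from the text; the baseline yield, the function name `risk_adjusted_yield`, and the multiplicative combination of several risks are assumptions made only for illustration.

```python
def risk_adjusted_yield(baseline_yield, occurred_impacts):
    """Reduce a flower-count-based forecast by the impact of each risk that occurred.

    occurred_impacts holds fractional yield reductions (0.15 means 15%).
    Combining several risks multiplicatively is an assumption of this sketch.
    """
    adjusted = baseline_yield
    for impact in occurred_impacts:
        if not 0.0 <= impact <= 1.0:
            raise ValueError("impact must be a fraction between 0 and 1")
        adjusted *= 1.0 - impact
    return adjusted

# Hypothetical baseline of 1000 kg forecast from flower counts; a storm
# with a quantified impact of 15% lowers the forecast to about 850 kg.
after_storm = risk_adjusted_yield(1000.0, [0.15])
```

Re-running the adjustment whenever a monitored risk materializes keeps the forecast current throughout the season.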