In-Field Forage Biomass and Quality Prediction Using Image and VIS-NIR Proximal Sensing with Machine Learning and Covariance-Based Strategies for Livestock Management in Silvopastoral Systems

Abstract

1. Introduction

2. Plant Physiology, Spectral Data, and Imaging for Megathyrsus maximus cv. Mombasa Characterization

2.1. Plant Spectra for Biomass Estimation and Quality Assessment

2.2. Characterization of Biomass and Quality Traits of Megathyrsus maximus cv. Mombasa

3. Materials and Methods

3.1. In-Field Experiments

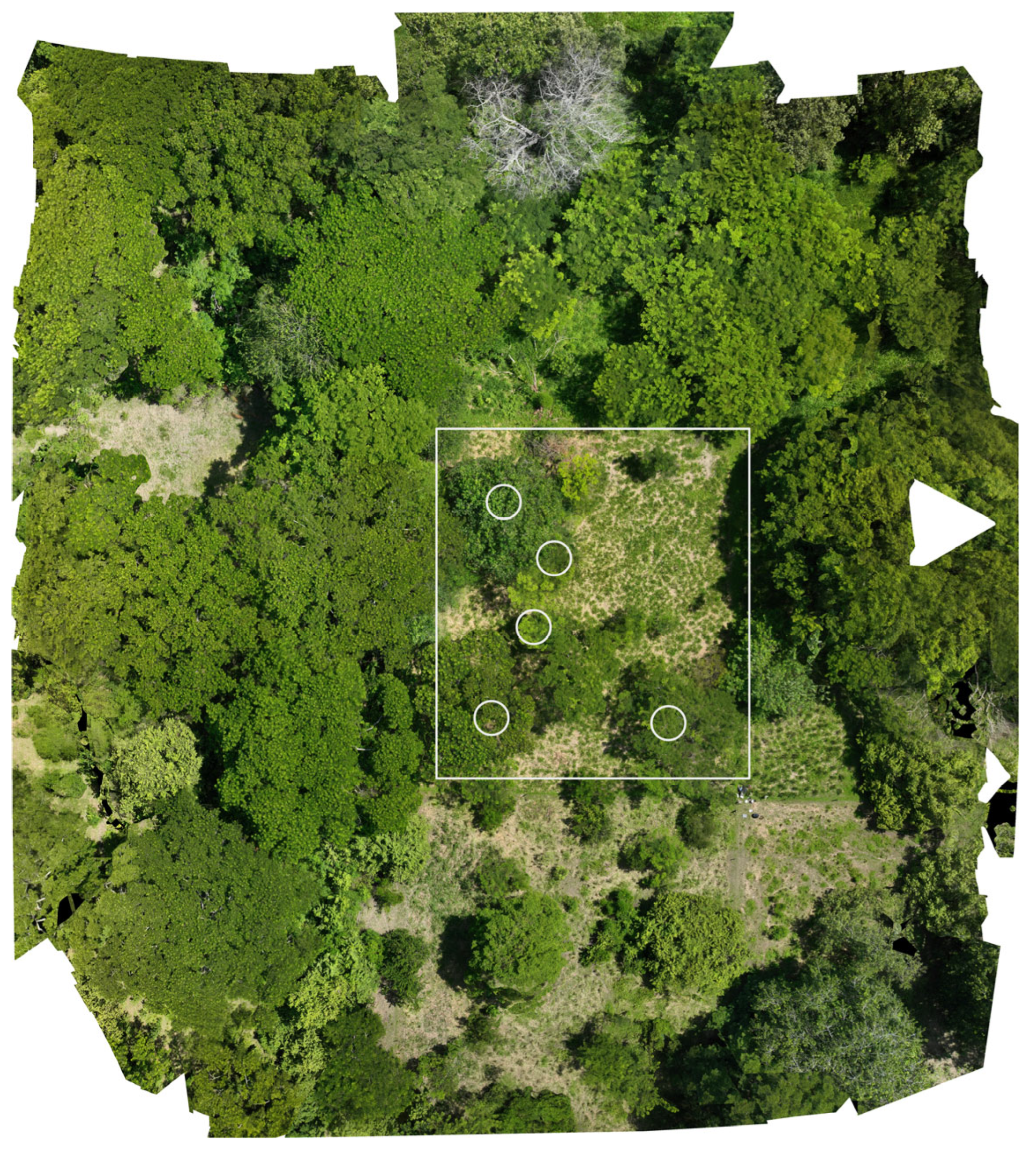

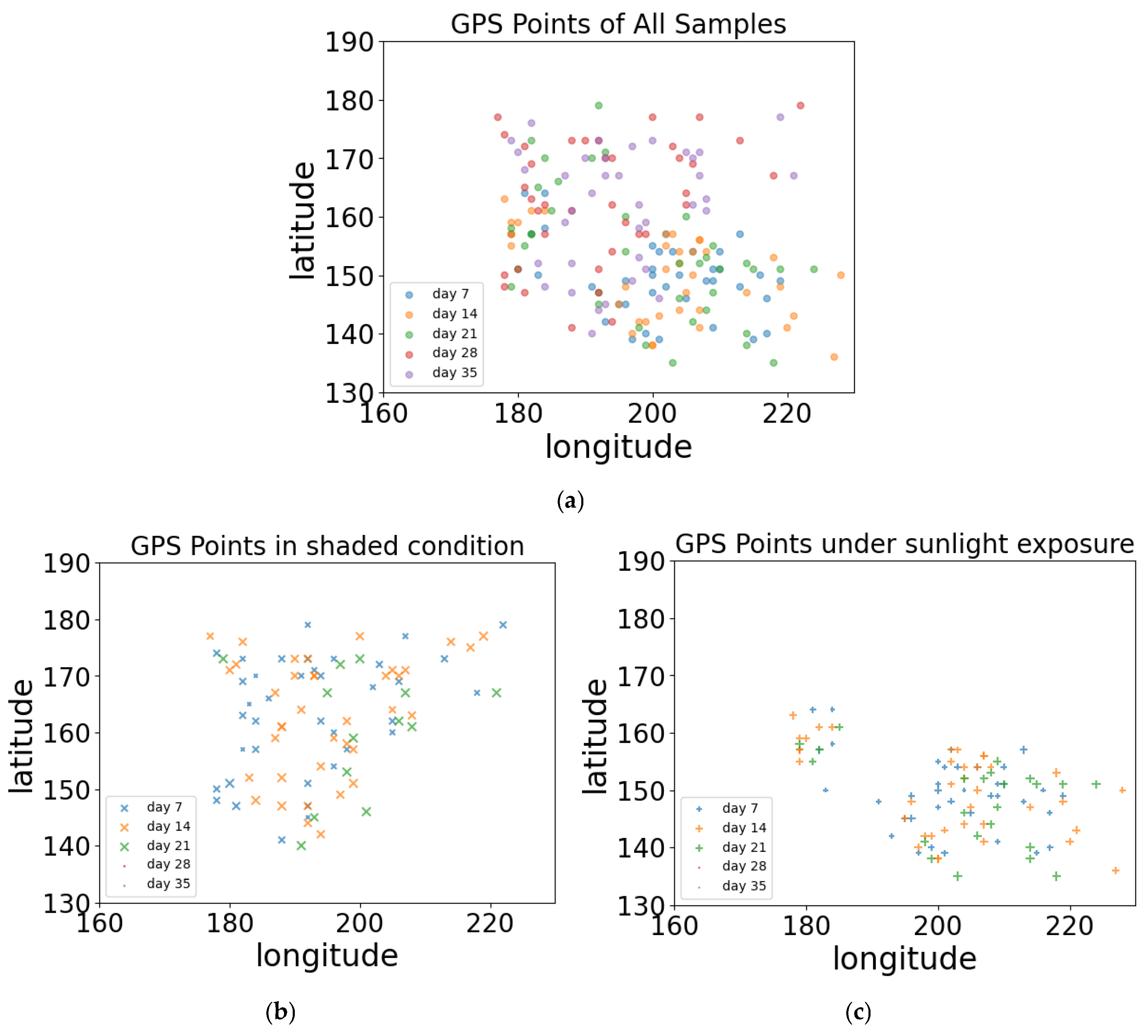

3.1.1. Experimental Design and Sampling Location

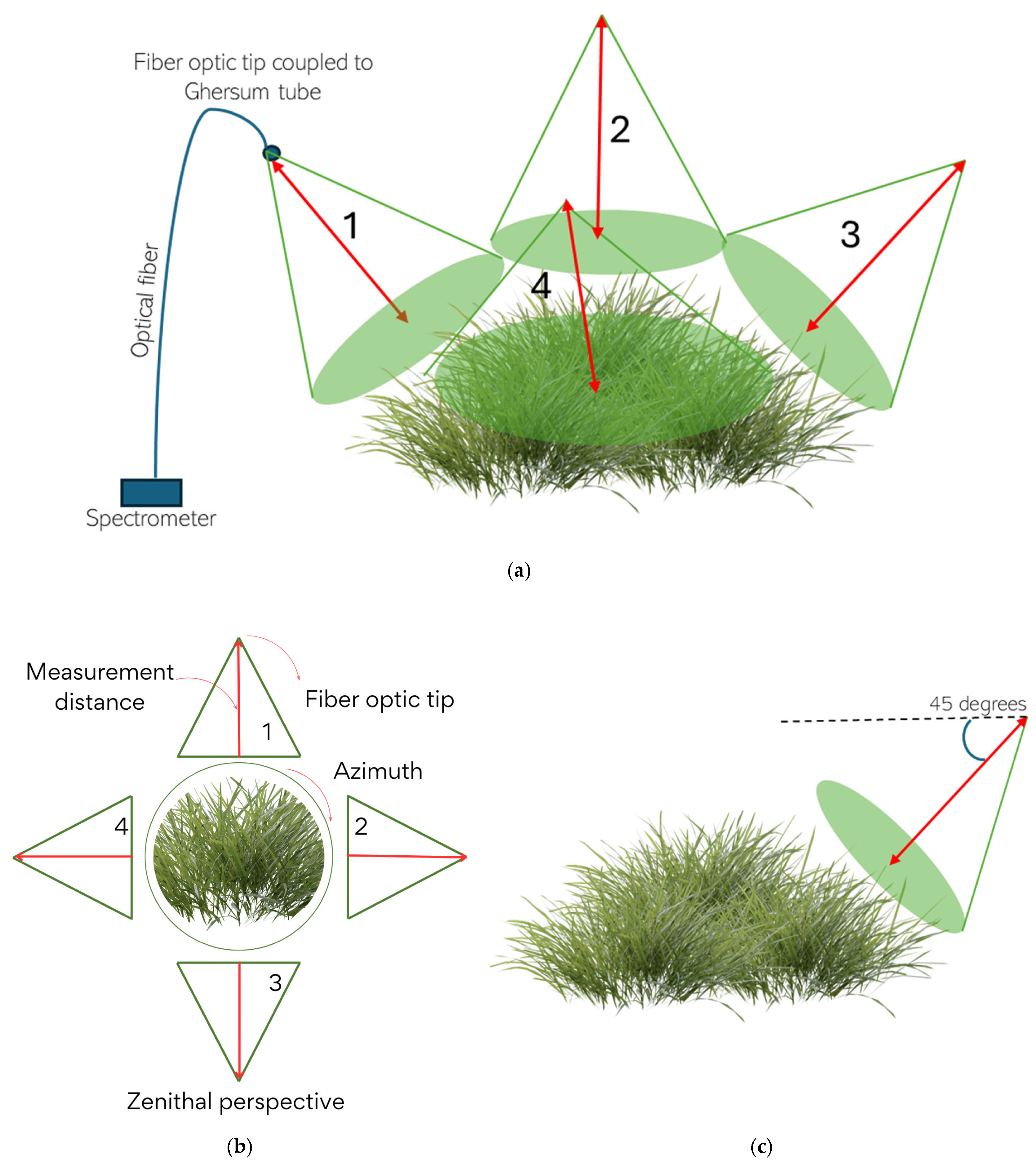

3.1.2. Forage Sampling Method

3.1.3. Forage Evaluation

- Height per tussock: Measured in cm from the ground to the apical leaf (without compressing or extending it), excluding the inflorescence, using a tape measure with a resolution of 0.1 cm.

- Green leaves per stem: To estimate the number of green leaves per stem, 20% of the total stems in the tussock were sampled. The green leaves on each stem were counted, from the basal leaf to the apical leaf, and the mode of the values of the sample was recorded.

- Number of total green leaves per tussock: The total number of green leaves was manually counted.

- Biomass per tussock (DMY): A cut was made at a height of 30 cm using an electric hedge trimmer. The material was collected, and its fresh weight was measured in situ using a digital scale with a resolution of 0.1 g. Subsequently, a 250 g subsample was taken and dried in an oven at 65 °C for 48 h to calculate the dry matter based on the difference between the fresh and dry weights of the samples. The dry weight of the tussock was then calculated by multiplying its fresh weight by its dry matter concentration.
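The dry matter arithmetic described in the list above can be sketched as follows. This is a minimal illustration rather than the authors' processing script: the function names and all numeric values are hypothetical, and only the 250 g subsample size comes from the text.

```python
from statistics import mode


def dry_matter_fraction(subsample_fresh_g: float, subsample_dry_g: float) -> float:
    """Dry matter concentration of the oven-dried subsample, as a fraction (0 to 1)."""
    if subsample_fresh_g <= 0:
        raise ValueError("fresh subsample weight must be positive")
    if not 0 <= subsample_dry_g <= subsample_fresh_g:
        raise ValueError("dry weight must lie between 0 and the fresh weight")
    return subsample_dry_g / subsample_fresh_g


def tussock_dry_weight(tussock_fresh_g: float, dm_fraction: float) -> float:
    """Dry matter yield per tussock: fresh weight times dry matter concentration."""
    return tussock_fresh_g * dm_fraction


# Hypothetical measurements, not taken from the study.
dm = dry_matter_fraction(subsample_fresh_g=250.0, subsample_dry_g=55.0)
dmy = tussock_dry_weight(tussock_fresh_g=480.0, dm_fraction=dm)

# Green leaves per stem: mode of the counts on the sampled 20% of stems.
leaves_per_stem = mode([3, 4, 4, 5, 4])

print(f"DM = {dm:.1%}, DMY = {dmy:.1f} g, green leaves per stem (mode) = {leaves_per_stem}")
```

Keeping the dry matter fraction as a separate step makes it easy to reuse the same subsample result for any fresh weight recorded in the field.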

3.1.4. Bromatological Analysis

3.2. In-Field Database Analysis

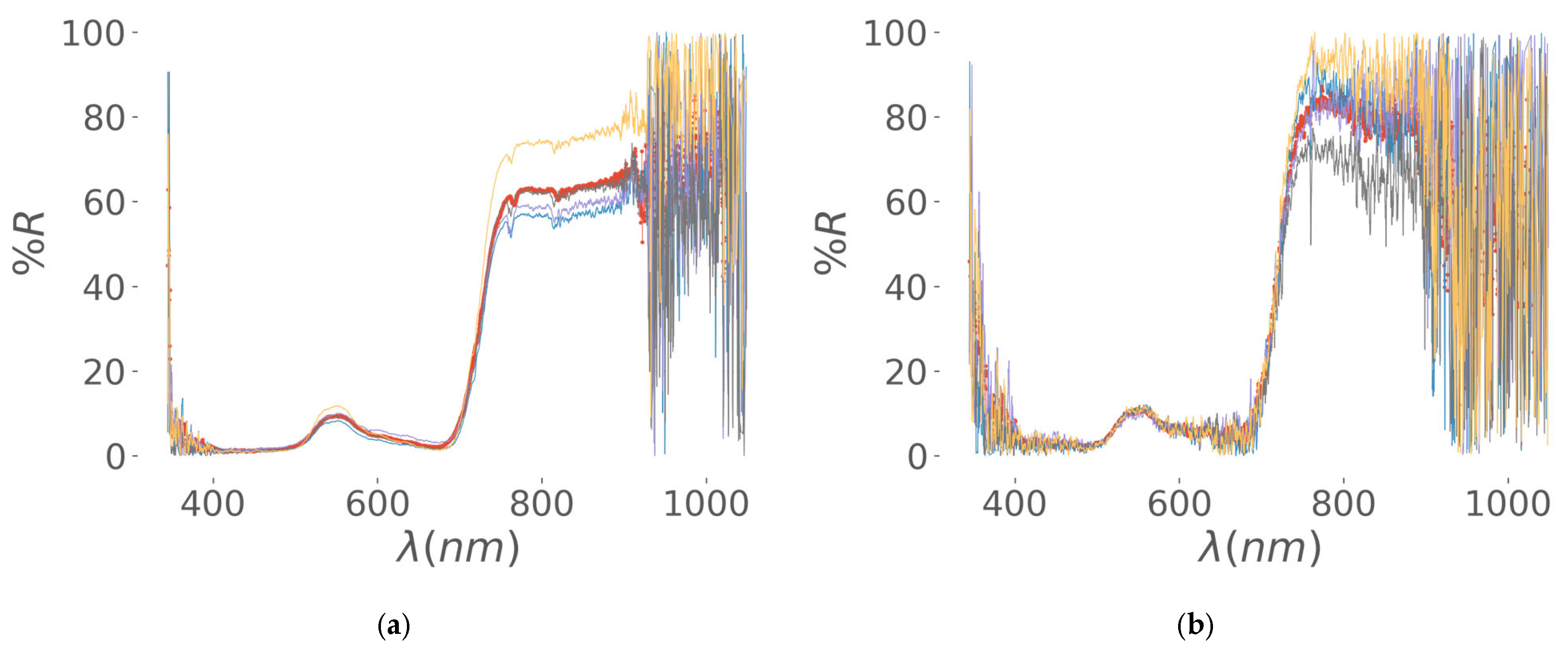

3.2.1. Data Engineering of VIS-NIR Optical Spectra, Segmentation of Visible RGB Images, and Implementation of Covariance Strategies

3.2.2. Machine Learning Model Description

- Ensuring that correlated spectral variables do not distort coefficient estimates.

- Preventing overfitting, especially with high-dimensional datasets such as those produced by spectral analysis.

- Improving generalization: reducing variance helps the model perform better on unseen data.
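The first point in the list above can be illustrated with a small hand-rolled example. It is a sketch under stated assumptions, not the study's modeling pipeline: the data are invented, the helper `fit_two_features` is hypothetical, and the model has only two nearly collinear predictors and no intercept so that the closed-form solution fits in a few lines.

```python
def fit_two_features(x1, x2, y, alpha=0.0):
    """Solve (X^T X + alpha * I) w = X^T y for two predictors, no intercept.

    alpha = 0 gives ordinary least squares; alpha > 0 gives ridge regression.
    """
    a = sum(u * u for u in x1) + alpha          # entry (1, 1) of X^T X + alpha * I
    b = sum(u * v for u, v in zip(x1, x2))      # off-diagonal entry
    d = sum(v * v for v in x2) + alpha          # entry (2, 2)
    r1 = sum(u * t for u, t in zip(x1, y))      # first entry of X^T y
    r2 = sum(v * t for v, t in zip(x2, y))      # second entry of X^T y
    det = a * d - b * b
    if det == 0:
        raise ValueError("singular system; increase alpha")
    # Cramer's rule for the 2x2 system.
    return ((d * r1 - b * r2) / det, (a * r2 - b * r1) / det)


# Invented data: x2 is almost a copy of x1, mimicking two highly correlated bands.
x1 = [1.0, 2.0, 3.0, 4.0, 5.0]
x2 = [1.01, 1.99, 3.02, 3.98, 5.01]
y = [1.1, 1.9, 3.2, 3.9, 5.1]

ols = fit_two_features(x1, x2, y, alpha=0.0)
ridge = fit_two_features(x1, x2, y, alpha=1.0)

print("OLS coefficients:  ", [round(w, 2) for w in ols])
print("Ridge coefficients:", [round(w, 2) for w in ridge])
```

With these numbers the unpenalized fit assigns large coefficients of opposite sign to the two near-duplicate predictors, while the penalized fit splits the effect between them in roughly equal, moderate shares.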

4. Results

4.1. In-Field Dataset Configuration and Visualization

4.1.1. Forage Sampling Results

4.1.2. Bromatology Quality Results

4.2. Final Database Configuration

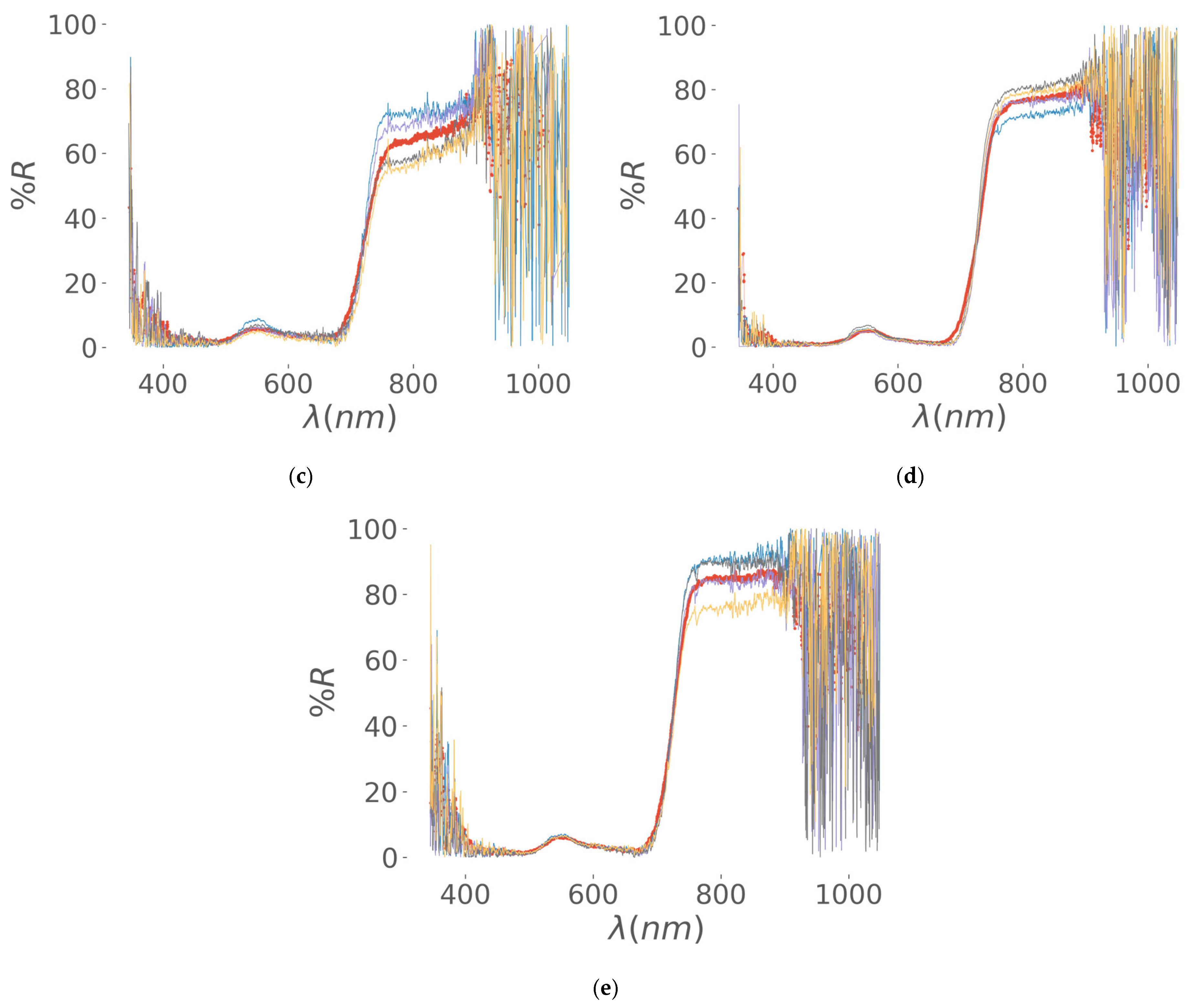

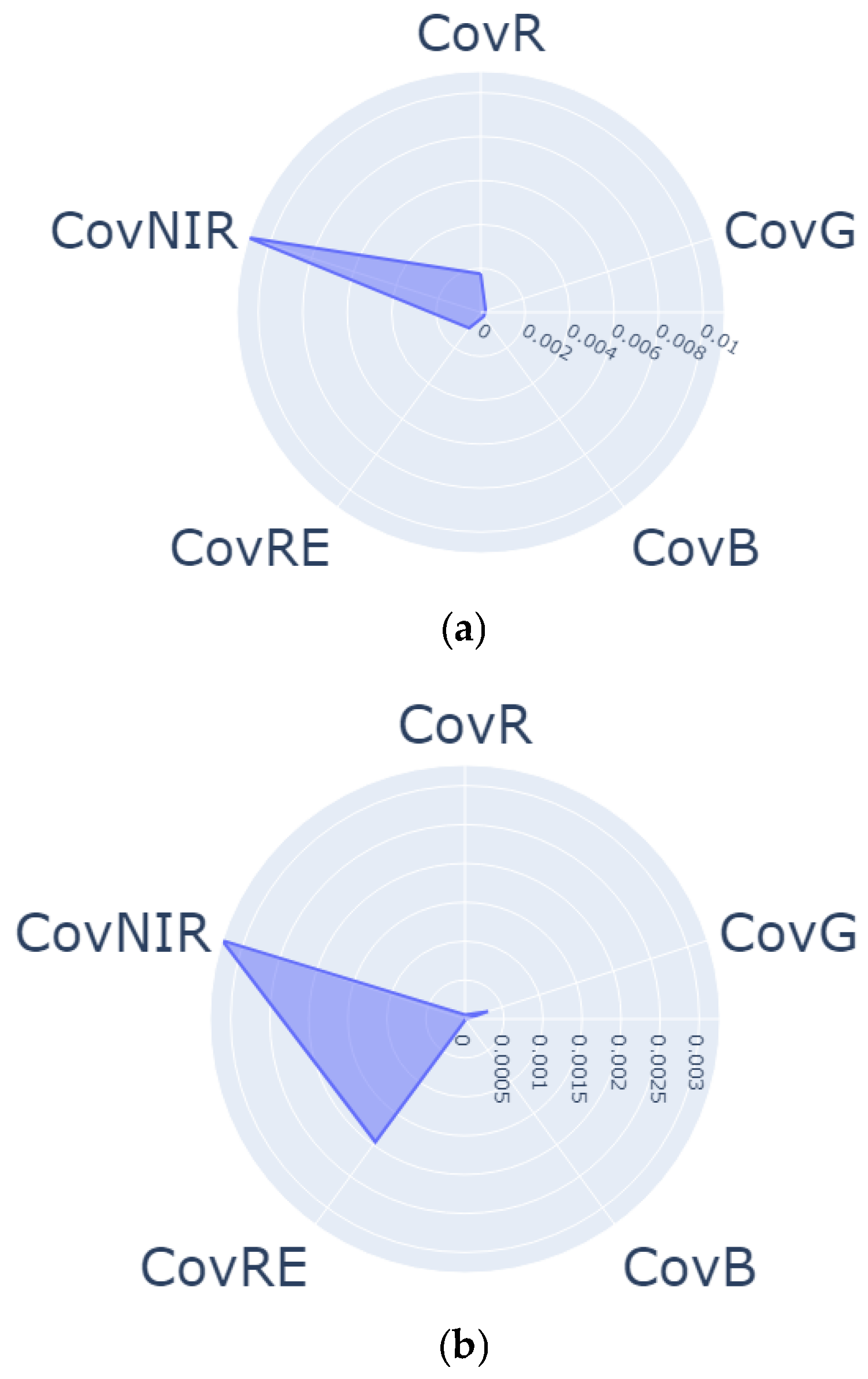

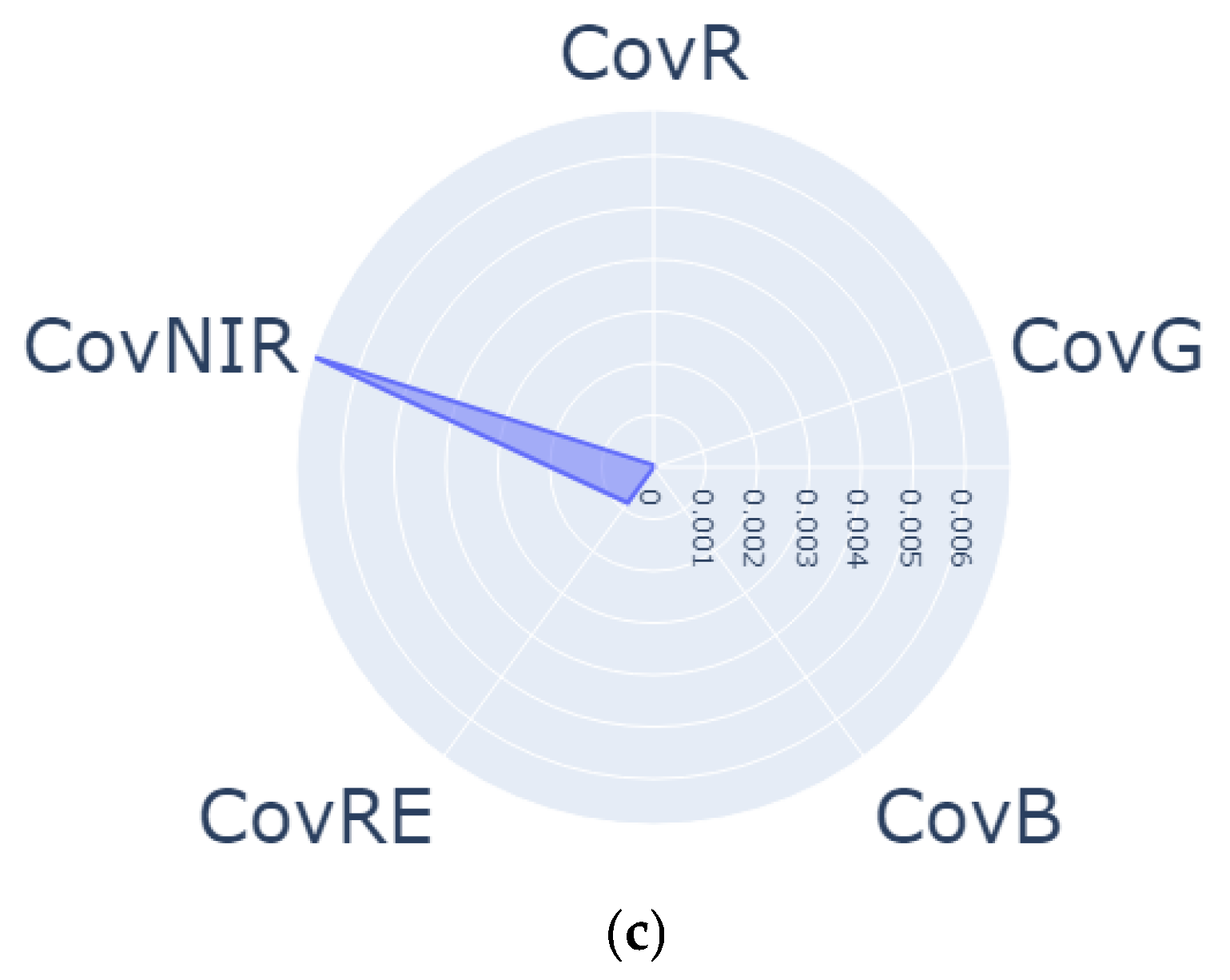

Data Engineering

4.3. Development and Analysis of Machine Learning Models

4.3.1. Prediction of Biomass Based on Dry Matter Yield

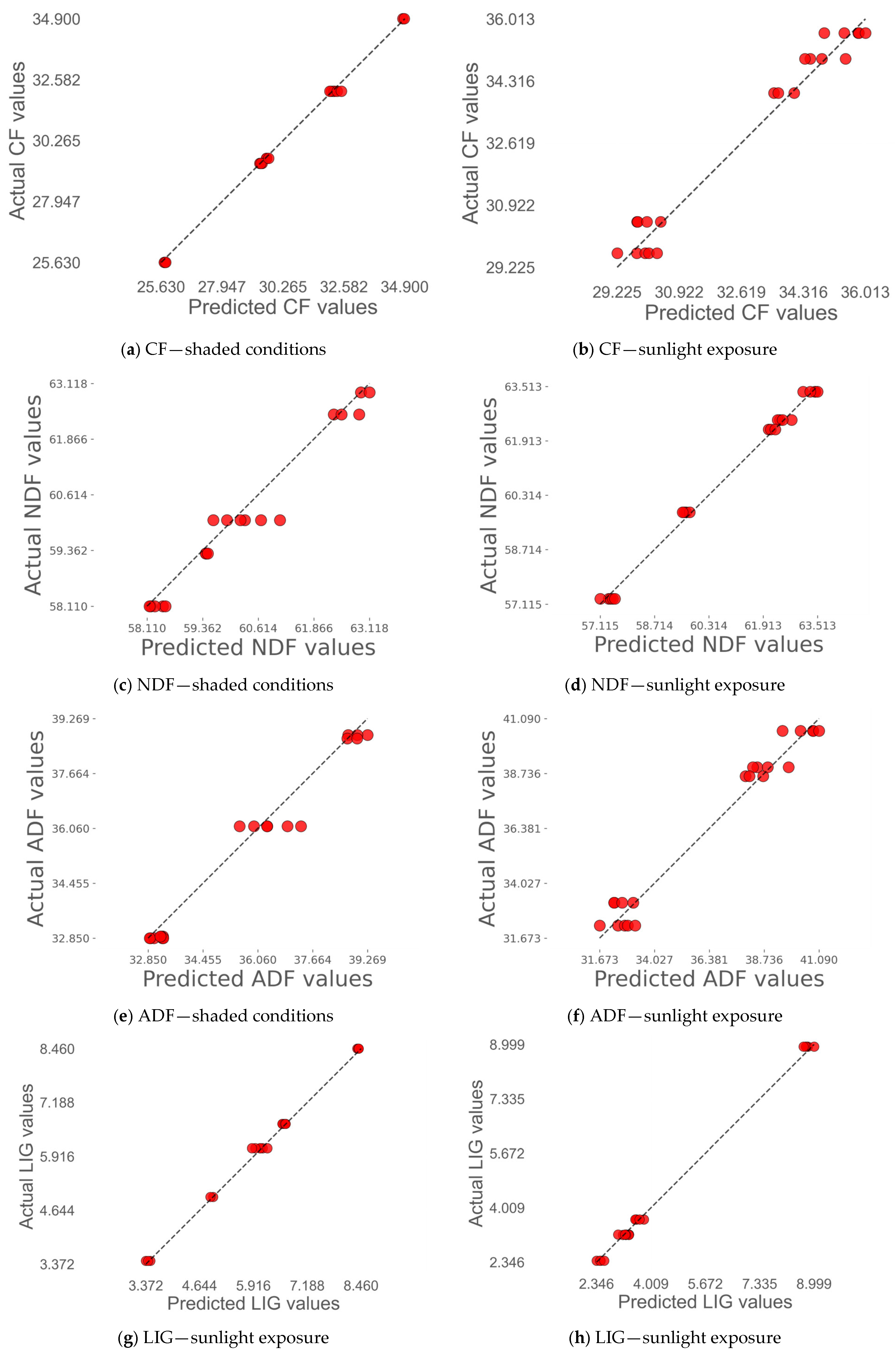

4.3.2. Prediction of Quality Traits

5. Discussion

Practical Applications

- Forage monitoring and management

- Integration with digital tools and technological adoption to promote usability and practical applications

- Sustainability and data-driven decision-making

- Scalability and future prospects

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Regrowth Day | Relative Humidity (%) | Solar Radiation | Precipitation (mm) | Air Temperature |
|---|---|---|---|---|
| 7 | 67.3 | 21.1 | 7.4 | 30.5 |
| 14 | 70.3 | 23.5 | 46.7 | 29.1 |
| 21 | 75.7 | 10.6 | 0.0 | 28.8 |
| 28 | 71.3 | 2.7 | 13.3 | 29.4 |
| 35 | 72.0 | 2.8 | 0.0 | 28.7 |

| Name of Vegetation Index | Equation |
|---|---|
| Normalized Difference Vegetation Index (NDVI) | |
| Green Normalized Difference Vegetation Index (GNDVI) | |
| Normalized Difference Vegetation Red Edge (NDRE) | |
| Plant Senescence Reflectance Index (PSRI) | |
| Triangular Vegetation Index (TVI) | |
| Soil Adjusted Vegetation Index (SAVI) | |
| Optimized Soil Adjusted Vegetation Index (OSAVI) | |
| Atmospherically Resistant Vegetation Index (ARVI) | |
| Soil Adjusted and Atmospherically Resistant Vegetation Index (SARVI) | |
| Soil Adjusted and Atmospherically Resistant Vegetation Index 2 or Enhanced Vegetation Index (SARVI2 or EVI) | |
| Enhanced Vegetation Index 2 (EVI2) | |
| Non-Linear Vegetation Index (NLI) | |
| Visible Atmospherically Resistant Index (VARI) | |
| Chlorophyll Index Green (CLGR) | |
| Chlorophyll Index Red Edge (CLRE) | |
| Normalized Difference Vegetation Water Index (NDWI) | |
| Renormalized Difference Vegetation Index (RDVI) | |
| Wide Dynamic Range Vegetation Index (WDRVI) | |
| Leaf Area Index (LAI) | |
| Anthocyanin Reflectance Index 1 (ARI1) | |
| Anthocyanin Reflectance Index 2 (ARI2) | |
| Blue Green Pigment Index (BGI) | |
| Normalized Phaeophytinization Index (NPQI) | |
| Plant Senescence Reflectance Index (PSRI) | |
| Structure Insensitive Pigment Index 1 (SIPI1) | |
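As a rough illustration of how indices of this kind are computed from band reflectances, the sketch below uses the widely published definitions of NDVI, GNDVI, NDRE, and SAVI. These are assumed standard forms and may differ from the exact equations used in the study. The function names, the reflectance values, and the soil adjustment factor of 0.5 are illustrative choices.

```python
def normalized_difference(a: float, b: float) -> float:
    """(a - b) / (a + b), the pattern shared by NDVI, GNDVI, and NDRE."""
    total = a + b
    if total == 0:
        raise ValueError("band sum is zero; index undefined")
    return (a - b) / total


def vegetation_indices(nir, red, green, red_edge, soil_factor=0.5):
    """Return a few common indices from band reflectances on a 0 to 1 scale."""
    return {
        "NDVI": normalized_difference(nir, red),
        "GNDVI": normalized_difference(nir, green),
        "NDRE": normalized_difference(nir, red_edge),
        # SAVI adds a soil adjustment factor to the denominator of NDVI.
        "SAVI": (1 + soil_factor) * (nir - red) / (nir + red + soil_factor),
    }


# Hypothetical reflectances for a green canopy, not measurements from the study.
indices = vegetation_indices(nir=0.50, red=0.10, green=0.20, red_edge=0.30)
for name, value in indices.items():
    print(f"{name}: {value:.3f}")
```

Sharing one helper for the normalized-difference pattern keeps the zero-denominator check in a single place.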

| Data Source | Database Parameters |
|---|---|
| Data recorded in-field | Date; regrowth day; tussock location (in the shade or under the sun); number of tussocks (from 0–39); geolocation recorded by GPS; tussock variables: height, number of total green leaves, green leaves per stem; one (1) photo per tussock and four (4) spectra; biomass per tussock: fresh weight and subsamples |
| Laboratory data | Percent of dry matter (%); g of dry matter per tussock; quality variables: crude fiber (CF), neutral detergent fiber (NDF), acid detergent fiber (ADF), lignin content (LIG), crude protein (CP), ether extract (EE), ash (ASH) |
| Data processed | Optical VIS-NIR spectrum values: optical reflectance of NIR, B, G, RE, R, and vegetation indices (Table 2); NIR covariance, mean green values, pixel numbers, and greenness p; categorical variable: non-uniformity derived through thresholding classification of covariance values and greenness values |

Adjusted Hyperparameters for Biomass and Quality Trait Prediction

| Condition | ML Model | Hyperparameter | g of Dry Matter | CF | NDF | ADF | LIG | CP | EE | ASH |
|---|---|---|---|---|---|---|---|---|---|---|
| Shaded | LASSO | α | 1.0 | 0.2 | 1.0 | 0.8 | 0.2 | 0.2 | 0.2 | 0.2 |
| Shaded | EN | α | 1.0 | 0.2 | 1.0 | 0.8 | 0.2 | 0.2 | 0.2 | 0.2 |
| Shaded | Ridge | α | 1.0 | 0.2 | 1.0 | 0.8 | 0.2 | 0.2 | 0.2 | 0.2 |
| Shaded | k-NN | N | 25 | 10 | 50 | 35 | 50 | 50 | 50 | 25 |
| Shaded | DT | MD | 7 | 4 | 9 | 4 | 5 | 9 | 4 | 9 |
| Shaded | DT | MF | 20 | 30 | 20 | 30 | 30 | 30 | 30 | 30 |
| Sunlight | LASSO | α | 1.0 | 1.0 | 0.2 | 1.0 | 0.2 | 0.2 | 0.2 | 0.2 |
| Sunlight | EN | α | 1.0 | 1.0 | 0.2 | 1.0 | 0.2 | 0.2 | 0.2 | 0.2 |
| Sunlight | Ridge | α | 1.0 | 1.0 | 0.2 | 1.0 | 0.2 | 0.2 | 0.2 | 0.2 |
| Sunlight | k-NN | N | 12 | 10 | 10 | 10 | 10 | 10 | 10 | 10 |
| Sunlight | DT | MD | 5 | 7 | 7 | 5 | 5 | 4 | 5 | 9 |
| Sunlight | DT | MF | 30 | 30 | 30 | 30 | 20 | 30 | 10 | 30 |

| Regrowth Day | Tussock Location | Green Leaves per Stem (Mode) | Green Leaves per Tussock (Mean) | Green Leaves per Tussock (S.D.) | Tussock Height, cm (Mean) | Tussock Height, cm (S.D.) | DMY per Tussock, g (Mean) | DMY per Tussock, g (S.D.) |
|---|---|---|---|---|---|---|---|---|
| 7 | Shaded | 2 | 72 | 19.64 | 66.26 | 8.41 | 15.19 | 6.41 |
| 7 | Sunlight | 2 | 105 | 36.79 | 67.33 | 6.87 | 21.12 | 7.75 |
| 14 | Shaded | 3 | 183 | 45.15 | 81.00 | 9.49 | 41.05 | 13.78 |
| 14 | Sunlight | 3 | 185 | 45.18 | 78.67 | 9.29 | 48.72 | 18.81 |
| 21 | Shaded | 4 | 192 | 49.41 | 100.53 | 10.82 | 62.82 | 20.09 |
| 21 | Sunlight | 4 | 192 | 52.93 | 108.71 | 7.05 | 75.42 | 21.17 |
| 28 | Shaded | 4 | 215 | 80.46 | 122.11 | 12.62 | 98.18 | 44.08 |
| 28 | Sunlight | 4 | 244 | 61.53 | 130.14 | 6.44 | 123.94 | 27.01 |
| 35 | Shaded | 5 | 331 | 76.31 | 136.84 | 10.55 | 136.66 | 35.66 |
| 35 | Sunlight | 5 | 356 | 74.52 | 151.71 | 9.18 | 169.12 | 40.29 |

| Regrowth Day | Tussock Location | CF | NDF | ADF | LIG | CP | EE | ASH |
|---|---|---|---|---|---|---|---|---|
| 7 | Shaded | 29.40 | 58.11 | 32.85 | 6.68 | 18.19 | 2.48 | 12.51 |
| 7 | Sunlight | 29.61 | 57.27 | 32.21 | 8.93 | 18.09 | 2.32 | 12.78 |
| 14 | Shaded | 29.59 | 59.29 | 32.90 | 8.46 | 17.10 | 2.59 | 11.89 |
| 14 | Sunlight | 30.47 | 59.81 | 33.19 | 2.39 | 14.44 | 2.48 | 12.55 |
| 21 | Shaded | 32.14 | 60.04 | 36.12 | 6.11 | 15.69 | 2.30 | 12.24 |
| 21 | Sunlight | 33.99 | 62.25 | 38.62 | 3.18 | 11.59 | 2.66 | 13.30 |
| 28 | Shaded | 35.63 | 62.42 | 38.79 | 3.45 | 12.00 | 1.91 | 11.78 |
| 28 | Sunlight | 34.92 | 62.53 | 39.00 | 3.19 | 9.37 | 1.74 | 11.39 |
| 35 | Shaded | 34.90 | 62.92 | 38.69 | 4.96 | 15.21 | 1.78 | 12.05 |
| 35 | Sunlight | 35.63 | 63.36 | 40.56 | 3.65 | 9.75 | 1.78 | 12.27 |

Biomass and Quality Traits

| Condition | ML Model | g of Dry Matter TR | g of Dry Matter T | CF TR | CF T | NDF TR | NDF T | ADF TR | ADF T | LIG TR | LIG T | CP TR | CP T | EE TR | EE T | ASH TR | ASH T |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Shaded | LR | 0.953 | 0.353 | | | | | | | | | | | | | | |
| Shaded | LASSO | 0.903 | 0.865 | 0.918 | 0.843 | 0.844 | 0.864 | 0.841 | 0.826 | 0.723 | 0.642 | 0.889 | 0.915 | 0.809 | 0.797 | 0.342 | 0.368 |
| Shaded | EN | 0.901 | 0.886 | 0.840 | 0.687 | 0.846 | 0.868 | 0.844 | 0.825 | 0.821 | 0.803 | 0.848 | 0.880 | 0.819 | 0.802 | 0.387 | 0.391 |
| Shaded | Ridge | 0.913 | 0.815 | 0.999 | 0.998 | 0.985 | 0.953 | 0.989 | 0.966 | 0.998 | 0.996 | 0.998 | 0.996 | 0.998 | 0.993 | 0.999 | 0.997 |
| Shaded | k-NN | 0.139 | −0.158 | 0.334 | −0.185 | 0.068 | −0.075 | 0.105 | −0.033 | 0.011 | −0.025 | 0.021 | 0.021 | 0.041 | −0.116 | 0.085 | 0.078 |
| Shaded | DT | 0.999 | 0.786 | 0.999 | 0.999 | 0.999 | 0.999 | 0.999 | 0.999 | 0.999 | 0.999 | 0.999 | 0.999 | 0.999 | 0.999 | 0.999 | 0.999 |
| Sunlight | LR | 0.954 | 0.529 | | | | | | | | | | | | | | |
| Sunlight | LASSO | 0.924 | 0.934 | 0.906 | 0.881 | 0.910 | 0.810 | 0.898 | 0.879 | 0.836 | 0.701 | 0.899 | 0.904 | 0.771 | 0.786 | 0.521 | 0.591 |
| Sunlight | EN | 0.924 | 0.933 | 0.910 | 0.890 | 0.911 | 0.811 | 0.902 | 0.887 | 0.825 | 0.648 | 0.921 | 0.840 | 0.773 | 0.781 | 0.571 | 0.622 |
| Sunlight | Ridge | 0.934 | 0.902 | 0.986 | 0.969 | 0.998 | 0.994 | 0.986 | 0.969 | 0.999 | 0.997 | 0.998 | 0.995 | 0.998 | 0.996 | 0.999 | 0.998 |
| Sunlight | k-NN | 0.162 | −0.253 | 0.179 | 0.274 | 0.246 | −0.193 | 0.180 | −0.239 | 0.462 | −0.243 | 0.230 | −0.270 | 0.432 | −0.290 | 0.466 | 0.063 |
| Sunlight | DT | 0.979 | 0.929 | 0.999 | 0.999 | 0.999 | 0.999 | 0.999 | 0.999 | 0.999 | 0.999 | 0.999 | 0.999 | 0.999 | 0.798 | 0.999 | 0.999 |

Biomass and Quality Traits

| Condition | ML Model | g of Dry Matter TR | g of Dry Matter T | CF TR | CF T | NDF TR | NDF T | ADF TR | ADF T | LIG TR | LIG T | CP TR | CP T | EE TR | EE T | ASH TR | ASH T |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Shaded | LR | 8.51 | 23.69 | | | | | | | | | | | | | | |
| Shaded | LASSO | 10.77 | 11.98 | 0.71 | 0.62 | 0.55 | 0.49 | 0.81 | 0.77 | 0.72 | 0.64 | 0.55 | 0.47 | 0.11 | 0.09 | 0.18 | 0.19 |
| Shaded | EN | 10.80 | 11.03 | 1.00 | 1.23 | 0.56 | 0.49 | 0.80 | 0.77 | 0.59 | 0.51 | 0.64 | 0.54 | 0.10 | 0.09 | 0.17 | 0.18 |
| Shaded | Ridge | 10.62 | 13.76 | 0.05 | 0.08 | 0.17 | 0.26 | 0.21 | 0.33 | 0.04 | 0.06 | 0.05 | 0.09 | 0.01 | 0.01 | 0.006 | 0.01 |
| Shaded | k-NN | 40.35 | 35.75 | 2.08 | 2.24 | 1.71 | 1.66 | 2.33 | 2.2 | 1.43 | 1.13 | 1.64 | 1.56 | 0.30 | 0.26 | 0.20 | 0.22 |
| Shaded | DT | 6.04 | 10.31 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| Sunlight | LR | 9.39 | 31.03 | | | | | | | | | | | | | | |
| Sunlight | LASSO | 12.15 | 12.78 | 0.60 | 0.75 | 0.52 | 0.85 | 0.82 | 1.06 | 0.74 | 1.12 | 0.83 | 1.30 | 0.14 | 0.15 | 0.39 | 0.31 |
| Sunlight | EN | 12.26 | 12.86 | 0.58 | 0.72 | 0.52 | 0.85 | 0.81 | 1.03 | 0.75 | 1.20 | 0.73 | 1.17 | 0.13 | 0.15 | 0.36 | 0.30 |
| Sunlight | Ridge | 11.34 | 14.92 | 0.22 | 0.39 | 0.07 | 0.15 | 0.30 | 0.53 | 0.05 | 0.11 | 0.11 | 0.21 | 0.01 | 0.01 | 0.01 | 0.02 |
| Sunlight | k-NN | 43.08 | 56.00 | 1.88 | 2.56 | 1.57 | 2.20 | 2.60 | 3.48 | 1.16 | 1.91 | 2.30 | 3.30 | 0.23 | 0.34 | 0.37 | 0.49 |
| Sunlight | DT | 0.02 | 15.89 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.03 | 0.0 | 0.0 |

| Predicted Forage Variables | Under Shaded Conditions | Under Sunlight Exposure |
|---|---|---|
| g of dry matter | ElasticNet (α = 1). In-field predictors: tussock height, number of green leaves per tussock. Optical predictors: RE derivative, WDRVI, maximum of spectra, RE, NLI, SARVI. | LASSO (α = 1), Decision Tree (MD = 7, MF = 20). In-field predictors: tussock height, number of green leaves per tussock. Optical predictors: ARVI, EVI2, G, GNDVI, WDRVI. |

| Predicted Forage Variables | Condition | In-Field Predictors | Optical Predictors | ||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| R-H | DT-H | Date | HT | GLT | GR | NP | CLRE | VARI | NLI | EVI2 | RDVI | SAVI | SARVI | SARVI2 | R | NDVI | ARVI | ARVI2 | |||

| α | MD | MF | |||||||||||||||||||

| CF | Sunlight | 0.2 | 7 | 30 | x | x | x | x | x | x | x | x | |||||||||

| Shaded | 1.0 | 4 | 30 | x | x | x | x | x | x | x | x | ||||||||||

| ADF | Sunlight | 0.8 | 5 | 30 | x | x | x | x | x | x | x | x | |||||||||

| Shaded | 1.0 | 4 | 30 | x | x | x | x | x | x | x | x | ||||||||||

| NDF | Sunlight | 1.0 | 7 | 30 | x | x | x | x | x | x | x | x | |||||||||

| Shaded | 0.2 | 9 | 20 | x | x | x | x | x | x | x | x | ||||||||||

| LIG | Sunlight | 0.2 | 5 | 20 | x | x | x | x | x | x | x | x | |||||||||

| Shaded | 0.2 | 5 | 30 | x | x | x | x | x | x | x | |||||||||||

| CP | Sunlight | 0.2 | 4 | 30 | x | x | x | x | x | x | x | x | |||||||||

| Shaded | 0.2 | 9 | 30 | x | x | x | x | x | x | x | x | ||||||||||

| EE | Sunlight | 0.2 | x | x | x | x | x | x | x | x | |||||||||||

| Shaded | 0.2 | 4 | 30 | x | x | x | x | x | x | x | x | ||||||||||

| ASH | Sunlight | 0.2 | 9 | 30 | x | x | x | x | x | x | x | x | |||||||||

| Shaded | 0.2 | 9 | 30 | x | x | x | x | x | x | x | x | ||||||||||

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Serpa-Imbett, C.M.; Gómez-Palencia, E.L.; Medina-Herrera, D.A.; Mejía-Luquez, J.A.; Martínez, R.R.; Burgos-Paz, W.O.; Aguayo-Ulloa, L.A. In-Field Forage Biomass and Quality Prediction Using Image and VIS-NIR Proximal Sensing with Machine Learning and Covariance-Based Strategies for Livestock Management in Silvopastoral Systems. AgriEngineering 2025, 7, 111. https://doi.org/10.3390/agriengineering7040111
