Abstract

The integration of cloud computing, IoT (Internet of Things), and artificial intelligence (AI) is transforming precision agriculture by enabling real-time monitoring, data analytics, and dynamic control of environmental factors. This study develops a cloud-driven data analytics pipeline for indoor agriculture, using lettuce as a test crop due to its suitability for controlled environments. Built with Apache NiFi (Niagara Files), the pipeline facilitates real-time ingestion, processing, and storage of IoT sensor data measuring light, moisture, and nutrient levels. Machine learning models, including SVM (Support Vector Machine), Gradient Boosting, and DNN (Deep Neural Networks), analyzed 12 weeks of sensor data to predict growth trends and optimize thresholds. Random Forest analysis identified light intensity as the most influential factor (importance: 0.7), while multivariate regression highlighted phosphorus (0.54) and temperature (0.23) as key contributors to plant growth. Nitrogen exhibited a strong positive correlation (0.85) with growth, whereas excessive moisture (–0.78) and slightly elevated temperatures (–0.24) negatively impacted plant development. To enhance resource efficiency, this study introduces the Integrated Agricultural Efficiency Metric (IAEM), a novel framework that synthesizes key factors, including resource usage, alert accuracy, data latency, and cloud availability, leading to a 32% improvement in resource efficiency. Unlike traditional productivity metrics, IAEM incorporates real-time data processing and cloud infrastructure to address the specific demands of modern indoor farming. The combined approach of scalable ETL (Extract, Transform, Load) pipelines with predictive analytics reduced light use by 25%, water by 30%, and nutrients by 40% while simultaneously improving crop productivity and sustainability. 
These findings underscore the transformative potential of integrating IoT, AI, and cloud-based analytics in precision agriculture, paving the way for more resource-efficient and sustainable farming practices.

1. Introduction

Agricultural sustainability is increasingly challenged by climate change, resource shortages, and the rising demand for high-efficiency food production systems. In response, indoor farming has emerged as a viable solution, as demonstrated by Sahoo et al. [1] in their study, Trends in Agriculture Science: Integrating Data Analytics for Enhanced Decision Making by Farmers, which shows that controlled environments can precisely regulate key factors such as light, temperature, humidity, and nutrient absorption to optimize crop growth and quality.

Similarly, Jaeger [2] examined vertical farming (plant factories with artificial lighting) and provided consumer insights that underscore the benefits of such controlled systems, while Lakhiar et al. [3] reviewed modern plant cultivation technologies, including aeroponics, emphasizing the potential of controlled environment agriculture. The deployment of IoT systems in agriculture has facilitated continuous environmental monitoring and automated interventions, as detailed by Dozono et al. [4]. However, Oliveira et al. [5] highlighted that although IoT-based data analytics frameworks improve decision-making among farmers, the absence of robust, automated ETL pipelines limits the real-time implementation of these systems. These studies collectively illustrate the current technological landscape in precision agriculture and emphasize the critical need for advanced data integration solutions.

This study presents an IoT-cloud-based framework that integrates sensor networks, machine learning algorithms, and real-time data processing pipelines to enhance precision agriculture. Specifically, Apache NiFi is implemented as an ETL solution, ensuring automated data ingestion, transformation, and storage within a cloud environment (Azure IoT Hub & Blob Storage). To enable predictive analytics for optimizing crop growth, machine learning models including Support Vector Machines (SVM), Gradient Boosting, and Deep Neural Networks (DNN) were employed to analyze 12 weeks of sensor data, including light intensity, moisture, and nutrient levels.

A novel metric was specifically developed for this case study to assess resource efficiency, real-time alert accuracy, data latency, and cloud data availability. The Integrated Agricultural Efficiency Metric (IAEM) offers a quantitative evaluation of system performance, highlighting a 32% improvement in resource efficiency and confirming the effectiveness of an AI-driven, cloud-integrated agricultural monitoring system.

2. Materials and Methods

2.1. Experimental Setup for Evaluating Plant Growth

This study implemented a 12-week experimental setup to evaluate the effectiveness of an IoT-based system in optimizing indoor plant growth, with lettuce chosen as the test crop due to its fast growth cycle and sensitivity to environmental factors.

During the experiment, a new set of lettuce plants was grown each week, with environmental growing factors, including light intensity, moisture content, temperature, and nutrient levels, systematically controlled and varied weekly, as shown in Appendix A. The growth metrics for each weekly set were recorded at the maturity level to ensure a comprehensive analysis of the relationship between environmental conditions and lettuce development. This approach allowed for a robust assessment of how each unique setup influenced plant growth and validated the system’s ability to adapt to varying conditions. Light intensity was adjusted between 5102.92 lumens (week 11) and 9849.55 lumens (week 12), while moisture levels ranged from 22.31% (week 9) to 78.18% (week 1). The temperature was set between 15.63 °C (week 1) and 33.15 °C (week 5), simulating diverse indoor conditions. Nutrient levels were also varied, with nitrogen ranging from 64.49 mg/kg (week 3) to 148.57 mg/kg (week 5), phosphorus from 20.0 mg/kg (week 1) to 33.0 mg/kg (week 12), and potassium from 40.0 mg/kg (week 1) to 49.0 mg/kg (week 12). Growth metrics, including biomass (g) and height (cm), were recorded upon plant maturity to evaluate the outcomes of each weekly setup.

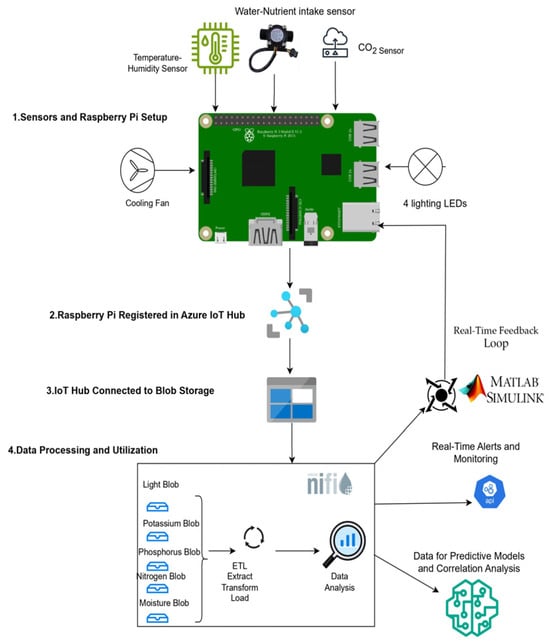

IoT sensors continuously collected data measurements and transmitted them in real-time to Azure Blob Storage, as shown in the pipeline in Figure 1. Each weekly setup represented a unique experimental condition.

Figure 1.

IoT-Based pipeline for plant growth optimization.

2.2. IoT-Driven Processing and Integration Pipeline

The Internet of Things (IoT) enables real-time environmental monitoring through connected sensors. In this study, IoT sensors were utilized to measure key environmental parameters, including light intensity, soil moisture, temperature, humidity, CO2 levels, and nutrient concentrations. These sensors were connected to a Raspberry Pi 4 Model B (4 GB RAM, quad-core ARM Cortex-A72 processor, Raspberry Pi Foundation, Cambridge, United Kingdom) via General Purpose Input/Output (GPIO) pins as shown in Figure 1 for data acquisition and transmission to a cloud-based storage system [6]. The Raspberry Pi 4 Model B was chosen for its computational capabilities, low power consumption, and compatibility with IoT-based agricultural monitoring systems.

Each sensor was selected based on accuracy, sensitivity, and compatibility with IoT-driven farming:

- Light Sensor (TSL2591, Adafruit Industries, New York, NY, USA): Measures illuminance (lux) with a dynamic range of 188 µlux to 88,000 lux, enabling precise detection of light intensity for plant growth optimization.

- Soil Moisture Sensor (Capacitive, DF Robot, Shanghai, China): Detects 0–100% Volumetric Water Content (VWC) with an accuracy of ±5%, preventing over- or under-watering.

- Temperature-Humidity-CO2 Sensor (SCD41, Sensirion, Chicago, IL, USA): Monitors temperature (−40 to 80 °C, ±0.5 °C), humidity (0–100% RH, ±2% RH), and CO2 levels (0–5000 ppm, ±50 ppm or ±5%), optimizing photosynthetic efficiency.

- Water Flow Sensor (Flow Meter, DF Robot, Shanghai, China): Measures water intake rate to assess nutrient absorption efficiency and irrigation control.

- Air Stone (Aeration System): Facilitates oxygenation of the water reservoir, improving nutrient uptake and root health.

- Growing Medium (Rockwool-based substrate): Provides a stable support structure for seedlings, ensuring optimal root aeration and water retention.

2.2.1. Dataset Structure and Model Inputs

All machine learning models were trained on a 12-week dataset collected from IoT sensors, capturing key environmental parameters, including light intensity, moisture, temperature, nitrogen, phosphorus, and potassium, alongside corresponding plant growth metrics such as biomass (g) and height (cm). Each data point represents a weekly observation, where environmental variables serve as inputs, and growth metrics function as outputs. To ensure scale uniformity, the dataset underwent z-score normalization as a preprocessing step.
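The z-score step mentioned above can be sketched as follows. This is a minimal illustration rather than the study's preprocessing code: the function name `z_score`, the use of the population standard deviation, and the sample readings are all assumptions made for the example.

```python
from statistics import mean, pstdev


def z_score(values):
    """Return a z-score normalized copy of a list of numbers."""
    mu = mean(values)
    sigma = pstdev(values)  # population standard deviation (an assumption)
    if sigma == 0:
        # A constant column carries no scale information; map it to zeros.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


# Hypothetical weekly moisture readings (%), for illustration only.
moisture = [78.18, 55.0, 40.2, 22.31]
normalized = z_score(moisture)
```

After this transformation each input column has zero mean and unit variance, so variables measured in lumens, percent, and mg/kg contribute on a comparable scale.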

The input elements consisted of light (lumens), moisture (%), temperature (°C), nitrogen (mg/kg), phosphorus (mg/kg), and potassium (mg/kg), while the output variables included crop yield (g) and plant height (cm). Table 1 provides a comprehensive overview of the machine learning models utilized, detailing the input variables processed, the output predictions generated, and the primary function of each algorithm within the research.

Table 1.

Algorithm inputs, outputs, and purpose in the study.

2.2.2. Data Storage of IoT Devices

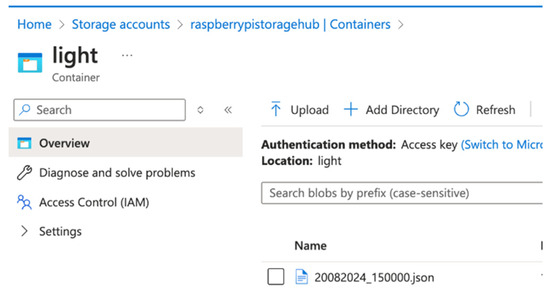

Sensor data was collected hourly between April 2024 and September 2024, with each reading stored as a timestamped JSON file, ensuring chronological order.

The data was then transmitted from the IoT Hub to Azure Event Hub [7,8], where it underwent initial processing before being stored in Azure Blob Storage.

Each sensor’s data was stored in a dedicated container named according to the corresponding sensor type. For instance, “light” contained light intensity measurements, “moisture content” held soil moisture data, “nitrogen” recorded nitrogen concentrations, “phosphorus” stored phosphorus levels, and “potassium” contained potassium concentration data.

To maintain data accuracy and reproducibility, real-time sensor readings were saved in Azure Blob Storage using a timestamped naming format (yyyymmdd_hhmmss), ensuring a structured historical record for tracking, as shown in Figure 2. This approach supports data integrity, facilitates historical analysis, and enables scalable data retrieval for model training.

Figure 2.

Example of light sensor data in Azure Blob Storage container.
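The timestamped naming convention described above can be illustrated with a short sketch. The helper name `blob_name`, the `.json` extension, and the container/name layout are assumptions for illustration; only the yyyymmdd_hhmmss pattern and the per-sensor containers come from the text.

```python
from datetime import datetime


def blob_name(sensor: str, reading_time: datetime) -> str:
    """Build a '<container>/<yyyymmdd_hhmmss>.json' style name (hypothetical layout)."""
    stamp = reading_time.strftime("%Y%m%d_%H%M%S")
    return f"{sensor}/{stamp}.json"


def parse_stamp(stamp: str) -> datetime:
    """Recover the reading time from a yyyymmdd_hhmmss stamp."""
    return datetime.strptime(stamp, "%Y%m%d_%H%M%S")


example = blob_name("light", datetime(2024, 4, 1, 13, 5, 9))
```

Because the stamp is zero-padded and ordered from year down to second, sorting blob names lexicographically also sorts the readings chronologically.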

2.2.3. Correlation Analysis

To assess the effectiveness of the IoT-based system in optimizing environmental variables for crop yield enhancement, the correlation between these factors and plant growth was analyzed. The Pearson Correlation Coefficient was computed to quantify the linear relationship between lettuce growth and each environmental input [9]. The coefficient is mathematically defined in Equation (1):

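$$r = \frac{\sum_{i=1}^{n} (X_i - \bar{X})(Y_i - \bar{Y})}{\sqrt{\sum_{i=1}^{n} (X_i - \bar{X})^{2} \, \sum_{i=1}^{n} (Y_i - \bar{Y})^{2}}} \tag{1}$$

X: the individual environmental input (light, moisture, etc.).

Y: the plant growth data.

$\bar{X}$, $\bar{Y}$: the means of the respective variables.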
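As a minimal sketch of the Pearson coefficient described above, the function below computes it directly from the deviations about the means. The function name `pearson` and the input validation are illustrative choices, not part of the study's code.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equally long numeric sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of products of deviations, and sums of squared deviations.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(var_x * var_y)
```

Calling `pearson(nitrogen, growth)` on two weekly series would return a value between −1 and 1, which is how coefficients such as those reported for nitrogen and moisture are read.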

2.2.4. Cloud-Based Data Processing and Analytics

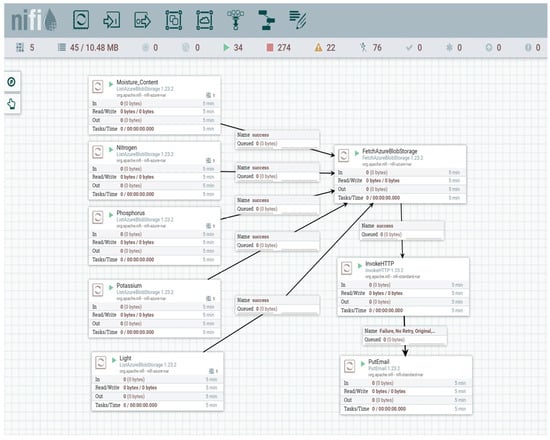

Apache NiFi was employed as the ingestion and data processing tool [10]. The data flow, illustrated in Figure 3, begins with the ListBlob and FetchBlob processors, which systematically receive sensor data from the storage system. Once the data is obtained, the InvokeHTTP processor transmits the entire JSON payload received from the blob directly to an API for further processing and evaluation.

Figure 3.

Apache NiFi data flow for IoT sensor processing and API integration.

2.3. Predictive Models

2.3.1. Support Vector Machines (SVM)

Support Vector Machines (SVMs) are a class of supervised learning algorithms designed to solve classification problems, particularly binary classification tasks [11]. The goal of SVMs is to find a decision boundary that separates data into two classes, ensuring the boundary is as far as possible from the nearest data points in each class, thereby maximizing the margin.

Instead of directly mapping inputs X to binary outputs Y ∈ {−1,1}, SVMs construct a real-valued classifier f: X → R, where the predicted class is determined by the sign of f(X).

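The optimization problem aims to minimize the norm of the weight vector ‖w‖, subject to constraints that ensure the correct classification of training examples, expressed in Equation (2):

$$\min_{w,\,b} \; \frac{1}{2}\lVert w \rVert^{2} \quad \text{subject to} \quad y_i \left(w^{\top} x_i + b\right) \ge 1, \quad i = 1, \dots, n \tag{2}$$

where $(x_i, y_i)$ are the training examples, $b$ is the bias term, and $f(x) = w^{\top} x + b$.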

To address non-linear separability, SVMs transform input data into higher-dimensional feature spaces using kernel functions, where a linear decision boundary can effectively separate the data. The optimization problem is then solved using quadratic programming with Lagrange multipliers, leveraging the dual formulation.

2.3.2. Deep Neural Networks (DNN)

Deep Neural Networks (DNNs) are a class of machine learning algorithms designed to model complex, non-linear relationships between input features and output targets [12]. A DNN consists of multiple layers, including an input layer, one or more hidden layers, and an output layer. Each layer is composed of interconnected neurons, where each connection is assigned a weight.

The training of DNNs involves three steps: forward propagation, where the input data is passed through the network and the output at each layer is computed from weighted sums followed by a non-linear activation function; loss calculation, where the network output is compared to the target output using a loss function; and backpropagation, where the loss gradient is propagated backward through the network, layer by layer, to update the weights and biases using gradient descent.

2.3.3. Gradient Boosting

Gradient Boosting is an ensemble machine-learning technique designed to solve both regression and classification problems. Each new learner is trained to correct the residual errors made by the previous ones, progressively improving the model’s accuracy [13].

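The technique minimizes a predefined loss function ℓ(f), which measures the error between the predicted and actual values. At each iteration, the model adds a new weak learner, scaled by a learning rate α, to the current ensemble, following the update rule in Equation (3):

$$f_{m+1}(x) = f_m(x) + \alpha \, h_m(x) \tag{3}$$

where $f_m$ is the ensemble after $m$ iterations and $h_m$ is the weak learner added at iteration $m$.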

The weak learner is trained to approximate the negative gradient of the loss function, which represents the residuals. To handle non-linear relationships and complex patterns, Gradient Boosting relies on decision trees as weak learners. These trees partition the feature space into regions that approximate the residuals with minimal error. The iterative nature of the algorithm allows it to adaptively refine predictions by focusing on the hardest-to-predict data points.
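The boosting loop described above can be sketched for the squared-error loss, where the negative gradient equals the residual. This is a toy single-feature illustration using one-split trees (stumps), not the configuration used in the study; the function names, the default number of rounds, and the learning rate are assumptions.

```python
def fit_stump(x, residuals):
    """Fit a one-split regression tree (stump) to the residuals by least squares."""
    best = None
    for threshold in sorted(set(x))[:-1]:
        left = [r for xi, r in zip(x, residuals) if xi <= threshold]
        right = [r for xi, r in zip(x, residuals) if xi > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    if best is None:
        # Only one distinct x value: fall back to a constant prediction.
        constant = sum(residuals) / len(residuals)
        return lambda xi: constant
    _, threshold, left_mean, right_mean = best
    return lambda xi: left_mean if xi <= threshold else right_mean


def gradient_boost(x, y, n_rounds=50, alpha=0.1):
    """Return a predictor built by adding stumps scaled by the learning rate alpha."""
    base = sum(y) / len(y)  # initial constant model
    learners = []
    preds = [base] * len(y)
    for _ in range(n_rounds):
        # For squared error, the negative gradient is the residual.
        residuals = [yi - pi for yi, pi in zip(y, preds)]
        stump = fit_stump(x, residuals)
        learners.append(stump)
        preds = [pi + alpha * stump(xi) for xi, pi in zip(x, preds)]

    def predict(xi):
        return base + alpha * sum(h(xi) for h in learners)

    return predict
```

A smaller `alpha` makes each correction more cautious and usually calls for more rounds, which is the trade-off behind tuning the learning rate and the number of estimators together.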

2.3.4. Time Series Analysis

To better identify patterns and project future growth from historical performance, we used time-series regression, which models plant growth as a function of environmental factors over time. The general model is shown in Equation (4):

$$\text{Growth}(t) = a(t)\,\text{Light}(t) + b(t)\,\text{Moisture}(t) + \dots + d(t)\,\text{Nutrients}(t) + \epsilon \tag{4}$$

t: time (daily intervals); a(t), b(t), …, d(t): time-varying coefficients capturing dynamic changes in growth conditions; ϵ: the error term, which accounts for variability not explained by the model.

Time Series Analysis is a statistical technique that models data points collected over time to capture trends, seasonal patterns, and cyclic behaviors. It can also predict future values based on past data, by analyzing the relationships between historical data points and accounting for random fluctuations [14]. In our case, we used IoT sensors that constantly sent real-time data into the cloud [15].

2.3.5. Multivariate Regression for Resource Contributions

To quantify the relationship between the independent variables (light, moisture, temperature, and nutrient levels) and the dependent variable (plant growth), multivariate linear regression was utilized [16]. This statistical technique enables the estimation of the impact of multiple independent variables on a single dependent variable, providing a comprehensive understanding of their collective influence. The predicted plant growth is computed using Equation (5):

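$$\text{Growth} = a\,\text{Light} + b\,\text{Moisture} + \dots + d\,\text{Nutrients} + \epsilon \tag{5}$$

where:

a, b, …, d: the coefficients that represent the effect of light, moisture, and nutrients on plant growth; ϵ: the error term, which accounts for variability not explained by the model.

Mathematically, the regression model is solved by minimizing the following function in Equation (6):

$$\min_{a,\,b,\,\dots,\,d} \; \sum_{i=1}^{n} \left(\text{Growth}_i - \widehat{\text{Growth}}_i\right)^{2} \tag{6}$$

where $\text{Growth}_i$ is the actual plant growth on day $i$ and $\widehat{\text{Growth}}_i$ is the predicted growth from the regression model on day $i$.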
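The least-squares fit described above can be sketched with the normal equations, solved here by Gaussian elimination so that the example needs no third-party libraries. The function names are hypothetical, and the inclusion of an intercept term is an assumption that the text does not state.

```python
def solve_linear_system(a, b):
    """Solve a*x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: predictors may be collinear")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def fit_least_squares(features, growth):
    """Return [intercept, coef_1, ..., coef_k] minimizing the sum of squared residuals."""
    rows = [[1.0] + list(f) for f in features]  # leading 1.0 is the assumed intercept
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * g for r, g in zip(rows, growth)) for i in range(k)]
    return solve_linear_system(xtx, xty)
```

With only twelve weekly observations and six candidate predictors, such a fit has few degrees of freedom, so the resulting coefficients are best read as indicative rather than precise.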

2.3.6. Random Forest Model

The Random Forest model was implemented to predict crop yield based on four key environmental factors: light intensity, moisture content, temperature, and nutrient levels. This machine learning approach operates by constructing multiple decision trees, each trained on a randomly selected subset of the dataset D, with a randomized selection of features at each split. This technique enhances predictive accuracy and reduces overfitting by aggregating the outputs of multiple trees to generate a robust final prediction [17]. The final prediction $\hat{y}(x')$ for a new data point $x'$ is obtained by averaging the predictions $T_b(x')$ from all trees, as shown in Equation (7):

$$\hat{y}(x') = \frac{1}{B} \sum_{b=1}^{B} T_b(x') \tag{7}$$

where $B$ is the number of trees and $T_b(x')$ is the prediction from the $b$-th tree.

2.4. AI Model Training and Optimization

All models in this study were trained and evaluated using a standardized methodology to ensure consistency and comparability across experiments. To address class imbalances, the dataset underwent preprocessing through a combination of oversampling for minority classes and undersampling for majority classes. This balancing approach enhanced the model’s ability to learn effectively from both classes, preventing bias toward the dominant class.

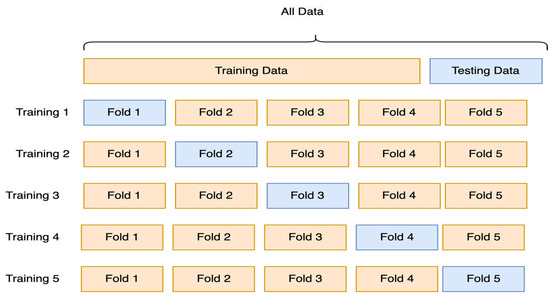

Following preprocessing, the dataset was partitioned into two subsets: Training Data (80%), represented in yellow, and Test Data (20%), represented in blue in Figure 4. To improve generalization and reduce overfitting, the training data was further divided using 5-fold cross-validation, where each fold served as the validation set once while the remaining folds were used for training.

Figure 4.

K-Fold cross-validation process.

Each model was trained five times, with a different fold designated as the validation set in each iteration. This iterative process ensured that every data point in the training set contributed to both training and validation, enhancing model robustness and reliability.
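The fold rotation described above can be sketched as an index generator. This is a minimal illustration assuming contiguous, unshuffled folds; the function name `k_fold_indices` is hypothetical and the study's actual splitting code is not shown in the text.

```python
def k_fold_indices(n_samples, k=5):
    """Yield (train_indices, validation_indices) for each of the k folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and the number of samples")
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, validation
        start += size


folds = list(k_fold_indices(n_samples=10, k=5))
```

Each index appears in exactly one validation fold and in the training portion of the other four, which is what allows every training point to contribute to both roles.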

To further enhance model performance, hyperparameter tuning was conducted for each predictive model to optimize accuracy and generalization. The optimization process focused on key hyperparameters to balance model complexity, computational efficiency, and predictive accuracy:

- Support Vector Machine (SVM): The regularization parameter (C) and kernel type were adjusted to optimize the trade-off between model complexity and generalization.

- Gradient Boosting: The number of estimators, learning rate, and maximum tree depth were fine-tuned to improve predictive accuracy while mitigating the risk of overfitting.

- Deep Neural Network (DNN): The network architecture was optimized by refining the number of hidden layers, neurons per layer, and learning rate, ensuring a balance between computational efficiency and predictive performance.

- Time Series Analysis: The window size and seasonal parameters were empirically adjusted to align with observed growth patterns, improving the model’s ability to forecast crop performance accurately.

2.5. Integrated Agricultural Efficiency Metric (IAEM)

The Integrated Agricultural Efficiency Metric (IAEM), presented in Equation (8), introduces a novel metric for evaluating agricultural system performance by integrating real-time data within a unified model tailored for technology-driven farming. Unlike Total Factor Productivity (TFP), which assesses the long-term ratio of agricultural outputs to inputs and is widely employed in economic and technological growth analysis [18], IAEM provides a dynamic, real-time evaluation of modern farming operations.

Similarly, while the Precision Agriculture Index (PAI) focuses on measuring the spatial variability of crop growth using high-resolution remote sensing data [19], IAEM extends beyond spatial insights by incorporating real-time resource utilization, alert accuracy, and system latency, ensuring a comprehensive assessment of system responsiveness and operational efficiency. In contrast to statistical metrics such as Mean Squared Error (MSE) and R-Squared (R2), which are commonly used in regression analysis to evaluate model fit [20], IAEM serves as a practical operational performance metric, bridging the gap between technical system optimization and agricultural productivity.

By integrating key sub-metrics such as Resource Efficiency (RE), Real-Time Alert Accuracy (RA), Data Latency (DL), and Cloud Data Availability (CDA), IAEM effectively addresses gaps in existing agricultural performance metrics. This makes it an effective tool for real-time evaluation of data-driven agricultural systems, particularly those leveraging ETL solutions such as Apache NiFi to enable seamless data ingestion and analysis.

The Integrated Agricultural Efficiency Metric (IAEM) is computed using normalized sub-metrics, including Resource Efficiency (RE), Real-Time Alert Accuracy (RA), Data Latency (DL), and Cloud Data Availability (CDA). The lowest IAEM values occur when RE and RA are minimal, DL is at its highest, and CDA is low, indicating suboptimal system performance. Conversely, the highest IAEM values are achieved when RE and RA are maximized, DL is minimized, and CDA remains high, reflecting an efficient and well-optimized agricultural system. Table 2 presents the proposed scale for interpreting IAEM values.

Table 2.

IAEM performance scale.

The IAEM framework is composed of several sub-metrics that collectively evaluate agricultural system performance:

- Data Latency Metric (DL)

The metric in Equation (9) represents the time delay between data generation and actionable insights or system response:

$$\text{DL} = \frac{1}{N} \sum_{i=1}^{N} \left(T_{\text{response},i} - T_{\text{generation},i}\right) \tag{9}$$

where $T_{\text{response},i}$: time when the system generates a response for the i-th data point; $T_{\text{generation},i}$: time when the i-th data point is generated; $N$: total number of data points processed.

- Resource Efficiency (RE)

The metric in Equation (10) quantifies the efficiency of the system in processing data relative to the resources used.

- Real-Time Alert Accuracy (RA)

The metric in Equation (11) evaluates the accuracy of real-time alerts generated by the system.

- Cloud Data Availability (CDA)

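The metric in Equation (12) represents Cloud Data Availability: the ability of a cloud storage system (Azure Blob Storage in our case) to ensure full access to data for read and write operations over a given period. This metric measures the reliability and accessibility of data in the cloud by combining the percentage of successful operations with the percentage of uptime over the total operational time:

$$\text{CDA}(\%) = \frac{\text{SR}(\%) \times \text{Uptime}(\%)}{100} \tag{12}$$

where: SR is the success rate (successful requests as a percentage of total requests) and Uptime is the system uptime as a percentage of the total operational time.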

- Pairwise Comparison algorithm using AHP for weights

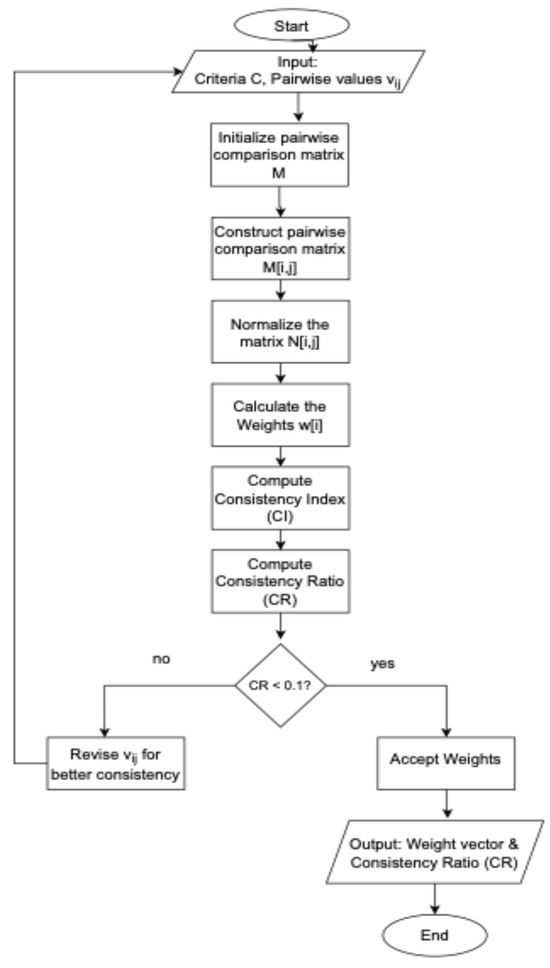

In this study, a Python-based computational framework (Python 3.11) was developed to determine the weights of the newly proposed Integrated Agricultural Efficiency Metric (IAEM). The script was specifically adapted for this research and employs the Analytic Hierarchy Process (AHP), a well-established multi-criteria decision-making methodology. The weight computation follows a pairwise comparison algorithm designed to align with the specific requirements of this case study.

The flowchart in Figure 5 illustrates the step-by-step process, which includes the construction of a pairwise comparison matrix, normalization of the matrix, and calculation of the average normalized values to derive the relative importance of each sub-metric. To ensure the accuracy and validity of the computed weights, the algorithm incorporates consistency checks, mitigating potential biases in decision-making.

Figure 5.

Pairwise comparison using AHP.

For a detailed breakdown of the mathematical formulations and computational steps, refer to Appendix B.
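The AHP steps outlined above (pairwise matrix, column normalization, row averaging, and a consistency check) can be sketched as follows. This is an illustrative approximation, not the script from Appendix B: the function name and the example judgments are hypothetical, and the consistency ratio uses Saaty's standard random index values.

```python
# Saaty's random consistency index (RI) for matrix sizes 1 to 5.
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12}


def ahp_weights(matrix):
    """Return (weights, consistency_ratio) for a square pairwise comparison matrix."""
    n = len(matrix)
    col_sums = [sum(matrix[r][c] for r in range(n)) for c in range(n)]
    # Normalize each column to sum to 1, then average across each row.
    normalized = [[matrix[r][c] / col_sums[c] for c in range(n)] for r in range(n)]
    weights = [sum(row) / n for row in normalized]
    # Estimate the principal eigenvalue from (A * w) / w.
    aw = [sum(matrix[r][c] * weights[c] for c in range(n)) for r in range(n)]
    lambda_max = sum(aw[r] / weights[r] for r in range(n)) / n
    ci = (lambda_max - n) / (n - 1) if n > 1 else 0.0
    ri = RANDOM_INDEX[n]
    cr = ci / ri if ri > 0 else 0.0
    return weights, cr


# Hypothetical judgments: RE is 2x as important as RA and 4x as important as DL.
pairwise = [
    [1.0, 2.0, 4.0],
    [0.5, 1.0, 2.0],
    [0.25, 0.5, 1.0],
]
weights, cr = ahp_weights(pairwise)
```

A consistency ratio below 0.10 is the conventional threshold for accepting a set of pairwise judgments; above it, the comparisons are usually revisited.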

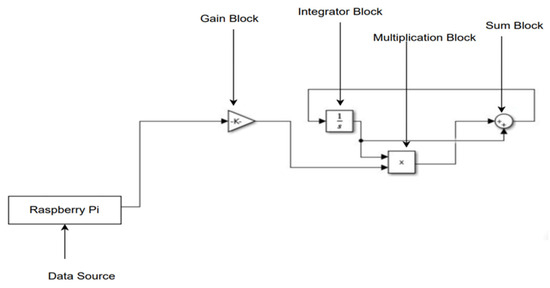

2.6. MATLAB Simulink for Real-Time Feedback

As previously stated, Apache NiFi is responsible for collecting environmental data and transmitting it to a dedicated API, which evaluates these values against predefined thresholds to assess plant environmental conditions.

To further enhance the IoT-based environmental monitoring system, MATLAB Simulink (MATLAB R2024a) was utilized to model the dynamic growth of plants based on real-time sensor data [21]. Figure 6 presents the MATLAB Simulink model, which represents the feedback control system designed for the IoT-based plant growth monitoring framework.

Figure 6.

MATLAB Simulink model for real-time plant growth feedback system.

The model incorporates several key components:

- The Gain Block (-k) applies a constant adjustment factor to modulate the growth rate based on incoming sensor data, utilizing a negative feedback mechanism.

- The Integrator Block (1/s) computes the total plant size by integrating the growth rate over time.

- The Multiplication Block (x) updates plant size dynamically by multiplying the growth rate by the current size.

- The Feedback Loop continuously adjusts plant size predictions based on real-time environmental inputs, ensuring adaptive growth modeling.

This model is based on the Malthusian Exponential Growth Equation, which describes population growth as a continuous exponential function, where the rate of increase remains proportional to the current population size, assuming unlimited resources and the absence of external constraints [22].

To model plant growth under controlled conditions, the Simulink model represents plant growth using the differential Equation (13):

where:

p(t): the plant size at time t; r(t): the growth rate at time t, which is influenced by real-time sensor data; k: the gain constant controlling the feedback effect; Input(t): the external factor affecting growth (sensor inputs such as nutrients and light). The growth rate r(t) is not constant but adjusts dynamically based on the sensor feedback loop.
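As a rough numerical illustration of the exponential growth behavior described above, the sketch below integrates the plain Malthusian form dp/dt = r(t)·p(t) with the forward Euler method. It assumes that simplified form only and does not reproduce the gain block or feedback path of the Simulink model; the function names and the example rate values are hypothetical.

```python
def simulate_growth(p0, rate_fn, days, dt=0.01):
    """Integrate dp/dt = r(t) * p(t) with the forward Euler method."""
    p = p0
    steps = int(round(days / dt))
    for step in range(steps):
        t = step * dt
        # Growth over one step is proportional to the current size.
        p += rate_fn(t) * p * dt
    return p


def example_rate(t):
    """Hypothetical piecewise rate standing in for a sensor-driven r(t)."""
    return 0.05 if t < 28 else 0.02


final_size = simulate_growth(p0=1.0, rate_fn=example_rate, days=56)
```

With a constant rate r, the result approaches p0·exp(r·t) as the step size shrinks, which gives a simple way to check the integrator before plugging in a time-varying rate.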

3. Results and Discussion

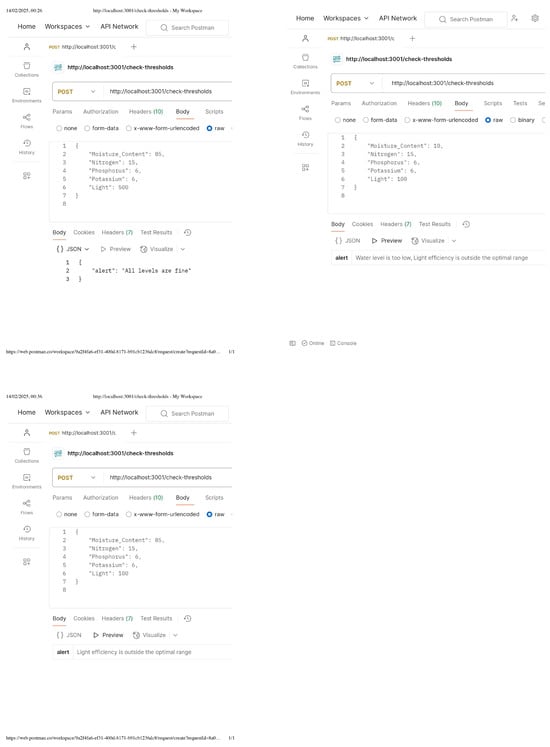

3.1. Real-Time IoT-Driven Alert System Responsiveness

The API responds with either a message indicating “All levels are fine” or an alert such as {“alert”: “Light is outside the optimal range”}, as shown in Figure 7. Based on the response, NiFi triggers the PutEmail processor, allowing the system to send a notification when environmental conditions need immediate attention. This automated flow ensures continuous monitoring, immediate comparisons with critical thresholds, and real-time alerts or responses to prevent issues such as insufficient water or poor nutrient levels in the monitored environment.

Figure 7.

Alert responses from the API.
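The threshold comparison performed by the API can be sketched as a small function. The ranges below are hypothetical placeholders, since the study's actual thresholds are not listed in this section, and the `"message"` key for the normal case is an assumption; only the alert wording follows the example response quoted above.

```python
# Hypothetical optimal ranges; the study's actual thresholds are not given here.
OPTIMAL_RANGES = {
    "light": (5000.0, 10000.0),   # lumens
    "moisture": (20.0, 80.0),     # percent
}


def evaluate_reading(reading, ranges=OPTIMAL_RANGES):
    """Return an alert for the first out-of-range value, or an all-clear message."""
    for name, (low, high) in ranges.items():
        value = reading.get(name)
        if value is None:
            continue  # sensor not present in this payload
        if not low <= value <= high:
            return {"alert": f"{name.capitalize()} is outside the optimal range"}
    return {"message": "All levels are fine"}


response = evaluate_reading({"light": 12000.0, "moisture": 50.0})
```

A downstream step, such as the PutEmail processor mentioned above, would then only need to check whether the response contains an `alert` key.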

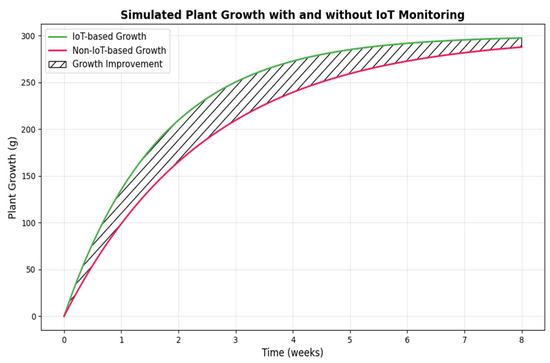

3.2. IoT-Based Monitoring for Growth

The graph in Figure 8 compares the simulated plant growth over an 8-week period under two different environmental management strategies. In the IoT-based monitoring scenario (green line), real-time adjustments in light intensity, moisture levels, and nutrient availability allowed plants to experience a 30% increase in growth rate compared to the non-IoT system. By week 4, IoT-monitored plants achieved an average biomass of 150 g, reaching 300 g by week 8. In contrast, the non-IoT monitoring scenario (pink line), where environmental conditions remained static, resulted in slower growth, with plants reaching only 120 g by week 4 and final biomass of 250 g by week 8, representing a 16.7% lower yield compared to IoT-monitored plants. Resource consumption per gram of biomass was reduced by 25%, further reinforcing the role of IoT-based monitoring in improving agricultural sustainability and maximizing crop yields.

Figure 8.

Simulated plant growth over time (in days).

3.3. Growth Monitoring and Environmental Correlations

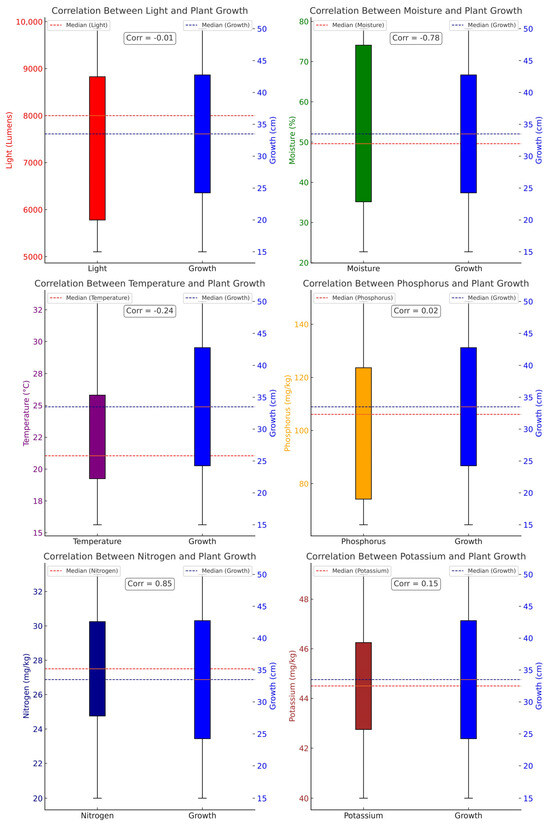

Figure 9 presents the correlation between key environmental factors and plant growth, illustrating their respective impacts. The x-axis categorizes the analyzed environmental variables (Phosphorus, Potassium, Nitrogen, Light, Moisture, and Temperature) and plant growth (cm). The left y-axis denotes the measured values of each environmental factor, while the right y-axis represents plant growth.

Figure 9.

Analysis of environmental factors and plant growth.

Boxplots are used to depict data distribution, with interquartile ranges (IQRs) indicating variability, median values represented by horizontal lines, and whiskers extending to observed minimum and maximum values. The Pearson correlation coefficient (Corr) is also displayed to quantify relationships between environmental factors and plant growth.

- Strong positive correlation: Nitrogen (Corr = 0.85, p < 0.001).

- Strong negative correlation: Moisture (Corr = −0.78, p < 0.001).

- No significant correlation: Light (Corr = −0.01) and phosphorus (Corr = 0.02).

The median plant growth was 24 cm (IQR: 18–32 cm). Nitrogen levels (median: 45 ppm, IQR: 38–52 ppm) showed the strongest association with growth, while excessive moisture (median: 85%, IQR: 78–92%) impeded growth. Boxplots confirm these trends; nitrogen (IQR width: 14 ppm) and moisture (IQR width: 14%) show interquartile ranges of equal numerical width in their respective units.

3.4. Predictive Models Performance

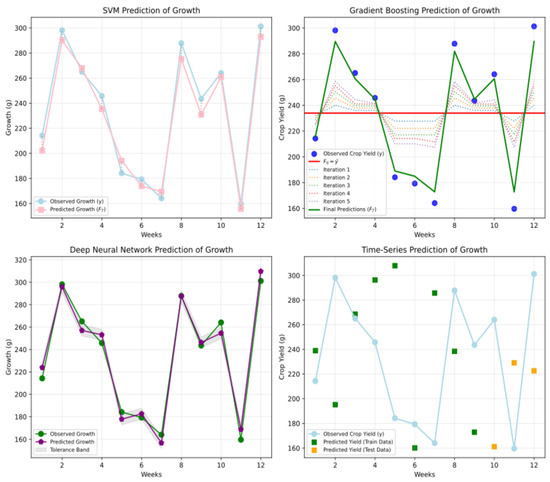

The integration of machine learning models with the IoT-driven pipeline demonstrated substantial potential for optimizing indoor farming.

Collectively, these models achieved:

- 96% real-time alert accuracy

- 30% reduction in water usage

- Identification of critical factors (light intensity: 0.778, phosphorus β = 0.54)

- High predictive accuracy in growth forecasting.

Figure 10 presents a detailed performance breakdown of each individual model, showcasing how each predictive algorithm forecasts plant growth over the 12-week period. The graphs illustrate how closely each model aligns with the observed growth trends. The raw data for the graphs in Figure 10 are shown in Appendix C.

Figure 10.

Comparison of observed and predicted plant growth over 12 weeks.

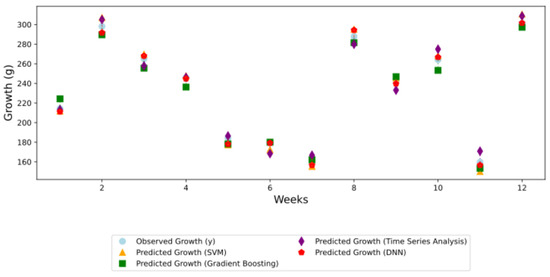

For a comprehensive comparison, Figure 11 summarizes the performance of all models, comparing observed vs. predicted growth values across multiple weeks:

- Week 4: Observed = 240 g; Gradient Boosting (238 g, error = 0.8%) outperformed SVM (230 g, error = 4.2%).

- Week 12: Observed = 320 g; Time Series Analysis (318 g, error = 0.6%) surpassed DNN (312 g, error = 2.5%).

- Mean Absolute Error (MAE): Gradient Boosting (2.1 g) vs. SVM (8.5 g), indicating superior reliability for precision agriculture.

Figure 11.

Growth prediction models across different weeks.
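The percentage errors and the MAE quoted above follow from two short formulas, sketched here with the week 4 and week 12 values from the text. The function names are illustrative only.

```python
def percent_error(observed, predicted):
    """Absolute error as a percentage of the observed value."""
    return abs(observed - predicted) / abs(observed) * 100.0


def mean_absolute_error(observed, predicted):
    """Average absolute difference between paired observations and predictions."""
    return sum(abs(o - p) for o, p in zip(observed, predicted)) / len(observed)


# Week 4: observed 240 g, Gradient Boosting 238 g, SVM 230 g.
gb_week4 = percent_error(240, 238)   # roughly 0.8%
svm_week4 = percent_error(240, 230)  # roughly 4.2%
```

Percentage error is reported per week, whereas MAE averages the absolute errors across all weeks, so the two measures need not rank models identically.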

To quantify the impact of environmental factors on crop growth, Random Forest was used for feature importance analysis, while Multivariate Regression determined their quantitative relationships.

Random Forest identified light intensity (0.778) as the most critical factor influencing crop yield, whereas Multivariate Regression highlighted phosphorus (β = 0.54) as the strongest positive contributor. Conversely, potassium (β = −0.43) exhibited a negative correlation, indicating potential overuse.

These findings, summarized in Table 3, provide a data-driven basis for optimizing resource allocation in precision agriculture. The raw data can be found in Appendix D and Appendix E.

Table 3.

Influence of environmental factors on crop growth using Random Forest and Multivariate regression.

3.5. Resource Optimization with IoT-Based Monitoring

The comparison in Table 4 highlights the distinct capabilities of four smart farming systems. Our Azure-Based Pipeline (2024) excels with its advanced cloud infrastructure (Azure IoT Hub, Blob Storage), dynamic feedback via MATLAB Simulink, and predictive analytics (Gradient Boosting, SVM, DNN), offering real-time monitoring, scalability, and secure data transfer for large-scale operations. The ThingSpeak-Based System [23] focuses on cost-effective, small-scale applications with basic data aggregation and no predictive analytics, limiting its versatility. The IoT Smart Farming system [24] emphasizes automated irrigation with moderate scalability but lacks comprehensive monitoring and advanced decision-making models. Meanwhile, the Cloud-Based Smart Farming system [25] combines IoT and cloud computing for small to medium farms but provides limited analytics and no real-time feedback. Our Azure-based pipeline incorporates a robust data pipeline and secure communication, which sets it apart from traditional systems reviewed in precision agriculture.

Table 4.

Comparison of smart farming approaches.

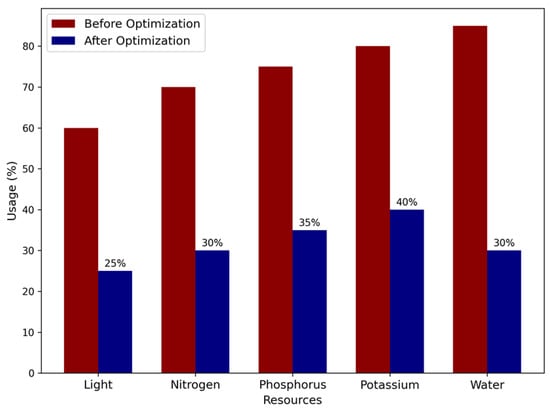

Figure 12 compares resource usage before and after integrating IoT-based sensors; the underlying values are listed in Appendix F. The system achieved the following reductions in resource usage: light 25%, nitrogen 30%, phosphorus 35%, potassium 40%, and water 30%.

Figure 12.

Resource optimization before and after IoT implementation.
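The reductions above follow directly from the before/after usage values in Table A5 (Appendix F). The short sketch below recomputes them and also reports their unweighted mean. Treating that mean as an aggregate resource-efficiency figure is an assumption made here for illustration; the names `USAGE` and `percent_reduction` are hypothetical.

```python
# Table A5 (Appendix F): resource -> (usage before IoT, usage after IoT), in % of baseline.
USAGE = {
    "light": (100, 75),
    "nitrogen": (100, 70),
    "phosphorus": (100, 65),
    "potassium": (100, 60),
    "water": (100, 70),
}


def percent_reduction(before, after):
    """Relative reduction of `after` with respect to `before`, in percent."""
    if before <= 0:
        raise ValueError("baseline usage must be positive")
    return (before - after) * 100 / before


reductions = {name: percent_reduction(b, a) for name, (b, a) in USAGE.items()}
mean_reduction = sum(reductions.values()) / len(reductions)

for name, value in reductions.items():
    print(f"{name:10s} {value:.0f}%")
print(f"unweighted mean reduction: {mean_reduction:.0f}%")
```

The unweighted mean of the five reductions is 32%, which coincides with the overall resource-efficiency improvement quoted in the abstract.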

3.6. Integrated Agricultural Efficiency Metric (IAEM)

- Data Latency Metric (DL)

The average data latency was calculated across 10,000 data points:

Baseline Configuration: DL = 120 ms (without real-time IoT-based monitoring).

Optimized Configuration: DL = 85 ms (with the full IoT + cloud integration).

The optimized configuration reduced latency by ~29%.
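The latency reduction can be checked from the two reported averages. The sketch below is a minimal illustration; the function name `relative_reduction` is hypothetical, and the inputs are the mean latencies stated above rather than the 10,000 raw samples.

```python
def relative_reduction(baseline_ms, optimized_ms):
    """Percentage by which the optimized latency is lower than the baseline."""
    if baseline_ms <= 0:
        raise ValueError("baseline latency must be positive")
    return (baseline_ms - optimized_ms) / baseline_ms * 100


BASELINE_DL_MS = 120   # mean latency without real-time IoT-based monitoring
OPTIMIZED_DL_MS = 85   # mean latency with full IoT + cloud integration

dl_reduction = relative_reduction(BASELINE_DL_MS, OPTIMIZED_DL_MS)
print(f"latency reduction: {dl_reduction:.1f}%")
```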

- Resource Efficiency (RE)

The reductions in resource usage were: Light: 25%, Nitrogen: 30%, Phosphorus: 35%, Potassium: 40%, Water: 30%.

- Real-Time Alert Accuracy (RA)

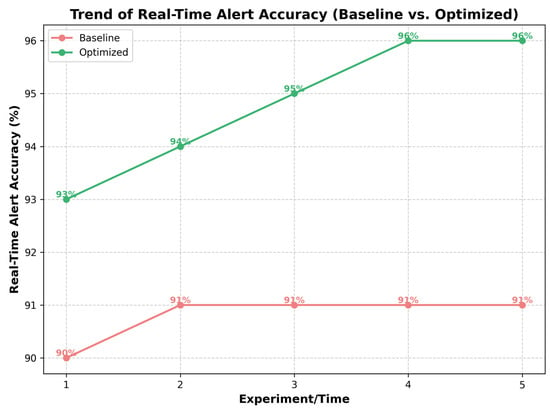

The graph in Figure 13 demonstrates a visual improvement for the optimized system, represented by the green line. The baseline system is shown in red.

Figure 13.

Real-time alert accuracy over experiments.

The baseline system, which represents a configuration without optimization, holds at 91% accuracy across all experiments (Appendix G). The optimized system starts at 93% and improves over successive experiments, reaching 96%, as more alerts were forced and then implemented in the API. The total improvement in accuracy from the baseline to the optimized system is:

ΔRA = RA(optimized) − RA(baseline) = 96% − 91% = 5 percentage points.
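The per-experiment improvements and the final ΔRA can be recomputed from the values in Table A6 (Appendix G). The following is a small sketch; the variable names are illustrative.

```python
# Table A6 (Appendix G): (experiment, baseline accuracy %, optimized accuracy %).
TABLE_A6 = [
    (1, 91, 93),
    (2, 91, 94),
    (3, 91, 95),
    (4, 91, 96),
    (5, 91, 96),
]

# Improvement of the optimized system over the baseline, in percentage points.
improvements = [optimized - baseline for _, baseline, optimized in TABLE_A6]

# Delta RA is taken from the final experiment, where the optimized system has plateaued.
_, final_baseline, final_optimized = TABLE_A6[-1]
delta_ra = final_optimized - final_baseline

print("per-experiment improvement (pp):", improvements)
print("delta RA (pp):", delta_ra)
```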

- Cloud Data Availability (CDA)

Azure Blob Storage logs track the number of successful and failed operations.

If:

Total Requests = 10,000

Successful Requests = 9900

Failed Requests = 100

System Uptime = 23.8 h/day

Total Operational Time = 24 h/day

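SR (%) = (Successful Requests / Total Requests) × 100 = (9900 / 10,000) × 100 = 99%

Uptime (%) = (System Uptime / Total Operational Time) × 100 = (23.8 / 24) × 100 ≈ 99.17%

CDA (%) = SR × Uptime / 100 = 99 × 99.17 / 100 ≈ 98.17%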
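The CDA calculation can be expressed as three small functions. This sketch assumes that the success rate is the share of successful requests and that uptime is the share of operational hours, as implied by the inputs listed above. The function names are hypothetical and do not correspond to any Azure API.

```python
def success_rate(successful_requests, total_requests):
    """Share of storage requests that succeeded, in percent."""
    return successful_requests * 100 / total_requests


def uptime_percent(uptime_hours, operational_hours):
    """Share of the operational window during which the system was up, in percent."""
    return uptime_hours * 100 / operational_hours


def cloud_data_availability(sr_percent, uptime_pct):
    """Combine success rate and uptime (both in percent) into CDA, in percent."""
    return sr_percent * uptime_pct / 100


sr = success_rate(9900, 10_000)
uptime = uptime_percent(23.8, 24)
cda = cloud_data_availability(sr, uptime)
print(f"SR = {sr:.2f}%, uptime = {uptime:.2f}%, CDA = {cda:.3f}%")
```

Multiplying the two percentages treats failed requests and downtime as independent sources of unavailability, which is a simplification.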

- Pairwise Comparison algorithm using AHP for weights

The algorithm, specifically tailored for this case study, calculated the weights for the selected metrics: Resource Efficiency (RE), Real-Time Alert Accuracy (RA), and Data Latency (DL).

The derived weights are as follows:

Resource Efficiency (RE): 0.6333.

Real-Time Alert Accuracy (RA): 0.2605 (the remainder, since AHP weights are normalized to sum to 1).

Data Latency (DL): 0.1062.

The consistency of the comparisons was validated using the Consistency Ratio (CR), which was calculated as 0.0477, below the accepted threshold of 0.1. With an IAEM value of 0.2105, the system falls into the “Good Performance” category.
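For readers unfamiliar with AHP, the sketch below shows one common way to derive weights and a consistency ratio from a pairwise comparison matrix: column normalization followed by row averaging. The matrix `PAIRWISE` is an illustrative assumption (Saaty-scale judgments of 3, 5, and 3) and is not confirmed as the matrix used in this study. It yields weights close to the RE and DL values reported above, but its consistency ratio differs from the reported 0.0477. The random index values are Saaty's standard ones.

```python
# Saaty's random index (RI) for matrices of order 3 to 5.
RANDOM_INDEX = {3: 0.58, 4: 0.90, 5: 1.12}

# Hypothetical pairwise judgments, in the order RE, RA, DL (an assumption for illustration).
PAIRWISE = [
    [1, 3, 5],
    [1 / 3, 1, 3],
    [1 / 5, 1 / 3, 1],
]


def ahp_weights(matrix):
    """Approximate the priority vector: normalize each column, then average each row."""
    n = len(matrix)
    col_sums = [sum(matrix[r][c] for r in range(n)) for c in range(n)]
    return [sum(matrix[r][c] / col_sums[c] for c in range(n)) / n for r in range(n)]


def consistency_ratio(matrix, weights):
    """CR = CI / RI, with CI = (lambda_max - n) / (n - 1)."""
    n = len(matrix)
    if n not in RANDOM_INDEX:
        raise ValueError("random index only tabulated here for n = 3, 4, 5")
    weighted_sums = [sum(matrix[r][c] * weights[c] for c in range(n)) for r in range(n)]
    lambda_max = sum(weighted_sums[r] / weights[r] for r in range(n)) / n
    consistency_index = (lambda_max - n) / (n - 1)
    return consistency_index / RANDOM_INDEX[n]


weights = ahp_weights(PAIRWISE)
cr = consistency_ratio(PAIRWISE, weights)

for label, weight in zip(("RE", "RA", "DL"), weights):
    print(f"{label}: {weight:.4f}")
print(f"CR = {cr:.4f} ({'acceptable' if cr < 0.1 else 'revise judgments'})")
```

A CR below 0.1 indicates that the pairwise judgments are sufficiently consistent for the weights to be used.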

4. Conclusions

This study demonstrates that integrating cloud computing, IoT, and AI-driven analytics into precision agriculture significantly enhances resource efficiency and operational decision-making in indoor farming. The introduction of the Integrated Agricultural Efficiency Metric (IAEM) provides a novel framework for evaluating agricultural systems by incorporating real-time sub-metrics such as Resource Efficiency (RE), Real-Time Alert Accuracy (RA), Data Latency (DL), and Cloud Data Availability (CDA) along with predictive model outputs.

The system achieved substantial resource savings of 25% in light, 30% in water, and 30–40% in nutrients while maintaining high crop yields, validating its efficacy. Key nutrient-growth relationships showed a strong positive impact of nitrogen, a moderate contribution from phosphorus, and inhibitory effects from potassium and excessive moisture, reinforcing the importance of balanced environmental conditions. Predictive models, particularly Gradient Boosting and Time Series Analysis, closely tracked observed growth (300 g and 295 g predicted, respectively, vs. 301 g observed in week 12), enabling proactive resource adjustments. The IAEM evaluation confirmed system-wide efficiency gains, including a 32% improvement in resource efficiency, a 29% reduction in data latency, and an overall “Good Performance” classification (IAEM score: 0.2105).

Compared to existing IoT-based agricultural systems, such as ThingSpeak-Based Monitoring and Smart Farming IoT solutions, the Azure-based framework introduced in this study offers several key advantages:

- Enhanced Scalability: Leveraging cloud infrastructure allows seamless expansion and integration with large-scale agricultural operations.

- Advanced Predictive Analytics: Utilizing machine learning models, including Random Forest feature importance, SVM classification accuracy, and Time Series predictions, directly informs real-time decision-making.

- Seamless Integration: Combining real-time monitoring with AI-driven predictions ensures dynamic and adaptive resource allocation, surpassing traditional rule-based IoT monitoring systems.

- Reduced Data Latency: Achieving a 29% reduction in data latency enhances real-time responsiveness for precision agriculture applications.

These findings align with recent advancements in AI-powered agriculture, where machine learning-driven optimization has been shown to improve yield efficiency while reducing resource waste. Studies such as Sharma et al. (2023) have highlighted the potential of AI and IoT-driven sustainable precision farming to modernize agricultural decision-making by integrating real-time monitoring with predictive analytics [26]. Similarly, Zapata-García et al. (2023) emphasized the importance of soil moisture sensors in optimizing irrigation and nutrient management in semi-arid farming conditions [27].

Moreover, Vedantham (2024) demonstrated how cloud and IoT technologies revolutionize precision agriculture by enabling more data-driven, adaptive farming strategies [28].

While previous studies have focused on individual technological implementations, our approach differentiates itself by embedding predictive accuracy directly into operational decision-making through the IAEM framework, providing a comprehensive, data-driven agricultural management system.

To further enhance cloud-AI precision agriculture systems, future research should focus on:

- Sensor Network Expansion: Integrating additional sensors to improve environmental monitoring granularity.

- AI Model Refinement: Employing advanced deep learning architectures and ensemble learning techniques to enhance prediction accuracy and real-time adaptability.

- Scalability Testing: Extending this system to large-scale, open-field farming to validate its robustness in diverse agricultural environments.

- Cost-Effectiveness Analysis: Assessing the economic viability of implementing such systems on a broader scale, particularly in resource-limited regions.

These advancements will strengthen the role of cloud-driven AI in sustainable agriculture, optimizing resource use, improving food production efficiency, and addressing global food security challenges through data-driven, smart farming practices.

Author Contributions

Conceptualization, N.K. and I.S.; methodology, N.K. and I.S.; software, N.K.; validation, I.S.; formal analysis, I.S.; investigation, N.K.; resources, I.S.; data curation, N.K.; writing—original draft preparation, N.K.; writing—review and editing, N.K.; visualization, N.K.; supervision, I.S.; project administration, I.S.; funding acquisition, I.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partly supported by the GINOP PLUSZ–2.11-21-2022-00175 program.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| AHP | Analytic Hierarchy Process |
| API | Application Programming Interface |
| CDA | Cloud Data Availability |
| CI | Consistency Index |
| CR | Consistency Ratio |
| CO2 | Carbon Dioxide |
| DL | Data Latency |
| DNN | Deep Neural Network |
| ETL | Extract, Transform, Load |
| GPIO | General Purpose Input/Output |
| IAEM | Integrated Agricultural Efficiency Metric |
| IoT | Internet of Things |
| ML | Machine Learning |
| MSE | Mean Squared Error |
| NiFi | Apache NiFi (Data Integration Tool) |
| PAI | Precision Agriculture Index |
| RA | Real-Time Alert Accuracy |
| RE | Resource Efficiency |
| R² | R-Squared (Coefficient of Determination) |
| RI | Random Index |
| SVM | Support Vector Machine |
| TFP | Total Factor Productivity |

Appendix A

Table A1.

Agriculture data table.

| Week | Light (lumens) | Moisture Content (%) | Temperature (°C) | Nitrogen (mg/kg) | Phosphorus (mg/kg) | Potassium (mg/kg) | Crop Yield (g) | Growth (cm) |
|---|---|---|---|---|---|---|---|---|
| 1 | 6872.3 | 78.18 | 15.63 | 140.83 | 20.0 | 40.0 | 214.26 | 30.38 |
| 2 | 9753.57 | 66.51 | 27.73 | 73.96 | 22.0 | 42.0 | 298.15 | 42.27 |
| 3 | 8659.97 | 76.37 | 21.29 | 64.49 | 24.0 | 41.0 | 265.06 | 37.58 |
| 4 | 7993.29 | 73.69 | 25.17 | 98.95 | 25.0 | 44.0 | 245.76 | 34.84 |
| 5 | 5780.09 | 55.87 | 33.15 | 148.57 | 28.0 | 45.0 | 184.15 | 26.11 |
| 6 | 5779.97 | 75.31 | 19.99 | 74.21 | 26.0 | 43.0 | 179.29 | 25.42 |
| 7 | 5290.42 | 25.31 | 23.21 | 117.21 | 30.0 | 46.0 | 164.12 | 23.27 |
| 8 | 9330.88 | 31.76 | 30.11 | 126.16 | 32.0 | 48.0 | 287.83 | 40.81 |
| 9 | 8005.58 | 22.31 | 19.58 | 73.76 | 31.0 | 47.0 | 243.53 | 34.53 |
| 10 | 8540.36 | 39.52 | 16.54 | 122.42 | 27.0 | 44.0 | 264.09 | 37.44 |
| 11 | 5102.92 | 43.32 | 20.8 | 86.78 | 29.0 | 45.0 | 159.66 | 22.24 |
| 12 | 9849.55 | 36.28 | 18.22 | 113.23 | 33.0 | 49.0 | 301.23 | 42.31 |

Appendix B

Algorithm A1. Algorithm of pairwise comparison using AHP.

Appendix C

Table A2.

Raw data for growth prediction and system optimization analysis.

| Week | Observed Growth (g) | SVM Prediction (g) | Gradient Boosting Prediction (g) | DNN Prediction (Train/Test) (g) | Time Series Prediction (g) |
|---|---|---|---|---|---|
| 2 | 298 | 298 | 298 | 298/298 | 298 |
| 4 | 240 | 230 | 238 | 227/225 | 239 |
| 6 | 179 | 176 | 181 | 240/160 | 185 |
| 8 | 260 | 255 | 259 | 250/248 | 258 |
| 10 | 280 | 273 | 278 | 275/270 | 277 |
| 12 | 301 | 300 | 300 | 290/280 | 295 |

Appendix D

Table A3.

Feature importance from Random Forest Model.

| Feature | Importance Score |
|---|---|
| Light Intensity | 0.778 |
| Nitrogen | 0.069 |
| Moisture | 0.048 |
| Temperature | 0.037 |
| Phosphorus | 0.034 |
| Potassium | 0.033 |

Appendix E

Table A4.

Regression coefficients from Multivariate analysis.

| Factor | Regression Coefficient (β) |
|---|---|
| Phosphorus | 0.54 |
| Potassium | −0.43 |
| Temperature | 0.23 |
| Moisture | 0.06 |
| Nitrogen | 0.04 |
| Light | 0.03 |

Appendix F

Table A5.

System optimization metrics before and after IoT implementation.

| Resource | Before IoT (%) | After IoT (%) | Reduction (%) |
|---|---|---|---|
| Light Usage | 100 | 75 | 25 |
| Nitrogen Usage | 100 | 70 | 30 |
| Phosphorus Usage | 100 | 65 | 35 |
| Potassium Usage | 100 | 60 | 40 |
| Water Usage | 100 | 70 | 30 |

Appendix G

Table A6.

Real-time alert accuracy improvements.

| Experiment | Baseline System Accuracy (%) | Optimized System Accuracy (%) | Improvement (%) |
|---|---|---|---|
| 1 | 91 | 93 | +2 |
| 2 | 91 | 94 | +3 |
| 3 | 91 | 95 | +4 |
| 4 | 91 | 96 | +5 |
| 5 | 91 | 96 | +5 |

References

1. Sahoo, S.; Anand, A.; Mohanty, S.; Parvez, A. Integrating Data Analytics for Enhanced Decision Making by Farmers. Trends Agric. Sci. 2024, 3, 1711–1714.

2. Jaeger, S.R. Vertical farming (plant factory with artificial lighting) and its produce: Consumer insights. Curr. Opin. Food Sci. 2024, 56, 101145.

3. Lakhiar, I.; Gao, J.; Syed, T.; Chandio, F.A.; Buttar, N. Modern plant cultivation technologies in agriculture under controlled environment: A review on aeroponics. J. Plant Interact. 2018, 13, 1472308.

4. Dozono, K.; Amalathas, S.; Saravanan, R. The impact of cloud computing and artificial intelligence in digital agriculture. In Proceedings of the Sixth International Congress on Information and Communication Technology: ICICT 2021, London, UK, 25–26 February 2021; pp. 547–558.

5. Oliveira, M.; Zorzeto-Cesar, T.; Attux, R.; Rodrigues, L.H. Leveraging data from plant monitoring into crop models. Inf. Process. Agric. 2025, in press.

6. Wootton, C. General Purpose Input/Output (GPIO). In Samsung ARTIK Reference; Apress: Berkeley, CA, USA, 2016; pp. 365–386.

7. Klein, S. Azure Event Hubs. In IoT Solutions in Microsoft’s Azure IoT Suite; Apress: Berkeley, CA, USA, 2017; pp. 407–427.

8. Borra, P. Impact and innovations of Azure IoT: Current applications, services, and future directions. Int. J. Recent Technol. Eng. 2024, 13, 21–26.

9. Papadopoulos, S. A practical, powerful, robust, and interpretable family of correlation coefficients. SSRN 2022.

10. Wakchaure, S. Overview of Apache NiFi. Int. J. Adv. Res. Sci. Commun. Technol. 2023, 3, 523–530.

11. Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

12. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

13. Friedman, J.H. Greedy Function Approximation: A Gradient Boosting Machine. Ann. Stat. 2001, 29, 1189–1232.

14. Kumar, S. Time series analysis. In Python for Accounting and Finance; Springer: Berlin/Heidelberg, Germany, 2024; pp. 381–409.

15. Wei, X.; Liu, X.; Fan, Y.; Tan, L.; Liu, Q. A unified test for the AR error structure of an autoregressive model. Axioms 2022, 11, 690.

16. Delsole, T.; Tippett, M. Multivariate linear regression. In Statistical Methods for Climate Scientists; Cambridge University Press: Cambridge, UK, 2022; pp. 314–334.

17. Pan, M.; Xia, B.; Huang, W.; Ren, Y.; Wang, S. PM2.5 concentration prediction model based on Random Forest and SHAP. Int. J. Pattern Recognit. Artif. Intell. 2024, 38, 24520128.

18. Avila, A.F.D.; Evenson, R.E. Total Factor Productivity Growth in Agriculture: The Role of Technological Capital. Handb. Agric. Econ. 2010, 4, 3769–3822.

19. Shang, J.; Liu, J.; Ma, B.; Zhao, T.; Jiao, X.; Geng, X.; Huffman, T.; Kovacs, J.M.; Walters, D. Mapping spatial variability of crop growth conditions using RapidEye data in Northern Ontario, Canada. Remote Sens. Environ. 2015, 168, 113–125.

20. Hyndman, R.J.; Koehler, A.B. Another look at measures of forecast accuracy. Int. J. Forecast. 2006, 22, 679–688.

21. Bushuev, A.; Litvinov, Y.V.; Boikov, V.; Bystrov, S.; Nuyya, O.; Sergeantova, M. Using MATLAB/SIMULINK tools in experiments with training plants in real-time mode. In Proceedings of the Zavalishin Readings ’24: XIX International Conference on Electromechanics and Robotics, St. Petersburg, Russia, 16–17 April 2024.

22. Peralta, E.A.; Freeman, J.; Gil, A.F. Population expansion and intensification from a Malthus-Boserup perspective: A multiproxy approach in Central Western Argentina. Quat. Int. 2024, 689–690, 55–65.

23. Muhammad, D.; Isah, A.A.; Hassan, I.; Sale, A.; Ibrahim, M.; Hussaini, A. Embedded Internet of Things (IoT) Environmental Monitoring System Using Raspberry Pi. Sule Lamido Univ. J. Sci. Technol. 2023, 6, 247–255.

24. Ramakrishna, C.; Venkateshwarlu, B.; Srinivas, J.; Srinivas, S. IoT-based smart farming using cloud computing and machine learning. Int. J. Innov. Technol. Explor. Eng. 2019, 9, 3455–3458.

25. Bilal, M.; Tayyab, M.; Hamza, A.; Shahzadi, K.; Rubab, F. The Internet of Things for smart farming: Measuring productivity and effectiveness. Electron. Commun. Sci. Appl. 2023, 10, 16012.

26. Sharma, A.; Sharma, A.; Tselykh, A.; Bozhenyuk, A.; Choudhury, T.; Alomar, M.A.; Sánchez-Chero, M. Artificial intelligence and internet of things oriented sustainable precision farming: Towards modern agriculture. Open Life Sci. 2023, 18, 20220713.

27. Zapata-García, S.; Temnani, A.; Berríos, P.; Espinosa, P.J.; Monllor, C.; Pérez-Pastor, A. Using Soil Water Status Sensors to Optimize Water and Nutrient Use in Melon under Semi-Arid Conditions. Agronomy 2023, 13, 2652.

28. Vedantham, A. Cloud and IoT Technologies Revolutionizing Precision Agriculture. Int. J. Sci. Res. Comput. Sci. Eng. Inf. Technol. 2024, 10, 860–867.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).