SVMobileNetV2: A Hybrid and Hierarchical CNN-SVM Network Architecture Utilising UAV-Based Multispectral Images and IoT Nodes for the Precise Classification of Crop Diseases

Abstract

1. Introduction

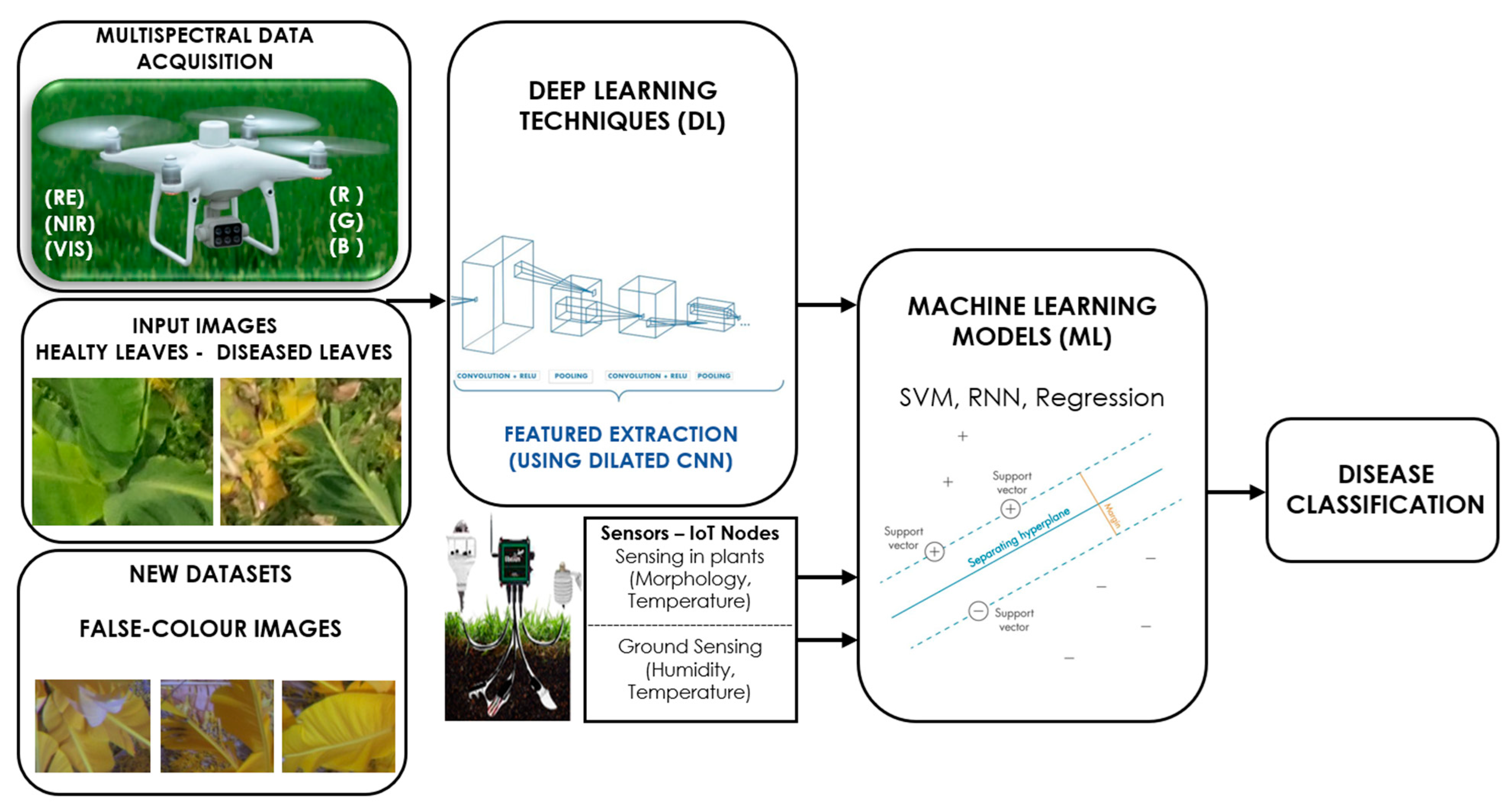

2. Materials and Methods

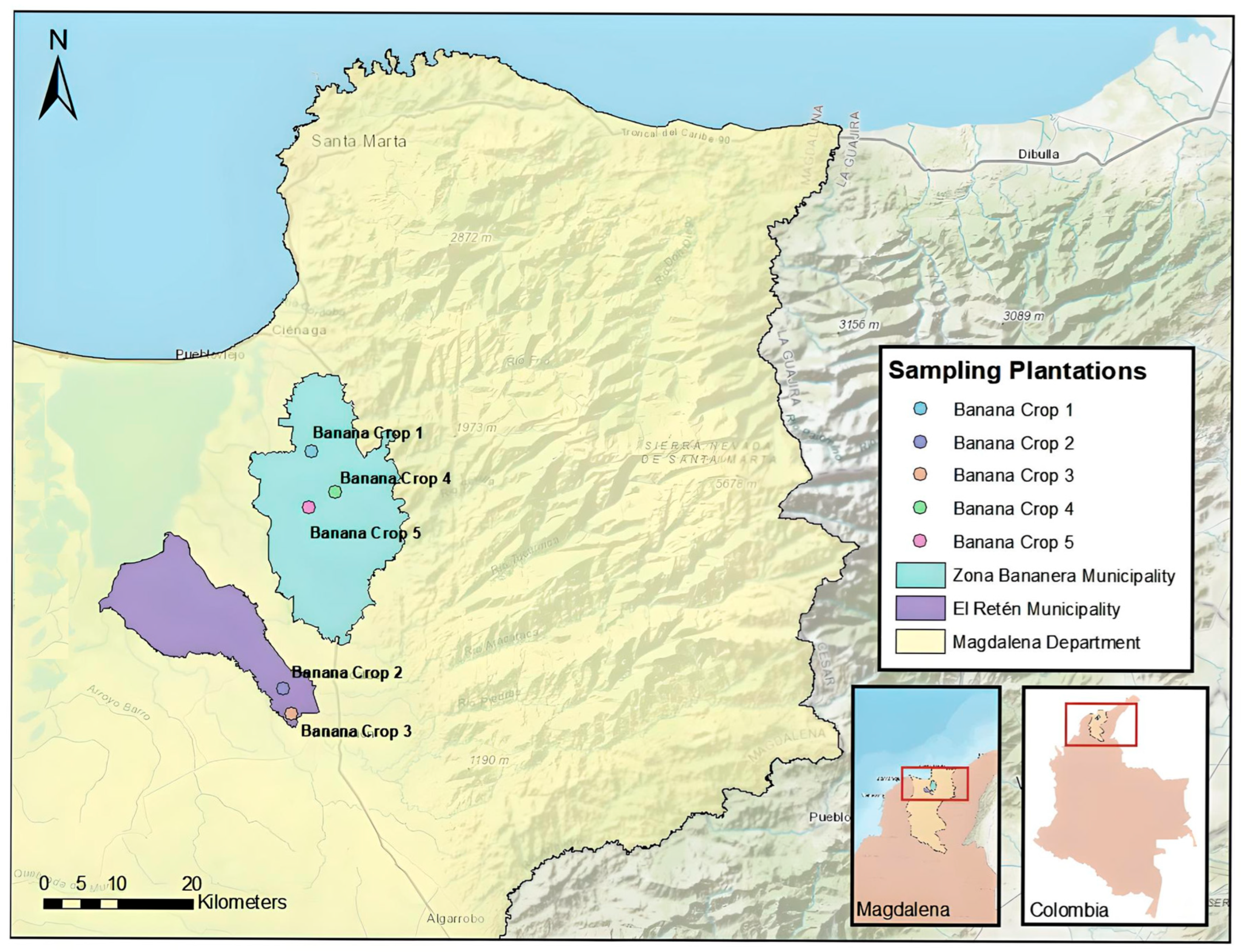

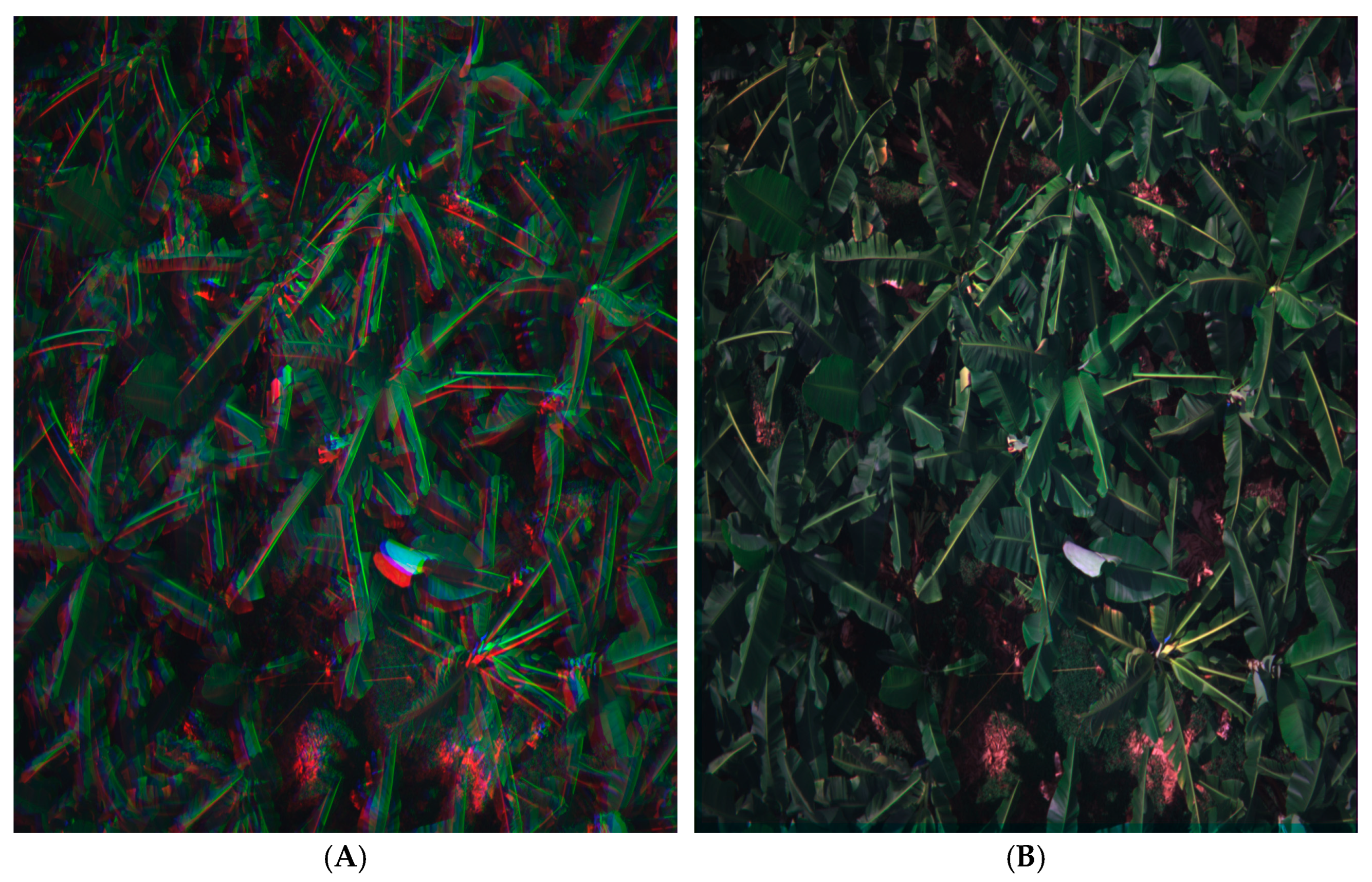

2.1. Image Acquisition

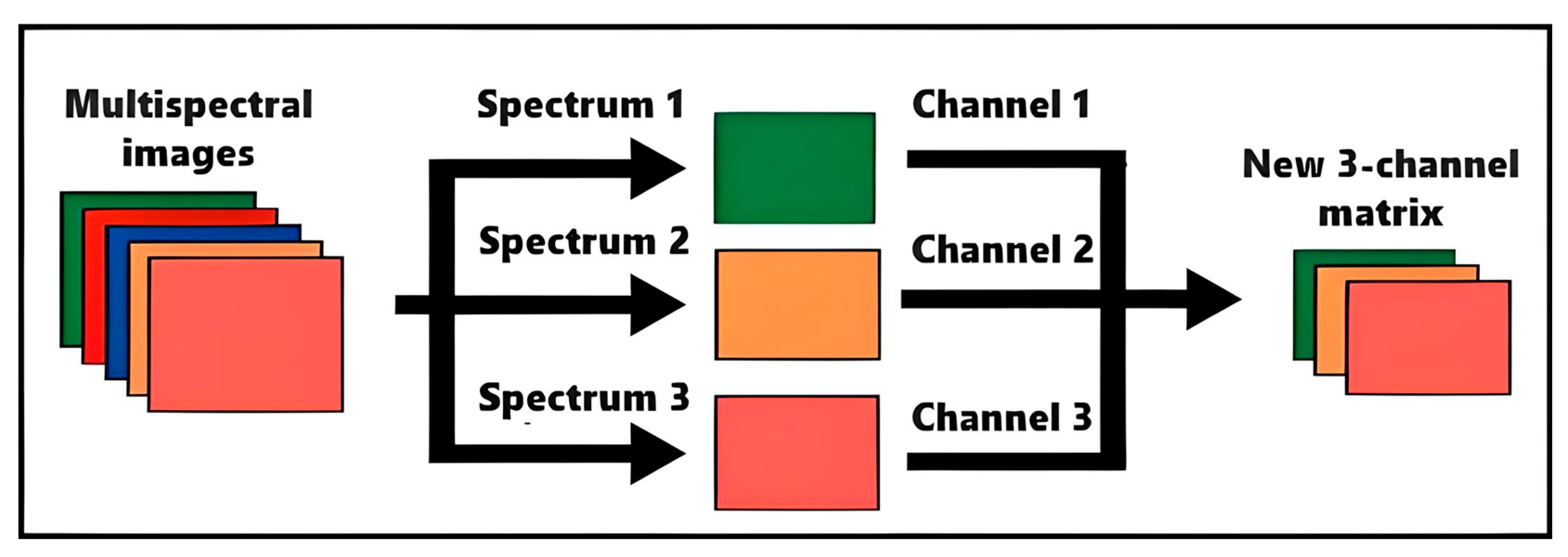

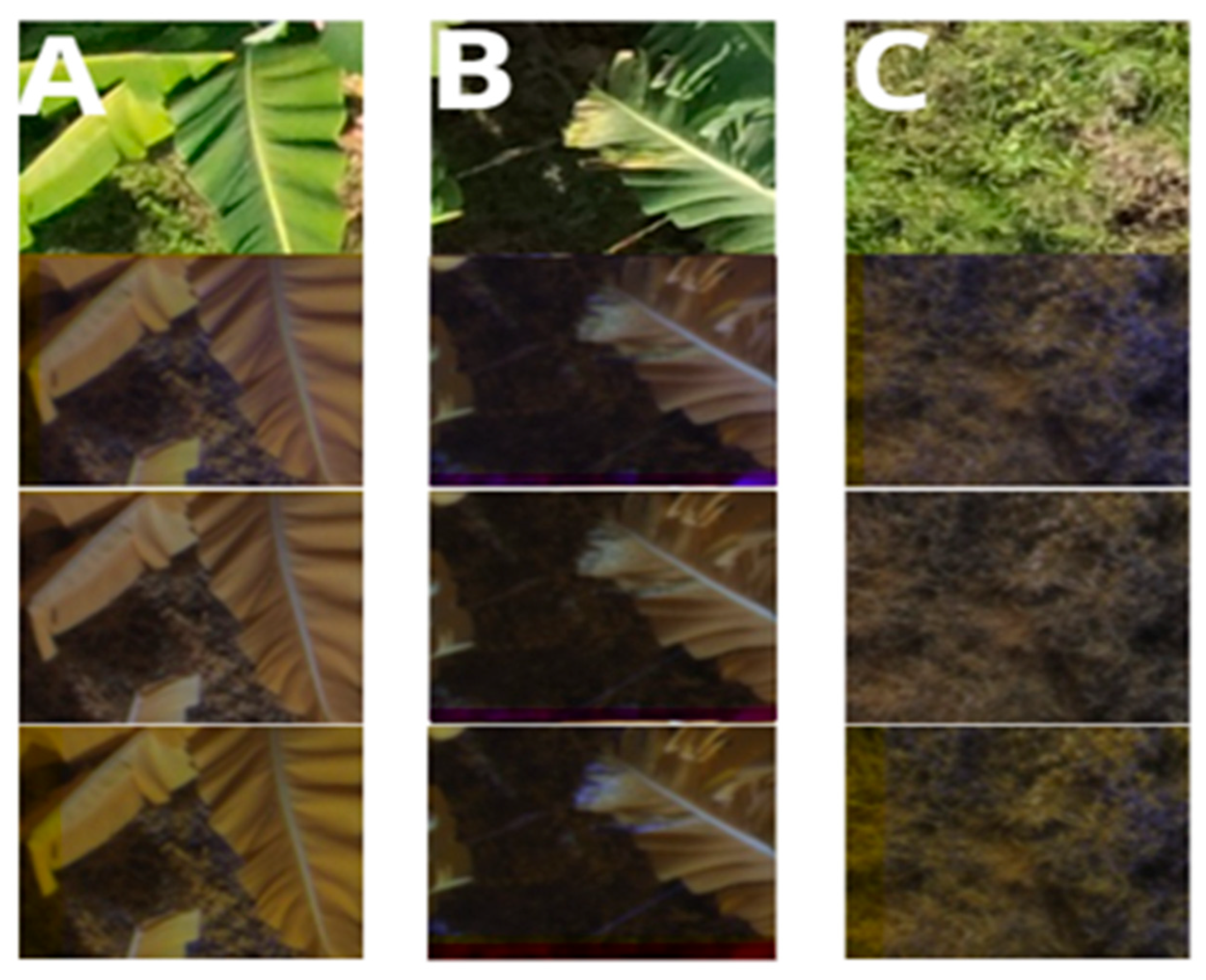

2.2. Dataset Construction

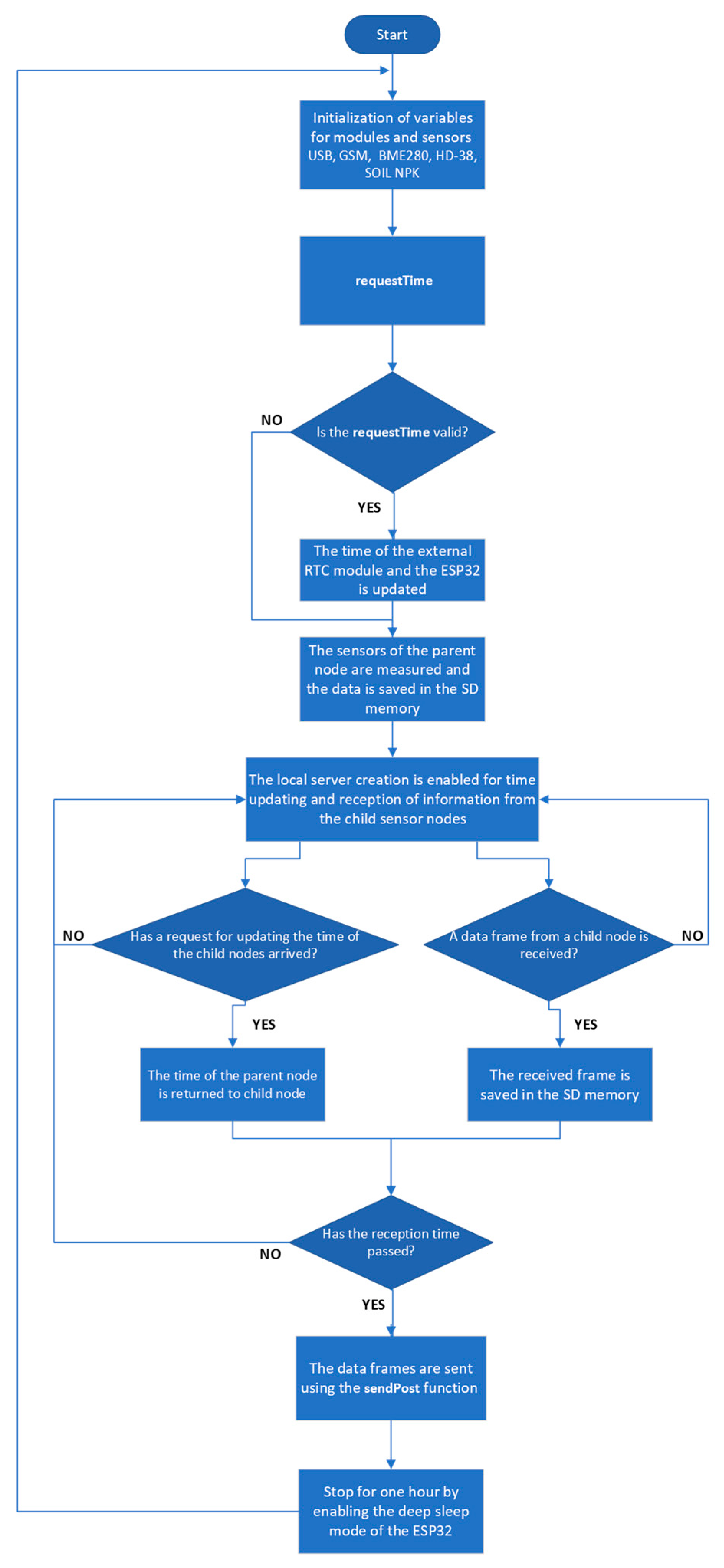

2.3. Environmental Data Acquisition

2.4. Building Multiple Models

2.5. Evaluation of the Models

3. Results

3.1. Dataset Creation

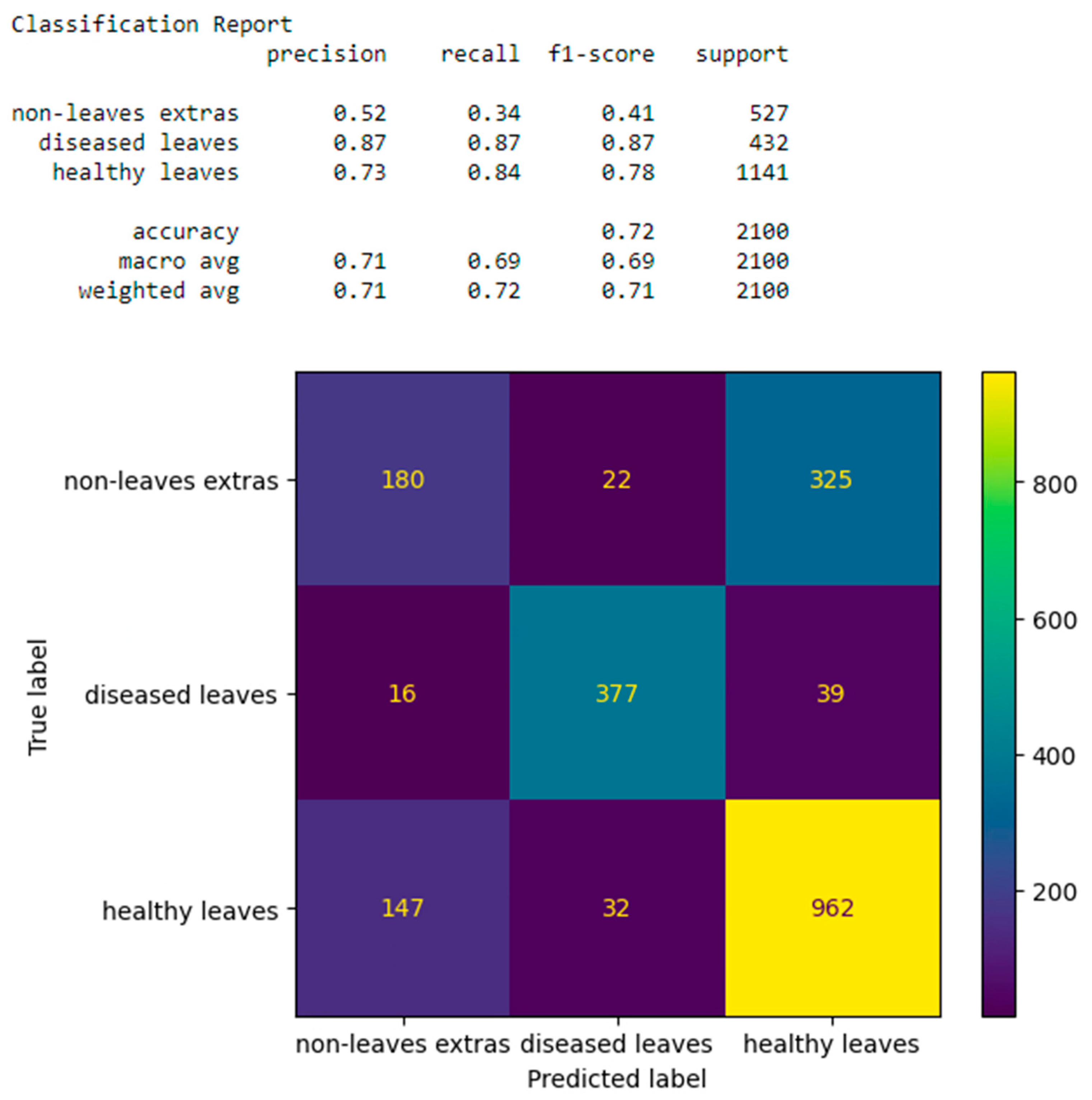

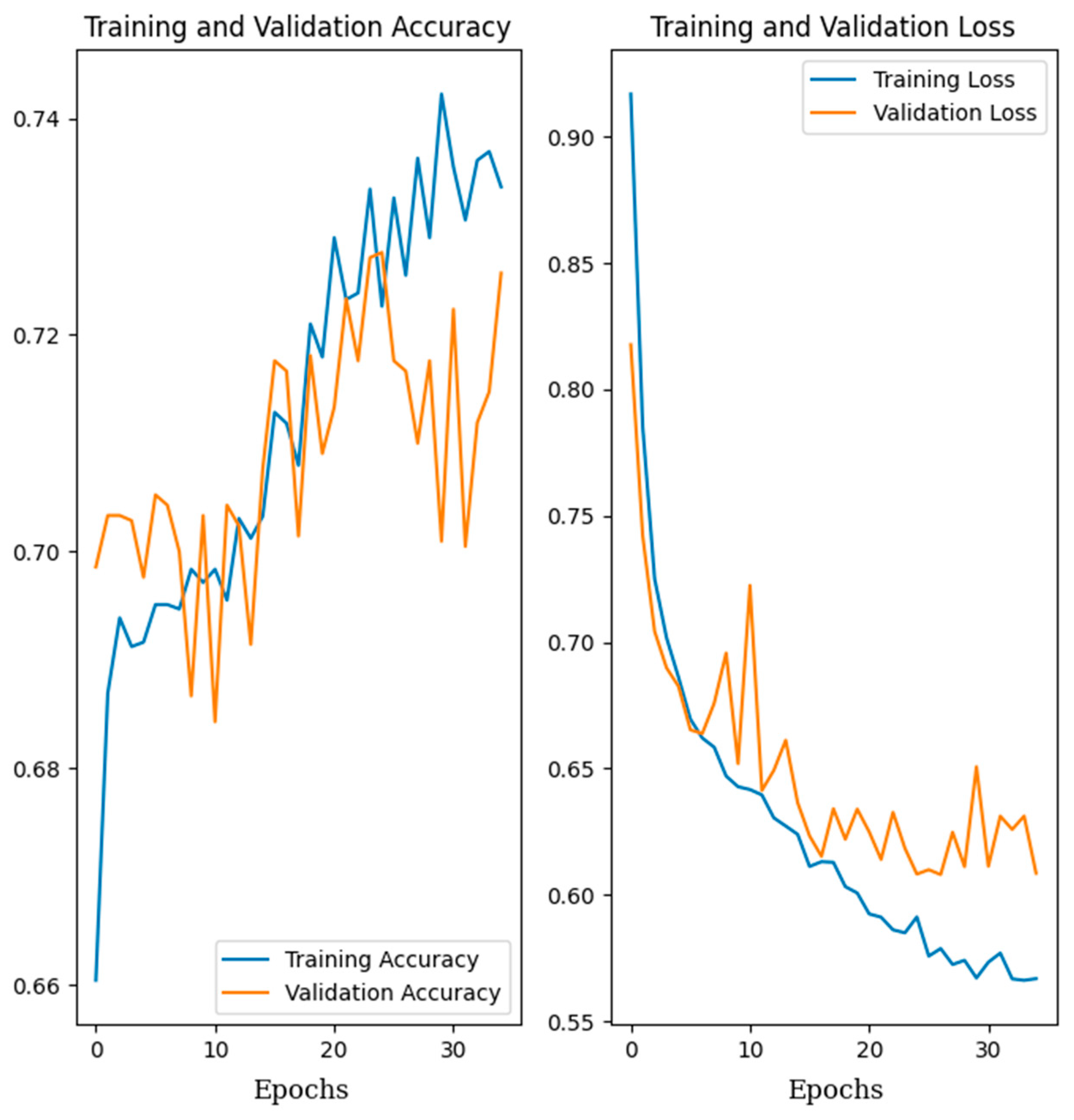

3.2. Training CNN Architectures

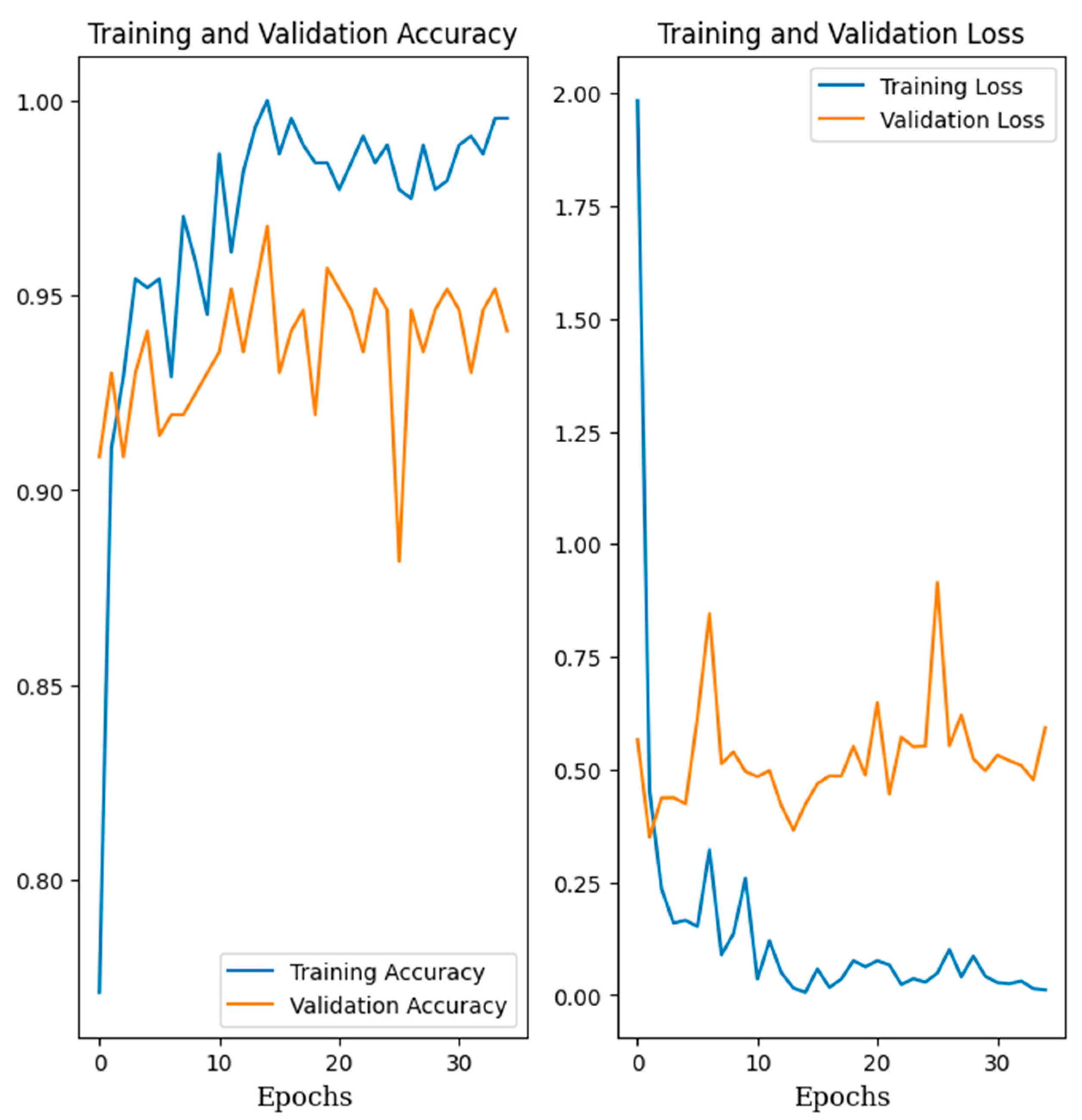

3.3. Training Hybrid Architectures

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Confusion Matrix | Predicted Labels | | |
|---|---|---|---|
| Actual labels | TP | FP | FP |
| | FN | TP | FP |
| | FN | FN | TP |

| Metric | Equation | Description |
|---|---|---|
| Accuracy (acc) | $acc = \frac{TP + TN}{TP + TN + FP + FN}$ | Measures the overall proportion of correct predictions among all predictions. |
| Precision (P) | $P = \frac{TP}{TP + FP}$ | Indicates the proportion of positive identifications that were actually correct. |
| Recall (R) | $R = \frac{TP}{TP + FN}$ | Represents the proportion of actual positives that were correctly identified. |
| F1 Score (F1) | $F1 = \frac{2 \cdot P \cdot R}{P + R}$ | Harmonic mean of precision and recall. Particularly useful in scenarios with imbalanced classes. The score ranges between 0 (worst) and 1 (best). |
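The metrics above can be computed per class from a multi-class confusion matrix. The following is a minimal sketch, not the authors' evaluation code: the function name `per_class_metrics`, the row/column convention (rows are actual labels, columns are predicted labels), and the example counts are all assumptions made for illustration.

```python
def per_class_metrics(cm):
    """Return overall accuracy and per-class precision, recall and F1.

    cm[i][j] counts samples whose actual class is i and predicted class is j
    (assumed convention for this sketch).
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(n))
    accuracy = correct / total

    metrics = []
    for k in range(n):
        tp = cm[k][k]
        fp = sum(cm[i][k] for i in range(n)) - tp  # predicted k, actually another class
        fn = sum(cm[k]) - tp                       # actually k, predicted another class
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        metrics.append({"precision": precision, "recall": recall, "f1": f1})
    return accuracy, metrics


# Hypothetical counts for three classes (illustrative only, not data from the study).
example_cm = [
    [50, 5, 5],
    [10, 40, 10],
    [5, 5, 70],
]
accuracy, metrics = per_class_metrics(example_cm)
```

Treating each class in turn as the positive class (one-vs-rest) is what allows the binary TP, FP and FN definitions to be applied to a three-class matrix.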

| Image Combination | Channel 1 Wavelengths | Channel 2 Wavelengths | Channel 3 Wavelengths | Description |
|---|---|---|---|---|
| Combination 1 | Red 650 ± 16 nm | Red Edge 730 ± 16 nm | Near-Infrared 840 ± 26 nm | Traditional RGB with near-infrared enhancement using red-edge bands. |
| Combination 2 | Green 560 ± 16 nm | Red Edge 730 ± 16 nm | Near-Infrared 840 ± 26 nm | Emphasises vegetation health by combining green spectrum with red-edge data. |
| Combination 3 | Blue 450 ± 16 nm | Red Edge 730 ± 16 nm | Near-Infrared 840 ± 26 nm | Highlights disease patterns by integrating blue spectrum with red-edge bands. |
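As an illustration of how three single-band images could be assembled into the three-channel combinations listed above, here is a minimal NumPy sketch. It assumes the bands are already co-registered and of equal size; the helper names `normalise` and `make_composite`, the min-max scaling, and the synthetic 4 x 4 bands are assumptions for this example and are not taken from the source.

```python
import numpy as np


def normalise(band):
    """Scale a single band to the range [0, 1]; a constant band maps to zeros."""
    band = band.astype(np.float32)
    span = band.max() - band.min()
    if span == 0:
        return np.zeros_like(band)
    return (band - band.min()) / span


def make_composite(ch1, ch2, ch3):
    """Stack three co-registered bands into an H x W x 3 array."""
    return np.stack([normalise(ch1), normalise(ch2), normalise(ch3)], axis=-1)


# Synthetic 4 x 4 bands stand in for real, aligned UAV captures (assumption).
rng = np.random.default_rng(0)
band_names = ("red", "green", "blue", "red_edge", "nir")
bands = {name: rng.integers(0, 65535, size=(4, 4)) for name in band_names}

# Channel assignments follow the three combinations in the table above.
combinations = {
    "R–RE–NIR": ("red", "red_edge", "nir"),
    "G–RE–NIR": ("green", "red_edge", "nir"),
    "B–RE–NIR": ("blue", "red_edge", "nir"),
}
composites = {
    label: make_composite(*(bands[name] for name in names))
    for label, names in combinations.items()
}
```

Each composite has the same H x W x 3 layout as an ordinary RGB image, so it can be passed to a network whose input layer expects three channels.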

| CNN Architecture | Image Combination | Training Accuracy | Validation Accuracy | Precision of Sigatoka | Recall of Sigatoka |
|---|---|---|---|---|---|
| Xception | RGB | 0.7052 | 0.7027 | 0.83 | 0.87 |
| Xception | R–RE–NIR | 0.7249 | 0.7145 | 0.86 | 0.90 |
| Xception | G–RE–NIR | 0.7148 | 0.7029 | 0.80 | 0.88 |
| Xception | B–RE–NIR | 0.7167 | 0.7043 | 0.81 | 0.83 |
| EfficientNetV2B3 | RGB | 0.8091 | 0.7834 | 0.76 | 0.65 |
| EfficientNetV2B3 | R–RE–NIR | 0.8307 | 0.7649 | 0.72 | 0.68 |
| EfficientNetV2B3 | G–RE–NIR | 0.8305 | 0.7563 | 0.69 | 0.59 |
| EfficientNetV2B3 | B–RE–NIR | 0.8338 | 0.7634 | 0.71 | 0.62 |
| VGG19 | RGB | 0.8019 | 0.7715 | 0.69 | 0.78 |
| VGG19 | R–RE–NIR | 0.8044 | 0.7582 | 0.68 | 0.71 |
| VGG19 | G–RE–NIR | 0.8021 | 0.7496 | 0.75 | 0.60 |
| VGG19 | B–RE–NIR | 0.8277 | 0.7477 | 0.68 | 0.71 |
| MobileNetV2 | RGB | 0.8248 | 0.7853 | 0.64 | 0.40 |
| MobileNetV2 | R–RE–NIR | 0.8654 | 0.7891 | 0.76 | 0.73 |
| MobileNetV2 | G–RE–NIR | 0.8258 | 0.7639 | 0.69 | 0.73 |
| MobileNetV2 | B–RE–NIR | 0.8266 | 0.7611 | 0.71 | 0.66 |

| Hybrid Architecture | Image Combination | Training Accuracy | Validation Accuracy | Precision of Sigatoka | Recall of Sigatoka |
|---|---|---|---|---|---|
| Xception-SVM | RGB | 0.7652 | 0.7499 | 0.75 | 0.41 |
| Xception-SVM | R–RE–NIR | 0.8574 | 0.7187 | 0.73 | 0.72 |
| Xception-SVM | G–RE–NIR | 0.8224 | 0.7864 | 0.64 | 0.54 |
| Xception-SVM | B–RE–NIR | 0.8013 | 0.7696 | 0.65 | 0.66 |
| Xception-RNN | RGB | 0.7302 | 0.7838 | 0.63 | 0.41 |
| Xception-RNN | R–RE–NIR | 0.8439 | 0.7823 | 0.75 | 0.78 |
| Xception-RNN | G–RE–NIR | 0.7345 | 0.7743 | 0.70 | 0.88 |
| Xception-RNN | B–RE–NIR | 0.7302 | 0.7799 | 0.77 | 0.78 |
| Xception-REGRESS | RGB | 0.8014 | 0.7103 | 0.80 | 0.46 |
| Xception-REGRESS | R–RE–NIR | 0.8605 | 0.7868 | 0.85 | 0.90 |
| Xception-REGRESS | G–RE–NIR | 0.7055 | 0.7066 | 0.78 | 0.90 |
| Xception-REGRESS | B–RE–NIR | 0.8186 | 0.7196 | 0.70 | 0.65 |
| EfficientNetV2B3-SVM | RGB | 0.8385 | 0.8062 | 0.78 | 0.75 |
| EfficientNetV2B3-SVM | R–RE–NIR | 0.9692 | 0.9261 | 0.84 | 0.80 |
| EfficientNetV2B3-SVM | G–RE–NIR | 0.9343 | 0.9142 | 0.79 | 0.80 |
| EfficientNetV2B3-SVM | B–RE–NIR | 0.9346 | 0.9235 | 0.76 | 0.68 |
| EfficientNetV2B3-RNN | RGB | 0.8539 | 0.8269 | 0.84 | 0.58 |
| EfficientNetV2B3-RNN | R–RE–NIR | 0.8892 | 0.8495 | 0.82 | 0.73 |
| EfficientNetV2B3-RNN | G–RE–NIR | 0.8744 | 0.8149 | 0.78 | 0.73 |
| EfficientNetV2B3-RNN | B–RE–NIR | 0.8518 | 0.8719 | 0.83 | 0.67 |
| EfficientNetV2B3-REGRESS | RGB | 0.8032 | 0.7891 | 0.81 | 0.44 |
| EfficientNetV2B3-REGRESS | R–RE–NIR | 0.9275 | 0.8879 | 0.77 | 0.84 |
| EfficientNetV2B3-REGRESS | G–RE–NIR | 0.9521 | 0.8693 | 0.83 | 0.86 |
| EfficientNetV2B3-REGRESS | B–RE–NIR | 0.9339 | 0.8198 | 0.75 | 0.65 |
| VGG19-SVM | RGB | 0.8782 | 0.8732 | 0.81 | 0.51 |
| VGG19-SVM | R–RE–NIR | 0.9309 | 0.8731 | 0.83 | 0.70 |
| VGG19-SVM | G–RE–NIR | 0.9372 | 0.8637 | 0.70 | 0.75 |
| VGG19-SVM | B–RE–NIR | 0.8875 | 0.8656 | 0.65 | 0.59 |
| VGG19-RNN | RGB | 0.8023 | 0.7693 | 0.78 | 0.66 |
| VGG19-RNN | R–RE–NIR | 0.9326 | 0.9091 | 0.82 | 0.72 |
| VGG19-RNN | G–RE–NIR | 0.9025 | 0.8336 | 0.81 | 0.74 |
| VGG19-RNN | B–RE–NIR | 0.9125 | 0.8327 | 0.82 | 0.58 |
| VGG19-REGRESS | RGB | 0.9156 | 0.8772 | 0.73 | 0.86 |
| VGG19-REGRESS | R–RE–NIR | 0.9598 | 0.8565 | 0.85 | 0.89 |
| VGG19-REGRESS | G–RE–NIR | 0.9571 | 0.8313 | 0.82 | 0.89 |
| VGG19-REGRESS | B–RE–NIR | 0.9346 | 0.8082 | 0.78 | 0.66 |
| MobileNetV2-SVM | RGB | 0.8466 | 0.7684 | 0.85 | 0.67 |
| MobileNetV2-SVM | R–RE–NIR | 0.9851 | 0.8611 | 0.87 | 0.85 |
| MobileNetV2-SVM | G–RE–NIR | 0.9541 | 0.8592 | 0.75 | 0.74 |
| MobileNetV2-SVM | B–RE–NIR | 0.9485 | 0.8453 | 0.80 | 0.77 |
| MobileNetV2-RNN | RGB | 0.8247 | 0.7793 | 0.75 | 0.52 |
| MobileNetV2-RNN | R–RE–NIR | 0.7845 | 0.7435 | 0.79 | 0.74 |
| MobileNetV2-RNN | G–RE–NIR | 0.8107 | 0.7134 | 0.71 | 0.83 |
| MobileNetV2-RNN | B–RE–NIR | 0.8508 | 0.7643 | 0.85 | 0.68 |
| MobileNetV2-REGRESS | RGB | 0.8208 | 0.7808 | 0.66 | 0.57 |
| MobileNetV2-REGRESS | R–RE–NIR | 0.8544 | 0.8295 | 0.85 | 0.84 |
| MobileNetV2-REGRESS | G–RE–NIR | 0.8147 | 0.7657 | 0.84 | 0.82 |
| MobileNetV2-REGRESS | B–RE–NIR | 0.8157 | 0.7577 | 0.77 | 0.76 |
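The table reports precision and recall for the Sigatoka class but not the F1 score. As a worked example, the sketch below derives F1 as the harmonic mean of the two for three rows copied from the table. The derived values are computed here for illustration and are not figures reported by the source; the function name `f1_score` and the choice of rows are assumptions.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall) of the Sigatoka class, copied from the R–RE–NIR rows above.
rows = {
    "MobileNetV2-SVM": (0.87, 0.85),
    "EfficientNetV2B3-SVM": (0.84, 0.80),
    "VGG19-REGRESS": (0.85, 0.89),
}

# Derived F1 per model, rounded to three decimals for display.
f1_by_model = {name: round(f1_score(p, r), 3) for name, (p, r) in rows.items()}

for name, f1 in f1_by_model.items():
    print(f"{name}: F1 = {f1}")
```

Because F1 penalises a gap between precision and recall, it gives a single figure for comparing rows where one of the two is high and the other is low, as in several of the RGB rows.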

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Linero-Ramos, R.; Parra-Rodríguez, C.; Gongora, M. SVMobileNetV2: A Hybrid and Hierarchical CNN-SVM Network Architecture Utilising UAV-Based Multispectral Images and IoT Nodes for the Precise Classification of Crop Diseases. AgriEngineering 2025, 7, 341. https://doi.org/10.3390/agriengineering7100341
