YOLOv7 for Weed Detection in Cotton Fields Using UAV Imagery

Abstract

1. Introduction

2. Materials and Methods

2.1. Experimental Site

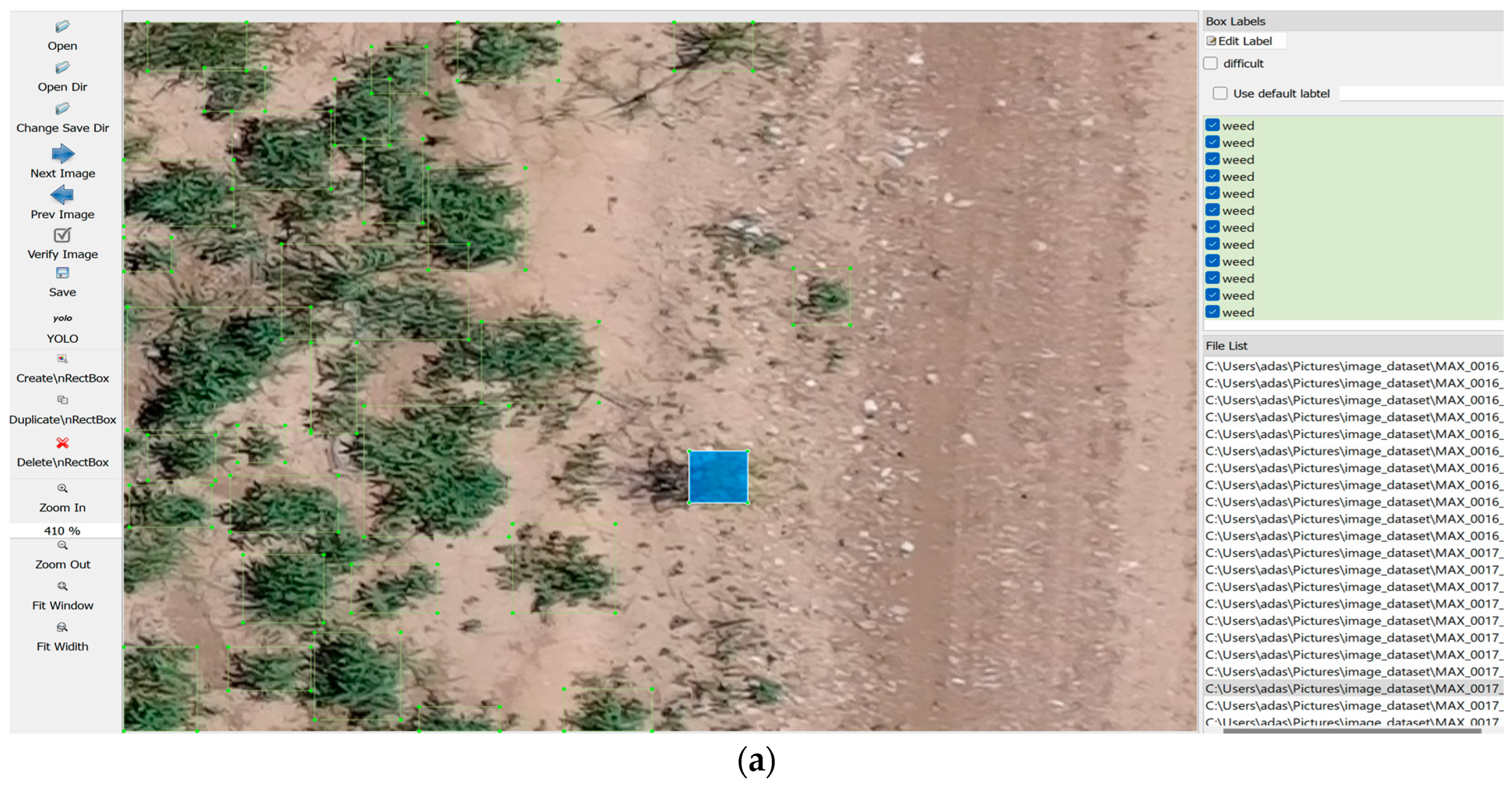

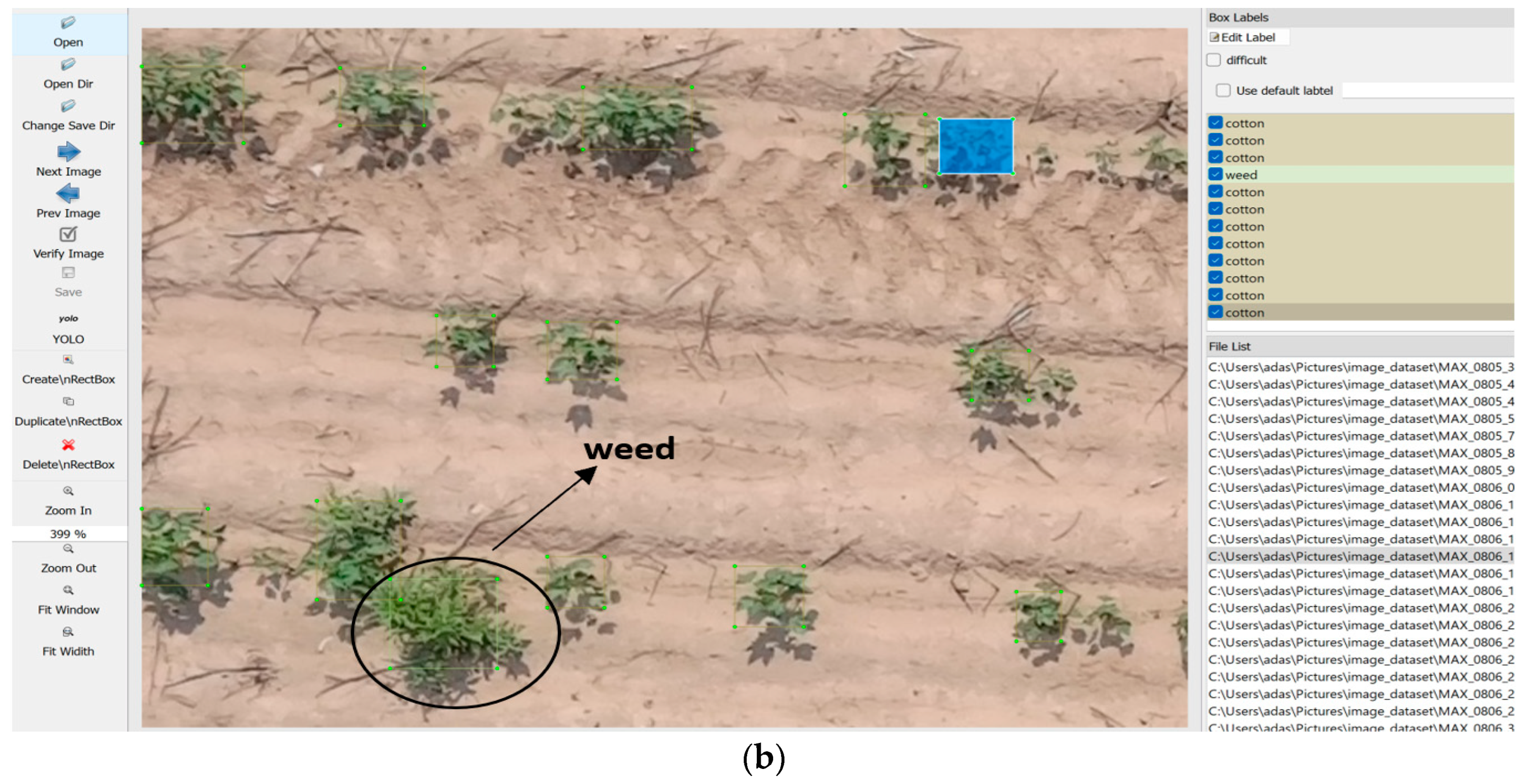

2.2. Dataset Preparation

2.3. Model Selection and Training

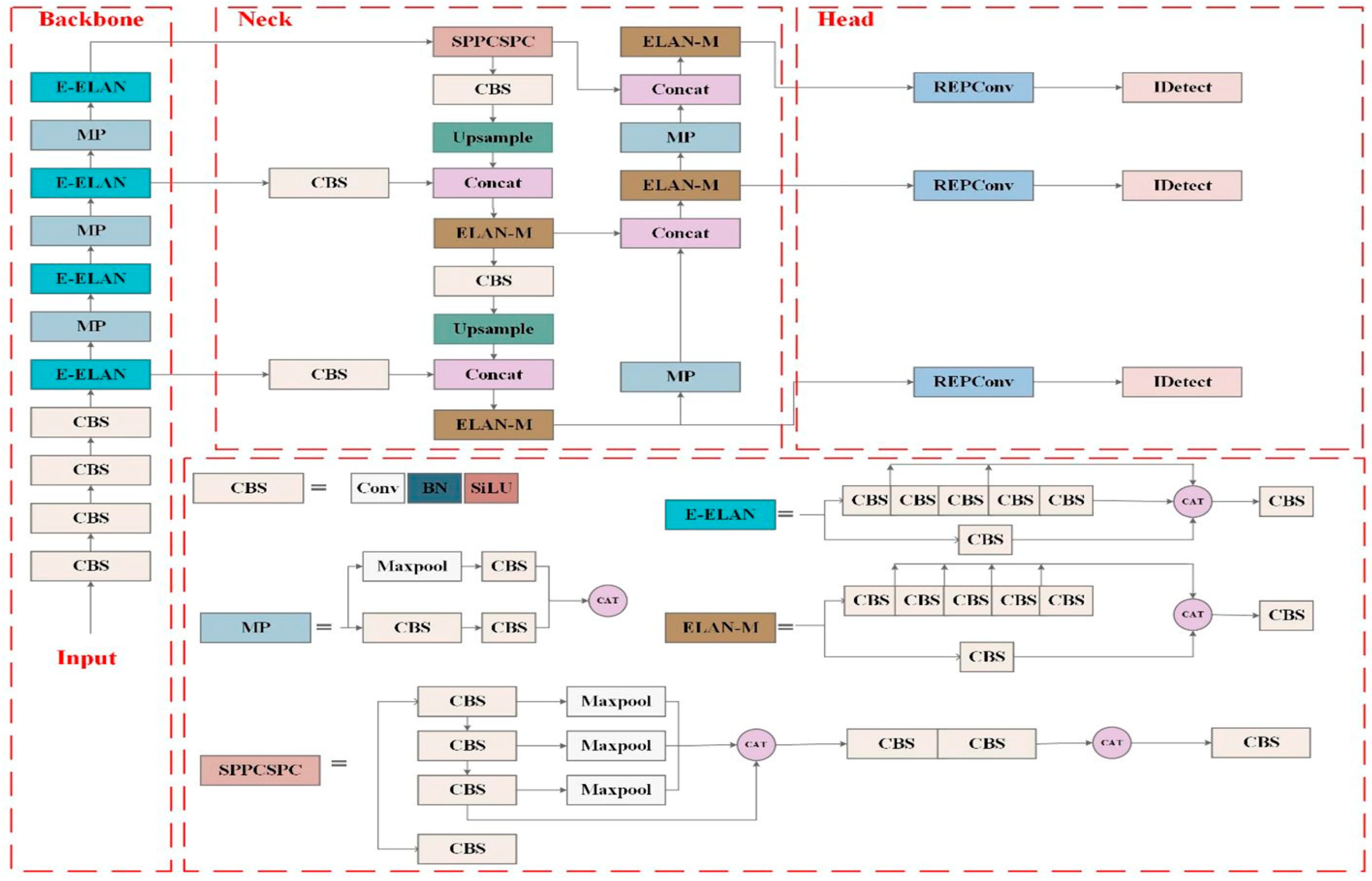

- The input section consists of CBS modules, each combining a Convolution (Conv) layer, Batch Normalization (BN), and a SiLU activation function. These layers prepare the image by extracting basic patterns, such as edges and shapes, that the later stages of the model can interpret [15].

- The Backbone extracts deeper features from the input image. Repeated E-ELAN (Extended Efficient Layer Aggregation Network) blocks allow the model to learn features at several depth levels more effectively [16]. The MaxPooling (MP) components reduce the spatial dimensions of the feature maps while preserving the most salient information, which helps the model focus on important image regions.

- The Neck links the Backbone to the prediction layers. SPPCSPC (Spatial Pyramid Pooling with Cross Stage Partial Connections) and ELAN-M blocks improve the model’s ability to detect objects of different sizes by aggregating information from both small and large regions of the image. The Neck uses Upsample, Concat (Concatenation), and MaxPooling layers to combine information across feature levels. This design suits complex field environments because it can detect both small and partially hidden weeds [16,18].

- The Head is the final part of the model. The RepConv modules speed up processing [24]. The IDetect layers produce the final outputs: bounding boxes, object classes, and prediction confidence scores. During training, the loss functions guide the model to refine these predictions progressively.
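
The CBS and pooling stages described above can be illustrated with a minimal sketch in plain Python. It shows the SiLU activation, x · sigmoid(x), and how a stride-2 convolution and a max-pooling layer shrink the feature map. The 640-pixel input size, the kernel and padding choices, and the helper names `silu` and `out_size` are assumptions made for illustration and are not taken from this study; the strides of 8, 16, and 32 are those of the detection scales in the base YOLOv7.

```python
import math


def silu(x: float) -> float:
    """SiLU activation used in CBS modules: x * sigmoid(x), in a stable form."""
    if x >= 0:
        return x / (1.0 + math.exp(-x))
    e = math.exp(x)
    return x * e / (1.0 + e)


def out_size(size: int, kernel: int, stride: int, padding: int) -> int:
    """Spatial output size of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


# Assumed input resolution (hypothetical; not stated in this section).
INPUT_SIZE = 640

# Halving the resolution with a 3x3 stride-2 convolution, then a 2x2 max-pool.
after_conv = out_size(INPUT_SIZE, kernel=3, stride=2, padding=1)
after_pool = out_size(after_conv, kernel=2, stride=2, padding=0)

# Grid sizes at the three detection scales of the base YOLOv7.
grids = {stride: INPUT_SIZE // stride for stride in (8, 16, 32)}

print(after_conv, after_pool, grids)
```

Under these assumptions the detection grids are 80 × 80, 40 × 40, and 20 × 20 cells. The finest grid keeps the most spatial detail, which is why multi-scale prediction matters for small targets such as weeds in UAV imagery.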

2.4. Models and Parameters

2.5. Evaluation Indicators

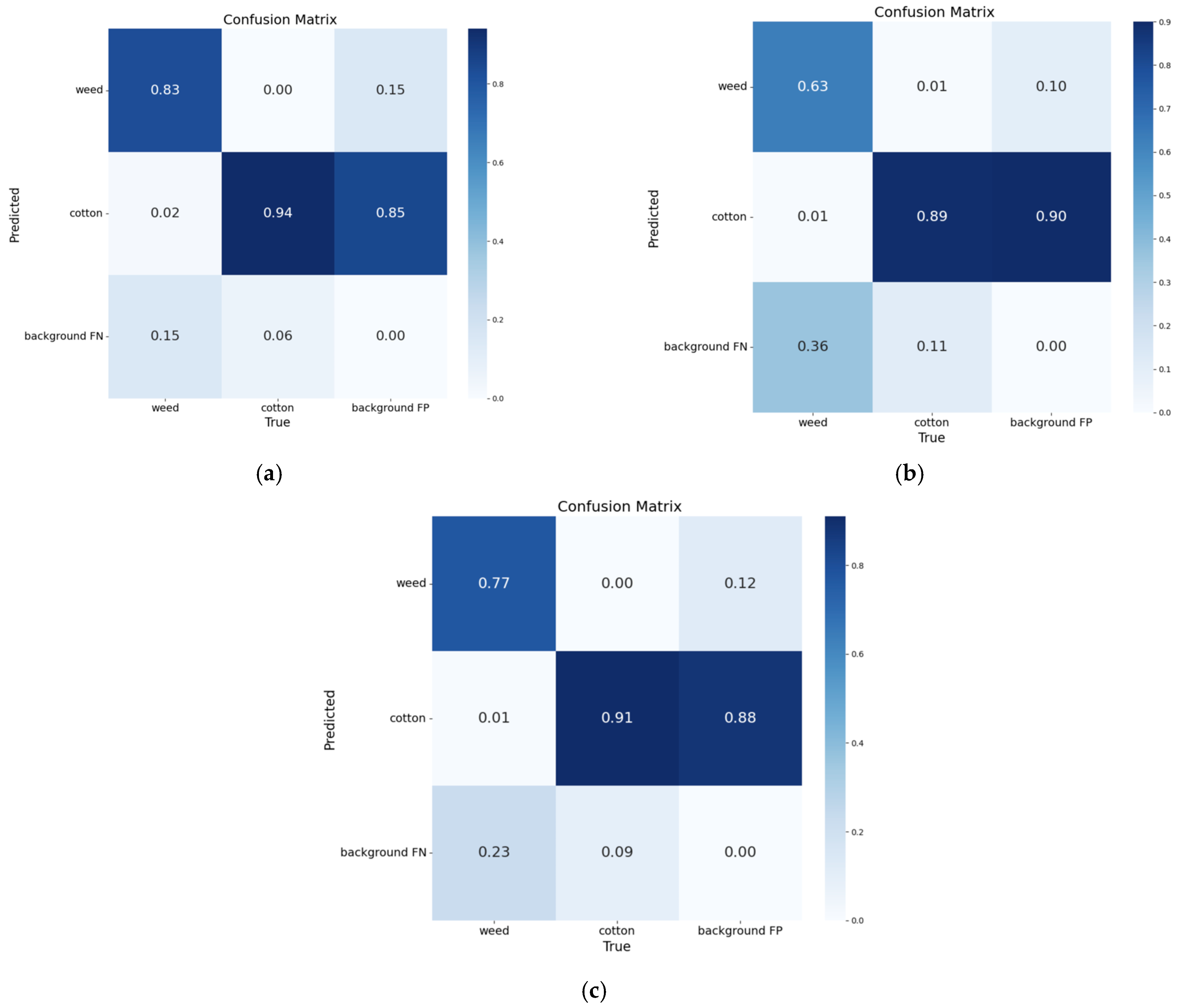

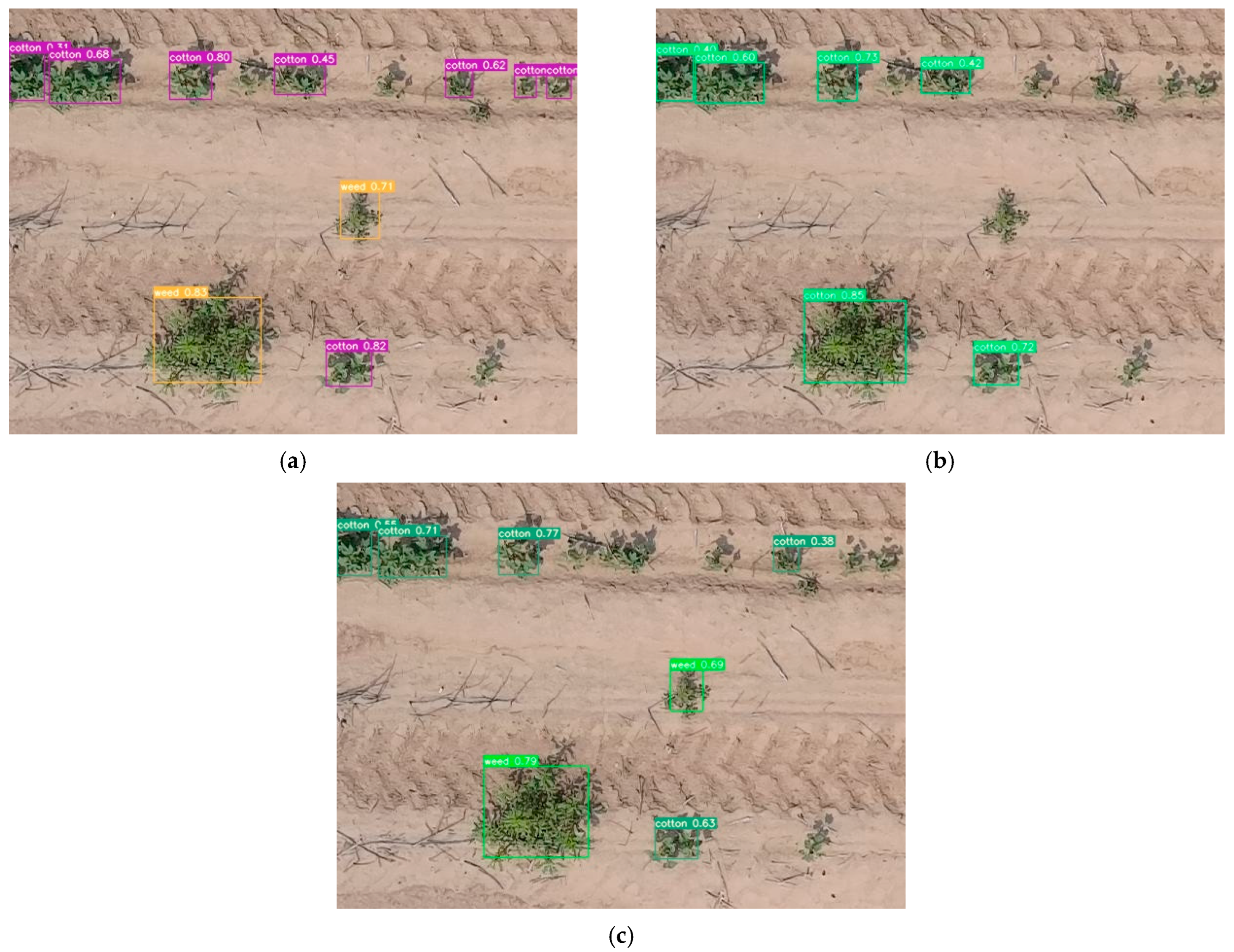

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| UAV | Unmanned Aerial Vehicle |
| YOLO | You Only Look Once |
| CNN | Convolutional Neural Network |
| IoU | Intersection over Union |
| SGD | Stochastic Gradient Descent |
| P | Precision |
| R | Recall |
| AP | Average Precision |
| mAP | Mean Average Precision |

References

- Bonny, S. Genetically Modified Herbicide-Tolerant Crops, Weeds, and Herbicides: Overview and Impact. Environ. Manag. 2016, 57, 31–48.

- Rose, M.T.; Cavagnaro, T.R.; Scanlan, C.A.; Rose, T.J.; Vancov, T.; Kimber, S.; Kennedy, I.R.; Kookana, R.S.; Van Zwieten, L. Impact of Herbicides on Soil Biology and Function. Adv. Agron. 2016, 136, 133–220.

- Kent Shannon, D.; Clay, D.E.; Sudduth, K.A. An Introduction to Precision Agriculture. In ASA, CSSA, and SSSA Books; Kent Shannon, D., Clay, D.E., Kitchen, N.R., Eds.; American Society of Agronomy and Soil Science Society of America: Madison, WI, USA, 2018; pp. 1–12. ISBN 978-0-89118-367-9.

- Mahlein, A.-K. Plant Disease Detection by Imaging Sensors – Parallels and Specific Demands for Precision Agriculture and Plant Phenotyping. Plant Dis. 2016, 100, 241–251.

- Nawar, S.; Corstanje, R.; Halcro, G.; Mulla, D.; Mouazen, A.M. Delineation of Soil Management Zones for Variable-Rate Fertilization. Adv. Agron. 2017, 143, 175–245.

- Sa, I.; Popović, M.; Khanna, R.; Chen, Z.; Lottes, P.; Liebisch, F.; Nieto, J.; Stachniss, C.; Walter, A.; Siegwart, R. WeedMap: A Large-Scale Semantic Weed Mapping Framework Using Aerial Multispectral Imaging and Deep Neural Network for Precision Farming. Remote Sens. 2018, 10, 1423.

- Kamilaris, A.; Prenafeta-Boldú, F.X. Deep Learning in Agriculture: A Survey. Comput. Electron. Agric. 2018, 147, 70–90.

- Dyrmann, M.; Karstoft, H.; Midtiby, H.S. Plant Species Classification Using Deep Convolutional Neural Network. Biosyst. Eng. 2016, 151, 72–80.

- Gawehn, E.; Hiss, J.A.; Schneider, G. Deep Learning in Drug Discovery. Mol. Inform. 2016, 35, 3–14.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Computer Vision – ECCV 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2016; Volume 9905, pp. 21–37. ISBN 978-3-319-46447-3.

- Bendig, J.; Bolten, A.; Bennertz, S.; Broscheit, J.; Eichfuss, S.; Bareth, G. Estimating Biomass of Barley Using Crop Surface Models (CSMs) Derived from UAV-Based RGB Imaging. Remote Sens. 2014, 6, 10395–10412.

- Primicerio, J.; Di Gennaro, S.F.; Fiorillo, E.; Genesio, L.; Lugato, E.; Matese, A.; Vaccari, F.P. A Flexible Unmanned Aerial Vehicle for Precision Agriculture. Precis. Agric. 2012, 13, 517–523.

- Pei, H.; Sun, Y.; Huang, H.; Zhang, W.; Sheng, J.; Zhang, Z. Weed Detection in Maize Fields by UAV Images Based on Crop Row Preprocessing and Improved YOLOv4. Agriculture 2022, 12, 975.

- Dang, F.; Chen, D.; Lu, Y.; Li, Z. YOLOWeeds: A Novel Benchmark of YOLO Object Detectors for Multi-Class Weed Detection in Cotton Production Systems. Comput. Electron. Agric. 2023, 205, 107655.

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023.

- Gallo, I.; Rehman, A.U.; Dehkordi, R.H.; Landro, N.; La Grassa, R.; Boschetti, M. Deep Object Detection of Crop Weeds: Performance of YOLOv7 on a Real Case Dataset from UAV Images. Remote Sens. 2023, 15, 539.

- Peng, M.; Zhang, W.; Li, F.; Xue, Q.; Yuan, J.; An, P. Weed Detection with Improved Yolov 7. EAI Endorsed Trans. IoT 2023, 9, e1.

- Wang, K.; Hu, X.; Zheng, H.; Lan, M.; Liu, C.; Liu, Y.; Zhong, L.; Li, H.; Tan, S. Weed Detection and Recognition in Complex Wheat Fields Based on an Improved YOLOv7. Front. Plant Sci. 2024, 15, 1372237.

- Gautam, D.; Mawardi, Z.; Elliott, L.; Loewensteiner, D.; Whiteside, T.; Brooks, S. Detection of Invasive Species (Siam Weed) Using Drone-Based Imaging and YOLO Deep Learning Model. Remote Sens. 2025, 17, 120.

- Li, J.; Zhang, W.; Zhou, H.; Yu, C.; Li, Q. Weed Detection in Soybean Fields Using Improved YOLOv7 and Evaluating Herbicide Reduction Efficacy. Front. Plant Sci. 2024, 14, 1284338.

- LabelImg. Available online: https://github.com/tzutalin/labelImg (accessed on 29 June 2022).

- Roboflow. Available online: https://roboflow.com/ (accessed on 15 July 2024).

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Ding, X.; Zhang, X.; Ma, N.; Han, J.; Ding, G.; Sun, J. RepVGG: Making VGG-Style ConvNets Great Again. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021; pp. 13728–13737.

- Deng, L.; Miao, Z.; Zhao, X.; Yang, S.; Gao, Y.; Zhai, C.; Zhao, C. HAD-YOLO: An Accurate and Effective Weed Detection Model Based on Improved YOLOV5 Network. Agronomy 2024, 15, 57.

- Lekha, J.; Vijayalakshmi, S. Enhanced Weed Detection in Sustainable Agriculture: A You Only Look Once v7 and Internet of Things Sensor Approach for Maximizing Crop Quality. Eng. Proc. 2024, 82, 100.

- Chen, J.; Liu, H.; Zhang, Y.; Zhang, D.; Ouyang, H.; Chen, X. A Multiscale Lightweight and Efficient Model Based on YOLOv7: Applied to Citrus Orchard. Plants 2022, 11, 3260.

- Di, X.; Zhang, Y.; Li, H.; Wang, Q. Toward efficient UAV-based small object detection. Remote Sens. 2025, 17, 2235.

- Nikouei, M.; Chen, H.; Zhang, Y. Small object detection: A comprehensive survey. Pattern Recognit. 2025, 159, 110123.

- Shi, Y.; Li, J.; Sun, H. FocusDet: An efficient object detector for small object detection. Sci. Rep. 2024, 14, 61136.

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Ferentinos, K.P. Deep Learning Models for Plant Disease Detection and Diagnosis. Comput. Electron. Agric. 2018, 145, 311–318.

| Class | Annotation Boxes |
|---|---|
| Weed | 5713 |
| Cotton | 50,821 |
| Total | 57,534 |

| Model | Backbone | Params (M) | FPS | Use Case Suitability |
|---|---|---|---|---|
| YOLOv7 | E-ELAN | 36.9 | 161 | Real-time detection, resource-limited setup |
| YOLOv7-w6 | Wider E-ELAN | 70.4 | 84 | Balanced performance and precision |
| YOLOv7-x | Deeper and wider E-ELAN | 71.3 | 114 | Highest accuracy, high-compute environment |

| Parameter | Value |
|---|---|
| Optimizer | SGD |
| Learning rate | |
| Momentum | 0.937 |
| Weight decay | |
| Pretrained | MS COCO dataset |
| Epochs | 300 |
| Batch size | 32 |
| Workers | 8 |

| Hardware and Software | Configuration |
|---|---|
| CPU | 13th Gen Intel(R) Core(TM) i9-13900H |
| GPU | NVIDIA Tesla V100S |
| Operating system | Windows 11 |
| Computational platform | CUDA 11.7 |
| Programming language | Python 3.11 |
| Deep learning framework | PyTorch 1.13.1 |

| Model | Precision | Recall | F1-Score | mAP@0.5 | mAP@[0.5:0.95] | AP (Weed) | AP (Cotton) |
|---|---|---|---|---|---|---|---|
| YOLOv7 | 0.87 | 0.78 | 0.82 | 0.88 | 0.50 | 0.034 | 0.075 |
| YOLOv7-w6 | 0.77 | 0.73 | 0.75 | 0.79 | 0.43 | 0.067 | 0.03 |
| YOLOv7-x | 0.81 | 0.81 | 0.81 | 0.83 | 0.46 | 0.043 | 0.091 |
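
As a quick consistency check on the table above, the F1-score can be recomputed from the reported precision and recall using the standard definition, F1 = 2PR / (P + R). The sketch below is illustrative only; the function name `f1_score` and the dictionary layout are assumptions, and the input values are copied from the table.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall as reported in the results table.
reported = {
    "YOLOv7": (0.87, 0.78),
    "YOLOv7-w6": (0.77, 0.73),
    "YOLOv7-x": (0.81, 0.81),
}

# Round to two decimals to match the precision of the table.
f1 = {name: round(f1_score(p, r), 2) for name, (p, r) in reported.items()}

for name, value in f1.items():
    print(f"{name}: F1 = {value:.2f}")
```

The recomputed values (0.82, 0.75, and 0.81) agree with the F1-Score column, so the three reported metrics are mutually consistent at two-decimal precision.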

| Misclassification Type | Common Conditions Observed | Most Affected Model(s) |
|---|---|---|
| False Positive (Weed → Background) | Background clutter resembling weed texture | YOLOv7, YOLOv7-w6 |
| False Negative (Missed Weed) | Overlapping vegetation; weeds occluded by cotton leaves | All models (most in YOLOv7-w6) |
| False Positive (Cotton → Background) | Low-contrast cotton in shaded areas | YOLOv7, YOLOv7-w6 |
| False Negative (Cotton → Weed) | Cotton rows misaligned with expected patterns; high weed density nearby | YOLOv7-w6 |
| Background False Positive (Dry Soil → Weed) | Dark regions with irregular textures | YOLOv7-w6, YOLOv7-x |
| False Negative (Missed Weed) | Overlapping vegetation; occlusion by cotton | All (most in YOLOv7-w6) |

| Model | Accuracy (%) |
|---|---|
| YOLOv7 | 83 |
| YOLOv7-w6 | 63 |
| YOLOv7-x | 77 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Das, A.; Yang, Y.; Subburaj, V.H. YOLOv7 for Weed Detection in Cotton Fields Using UAV Imagery. AgriEngineering 2025, 7, 313. https://doi.org/10.3390/agriengineering7100313
