Abstract

Correctly identifying and classifying food is decisive for food safety. The food sector is constantly evolving, and one of the technologies that stands out is augmented reality (AR). During practical studies at Companhia de Entrepostos e Armazéns Gerais de São Paulo (CEAGESP), responsible for the largest food storage in South America, difficulties were identified in classifying aromatic herbs due to the large number of species. The project aimed to create an innovative AR application called ARomaticLens to solve the challenges associated with identifying and classifying aromatic herbs using the design science research (DSR) methodology. The research was divided into five stages according to the DSR methodology, from surveying the problem situation at CEAGESP to validating the application through practical tests and an experience questionnaire completed by CEAGESP specialists. The study achieved 100% accuracy in identifying the 18 types of aromatic herbs studied when associated with the application’s local database without the use of an Internet connection, in addition to a score of 8 on a scale of 0 to 10 for the usability of the interface as rated by users. The advantage of the applied method is that the app can be used offline.

1. Introduction

Food security is a central concern in the United Nations Sustainable Development Goals (SDGs). Ensuring that the population has access to safe and healthy food is one of the fundamental pillars for achieving these goals [1]. Accurate identification of aromatic herbs is a challenge in combating food waste [2,3].

The pillars of Industry 4.0 and the food security goals outlined by the SDGs converge strategically in the search for efficient solutions to contemporary food challenges [4]. Industry 4.0, represented by technological pillars such as the Internet of Things (IoT), augmented reality (AR), big data, and artificial intelligence, offers opportunities to optimize the processes involved in the food supply chain and to increase operational efficiency, which is essential for achieving the SDG objectives [5]. Because the food sector is constantly evolving, innovation based on Industry 4.0 technologies plays a crucial role. AR is prominent in this scenario due to its versatility [6]. Overlaying food information with the help of AR is increasingly adopted in the food sector due to the ability to merge virtual data with objects in the physical world [7].

Computer vision (CV) is leading the way in automating and improving food identification processes. By employing algorithms obtained through targeted programming and testing, CV becomes capable of discerning the intrinsic characteristics of food, enabling the accurate and rapid classification of food products in different environments, from production to consumption [8]. Associated with AR, computer vision allows algorithms and machine learning techniques to extract meaningful information from images and videos [9,10].

During technical visits and practical studies carried out at Companhia de Entrepostos e Armazéns Gerais de São Paulo (CEAGESP), responsible for the largest storage and intermediation of fruits, vegetables, flowers and fish in South America, difficulties experienced by users in classifying aromatic herbs were observed due to the number of species available and the geometric proximity between the types of herbs studied [11].

The study addresses several challenges and gaps in the current methods of identifying aromatic herbs, which it aims to solve with the development of an augmented reality application called ARomaticLens. Below, we highlight five challenges and gaps.

- Morphological similarity: Many aromatic herbs have similar visual characteristics, such as leaf shape, color, and texture, which can lead to misidentification. This similarity is problematic as it compromises food safety and generates economic losses due to incorrect classification. Augmented reality performs better in problems involving morphological similarities, consuming fewer computational resources and data networks [12].

- Lack of technical knowledge: Consumers and even some professionals in the food sector may lack the technical knowledge needed to distinguish between different species of aromatic herbs. This lack of knowledge leads to errors in identification and classification, impacting food safety and quality and becoming an opportunity to use mobile devices with augmented reality [13].

- Economic impact: Incorrect identification of herbs can lead to economic losses, such as withdrawal of products from specific locations, waste, and damage to the companies’ reputation. Accurate identification is essential to prevent these economic consequences [14].

- Technological limitations: Current herb identification methods are often limited and require online environments, which may not be feasible in all settings. The proximity of CEAGESP to a provisional detention center limits Internet connectivity due to electromagnetic interference and security restrictions. This restriction prevents the use of cloud-based systems commonly used in augmented reality applications [15].

- Efficiency and user experience: Without advanced technological solutions, the current methods of identifying aromatic herbs are inefficient and do not provide a satisfactory user experience. The traditional printed leaflet method is prone to error and cannot offer detailed, real-time information about the herbs [16].

The ARomaticLens application was developed to address the challenges listed above. It integrates augmented reality and computer vision to provide an innovative solution, allowing users to identify and classify aromatic herbs accurately while offline. This application ensures high accuracy, efficiency, and a user-friendly experience, thus improving overall herb identification and classification [17].

This study aimed to create an AR application called ARomaticLens to solve the challenges associated with identifying and classifying aromatic herbs using the design science research (DSR) methodology [18]. The approach prioritizes accuracy, user-friendliness, and efficiency in accessing and retrieving relevant information, allowing users to use the app offline.

2. Background

2.1. Food Security Challenges Involving Aromatic Herbs

The growing demand for fresh, healthy, and safe food requires innovative solutions to guarantee the traceability and quality of products throughout the production chain [19]. The globalization of the food chain and consumers’ growing concern about food safety and quality drive the need for efficient traceability systems [20]. Accurate food identification is essential to ensure the traceability and quality of products throughout the production chain, from production to consumption, as the consumption of inadequately identified or classified foods can result in health risks, including allergic reactions and food poisoning [21]. To meet the growing demand for safe and healthy foods, it is essential that consumers and professionals in the food industry fully recognize and distinguish the foods they use [22].

Aromatic herbs come in various species, many of which have similar visual characteristics. This similarity can lead to errors in identification, compromising food safety and generating losses for the sector [23]. Morphological similarity is particularly problematic when species share visual characteristics, such as leaf shape, color, and texture [24].

Intraspecific variability adds to the difficulty: the morphological characteristics of aromatic herbs can vary within the same species due to climatic conditions, soil, and management [25]. Another challenge observed in identifying aromatic herbs is consumers’ lack of technical knowledge, which compromises their ability to distinguish the different species available [26]. Furthermore, incorrect food identification can lead to economic losses for the food sector, such as the withdrawal of products from specific locations, waste, and damage to the companies’ image [27]. The presence of allergens or unwanted substances mistaken for aromatic herbs can pose additional health risks, especially for those with food allergies. Therefore, food identification and classification challenges are critical for safety [28].

Herb recognition using mobile devices leverages various technologies, including image processing techniques and neural network algorithms, to accurately identify herb species based on their leaves and other characteristics. Various mobile applications have been previously created that utilize deep learning and image processing algorithms to recognize medicinal herbs, with systems achieving high accuracy in classifying different plant species [29,30]. Advanced techniques have been employed, achieving a recognition accuracy close to 98% for distinguishing similar herbs using smartphones [31]. However, most of them require an online environment.

2.2. Augmented Reality and Its Applications in the Food Chain

Augmented reality (AR) offers an innovative solution to address challenges in food identification. This technology allows virtual information to be superimposed on the physical world, offering users an interactive and immersive way to identify foods with different possibilities [32]. Some areas of gastronomy and food services are highlighted by associating AR with their activities, such as food traceability, monitoring storage conditions, and preventing adulteration by providing real-time information about food origin and quality [33].

Optimizing logistics processes with the help of AR minimizes risks. It ensures compliance with food safety standards, improving the quality and integrity of products supplied from packaging to consumers [34]. Consumers can easily access information, as the food industry has adopted AR in product labeling. They can point their smartphones at a product in the supermarket and, through AR information, obtain data on the ingredients, nutritional values, and even recipe suggestions using that product. This application of AR promotes transparency in food labeling and helps consumers make more informed decisions about their food choices [35].

The versatility of AR is also reflected in its use in training professionals in the food sector. Its contribution includes incorporating safety practices into training programs aimed at raising professionals’ awareness of food safety standards [36].

The use of AR technology associated with mobile devices offers versatility; however, it presents limitations when using complex architectures in the food sector. Mobile devices are limited by processing power, memory capacity, and battery life, significantly impacting the performance and feasibility of deploying sophisticated models [37].

Mobile devices typically have less processing power than desktop computers or dedicated servers. This limitation requires lightweight models that work efficiently without taxing the device’s CPU or GPU. Complex architectures, such as deep convolutional neural networks (CNNs) with numerous layers and parameters, can be computationally intensive, leading to slower inference times and reduced responsiveness. Therefore, optimizing the model for mobile deployment frequently involves reducing the parameters and layers, using techniques, such as model pruning, quantization, and knowledge distillation, to create more compact and efficient models [38].

Model size is also essential when deploying to mobile devices. Large models consume more memory, which can be a critical bottleneck given the limited RAM available on most mobile devices. This can lead to issues, like application crashes or slow performance, especially when dealing with high-resolution images or when processing multiple tasks simultaneously. Models must be optimized for size without sacrificing accuracy to mitigate this issue. Certain techniques, such as weight sharing and parameter reduction, can compress the model, making it more suitable for mobile environments [39].
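The memory argument above can be made concrete with a short calculation. The sketch below estimates only the memory needed to store a model's weights, as parameter count times bytes per weight. The parameter counts are approximate, commonly cited figures used here as assumptions (they are not taken from this study), and the function name `weight_memory_mib` is hypothetical.

```python
# Approximate parameter counts (commonly cited figures; assumptions for
# illustration only, not values reported in this study).
APPROX_PARAMS = {
    "VGG16": 138_000_000,
    "MobileNetV2": 3_500_000,
}

# Storage cost of a single weight for two common numeric formats.
BYTES_PER_WEIGHT = {"float32": 4, "int8": 1}


def weight_memory_mib(num_params: int, dtype: str) -> float:
    """Return the memory needed to store the weights alone, in MiB."""
    return num_params * BYTES_PER_WEIGHT[dtype] / (1024 ** 2)


if __name__ == "__main__":
    for name, count in APPROX_PARAMS.items():
        for dtype in BYTES_PER_WEIGHT:
            size = weight_memory_mib(count, dtype)
            print(f"{name:12s} {dtype:8s} {size:8.1f} MiB")
```

Under these assumptions, storing weights as 8-bit integers instead of 32-bit floats reduces weight storage by a factor of four, which is the kind of saving that quantization targets. Activations and runtime buffers add to the real footprint and are not modeled here.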

Complex, compute-intensive models can also drain the battery quickly, a critical consideration for mobile applications that must be used throughout the day without frequent charging. The model’s efficient design balances the computational load to ensure that the application remains usable without significantly impacting the device’s battery life [40].

2.3. Computer Vision Associated with Augmented Reality

Computer vision is an area of computer science that aims to develop systems and codes to decipher and understand visual elements of pictures or videos, allowing computers and machines to process, analyze, and extract meaning from visual data. CV systems can be categorized into types, such as 2D image analysis and pattern recognition [41].

CV applications are vast and constantly expanding and can be applied in several areas, such as augmented reality, by superimposing virtual information on the real world. This way, it is possible to improve the user experience and provide real-time interactive data based on images captured by devices, such as smartphones and tablets [42].

Image tracking is a method for creating AR experiences that combines AR with CV by tracking real-world objects in real time. One of the reference software packages in the AR area is Vuforia, currently in version 10.18 and developed by PTC. Vuforia works by comparing images from a tablet or smartphone camera with a database of known images. When the camera finds a known image, Vuforia places virtual objects on it [43].

Unity is another software package that stands out in the AR scene, currently in version 2022.1.16. It allows scripts written in the C# programming language to connect Vuforia with mobile development for AR applications. By combining the two programs to develop applications involving CV, it is possible to employ three image-tracking techniques: pattern recognition, feature tracking, and depth tracking [44].

In pattern recognition, Vuforia uses pattern recognition algorithms to identify known images in the camera image. In feature tracking, Vuforia uses feature tracking algorithms to identify characteristic points in known images and the camera image. In depth tracking, Vuforia uses depth tracking algorithms to measure the distance between the camera and real-world objects. By combining these technologies, Vuforia can track images with high accuracy and reliability, enabling developers to create interactive AR experiences [45].
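To illustrate the idea of measuring camera-to-object distance, the sketch below uses the classic pinhole camera relation: an object of known physical width appears smaller in the image the farther it is from the camera. This is a textbook approximation offered only as an illustration; it is not Vuforia's depth tracking algorithm, whose internals are not described in the source. The function name and all numeric values are hypothetical.

```python
def estimate_distance_m(focal_length_px: float,
                        target_width_m: float,
                        observed_width_px: float) -> float:
    """Estimate camera-to-target distance with the pinhole camera model.

    distance = focal_length (pixels) * real width (m) / apparent width (pixels)
    """
    if observed_width_px <= 0:
        raise ValueError("observed width must be positive")
    return focal_length_px * target_width_m / observed_width_px


if __name__ == "__main__":
    # Hypothetical values: 1000 px focal length, 0.20 m wide printed target
    # that spans 400 px in the camera image.
    print(estimate_distance_m(1000.0, 0.20, 400.0))
```

With these assumed values, the target would be about half a metre from the camera. Halving the apparent width doubles the estimated distance.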

CV can be employed in several areas of the food sector, such as monitoring plantations by identifying plant diseases and pests, analyzing crop development, and predicting productivity. Soil analysis is another example of CV associated with managing food quality by measuring soil fertility, identifying deficient nutrients, and determining the need for irrigation [46].

Food safety concerns have led several countries to adopt CV-based applications. Some examples are assessing food quality by detecting fresh or spoiled products, identifying food adulteration, and verifying the origin of products [45]. To assist in choosing healthy foods, nutritional information can be provided by identifying images of foods and their packaging through CV and comparing the captured image with a database of foods and their respective data [47].

2.4. Related Works

Research on the identification of aromatic herbs has advanced considerably in recent years, exploring various approaches and methodologies, such as augmented reality (AR) and artificial intelligence (AI) [48]. This section presents and discusses the major research works in this field.

Antunes et al. [48] proposed and developed a program to identify three aromatic herbs using an object detection algorithm through the YOLO v8 architecture with a convolutional neural network. Mustafa [49] developed a system for species identification and early detection of herbaceous diseases using computer vision and an electronic nose. This system focuses on extracting the smell, shape, color, and texture of herb leaves, as well as intelligent mixing involving systematic inference systems, naive Bayes classifiers (NB), a probabilistic neural network (PNN), and a support vector machine (SVM).

Convolutional neural networks have been successful in solving object identification problems. One study [50] contributes a new approach to herb identification using convolutional neural network models, such as InceptionV3, MobileNetV2, ResNet50V2, VGG16, and Xception, together with the RMSprop and Adam optimizers.

In the previous literature [51], a new CNN model was presented for herb identification, utilizing the partial information perception module (PPM) and the species classification module (SCM). Additionally, a new attention mechanism, called the part priority attention mechanism (PPAM), has been proposed, which independently trains the PPM using herb part labels. Another article [52] aimed to implement a system to analyze Ayurvedic leaves using convolutional neural networks (CNNs) based on transfer learning. The recognition of Ayurvedic herbs was performed based on their images. The experiment used 1,835 images of herbs from 30 species of healthy medicinal plants. The success rates for herb identification reached 98.97% accuracy in DenseNet121 Convolutional Neural Network tests. The VGG16, MobileNetV2, and InceptionV3 models reported test accuracies of 93.79%, 96.21%, and 97.59%, respectively.

A previous study [53] proposed the UTHM Herbs Garden Application Using Augmented Reality. This AR technology can facilitate community access to information about five types of herbs. Thus, based on the information provided, users can quickly memorize all the data about the plants through their mobile devices, making the process much more attractive compared to the conventional method of using labeling cards.

The Augmented Reality Portal Application for Medicinal Phytotherapy Plants aims to allow the visualization of medicinal plants and to serve as a source of knowledge and insights for the general public. This is made possible through the advanced technology of the Augmented Reality Portal, which can be accessed via Android smartphones [54].

Augmented reality is gradually becoming popular in education, providing a contextual and adaptive learning experience. In this context, [55] developed a Chinese herbal medicine platform based on AR using 3dsMax and Unity, allowing users to visualize and interact with herb models and learn related information. Users use their mobile cameras to scan a 2D image of the herb, triggering the display of the 3D AR model and corresponding text information on the screen in real time.

Another study [56] describes a mobile application that uses augmented reality to provide information about herbaceous plants commonly found in the Philippines. The application was developed using Unity software and the Vuforia SDK, chosen for its various features, such as its image converter, camera, tracker, video background renderer, application code, user-defined targets, and device databases. Additionally, the Vuforia QCAR algorithm, which identifies the tracking points of the image target from the database, was employed to enhance 3D movements.

We confirmed that the technologies used for herb identification on mobile devices are centered around artificial intelligence through convolutional neural networks and augmented reality, with more research focusing on AI. AR technology was chosen for this study due to its capability to work with 3D images, providing a more user-friendly application, consuming fewer computational resources on mobile devices, allowing execution on older devices, and functioning offline, making it suitable for environments without Internet access.

3. Materials and Methods

The design science research (DSR) methodology is a framework for creating technological artifacts, such as innovative technological systems, applications, and models, addressing complex problems and challenges in technology and information systems [18]. DSR is an applied research method that aims to create practical, innovative solutions to real-world problems by employing design principles and scientific approaches. This methodology is widely recognized for producing tangible and relevant results in the academic community and industry [57].

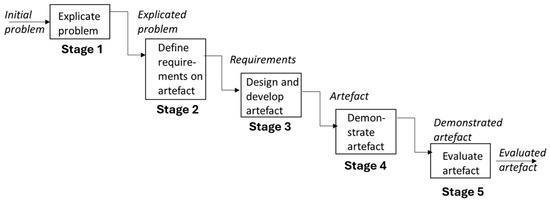

The DSR methodology originated in computer science, systems engineering, and information systems management. It comprises five well-defined stages that guide the creation of technological artifacts. These stages provide a systematic framework for designing, developing, evaluating, and validating innovative technological solutions [57]. The framework can be seen in Figure 1 [58].

Figure 1.

The 5 stages of the DSR methodology [58].

The DSR methodology is structured into five stages for creating and evaluating artifacts. In the first stage, the problem is explained: the issue to be resolved is identified and understood. In the second stage, the artifact’s requirements are defined, establishing its characteristics and functionalities. The third stage involves designing and developing the artifact based on the defined requirements. In the fourth stage, the artifact is demonstrated, testing its functionality and applicability in practical scenarios. In the fifth and final stage, the artifact is evaluated, analyzing its effectiveness and relevance [18].

3.1. Problem Description

The research occurred at the CEAGESP vegetable fair, where different aromatic herbs were presented. Some difficulties were highlighted after meetings with specialists from the institution’s management and field research carried out with professionals who sell the food.

The first issue raised by the group of professionals in the segment was the difficulty in identifying the types of aromatic herbs sold locally due to the high number of plants that pass through the warehouse daily. Currently, technical information is provided through a printed version of a CEAGESP technical publication containing the 18 main aromatic herbs sold locally, namely rosemary, garlic, leek, chives, coriander, dill, fennel, tarragon, mint, bay leaves, basil, marjoram, nira, oregano, parsley, celery, sage, and thyme. The printed version used at CEAGESP can be seen in Figure 2.

Figure 2.

Technical publication regarding the use of aromatic herbs.

The images used in the printed publication were obtained by experts, who created a set of 300 images in the JPG format relating to the study sector. During the meetings and technical visits, specialists agreed to make the images available for projects to improve local infrastructure.

The second issue was the difficulty in accurately classifying the types of aromatic herbs sold in the institution, even using the printed version. As some aromatic herbs have similar physical characteristics, such as shape, color, and texture, the customer’s choice of product in the warehouse becomes difficult.

The location of CEAGESP close to a provisional detention center presents a problem with regular access to the Wi-Fi network, which is essential for implementing modern technologies for identifying and classifying aromatic herbs. Electromagnetic interference and security restrictions associated with the proximity of the detention center limit the availability and stability of Internet connectivity via Wi-Fi. This limitation prevents the use of cloud-based systems, which are common in augmented reality applications and require network support for real-time data updating, information synchronization, and access to remote databases. The dependence on mobile data connections, which are often unstable and insufficient to support high-demand applications, further exacerbates the problem. This results in operational difficulties and technological limitations that directly impact the efficiency and accuracy of identifying aromatic herbs, which is essential for local commerce.

Given the understanding of the scenario, the first stage of the DSR methodology was completed after identifying the main problems faced by customers when purchasing aromatic herbs at the warehouse.

3.2. Define Requirements for Artifacts

The requirements that guided the development of the proposed artifact, in this case the application, emerged from meetings with experts and professionals from CEAGESP, who helped transform the complexities previously presented into demands for the project.

The study’s first step consisted of creating an image bank to support the identification of the 18 main aromatic herbs sold at the fair inside the warehouse. To this end, another 300 images were added in the JPG format captured during technical visits to the site, totaling 600 images for the preparation of the project.

The second step was the creation of a CV application associated with AR to overlay virtual information on aromatic herbs recorded in the real world, thus facilitating accurate identification. The main information shown by the application is the type of aromatic herb associated with the image locked onto by the cell phone camera, which appears on the device’s display. Due to the difficulty of accessing the Internet locally via cell phone, the information collected is processed locally; therefore, the database must be embedded in the application.

The third step was presenting technical information in the application. For each identified aromatic herb, the application must indicate which of the following six aromatic groups it belongs to:

- Cineol, linalool, camphor, and 4-Terpinenol

- Diallyl organosulfurs

- α-Pinene, carvone, limonene, linalool, and myristicin

- Caryophyllene, thymol, and terpinene

- Estragol and anethole

- Menthyl acetate

The fourth step is the association of the identified aromatic herb with the botanical family it belongs to, namely Amaryllidaceae, Apiaceae, Asteraceae, Lamiaceae, or Lauraceae.

The fifth step covered the transformation of aromatic herbs into seasoning, considering two variables: time and temperature. Each of the 18 types of aromatic herbs has different values for dehydrating and obtaining the seasoning. Concerning the ideal drying time, celery has the shortest time of 25 min due to its less dense structure, and leek has the longest of 89 min due to its thicker structure. In terms of temperature, thyme, which is more delicate, is dehydrated at the lowest temperature of all herbs, 78 °C, while rosemary, with a more robust composition, needs the highest temperature of all herbs, 150 °C, for effective drying. The values were obtained from the current printed publication of CEAGESP; across the herbs they span ranges of 64 min (89 − 25) and 72 °C (150 − 78), according to the structure and characteristics of each aromatic herb.

The presentation on the application screen will include suggestions for culinary uses according to the aromatic herb identified. Seventeen types of use were classified with possible applications for each herb identified, namely poultry, goat, meat, teas, mushrooms, stews, sauces, breads, pates, fish, pizzas, typical dishes, cheeses, salads, soups, pork, and pies.
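The requirements above imply that each herb in the offline database carries a small, fixed set of fields. The following is a minimal sketch of such a record, assuming a simple in-memory dictionary; the class name `HerbRecord`, the field names, and the helper functions are hypothetical and do not reproduce the application's actual data model. Only values stated in the text are filled in, and all other fields are left empty rather than guessed.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional, Tuple


@dataclass(frozen=True)
class HerbRecord:
    """One entry of a local (offline) herb database. Hypothetical layout."""
    name: str
    aromatic_group: Optional[str] = None     # one of the six aromatic groups
    botanical_family: Optional[str] = None   # e.g. Lamiaceae, Apiaceae
    drying_time_min: Optional[int] = None    # dehydration time in minutes
    drying_temp_c: Optional[int] = None      # dehydration temperature in deg C
    culinary_uses: Tuple[str, ...] = ()      # subset of the 17 culinary uses


# Only the extreme values quoted in the text are known; the rest stay None.
HERBS: Dict[str, HerbRecord] = {
    "celery": HerbRecord("celery", drying_time_min=25),
    "leek": HerbRecord("leek", drying_time_min=89),
    "thyme": HerbRecord("thyme", drying_temp_c=78),
    "rosemary": HerbRecord("rosemary", drying_temp_c=150),
}


def lookup(name: str) -> Optional[HerbRecord]:
    """Fetch a record by name without any network access."""
    return HERBS.get(name.strip().lower())


def value_range(records: Iterable[HerbRecord], field_name: str) -> int:
    """Return max - min of a numeric field, ignoring records where it is unset."""
    values = [getattr(r, field_name) for r in records
              if getattr(r, field_name) is not None]
    return max(values) - min(values)


if __name__ == "__main__":
    print(value_range(HERBS.values(), "drying_time_min"))  # 89 - 25
    print(value_range(HERBS.values(), "drying_temp_c"))    # 150 - 78
```

Because the text gives the minimum and maximum for each variable, the computed ranges reproduce the 64 min and 72 °C spans reported from the CEAGESP publication. Keeping the whole table inside the application is what allows lookups to work without a connection.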

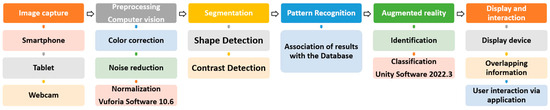

The technical information included in the project was extracted from the CEAGESP technical publication containing the 18 main aromatic herbs sold in the warehouse. The architecture used to obtain the solution can be seen in Figure 3.

Figure 3.

Project architecture for creating the application by integrating technologies.

3.3. Design and Development of the Artifact

3.3.1. Design

The development of the application was divided into steps, from capturing the image to displaying and interacting with the overlaid digital content. The process begins with capturing an image of the real world using an electronic device, such as a smartphone, tablet, or webcam. The captured images of the aromatic herbs serve as a basis for superimposing virtual elements. The captured image then goes through a pre-processing step to improve its quality and facilitate subsequent analysis. This step includes color correction (adjusting the image’s hue, saturation, and contrast to improve visual fidelity) and normalization (adjusting the image size and resolution to a suitable standard).
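The two pre-processing operations described above can be sketched on a tiny grayscale image stored as a list of rows. This is an illustrative simplification, assuming 8-bit grayscale pixels: it shows contrast adjustment and size normalization only, omits hue and saturation, and is not the pipeline used inside the application. The function names are hypothetical.

```python
from typing import List

Image = List[List[int]]  # grayscale image, pixel values 0..255


def stretch_contrast(image: Image) -> Image:
    """Linearly rescale pixel values so they span the full 0..255 range."""
    flat = [p for row in image for p in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        # A flat image has no contrast to stretch; return a copy unchanged.
        return [row[:] for row in image]
    return [[round((p - lo) * 255 / (hi - lo)) for p in row] for row in image]


def resize_nearest(image: Image, new_h: int, new_w: int) -> Image:
    """Resize to a standard size using nearest-neighbour sampling."""
    old_h, old_w = len(image), len(image[0])
    return [
        [image[r * old_h // new_h][c * old_w // new_w] for c in range(new_w)]
        for r in range(new_h)
    ]


if __name__ == "__main__":
    raw = [[50, 100], [150, 200]]
    corrected = stretch_contrast(raw)          # [[0, 85], [170, 255]]
    normalized = resize_nearest(corrected, 4, 4)
    for row in normalized:
        print(row)
```

Normalizing every capture to the same size and value range means that later comparison steps see inputs on a common scale, regardless of the device camera.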

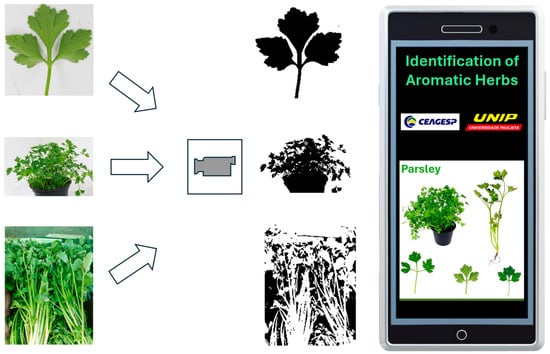

Image segmentation aims to identify and separate objects of interest from the background. This step can be performed through techniques such as shape detection, which identifies objects based on geometric features, such as corners, lines, and curves, and contrast detection, which identifies objects based on their contrast with the background. Then, the segmented objects are compared with a database of known patterns to identify and classify them.
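Contrast-based segmentation can be illustrated with a simple threshold on a grayscale image. The sketch below assumes that the background covers most of the frame, so the median pixel value is a reasonable background estimate; that assumption, the threshold value, and the function names are all hypothetical and are not taken from the application.

```python
from typing import List, Optional, Tuple

Image = List[List[int]]  # grayscale image, pixel values 0..255


def segment_by_contrast(image: Image, threshold: int) -> Image:
    """Return a binary mask: 1 where a pixel differs strongly from the background."""
    flat = sorted(p for row in image for p in row)
    background = flat[len(flat) // 2]  # median, assuming background dominates
    return [[1 if abs(p - background) > threshold else 0 for p in row]
            for row in image]


def bounding_box(mask: Image) -> Optional[Tuple[int, int, int, int]]:
    """Return (top, left, bottom, right) of the foreground, or None if empty."""
    coords = [(r, c) for r, row in enumerate(mask)
              for c, v in enumerate(row) if v]
    if not coords:
        return None
    rows = [r for r, _ in coords]
    cols = [c for _, c in coords]
    return min(rows), min(cols), max(rows), max(cols)


if __name__ == "__main__":
    frame = [
        [10, 10, 10, 10],
        [10, 200, 210, 10],
        [10, 190, 205, 10],
        [10, 10, 10, 10],
    ]
    mask = segment_by_contrast(frame, threshold=50)
    print(bounding_box(mask))  # (1, 1, 2, 2)
```

The resulting bounding box isolates the region that would then be compared against the known patterns in the database.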

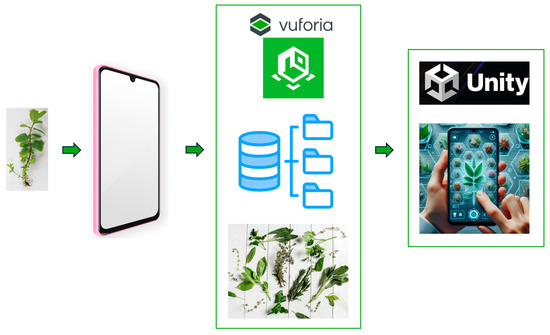

Based on the identification of objects, the information is associated with the database and superimposed on the original image, creating augmented reality. Two reference software packages in augmented reality were used to develop the proposed solution: Vuforia 10.6 and Unity 2022.3, which allow the insertion of the SDK file and the generation of the application in the APK format.

Unity is a multiplatform software known for its versatility in developing solutions involving AR and VR technologies. It supports various platforms, including Android and iOS, making it an ideal choice for mobile AR development. Unity’s cross-platform capabilities allow developers to create applications running on multiple devices and operating systems with minimal adjustments. This ensures a wider reach and accessibility of the developed AR applications. Additionally, Unity offers an intuitive interface, an extensive library, and a well-documented API for developers, including 3D models, scripts, and plug-ins, significantly speeding up the development process in AR projects. Unity’s real-time rendering engine ensures high-quality graphics and smooth performance, which is essential for creating immersive AR experiences. Its optimization tools help maintain device performance regardless of operating system versions [44].

Vuforia is an AR platform that integrates with Unity, providing advanced AR functionality. It supports image recognition, object tracking, and 3D target detection, making it a versatile tool for AR development. Some features stand out in the market, such as its tracking capabilities, including image targets, 3D objects, and ground plane detection, allowing the creation of highly interactive AR experiences. The ability to recognize and track multiple targets simultaneously improves the interactivity of the application. Furthermore, Vuforia features a scalable architecture, allowing the creation of applications that handle various types of targets and environments, making it suitable for diverse AR applications [43].

The combination of Unity and Vuforia provides a versatile framework that makes it easy to use Unity’s interface rendering capabilities along with Vuforia’s tracking and integration capabilities when creating AR application projects.

3.3.2. Artifact Development

A local database of aromatic herb images and information has been integrated into the ARomaticLens application, allowing the computer vision algorithm to compare and classify captured images accurately. This database comprises captured reference images and detailed information about each aromatic herb, ensuring a reliable source for herb identification and classification.

C# scripts were developed in the application’s backend to manage image processing, data retrieval, and user interactions. These scripts are responsible for controlling image capture, applying pre-processing techniques to improve image quality, and running the computer vision algorithm that analyzes and classifies herbs. Additionally, these scripts facilitate integrating augmented reality (AR) functionalities and computer vision technologies within the application. One of the scripts used in the project can be seen in Figure 4.

Figure 4.

C# Script for image identification.

Augmented reality (AR) allowed information about herbs to be overlaid directly onto the captured image, providing an interactive and informative visualization. This process was managed in real time, ensuring that users received instant feedback on herb identification, which was crucial for practical applications in certain environments, like CEAGESP.

The Vuforia 10.6 software stores and classifies these images based on shapes and contrasts. Vuforia, through its image tracking and recognition capabilities, allowed the precise mapping of herb images, facilitating real-time recognition using the following techniques:

- Image tracking: The application uses Vuforia 10.6 to track images in real time. This involves capturing images with the mobile device’s camera and comparing them with a local database of images of known herbs. When a match is found, virtual objects and information are overlaid onto the real-world image.

- Pattern recognition: Vuforia uses pattern recognition algorithms to identify known images in the camera feed. This involves analyzing the herbs’ shapes, contrasts, and geometries to facilitate precise identification.

- Feature tracking: The application uses feature tracking to identify characteristic points in images, allowing the precise overlay of virtual information on physical images of the herb.

- Depth tracking: Depth tracking algorithms measure the distance between the camera and the herbs, ensuring accurate positioning of augmented reality elements.
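The general idea behind matching a camera frame against a local database of reference features can be illustrated with a small sketch. This is a generic, simplified illustration written for this text, not Vuforia's actual algorithm, which the paper does not detail. The names `LOCAL_DB`, `hamming`, `count_matches`, and `identify`, the toy 8-bit descriptors, and the thresholds are all assumptions.

```python
from typing import Dict, List, Optional

# Hypothetical local database: each herb maps to a few binary feature
# descriptors (integers used as bit strings). Real trackers store far more.
LOCAL_DB: Dict[str, List[int]] = {
    "herb_a": [0b10110010, 0b01101100, 0b11100001],
    "herb_b": [0b00011101, 0b10000111, 0b01010101],
}


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two descriptors."""
    return bin(a ^ b).count("1")


def count_matches(frame: List[int], reference: List[int], max_dist: int = 1) -> int:
    """Count frame descriptors within max_dist bits of any reference descriptor."""
    return sum(
        1 for d in frame if any(hamming(d, r) <= max_dist for r in reference)
    )


def identify(frame: List[int], min_matches: int = 2) -> Optional[str]:
    """Return the best-matching herb, or None when too few descriptors agree."""
    best_name: Optional[str] = None
    best_score = 0
    for name, reference in LOCAL_DB.items():
        score = count_matches(frame, reference)
        if score > best_score:
            best_name, best_score = name, score
    return best_name if best_score >= min_matches else None


# Two of the three frame descriptors are close to herb_a's references.
print(identify([0b10110011, 0b01101100, 0b11111111]))
```

Because the comparison uses only data stored on the device, a lookup of this kind needs no network access, which is consistent with the offline requirement described in the paper.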

The application was built on the Unity 2022.3 platform, which offered cross-platform support and tools for building the user interface interactively. Integrating Vuforia with Unity allowed the application to combine Vuforia’s tracking with Unity’s real-time rendering while maintaining adequate performance. C# scripts were created to manage image processing and the display of relevant information. The step-by-step application development process can be seen in Figure 5.

Figure 5.

The artifact process flowchart.

3.4. Demonstrate Artifact

The demonstration of the developed technological artifact begins with installing the application on a mobile device running Android version 8 (API level 26) or later with at least 232 MB of free space for the installation. Once installed, the app runs, activating the device’s camera and allowing the user to scan different aromatic herb samples. The application processes the captured images in real time using image recognition algorithms and overlays AR information directly on the device’s screen.
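The installation requirements stated here (Android 8 with API level 26 and 232 MB of free space) can be expressed as a simple pre-installation check. The following is a minimal sketch; the function name `can_install` and its parameters are hypothetical and are not part of the application described in the paper.

```python
MIN_API_LEVEL = 26  # Android 8, as stated in the text
APP_SIZE_MB = 232   # installation size stated in the text


def can_install(api_level: int, free_space_mb: float) -> bool:
    """Return True when a device meets both stated requirements."""
    return api_level >= MIN_API_LEVEL and free_space_mb >= APP_SIZE_MB


print(can_install(api_level=26, free_space_mb=500))  # meets both requirements
print(can_install(api_level=25, free_space_mb=500))  # Android version too old
```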

During the demonstration, the user positions the device’s camera over an aromatic herb, and the application automatically identifies the plant. Detailed information about the herb, including scientific name, visual characteristics, and culinary and medicinal uses, is displayed, overlaid on the live image of the plant.
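The step from a recognized herb to the information overlaid on screen amounts to a lookup in the local database. A minimal sketch of such a lookup follows, assuming a record with the fields named in the text (scientific name, visual characteristics, and uses). The `HerbInfo` class, the `overlay_text` function, and the basil entry are illustrative assumptions and are not taken from the ARomaticLens database.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class HerbInfo:
    common_name: str
    scientific_name: str
    visual_traits: str
    uses: str


# Illustrative entry only; the paper does not list its database records.
HERBS: Dict[str, HerbInfo] = {
    "basil": HerbInfo(
        common_name="Basil",
        scientific_name="Ocimum basilicum",
        visual_traits="broad, glossy green leaves",
        uses="culinary seasoning; traditional medicinal use",
    ),
}


def overlay_text(target_id: str) -> Optional[str]:
    """Build the text shown over the camera image, or None if unknown."""
    info = HERBS.get(target_id)
    if info is None:
        return None
    return (
        f"{info.common_name} ({info.scientific_name})\n"
        f"{info.visual_traits}\n"
        f"Uses: {info.uses}"
    )


print(overlay_text("basil"))
```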

The demo also illustrates the application’s intuitive interface, highlighting its simple user-directed visual language, designed to be accessible to both experts and laypeople, ensuring a positive user experience.

3.5. Validation

Initially, to validate the application, a system presentation meeting was held, where four experts who work at CEAGESP in the aromatic herbs sector were invited to complete a demonstration of the application. The user interface, image recognition algorithms, and augmented reality functionalities were highlighted during this session. Experts were able to interact with the application, providing immediate feedback on its usability and accuracy in identifying aromatic herbs.

Subsequently, a detailed verification of the application’s functioning was conducted, focusing on the features requested by experts during the requirements stages. Each function was tested individually to ensure that all technical and operational specifications were met. This verification process included performance, stability, and accuracy testing, ensuring the application operates as expected under different usage conditions.

In addition, an evaluation questionnaire was administered to participants in the presentation meeting. This questionnaire was structured to collect qualitative and quantitative data about users’ experience with the application, covering key aspects, such as ease of use, the clarity of the information presented, and general satisfaction. The responses obtained provide valuable insights for possible improvements and adjustments to the application.

Finally, the application was used realistically during the aromatic herb trade fair at CEAGESP. This scenario allowed us to observe the application’s performance in a practical context, evaluating its effectiveness in identifying aromatic herbs in real time and its acceptance by end users. The data collected at this stage complement the evaluation and validation of the artifact, ensuring its applicability.

4. Results and Discussions

Based on the selection of the 18 species of aromatic herbs most sold in the warehouse, 600 images were classified for insertion into the database. The photographs were captured in different scenarios, namely open-air markets, supermarkets, and CEAGESP distribution points, so that CV recognition would be exercised under conditions as close as possible to real use. The main images obtained can be viewed in Figure 6.

Figure 6.

Aromatic herbs selected at the research site.

The database containing images was inserted into the Vuforia 10.6 software for storage, classification, and targeting associated with AR. The software, through CV, classifies and categorizes the captured images, considering the variables and apparent geometry of the aromatic herbs. All 600 images were added to the database to be categorized according to their shapes and contrast from the original images. Figure 7 shows the transition process for CV recognition.

Figure 7.

Example of aromatic herbs with the application of CV.

The identification capacity of each captured image was graded from 0 to 5 stars: 0 for images that the CV could not process; 1 to 4 for images that the CV could identify, with the grade varying according to recognition and processing time; and 5 for recognition that was considered instantaneous. To highlight the results obtained by the CV classification applied to aromatic herbs, a database was created containing the star ratings attributed to each image, which can be seen in Figure 8.

Figure 8.

Images classified using computer vision.

Some variables interfere with image processing when applying CV, such as the number of aromatic herbs in the same figure, the lighting where the photo was taken, the proximity between the capture lens and the object to be detailed, and the focus and definition of the result. Table 1 presents the results obtained when submitting the bank of 600 images to CV analysis using the Vuforia 10.6 software.

Table 1.

Results of CV analysis using the software.

Images with one star had an average processing time of 30 s. For those rated two stars, recognition took about 20 s. Images rated three stars required 8 s to process, and images with four stars were processed in approximately 3 s. Finally, images recognized instantly, in less than 2 s, received the maximum rating of five stars. Building the application’s database from the five-star images provided several benefits, such as the following:

- Increased identification accuracy: By selecting high-quality and accurate images rated five stars, the new database ensures that the recognition system in Unity has a robust reference base, increasing its accuracy in identifying aromatic herbs.

- Improvement in system efficiency: With an optimized database containing only high-quality images, the processing of images by the system becomes more efficient, resulting in a shorter response time and greater agility in the user’s interaction with the application.

- Reduction in errors: Including well-classified images reduces the incidence of errors in recognizing herbs, as the selected images clearly and distinctly represent the characteristics of each species, facilitating correct classification by the system.

- Quality of user experience: With a well-structured and accurate database, the user experience is significantly improved. The augmented reality application offers fast and reliable responses, increasing user satisfaction and the system’s overall usability.
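The star ratings and the selection of five-star images described above can be sketched in a few lines. The times in `REPORTED_TIME_S` are the values reported in the text; the image names, the sample ratings, and the function `keep_five_star` are hypothetical and serve only as an illustration.

```python
from typing import Dict, List, Tuple

# Processing times per star rating as reported in the text, in seconds.
# A rating of 0 means the image could not be processed, so it has no time.
# The five-star value is an upper bound ("less than 2 s").
REPORTED_TIME_S: Dict[int, float] = {1: 30.0, 2: 20.0, 3: 8.0, 4: 3.0, 5: 2.0}


def keep_five_star(ratings: List[Tuple[str, int]]) -> List[str]:
    """Return the names of images rated five stars, preserving input order."""
    return [name for name, stars in ratings if stars == 5]


# Hypothetical ratings for four images.
sample = [("img_001", 5), ("img_002", 3), ("img_003", 0), ("img_004", 5)]
print(keep_five_star(sample))
```

Filtering on the rating alone keeps the selection rule independent of how the rating was assigned, so the same function applies if the rating criteria are later refined.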

To complement the results and facilitate the understanding of the data collected during development, a table was created detailing the processing of images of aromatic herbs using CV. Table 2 presents information about the original image, the transition, the definition of shapes and contrasts, the number of stars assigned to each image, and the processing time in seconds. This structure allows a comparative analysis of the results found through the CV algorithm used by the Vuforia 10.6 software, highlighting the effectiveness of the previous analysis before using the database. Furthermore, Table 2 helps to identify patterns and trends in the processing of different aromatic herbs, enabling specific adjustments to the algorithm for future optimization.

Table 2.

Details of image processing using CV.

The folders containing the 18 species of aromatic herbs were inserted into the “Assets” environment of the Unity 2022.3 software so that the data could be referenced in augmented reality. A script was written in the C# programming language to point to the folders containing the images. This script manages the access and use of images in the context of the application.

Another C# script was developed to reference and retrieve information obtained from an image, such as the title, link, and reference to the associated image. The programming allows integration between the captured image and image processing via CV, selecting the contrasts and geometries obtained and, consequently, the result on the cell phone screen. The development architecture of these stages can be seen in Figure 9.

Figure 9.

Architecture involving CV and AR.

At the launch of the ARomaticLens application, a presentation screen containing information about the partners involved in the project’s development was incorporated. This addition is intended to acknowledge the collaboration and support received and provide users with an initial understanding of the scope of the application. A close button was strategically positioned in the top left corner of the interface, close to the position of the mobile device’s front camera, to improve the app’s usability. This positioning facilitates the user experience, allowing the user to close the presentation screen quickly and intuitively.

To assist users, a message was placed in the center of the screen with the text “Point to the aromatic herb you want to identify!”. This message guides users on the correct procedure for identifying aromatic herbs. A link to the CEAGESP website was also included for use when the application runs in a location with access to a Wi-Fi network. This link allows users to download the PDF version of the printed technical reference made available by CEAGESP, providing an additional source of detailed information about aromatic herbs.

The application was built to work on the Android operating system, starting from Android version 8 with API Level 26. With a size of 232 MB, the application guarantees the inclusion of all essential functionalities without compromising the performance or usability of the device. This comprehensive version ensures that users can access a robust and efficient tool for aromatic herb identification.

The result of the application’s operation, including its intuitive interface and built-in functionalities, can be seen in Figure 10. The image shows how the application facilitates the identification of aromatic herbs, highlighting the effectiveness of ARomaticLens in meeting users’ needs. The well-designed interface, clear instructions, and access to additional features make ARomaticLens a valuable tool for identifying and classifying aromatic herbs, offering an innovative and efficient solution for the food sector.

Figure 10.

Complete operation of the application.

The implementation involved carrying out the first test of ARomaticLens with the developed features. The application’s interactions with users were simulated, not limited to functionality, but also considering the usability and performance of the application. This ensured that ARomaticLens worked as expected in meeting the established requirements and providing an efficient user experience. The validation was carried out by four experts testing the application at the CEAGESP fair and verifying its operation.

Specialist A is an operational technician with 20 years of experience in the company; Specialist B is a food engineer with 5 years of experience; Specialist C is an agricultural engineer with 26 years of experience and is the director of the area; and Specialist D is an operational technician with 25 years of experience. The specialists were asked to install the application on their cell phones and to check its usability and accuracy in identifying the 18 types of aromatic herbs.

The experts reported that the application worked according to the objective of identifying aromatic herbs on mobile devices, with low processing power and without an Internet connection, with 100% accuracy.

After use, a questionnaire was applied as a research instrument, containing four open questions and one closed question with a scale from 0 to 10 to evaluate usability. The first question asked whether the application was intuitive to use for identification; the experts unanimously reported that it was. The second question asked whether the application was efficient in collecting data; the experts responded that it was efficient without an Internet connection. One of the experts had an older cell phone running Android version 8 (API Level 26), and the application worked satisfactorily. The third question asked whether the application provided results in real time; the results presented on the experts’ screens were obtained with a processing time of less than 2 s, showing the efficiency of the application in real time. The fourth question asked how the application worked without an Internet connection; all four cell phones could run the application without Internet access.

Next, the group of experts was asked to give a grade on the application’s user interface (UI). Specialist A gave a score of 9, specialists B and C gave a score of 8, and specialist D gave a score of 7 for the application’s usability, showing that the application satisfactorily met the usability target proposed in the project.
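The four individual scores can be checked against the overall usability score of 8 reported for the application. The short calculation below uses only the scores given in the text; the variable names are illustrative.

```python
# Usability scores (0 to 10) given by the four specialists, as reported.
scores = {"A": 9, "B": 8, "C": 8, "D": 7}

average = sum(scores.values()) / len(scores)
lowest, highest = min(scores.values()), max(scores.values())

print(f"mean = {average}, range = {lowest} to {highest}")
```

The mean is (9 + 8 + 8 + 7) / 4 = 8, with individual scores ranging from 7 to 9.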

The implementation phase represents a significant milestone in transforming the concept into a functional technological solution. Figure 11 shows the moment the application was validated at CEAGESP.

Figure 11.

Experts validate the application at CEAGESP in the field.

The current study presents a mobile application leveraging smartphone camera capabilities to achieve high-accuracy recognition of common aromatic herbs. The proposed innovation lies in the possibility of using it offline. While the application offers a valuable tool for identifying common aromatic herbs, its usefulness is geographically restricted due to its limited database. As in previous studies [35,59], the application’s reliance on augmented reality (AR) technology introduces potential limitations. Factors such as lighting and the herb’s physical state can affect the accuracy of the AR identification. Finally, user experience may be hindered by the need for technical proficiency with smartphones, potentially excluding those less familiar with modern mobile technology.

A previous study [30] features herb recognition accuracy. However, some limitations remain. As in the present study, the system could misidentify plants, particularly in cluttered natural settings. Additionally, as in the present study, the effectiveness of the augmented reality (AR) features hinged on the user’s mobile device. Performance and accuracy can fluctuate depending on hardware specifications. Lastly, expanding the application’s plant library would necessitate significant resources for data collection and model retraining.

Real-time performance is a critical aspect, often constrained by the processing capabilities of the mobile device used. Older or less powerful devices may experience reduced functionality, affecting the fluidity and responsiveness of the AR application, which are essential for maintaining user engagement and effectiveness [60]. Additionally, the quality of user interaction with the system plays a pivotal role. Our validation showed that the application is user-friendly; however, this interaction quality largely depends on the user’s prior experience with AR technologies and the design’s intuitiveness.

Furthermore, object recognition accuracy within the AR system is susceptible to environmental variables [36,59]. Conditions such as inadequate lighting or obstructions in the camera’s visual field can severely impair the system’s ability to recognize objects, in this case herbs, and display information about them accurately. Such challenges highlight the need for robust design considerations in AR applications to ensure they are accessible and effective across varying technical specifications and user experiences. In many agricultural sites and large warehouses, Internet access is limited; the present solution therefore offers the possibility of offline use.

Many advanced models rely on cloud computing to handle complex processing tasks, which can offload computing from the mobile device. However, this approach requires a stable Internet connection, which is not always available, especially in environments, like CEAGESP, where connectivity is limited due to electromagnetic interference and security restrictions. Therefore, the ARomaticLens application must work offline, requiring all processing to be carried out locally on the device. This requirement further emphasizes the need for lightweight and efficient models to deliver high accuracy while operating completely offline [15].

Model speed is another crucial factor. Users expect real-time performance where the app can quickly identify and classify herbs without noticeable delays. Slow inference times can degrade the user experience and make the application impractical for real-world use. Therefore, optimizing the model for faster inference times is vital. This can be achieved by simplifying the model architecture, using efficient algorithms, and taking advantage of hardware acceleration features available in modern mobile devices. When developing the ARomaticLens app, we considered the limitations associated with mobile devices [13]. By optimizing the model for size, speed, and efficiency, we ensure that the application remains responsive and practical for users in environments with limited connectivity and varying hardware resources. These considerations are fundamental to providing a robust, easy-to-use solution for identifying and classifying aromatic herbs [54].

5. Conclusions

This research achieved the objective of creating an application for identifying and classifying aromatic herbs in an environment with great diversity and similarity between the 18 species studied.

Users unanimously validated the use of the application in a natural environment and proved its functionality in the field. The processing of the images to be identified via computer vision guaranteed the accuracy of the results on the application screen. The processing of results within the application allowed its use without using the Internet, thus guaranteeing usability in the intended environment. The integration between the software allowed the generation of an augmented reality application integrated with computer vision technology.

Author Contributions

All authors contributed equally to the development of the paper. All authors have read and agreed to the published version of the manuscript.

Funding

This study was financed in part by the Coordenação de Aperfeiçoamento de Pessoal de Nível Superior—Brasil (CAPES)—Finance Code 001.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- UNDP. United Nations Development Programme. A Goal 2: Zero Hunger-Sustainable Development Goals. Available online: https://www.undp.org/sustainable-development-goals/zero-hunger (accessed on 12 December 2023).

- Chauhan, C.; Dhir, A.; Akram, M.U.; Salo, J. Food loss and waste in food supply chains. A systematic literature review and framework development approach. J. Clean. Prod. 2021, 295, e126438. [Google Scholar] [CrossRef]

- Pages-Rebull, J.; Pérez-Ràfols, C.; Serrano, N.; del Valle, M.; Díaz-Cruz, J.M. Classification and authentication of spices and aromatic herbs using HPLC-UV and chemometrics. Food Biosci. 2023, 52, e102401. [Google Scholar] [CrossRef]

- Galanakis, C.M.; Rizou, M.; Aldawoud, T.M.S.; Ucak, I.; Rowan, N.J. Innovations and technology disruptions in the food sector within the COVID-19 pandemic and post-lockdown era. Trends Food Sci. Technol. 2021, 110, 193–200. [Google Scholar] [CrossRef] [PubMed]

- Kayikci, Y.; Subramanian, N.; Dora, M.; Bhatia, M.S. Food supply chain in the era of Industry 4.0: Blockchain technology implementation opportunities and impediments from the perspective of people, process, performance, and technology. Prod. Plan. Control. 2022, 33, 301–321. [Google Scholar] [CrossRef]

- Ghobakhloo, M.; Fathi, M.; Iranmanesh, M.; Maroufkhani, P.; Morales, M.E. Industry 4.0 ten years on: A bibliometric and systematic review of concepts, sustainability value drivers, and success determinants. J. Clean. Prod. 2021, 302, 127052. [Google Scholar] [CrossRef]

- Dubey, S.R.; Jalal, A.S. Application of Image Processing in Fruit and Vegetable Analysis: A Review. J. Intell. Syst. 2015, 24, 405–424. [Google Scholar] [CrossRef]

- Mohanty, S.P.; Hughes, D.P.; Salathé, M. Using deep learning for image-based plant disease detection. Front. Plant Sci. 2016, 7, e215232. [Google Scholar] [CrossRef] [PubMed]

- Jatnika, R.D.A.; Medyawati, I.; Hustinawaty, H. Augmented Reality Design of Indonesia Fruit Recognition. Int. J. Electr. Comput. Eng. 2018, 8, 4654–4662. [Google Scholar]

- Kalinaki, K.; Shafik, W.; Gutu, T.J.L.; Malik, O.A. Computer Vision and Machine Learning for Smart Farming and Agriculture Practices. In Artificial Intelligence Tools and Technologies for Smart Farming and Agriculture Practices; IGI Global: Hershey, PA, USA, 2023; pp. 79–100. [Google Scholar] [CrossRef]

- Department of Economic and Social Affairs. Food Security, Nutrition, and Sustainable Agriculture. Available online: https://sdgs.un.org/topics/food-security-and-nutrition-and-sustainable-agriculture (accessed on 12 December 2023).

- Cheok, A.D.; Karunanayaka, K. Virtual Taste and Smell Technologies for Multisensory Internet and Virtual Reality; Springer International Publishing: Cham, Switzerland, 2018. [Google Scholar] [CrossRef]

- Liberty, T.J.; Sun, S.; Kucha, C.; Adedeji, A.A.; Agidi, G.; Ngadi, M.O. Augmented reality for food quality assessment: Bridging the physical and digital worlds. J. Food Eng. 2024, 367, 111893. [Google Scholar]

- Velázquez, R.; Rodríguez, A.; Hernández, A.; Casquete, R.; Benito, M.J.; Martín, A. Spice and Herb Frauds: Types, Incidence, and Detection: The State of the Art. Foods 2023, 12, 3373. [Google Scholar] [CrossRef]

- Hossain, M.F.; Jamalipour, A.; Munasinghe, K. A Survey on Virtual Reality over Wireless Networks: Fundamentals, QoE, Enabling Technologies, Research Trends and Open Issues. TechRxiv 2023. [Google Scholar] [CrossRef]

- Ding, H.; Tian, J.; Yu, W.; Wilson, D.I.; Young, B.R.; Cui, X.; Xin, X.; Wang, Z.; Li, W. The Application of Artificial Intelligence and Big Data in the Food Industry. Foods 2023, 12, 4511. [Google Scholar] [CrossRef]

- Feng, Y.; Wang, Y.; Beykal, B.; Qiao, M.; Xiao, Z.; Luo, Y. A mechanistic review on machine learning-supported detection and analysis of volatile organic compounds for food quality and safety. Trends Food Sci. Technol. 2024, 143, 104297. [Google Scholar] [CrossRef]

- Johannesson, P.; Perjons, E. Systems Development and the Method Framework for Design Science Research. In An Introduction to Design Science; Johannesson, P., Perjons, E., Eds.; Springer International Publishing: Berlin/Heidelberg, Germany, 2014; pp. 157–165. [Google Scholar] [CrossRef]

- Moradkhani, H.; Ahmadalipour, A.; Moftakhari, H.; Abbaszadeh, P.; Alipour, A. A review of the 21st century challenges in the food-energy-water security in the Middle East. Water 2019, 11, 682. [Google Scholar] [CrossRef]

- Naylor, R.L.; Hardy, R.W.; Buschmann, A.H.; Bush, S.R.; Cao, L.; Klinger, D.H.; Little, D.C.; Lubchenco, J.; Shumway, S.E.; Troell, M. A 20-year retrospective review of global aquaculture. Nature 2021, 591, 551–563. [Google Scholar] [CrossRef]

- Chapman, J.A.; Bernstein, I.L.; Lee, R.E.; Oppenheimer, J.; Nicklas, R.A.; Portnoy, J.M.; Sicherer, S.H.; Schuller, D.E.; Spector, S.L.; Khan, D.; et al. Food allergy: A practice parameter. Ann. Allergy Asthma Immunol. 2006, 96, S1–S68. [Google Scholar] [CrossRef]

- Gremillion, T.M. Food Safety and Consumer Expectations. In Encyclopedia of Food Safety, 2nd ed.; Smithers, G.W., Ed.; Academic Press: Oxford, UK, 2024; pp. 547–550. [Google Scholar]

- Salgueiro, L.; Martins, A.P.; Correia, H. Raw materials: The importance of quality and safety. A review. Flavour Fragr. J. 2010, 25, 253–271. [Google Scholar] [CrossRef]

- Guiné, R.P.F.; Gonçalves, F.J. Bioactive compounds in some culinary aromatic herbs and their effects on human health. Mini-Rev. Med. Chem. 2016, 16, 855–866. [Google Scholar] [CrossRef]

- Kindlovits, S.; Gonçalves, F.J. Effect of weather conditions on the morphology, production and chemical composition of two cultivated medicinal and aromatic species. Eur. J. Hortic. Sci. 2014, 79, 76–83. [Google Scholar]

- Raffi, J.; Yordanov, N.D.; Chabane, S.; Douifi, L.; Gancheva, V.; Ivanova, S. Identification of irradiation treatment of aromatic herbs, spices and fruits by electron paramagnetic resonance and thermoluminescence. Spectrochim. Acta-Part A Mol. Biomol. Spectrosc. 2000, 56, 409–416. [Google Scholar] [CrossRef]

- Rocha, R.P.; Melo, E.C.; Radünz, L.L. Influence of drying process on the quality of medicinal plants: A review. J. Med. Plant Res. 2011, 5, 7076–7084. [Google Scholar] [CrossRef]

- Lacis-Lee, J.; Brooke-Taylor, S.; Clark, L. Allergens as a Food Safety Hazard: Identifying and Communicating the Risk. In Encyclopedia of Food Safety, 2nd ed.; Smithers, G.W., Ed.; Academic Press: Oxford, UK, 2024; pp. 700–710. [Google Scholar]

- Husin, Z.; Shakaff, A.; Aziz, A.; Farook, R.S.M.; Jaafar, M.N.; Hashim, U.; Harun, A. Embedded portable device for herb leaves recognition using image processing techniques and neural network algorithm. Comput. Electron. Agric. 2012, 89, 18–29. [Google Scholar] [CrossRef]

- Senevirathne, L.; Shakaff, A.; Aziz, A.; Farook, R.; Jaafar, M.; Hashim, U.; Harun, A. Mobile-based Assistive Tool to Identify & Learn Medicinal Herbs. In Proceedings of the 2nd International Conference on Advancements in Computing (ICAC), Colombo, Sri Lanka, 10–11 December 2020; Volume 1, pp. 97–102. [Google Scholar] [CrossRef]

- Lan, K.; Tsai, T.; Hu, M.; Weng, J.-C.; Zhang, J.-X.; Chang, Y.-S. Toward Recognition of Easily Confused TCM Herbs on the Smartphone Using Hierarchical Clustering Convolutional Neural Network. Evid.-Based Complement. Altern. Med. 2023, 2023, e9095488. [Google Scholar] [CrossRef]

- Weerasinghe, N.C.; AVGHS, A.; Fernando, W.W.R.S.; Rajapaksha, P.R.K.N.; Siriwardana, S.E.; Nadeeshani, M. HABARALA—A Comprehensive Solution for Food Security and Sustainable Agriculture through Alternative Food Resources and Technology. In Proceedings of the 2023 IEEE 17th International Conference on Industrial and Information Systems (ICIIS), Peradeniya, Sri Lanka, 25–26 August 2023; pp. 175–180. [Google Scholar]

- How augmented reality (AR) is transforming the restaurant sector: Investigating the impact of “Le Petit Chef” on customers’ dining experiences. Technol. Forecast. Soc. Change 2021, 172, 121013. [CrossRef]

- Reif, R.; Walch, D. Augmented & Virtual Reality applications in the field of logistics. Vis. Comput. 2008, 24, 987–994. [Google Scholar]

- Chai, J.J.K.; O’Sullivan, C.; Gowen, A.A.; Rooney, B.; Xu, J.-L. Augmented/mixed reality technologies for food: A review. Trends Food Sci. Technol. 2022, 124, 182–194. [Google Scholar] [CrossRef]

- Domhardt, M.; Tiefengrabner, M.; Dinic, R.; Fötschl, U.; Oostingh, G.J.; Stütz, T.; Stechemesser, L.; Weitgalsser, R.; Ginzinger, S.W. Training of Carbohydrate Estimation for People with Diabetes Using Mobile Augmented Reality. J. Diabetes Sci. Technol. 2015, 9, 516–524. [Google Scholar] [CrossRef] [PubMed]

- Musa, H.S.; Krichen, M.; Altun, A.A.; Ammi, M. Survey on Blockchain-Based Data Storage Security for Android Mobile Applications. Sensors 2023, 23, 8749. [Google Scholar] [CrossRef]

- Graney-Ward, C.; Issac, B.; Ketsbaia, L.; Jacob, S.M. Detection of Cyberbullying Through BERT and Weighted Ensemble of Classifiers. TechRxiv 2022. [Google Scholar] [CrossRef]

- Dunkel, E.R.; Swope, J.; Candela, A.; West, L.; Chien, S.A.; Towfic, Z.; Buckley, L.; Romero-Cañas, J.; Espinosa-Aranda, J.L.; Hervas-Martin, E.; et al. Benchmarking Deep Learning Models on Myriad and Snapdragon Processors for Space Applications. J. Aerosp. Inf. Syst. 2023, 20, 660–674. [Google Scholar] [CrossRef]

- Arroba, P.; Buyya, R.; Cárdenas, R.; Risco-Martín, J.L.; Moya, J.M. Sustainable edge computing: Challenges and future directions. Softw. Pract. Exp. 2024, 1–25. [Google Scholar] [CrossRef]

- Velesaca, H.O.; Suárez, P.L.; Mira, R.; Sappa, A.D. Computer vision based food grain classification: A comprehensive survey. Comput. Electron. Agric. 2021, 187, e106287. [Google Scholar] [CrossRef]

- Poonja, H.A.; Shirazi, M.A.; Khan, M.J.; Javed, K. Engagement detection and enhancement for STEM education through computer vision, augmented reality, and haptics. Image Vis. Comput. 2023, 136, 104720. [Google Scholar] [CrossRef]

- Engine Developer Portal. SDK Download. 2022. Available online: https://developer.vuforia.com/downloads/SDK (accessed on 23 November 2023).

- Unity Technologies. Unity 2020.3.33, Unity: Beijing, China, 2022. Available online: https://unity.com/releases/editor/whats-new/2020.3.33 (accessed on 20 November 2023).

- Sladojevic, S.; Arsenovic, M.; Anderla, A.; Culibrk, D.; Stefanovic, D. Deep Neural Networks Based Recognition of Plant Diseases by Leaf Image Classification. Comput. Intell. Neurosci. 2016, 2016, e3289801. [Google Scholar] [CrossRef] [PubMed]

- Wang, G.; Sun, Y.; Wang, J. Automatic Image-Based Plant Disease Severity Estimation Using Deep Learning. Comput. Intell. Neurosci. 2017, 2017, e2917536. [Google Scholar] [CrossRef] [PubMed]

- Patrício, D.I.; Rieder, R. Computer vision and artificial intelligence in precision agriculture for grain crops: A systematic review. Comput. Electron. Agric. 2018, 153, 69–81. [Google Scholar] [CrossRef]

- Antunes, S.N.; Okano, M.T.; Nääs, I.D.A.; Lopes, W.A.C.; Aguiar, F.P.L.; Vendrametto, O.; Fernandes, M.E. Model Development for Identifying Aromatic Herbs Using Object Detection Algorithm. AgriEngineering 2024, 6, 1924–1936. [Google Scholar] [CrossRef]

- Mustafa, M.S.; Husin, Z.; Tan, W.K.; Mavi, M.F.; Farook, R.S.M. Development of automated hybrid intelligent system for herbs plant classification and early herbs plant disease detection. Neural Comput. Appl. 2020, 32, 11419–11441. [Google Scholar] [CrossRef]

- Chaivivatrakul, S.; Moonrinta, J.; Chaiwiwatrakul, S. Convolutional neural networks for herb identification: Plain background and natural environment. Int. J. Adv. Sci. Eng. Inf. Technol. 2022, 12, 1244–1252.

- Zhao, Y.; Sun, Z.; Tian, E.; Hu, C.; Zong, H.; Yang, F. A CNN Model for Herb Identification Based on Part Priority Attention Mechanism. In Proceedings of the 2020 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Toronto, ON, Canada, 11–14 October 2020; pp. 2565–2571.

- Sinha, J.; Chachra, P.; Biswas, S.; Jayswal, A.K. Ayurvedic Herb Classification using Transfer Learning based CNNs. In Proceedings of the 2024 IEEE 2nd International Conference on Advancement in Computation & Computer Technologies (InCACCT), Punjab, India, 2–3 May 2024; pp. 628–634.

- Senan, N.; Najib, N.A.M. UTHM Herbs Garden Application Using Augmented Reality. Appl. Inf. Technol. Comput. Sci. 2020, 1, 181–191.

- Permana, R.; Tosida, E.T.; Suriansyah, M.I. Development of augmented reality portal for medicininal plants introduction. Int. J. Glob. Oper. Res. 2022, 3, 52–63.

- Zhu, Q.; Xie, Y.; Ye, F.; Gao, Z.; Che, B.; Chen, Z.; Yu, D. Chinese herb medicine in augmented reality. arXiv 2023, arXiv:2309.13909.

- Angeles, J.M.; Calanda, F.B.; Bayon-on, T.V.V.; Morco, R.C.; Avestro, J.; Corpuz, M.J.S. AR plants: Herbal plant mobile application utilizing augmented reality. In Proceedings of the 2017 International Conference on Computer Science and Artificial Intelligence, Jakarta, Indonesia, 5–7 December 2017; pp. 43–48.

- Gerber, A.; Baskerville, R. (Eds.) Design Science Research for a New Society: Society 5.0: 18th International Conference on Design Science Research in Information Systems and Technology, DESRIST 2023, Pretoria, South Africa, May 31–June 2 2023, Proceedings; Springer: Cham, Switzerland, 2023.

- Peffers, K.; Tuunanen, T.; Rothenberger, M.A.; Chatterjee, S. A Design Science Research Methodology for Information Systems Research. J. Manag. Inf. Syst. 2007, 24, 45–77.

- Sadeghi-Niaraki, A.; Choi, S.-M. A Survey of Marker-Less Tracking and Registration Techniques for Health & Environmental Applications to Augmented Reality and Ubiquitous Geospatial Information Systems. Sensors 2020, 20, 2997.

- Arena, F.; Collotta, M.; Pau, G.; Termine, F. An Overview of Augmented Reality. Computers 2022, 11, 28.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).