Augmented Reality Glasses Applied to Livestock Farming: Potentials and Perspectives

Abstract

1. Introduction

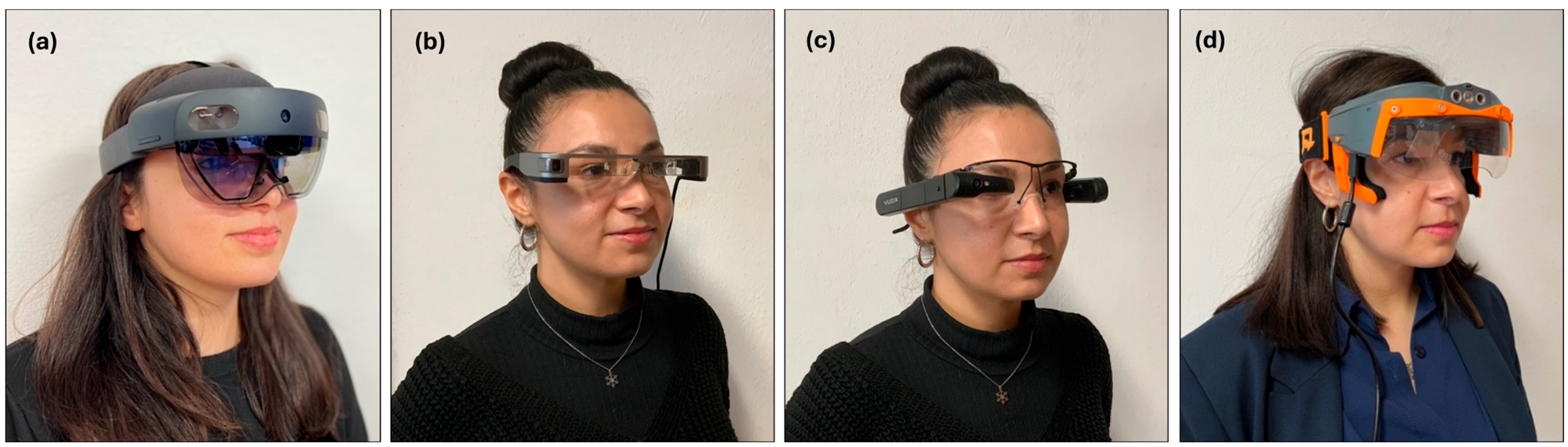

2. Materials and Methods

2.1. Scanning Test of Augmented Reality Markers

2.2. Remote Transmission Quality Assessment

2.3. Smart Glasses Battery Life Test

2.4. Statistical Analysis

3. Results and Discussion

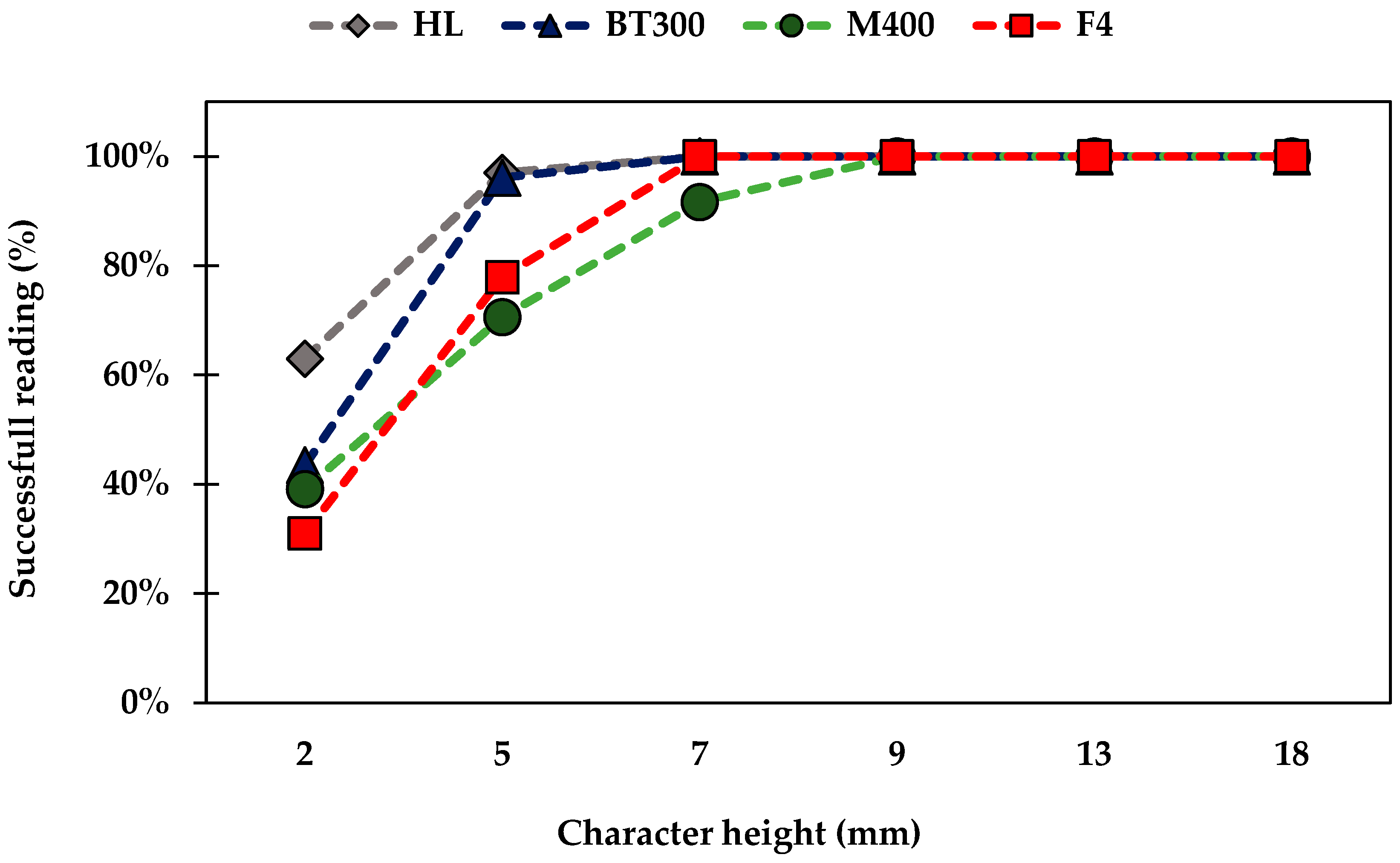

3.1. QR Code Scanning Performance

3.2. Audio and Video Quality Performance

3.3. Smart Glasses Battery Life Test

3.4. Challenges and Future Perspectives

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Azuma, R.; Baillot, Y.; Behringer, R.; Feiner, S.; Julier, S.; MacIntyre, B. Recent advances in augmented reality. IEEE Comput. Graph. Appl. 2001, 21, 34–47.

- Azuma, R. A survey of Augmented Reality. Presence Teleoperators Virtual Environ. 1997, 6, 355–385.

- Milgram, P.; Kishino, F. A taxonomy of mixed reality visual displays. IEICE Trans. Inf. Syst. 1994, 77, 1321–1329.

- Klinker, K.; Wiesche, M.; Krcmar, H. Digital Transformation in Health Care: Augmented Reality for Hands-Free Service Innovation. Inf. Syst. Front. 2020, 22, 1419–1431.

- Lee, L.-H.; Hui, P. Interaction Methods for Smart Glasses: A Survey. IEEE Access 2018, 6, 28712–28732.

- Höllerer, T.; Feiner, S. Mobile Augmented Reality. In Telegeoinformatics: Location-Based Computing and Services; Taylor & Francis Books Ltd.: Abingdon, UK, 2004; p. 21.

- Kim, S.; Nussbaum, M.A.; Gabbard, J.L. Influences of augmented reality head-worn display type and user interface design on performance and usability in simulated warehouse order picking. Appl. Ergon. 2019, 74, 186–193.

- Syberfeldt, A.; Danielsson, O.; Gustavsson, P. Augmented Reality Smart Glasses in the Smart Factory: Product Evaluation Guidelines and Review of Available Products. IEEE Access 2017, 5, 9118–9130.

- Billinghurst, M.; Clark, A.; Lee, G. A Survey of Augmented Reality. Found. Trends Hum.–Comput. Interact. 2015, 8, 73–272.

- Chatzopoulos, D.; Bermejo, C.; Huang, Z.; Hui, P. Mobile Augmented Reality Survey: From Where We Are to Where We Go. IEEE Access 2017, 5, 6917–6950.

- Oufqir, Z.; Abderrahmani, A.E.; Satori, K. From marker to markerless in augmented reality. In Embedded Systems and Artificial Intelligence; Springer: Singapore, 2020; pp. 599–612.

- Muñoz-Saavedra, L.; Miró-Amarante, L.; Domínguez-Morales, M. Augmented and virtual reality evolution and future tendency. Appl. Sci. 2020, 10, 322.

- Szajna, A.; Stryjski, R.; Woźniak, W.; Chamier-Gliszczyński, N.; Kostrzewski, M. Assessment of augmented reality in manual wiring production process with use of mobile AR glasses. Sensors 2020, 20, 4755.

- King, G.R.; Piekarski, W.; Thomas, B. ARVino—Outdoor augmented reality visualisation of viticulture GIS data. In Proceedings of the Fourth IEEE and ACM International Symposium on Mixed and Augmented Reality (ISMAR’05), Vienna, Austria, 5–8 October 2005.

- Santana-Fernández, J.; Gómez-Gil, J.; DelPozo-SanCirilo, L. Design and Implementation of a GPS Guidance System for Agricultural Tractors Using Augmented Reality Technology. Sensors 2010, 10, 10435–10447.

- Huuskonen, T.; Oksanen, J. Augmented reality for supervising multirobot system in agricultural field operation. IFAC-Pap. 2019, 52, 367–372.

- Thomas, N.; Ickjai, L. Using mobile-based augmented reality and object detection for real-time Abalone growth monitoring. Comput. Electron. Agric. 2023, 207, 107744.

- Garzon, J.; Baldiris, S.; Acevedo, J.; Pavon, J. Augmented Reality-based application to foster sustainable agriculture in the context of aquaponics. In Proceedings of the 2020 IEEE 20th International Conference on Advanced Learning Technologies (ICALT), Tartu, Estonia, 6–9 July 2020; pp. 316–318.

- Larbaigt, J.; Lemercier, C. An Evaluation of the Acceptability of Smart Eyewear for Plot Diagnosis Activity in Agriculture. Ergon. Des. Q. Hum. Factors Appl. 2021, 31, 32–39.

- Caria, M.; Sara, G.; Todde, G.; Polese, M.; Pazzona, A. Exploring smart glasses for augmented reality: A valuable and integrative tool in precision livestock farming. Animals 2019, 9, 903.

- Caria, M.; Todde, G.; Sara, G.; Piras, M.; Pazzona, A. Performance and usability of smartglasses for augmented reality in precision livestock farming operations. Appl. Sci. 2020, 10, 2318.

- Luyu, D.; Yang, L.; Ligen, Y.; Weihong, M.; Qifeng, L.; Ronghua, G.; Qinyang, Y. Real-time monitoring of fan operation in livestock houses based on the image processing. Expert Syst. Appl. 2023, 213, 118683.

- Pinna, D.; Sara, G.; Todde, G.; Atzori, A.S.; Artizzu, V.; Spano, L.D.; Caria, M. Advancements in combining electronic animal identification and augmented reality technologies in digital livestock farming. Sci. Rep. 2023, 13, 18282.

- Sara, G.; Todde, G.; Polese, M.; Caria, M. Evaluation of smart glasses for augmented reality: Technical advantages on their integration in agricultural systems. In Proceedings of the European Conference on Agricultural Engineering AgEng2021, Évora, Portugal, 4–8 July 2021; Barbosa, J.C., Silva, L.L., Lourenço, P., Sousa, A., Silva, J.R., Cruz, V.F., Baptista, F., Eds.; Universidade de Évora: Évora, Portugal, 2021; pp. 580–587.

- Sara, G.; Todde, G.; Caria, M. Assessment of video see-through smart glasses for augmented reality to support technicians during milking machine maintenance. Sci. Rep. 2022, 12, 15729.

- Muensterer, O.J.; Lacher, M.; Zoeller, C.; Bronstein, M.; Kübler, J. Google Glass in pediatric surgery: An exploratory study. Int. J. Surg. 2014, 12, 281–289.

- R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2021; Available online: https://www.R-project.org/ (accessed on 19 February 2024).

- Tarjan, L.; Šenk, I.; Tegeltija, S.; Stankovski, S.; Ostojic, G. A readability analysis for QR code application in a traceability system. Comput. Electron. Agric. 2014, 109, 1–11.

- Bai, H.; Zhou, G.; Hu, Y.; Sun, A.; Xu, X.; Liu, X.; Lu, C. Traceability technologies for farm animals and their products in China. Food Control 2017, 79, 35–43.

- Gebresenbet, G.; Bosona, T.; Olsson, S.-O.; Garcia, D. Smart system for the optimization of logistics performance of the pruning biomass value chain. Appl. Sci. 2018, 8, 1162.

- Green, T.; Smith, T.; Hodges, R.; Fry, W.M. A simple and inexpensive way to document simple husbandry in animal care facilities using QR code scanning. Lab. Anim. 2017, 51, 656–659.

- Yang, F.; Wang, K.; Han, Y.; Qiao, Z. A Cloud-Based Digital Farm Management System for Vegetable Production Process Management and Quality Traceability. Sustainability 2018, 10, 4007.

- Kim, M.; Choi, S.H.; Park, K.-B.; Lee, J.Y. User Interactions for augmented reality smart glasses: A comparative evaluation of visual contexts and interaction gestures. Appl. Sci. 2019, 9, 3171.

- Tarkoma, S.; Siekkinen, M.; Lagerspetz, E.; Xiao, Y. Smartphone Energy Consumption: Modeling and Optimization; Cambridge University Press: Cambridge, UK, 2014.

- Phupattanasilp, P.; Tong, S. Augmented reality in the integrative internet of things (AR-IoT): Application for precision farming. Sustainability 2019, 11, 2658.

- Trendov, N.M.; Varas, S.; Zeng, M. Digital Technologies in Agriculture and Rural Areas: Briefing Paper; Food and Agriculture Organization of the United Nations: Rome, Italy, 2019.

| Model | HoloLens2 | BT300 | M400 | F4 |
|---|---|---|---|---|
| Brand | Microsoft | Epson | Vuzix | GlassUp |
| Operating system | Windows Holographic | Android 5.1 | Android 8.1 | |
| Processor | Qualcomm Snapdragon 850 | Intel Atom (Quad-core) | Qualcomm XR1 (Octa-core) | Cortex A9 |
| Sensors | Head tracking, eye tracking, depth sensor, gyroscope–accelerometer–magnetometer (3 axes) | Gyroscope–accelerometer–magnetometer (3 axes), lux sensor | Gyroscope–accelerometer–magnetometer (3 axes) | Accelerometer–gyroscope–compass (9 axes), lux sensor |
| Connectivity | GPS, Wi-Fi, Bluetooth, USB-C | GPS, Wi-Fi, Bluetooth, micro-USB | GPS, Wi-Fi, Bluetooth, USB-C | Wi-Fi, Bluetooth |
| Display | Holographic lenses (1440 × 936) | Si-OLED (1280 × 720) | Occluded OLED (640 × 360) | Full-color LCD (640 × 480) |
| Controller input | Hand recognition, eye recognition, voice command | External touchpad | Integrated touchpad, 3 buttons, voice command | External joypad, one button on the glasses |
| Camera | 8 Megapixel | 5 Megapixel | 12.8 Megapixel | 5 Megapixel |
| Field of view | 43° | 23° | 16.8° | 22° |
| Battery life | 2–3 h | 4 h | 2–12 h | 6–8 h |
| Weight | 566 g | 69 g | 190 g | 251 g |

| QR Code Size (cm) | 3.5 | 4 | 7.5 |
|---|---|---|---|
| HL | 2.06 aA | 1.24 bA | 1.84 aA |
| SD | 0.61 | 0.67 | 0.83 |
| BT300 | 6.01 aB | 6.30 aB | 4.71 bB |
| SD | 1.01 | 1.25 | 1.42 |
| M400 | 2.04 abA | 2.04 aC | 1.86 bA |
| SD | 0.34 | 0.31 | 0.31 |
| F4 | 12.07 aC | 8.54 bC | 7.37 bC |
| SD | 5.21 | 3.04 | 2.09 |
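
One way to compare how consistent each device is across QR code sizes is to relate each SD to its mean. The sketch below is not part of the original analysis: it copies the means and SDs from the table above and computes a coefficient of variation (SD divided by mean, in percent). The names `table`, `coefficient_of_variation` and `cv_table` are illustrative assumptions, and the unit of the tabulated values is not stated in this excerpt, which does not matter for a unitless ratio.

```python
# Means and standard deviations copied from the table above.
# Layout (an assumption made for this sketch):
#   device label -> {QR code size in cm: (mean, SD)}
table = {
    "HL":    {3.5: (2.06, 0.61),  4.0: (1.24, 0.67), 7.5: (1.84, 0.83)},
    "BT300": {3.5: (6.01, 1.01),  4.0: (6.30, 1.25), 7.5: (4.71, 1.42)},
    "M400":  {3.5: (2.04, 0.34),  4.0: (2.04, 0.31), 7.5: (1.86, 0.31)},
    "F4":    {3.5: (12.07, 5.21), 4.0: (8.54, 3.04), 7.5: (7.37, 2.09)},
}


def coefficient_of_variation(mean, sd):
    """Return the SD expressed as a percentage of the mean."""
    return 100.0 * sd / mean


def cv_table(data):
    """Compute the coefficient of variation for every device and QR size."""
    return {
        device: {
            size: round(coefficient_of_variation(mean, sd), 1)
            for size, (mean, sd) in sizes.items()
        }
        for device, sizes in data.items()
    }


if __name__ == "__main__":
    for device, row in cv_table(table).items():
        print(device, row)
```

A lower percentage means the repeated measurements for that device and QR code size were more tightly clustered around the mean; it says nothing about the significance letters reported in the table.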

| Parameter/Function | Farming Application/Implication | HL | BT300 | M400 | F4 |
|---|---|---|---|---|---|
| Battery life | Long battery life, short recharge times, or interchangeable batteries (M400) allow continuous field use or reduce lost time. | W | W | S | S |
| Marker detection (time/distance) | Obtaining overlaid information on animals, plants, crops, and agricultural machinery in a short time or from long distances could increase the farmer’s efficiency. | S | W | S | W |
| Audio-Video Transmission | Transmitting good-quality images and audio (clarity, timing, detail) could improve and expand remote technical assistance to farmers in the field. | S | S | W | W |
| Field of View | A limited field of view (<20°) [8] could negatively influence the farmer’s use of SG while working. | S | S | W | W |
| Visualization System | The system could affect the farmer’s safety by limiting the farmer’s field of view, especially in the case of binocular or video see-through systems. | S | S | W | S |
| Weight/comfort | Normal glasses weigh about 20 g; an excessive or unbalanced SG weight could negatively affect their use by farmers. | S | S | W | W |
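
The S and W labels in the table above can be tallied per device for a quick overview. The following is a minimal sketch rather than anything from the original study: the labels are copied from the table, their meaning is not defined in this excerpt, and the names `devices`, `ratings` and `tally` are illustrative assumptions.

```python
from collections import Counter

# Column order of the device labels in the table above.
devices = ["HL", "BT300", "M400", "F4"]

# Labels copied row by row from the table above, in the same column order.
ratings = {
    "Battery life":          ["W", "W", "S", "S"],
    "Marker detection":      ["S", "W", "S", "W"],
    "Audio-Video Transmission": ["S", "S", "W", "W"],
    "Field of View":         ["S", "S", "W", "W"],
    "Visualization System":  ["S", "S", "W", "S"],
    "Weight/comfort":        ["S", "S", "W", "W"],
}


def tally(rows, device_names):
    """Count how often each label occurs for each device."""
    counts = {name: Counter() for name in device_names}
    for labels in rows.values():
        for name, label in zip(device_names, labels):
            counts[name][label] += 1
    return counts


if __name__ == "__main__":
    for name, count in tally(ratings, devices).items():
        print(name, dict(count))
```

A plain count weights every parameter equally, which may not match how a farmer would prioritise them, so it is best read as a summary of the table rather than a ranking.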

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sara, G.; Pinna, D.; Todde, G.; Caria, M. Augmented Reality Glasses Applied to Livestock Farming: Potentials and Perspectives. AgriEngineering 2024, 6, 1859-1869. https://doi.org/10.3390/agriengineering6020108
