Automatic Detection of Cage-Free Dead Hens with Deep Learning Methods

Abstract

1. Introduction

2. Materials and Methods

2.1. Experimental Design

2.2. Image Data Acquisition and Pre-Processing

2.3. YOLO Architecture

2.3.1. Backbone

2.3.2. Neck

2.3.3. Head

2.4. Performance Metrics

2.4.1. Precision

2.4.2. Recall

2.4.3. Mean Average Precision

2.4.4. F1-Score

2.4.5. Loss Function

- Objectness loss: This term encourages the model to correctly predict whether a mortality object is present in each grid cell. The objectness loss ($L_{obj}$) is the binary cross-entropy between the predicted and ground-truth objectness scores [36].

- Classification loss: This term encourages the model to assign each detected mortality object to its correct class. The classification loss ($L_{cls}$) is the cross-entropy between the predicted class probabilities and the ground-truth class labels [36].

- Regression loss: This term penalizes incorrect predictions of bounding-box coordinates and dimensions [36]. The regression loss ($L_{reg}$) is the sum of the smooth L1 loss between the predicted and ground-truth x and y coordinates, the smooth L1 loss between the predicted and ground-truth width and height, and the focal loss between the predicted and ground-truth confidence scores [29].

The total YOLO-MD loss is the weighted sum of these three terms, $L = \lambda_{obj} L_{obj} + \lambda_{cls} L_{cls} + \lambda_{reg} L_{reg}$, where the user sets the weights $\lambda_{obj}$, $\lambda_{cls}$, and $\lambda_{reg}$ to control the relative importance of each term. During training, $L$ is minimized by backpropagation and gradient descent to reduce the model's overall prediction error [37].
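The weighted-sum loss described above can be sketched in plain Python for a single grid cell holding one ground-truth object. This is a minimal illustration under stated assumptions, not the authors' implementation: the helper names (`bce`, `cross_entropy`, `smooth_l1`, `focal`, `yolo_md_loss`), the weight values, the smooth L1 threshold (beta = 1), the focal-loss parameters (gamma = 2, alpha = 0.25, the common RetinaNet defaults), and the binary confidence target are all assumptions, because the text does not report them.

```python
import math

# ASSUMPTION: illustrative weights only; the values used for YOLO-MD are not reported.
LAMBDA_OBJ, LAMBDA_CLS, LAMBDA_REG = 1.0, 0.5, 0.05

def bce(p, y, eps=1e-7):
    """Binary cross-entropy for one predicted probability p and label y in {0, 1}."""
    p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def cross_entropy(logits, target):
    """Softmax cross-entropy for one sample; target is the true class index."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]

def smooth_l1(pred, true, beta=1.0):
    """Smooth L1 summed over coordinates: quadratic below beta, linear above."""
    total = 0.0
    for a, b in zip(pred, true):
        d = abs(a - b)
        total += 0.5 * d * d / beta if d < beta else d - 0.5 * beta
    return total

def focal(p, y, gamma=2.0, alpha=0.25, eps=1e-7):
    """Binary focal loss on a confidence score p with a binary target y (assumed)."""
    p = min(max(p, eps), 1.0 - eps)
    p_t = p if y == 1 else 1 - p
    a_t = alpha if y == 1 else 1 - alpha
    return -a_t * (1 - p_t) ** gamma * math.log(p_t)

def yolo_md_loss(obj_p, obj_y, cls_logits, cls_y, box_p, box_y, conf_p, conf_y):
    """Weighted sum of objectness, classification, and regression terms.

    Boxes are (x, y, w, h); this is a hypothetical single-cell helper.
    """
    l_obj = bce(obj_p, obj_y)
    l_cls = cross_entropy(cls_logits, cls_y)
    # Regression term as described in the text: smooth L1 on (x, y),
    # smooth L1 on (w, h), plus focal loss on the confidence score.
    l_reg = (smooth_l1(box_p[:2], box_y[:2])
             + smooth_l1(box_p[2:], box_y[2:])
             + focal(conf_p, conf_y))
    return LAMBDA_OBJ * l_obj + LAMBDA_CLS * l_cls + LAMBDA_REG * l_reg

# A perfectly localized box with uncertain (0.5) objectness, class, and confidence.
total = yolo_md_loss(0.5, 1, [0.0, 0.0], 0,
                     [0.5, 0.5, 0.2, 0.2], [0.5, 0.5, 0.2, 0.2], 0.5, 1)
print(round(total, 4))  # 1.0419
```

Real YOLO training code evaluates these terms over batched tensors and many anchors or grid cells at once; the scalar form here only makes each term and its weight explicit.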

2.5. Computational Parameters

3. Results

3.1. Model Comparison

3.2. Environmental Condition

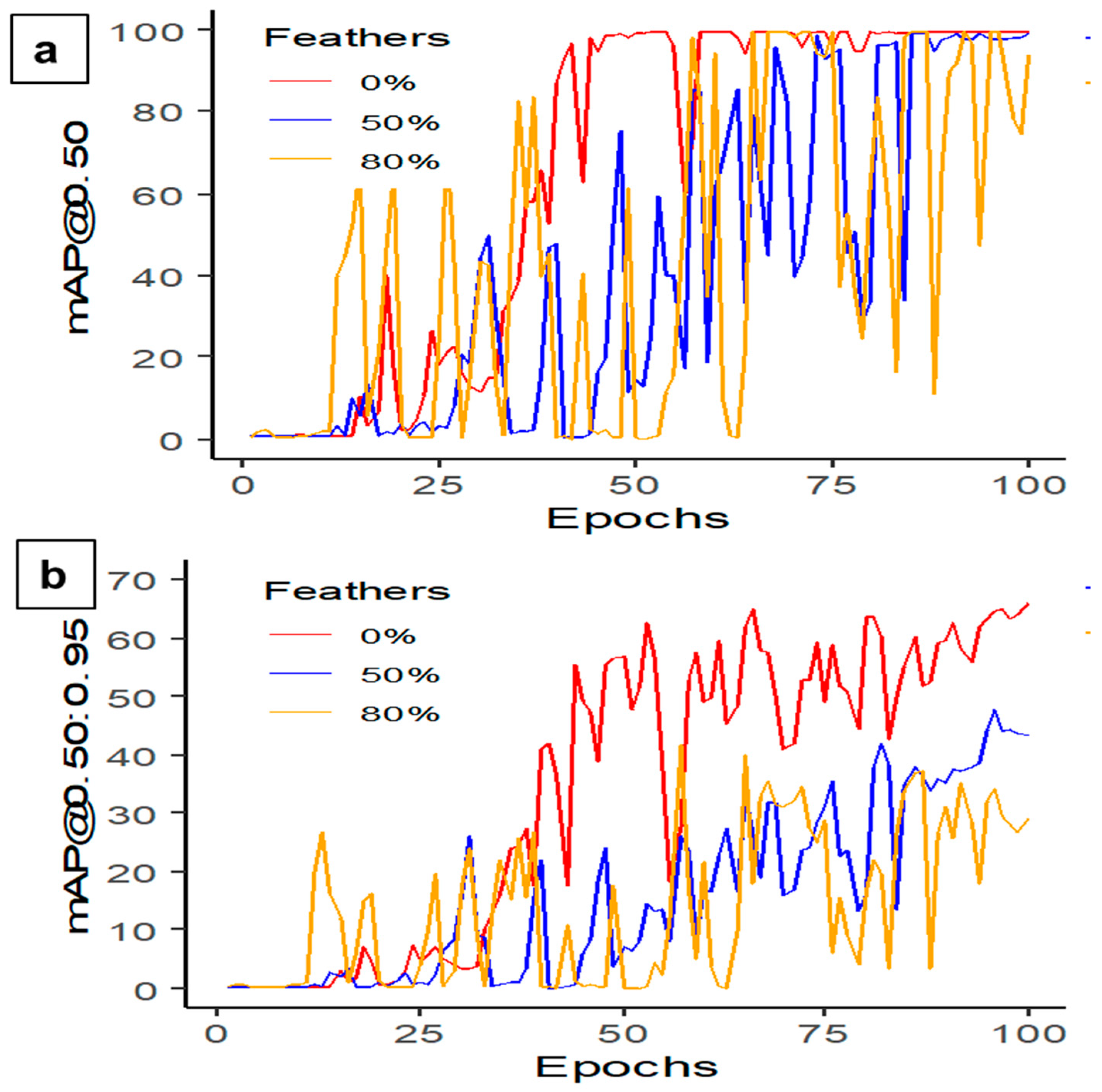

3.2.1. Feathers Covering

3.2.2. Litter Coverage

3.2.3. Camera Settings

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Brochu, N.M.; Guerin, M.T.; Varga, C.; Lillie, B.N.; Brash, M.L.; Susta, L. A Two-Year Prospective Study of Small Poultry Flocks in Ontario, Canada, Part 2: Causes of Morbidity and Mortality. J. Vet. Diagn. Investig. 2019, 31, 336–342.

- Cadmus, K.J.; Mete, A.; Harris, M.; Anderson, D.; Davison, S.; Sato, Y.; Helm, J.; Boger, L.; Odani, J.; Ficken, M.D. Causes of Mortality in Backyard Poultry in Eight States in the United States. J. Vet. Diagn. Investig. 2019, 31, 318–326.

- Dessie, T.; Ogle, B. Village Poultry Production Systems in the Central Highlands of Ethiopia. Trop. Anim. Health Prod. 2001, 33, 521–537.

- Yerpes, M.; Llonch, P.; Manteca, X. Factors Associated with Cumulative First-Week Mortality in Broiler Chicks. Animals 2020, 10, 310.

- Ekiri, A.B.; Armson, B.; Adebowale, K.; Endacott, I.; Galipo, E.; Alafiatayo, R.; Horton, D.L.; Ogwuche, A.; Bankole, O.N.; Galal, H.M. Evaluating Disease Threats to Sustainable Poultry Production in Africa: Newcastle Disease, Infectious Bursal Disease, and Avian Infectious Bronchitis in Commercial Poultry Flocks in Kano and Oyo States, Nigeria. Front. Vet. Sci. 2021, 8, 730159.

- Gray, H.; Davies, R.; Bright, A.; Rayner, A.; Asher, L. Why Do Hens Pile? Hypothesizing the Causes and Consequences. Front. Vet. Sci. 2020, 7, 616836.

- Edwan, E.; Qassem, M.A.; Al-Roos, S.A.; Elnaggar, M.; Ahmed, G.; Ahmed, A.S.; Zaqout, A. Design and Implementation of Monitoring and Control System for a Poultry Farm. In Proceedings of the International Conference on Promising Electronic Technologies (ICPET), Jerusalem, Israel, 16–17 December 2020; pp. 44–49.

- Saeed, M.; Abbas, G.; Alagawany, M.; Kamboh, A.A.; Abd El-Hack, M.E.; Khafaga, A.F.; Chao, S. Heat Stress Management in Poultry Farms: A Comprehensive Overview. J. Therm. Biol. 2019, 84, 414–425.

- Wibisono, F.M.; Wibisono, F.J.; Effendi, M.H.; Plumeriastuti, H.; Hidayatullah, A.R.; Hartadi, E.B.; Sofiana, E.D. A Review of Salmonellosis on Poultry Farms: Public Health Importance. Syst. Rev. Pharm. 2020, 11, 481–486.

- Blas, A.; Diezma, B.; Moya, A.; Gomez-Martinez, C. Early Detection of Mortality in Poultry Production Using High Resolution Thermography. Available online: https://www.visavet.es/en/early-detection-of-mortality-in-poultry-production-using-high-resolution-thermography/34=1264/ (accessed on 10 March 2023).

- Muvva, V.V.; Zhao, Y.; Parajuli, P.; Zhang, S.; Tabler, T.; Purswell, J. Automatic Identification of Broiler Mortality Using Image Processing Technology; American Society of Agricultural and Biological Engineers: St. Joseph, MI, USA, 2018; p. 1.

- Zhu, W.; Lu, C.; Li, X.; Kong, L. Dead Birds Detection in Modern Chicken Farm Based on SVM. In Proceedings of the 2nd International Congress on Image and Signal Processing, Tianjin, China, 17–19 October 2009; pp. 1–5.

- Bao, Y.; Lu, H.; Zhao, Q.; Yang, Z.; Xu, W.; Bao, Y.; Xu, W. Detection system of dead and sick chickens in large scale farms based on artificial intelligence. Math. Biosci. Eng. 2021, 18, 6117–6135.

- Sentas, A.; Tashiev, İ.; Küçükayvaz, F.; Kul, S.; Eken, S.; Sayar, A.; Becerikli, Y. Performance Evaluation of Support Vector Machine and Convolutional Neural Network Algorithms in Real-Time Vehicle Type and Color Classification. Evol. Intell. 2020, 13, 83–91.

- Yang, X.; Chai, L.; Bist, R.B.; Subedi, S.; Wu, Z. A Deep Learning Model for Detecting Cage-Free Hens on the Litter Floor. Animals 2022, 12, 1983.

- Subedi, S.; Bist, R.; Yang, X.; Chai, L. Tracking Pecking Behaviors and Damages of Cage-Free Laying Hens with Machine Vision Technologies. Comput. Electron. Agric. 2023, 204, 107545.

- Bist, R.B.; Yang, X.; Subedi, S.; Chai, L. Mislaying behavior detection in cage-free hens with deep learning technologies. Poult. Sci. 2023, 102, 102729.

- Guo, Y.; Regmi, P.; Ding, Y.; Bist, R.B.; Chai, L. Automatic Detection of Brown Hens in Cage Free Houses with Deep Learning Methods. Poult. Sci. 2023, 100, 102784.

- Subedi, S.; Bist, R.; Yang, X.; Chai, L. Tracking Floor Eggs with Machine Vision in Cage-Free Hen Houses. Poult. Sci. 2023, 102, 102637.

- Liu, H.W.; Chen, C.H.; Tsai, Y.C.; Hsieh, K.W.; Lin, H.T. Identifying images of dead chickens with a chicken removal system integrated with a deep learning algorithm. Sensors 2021, 21, 3579.

- Li, G.; Chesser, G.D.; Purswell, J.L.; Magee, C.; Gates, R.S.; Xiong, Y. Design and Development of a Broiler Mortality Removal Robot. Appl. Eng. Agric. 2022, 38, 853–863.

- Chen, C.; Kong, X.; Wang, Q.; Deng, Z. A Method for Detecting the Death State of Caged Broilers Based on Improved Yolov5. SSRN Electron. J. 2022, 32.

- Bist, R.B.; Chai, L. Advanced Strategies for Mitigating Particulate Matter Generations in Poultry Houses. Appl. Sci. 2022, 12, 11323.

- Bist, R.B.; Subedi, S.; Chai, L.; Yang, X. Ammonia Emissions, Impacts, and Mitigation Strategies for Poultry Production: A Critical Review. J. Environ. Manag. 2023, 328, 116919.

- Tan, M.; Le, Q. Efficientnet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, ICML 2019, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114. Available online: http://proceedings.mlr.press/v97/tan19a/tan19a.pdf (accessed on 8 January 2023).

- Wang, C.-Y.; Liao, H.-Y.M.; Wu, Y.-H.; Chen, P.-Y.; Hsieh, J.-W.; Yeh, I.-H. CSPNet: A New Backbone That Can Enhance Learning Capability of CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 390–391.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W. YOLOv6: A Single-Stage Object Detection Framework for Industrial Applications. arXiv 2022, arXiv:2209.02976.

- Lin, Y.; Feng, P.; Guan, J.; Wang, W.; Chambers, J. IENet: Interacting Embranchment One Stage Anchor Free Detector for Orientation Aerial Object Detection. arXiv 2019, arXiv:1912.00969.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

- Weng, K.; Chu, X.; Xu, X.; Huang, J.; Wei, X. EfficientRep: An Efficient Repvgg-Style ConvNets with Hardware-Aware Neural Network Design. arXiv 2023, arXiv:2302.00386.

- Jocher, G. YOLOv5 (6.0/6.1) Brief Summary, Issue #6998, Ultralytics/Yolov5. Available online: https://github.com/ultralytics/yolov5/issues/6998 (accessed on 10 March 2023).

- Padilla, R.; Netto, S.L.; Da Silva, E.A. A Survey on Performance Metrics for Object-Detection Algorithms. In Proceedings of the International Conference on Systems, Signals and Image Processing (IWSSIP), Niterói, Brazil, 1–3 July 2020; pp. 237–242.

- Chicco, D.; Jurman, G. The Advantages of the Matthews Correlation Coefficient (MCC) over F1 Score and Accuracy in Binary Classification Evaluation. BMC Genom. 2020, 21, 1–13.

- Roy, A.M.; Bhaduri, J. Real-Time Growth Stage Detection Model for High Degree of Occultation Using DenseNet-Fused YOLOv4. Comput. Electron. Agric. 2022, 193, 106694.

- Tesema, S.N.; Bourennane, E.-B. DenseYOLO: Yet Faster, Lighter and More Accurate YOLO. In Proceedings of the 11th IEEE Annual Information Technology, Electronics and Mobile Communication Conference (IEMCON), Vancouver, BC, Canada, 4–7 November 2020; pp. 534–539.

- Amrani, M.; Bey, A.; Amamra, A. New SAR target recognition based on YOLO and very deep multi-canonical correlation analysis. Int. J. Remote Sens. 2022, 43, 5800–5819.

| Settings | Parameters | Levels |
|---|---|---|
| Camera | Height | 0.5 m, 1 m, 3 m |
| Feather | Feather coverage | 0%, 50%, 80% |
| Litter | Litter coverage | 0%, 50%, 80% |

| Class | Original Dataset <sup>a</sup> | Train (70%) | Validation (20%) | Test (10%) |
|---|---|---|---|---|
| MDLitter | 3000 <sup>b</sup> | 2100 | 600 | 300 |
| MDFeather | 3000 <sup>b</sup> | 2100 | 600 | 300 |
| MDHeight | 3000 <sup>b</sup> | 2100 | 600 | 300 |
| MDModel | 2200 | 1540 | 440 | 220 |
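
The split counts follow directly from the 70/20/10 ratios (for example, 0.70 × 3000 = 2100 and 0.20 × 3000 = 600). A minimal sketch of such a split is shown below; it assumes a seeded random shuffle, because the source does not state how images were assigned to each subset, and the function name `split_dataset` and the file names are hypothetical.

```python
import random

def split_dataset(items, train=0.70, val=0.20, seed=0):
    """Shuffle, then split into train/validation/test; test takes the remainder."""
    items = list(items)
    random.Random(seed).shuffle(items)  # ASSUMPTION: simple seeded random split
    n_train = round(len(items) * train)
    n_val = round(len(items) * val)
    return (items[:n_train],
            items[n_train:n_train + n_val],
            items[n_train + n_val:])

# Hypothetical file names standing in for one 3000-image dataset (e.g., MDLitter).
images = [f"mdlitter_{i:04d}.jpg" for i in range(3000)]
train, val, test = split_dataset(images)
print(len(train), len(val), len(test))  # 2100 600 300
```

Giving the remainder to the test subset guarantees that every image lands in exactly one subset even when the ratios do not divide the dataset size evenly.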

| Metric | YOLOv5s-MD | YOLOv5m-MD | YOLOv5x-MD | YOLOv6s-MD | YOLOv6m-MD | YOLOv6l_relu-MD |
|---|---|---|---|---|---|---|
| Recall (%) | 98.4 | 99.6 | 100.0 | 81.6 | 82.4 | 82.8 |
| mAP@0.50 (%) | 99.5 | 99.5 | 99.5 | 98.9 | 99.0 | 98.8 |
| mAP@0.50:0.95 (%) | 82.3 | 81.9 | 81.1 | 77.1 | 78.2 | 78.3 |
| GPU usage (GB) | 1.04 | 1.83 | 4.95 | - | - | - |
| FPS | 55.6 | 42.9 | 29.6 | 51.3 | 43.8 | 40.9 |
| Training time (h) | 0.4 | 0.5 | 1.0 | 0.5 | 0.8 | 1.2 |
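
mAP@0.50 counts a detection as correct when its intersection over union (IoU) with the ground-truth box is at least 0.50, whereas mAP@0.50:0.95 averages AP over the ten IoU thresholds 0.50, 0.55, ..., 0.95. Stricter thresholds reject loosely fitted boxes, so the second metric is generally lower, as it is for every model in the table. The sketch below assumes corner-format boxes (x1, y1, x2, y2) and uses all-point interpolated AP, one common variant; the interpolation used by the YOLOv5 and YOLOv6 evaluation code may differ, and the function names are hypothetical.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2) corner coordinates."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

# COCO-style thresholds 0.50, 0.55, ..., 0.95 (ten values).
THRESHOLDS = [round(0.50 + 0.05 * k, 2) for k in range(10)]

def average_precision(detections, n_gt):
    """All-point interpolated AP from (confidence, is_true_positive) pairs."""
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    rec, prec = [], []
    for _, is_tp in ranked:
        tp += is_tp
        fp += not is_tp
        rec.append(tp / n_gt)
        prec.append(tp / (tp + fp))
    mrec = [0.0] + rec + [1.0]
    mpre = [0.0] + prec + [0.0]
    # Make precision monotonically non-increasing from right to left.
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Area under the stepwise precision-recall curve.
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))

def map_50_95(ap_per_threshold):
    """Mean of the AP values computed at each of the ten IoU thresholds."""
    if len(ap_per_threshold) != len(THRESHOLDS):
        raise ValueError("expected one AP value per IoU threshold")
    return sum(ap_per_threshold) / len(ap_per_threshold)

# A predicted box 2 px too short overlaps the ground truth with IoU = 0.8:
v = iou((0, 0, 10, 8), (0, 0, 10, 10))
passed = [t for t in THRESHOLDS if v >= t]
print(len(passed))  # 7 (it counts at 0.50 to 0.80 but fails 0.85 to 0.95)
```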

| Metric | YOLOv5s-MD 0% Feather | YOLOv5s-MD 50% Feather | YOLOv5s-MD 80% Feather |
|---|---|---|---|
| Precision (%) | 98.4 | 97.4 | 97.5 |
| Recall (%) | 97.5 | 100.0 | 96.6 |
| mAP@0.50 (%) | 99.4 | 97.7 | 98.0 |
| mAP@0.50:0.95 (%) | 65.5 | 47.7 | 41.6 |
| F1-score (%) | 98.0 | 98.0 | 95.0 |

| Metric | YOLOv5s-MD 0% Litter | YOLOv5s-MD 50% Litter | YOLOv5s-MD 80% Litter |
|---|---|---|---|
| Precision (%) | 98.4 | 97.1 | 99.9 |
| Recall (%) | 97.5 | 100.0 | 100.0 |
| mAP@0.50 (%) | 99.4 | 98.6 | 99.5 |
| mAP@0.50:0.95 (%) | 65.5 | 40.8 | 65.6 |
| F1-score (%) | 98.0 | 99.0 | 100.0 |

| Metric | YOLOv5s-MD Height 0.5 m | YOLOv5s-MD Height 1 m | YOLOv5s-MD Height 3 m |
|---|---|---|---|
| Precision (%) | 100.0 | 99.8 | 99.0 |
| Recall (%) | 100.0 | 99.0 | 94.8 |
| mAP@0.50 (%) | 99.5 | 99.5 | 98.2 |
| mAP@0.50:0.95 (%) | 85.3 | 72.4 | 59.9 |
| F1-score (%) | 100.0 | 99.0 | 97.0 |
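
As a worked check, F1 is the harmonic mean of precision and recall, F1 = 2PR/(P + R). Recomputing it from the precision and recall rows of the camera-height table gives values that agree with the tabulated F1 scores once rounded to the nearest whole percent. The function name `f1_score` is illustrative.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (both given in percent)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Precision/recall pairs (%) taken from the camera-height table.
heights = {"0.5 m": (100.0, 100.0), "1 m": (99.8, 99.0), "3 m": (99.0, 94.8)}
for label, (p, r) in heights.items():
    print(label, round(f1_score(p, r), 1))
# 0.5 m 100.0
# 1 m 99.4
# 3 m 96.9
```

Because the harmonic mean is pulled toward the smaller of the two values, the drop in recall at 3 m (94.8%) lowers F1 more than the nearly unchanged precision would suggest.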

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bist, R.B.; Subedi, S.; Yang, X.; Chai, L. Automatic Detection of Cage-Free Dead Hens with Deep Learning Methods. AgriEngineering 2023, 5, 1020-1038. https://doi.org/10.3390/agriengineering5020064
