Abstract

Poultry farming plays a significant role in ensuring food security and economic growth in many countries. However, factors such as feeding management practices, environmental conditions, and diseases lead to poultry mortality (dead birds). Regular monitoring of flocks and timely veterinary assistance are therefore crucial for maintaining poultry health, well-being, and the success of poultry farming operations. The current monitoring method relies on manual inspection by farm workers, which is time-consuming, so an automatic early mortality detection (MD) model with high accuracy is needed to help prevent the spread of infectious diseases in poultry. This study aimed to develop, evaluate, and test the performance of YOLOv5-MD and YOLOv6-MD models in detecting poultry mortality under various cage-free (CF) housing settings, including camera height, litter condition, and feather coverage. The results demonstrated that the YOLOv5s-MD model performed exceptionally well, achieving a high mAP@0.50 score of 99.5%, a high FPS of 55.6, low GPU usage of 1.04 GB, and a short training time of 0.4 h. This study also evaluated the model’s performance under different CF housing settings, including different levels of feather coverage, litter coverage, and camera height. The YOLOv5s-MD model with 0% feather coverage achieved the best overall performance in object detection, with the highest mAP@0.50 score of 99.4% and a high precision rate of 98.4%. For litter, however, 80% coverage resulted in higher MD performance. Additionally, the model achieved 100% precision and recall in detecting hen mortality at a camera height of 0.5 m but faced challenges at greater heights such as 3 m. These findings suggest that YOLOv5s-MD can detect poultry mortality more accurately than the other models, and its performance can be optimized by adjusting various CF housing settings. The developed model can therefore assist farmers in promptly responding to mortality events by isolating affected birds, implementing disease prevention measures, and seeking veterinary assistance, thereby helping to reduce the impact of poultry mortality on the industry and supporting the well-being of poultry and the overall success of poultry farming operations.

1. Introduction

Poultry mortality refers to the death of domestic birds within a flock or population. It can occur for various reasons, including diseases (infectious and non-infectious) [1,2], management practices [2,3,4], and environmental factors [4,5,6]. Among these factors, the main cause of mortality is infectious disease. Common infectious diseases that can cause high mortality rates are avian influenza, Newcastle disease, infectious bronchitis, infectious bursal disease, and coccidiosis [5]. Infectious diseases must be diagnosed early to control their spread across a flock and to provide treatment that prevents substantial mortality. On the other hand, non-infectious factors such as overcrowding [6], neoplasia [1], extreme temperatures [7], poor nutrition [1], and poor ventilation [7] might also contribute to mortality inside poultry housing. For example, heat stress can cause chickens to die in large numbers, especially during hot weather conditions [8]. Similarly, overcrowding can lead to stress, disease transmission, and poor air quality, resulting in high mortality rates [6]. Preventing mortality requires a comprehensive approach that involves good management practices, adequate nutrition, and disease prevention and control. For example, farmers should ensure that birds have adequate food, water, and a clean, well-ventilated house. It is also important to implement biosecurity measures, such as limiting access to the flock to prevent the introduction of infectious agents. Understanding the factors contributing to mortality and implementing good management practices can therefore help reduce mortality rates and improve bird health and welfare.

In order to stop the spread of infectious diseases and reduce the influence of other factors that can cause mortality, early mortality detection (MD) in poultry is crucial. According to Wibisono et al. [9], infectious diseases are the primary causes of bird mortality. Therefore, early detection of sick or dying birds can help farmers promptly isolate affected birds, implement preventive disease strategies, and seek veterinary assistance when necessary. Additionally, early MD allows farmers to minimize further losses by culling remaining birds to prevent the spread of disease or other risk factors. Therefore, regularly monitoring flocks and seeking veterinary assistance at the first sign of illness or mortality is critical in ensuring the health and well-being of poultry and the success of poultry farming operations. That is why a good MD model is required for early MD and removing dead birds from the farm.

Automatic identification of dead birds in commercial poultry production can save time and labor while providing a crucial function for autonomous mortality removal systems, which eliminates the need for manual identification and helps streamline the identification process. High-resolution thermography has been investigated as a potential method for early MD in poultry production [10]. Previous studies that have utilized MD in broilers involved extracting features and utilizing a pairwise approach to capturing thermal and visual spectrum images [11]. Similarly, Zhu et al. [12] developed a detection model based on Support Vector Machine (SVM) and achieved 95% accuracy in identifying dead birds. The combination of artificial intelligence and sensor networks using five classification algorithms (SVM, K-Nearest Neighbors, Decision Tree, Naïve Bayes, and Bayesian Network) achieved a 95.6% accuracy in identifying dead and sick chickens [13]. However, equipping sensors to each bird in commercial cage-free (CF) housing is not a feasible or cost-effective approach. Comparative studies have shown that the YOLO model outperformed the SVM model in balanced object datasets [14], emphasizing the importance of implementing image analysis techniques for early mortality detection in the industry using the YOLO model.

YOLO models have proven to be highly effective in detecting small objects such as laying hens [15,16,17,18] and eggs [19]. For instance, these models have successfully detected individual hens and their distribution [15,18], and achieved higher accuracy in identifying problematic behaviors such as pecking [16] and mislaying [17]. In the context of dead chicken removal, the implementation of YOLOv4 reached a system accuracy of 95.24% [20]. Another study utilized YOLOv4 and a robot arm under specific lighting conditions (10–20 lux), resulting in a precision range of 74.5% to 86.1% [21]. Although a YOLOv5 model detected dead broilers in a caged broiler house with high precision and an accuracy decrease of 0.1% [22], that study lacked comprehensive details, and its housing conditions and bird type differed significantly from those in the current study. Therefore, our research aimed to compare the performance of the YOLOv5-MD and YOLOv6-MD models in detecting dead hens in CF housing settings, considering factors such as camera height, litter condition, and feather coverage. The objectives of this study were to (a) develop and test the performance of different deep learning models (i.e., the YOLOv5-MD and YOLOv6-MD models); and (b) evaluate the performance of the optimal YOLO-MD model under different CF housing settings.

2. Materials and Methods

2.1. Experimental Design

This study was conducted at the University of Georgia (UGA) in Athens, GA, USA, which involved four identical CF research facilities measuring 7.3 m L × 6.1 m W × 3 m H each. These facilities house approximately 200 Hy-line W36 birds raised from day 1 to day 420. The houses were equipped with feeders, drinkers, perches, nest boxes, and lighting, and the floors were covered with pine shavings measuring 5 cm in depth (Figure 1). The ventilation rates, temperature, relative humidity, and light intensity and duration were all automatically controlled using the Chore-Tronics Model 8 controller (Chore-Time Equipment, Milford, IN, USA). Previous research studies have described the housing system details [15,16]. The animal management and utilization in this study were carefully monitored and approved by the Institutional Animal Care and Use Committee (IACUC) at the UGA, demonstrating a commitment to ethical and responsible research practices.

Figure 1.

Experimental cage-free housing design for hen mortality detection.

2.2. Image Data Acquisition and Pre-Processing

The primary data acquisition tool used in this study to record the mortality of hens was a night-vision network PRO-1080MSB camera (Swann Communications USA Inc., Santa Fe Springs, CA, USA) with six cameras installed in each room, mounted at approximately 3 m above the litter floor. Additionally, two cameras were placed at 0.5 and 1 m above the ground floor. The video recording was performed every day for 24 h using a digital video recorder (DVR-4580) from 50 to 60 weeks of age (WOA), while mortality data was collected when birds were found dead. Eight hens were found dead during that period, and video was recorded of those hens. The video files were saved in .avi format, with a 1920 × 1080-pixel resolution and a sampling rate of 15 frames per second (FPS). This data acquisition method provided a comprehensive and high-quality dataset for analyzing the behavior of the hens, which was instrumental in drawing meaningful conclusions from this study.

The CF housing consists of litter and feathers on the floor [23,24], which might affect the MD of hens. In addition, dustbathing or other hen activities might cover the dead bird fully or partially, so this research considers every scenario. To accurately represent the conditions in CF housing, this study employed video recording to capture the dead hens in different environmental conditions with varying degrees of litter and feather coverage. This research selected three litter and feather coverage levels—0% (no litter or feather coverage), 50% (50% of hen’s body covered with litter or feathers), and 80% (80% of hen’s body covered with litters or feathers)—as detailed in Table 1. The decision to not exceed 80% was motivated by the potential discrepancies between actual hen mortality and the appearance of hen images beyond that level of coverage. The resulting dataset accurately depicts the different litter and feather covering levels in CF housing, enabling the deep learning model to perform effective MD.

Table 1.

Experimental settings for evaluating the optimal YOLO mortality detection models.

In order to expand our image dataset and increase the number of samples, we employed various strategies. Firstly, we positioned the dead birds in different orientations, including vertical, horizontal, upward-facing, and downward-facing positions. Secondly, we placed the birds in various locations within the hen house, such as near perches, drinkers, feeders, walls, and in between perches, feeders, and drinkers. Additionally, we varied the housing conditions by covering the birds with different depths or percentages of litter or feathers. Finally, each time we encountered a dead bird, we recorded 10-min videos capturing the different orientations, placements, and housing conditions.

The video data collected in this study were processed by converting them into individual images in .jpg format using the Free Video to JPG Converter App (version 5.0). Subsequently, the obtained images were augmented to create a large image dataset. Techniques such as rotation, blurring, flipping, and cropping were employed in this study for image augmentation. These methods allowed the generation of additional variations of the collected images, increasing the diversity and size of the dataset for training, validating, and testing the detection models. The images were then labeled in YOLO format with the assistance of the image labeler website (Makesense.AI). This labeling process was essential in ensuring the images were correctly identified and classified for use in the deep learning model. Furthermore, the resulting labeled dataset was essential in developing and training the model for effective and accurate MD (Figure 2). The labeled-image dataset consisting of 9000 images was divided into three sets for training, validation, and testing purposes, with approximately 70%, 20%, and 10% of the total images allocated to each set, respectively (Table 2). The MDModel image dataset was used to compare YOLOv5s, YOLOv5m, YOLOv5x, YOLOv6s, YOLOv6m, and YOLOv6l-relu models.
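The 70/20/10 partition described above can be illustrated with a short Python sketch. It assumes a simple seeded random shuffle, which the text does not specify; the function name `split_dataset` and the file names are hypothetical placeholders.

```python
import random


def split_dataset(items, train_frac=0.7, val_frac=0.2, seed=0):
    """Shuffle items and split them into train/validation/test lists.

    The remaining fraction (1 - train_frac - val_frac) becomes the test set.
    """
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded for reproducibility
    n_train = int(round(len(shuffled) * train_frac))
    n_val = int(round(len(shuffled) * val_frac))
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


# Hypothetical file names standing in for the 9000 labeled images.
image_names = [f"img_{i:05d}.jpg" for i in range(9000)]
train, val, test = split_dataset(image_names)
print(len(train), len(val), len(test))  # 6300 1800 900
```

When augmented copies of one source frame exist, one option is to split by source frame or video rather than by individual image, so that near-duplicates do not end up in both the training and test sets.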

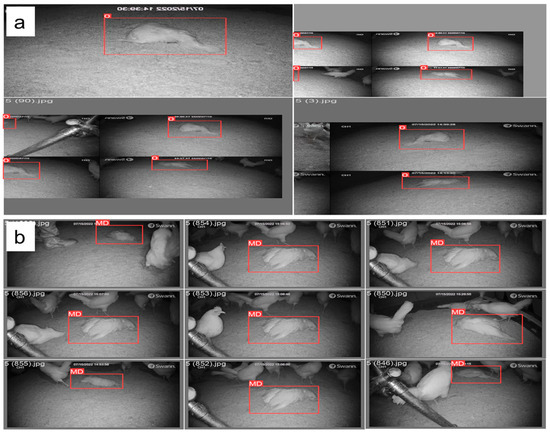

Figure 2.

Pre-processing of mortality image datasets based on (a) training batches and (b) validation batch labels used to detect mortality hens in cage-free houses. “0” represents the mortality label in the image.
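As an illustration of the YOLO label format mentioned above, the sketch below converts a pixel-coordinate bounding box into one label line: a class index followed by the box center, width, and height, all normalized by the image size. The helper name `to_yolo_line` and the example box are hypothetical; the class index 0 and the 1920 × 1080 frame size follow the text.

```python
def to_yolo_line(class_id, x_min, y_min, x_max, y_max, img_w, img_h):
    """Convert a pixel-coordinate box to a YOLO-format label line.

    Output fields: class x_center y_center width height (all in [0, 1]).
    """
    x_center = (x_min + x_max) / 2 / img_w
    y_center = (y_min + y_max) / 2 / img_h
    width = (x_max - x_min) / img_w
    height = (y_max - y_min) / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


# Hypothetical box around a dead hen in a 1920 x 1080 frame; class 0 = mortality.
line = to_yolo_line(0, 480, 270, 1440, 810, 1920, 1080)
print(line)  # 0 0.500000 0.500000 0.500000 0.500000
```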

Table 2.

Labeled-image datasets used for mortality detection models under various settings.

2.3. YOLO Architecture

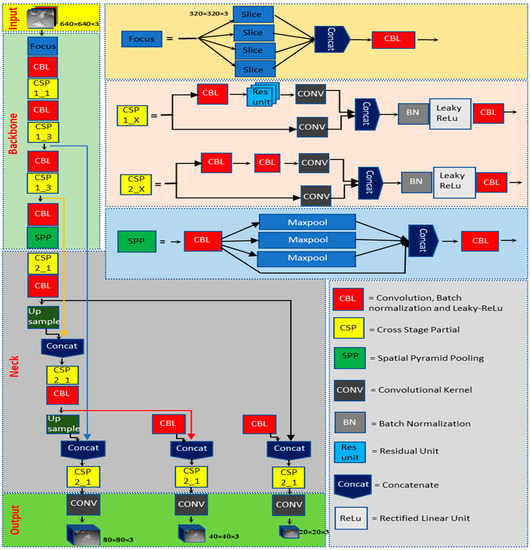

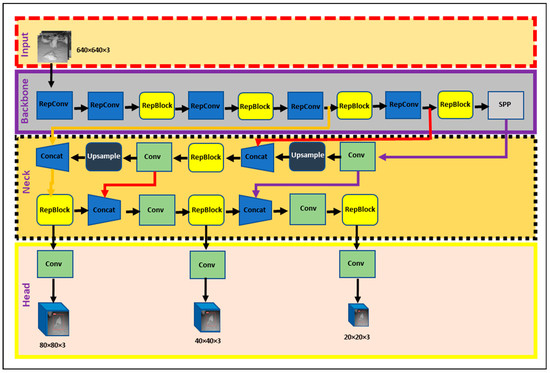

Object detection has been an active area of research in computer vision. Among the popular algorithms in this domain, YOLO (You Only Look Once) algorithms have successfully detected objects such as hens with high accuracy and in real time [15,16,17,19]. YOLOv5 and YOLOv6 are two variants of the YOLO algorithm, each with its own network architecture. In this study, YOLOv5-MD (YOLOv5s-MD, YOLOv5m-MD, and YOLOv5x-MD) and YOLOv6-MD (YOLOv6s-MD, YOLOv6m-MD, and YOLOv6l-relu-MD) models were compared to identify the best one. The architectures of both YOLO-MD families consist of three major parts: backbone, neck, and head. Within each family, the model types differ in their number of parameters. The detailed architectures of YOLOv5-MD and YOLOv6-MD used for this research are shown in Figure 3 and Figure 4, respectively.

Figure 3.

YOLOv5-MD model used in mortality detection.

Figure 4.

YOLOv6-MD model used in mortality detection.

2.3.1. Backbone

The main difference between YOLOv5-MD and YOLOv6-MD is the backbone network architecture. YOLOv5-MD uses a variant of the efficientNet architecture, while YOLOv6-MD uses a pre-trained CNN with an efficientRep backbone or CSP-Backbone for MD. EfficientNet is a family of neural networks designed for efficient and effective model scaling [25]. YOLOv5-MD’s backbone uses convolutional layers at different scales to perform feature extraction from labeled MD image datasets with the help of Spatial pyramid pooling (SPP), which helps it achieve good performance on object-detection tasks while being lightweight and efficient [26]. On the other hand, YOLOv6-MD’s backbone network is typically a pre-trained CNN with SPP and efficientRep architecture [27]. SPP helps max-pooling layers reduce the feature map’s size while maintaining the most important features for MD. EfficientRep backbone is designed to both effectively use the computational resources of hardware such as GPUs and possess robust feature representation abilities compared to the CSP-Backbone utilized by YOLOv5 [28]. In summary, YOLOv5-MD and YOLOv6-MD use different backbone network architectures for feature extraction, affecting their efficiency and performance on object-detection tasks.

2.3.2. Neck

The neck component is essential in YOLOv5-MD and YOLOv6-MD for connecting the backbone to the MD head. YOLO-MD versions employ convolutional layers in their necks, but their specific architectures differ. YOLOv5-MD’s neck utilizes a Feature Pyramid Network (FPN) that includes a top-down and bottom-up path, which captures multi-scale objects with high accuracy [29]. In contrast, YOLOv6 features a more efficient neck design known as the Rep-PAN Neck [30] that utilizes convolutional, pooling, and up-sampling layers to manipulate the backbone network’s features to the desired scale and resolution for the heads. This Rep-PAN Neck design is based on hardware-aware neural network architecture concepts that balance accuracy and speed while optimizing hardware resources [31]. Therefore, YOLOv5-MD and YOLOv6-MD differ in their neck designs, with YOLOv5-MD using an FPN and YOLOv6-MD utilizing a more efficient Rep-PAN Neck design.

2.3.3. Head

The head component in YOLOv5-MD and YOLOv6-MD is responsible for the final stage of object detection by generating predictions from the extracted features. In YOLOv5-MD, the head comprises fully connected layers and a convolutional layer that predicts the detected mortality objects’ bounding boxes and class probabilities. In addition, it has three output layers: a detection layer that predicts object class probabilities, a localization layer that forecasts bounding box coordinates, and an anchoring layer that defines the prior box shapes and scales [32]. The YOLOv5-MD head is designed to be resource-efficient, enabling real-time object detection across a range of devices. On the other hand, YOLOv6’s Decoupled Head design focuses on improving hardware utilization while maintaining high detection accuracy [28]. Overall, YOLOv5-MD and YOLOv6-MD have different head structures, but they aim to generate accurate and efficient object detection predictions.

2.4. Performance Metrics

2.4.1. Precision

Precision measures how many of the mortality detections made by the system were correct. It is calculated by dividing the true positives (TP) by the sum of TP and false positives (FP):

$$\text{Precision} = \frac{TP}{TP + FP}$$

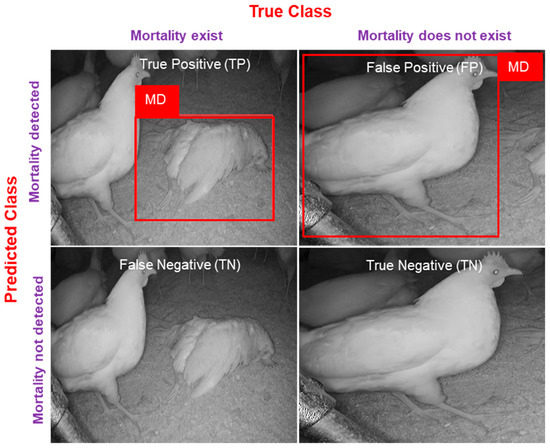

where TP, FP, FN, and TN represent true positives (mortality is present in the image, and the model detects it correctly), false positives (mortality is not present in the image, but the model detects it), false negatives (mortality is present in the image, but the model is unable to detect it), and true negatives (mortality is not present in the image, and the model does not detect it), respectively. These evaluation metrics are visualized in Figure 5.

Figure 5.

Confusion matrix for evaluating mortality detection.

2.4.2. Recall

Recall measures how many of the dead hens actually present in an image were correctly identified by the system. It is calculated as the ratio of TP to the sum of TP and false negatives (FN):

$$\text{Recall} = \frac{TP}{TP + FN}$$

2.4.3. Mean Average Precision

Mean Average Precision (mAP) is a widely used metric in object-detection tasks that measures the system’s overall accuracy across multiple object classes [33]. It is calculated based on the precision and recall values at a certain threshold or over a range of thresholds. The most common mAP calculation is based on the area under the precision–recall curve and is calculated as follows:

$$\text{mAP} = \frac{1}{C} \sum_{i=1}^{C} AP_i$$

where $AP_i$ is the average precision of the $i$th category, and $C$ represents the total number of categories of MD.
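To make the area-under-the-curve idea concrete, the following Python sketch computes the average precision of one category from a few precision–recall points and then averages over categories. It assumes all-point interpolation, which is one common convention; detection toolkits may use a different interpolation, and the sample points are hypothetical.

```python
def average_precision(recalls, precisions):
    """Area under the precision-recall curve (all-point interpolation).

    recalls must be sorted in ascending order, with one precision per recall.
    """
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Build the precision envelope: make p non-increasing from left to right.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum the rectangle areas between consecutive recall values.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_average_precision(ap_per_category):
    """Average the per-category AP values over all C categories."""
    return sum(ap_per_category) / len(ap_per_category)


# Hypothetical precision-recall points for a single category.
ap = average_precision([0.5, 1.0], [1.0, 0.5])
print(ap)                            # 0.75
print(mean_average_precision([ap]))  # 0.75
```

With a single category (C = 1), the mean over categories equals that category's AP.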

2.4.4. F1-Score

The F1-score is the harmonic mean of precision and recall and is a widely used metric in machine learning for evaluating the performance of binary classification models [34]. It combines the precision and recall metrics into a single value that indicates the balance between them. The formula for the F1-score is as follows:

$$\text{F1-score} = 2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$

In other words, the F1-score gives equal importance to precision and recall, with a higher score indicating better performance. For example, a perfect F1-score of 1.0 indicates that the model has both perfect precision and perfect recall, while a score of 0 indicates that precision, recall, or both are zero.
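As a worked illustration of the three count-based metrics in this section, the sketch below computes precision, recall, and F1-score from TP, FP, and FN counts. The counts are hypothetical and are not taken from this study's results; returning 0.0 for an empty denominator is an assumption of this sketch.

```python
def detection_metrics(tp, fp, fn):
    """Return (precision, recall, f1) computed from detection counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Hypothetical counts: 90 correct detections, 10 false alarms, 10 misses.
precision, recall, f1 = detection_metrics(tp=90, fp=10, fn=10)
print(round(precision, 3), round(recall, 3), round(f1, 3))  # 0.9 0.9 0.9
```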

2.4.5. Loss Function

The YOLO-MD object-detection algorithm trains the model using a custom loss function called the “YOLO Loss” (Figure 6). The YOLO-MD Loss combines several different loss terms that penalize the model for incorrect predictions and encourage it to make accurate predictions [35]. For example, the YOLO-MD Loss consists of the following loss terms:

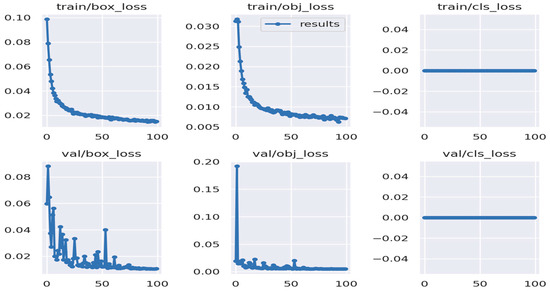

Figure 6.

Sample loss function results for the YOLO-MD model, where train, val, box, obj, and cls represent training, validation, bounding box, objectness, and class, respectively.

- Objectness loss: This loss term encourages the model to correctly predict whether a mortality object is present in each grid cell. Objectness loss (λobj) is computed between the predicted and ground truth objectness scores by the binary cross-entropy loss [36].

- Classification loss: This loss term encourages the model to correctly classify the detected mortality objects into their respective classes. Classification loss (λcls) is computed as the cross-entropy loss between the predicted class probabilities and the ground truth class labels [36].

- Regression loss: This loss term penalizes the model for incorrect predictions of the bounding box coordinates and dimensions [36]. Regression loss (λreg) is computed as the sum of the smooth L1 loss between the predicted and ground truth x and y coordinates, the smooth L1 loss between the predicted and ground truth width and height, and the focal loss between the predicted and ground truth confidence scores [29].

The YOLO-MD Loss is computed as the weighted sum of the mortality objectness loss, classification loss, and regression loss. In general, the importance of each term in the loss function is determined by the user, who sets the corresponding weights accordingly. The YOLO Loss is minimized during the training process using backpropagation and gradient descent, with the goal of reducing the overall prediction error of the model [37].
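The weighted-sum structure of the loss can be sketched in a few lines of Python. This is a minimal illustration rather than the training code used in this study: the default weights `w_obj`, `w_cls`, and `w_reg` are hypothetical placeholders, the helpers operate on single scalar values, and the focal-loss component of the regression term is omitted for brevity.

```python
import math


def binary_cross_entropy(p, y, eps=1e-7):
    """Binary cross-entropy for one predicted probability p and label y."""
    p = min(max(p, eps), 1 - eps)  # clamp to avoid log(0)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def smooth_l1(pred, target, beta=1.0):
    """Smooth L1 loss: quadratic for small errors, linear for large ones."""
    diff = abs(pred - target)
    return 0.5 * diff * diff / beta if diff < beta else diff - 0.5 * beta


def total_loss(obj_loss, cls_loss, reg_loss, w_obj=1.0, w_cls=0.5, w_reg=0.05):
    """Weighted sum of the three loss terms (weights are hypothetical)."""
    return w_obj * obj_loss + w_cls * cls_loss + w_reg * reg_loss


# One hypothetical prediction: objectness 0.9 for a cell that contains a dead
# hen, class probability 0.8, and a box center off by 0.1 in x and 0.2 in y.
obj = binary_cross_entropy(0.9, 1)
cls = binary_cross_entropy(0.8, 1)
reg = smooth_l1(0.6, 0.5) + smooth_l1(0.7, 0.5)
print(total_loss(obj, cls, reg))
```

Raising one weight relative to the others makes training prioritize that term, which is how the user-set weights described above steer the optimization.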

2.5. Computational Parameters

A high-performance computational configuration was utilized to detect mortality. This study employed various configurations on the Oracle cloud to train, validate, and test the image datasets. It has been observed that a higher number of computational parameters can enhance the model’s speed and detection accuracy. The system configuration comprises a 64-core OCPU for CPU processing and four NVIDIA®A10 GPUs, each with 24 GB of memory, for GPU acceleration. The operating system employed is Ubuntu 22.10 (Kinetic Kudu), and the accelerated environment is NVIDIA CUDA. The system has ample storage capacity with 1024 GB of memory and two 7.68 TB NVMe SSD drives. Torch 1.7.0, Torch-vision 0.8.1, OpenCV-python 4.1.1, and NumPy 1.18.5 were the libraries utilized in the system. Together, these components create a high-performance system capable of efficiently running deep learning models for various applications, including MD. In addition, the YOLO model underwent 100 epochs of training with a batch size of 16.

3. Results

3.1. Model Comparison

This study proposed the YOLO-MD model as an effective solution for detecting dead hens in the CF housing system. Table 3 shows the performance and results obtained from validating the proposed models’ effectiveness in this experiment. When comparing different object-detection models, it is important to consider various metrics such as recall, FPS, mAP, GPU usage, and time taken [16,17]. For example, YOLOv5x-MD achieved the highest recall rate of 100%, indicating that it detected all target objects in the images, while YOLOv5s-MD had a recall rate of 98.4%. Frames per second (FPS) measures the number of frames processed by the model per second, indicating the speed at which the model can operate. YOLOv5s-MD had the highest FPS (55.6) among the models we developed, which means faster image processing than the other models, while YOLOv5x-MD had the lowest FPS (29.6), indicating slower processing. In terms of accuracy, mAP is a common metric that measures the model’s ability to detect objects of different sizes and types [15]. YOLOv5-MD models achieved higher mAP@0.50 scores (99.5%) than the YOLOv6-MD models, that is, at an intersection-over-union (IoU) threshold of 0.5. However, at higher IoU thresholds, the mAP scores decreased for the YOLOv6-MD models, indicating that the models struggled to detect smaller or more complex objects. Lastly, GPU usage and time taken are important factors when selecting a model [16,17]. YOLOv5x-MD required the most GPU memory at 4.95 GB, while YOLOv5s-MD required the least at 1.04 GB. YOLOv5s-MD also took the least time at 0.4 h, while YOLOv6l-relu-MD took the longest at 1.2 h. The training time of YOLOv5s-MD was the lowest because of its smaller model size and fewer parameters [16,17]. Thus, when speed and efficiency are crucial in addition to detection performance, YOLOv5s-MD is the preferred option in this study. This study’s findings might help researchers and producers select the best MD model or choose a model for their specific object-detection task, based on their priorities among detection performance metrics such as speed, GPU usage, and efficiency.
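For readers who want to reproduce an FPS figure, the following sketch shows one way to time a per-frame processing function. It is an assumption about methodology rather than the procedure used in this study; `measure_fps`, `latency_ms`, and the stand-in workload are hypothetical names.

```python
import time


def measure_fps(process_frame, frames):
    """Run process_frame on every frame and return frames per second."""
    start = time.perf_counter()
    for frame in frames:
        process_frame(frame)
    elapsed = time.perf_counter() - start
    return len(frames) / elapsed if elapsed > 0 else float("inf")


def latency_ms(fps):
    """Average per-frame latency in milliseconds for a given FPS."""
    return 1000.0 / fps


# Stand-in workload; a real measurement would call the detector instead.
fps = measure_fps(lambda frame: sum(range(1000)), list(range(50)))
print(f"{fps:.1f} FPS")
print(f"{latency_ms(55.6):.2f} ms per frame at 55.6 FPS")
```

At the reported 55.6 FPS, the average latency is about 18 ms per frame, which is well above the 15 FPS rate at which the videos were recorded.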

Table 3.

Performance metrics of different YOLO Models used for mortality detection using 2200 images in each of the six models.

3.2. Environmental Condition

The CF houses mainly consist of litter and broken feathers from the birds [23]. However, in the presence of such litter, the dead bodies of the birds can get covered or buried, making it difficult to detect them accurately. This situation can lead to the decay of hens, resulting in unpleasant odors and various other issues. Therefore, early MD is crucial to prevent such problems, especially if a bird dies due to a severe issue. Therefore, this study considers the factors of litter and feathers in detecting dead birds with deep learning models in research CF houses.

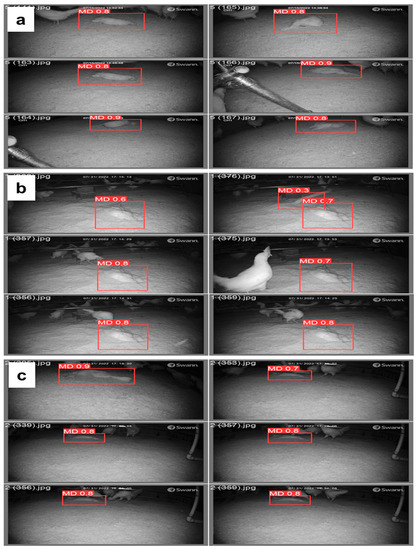

3.2.1. Feathers Covering

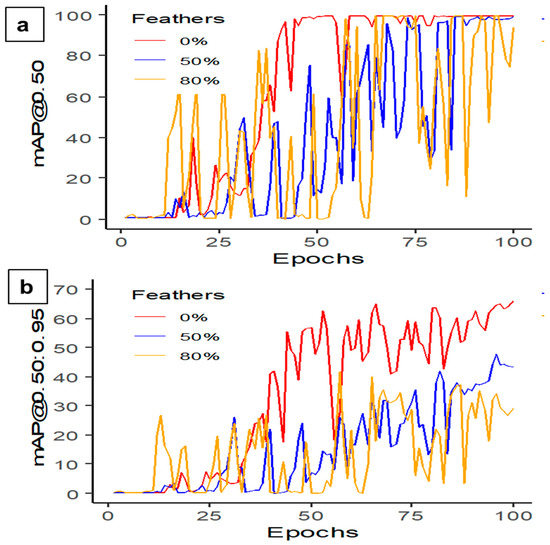

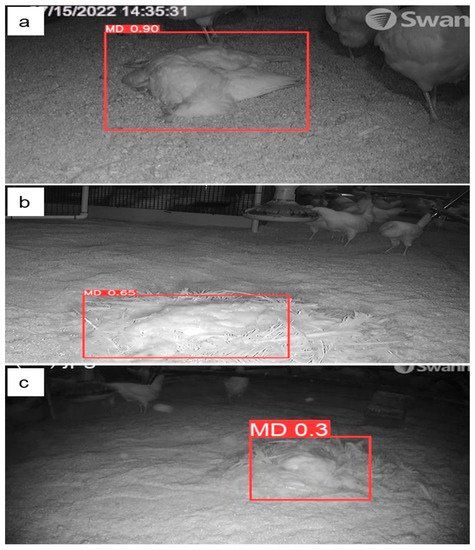

Table 4 summarizes the performance of YOLOv5s-MD at different feather coverage percentages (0%, 50%, and 80%). Precision remained relatively consistent across all three conditions, and recall was highest, at 100%, for 50% feather coverage. The mAP and F1-scores decreased slightly as the feather coverage percentage increased. The mAP@0.50 score was highest for 0% feather coverage at 99.4% and decreased to 98.0% for 80% feather coverage (Figure 7a). The mAP@0.50:0.95 score decreased significantly with increased feather coverage, with 0% feather coverage achieving a score of 65.5%, compared to 41.6% for 80% feather coverage (Figure 7b). Hence, 0% feather coverage resulted in higher MD rates (Figure 8). The mAP scores increased as training progressed from 0 to 100 epochs at a batch size of 16. However, there is a trade-off between feather coverage and overall mAP performance, with higher coverage resulting in decreased performance. Therefore, early mortality detection or proper cleaning and maintenance of CF housing is required to increase detection.
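The gap between mAP@0.50 and mAP@0.50:0.95 is easier to interpret with the underlying overlap measure in view. The sketch below computes the intersection over union (IoU) of two boxes and lists the ten IoU thresholds (0.50 to 0.95 in steps of 0.05) over which mAP@0.50:0.95 is conventionally averaged. The boxes are hypothetical and use (x_min, y_min, x_max, y_max) coordinates.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


# IoU thresholds from 0.50 to 0.95 in steps of 0.05.
IOU_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]

# Hypothetical predicted and ground-truth boxes, offset by 2 pixels in x.
overlap = iou((0, 0, 10, 10), (2, 0, 12, 10))
passed = [t for t in IOU_THRESHOLDS if overlap >= t]
print(round(overlap, 3))  # 0.667
print(passed)             # [0.5, 0.55, 0.6, 0.65]
```

A loosely localized box like this one counts as a correct detection at the 0.50 threshold but as a miss at 0.70 and above, which is why partial occlusion can lower mAP@0.50:0.95 much more than mAP@0.50.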

Table 4.

Performance of YOLOv5s model on mortality detection at different feather conditions.

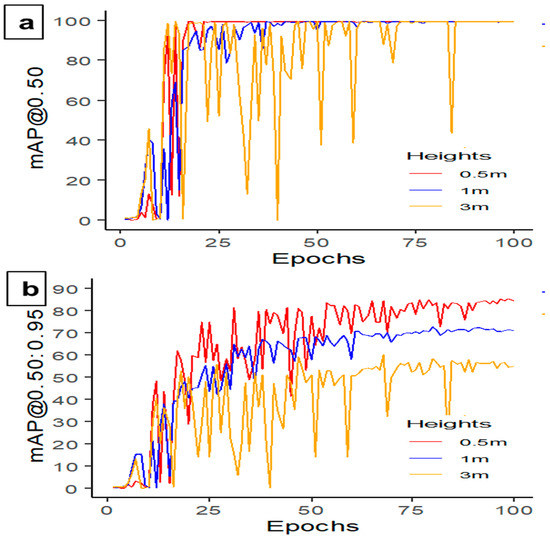

Figure 7.

Mean average precision performance (a) mAP@0.50 and (b) mAP@0.50:0.95 in detecting dead hens at different percentages of feather coverage using the YOLOv5s-MD model at 100 epochs and a batch size of 16.

Figure 8.

Variation of mortality detection with feather coverage (a) 0% or no feathers, (b) 50% feathers, and (c) 80% feathers in cage-free housing using the YOLOv5s model in test images.

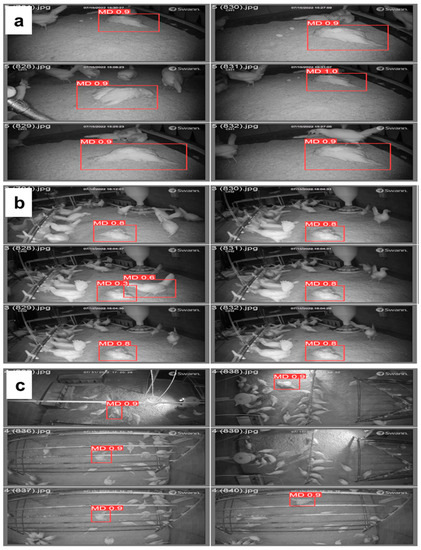

3.2.2. Litter Coverage

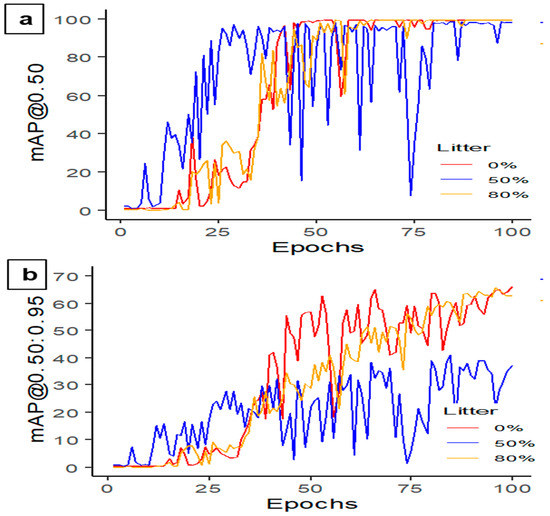

In this study, the performance of YOLOv5s-MD was evaluated at three levels of litter coverage in the image: 0%, 50%, and 80% (Table 5). YOLOv5s-MD achieved the highest precision rate of 99.9% when the litter coverage was 80%, indicating accurate detection of dead hens on the litter floor through image analysis. Conversely, the model with 50% litter coverage had the lowest precision rate of 97.1%, suggesting difficulties in accurately detecting dead hens when clutter is present in the image. However, all models demonstrated high recall rates ranging from 97.5% to 100%, indicating their ability to find the target objects in the image. YOLOv5s-MD with 80% litter coverage achieved the highest mAP@0.50 (Figure 9a) and F1-score of 99.5% and 100%, respectively, indicating better overall detection performance. Similarly, the model with the highest litter coverage (80%, Figure 9b) exhibited the highest mAP@0.50:0.95 score, whereas lower coverage levels posed potential challenges because behaviors such as resting, sitting, and dustbathing may appear similar to a dead hen at certain points. The 80% litter coverage model performs better, providing more visibility of a small hen’s body parts. For hens with little or no litter coverage, the models may interpret the hens as dustbathing, sitting, or sleeping, which can affect overall performance. Overall, the results indicate that YOLOv5s-MD can effectively detect litter-covered dead birds in images, but the level of litter coverage in the image may impact its performance (Figure 10).

Table 5.

YOLOv5s model performance on mortality detection across various litter accumulation conditions.

Figure 9.

Mean average precision performance (a) mAP@0.50 and (b) mAP@0.50:0.95 using the YOLOv5s-MD model at 100 epochs and a batch size of 16 in detecting dead hens at different percentages of litter coverage.

Figure 10.

Variation of mortality detection with litter coverage (a) 0% litter or no litter, (b) 50% litter, and (c) 80% litter in cage-free housing using the YOLOv5s model in test images.

3.2.3. Camera Settings

Camera height plays a crucial role in determining object-detection performance. When the performance of YOLOv5s-MD was compared at various camera heights above the dead hen, the model achieved perfect precision, recall, and F1-score of 100% at a height of 0.5 m (Table 6). As the camera height increased to 1 m and 3 m, the recall rate decreased to 99% and 94.8%, respectively, indicating the model’s difficulty in detecting objects from greater heights. Regarding accuracy, YOLOv5s-MD achieved its highest mAP@0.50 of 99.5% at two camera heights, 0.5 m and 1 m (Figure 11a). However, the model’s ability to detect smaller or more complex objects decreased at stricter IoU thresholds, as evidenced by the decline in mAP@0.50:0.95 from 85.3% to 59.9% (Figure 11b). Overall, the YOLOv5s-MD model performed well across different camera heights, with the best results achieved at 0.5 m (Figure 12). Nevertheless, the model’s performance deteriorated at greater camera heights, which should be considered for specific applications. For instance, additional labeled-image datasets are necessary to enhance the model’s performance at higher or ceiling heights. In addition, a ceiling-height camera captures a larger portion of the CF house than a ground-level camera [17]. Therefore, combining cameras at the optimal ground height and at ceiling height in one system can contribute to better detection and removal of dead birds from the farm.

Table 6.

YOLOv5s model performance on mortality detection at different camera heights from the dead hen.

Figure 11.

Mean average precision results (a) mAP@0.50 and (b) mAP@0.50:0.95 observed at different camera heights using the YOLOv5s-MD model at 100 epochs and a batch size of 16.

Figure 12.

Variation of mortality detection with different camera heights (a) 0.5 m, (b) 1 m, and (c) 3 m in cage-free housing using the YOLOv5s model in test images.

The limitation of this study was the use of limited mortality image datasets, which might not fully represent the variable conditions found in commercial CF housing. However, these datasets provide an overview of such conditions. Additionally, there were instances where resting birds were mistakenly identified as dead birds, highlighting the need to introduce a thermal camera for accurate identification. The thermal camera would measure the hen’s body temperature, allowing differentiation between live and dead birds. In future research, it would be valuable to test and implement this study in real-world commercial CF houses, evaluating the model’s performance under authentic conditions. Furthermore, to enhance the MD model, further work can be conducted using larger image datasets incorporating a thermal camera and temperature and humidity sensors as additional tools.

4. Conclusions

This study developed and tested different YOLO deep learning models and found that YOLOv5s-MD had higher accuracy, a shorter processing time, and lower GPU usage than the other models tested. Additionally, this study revealed that feather coverage and litter coverage affected the model’s performance, with 0% feather covering achieving the highest mAP@0.50 and 80% litter covering reaching the highest precision, recall, and mAP. Furthermore, the model’s performance varied with camera height from the target object, with the best precision, recall, and mAP achieved at 0.5 m.

The main achievement of this study is establishing a foundation for developing a mortality scanning system specifically designed for commercial CF houses. The YOLOv5s-MD model, developed and validated in this research, shows promise for accurately detecting and monitoring mortalities within the CF housing system. Future work will focus on deploying the YOLOv5s-MD model on a robot or a movable camera device capable of navigating commercial CF housing systems, which will enable real-time testing and evaluation of the model’s performance in practical scenarios. The model will also be refined as necessary to improve its accuracy and reliability. Through these future investigations, the goal is to integrate the YOLOv5s-MD model into a functional mortality scanning system for commercial CF houses, which could improve the monitoring and management of mortalities and ultimately enhance welfare and productivity in the poultry industry.

Author Contributions

Methodology, R.B.B. and L.C.; Formal analysis, R.B.B. and L.C.; Investigation, R.B.B., S.S., X.Y. and L.C.; Resources, L.C.; Writing—original draft, R.B.B. and L.C.; Writing—review & editing, L.C.; Project administration, L.C.; Funding acquisition, L.C. All authors have read and agreed to the published version of the manuscript.

Funding

USDA-NIFA (2023-68008-39853); Egg Industry Center; Georgia Research Alliance (Venture Fund); Oracle America (Oracle for Research Grant, CPQ-2060433); UGA CAES Dean’s Office Research Fund; UGA COVID Recovery Research Fund; Hatch projects (GEO00895; Accession Number: 1021519) and (GEO00894; Accession Number: 1021518).

Data Availability Statement

Data will be made available upon reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Brochu, N.M.; Guerin, M.T.; Varga, C.; Lillie, B.N.; Brash, M.L.; Susta, L. A Two-Year Prospective Study of Small Poultry Flocks in Ontario, Canada, Part 2: Causes of Morbidity and Mortality. J. Vet. Diagn. Investig. 2019, 31, 336–342.

- Cadmus, K.J.; Mete, A.; Harris, M.; Anderson, D.; Davison, S.; Sato, Y.; Helm, J.; Boger, L.; Odani, J.; Ficken, M.D. Causes of Mortality in Backyard Poultry in Eight States in the United States. J. Vet. Diagn. Investig. 2019, 31, 318–326.

- Dessie, T.; Ogle, B. Village Poultry Production Systems in the Central Highlands of Ethiopia. Trop. Anim. Health Prod. 2001, 33, 521–537.

- Yerpes, M.; Llonch, P.; Manteca, X. Factors Associated with Cumulative First-Week Mortality in Broiler Chicks. Animals 2020, 10, 310.

- Ekiri, A.B.; Armson, B.; Adebowale, K.; Endacott, I.; Galipo, E.; Alafiatayo, R.; Horton, D.L.; Ogwuche, A.; Bankole, O.N.; Galal, H.M. Evaluating Disease Threats to Sustainable Poultry Production in Africa: Newcastle Disease, Infectious Bursal Disease, and Avian Infectious Bronchitis in Commercial Poultry Flocks in Kano and Oyo States, Nigeria. Front. Vet. Sci. 2021, 8, 730159.

- Gray, H.; Davies, R.; Bright, A.; Rayner, A.; Asher, L. Why Do Hens Pile? Hypothesizing the Causes and Consequences. Front. Vet. Sci. 2020, 7, 616836.

- Edwan, E.; Qassem, M.A.; Al-Roos, S.A.; Elnaggar, M.; Ahmed, G.; Ahmed, A.S.; Zaqout, A. Design and Implementation of Monitoring and Control System for a Poultry Farm. In Proceedings of the International Conference on Promising Electronic Technologies (ICPET), Jerusalem, Israel, 16–17 December 2020; pp. 44–49.

- Saeed, M.; Abbas, G.; Alagawany, M.; Kamboh, A.A.; Abd El-Hack, M.E.; Khafaga, A.F.; Chao, S. Heat Stress Management in Poultry Farms: A Comprehensive Overview. J. Therm. Biol. 2019, 84, 414–425.

- Wibisono, F.M.; Wibisono, F.J.; Effendi, M.H.; Plumeriastuti, H.; Hidayatullah, A.R.; Hartadi, E.B.; Sofiana, E.D. A Review of Salmonellosis on Poultry Farms: Public Health Importance. Syst. Rev. Pharm. 2020, 11, 481–486.

- Blas, A.; Diezma, B.; Moya, A.; Gomez-Martinez, C. Early Detection of Mortality in Poultry Production Using High Resolution Thermography. Available online: https://www.visavet.es/en/early-detection-of-mortality-in-poultry-production-using-high-resolution-thermography/34=1264/ (accessed on 10 March 2023).

- Muvva, V.V.; Zhao, Y.; Parajuli, P.; Zhang, S.; Tabler, T.; Purswell, J. Automatic Identification of Broiler Mortality Using Image Processing Technology; American Society of Agricultural and Biological Engineers: St. Joseph, MI, USA, 2018; p. 1.

- Zhu, W.; Lu, C.; Li, X.; Kong, L. Dead Birds Detection in Modern Chicken Farm Based on SVM. In Proceedings of the 2nd International Congress on Image and Signal Processing, Tianjin, China, 17–19 October 2009; pp. 1–5.

- Bao, Y.; Lu, H.; Zhao, Q.; Yang, Z.; Xu, W.; Bao, Y.; Xu, W. Detection system of dead and sick chickens in large scale farms based on artificial intelligence. Math. Biosci. Eng. 2021, 18, 6117–6135.

- Sentas, A.; Tashiev, İ.; Küçükayvaz, F.; Kul, S.; Eken, S.; Sayar, A.; Becerikli, Y. Performance Evaluation of Support Vector Machine and Convolutional Neural Network Algorithms in Real-Time Vehicle Type and Color Classification. Evol. Intell. 2020, 13, 83–91.

- Yang, X.; Chai, L.; Bist, R.B.; Subedi, S.; Wu, Z. A Deep Learning Model for Detecting Cage-Free Hens on the Litter Floor. Animals 2022, 12, 1983.

- Subedi, S.; Bist, R.; Yang, X.; Chai, L. Tracking Pecking Behaviors and Damages of Cage-Free Laying Hens with Machine Vision Technologies. Comput. Electron. Agric. 2023, 204, 107545.

- Bist, R.B.; Yang, X.; Subedi, S.; Chai, L. Mislaying behavior detection in cage-free hens with deep learning technologies. Poult. Sci. 2023, 102, 102729.

- Guo, Y.; Regmi, P.; Ding, Y.; Bist, R.B.; Chai, L. Automatic Detection of Brown Hens in Cage Free Houses with Deep Learning Methods. Poult. Sci. 2023, 100, 102784.

- Subedi, S.; Bist, R.; Yang, X.; Chai, L. Tracking Floor Eggs with Machine Vision in Cage-Free Hen Houses. Poult. Sci. 2023, 102, 102637.

- Liu, H.W.; Chen, C.H.; Tsai, Y.C.; Hsieh, K.W.; Lin, H.T. Identifying images of dead chickens with a chicken removal system integrated with a deep learning algorithm. Sensors 2021, 21, 3579.

- Li, G.; Chesser, G.D.; Purswell, J.L.; Magee, C.; Gates, R.S.; Xiong, Y. Design and Development of a Broiler Mortality Removal Robot. Appl. Eng. Agric. 2022, 38, 853–863.

- Chen, C.; Kong, X.; Wang, Q.; Deng, Z. A Method for Detecting the Death State of Caged Broilers Based on Improved Yolov5. SSRN Electron. J. 2022, 32.

- Bist, R.B.; Chai, L. Advanced Strategies for Mitigating Particulate Matter Generations in Poultry Houses. Appl. Sci. 2022, 12, 11323.

- Bist, R.B.; Subedi, S.; Chai, L.; Yang, X. Ammonia Emissions, Impacts, and Mitigation Strategies for Poultry Production: A Critical Review. J. Environ. Manag. 2023, 328, 116919.

- Tan, M.; Le, Q. Efficientnet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, ICML 2019, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114. Available online: http://proceedings.mlr.press/v97/tan19a/tan19a.pdf (accessed on 8 January 2023).

- Wang, C.-Y.; Liao, H.-Y.M.; Wu, Y.-H.; Chen, P.-Y.; Hsieh, J.-W.; Yeh, I.-H. CSPNet: A New Backbone That Can Enhance Learning Capability of CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 390–391.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W. YOLOv6: A Single-Stage Object Detection Framework for Industrial Applications. arXiv 2022, arXiv:2209.02976.

- Lin, Y.; Feng, P.; Guan, J.; Wang, W.; Chambers, J. IENet: Interacting Embranchment One Stage Anchor Free Detector for Orientation Aerial Object Detection. arXiv 2019, arXiv:1912.00969.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

- Weng, K.; Chu, X.; Xu, X.; Huang, J.; Wei, X. EfficientRep: An Efficient Repvgg-Style ConvNets with Hardware-Aware Neural Network Design. arXiv 2023, arXiv:2302.00386.

- Jocher, G. YOLOv5 (6.0/6.1) Brief Summary, Issue #6998, Ultralytics/Yolov5. Available online: https://github.com/ultralytics/yolov5/issues/6998 (accessed on 10 March 2023).

- Padilla, R.; Netto, S.L.; Da Silva, E.A. A Survey on Performance Metrics for Object-Detection Algorithms. In Proceedings of the International Conference on Systems, Signals and Image Processing (IWSSIP), Niterói, Brazil, 1–3 July 2020; pp. 237–242.

- Chicco, D.; Jurman, G. The Advantages of the Matthews Correlation Coefficient (MCC) over F1 Score and Accuracy in Binary Classification Evaluation. BMC Genom. 2020, 21, 1–13.

- Roy, A.M.; Bhaduri, J. Real-Time Growth Stage Detection Model for High Degree of Occultation Using DenseNet-Fused YOLOv4. Comput. Electron. Agric. 2022, 193, 106694.

- Tesema, S.N.; Bourennane, E.-B. DenseYOLO: Yet Faster, Lighter and More Accurate YOLO. In Proceedings of the 11th IEEE Annual Information Technology, Electronics and Mobile Communication Conference (IEMCON), Vancouver, BC, Canada, 4–7 November 2020; pp. 0534–0539.

- Amrani, M.; Bey, A.; Amamra, A. New SAR target recognition based on YOLO and very deep multi-canonical correlation analysis. Int. J. Remote Sens. 2022, 43, 5800–5819.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).