Munsell Soil Colour Classification Using Smartphones through a Neuro-Based Multiclass Solution

Abstract

1. Introduction

2. Methodology

2.1. Dataset and Precedent Studies

2.2. Artificial Neural Networks

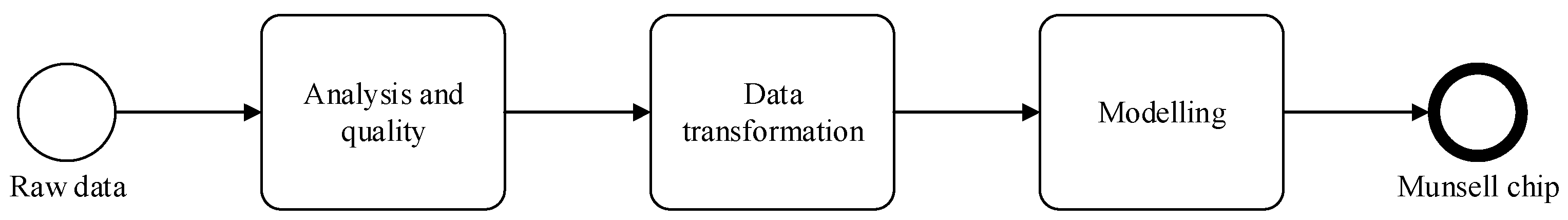

2.3. Method

3. Experiments

4. Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ANN | Artificial Neural Network |
| B | Blue |
| BC | Blue–Canon |
| BG | Blue-Green |
| BN | Blue–Nokia |
| BS | Blue–Samsung |
| C | Chroma |
| FS | Fuzzy System |
| G | Green |
| GC | Green–Canon |
| GN | Green–Nokia |
| GS | Green–Samsung |
| GY | Green-Yellow |
| H | Hue |
| HVC | Hue, Value, and Chroma |
| MCC | Munsell Colour Chart |
| P | Purple |
| PB | Purple-Blue |
| R | Red |
| RC | Red–Canon |
| RGB | Red, Green, and Blue |
| RN | Red–Nokia |
| RP | Red-Purple |
| RS | Red–Samsung |
| V | Value |
| Y | Yellow |
| YR | Yellow-Red |


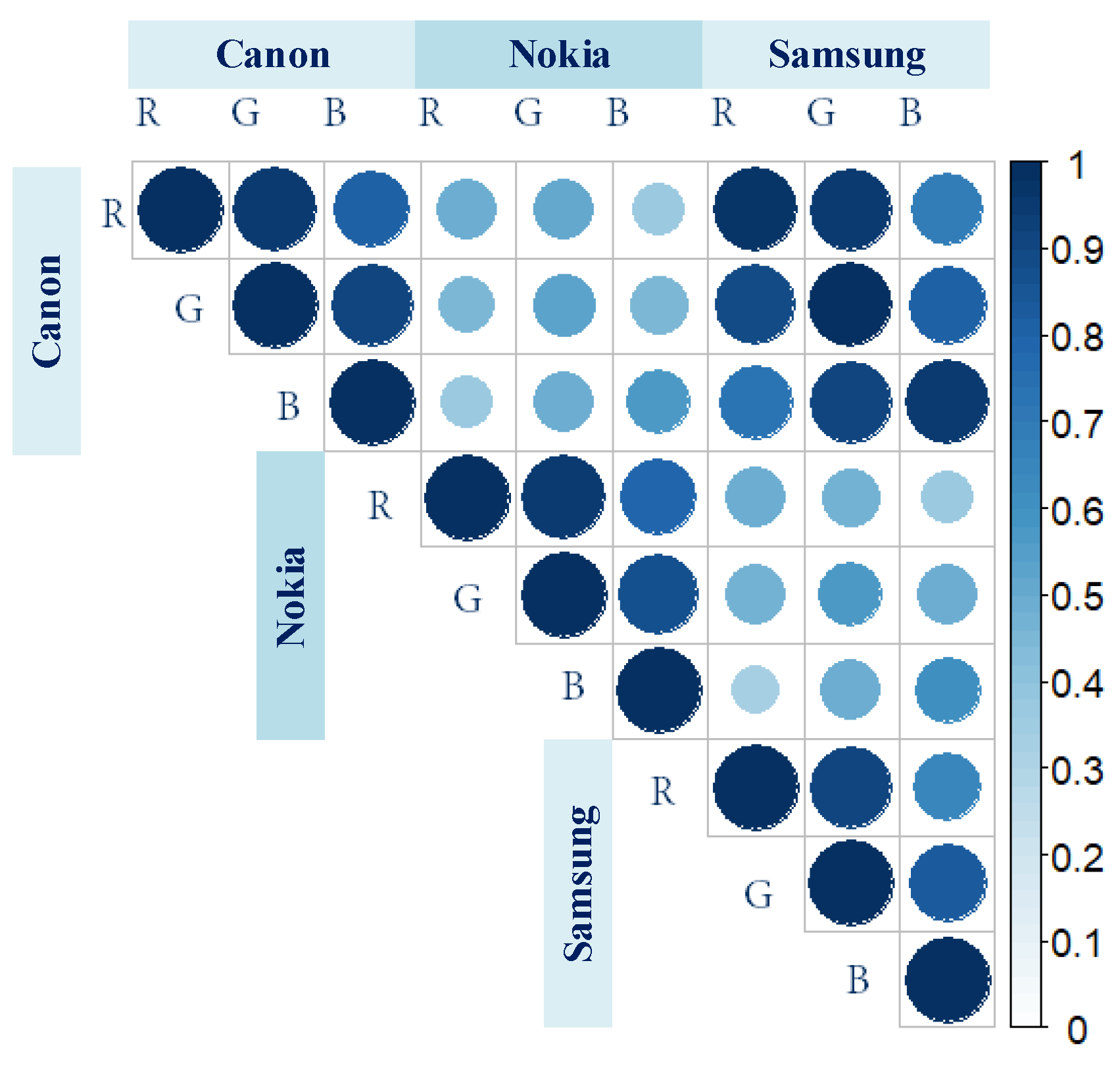

| | RC | GC | BC | RN | GN | BN | RS | GS | BS |
|---|---|---|---|---|---|---|---|---|---|
| RC | 1.0000 | 0.9449 | 0.8112 | 0.4838 | 0.5059 | 0.3694 | 0.9737 | 0.9422 | 0.6986 |
| GC | 0.9449 | 1.0000 | 0.9163 | 0.4429 | 0.5382 | 0.4532 | 0.8820 | 0.9881 | 0.8143 |
| BC | 0.8112 | 0.9163 | 1.0000 | 0.3704 | 0.4866 | 0.5600 | 0.7324 | 0.9010 | 0.9559 |
| RN | 0.4838 | 0.4429 | 0.3704 | 1.0000 | 0.9555 | 0.7952 | 0.4864 | 0.4766 | 0.3687 |
| GN | 0.5059 | 0.5382 | 0.4866 | 0.9555 | 1.0000 | 0.8780 | 0.4774 | 0.5655 | 0.4836 |
| BN | 0.3694 | 0.4532 | 0.5600 | 0.7952 | 0.8780 | 1.0000 | 0.3280 | 0.4835 | 0.6149 |
| RS | 0.9737 | 0.8820 | 0.7324 | 0.4864 | 0.4774 | 0.3280 | 1.0000 | 0.9005 | 0.6489 |
| GS | 0.9422 | 0.9881 | 0.9010 | 0.4766 | 0.5655 | 0.4835 | 0.9005 | 1.0000 | 0.8295 |
| BS | 0.6986 | 0.8143 | 0.9559 | 0.3687 | 0.4836 | 0.6149 | 0.6489 | 0.8295 | 1.0000 |
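
The table above reads as a pairwise correlation matrix between the R, G, and B channels recorded by the three devices (row and column labels follow the Abbreviations list). A minimal sketch of how such a matrix could be computed is given here, assuming Pearson correlation, which the source does not confirm. The function name `channel_correlations` and the synthetic readings are hypothetical and are not the study's data.

```python
import numpy as np

# Column order matches the table: Canon, Nokia, Samsung (R, G, B each).
LABELS = ["RC", "GC", "BC", "RN", "GN", "BN", "RS", "GS", "BS"]

def channel_correlations(readings: np.ndarray) -> np.ndarray:
    """Pearson correlation between the columns of an (n_samples, 9) array."""
    # rowvar=False tells NumPy that each column is one variable.
    return np.corrcoef(readings, rowvar=False)

# Synthetic stand-in data (an assumption, NOT measurements from the paper):
# every device sees the same underlying colour plus independent noise.
rng = np.random.default_rng(0)
base = rng.uniform(0, 255, size=(200, 3))      # shared "true" R, G, B
noise = rng.normal(0, 10, size=(200, 9))       # per-device sensor noise
readings = np.tile(base, 3) + noise            # shape (200, 9)

corr = channel_correlations(readings)
for label, row in zip(LABELS, corr):
    print(label, " ".join(f"{value:.4f}" for value in row))
```

The result is symmetric with ones on the diagonal, which matches the layout of the table.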

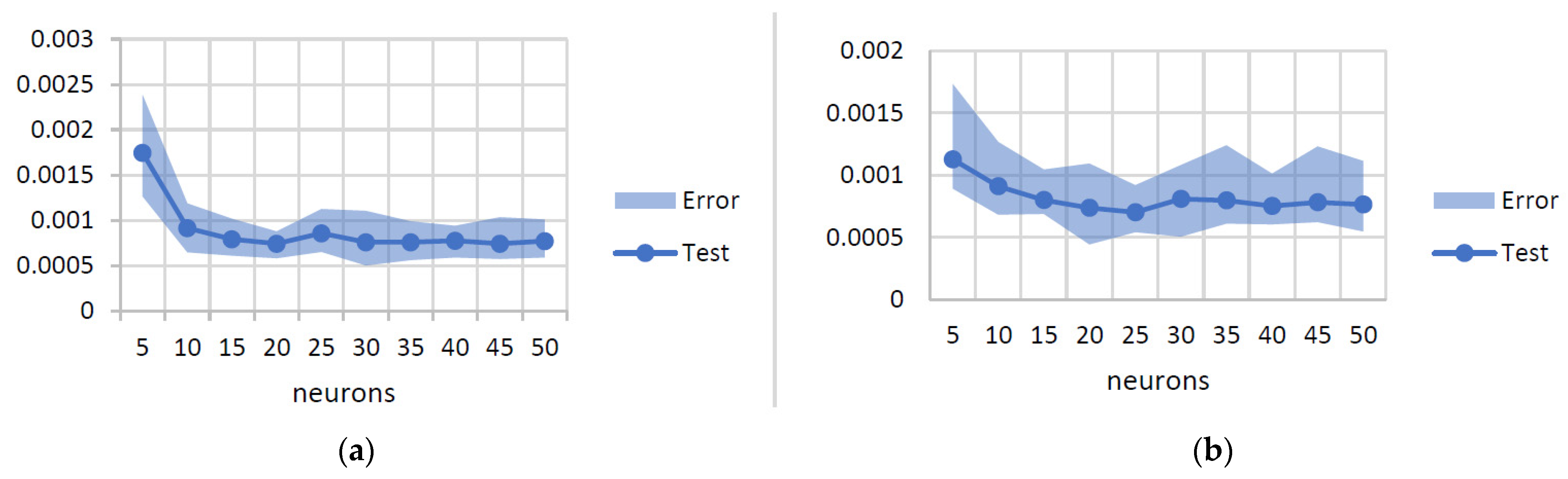

| Device | Neurons | Train | Test | Validation | Denorm. Validation | Min. Test | Min. Validation |
|---|---|---|---|---|---|---|---|
| Nokia | 1 | 0.02669 | 0.02680 | 0.02620 | 1703.1960 | 0.02438 | 0.02312 |
| Nokia | 5 | 0.00167 | 0.00175 | 0.00167 | 108.43533 | 0.00127 | 0.00111 |
| Nokia | 10 | 0.00086 | 0.00092 | 0.00099 | 64.18800 | 0.00065 | 0.00072 |
| Nokia | 15 | 0.00075 | 0.00079 | 0.00077 | 49.87453 | 0.00061 | 0.00059 |
| Nokia | 20 | 0.00068 | 0.00074 | 0.00079 | 51.64453 | 0.00059 | 0.00060 |
| Nokia | 25 | 0.00069 | 0.00086 | 0.00075 | 48.96067 | 0.00065 | 0.00060 |
| Nokia | 30 | 0.00060 | 0.00076 | 0.00075 | 49.04147 | 0.00050 | 0.00049 |
| Nokia | 35 | 0.00059 | 0.00076 | 0.00081 | 52.91627 | 0.00056 | 0.00054 |
| Nokia | 40 | 0.00062 | 0.00078 | 0.00071 | 46.10627 | 0.00059 | 0.00058 |
| Nokia | 45 | 0.00053 | 0.00075 | 0.00083 | 53.89320 | 0.00058 | 0.00055 |
| Nokia | 50 | 0.00056 | 0.00077 | 0.00082 | 53.50213 | 0.00059 | 0.00062 |
| Samsung | 1 | 0.00441 | 0.00436 | 0.00444 | 289.00307 | 0.00381 | 0.00395 |
| Samsung | 5 | 0.00103 | 0.00113 | 0.00100 | 64.83067 | 0.00089 | 0.00071 |
| Samsung | 10 | 0.00085 | 0.00091 | 0.00086 | 55.68960 | 0.00068 | 0.00061 |
| Samsung | 15 | 0.00079 | 0.00080 | 0.00087 | 56.35720 | 0.00069 | 0.00065 |
| Samsung | 20 | 0.00064 | 0.00074 | 0.00079 | 51.57760 | 0.00044 | 0.00057 |
| Samsung | 25 | 0.00060 | 0.00070 | 0.00073 | 47.49813 | 0.00054 | 0.00055 |
| Samsung | 30 | 0.00055 | 0.00081 | 0.00082 | 53.25147 | 0.00051 | 0.00055 |
| Samsung | 35 | 0.00054 | 0.00080 | 0.00073 | 47.49560 | 0.00061 | 0.00054 |
| Samsung | 40 | 0.00054 | 0.00075 | 0.00072 | 47.13880 | 0.00060 | 0.00045 |
| Samsung | 45 | 0.00053 | 0.00078 | 0.00080 | 51.73093 | 0.00062 | 0.00045 |
| Samsung | 50 | 0.00052 | 0.00076 | 0.00075 | 48.97707 | 0.00055 | 0.00053 |
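
The "Denorm. Validation" column appears to be the validation error expressed on the original channel scale. In every row, the ratio between the two validation columns is close to 255² = 65025, which would be consistent with a mean squared error computed on targets scaled from [0, 255] to [0, 1]. This is an inference from the numbers, not something the source states, so the sketch below should be read under that assumption. The function name `denormalise_mse` is hypothetical.

```python
CHANNEL_RANGE = 255.0  # assumed 8-bit channel range (not stated in the source)

def denormalise_mse(mse_normalised: float, value_range: float = CHANNEL_RANGE) -> float:
    """Rescale a squared error from the [0, 1] scale to the original scale.

    A squared error scales with the square of the range, hence range ** 2.
    """
    return mse_normalised * value_range ** 2

# Nokia, 1 neuron: the rounded table value 0.02620 lands close to the
# reported 1703.1960; the small gap is consistent with rounding in the table.
approx = denormalise_mse(0.02620)
print(round(approx, 3))
```

Under this assumption, the square root of a denormalised value gives a rough error in channel units, which may be easier to interpret than the normalised figure.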

| | RC | GC | BC | RN | GN | BN | RS | GS | BS |
|---|---|---|---|---|---|---|---|---|---|
| RC | 1.0000 | 0.9449 | 0.8112 | 0.9950 | 0.9426 | 0.8129 | 0.9949 | 0.9450 | 0.8127 |
| GC | 0.9449 | 1.0000 | 0.9163 | 0.9441 | 0.9961 | 0.9151 | 0.9441 | 0.9950 | 0.9153 |
| BC | 0.8112 | 0.9163 | 1.0000 | 0.8102 | 0.9115 | 0.9920 | 0.8076 | 0.9090 | 0.9893 |
| RN | 0.9950 | 0.9441 | 0.8102 | 1.0000 | 0.9474 | 0.8174 | 0.9931 | 0.9464 | 0.8143 |
| GN | 0.9426 | 0.9961 | 0.9115 | 0.9474 | 1.0000 | 0.9184 | 0.9440 | 0.9953 | 0.9153 |
| BN | 0.8129 | 0.9151 | 0.9920 | 0.8174 | 0.9184 | 1.0000 | 0.8121 | 0.9131 | 0.9902 |
| RS | 0.9949 | 0.9441 | 0.8076 | 0.9931 | 0.9440 | 0.8121 | 1.0000 | 0.9499 | 0.8172 |
| GS | 0.9450 | 0.9950 | 0.9090 | 0.9464 | 0.9953 | 0.9131 | 0.9499 | 1.0000 | 0.9191 |
| BS | 0.8127 | 0.9153 | 0.9893 | 0.8143 | 0.9153 | 0.9902 | 0.8172 | 0.9191 | 1.0000 |

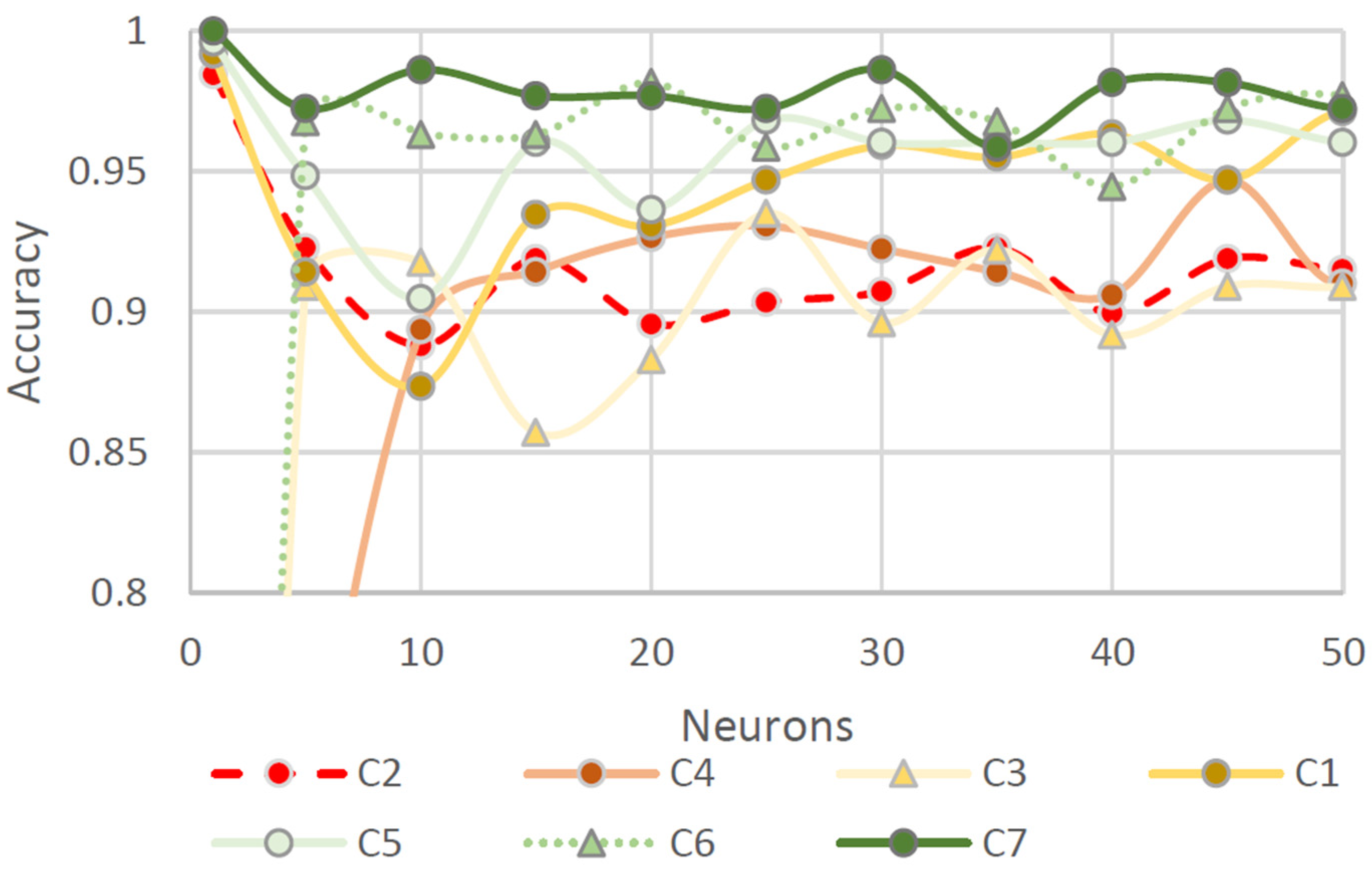

| Device | Space | C1 | C2 | C3 | C4 | C5 | C6 | C7 |
|---|---|---|---|---|---|---|---|---|
| Canon | RGB | 0.9517 | 0.9078 | 0.9208 | 0.9330 | 0.9412 | 0.9393 | 0.9578 |
| Canon | HSV | 0.9204 | 0.8473 | 0.8595 | 0.8962 | 0.9380 | 0.9370 | 0.9666 |
| Nokia | RGB | 0.9044 | 0.8454 | 0.8656 | 0.8540 | 0.8641 | 0.8811 | 0.9452 |
| Nokia | HSV | 0.7977 | 0.7153 | 0.7740 | 0.7635 | 0.7857 | 0.8813 | 0.9366 |
| Samsung | RGB | 0.9092 | 0.8450 | 0.8628 | 0.8576 | 0.8588 | 0.8790 | 0.9227 |
| Samsung | HSV | 0.9048 | 0.7737 | 0.7210 | 0.6307 | 0.5922 | 0.6609 | 0.6071 |

| Device | Neurons | C1 | C2 | C3 | C4 | C5 | C6 | C7 |
|---|---|---|---|---|---|---|---|---|
| Canon | 1 | 0.99184 | 0.98456 | 0 | 0 | 0.99603 | 0 | 1 |
| Canon | 5 | 0.91429 | 0.92278 | 0.90909 | 0.67347 | 0.94841 | 0.96774 | 0.97235 |
| Canon | 10 | 0.87347 | 0.88803 | 0.91775 | 0.89388 | 0.90476 | 0.96313 | 0.98618 |
| Canon | 15 | 0.93469 | 0.91892 | 0.85714 | 0.91429 | 0.96032 | 0.96313 | 0.97696 |
| Canon | 20 | 0.93061 | 0.89575 | 0.88312 | 0.92653 | 0.93651 | 0.98157 | 0.97696 |
| Canon | 25 | 0.94694 | 0.90347 | 0.93506 | 0.93061 | 0.96825 | 0.95853 | 0.97235 |
| Canon | 30 | 0.95918 | 0.90734 | 0.8961 | 0.92245 | 0.96032 | 0.97235 | 0.98618 |
| Canon | 35 | 0.9551 | 0.92278 | 0.92208 | 0.91429 | 0.96032 | 0.96774 | 0.95853 |
| Canon | 40 | 0.96327 | 0.89961 | 0.89177 | 0.90612 | 0.96032 | 0.9447 | 0.98157 |
| Canon | 45 | 0.94694 | 0.91892 | 0.90909 | 0.94694 | 0.96825 | 0.97235 | 0.98157 |
| Canon | 50 | 0.97143 | 0.91506 | 0.90909 | 0.9102 | 0.96032 | 0.97696 | 0.97235 |
| Nokia | 1 | 0.97959 | 0.97683 | 0 | 0.04081 | 0.30159 | 0.98157 | 0.90783 |
| Nokia | 5 | 0.93878 | 0.79151 | 0.28139 | 0.79184 | 0.67063 | 0.69585 | 0.98157 |
| Nokia | 10 | 0.85714 | 0.86873 | 0.78788 | 0.8 | 0.75794 | 0.82488 | 0.91705 |
| Nokia | 15 | 0.90204 | 0.84556 | 0.80952 | 0.63265 | 0.7381 | 0.89862 | 0.89401 |
| Nokia | 20 | 0.90204 | 0.88031 | 0.69264 | 0.78367 | 0.78968 | 0.87097 | 0.94931 |
| Nokia | 25 | 0.92245 | 0.84556 | 0.73593 | 0.73061 | 0.7619 | 0.89862 | 0.91705 |
| Nokia | 30 | 0.8449 | 0.83784 | 0.8355 | 0.77959 | 0.72619 | 0.82488 | 0.95392 |
| Nokia | 35 | 0.86122 | 0.87645 | 0.81818 | 0.77143 | 0.80159 | 0.8341 | 0.94009 |
| Nokia | 40 | 0.90204 | 0.86873 | 0.7013 | 0.82857 | 0.79365 | 0.87558 | 0.94009 |
| Nokia | 45 | 0.86939 | 0.8417 | 0.85281 | 0.76327 | 0.74603 | 0.88018 | 0.9447 |
| Nokia | 50 | 0.90204 | 0.88803 | 0.82684 | 0.64082 | 0.75794 | 0.80184 | 0.96774 |
| Samsung | 1 | 0.91837 | 0.94595 | 0 | 0 | 0.1746 | 0.94931 | 0.85714 |
| Samsung | 5 | 0.89796 | 0.85328 | 0.02164 | 0.72245 | 0.65873 | 0.71889 | 0.93548 |
| Samsung | 10 | 0.84082 | 0.79537 | 0.54545 | 0.63673 | 0.72222 | 0.77419 | 0.85714 |
| Samsung | 15 | 0.86531 | 0.82625 | 0.59307 | 0.71837 | 0.63095 | 0.75115 | 0.93088 |
| Samsung | 20 | 0.82449 | 0.88803 | 0.61472 | 0.70204 | 0.7619 | 0.84332 | 0.86175 |
| Samsung | 25 | 0.85306 | 0.91506 | 0.58874 | 0.75102 | 0.72619 | 0.7235 | 0.93088 |
| Samsung | 30 | 0.82449 | 0.87645 | 0.58874 | 0.69796 | 0.7619 | 0.80645 | 0.90323 |
| Samsung | 35 | 0.89388 | 0.85328 | 0.61905 | 0.66122 | 0.71429 | 0.77419 | 0.89862 |
| Samsung | 40 | 0.88163 | 0.92278 | 0.58009 | 0.68571 | 0.69444 | 0.86175 | 0.90783 |
| Samsung | 45 | 0.87347 | 0.90347 | 0.68398 | 0.70612 | 0.74603 | 0.82949 | 0.88479 |
| Samsung | 50 | 0.86939 | 0.88417 | 0.58874 | 0.62449 | 0.77381 | 0.75576 | 0.9447 |

| Neurons | Canon Value | Canon Chroma | Nokia Value | Nokia Chroma | Samsung Value | Samsung Chroma |
|---|---|---|---|---|---|---|
| 1 | 0.9787 | 0.9756 | 0.9396 | 0.7942 | 0.9313 | 0.8135 |
| 5 | 0.9795 | 0.9743 | 0.9404 | 0.7956 | 0.9336 | 0.8132 |
| 10 | 0.9801 | 0.9744 | 0.9394 | 0.7947 | 0.9345 | 0.8112 |
| 15 | 0.9782 | 0.9749 | 0.9398 | 0.7975 | 0.9318 | 0.8163 |
| 20 | 0.9797 | 0.9740 | 0.9394 | 0.7969 | 0.9304 | 0.8105 |
| 25 | 0.9791 | 0.9741 | 0.9375 | 0.7942 | 0.9321 | 0.8163 |
| 30 | 0.9786 | 0.9756 | 0.9370 | 0.7864 | 0.9315 | 0.8105 |
| 35 | 0.9794 | 0.9769 | 0.9392 | 0.7943 | 0.9322 | 0.8115 |
| 40 | 0.9796 | 0.9735 | 0.9384 | 0.7962 | 0.9340 | 0.8163 |
| 45 | 0.9784 | 0.9739 | 0.9391 | 0.7962 | 0.9361 | 0.8165 |
| 50 | 0.9791 | 0.9747 | 0.9355 | 0.7937 | 0.9310 | 0.8082 |

| Device | Method | C1 | C2 | C3 | C4 | C5 | C6 | C7 |
|---|---|---|---|---|---|---|---|---|
| Canon | New | 0.9918 | 0.9846 | 0.9567 | 0.9674 | 0.9960 | 0.9862 | 1.0000 |
| Canon | Previous | 0.8857 | 0.9167 | 0.9677 | 0.9459 | 0.9355 | 0.9697 | 0.9714 |
| Nokia | New | 0.9796 | 0.9768 | 0.8528 | 0.8286 | 0.8373 | 0.9816 | 0.9816 |
| Nokia | Previous | 0.7286 | 0.6389 | 0.8871 | 0.8108 | 0.8065 | 0.9091 | 0.6000 |
| Samsung | New | 0.9306 | 0.9460 | 0.7143 | 0.7714 | 0.8095 | 0.9493 | 0.9493 |
| Samsung | Previous | 0.8143 | 0.6944 | 0.7419 | 0.7973 | 0.5968 | 0.7273 | 0.7429 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Pegalajar, M.C.; Ruiz, L.G.B.; Criado-Ramón, D. Munsell Soil Colour Classification Using Smartphones through a Neuro-Based Multiclass Solution. AgriEngineering 2023, 5, 355-368. https://doi.org/10.3390/agriengineering5010023