Abstract

Boosting innovation and research in the agricultural sector is crucial if farmers are to produce more with less. Precision agriculture offers different solutions to help farmers improve efficiency and reduce labor costs while complying with legal requirements. Precision spraying enables the treatment of only the plants that require it, with the right amount of product. Our research group has developed a solution based on a reconfigurable vehicle with a high degree of automation for the distribution of plant protection products in vineyards and greenhouses. The synergy between the vehicle and the spraying management system we developed is an innovative solution with high technological content, and attempts to account for the current European and global directives in the field of agricultural techniques. The objective of our work is to develop an autonomous vehicle and a spraying management system that together allow safe and accurate autonomous spraying operations.

1. Introduction

The growing global demand for food will be a major challenge for agriculture, together with the need to support the emergence of technologies able to meet environmental and ethical standards while also promoting efficiency and healthy work environments []. The UN Food and Agriculture Organization (FAO) and other research studies estimate that 20–40% of global crops are lost due to plant pests and diseases []. Farmers use chemicals to mitigate the damage caused by pests, which interfere with crop production and affect crop quality. Pesticide residues resulting from the use of plant protection products on food or feed crops may pose a risk to public health.

Precision spraying in agriculture represents an innovative methodology that can help farmers to spray only where needed, using the right amount of chemicals []. This can improve the efficiency of treatments, reducing costs and chemical waste. Moreover, precision spraying can help to fulfil legal requirements, in terms of worker health [], environmental sustainability, and food safety and quality. However, most suppliers do not offer robots and integrated systems that are able to operate in narrow and steep spaces or in the presence of obstacles, as in the case of greenhouses or terraced cultivations.

The subject of this work is a system capable of distributing inputs (e.g., crop/plant protection products, bio-agro drugs, pesticides, powders) efficiently, sustainably, and safely in environments where standard agricultural machinery (automated or autonomous) cannot operate: greenhouses, mountainous areas, and heroic cultivations (steep-slope and terraced crops that are difficult to mechanize).

The adoption of autonomous robots can help optimize agricultural operations, increasing productivity and safety. Moreover, the cost of technological devices (e.g., electric motors, batteries, computers, sensors) is decreasing year by year, making it easier for these robotic systems to spread among farmers. A fairly complete review of the basic concepts of agricultural robotics is reported in [,], where common robotic architectures, components, and applications are discussed in depth. Many research activities deal with the adoption of robotics in agriculture []. For example, a robot for crop and plant management is presented in [], while Vasconez et al. [] provide a general description of the potential of human–robot interaction (HRI) in many agricultural activities, such as harvesting, handling, and transporting.

Agricultural robots are typically autonomous or semi-autonomous systems that can be operated in several stages of the process. In particular, robots that must operate inside greenhouses and vineyards have to deal with very tight passages while avoiding damage to the plants. Moreover, they must follow paths accurately, including during turn maneuvers at headlands. Several localization and navigation solutions have been implemented. A laser-guided path follower technique was tested in []. The system is reported to be very reliable, but requires several lasers to be mounted near the corridors. In [], different approaches were used regarding sensors and algorithms, and some trials were performed using map-based techniques and motor encoders for localization.

A combination of the Hector Simultaneous Localization and Mapping (SLAM) and an artificial potential field (APF) controller is used in [] to estimate the robot’s position and to perform autonomous navigation inside the greenhouse.

In Reis et al. [], a multi-antenna receiver Bluetooth system and the obtained transfer functions (from received signal strength indication (RSSI) to distance estimation) are used as redundant artificial landmarks for localization purposes.

In Tourrette et al. [], a solution based on the cooperation of at least two mobile robots (a leader and a follower, moving on either side of a vine row) is investigated using ultra-wideband (UWB) technology. In particular, formation control of the follower with respect to the leader is proposed, allowing relative localization without visual contact and avoiding the limitations of GPS when moving near tall vegetation.

Many EU-funded projects concerning vineyards have recently concluded. One of these is Vinerobot [], whose aim was the development of a system able to monitor vineyard physiological parameters and grape composition. Another European project is VinBot []. VinBot is an all-terrain autonomous mobile robot with a set of sensors capable of capturing and analyzing vineyard images and 3D data by means of cloud computing applications, in order to estimate vineyard yield and share this information with winegrowers. The robot is intended to help winegrowers with defoliation, fruit removal, and sequential harvesting according to grape ripeness.

As regards spraying robots, image processing algorithms for the detection and localization of the grape cluster and of the foliage for selective spraying are presented in Berenstein et al. []. In this case, the system was tested on a manually driven cart.

A specially designed algorithm to measure complex-shaped targets using a 270° radial laser scanning sensor is presented in Yan et al. [] for the future development of automatic spray systems in greenhouse applications.

Adamides et al. [] compare different user interfaces (i.e., human–machine interfaces, HMIs) for a teleoperated robot for targeted spraying in vineyards. The study compares dedicated input devices such as a joypad or a standard keyboard, different output devices such as a monitor or a head-mounted display, and different vision mechanisms.

Within the project CROPS [], a robotic system with a six-degree-of-freedom manipulator, an optical sensor system, and a precision spraying actuator was developed. The precision spraying end-effector is positioned by the robotic manipulator to apply pesticides selectively and accurately. The project Flourish [] also addresses autonomous selective spraying in open fields, using a cooperative approach based on unmanned aerial and ground vehicles.

However, in most cases, the prototypes presented above are just proofs of concept, and are not sufficiently robust for the real agricultural environment. Our system attempts to bridge the gap between these prototype solutions and the large machines that already work in large crops and farms (e.g., John Deere, New Holland), which cannot be considered applicable to greenhouses, heroic cultivations, or vineyards. Moreover, in terms of versatility, compared to some of the previous solutions, our system does not require rails to be installed between the rows and can be used on different kinds of crops and terrains.

The core of the system we developed is an agile autonomous robotic vehicle able to move in environments where standard agricultural machinery (automated or autonomous) cannot operate: greenhouses, mountainous areas, and heroic cultivations. The vehicle, shown in Figure 1, is equipped with a “smart spraying system” capable of optimizing spraying operations.

Figure 1.

The developed spraying robot while operating in a greenhouse.

Furthermore, the developed human–machine interface also allows unskilled operators to plan spraying treatments, to monitor the mission from a safe distance, and to generate a report of the treatments at the end of the operations.

2. Materials and Methods

The developed architecture consists of four main subsystems, described in the following sub-sections.

2.1. Mobile Tracked Robot

The robot is based on the “U-Go” robot, one of the multifunctional vehicles developed in our DIEEI Robotic Laboratories at the University of Catania, mainly to address tasks such as transportation, navigation, and inspection in very harsh outdoor environments [,]. It is a rubber-tracked vehicle with electric traction: two motor drivers, commanded via CAN bus, power two 1 kW, 24 V brushed DC motors acting on the tracks. Its compact external dimensions (880 mm (L) × 1020 mm (W)) make the machine highly maneuverable in narrow passages. It can carry a payload of about 200 kg at a maximum speed of 3 km/h, can climb slopes of up to 30 degrees, and has an operating autonomy of 6–8 h.

The vehicle is equipped with several sensors for autonomous navigation in rough outdoor environments: a stereo camera, an RTK-DGPS GNSS receiver, an attitude and heading reference system (AHRS), a laser scanner rangefinder, ultrasonic sensors, and motor encoders. An embedded acquisition and control system, based on the National Instruments sbRIO-9626 board, acts as the low-level interface to the sensors and actuators. A custom protocol based on the User Datagram Protocol (UDP) is used to communicate with the sbRIO over a wired Ethernet or WiFi connection; through it, all sensor data (GPS, attitude, etc.) can be retrieved and commands can be sent to the board. For example, the desired linear and angular speeds can be sent via UDP to the sbRIO, which uses the robot's kinematic equations to compute the reference signals for the vehicle's two motor drivers. This architecture ensures hardware and software modularity: an on-board companion computer or a remote base station can interface with the tracked vehicle to implement high-level control algorithms, while delegating the low-level tasks to the sbRIO.
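The kinematic conversion performed by the sbRIO is not detailed here. As a minimal sketch, assuming ideal (no-slip) differential-drive kinematics for the two tracks, the desired linear speed v and yaw rate ω map to left and right track speeds as v_L = v − ωB/2 and v_R = v + ωB/2, where B is the track gauge. Real tracked vehicles skid while turning, so a slip-compensated model may be needed in practice. The track gauge, sprocket radius, and gear ratio below are hypothetical placeholders, not U-Go specifications; only the 3 km/h top speed comes from the text.

```python
import math
from dataclasses import dataclass


@dataclass
class TrackedDriveParams:
    # All values except the top speed are hypothetical, not U-Go specifications.
    track_gauge_m: float = 0.8              # distance between track centrelines
    sprocket_radius_m: float = 0.1          # drive sprocket radius
    gear_ratio: float = 20.0                # motor turns per sprocket turn
    max_track_speed_mps: float = 3.0 / 3.6  # 3 km/h top speed quoted in the text


def track_speeds(v, omega, p):
    """Ideal (no-slip) differential-drive inverse kinematics.

    v: desired linear speed [m/s]; omega: desired yaw rate [rad/s].
    Returns the (left, right) track speeds [m/s].
    """
    v_left = v - omega * p.track_gauge_m / 2.0
    v_right = v + omega * p.track_gauge_m / 2.0
    # If either track would exceed the limit, scale both by the same factor
    # so that the commanded turning radius v/omega is preserved.
    peak = max(abs(v_left), abs(v_right))
    if peak > p.max_track_speed_mps:
        scale = p.max_track_speed_mps / peak
        v_left *= scale
        v_right *= scale
    return v_left, v_right


def motor_rpm(track_speed, p):
    """Convert a track speed [m/s] into a motor speed reference [rpm]."""
    sprocket_rpm = track_speed / p.sprocket_radius_m * 60.0 / (2.0 * math.pi)
    return sprocket_rpm * p.gear_ratio
```

Scaling both tracks by a common factor when one saturates keeps the ratio between them, and hence the path curvature, unchanged.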

2.2. Localization and Navigation System

This subsystem fuses the information coming from the on-board sensors to accurately determine the position and attitude of the vehicle, used to autonomously execute the assigned trajectories and avoid obstacles.

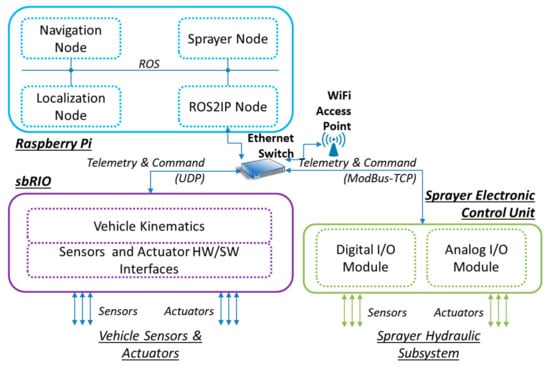

A Raspberry Pi 3 (RPi) was used as the on-board companion computer, running the high-level algorithms of the localization and navigation system. The two software subsystems (Localization and Navigation modules) run on the RPi as two ROS (Robot Operating System) nodes (Figure 2) []. The RPi communicates with the sbRIO through the aforementioned UDP protocol: the ROS2IP node in Figure 2 translates custom UDP messages into ROS messages and vice versa.

Figure 2.

The developed on-board HW/SW architecture. ROS: robot operating system.
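The custom UDP protocol used between the RPi and the sbRIO is not documented here, so the following is only a sketch of how a ROS2IP-style bridge could pack a speed command and unpack it into fields named like a ROS geometry_msgs/Twist message (linear.x, angular.z). The packet layout, the message identifier SPEED_CMD_ID, and the helper names are hypothetical; no ROS libraries are used, so the sketch runs standalone.

```python
import struct

# Hypothetical layout (NOT the documented U-Go/sbRIO protocol), little-endian:
#   uint8 message id | uint16 sequence | float32 v [m/s] | float32 omega [rad/s]
SPEED_CMD_ID = 0x10
_SPEED_CMD = struct.Struct("<BHff")


def encode_speed_command(seq, v, omega):
    """ROS -> UDP direction: pack a desired linear/angular speed pair."""
    return _SPEED_CMD.pack(SPEED_CMD_ID, seq & 0xFFFF, v, omega)


def decode_speed_command(packet):
    """UDP -> ROS direction: return (seq, Twist-like dict)."""
    if len(packet) != _SPEED_CMD.size:
        raise ValueError(f"expected {_SPEED_CMD.size} bytes, got {len(packet)}")
    msg_id, seq, v, omega = _SPEED_CMD.unpack(packet)
    if msg_id != SPEED_CMD_ID:
        raise ValueError(f"unexpected message id {msg_id:#04x}")
    # Field names mirror geometry_msgs/Twist: linear.x and angular.z.
    twist = {"linear": {"x": v, "y": 0.0, "z": 0.0},
             "angular": {"x": 0.0, "y": 0.0, "z": omega}}
    return seq, twist


def send_speed_command(sock, address, seq, v, omega):
    """Send one command on an already-created UDP (SOCK_DGRAM) socket."""
    sock.sendto(encode_speed_command(seq, v, omega), address)
```

On the sending side, send_speed_command would be given a socket created with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) and the (host, port) of the sbRIO; both are deployment-specific.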

In particular, the Localization node implements an extended Kalman filter to fuse the data coming from the sensors, in order to evaluate robot position and heading. Moreover, the sensor suite and the Localization node allow several high-level tasks to be performed:

- The stereo camera and laser range finder can be used to perform terrain reconstruction and drivable-surface detection; they can also be used to detect obstacles and to perform odometry-free dead-reckoning [].

- The ultrasonic sensors and/or laser scanner can be used both to detect obstacles and to localize the robot inside greenhouse corridors.

However, in the case study reported in this work, the Localization node uses only a subset of the sensor suite to evaluate robot position and heading:

- Absolute position is obtained by integrating the encoder measurements with the information coming from a Leica Viva GS10, a high-precision GNSS receiver. It uses Leica Geosystems SmartNet, a GNSS network Real-Time Kinematic (RTK) correction service delivered over the cellular network, to achieve centimeter-level positioning without the need for a local RTK base station.

- Attitude is derived from an X-Sens MTi-30, a MEMS-based AHRS.

- A SICK LMS 200 laser scanner, mounted in the upper-front part of the vehicle, is used to detect static and dynamic obstacles.
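The Localization node is described as an extended Kalman filter fusing encoders, GNSS, and AHRS, but the filter design is not given. The following is a minimal sketch under stated assumptions: a planar state (east, north, heading), a unicycle motion model driven by encoder-derived linear and angular speeds in the prediction step, GNSS positions already converted to a local east/north frame in metres, and the AHRS yaw used as a direct heading measurement. All noise values are hypothetical tuning parameters.

```python
import numpy as np


def wrap_angle(a):
    """Wrap an angle (or array of angles) to [-pi, pi)."""
    return (a + np.pi) % (2.0 * np.pi) - np.pi


class PoseEKF:
    """Planar EKF with state [east, north, heading] (a sketch, not the deployed filter)."""

    def __init__(self, x0, P0, q_xy=0.02 ** 2, q_heading=np.deg2rad(0.5) ** 2):
        self.x = np.array(x0, dtype=float)
        self.P = np.array(P0, dtype=float)
        # Hypothetical process noise added at every prediction step.
        self.Q = np.diag([q_xy, q_xy, q_heading])

    def predict(self, v, omega, dt):
        """Propagate with encoder-derived linear speed v [m/s] and yaw rate omega [rad/s]."""
        e, n, th = self.x
        self.x = np.array([e + v * dt * np.cos(th),
                           n + v * dt * np.sin(th),
                           wrap_angle(th + omega * dt)])
        # Jacobian of the unicycle model with respect to the state.
        F = np.array([[1.0, 0.0, -v * dt * np.sin(th)],
                      [0.0, 1.0, v * dt * np.cos(th)],
                      [0.0, 0.0, 1.0]])
        self.P = F @ self.P @ F.T + self.Q

    def _update(self, z, H, R, angle_rows=()):
        innovation = z - H @ self.x
        for i in angle_rows:  # heading residuals must be wrapped
            innovation[i] = wrap_angle(innovation[i])
        S = H @ self.P @ H.T + R
        K = self.P @ H.T @ np.linalg.inv(S)
        self.x = self.x + K @ innovation
        self.x[2] = wrap_angle(self.x[2])
        self.P = (np.eye(3) - K @ H) @ self.P

    def update_gnss(self, east, north, sigma_m=0.02):
        """RTK fix already projected to the local east/north frame [m]."""
        H = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
        self._update(np.array([east, north]), H, np.eye(2) * sigma_m ** 2)

    def update_heading(self, yaw, sigma_rad=np.deg2rad(1.0)):
        """Yaw from the AHRS [rad], same convention as the state heading."""
        H = np.array([[0.0, 0.0, 1.0]])
        self._update(np.array([yaw]), H, np.array([[sigma_rad ** 2]]), angle_rows=(0,))
```

Wrapping the heading innovation matters near ±π: without it, a measurement of −179° against an estimate of +179° would be treated as a 358° error instead of 2°.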

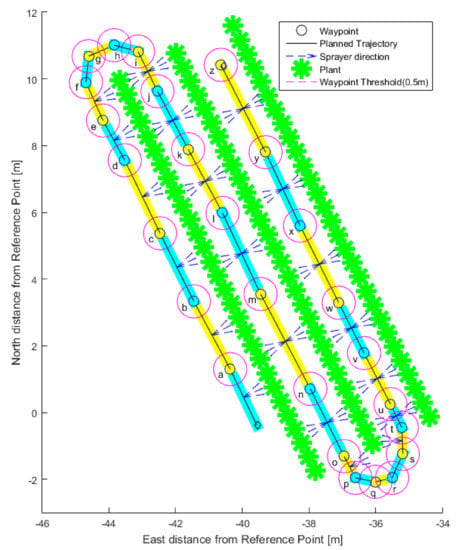

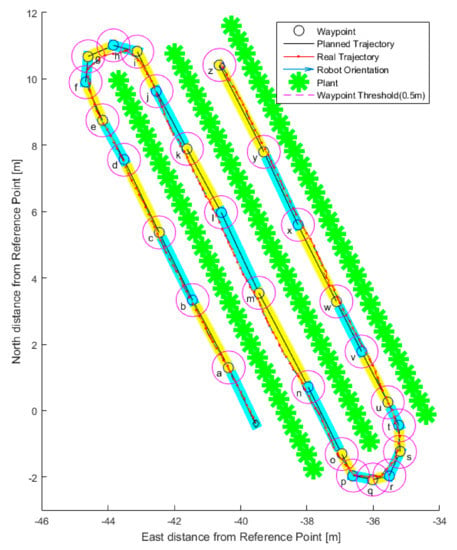

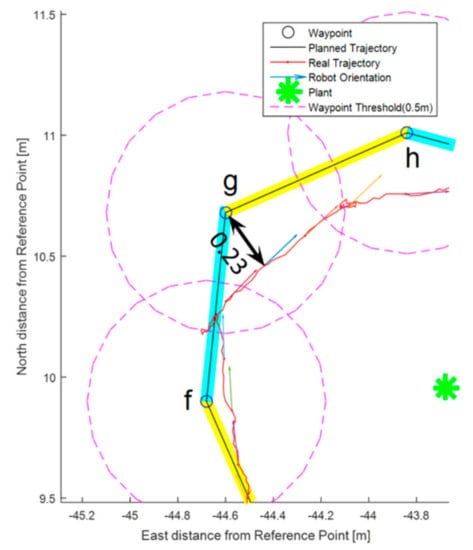

The information from the Localization node is passed via ROS to the Navigation node, which allows the vehicle to autonomously execute an assigned trajectory while avoiding obstacles. Using the laser scanner data, the potential field method (PFM) [] is implemented to follow the desired trajectory, assigned as a list of waypoints (WPs), while avoiding obstacles: the next desired waypoint attracts the vehicle, while obstacles exert a repulsive force. The implemented control algorithms translate the resulting force into linear and angular speeds that are passed to the sbRIO via UDP.
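The PFM step can be sketched as follows, assuming obstacle points from the laser scan have already been projected into the same planar frame as the robot pose. The attractive term is a unit vector toward the next waypoint; the repulsive term uses the classic form k_rep(1/d − 1/d0)/d², active only within the influence distance d0. The gains, the influence distance, the waypoint acceptance radius, the scan range limit, and the force-to-speed mapping are hypothetical choices, not the parameters of the deployed Navigation node.

```python
import math


def scan_to_points(pose, ranges, angles, max_range_m=8.0):
    """Project laser returns (robot frame, angle 0 = straight ahead) into the pose frame.

    The sensor offset from the robot origin is ignored for simplicity.
    """
    x, y, th = pose
    return [(x + r * math.cos(th + a), y + r * math.sin(th + a))
            for r, a in zip(ranges, angles) if 0.0 < r < max_range_m]


def potential_field_command(pose, waypoint, obstacles, k_att=1.0, k_rep=0.5,
                            d0=1.5, k_omega=1.5, v_max=0.8, wp_radius=0.3):
    """Return (v [m/s], omega [rad/s], waypoint_reached) for one control cycle."""
    x, y, th = pose
    dx, dy = waypoint[0] - x, waypoint[1] - y
    dist_wp = math.hypot(dx, dy)
    if dist_wp < wp_radius:
        return 0.0, 0.0, True  # caller switches to the next WP
    # Attractive force: unit vector toward the waypoint, scaled by k_att.
    fx, fy = k_att * dx / dist_wp, k_att * dy / dist_wp
    # Repulsive force from each obstacle point closer than d0.
    for ox, oy in obstacles:
        rx, ry = x - ox, y - oy
        d = math.hypot(rx, ry)
        if 1e-6 < d < d0:
            mag = k_rep * (1.0 / d - 1.0 / d0) / d ** 2
            fx += mag * rx / d
            fy += mag * ry / d
    # Steer toward the resultant force direction; slow down when misaligned.
    heading_err = math.atan2(fy, fx) - th
    heading_err = math.atan2(math.sin(heading_err), math.cos(heading_err))
    omega = k_omega * heading_err
    v = v_max * max(0.0, math.cos(heading_err)) * min(1.0, math.hypot(fx, fy))
    return v, omega, False
```

A known limitation of potential fields is local minima, where attraction and repulsion cancel (for example, an obstacle exactly between the robot and the waypoint); in this sketch the commanded linear speed then drops to zero.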

2.3. Smart Spraying System

The smart spraying system is based on a standard spraying machine made “smart” by add-on technologies enabling the automatic control of the system. The sprayer unit is composed of a hydraulic subsystem and an electronic control unit.

The hydraulic part of the sprayer consists of a 130 L tank with a separate 5 L hand-wash reservoir, an electrically actuated pump (for safe operation inside greenhouses) driven by a 1 kW DC motor with a gearbox, a manual pressure regulator with pressure indicator, an electric flux regulator valve, a pressure sensor, a flow rate meter, and two electric on/off valves. These two valves supply the chemical fluid to two vertical stainless-steel bars equipped with anti-drip high-pressure nozzles for spraying operations inside a standard tomato greenhouse. The two bars are placed on the left and right sides of the sprayer, respectively, and can be operated separately. The pump has a nominal maximum pressure of 25 bar and a maximum flow rate of 20 L/min. Furthermore, the system is equipped with wind speed and temperature/humidity sensors to alert the operator if weather conditions are unsuitable for the treatment.

The electronic control unit is composed of one digital I/O module and one analog I/O module with an Ethernet interface. A ModBus-TCP-based protocol allows interaction with the modules from the on-board computer as well as from any other remote station with a wireless connection: using a suitable command set, it is possible to operate the hydraulic components (i.e., valves, flow regulator, pump) and to read information from the pressure sensor and the flow rate sensor, as well as other control unit parameters (e.g., main breaker status, pump current, flux regulator valve status).
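The text describes the control-unit protocol as ModBus-TCP-based. Assuming the I/O modules accept standard Modbus TCP requests (an assumption, not stated in the text), a valve or the pump could be switched with function code 0x05 (Write Single Coil) and the analog inputs read with function code 0x04 (Read Input Registers). The sketch below only builds and parses frames (MBAP header plus PDU); the coil and register addresses are hypothetical and would come from the modules' documentation.

```python
import struct

# Hypothetical I/O map; real addresses come from the I/O modules' manuals.
LEFT_VALVE_COIL = 0
RIGHT_VALVE_COIL = 1
PUMP_COIL = 2
PRESSURE_REGISTER = 0
FLOW_REGISTER = 1

_MBAP = struct.Struct(">HHHB")  # transaction id, protocol id (0), length, unit id


def _frame(transaction_id, unit_id, pdu):
    """Prefix a PDU with the MBAP header; length counts unit id + PDU bytes."""
    return _MBAP.pack(transaction_id, 0, len(pdu) + 1, unit_id) + pdu


def write_single_coil(transaction_id, unit_id, coil, on):
    """Function code 0x05: value 0xFF00 switches the output ON, 0x0000 OFF."""
    pdu = struct.pack(">BHH", 0x05, coil, 0xFF00 if on else 0x0000)
    return _frame(transaction_id, unit_id, pdu)


def read_input_registers(transaction_id, unit_id, start, count):
    """Function code 0x04: request `count` 16-bit input registers from `start`."""
    pdu = struct.pack(">BHH", 0x04, start, count)
    return _frame(transaction_id, unit_id, pdu)


def parse_register_response(frame):
    """Return (transaction_id, [register values]) from a 0x03/0x04 response."""
    transaction_id, protocol_id, length, _unit = _MBAP.unpack_from(frame, 0)
    if protocol_id != 0 or len(frame) != 6 + length:
        raise ValueError("malformed MBAP header")
    function_code = frame[7]
    if function_code & 0x80:  # exception response: next byte is the code
        raise RuntimeError(f"Modbus exception code {frame[8]}")
    byte_count = frame[8]
    values = struct.unpack_from(">" + "H" * (byte_count // 2), frame, 9)
    return transaction_id, list(values)
```

Raw register values would still need a module-specific scaling to bar or L/min before being published as ROS messages.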

A dedicated ROS node running on the RPi manages the smart sprayer (“Sprayer Node” in Figure 2). This node interacts with the modules of the sprayer control unit via the ROS2IP node, which translates ModBus-TCP messages into ROS messages and vice versa. By communicating with the Navigation and Localization nodes, the Sprayer Node controls the sprayer according to the spraying treatments previously planned by the user (as described in the next subsection) or scheduled from a spraying map. While moving, the robot automatically activates the requested valves at the correct waypoint. The aim of this work was not to determine how to obtain the spraying map; nevertheless, when a spraying map is available, the system can use it to spray the required quantity locally.
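The Sprayer Node logic is described only at a high level. A minimal sketch, assuming that each planned treatment applies to the segment between a waypoint and the next one, and that the Navigation node reports the index of the last waypoint reached: on reaching a waypoint, the node opens the requested side valves and sets a flow rate that delivers the planned quantity over that segment at a constant cruise speed. The Treatment, SprayCommand, and SprayScheduler names and this segment-based interpretation are hypothetical; the 20 L/min limit is the pump maximum quoted above.

```python
import math
from dataclasses import dataclass

PUMP_MAX_FLOW_LPM = 20.0  # pump maximum flow rate quoted in the text


@dataclass
class Treatment:
    """Hypothetical per-waypoint plan entry (as entered through the HMI)."""
    left: bool     # activate the left spraying bar
    right: bool    # activate the right spraying bar
    litres: float  # product to deliver between this WP and the next one


@dataclass
class SprayCommand:
    left_valve: bool
    right_valve: bool
    flow_lpm: float  # total flow set on the flux regulator valve


class SprayScheduler:
    """Turns the treatment plan into valve/flow commands as WPs are reached."""

    def __init__(self, waypoints, plan, speed_mps, max_flow_lpm=PUMP_MAX_FLOW_LPM):
        self.waypoints = waypoints  # list of (east, north) in metres
        self.plan = plan            # dict: WP index -> Treatment
        self.speed_mps = speed_mps  # assumed constant cruise speed
        self.max_flow_lpm = max_flow_lpm

    def command_at(self, reached_index):
        """Command to apply after the Navigation node reports WP reached_index."""
        off = SprayCommand(False, False, 0.0)
        treatment = self.plan.get(reached_index)
        last = len(self.waypoints) - 1
        if treatment is None or reached_index >= last or not (treatment.left or treatment.right):
            return off
        (x0, y0), (x1, y1) = self.waypoints[reached_index], self.waypoints[reached_index + 1]
        segment_m = math.hypot(x1 - x0, y1 - y0)
        if segment_m <= 0.0:
            return off
        # Flow [L/min] = litres / travel time [min]; travel time = segment / speed.
        flow = treatment.litres * 60.0 * self.speed_mps / segment_m
        if flow > self.max_flow_lpm:
            raise ValueError(f"planned dose needs {flow:.1f} L/min, above the pump limit")
        return SprayCommand(treatment.left, treatment.right, flow)
```

For example, 2 L planned on a 20 m segment at 0.5 m/s gives a 40 s pass and therefore a 3 L/min setpoint.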

2.4. Human–Machine Interface (HMI)

The HMI, running on a tablet PC, allows operators with no engineering knowledge to manage the system. The interface communicates with both the sbRIO and the Raspberry Pi using a WiFi connection.

The HMI can be used for four main operations:

- To visualize the position of the vehicle on a map and to monitor the status of the system (battery level, liquid level in the tank, state of the valves).

- To plan the mission. The mission can be scheduled on the basis of a spraying map from which the WPs and the required chemical dose can be derived. It is also possible to select the WPs by clicking on a georeferenced map, if available. The trajectories can also be assigned using a teach-and-repeat strategy, driving the robot through the corridors along the desired trajectory while the WPs are automatically stored on the tablet PC. In this case, a joystick connected to the tablet PC allows the operator to guide the robot manually. At the end of the procedure, the WPs are uploaded to the Navigation node and stored on board the RPi.

- To plan the spraying treatments. For each waypoint, the operator can define whether to activate the right or left sprayers and the quantity of product to spray. This information is sent to the Sprayer Node on the Raspberry Pi and stored on board the RPi.

- To generate a report. At the end of the spraying mission, a logbook is automatically generated on board the RPi, in accordance with the national legislation implementing the European directives. The log contains identification data of the company, the name of the treated crop and the extent of the treated area, the date of the treatment, and the type and quantity of chemical products. This data can be retrieved from the HMI to generate a report.
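The criterion used to store WPs during teach-and-repeat is not specified. One plausible sketch, assuming poses come from the Localization node in a local metric frame: a new WP is recorded when the robot has moved at least a minimum distance from the last stored WP, or when its heading has changed noticeably (e.g., at the end of a row), so that turns are captured densely while straight corridors are not oversampled. The TeachRecorder class and its thresholds are hypothetical.

```python
import math


def _wrap(angle):
    """Wrap an angle to [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


class TeachRecorder:
    """Hypothetical WP recorder for the teach phase of teach-and-repeat."""

    def __init__(self, min_spacing_m=0.5, min_turn_rad=math.radians(15.0),
                 min_turn_spacing_m=0.05):
        self.min_spacing_m = min_spacing_m            # spacing on straight runs
        self.min_turn_rad = min_turn_rad              # heading change forcing a WP
        self.min_turn_spacing_m = min_turn_spacing_m  # ignore pure in-place rotation
        self.waypoints = []                           # stored (east, north) WPs
        self._last_heading = None

    def on_pose(self, east, north, heading):
        """Feed one pose while the operator drives; returns True if a WP was stored."""
        if not self.waypoints:
            store = True
        else:
            last_e, last_n = self.waypoints[-1]
            dist = math.hypot(east - last_e, north - last_n)
            turned = abs(_wrap(heading - self._last_heading))
            store = dist >= self.min_spacing_m or (
                turned >= self.min_turn_rad and dist >= self.min_turn_spacing_m
            )
        if store:
            self.waypoints.append((east, north))
            self._last_heading = heading
        return store

    def finish(self, east, north):
        """Close the teach phase, making sure the final pose is the last WP."""
        if not self.waypoints or self.waypoints[-1] != (east, north):
            self.waypoints.append((east, north))
        return list(self.waypoints)
```

The list returned by finish is what would then be uploaded to the Navigation node.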

4. Conclusions and Future Works

The interaction between the autonomous vehicle and the spraying management system is a solution with high technological content that allows safe and accurate autonomous spraying operations.

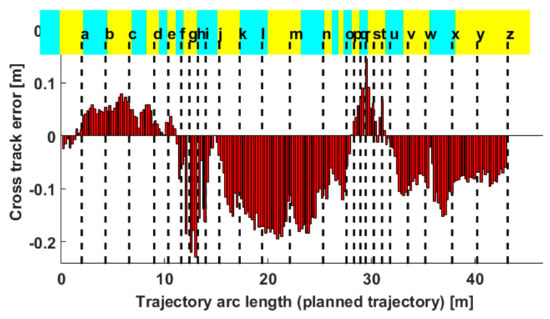

As demonstrated by the experimental results, the proposed architecture showed good performance, even in a scenario such as a greenhouse that could introduce GNSS signal attenuations and create multipath reflections. All the trials were performed without any significant degradation.

The robot autonomously carried out the assigned path between the rows for about 8 h, maintaining a sufficient safe distance from the plants. The smart spraying system regulated the amount of sprayed products in terms of quantity and selective activation of the nozzles based on the planned spraying treatments.

The compactness and robustness of the system pave the way for its adoption in environments where conventional agricultural machinery cannot operate, such as greenhouses, mountains, and terraced and heroic cultivations. Further development and trials are needed to confirm the robustness of the system in more challenging environments than those presented here. However, from our preliminary tests, the platform shows good potential to carry out the work in harder conditions as well. Further tests in this direction will be carried out in the near future. Moreover, although the proposed solution is a robotic approach to a specific agricultural task (spraying, in this case), thanks to its modularity other applications could be investigated in the future (e.g., harvesting, pruning, powder distribution, weed removal, soft tilling). The high flexibility of the platform, in terms of the various possible configurations, could therefore expand its potential applications. Indeed, a future system could be customized through the use of specific tools that allow different types of tasks to be performed. Although the basic architecture will remain unaltered (i.e., a robotic platform equipped with an independent navigation system), any particular application could be developed by adding further equipment and tools.

At the moment, we are working on a software tool for the automatic planning of the whole mission: a georeferenced map of the plantation will be used to automatically generate the trajectory, with the aim of efficiently covering the area of interest. Moreover, the tool will plan the amount of product to spray, in terms of quantity and selective activation of the nozzles on the basis of presence of the plant, foliage density, type of crop, and forward speed of the machine.
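For the planned tool, the link between dose, forward speed, and nozzle flow can be illustrated with the standard sprayer calibration relation Q = V · s · w / 600, where Q is the flow rate in L/min, V the application volume in L/ha, s the forward speed in km/h, and w the treated band width in m (the factor 600 combines the conversions from km/h to m/min and from m² to ha). A minimal sketch that also checks the required flow against the 20 L/min pump limit quoted above; the example volumes and band widths are illustrative values only.

```python
PUMP_MAX_FLOW_LPM = 20.0  # maximum pump flow rate stated in Section 2.3


def required_flow_lpm(volume_l_per_ha, speed_kmh, band_width_m):
    """Flow rate [L/min] needed to apply volume_l_per_ha at speed_kmh over band_width_m."""
    return volume_l_per_ha * speed_kmh * band_width_m / 600.0


def max_speed_kmh(volume_l_per_ha, band_width_m, max_flow_lpm=PUMP_MAX_FLOW_LPM):
    """Highest forward speed [km/h] at which the pump can still deliver the dose."""
    return max_flow_lpm * 600.0 / (volume_l_per_ha * band_width_m)


def plan_is_feasible(volume_l_per_ha, speed_kmh, band_width_m,
                     max_flow_lpm=PUMP_MAX_FLOW_LPM):
    """True if the pump can sustain the flow required by the planned dose and speed."""
    return required_flow_lpm(volume_l_per_ha, speed_kmh, band_width_m) <= max_flow_lpm
```

For instance, 1000 L/ha over a 1.2 m band at 2 km/h requires 4 L/min, well within the pump limit; a planner could scale V with foliage density and then recompute Q for the current forward speed.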

Author Contributions

L.C., F.B., D.L. and C.D.M. designed and developed the system and performed the trials. G.S. supervised the development and the trials from the agricultural point of view, G.M. supervised and coordinated the whole project.

Funding

This work was funded by an internal research project of DIEEI and DI3A of the University of Catania.

Conflicts of Interest

The authors declare no conflict of interest.

References

- EURACTIV. Special Report: Innovation–Feeding the World. Available online: https://www.euractiv.com/section/agriculture-food/special_report/innovation-feeding-the-world/ (accessed on 21 June 2019).

- The Future of Food and Agriculture: Trends and Challenges, FAO. 2017. Available online: http://www.fao.org/3/a-i6583e.pdf (accessed on 21 June 2019).

- Song, Y.; Sun, H.; Li, M.; Zhang, Q. Technology Application of Smart Spray in Agriculture: A Review. Intell. Autom. Soft Comput. 2015, 21, 319–333.

- Damalas, C.A.; Koutroubas, S.D. Farmers’ exposure to pesticides: Toxicity types and ways of prevention. Toxics 2016, 4, 1.

- Bechar, A.; Vigneault, C. Agricultural robots for field operations: Concepts and components. Biosyst. Eng. 2016, 149, 94–111.

- Bechar, A.; Vigneault, C. Agricultural robots for field operations. Part 2: Operations and systems. Biosyst. Eng. 2016, 153, 110–128.

- Robots in Agriculture. Available online: https://www.intorobotics.com/35-robots-in-agriculture/ (accessed on 21 June 2019).

- Bergerman, M.; Singh, S.; Hamner, B. Results with autonomous vehicles operating in specialty crops. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation (ICRA), St. Paul, MN, USA, 14–18 May 2012; pp. 1829–1835.

- Vasconez, J.P.; Kantor, G.A.; Cheein, F.A.A. Human–robot interaction in agriculture: A survey and current challenges. Biosyst. Eng. 2019, 179, 35–48.

- Sánchez-Hermosilla, J.; González, R.; Rodríguez, F.; Donaire, J.G. Mechatronic description of a laser autoguided vehicle for greenhouse operations. Sensors 2013, 13, 769–784.

- González, R.; Rodríguez, F.; Sánchez-Hermosilla, J.; Donaire, J.G. Navigation techniques for mobile robots in greenhouses. Appl. Eng. Agric. 2009, 25, 153–165.

- Harik, E.H.C.; Korsaeth, A. Combining Hector SLAM and Artificial Potential Field for Autonomous Navigation Inside a Greenhouse. Robotics 2018, 7, 22.

- Reis, R.; Mendes, J.; do Santos, F.N.; Morais, R.; Ferraz, N.; Santos, L.; Sousa, A. Redundant robot localization system based in wireless sensor network. In Proceedings of the IEEE International Conference on Autonomous Robot Systems and Competitions (ICARSC), Torres Vedras, Portugal, 25–27 April 2018; pp. 154–159.

- Tourrette, T.; Deremetz, M.; Naud, O.; Lenain, R.; Laneurit, J.; De Rudnicki, V. Close Coordination of Mobile Robots Using Radio Beacons: A New Concept Aimed at Smart Spraying in Agriculture. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 7727–7734.

- Vinerobot Project. Available online: http://vinerobot.eu/ (accessed on 21 June 2019).

- Vinbot Project. Available online: http://vinbot.eu/ (accessed on 21 June 2019).

- Berenstein, R.; Shahar, O.B.; Shapiro, A.; Edan, Y. Grape clusters and foliage detection algorithms for autonomous selective vineyard sprayer. Intell. Serv. Robot. 2010, 3, 233–243.

- Yan, T.; Zhu, H.; Sun, L.; Wang, X.; Ling, P. Detection of 3-D objects with a 2-D laser scanning sensor for greenhouse spray applications. Comput. Electron. Agric. 2018, 152, 363–374.

- Adamides, G.; Katsanos, C.; Parmet, Y.; Christou, G.; Xenos, M.; Hadzilacos, T.; Edan, Y. HRI usability evaluation of interaction modes for a teleoperated agricultural robotic sprayer. Appl. Ergon. 2017, 62, 237–246.

- CROPS Project. Available online: http://www.crops-robots.eu/ (accessed on 21 June 2019).

- Flourish Project. Available online: http://flourish-project.eu/ (accessed on 21 June 2019).

- Muscato, G.; Bonaccorso, F.; Cantelli, L.; Longo, D.; Melita, C.D. Volcanic environments: Robots for exploration and measurement. IEEE Robot. Autom. Mag. 2012, 19, 40–49.

- Longo, D.; Pennisi, A.; Bonsignore, R.; Schillaci, G.; Muscato, G. A small autonomous electrical vehicle as partner for heroic viticulture. Acta Hort. 2013, 978, 391–398.

- Quigley, M.; Conley, K.; Gerkey, B.; Faust, J.; Foote, T.; Leibs, J.; Wheeler, R.; Ng, A.Y. ROS: An open-source Robot Operating System. In Proceedings of the ICRA Workshop on Open Source Software, Kobe, Japan, 12–17 May 2009; p. 5.

- Bonaccorso, F.; Muscato, G.; Baglio, S. Laser range data scan-matching algorithm for mobile robot indoor self-localization. In Proceedings of the World Automation Congress (WAC), Puerto Vallarta, Mexico, 24–28 June 2012; pp. 1–5.

- Siciliano, B.; Khatib, O. Springer Handbook of Robotics; Force Control; Springer: New York, NY, USA, 2008; pp. 161–185.

- Balloni, S.; Caruso, L.; Cerruto, E.; Emma, G.; Schillaci, G. A Prototype of Self-Propelled Sprayer to Reduce Operator Exposure in Greenhouse Treatment. In Proceedings of the Ragusa SHWA International Conference: Innovation Technology to Empower Safety, Health and Welfare in Agriculture and Agro-food Systems, Ragusa, Italy, 15–17 September 2008.

- Cunha, M.; Carvalho, C.; Marcal, A.R.S. Assessing the ability of image processing software to analyse spray quality on water-sensitive papers used as artificial targets. Biosyst. Eng. 2012, 111, 11–23.

- Salyani, M.; Zhu, H.; Sweeb, R.D.; Pai, N. Assessment of spray distribution with water-sensitive paper. Agric. Eng. Int. CIGR J. 2013, 15, 101–111.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).