Unleashing the Potential of Large Language Models in Urban Data Analytics: A Review of Emerging Innovations and Future Research

Highlights

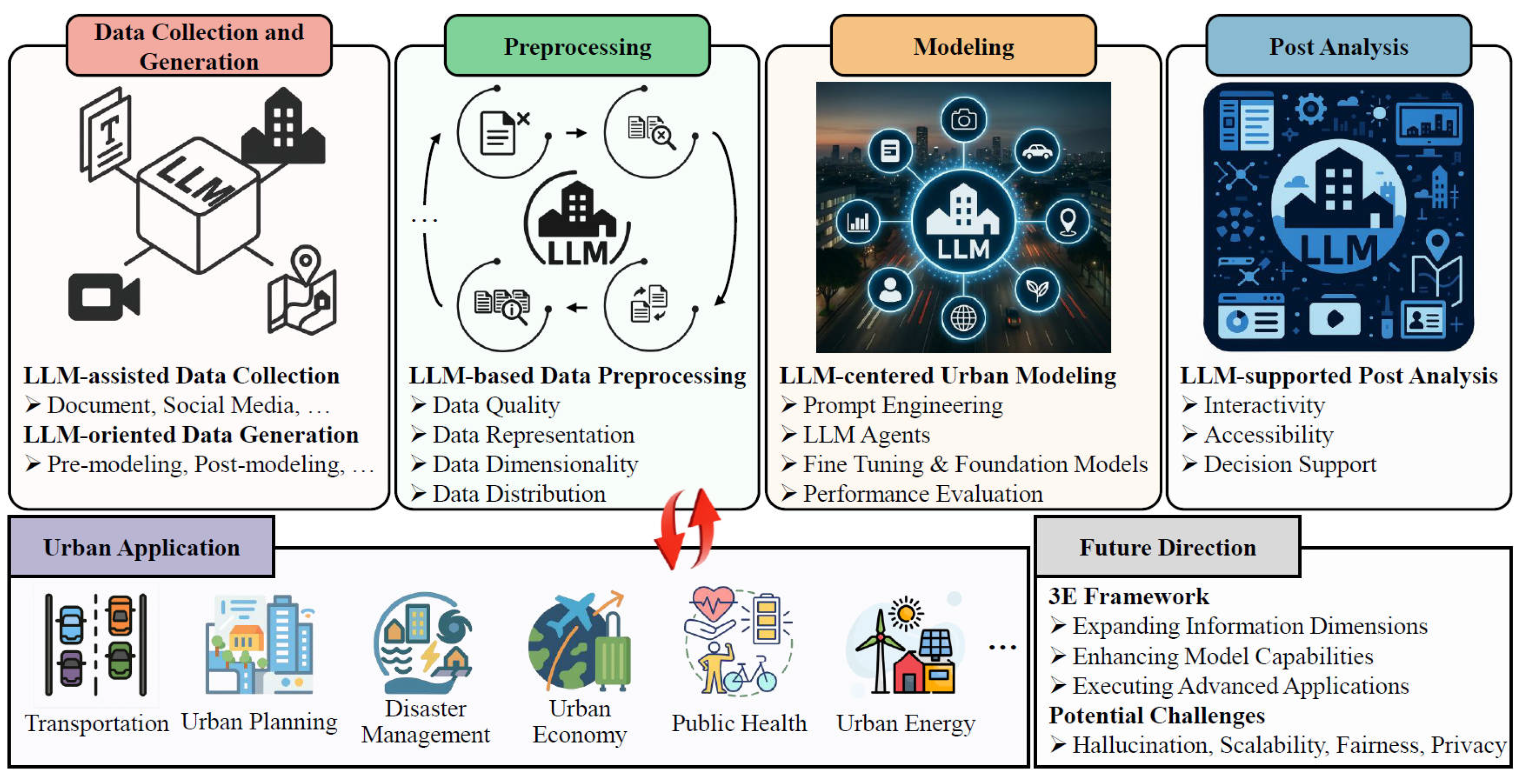

- The review shows how large language models operate across the full urban analytics pipeline and documents their functions using evidence from 178 studies.

- The review identifies stable patterns in multimodal integration, synthetic data generation, and human-in-the-loop use in urban workflows.

- The findings clarify how LLMs improve urban analytical capacity through stronger data integration and more accessible analytical interfaces.

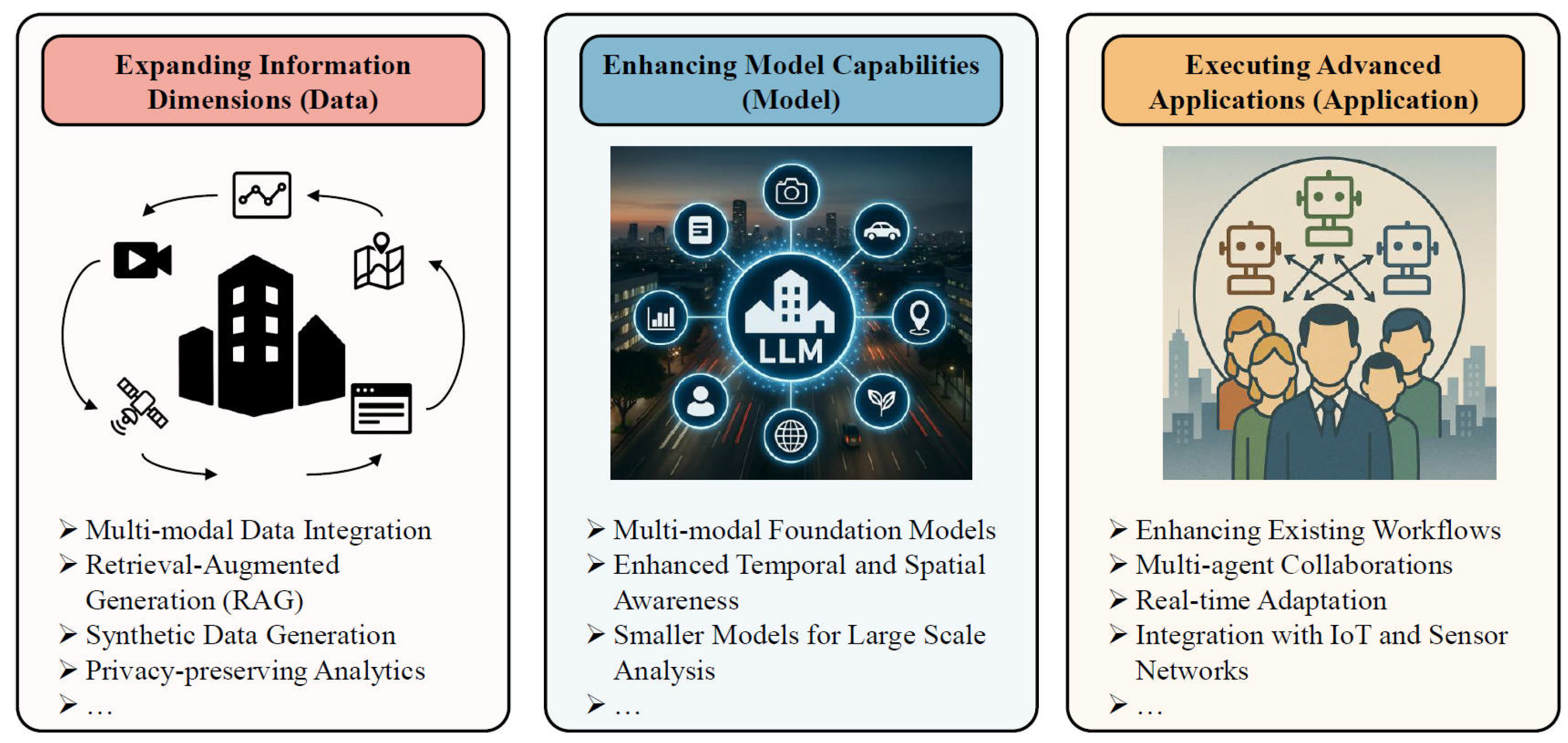

- The 3E framework offers a concise structure to guide future research on data expansion, model enhancement, and advanced urban applications.

Abstract

1. Introduction

1.1. Background

1.2. Motivation and Objectives

- What characteristics and capabilities of LLMs make them suitable for urban data analytics, and how do these properties align with the requirements of urban analytical workflows?

- How are LLMs currently being applied across urban data analytics tasks, and what approaches or implementations demonstrate their practical utility?

- What research directions and innovation opportunities emerge from the existing body of work, and how might these directions improve the effectiveness of LLMs in urban data analytics?

- What technical and operational challenges constrain the integration of LLMs into urban data analytics, and what barriers require further investigation?

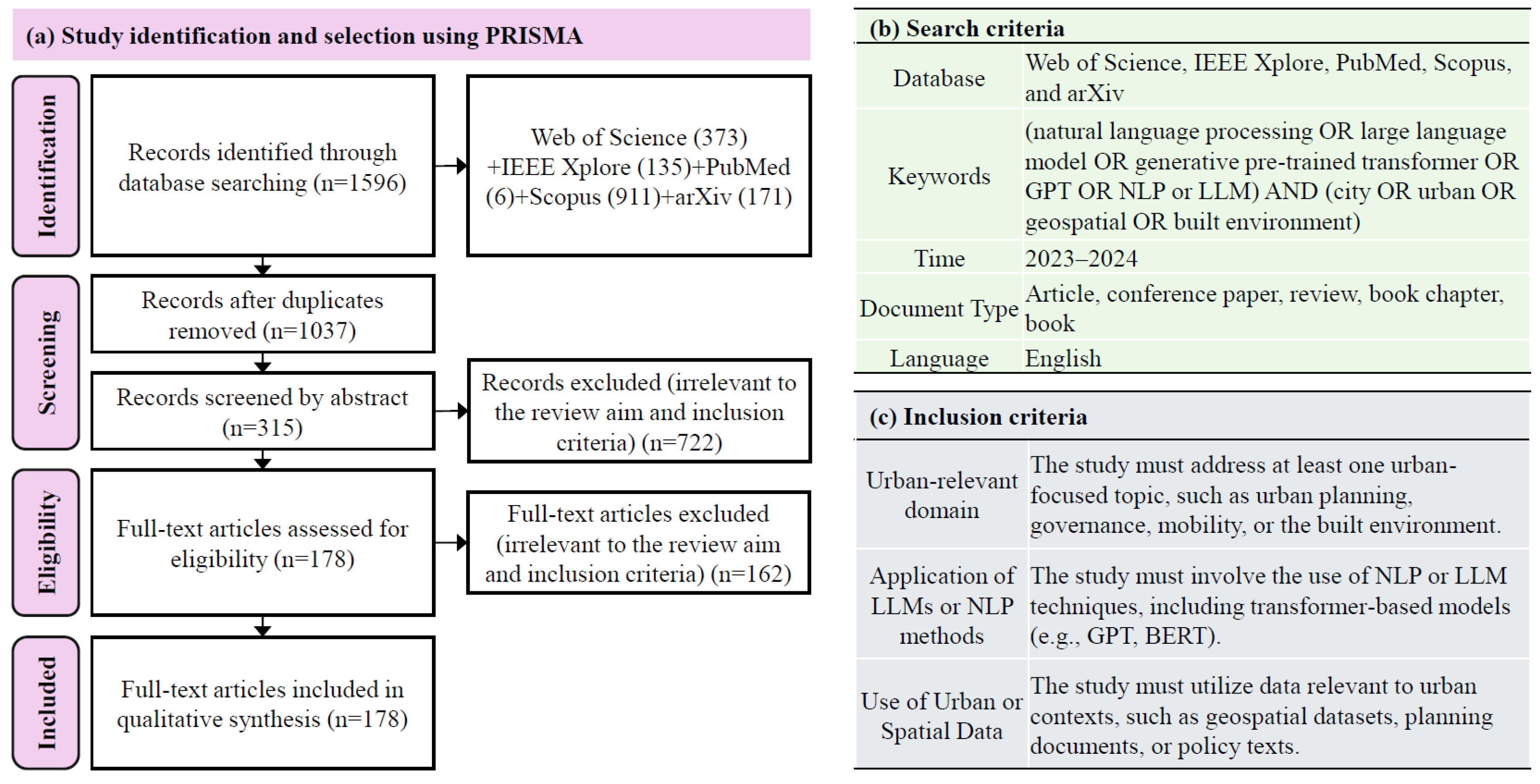

- We present a comprehensive analysis of 178 papers, offering a panoramic view of the current state of LLM applications in urban analytics. This extensive review unveils emerging trends and innovative applications across diverse urban domains, providing valuable insights into both the potential and challenges of these technologies.

- We develop a novel review framework based on the key steps in urban data analytics: data collection, preprocessing, modeling, and post-analysis. This framework ensures a systematic examination and categorization of LLM applications throughout the urban data analytics pipeline.

- We propose a 3E framework (Expanding information dimensions, Enhancing model capabilities, and Executing advanced applications) to chart future directions of LLMs in urban analytics. This framework serves as a roadmap for future research and innovation in this burgeoning field.

2. Overview of Large Language Models

2.1. Landscape of Large Language Models

2.2. Key Characteristics and Capabilities

2.2.1. Key Technologies in Developing LLMs

2.2.2. Major Capabilities of LLMs

- At the basic level, LLMs excel in fundamental text processing (e.g., summarization and sentiment analysis), simple text generation, and simple question answering. Recent studies demonstrate these capabilities through tasks such as generative approaches to aspect-based sentiment analysis and automatic thematic extraction from large text collections [29]. In urban data analytics, LLMs can efficiently process city documents, analyze social media sentiment about urban issues, and classify transportation complaints. For example, they can analyze thousands of resident feedback submissions to identify emerging neighborhood concerns or track sentiment trends about public transit quality.

- At the intermediate level, LLMs demonstrate advanced text understanding, advanced text generation ability (e.g., code generation), complex reasoning, and sophisticated task execution. Studies assessing SQL generation quality for models such as GPT-3.5 and Gemini show how LLMs handle structured analytical tasks and compositional instructions [30]. In urban contexts, these capabilities enable LLMs to interpret complex zoning regulations, generate comprehensive urban development reports, and solve multifaceted problems like the optimization of traffic flow. They can translate technical urban planning documents into accessible language for public consumption or analyze patterns in accident reports to identify unsafe intersections.

- At the advanced level, LLMs push the boundaries with sophisticated knowledge reasoning, tool planning, simulation, multimodal capabilities, and advanced system integration. Recent advances in large-scale information extraction and multimodal reasoning demonstrate how LLMs coordinate tools and integrate diverse signals in complex analytical environments [31]. In urban data analytics, LLMs create comprehensive urban knowledge graphs connecting demographic, economic, and infrastructure data. They simulate urban scenarios (e.g., traffic pattern changes after infrastructure modifications), integrate with IoT networks for real-time city monitoring, and enable collaborative multi-agent systems for emergency response coordination. For instance, an LLM-powered system could integrate traffic sensor data, weather forecasts, and event schedules to predict congestion patterns and dynamically adjust traffic signals.

2.3. Review Methods

3. Applications of LLMs in Urban Data Analytics

3.1. Urban Data Analytics Landscape

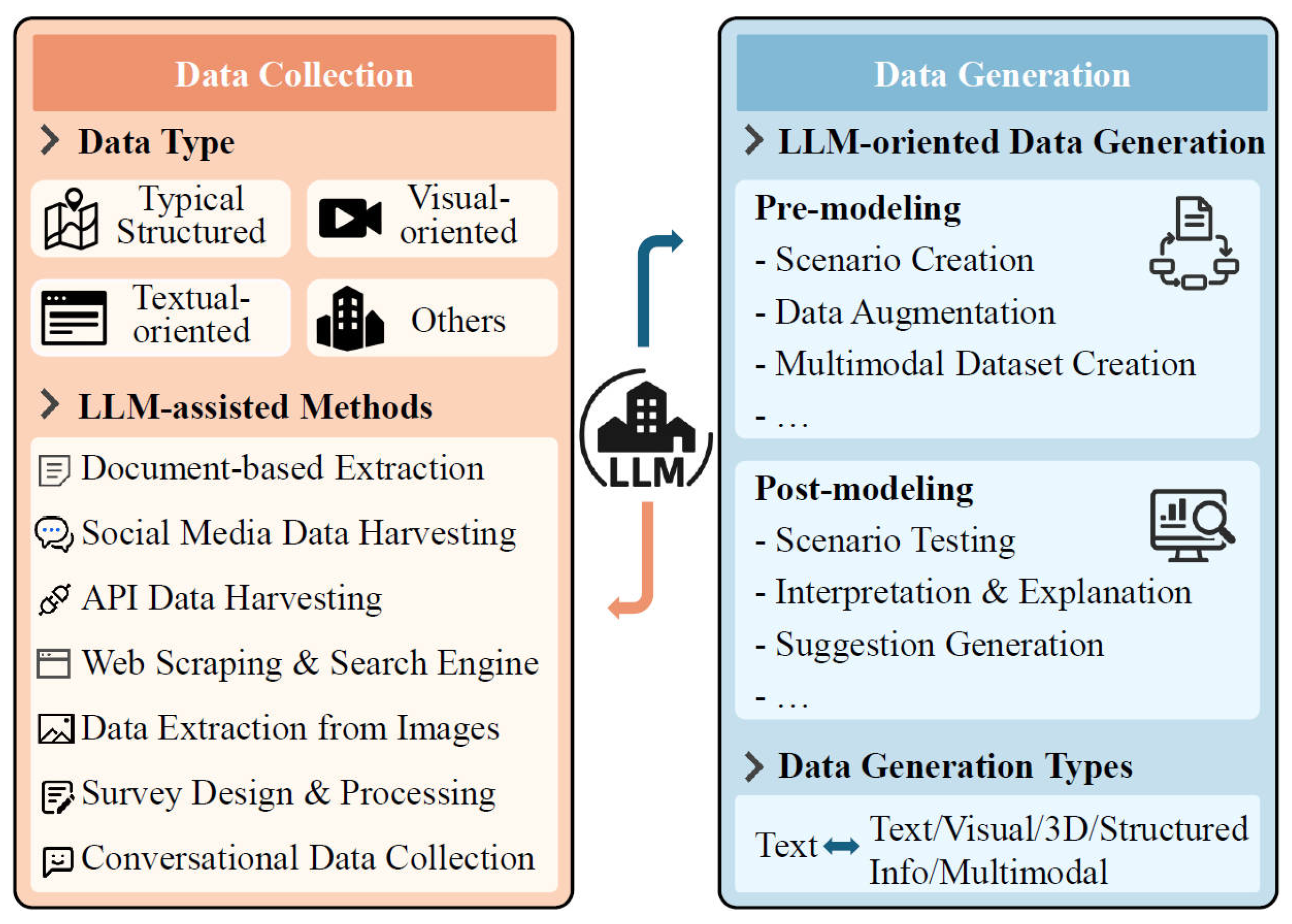

3.2. Data Collection and Generation

3.2.1. Data Collection

| Data Source | LLM-Assisted Method and Description | Examples |
|---|---|---|
| Document | Document-based Data Extraction: Extracts relevant information from various textual sources, such as policies, regulations, forms, and reports, using LLMs. | [146,166] |
| Social Media | Social Media Data Harvesting: Collects and analyzes user-generated content from social media platforms to extract urban-related insights and sentiment. | [112,153] |
| API | API Data Harvesting: Develops LLM-powered agents or generates code to collect spatial and other types of data from various APIs as requested by users through natural language queries. | [164,174] |
| Web | LLM-powered Web Scraping and Search Engine: Utilizes online LLM agents to gather, filter, and synthesize urban data from diverse Web sources and online databases. | [116] |
| Image | Data Extraction from Images: Leverages image understanding and captioning capabilities to collect data from visual urban sources like photographs and satellite imagery. | [47] |
| Survey | Intelligent Survey Design and Processing: Employs LLMs for survey question generation, adaptive questioning, and automated analysis of survey responses. | [34,203] |
| Chatbot | Conversational Data Collection: Utilizes chatbots or voice assistants to gather structured and unstructured data through natural language interactions. | [142] |

3.2.2. Data Generation

Purposes and Applications

- Pre-modeling: During the foundational stage of urban analytics, LLMs enhance data preparation through three key capabilities. First, they excel at scenario creation by generating realistic urban situations, from routine traffic patterns to complex scenarios like public events or emergency situations, providing researchers with diverse datasets for simulation and analysis. Second, LLMs address data scarcity through augmentation, expanding limited datasets by synthesizing plausible variations of existing data points, which is particularly valuable when studying under-represented neighborhoods or rare urban events. Third, these models facilitate the creation of multimodal datasets by transforming unstructured text (such as planning documents, social media posts, or citizen feedback) into structured formats while also integrating various data types, such as pairing visual data or location-based information with textual descriptions. This comprehensive approach enables more nuanced analysis of urban phenomena by capturing multiple dimensions of city life that traditional data collection methods might miss.

- Post-modeling: After urban models are developed, LLMs enhance their validation, interpretation, and practical application in three ways. First, through scenario testing, LLMs generate diverse test cases—such as different weather conditions, varying traffic volumes, or unexpected events—allowing urban planners to evaluate model robustness across a wide range of realistic situations. Second, these models excel at interpretation and explanation by converting complex analytical outputs into clear, contextual narratives that stakeholders can understand. For instance, when a traffic model predicts congestion patterns, an LLM can explain the underlying factors in plain language, making the insights accessible to policymakers and citizens alike. Third, LLMs support suggestion generation by synthesizing model outputs into actionable recommendations, such as by proposing specific policy interventions based on predicted outcomes or generating detailed implementation strategies for urban development projects. This capability transforms raw analytical results into practical guidance for urban planning and policy-making.
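The text-to-structured step mentioned under pre-modeling can be illustrated with a minimal sketch. It assumes the model is asked for a JSON object and that its reply is validated before entering a dataset. The field names, the topic label set, and the prompt wording are all hypothetical, and no LLM is actually called; a hand-written reply stands in for model output.

```python
import json
from dataclasses import dataclass

# Hypothetical label set, chosen only for illustration.
ALLOWED_TOPICS = {"transit", "housing", "safety", "other"}


@dataclass
class FeedbackRecord:
    topic: str
    location: str
    sentiment: str


def build_extraction_prompt(text: str) -> str:
    """Ask for a JSON object with a fixed set of keys (wording is illustrative)."""
    return (
        "Extract a JSON object with keys 'topic', 'location', 'sentiment' "
        f"from the following resident feedback. Allowed topics: {sorted(ALLOWED_TOPICS)}.\n"
        f"Feedback: {text}"
    )


def parse_record(raw_response: str) -> FeedbackRecord:
    """Validate a model reply before it is added to a structured dataset."""
    data = json.loads(raw_response)
    topic = data.get("topic", "other")
    if topic not in ALLOWED_TOPICS:
        topic = "other"  # fall back rather than trust an unexpected label
    return FeedbackRecord(
        topic=topic,
        location=str(data.get("location", "")),
        sentiment=str(data.get("sentiment", "unknown")),
    )


# Hand-written stand-in for a model reply; no LLM is called here.
example_reply = '{"topic": "transit", "location": "Main St", "sentiment": "negative"}'
record = parse_record(example_reply)
```

Validating against a closed label set is one simple guard against free-form model output leaking into downstream analysis; real pipelines would likely need richer schemas and error handling.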

Scenario and Solution Generation

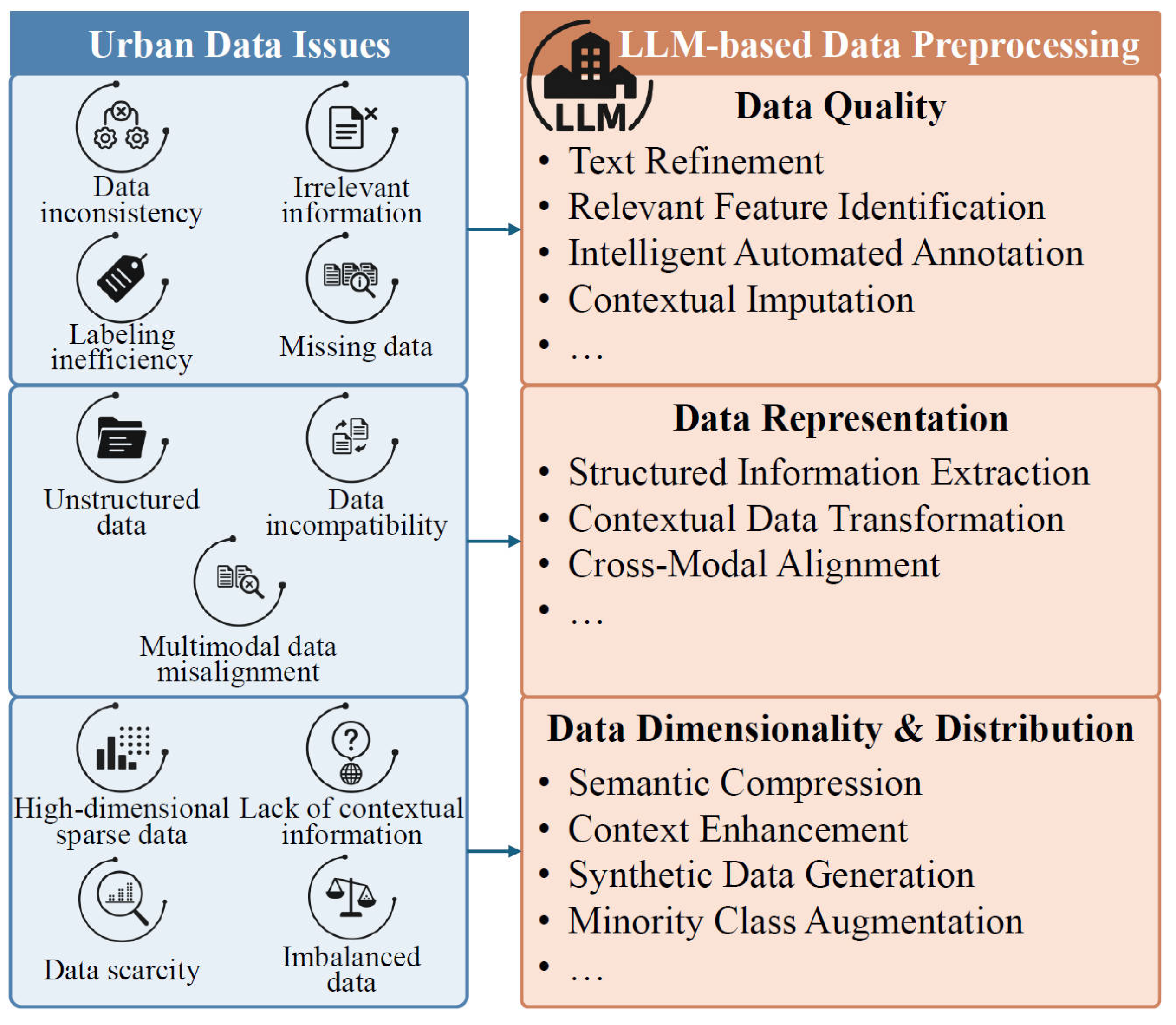

3.3. Preprocessing

3.3.1. Data Quality Issues

3.3.2. Data Representation Issues

3.3.3. Data Dimensionality and Distribution Issues

3.3.4. Representative Application

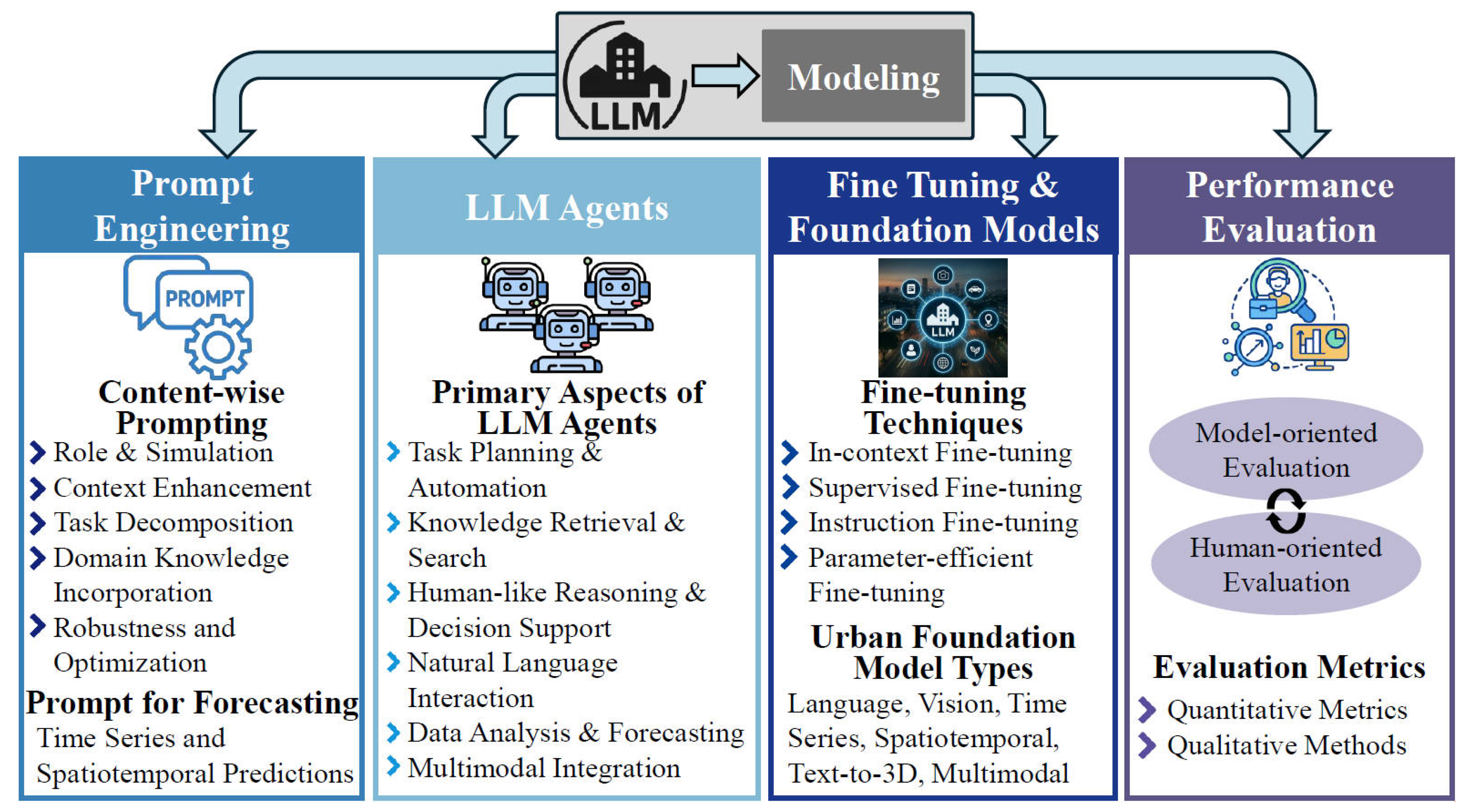

3.4. Modeling

3.4.1. Prompt Engineering

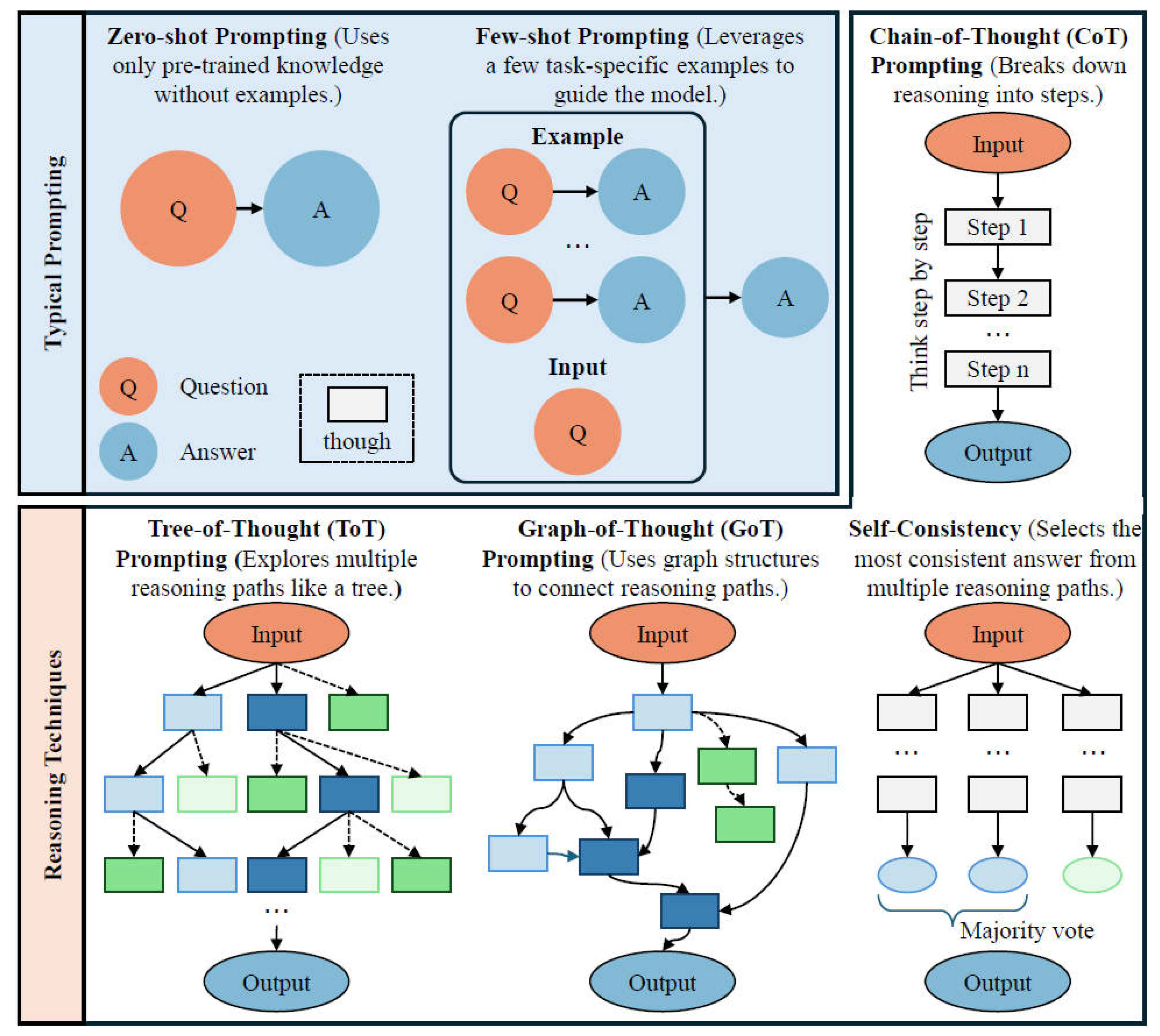

Representative Prompting Techniques

Content-Wise Prompting

Prompts for Forecasting

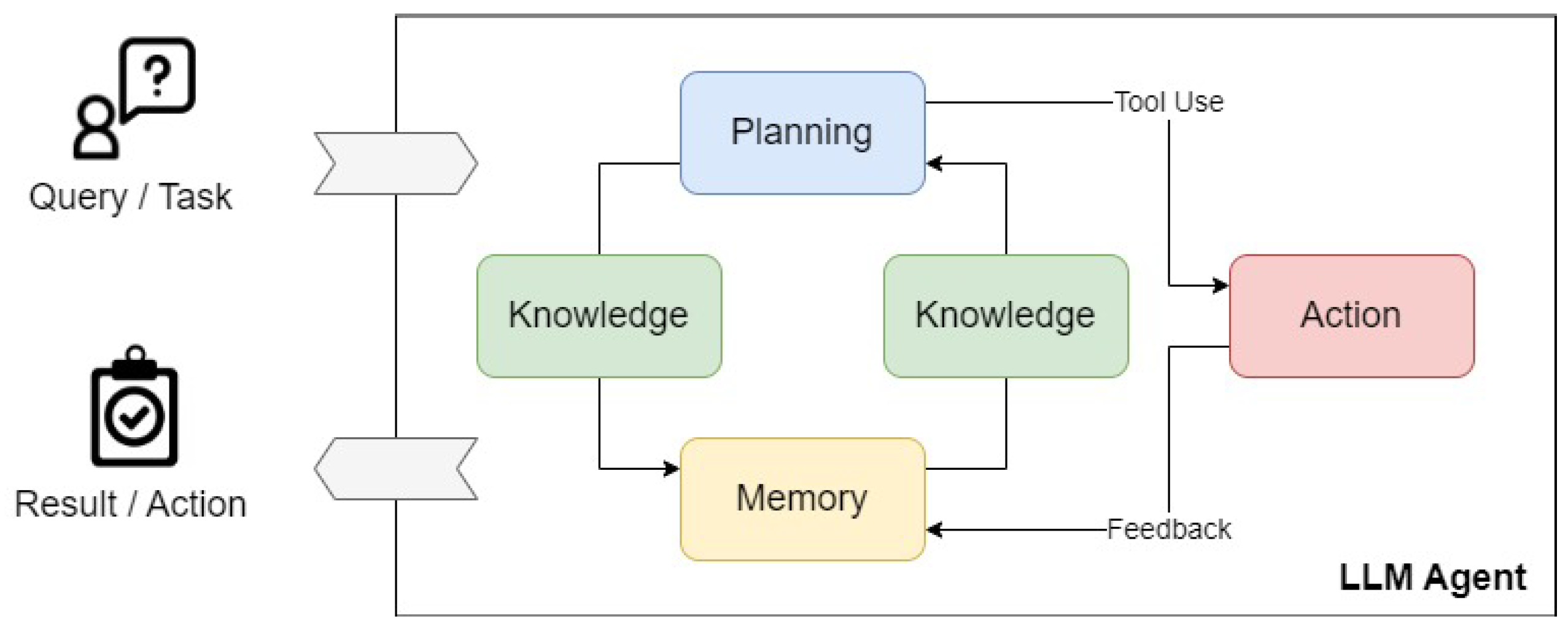

3.4.2. LLM Agents

Key Components

- Knowledge: This foundational element encompasses contextual information and domain expertise, allowing the agent to draw upon relevant urban-specific data for its tasks.

- Planning: This component empowers the agent to break down complex tasks and create strategies to solve them, incorporating various planning approaches and feedback mechanisms.

- Memory: By storing and recalling information from past interactions, the memory component allows the agent to evolve and learn, informing future actions.

- Action: This component translates the agent’s decisions into specific outcomes and tool uses, directly interacting with the environment to achieve defined goals.
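The four components above can be sketched as a small skeleton. This is an illustrative toy under stated assumptions, not an implementation from any reviewed system: the class name `UrbanAgent`, the keyword-matching planner (a stand-in for an LLM planner), and the two lambda tools are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class UrbanAgent:
    knowledge: Dict[str, str]                         # Knowledge: domain context per tool
    tools: Dict[str, Callable[[str], str]]            # Action: callable tools
    memory: List[str] = field(default_factory=list)   # Memory: log of past steps

    def plan(self, task: str) -> List[str]:
        """Planning: select tools whose name appears in the task text.

        A real agent would ask an LLM to decompose the task; keyword
        matching is used here only to keep the sketch self-contained.
        """
        return [name for name in self.tools if name in task.lower()]

    def run(self, task: str) -> List[str]:
        results = []
        for tool_name in self.plan(task):
            context = self.knowledge.get(tool_name, "")
            output = self.tools[tool_name](context)          # Action
            results.append(output)
            self.memory.append(f"{tool_name}: {output}")     # Memory update
        return results


agent = UrbanAgent(
    knowledge={"traffic": "sensor feed A12"},
    tools={
        "traffic": lambda ctx: f"queried {ctx}",
        "weather": lambda ctx: "queried forecast",
    },
)
outputs = agent.run("Summarize traffic near the stadium")
```

Here only the `traffic` tool is selected, and the step is recorded in `agent.memory` so that later calls could draw on it.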

LLM Agents in Urban Analytics

3.4.3. Fine-Tuning and Foundation Models

Fine-Tuning

- In-context fine-tuning (Prompt engineering): This technique involves crafting specific prompts and adding few-shot examples to guide the model’s behavior without changing its parameters. It is useful for quick adaptations and when labeled data is scarce.

- Supervised fine-tuning: This approach involves fine-tuning a pre-trained model on a labeled dataset specific to the target task. It is ideal when substantial labeled data is available and domain-specific performance is crucial.

- Instruction fine-tuning: This method involves fine-tuning a pre-trained model on a dataset of instructions and their corresponding outputs. It is particularly effective for task-specific applications and improving model interpretability.

- Parameter-Efficient Fine-tuning (PEFT): PEFT techniques aim to adapt pre-trained models to new tasks while updating only a small subset of the model’s parameters. This approach is suitable when computational resources are limited or when preserving general knowledge is important.
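To make "a small subset of the model's parameters" concrete, the following sketch counts trainable parameters for a single weight matrix under a low-rank update of the LoRA kind. LoRA is not named in the text above and is used here only as one familiar PEFT example; the layer size and rank are illustrative assumptions.

```python
def full_update_params(d_out: int, d_in: int) -> int:
    """Parameters updated when the whole d_out x d_in matrix is trained."""
    return d_out * d_in


def low_rank_update_params(d_out: int, d_in: int, rank: int) -> int:
    """Parameters in a rank-r update B @ A, with B: d_out x r and A: r x d_in."""
    return rank * (d_out + d_in)


d = 4096                                      # illustrative hidden size
full = full_update_params(d, d)               # 16,777,216
low = low_rank_update_params(d, d, rank=8)    # 65,536
fraction = low / full                         # 1/256, about 0.39%
```

Under these assumed sizes, the low-rank update trains roughly 0.4% of the parameters of the full matrix, which is why such methods suit settings with limited compute.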

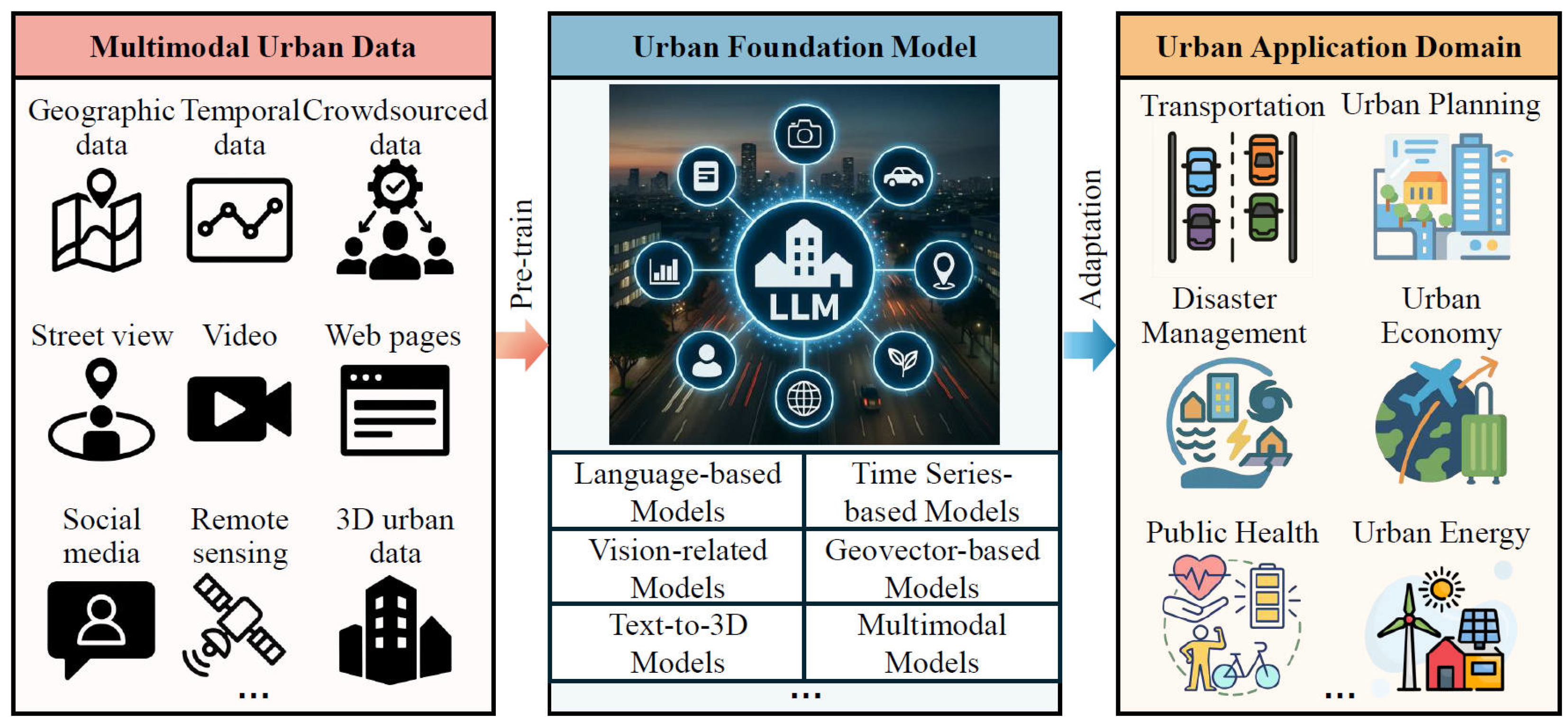

Urban Foundation Models

3.4.4. Performance Evaluation

Model-Oriented Evaluation

- Quality of Generated Data: Assessing the accuracy and realism of synthetic data produced by LLMs is crucial in urban analytics, where data quality directly impacts decision-making. For example, ref. [77] evaluated the realism of AI-generated driving scenarios using metrics like collision rates and emergency braking incidents, demonstrating the importance of high-quality synthetic data in urban transportation planning.

- Prediction Performance: Measuring the accuracy of LLM predictions on various urban tasks is essential for ensuring reliable outcomes. Ref. [63] assessed traffic flow prediction accuracy using metrics such as Mean Absolute Error (MAE) and Root Mean Square Error (RMSE), highlighting the critical role of precise predictions in urban mobility management.

- Task Completion: The ability of LLMs to successfully complete complex urban tasks is another important aspect, particularly in agent-based applications. Ref. [172] measured task completion in GeoLLM-Engine by assessing both the functional correctness of an agent’s tool usage and the success rate of achieving the expected end state of a task, using automated model-checking techniques that compare the agent’s actions and outcomes against pre-defined ground truths. In traffic signal control tasks, a fine-tuned model [85] achieved a 23.6% reduction in average vehicle delay and an 18.9% increase in throughput, while a prompt-based system [64] reduced average waiting time by 21.4%.

- Robustness, Generalization, and Efficiency: While less common in current urban analytics applications, these aspects are gaining importance as LLMs become more integrated into urban systems. Evaluating LLM performance under varying conditions or with noisy input data (robustness), on unseen data or in new urban environments (generalization), and in terms of computational resources and time required (efficiency) is critical for ensuring the practical applicability of LLMs in diverse urban contexts. For instance, ref. [70] tested LLM-based mobility prediction models on days with and without public events to assess robustness, ref. [97] evaluated the performance of urban cross-modal retrieval models across different cities for generalization, and ref. [50] compared the efficiency of LLM-based approaches with that of traditional optimization methods for delivery route planning.
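The two prediction metrics named above, MAE and RMSE, can be computed as in the following minimal sketch. The traffic-flow values are made up for illustration and do not come from any cited study.

```python
import math
from typing import Sequence


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean Absolute Error: average magnitude of the errors."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root Mean Square Error: penalizes large errors more than MAE does."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


# Hypothetical hourly vehicle counts (observed vs. predicted).
observed = [120.0, 135.0, 150.0, 110.0]
predicted = [118.0, 140.0, 147.0, 115.0]

flow_mae = mae(observed, predicted)    # 3.75
flow_rmse = rmse(observed, predicted)  # sqrt(15.75), about 3.97
```

RMSE is never smaller than MAE on the same data, so a large gap between the two suggests that a few large errors dominate.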

Human-Oriented Evaluation

- Interpretability: Evaluating the explainability of LLM decisions and outputs is crucial for building trust and understanding, especially in urban planning and policy-making contexts. Ref. [57] assessed the quality and relevance of explanations provided by LLMs for traffic control decisions, demonstrating the importance of transparent AI systems in urban management.

- Human–AI Collaboration: Assessing how well LLMs support and enhance human decision-making in urban contexts is vital for maximizing the synergy between human expertise and AI capabilities. Ref. [35] examined the impact of ChatGPT assistance on human performance in traffic simulation tasks, highlighting the potential of LLMs to augment human problem-solving in complex urban scenarios.

- Ethical Considerations: Evaluating LLM adherence to ethical guidelines and potential biases in urban applications is essential for ensuring fair and responsible AI use in city planning and management. Ref. [135] assessed LLM-generated content for potential biases in tourism marketing, underscoring the need for vigilance in maintaining ethical standards as LLMs become more prevalent in shaping urban narratives and experiences.

Evaluation Metrics

- Quantitative Metrics: These provide numerical measures of LLM performance. They include accuracy for tasks like question answering or text classification [167], exact matching for precise output evaluation [46], common machine learning metrics such as F1 score [126], response time for real-time applications [37], consistency across different input formats [160], and task-specific metrics like collision rate in autonomous driving simulations [79]. These metrics offer a standardized way to assess and compare LLM performance across various urban analytics tasks.

- Qualitative Methods: These provide deeper insights into LLM performance and user interaction. They include expert reviews to assess accuracy and relevance [146], user satisfaction surveys to gauge usability and effectiveness [90], in-depth case studies to understand model behavior in specific scenarios [155], and ethical assessments to evaluate adherence to guidelines and potential biases [127]. These methods are crucial for understanding the nuanced performance of LLMs in complex urban contexts and their impact on stakeholders.
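Two of the quantitative metrics listed above, exact matching and F1 score, can be sketched as follows. The case-insensitive, whitespace-trimmed normalization in `exact_match` is an assumption (studies differ on normalization), and the labels are made-up examples.

```python
from typing import Sequence


def exact_match(prediction: str, reference: str) -> bool:
    """True when the answers agree after trimming and lowercasing (assumed normalization)."""
    return prediction.strip().lower() == reference.strip().lower()


def f1_score(y_true: Sequence[str], y_pred: Sequence[str], positive: str) -> float:
    """Binary F1 for one class: harmonic mean of precision and recall."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    if tp == 0:
        return 0.0  # no true positives: precision and recall are both zero or undefined
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Hypothetical classification of resident messages.
labels = ["complaint", "praise", "complaint", "complaint"]
preds = ["complaint", "complaint", "praise", "complaint"]

complaint_f1 = f1_score(labels, preds, positive="complaint")  # 2/3
same_answer = exact_match(" Main St ", "main st")             # True
```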

3.5. Post-Analysis

- Interactivity: LLMs have enabled more interactive and responsive urban data analytics systems, primarily through QA systems and automatic surveys. LLM-powered QA systems allow users to interact with urban data in natural language, as seen in the system of [109] for answering queries about flood situations in real time and the system of [57] for explaining traffic control decisions. LLMs can also assist in conducting surveys and analyzing public opinion on urban issues: ref. [138] used LLMs to assess customer satisfaction in tourism, while ref. [145] analyzed public opinion on nuclear power. These interactive capabilities make urban data more accessible to non-experts, enable rapid information retrieval, and allow for more efficient and comprehensive assessment of public sentiment on urban policies and developments.

- Accessibility: LLMs have significantly improved the accessibility of urban data analysis results through various techniques. These include automatic report generation, result visualization, and result/decision explanation. For instance, ref. [58] demonstrated the use of LLMs in creating traffic advisory reports, while ref. [105] showcased their application in producing detailed ecological construction reports. In terms of visualization, ref. [34] utilized LLMs to visualize geospatial trends in transit feedback, and ref. [164] employed them to create maps and charts for COVID-19 death-rate analysis. In addition, LLMs can provide explanations for results or decisions, as demonstrated by [57] in the context of traffic signal control decisions and by [48] in explaining predictions for autonomous driving. These capabilities collectively enhance the understanding and usability of complex urban data analysis results.

- Decision Support: LLMs have significantly enhanced decision-support capabilities in urban analytics through scenario/policy simulation, decision analysis, and personalized recommendations. In scenario simulation, ref. [113] demonstrated the use of LLMs in simulating disaster scenarios for education, while ref. [59] used them to generate activity patterns under different conditions. For decision analysis, ref. [60] showed how LLMs could aid in traffic management and urban planning decisions, and ref. [152] demonstrated their use in supporting policy-making for health and disaster management. In terms of personalized recommendations, ref. [171] used LLMs to create tailored travel itineraries, while ref. [113] developed a system for providing personalized emergency guidance. These capabilities collectively provide valuable insights for urban planning and policy formulation, assist in complex decision-making processes, and allow for more targeted and effective urban services.

3.6. Summary

4. Future Directions and Challenges

4.1. Overview of the 3E Framework

- Expanding Information Dimensions (Data): This pillar focuses on enriching the data ecosystem by integrating diverse sources (structured, unstructured, visual, and temporal) to create comprehensive digital representations of urban systems. Breaking down data silos and enabling access to domain-specific knowledge establishes a foundation for holistic urban analysis.

- Enhancing Model Capabilities (Model): This pillar addresses the development of LLMs specifically designed to process urban data complexities. This includes creating models that effectively handle multimodal information, capture spatial–temporal dynamics, and operate efficiently at scale—transforming raw urban data into actionable intelligence.

- Executing Advanced Applications (Application): This pillar bridges technological innovation and real-world impact by deploying LLMs to address complex urban challenges, exploring applications in real-time decision-making, multi-agent simulations, and cross-domain collaboration to deliver tangible benefits for urban planning and policy-making.

4.2. Expanding Information Dimensions (Data)

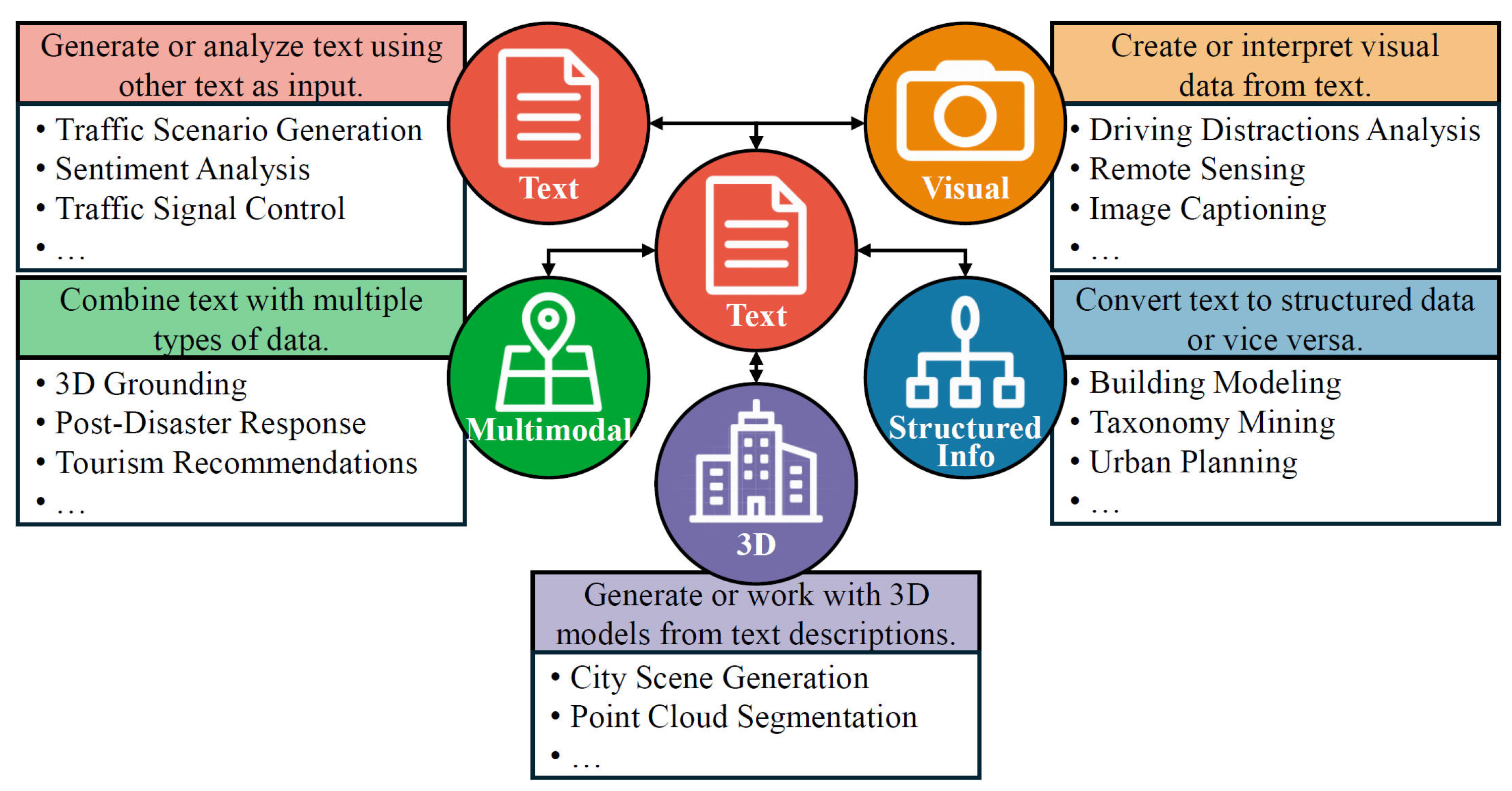

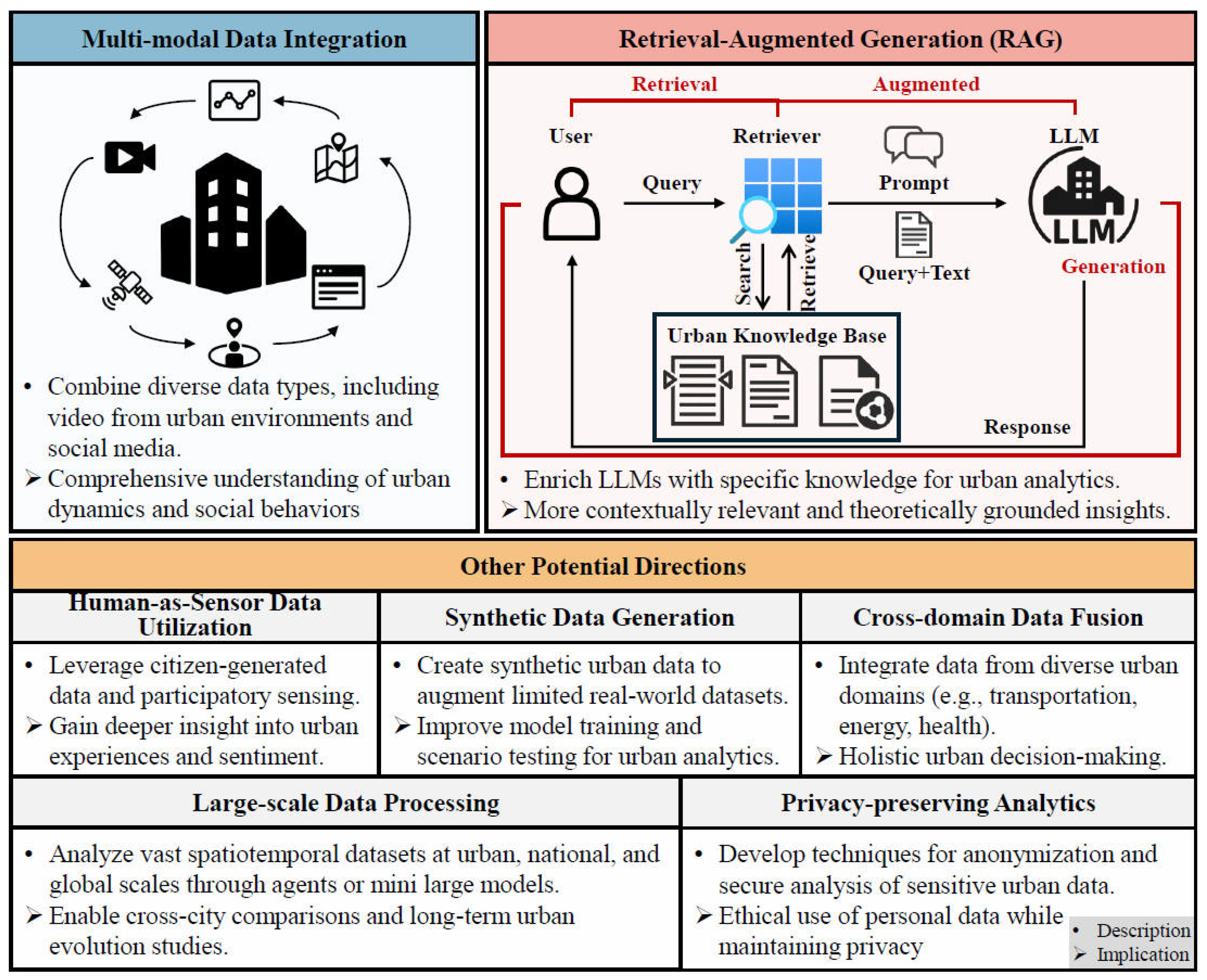

4.2.1. Multimodal Data Integration

Expanding Integration Scope

Advanced Modalities

Potential Applications and Summary

4.2.2. Retrieval-Augmented Generation (RAG)

Potential Applications

4.2.3. Other Data Dimensions

- Human-as-Sensor Data Sources: Research is needed on the effective incorporation of unstructured human observations (social media, feedback forms, and community forums) as reliable data sources for urban analytics. Key challenges include the development of data validation frameworks for citizen-reported information and addressing representation biases in voluntarily contributed urban data.

- Synthetic Urban Data: Opportunities exist for the use of LLMs to generate synthetic urban datasets that can fill gaps in historical records while preserving statistical properties. Future research should focus on validating the fidelity of LLM-generated synthetic data against known urban patterns and ensuring it correctly reflects urban system interdependencies.

- Cross-Domain Data Integration: Research is needed on the creation of standardized approaches for the merging of datasets across traditionally separate domains (e.g., transportation, public health, and economic development) while maintaining semantic consistency. This includes developing shared data schemas and crosswalks between different urban data taxonomies.

- Large-Scale Urban Data Processing: Investigations into efficient methods for processing city-scale heterogeneous datasets with LLMs represent an important research direction. This includes developing techniques for partitioning and processing massive urban datasets while maintaining context across analysis segments.

- Privacy-Preserving Data Techniques: Critical research is needed on the incorporation of differential privacy and anonymization techniques specifically designed for urban data when processed through LLMs, especially for sensitive information like mobility patterns, utility usage, and public service access.
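As a point of reference for the differential privacy direction above, the following is a minimal sketch of the classic Laplace mechanism applied to a single count, for example trips through a zone, before that count is passed to a downstream model. The sensitivity of 1 assumes each person contributes at most one trip, which often does not hold for mobility data; the function names and the example count are hypothetical.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy via the Laplace mechanism."""
    sensitivity = 1.0  # assumption: one person changes the count by at most 1
    return true_count + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random(0)  # seeded only to make the sketch reproducible
noisy_trips = private_count(1250, epsilon=0.5, rng=rng)
```

Smaller values of `epsilon` add more noise and give stronger privacy. Designing mechanisms for trajectories or repeated queries is considerably harder than this single-count case, which is part of the research gap the bullet describes.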

4.3. Enhancing Model Capabilities (Model)

4.3.1. Large Foundation Models

Multimodal Foundation Models

Enhanced Temporal and Spatial Awareness

4.3.2. Smaller Models for Large-Scale Analysis

Opportunities

4.4. Executing Advanced Applications (Application)

4.4.1. Enhancing Existing Workflows

4.4.2. Multi-Agent Collaborations

4.4.3. Others

4.5. Potential Applications of the 3E Framework

4.5.1. Urban Resilience and Disaster Management

4.5.2. Urban Mobility and Transportation Systems

4.5.3. Sustainable Urban Development

4.5.4. Summary

4.6. Discussion

4.6.1. Hallucination and Trustworthiness

4.6.2. Scalability and Computational Requirements

4.6.3. Fairness in Urban Decision-Making

4.6.4. Ethical and Privacy Issues

5. Conclusions

- Expanding Information Dimensions: This pillar focuses on multimodal data integration and retrieval-augmented generation to enable more comprehensive urban analyses.

- Enhancing Model Capabilities: This pillar involves developing multimodal foundation models, improving temporal and spatial awareness, and creating efficient smaller models for large-scale analysis.

- Executing Advanced Applications: This pillar concerns the enhancement of existing workflows through automation and human–AI collaboration and the exploration of multi-agent systems for complex urban simulations.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Zou, X.; Yan, Y.; Hao, X.; Hu, Y.; Wen, H.; Liu, E.; Zhang, J.; Li, Y.; Li, T.; Zheng, Y.; et al. Deep Learning for Cross-Domain Data Fusion in Urban Computing: Taxonomy, Advances, and Outlook. Inf. Fusion 2025, 113, 102606.

- Bettencourt, L.M. The origins of scaling in cities. Science 2013, 340, 1438–1441.

- Wang, S.; Hu, T.; Xiao, H.; Li, Y.; Zhang, C.; Ning, H.; Zhu, R.; Li, Z.; Ye, X. GPT, large language models (LLMs) and generative artificial intelligence (GAI) models in geospatial science: A systematic review. Int. J. Digit. Earth 2024, 17, 2353122.

- Yan, H.; Li, Y. A Survey of Generative AI for Intelligent Transportation Systems. arXiv 2023.

- Zhang, W.; Han, J.; Xu, Z.; Ni, H.; Lyu, T.; Liu, H.; Xiong, H. Towards Urban General Intelligence: A Review and Outlook of Urban Foundation Models. arXiv 2025.

- Cui, C.; Ma, Y.; Cao, X.; Ye, W.; Zhou, Y.; Liang, K.; Chen, J.; Lu, J.; Yang, Z.; Liao, K.D.; et al. A Survey on Multimodal Large Language Models for Autonomous Driving. In Proceedings of the 2024 IEEE/CVF Winter Conference on Applications of Computer Vision Workshops (WACVW), Waikoloa, HI, USA, 1–6 January 2024; pp. 958–979.

- Sufi, F. A systematic review on the dimensions of open-source disaster intelligence using GPT. J. Econ. Technol. 2024, 2, 62–78.

- Saka, A.; Taiwo, R.; Saka, N.; Salami, B.A.; Ajayi, S.; Akande, K.; Kazemi, H. GPT models in construction industry: Opportunities, limitations, and a use case validation. Dev. Built Environ. 2024, 17, 100300.

- Zhang, W.; Han, J.; Xu, Z.; Ni, H.; Liu, H.; Xiong, H. Urban Foundation Models: A Survey. In Proceedings of the 30th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Barcelona, Spain, 25–29 August 2024; pp. 6633–6643.

- Xu, F.; Zhang, J.; Gao, C.; Feng, J.; Li, Y. Urban Generative Intelligence (UGI): A Foundational Platform for Agents in Embodied City Environment. arXiv 2023.

- Zhao, W.X.; Zhou, K.; Li, J.; Tang, T.; Wang, X.; Hou, Y.; Min, Y.; Zhang, B.; Zhang, J.; Dong, Z.; et al. A Survey of Large Language Models. arXiv 2023, arXiv:2303.18223.

- Minaee, S.; Mikolov, T.; Nikzad, N.; Chenaghlu, M.; Socher, R.; Amatriain, X.; Gao, J. Large Language Models: A Survey. arXiv 2024.

- Chen, S.F.; Goodman, J. An empirical study of smoothing techniques for language modeling. Comput. Speech Lang. 1999, 13, 359–394.

- Bengio, Y.; Ducharme, R.; Vincent, P. A Neural Probabilistic Language Model. In Proceedings of the Advances in Neural Information Processing Systems; Leen, T., Dietterich, T., Tresp, V., Eds.; MIT Press: Cambridge, MA, USA, 2000; Volume 13.

- Mikolov, T.; Chen, K.; Corrado, G.; Dean, J. Efficient Estimation of Word Representations in Vector Space. arXiv 2013, arXiv:1301.3781.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv 2023, arXiv:1706.03762.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv 2019, arXiv:1810.04805.

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language models are unsupervised multitask learners. OpenAI Blog 2019, 1, 9.

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. In Proceedings of the Advances in Neural Information Processing Systems; Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M., Lin, H., Eds.; Curran Associates, Inc.: Vancouver, BC, Canada, 2020; pp. 1877–1901.

- Fedus, W.; Zoph, B.; Shazeer, N. Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity. arXiv 2022, arXiv:2101.03961.

- Ouyang, L.; Wu, J.; Jiang, X.; Almeida, D.; Wainwright, C.L.; Mishkin, P.; Zhang, C.; Agarwal, S.; Slama, K.; Ray, A.; et al. Training language models to follow instructions with human feedback. arXiv 2022, arXiv:2203.02155.

- Team, G. Gemma: Open Models Based on Gemini Research and Technology. arXiv 2024, arXiv:2403.08295.

- Abdin, M.; Aneja, J.; Awadalla, H.; Awadallah, A.; Awan, A.A.; Bach, N.; Bahree, A.; Bakhtiari, A.; Bao, J.; Behl, H.; et al. Phi-3 Technical Report: A Highly Capable Language Model Locally on Your Phone. arXiv 2024, arXiv:2404.14219.

- Grattafiori, A.; Dubey, A.; Jauhri, A.; Pandey, A.; Kadian, A.; Al-Dahle, A.; Letman, A.; Mathur, A.; Schelten, A.; Vaughan, A.; et al. The Llama 3 Herd of Models. arXiv 2024, arXiv:2407.21783.

- Jiang, A.Q.; Sablayrolles, A.; Roux, A.; Mensch, A.; Savary, B.; Bamford, C.; Chaplot, D.S.; de las Casas, D.; Hanna, E.B.; Bressand, F.; et al. Mixtral of Experts. arXiv 2024, arXiv:2401.04088.

- OpenAI. GPT-4 Technical Report. arXiv 2024, arXiv:2303.08774.

- Google, G.T. Gemini: A Family of Highly Capable Multimodal Models. arXiv 2024, arXiv:2312.11805.

- Chang, Y.; Wang, X.; Wang, J.; Wu, Y.; Yang, L.; Zhu, K.; Chen, H.; Yi, X.; Wang, C.; Wang, Y.; et al. A Survey on Evaluation of Large Language Models. ACM Trans. Intell. Syst. Technol. 2024, 15, 1–45.

- Chumakov, S.; Kovantsev, A.; Surikov, A. Generative approach to aspect based sentiment analysis with GPT language models. Procedia Comput. Sci. 2023, 229, 284–293.

- Rosca, C.M.; Stancu, A. Quality Assessment of GPT-3.5 and Gemini 1.0 Pro for SQL Syntax. Comput. Stand. Interfaces 2025, 95, 104041.

- Crooks, A.; Chen, Q. Exploring the new frontier of information extraction through large language models in urban analytics. Environ. Plan. B Urban Anal. City Sci. 2024, 51, 565–569.

- Cui, C.; Ma, Y.; Cao, X.; Ye, W.; Wang, Z. Drive as You Speak: Enabling Human-Like Interaction with Large Language Models in Autonomous Vehicles. arXiv 2023.

- Zhang, S.; Fu, D.; Liang, W.; Zhang, Z.; Yu, B.; Cai, P.; Yao, B. TrafficGPT: Viewing, Processing and Interacting with Traffic Foundation Models. Transp. Policy 2024, 150, 95–105.

- Leong, M.; Abdelhalim, A.; Ha, J.; Patterson, D.; Pincus, G.L.; Harris, A.B.; Eichler, M.; Zhao, J. MetRoBERTa: Leveraging Traditional Customer Relationship Management Data to Develop a Transit-Topic-Aware Language Model. arXiv 2023.

- Villarreal, M.; Poudel, B.; Li, W. Can ChatGPT Enable ITS? The Case of Mixed Traffic Control via Reinforcement Learning. arXiv 2023.

- Zhang, Z.; Amiri, H.; Liu, Z.; Züfle, A.; Zhao, L. Large Language Models for Spatial Trajectory Patterns Mining. arXiv 2023.

- Zhang, K.; Wang, S.; Jia, N.; Zhao, L.; Han, C.; Li, L. Integrating visual large language model and reasoning chain for driver behavior analysis and risk assessment. Accid. Anal. Prev. 2024, 198, 107497.

- Sha, H.; Mu, Y.; Jiang, Y.; Chen, L.; Xu, C.; Luo, P.; Li, S.E.; Tomizuka, M.; Zhan, W.; Ding, M. LanguageMPC: Large Language Models as Decision Makers for Autonomous Driving. arXiv 2023.

- Ge, J.; Chang, C.; Zhang, J.; Li, L.; Na, X.; Lin, Y.; Li, L.; Wang, F.Y. LLM-Based Operating Systems for Automated Vehicles: A New Perspective. IEEE Trans. Intell. Veh. 2024, 9, 4563–4567.

- Wong, I.A.; Lian, Q.L.; Sun, D. Autonomous travel decision-making: An early glimpse into ChatGPT and generative AI. J. Hosp. Tour. Manag. 2023, 56, 253–263.

- Li, X.; Liu, E.; Shen, T.; Huang, J.; Wang, F.Y. ChatGPT-Based Scenario Engineer: A New Framework on Scenario Generation for Trajectory Prediction. IEEE Trans. Intell. Veh. 2024, 9, 4422–4431.

- Wang, X.; Fang, M.; Zeng, Z.; Cheng, T. Where Would I Go Next? Large Language Models as Human Mobility Predictors. arXiv 2024.

- Liu, Y.; Kuai, C.; Ma, H.; Liao, X.; He, B.Y.; Ma, J. Semantic Trajectory Data Mining with LLM-Informed POI Classification. arXiv 2024.

- Wang, S.; Zhu, Y.; Li, Z.; Wang, Y.; Li, L.; He, Z. ChatGPT as Your Vehicle Co-Pilot: An Initial Attempt. IEEE Trans. Intell. Veh. 2023, 8, 4706–4721.

- Fu, D.; Li, X.; Wen, L.; Dou, M.; Cai, P.; Shi, B.; Qiao, Y. Drive Like a Human: Rethinking Autonomous Driving with Large Language Models. arXiv 2023.

- Zheng, O.; Abdel-Aty, M.; Wang, D.; Wang, Z.; Ding, S. ChatGPT Is on the Horizon: Could a Large Language Model Be Suitable for Intelligent Traffic Safety Research and Applications? arXiv 2023.

- Ha, S.V.U.; Le, H.D.A.; Nguyen, Q.Q.V.; Chung, N.M. DAKRS: Domain Adaptive Knowledge-Based Retrieval System for Natural Language-Based Vehicle Retrieval. IEEE Access 2023, 11, 90951–90965.

- Peng, M.; Guo, X.; Chen, X.; Zhu, M.; Chen, K.; Wang, F.Y. LC-LLM: Explainable Lane-Change Intention and Trajectory Predictions with Large Language Models. arXiv 2024.

- Syum Gebre, T.; Beni, L.; Tsehaye Wasehun, E.; Elikem Dorbu, F. AI-Integrated Traffic Information System: A Synergistic Approach of Physics Informed Neural Network and GPT-4 for Traffic Estimation and Real-Time Assistance. IEEE Access 2024, 12, 65869–65882.

- Liu, Y.; Wu, F.; Liu, Z.; Wang, K.; Wang, F.; Qu, X. Can language models be used for real-world urban-delivery route optimization? Innov. 2023, 4, 100520.

- Zhang, Q.; Mott, J.H. An Exploratory Assessment of LLM’s Potential Toward Flight Trajectory Reconstruction Analysis. arXiv 2024.

- Mo, B.; Xu, H.; Zhuang, D.; Ma, R.; Guo, X.; Zhao, J. Large Language Models for Travel Behavior Prediction. arXiv 2023.

- Chen, J.; Lin, B.; Xu, R.; Chai, Z.; Liang, X.; Wong, K.Y.K. MapGPT: Map-Guided Prompting with Adaptive Path Planning for Vision-and-Language Navigation. arXiv 2024.

- Cui, Y.; Huang, S.; Zhong, J.; Liu, Z.; Wang, Y.; Sun, C.; Li, B.; Wang, X.; Khajepour, A. DriveLLM: Charting the Path Toward Full Autonomous Driving with Large Language Models. IEEE Trans. Intell. Veh. 2024, 9, 1450–1464.

- Mao, J.; Qian, Y.; Ye, J.; Zhao, H.; Wang, Y. GPT-Driver: Learning to Drive with GPT. arXiv 2023. [Google Scholar] [CrossRef]

- Yang, Y.; Zhang, W.; Lin, H.; Liu, Y.; Qu, X. Applying masked language model for transport mode choice behavior prediction. Transp. Res. Part A Policy Pract. 2024, 184, 104074. [Google Scholar] [CrossRef]

- Wang, M.; Pang, A.; Kan, Y.; Pun, M.O.; Chen, C.S.; Huang, B. LLM-Assisted Light: Leveraging Large Language Model Capabilities for Human-Mimetic Traffic Signal Control in Complex Urban Environments. arXiv 2024. [Google Scholar] [CrossRef]

- Wang, B.; Cai, Z.; Karim, M.M.; Liu, C.; Wang, Y. Traffic Performance GPT (TP-GPT): Real-Time Data Informed Intelligent ChatBot for Transportation Surveillance and Management. arXiv 2024. [Google Scholar] [CrossRef]

- Wang, J.; Jiang, R.; Yang, C.; Wu, Z.; Onizuka, M.; Shibasaki, R.; Koshizuka, N.; Xiao, C. Large Language Models as Urban Residents: An LLM Agent Framework for Personal Mobility Generation. arXiv 2024. [Google Scholar] [CrossRef]

- Zhao, C.; Wang, X.; Lv, Y.; Tian, Y.; Lin, Y.; Wang, F.Y. Parallel Transportation in TransVerse: From Foundation Models to DeCAST. IEEE Trans. Intell. Transp. Syst. 2023, 24, 15310–15327. [Google Scholar] [CrossRef]

- Grigorev, A.; Saleh, A.S.M.K.; Ou, Y. IncidentResponseGPT: Generating Traffic Incident Response Plans with Generative Artificial Intelligence. arXiv 2024. [Google Scholar] [CrossRef]

- Sultan, R.I.; Li, C.; Zhu, H.; Khanduri, P.; Brocanelli, M.; Zhu, D. GeoSAM: Fine-tuning SAM with Sparse and Dense Visual Prompting for Automated Segmentation of Mobility Infrastructure. arXiv 2024. [Google Scholar] [CrossRef]

- Guo, X.; Zhang, Q.; Jiang, J.; Peng, M.; Yang, H.F.; Zhu, M. Towards Responsible and Reliable Traffic Flow Prediction with Large Language Models. arXiv 2024. [Google Scholar] [CrossRef]

- Lai, S.; Xu, Z.; Zhang, W.; Liu, H.; Xiong, H. LLMLight: Large Language Models as Traffic Signal Control Agents. arXiv 2024. [Google Scholar] [CrossRef]

- Liao, H.; Shen, H.; Li, Z.; Wang, C.; Li, G.; Bie, Y.; Xu, C. GPT-4 enhanced multimodal grounding for autonomous driving: Leveraging cross-modal attention with large language models. Commun. Transp. Res. 2024, 4, 100116. [Google Scholar] [CrossRef]

- Du, H.; Teng, S.; Chen, H.; Ma, J.; Wang, X.; Gou, C.; Li, B.; Ma, S.; Miao, Q.; Na, X.; et al. Chat with ChatGPT on Intelligent Vehicles: An IEEE TIV Perspective. IEEE Trans. Intell. Veh. 2023, 8, 2020–2026. [Google Scholar] [CrossRef]

- Qu, X.; Lin, H.; Liu, Y. Envisioning the future of transportation: Inspiration of ChatGPT and large models. Commun. Transp. Res. 2023, 3, 100103. [Google Scholar] [CrossRef]

- Güzay, Ç.; Özdemir, E.; Kara, Y. A Generative AI-driven Application: Use of Large Language Models for Traffic Scenario Generation. In Proceedings of the 2023 14th International Conference on Electrical and Electronics Engineering (ELECO), Bursa, Turkey, 30 November–2 December 2023. [Google Scholar] [CrossRef]

- Da, L.; Gao, M.; Mei, H.; Wei, H. Prompt to Transfer: Sim-to-Real Transfer for Traffic Signal Control with Prompt Learning. arXiv 2024. [Google Scholar] [CrossRef]

- Liang, Y.; Liu, Y.; Wang, X.; Zhao, Z. Exploring Large Language Models for Human Mobility Prediction under Public Events. arXiv 2023. [Google Scholar] [CrossRef]

- Shi, Y.; Lv, F.; Wang, X.; Xia, C.; Li, S.; Yang, S.; Xi, T.; Zhang, G. Open-TransMind: A New Baseline and Benchmark for 1st Foundation Model Challenge of Intelligent Transportation. arXiv 2023. [Google Scholar] [CrossRef]

- Zhang, K.; Zhou, F.; Wu, L.; Xie, N.; He, Z. Semantic understanding and prompt engineering for large-scale traffic data imputation. Inf. Fusion 2024, 102, 102038. [Google Scholar] [CrossRef]

- Zheng, O.; Abdel-Aty, M.; Wang, D.; Wang, C.; Ding, S. TrafficSafetyGPT: Tuning a Pre-trained Large Language Model to a Domain-Specific Expert in Transportation Safety. arXiv 2023. [Google Scholar] [CrossRef]

- Tang, Y.; Dai, X.; Lv, Y. Large Language Model-Assisted Arterial Traffic Signal Control. IEEE J. Radio Freq. Identif. 2024, 8, 322–326. [Google Scholar] [CrossRef]

- Tian, Y.; Li, X.; Zhang, H.; Zhao, C.; Li, B.; Wang, X.; Wang, X.; Wang, F.Y. VistaGPT: Generative Parallel Transformers for Vehicles with Intelligent Systems for Transport Automation. IEEE Trans. Intell. Veh. 2023, 8, 4198–4207. [Google Scholar] [CrossRef]

- De Zarzà, I.; De Curtò, J.; Roig, G.; Calafate, C.T. LLM Multimodal Traffic Accident Forecasting. Sensors 2023, 23, 9225. [Google Scholar] [CrossRef]

- Adekanye, O.A.M. LLM-Powered Synthetic Environments for Self-Driving Scenarios. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; pp. 23721–23723. [Google Scholar] [CrossRef]

- Li, Y.; Li, L.; Wu, Z.; Bing, Z.; Xuanyuan, Z.; Knoll, A.C.; Chen, L. UnstrPrompt: Large Language Model Prompt for Driving in Unstructured Scenarios. IEEE J. Radio Freq. Identif. 2024, 8, 367–375. [Google Scholar] [CrossRef]

- Jin, Y.; Shen, X.; Peng, H.; Liu, X.; Qin, J.; Li, J.; Xie, J.; Gao, P.; Zhou, G.; Gong, J. SurrealDriver: Designing Generative Driver Agent Simulation Framework in Urban Contexts based on Large Language Model. arXiv 2023. [Google Scholar] [CrossRef]

- Liu, Y. Large language models for air transportation: A critical review. J. Air Transp. Res. Soc. 2024, 2, 100024. [Google Scholar] [CrossRef]

- Wang, X.; Wang, D.; Chen, L.; Lin, Y. Building Transportation Foundation Model via Generative Graph Transformer. arXiv 2023. [Google Scholar] [CrossRef]

- Yang, Z.; Jia, X.; Li, H.; Yan, J. LLM4Drive: A Survey of Large Language Models for Autonomous Driving. arXiv 2024. [Google Scholar] [CrossRef]

- Zhang, Z.; Sun, Y.; Wang, Z.; Nie, Y.; Ma, X.; Li, R.; Sun, P.; Ban, X. Large Language Models for Mobility Analysis in Transportation Systems: A Survey on Forecasting Tasks. arXiv 2025. [Google Scholar] [CrossRef]

- Li, S.; Azfar, T.; Ke, R. ChatSUMO: Large Language Model for Automating Traffic Scenario Generation in Simulation of Urban MObility. arXiv 2024. [Google Scholar] [CrossRef]

- Yuan, Z.; Lai, S.; Liu, H. CoLLMLight: Cooperative Large Language Model Agents for Network-Wide Traffic Signal Control. arXiv 2025. [Google Scholar] [CrossRef]

- Onsu, M.A.; Lohan, P.; Kantarci, B.; Syed, A.; Andrews, M.; Kennedy, S. Leveraging Multimodal-LLMs Assisted by Instance Segmentation for Intelligent Traffic Monitoring. arXiv 2025. [Google Scholar] [CrossRef]

- Lu, Q.; Wang, X.; Jiang, Y.; Zhao, G.; Ma, M.; Feng, S. Multimodal Large Language Model Driven Scenario Testing for Autonomous Vehicles. arXiv 2024. [Google Scholar] [CrossRef]

- Guo, X.; Zhang, Q.; Jiang, J.; Peng, M.; Zhu, M.; Yang, H. Towards Explainable Traffic Flow Prediction with Large Language Models. arXiv 2024. [Google Scholar] [CrossRef]

- Wang, D.; Lu, C.T.; Fu, Y. Towards Automated Urban Planning: When Generative and ChatGPT-like AI Meets Urban Planning. arXiv 2023. [Google Scholar] [CrossRef]

- Deng, J.; Chai, W.; Huang, J.; Zhao, Z.; Huang, Q.; Gao, M.; Guo, J.; Hao, S.; Hu, W.; Hwang, J.N.; et al. CityCraft: A Real Crafter for 3D City Generation. arXiv 2024. [Google Scholar] [CrossRef]

- Balsebre, P.; Huang, W.; Cong, G.; Li, Y. City Foundation Models for Learning General Purpose Representations from OpenStreetMap. arXiv 2023, arXiv:2310.00583. [Google Scholar] [CrossRef]

- Aghzal, M.; Plaku, E.; Yao, Z. Can Large Language Models be Good Path Planners? A Benchmark and Investigation on Spatial-temporal Reasoning. arXiv 2024. [Google Scholar] [CrossRef]

- Chen, Y.; Wang, X.; Xu, G. GATGPT: A Pre-trained Large Language Model with Graph Attention Network for Spatiotemporal Imputation. arXiv 2023. [Google Scholar] [CrossRef]

- Li, Z.; Xia, L.; Tang, J.; Xu, Y.; Shi, L.; Xia, L.; Yin, D.; Huang, C. UrbanGPT: Spatio-Temporal Large Language Models. arXiv 2024. [Google Scholar] [CrossRef]

- Chen, J.; Xu, W.; Cao, H.; Xu, Z.; Zhang, Y.; Zhang, Z.; Zhang, S. Multimodal Road Network Generation Based on Large Language Model. arXiv 2024. [Google Scholar] [CrossRef]

- Buitrago-Esquinas, E.M.; Puig-Cabrera, M.; Santos, J.A.C.; Custódio-Santos, M.; Yñiguez-Ovando, R. Developing a hetero-intelligence methodological framework for sustainable policy-making based on the assessment of large language models. MethodsX 2024, 12, 102707. [Google Scholar] [CrossRef]

- Zhong, S.; Hao, X.; Yan, Y.; Zhang, Y.; Song, Y.; Liang, Y. UrbanCross: Enhancing Satellite Image-Text Retrieval with Cross-Domain Adaptation. arXiv 2024. [Google Scholar] [CrossRef]

- Hao, X.; Chen, W.; Yan, Y.; Zhong, S.; Wang, K.; Wen, Q.; Liang, Y. UrbanVLP: Multi-Granularity Vision-Language Pretraining for Urban Socioeconomic Indicator Prediction. arXiv 2025. [Google Scholar] [CrossRef]

- Yan, Y.; Wen, H.; Zhong, S.; Chen, W.; Chen, H.; Wen, Q.; Zimmermann, R.; Liang, Y. UrbanCLIP: Learning Text-enhanced Urban Region Profiling with Contrastive Language-Image Pretraining from the Web. arXiv 2024. [Google Scholar] [CrossRef]

- Wang, X.; Ling, X.; Zhang, T.; Li, X.; Wang, S.; Li, Z.; Zhang, L.; Gong, P. Optimizing and Fine-tuning Large Language Model for Urban Renewal. arXiv 2023. [Google Scholar] [CrossRef]

- Zenkert, J.; Fathi, M. Taxonomy Mining from a Smart City CMS using the Multidimensional Knowledge Representation Approach. In Proceedings of the 2024 IEEE 14th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 8–10 January 2024. [Google Scholar] [CrossRef]

- Jang, K.M.; Chen, J.; Kang, Y.; Kim, J.; Lee, J.; Duarte, F. Understanding Place Identity with Generative AI. arXiv 2023. [Google Scholar] [CrossRef]

- Tang, Y.; Wang, Z.; Qu, A.; Yan, Y.; Wu, Z.; Zhuang, D.; Kai, J.; Hou, K.; Guo, X.; Zheng, H.; et al. ITINERA: Integrating Spatial Optimization with Large Language Models for Open-domain Urban Itinerary Planning. arXiv 2024. [Google Scholar] [CrossRef]

- Manvi, R.; Khanna, S.; Mai, G.; Burke, M.; Lobell, D.; Ermon, S. GeoLLM: Extracting Geospatial Knowledge from Large Language Models. arXiv 2024. [Google Scholar] [CrossRef]

- Chen, Y.; Zhang, S.; Han, T.; Du, Y.; Zhang, W.; Li, J. Chat3D: Interactive understanding 3D scene-level point clouds by chatting with foundation model for urban ecological construction. ISPRS J. Photogramm. Remote Sens. 2024, 212, 181–192. [Google Scholar] [CrossRef]

- Zhou, Z.; Lin, Y.; Li, Y. Large Language Model Empowered Participatory Urban Planning. arXiv 2024. [Google Scholar] [CrossRef]

- Berragan, C.; Singleton, A.; Calafiore, A.; Morley, J. Mapping Great Britain’s semantic footprints through a large language model analysis of Reddit comments. Comput. Environ. Urban Syst. 2024, 110, 102121. [Google Scholar] [CrossRef]

- Kalyuzhnaya, A.; Mityagin, S.; Lutsenko, E.; Getmanov, A.; Aksenkin, Y.; Fatkhiev, K.; Fedorin, K.; Nikitin, N.O.; Chichkova, N.; Vorona, V.; et al. LLM Agents for Smart City Management: Enhancing Decision Support Through Multi-Agent AI Systems. Smart Cities 2025, 8, 19. [Google Scholar] [CrossRef]

- Kumbam, P.R.; Vejre, K.M. FloodLense: A Framework for ChatGPT-based Real-time Flood Detection. arXiv 2024. [Google Scholar] [CrossRef]

- Hao, Y.; Qi, J.; Ma, X.; Wu, S.; Liu, R.; Zhang, X. An LLM-Based Inventory Construction Framework of Urban Ground Collapse Events with Spatiotemporal Locations. ISPRS Int. J. Geo-Inf. 2024, 13, 133. [Google Scholar] [CrossRef]

- Goecks, V.G.; Waytowich, N.R. DisasterResponseGPT: Large Language Models for Accelerated Plan of Action Development in Disaster Response Scenarios. arXiv 2023. [Google Scholar] [CrossRef]

- Soomro, S.e.h.; Boota, M.W.; Zwain, H.M.; Soomro, G.e.Z.; Shi, X.; Guo, J.; Li, Y.; Tayyab, M.; Aamir Soomro, M.H.A.; Hu, C.; et al. How effective is twitter (X) social media data for urban flood management? J. Hydrol. 2024, 634, 131129. [Google Scholar] [CrossRef]

- Xue, Z.; Xu, C.; Xu, X. Application of ChatGPT in natural disaster prevention and reduction. Nat. Hazards Res. 2023, 3, 556–562. [Google Scholar] [CrossRef]

- Han, J.; Zheng, Z.; Lu, X.Z.; Chen, K.Y.; Lin, J.R. Enhanced earthquake impact analysis based on social media texts via large language model. Int. J. Disaster Risk Reduct. 2024, 109, 104574. [Google Scholar] [CrossRef]

- Ou, R.; Yan, H.; Wu, M.; Zhang, C. A Method of Efficient Synthesizing Post-disaster Remote Sensing Image with Diffusion Model and LLM. In Proceedings of the 2023 Asia Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Taipei, Taiwan, 31 October–3 November 2023; pp. 1549–1555. [Google Scholar] [CrossRef]

- Colverd, G.; Darm, P.; Silverberg, L.; Kasmanoff, N. FloodBrain: Flood Disaster Reporting by Web-based Retrieval Augmented Generation with an LLM. arXiv 2023. [Google Scholar] [CrossRef]

- Hu, Y.; Mai, G.; Cundy, C.; Choi, K.; Lao, N.; Liu, W.; Lakhanpal, G.; Zhou, R.Z.; Joseph, K. Geo-knowledge-guided GPT models improve the extraction of location descriptions from disaster-related social media messages. Int. J. Geogr. Inf. Sci. 2023, 37, 2289–2318. [Google Scholar] [CrossRef]

- Akinboyewa, T.; Ning, H.; Lessani, M.N.; Li, Z. Automated Floodwater Depth Estimation Using Large Multimodal Model for Rapid Flood Mapping. arXiv 2024. [Google Scholar] [CrossRef]

- Ziaullah, A.W.; Ofli, F.; Imran, M. Monitoring Critical Infrastructure Facilities During Disasters Using Large Language Models. arXiv 2024. [Google Scholar] [CrossRef]

- Xia, Y.; Huang, Y.; Qiu, Q.; Zhang, X.; Miao, L.; Chen, Y. A Question and Answering Service of Typhoon Disasters Based on the T5 Large Language Model. ISPRS Int. J. Geo-Inf. 2024, 13, 165. [Google Scholar] [CrossRef]

- Yin, K.; Liu, C.; Mostafavi, A.; Hu, X. CrisisSense-LLM: Instruction Fine-Tuned Large Language Model for Multi-label Social Media Text Classification in Disaster Informatics. arXiv 2025. [Google Scholar] [CrossRef]

- Chen, W.; Su, Y.; Zuo, J.; Yang, C.; Yuan, C.; Chan, C.M.; Yu, H.; Lu, Y.; Hung, Y.H.; Qian, C.; et al. AgentVerse: Facilitating Multi-Agent Collaboration and Exploring Emergent Behaviors. arXiv 2023. [Google Scholar] [CrossRef]

- Zhang, J.; Xu, X.; Zhang, N.; Liu, R.; Hooi, B.; Deng, S. Exploring Collaboration Mechanisms for LLM Agents: A Social Psychology View. arXiv 2024. [Google Scholar] [CrossRef]

- Wang, Z.; Chiu, Y.Y.; Chiu, Y.C. Humanoid Agents: Platform for Simulating Human-like Generative Agents. arXiv 2023. [Google Scholar] [CrossRef]

- Li, G.; Hammoud, H.A.A.K.; Itani, H.; Khizbullin, D.; Ghanem, B. CAMEL: Communicative Agents for "Mind" Exploration of Large Language Model Society. arXiv 2023. [Google Scholar] [CrossRef]

- Huang, F.; Huang, Q.; Zhao, Y.; Qi, Z.; Wang, B.; Huang, Y.; Li, S. A Three-Stage Framework for Event-Event Relation Extraction with Large Language Model. In Proceedings of the Neural Information Processing, Changsha, China, 20–23 November 2023; Luo, B., Cheng, L., Wu, Z.G., Li, H., Li, C., Eds.; Springer Nature Singapore: Singapore, 2024; pp. 434–446. [Google Scholar] [CrossRef]

- Manvi, R.; Khanna, S.; Burke, M.; Lobell, D.; Ermon, S. Large Language Models Are Geographically Biased. arXiv 2024. [Google Scholar] [CrossRef]

- Liu, R.; Yang, R.; Jia, C.; Zhang, G.; Zhou, D.; Dai, A.M.; Yang, D.; Vosoughi, S. Training Socially Aligned Language Models on Simulated Social Interactions. arXiv 2023. [Google Scholar] [CrossRef]

- Sarzaeim, P.; Mahmoud, Q.H.; Azim, A. Experimental Analysis of Large Language Models in Crime Classification and Prediction. In Proceedings of the 37th Canadian Conference on Artificial Intelligence, Guelph, ON, Canada, 27–31 May 2024. [Google Scholar]

- Sarzaeim, P.; Mahmoud, Q.H.; Azim, A. A Framework for LLM-Assisted Smart Policing System. IEEE Access 2024, 12, 74915–74929. [Google Scholar] [CrossRef]

- Kim, J.; Lee, B. AI-Augmented Surveys: Leveraging Large Language Models and Surveys for Opinion Prediction. arXiv 2024. [Google Scholar] [CrossRef]

- Suzuki, R.; Arita, T. An evolutionary model of personality traits related to cooperative behavior using a large language model. Sci. Rep. 2024, 14, 5989. [Google Scholar] [CrossRef]

- Gao, C.; Lan, X.; Lu, Z.; Mao, J.; Piao, J.; Wang, H.; Jin, D.; Li, Y. S3: Social-network Simulation System with Large Language Model-Empowered Agents. arXiv 2023. [Google Scholar] [CrossRef]

- Park, J.S.; O’Brien, J.C.; Cai, C.J.; Morris, M.R.; Liang, P.; Bernstein, M.S. Generative Agents: Interactive Simulacra of Human Behavior. arXiv 2023. [Google Scholar] [CrossRef]

- Zhang, Y.; Prebensen, N.K. Co-creating with ChatGPT for tourism marketing materials. Ann. Tour. Res. Empir. Insights 2024, 5, 100124. [Google Scholar] [CrossRef]

- Mich, L.; Garigliano, R. ChatGPT for e-Tourism: A technological perspective. Inf. Technol. Tour. 2023, 25, 1–12. [Google Scholar] [CrossRef]

- Gursoy, D.; Li, Y.; Song, H. ChatGPT and the hospitality and tourism industry: An overview of current trends and future research directions. J. Hosp. Mark. Manag. 2023, 32, 579–592. [Google Scholar] [CrossRef]

- Carvalho, I.; Ivanov, S. ChatGPT for tourism: Applications, benefits and risks. Tour. Rev. 2023, 79, 290–303. [Google Scholar] [CrossRef]

- Xie, J.; Zhang, K.; Chen, J.; Zhu, T.; Lou, R.; Tian, Y.; Xiao, Y.; Su, Y. TravelPlanner: A Benchmark for Real-World Planning with Language Agents. arXiv 2024. [Google Scholar] [CrossRef]

- Xie, J.; Liang, Y.; Liu, J.; Xiao, Y.; Wu, B.; Ni, S. QUERT: Continual Pre-training of Language Model for Query Understanding in Travel Domain Search. arXiv 2023. [Google Scholar] [CrossRef]

- Yao, J. Elevating Urban Tourism: Data-Driven Insights and AI-Powered Personalization with Large Language Models Brilliance. In Proceedings of the 2023 IEEE 3rd International Conference on Social Sciences and Intelligence Management (SSIM), Taichung, Taiwan, 15–17 December 2023; pp. 138–143. [Google Scholar] [CrossRef]

- Balamurali, O.; Abhishek Sai, A.; Karthikeya, M.; Anand, S. Sentiment Analysis for Better User Experience in Tourism Chatbot using LSTM and LLM. In Proceedings of the 2023 9th International Conference on Signal Processing and Communication (ICSC), Noida, India, 21–23 December 2023; pp. 456–462. [Google Scholar] [CrossRef]

- Fan, Z.; Chen, C. CuPe-KG: Cultural perspective–based knowledge graph construction of tourism resources via pretrained language models. Inf. Process. Manag. 2024, 61, 103646. [Google Scholar] [CrossRef]

- Chen, S.; Long, G.; Shen, T.; Jiang, J. Prompt Federated Learning for Weather Forecasting: Toward Foundation Models on Meteorological Data. arXiv 2023. [Google Scholar] [CrossRef]

- Kwon, O.H.; Vu, K.; Bhargava, N.; Radaideh, M.I.; Cooper, J.; Joynt, V.; Radaideh, M.I. Sentiment analysis of the United States public support of nuclear power on social media using large language models. Renew. Sustain. Energy Rev. 2024, 200, 114570. [Google Scholar] [CrossRef]

- Vaghefi, S.A.; Stammbach, D.; Muccione, V.; Bingler, J.; Ni, J.; Kraus, M.; Allen, S.; Colesanti-Senni, C.; Wekhof, T.; Schimanski, T.; et al. ChatClimate: Grounding conversational AI in climate science. Commun. Earth Environ. 2023, 4, 480. [Google Scholar] [CrossRef]

- Agathokleous, E.; Saitanis, C.J.; Fang, C.; Yu, Z. Use of ChatGPT: What does it mean for biology and environmental science? Sci. Total Environ. 2023, 888, 164154. [Google Scholar] [CrossRef]

- Chen, S.; Long, G.; Jiang, J.; Liu, D.; Zhang, C. Foundation Models for Weather and Climate Data Understanding: A Comprehensive Survey. arXiv 2023. [Google Scholar] [CrossRef]

- Li, N.; Gao, C.; Li, M.; Li, Y.; Liao, Q. EconAgent: Large Language Model-Empowered Agents for Simulating Macroeconomic Activities. arXiv 2024. [Google Scholar] [CrossRef]

- Han, X.; Wu, Z.; Xiao, C. “Guinea Pig Trials” Utilizing GPT: A Novel Smart Agent-Based Modeling Approach for Studying Firm Competition and Collusion. arXiv 2024. [Google Scholar] [CrossRef]

- Horton, J.J. Large Language Models as Simulated Economic Agents: What Can We Learn from Homo Silicus? arXiv 2023. [Google Scholar] [CrossRef]

- Sifat, R.I. ChatGPT and the Future of Health Policy Analysis: Potential and Pitfalls of Using ChatGPT in Policymaking. Ann. Biomed. Eng. 2023, 51, 1357–1359. [Google Scholar] [CrossRef] [PubMed]

- Jiang, Y.; Qiu, R.; Zhang, Y.; Zhang, P.F. Balanced and Explainable Social Media Analysis for Public Health with Large Language Models. arXiv 2023. [Google Scholar] [CrossRef]

- Guevara, M.; Chen, S.; Thomas, S.; Chaunzwa, T.L.; Franco, I.; Kann, B.H.; Moningi, S.; Qian, J.M.; Goldstein, M.; Harper, S.; et al. Large language models to identify social determinants of health in electronic health records. Npj Digit. Med. 2024, 7, 6. [Google Scholar] [CrossRef]

- Zhang, L.; Chen, Z. Large language model-based interpretable machine learning control in building energy systems. Energy Build. 2024, 313, 114278. [Google Scholar] [CrossRef]

- Jiang, G.; Ma, Z.; Zhang, L.; Chen, J. EPlus-LLM: A large language model-based computing platform for automated building energy modeling. Appl. Energy 2024, 367, 123431. [Google Scholar] [CrossRef]

- Huang, C.; Li, S.; Liu, R.; Wang, H.; Chen, Y. Large Foundation Models for Power Systems. arXiv 2023. [Google Scholar] [CrossRef]

- Guo, H.; Su, X.; Wu, C.; Du, B.; Zhang, L.; Li, D. Remote Sensing ChatGPT: Solving Remote Sensing Tasks with ChatGPT and Visual Models. arXiv 2024. [Google Scholar] [CrossRef]

- Fernandez, A.; Dube, S. Core Building Blocks: Next Gen Geo Spatial GPT Application. arXiv 2023. [Google Scholar] [CrossRef]

- Jiang, Y.; Yang, C. Is ChatGPT a Good Geospatial Data Analyst? Exploring the Integration of Natural Language into Structured Query Language within a Spatial Database. ISPRS Int. J. Geo-Inf. 2024, 13, 26. [Google Scholar] [CrossRef]

- Zhan, Y.; Xiong, Z.; Yuan, Y. SkyEyeGPT: Unifying Remote Sensing Vision-Language Tasks via Instruction Tuning with Large Language Model. arXiv 2024. [Google Scholar] [CrossRef]

- Kuckreja, K.; Danish, M.S.; Naseer, M.; Das, A.; Khan, S.; Khan, F.S. GeoChat: Grounded Large Vision-Language Model for Remote Sensing. arXiv 2023. [Google Scholar] [CrossRef]

- Yuan, Z.; Xiong, Z.; Mou, L.; Zhu, X.X. ChatEarthNet: A Global-Scale Image-Text Dataset Empowering Vision-Language Geo-Foundation Models. arXiv 2024. [Google Scholar] [CrossRef]

- Li, Z.; Ning, H. Autonomous GIS: The next-generation AI-powered GIS. Int. J. Digit. Earth 2023, 16, 4668–4686. [Google Scholar] [CrossRef]

- Hämäläinen, P.; Tavast, M.; Kunnari, A. Evaluating Large Language Models in Generating Synthetic HCI Research Data: A Case Study. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems (CHI’23), Hamburg, Germany, 23–28 April 2023; pp. 1–19. [Google Scholar] [CrossRef]

- Fu, J.; Han, H.; Su, X.; Fan, C. Towards Human-AI Collaborative Urban Science Research Enabled by Pre-trained Large Language Models. arXiv 2023. [Google Scholar] [CrossRef]

- Roberts, J.; Lüddecke, T.; Das, S.; Han, K.; Albanie, S. GPT4GEO: How a Language Model Sees the World’s Geography. arXiv 2023. [Google Scholar] [CrossRef]

- Li, Z.; Zhou, W.; Chiang, Y.Y.; Chen, M. GeoLM: Empowering Language Models for Geospatially Grounded Language Understanding. arXiv 2023. [Google Scholar] [CrossRef]

- Hong, S.; Zhuge, M.; Chen, J.; Zheng, X.; Cheng, Y.; Zhang, C.; Wang, J.; Wang, Z.; Yau, S.K.S.; Lin, Z.; et al. MetaGPT: Meta Programming for A Multi-Agent Collaborative Framework. arXiv 2024. [Google Scholar] [CrossRef]

- Xue, H.; Salim, F.D. PromptCast: A New Prompt-Based Learning Paradigm for Time Series Forecasting. IEEE Trans. Knowl. Data Eng. 2024, 36, 6851–6864. [Google Scholar] [CrossRef]

- Yang, J.; Ding, R.; Brown, E.; Qi, X.; Xie, S. V-IRL: Grounding Virtual Intelligence in Real Life. arXiv 2024. [Google Scholar] [CrossRef]

- Singh, S.; Fore, M.; Stamoulis, D. GeoLLM-Engine: A Realistic Environment for Building Geospatial Copilots. arXiv 2024. [Google Scholar] [CrossRef]

- Osco, L.P.; de Lemos, E.L.; Gonçalves, W.N.; Ramos, A.P.M.; Junior, J.M. The Potential of Visual ChatGPT For Remote Sensing. arXiv 2023. [Google Scholar] [CrossRef]

- Zhang, Y.; Wei, C.; Wu, S.; He, Z.; Yu, W. GeoGPT: Understanding and Processing Geospatial Tasks through An Autonomous GPT. arXiv 2023. [Google Scholar] [CrossRef]

- Zhou, T.; Niu, P.; Wang, X.; Sun, L.; Jin, R. One Fits All: Power General Time Series Analysis by Pretrained LM. arXiv 2023. [Google Scholar] [CrossRef]

- Mooney, P.; Cui, W.; Guan, B.; Juhász, L. Towards Understanding the Geospatial Skills of ChatGPT: Taking a Geographic Information Systems (GIS) Exam. In Proceedings of the 6th ACM SIGSPATIAL International Workshop on AI for Geographic Knowledge Discovery, Hamburg, Germany, 13 November 2023; pp. 85–94. [Google Scholar] [CrossRef]

- Kang, Y.; Zhang, Q.; Roth, R. The Ethics of AI-Generated Maps: A Study of DALLE 2 and Implications for Cartography. arXiv 2023. [Google Scholar] [CrossRef]

- Jakubik, J.; Roy, S.; Phillips, C.E.; Fraccaro, P.; Godwin, D.; Zadrozny, B.; Szwarcman, D.; Gomes, C.; Nyirjesy, G.; Edwards, B.; et al. Foundation Models for Generalist Geospatial Artificial Intelligence. arXiv 2023. [Google Scholar] [CrossRef]

- Zhu, X.; Chen, Y.; Tian, H.; Tao, C.; Su, W.; Yang, C.; Huang, G.; Li, B.; Lu, L.; Wang, X.; et al. Ghost in the Minecraft: Generally Capable Agents for Open-World Environments via Large Language Models with Text-based Knowledge and Memory. arXiv 2023. [Google Scholar] [CrossRef]

- Mai, G.; Huang, W.; Sun, J.; Song, S.; Mishra, D.; Liu, N.; Gao, S.; Liu, T.; Cong, G.; Hu, Y.; et al. On the Opportunities and Challenges of Foundation Models for Geospatial Artificial Intelligence. arXiv 2023. [Google Scholar] [CrossRef]

- Deng, C.; Zhang, T.; He, Z.; Xu, Y.; Chen, Q.; Shi, Y.; Fu, L.; Zhang, W.; Wang, X.; Zhou, C.; et al. K2: A Foundation Language Model for Geoscience Knowledge Understanding and Utilization. arXiv 2023. [Google Scholar] [CrossRef]

- Fulman, N.; Memduhoğlu, A.; Zipf, A. Distortions in Judged Spatial Relations in Large Language Models. arXiv 2024. [Google Scholar] [CrossRef]

- Mei, L.; Mao, J.; Hu, J.; Tan, N.; Chai, H.; Wen, J.R. Improving First-stage Retrieval of Point-of-interest Search by Pre-training Models. ACM Trans. Inf. Syst. 2023, 42, 1–27. [Google Scholar] [CrossRef]

- Chang, C.; Wang, W.Y.; Peng, W.C.; Chen, T.F. LLM4TS: Aligning Pre-Trained LLMs as Data-Efficient Time-Series Forecasters. arXiv 2024. [Google Scholar] [CrossRef]

- Feng, S.; Lyu, H.; Chen, C.; Ong, Y.S. Where to Move Next: Zero-shot Generalization of LLMs for Next POI Recommendation. arXiv 2024. [Google Scholar] [CrossRef]

- Yan, Z.; Li, J.; Li, X.; Zhou, R.; Zhang, W.; Feng, Y.; Diao, W.; Fu, K.; Sun, X. RingMo-SAM: A Foundation Model for Segment Anything in Multimodal Remote-Sensing Images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5625716. [Google Scholar] [CrossRef]

- Gruver, N.; Finzi, M.; Qiu, S.; Wilson, A.G. Large Language Models Are Zero-Shot Time Series Forecasters. arXiv 2024. [Google Scholar] [CrossRef]

- Bhandari, P.; Anastasopoulos, A.; Pfoser, D. Are Large Language Models Geospatially Knowledgeable? arXiv 2023. [Google Scholar] [CrossRef]

- Schumann, R.; Zhu, W.; Feng, W.; Fu, T.J.; Riezler, S.; Wang, W.Y. VELMA: Verbalization Embodiment of LLM Agents for Vision and Language Navigation in Street View. arXiv 2024. [Google Scholar] [CrossRef]

- Balsebre, P.; Huang, W.; Cong, G. LAMP: A Language Model on the Map. arXiv 2024. [Google Scholar] [CrossRef]

- Naveen, P.; Maheswar, R.; Trojovský, P. GeoNLU: Bridging the gap between natural language and spatial data infrastructures. Alex. Eng. J. 2024, 87, 126–147. [Google Scholar] [CrossRef]

- Roberts, J.; Lüddecke, T.; Sheikh, R.; Han, K.; Albanie, S. Charting New Territories: Exploring the Geographic and Geospatial Capabilities of Multimodal LLMs. arXiv 2024.

- Ji, Y.; Gao, S. Evaluating the Effectiveness of Large Language Models in Representing Textual Descriptions of Geometry and Spatial Relations. arXiv 2023.

- Gurnee, W.; Tegmark, M. Language Models Represent Space and Time. arXiv 2024.

- Juhász, L.; Mooney, P.; Hochmair, H.H.; Guan, B. ChatGPT as a mapping assistant: A novel method to enrich maps with generative AI and content derived from street-level photographs. In Proceedings of the Spatial Data Science Symposium 2023, Virtual, 5–6 September 2023.

- Hong, Y.; Zhen, H.; Chen, P.; Zheng, S.; Du, Y.; Chen, Z.; Gan, C. 3D-LLM: Injecting the 3D World into Large Language Models. arXiv 2023.

- Gao, C.; Lan, X.; Li, N.; Yuan, Y.; Ding, J.; Zhou, Z.; Xu, F.; Li, Y. Large Language Models Empowered Agent-based Modeling and Simulation: A Survey and Perspectives. arXiv 2023, arXiv:2312.11970.

- Huang, X.; Liu, W.; Chen, X.; Wang, X.; Wang, H.; Lian, D.; Wang, Y.; Tang, R.; Chen, E. Understanding the planning of LLM agents: A survey. arXiv 2024.

- Jin, M.; Wen, Q.; Liang, Y.; Zhang, C.; Xue, S.; Wang, X.; Zhang, J.; Wang, Y.; Chen, H.; Li, X.; et al. Large Models for Time Series and Spatio-Temporal Data: A Survey and Outlook. arXiv 2023.

- Xi, Z.; Chen, W.; Guo, X.; He, W.; Ding, Y.; Hong, B.; Zhang, M.; Wang, J.; Jin, S.; Zhou, E.; et al. The Rise and Potential of Large Language Model Based Agents: A Survey. arXiv 2023.

- Feng, J.; Du, Y.; Liu, T.; Guo, S.; Lin, Y.; Li, Y. CityGPT: Empowering Urban Spatial Cognition of Large Language Models. arXiv 2024.

- Li, Z.; Xu, J.; Wang, S.; Wu, Y.; Li, H. StreetviewLLM: Extracting Geographic Information Using a Chain-of-Thought Multimodal Large Language Model. arXiv 2024.

- Zou, Z.; Mubin, O.; Alnajjar, F.; Ali, L. A pilot study of measuring emotional response and perception of LLM-generated questionnaire and human-generated questionnaires. Sci. Rep. 2024, 14, 2781.

- Sahoo, P.; Singh, A.K.; Saha, S.; Jain, V.; Mondal, S.; Chadha, A. A Systematic Survey of Prompt Engineering in Large Language Models: Techniques and Applications. arXiv 2024.

- Wei, J.; Wang, X.; Schuurmans, D.; Bosma, M.; Xia, F.; Chi, E.; Le, Q.V.; Zhou, D. Chain-of-thought prompting elicits reasoning in large language models. Adv. Neural Inf. Process. Syst. 2022, 35, 24824–24837.

- Yao, S.; Yu, D.; Zhao, J.; Shafran, I.; Griffiths, T.; Cao, Y.; Narasimhan, K. Tree of thoughts: Deliberate problem solving with large language models. Adv. Neural Inf. Process. Syst. 2024, 36, 11809–11822.

- Yao, Y.; Li, Z.; Zhao, H. Beyond Chain-of-Thought, Effective Graph-of-Thought Reasoning in Language Models. arXiv 2024.

- Wang, X.; Wei, J.; Schuurmans, D.; Le, Q.; Chi, E.; Narang, S.; Chowdhery, A.; Zhou, D. Self-Consistency Improves Chain of Thought Reasoning in Language Models. arXiv 2023.

- Wu, Q.; Bansal, G.; Zhang, J.; Wu, Y.; Li, B.; Zhu, E.; Jiang, L.; Zhang, X.; Zhang, S.; Liu, J.; et al. AutoGen: Enabling Next-Gen LLM Applications via Multi-Agent Conversation. arXiv 2023.

- Yang, H.; Yue, S.; He, Y. Auto-GPT for Online Decision Making: Benchmarks and Additional Opinions. arXiv 2023.

- Ju, C.; Liu, J.; Sinha, S.; Xue, H.; Salim, F. TrajLLM: A Modular LLM-Enhanced Agent-Based Framework for Realistic Human Trajectory Simulation. arXiv 2025.

- Zheng, Y.; Capra, L.; Wolfson, O.; Yang, H. Urban computing: Concepts, methodologies, and applications. ACM Trans. Intell. Syst. Technol. (TIST) 2014, 5, 1–55.

- Batty, M. The New Science of Cities; MIT Press: Cambridge, MA, USA, 2013.

- Zhou, Z.; Zhang, J.; Guan, Z.; Hu, M.; Lao, N.; Mu, L.; Li, S.; Mai, G. Img2Loc: Revisiting Image Geolocalization using Multi-modality Foundation Models and Image-based Retrieval-Augmented Generation. In Proceedings of the 47th International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR’24), Washington DC, USA, 14–18 July 2024; pp. 2749–2754.

- Lin, K.; Ahmed, F.; Li, L.; Lin, C.C.; Azarnasab, E.; Yang, Z.; Wang, J.; Liang, L.; Liu, Z.; Lu, Y.; et al. MM-VID: Advancing Video Understanding with GPT-4V(ision). arXiv 2023, arXiv:2310.19773.

- Lin, J.; Tomlin, N.; Andreas, J.; Eisner, J. Decision-Oriented Dialogue for Human-AI Collaboration. Trans. Assoc. Comput. Linguist. 2024, 12, 892–911.

- Guan, T.; Liu, F.; Wu, X.; Xian, R.; Li, Z.; Liu, X.; Wang, X.; Chen, L.; Huang, F.; Yacoob, Y.; et al. HallusionBench: An Advanced Diagnostic Suite for Entangled Language Hallucination and Visual Illusion in Large Vision-Language Models. arXiv 2024.

- Dona, M.A.M.; Cabrero-Daniel, B.; Yu, Y.; Berger, C. LLMs Can Check Their Own Results to Mitigate Hallucinations in Traffic Understanding Tasks. arXiv 2024.

- Wu, Y.; Sun, Z.; Li, S.; Welleck, S.; Yang, Y. Inference Scaling Laws: An Empirical Analysis of Compute-Optimal Inference for Problem-Solving with Language Models. arXiv 2025.

- Ding, D.; Mallick, A.; Wang, C.; Sim, R.; Mukherjee, S.; Ruhle, V.; Lakshmanan, L.V.S.; Awadallah, A.H. Hybrid LLM: Cost-Efficient and Quality-Aware Query Routing. arXiv 2024.

- Hung, T.W.; Yen, C.P. Predictive Policing and Algorithmic Fairness. Synthese 2023, 201, 206.

- Ge, Y.; Hua, W.; Mei, K.; Ji, J.; Tan, J.; Xu, S.; Li, Z.; Zhang, Y. OpenAGI: When LLM Meets Domain Experts. arXiv 2023.

| Classification | Category | Description | Examples |
|---|---|---|---|
| Size | Small | Number of parameters ≤ 1B | BERT |
| Size | Medium | 1B < Number of parameters ≤ 10B | GPT-2, ChatGLM3, Phi-3-small |
| Size | Large | 10B < Number of parameters ≤ 100B | Llama 3-70B, Gemma 27B, Mixtral 8x7B, Qwen2-72B |
| Size | Very Large | 100B < Number of parameters | GPT-4, Grok 3, Gemini 2.5, Claude 3 Opus, DeepSeek-V2 |
| Open Source | Yes | Model and weights are available | Grok, LLaMA, Gemma, DeepSeek, Qwen |
| Open Source | No | Model and weights are not publicly available | GPT-4, Claude, Gemini |
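
The size thresholds in the table above can be read as a simple lookup. The following is a minimal sketch, assuming parameter counts are given as plain numbers; the function name `size_category` and the example parameter counts (approximate public figures) are illustrative assumptions, not part of the review.

```python
def size_category(num_params: float) -> str:
    """Map a parameter count to the size category defined in the table above."""
    if num_params <= 0:
        raise ValueError("parameter count must be positive")
    if num_params <= 1e9:      # Small: at most 1B parameters
        return "Small"
    if num_params <= 10e9:     # Medium: more than 1B, at most 10B
        return "Medium"
    if num_params <= 100e9:    # Large: more than 10B, at most 100B
        return "Large"
    return "Very Large"        # Very Large: more than 100B


# Approximate parameter counts, used here only as illustrative assumptions.
examples = {
    "BERT-large": 340e6,
    "GPT-2 XL": 1.5e9,
    "Llama 3-70B": 70e9,
    "DeepSeek-V2": 236e9,
}

for name, n in examples.items():
    print(f"{name}: {size_category(n)}")
```

Note that the boundaries are inclusive on the upper side, so a model with exactly 1B parameters falls in the Small category.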

| Step | Description | Representative Technologies/Approaches |
|---|---|---|
| 1. Model Architecture | Fundamental structure of the LLM | Transformer-based architectures; Encoder-only models (e.g., BERT); Decoder-only models (e.g., GPT); Encoder-decoder models |
| 2. Data Preparation | Preparing datasets for training | Data collection; Cleaning; Filtering; Deduplication |
| 3. Tokenization | Converting text into processable tokens | Byte-Pair Encoding (BPE); WordPiece; SentencePiece |
| 4. Positional Encoding | Adding position information to tokens | Absolute positional embeddings; Relative positional embeddings; Rotary positional embeddings; Relative positional bias |
| 5. Pre-training | Initial training on large unlabeled datasets | Next token prediction; Masked language modeling; Mixture of Experts (MoE) |
| 6. Fine-tuning and Instruction Tuning | Adapting models for specific tasks or instructions | Supervised Fine-Tuning (SFT); Instruction tuning datasets; Self-instruction |
| 7. Alignment | Aligning model outputs with human values | Reinforcement Learning from Human Feedback (RLHF); Reinforcement Learning from AI Feedback (RLAIF); Direct Preference Optimization (DPO); Kahneman–Tversky Optimization (KTO) |
| 8. Decoding Strategies | Techniques for generating text from the model | Greedy search; Beam search; Top-k sampling; Top-p (nucleus) sampling |
| 9. Efficient Training/Inference | Reducing computational costs | Optimized training frameworks (e.g., ZeRO and RWKV); Low-Rank Adaptation (LoRA); Knowledge distillation; Quantization |
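
To make the decoding strategies in step 8 concrete, the sketch below applies greedy search, top-k sampling, and top-p (nucleus) sampling to a single next-token distribution. It is a toy illustration using only the Python standard library: the token names, probabilities, and function names are assumptions made for this example, and beam search is omitted because it operates over whole sequences rather than one step.

```python
import random


def greedy(probs):
    """Greedy search: pick the single most probable token."""
    return max(probs, key=probs.get)


def top_k_filter(probs, k):
    """Top-k: keep the k most probable tokens and renormalize."""
    kept = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:k]
    total = sum(p for _, p in kept)
    return {tok: p / total for tok, p in kept}


def top_p_filter(probs, p):
    """Top-p: keep the smallest high-probability set whose mass reaches p."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    kept, cumulative = [], 0.0
    for tok, prob in ranked:
        kept.append((tok, prob))
        cumulative += prob
        if cumulative >= p:
            break
    total = sum(prob for _, prob in kept)
    return {tok: prob / total for tok, prob in kept}


def sample(probs, rng):
    """Draw one token from a (filtered) distribution."""
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


# Hypothetical next-token distribution, invented for illustration.
next_token_probs = {"road": 0.5, "rail": 0.3, "river": 0.15, "runway": 0.05}
rng = random.Random(0)  # fixed seed so repeated runs draw the same token

print("greedy:", greedy(next_token_probs))
print("top-k (k=2):", top_k_filter(next_token_probs, k=2))
print("top-p (p=0.9):", top_p_filter(next_token_probs, p=0.9))
print("sampled:", sample(top_p_filter(next_token_probs, p=0.9), rng))
```

Top-k fixes the number of candidate tokens, whereas top-p lets the candidate set grow or shrink with how peaked the distribution is.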

| Level | Category | Capabilities |
|---|---|---|
| Basic | Text Processing | Summarization; Simplification; Sentiment Analysis; Named Entity Recognition; Topic Modeling; Text Classification; Keyword Extraction |
| Basic | Text Generation | Simple Text Continuation; Basic Generative Writing |
| Basic | Language Tasks | Translation (common language pairs); Language Identification; Basic Grammar Correction |
| Basic | Simple Question Answering | Boolean QA; Basic Multi-choice QA |
| Intermediate | Advanced Text Understanding | Reading Comprehension; Contextual Understanding; Inference Generation |
| Intermediate | Advanced Text Generation | Long-form Content Creation; Code Generation; Story Generation with Consistency |
| Intermediate | Complex Reasoning and QA | Common Sense Reasoning; Arithmetic Problem Solving; Logical Reasoning; Step-by-step Problem Solving; Open-ended QA; Multi-hop Reasoning QA |
| Intermediate | Task Understanding and Execution | Complex Instruction Following; Task Definition from Examples; Few-shot Learning; Zero-shot Learning |
| Advanced | Knowledge Integration and Reasoning | Knowledge Graph Construction and Querying; External Knowledge Base Utilization; Symbolic Reasoning; Causal Reasoning; Analogical Reasoning |
| Advanced | Tool Use and Planning | Function Calling; API Integration; Tool Planning and Selection; Task Decomposition |
| Advanced | Simulation and Acting | Physical Acting Simulation; Advanced Virtual Acting; Complex Role-playing; Scenario Generation and Analysis |
| Advanced | Multimodal Capabilities | Image Understanding and Generation; Audio Processing and Generation; Video Analysis; Cross-modal Reasoning |
| Advanced | Advanced System Integration | Autonomous Agent Behavior; Multi-agent Collaboration; Integration with Robotic Systems |

| Domain | Count | Representative Applications | Related Papers |
|---|---|---|---|
| Transportation | 59 | Autonomous Driving, Traffic Modeling and Analysis, Traffic Safety, Transportation Planning and Management, and Driving and Travel Behavior | [4,6,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77,78,79,80,81,82,83,84,85,86,87,88] |
| Urban Development and Planning | 21 | Road Network Generation, Urban Renewal, Urban Itinerary Planning, Urban Footprints, and Urban Region Profiling | [10,89,90,91,92,93,94,95,96,97,98,99,100,101,102,103,104,105,106,107,108] |
| Disaster Management | 14 | Flood Detection, Disaster Response, Earthquake Impact Analysis, Typhoon Disaster Management, and Disaster Management | [7,109,110,111,112,113,114,115,116,117,118,119,120,121] |
| Social Dynamics | 13 | Social Psychology, Crime Prediction, Emergency Management, Human Behavior Simulation, Social Networks, and Opinion Prediction | [122,123,124,125,126,127,128,129,130,131,132,133,134] |
| Tourism | 9 | Tourism Marketing, Tourism Chatbot, e-Tourism, and Travel Planning | [135,136,137,138,139,140,141,142,143] |
| Environmental Science | 5 | Weather Forecasting, Climate Science, and City Environment | [144,145,146,147,148] |
| Economy | 3 | Economic Simulation, Firm Competition and Collusion, and Macroeconomic Simulation | [149,150,151] |
| Public Health | 3 | Health Policy Analysis and Health Determinants | [152,153,154] |
| Urban Building Energy | 3 | Building Energy Systems, Building Energy Modeling, and Power Systems | [155,156,157] |
| Others (mainly technology-focused) | 48 | Remote Sensing, Geospatial Analytics, Time-series Analysis, Geo-entity Understanding, Virtual Intelligence, Information Retrieval, and Cartography | [1,3,5,158,159,160,161,162,163,164,165,166,167,168,169,170,171,172,173,174,175,176,177,178,179,180,181,182,183,184,185,186,187,188,189,190,191,192,193,194,195,196,197,198,199,200,201,202] |
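
As a quick consistency check, the per-domain counts in the table above can be tallied against the 178 studies covered by the review. The snippet below is a small sketch of that arithmetic; the dictionary simply transcribes the Count column, and the variable names are illustrative.

```python
# Counts transcribed from the "Count" column of the domain table above.
domain_counts = {
    "Transportation": 59,
    "Urban Development and Planning": 21,
    "Disaster Management": 14,
    "Social Dynamics": 13,
    "Tourism": 9,
    "Environmental Science": 5,
    "Economy": 3,
    "Public Health": 3,
    "Urban Building Energy": 3,
    "Others (mainly technology-focused)": 48,
}

total = sum(domain_counts.values())
assert total == 178, f"expected 178 reviewed studies, got {total}"

# Report each domain's share of the corpus, largest first.
for domain, count in sorted(domain_counts.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{domain}: {count} ({count / total:.1%})")
```

The tally shows that transportation alone accounts for roughly a third of the reviewed studies, with the technology-focused "Others" group the second largest.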

| Aspect | Purpose | Description | Examples |
|---|---|---|---|
| Pre-Modeling | Scenario Creation | Enables the generation of specific urban scenarios, such as traffic conditions or public events, for simulation and analysis purposes. | [68,70,77] |
| Pre-Modeling | Data Augmentation | Increases the diversity and volume of data available for model training, helping to overcome data sparsity or imbalance issues in urban datasets. | [72,115] |
| Pre-Modeling | Creation of Multimodal Datasets | Converts unstructured textual data into structured formats or combines different data modalities, facilitating further analysis or enhancing model training in urban contexts. | [99,156,162] |
| Post-Modeling | Scenario Testing | Tests urban models under various generated scenarios to evaluate performance under different conditions, ensuring robustness and reliability. | [73,74,79] |
| Post-Modeling | Interpretation and Explanation | Provides clear, human-readable explanations of complex urban model outputs, enhancing understanding and decision-making. | [106,196] |
| Post-Modeling | Suggestion Generation | Generates data-driven insights and recommendations for urban planning and policy-making based on model outputs, supporting evidence-based decision processes. | [106,141] |

| Issue Category | Specific Issue | LLM-Based Solution and Description | Examples |
|---|---|---|---|
| Data Quality Issues | Data Inconsistency | Text Refinement: Using LLMs to standardize textual descriptions and remove inconsistencies. | Urban region profiling [99]. Human mobility prediction [70]. |
| Data Quality Issues | Irrelevant Information | Relevant Feature Identification: Focusing on critical elements while filtering out irrelevant information. | Driver behavior analysis [37]. Semantic footprint mapping [107]. |
| Data Quality Issues | Labeling Inefficiency | Intelligent Automated Annotation: Using LLMs to automatically label or categorize large datasets, improving efficiency. | Social media sentiment analysis [145]. Social network simulation [133]. |
| Data Quality Issues | Missing Data | Contextual Imputation: Leveraging LLMs to infer and fill in missing data points based on context. | Traffic data imputation [72]. Urban sensor network data completion [175]. |
| Data Representation Issues | Unstructured Data | Structured Information Extraction: Employing techniques like Auto-CoT to derive structured data from unstructured inputs. | Event–event relation extraction [126]. Urban itinerary planning [103]. |
| Data Representation Issues | Data Incompatibility | Contextual Data Transformation: Guiding data transformations to suit specific spatial or temporal requirements. | Geospatial knowledge extraction [104]. Time-series forecasting [187]. |
| Data Representation Issues | Multimodal Data Misalignment | Cross-Modal Alignment: Generating textual descriptions for non-textual data to facilitate integration. | Satellite image text retrieval [97]. 3D scene understanding [105]. |
| Data Dimensionality Issues | High-Dimensional Sparse Data | Semantic Compression: Using LLMs to generate lower-dimensional, semantically rich representations of high-dimensional data. | Urban mobility pattern analysis [42]. Spatial–temporal event modeling [110]. |
| Data Dimensionality Issues | Lack of Contextual Information | Context Enhancement: Generating detailed, context-rich descriptions to augment low-dimensional data. | Autonomous driving in unstructured scenarios [78]. Trajectory prediction [41]. |
| Data Distribution Issues | Data Scarcity | Synthetic Data Generation: Extracting information and generating new data points to enrich limited datasets. | Urban renewal knowledge base creation [100]. Post-disaster image captioning [115]. |
| Data Distribution Issues | Imbalanced Data | Minority-Class Augmentation: Using LLMs to generate synthetic examples for under-represented classes. | Traffic accident analysis [46]. Rare-event prediction in urban systems [120]. |

| Strategy | Technique | Example |
|---|---|---|